Channel Estimation for RIS Assisted Wireless Communications: Part II - An Improved Solution Based on Double-Structured Sparsity

(Invited Paper)

Abstract

Reconfigurable intelligent surface (RIS) can manipulate the wireless communication environment by controlling the coefficients of RIS elements. However, due to the large number of passive RIS elements without signal processing capability, channel estimation in RIS assisted wireless communication systems requires high pilot overhead. In the second part of this invited paper, we propose to exploit the double-structured sparsity of the angular cascaded channels among users to reduce the pilot overhead. Specifically, we first reveal the double-structured sparsity, i.e., the angular cascaded channels of different users share completely common non-zero rows and partially common non-zero columns. By exploiting this double-structured sparsity, we further propose the double-structured orthogonal matching pursuit (DS-OMP) algorithm, where the completely common non-zero rows and the partially common non-zero columns are jointly estimated for all users. Simulation results show that the pilot overhead required by the proposed scheme is lower than that of existing schemes.

Index Terms:

Reconfigurable intelligent surface (RIS), channel estimation, compressive sensing.

I Introduction

In the first part of this two-part invited paper, we introduced the fundamentals, solutions, and future opportunities of channel estimation in reconfigurable intelligent surface (RIS) assisted wireless communication systems. One of the most important challenges of channel estimation is that the pilot overhead is high, since the RIS consists of a large number of passive elements without signal processing capability [1, 2]. By exploiting the sparsity of the angular cascaded channel, i.e., the cascade of the channel from the user to the RIS and the channel from the RIS to the base station (BS), the channel estimation problem can be formulated as a sparse signal recovery problem, which can be solved by compressive sensing (CS) algorithms with reduced pilot overhead [3, 4]. However, the pilot overhead of most existing solutions is still high.

In the second part of this paper, in order to further reduce the pilot overhead, we propose a double-structured orthogonal matching pursuit (DS-OMP) based cascaded channel estimation scheme by leveraging the double-structured sparsity of the angular cascaded channels (simulation codes to reproduce the results presented in this paper are provided at http://oa.ee.tsinghua.edu.cn/dailinglong/publications/publications.html). Specifically, we reveal that the angular cascaded channels associated with different users share completely common non-zero rows and partially common non-zero columns, which we call "double-structured sparsity" in this paper. Then, by exploiting this double-structured sparsity, we propose the DS-OMP algorithm, based on the classical OMP algorithm, to realize channel estimation. In the proposed DS-OMP algorithm, the completely common row support and the partially common column support are jointly estimated for all users, while the user-specific column supports are estimated individually for each user. After all of these supports are detected, the least squares (LS) algorithm is used to obtain the estimated angular cascaded channels. Since the double-structured sparsity is exploited, the proposed DS-OMP based channel estimation scheme is able to further reduce the pilot overhead.

The rest of the paper is organized as follows. In Section II, we introduce the channel model and formulate the cascaded channel estimation problem. In Section III, we first reveal the double-structured sparsity of the angular cascaded channels, and then propose the DS-OMP based cascaded channel estimation scheme. Simulation results and conclusions are provided in Section IV and Section V, respectively.

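Notation: Lower-case and upper-case boldface letters $\mathbf a$ and $\mathbf A$ denote a vector and a matrix, respectively; $\mathbf a^{*}$ denotes the conjugate of vector $\mathbf a$; $\mathbf A^{T}$ and $\mathbf A^{H}$ denote the transpose and conjugate transpose of matrix $\mathbf A$, respectively; $\|\mathbf A\|_F$ denotes the Frobenius norm of matrix $\mathbf A$; $\mathrm{diag}(\mathbf x)$ denotes the diagonal matrix with the vector $\mathbf x$ on its diagonal; $\mathbf a\otimes\mathbf b$ denotes the Kronecker product of $\mathbf a$ and $\mathbf b$. Finally, $\mathcal{CN}(\mu,\sigma^2)$ denotes the probability density function of the circularly symmetric complex Gaussian distribution with mean $\mu$ and variance $\sigma^2$.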

II System Model

In this section, we will first introduce the cascaded channel in the RIS assisted communication system. Then, the cascaded channel estimation problem will be formulated.

II-A Cascaded Channel

We consider that the BS and the RIS employ an $M$-antenna and an $N$-element uniform planar array (UPA), respectively, to simultaneously serve $K$ single-antenna users. Let $\mathbf G$ of size $M\times N$ denote the channel from the RIS to the BS, and $\mathbf h_{r,k}$ of size $N\times 1$ denote the channel from the $k$th user to the RIS ($k=1,2,\dots,K$). The widely used Saleh-Valenzuela channel model is adopted to represent $\mathbf G$ as [5]

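$$
\mathbf G=\sqrt{\frac{MN}{L_G}}\sum_{l_1=1}^{L_G}\alpha^{G}_{l_1}\,\mathbf b\big(\vartheta^{G_r}_{l_1},\psi^{G_r}_{l_1}\big)\,\mathbf a^{T}\big(\vartheta^{G_t}_{l_1},\psi^{G_t}_{l_1}\big), \tag{1}
$$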

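where $L_G$ represents the number of paths between the RIS and the BS, and $\alpha^{G}_{l_1}$, $\vartheta^{G_r}_{l_1}$ ($\psi^{G_r}_{l_1}$), and $\vartheta^{G_t}_{l_1}$ ($\psi^{G_t}_{l_1}$) represent the complex gain (including path loss), the azimuth (elevation) angle at the BS, and the azimuth (elevation) angle at the RIS for the $l_1$th path, respectively. Similarly, the channel $\mathbf h_{r,k}$ can be represented by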

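$$
\mathbf h_{r,k}=\sqrt{\frac{N}{L_{r,k}}}\sum_{l_2=1}^{L_{r,k}}\alpha^{r,k}_{l_2}\,\mathbf a\big(\vartheta^{r,k}_{l_2},\psi^{r,k}_{l_2}\big), \tag{2}
$$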

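where $L_{r,k}$ represents the number of paths between the $k$th user and the RIS, and $\alpha^{r,k}_{l_2}$ and $\vartheta^{r,k}_{l_2}$ ($\psi^{r,k}_{l_2}$) represent the complex gain (including path loss) and the azimuth (elevation) angle at the RIS for the $l_2$th path, respectively. $\mathbf b(\vartheta,\psi)$ and $\mathbf a(\vartheta,\psi)$ represent the normalized array steering vectors associated with the BS and the RIS, respectively. For a typical $N_1\times N_2$ ($N=N_1N_2$) UPA, $\mathbf a(\vartheta,\psi)$ can be represented by [5]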

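$$
\mathbf a(\vartheta,\psi)=\frac{1}{\sqrt N}\Big[e^{-j2\pi d\sin(\vartheta)\cos(\psi)\mathbf n_1/\lambda}\Big]\otimes\Big[e^{-j2\pi d\sin(\psi)\mathbf n_2/\lambda}\Big], \tag{3}
$$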

where $\mathbf n_1=[0,1,\dots,N_1-1]$ and $\mathbf n_2=[0,1,\dots,N_2-1]$, $\lambda$ is the carrier wavelength, and $d$ is the antenna spacing, usually satisfying $d=\lambda/2$.
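As a minimal sketch, the UPA steering vector in (3) can be generated as a Kronecker product of two one-dimensional phase progressions. The function name `upa_steering` and the parameter `d_over_lambda` are hypothetical, and the phase convention assumed here is the one written in (3); angles are in radians.

```python
import cmath
import math


def upa_steering(theta, psi, n1, n2, d_over_lambda=0.5):
    """Normalized steering vector of an n1 x n2 UPA, following Eq. (3).

    theta, psi: azimuth and elevation angles in radians.
    d_over_lambda: element spacing d divided by the wavelength (0.5 means d = lambda/2).
    """
    # Phase progression along the first array dimension: sin(theta) * cos(psi)
    u = [cmath.exp(-2j * math.pi * d_over_lambda * math.sin(theta) * math.cos(psi) * i)
         for i in range(n1)]
    # Phase progression along the second array dimension: sin(psi)
    v = [cmath.exp(-2j * math.pi * d_over_lambda * math.sin(psi) * i)
         for i in range(n2)]
    # Kronecker product u (x) v, scaled by 1/sqrt(N) with N = n1 * n2 so that ||a||_2 = 1
    scale = 1 / math.sqrt(n1 * n2)
    return [scale * ui * vi for ui in u for vi in v]


a = upa_steering(theta=0.3, psi=0.2, n1=4, n2=8)
print(len(a))  # prints 32, one entry per RIS element
```

The nested comprehension enumerates `v` fastest, which matches the ordering of the Kronecker product $\mathbf u\otimes\mathbf v$.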

Further, we denote $\mathbf H_k=\mathbf G\,\mathrm{diag}(\mathbf h_{r,k})$ as the cascaded channel for the $k$th user. Using the virtual angular-domain representation, $\mathbf H_k\in\mathbb C^{M\times N}$ can be decomposed as

$$
\mathbf H_k=\mathbf U_M\tilde{\mathbf H}_k\mathbf U_N^{T}, \tag{4}
$$

where $\tilde{\mathbf H}_k$ denotes the $M\times N$ angular cascaded channel, and $\mathbf U_M$ and $\mathbf U_N$ are the $M\times M$ and $N\times N$ dictionary unitary matrices at the BS and the RIS, respectively [5]. Since there are only a limited number of scatterers around the BS and the RIS, the angular cascaded channel $\tilde{\mathbf H}_k$ has only a few non-zero elements, i.e., it is sparse.
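The decomposition (4) and its inverse can be illustrated with a small sketch, assuming unitary DFT matrices as the dictionaries (a common choice; for a UPA the dictionary would be the Kronecker product of two such DFT matrices, which is also unitary). All helper names below are hypothetical, and matrices are plain lists of rows to keep the example dependency-free.

```python
import cmath
import math


def dft_dictionary(n):
    """Unitary n x n DFT matrix (list of rows), assumed here as the angular dictionary."""
    return [[cmath.exp(-2j * math.pi * r * c / n) / math.sqrt(n) for c in range(n)]
            for r in range(n)]


def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def conj(a):
    """Element-wise complex conjugate."""
    return [[x.conjugate() for x in row] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def to_spatial(h_ang, u_m, u_n):
    """Eq. (4): H_k = U_M H_tilde_k U_N^T."""
    return matmul(matmul(u_m, h_ang), transpose(u_n))


def to_angular(h, u_m, u_n):
    """Inverse of Eq. (4): H_tilde_k = U_M^H H_k U_N^*, valid because U_M and U_N are unitary."""
    return matmul(matmul(transpose(conj(u_m)), h), conj(u_n))


# A toy 4 x 6 angular channel (M = 4, N = 6) with two non-zero entries
u_m, u_n = dft_dictionary(4), dft_dictionary(6)
h_ang = [[0j] * 6 for _ in range(4)]
h_ang[1][2] = 1 + 0.5j
h_ang[3][5] = -0.7j
h_back = to_angular(to_spatial(h_ang, u_m, u_n), u_m, u_n)
```

The round trip returns the original sparse matrix up to floating-point error, because $\mathbf U_N^T\mathbf U_N^{*}=(\mathbf U_N^H\mathbf U_N)^{*}=\mathbf I_N$.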

II-B Problem Formulation

In this paper, we assume that the direct channel between the BS and each user is known at the BS, since it can be easily estimated as in conventional wireless communication systems [5]. Therefore, we only focus on the cascaded channel estimation problem.

By adopting the widely used orthogonal pilot transmission strategy, all users transmit known pilot symbols to the BS via the RIS over $Q$ time slots for uplink channel estimation. Specifically, in the $q$th time slot, the effective received signal $\mathbf y_{k,q}\in\mathbb C^{M\times 1}$ at the BS for the $k$th user after removing the impact of the direct channel can be represented as

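$$
\mathbf y_{k,q}=\mathbf G\,\mathrm{diag}(\boldsymbol\theta_q)\,\mathbf h_{r,k}\,s_{k,q}+\mathbf w_{k,q}, \tag{5}
$$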

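where $s_{k,q}$ is the pilot symbol sent by the $k$th user, $\boldsymbol\theta_q=[\theta_{q,1},\dots,\theta_{q,N}]^T$ is the reflecting vector at the RIS with $\theta_{q,n}$ representing the reflecting coefficient at the $n$th RIS element ($n=1,\dots,N$) in the $q$th time slot, and $\mathbf w_{k,q}\sim\mathcal{CN}(\mathbf 0,\sigma^2\mathbf I_M)$ is the received noise with $\sigma^2$ representing the noise power. According to the cascaded channel $\mathbf H_k=\mathbf G\,\mathrm{diag}(\mathbf h_{r,k})$ and the identity $\mathrm{diag}(\boldsymbol\theta_q)\mathbf h_{r,k}=\mathrm{diag}(\mathbf h_{r,k})\boldsymbol\theta_q$, we can rewrite (5) as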

$$
\mathbf y_{k,q}=\mathbf H_k\boldsymbol\theta_q\,s_{k,q}+\mathbf w_{k,q}. \tag{6}
$$
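The step from (5) to (6) rests on the fact that $\mathrm{diag}(\boldsymbol\theta_q)\mathbf h_{r,k}$ and $\mathrm{diag}(\mathbf h_{r,k})\boldsymbol\theta_q$ are both the element-wise product of the two vectors. A quick numerical check is sketched below, assuming random Gaussian entries for $\mathbf G$ and $\mathbf h_{r,k}$, unit-modulus RIS coefficients, $s_{k,q}=1$, and no noise; the function name `identity_gap` is hypothetical.

```python
import cmath
import math
import random


def matvec(a, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def identity_gap(m=4, n=6, seed=0):
    """Largest entry-wise gap between G diag(theta) h and (G diag(h)) theta."""
    rng = random.Random(seed)

    def rand_c():
        return complex(rng.gauss(0, 1), rng.gauss(0, 1))

    g = [[rand_c() for _ in range(n)] for _ in range(m)]       # RIS-to-BS channel G
    h = [rand_c() for _ in range(n)]                           # user-to-RIS channel h_{r,k}
    theta = [cmath.exp(1j * rng.uniform(0, 2 * math.pi)) for _ in range(n)]  # RIS coefficients
    # Eq. (5): diag(theta) h is the element-wise product theta * h
    lhs = matvec(g, [t * x for t, x in zip(theta, h)])
    # Eq. (6): H_k = G diag(h) scales the n-th column of G by h[n]
    h_casc = [[g[i][j] * h[j] for j in range(n)] for i in range(m)]
    rhs = matvec(h_casc, theta)
    return max(abs(a - b) for a, b in zip(lhs, rhs))


print(identity_gap() < 1e-12)  # prints True
```

Because the RIS coefficients now multiply a fixed matrix $\mathbf H_k$, the unknown becomes a single $M\times N$ matrix per user, which is what makes the stacked model in (7) linear in $\boldsymbol\Theta$.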

After $Q$ time slots of pilot transmission, we can obtain the overall $M\times Q$ measurement matrix $\mathbf Y_k=[\mathbf y_{k,1},\dots,\mathbf y_{k,Q}]$ by assuming $s_{k,q}=1$ for all $q$ as

$$
\mathbf Y_k=\mathbf H_k\boldsymbol\Theta+\mathbf W_k, \tag{7}
$$

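where $\boldsymbol\Theta=[\boldsymbol\theta_1,\dots,\boldsymbol\theta_Q]$ and $\mathbf W_k=[\mathbf w_{k,1},\dots,\mathbf w_{k,Q}]$. By substituting (4) into (7), we can obtain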

$$
\mathbf Y_k=\mathbf U_M\tilde{\mathbf H}_k\mathbf U_N^{T}\boldsymbol\Theta+\mathbf W_k. \tag{8}
$$

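Let $\tilde{\mathbf Y}_k=(\mathbf U_M^H\mathbf Y_k)^H$ denote the effective measurement matrix and $\tilde{\mathbf W}_k=(\mathbf U_M^H\mathbf W_k)^H$ the effective noise matrix; then (8) can be rewritten as a CS model: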

$$
\tilde{\mathbf Y}_k=\tilde{\boldsymbol\Theta}\tilde{\mathbf H}_k^{H}+\tilde{\mathbf W}_k, \tag{9}
$$

where $\tilde{\boldsymbol\Theta}=(\mathbf U_N^T\boldsymbol\Theta)^H$ is the $Q\times N$ sensing matrix. Based on (9), we can estimate the angular cascaded channel of each user separately by conventional CS algorithms, such as the OMP algorithm. However, to ensure the estimation accuracy, the pilot overhead required by conventional CS algorithms is still high.
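For completeness, the step from (8) to (9) follows from the unitarity of $\mathbf U_M$ ($\mathbf U_M^H\mathbf U_M=\mathbf I_M$): left-multiply (8) by $\mathbf U_M^H$ and take the conjugate transpose.

$$
\begin{aligned}
\mathbf U_M^H\mathbf Y_k &= \tilde{\mathbf H}_k\mathbf U_N^{T}\boldsymbol\Theta+\mathbf U_M^H\mathbf W_k,\\
\tilde{\mathbf Y}_k=(\mathbf U_M^H\mathbf Y_k)^H &= (\mathbf U_N^{T}\boldsymbol\Theta)^H\tilde{\mathbf H}_k^{H}+(\mathbf U_M^H\mathbf W_k)^H=\tilde{\boldsymbol\Theta}\tilde{\mathbf H}_k^{H}+\tilde{\mathbf W}_k .
\end{aligned}
$$

Here $\tilde{\mathbf Y}_k$ is $Q\times M$, $\tilde{\boldsymbol\Theta}$ is $Q\times N$, and $\tilde{\mathbf H}_k^{H}$ is $N\times M$. Read column by column, the $m$th column of $\tilde{\mathbf Y}_k$ equals $\tilde{\boldsymbol\Theta}$ times the conjugate transpose of the $m$th row of $\tilde{\mathbf H}_k$, plus noise; hence all $M$ columns share the same sensing matrix, and a column carries signal power only if the corresponding row of $\tilde{\mathbf H}_k$ is non-zero.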

III Joint Channel Estimation for RIS Assisted Wireless Communication Systems

In this section, we will first reveal the double-structured sparsity of the angular cascaded channels. Then, by exploiting this important channel characteristic, we will propose a DS-OMP based cascaded channel estimation scheme to reduce the pilot overhead. Finally, the computational complexity of the proposed scheme will be analyzed.

III-A Double-Structured Sparsity of Angular Cascaded Channels

In order to further explore the sparsity of the angular cascaded channel in both rows and columns, the angular cascaded channel $\tilde{\mathbf H}_k$ in (4) can be expressed as

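$$
\tilde{\mathbf H}_k=\mathbf U_M^{H}\mathbf H_k\mathbf U_N^{*}=\sqrt{\frac{MN}{L_GL_{r,k}}}\sum_{l_1=1}^{L_G}\sum_{l_2=1}^{L_{r,k}}\alpha^{G}_{l_1}\alpha^{r,k}_{l_2}\,\tilde{\mathbf b}\big(\vartheta^{G_r}_{l_1},\psi^{G_r}_{l_1}\big)\,\tilde{\mathbf a}^{T}\big(\vartheta^{G_t}_{l_1}+\vartheta^{r,k}_{l_2},\psi^{G_t}_{l_1}+\psi^{r,k}_{l_2}\big), \tag{10}
$$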

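where $\tilde{\mathbf b}(\vartheta^{G_r}_{l_1},\psi^{G_r}_{l_1})=\mathbf U_M^H\mathbf b(\vartheta^{G_r}_{l_1},\psi^{G_r}_{l_1})$ and $\tilde{\mathbf a}(\vartheta^{G_t}_{l_1}+\vartheta^{r,k}_{l_2},\psi^{G_t}_{l_1}+\psi^{r,k}_{l_2})=\mathbf U_N^H\mathbf a(\vartheta^{G_t}_{l_1}+\vartheta^{r,k}_{l_2},\psi^{G_t}_{l_1}+\psi^{r,k}_{l_2})$ each have only one non-zero element, located at the position of the array steering vector with direction $(\vartheta^{G_r}_{l_1},\psi^{G_r}_{l_1})$ in $\mathbf U_M$ and with direction $(\vartheta^{G_t}_{l_1}+\vartheta^{r,k}_{l_2},\psi^{G_t}_{l_1}+\psi^{r,k}_{l_2})$ in $\mathbf U_N$, respectively. Here, the sum of two directions is understood as the sum of the corresponding spatial frequencies in (3), since the element-wise product of two RIS steering vectors, which arises from $\mathbf a^T(\cdot)\,\mathrm{diag}(\mathbf a(\cdot))$, adds their phase progressions. Based on (10), each complete reflecting path $(l_1,l_2)$ contributes one non-zero element to $\tilde{\mathbf H}_k$, whose row index depends on $(\vartheta^{G_r}_{l_1},\psi^{G_r}_{l_1})$ and whose column index depends on $(\vartheta^{G_t}_{l_1}+\vartheta^{r,k}_{l_2},\psi^{G_t}_{l_1}+\psi^{r,k}_{l_2})$. Therefore, $\tilde{\mathbf H}_k$ has $L_G$ non-zero rows, each containing $L_{r,k}$ non-zero columns. The total number of non-zero elements is $L_GL_{r,k}$, which is usually much smaller than $MN$.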
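The claim that each reflecting path yields a single non-zero column index can be checked in a simplified setting. The sketch below assumes a one-dimensional RIS (a ULA with $d=\lambda/2$ rather than a UPA), steering vectors parameterized directly by spatial frequency $u$, on-grid directions $u=2k/N$, and a unitary DFT dictionary; the helper names are hypothetical.

```python
import cmath
import math


def ula_steering(u, n):
    """Normalized n-element ULA steering vector with spatial frequency u (d = lambda/2 assumed)."""
    return [cmath.exp(-1j * math.pi * u * i) / math.sqrt(n) for i in range(n)]


def angular_coeffs(x):
    """U_N^H x for the unitary DFT dictionary U_N[i][k] = exp(-j 2 pi i k / N) / sqrt(N)."""
    n = len(x)
    return [sum(cmath.exp(2j * math.pi * i * k / n) * x[i] for i in range(n)) / math.sqrt(n)
            for k in range(n)]


def nonzero_indices(x, tol=1e-9):
    """Indexes whose magnitude exceeds a small numerical tolerance."""
    return [k for k, v in enumerate(x) if abs(v) > tol]


n = 16
a_g = ula_steering(2 * 3 / n, n)   # RIS-side direction of one RIS-BS path, on grid point 3
a_r = ula_steering(2 * 5 / n, n)   # RIS-side direction of one user-RIS path, on grid point 5
# a_g^T diag(a_r), written as a vector: the element-wise product of the two steering vectors
cascade = [x * y for x, y in zip(a_g, a_r)]
print(nonzero_indices(angular_coeffs(a_g)))      # prints [3]
print(nonzero_indices(angular_coeffs(cascade)))  # prints [8]: grid indexes add (mod N)
```

The cascaded product lands on grid index $3+5=8$, which is the one-dimensional analogue of the column index in (10) depending on the combined direction; indexes wrap around modulo $N$.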

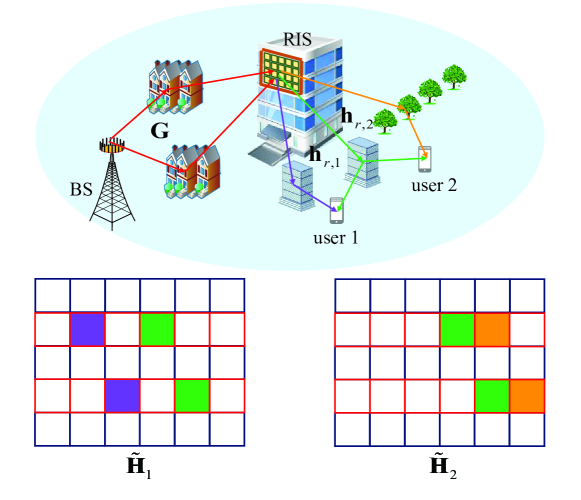

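More importantly, we can find that the different sparse channels $\{\tilde{\mathbf H}_k\}_{k=1}^{K}$ exhibit double-structured sparsity, as shown in Fig. 1. Firstly, since different users communicate with the BS via the same RIS, the channel $\mathbf G$ from the RIS to the BS is common to all users. From (10), we can also find that $\tilde{\mathbf b}(\vartheta^{G_r}_{l_1},\psi^{G_r}_{l_1})$ is independent of the user index $k$. Therefore, the non-zero elements of $\{\tilde{\mathbf H}_k\}_{k=1}^{K}$ lie in completely common rows. Secondly, since different users may share some of the scatterers between the RIS and the users, $\{\mathbf h_{r,k}\}_{k=1}^{K}$ may have partially common paths with the same angles at the RIS. Let $L_c$ ($L_c\le L_{r,k},\ \forall k$) denote the number of common paths for $\{\mathbf h_{r,k}\}_{k=1}^{K}$; then, for each $l_2\in\{1,\dots,L_c\}$, the angle pair $(\vartheta^{r,k}_{l_2},\psi^{r,k}_{l_2})$ is shared by all $\{\mathbf h_{r,k}\}_{k=1}^{K}$. Since, by (10), the column index of each non-zero element is determined by $(\vartheta^{G_t}_{l_1}+\vartheta^{r,k}_{l_2},\psi^{G_t}_{l_1}+\psi^{r,k}_{l_2})$, for each common non-zero row $l_1$ ($l_1=1,2,\dots,L_G$), $\{\tilde{\mathbf H}_k\}_{k=1}^{K}$ share $L_c$ common non-zero columns. This double-structured sparsity of the angular cascaded channels can be summarized from the perspectives of rows and columns, respectively, as follows.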

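- Row-structured sparsity: Let $\Omega_r^{k}$ denote the row set of non-zero elements for $\tilde{\mathbf H}_k$; then we have

$$
\Omega_r^{1}=\Omega_r^{2}=\cdots=\Omega_r^{K}=\Omega_r, \tag{11}
$$

where $\Omega_r$ represents the completely common row support for $\{\tilde{\mathbf H}_k\}_{k=1}^{K}$.

- Partially column-structured sparsity: Let $\Omega_c^{l_1,k}$ denote the column set of non-zero elements for the $l_1$th non-zero row of $\tilde{\mathbf H}_k$; then we have

$$
\Omega_c^{l_1,1}\cap\Omega_c^{l_1,2}\cap\cdots\cap\Omega_c^{l_1,K}=\Omega_c^{l_1,\mathrm{Com}}, \tag{12}
$$

where $\Omega_c^{l_1,\mathrm{Com}}$ represents the partially common column support for the $l_1$th non-zero row of $\{\tilde{\mathbf H}_k\}_{k=1}^{K}$.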
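Properties (11) and (12) are statements about supports, so they can be illustrated with ordinary sets. The sketch below builds hypothetical toy angular channels ($M=6$, $N=8$, $K=3$, $L_G=2$, $L_c=2$, one user-specific path per user) with unit entries at hand-picked positions and checks both properties; the helper names are assumptions for illustration only.

```python
def row_support(h_ang, tol=1e-12):
    """Row set Omega_r^k of the non-zero elements of an angular channel."""
    return {i for i, row in enumerate(h_ang) if any(abs(x) > tol for x in row)}


def col_support(row, tol=1e-12):
    """Column set of the non-zero elements in one row."""
    return {j for j, x in enumerate(row) if abs(x) > tol}


def toy_channel(m, n, cols_per_row):
    """Hypothetical toy angular channel: unit entries at the given (row, column) positions."""
    h = [[0j] * n for _ in range(m)]
    for r, cols in cols_per_row.items():
        for c in cols:
            h[r][c] = 1 + 0j
    return h


rows = [1, 4]                                  # shared non-zero rows (common RIS-BS paths)
common = {1: {2, 5}, 4: {0, 3}}                # L_c = 2 shared columns per non-zero row
specific = [{1: {7}, 4: {6}}, {1: {0}, 4: {5}}, {1: {3}, 4: {7}}]   # one extra path per user
chans = [toy_channel(6, 8, {r: common[r] | s[r] for r in rows}) for s in specific]

print([sorted(row_support(h)) for h in chans])   # prints [[1, 4], [1, 4], [1, 4]], as in (11)
for r in rows:
    # Intersection over users recovers the partially common column support, as in (12)
    print(r, sorted(set.intersection(*(col_support(h[r]) for h in chans))))
```

The loop prints `1 [2, 5]` and `4 [0, 3]`. Note that the common columns may differ from row to row, which is why (12) is stated per non-zero row.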

Based on the above double-structured sparsity, the cascaded channels for different users can be jointly estimated to improve the channel estimation accuracy.

III-B Proposed DS-OMP Based Cascaded Channel Estimation

In this subsection, we propose the DS-OMP based cascaded channel estimation scheme by integrating the double-structured sparsity into the classical OMP algorithm. The procedure is summarized in Algorithm 1, which includes three key stages to detect the supports of the angular cascaded channels.

The main procedure of Algorithm 1 can be explained as follows. Firstly, the completely common row support $\Omega_r$ is jointly estimated in Step 1 by exploiting the row-structured sparsity, where $\Omega_r$ consists of the $L_G$ row indexes associated with the non-zero rows. Secondly, for the $l_1$th non-zero row, the partially common column support $\Omega_c^{l_1,\mathrm{Com}}$ is jointly estimated in Step 2 by exploiting the partially column-structured sparsity. Thirdly, the user-specific column supports of each user are individually estimated in Step 3. After detecting the supports of all sparse matrices, we adopt the LS algorithm to obtain the corresponding estimated matrices in Steps 4-8. It should be noted that the sparse signal in (9) is $\tilde{\mathbf H}_k^{H}$, so the sparse matrix estimated by the LS algorithm in Step 6 is $\hat{\tilde{\mathbf H}}_k^{H}$. Finally, we obtain the estimated cascaded channels $\{\hat{\mathbf H}_k\}_{k=1}^{K}$ in Step 9 by transforming the angular channels into spatial channels via (4), i.e., $\hat{\mathbf H}_k=\mathbf U_M\hat{\tilde{\mathbf H}}_k\mathbf U_N^{T}$.

In the following, we describe in detail how the first three stages estimate the completely common row support, the partially common column supports, and the individual column supports.

1) Stage 1: Estimating the completely common row support. Thanks to the row-structured sparsity of the angular cascaded channels, we can jointly estimate the completely common row support $\Omega_r$ for $\{\tilde{\mathbf H}_k\}_{k=1}^{K}$ by Algorithm 2.

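From the virtual angular-domain channel representation (4), we can find that the non-zero rows of $\{\tilde{\mathbf H}_k\}_{k=1}^{K}$ correspond to the columns with high power in the effective received pilots $\{\tilde{\mathbf Y}_k\}_{k=1}^{K}$. Since $\{\tilde{\mathbf H}_k\}_{k=1}^{K}$ have completely common non-zero rows, $\{\tilde{\mathbf Y}_k\}_{k=1}^{K}$ can be jointly utilized to estimate the completely common row support $\Omega_r$, which makes the estimate more robust to noise. Specifically, in Step 2 of Algorithm 2, we use a vector $\mathbf g$ of size $M\times 1$ to store the sum power of the columns of $\{\tilde{\mathbf Y}_k\}_{k=1}^{K}$, i.e., the $m$th entry of $\mathbf g$ is $\sum_{k=1}^{K}\|\tilde{\mathbf Y}_k(:,m)\|_2^2$. Finally, in Step 4, the indexes of the $L_G$ elements with the largest amplitudes in $\mathbf g$ are selected as the estimated completely common row support, i.e., $\Omega_r=\mathrm{supp}(\Gamma_{L_G}(\mathbf g))$, where $\Gamma_{L_G}(\mathbf x)$ denotes a prune operator on $\mathbf x$ that sets all but the $L_G$ elements with the largest amplitudes to zero, and $\mathrm{supp}(\mathbf x)$ denotes the support of $\mathbf x$, i.e., $\mathrm{supp}(\mathbf x)=\{i:\mathbf x(i)\neq 0\}$.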
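Stage 1 reduces to accumulating column powers over users and keeping the $L_G$ largest entries. A minimal sketch follows, assuming each $\tilde{\mathbf Y}_k$ is given as a list of $Q$ rows of length $M$; the function name is hypothetical, and the matrices in the example are hand-made numbers chosen so that one user alone is misled by a strong spurious column.

```python
def estimate_common_rows(y_tilde_list, n_rows):
    """Stage 1 sketch: jointly estimate the completely common row support Omega_r.

    y_tilde_list[k] is the Q x M effective measurement matrix Y_tilde_k (list of rows);
    n_rows plays the role of L_G.
    """
    m = len(y_tilde_list[0][0])
    g = [0.0] * m
    for y in y_tilde_list:            # sum over users ...
        for row in y:                 # ... and over the Q time slots
            for col, val in enumerate(row):
                g[col] += abs(val) ** 2
    # Prune operator: keep the indexes of the n_rows largest entries of g
    top = sorted(range(m), key=lambda i: g[i], reverse=True)[:n_rows]
    return sorted(top)


# Two users, Q = 3, M = 5; rows 1 and 3 of the angular channels are the true support
y1 = [[0.1, 2.0, 0.05, 1.5, 0.1],
      [0.0, 1.8j, 0.1, -1.2, 0.05],
      [0.05, 2.0, 0.0, 1.0, 0.1]]
y2 = [[1.3, 1.9, 0.0, 0.4, 0.0],   # user 2: weak column 3, strong spurious column 0
      [0.9, 2.1, 0.0, 0.3, 0.0],
      [0.0, 2.0, 0.0, 0.5, 0.0]]
print(estimate_common_rows([y2], 2))       # prints [0, 1]: user 2 alone picks a wrong row
print(estimate_common_rows([y1, y2], 2))   # prints [1, 3]: joint estimation recovers it
```

Pooling power across users is what lets the common rows dominate: the column-3 power of user 1 (4.69) outweighs the spurious column-0 power of user 2 (2.5).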

After obtaining the $L_G$ non-zero rows by Algorithm 2, we focus on estimating the column support for each non-zero row and each user in the following Stages 2 and 3.

2) Stage 2: Estimating the partially common column supports. Thanks to the partially column-structured sparsity of the angular cascaded channels, we can jointly estimate the partially common column supports $\{\Omega_c^{l_1,\mathrm{Com}}\}_{l_1=1}^{L_G}$ for $\{\tilde{\mathbf H}_k\}_{k=1}^{K}$ by Algorithm 3.

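For the $l_1$th non-zero row, we only need to utilize the effective measurement vector, i.e., the column of $\tilde{\mathbf Y}_k$ indexed by the $l_1$th element of $\Omega_r$, to estimate the partially common column support $\Omega_c^{l_1,\mathrm{Com}}$. The basic idea is to first estimate the column support $\Omega_c^{l_1,k}$ with $L_{r,k}$ indexes for each user $k$, and then to select the $L_c$ indexes that appear most frequently among all $\{\Omega_c^{l_1,k}\}_{k=1}^{K}$ as the estimated partially common column support $\Omega_c^{l_1,\mathrm{Com}}$.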

In order to estimate the column support $\Omega_c^{l_1,k}$ for each user $k$, the correlation between the sensing matrix $\tilde{\boldsymbol\Theta}$ and the residual vector needs to be calculated. As shown in Step 5 of Algorithm 3, the index of the column of $\tilde{\boldsymbol\Theta}$ most correlated with the residual vector is regarded as the newly found column support index. Based on the column support updated in Step 6, the estimated sparse vector is obtained by the LS algorithm in Step 8. Then, in Step 9, the residual vector is updated by removing the contribution of the non-zero elements estimated so far. In particular, a counting vector is used in Step 10 to record how many times each column index has been selected. Finally, in Step 13, the indexes of the $L_c$ elements with the largest values in the counting vector are selected as the estimated partially common column support $\Omega_c^{l_1,\mathrm{Com}}$.
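The per-user OMP search and the voting step of Stage 2 can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' released code: all function names are hypothetical, the sensing matrix is stored as a list of its $N$ columns, LS is solved through the normal equations, and $L_{r,k}$ and $L_c$ are assumed known. To keep the example verifiable, it uses a square unitary DFT sensing matrix ($Q=N=8$), for which OMP recovers the support exactly; this exercises the bookkeeping rather than compressive recovery with $Q<N$.

```python
import cmath
import math


def solve_ls(cols, y):
    """LS coefficients x minimizing ||sum_i x[i] * cols[i] - y||_2 via the normal equations."""
    k = len(cols)
    gram = [[sum(a.conjugate() * b for a, b in zip(cols[i], cols[j])) for j in range(k)]
            for i in range(k)]
    rhs = [sum(a.conjugate() * b for a, b in zip(cols[i], y)) for i in range(k)]
    for p in range(k):                      # Gauss-Jordan elimination, partial pivoting
        piv = max(range(p, k), key=lambda r: abs(gram[r][p]))
        gram[p], gram[piv] = gram[piv], gram[p]
        rhs[p], rhs[piv] = rhs[piv], rhs[p]
        for r in range(k):
            if r != p:
                f = gram[r][p] / gram[p][p]
                gram[r] = [x - f * z for x, z in zip(gram[r], gram[p])]
                rhs[r] -= f * rhs[p]
    return [rhs[i] / gram[i][i] for i in range(k)]


def residual(theta_cols, support, coeffs, y):
    """y minus the contribution of the columns already in the support."""
    return [y[q] - sum(theta_cols[n][q] * c for n, c in zip(support, coeffs))
            for q in range(len(y))]


def omp_support(theta_cols, y, n_iter):
    """Plain OMP: select n_iter column indexes of the sensing matrix for measurement y."""
    support, r = [], list(y)
    for _ in range(n_iter):
        # Correlation |theta_n^H r| between every column and the current residual
        corr = [abs(sum(t.conjugate() * v for t, v in zip(col, r))) for col in theta_cols]
        for n in support:
            corr[n] = -1.0                  # never select the same index twice
        support.append(max(range(len(corr)), key=corr.__getitem__))
        coeffs = solve_ls([theta_cols[n] for n in support], y)   # LS on current support
        r = residual(theta_cols, support, coeffs, y)              # remove estimated part
    return support


def estimate_common_columns(theta_cols, y_per_user, n_paths, n_common):
    """Stage 2 sketch for one non-zero row: vote over the per-user OMP supports."""
    counts = [0] * len(theta_cols)          # how often each column index is selected
    for y in y_per_user:
        for n in omp_support(theta_cols, y, n_paths):
            counts[n] += 1
    top = sorted(range(len(counts)), key=lambda n: counts[n], reverse=True)[:n_common]
    return sorted(top)


def synth(theta_cols, sparse):
    """Noiseless measurement for a sparse vector given as {index: value}."""
    return [sum(theta_cols[n][q] * v for n, v in sparse.items())
            for q in range(len(theta_cols[0]))]


N = 8
theta = [[cmath.exp(-2j * math.pi * q * n / N) / math.sqrt(N) for q in range(N)]
         for n in range(N)]                 # columns of a unitary 8 x 8 sensing matrix
ys = [synth(theta, {1: 1.0, 4: -0.8j, 6: 0.5}),
      synth(theta, {1: 0.9j, 4: 0.7, 2: -0.6}),
      synth(theta, {1: -1.1, 4: 0.6j, 7: 0.4})]
print(estimate_common_columns(theta, ys, n_paths=3, n_common=2))   # prints [1, 4]
```

Ties in the vote are broken by the lowest column index here, since Python's sort is stable; the text does not specify a tie-breaking rule.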

3) Stage 3: Estimating the individual column supports. Based on the estimated completely common row support $\Omega_r$ and the estimated partially common column supports $\{\Omega_c^{l_1,\mathrm{Com}}\}_{l_1=1}^{L_G}$, the column support $\Omega_c^{l_1,k}$ for each non-zero row $l_1$ and each user $k$ can be estimated by Algorithm 4.

For the $l_1$th non-zero row, $L_c$ column support indexes have already been estimated by Algorithm 3. Thus, $L_{r,k}-L_c$ user-specific column support indexes remain to be estimated for each user $k$. The column support is initialized as $\Omega_c^{l_1,k}=\Omega_c^{l_1,\mathrm{Com}}$. Based on this initial support, the estimated sparse vector and the residual vector are initialized in Steps 5 and 6. Then, the column supports $\Omega_c^{l_1,k}$ for all $l_1$ and $k$ are estimated in Steps 7-13 by following the same idea as Algorithm 3.
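Stage 3 differs from plain OMP only in its starting point: the support begins at $\Omega_c^{l_1,\mathrm{Com}}$, and only $L_{r,k}-L_c$ further indexes are searched. The self-contained sketch below makes the same assumptions as a plain-Python OMP (hypothetical names, sensing matrix as a list of columns, LS via normal equations, known $L_{r,k}$ and $L_c$) and uses a unitary $8\times 8$ DFT sensing matrix so that the outcome is exact and checkable.

```python
import cmath
import math


def solve_ls(cols, y):
    """LS coefficients x minimizing ||sum_i x[i] * cols[i] - y||_2 via the normal equations."""
    k = len(cols)
    gram = [[sum(a.conjugate() * b for a, b in zip(cols[i], cols[j])) for j in range(k)]
            for i in range(k)]
    rhs = [sum(a.conjugate() * b for a, b in zip(cols[i], y)) for i in range(k)]
    for p in range(k):                      # Gauss-Jordan elimination, partial pivoting
        piv = max(range(p, k), key=lambda r: abs(gram[r][p]))
        gram[p], gram[piv] = gram[piv], gram[p]
        rhs[p], rhs[piv] = rhs[piv], rhs[p]
        for r in range(k):
            if r != p:
                f = gram[r][p] / gram[p][p]
                gram[r] = [x - f * z for x, z in zip(gram[r], gram[p])]
                rhs[r] -= f * rhs[p]
    return [rhs[i] / gram[i][i] for i in range(k)]


def residual(theta_cols, support, coeffs, y):
    """y minus the contribution of the columns already in the support."""
    return [y[q] - sum(theta_cols[n][q] * c for n, c in zip(support, coeffs))
            for q in range(len(y))]


def estimate_user_columns(theta_cols, y, common_support, n_paths):
    """Stage 3 sketch: extend the common support with n_paths - L_c user-specific indexes."""
    support = list(common_support)
    coeffs = solve_ls([theta_cols[n] for n in support], y)   # LS on the common support
    r = residual(theta_cols, support, coeffs, y)              # initial residual
    for _ in range(n_paths - len(support)):
        corr = [abs(sum(t.conjugate() * v for t, v in zip(col, r))) for col in theta_cols]
        for n in support:
            corr[n] = -1.0                  # never select the same index twice
        support.append(max(range(len(corr)), key=corr.__getitem__))
        coeffs = solve_ls([theta_cols[n] for n in support], y)
        r = residual(theta_cols, support, coeffs, y)
    return support, coeffs                  # final LS coefficients on the full support


def synth(theta_cols, sparse):
    """Noiseless measurement for a sparse vector given as {index: value}."""
    return [sum(theta_cols[n][q] * v for n, v in sparse.items())
            for q in range(len(theta_cols[0]))]


N = 8
theta = [[cmath.exp(-2j * math.pi * q * n / N) / math.sqrt(N) for q in range(N)]
         for n in range(N)]
y0 = synth(theta, {1: 1.0, 4: -0.8j, 6: 0.5})
support, coeffs = estimate_user_columns(theta, y0, common_support=[1, 4], n_paths=3)
print(support)   # prints [1, 4, 6]
```

Returning the LS coefficients together with the support mirrors the LS step that Algorithm 1 applies once all supports are known.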

Through the above three stages, the supports of all angular cascaded channels are estimated by exploiting the double-structured sparsity. It should be pointed out that, if there are no common scatterers between the RIS and the users, the double-structured sparse channel reduces to a row-structured sparse channel. In this case, the cascaded channel estimation can still be solved by the proposed DS-OMP algorithm with Stage 2 removed.

III-C Computational Complexity Analysis

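In this subsection, the computational complexity of the proposed DS-OMP algorithm is analyzed in terms of the three stages of support detection. In Stage 1, the computational complexity mainly comes from Step 2 in Algorithm 2, which calculates the power of the columns of $\tilde{\mathbf Y}_k$ of size $Q\times M$ for $k=1,2,\dots,K$; the corresponding computational complexity is $\mathcal O(KMQ)$. In Stage 2, for each non-zero row $l_1$ and each user $k$ in Algorithm 3, the computational complexity is the same as that of the OMP algorithm [6] with the $Q\times N$ sensing matrix $\tilde{\boldsymbol\Theta}$. Considering the $L_GK$ iterations over all non-zero rows and users, the overall computational complexity of Algorithm 3 is $L_GK$ times that of a single OMP run. Similarly, the overall computational complexity of Algorithm 4 is $L_GK$ times that of a single OMP run with $L_{r,k}-L_c$ iterations. Therefore, the overall computational complexity of the proposed DS-OMP algorithm is the sum of the complexities of these three stages.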

IV Simulation Results

In our simulation, we consider that the number of BS antennas, RIS elements and users are respectively (), (, ), and . The number of paths between the RIS and the BS is , and the number of paths from the th user to the RIS is set as for . All spatial angles are assumed to be on the quantized grids. Each element of RIS reflecting matrix is selected from by considering discrete phase shifts of the RIS [7]. , where denotes the distance between the BS and RIS and is assumed to be . , where denotes the distance between the RIS and user and is assumed to be for [7]. The SNR is defined as in (14) and is set as dB.

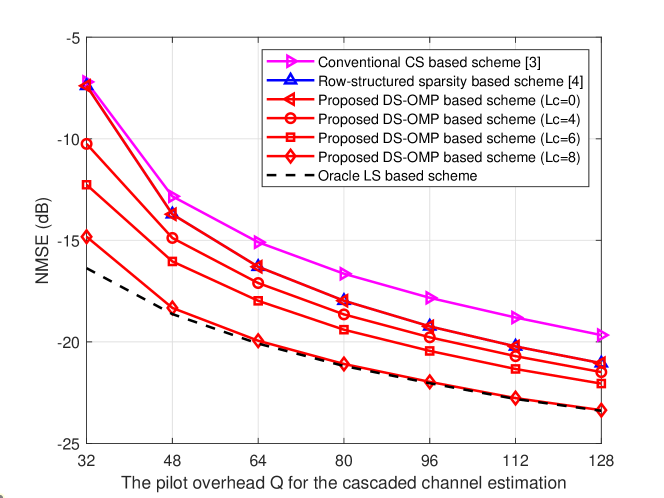

We compare the proposed DS-OMP based scheme with the conventional CS based scheme [3] and the row-structured sparsity based scheme [4]. In the conventional CS based scheme, the OMP algorithm is used to estimate the sparse cascaded channel $\tilde{\mathbf H}_k$ of each user $k$ separately. In the row-structured sparsity based scheme, the common row support with $L_G$ indexes is first estimated, and then, for each user $k$ and each non-zero row $l_1$, $L_{r,k}$ column support indexes are estimated by following the idea of the classical OMP algorithm. In addition, we consider the oracle LS scheme as our benchmark, where the supports of all sparse channels are assumed to be perfectly known.

Fig. 2 shows the normalized mean square error (NMSE) performance against the pilot overhead, i.e., the number of time slots $Q$ for pilot transmission. As shown in Fig. 2, to achieve the same estimation accuracy, the proposed DS-OMP based scheme requires lower pilot overhead than the two existing schemes [3, 4]. Note that when there is no common path between the RIS and the users, i.e., $L_c=0$, the double-structured sparsity reduces to the row-structured sparsity [4], so the proposed DS-OMP based scheme and the row-structured sparsity based scheme achieve the same NMSE performance. As the number of common paths $L_c$ between the RIS and the users increases, the NMSE performance of the proposed scheme improves and approaches the oracle LS benchmark with perfectly known channel supports.
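The section does not spell out its NMSE formula, so the sketch below assumes one common definition: the squared Frobenius error summed over users, divided by the total channel energy, averaged in the linear domain over Monte Carlo trials and only then converted to dB. The function names are hypothetical, and matrices are lists of rows.

```python
import math


def nmse(estimates, truths):
    """Linear NMSE for one realization (assumed definition):
    sum_k ||H_hat_k - H_k||_F^2 / sum_k ||H_k||_F^2, over lists of K matrices.
    """
    err = sum(abs(a - b) ** 2
              for est, tru in zip(estimates, truths)
              for row_e, row_t in zip(est, tru)
              for a, b in zip(row_e, row_t))
    ref = sum(abs(b) ** 2 for tru in truths for row in tru for b in row)
    return err / ref


def average_nmse_db(trials):
    """Average the linear NMSE over (estimates, truths) trials, then convert to dB."""
    lin = [nmse(e, t) for e, t in trials]
    return 10 * math.log10(sum(lin) / len(lin))


truth = [[[1.0, 0.0], [0.0, 1.0]]]          # one user, toy 2 x 2 cascaded channel
estimate = [[[1.1, 0.0], [0.0, 0.9]]]
print(round(10 * math.log10(nmse(estimate, truth)), 6))   # prints -20.0
```

Averaging in the linear domain before converting to dB keeps a few poor realizations from being masked, which is the usual convention for NMSE curves such as Fig. 2.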

V Conclusions

In this paper, we developed a low-overhead cascaded channel estimation scheme for RIS assisted wireless communication systems. Specifically, we first analyzed the double-structured sparsity of the angular cascaded channels among users. Based on this double-structured sparsity, we then proposed a DS-OMP algorithm to reduce the pilot overhead. Simulation results show that the pilot overhead required by the proposed DS-OMP algorithm is lower than that of existing algorithms. In future work, we will apply the double-structured sparsity to super-resolution channel estimation, considering that channel angles are continuous in practice.

References

- [1] L. Dai et al., “Reconfigurable intelligent surface-based wireless communications: Antenna design, prototyping, and experimental results,” IEEE Access, vol. 8, pp. 45913–45923, Mar. 2020.

- [2] M. Di Renzo et al., “Reconfigurable intelligent surfaces vs. relaying: Differences, similarities, and performance comparison,” IEEE Open J. Commun. Soc., vol. 1, pp. 798–807, Jun. 2020.

- [3] P. Wang, J. Fang, H. Duan, and H. Li, “Compressed channel estimation for intelligent reflecting surface-assisted millimeter wave systems,” IEEE Signal Process. Lett., vol. 27, pp. 905–909, May 2020.

- [4] J. Chen, Y.-C. Liang, H. V. Cheng, and W. Yu, “Channel estimation for reconfigurable intelligent surface aided multi-user MIMO systems,” arXiv preprint arXiv:1912.03619, Dec. 2019.

- [5] C. Hu, L. Dai, T. Mir, Z. Gao, and J. Fang, “Super-resolution channel estimation for mmWave massive MIMO with hybrid precoding,” IEEE Trans. Veh. Technol., vol. 67, no. 9, pp. 8954–8958, Sep. 2018.

- [6] X. Gao, L. Dai, S. Zhou, A. M. Sayeed, and L. Hanzo, “Wideband beamspace channel estimation for millimeter-wave MIMO systems relying on lens antenna arrays,” IEEE Trans. Signal Process., vol. 67, no. 18, pp. 4809–4824, Sep. 2019.

- [7] Q. Wu and R. Zhang, “Beamforming optimization for wireless network aided by intelligent reflecting surface with discrete phase shifts,” IEEE Trans. Commun., vol. 68, no. 3, pp. 1838–1851, 2020.