
To appear in an IEEE VGTC sponsored conference.


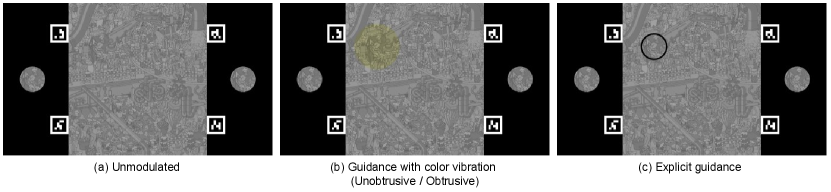

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/272db309-3202-41c9-9de1-d60dc425cb21/x1.png) Overview of our unobtrusive visual guidance (VG) using color vibrations. (a) A MacAdam ellipse [17] contains colors that are indistinguishable to the average human eye when juxtaposed with the color at the center of the ellipse on the chromaticity diagram. For illustration, the ellipses are shown at 10 times their actual size. (b) We select color pairs for color vibration by multiplying the major axis of each MacAdam ellipse by an amplitude ratio. We empirically determine the range of amplitude ratios suitable for unobtrusive VG. (c) By modulating regions of interest in an image with the amplitude ratio determined in (b), we implement VG without significantly altering the perceptual appearance of the image.


ChromaGazer: Unobtrusive Visual Modulation using Imperceptible Color Vibration for Visual Guidance

Abstract

Visual guidance (VG) is critical for directing user attention in virtual and augmented reality applications. However, conventional methods using explicit visual annotations can obstruct visibility and increase cognitive load. To address this, we propose an unobtrusive VG technique based on color vibration, a phenomenon in which rapidly alternating colors at frequencies above 25 Hz are perceived as a single intermediate color. We hypothesize that an intermediate perceptual state exists between complete color fusion and perceptual flicker, where colors appear subtly different from a uniform color without conscious perception of flicker. To investigate this, we conducted two experiments. First, we determined the thresholds between complete fusion, the intermediate state, and perceptual flicker by varying the amplitude of color vibration pairs in a user study. Second, we applied these threshold parameters to modulate regions in natural images and evaluated their effectiveness in guiding users’ gaze using eye-tracking data. Our results show that color vibration can subtly guide gaze while minimizing cognitive load, providing a novel approach for unobtrusive VG in VR and AR applications.

keywords:

visual guidance, imperceptible color vibration, color perception, augmented reality

Introduction

Visual guidance (VG) is crucial for directing users’ attention to specific areas of interest by overlaying information on virtual or real scenes. Beyond traditional applications in graphics and web design [23], VG has gained prominence in virtual reality (VR) and augmented reality (AR) environments, such as virtual training [38], exploration support [20], and memory support [34]. However, traditional VG methods that use explicit visual annotations such as arrows or circles [25, 26, 35] can increase cognitive load and obscure target objects.

To address these challenges, researchers have explored unobtrusive VG techniques that minimize visual distraction while preserving scene context. One approach is to manipulate visual saliency [14], the perceptual quality that makes certain elements stand out and attract attention. Previous studies have modulated various aspects of visual saliency, such as color and contrast [3, 21, 19, 32], or introduced flicker [4, 20, 31], to direct users’ gaze to regions of interest. These methods aim to enhance the salience of target objects without adding extraneous graphical elements. However, most saliency-based techniques inevitably alter the appearance of the scene, potentially altering the meaning of the content, causing important information to be missed, and reducing consistency with the real world in AR applications.

To overcome these limitations, we introduce ChromaGazer, a VG method that uses imperceptible color vibration to guide the user’s gaze without altering the visual appearance of the content. Imperceptible color vibration occurs when two colors of the same luminance alternate at frequencies above the critical color fusion frequency (CCFF) [5], approximately 25 Hz. At these frequencies, the human visual system perceives the alternating colors as a single fused color. We hypothesize that by carefully selecting color pairs and adjusting their vibration amplitude, it is possible to create an intermediate perceptual state that subtly attracts users’ attention to target regions without them consciously perceiving changes in the object’s appearance. We define this intermediate state as one in which the target appears slightly different from a monochromatic color, but the flicker is not overtly perceived.

To assess the feasibility of using color vibration for VG, we conducted two experiments. In the first experiment, we systematically varied the vibration amplitude of color pairs based on the MacAdam ellipse [17] and asked participants to identify thresholds between three perceptual states: explicit flicker, intermediate, and fused colors. By determining these thresholds, we aimed to identify optimal parameters for producing the desired intermediate state. In the second experiment, we applied color vibrations with the parameters identified in the first experiment to specific regions within natural images. We then evaluated their effectiveness in directing participants’ gaze using eye-tracking data. The results showed that our method successfully redirected users’ gaze without their conscious awareness.

Our main contributions include:

- We propose ChromaGazer, a VG technique that uses imperceptible color vibrations to guide the user’s gaze without altering the appearance of the scene.
- Through a user study, we identify the parameters that create an intermediate state balancing attention guidance against the visual integrity of the content.
- We demonstrate the effectiveness of our color-vibration-based VG method in guiding users’ gaze to target regions within natural images, as measured by eye-tracking data.
- We explore the design considerations of our unobtrusive VG approach using color vibration and suggest avenues for future research.

1 Related Work

1.1 Visual Guidance

Visual guidance has been extensively studied due to its wide range of applications, such as guiding users through digital information [23, 20], assisting in industrial tasks [28], and supporting training [34, 39, 38]. The control of visual attention involves two mechanisms: top-down and bottom-up attention [24]. Top-down attention is controlled by internal factors such as intentions, goals, and knowledge, and is typically attracted by cues such as arrows, icons, or textual instructions. Conversely, bottom-up attention is controlled by external factors, namely the characteristics of visual stimuli, such as flickering lights, bright colors, and motion.

Traditionally, most visual guidance techniques have relied on approaches that attract top-down attention, such as outlines like circular or rectangular frames [12, 13, 36] or arrows [27, 37, 39]. On the other hand, methods that use bottom-up attention for visual guidance have also been the subject of longstanding research. These approaches manipulate the visual saliency of specific elements within a visual scene, making them stand out from other elements. Various parameters for adjusting scene saliency have been investigated, with most methods falling into two categories: color adjustment [3, 21, 19, 32] or flicker control [4, 20, 31]. In addition, control techniques based on saliency maps [14], which quantify visual saliency using insights from neuroscience, have been proposed. For example, Kokui et al. [15] applied color shifts based on saliency maps, while other studies have focused on modulating spatial frequency or texture power maps. Furthermore, researchers have explored combining multiple parameters that influence saliency to achieve more effective gaze guidance. Examples include the simultaneous modulation of color and brightness [7, 29], the introduction of subtle blur effects to modulate visual saliency [8], and the application of genetic algorithms [22].

Recently, Suzuki et al. proposed an approach that combines various parameters that affect visual saliency, such as blur, brightness, saturation, and contrast [33]. Sutton et al. also proposed a gaze guidance method that uses a see-through optical head-mounted display to modulate multiple visual saliency parameters of a real see-through view [32]. However, most existing saliency manipulation methods inevitably alter the appearance of the content, which can be problematic. This study investigates a novel approach that exploits the phenomenon of imperceptible color vibration to control visual saliency and guide gaze without altering the appearance of the content.

1.2 Imperceptible Color Vibration

Imperceptible color vibration exploits the human visual property that observers perceive an intermediate color when two colors of the same luminance are alternated at high frequencies [5]. The critical color fusion frequency (CCFF), the frequency at which humans cannot perceive color vibrations, is approximately 25 Hz, which is about half of the critical flicker fusion frequency (CFF) at which luminance flicker becomes imperceptible [18]. This imperceptible color vibration can be used without the need for dedicated high-refresh-rate displays since the refresh rates of commercially available LCD monitors and projectors exceed 60 Hz. Researchers are investigating the application of this principle to embed invisible information by rapidly altering the colors of specific areas of an image displayed on a screen, such as embedding invisible 2D QR codes that can be read by a camera [1, 2].

Several studies have been conducted on efficient search methods for imperceptible color vibration pairs that can be detected by the color sensor [1, 9]. Recently, Hattori et al. [10] further improved the efficiency of color search by using MacAdam ellipses [17] as the basis for perceptual thresholds. They conducted user experiments to investigate the threshold for perceiving flicker by varying the amplitude of the color vibration along the long diameter of the MacAdam ellipse. In this paper, we extend their perceptual search for color vibration pairs to the application of gaze control.

1.3 Color Vibration and Bottom-up Attention

Color vibrations are difficult to perceive consciously above the CCFF. However, although the ventral occipital (VO) cortex, which is involved in advanced color processing and visual cognition, does not respond to color vibrations, the earlier visual areas V1 to V4 do [11]. Because bottom-up attention originates from primitive visual stimuli processed in V1 to V4, we hypothesize that color vibrations may attract bottom-up attention in the early visual cortex even though they are not perceived in higher-order processing areas. This hypothesis underlies the present research. Although testing it in detail requires accurate measurements of brain activity, this study can be positioned as a first pilot test of the hypothesis.

2 Evaluation of Intermediate Perception in Color Vibration

We hypothesize that there is an intermediate state in the perception of color vibrations in which the object appears to be subtly different from a solid color, but without a clearly perceptible flicker. Before considering gaze guidance using this intermediate state, this study first investigated whether such intermediate states can occur and, if so, in what range of amplitudes they fall.

Hattori et al. [10] selected color vibration pairs based on MacAdam ellipses as a perceptual criterion and confirmed the threshold of color vibration amplitude at which flicker is not detectable by the human eye, using the major axis of the ellipses as a reference. This study further subdivides this color vibration detection threshold and investigates in detail, through user experiments, the vibration amplitude threshold for color pairs that produce the intermediate state.

2.1 Selection of Perceptual Color Vibration Pairs

To select suitable color vibration pairs for our experiments, we used MacAdam ellipses to account for the non-uniformity in human color perception.

Panel (a) of the teaser figure shows the distribution of MacAdam ellipses on the chromaticity diagram. Each ellipse represents a region where colors are indistinguishable from a center reference color when placed side by side, as determined experimentally. By using MacAdam ellipses, we can select color vibration pairs that take into account the varying sensitivity of the human visual system to different colors. For example, the MacAdam ellipses are larger in the green region than in the blue region, indicating that small color differences in green are less perceptible than those in blue within the chromaticity space.

MacAdam ellipses are defined at 25 points on the chromaticity diagram, each by its center $(x_c, y_c)$, rotation angle $\theta$, and the lengths $a$ and $b$ of its long and short diameters, respectively. As color vibration pairs, we choose the two points obtained by scaling the long diameter of an ellipse by a ratio $r$ (teaser figure, panel b), which are denoted as

$$(x_{1,2},\; y_{1,2}) = (x_c \pm r\,a\cos\theta,\; y_c \pm r\,a\sin\theta) \tag{1}$$

This allows for the selection of color vibration pairs while considering the non-uniformity of human color perception.
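As a rough illustration of this selection rule, the following Python sketch steps from an ellipse center in both directions along the major axis. The function name, its parameters, and the example ellipse values are hypothetical placeholders rather than values from the study, and the axis length is assumed to be expressed in chromaticity units.

```python
import math

def vibration_pair(center, theta_deg, axis_len, ratio):
    """Return two chromaticities placed symmetrically about `center`
    along the ellipse's major axis, scaled by `ratio`."""
    cx, cy = center
    theta = math.radians(theta_deg)
    dx = ratio * axis_len * math.cos(theta)
    dy = ratio * axis_len * math.sin(theta)
    return (cx + dx, cy + dy), (cx - dx, cy - dy)

# Hypothetical ellipse: center, orientation, and axis length are made up.
c1, c2 = vibration_pair(center=(0.30, 0.32), theta_deg=60.0,
                        axis_len=0.002, ratio=10)
```

Because the two colors are mirrored about the center, their midpoint is the base color, which is the color the pair is expected to fuse into.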

The $xy$ chromaticity diagram is obtained by normalizing out the luminance of the CIEXYZ color space. Therefore, when displaying colors based on the selected color pairs, it is necessary to supply the luminance $Y$. As $Y$ approaches 0, the color pairs approach black and the colors become nearly invisible. In contrast, as $Y$ approaches 1, the brightness of the color pairs exceeds the sRGB range. Therefore, we fixed $Y$ at an intermediate value and converted $(x, y, Y)$ to XYZ as

$$X = \frac{x}{y}\,Y, \qquad Y = Y, \qquad Z = \frac{1 - x - y}{y}\,Y \tag{2}$$

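Then, the color pairs selected in CIEXYZ are converted to the linear sRGB color system for display:

$$\begin{pmatrix} R \\ G \\ B \end{pmatrix} = \begin{pmatrix} 3.2406 & -1.5372 & -0.4986 \\ -0.9689 & 1.8758 & 0.0415 \\ 0.0557 & -0.2040 & 1.0570 \end{pmatrix} \begin{pmatrix} X \\ Y \\ Z \end{pmatrix} \tag{3}$$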

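The following gamma transformations were then applied to each channel $C \in \{R, G, B\}$:

$$C' = 12.92\,C, \qquad C \le 0.0031308 \tag{4}$$

$$C' = 1.055\,C^{1/2.4} - 0.055, \qquad C > 0.0031308 \tag{5}$$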

For implementation, we use the Colour science library for Python (Colour 0.4.4 by Colour Developers, https://zenodo.org/records/10396329). The CIE 1931 2° observer function under D65 illumination is used for color conversion.
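The conversion chain from chromaticity and luminance to displayable sRGB can be sketched in plain Python as follows. This is a minimal sketch that assumes the standard sRGB (D65) matrix and transfer function; it does not call the Colour library used in the study, and the function names are illustrative only.

```python
def xyY_to_XYZ(x, y, Y):
    """Convert CIE xyY to CIE XYZ (y must be non-zero)."""
    X = x * Y / y
    Z = (1.0 - x - y) * Y / y
    return X, Y, Z

# Standard XYZ -> linear sRGB matrix for a D65 white point.
XYZ_TO_RGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)

def gamma_encode(c):
    """sRGB transfer function for one linear channel, clipped to [0, 1]."""
    c = min(max(c, 0.0), 1.0)
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055

def xyY_to_sRGB(x, y, Y):
    """Chromaticity (x, y) plus luminance Y -> gamma-encoded sRGB in [0, 1]."""
    XYZ = xyY_to_XYZ(x, y, Y)
    linear = [sum(m * v for m, v in zip(row, XYZ)) for row in XYZ_TO_RGB]
    return tuple(gamma_encode(c) for c in linear)
```

Clipping in `gamma_encode` hides out-of-gamut colors, so a practical implementation would likely check the linear values first and reject pairs that leave the sRGB gamut.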

Hattori et al. [10] previously selected color vibration pairs by varying the amplitude ratio according to the above criteria and presented them to participants. They derived the threshold at which 50 % of the participants perceived color vibration, treated amplitude ratios below this threshold as imperceptible color vibration, and applied them to the detection of color vibration by cameras. In contrast, we further subdivide the perception of color vibration and evaluate the range of amplitude ratios that results in a state of “different from a solid color but not clearly flickering”.

2.2 Experiment Setup

2.2.1 Participants

17 participants (13 males, 4 females; age range 23–45 years, mean age 30.3 years) participated in the study. 9 participants used corrective lenses (glasses or contact lenses). The study was approved by the Institutional Review Board at [name of institution, omitted for double-blind review].

2.2.2 Generation of Color Vibration Images

In this experiment, we varied three parameters: the amplitude ratio, the diameter of the image circle, and the display position of the image. We presented various combinations of color vibrations to participants and measured human perceptual thresholds based on their responses.

Participants were asked to choose one of the following three perceptual states:

1. Indistinguishable from a solid color: The image appears as a uniform color with no noticeable differences.
2. Different from a solid color, but not clearly flickering: The image appears slightly different from a solid color, but there is no noticeable flickering.
3. Clearly flickering: The image has noticeable flickering.

We selected one of the MacAdam ellipse centers in the chromaticity space as the base color for generating color vibration pairs, with the luminance held fixed. This color is near the center of the chromaticity diagram and ensures that the color pairs remain within the sRGB gamut even as the amplitude ratio increases. The corresponding sRGB value follows from the conversion described in Sec. 2.1. We generated color pairs with amplitude ratios ranging from 0 to 50 in increments of 5, resulting in 11 sets of color vibration pairs.

Note that in this paper we focus only on vibrating grayscale colors, since individual perception of color vibration varies with hue and saturation. Investigating the amplitude ratio for arbitrary colors is left for future work.

2.2.3 Apparatus

Participants were seated with their chins on a rest to stabilize head position, ensuring their eyes were level with the center of a 42.5-inch LCD display (43UN700-BAJP, LG Electronics) positioned 500 mm away. The display was calibrated to the sRGB color space using a monitor calibration tool (Spyder X Elite, Datacolor) and set to a luminance of 166 cd/m².

2.2.4 Experimental Conditions and Procedure

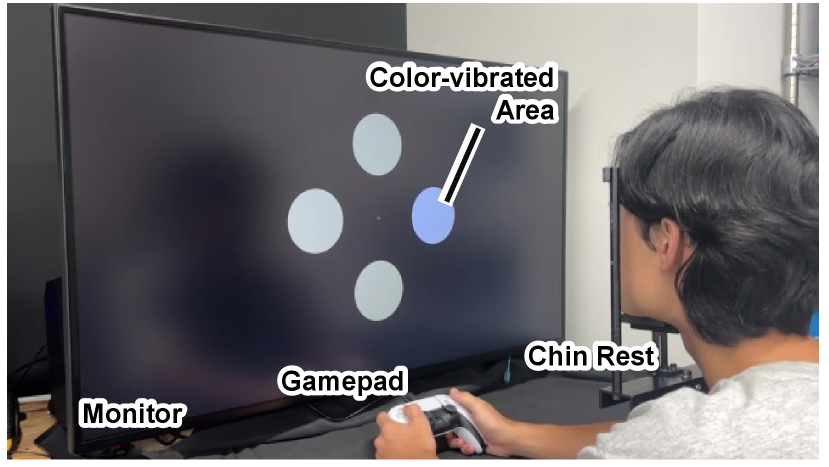

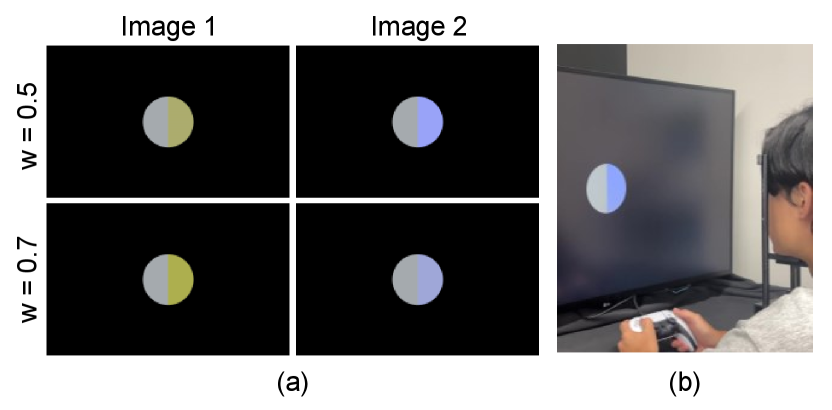

Figure 1 shows the experimental setup. Prior to the main experiment, we conducted a color adaptation procedure to account for individual differences in color perception. Participants were shown an image circle (diameter 120 mm) centered on the display and split vertically: the left half showed a solid color without vibration, and the right half showed a color-vibrating image at a specific amplitude ratio. Participants adjusted a color-fitting parameter, which determined the weight between the two colors in the vibrating pair, using the left and right buttons of a gamepad in increments of 0.02, aiming to make the left and right halves appear identical (Fig. 2).

Mathematically, the fitting parameter set the ratio of the distances along the major axis of the MacAdam ellipse from the target color to the two vibrating colors (yellowish side and bluish side). This adjustment personalized the center of the color vibration to each participant’s perception.

After each adaptation, an inverted stimulus image was displayed for 100 ms to reduce afterimage effects: the image circle turned black while the surrounding background kept the display color. This fitting process was repeated five times with decreasing amplitude ratios to familiarize participants with the range of color vibrations and to help them understand the concept of "flicker."

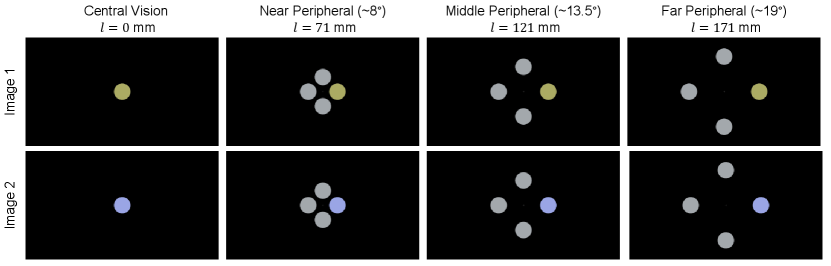

Figure 3 shows an example of the images presented in the main experiment. Color vibration images were displayed as circles with diameters of 60 mm (small), 80 mm (medium), or 100 mm (large). The position of each image circle was specified by the distance from the center of the display to the center of the circle, which was set to 0, 71, 121, or 171 mm. These distances correspond to central vision (0° visual angle), near peripheral vision (approximately 8°), middle peripheral vision (approximately 13.5°), and far peripheral vision (approximately 19°), respectively. For positions other than central vision, four image circles were arranged symmetrically around the center to eliminate directional bias.
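The mapping from on-screen offset to visual angle follows from simple trigonometry at the 500 mm viewing distance. The short Python sketch below reproduces it; the function name is illustrative, and it assumes the eye is level with the display center and the display is flat.

```python
import math

def eccentricity_deg(offset_mm, viewing_distance_mm=500.0):
    """Visual angle (degrees) of a point `offset_mm` from the display center."""
    return math.degrees(math.atan2(offset_mm, viewing_distance_mm))

for offset in (0, 71, 121, 171):
    print(f"{offset:>3} mm -> {eccentricity_deg(offset):.1f} deg")
```

The results agree with the approximate angles quoted above to within a fraction of a degree.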

Participants were presented with a random sequence of the 132 combinations (11 × 3 × 4) of amplitude ratio, circle diameter, and display position. On each trial, they used a gamepad (DualSense Wireless Controller, Sony Interactive Entertainment) to select one of the three perceptual states described above. When multiple image circles were displayed (for peripheral vision conditions), participants also indicated which circle they perceived as vibrating. An inverted stimulus was displayed after each response to prevent afterimages. Participants were given short breaks after every 10 trials to reduce fatigue.
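A trial list of this shape can be generated as the Cartesian product of the three factors and then shuffled. The sketch below is one possible way to do so in Python; the constant and function names are hypothetical, and the 0 mm offset stands for the central-vision condition.

```python
import itertools
import random

AMPLITUDE_RATIOS = list(range(0, 51, 5))  # 11 levels: 0, 5, ..., 50
DIAMETERS_MM = [60, 80, 100]              # small, medium, large
OFFSETS_MM = [0, 71, 121, 171]            # central to far peripheral

def make_trials(seed=None):
    """Return all factor combinations in a random order."""
    trials = list(itertools.product(AMPLITUDE_RATIOS, DIAMETERS_MM, OFFSETS_MM))
    random.Random(seed).shuffle(trials)
    return trials

trials = make_trials(seed=0)
```

Passing a seed makes a participant's order reproducible, which can help when re-analyzing logged responses.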

2.3 Result and Discussion

We calculated the proportion of participants who reported each perceptual state at each amplitude ratio for each condition. By fitting sigmoid functions to the data, we estimated the thresholds at which participants had a 50 % and 75 % probability of perceiving the image as "different from a solid color" and "clearly flickering". In the peripheral vision conditions, if a participant incorrectly identified which of the four image circles was vibrating, we assumed that they did not perceive an anomaly.
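To make the threshold estimation concrete, the sketch below fits a two-parameter logistic curve to response proportions and inverts it at a chosen probability. It is only an illustration under stated assumptions: the logistic form, the coarse grid-search fit, and the synthetic data are choices made here, not the fitting procedure or the data of the study.

```python
import math

def logistic(r, r0, k):
    """Probability of a positive response at amplitude ratio r."""
    return 1.0 / (1.0 + math.exp(-k * (r - r0)))

def fit_logistic(ratios, proportions):
    """Least-squares grid search over midpoint r0 and slope k."""
    best = None
    for i in range(501):          # r0 in [0, 50], step 0.1
        r0 = i * 0.1
        for j in range(1, 41):    # k in [0.05, 2.0], step 0.05
            k = j * 0.05
            sse = sum((logistic(r, r0, k) - p) ** 2
                      for r, p in zip(ratios, proportions))
            if best is None or sse < best[0]:
                best = (sse, r0, k)
    return best[1], best[2]

def threshold(prob, r0, k):
    """Amplitude ratio at which the fitted curve reaches `prob`."""
    return r0 + math.log(prob / (1.0 - prob)) / k

# Synthetic proportions for illustration only (not the study's data).
ratios = list(range(0, 51, 5))
proportions = [logistic(r, 15.0, 0.4) for r in ratios]
r0, k = fit_logistic(ratios, proportions)
t50, t75 = threshold(0.5, r0, k), threshold(0.75, r0, k)
```

With a logistic curve the 50 % threshold equals the midpoint `r0`, and the 75 % threshold lies `ln(3)/k` above it, so a shallower slope widens the gap between the two.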

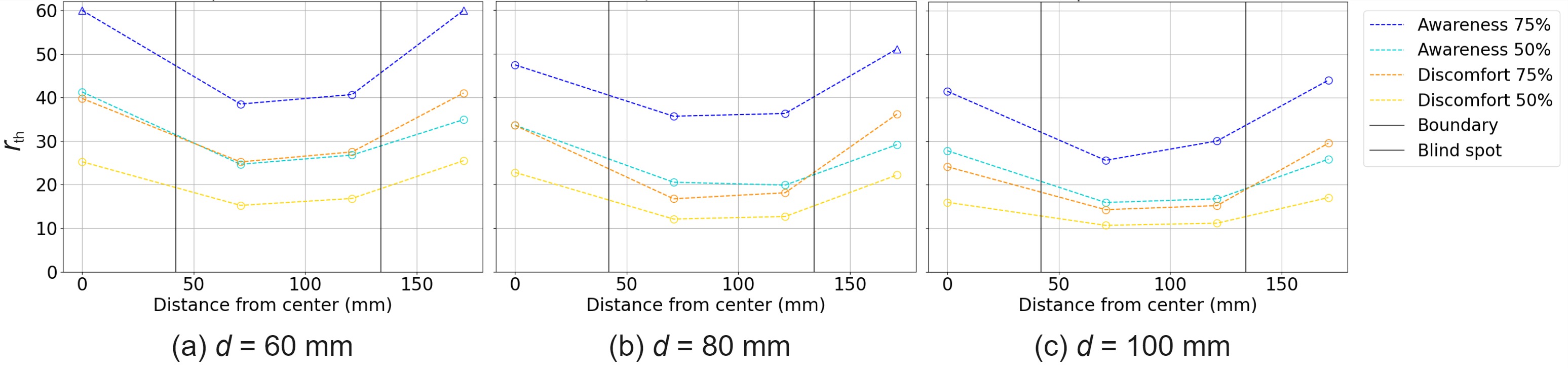

Figure 4 shows the experimental results. From left to right, the graphs show the thresholds at which participants perceived the stimulus as different from a solid color with 50 % and 75 % probability, and the threshold at which they perceived obvious flicker, for each display position and diameter of the image circle.

2.3.1 Effect of Color Vibration Size

Figure 4 shows that as the image circle diameter increases, the threshold decreases.

Specifically, for circle diameters of 80 and 100 mm, the lowest thresholds at which participants perceived the image as “different from a solid color” with 50 % probability were 16.73 and 14.29, respectively, and the corresponding 50 % thresholds for perceiving “obvious flicker” were lower, at 12.08 and 10.68. For the 75 % criterion, the lowest “different from a solid color” thresholds occurred at the 71 mm position. This suggests that larger color vibration areas make it easier for participants to perceive color vibrations even at smaller amplitude ratios.

2.3.2 Effect of Display Position

As Fig. 4 shows, the thresholds varied with display position. Perception of color vibration was less sensitive in central vision (0 mm) than in near and middle peripheral vision (71 and 121 mm). However, in the central vision condition only one circle was displayed, which eliminated the possibility of color comparison with adjacent stimuli and may have contributed to the higher thresholds observed.

In far peripheral vision (171 mm), sensitivity to color vibration decreased compared to near and middle peripheral vision. This indicates that sensitivity to color vibration depends on the position within the visual field, likely due to characteristics of the visual system such as reduced spatial and temporal resolution in peripheral areas.

2.3.3 Intermediate Perceptual State of Color Vibrations

The observed difference between the thresholds for "different from a solid color" and "clearly flickering" supports the existence of an intermediate perceptual state. If such a state did not exist, these thresholds would be nearly identical under the same conditions. This indicates that the subject first perceives the color difference from the monochromatic color, and then there is a process of flicker perception.

These results show that the perception of color vibration is influenced by factors beyond the amplitude ratio, including image size and display position. By choosing amplitude ratios between the "different from a solid color" and "clearly flickering" thresholds for each condition, we can design effective gaze guidance that avoids causing noticeable flicker.

3 Evaluation of Color Vibration Vision Guidance

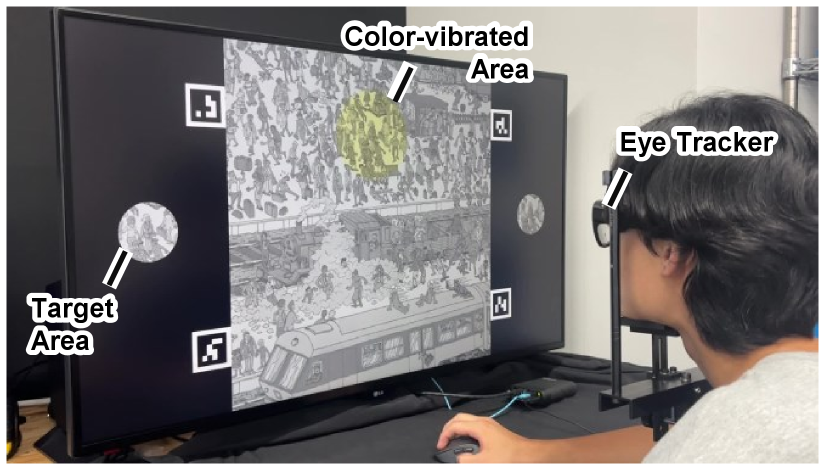

Next, based on the findings in Sec. 2, we conducted an experiment to investigate whether applying color vibration to a region of interest (ROI) in an image could attract users’ attention without altering the overall impression of the image. Figure 6 shows the experimental setup. The experimental protocol was approved by the [Omitted for double-blind review] Research Ethics Committee.

3.1 Experiment Setup

3.1.1 Participants

29 participants (20 males and 9 females; age range 23-50 years, mean age 30.7 years), none of whom had participated in the previous experiment, participated in this study. 6 participants corrected their vision with glasses and 12 used contact lenses.

3.1.2 Apparatus

Participants were seated with their chins resting on a chin rest placed in front of the same 42.5-inch LCD display used in Sec. 2.2. The display settings, viewing distance (500 mm), and ambient lighting conditions were the same as in the previous experiment. For 23 participants who did not wear glasses, we tracked and recorded their gaze using eyeglass-mounted eye trackers (Tobii Pro Glasses 3). To map gaze data to display coordinates, ArUco markers of known dimensions were displayed at the four corners of the content images, allowing homography transformation using the eye tracker’s cameras.
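The marker-based mapping can be illustrated with a four-point homography. The sketch below solves for the 3×3 matrix directly in pure Python and is an assumption-laden illustration: marker detection is omitted, the corner coordinates are invented, and the study's actual pipeline (for example, any OpenCV calls) is not known from the text.

```python
def solve(A, b):
    """Solve a square linear system by Gaussian elimination with pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def homography(src, dst):
    """3x3 homography (bottom-right entry fixed to 1) from four point pairs."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]

def apply_homography(H, point):
    """Map a camera-image point into display coordinates."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

# Invented marker corners in the scene camera and their display positions.
camera = [(100, 100), (500, 120), (480, 520), (90, 480)]
display = [(0, 0), (1200, 0), (1200, 1200), (0, 1200)]
H = homography(camera, display)
gaze_on_display = apply_homography(H, (300, 300))
```

Since head-worn trackers move with the wearer, the homography would need to be re-estimated for every camera frame before mapping that frame's gaze sample.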

3.1.3 Stimuli Preparation

Figure 5 shows an example of the presentation image used in this experiment. For experiment images, we used images from "Pocket Edition NEW Where’s Wally!" by Martin Handford, a picture book in which readers search for a specific character among many others. Note that, in this experiment, the character that the participants have to find is not necessarily Wally, as they are asked to look at objects in various positions on the screen.

Six images were selected and scanned at 600 dpi using a scanner (ScanSnap iX1300, PFU). The images were cropped to 1200 px × 1200 px, which adjusted the difficulty so that participants could locate the target character within the 30-second display limit even in the unmodulated condition. To facilitate the application of color vibration pairs to each pixel, images were converted to grayscale and pixel values were remapped from the original range [0, 255] to [60, 196]. The ROI was defined as a circular area 44 mm in diameter, sufficient to enclose a single character (not necessarily Wally) and approximating the effective area of central vision (a circle 39 mm in diameter at 500 mm viewing distance). The positions of the ROIs were varied across images to avoid location bias.
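The range compression of gray levels can be sketched as follows. The text does not state the exact mapping, so this example assumes a simple linear remap with rounding; the function name is illustrative.

```python
def remap_gray(value, lo=60, hi=196):
    """Linearly map an 8-bit gray level from [0, 255] to [lo, hi]."""
    if not 0 <= value <= 255:
        raise ValueError("expected an 8-bit gray level")
    return round(lo + value * (hi - lo) / 255)

remapped_row = [remap_gray(v) for v in (0, 64, 128, 255)]
```

Keeping gray levels away from 0 and 255 leaves headroom on both sides, so that the two colors of a vibration pair can presumably stay inside the displayable range.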

The area of color vibration was defined as an 80 mm diameter circle encompassing the ROI. We applied four variations of visual guidance to the images:

1. Unmodified: Original image without any modification.
2. Unobtrusive color vibration: ROI modulated with subtle color vibration.
3. Obtrusive color vibration: ROI modulated with more pronounced color vibration.
4. Explicit guidance: ROI marked with a visible circle.

3.1.4 Determination of Color Vibration Parameters

The color vibration pairs were generated using the threshold values corresponding to the diameter of the vibrating area and the distance from the center of the display, as obtained in the previous experiment. Specifically, we used the amplitude ratios at which participants perceived the color vibration with 75 % probability for the 80 mm circle diameter. We chose the 75 % threshold to ensure that the cue was effective in attracting users’ attention.

For the unobtrusive color vibration, we used the amplitude ratio corresponding to the "Awareness" condition, while for the obtrusive color vibration, we used the amplitude ratio corresponding to the "Discomfort" condition. If the ROI was in the central visual field (within 5 degrees of visual angle), we used the amplitude ratio measured for central vision. For ROIs in the peripheral visual field (approximately 8° to 19° of visual angle), we linearly interpolated the amplitude ratio based on the results for 71, 121, and 171 mm. We avoided placing ROIs outside of these ranges due to uncertainties in the applicability of linear interpolation.

Because the amplitude ratio varied with distance from the center of the display, we adjusted the color-fitting parameter accordingly for each participant, using linear interpolation based on the fitting results from the previous experiment.
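The position-dependent choice of amplitude ratio can be sketched as a piecewise-linear lookup over the measured offsets. In the Python example below, the threshold numbers are placeholders chosen for readability, not the values measured in the first experiment, and the function name is hypothetical.

```python
def interpolate_ratio(offset_mm, table):
    """Piecewise-linear interpolation over (offset_mm, ratio) pairs.

    Raises ValueError outside the measured range, mirroring the decision
    not to extrapolate beyond the tested positions.
    """
    points = sorted(table)
    if not points[0][0] <= offset_mm <= points[-1][0]:
        raise ValueError("offset outside the measured range")
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= offset_mm <= x1:
            t = (offset_mm - x0) / (x1 - x0)
            return y0 + t * (y1 - y0)

# Placeholder thresholds, NOT the measured values.
table = [(71, 12.0), (121, 14.0), (171, 20.0)]
ratio_at_96mm = interpolate_ratio(96, table)
```

The same helper could be reused for the per-participant color-fitting parameter by passing a table of that participant's fitted values instead.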

3.1.5 Experiment Procedure

Participants provided informed consent and underwent the same color fitting process as in Sec. 2.2.4. Definitions of terms used in the Likert scale questionnaire were then explained. The eye tracker was calibrated before the experiment began.

During the experiment, participants rested their chin on the chin rest to maintain a constant viewing position. They were instructed to keep their gaze on the display and to use a mouse to select the target area within the content images. Reference images of the search target were displayed on either side of the content image for participants to refer to as needed.

Each participant viewed 6 sets of images, each set containing one of the 4 visual guidance conditions described above. The order of the image sets and the images within each set were randomized.

For each trial, the order was as follows:

1. Fixation screen: A black screen with a white cross in the center was displayed, and participants were instructed to fixate on the cross.
2. Target presentation: An image showing only the ROI (the target character) centered on the screen was presented to familiarize participants with the search target.
3. Search task: The content image with the assigned visual guidance was displayed. Participants searched for the target character and indicated their selection with a mouse click.
4. Questionnaire: After each image, participants rated the following on a 7-point Likert scale, following Sutton et al. [32]:
   - Naturalness: The extent to which the image appeared unprocessed (1: very unnatural – 7: very natural).
   - Obtrusiveness: The degree to which the image stood out undesirably (1: not at all obtrusive – 7: very obtrusive).

After every 6 images, participants took a short break and looked at a black screen to rest their eyes.

3.2 Results

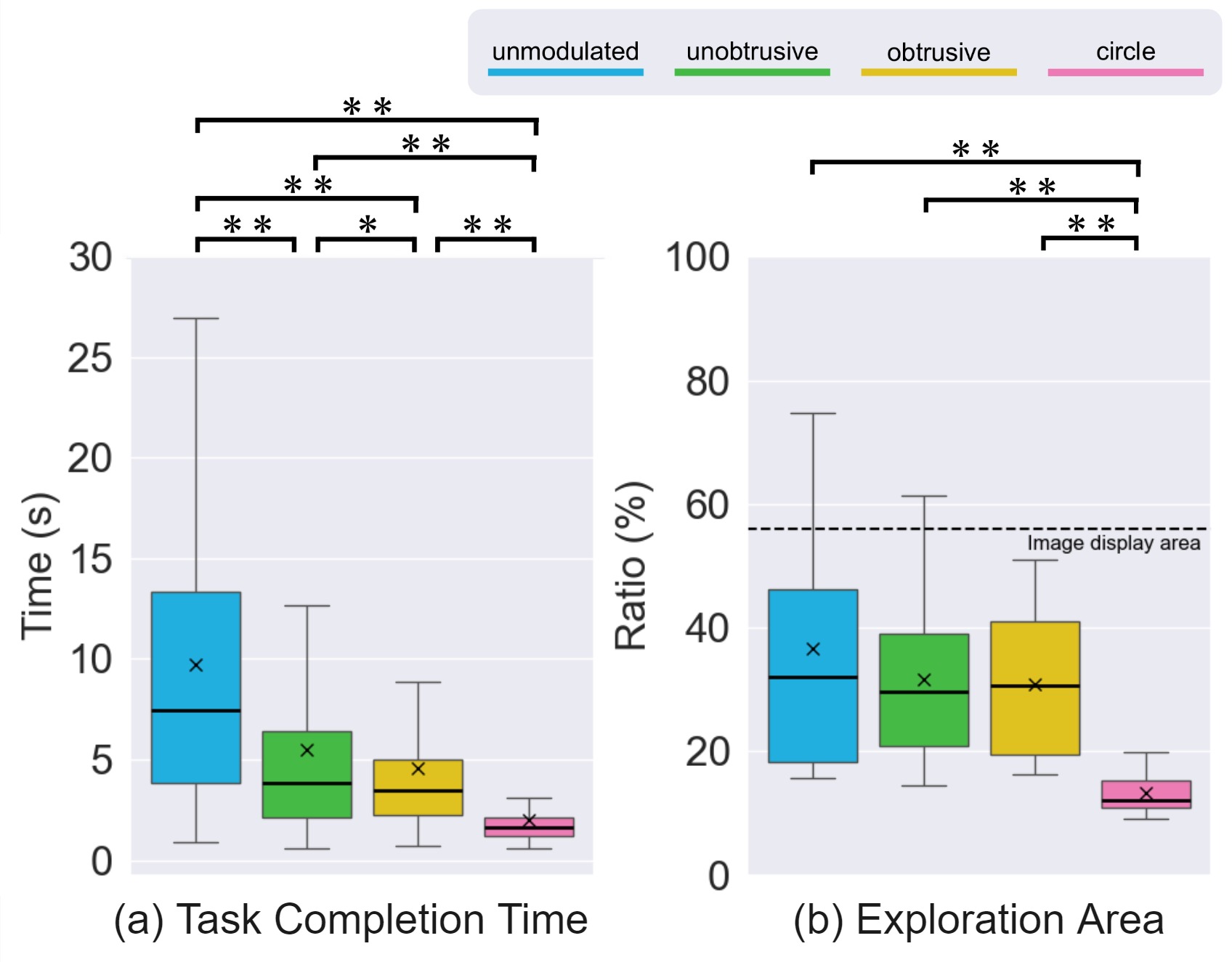

We evaluated the experiment from three perspectives: (1) task completion time (time to click on the correct search area), (2) proportion of the image explored by the gaze before task completion, and (3) scores from the user questionnaire.

3.2.1 Task Completion Time

Time was recorded based on participants’ responses. Correct responses were assigned their respective completion times, while incorrect responses or timeouts (exceeding the 30-second limit) were assigned a 30-second time.
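This scoring rule is simple enough to state as a small function. The sketch below is illustrative only; the function name and the use of `None` for a missing response are assumptions.

```python
TIME_LIMIT_S = 30.0

def scored_time(response_time_s, correct):
    """Completion time used for analysis: errors and timeouts count as 30 s."""
    if response_time_s is None or not correct or response_time_s > TIME_LIMIT_S:
        return TIME_LIMIT_S
    return response_time_s

times = [scored_time(t, ok) for t, ok in [(4.2, True), (12.0, False), (None, False)]]
```

Capping failures at the limit keeps every trial in the analysis, at the cost of compressing the upper tail of the distribution.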

Figure 7 (a) shows the task completion times for the four display conditions. A Friedman test showed a significant main effect of display condition on task completion time (χ² = 252.730, p < .001). We therefore ran pairwise Wilcoxon signed-rank tests with Holm correction as post hoc tests. Task completion time in the unmodulated condition was significantly longer than in the unobtrusive condition (p < .001, Cohen’s r = 0.425), the obtrusive condition (p < .001, Cohen’s r = 0.598), and the circle condition (p < .001, Cohen’s r = 0.835). The unobtrusive condition was also significantly longer than the obtrusive condition (p = .0011, Cohen’s r = 0.192) and the circle condition (p < .001, Cohen’s r = 0.731). Finally, the obtrusive condition was significantly longer than the circle condition (p < .001, Cohen’s r = 0.748).
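For readers unfamiliar with the Holm method, the step-down adjustment applied to a family of pairwise p-values can be sketched as follows. The p-values in the example are invented for illustration, and the function is a generic implementation rather than the analysis script used in the study.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, ...
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted

# Invented p-values for three pairwise comparisons.
adjusted = holm_adjust([0.01, 0.04, 0.03])
```

With four display conditions there are six pairwise comparisons, so the smallest raw p-value would be multiplied by six.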

3.2.2 Proportion of Explored Area

We calculated the proportion of the image explored by the gaze using the eye-tracking data. For each gaze point, we considered the area within the central visual field (within 5 degrees of visual angle) as the exploration area. The total exploration area was divided by the total image area to obtain the proportion. The eye coordinate information was converted to display coordinate system coordinates by performing a homographic transformation using the coordinates of the ArUco marker detected by the eye tracker camera.
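One way to compute such a proportion is to rasterize the image into cells and count the cells that fall within the central-vision radius of any gaze sample. The sketch below does this in pure Python; the grid resolution, the radius in cell units, and the function name are assumptions for illustration.

```python
def explored_proportion(gaze_points, width, height, radius):
    """Fraction of grid cells whose center lies within `radius` of at
    least one gaze point (all quantities in grid-cell units)."""
    r2 = radius * radius
    covered = 0
    for j in range(height):
        for i in range(width):
            cx, cy = i + 0.5, j + 0.5
            if any((cx - gx) ** 2 + (cy - gy) ** 2 <= r2
                   for gx, gy in gaze_points):
                covered += 1
    return covered / (width * height)

# A single fixation at the center of a 100 x 100 grid.
proportion = explored_proportion([(50, 50)], 100, 100, radius=10)
```

Counting covered cells rather than summing circle areas means overlapping fixations are not counted twice.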

Figure 7 (b) shows the results. A Friedman test showed a significant main effect of display condition on the proportion of explored area (χ² = 38.018, p < .001). We therefore ran pairwise Wilcoxon signed-rank tests with Holm correction as post hoc tests. The proportion of explored area was significantly higher in the unmodulated condition than in the circle condition (p < .001, Cohen’s r = 0.876), in the unobtrusive condition than in the circle condition (p < .001, Cohen’s r = 0.876), and in the obtrusive condition than in the circle condition (p < .001, Cohen’s r = 0.869). No significant differences were observed between the unmodulated and unobtrusive conditions (p = .248, Cohen’s r = 0.253), between the unmodulated and obtrusive conditions (p = .129, Cohen’s r = 0.329), or between the unobtrusive and obtrusive conditions (p = .924, Cohen’s r = 0.024).

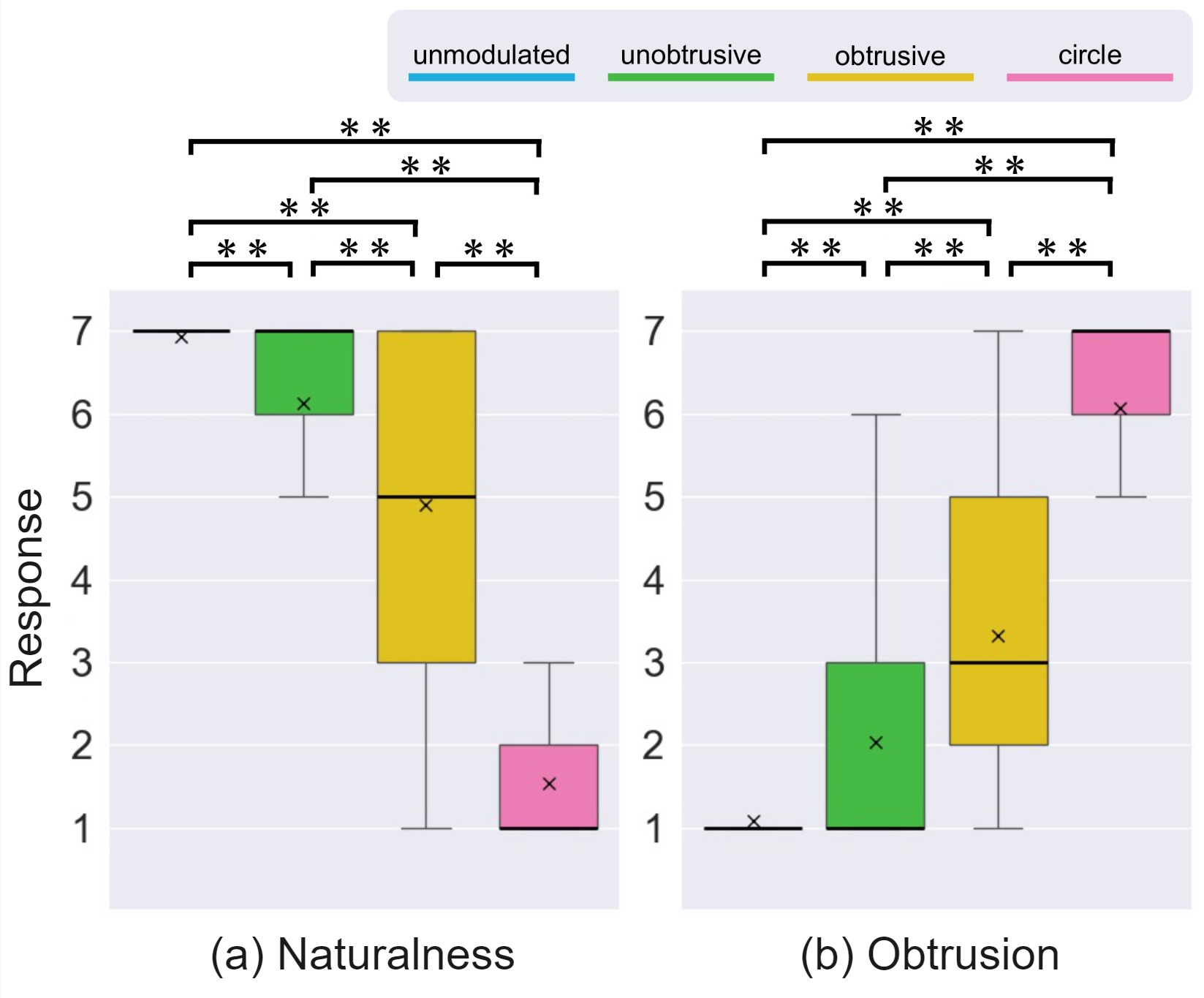

3.2.3 User Questionnaire

Figure 8 shows the questionnaire results for naturalness and obtrusiveness.

A Friedman test showed a significant main effect of display condition on the evaluation score for both question categories (Naturalness: χ² = 433.243, p < .001; Obtrusion: χ² = 390.673, p < .001). We therefore ran pairwise Wilcoxon signed-rank tests with Holm correction as post-hoc tests. The results are as follows:

- Naturalness: the score of the unmodulated condition was significantly higher than that of the unobtrusive condition (p < .001, Cohen’s r = 0.863), the obtrusive condition (p < .001, Cohen’s r = 0.861), and the circle condition (p < .001, Cohen’s r = 0.867). The score of the unobtrusive condition was also significantly higher than that of the obtrusive condition (p < .001, Cohen’s r = 0.850) and the circle condition (p < .001, Cohen’s r = 0.866). The score of the obtrusive condition was also significantly higher than that of the circle condition (p < .001, Cohen’s r = 0.866).

- Obtrusion: the score of the unmodulated condition was significantly lower than that of the unobtrusive condition (p < .001, Cohen’s r = 0.861), the obtrusive condition (p < .001, Cohen’s r = 0.863), and the circle condition (p < .001, Cohen’s r = 0.866). The score of the unobtrusive condition was also significantly lower than that of the obtrusive condition (p < .001, Cohen’s r = 0.849) and the circle condition (p < .001, Cohen’s r = 0.864). The score of the obtrusive condition was also significantly lower than that of the circle condition (p < .001, Cohen’s r = 0.851).
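The post-hoc procedure used throughout this section (pairwise tests corrected with the Holm method, reported with an effect size r) can be illustrated with a short sketch. The helper names are assumptions, the example p-values are made up, and the effect size uses the common convention r = |Z| / √N, which the text does not spell out.

```python
import math


def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: an adjusted p never drops below an earlier one.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


def effect_size_r(z, n):
    """Effect size r = |Z| / sqrt(N) for a Wilcoxon signed-rank test (assumed convention)."""
    return abs(z) / math.sqrt(n)


if __name__ == "__main__":
    # Hypothetical raw p-values for the six pairwise comparisons of four conditions.
    raw = [0.0004, 0.0002, 0.0001, 0.0011, 0.0003, 0.0005]
    for p_raw, p_adj in zip(raw, holm_adjust(raw)):
        print(f"raw={p_raw:.4f}  holm={p_adj:.4f}")
```

A comparison is declared significant when its adjusted p-value falls below the chosen significance level.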

3.3 Discussion

Task completion times differed significantly between all pairs of the four display conditions. This result indicates that unobtrusive gaze guidance using color vibration effectively directs users’ attention and improves task performance without significantly altering the overall scene. While its effectiveness is slightly lower than that of obtrusive color vibration and explicit guidance, it offers sufficient performance as a subtle gaze guidance method, avoiding excessive interference with the user experience.

In terms of the proportion of explored area, significant differences were observed only between the explicit guidance condition and the other three conditions. This suggests that gaze guidance using color vibration allows users to naturally explore the entire scene, unlike explicit guidance, which strongly directs gaze to a specific area and may limit exploration. With color vibration, users can broadly view the scene while still being subtly guided toward important information.

The questionnaire results show that unobtrusive gaze guidance was perceived as more natural and less obtrusive compared to obtrusive color vibration and explicit guidance. Although rated slightly less natural than the unmodulated condition, unobtrusive gaze guidance successfully attracted users’ attention without significantly affecting the scene context.

In the user questionnaire results, significant differences were observed among all display conditions in the evaluations of naturalness and obtrusiveness. Specifically, unobtrusive gaze guidance using color vibration was rated significantly more natural than obtrusive color vibration and explicit guidance. We attribute this to the fact that unobtrusive gaze guidance can attract the user’s attention without significantly altering the scene’s context. At the same time, its obtrusiveness rating was higher than that of the unmodulated condition, which suggests that the unobtrusive gaze guidance does capture the user’s attention.

In terms of obtrusiveness, the unobtrusive gaze guidance showed less negative impact on the scene’s context compared to explicit guidance. Explicit gaze guidance may force the user’s attention toward a specific area, potentially impairing overall scene comprehension and immersion. In contrast, unobtrusive gaze guidance can promote attention to necessary information while respecting the user’s natural eye movements.

These results suggest that unobtrusive gaze guidance using color vibration is an effective method that improves task completion speed and naturally supports the user’s exploratory behavior. Because it can enhance access to necessary information without diminishing the user experience, we anticipate practical applications.

4 Application

We explored application scenarios for ChromaGazer and evaluated its effectiveness in them. Specifically, we illustrate the potential of unobtrusive color vibration for visual guidance in three applications: advertising, picture books, and task assistance. Figure 9 illustrates these applications.

In the advertising application (Fig. 9a), we selected a region of interest (ROI) within an advertisement image and generated a pair of images with unobtrusive color vibration for that region. By displaying this image, it becomes possible to direct the user’s attention to specific products or information without compromising the design or aesthetic appeal of the advertisement. Unlike conventional pop-ups or explicit banners, unobtrusive visual guidance can enhance advertising effectiveness without disrupting the user experience.
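Generating the image pair for an ROI can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the two colors lie symmetrically about the original chromaticity along the major axis of the MacAdam ellipse, scaled by the amplitude, and that the display alternates them on successive frames. The ellipse parameters in the usage example are illustrative placeholders, and all function names are hypothetical.

```python
import math


def vibration_pair(center_xy, major_semi_axis, theta_deg, amplitude):
    """Two xy chromaticities placed symmetrically about center_xy.

    The offset runs along the ellipse's major axis (orientation theta_deg)
    and has length amplitude * major_semi_axis. Whether the semi-axis or the
    full axis is scaled is an assumption of this sketch.
    """
    cx, cy = center_xy
    dx = amplitude * major_semi_axis * math.cos(math.radians(theta_deg))
    dy = amplitude * major_semi_axis * math.sin(math.radians(theta_deg))
    return (cx + dx, cy + dy), (cx - dx, cy - dy)


def frame_chromaticity(frame_index, in_roi, center_xy, pair):
    """Chromaticity to display for one pixel on a given frame.

    Pixels outside the ROI keep their original chromaticity; pixels inside
    alternate between the two colors of the pair on successive frames.
    """
    if not in_roi:
        return center_xy
    return pair[frame_index % 2]


if __name__ == "__main__":
    # Placeholder values for a near-gray chromaticity; not taken from the paper.
    center = (0.31, 0.33)
    pair = vibration_pair(center, major_semi_axis=0.002, theta_deg=60.0, amplitude=3.0)
    for frame in range(4):
        print(frame, frame_chromaticity(frame, True, center, pair))
```

Because the pair is symmetric about the original chromaticity, its temporal average matches the original color, which is what lets the modulated region blend with the rest of the image once the alternation is fast enough to fuse.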

In the picture book application (Fig. 9b), we unobtrusively guided the reader’s attention to specific regions within the images of a digital book, synchronized with the progression of the story. This allows readers to naturally focus on important characters or key elements of the narrative while maintaining immersion in the world of the picture book. Unobtrusive visual guidance supports readers in smoothly following the story’s development, contributing to improved readability and comprehension.

In the task assistance application (Fig. 9c), we envisioned a scenario where a user wearing AR glasses performs work using tools. By detecting in real time the regions of necessary tools or parts during the task and applying unobtrusive color vibration to those areas, the user’s attention can be naturally guided. This enables the user to quickly identify the next tool to use or the component to focus on without obstructing the view with overt guidance information. This approach is expected to lead to improvements in work efficiency and reduction of errors.

Through these application scenarios, the potential of ChromaGazer to achieve effective visual guidance while maintaining user immersion and a natural experience was demonstrated. By using unobtrusive color vibration, it is possible to direct the user’s attention to necessary information without significantly altering the context of the scene.

5 Limitation and Future Work

While this study is the first to demonstrate gaze guidance using color vibration, several areas require further investigation in order to generalize the perceptual parameters across different situations. We discuss the current limitations and potential avenues for future work.

Relation between color vibration amplitude and hue/brightness

We focused exclusively on vibrating grayscale colors. Because the perception of color vibration varies with hue and saturation, restricting the stimuli in this way let us control these variables while testing whether color vibration is effective for VG. Having verified its effectiveness in this paper, we consider investigating how color differences affect the amplitude parameters to be the most important future work.

Moreover, our experiments assumed that the amplitude of chromaticity for a given hue is proportional to the major axis of the MacAdam ellipse. However, the perception of chromaticity may vary with hue and lightness. Future studies could model the amplitude as a function of hue and lightness through comprehensive experiments.

Generalized saliency model for color vibration

The set of images used in the current experiment is limited, and the effectiveness of the method needs to be verified for a wider variety of scenes and objects in natural images. Given an appropriate saliency model for color vibration, the VG effect of color vibration could be evaluated more generally. However, no appropriate model for saliency control of color vibration has been proposed so far, and we position this as a future research direction.

Neuroscientific exploration of the intermediate state of Color Vibration

As introduced in Sec. 1.3, the intermediate state of color vibration explored in this study was hypothesized based on neuroscientific findings. While this paper focused on subjective user evaluation and gaze analysis, estimating the intermediate state from brain measurements such as fMRI is expected to deepen the understanding of this method.

Addressing individual differences

The perceptual characteristics of color vibration depend on the individual, and experiments with larger numbers of participants are needed. In particular, since the participants in our experiment were limited to a young age group, it is necessary to demonstrate the effect across a wider age range. In addition, the calibration and personalization of individual color vibration perception, including color vision deficiency [16], is considered a future research direction.

Experiments in different display environments

The current experiment demonstrated saliency control using color vibration through measurements on a color-calibrated monitor under natural light conditions. However, further research is needed on the relationship between monitor brightness, ambient light brightness, and color vibration perception parameters. In addition, research on applying gaze control to VR [30, 6] and AR [32, 31] environments that modulate real-world saliency is also progressing. Achieving optimal color vibration modulation in these environments is positioned as a future research challenge.

6 Conclusion

We introduced ChromaGazer, a visual guidance technique leveraging color vibration to direct users’ attention without perceptibly altering image appearance. Through user experiments, we determined thresholds for the intermediate perceptual state of color vibration, informing ChromaGazer’s design. Evaluations with natural images demonstrated the method’s effectiveness in guiding gaze while preserving naturalness compared to existing approaches. We also assessed the impact of color vibration amplitude on gaze guidance efficacy, emphasizing the need for optimal amplitude selection. This study opens opportunities for color vibration-based gaze guidance techniques. Generalizing our method requires further investigation into the perceptual characteristics of color vibration and the development of advanced gaze guidance strategies in diverse environments. We hope this research stimulates further exploration toward more sophisticated visual guidance systems.

Acknowledgements.

This study was supported by JST PRESTO Grant Number JPMJPR17J2 and JSPS KAKENHI Grant Numbers JP20H04222, JP23H04328, and JP24KK0187, Japan.

References

- [1] S. Abe, A. Arami, T. Hiraki, S. Fukushima, and T. Naemura. Imperceptible color vibration for embedding pixel-by-pixel data into LCD images. In Proceedings of the 2017 ACM Conference Extended Abstracts on Human Factors in Computing Systems, pages 1464–1470, May 2017.

- [2] S. Abe, T. Hiraki, S. Fukushima, and T. Naemura. Imperceptible color vibration for screen-camera communication via 2D binary pattern. ITE Transactions on Media Technology and Applications, 8(3):170–185, July 2020.

- [3] K. Azuma and H. Koike. A study on gaze guidance using artificial color shifts. In Proceedings of the 2018 International Conference on Advanced Visual Interfaces, AVI ’18, 2018.

- [4] R. Bailey, A. McNamara, N. Sudarsanam, and C. Grimm. Subtle gaze direction. ACM Transactions on Graphics, 28(4), Sept. 2009.

- [5] H. de Lange Dzn. Research into the dynamic nature of the human fovea→cortex systems with intermittent and modulated light. ii. phase shift in brightness and delay in color perception. Journal of the Optical Society of America, 48(11):784–789, Nov 1958.

- [6] S. Grogorick and M. Magnor. Subtle Visual Attention Guidance in VR, pages 272–284. Springer International Publishing, Cham, 2020.

- [7] A. Hagiwara, A. Sugimoto, and K. Kawamoto. Saliency-based image editing for guiding visual attention. In Proceedings of the 1st International Workshop on Pervasive Eye Tracking & Mobile Eye-Based Interaction, PETMEI ’11, page 43–48, 2011.

- [8] H. Hata, H. Koike, and Y. Sato. Visual guidance with unnoticed blur effect. In Proceedings of the International Working Conference on Advanced Visual Interfaces, AVI ’16, page 28–35, 2016.

- [9] S. Hattori and T. Hiraki. Accelerated and optimized search of imperceptible color vibration for embedding information into LCD images. In Proceedings of the ACM SIGGRAPH Asia 2022 Posters, number Article 16 in SA ’22, pages 1–2, Dec. 2022.

- [10] S. Hattori, Y. Hiroi, and T. Hiraki. Measurement of the imperceptible threshold for color vibration pairs selected by using MacAdam ellipse, 2024.

- [11] Y. Jiang, K. Zhou, and S. He. Human visual cortex responds to invisible chromatic flicker. Nature Neuroscience, 10(5):657–662, May 2007.

- [12] J. Jo, B. Kim, and J. Seo. Eyebookmark: Assisting recovery from interruption during reading. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI ’15, page 2963–2966, 2015.

- [13] D. Kern, P. Marshall, and A. Schmidt. Gazemarks: gaze-based visual placeholders to ease attention switching. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’10, page 2093–2102, 2010.

- [14] C. Koch and S. Ullman. Shifts in Selective Visual Attention: Towards the Underlying Neural Circuitry, pages 115–141. Springer Netherlands, Dordrecht, 1987.

- [15] T. Kokui, H. Takimoto, Y. Mitsukura, M. Kishihara, and K. Okubo. Color image modification based on visual saliency for guiding visual attention. In Proceedings of the 22nd IEEE International Workshop on Robot and Human Communication, RO-MAN ’13, pages 467–472, 2013.

- [16] T. Langlotz, J. Sutton, S. Zollmann, Y. Itoh, and H. Regenbrecht. Chromaglasses: Computational glasses for compensating colour blindness. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI ’18, page 1–12, New York, NY, USA, 2018. Association for Computing Machinery.

- [17] D. L. MacAdam. Visual sensitivities to color differences in daylight. Journal of the Optical Society of America, 32(5):247–274, May 1942.

- [18] N. D. Mankowska, A. B. Marcinkowska, M. Waskow, R. I. Sharma, J. Kot, and P. J. Winklewski. Critical flicker fusion frequency: A narrative review. Medicina, 57(10), 2021.

- [19] V. A. Mateescu and I. V. Bajić. Attention retargeting by color manipulation in images. In Proceedings of the 1st International Workshop on Perception Inspired Video Processing, PIVP ’14, page 15–20, 2014.

- [20] A. McNamara, R. Bailey, and C. Grimm. Improving search task performance using subtle gaze direction. In Proceedings of the 5th Symposium on Applied Perception in Graphics and Visualization, APGV ’08, page 51–56, 2008.

- [21] T. V. Nguyen, B. Ni, H. Liu, W. Xia, J. Luo, M. Kankanhalli, and S. Yan. Image re-attentionizing. IEEE Transactions on Multimedia, 15(8):1910–1919, 2013.

- [22] R. Pal and D. Roy. Enhancing saliency of an object using genetic algorithm. In Proceedings of the 14th Conference on Computer and Robot Vision (CRV), pages 337–344, 2017.

- [23] X. Pang, Y. Cao, R. W. H. Lau, and A. B. Chan. Directing user attention via visual flow on web designs. ACM Transactions on Graphics, 35(6), Dec. 2016.

- [24] M. I. Posner. Orienting of attention. Quarterly Journal of Experimental Psychology, 32(1):3–25, 1980.

- [25] R. Reif, W. A. Günthner, B. Schwerdtfeger, and G. Klinker. Pick-by-vision comes on age: evaluation of an augmented reality supported picking system in a real storage environment. In Proceedings of the 6th International Conference on Computer Graphics, Virtual Reality, Visualisation and Interaction in Africa, AFRIGRAPH ’09, page 23–31, 2009.

- [26] P. Renner and T. Pfeiffer. AR-glasses-based attention guiding for complex environments: requirements, classification and evaluation. In Proceedings of the 13th ACM International Conference on PErvasive Technologies Related to Assistive Environments, PETRA ’20, 2020.

- [27] A. Schmitz, A. MacQuarrie, S. Julier, N. Binetti, and A. Steed. Directing versus attracting attention: Exploring the effectiveness of central and peripheral cues in panoramic videos. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pages 63–72, 2020.

- [28] B. Schwerdtfeger and G. Klinker. Supporting order picking with augmented reality. In Proceedings of the 7th IEEE/ACM International Symposium on Mixed and Augmented Reality, pages 91–94, 2008.

- [29] T. Shi and A. Sugimoto. Video saliency modulation in the HSI color space for drawing gaze. In R. Klette, M. Rivera, and S. Satoh, editors, Image and Video Technology, pages 206–219, Berlin, Heidelberg, 2014. Springer Berlin Heidelberg.

- [30] V. Sitzmann, A. Serrano, A. Pavel, M. Agrawala, D. Gutierrez, B. Masia, and G. Wetzstein. Saliency in VR: How do people explore virtual environments? IEEE Transactions on Visualization and Computer Graphics, 24(4):1633–1642, 2018.

- [31] J. Sutton, T. Langlotz, A. Plopski, and K. Hornbæk. Flicker augmentations: Rapid brightness modulation for real-world visual guidance using augmented reality. In Proceedings of the CHI Conference on Human Factors in Computing Systems, CHI ’24, 2024.

- [32] J. Sutton, T. Langlotz, A. Plopski, S. Zollmann, Y. Itoh, and H. Regenbrecht. Look over there! investigating saliency modulation for visual guidance with augmented reality glasses. In Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology, UIST ’22, 2022.

- [33] N. Suzuki and Y. Nakada. Effects selection technique for improving visual attraction via visual saliency map. In Proceedings of the 2017 IEEE Symposium Series on Computational Intelligence (SSCI), pages 1–8, 2017.

- [34] E. E. Veas, E. Mendez, S. K. Feiner, and D. Schmalstieg. Directing attention and influencing memory with visual saliency modulation. In Proceedings of the 2011 SIGCHI Conference on Human Factors in Computing Systems, CHI ’11, page 1471–1480, 2011.

- [35] B. Volmer, J. Baumeister, S. Von Itzstein, I. Bornkessel-Schlesewsky, M. Schlesewsky, M. Billinghurst, and B. H. Thomas. A comparison of predictive spatial augmented reality cues for procedural tasks. IEEE Transactions on Visualization and Computer Graphics, 24(11):2846–2856, 2018.

- [36] M. Waldner, M. Le Muzic, M. Bernhard, W. Purgathofer, and I. Viola. Attractive flicker — guiding attention in dynamic narrative visualizations. IEEE Transactions on Visualization and Computer Graphics, 20(12):2456–2465, 2014.

- [37] J. O. Wallgrün, M. M. Bagher, P. Sajjadi, and A. Klippel. A comparison of visual attention guiding approaches for 360° image-based VR tours. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pages 83–91, 2020.

- [38] K. Wang, S. J. Julier, and Y. Cho. Attention-based applications in extended reality to support autistic users: A systematic review. IEEE Access, 10:15574–15593, 2022.

- [39] A. Yoshimura, A. Khokhar, and C. W. Borst. Visual cues to restore student attention based on eye gaze drift, and application to an offshore training system. In Symposium on Spatial User Interaction, SUI ’19, 2019.