Classify Respiratory Abnormality in Lung Sounds Using STFT and a Fine-Tuned ResNet18 Network

Abstract

Recognizing patterns in lung sounds is crucial to detecting and monitoring respiratory diseases. Current techniques for analyzing respiratory sounds demand domain experts and are subject to interpretation. Hence an accurate and automatic respiratory sound classification system is desired. In this work, we took a data-driven approach to classify abnormal lung sounds. We compared the performance using three different feature extraction techniques, which are short-time Fourier transformation (STFT), Mel spectrograms, and Wav2vec, as well as three different classifiers, including pre-trained ResNet18, LightCNN, and Audio Spectrogram Transformer. Our key contributions include the bench-marking of different audio feature extractors and neural network based classifiers, and the implementation of a complete pipeline using STFT and a fine-tuned ResNet18 network. The proposed method achieved Harmonic Scores of 0.89, 0.80, 0.71, 0.36 for tasks 1-1, 1-2, 2-1 and 2-2, respectively on the testing sets in the IEEE BioCAS 2022 Grand Challenge on Respiratory Sound Classification.

Index Terms:

respiratory sounds, classification, respiratory diseases, audio, respiratory sound classificationI Introduction

Respiratory diseases are among the top three global causes of death according to World Health Organization [1]. Studies have shown early diagnosis not only helps prevent the spread of respiratory diseases, but also improves the effectiveness of treatment [2]. Clinical studies have identified traits in lung sounds associated with respiratory diseases. Non-invasive, time-saving, and inexpensive medical procedure by auscultation and expert analysis has been developed [3]. However, its broader adaptation is limited by the availability of experienced medical professionals and the subjectivity in the interpretations of lung sound patterns. Thus, there is a need for a consistent and accurate automated respiratory sound classification system.

Recent advances in solving visual and audio classification tasks using neural networks suggest a promising path for data-driven automation design. Machine learning methods have demonstrated the ability to classify lung sounds [3]. In particular, researchers have used conventional machine learning models such as Hidden Markov Models [4], Support Vector Machines [5], and Decision Trees [6] to classify lung sounds by first extracting the Mel-frequency cepstral coefficient (MFCC) as features. Many have generated two-dimensional spectrograms, then used them as inputs for different ML architectures such as Convolutional Neural Networks (CNNs) [7, 8] and Recurrent Neural Networks [9] to perform the classification task. The top performer [10] on a similar benchmark dataset from the 2017 Internal Conference on Biomedical Health Informatics [11] exploited short-time Fourier transformation (STFT) and Gammatone filters, and fed features into an ensemble network of CNN and autoencoder to classify four different respiratory sounds.

In this work, we explored the combination of various feature extraction techniques and classifier architectures. We propose an end-to-end pipeline, R-STFT, for classifying lung sounds, combining STFT and the pre-trained ResNet18 image classifier.

This paper is structured as follows: we first introduce the dataset and the tasks in the IEEE BioCAS 2022 Grand Challenge on Respiratory Sound Classification [12]. Next, we expand on three feature extractors, three neural network classifiers, along with the training procedure. Then we present a comparative analysis of their performance and highlight one combination: R-STFT. Finally, we discuss impacts and identify future improvements.

II Dataset and Metrics

The SPRSound: Open-Source SJTU Paediatric Respiratory Sound Database [12] is the first public database that collects data from the Shanghai Children’s Medical Center (SCMC), with ages of children ranging from 1 month to 18 years old. The database contains 6656 labeled events and 1949 labeled recordings. Each recording is segmented into multiple respiratory events, annotated as Normal (N, 77.5%), Rhonchi (R, 0.6%), Wheeze (W, 6.8%), Stridor (S, 0.2%), Coarse Crackle (CC, 0.7%), Fine Crackle (FC, 13.7%), or Wheeze & Crackle (W&C, 0.5%). Recordings are labeled as Normal (N, 66.9%), Continuous Adventitious Sounds (CAS, 6.9%), Discontinuous Adventitious Sounds (DAS, 12.7%), CAS & DAS (C&D, 4.4%) or Poor Quality (PQ, 9.1%). Recordings are collected at 8kHz for at least 9.2 seconds. This rich dataset provides a unique test ground for evaluating automated lung sound classification systems.

The IEEE BioCAS 2022 Grand challenge on Respiratory Sound Classification proposes four tasks based on this dataset [12].

-

•

Task 1-1: classify events as N or Adventitious.

-

•

Task 1-2: classify events as N, R, W, S, CC, FC, or W&C.

-

•

Task 2-1: classify recordings as N or Adventitious.

-

•

Task 2-2: classify recordings as N, CAS, DAS, or C&D.

The challenge uses five metrics to evaluate the performance of the classifiers: sensitivity (SE, subscript denotes task), specificity (SP), Average Score (AS), Harmonic Score (HS) and Score. They are defined as follows.

The overall pipeline is evaluated by a weighed sum of Scores for each task: .

III Methods

We pose the task as a supervised multiclass classification problem. Our solution consists of two components: a pre-processing step that extracts temporal and/or spectral features from the input wave signals, and a neural network-based classifier, as shown in Figure 1.

III-A Pre-processing and Feature Extraction

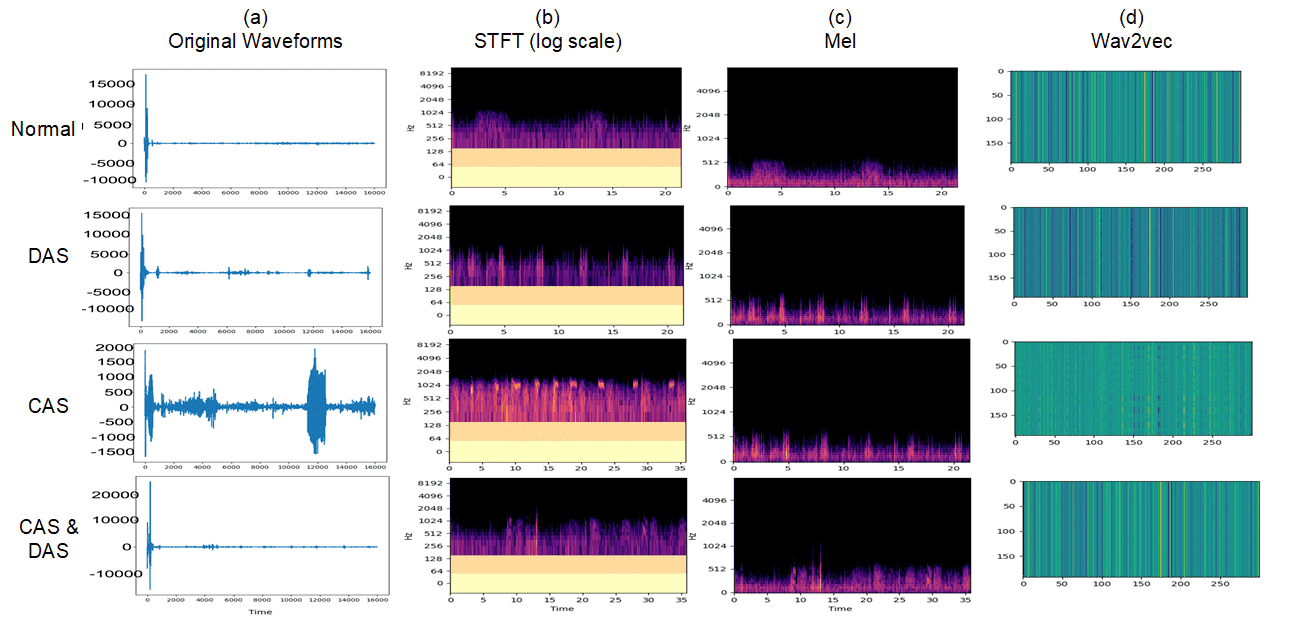

Three feature extraction methods were explored in our experiments, which include short-time Fourier transformation (STFT), Mel spectrogram, and Wav2vec (Figure 2).

III-A1 Short-time Fourier transformation

STFT is an established technique for extracting frequency features at local sections from temporal signals [13]. We selected the Hanning function as the windowing function for Fourier transformation, since adventitious lung sounds are not linear nor stationary. We chose a hop length of 0.01 seconds and a window length of 0.02 seconds between two adjacent Hanning windows, which has been used in a similar lung sound classification task by [14].

III-A2 Mel spectrogram

The Mel scale is a perceptual scale of pitches [15]. Mel spectrograms describe the audio signal in the Mel scale over time. We converted lung sounds to its Mel spectrograms to uncover pitch patterns informative to the domain experts. We used the hop length of 0.01s and a window length of 0.02s, the same configuration as STFT.

III-A3 Wav2vec features

Wav2vec (version 2) learns a speech audio embedded representation by pretraining on 960 hours of audiobooks and fine-tuning its transcripts [16]. It has proven successful in various domains such as speech recognition. We extracted the last layer output of auto speech recognition (ASR) pre-trained models, as the unique features of input audios.

III-B Classifier architecture

III-B1 LightCNN

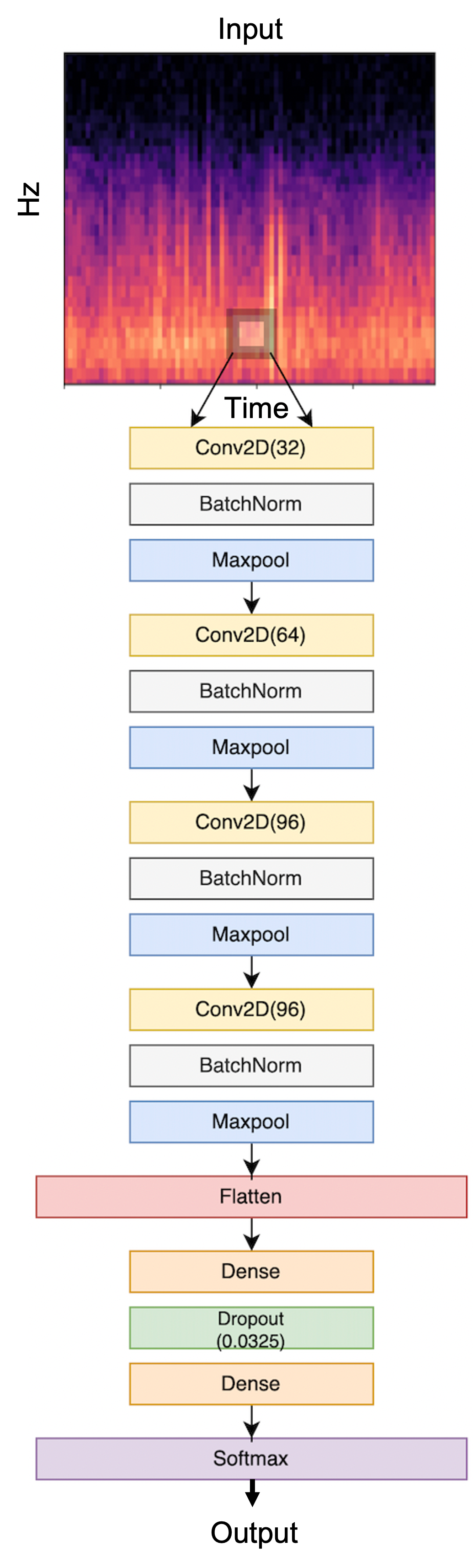

We proposed a simple convolutional neural network baseline, inspired by the LightCNN model for a similar task [7]. The model structure is illustrated in Figure 3. Briefly, The input layer corresponds to the 3-channel input of 224-by-224 images. The first convolutional layer uses 32 output filters with an 81-pixel square kernel, followed by a 4-pixel max-pooling layer. On top of the first layer, three convolutional layers are stacked, each with a 49-pixel, 25-pixel, 9-pixel kernel containing 64, 96 and 96 channels respectively and corresponding batch-normalization and max-pooling layers with 4-pixel pooling window. Then the CNN-extracted features are flattened in two fully connected layers and linked via a dropout layer (p=0.0325), followed by a SoftMax output layer to finally output the predicted probability for each class.

We chose the default ReLU function as the source of non-linearity [17]. Maximum pooling is applied to reduce feature dimension while retaining spatial invariance [18], [19]. The batch normalization layers normalize the extracted features, which is a common building block to stabilize neural network training by overcoming internal covariate shift [20].

III-B2 Pre-trained ResNet18

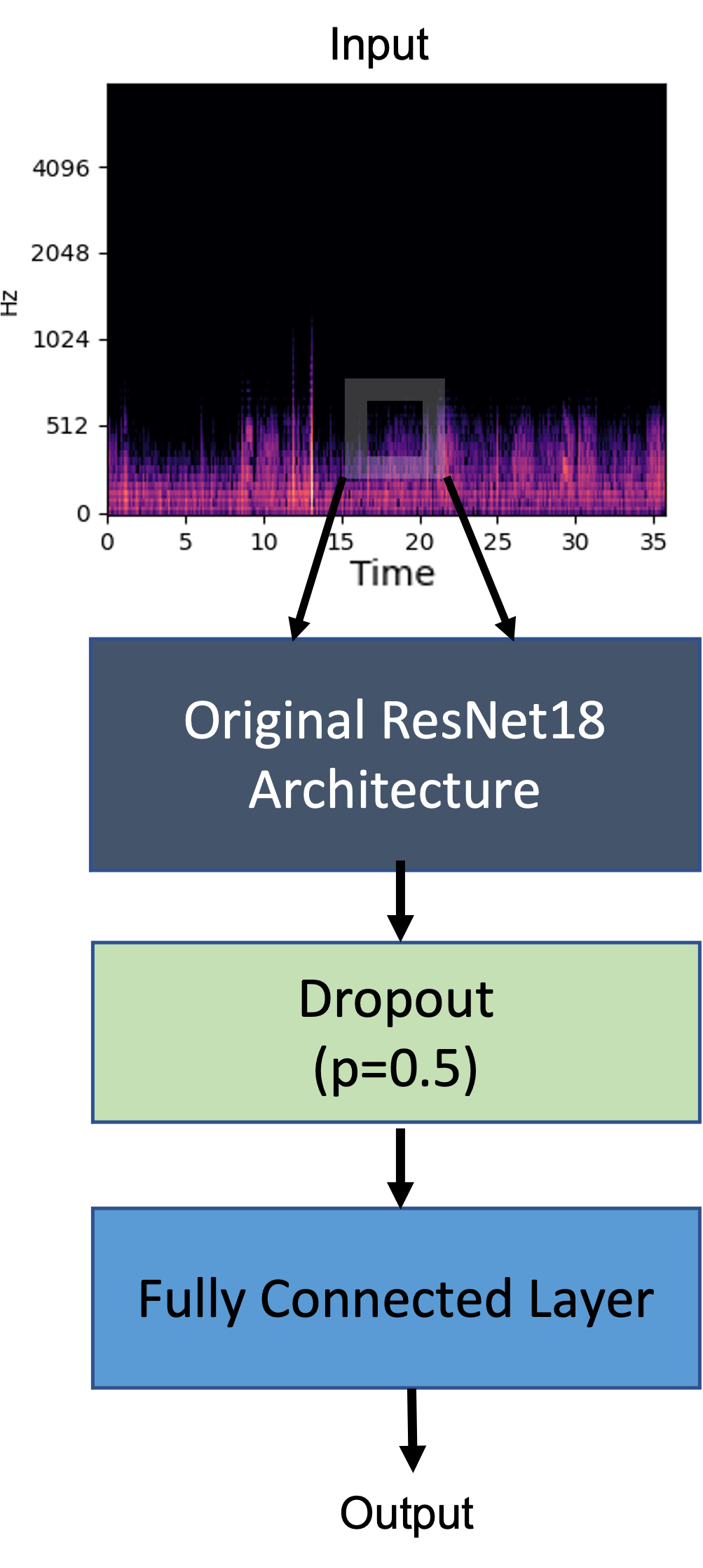

Pretraining classifiers on large general datasets and fine-tuning on smaller domain datasets are common practices in the applied machine learning community, thanks to their high training efficiency. Hence, we utilized pre-trained ResNet18 in our experiments. ResNet18 features residual blocks which retain gradients through deep networks. We opted for weights pre-trained on ImageNet1K [21] provided by the torchvision package in PyTorch ecosystem [22]. We added a final dropout layer (p=0.5) and a fully connected layer, on top of the 1000-D outputs of ResNet18. Figure 4 illustrates the high-level structure diagram of the modified ResNet18 model.

III-B3 Pre-trained Audio Spectrogram Transformer (AST)

III-C Training techniques

Weighed loss function was used to balance the over-representation of normal samples in the dataset. Specifically, the weights are proportional to the inverse of the square root of sample size in each target class. Moreover, we dropped the Poor Quality samples in the recording level (Task 2-1 and Task 2-2), as they do not contribute to SE nor SP.

We chose Adam [25] as the optimizer and the learning rate was initialized to 0.001 for CNN models and 0.0001 for pre-trained transformer models, decaying by every 50 epochs. We adapted 9:1 training:validation split. We selected 32 as the batch size unless limited by GPU memory. We ended training or fine-tuning if the validation loss is non-decreasing in 10 epochs. Our experiments were conducted on four NVIDIA® T4 GPUs.

IV Results

IV-A Task 1: event level classification

We experimented with all feature extraction techniques and two classifiers for Task 1. The architectures used are the pre-trained ResNet18 and the LightCNN models. In total, we trained four different models, including a pre-trained ResNet18 using STFT as training inputs (R-STFT), a LightCNN using STFT as training inputs (L-STFT), a LightCNN with Mel spectrograms as training inputs (L-MEL), and finally, a LightCNN with Wav2vec as training inputs (L-Wav2vec). As shown in Table I, R-STFT achieved the best training results for Task 1 (our submission). We have also attached the testing results evaluated by the Challenge committee. The details of the testing results are provided in [12].

| Training Results | ||||||||

|---|---|---|---|---|---|---|---|---|

| Model | R-STFT | L-STFT | L-MEL | L-Wav2vec | ||||

| Task level | 1-1 | 1-2 | 1-1 | 1-2 | 1-1 | 1-2 | 1-1 | 1-2 |

| SE | 0.67 | 0.43 | 0.60 | 0.36 | 0.00 | 0.00 | 0.00 | 0.00 |

| SP | 0.96 | 0.96 | 0.85 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |

| AS | 0.82 | 0.70 | 0.73 | 0.68 | 0.50 | 0.50 | 0.50 | 0.50 |

| HS | 0.79 | 0.59 | 0.70 | 0.53 | 0.00 | 0.00 | 0.00 | 0.00 |

| Score | 0.80 | 0.64 | 0.71 | 0.60 | 0.25 | 0.25 | 0.25 | 0.25 |

| Testing Results | ||||||||

| Model | R-STFT | |||||||

| Task level | SE | SP | AS | HS | ||||

| 1-1 | 0.89 | 0.90 | 0.89 | 0.89 | ||||

| 1-2 | 0.68 | 0.94 | 0.81 | 0.79 | ||||

IV-B Task 2: recording level classification

Similarly, we experimented with the feature extraction techniques and worked with an additional machine learning architecture, the audio spectrogram transformer architecture (AST) since the models that we have experimented in Task 1 (R-STFT and L-STFT) did not achieve comparable results for Task 2. There are four different models trained for this task, which includes R-STFT, L-STFT, a pre-trained ResNet18 with Mel spectrogram as inputs (R-MEL), and an audio spectrogram transformer architecture with Wav2vec feature as inputs (AST-Wav2vec). The training results are presented in Tables II.

From Table II, R-STFT and L-STFT achieved comparable results for Task 2-1 since they have similar Harmonic Scores. One could argue that R-STFT performed better in Task 2-2 and L-STFT performed better in Task 2-1 since they achieved a higher Score for Task 2-2 and Task 2-1 respectively. However, the team has submitted R-STFT for the challenge since the training results for L-STFT was not yet available at the submission deadline. The testing results of Task 2 for R-STFT is presented in Table II.

| Training Results | ||||||||

|---|---|---|---|---|---|---|---|---|

| Model | R-STFT | L-STFT | R-MEL | AST-Wav2vec | ||||

| Task level | 2-1 | 2-2 | 2-1 | 2-2 | 2-1 | 2-2 | 2-1 | 2-2 |

| SE | 0.43 | 0.09 | 0.57 | 0.00 | 0.14 | 0.00 | 1.00 | 0.33 |

| SP | 0.70 | 0.95 | 0.83 | 0.87 | 0.95 | 1.00 | 0.00 | 0.00 |

| AS | 0.57 | 0.52 | 0.70 | 0.44 | 0.55 | 0.50 | 0.50 | 0.17 |

| HS | 0.53 | 0.16 | 0.68 | 0.00 | 0.24 | 0.00 | 0.00 | 0.00 |

| Score | 0.55 | 0.34 | 0.69 | 0.22 | 0.39 | 0.25 | 0.25 | 0.08 |

| Testing Results | ||||||||

| Model | R-STFT | |||||||

| Task level | SE | SP | AS | HS | ||||

| 2-1 | 0.77 | 0.66 | 0.72 | 0.71 | ||||

| 2-2 | 0.23 | 0.86 | 0.54 | 0.36 | ||||

V Discussion

Overall, R-STFT achieved the best performance for Task 1. For Task 2, the pre-trained ResNet18 models (R-STFT, R-MEL) and the LightCNN model (L-STFT) produced comparable results. We continued to experiment with R-STFT and L-STFT for Task 2, but chose not to train with L-MEL and L-Wav2vec since these two models did not achieve promising results for Task 1. Hence, for Task 2, we tried with different combinations of preprocessing methods and machine learning architectures, which includes R-STFT, L-STFT, R-MEL and AST-Wav2vec. In general, R-STFT, L-STFT, and R-MEL achieved comparable results for Task 2. On the other hand, AST-Wav2vec did not perform well for Task 2.

R-STFT, L-STFT, and R-MEL achieved a lower sensitivity and a higher specificity score for Task 2. This could be that most examples in the dataset were labeled as Normal, which leads to an imbalanced training set despite our effort to counteract such imbalance with weighed loss function. To improve the SE and SP scores of our classifier, we can consider utilizing a over-sampling technique to increase the samples of other classes [26].

VI Conclusion

We experimented with different combinations of machine learning models and feature extraction techniques to perform the classification of respiratory sounds. R-STFT yielded a better performance consistently compared to the other combinations, achieving Scores of 0.89, 0.80, 0.71, 0.36 for tasks 1-1, 1-2, 2-1 and 2-2, respectively on the testing sets of the IEEE BioCAS 2022 Grand Challenge on Respiratory Sound Classification. In future work, addressing the imbalanced samples is essential to improving sensitivity scores. Experimenting with more combinations of architectures and feature extraction techniques that better suit the nature of lung sounds, as well as adopting a methodical approach such as hyperparameter sweeping to select the best hyperparameters are both promising directions to further improve our classifier’s performance.

References

- [1] WHO. The top 10 causes of death. [Online]. Available: https://www.who.int/news-room/fact-sheets/detail/the-top-10-causes-of-death

- [2] L. Pham, H. Phan, A. Schindler, R. King, A. Mertins, and I. McLoughlin, “Inception-based network and multi-spectrogram ensemble applied to predict respiratory anomalies and lung diseases,” in 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC). IEEE, 2021, pp. 253–256. [Online]. Available: https://doi.org/10.1109/EMBC46164.2021.9629857

- [3] Y. Kim, Y. Hyon, S. S. Jung, S. Lee, G. Yoo, C. Chung, and T. Ha, “Respiratory sound classification for crackles, wheezes, and rhonchi in the clinical field using deep learning,” Scientific Reports, vol. 11, no. 1, p. 17186, Aug 2021. [Online]. Available: https://doi.org/10.1038/s41598-021-96724-7

- [4] N. Jakovljević and T. Lončar-Turukalo, “Hidden markov model based respiratory sound classification,” in International Conference on Biomedical and Health Informatics. Springer, 2017, pp. 39–43. [Online]. Available: https://doi.org/10.1007/978-981-10-7419-6_7

- [5] A. H. Falah and J. Jondri, “Lung sounds classification using stacked autoencoder and support vector machine,” in 2019 7th International Conference on Information and Communication Technology (ICoICT), 2019, pp. 1–5. [Online]. Available: https://doi.org/10.1109/ICoICT.2019.8835278

- [6] G. Chambres, P. Hanna, and M. Desainte-Catherine, “Automatic detection of patient with respiratory diseases using lung sound analysis,” 2018 International Conference on Content-Based Multimedia Indexing (CBMI), pp. 1–6, 2018. [Online]. Available: https://doi.org/10.1109/CBMI.2018.8516489

- [7] S. B. Shuvo, S. N. Ali, S. I. Swapnil, T. Hasan, and M. I. H. Bhuiyan, “A lightweight cnn model for detecting respiratory diseases from lung auscultation sounds using emd-cwt-based hybrid scalogram,” IEEE Journal of Biomedical and Health Informatics, vol. 25, no. 7, pp. 2595–2603, 2021. [Online]. Available: https://doi.org/10.1109/JBHI.2020.3048006

- [8] Z. Ren, T. T. Nguyen, and W. Nejdl, “Prototype learning for interpretable respiratory sound analysis,” 2021. [Online]. Available: https://arxiv.org/abs/2110.03536

- [9] K. Kochetov, E. Putin, M. Balashov, A. Filchenkov, and A. Shalyto, “Noise masking recurrent neural network for respiratory sound classification,” in International Conference on Artificial Neural Networks. Springer, 2018, pp. 208–217. [Online]. Available: https://doi.org/10.1007/978-3-030-01424-7_21

- [10] D. Ngo, L. Pham, A. Nguyen, B. Phan, K. Tran, and T. Nguyen, “Deep learning framework applied for predicting anomaly of respiratory sounds,” in 2021 International Symposium on Electrical and Electronics Engineering (ISEE). IEEE, 2021, pp. 42–47. [Online]. Available: https://doi.org/10.48550/arXiv.2012.13668

- [11] B. M. Rocha, D. Filos, L. Mendes, G. Serbes, S. Ulukaya, Y. P. Kahya, N. Jakovljevic, T. L. Turukalo, I. M. Vogiatzis, E. Perantoni, E. Kaimakamis, P. Natsiavas, A. Oliveira, C. Jácome, A. Marques, N. Maglaveras, R. P. Paiva, I. Chouvarda, and P. de Carvalho, “An open access database for the evaluation of respiratory sound classification algorithms,” Physiological Measurement, vol. 40, no. 3, p. 035001, mar 2019. [Online]. Available: https://doi.org/10.1088/1361-6579/ab03ea

- [12] Q. Zhang, J. Zhang, J. Yuan, H. Huang, Y. Zhang, B. Zhang, G. Lv, S. Lin, N. Wang, X. Liu, M. Tang, Y. Wang, H. Ma, L. Liu, S. Yuan, H. Zhou, J. Zhao, Y. Li, Y. Yin, L. Zhao, G. Wang, and Y. Lian, “Automated lung sound analysis in patients with pneumonia,” IEEE Transactions on Biomedical Circuits and Systems (TBioCAS), pp. 1–13, 2022.

- [13] X. Liu and A. G. Richardson, “Edge deep learning for neural implants: a case study of seizure detection and prediction,” Journal of Neural Engineering, vol. 18, no. 4, p. 046034, 2021.

- [14] Y. Ma, X. Xu, Q. Yu, Y. Zhang, Y. Li, J. Zhao, and G. Wang, “Lungbrn: A smart digital stethoscope for detecting respiratory disease using bi-resnet deep learning algorithm,” in 2019 IEEE Biomedical Circuits and Systems Conference (BioCAS), 2019, pp. 1–4. [Online]. Available: https://doi.org/10.1109/BIOCAS.2019.8919021

- [15] S. S. Stevens and J. Volkmann, “The relation of pitch to frequency: A revised scale,” The American Journal of Psychology, vol. 53, no. 3, pp. 329–353, 1940. [Online]. Available: https://doi.org/10.2307/1417526

- [16] A. Baevski, H. Zhou, A. Mohamed, and M. Auli, “wav2vec 2.0: A framework for self-supervised learning of speech representations,” CoRR, vol. abs/2006.11477, 2020. [Online]. Available: https://arxiv.org/abs/2006.11477

- [17] A. F. Agarap, “Deep learning using rectified linear units (relu),” arXiv preprint arXiv:1803.08375, 2018. [Online]. Available: https://arxiv.org/abs/1803.08375

- [18] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” in Advances in Neural Information Processing Systems 25, F. Pereira, C. J. C. Burges, L. Bottou, and K. Q. Weinberger, Eds. Curran Associates, Inc., 2012, pp. 1097–1105. [Online]. Available: http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

- [19] V. Basu and S. Rana, “Respiratory diseases recognition through respiratory sound with the help of deep neural network,” in 2020 4th International Conference on Computational Intelligence and Networks (CINE). IEEE, 2020, pp. 1–6. [Online]. Available: https://doi.org/10.1109/CINE48825.2020.234388

- [20] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in Proceedings of the 32nd International Conference on Machine Learning, ser. Proceedings of Machine Learning Research, F. Bach and D. Blei, Eds., vol. 37. Lille, France: PMLR, 07–09 Jul 2015, pp. 448–456. [Online]. Available: https://proceedings.mlr.press/v37/ioffe15.html

- [21] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in 2009 IEEE Conference on Computer Vision and Pattern Recognition, 2009, pp. 248–255. [Online]. Available: https://doi.org/10.1109/CVPR.2009.5206848

- [22] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga et al., “Pytorch: An imperative style, high-performance deep learning library,” Advances in neural information processing systems, vol. 32, 2019. [Online]. Available: https://doi.org/10.48550/arXiv.1912.01703

- [23] Y. Gong, Y.-A. Chung, and J. Glass, “AST: Audio Spectrogram Transformer,” in Proc. Interspeech 2021, 2021, pp. 571–575. [Online]. Available: https://doi.org/10.21437/Interspeech.2021-698

- [24] J. F. Gemmeke, D. P. W. Ellis, D. Freedman, A. Jansen, W. Lawrence, R. C. Moore, M. Plakal, and M. Ritter, “Audio set: An ontology and human-labeled dataset for audio events,” in 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2017, pp. 776–780. [Online]. Available: https://doi.org/10.1109/ICASSP.2017.7952261

- [25] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” 2014. [Online]. Available: https://arxiv.org/abs/1412.6980

- [26] Z. Afzal, M. J. Schuemie, J. C. van Blijderveen, E. F. Sen, M. C. Sturkenboom, and J. A. Kors, “Improving sensitivity of machine learning methods for automated case identification from free-text electronic medical records,” BMC Medical Informatics and Decision Making, vol. 13, no. 1, 2013. [Online]. Available: https://doi.org/10.1186/1472-6947-13-30