Classifying herbal medicine origins by temporal and spectral data mining of electronic nose

Abstract

The origins of herbal medicines are important for their treatment effect, which could be potentially distinguished by electronic nose system. Because the difference in the odor fingerprint of herbal medicines from different origins can be tiny, the discrimination of origins can be much harder than that of different categories. Therefore, better feature extraction methods are significant for this task to be more accurately done, but there lacks systematic studies on different feature extraction methods and a standardized manner to extract features from e-nose signals upon which most researchers agree. To investigate the effectiveness of multiple feature engineering approaches, we classified different origins of three categories of herbal medicines with different feature extraction methods: manual feature extraction, mathematical transformation, deep learning. With 50 repetitive experiments with bootstrapping, we compared the effectiveness of the extractions with a two-layer neural network w/o dimensionality reduction methods (principal component analysis, linear discriminant analysis) as the three base classifiers. Compared with the conventional aggregated features, the Fast Fourier Transform (FFT) method and our novel approach (longitudinal-information-in-a-line) showed an significant accuracy improvement() on all 3 base classifiers and all three herbal medicine categories, with the highest median classification accuracy 0.675 and 0.7 over 30 experiments. Two of the deep learning algorithm we applied also showed partially significant improvement: one-dimensional convolution neural network(1D-CNN) and a novel graph pooling based framework - multivariate time pooling (MTPool), with the highest median accuracy 0.75 and 0.65.

keywords:

electronic nose , feature engineering , herbal medicine originsPACS:

0000 , 1111MSC:

0000 , 1111[inst1]organization=Institute of Cyber-syste and Control, Zhejiang University,city=Hangzhou, postcode=310007, country=China

[inst3]organization=Institute of Intellegent Agricultural Equipment,Zhejiang university, city=Hangzhou, postcode=310007, country=China

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/88477490-0da9-47da-a6c2-e242a204ba20/x1.png)

Different feature extraction methods were systematically investigated on classifying origins of herbal medicines.

The proposed long-line method includes both aggregated and temporal information, which outperformed the conventional aggregated method.

Feature extraction method containing temporal information gained remarkable accuracy improvement with dimensionality reduction methods.

1 Introduction

Different alternative herbal medicines have distinct pharmaceutical values, because of not only different categories but also different geographical origins [1]. The practictioners of herbal medicines attach significance to the specific medicines originated from the best-known origins [2]. Therefore, the medicines from different geographical locations have a high variance in price, leaving space for frauds. Due to the tiny odor difference, there has been frauds in the herbal medicine markets that uses cheaper and inferior herbal medicine ingradients not originated from the most well-known origins [3, 4]. To get the better treatment effect for patients, it is necessary to distinguish both the categories and geographical origins of the alternative herbal medicines. However, the similarities in appearances and odors make it difficult for experts to discriminate herbal medicines of the same category but from different origins. An accurate and cheap analytic method capturing the subtle differences is in need.

Electronic nose (e-nose), has been proved to be effective and affordable in pattern recognition based on volatile organic compounds (VOCs). It has been successfully applied in lung cancer detection [5], dendrobiums identification [6], and herbal medicine category classification[7, 8, 9].

Although the previous research showed prototypical success in classifying herbal medicine origins with a set of engineered features[10], there is still much room for improvement. First, features extracted from e-nose signals significantly influences the classification performance. However, most previous publications in herbal medicine classification did not systematically compare the feature extraction methods and their respective effectiveness in terms of classification accuracy. As there is no universal features for e-nose agreed by researchers, several studies adopted the aggregated features and deemed them as a standard pipeline for e-nose pattern recognition [10, 11]. The aggregated features involved the steady-state and transient information[12] of the signals, which were mainly based on subjective domain-expert experience but only partially exploited the signal information without mining the temporal and spectral details encapsulated in the e-nose response signals. For example, Zhan et al. revealed the overabundance and low predictive power of some of the aggregated features [10]. Therefore, more universally applicable feature engineering methods worth further investigation. Second, in the previous publication to validate the feature extraction methods[10], leave-one-out cross validation was used. The test data were used both in hyperparameter tuning and model evaluation. This might lead to overestimated accuracy. Furthermore, the previous study [10] did not verify the model robustness or test the statistical significance with repeated experiments.

In this study, we improved our previous study on herbal medicine origin classification by systematically comparing different feature engineering methods with parallel experiments, to explore effective feature extraction methods with temporal and spectral information for higher classification accuracy. With a stricter model development and evaluation design, we also tested the statistical significance in the difference among various feature engineering approaches, which addressed the limited reproducibility issue in previous studies.

2 Materials and Methods

2.1 Experiment and dataset

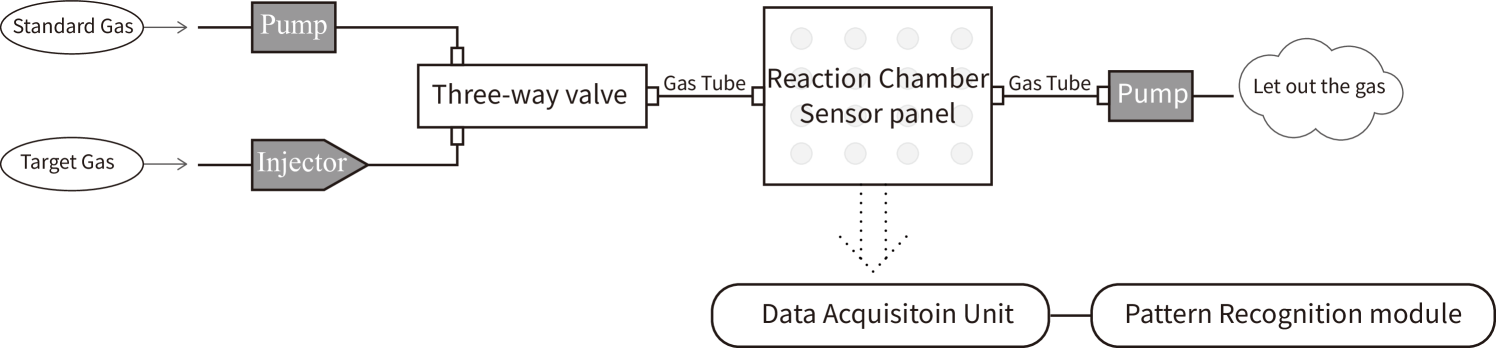

In this study, we chose three categories of alternative herbal medicines as the study cases and collected three independent datasets: Radix Angelicae, Angelica Sinensis and Radix Puerariaem. Each dataset included 160 samples from 4 different origins [10], as shown in Table 1. The data was collected at the State Key Laboratory of Industrial Control Technology, Zhejiang University from Dec.2017 to Jan.2018 with a self-assembled e-nose system [7, 10, 5, 11, 6]. This self-assembled e-nose system is composed with four modules: the gas conveying system, the sensor reaction chamber where the gas reacts with sensors, the data acquisition unit, and the pattern recognition module for the data processing and classification. The structure of the e-nose system is shown in Fig. 1. The gas conveying system involves two gas pumps and a three-way valve to control the gas flow of the target gas and the standard gas (dry and clean air).

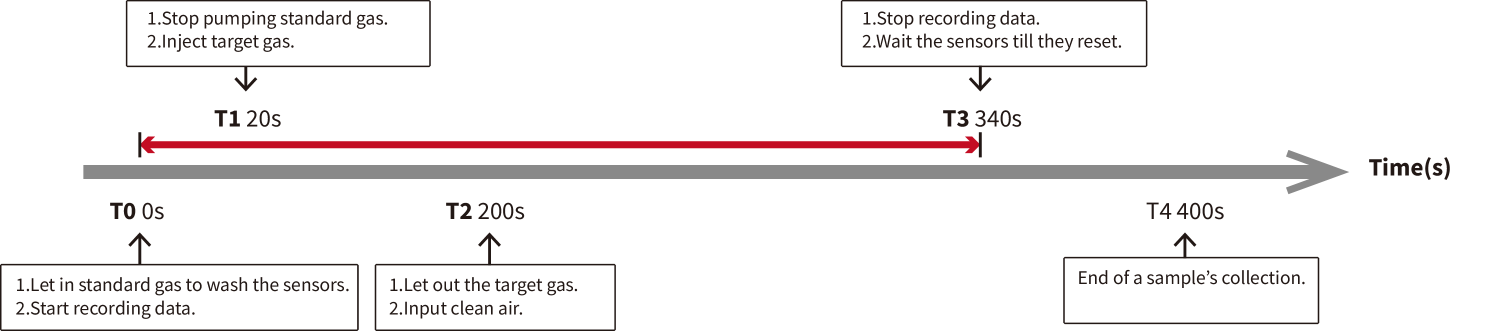

The sensor reaction chamber consists of 16 metal-oxide semi-conductive (MOS) sensors: TGS (Taguchi Gas Sensors) from Figaro Engineering Inc, Osaka, Japan [7, 8, 10], and the specialities of 16 sensors are displayed in Table 2. The data acquisition unit records the sensor reaction signals with a sampling rate of 100 Hz, with more details shown in Fig. 2. The entire process for a sample of target gas lasted for 400 seconds, and each sample of target gas stayed in the sensor reaction chamber for 180 seconds. The rest 220 seconds were for the sensors resetting process when we pumped the standard gas into the sensor reaction chamber for 20 seconds before pumping in the target gas and 200 seconds after pumping out the target gas.

For each type of the medicines, the following steps were applied to extract the target gas :

1. Grinded the alternative herbal medicines into powders with an electrical pulverizer.

2. Took 8 grams of the power and put it into a 125 ml glass jar, and then used a para-film to seal the jar.

3. Heated the sample powders for 10 hours in a 50 thermostatic chamber, and then enabled the volatile gases to diffuse in the glass jar for 10 more hours.

4. From the headspace of each glass jar, took 10 mL gas to be the target gas for each medicine sample.

Our experiment was done at laboratory where the environment temperature of 22 - 27 and the humidity was kept between 50 % - 70 %.

| Categories | Origin 1 | Origin 2 | Origin 3 | Origin 4 |

|---|---|---|---|---|

| Radix Angelicae | Anhui | Sichuan | Hubei | Zhejiang |

| Angelica Sinensis | Shaanxi | Gansu | Hubei | Sichuan |

| Radix Puerariae | Sichuan | Hubei | Anhui | Hunan |

| No. | The Type Of Sensors | Individual Response Sensitivity | ||

|---|---|---|---|---|

| 1 | TGS800 | Carbon monoxide, ethanol, methane, hydrogen, ammonia | ||

| 2 | TGS813 | Carbon monoxide, ethanol, methane, hydrogen, isobutane | ||

| 3 | TGS813 | Carbon monoxide, ethanol, methane, hydrogen, isobutane | ||

| 4 | TGS816 | Carbon monoxide, ethanol, methane, hydrogen, isobutane | ||

| 5 | TGS821 | Carbon monoxide, ethanol, methane, hydrogen | ||

| 6 | TGS822 |

|

||

| 7 | TGS822 |

|

||

| 8 | TGS826 | Ammonia, trimethyl amine | ||

| 9 | TGS830 | Ethanol, R-12, R-11, R-22, R-113 | ||

| 10 | TGS832 | R-134a, R-12 and R-22, ethanol | ||

| 11 | TGS880 | Carbon monoxide, ethanol, methane, hydrogen, isobutane | ||

| 12 | TGS2620 | Methane, Carbon monoxide, isobutane, hydrogen | ||

| 13 | TGS2600 | Carbon monoxide, hydrogen | ||

| 14 | TGS2602 | Hydrogen, ammonia ethanol, hydrogen sulfide, toluene | ||

| 15 | TGS2610 | Ethanol, hydrogen, methane, isobutane/propane | ||

| 16 | TGS2611 | Ethanol, hydrogen, isobutane, methane |

2.2 Feature extraction

2.2.1 Manual extraction methods

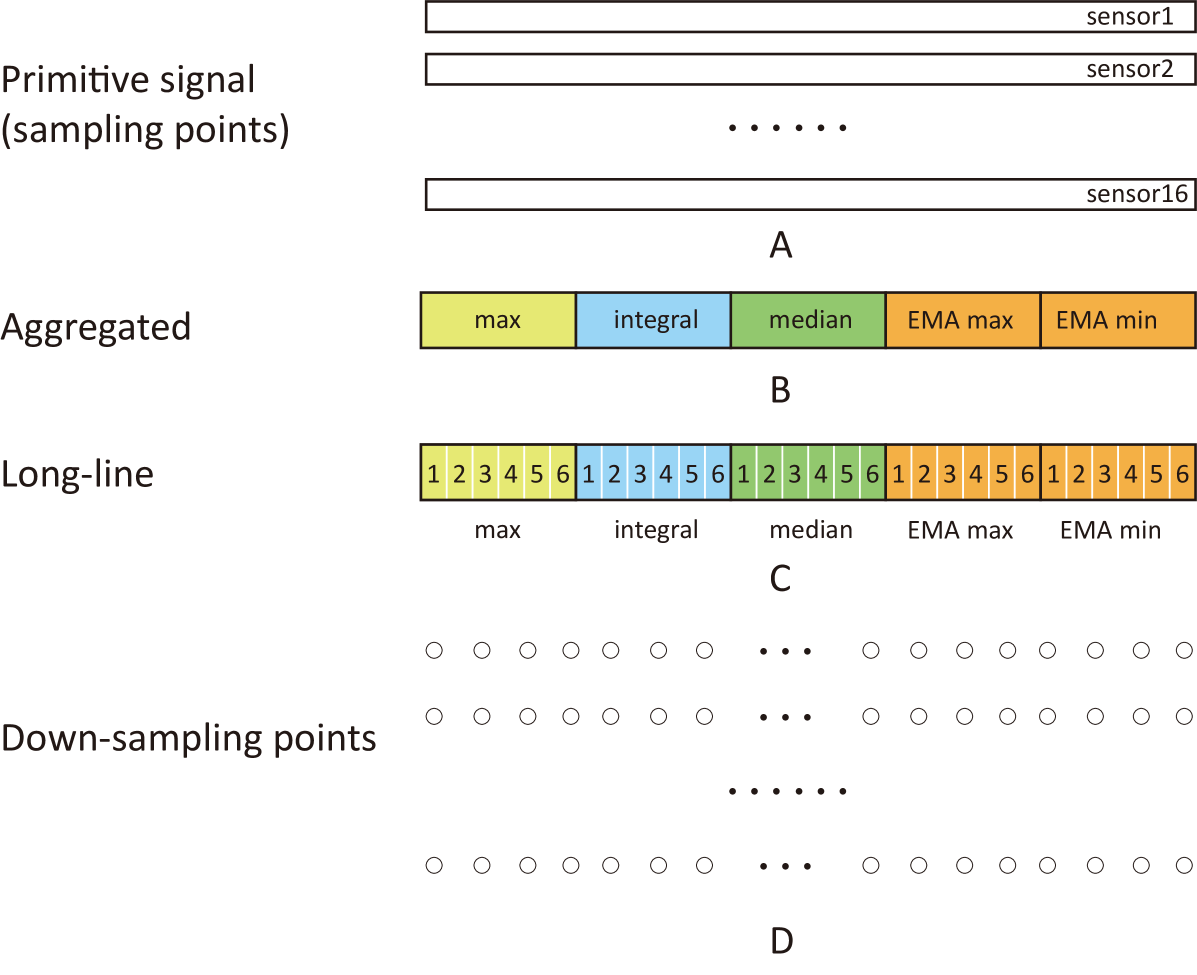

The manual feature extraction methods are shown in Fig. 3 and Table. 3. The primitive signal contained the 16-sensor responses in 318 seconds with a sampling rate of 100Hz, with baseline already removed from these signals:

| (1) |

In Fig. 3 A, each bar denotes a temporal response of one sensor. We firstly introduced the sampling rate as a variable in feature engineering: as the signals were not changing rapidly, we used the down-sampling method with a sampling rate of 1Hz to reduce dimensionality. Then, we took the aggregated feature extraction method which extracted 5 features from each sensor, including:

1. The maximum voltage:

| (2) |

2. The integral value of voltage:

| (3) |

3. The median of the temporal data series:

| (4) |

4-5. The maximum and minimum value of the exponential moving average (EMA) of the derivative of voltage, with , :

| (5) |

.

| (6) |

| (7) |

the effective of these aggregated features were proved effective in the previous publication[10, 7]. Then, we introduced a new method derived from the aggregated feature extraction method: the longitudinal-information-in-a-line (long-line) method. It was a variant version of the aggregated method proposed by us, with the aggregated features extracted from 6 separate time windows instead of the entire time range. In Fig. 3 B, each cell (e.g. max) denotes an array of the results extracted from 16 sensors. In fig.3 C, each sub-cell (e.g. the sub-cell in max) denotes an array with results from 16 sensors.

| Feature extraction types | Method | Dimensionality reduction method | Classifier |

| Manual Extraction | Aggregated | PCA, LDA | DNN |

| long-line | PCA, LDA | DNN | |

| Signal Sampling | Sampling points | PCA, LDA | DNN |

| down-sampling points | PCA, LDA | DNN | |

| Time-frequency Transformation | Fast Fourier transformation | PCA, LDA | DNN |

| Scalogram | PCA, LDA | VGG+DNN | |

| Deep Learning | LSTM | ||

| 1D-CNN | |||

| MTPool | |||

2.2.2 Mathematical transformation

To extract spectral features, we applied two time-frequency transformations (shown in Table. 3): Fast Fourier transform (FFT) and scalogram analysis. The FFT method extracts the spectral densities of a signal, which decomposes the original sequence into several components with different frequencies[13, 14]. Scalogram uses continuous wavelet transform filter bank (implemented with MATLAB R2020a) to decompose signals and display the spatio-temporal information of a signal with the graph format[15]. In this study, the sampling signals in Fig.3 A was used as the transformation input. We took the magnitudes of FFT results. Because after inspecting the spectral density distribution, we found the main frequency components gathered in 0-0.5HZ. We then used 10 frequency windows with a length of 0.05Hz to extract the spatial density information. The maximum, mean and median values in each window were collected as the featrues. The scalogram method requires image recognition method to extract numeral features from the scalograms, and therefore, we adopted pre-trained large convolutional neural network - VGG16[16, 17] to preprocess the scalogram features and condense the spatial-temporal information involved in the scalograms. To extract the coarse and detailed information of scalograms such as the edges and lines, we chose VGG’s fully connected layer 7 as the output before further processing.

2.2.3 Deep learning algorithms

To explore the data-driven feature extraction methods, we employed three deep learning algorithms (shown in Table.3): recurrent neural network with long short-term memory (LSTM), one-dimensional convolution neural network (1D-CNN), a newly proposed graph pooling based framework (MTPool) specific for multi-sensor data pattern recognition. Those algorithms can learn parameters to extract features from the primitive signals in a data-driven manner. Considering the size of our dataset, the computaional cost and to avoid overfitting, we chose the down-sampling points in Fig.3 D as the input. LSTM is a recurrent neural network (RNN) suited for time series data, and it is featured with the connection gates to utilize the information in previous state (also called memories)[18, 19]. We designed an LSTM layer followed by a linear layer for further information processing, which was further connected to the output layer. As for the 1D-CNN approach, it is a special deep neural network which uses a 1-D convolution kernel to generate the information in a graph, and the kernel only moves in time rather than across sensors [20, 21] to utilize the temporal relationship of each sensor. After the convolution operation, three fully connected layers were employed to further process the information and give the output. MTPool is a novel graph pooling based framework[22], which uses pairwise dependencies of multivariate time series to refine the nodes [23],[24]. The proposal of MTPool is based on the fact that current deep learning methods for MTSC have two limitations: (1) models which depend on the convolutional or recurrent neural networks cannot explicitly model the pairwise dependencies among variables; (2) current spatial-temporal models based on GNNs are inherently flat and cannot hierarchically aggregate hub data [25]. To overcome these challenges, MTPool views the MTSC task as a graph classification task and use a graph pooling-based method to deal with it. MTPool first converts MTS slices to graphs to attain the spatial-temporal features with graph structure learning and temporal convolution. In addition, MTPool utilizes a novel graph pooling strategy, where an ’encoder-decoder’ mechanism is used to determine adaptive clustering centroids for cluster assignments. Then GNNs and graph pooling layers are used for joint graph representation learning and graph coarsening, after which the graph is progressively coarsened to one node. At last, a differentiable classifier takes this coarsened one-node graph as input to get the final predicted class. What must be mentioned here, the hyperparameters of the deep learning network structure, such as the number of fully connected layers after the LSTM layer and the 1-D convolution layer, were tuned based on the validation data, which will be introduced in the next subsection.

2.3 Prediction and evaluation protocol

To avoid overestimating the classification accuracy, we adopted the hold-out test protocol instead of the ’leave-one-out’ validation protocol which was used in our previous study [10]: the test set for model evaluation was independent of the training and hyperparameter tuning processes. For each category in Table 1, we randomly selected 120 samples to be the training set, leaving the rest 40 for testing.

For manual extraction and mathematical transformation methods, because the feature extraction was independent of the class labels, we firstly applied the feature extraction methods on the entire dataset, and then partitioned the entire dataset into the training set and the test set. In this study, we chose DNN as the basic classifier because it is flexible and does not require strict model and data distribution assumptions.

Because dimensionality reduction is commonly used in many classification studies, as we are comparing the effectiveness of different feature extraction, we also evaluated the classification performances of these feature extraction methods in combination with the dimensionality reduction approaches for a more comprehensive conclusion. We employed 2 dimensionality reduction methods: principal component analysis (PCA), linear discriminant analysis (LDA), and combine them with the base classifier DNN to form the following three base classifiers: DNN, PCA-DNN, LDA-DNN. To tune the hyperparameters of the classifiers, we used a 5-fold cross validation protocol within the training set. The hyperparameters included: the length of width of the fixed spectral window in the FFT method, the reduced dimensionality in PCA and LDA, the image processing network (VGG or Inception) and its output layer, the number of hidden layers and number of hidden units of DNN, and the number of fully connected layers and the hidden units of 1D-CNN. To test the statistical significance in the difference in accuracy of various feature engineering methods, we repeated the experiment for 50 times by bootstrapping the 120 training samples with different random seeds, and then we recorded the prediction accuracy on the test set. With the fifty results, we performed Wilcoxon signed-rank tests to test statistical significance. The paired t-test was not used in this study, because the Shapiro-Wilk tests rejected the normality assumption of some groups of accuracy results and Wilcoxon signed-rank does not rely on the normal distribution assumption.

For the deep learning methods, as the training and validation took a longer time, we directly partitioned the entire dataset into 80/40/40 for train/validation/test sets. The validation set was used for hyperparameter tuning process, which included the convolution kernel size and the network structure in 1D-CNN, the hidden unit dimensionality, number of units in the linear layer after the recurrent layer, learning rate and training epochs in LSTM, the number of centroid heads and pooling layers in MTPool. With the hyperparameters tuned, we retrained the deep learning models using training set in the same bootstrapping pipeline, and tested its prediction accuracy performance on test set. We took the feature extraction method used in previous studies (aggregated features) as the baseline [10, 7], and applied Wilcoxon signed-rank tests to test the statistical significance.

3 Results

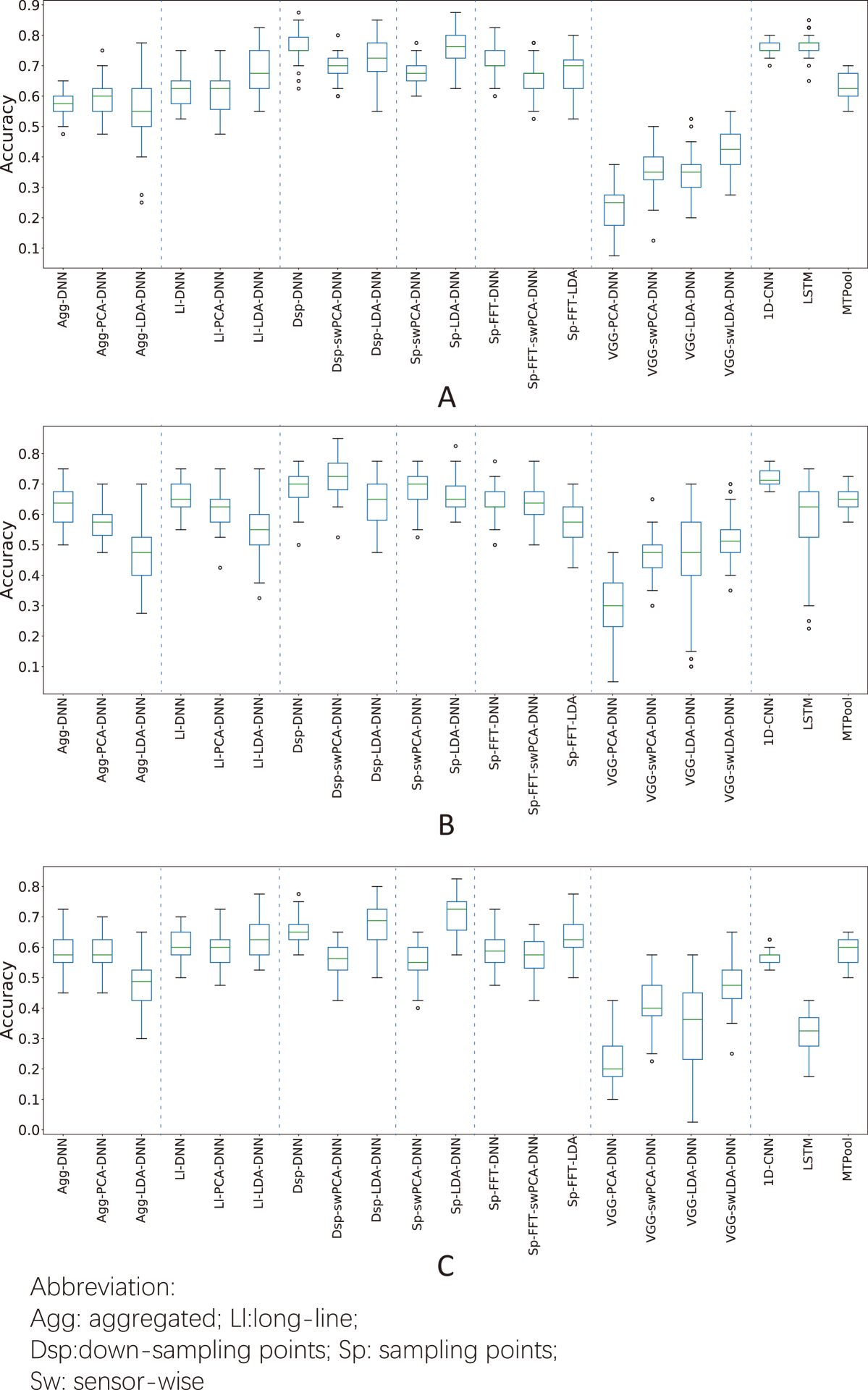

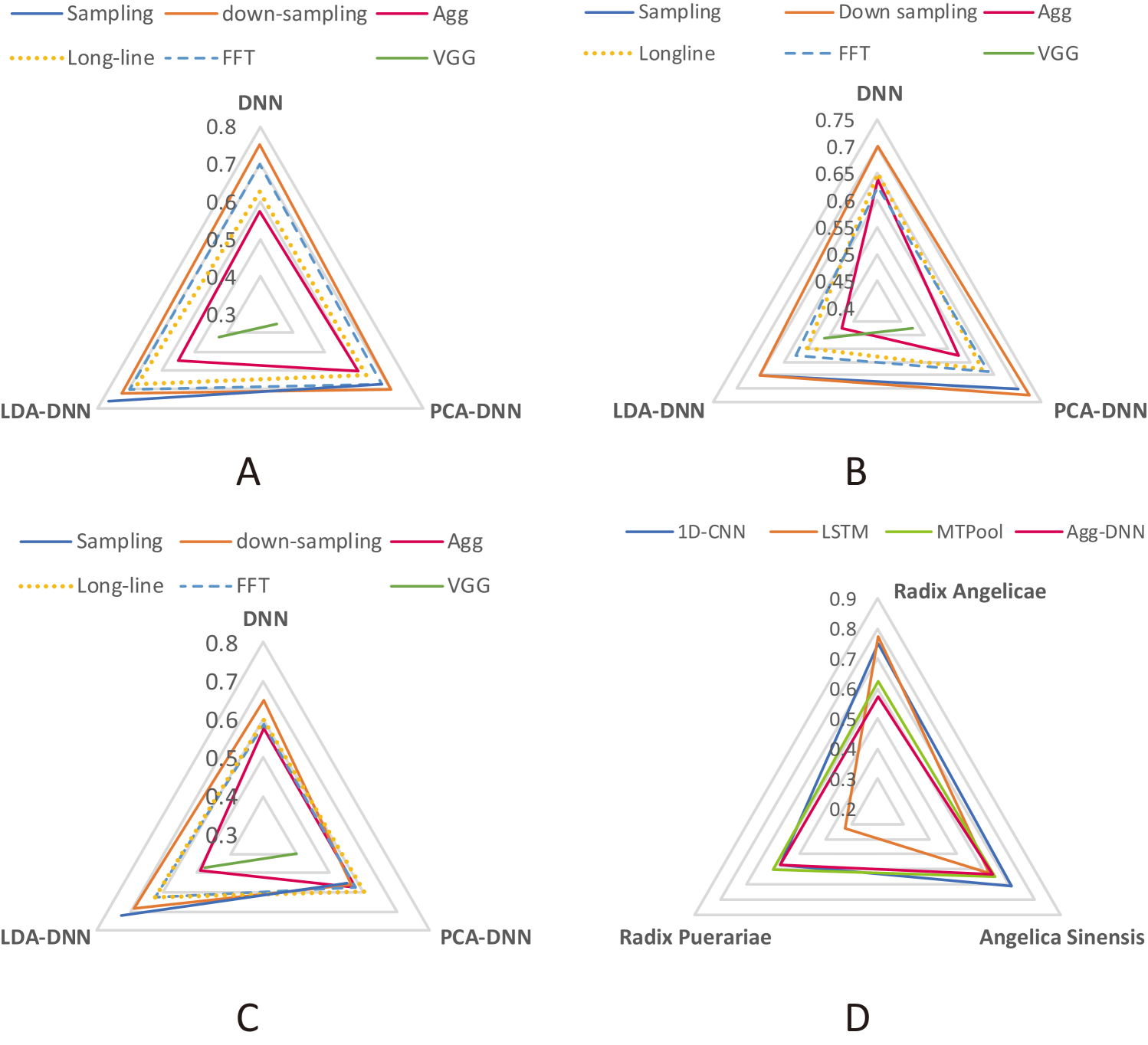

The accuracies of different feature extraction methods in the herbal medicine origin classification are shown in Fig. 5. For Radix Angelicae, except for the scalogram with VGG network, all the other feature extraction methods outperformed baseline on three classifiers(). The highest median classification accuracy was 0.775 reached by LSTM. The signal sampling methods(down-sampling points and sampling points) generally manifest a higher accuracy improvement than manual extraction methods (baseline and long-line), when working on a same classifier.

For Angelica Sinensis, the long-line, down-sampling, sampling and FFT methods outperformed the baseline when working on a classifier(). For deep learning methods, only 1D-CNN outperformed the baseline (median accuracy: 0.625).

For Radix Puerariae, long-line and FFT methods performed better than baseline on all classifiers. The best performance was from sampling points with LDA-DNN as the classifier (median accuracy: 0.725).

4 Discussion

Compared with the conventional aggregated features, the long-line and FFT feature extraction methods generally improved the classification accuracy with three classifiers on classifying different origins for all three different categories of herbal medicines. Furthermore, two feature extraction methods always performed better than baseline when we set the classifier as a fixed variable: the down-sampling points with DNN and LDA-DNN, sampling points methods with LDA-DNN. As for the deep learning methods, 1D-CNN and MTPool showed a general improvement on the origin classification tasks for three different categories of herbal medicines, which also manifested the power of deep learning. Compared with deep learning, the manual feature extraction methods (such as long-line, FFT) still show better effectiveness on our dataset. This is probably due to the small number of our samples, which may lead to the overfitting in the complicated deep learning models.

Based on the results and comparison among different feature engineering approaches, we provide the users who are willing to classify herbal medicine origins with e-nose with the following general suggestions on feature extraction strategies: with undetermined classifiers, for higher robustness in high classification accuracy, the long-line method, and the FFT approach are preferred than the conventional aggregated method; To pursue a faster feature extraction and classification process, down-sampling points and sampling points with LDA-DNN are recommended.

This study showed more convenient and efficient feature extraction methods than the aggregated features which have been used in many previous studies. However, in the experiments, the advantages of those methods might slightly vary when they are adopted on different categories of herbal medicines. This was probably due to the limitation of our small-scale dataset, which may lead to variance in the results. Therefore, further validation will be done on larger datasets with more categories of herbal medicines originated from different regions.

Besides the comprehensive analysis and comparison of feature extraction methods in this dataset, we published this dataset with the feature engineering methods we used (aggregated features, long-line, down-sampling points, sampling points, FFT) on Github (https://github.com/xzhan96-stf/Herbal-medicine-origin-e-nose) for researchers to develop better algorithms, which can be compared with the results in this study as the benchmark.

5 Conclusion

In this study, different feature extraction methods were investigated in the classification tasks where herbal medicine origins were discriminated, with the primitive signals collected by an e-nose system. To get a more credible conclusion, those approaches were individually tested on three categories of herbal medicine (each one has 4 origins) as three independent dataset. The feature extraction methods fall into four categories: signal sampling, manual extraction methods, mathematical transformation, and deep learning algorithms. Working with three basic classifiers ( DNN, LDA-DNN, PCA-DNN), the effectiveness of these approaches were compared. In general, with a fixed clssifier, the extraction methods with both temporal information and expert knowledge (such as longline and FFT) performs well and robustly. However, when working with a strong dimensionality reduction method (such as LDA, down sampling), the extraction methods containing more temporal information (such as down-sampling points and sampling points with LDA-DNN) can sometimes reach remarkably high accuracy. As there is no standard feature extraction pipeline for mining electronic nose data, this study provides the research community with a general guidance on the potential better choice of features when work with electronic nose to improve the accuracy of pattern recognition.

References

- [1] N. Li, Y. Wang, and K. Xu, “Fast discrimination of traditional chinese medicine according to geographical origins with ftir spectroscopy and advanced pattern recognition techniques,” Optics Express, vol. 14, no. 17, pp. 7630–7635, 2006.

- [2] F.-S. Li and J.-K. Weng, “Demystifying traditional herbal medicine with modern approach,” Nature plants, vol. 3, no. 8, pp. 1–7, 2017.

- [3] V. Scheid, D. Bensky, A. Ellis, and R. Barolet, Chinese herbal medicine: formulas & strategies. Eastland press, 2009.

- [4] S. Nishibe, “The plant origins of herbal medicines and their quality evaluation,” Journal-pharmaceutical society of japan, vol. 122, no. 6, pp. 363–380, 2002.

- [5] X. Zhan, Z. Wang, M. Yang, Z. Luo, Y. Wang, and G. Li, “An electronic nose-based assistive diagnostic prototype for lung cancer detection with conformal prediction,” Measurement, vol. 158, p. 107588, 2020.

- [6] Y. Wang, J. Diao, Z. Wang, X. Zhan, B. Zhang, N. Li, and G. Li, “An optimized deep convolutional neural network for dendrobium classification based on electronic nose,” Sensors and Actuators A: Physical, vol. 307, p. 111874, 2020.

- [7] X. Zhan, X. Guan, R. Wu, Z. Wang, Y. Wang, and G. Li, “Discrimination between alternative herbal medicines from different categories with the electronic nose,” Sensors, vol. 18, no. 9, p. 2936, 2018.

- [8] X. Zhan, X. Guan, R. Wu, Z. Wang, Y. Wang, Z. Luo, and G. Li, “Online conformal prediction for classifying different types of herbal medicines with electronic nose,” 2018.

- [9] L. Liu, X. Zhan, R. Wu, X. Guan, Z. Wang, W. Zhang, Y. Wang, Z. Luo, and G. Li, “Boost ai power: Data augmentation strategies with unlabelled data and conformal prediction, a case in alternative herbal medicine discrimination with electronic nose,” IEEE Sensors Journal (Accepted), 2021.

- [10] X. Zhan, X. Guan, R. Wu, Z. Wang, Y. Wang, and G. Li, “Feature engineering in discrimination of herbal medicines from different geographical origins with electronic nose,” in 2019 IEEE 7th International Conference on Bioinformatics and Computational Biology (ICBCB). IEEE, 2019, pp. 56–62.

- [11] Z. Wang, X. Sun, J. Miao, Y. Wang, Z. Luo, and G. Li, “Conformal prediction based on k-nearest neighbors for discrimination of ginsengs by a home-made electronic nose,” Sensors, vol. 17, no. 8, p. 1869, 2017.

- [12] A. Vergara, S. Vembu, T. Ayhan, M. A. Ryan, M. L. Homer, and R. Huerta, “Chemical gas sensor drift compensation using classifier ensembles,” Sensors and Actuators B: Chemical, vol. 166, pp. 320–329, 2012.

- [13] E. O. Brigham, The fast Fourier transform and its applications. Prentice-Hall, Inc., 1988.

- [14] W. T. Cochran, J. W. Cooley, D. L. Favin, H. D. Helms, R. A. Kaenel, W. W. Lang, G. C. Maling, D. E. Nelson, C. M. Rader, and P. D. Welch, “What is the fast fourier transform?” Proceedings of the IEEE, vol. 55, no. 10, pp. 1664–1674, 1967.

- [15] J. M. Lilly and S. C. Olhede, “Generalized morse wavelets as a superfamily of analytic wavelets,” IEEE Transactions on Signal Processing, vol. 60, no. 11, pp. 6036–6041, 2012.

- [16] Z. Ren, K. Qian, Z. Zhang, V. Pandit, A. Baird, and B. Schuller, “Deep scalogram representations for acoustic scene classification,” IEEE/CAA Journal of Automatica Sinica, vol. 5, no. 3, pp. 662–669, 2018.

- [17] C. Alippi, S. Disabato, and M. Roveri, “Moving convolutional neural networks to embedded systems: the alexnet and vgg-16 case,” in 2018 17th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN). IEEE, 2018, pp. 212–223.

- [18] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural computation, vol. 9, no. 8, pp. 1735–1780, 1997.

- [19] A. Graves, M. Liwicki, S. Fernández, R. Bertolami, H. Bunke, and J. Schmidhuber, “A novel connectionist system for unconstrained handwriting recognition,” IEEE transactions on pattern analysis and machine intelligence, vol. 31, no. 5, pp. 855–868, 2008.

- [20] S. Albawi, T. A. Mohammed, and S. Al-Zawi, “Understanding of a convolutional neural network,” in 2017 International Conference on Engineering and Technology (ICET). Ieee, 2017, pp. 1–6.

- [21] K. O’Shea and R. Nash, “An introduction to convolutional neural networks,” arXiv preprint arXiv:1511.08458, 2015.

- [22] H. Xu, Z. Duan, Y. Bai, Y. Huang, A. Ren, Q. Yu, Q. Zhang, Y. Wang, X. Wang, Y. Sun et al., “Multivariate time series classification with hierarchical variational graph pooling,” arXiv preprint arXiv:2010.05649, 2020.

- [23] H. Xu, Y. Huang, Z. Duan, J. Feng, and P. Song, “Multivariate time series forecasting based on causal inference with transfer entropy and graph neural network,” arXiv preprint arXiv:2005.01185, 2020.

- [24] Y. Wang, Z. Duan, Y. Huang, H. Xu, J. Feng, and A. Ren, “Mthetgnn: A heterogeneous graph embedding framework for multivariate time series forecasting,” arXiv e-prints, pp. arXiv–2008, 2020.

- [25] R. Ying, J. You, C. Morris, X. Ren, W. L. Hamilton, and J. Leskovec, “Hierarchical graph representation learning with differentiable pooling,” arXiv preprint arXiv:1806.08804, 2018.