Closed-form control with spike coding networks

Abstract

Efficient and robust control using spiking neural networks (SNNs) is still an open problem. Whilst the behaviour of biological agents is produced through sparse and irregular spiking patterns, which provide both robust and efficient control, the activity patterns in most artificial spiking neural networks used for control are dense and regular, resulting in potentially less efficient codes. Additionally, most existing control solutions require network training or optimization, even for fully identified systems, which complicates their implementation in on-chip low-power solutions. The neuroscience theory of Spike Coding Networks (SCNs) offers a fully analytical solution for implementing dynamical systems in recurrent spiking neural networks while maintaining irregular, sparse, and robust spiking activity, but it is not clear how to directly apply it to control problems. Here, we extend SCN theory by incorporating closed-form optimal estimation and control. The resulting networks work as a spiking equivalent of a linear–quadratic–Gaussian controller. We demonstrate robust spiking control of simulated spring-mass-damper and cart-pole systems in the face of several perturbations, including input- and system-noise, system disturbances, and neural silencing. As our approach does not need learning or optimization, it offers opportunities for deploying fast and efficient task-specific on-chip spiking controllers with biologically realistic activity.

Index Terms:

Spiking Neural Networks, State Estimation, Optimal Control, Spike Coding Networks, Dynamical Systems.

I Introduction

Brain and behaviour are inseparable. The activity of complex networks of neurons is strongly linked to the capacity of biological agents to move and interact in the world. These networks control the body through sparse spiking activity [1, 2], providing high energy-efficiency and robustness against perturbations (e.g. noise or neural silencing) [3, 4, 5]. Whilst SNNs have improved markedly in recent years, driven by developments in neuromorphic hardware and learning algorithms [6, 7], there are still open challenges in applying them to control problems.

First, the majority of SNN-based solutions rely on training or otherwise optimizing model parameters [8, 9, 6], even for fully identified systems. Analytical solutions are desirable for control: they are interpretable, are well suited to identified systems, are quick and efficient to implement and deploy, and are amenable to theoretical explorations of stability and function. In control theory, such fully analytical solutions are often possible, but they are difficult to implement directly in SNNs because of the highly nonlinear and discontinuous nature of spiking dynamics.

Second, biological spiking codes are generally highly irregular and sparse [10, 11] — and require little energy. In contrast, SNN-based control solutions often produce and require highly dense and regular activity (e.g., [12, 13]), and are hence inefficient [14]. How we can reconcile precise and efficient control with a more biological irregular spiking code is an open problem.

While spiking irregularity is usually considered a consequence of noise, according to the neuroscience theory of spike coding networks (SCNs) it could also be a signature of a highly precise spiking code. SCN theory follows a similar principle as the theory of predictive coding. Neurons only fire when the network’s prediction error exceeds a threshold value, efficiently constraining this error. The resulting neural spiking activity is ‘coordinated’ across the neuron population, producing sparse and irregular patterns [15, 16], and is highly robust against several biological perturbations [17, 16]. SCNs have the great advantage that they are defined analytically — through a closed-form solution for the recurrent connectivity. They thus have the potential to solve both above challenges. However, while the SCN framework permits us to analytically implement [15, 18] or learn [19, 20, 21] any dynamical system, it is an open problem how they can be used to both estimate and control the state of an external system.

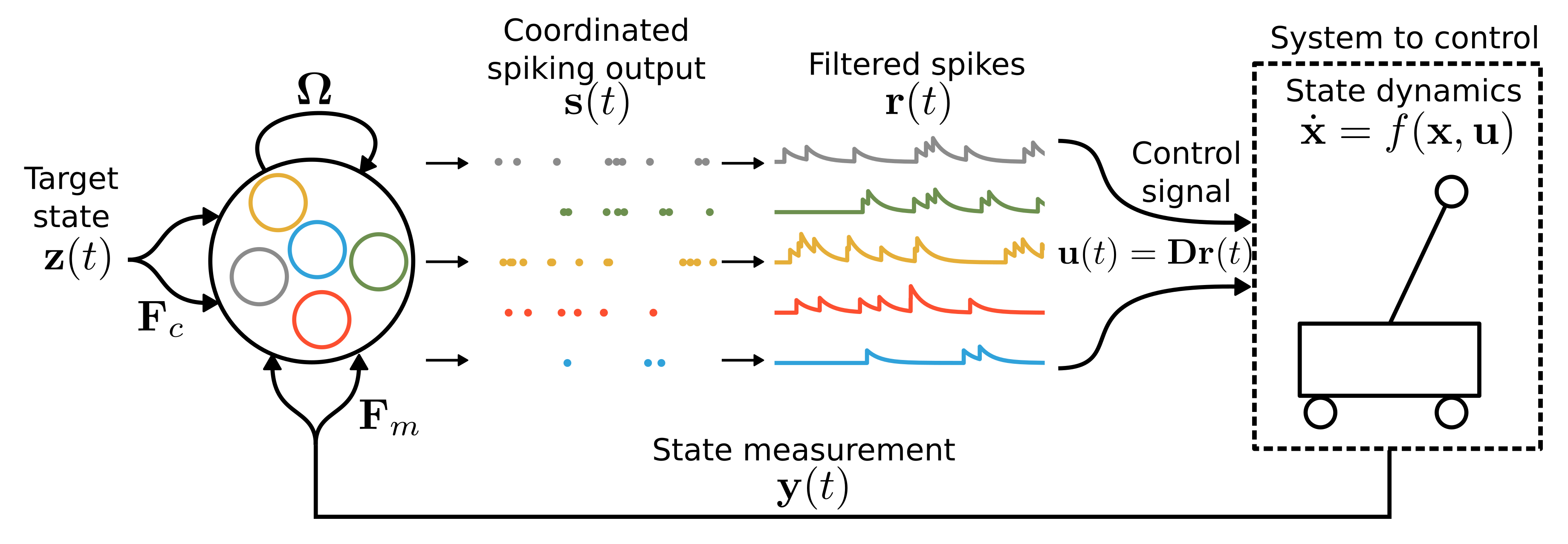

In this paper we combine optimal control and SCN theory to address both of the above challenges, providing a closed-form solution for optimal control using spiking neural networks, given a well identified system, while producing realistic and robust spiking patterns (Fig. 1). Our proposed method for estimation and control both expands on a promising theory for understanding biological spiking activity and provides a major step towards developing low-power, high-efficiency, robust, and task-specific neuromorphic controllers.

I-A Contribution

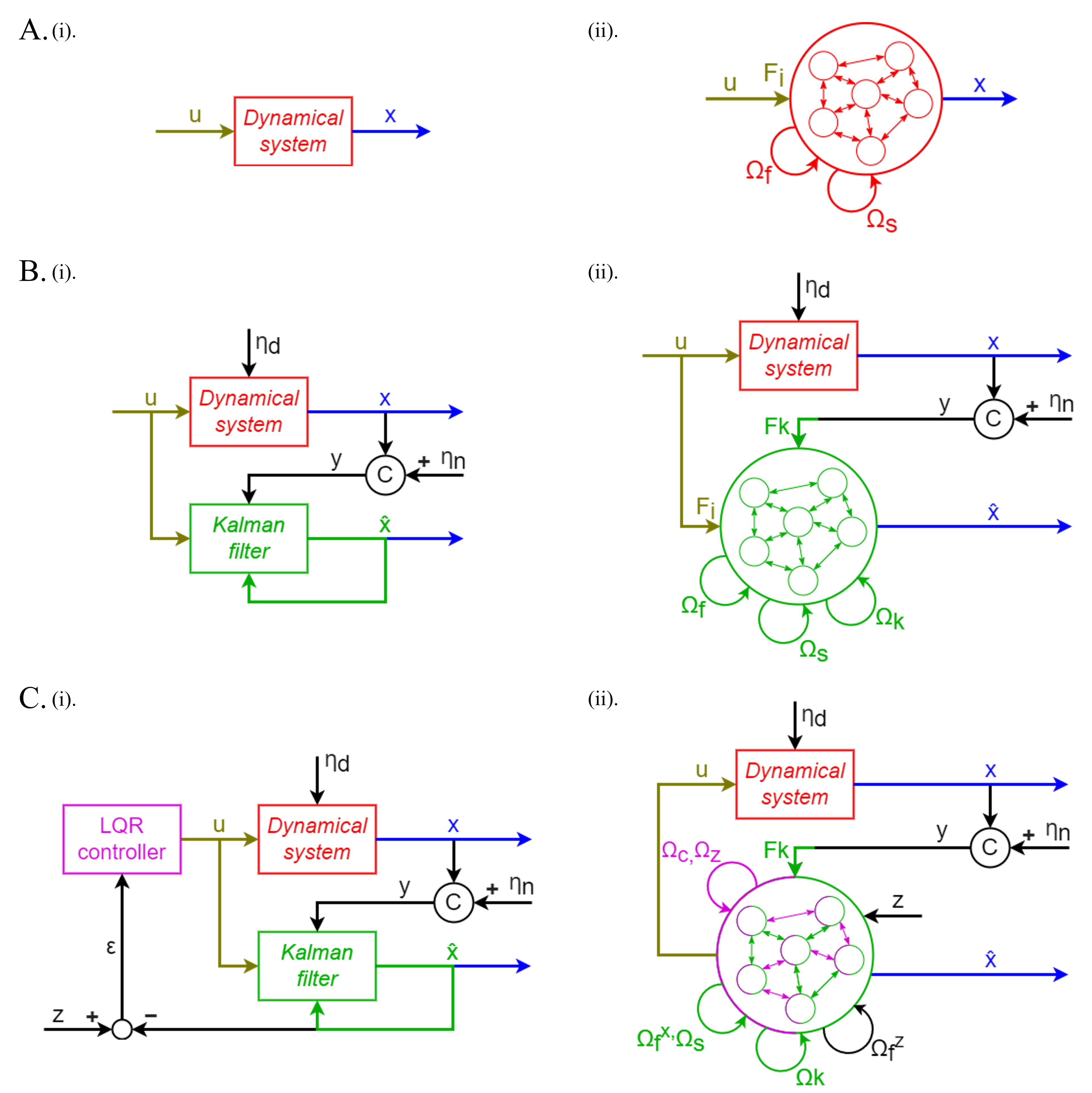

We mathematically formalize the link between optimal control of dynamical systems and the SCN framework. In particular, we analytically derive the spiking equivalent of the linear–quadratic–Gaussian (LQG) control problem: the combination of a linear quadratic estimator (Kalman filter) and a linear–quadratic regulator (LQR) controller (Fig. 2B and C). We show that our proposed networks (i) accurately estimate and control well-known dynamical systems, achieving similar performance to their non-spiking counterparts, and, importantly, (ii) preserve the irregular and sparse spiking patterns and the robustness to neural silencing.

I-B Related SCN literature

Here, we non-exhaustively review the main lines of research that followed an approach similar to ours. For recent reviews on SNNs related to robotics and control see [8, 22]. Our work is based on [15], which originally showed how any linear dynamical system can be analytically implemented in an SCN. While there have been several follow-up papers on extending the framework for general computations [19, 23, 18], control has been less well studied. In [21], spike coding networks were extended by adding learning rules for connectivity weights. This allowed them to perform forward prediction of the dynamical system state. As an additional result, the authors showed how to control a pendulum by using the network to simulate multiple future trajectories of the pendulum with different control policies. However, the control algorithm is not encoded within the network and does not provide robust estimation. In [24, 25], the authors proposed an analytical SCN-inspired framework for control. They mainly focused on producing the correct control signal, and derived network connectivities accordingly. The framework requires fully observable systems, and it does not provide a general, clear and simple mathematical model that is in line with both the control and neuroscience communities. Furthermore, the authors did not investigate the beneficial properties of the spiking patterns generated by the coordination of the neurons. Here, we provide a simpler analytical derivation more in line with existing SCN theory, derive networks for both estimation and control of partially observable systems, and investigate the robustness properties of the control and networks in more detail. Overall, our approach takes inspiration from the broader SCN literature to provide a unified mathematical framework to analytically compute optimal control in SNNs.

II Methods

II-A The spiking control problem

We have a system whose state we would like to control with a spiking signal emitted from a recurrent SNN (Fig. 1). The SNN is provided with some incomplete measurements of the system state and a target state, and has to generate a control signal.

The spiking patterns are generated according to some underlying voltage dynamics. There are many such models of varying complexities, but for most practical applications networks of leaky integrate-and-fire (LIF) neurons suffice. A network of such neurons is then defined by

| (1) |

where the terms comprise the vector of neural voltages, the input weights applied to an input signal, the emitted spikes, the filtered spike-trains (see Fig. 1), and independent voltage noise. Finally, there are fast synapses, which affect the post-synaptic voltage instantaneously following a spike (through the fast recurrent connections), and slow synapses, which cause an initially slow change in voltage (through the slow recurrent connections).

Whenever a given neuron's voltage reaches its threshold, that neuron emits a spike at that time (producing the spike-trains). The voltage is then reset to some resting value (here implemented through the diagonal elements of the fast recurrent connections).
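The voltage dynamics, threshold and reset described above can be sketched as a forward-Euler simulation. This is a minimal illustration, not the authors' implementation: the function name `simulate_lif`, the argument names, the leak value and the noise scaling are all assumptions made for the example.

```python
import numpy as np

def simulate_lif(F, c, omega_f, omega_s, thresholds, lam=10.0, dt=1e-3,
                 sigma=0.0, seed=0):
    """Forward-Euler simulation of a recurrent LIF network (illustrative).

    F: (N, K) input weights; c: (steps, K) input signal;
    omega_f / omega_s: (N, N) fast / slow recurrent weights.
    Returns the spike raster and the filtered spike trains, both (steps, N).
    """
    rng = np.random.default_rng(seed)
    steps, n = c.shape[0], F.shape[0]
    V = np.zeros(n)                      # membrane voltages
    r = np.zeros(n)                      # filtered spike trains
    spikes = np.zeros((steps, n))
    rates = np.zeros((steps, n))
    for t in range(steps):
        noise = sigma * np.sqrt(dt) * rng.standard_normal(n)
        # Leak, feed-forward input and slow synapses act continuously.
        V = V + dt * (-lam * V + F @ c[t] + omega_s @ r) + noise
        # Neurons at or above threshold emit a spike.
        s = (V >= thresholds).astype(float)
        # Fast synapses act instantaneously; the diagonal implements the reset.
        V = V + omega_f @ s
        # Each spike adds a unit jump to the leaky filtered spike train.
        r = r + dt * (-lam * r) + s
        spikes[t], rates[t] = s, r
    return spikes, rates
```

With a single neuron, a constant supra-threshold input and a self-reset of minus one threshold, the sketch produces regular firing; irregular, coordinated spiking only appears once the recurrent weights are set as described in the following sections.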

How should the recurrent and input weights of the network be set, such that the output signal is as optimal as possible? One solution is to train the network using a cost function that enforces, for instance, sparsity [14]. Here we take another route by first defining the solution according to classical control theory, and then translating this solution into the spiking network parameters by using the neuroscience theory of Spike Coding Networks (SCN) [15, 26].

II-B Spike-coding network theory

For the sake of completeness, in this section we will give a brief overview of the original SCN framework (as originally proposed in [15]) for implementing linear dynamical systems in an SNN — for an extensive mathematical derivation of the SCN we refer the reader to [17, 18].

II-B1 Tracking a fully observable system

If a state is fully observable without noise, SCN theory defines how a recurrent SNN can optimally track this signal [15]. The proof starts with two assumptions: 1) the input signal estimate can be linearly decoded from the spiking activity through known decoding weights (in this paper randomly drawn from the normal distribution and normalized); and 2) spikes should only be emitted when this improves a coding error defined by the L2-norm, yielding a greedy spiking rule, i.e. a neuron should spike if and only if its spike reduces this error. Each neuron's column of the decoding weights reflects the change in error due to that neuron spiking.
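The greedy rule can be written out as a short worked derivation. The notation is assumed for illustration: $x$ is the signal, $\hat{x} = D r$ the decoded estimate, $D_i$ the $i$-th column of the decoding weights, and a spike of neuron $i$ changes the estimate from $\hat{x}$ to $\hat{x} + D_i$.

```latex
\begin{aligned}
\lVert x - (\hat{x} + D_i) \rVert_2^2 &< \lVert x - \hat{x} \rVert_2^2 \\
\lVert x - \hat{x} \rVert_2^2 - 2 D_i^\top (x - \hat{x}) + \lVert D_i \rVert_2^2
  &< \lVert x - \hat{x} \rVert_2^2 \\
D_i^\top (x - \hat{x}) &> \tfrac{1}{2} \lVert D_i \rVert_2^2
\end{aligned}
```

Under these assumptions the left-hand side plays the role of a voltage, $V_i = D_i^\top (x - \hat{x})$, and the right-hand side that of a threshold, $T_i = \lVert D_i \rVert_2^2 / 2$.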

From these assumptions a recurrent network of leaky integrate-and-fire neurons is directly derived of the form

| (2) |

In this network, the input is encoded through forward weights, with the derivative term ensuring that quick changes in the input are adequately tracked. Effectively, the network takes in both the current state and its dynamics as inputs. The recurrent connections are given by the fast connections. These connections make sure that the spiking in the network is coordinated across the neurons, such that there are no superfluous spikes. A neural post-spike ‘self-reset’ is implicitly included in the diagonal of the fast connections. Neurons emit a spike when their threshold is hit; the threshold follows from the same derivation. We here assume instantaneous communication between neurons, such that only one neuron spikes at a given time (since they can instantly inhibit each other), but note that this assumption is not strictly required [16]. The resulting spiking activity optimally encodes the input signal, such that the decoded estimate closely matches it.
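A minimal sketch of such a tracking network is given below. It assumes the standard SCN form in which the forward weights are the transpose of the decoding weights `D`, the fast connections are `-D.T @ D`, and the thresholds are half the squared column norms. The evenly spaced decoder directions, the leak `lam` and the test signal are illustrative choices, not the paper's settings.

```python
import numpy as np

def track_signal(x, D, lam=10.0, dt=1e-3):
    """Track the signal x (steps, K) with an SCN using decoder D (K, N)."""
    steps, n = x.shape[0], D.shape[1]
    T = 0.5 * np.sum(D**2, axis=0)        # thresholds from the greedy rule
    omega_f = -D.T @ D                    # fast recurrent connections
    xdot = np.diff(x, axis=0) / dt        # finite-difference signal derivative
    V = D.T @ x[0]                        # initial voltage (estimate starts at 0)
    r = np.zeros(n)
    x_hat = np.zeros_like(x)
    spikes = np.zeros((steps, n))
    for k in range(steps - 1):
        # At most one neuron spikes per step: the one furthest above threshold.
        s = np.zeros(n)
        i = np.argmax(V - T)
        if V[i] > T[i]:
            s[i] = 1.0
        # Leak, feed-forward drive (state plus derivative) and fast recurrence.
        V = V + dt * (-lam * V + D.T @ (xdot[k] + lam * x[k])) + omega_f @ s
        r = r + dt * (-lam * r) + s
        x_hat[k + 1] = D @ r              # linear readout of the estimate
        spikes[k] = s
    return x_hat, spikes

t = np.arange(0.0, 5.0, 1e-3)
x = np.stack([np.sin(t), np.cos(t)], axis=1)
angles = 2 * np.pi * np.arange(16) / 16
D = 0.1 * np.stack([np.cos(angles), np.sin(angles)])   # 16 neurons, norm 0.1
x_hat, spikes = track_signal(x, D)
```

After a brief transient the readout error stays on the order of the decoder norm, which is the sense in which the network "constrains" its coding error.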

II-B2 Implementing a dynamical system

Instead of tracking an external signal, one can also use SCN theory to define an SNN which implements a linear dynamical system [15] (Fig. 2A). Briefly, we can replace the signal derivative in Eq. (2) by the now known dynamics. The resulting voltage dynamics are given by

| (3) |

By next replacing the external state by the network’s internal estimate this can be further simplified as

| (4) |

where the derivation has resulted in an additional set of slow connections implementing the desired dynamics. In essence, the slow connectivity reads out the internal estimate through the decoding weights. It then applies the dynamics of the linear dynamical system through the system matrix, and encodes the result back into the network using the forward weights. The network now keeps track of its own estimate on a fast time scale through the fast connections, and drifts this estimate according to the dynamics through the slow recurrent connections.
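The origin of the slow connections can be sketched in a few lines. The symbols are assumed notation following the standard SCN derivation in [15]: decoding weights $D$, filtered spike trains $r$ with $\dot{r} = -\lambda r + s$, spikes $s$, leak $\lambda$, and system matrix $A$.

```latex
\begin{aligned}
V &= D^\top (x - \hat{x}), \qquad \hat{x} = D r, \qquad \dot{r} = -\lambda r + s \\
\dot{V} &= D^\top \dot{x} - D^\top D\, \dot{r}
         = -\lambda V + D^\top (\dot{x} + \lambda x) - D^\top D\, s \\
\dot{V} &\approx -\lambda V + D^\top (A + \lambda I) D\, r - D^\top D\, s
         \qquad \text{using } \dot{x} = A x \text{ and } x \approx \hat{x} = D r
\end{aligned}
```

In this sketch the slow connectivity is $D^\top (A + \lambda I) D$ (decode, apply the dynamics, re-encode) and the fast connectivity is $-D^\top D$.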

III Closed-form optimal estimation and control with SNNs

In the previous section, we summarized how SCNs can implement dynamical systems — but how can we link such a network to an external system and control it? Here we provide a new mathematical framework which extends SCN theory to allow the implementation of classical control in SNNs consisting of leaky integrate-and-fire neurons. The inner workings of the networks, and the interpretation of the different connectivities, remain unchanged from the previous section. The resulting recurrent SNN is able to simultaneously act as a state-estimator (Kalman filter) and a controller (LQR) for an external dynamical system. Precisely, we provide a linear–quadratic–Gaussian spiking controller with extended benefits of efficient spiking patterns coding.

III-A The classical control problem

Consider a linear dynamical system in state-space representation (Fig. 2A.i):

| (5) |

| (6) |

Here the state vector evolves according to the system matrix, which conveys the dynamics of the system, while the input vector, through which we can control the system, enters via the input matrix. Internal disturbances are given by a zero-mean Gaussian process with known covariance. The observations of the state are obtained through the observability matrix and are corrupted by sensor noise, also given by a zero-mean Gaussian process with known covariance.

We will generally assume that the measurement vector, the system matrix, the input matrix, the input vector, the observability matrix, and both noise covariances are known.

Our goal is to drive the state to some reference state. To do this we must be able to estimate the state from the observations (estimation problem), and find the best control signal to do so (optimal control problem). In control theory this is largely solved, and we will now consider how to combine the resulting solutions with SCN theory to generate the correct u as an output of an SNN.
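As a concrete instance of such a system, the sketch below builds a spring-mass-damper in state-space form and simulates it with forward Euler. The matrix names `A`, `B`, `C`, the parameter values and the per-step noise scaling are illustrative assumptions, not the settings used for the figures.

```python
import numpy as np

def smd_matrices(m=1.0, k=1.0, c=0.5):
    """State-space matrices of a spring-mass-damper; state = [position, velocity]."""
    A = np.array([[0.0, 1.0], [-k / m, -c / m]])   # system matrix
    B = np.array([[0.0], [1.0 / m]])               # force enters the velocity
    C = np.array([[1.0, 0.0]])                     # only the position is observed
    return A, B, C

def simulate_linear(A, B, C, x0, u, dt=1e-3, q_std=0.0, r_std=0.0, seed=0):
    """Euler simulation with Gaussian disturbance and sensor noise (sketch)."""
    rng = np.random.default_rng(seed)
    steps = u.shape[0]
    x = np.zeros((steps, A.shape[0]))
    y = np.zeros((steps, C.shape[0]))
    x[0] = x0
    for k in range(steps):
        # Noisy, partial observation of the current state.
        y[k] = C @ x[k] + r_std * rng.standard_normal(C.shape[0])
        if k + 1 < steps:
            w = q_std * np.sqrt(dt) * rng.standard_normal(A.shape[0])
            x[k + 1] = x[k] + dt * (A @ x[k] + B @ u[k]) + w
    return x, y
```

For example, `simulate_linear(*smd_matrices(), x0=[1.0, 0.0], u=np.zeros((10000, 1)))` returns a damped oscillation of the position together with its (here noise-free) observation.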

III-B Optimal state estimation with SCNs

Full-state estimates given noisy and incomplete measurements in linear systems can be provided by a Kalman filter [27], which optimally balances an internal (predicted) state estimate against a noisy and/or partially observable external measurement (Fig. 2B.i). The Kalman filter in continuous time is given by a dynamical system of the form

| (7) |

The Kalman filter gain matrix is applied to the error between the observations of the dynamical system and the Kalman filter's own prediction of those observations. For fully identified systems the gain can be found by solving an algebraic Riccati equation [27]. Assuming that the gain is known, we can directly implement the optimal filtering under the SCN framework (by following Section II-B2), resulting in the following voltage update rule:

| (8) |

where, on top of the previously introduced slow and fast connectivity, we now also have input (control) connections mapping the control signal to the neurons, as well as recurrent and feed-forward “Kalman filter” connections. The recurrent connections essentially read out the internal state of the system, apply both the observability matrix and the Kalman filter gain matrix to predict its evolution, and map this back into the network. The feed-forward connections take the observations of the partially observable state and apply the Kalman filter gain matrix. A more detailed derivation can be found in the appendix (section VIII). Now, the entire SCN, including all of its connections, represents a Kalman filter (Fig. 2B.ii). The network internally simulates the linear dynamical system, but also corrects its own estimate according to the external observations.
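The sketch below shows one way to obtain a steady-state Kalman gain and assemble the connection types just described. It assumes the continuous-time (Kalman-Bucy) filter form `d x_hat/dt = A x_hat + B u + K_f (y - C x_hat)` and the decode-transform-encode pattern of Section II-B2. The names (`K_f`, `D`, the dictionary keys) are hypothetical, and the Riccati equation is solved by simply integrating the covariance to steady state rather than with a dedicated solver.

```python
import numpy as np

def kalman_gain(A, C, Q, R, dt=1e-3, t_end=30.0):
    """Steady-state Kalman-Bucy gain via Euler integration of the Riccati ODE."""
    P = np.zeros_like(A)                  # error covariance, started at zero
    R_inv = np.linalg.inv(R)
    for _ in range(int(t_end / dt)):
        P = P + dt * (A @ P + P @ A.T - P @ C.T @ R_inv @ C @ P + Q)
    return P @ C.T @ R_inv

def scn_kalman_weights(D, A, B, C, K_f, lam=10.0):
    """Connection matrices of an SCN Kalman filter (assumed grouping)."""
    n_state = A.shape[0]
    return {
        "fast": -D.T @ D,                                  # coordination and reset
        "slow": D.T @ (A + lam * np.eye(n_state)) @ D,     # internal dynamics
        "kalman_recurrent": -D.T @ K_f @ C @ D,            # predicted observation
        "kalman_input": D.T @ K_f,                         # applied to y
        "control_input": D.T @ B,                          # applied to u
    }
```

The two Kalman terms together apply the gain to the innovation, i.e. `kalman_recurrent @ r + kalman_input @ y` equals `D.T @ K_f @ (y - C @ D @ r)`.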

III-C Optimal control with SCNs

Now that we have optimal state estimation, we can extend the framework to optimal control by deriving the spiking version of a linear–quadratic regulator (LQR) controller. Given a known system of the form in Eq. (5), this controller produces the optimal control signal to minimize the squared error between the state and a target. This gives rise to a linear control law of the form

| (9) |

Here the LQR gain matrix can be found by solving an algebraic Riccati equation, given assumptions on the cost of state deviations and actuation [27].
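For a fully identified system the gain can be computed in closed form. The sketch below solves the continuous-time algebraic Riccati equation through the stable invariant subspace of the Hamiltonian matrix, using NumPy only; a library routine such as SciPy's `solve_continuous_are` could be used instead. The function name `lqr_gain`, the symbol `K_c`, the cost matrices and the control-law form `u = -K_c (x - z)` are assumptions made for illustration.

```python
import numpy as np

def lqr_gain(A, B, Q, R):
    """LQR gain K_c, assuming the control law u = -K_c (x - z)."""
    n = A.shape[0]
    R_inv = np.linalg.inv(R)
    # Hamiltonian matrix of the continuous-time algebraic Riccati equation.
    H = np.block([[A, -B @ R_inv @ B.T], [-Q, -A.T]])
    eigvals, eigvecs = np.linalg.eig(H)
    # The n eigenvectors with negative real part span the stable subspace.
    stable = eigvecs[:, eigvals.real < 0]
    X = np.real(stable[n:] @ np.linalg.inv(stable[:n]))   # Riccati solution
    return R_inv @ B.T @ X

# Example: spring-mass-damper with position weighted 10 and velocity weighted 1.
A = np.array([[0.0, 1.0], [-1.0, -0.5]])
B = np.array([[0.0], [1.0]])
K_c = lqr_gain(A, B, np.diag([10.0, 1.0]), np.eye(1))
```

The closed-loop matrix `A - B @ K_c` then has all eigenvalues in the left half-plane, so the controlled state converges to the target.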

In order to control a partially observable and/or noisy dynamical system, we can combine an LQR controller with a Kalman filter (see Fig. 2C.i). The Kalman filter should then be aware of the specific form used for the control, so we substitute the LQR control law for the control input (Eq. (9) in Eq. (7)), resulting in a dynamical system of the form

| (10) |

We will refer throughout to the combination of a Kalman filter and LQR control as the ideal controller. We can now use the same method as in the previous section to implement this in a spiking network.

To be able to read out the full control signal, we additionally encode the reference into the network with a new set of fast connections (similar to Eq. (2)), which allows us to read out the control signal from the network's internal estimates of the state and of the reference. For this we define two sets of decoding weights: one for the state estimate and one for the reference.

Extending the SCN defined in Eq. (8) with LQR control and an internal encoding of , we get the final voltage update rule

| (11) |

where we have indicated the parts of the connectivity that are tracking the external system, the effect of the control on the system, the Kalman filter updates, and the representation of the target signal. For the internal state estimate, we now have two sets of recurrent “control” and “target” connections, which represent the LQR controller within the Kalman filter SCN (see Fig. 2C.ii). The recurrent control connectivity decodes the state estimate from the internal state of the SCN using the state decoding weights, and applies the LQR gain matrix. The result is transformed using the input matrix, and encoded back into the network. The recurrent target connectivity does something similar, but first reads out the reference using the reference decoding weights. The reference signal is encoded into the network using the same method as defined in Eq. (8). A more detailed derivation for all these connections can be found in the appendix (section VIII). Note that while there now appears to be a large set of separate recurrent connections, all four slow connectivities can be grouped together in a single connection matrix, as can both fast connectivities.

We now have an SCN which implements the entirety of the dynamical system in Eq. (10), but also internally keeps an estimate of the desired state or reference. We can obtain the output of the internal LQR controller in the SCN by defining a new set of decoding weights for the control signal. The control signal u can then be read out directly from the neural activities through these weights. This can be applied to an external dynamical system to control it, all whilst the SCN keeps an internal estimate of the external dynamical system. Hence, we have finally arrived at a fully derived recurrent SNN which allows us to track, replicate and control an external dynamical system.
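The grouping of the connectivities and the control readout can be sketched as follows. This is an assumed reconstruction rather than the authors' exact expressions: `D_x` and `D_z` denote the decoding weights for the state estimate and the reference, `K_f` and `K_c` the Kalman and LQR gains, and the control law is taken to be `u = -K_c (x_hat - z_hat)`.

```python
import numpy as np

def lqg_scn_weights(D_x, D_z, A, B, C, K_f, K_c, lam=10.0):
    """Grouped slow/fast connectivity and control decoder of an LQG SCN (sketch)."""
    I = np.eye(A.shape[0])
    # Four slow terms: internal dynamics, control on the state estimate,
    # Kalman correction, and control on the reference estimate.
    omega_s = (D_x.T @ (A + lam * I - B @ K_c - K_f @ C) @ D_x
               + D_x.T @ B @ K_c @ D_z)
    # Two fast terms: one for the state estimate, one for the reference.
    omega_f = -(D_x.T @ D_x + D_z.T @ D_z)
    # Control decoder: u = D_u r = -K_c (x_hat - z_hat).
    D_u = -K_c @ (D_x - D_z)
    return omega_s, omega_f, D_u
```

Under these assumptions, the slow input to the neurons for a given activity `r` is `D_x.T` applied to the deterministic part of the estimator dynamics, so a single recurrent matrix carries the tracking, control and Kalman terms at once.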

IV Results

We evaluated the performance of the proposed mathematical framework (Sec. III) and analyzed the properties of the spiking patterns for internal robustness against perturbations (e.g., noise, neural silencing and external force). The code used to generate the results is available in the following GitHub repository: https://github.com/FSSlijkhuis/SCN_estimation_and_control. We applied the networks we derived above to two standard dynamical systems: the linear spring-mass-damper system and the nonlinear cartpole system.

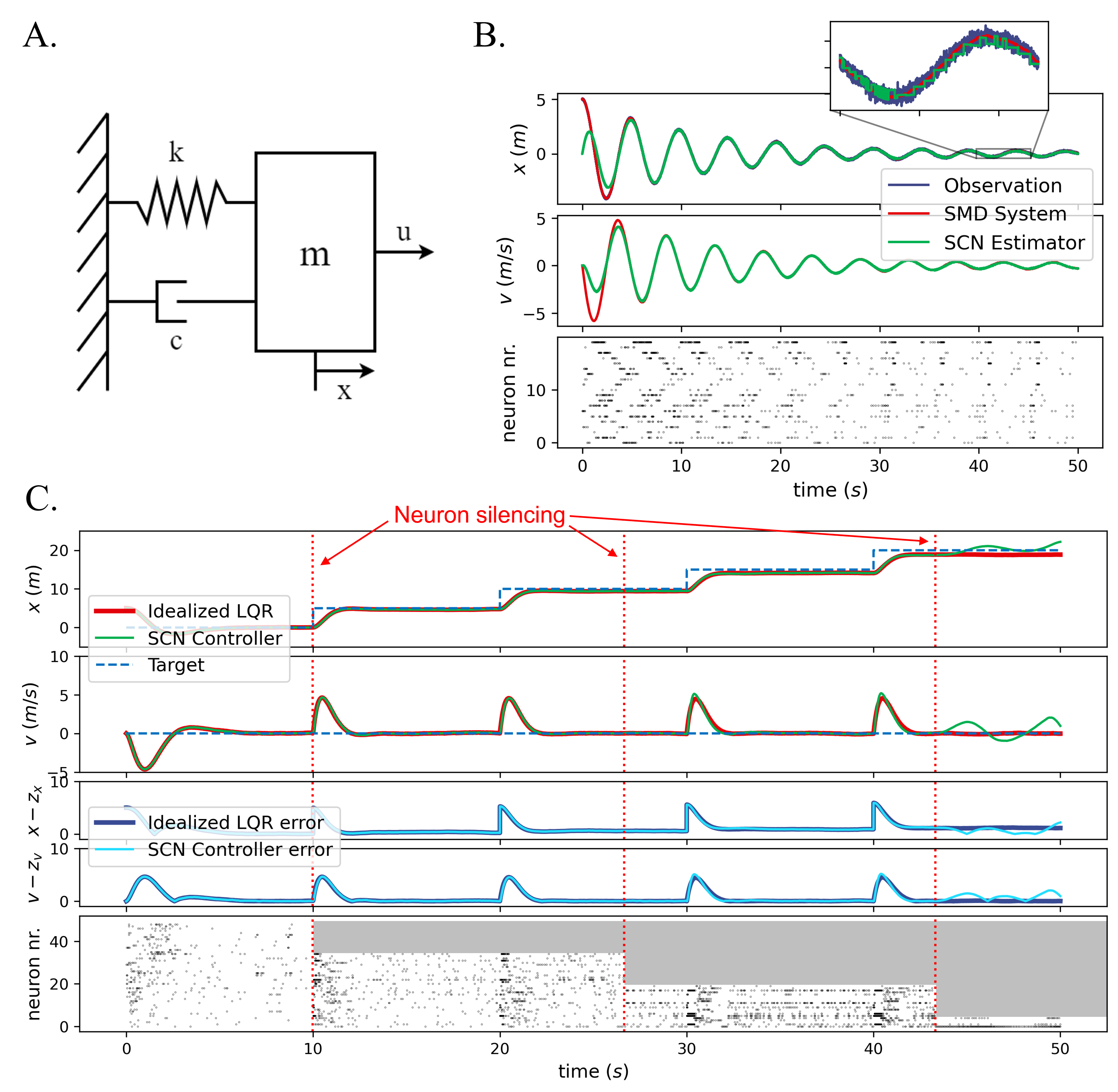

IV-A Spring-Mass-Damper (SMD) system

We compared our proposed SCN estimation and control with their non-spiking counterparts for the spring-mass-damper system (Fig. 3A). The baselines for comparison are a Kalman filter for estimation and LQG for control, which we refer to as the “idealized” estimator and controller. Furthermore, we evaluated the system's robustness against input noise, neural silencing and external perturbations. The SMD system has two dynamical variables: position and velocity. Our instance of the SMD system is subject to an internal disturbance, and it outputs a partially observable state which measures only the position, with sensor noise.

IV-A1 Estimation

We first evaluated whether an SCN Kalman filter can accurately track the SMD system based on incomplete and noisy measurements. For the estimation, we set the control input to zero, so there is no external force acting on our dynamical system. In Fig. 3B, we compare the estimate produced by the spike coding network (green) to the numerical dynamical system simulation (red). Even though the estimation starts in a different initial state and there is both substantial measurement noise and internal disturbance in the real dynamical system, the network estimate quickly converges. Overall, the SCN estimates both dynamical variables of the real dynamical system with very high accuracy.

IV-A2 Control

To control a dynamical system, the SCN computes the optimal control signal, such that the state of the simulated dynamical system converges towards a reference signal. In Fig. 3C, we show an SMD controlled through an idealized controller (red) and a dynamical system controlled using an SCN controller (green). Once again, the estimate from the SCN is very similar to the real dynamical system. On top of that, we show the reference signal (blue, dashed), which is a stair-wise increase of the SMD position. We can see that both the estimate from the SCN and the real dynamical system follow the reference signal, which indicates that the internal controller of the SCN behaves correctly. When we investigate the control errors in detail (blue curves) we see that the two controllers match closely.

IV-A3 Controller robustness

In Fig. 3C, we further show the robustness of our framework, as the network is able to keep the system controlled when facing severe neural silencing (as in classic SCNs [17, 16]). Starting with 50 neurons, we progressively disable 15 neurons at certain timesteps (red, vertical dashed lines), by preventing these neurons from spiking from that point on. The timestep at which the first 15 neurons are disabled (at 10 seconds) is exactly at the point of a change in the reference signal. In this case there is no visible effect on the performance. The neural silencing at the second timestep (at 26.6 seconds) again has little effect on the performance of the SCN controller, although a very subtle increase in the velocity error is observed. After the third timestep at which 15 neurons are disabled (at 43.3 seconds), only 5 neurons are available to the SCN controller. Only at this point does the SCN controller start to struggle to accurately control the SMD system.

In the raster plots in Fig. 3, the spike patterns are moderately sparse and irregular. For clarity of the spike trains we used smaller networks here, but the spiking patterns can be made arbitrarily sparse by adding more neurons (as then the spiking is coordinated across more neurons). In Fig. 3C, we can observe that when neurons are silenced, other neurons compensate for their missing spikes to keep the estimation as accurate as possible (and the spikes become progressively less sparse). This is a clear indication of the coordinated spiking of the neurons in the network.
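The compensation mechanism can be illustrated on the simpler tracking network from Section II-B1, rather than on the full controller. In this hedged sketch, half of 16 neurons are silenced midway through tracking a rotating 2-D signal; the evenly spaced decoder directions, the signal, the leak and the function name `track_with_silencing` are illustrative assumptions.

```python
import numpy as np

def track_with_silencing(x, D, silence_at, silenced, lam=10.0, dt=1e-3):
    """SCN tracking in which the neurons in `silenced` stop spiking at `silence_at`."""
    steps, n = x.shape[0], D.shape[1]
    T = 0.5 * np.sum(D**2, axis=0)        # spike thresholds
    r = np.zeros(n)
    x_hat = np.zeros_like(x)
    spikes = np.zeros((steps, n))
    for k in range(steps):
        r = r - dt * lam * r              # leaky decay of the filtered spikes
        V = D.T @ (x[k] - D @ r)          # voltage = projected coding error
        margin = V - T
        if k >= silence_at:
            margin[silenced] = -np.inf    # silenced neurons can no longer spike
        i = np.argmax(margin)
        if margin[i] > 0:                 # greedy rule, one spike per step
            r[i] += 1.0
            spikes[k, i] = 1.0
        x_hat[k] = D @ r
    return x_hat, spikes

t = np.arange(0.0, 4 * np.pi, 1e-3)       # two full rotations of the signal
x = np.stack([np.cos(t), np.sin(t)], axis=1)
angles = 2 * np.pi * np.arange(16) / 16
D = 0.1 * np.stack([np.cos(angles), np.sin(angles)])
half = len(t) // 2
silenced = np.arange(0, 16, 2)            # silence every other neuron
x_hat, spikes = track_with_silencing(x, D, half, silenced)
```

In this toy setting the readout error stays bounded after silencing, while the surviving neurons fire roughly twice as often as before, mirroring the qualitative behaviour described above.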

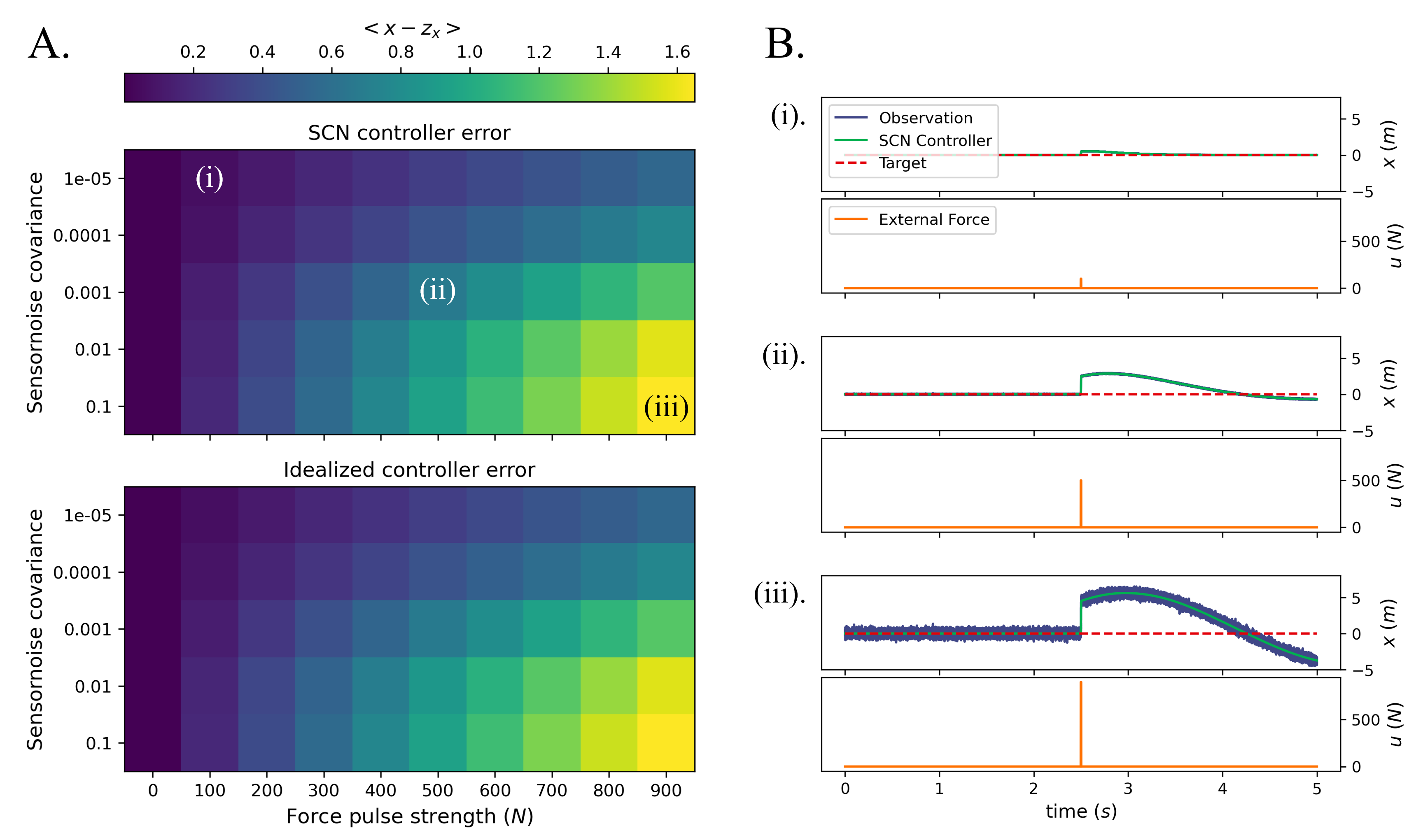

Fig. 4A shows the effects of different parameter-values for the sensor noise covariance and the effects of a sudden pulse of force into the SMD system on the controller error of the SCN controller (top) and the idealized LQR controller (bottom). Here, the controller error corresponds to the average error in the position of the mass between the SMD system and the reference signal across an entire simulation, when controlled by the respective controllers. This error increases for larger values of the sensor noise covariance, as well as with larger pulses of force into the SMD system. There is no noticeable difference between the control errors of the SCN controller and the idealized controller.

In Fig. 4B, the position of the mass in the SMD system controlled by the SCN controller is shown, using three sets of parameters indicated in Fig. 4A. Here, we clearly see the effect of the pulse on the SMD system, where a larger pulse corresponds to a larger displacement of the mass. The SCN controller quickly tries to correct for this displacement, bringing the mass back to its reference position. The observation, y, is also shown. Larger values for the sensor noise covariance are clearly visible in the observation. Not shown here is the SMD system controlled by the idealized controller, because there is no visible difference between the two SMD systems.

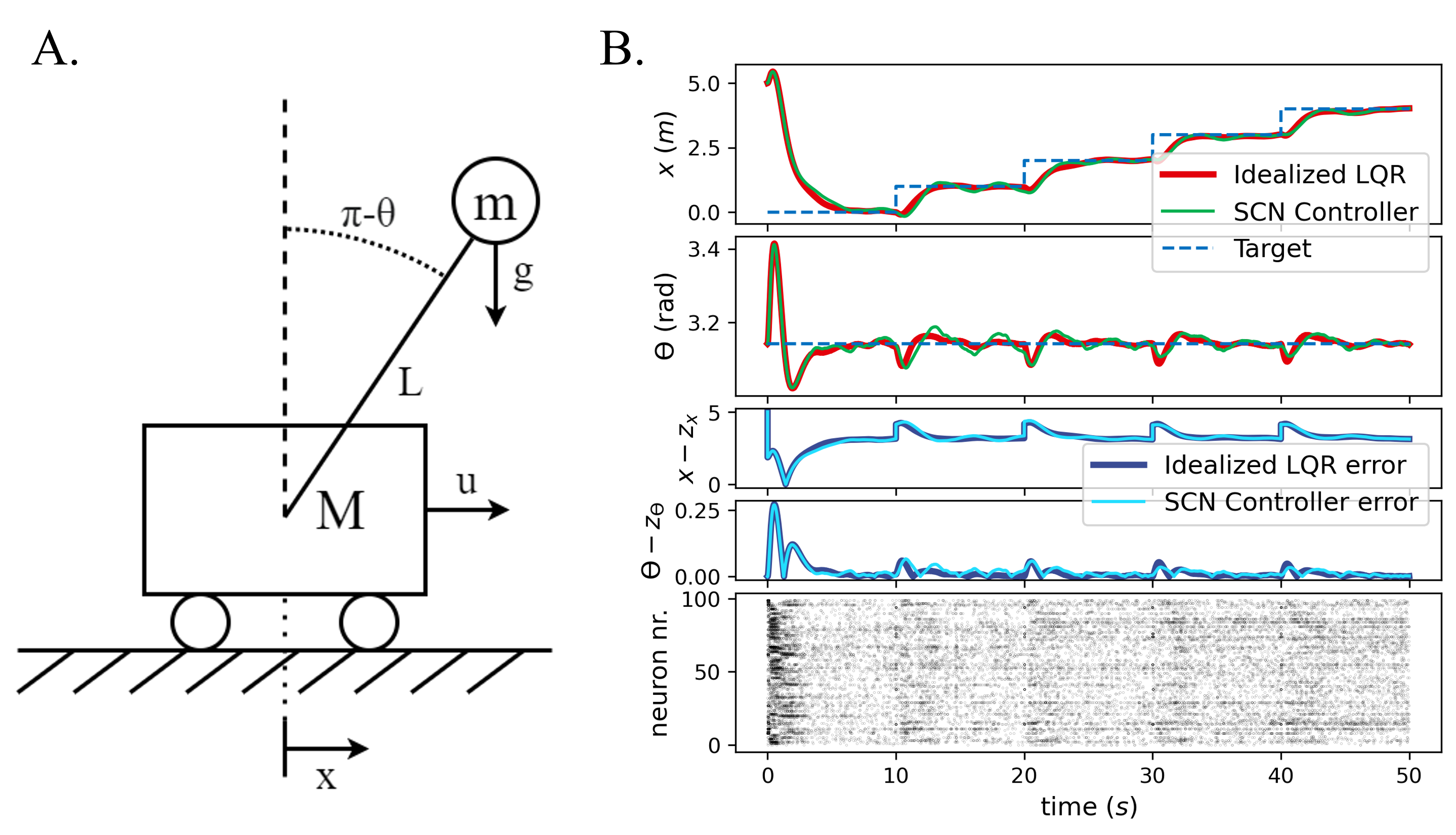

IV-B Cartpole system

We evaluated our proposed SCN controller (i.e., linear–quadratic–Gaussian spiking control) on the nonlinear cartpole system (see Fig. 5A). The cartpole has four dynamical variables: the position of the cart, the velocity of the cart, the angle of the pole, and the angular velocity of the pole. Just like the SMD, our instance of the cartpole is subject to an internal disturbance, and it outputs a partially observable state consisting of only the position of the cart, with sensor noise. Note that the cartpole system is a nonlinear dynamical system, but both our ideal and spiking controllers assume linear dynamics. To produce a meaningful control signal, we use linearized dynamics for the internal estimate. In our case, we linearized around the upright position of the pole.
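Linearization around an equilibrium can be done analytically or numerically. The sketch below uses central finite differences on a generic dynamics function; it is demonstrated on a simple inverted pendulum as a stand-in, since the cartpole equations and parameters are not reproduced here. The function names and pendulum parameters are hypothetical.

```python
import numpy as np

def linearize(f, x_eq, u_eq, eps=1e-6):
    """Central-difference Jacobians (A, B) of dx/dt = f(x, u) at an equilibrium."""
    n, m = len(x_eq), len(u_eq)
    A = np.zeros((n, n))
    B = np.zeros((n, m))
    for j in range(n):
        dx = np.zeros(n)
        dx[j] = eps
        A[:, j] = (f(x_eq + dx, u_eq) - f(x_eq - dx, u_eq)) / (2 * eps)
    for j in range(m):
        du = np.zeros(m)
        du[j] = eps
        B[:, j] = (f(x_eq, u_eq + du) - f(x_eq, u_eq - du)) / (2 * eps)
    return A, B

def inverted_pendulum(x, u, g=9.81, length=1.0):
    """Stand-in nonlinear system; the angle is measured from the upright position."""
    theta, omega = x
    return np.array([omega, (g / length) * np.sin(theta) + u[0]])

A_lin, B_lin = linearize(inverted_pendulum, np.zeros(2), np.zeros(1))
```

The resulting `A_lin` and `B_lin` can then be used wherever a linear system matrix and input matrix are required, with the caveat that the approximation only holds near the chosen equilibrium.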

Fig. 5B compares a cartpole system controlled through an idealized controller (red) to a cartpole controlled through an SCN controller (green). On top of that, we show the reference signal (blue, dashed), which is a stair-wise increase of the cart position. The task of the controllers is then to move the cart whilst keeping the pole upright. In the figure, we show the position of the cart and the angle of the pole. We see that both controllers follow the reference signal almost perfectly and, most importantly, keep the pole upright.

The spike plots show that, especially with a large number of neurons, the spiking is irregular and sparse. Increased spiking activity is observed when the reference signal demands a change of the state of the dynamical system.

IV-C Parameter settings

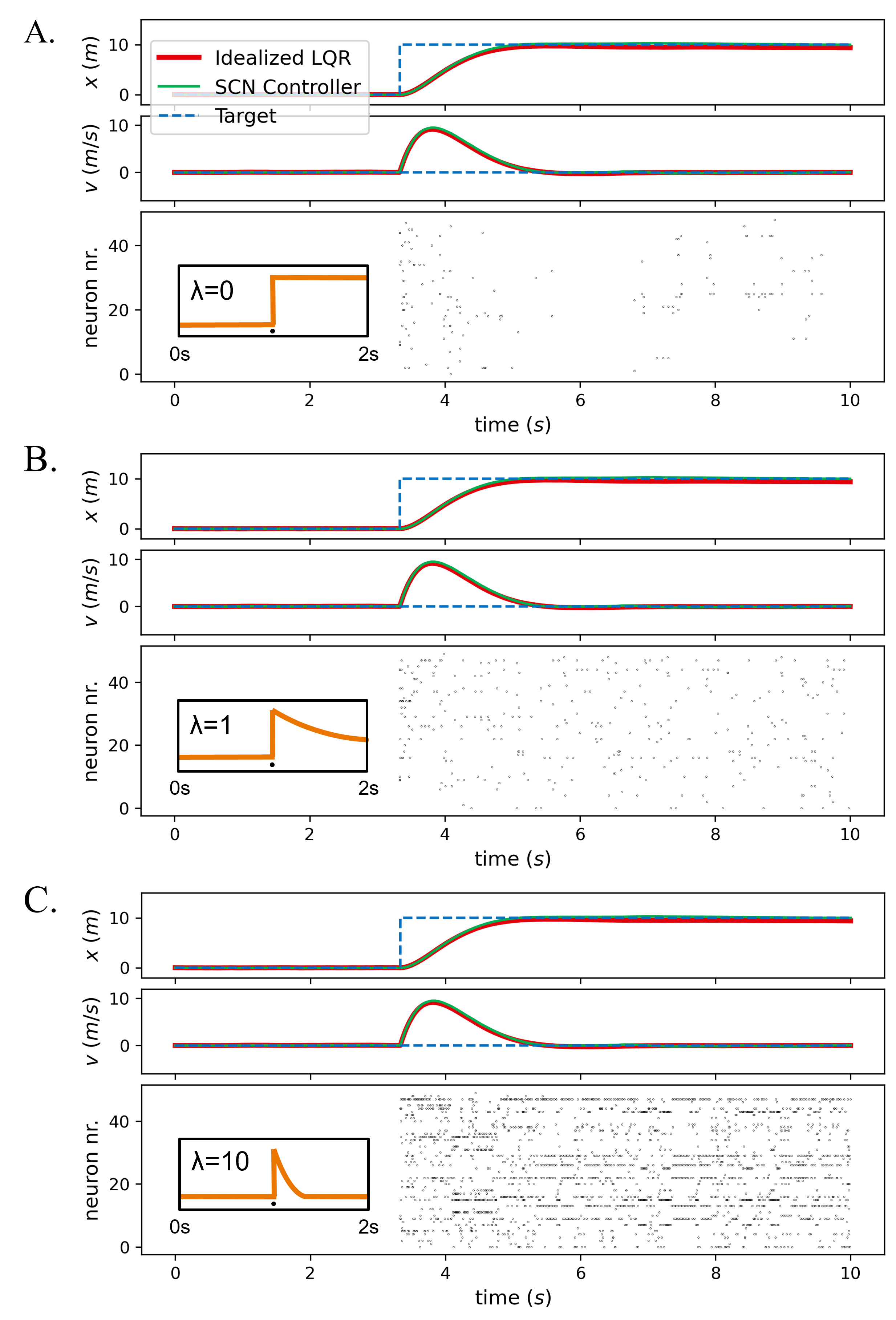

For each network, the decoding weights () are sampled from a normal distribution, with each column normalized to have norm 0.1, except for norm 0.01 in Fig. 5B and norm 1 in Fig. 6. 20 and 50 neurons were used in Fig. 3B and C respectively, 50 neurons were used in Fig. 4 and Fig. 6, and 100 neurons in Fig. 5B. The SMD parameters were , and for Fig. 3B and Fig. 4, while , and were used for Fig. 3C and Fig. 6. Fig. 3B and Fig. 6 used . Fig. 3C used . Fig. 4 used . The cartpole system parameters were , , , , and , with . All networks used and , except for Fig. 6, which used and varying . Forward Euler was used throughout, with a timestep of 1e-3s, except for 1e-4s in Fig. 4, Fig. 5B and Fig. 6. For control, . For control of the SMD system, the matrix prioritizes with weight 10, and with weight 1. For cartpole control, is weighted with 10 and the rest with 1.
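The decoder construction described above (normally distributed entries, each column rescaled to a fixed norm) can be sketched as follows; the function name, seed handling and default arguments are illustrative.

```python
import numpy as np

def sample_decoder(n_dims, n_neurons, column_norm=0.1, seed=0):
    """Draw decoding weights from a normal distribution and normalize each column."""
    rng = np.random.default_rng(seed)
    D = rng.standard_normal((n_dims, n_neurons))
    # Rescale every neuron's column to the requested Euclidean norm.
    return column_norm * D / np.linalg.norm(D, axis=0, keepdims=True)

D = sample_decoder(n_dims=2, n_neurons=50)   # e.g. 50 neurons for a 2-D state
```

Smaller column norms mean that each spike changes the readout less, so more spikes are needed but the estimate is finer-grained.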

V Advantages and limitations of SCN control

SCN control has several advantages and limitations, which this section summarizes.

V-1 Hardware implementations

Because SCN control is implemented through standard LIF neurons, it has strong potential for neuromorphic deployment, such as on Loihi [28] or SpiNNaker [29] chips, allowing for potentially lower-energy control implementations than classical von Neumann architectures. However, a general framework for transferring the theory to hardware is still lacking, and faces two main challenges. First, the theory assumes zero transmission delays between neurons, which effectively constrains the network to one spike per time-frame. If this constraint is weakened, the networks can go into an epileptic state [16, 30]. On hardware implementations this assumption might not always be reasonable. As a solution, several extensions have been made to SCN theory that make it work with such delays [31, 30, 16, 32]. Second, for control we need two types of connections: fast and slow. The fast connections can be implemented by a simple change in the voltage following a spike, but the slow connections require longer-lasting effects (through the filtered spike trains). Not every hardware implementation might allow for such slow synapses, or allow the simultaneous use of both synapse types. It also requires every neuron to have access to the filtered spike trains of every other neuron, which might increase the connectivity requirements, depending on the exact hardware.

V-2 Explainability

A great benefit of SCN control is its explainability. There is a clear link between the different network parameters and the computation (as outlined in our methods section), as well as a rich literature studying their more in-depth properties. In particular, there is an elegant and in-depth geometric interpretation of the core functionality [16]. Thus, with new applications for the theory and resulting problems, there is a clear theoretical framework within which one can think through possible solutions. One can also easily adjust the model parameters for different activity and coding regimes, as demonstrated in Fig. 6. Another spiking neural network method that has a similar level of theoretical explainability is the neural engineering framework [12], which also allows one to implement dynamical systems, including for control[33]. However, as outlined next, SCNs come with a number of additional advantages — in particular for robustness and biological fidelity.

V-3 Biological fidelity

A scientifically motivated advantage of SCN control over other methods of control is its biological plausibility. Despite being directly derivable through the underlying theory, the resulting networks display several features consistent with biology, such as sparse and irregular firing [10, 11], robustness to a range of perturbations [17, 16], and an underlying strict balance of excitation and inhibition [15, 26]. The theory is also extendable to more complex synapses and neural models, either through learning [32] or design [18], enabling more complex computations.

V-4 Distributed and robust control

A core advantage of SCN control is that it inherits the distributed and robust computation of previous SCN implementations [15, 17]. Given a known linear control algorithm, the required computations can be effectively distributed across the neurons. Consequently, the resulting networks are highly robust to neural silencing (Fig. 3). This robustness might be highly useful in situations in which neuromorphic chips can get damaged during operation, such as in high-radiation environments.

V-5 Temporal sparsity and energy use

The distributed and coordinated nature of SCNs ensures that spikes are only fired if they contribute to the required dynamics. As a result, the output spike patterns can be highly temporally sparse and efficient. Compared to other spiking neural network methods, SCNs might therefore offer lower energy use. However, a fair comparison would require hardware implementations of each method and direct measurement of their energy use. While this is out of scope for this paper, we here highlight a few important caveats. First, while the total spike count across the population is highly optimized, this does require highly dense recurrent connectivity, and thus significant spike-routing and potential energy use. While the density of connectivities can be drastically reduced [18], it would still need to be taken into account. Second, the use of both fast and slow connectivity types might complicate neural interactions, further increasing energy use. Finally, the sparsity might not be as optimized in the presence of synaptic delays. Even with these caveats, the theoretical understanding of the method should allow further optimization once comparisons can be made. As a demonstration, we show that one can drastically reduce the total number of spikes by reducing the voltage leakage constant, without losing performance or needing retraining (Fig. 6), at the cost of less biological realism and potentially higher memory requirements.

VI Conclusion

For fully identified systems there exist highly efficient closed-form control solutions. Ideally, we would like to implement these directly in spiking neural networks, in a way that mimics the efficient and robust nature of the brain. In this work, we extended SCN theory to allow optimal estimation and control, providing spiking equivalents of Kalman filters and LQR control. We showed that these networks maintain sparse and irregular spiking patterns when controlling a dynamical system, and are robust to both severe neural silencing and system perturbations. Our networks are analytically derived and need neither learning nor optimization. Hence, our approach opens up the prospect of deploying fast, efficient and low-power on-chip SNN controllers that have intrinsic hardware redundancy (i.e., the controller still works when some of the neurons stop working) and in which neurons only fire when there is a prediction error.

As outlined in Section V, there are several open challenges for future work, in particular for hardware implementations. Of particular note is that SCN theory assumes instantaneous synaptic transmission (i.e., no synaptic delays), which is realistic neither for the brain nor for all neuromorphic hardware. There are several avenues to implement delays in SCNs [31, 30, 16], which can be combined with the work presented in this paper. While here we focused on analytical implementations, learning rules do exist to implement SCN connectivities [19, 20, 34]. It is an open question whether these can also be applied to optimal control. A particular difficulty is then the online estimation of the Kalman and LQR gain matrices. This was recently shown to be possible in non-spiking networks [35], which suggests a possible avenue for combining online gain optimization with a closed-form spiking implementation.

VII Acknowledgements

We thank Marcel van Gerven, Bodo Rückauer, Justus Hübotter, Christian Machens, William Podlaski, and Michele Nardin for helpful discussions on control and (spiking) networks.

VIII Appendix: detailed derivation of network connectivity

Here we show how SCN theory can be used to take the dynamical systems corresponding to the continuous Kalman filter and LQR control and implement them directly in an SNN (following [15]). For both cases we start with the auto-encoding network

| (12) |

This network takes as external input the signal $x$ and its derivative $\dot{x}$, and can accurately track this signal with the read-out $\hat{x}$. To implement a given dynamical system in this network we need to do two things: (1) replace the derivative of the input by the desired dynamics ($\dot{x} = f(x)$); (2) instead of feeding the state $x$ as an external input, estimate the current state from the network read-out $\hat{x}$, and feed that back into the input (by simply replacing $x$ by $\hat{x}$). This results in network dynamics given by:

| (13) |

If $f$ is purely linear this can be done through a set of slow connections, as in the main text. We do not consider nonlinear cases in this paper, but note that an analytical solution does exist [18].
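The auto-encoding starting point can be illustrated numerically. The following is a minimal sketch, not the authors' implementation: it assumes the standard SCN autoencoder form from [15], $\dot{v} = -\lambda v + D^\top(\dot{x} + \lambda x) - D^\top D s$, with read-out $\hat{x} = Dr$ and thresholds $T_i = \|D_i\|^2/2$. The function name `simulate_autoencoder`, the decoder values, the leak $\lambda = 10$, and the rule of allowing at most one spike per Euler step are all illustrative assumptions.

```python
import numpy as np

def simulate_autoencoder(x, dx, D, lam=10.0, dt=1e-3):
    """Euler-integrate an SCN autoencoder (assumed standard form, see lead-in).

    x, dx : arrays of shape (steps, K), the signal and its time derivative
    D     : decoder matrix of shape (K, N)
    Returns the read-out x_hat (steps, K) and a boolean spike raster (steps, N).
    """
    steps, K = x.shape
    N = D.shape[1]
    thresholds = 0.5 * np.sum(D ** 2, axis=0)  # T_i = ||D_i||^2 / 2
    W_fast = -D.T @ D                          # fast recurrent weights (includes self-reset)
    v = np.zeros(N)                            # membrane voltages
    r = np.zeros(N)                            # filtered spike trains
    x_hat = np.zeros((steps, K))
    spikes = np.zeros((steps, N), dtype=bool)
    for t in range(steps):
        # Leaky integration of the feed-forward drive D^T (dx + lam * x).
        v += dt * (-lam * v + D.T @ (dx[t] + lam * x[t]))
        r += dt * (-lam * r)
        # Allow at most one spike per step: the neuron furthest above threshold.
        i = np.argmax(v - thresholds)
        if v[i] > thresholds[i]:
            v += W_fast[:, i]
            r[i] += 1.0
            spikes[t, i] = True
        x_hat[t] = D @ r
    return x_hat, spikes

# Toy example: track a 1 Hz sine wave with four neurons (illustrative values).
t = np.arange(0.0, 2.0, 1e-3)
x = np.sin(2 * np.pi * t)[:, None]
dx = (2 * np.pi * np.cos(2 * np.pi * t))[:, None]
D = np.array([[0.10, -0.10, 0.08, -0.08]])
x_hat, spikes = simulate_autoencoder(x, dx, D)
print("max tracking error:", np.abs(x - x_hat).max())
print("total spikes:", spikes.sum())
```

In this sketch a neuron only fires when its spike reduces the read-out error, so the tracking error stays on the order of the decoder magnitude. Replacing the external drive by $D^\top(f(\hat{x}) + \lambda\hat{x})$ would turn the same loop into a simulation of the desired dynamics.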

VIII-A Estimation

For the continuous definition of the Kalman filter (Eq. 7), the above results in voltage dynamics given by

| (14) |

We can then substitute the read-out, $\hat{x} = Dr$, and group the different terms together to obtain the final voltage dynamics that implement a Kalman filter in a recurrent SNN, given by Eq. 8.
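The substitution and grouping step can be written out explicitly. The following is a sketch under assumed notation, which may differ from that of the main text and is not a reproduction of Eqs. 14 and 8: $D$ is the decoder, $s$ the spike trains, $r$ the filtered spike trains with $\hat{x} = Dr$, $\lambda$ the leak, and the continuous Kalman filter is taken in its textbook form $\dot{\hat{x}} = A\hat{x} + Bu + K_f\,(y - C\hat{x})$ with gain $K_f$.

```latex
\begin{align}
\dot{v} &= -\lambda v
          + D^\top\!\left(A\hat{x} + Bu + K_f\,(y - C\hat{x}) + \lambda\hat{x}\right)
          - D^\top D\, s \\
        &= -\lambda v
          + D^\top\!\left(A + \lambda I - K_f C\right) D\, r
          + D^\top B\, u
          + D^\top K_f\, y
          - D^\top D\, s
\end{align}
```

Read this way, the matrix multiplying $r$ plays the role of the slow recurrent connectivity, $-D^\top D$ that of the fast recurrent connectivity, and $D^\top B$ and $D^\top K_f$ act as feed-forward weights for the control input $u$ and the observation $y$.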

VIII-B Control + estimation

For the continuous Kalman filter including LQR control we have Eq. 10. We can proceed as above and directly implement this in a recurrent SNN, but we additionally need to represent the target state in the network (as explained in the main text). Together, this results in the voltage update rule

| (15) |

We can then substitute the read-out, $\hat{x} = Dr$, and group the different terms together to obtain the final voltage dynamics that implement a Kalman filter plus LQR controller in a recurrent SNN, given by Eq. 11.
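The grouping of terms can be checked numerically. The sketch below is a simplified illustration rather than the paper's Eq. 11: it assumes the control law $u = -K_c(\hat{x} - z)$ with the target state $z$ supplied externally (whereas the text above represents the target inside the network), and it uses arbitrary placeholder gains instead of optimal ones. The function names `lqg_scn_weights` and `ungrouped_drive` and all numerical values are hypothetical.

```python
import numpy as np

def lqg_scn_weights(A, B, C, K_f, K_c, D, lam):
    """Group the estimator + controller terms into connectivity matrices.

    Assumes x_hat = D r and u = -K_c (x_hat - z); see the lead-in for caveats.
    """
    K = A.shape[0]
    W_fast = -D.T @ D                                              # fast recurrence
    W_slow = D.T @ (A + lam * np.eye(K) - B @ K_c - K_f @ C) @ D   # slow recurrence
    F_y = D.T @ K_f                                                # weights for observation y
    F_z = D.T @ B @ K_c                                            # weights for target z
    return W_fast, W_slow, F_y, F_z

def ungrouped_drive(A, B, C, K_f, K_c, D, lam, r, y, z):
    """Same drive, written term by term before grouping."""
    x_hat = D @ r
    u = -K_c @ (x_hat - z)
    return D.T @ (A @ x_hat + B @ u + K_f @ (y - C @ x_hat) + lam * x_hat)

# Spring-mass-damper with illustrative parameters (m = 1, k = 2, c = 0.5).
A = np.array([[0.0, 1.0], [-2.0, -0.5]])
B = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])
K_f = np.array([[1.5], [0.7]])   # placeholder Kalman gain, not optimal
K_c = np.array([[3.0, 1.2]])     # placeholder LQR gain, not optimal
lam = 10.0

rng = np.random.default_rng(0)
N = 20
D = 0.1 * rng.standard_normal((2, N))   # random decoder
r = rng.random(N)                       # arbitrary filtered spike trains
y = np.array([0.3])                     # arbitrary observation
z = np.array([1.0, 0.0])                # arbitrary target state

W_fast, W_slow, F_y, F_z = lqg_scn_weights(A, B, C, K_f, K_c, D, lam)
grouped = W_slow @ r + F_y @ y + F_z @ z
direct = ungrouped_drive(A, B, C, K_f, K_c, D, lam, r, y, z)
print("grouped and ungrouped drives agree:", np.allclose(grouped, direct))
```

The check confirms only the algebra of the grouping step under the stated assumptions. In practice the gains would come from the Kalman and LQR solutions for the identified system.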

References

- [1] W. Gerstner, W. M. Kistler, R. Naud, and L. Paninski, Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition. Cambridge University Press, Jul. 2014.

- [2] L. F. Abbott, B. DePasquale, and R.-M. Memmesheimer, “Building functional networks of spiking model neurons,” Nature Neuroscience, vol. 19, no. 3, pp. 350–355, Mar. 2016. [Online]. Available: https://www.nature.com/articles/nn.4241

- [3] S. Denève, A. Alemi, and R. Bourdoukan, “The Brain as an Efficient and Robust Adaptive Learner,” Neuron, vol. 94, no. 5, pp. 969–977, Jun. 2017. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0896627317304178

- [4] M. C. Leary and J. L. Saver, “Annual Incidence of First Silent Stroke in the United States: A Preliminary Estimate,” Cerebrovascular Diseases, vol. 16, no. 3, pp. 280–285, 2003. [Online]. Available: https://www.karger.com/Article/FullText/71128

- [5] J. H. Morrison and P. R. Hof, “Life and Death of Neurons in the Aging Brain,” Science, vol. 278, no. 5337, pp. 412–419, Oct. 1997. [Online]. Available: http://www.science.org/doi/10.1126/science.278.5337.412

- [6] M. Davies, A. Wild, G. Orchard, Y. Sandamirskaya, G. A. F. Guerra, P. Joshi, P. Plank, and S. R. Risbud, “Advancing Neuromorphic Computing With Loihi: A Survey of Results and Outlook,” Proceedings of the IEEE, vol. 109, no. 5, pp. 911–934, May 2021.

- [7] E. O. Neftci, H. Mostafa, and F. Zenke, “Surrogate Gradient Learning in Spiking Neural Networks: Bringing the Power of Gradient-Based Optimization to Spiking Neural Networks,” IEEE Signal Processing Magazine, vol. 36, no. 6, pp. 51–63, Nov. 2019.

- [8] Z. Bing, C. Meschede, F. Röhrbein, K. Huang, and A. C. Knoll, “A Survey of Robotics Control Based on Learning-Inspired Spiking Neural Networks,” Frontiers in Neurorobotics, vol. 12, 2018. [Online]. Available: https://www.frontiersin.org/articles/10.3389/fnbot.2018.00035

- [9] M. Traub, R. Legenstein, and S. Otte, “Many-Joint Robot Arm Control with Recurrent Spiking Neural Networks,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sep. 2021, pp. 4918–4925.

- [10] D. J. Tolhurst, J. A. Movshon, and A. F. Dean, “The statistical reliability of signals in single neurons in cat and monkey visual cortex,” Vision Research, vol. 23, no. 8, pp. 775–785, Jan. 1983. [Online]. Available: https://www.sciencedirect.com/science/article/pii/0042698983902006

- [11] M. N. Shadlen and W. T. Newsome, “The Variable Discharge of Cortical Neurons: Implications for Connectivity, Computation, and Information Coding,” Journal of Neuroscience, vol. 18, no. 10, pp. 3870–3896, May 1998. [Online]. Available: https://www.jneurosci.org/content/18/10/3870

- [12] C. Eliasmith, T. C. Stewart, X. Choo, T. Bekolay, T. DeWolf, Y. Tang, and D. Rasmussen, “A Large-Scale Model of the Functioning Brain,” Science, vol. 338, no. 6111, pp. 1202–1205, Nov. 2012. [Online]. Available: http://www.science.org/doi/full/10.1126/science.1225266

- [13] D. Salaj, A. Subramoney, C. Kraisnikovic, G. Bellec, R. Legenstein, and W. Maass, “Spike frequency adaptation supports network computations on temporally dispersed information,” eLife, vol. 10, p. e65459, Jul. 2021. [Online]. Available: https://doi.org/10.7554/eLife.65459

- [14] J. F. Hübotter, P. Lanillos, and J. M. Tomczak, “Training Deep Spiking Auto-encoders without Bursting or Dying Neurons through Regularization,” arXiv:2109.11045 [cs], Sep. 2021. [Online]. Available: http://arxiv.org/abs/2109.11045

- [15] M. Boerlin, C. K. Machens, and S. Denève, “Predictive Coding of Dynamical Variables in Balanced Spiking Networks,” PLOS Computational Biology, vol. 9, no. 11, pp. 1–16, Nov. 2013. [Online]. Available: https://doi.org/10.1371/journal.pcbi.1003258

- [16] N. Calaim, F. A. Dehmelt, P. J. Gonçalves, and C. K. Machens, “The geometry of robustness in spiking neural networks,” eLife, vol. 11, p. e73276, May 2022. [Online]. Available: https://doi.org/10.7554/eLife.73276

- [17] D. G. T. Barrett, S. Denève, and C. K. Machens, “Optimal compensation for neuron loss,” eLife, vol. 5, 2016.

- [18] M. Nardin, J. W. Phillips, W. F. Podlaski, and S. W. Keemink, “Nonlinear computations in spiking neural networks through multiplicative synapses,” Peer Community Journal, vol. 1, p. e68, Dec. 2021. [Online]. Available: https://peercommunityjournal.org/articles/10.24072/pcjournal.69/

- [19] A. Alemi, C. Machens, S. Deneve, and J.-J. Slotine, “Learning Nonlinear Dynamics in Efficient, Balanced Spiking Networks Using Local Plasticity Rules,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32, no. 1, Apr. 2018. [Online]. Available: https://ojs.aaai.org/index.php/AAAI/article/view/11320

- [20] W. Brendel, R. Bourdoukan, P. Vertechi, C. K. Machens, and S. Denève, “Learning to represent signals spike by spike,” PLOS Computational Biology, vol. 16, no. 3, p. e1007692, 2020. [Online]. Available: https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1007692

- [21] D. Thalmeier, M. Uhlmann, H. J. Kappen, and R.-M. Memmesheimer, “Learning Universal Computations with Spikes,” PLOS Computational Biology, vol. 12, no. 6, pp. 1–29, Jun. 2016. [Online]. Available: https://doi.org/10.1371/journal.pcbi.1004895

- [22] Y. Sandamirskaya, M. Kaboli, J. Conradt, and T. Celikel, “Neuromorphic computing hardware and neural architectures for robotics,” Science Robotics, vol. 7, no. 67, p. eabl8419, Jun. 2022. [Online]. Available: http://www.science.org/doi/abs/10.1126/scirobotics.abl8419

- [23] A. Mancoo, S. Keemink, and C. K. Machens, “Understanding spiking networks through convex optimization,” in Advances in Neural Information Processing Systems, H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin, Eds., vol. 33. Curran Associates, Inc., 2020, pp. 8824–8835. [Online]. Available: https://proceedings.neurips.cc/paper/2020/file/64714a86909d401f8feb83e8c2d94b23-Paper.pdf

- [24] F. Huang, J. Riehl, and S. Ching, “Optimizing the dynamics of spiking networks for decoding and control,” in 2017 American Control Conference (ACC), May 2017, pp. 2792–2798.

- [25] F. Huang and S. Ching, “Dynamical Spiking Networks for Distributed Control of Nonlinear Systems,” in 2018 Annual American Control Conference (ACC), Jun. 2018, pp. 1190–1195.

- [26] S. Denève and C. K. Machens, “Efficient codes and balanced networks,” Nature Neuroscience, vol. 19, no. 3, pp. 375–382, Mar. 2016. [Online]. Available: https://doi.org/10.1038/nn.4243

- [27] S. L. Brunton and J. N. Kutz, Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control, 1st ed. USA: Cambridge University Press, 2019.

- [28] M. Davies, N. Srinivasa, T.-H. Lin, G. Chinya, Y. Cao, S. H. Choday, G. Dimou, P. Joshi, N. Imam, S. Jain, Y. Liao, C.-K. Lin, A. Lines, R. Liu, D. Mathaikutty, S. McCoy, A. Paul, J. Tse, G. Venkataramanan, Y.-H. Weng, A. Wild, Y. Yang, and H. Wang, “Loihi: A Neuromorphic Manycore Processor with On-Chip Learning,” IEEE Micro, vol. 38, no. 1, pp. 82–99, Jan. 2018.

- [29] O. Rhodes, L. Peres, A. G. D. Rowley, A. Gait, L. A. Plana, C. Brenninkmeijer, and S. B. Furber, “Real-time cortical simulation on neuromorphic hardware,” Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, vol. 378, no. 2164, p. 20190160, Dec. 2019. [Online]. Available: https://royalsocietypublishing.org/doi/10.1098/rsta.2019.0160

- [30] C. E. R. Buxó and J. W. Pillow, “Poisson balanced spiking networks,” PLOS Computational Biology, vol. 16, no. 11, p. e1008261, Nov. 2020. [Online]. Available: https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1008261

- [31] M. Chalk, B. Gutkin, and S. Denève, “Neural oscillations as a signature of efficient coding in the presence of synaptic delays,” eLife, vol. 5, p. e13824, Jul. 2016. [Online]. Available: https://doi.org/10.7554/eLife.13824

- [32] F. A. Mikulasch, L. Rudelt, and V. Priesemann, “Local dendritic balance enables learning of efficient representations in networks of spiking neurons,” Proceedings of the National Academy of Sciences, vol. 118, no. 50, p. e2021925118, Dec. 2021. [Online]. Available: https://www.pnas.org/doi/full/10.1073/pnas.2021925118

- [33] T. DeWolf, T. C. Stewart, J.-J. Slotine, and C. Eliasmith, “A spiking neural model of adaptive arm control,” Proceedings of the Royal Society B: Biological Sciences, vol. 283, no. 1843, p. 20162134, Nov. 2016. [Online]. Available: https://royalsocietypublishing.org/doi/full/10.1098/rspb.2016.2134

- [34] J. Büchel, D. Zendrikov, S. Solinas, G. Indiveri, and D. R. Muir, “Supervised training of spiking neural networks for robust deployment on mixed-signal neuromorphic processors,” Scientific Reports, vol. 11, no. 1, p. 23376, Dec. 2021. [Online]. Available: https://www.nature.com/articles/s41598-021-02779-x

- [35] J. Friedrich, S. Golkar, S. Farashahi, A. Genkin, A. Sengupta, and D. Chklovskii, “Neural optimal feedback control with local learning rules,” in Advances in Neural Information Processing Systems, vol. 34. Curran Associates, Inc., 2021, pp. 16358–16370. [Online]. Available: https://proceedings.neurips.cc/paper/2021/hash/88591b4d3219675bdeb33584b755f680-Abstract.html