CMA-ES for Safe Optimization

Abstract.

In several real-world applications in medical and control engineering, there are unsafe solutions whose evaluations involve inherent risk. This optimization setting is known as safe optimization and formulated as a specialized type of constrained optimization problem with constraints for safety functions. Safe optimization requires performing efficient optimization without evaluating unsafe solutions. A few studies have proposed the optimization methods for safe optimization based on Bayesian optimization and the evolutionary algorithm. However, Bayesian optimization-based methods often struggle to achieve superior solutions, and the evolutionary algorithm-based method fails to effectively reduce unsafe evaluations. This study focuses on CMA-ES as an efficient evolutionary algorithm and proposes an optimization method termed safe CMA-ES. The safe CMA-ES is designed to achieve both safety and efficiency in safe optimization. The safe CMA-ES estimates the Lipschitz constants of safety functions transformed with the distribution parameters using the maximum norm of the gradient in Gaussian process regression. Subsequently, the safe CMA-ES projects the samples to the nearest point in the safe region constructed with the estimated Lipschitz constants. The numerical simulation using the benchmark functions shows that the safe CMA-ES successfully performs optimization, suppressing the unsafe evaluations, while the existing methods struggle to significantly reduce the unsafe evaluations.

1. Introduction

In the real-world applications within the medical and control engineering fields (Berkenkamp et al., 2023; Louis et al., 2022; Modugno et al., 2016; Sui et al., 2015), unsafe solutions may be present, and their evaluations involve risk such as clinical deterioration and breakdown of control systems. For instance, in spinal cord therapy (Sui et al., 2015), the configuration of the electrical stimulation is optimized to improve spinal reflex and locomotor function, where unsafe configuration can aggravate spinal cord injuries. In real-world optimization of drone control system (Berkenkamp et al., 2023), we have to optimize the system parameters preventing drone collisions with the surrounding objects so as not to break down the drone. These optimization problems, preventing the evaluation of unsafe solutions that should not be evaluated, are termed as safe optimization. Safe optimization is formulated as a specialized type of constrained optimization problem aiming to reduce the evaluations of the solutions whose safety function values exceed the safety threshold. Additionally, a set of safe solutions, referred to as safe seeds is provided to the optimizer.

Several methods have been developed for safe optimization. SafeOpt (Sui et al., 2015) is a representative in this category. SafeOpt relies on Bayesian optimization and constructs the safe region using the Lipschitz constant of the safety function not to evaluate the unsafe solutions. Several extensions of SafeOpt have been proposed, such as modified SafeOpt (Berkenkamp et al., 2016), which eliminates the need for the Lipschitz constant of the safety function, and swarm-based SafeOpt (Duivenvoorden et al., 2017), which introduces the particle swarm optimization (Serkan Kiranyaz, 2014) to improve the search performance on high-dimensional problems. In the context of evolutionary algorithms within safe optimization, a general approach called violation avoidance (Kaji et al., 2009) has been proposed. This method involves regenerating a solution when the nearest solution to the generated one is deemed unsafe. However, according to reference (Kim et al., 2022), it points out that the violation avoidance may not effectively suppress the evaluations of unsafe solutions compared with SafeOpt. Considering the computational cost of updates and the optimization performance with large budget, evolutionary algorithms have advantages over Bayesian optimization (Hutter et al., 2013). Consequently, there is a demand for the development of efficient evolutionary algorithms tailored for safe optimization.

This study focuses on the covariance matrix adaptation evolution strategy (CMA-ES) (Hansen and Ostermeier, 1996) as an efficient evolutionary algorithm. CMA-ES employs a multivariate Gaussian distribution as a sampling distribution for solutions and updates the distribution parameters to generate better solutions. The CMA-ES possesses several invariance properties, such as the invariance to affine transformations of the search space, and realizes a powerful optimization performance on ill-conditioned and non-separable problems (Hansen and Auger, 2014).

This study proposes an optimization method for safe optimization based on CMA-ES, termed safe CMA-ES, to realize both safety and efficiency in safe optimization. In addition to the original update procedure of the CMA-ES, the safe CMA-ES estimates the Lipschitz constant of the safety function and constructs a safe region to repair the samples from multivariate Gaussian distribution. The estimation process of the Lipschitz constant uses the Gaussian process regression (GPR) (Rasmussen et al., 2006) trained with the evaluated solutions and computes the maximum norm of the gradient of the prediction mean. The safe CMA-ES estimates the Lipschitz constant in the space transformed using the distribution parameter to inherit the invariance to affine transformations of the search space. Then, the safe CMA-ES projects the sample generated from the multivariate Gaussian distribution to the nearest point in the safe region computed with the estimated Lipschitz constant. Additionally, the safe CMA-ES corrects the initial distribution parameters using the safe seeds.

In numerical simulations, we evaluated the search performance of the safe CMA-ES on the benchmark functions for safe optimization. While the existing method for safe optimization failed to suppress the evaluation of unsafe solutions, the safe CMA-ES successfully found the optimal solution with few or no unsafe evaluations.

2. CMA-ES

The CMA-ES is a probabilistic model-based evolutionary algorithm for continuous black-box optimization problems. CMA-ES employs a multivariate Gaussian distribution parameterized by the mean vector , the covariance matrix , and the step-size .

We introduce the update procedure of the CMA-ES on the objective function . At first, the CMA-ES generates samples from the multivariate Gaussian distribution as

| (1) | ||||

| (2) | ||||

| (3) |

Then, the CMA-ES evaluates the samples on the objective function and computes their rankings. We denote the index of the -th best sample as .

Next, the CMA-ES updates two evolution paths , which are initialized as . The update of evolution paths uses two weighted sums of -best solutions and computed using the positive weights and is performed as

| (4) | ||||

| (5) |

where are the accumulation rates of the evolution paths and . The Heaviside function becomes one if and only if it satisfies

| (6) |

where is approximated value of the expectation .

Finally, the CMA-ES updates the distribution parameters of the multivariate Gaussian distribution as

| (7) | ||||

| (8) | ||||

| (9) | ||||

where are the learning rates and is the damping factor. The CMA-ES contains well-tuned default values for hyperparameters. Refer to the details in the references (Hansen, 2016; Hansen and Auger, 2014).

3. Formulation of Safe Optimization

We follow the formulation of the safe optimization outlined in the reference (Kim et al., 2021). We consider a constrained minimization problem of the objective function as

| (10) | ||||

where and are the safety function and the safety threshold, respectively. We consider a solution as a safe solution when it satisfies all safety constraints and regard a solution as an unsafe solution when it violates at least one safety constraint. In safe optimization, the optimizer can access the safety thresholds and is required to optimize the objective function, suppressing the evaluations of the unsafe solutions. In addition, the optimizer receives safe solutions as the safe seeds. The upper limit of unsafe evaluations depends on the target application. The evaluation of unsafe solutions is usually prohibited in medical applications, while a few unsafe evaluations may be acceptable for control system optimization. For example, in control system optimization for robots, one can prepare multiple robots of the same type in preparation for breakage, and the number of robots serves as the upper limit for unsafe evaluations in this case.

We assume that the evaluations of the objective function and the safety constraints are jointly performed. Additionally, we assume that the safety functions are noiseless black-box functions. For simplicity in this research, we assume that the evaluation values of the unsafe solutions on the objective function are accessible.

4. Proposed Method: Safe CMA-ES

This study proposes the safe CMA-ES, an extension of the CMA-ES that achieves efficient optimization performance in safe optimization. The safe CMA-ES constructs the safe region based on the estimated Lipschitz constants of the safety functions and projects the generated samples to the nearest point in the safe region to avoid evaluating unsafe solutions (Section 4.1). The Lipschitz constants are estimated in the transformed search space by the Gaussian process regression (Section 4.2). To further enhance safety, the safe CMA-ES initializes the distribution, ensuring that it fits within the safe region (Section 4.3). Algorithm 1 shows the pseudo-code of the safe CMA-ES.

4.1. Projection of Samples to Safe Region

The safe CMA-ES introduces the transformation using the current distribution parameters to inherit the invariance to affine transformations of the search space as

| (11) |

The safe CMA-ES constructs the safe region using the following fact: given the Lipschitz constant of the composition function , any safe solution and any solutions satisfy

| (12) |

The safe CMA-ES uses solutions evaluated in the last iterations to construct the safe region. The safe CMA-ES computes the safe region using safe solutions in evaluated solutions and the estimated Lipschitz constant , explained in the next section, as

The function returns the radius of the safe region with a given safe solution on the composition function , as

| (13) |

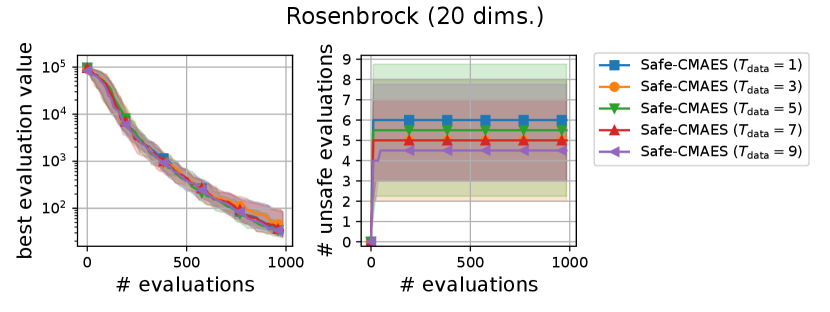

Figure 1 illustrates an example of the safe region. Figure 1 also shows the safe region computed with the Lipschitz constant of the safety function instead of the composition function for reference. It can be observed that the Lipschitz constant of our composition function expands the safe region when the distribution parameters of the CMA-ES is learned appropriately.

To prevent the evaluation of unsafe solutions, the safe CMA-ES projects the generated solution in (3) to the nearest point in the safe region with respect to the Mahalanobis distance as

| (14) |

The projection is performed by modifying the sample generated in (1) as

| (15) |

where and are given as

| (16) | ||||

| (17) |

The safe solution has the safe region closest to in , and determines between and , which becomes when the solution is originally generated in the safe region.

4.2. Estimation of Lipschitz Constants

Based on the updated distribution parameters, the safe CMA-ES estimates the Lipschitz constant of each safety function using the Gaussian process regression (GPR) trained with evaluated solutions. The safe CMA-ES also uses solutions evaluated in the last iterations (including the solutions evaluated in the current iteration) as the training data for the GPR. The safe CMA-ES normalizes the -th solution in the training data using the updated distribution parameters as . Additionally, the target variable corresponding to the -th training data is normalized as

| (18) |

where and are the average and standard deviation of the evaluation values on the -th safety function over the solutions, respectively. The safe CMA-ES computes the estimated Lipschitz constant of the composition function using the posterior distribution of GPR with the training data as

| (19) |

where is the mean of the posterior distribution. We set the search space for maximization in (19) as . Based on the approach in (Gonzalez et al., 2016), the safe CMA-ES applies a two-step optimization process to solve the maximization. In the first step, the safe CMA-ES generates samples from the standard multivariate normal distribution and computes the norm of the gradient of the mean of the posterior distribution with respect to each sample . In the second step, the safe CMA-ES employs L-BFGS (Liu and Nocedal, 1989) with box-constraint handling and runs L-BFGS from the maximizer in for 200 iterations. Following the settings of the surrogate-assisted CMA-ES with GPR (Toal and Arnold, 2020; Yamada et al., 2023), we use the RBF kernel as

| (20) |

We set the length scale and observation noise as and , respectively.

In addition, the safe CMA-ES has two correction mechanisms that increase the estimated Lipschitz constant in case the estimation using the GPR is unreliable. Introducing coefficients and for the correction, the estimated Lipschitz constant is computed as

| (21) |

The updates of those coefficients are as follows. The safe CMA-ES increases the coefficient when the number of training data is small because the prediction with small training dataset is unreliable. Recalling the maximum number of training data is , the coefficient is updated as

| (22) |

where determines the effect of the coefficient . The coefficient is set for each safety constraint and increased when the solutions violate it. Since the safety constraints violated by many solutions require drastic correction, the safe CMA-ES determines the update strength of the coefficient based on the ratio of solutions generated in the current iteration as

| (23) |

Then, the safe CMA-ES updates the coefficient as

| (24) |

where determines the effect of the coefficient . We set the initial value of the coefficient as .

4.3. Initialization of Distribution Parameters

The Lipschitz constants of the safety functions are estimated before generating solutions in the first iteration. In the first estimation, the safe CMA-ES requires a predefined lower bound for the estimated Lipschitz constant and estimates the Lipschitz constant as

| (25) |

We note is computed with the initial distribution parameters and the GPR with the safe seeds, and is computed with the number of the safe seeds. If the number of the safe seeds is one, the safe CMA-ES set because the GPR does not work well.

The safe CMA-ES also modifies the initial mean vector and step-size using the safe seeds. The initial mean vector is set to the safe seed with the best evaluation value on the objective function as

| (26) |

The step-size is modified to maintain the ratio of solutions not changed after the projection in (15) above as

| (27) |

where is the percent point function of the -distribution with the degree of freedom , which gives the squared radius of trust region on the standard -dimensional Gaussian distribution with the probability . We note the function is given by (13). We set the target ratio to .

5. Experiment

We investigate the following aspects through numerical simulation.111The code will be made available at https://github.com/CyberAgentAILab/cmaes (Nomura and Shibata, 2024).

-

•

The performance evaluation of the safe CMA-ES in the early phase of the optimization (Section 5.3).

-

•

The performance evaluation of the safe CMA-ES throughout the optimization (Section 5.4).

-

•

The performance comparison of the safe CMA-ES with the optimization methods for safe optimization (Section 5.5).

We also provide the results of sensitivity experiments of hyperparameters of the safe CMA-ES in the supplemental material.

5.1. Comparative Methods

SafeOpt

SafeOpt (Sui et al., 2015) is an optimization method for safe optimization based on Bayesian optimization. SafeOpt assumes accessibility to the Lipschitz constant of the safety function and constructs the safe region based on the upper confidence bound of the evaluation value on the safety function as 222Differently from the original paper (Sui et al., 2015), which assumes the safety constraint , we explain the update of SafeOpt with the safety constraint .

| (28) |

The initial safe region is given by the safe seeds. Subsequently, SafeOpt computes two regions within the safe region: the region , where the optimal solution seems to be included, and the region , comprising solutions that could potentially extend the safe region. The SafeOpt evaluates the solution with the largest confidence interval in the union . As a variant of SafeOpt, reference (Sui et al., 2015) also proposes SafeOpt-UCB, which evaluates the solution with the smallest lower bound of the predictive confidence interval within the safe region .

Several methods have been developed as extensions of SafeOpt. The reference (Berkenkamp et al., 2016) modified the update of SafeOpt not to require the Lipschitz constant of the safety function and proposed modified SafeOpt. Additionally, to reduce the computational cost in high-dimensional problems, swarm-based SafeOpt (Duivenvoorden et al., 2017) uses the particle swarm optimization (Serkan Kiranyaz, 2014) to search for the solution on the GPR.

Violation Avoidance

The violation avoidance (Kaji et al., 2009) is a general handling for evolutionary algorithms in the safe optimization. The violation avoidance modifies the generation process of the evolutionary algorithm to regenerate a solution when the nearest solution to the generated one is unsafe. The distance between the generated solution and the solution already evaluated is given by

| (29) |

The weight is determined based on whether is safe or unsafe as

| (30) |

where are the predefined weights. The evolutionary algorithm tends to evaluate a solution close to a safe solution when is larger than and close to an unsafe solutions otherwise.

In numerical simulation, we included the CMA-ES with violation avoidance as one of the comparative methods. We set the weights as . We sampled points from the multivariate Gaussian distribution and randomly selected solutions from the samples whose closest evaluated solutions are safe. We terminated the optimization when we could not obtain solutions from samples.

5.2. Experimental Setting

We used the following benchmark functions with a unique optimal solution .

-

•

Sphere:

-

•

Ellipsoid:

-

•

Reversed Ellipsoid:

-

•

Rosenbrock:

The sphere, ellipsoid, and rosenbrock functions are well-known benchmarks for continuous black-box optimization. Additionally, we designed the reversed ellipsoid function by reversing the order of the coefficients in the ellipsoid function. This reversed ellipsoid function was employed to investigate the impact of safety function settings on the performance of the safe CMA-ES.

In the first and second experiments, we compared the safe CMA-ES with three comparative methods: the naive CMA-ES, CMA-ES with constraint handling, and CMA-ES with violation avoidance. We used augmented Lagrangian constraint handling (Atamna et al., 2016)333We implemented the constraint handling with pycma (Hansen et al., 2019). We do not use the negative weights for fair comparison. as the constraint handling for the CMA-ES. We terminated the optimization when the best evaluation value reached or when the minimum eigenvalue of became smaller than .

In the third experiment, we compared the safe CMA-ES with the existing optimization methods designed for safe optimization: SafeOpt, modified SafeOpt, and swarm-based SafeOpt.444We used the implementation of SafeOpt, modified SafeOpt, and swarm-based SafeOpt provided by authors in https://github.com/befelix/SafeOpt. We used the RBF kernel in (20) as the kernel of them for a fair comparison with the safe CMA-ES.555 We did not use the automatic relevance determination (ARD) as it did not lead performance improvements in preliminary experiments. We optimized the hyperparameters of the kernel by the maximum likelihood method. As SafeOpt requires the Lipschitz constant of the safety function, we provided the maximum of the norm of the gradient over the grid points that divide each dimension on the search space into equally, i.e., points on the grid in total. For the violation avoidance, consistent with the setting in the original paper, we fixed and varied the weight for safe solution in addition to the default setting as .

For all methods, we set the search space as . We obtained the safe seeds from the samples that are generated uniformly at random within the search space and satisfy all safety constraints. We set the number of the safe seeds as . For the methods employing CMA-ES, the initial mean vector was set to the safe seed with the best evaluation value on the objective function. The initial step-size and the covariance matrix are given by and , respectively. It is important to note that the safe CMA-ES corrects the initial step-size using (27). We set the number of dimensions as . The population size was set as . We conducted 50 independent trials for each setting.

5.3. Result of Performance Evaluation in Early Phase of Optimization

We set the safety function and threshold to investigate the performance in the early phase of the optimization process as

| (31) |

where represents -quantile of the uniform distribution on the objective function over the search space . In this case, the solution with a poor evaluation value on the objective function is unsafe. The safety threshold was estimated using 10,000 samples generated uniformly at random across the search space. We set the total number of evaluations to 1,000.

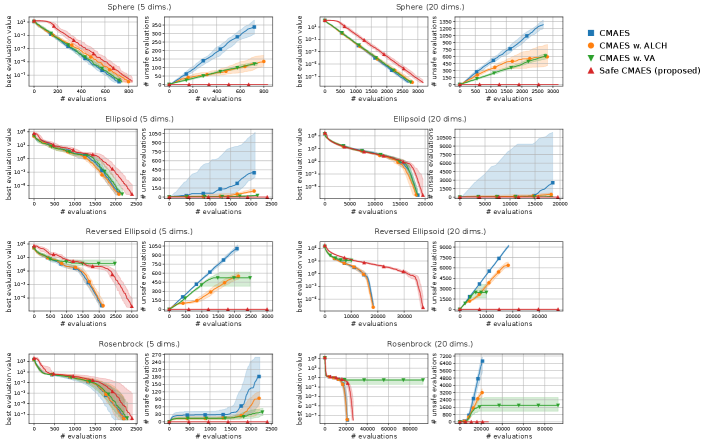

Figure 2 shows the transitions of the best evaluation value and the number of unsafe evaluations. Focusing on the result of 5-dimensional problems, the safe CMA-ES successfully optimized all benchmark functions, suppressing the unsafe evaluations. It is noteworthy that the safe CMA-ES avoided evaluating unsafe solutions in over 75% of the trials. Meanwhile, the violation avoidance did not significantly reduce the unsafe evaluations compared to the naive CMA-ES.

For 20-dimensional problems, the safe CMA-ES initially evaluated the unsafe solutions in the early iterations on the ellipsoid and rosenbrock functions. We consider that this is because the estimation of 20-dimensional GPR trained with limited training data was unreliable. The safe CMA-ES successfully evaluated only safe solutions after initial iterations. Meanwhile, we observe a gradual decrease in the best evaluation value of the safe CMA-ES on the sphere function compared to other methods. We consider that the overestimation of the Lipschitz constant of the safety function led to this slight deterioration. However, the safe CMA-ES accelerated the decrease of the best evaluation value on the ellipsoid function. This is because the safe solution had a good evaluation value in this setting, and the projection to the safe region improved the evaluation value of the samples on the objective function, thereby accelerating the optimization process.

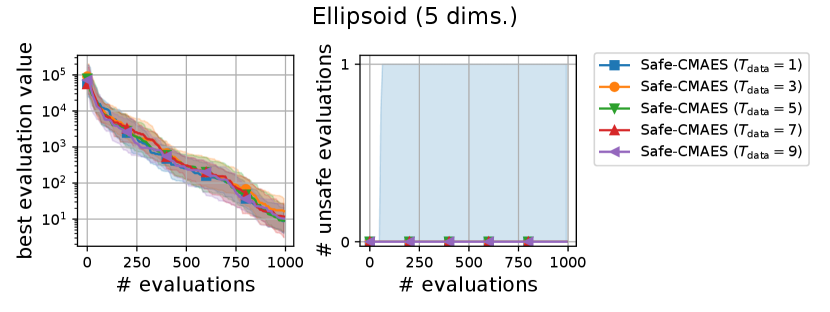

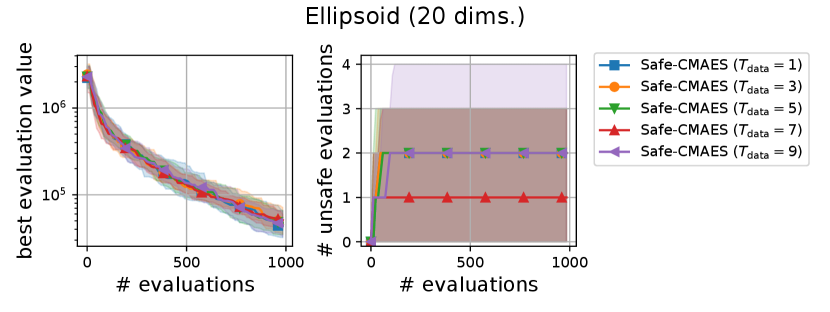

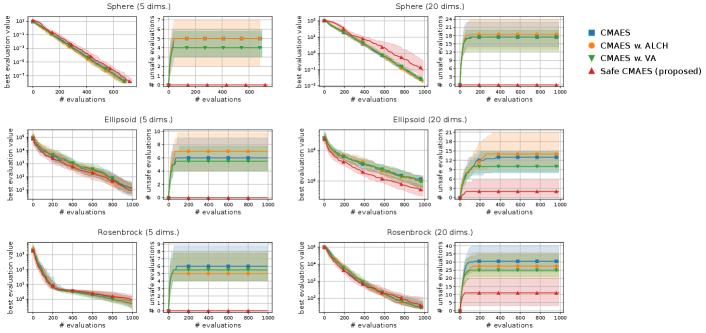

5.4. Result of Performance Evaluation Throughout Optimization Process

We used the safety function and safety threshold to investigate the performance throughout the optimization process as

| (32) |

This safety function constrains the first element of the solution. The optimization without evaluating unsafe solutions become challenging for the reversed ellipsoid function. We set the total number of evaluations as .

Figure 3 shows the transitions of the best evaluation value satisfying the safety constraint and the number of unsafe evaluations. It is noteworthy that the median numbers of the unsafe evaluations were zero for the safe CMA-ES in all problems. The results on the sphere function show that the best evaluation value of the safe CMA-ES stagnated in the early phase of the optimization. This occurred due to the overestimation of the Lipschitz constant of the safety function, and the modification of the initial step-size in (27) set an initial value smaller than necessary. On other functions, the safe CMA-ES required more evaluations to reduce the best evaluation value throughout the optimization compared to the comparative methods. The safe CMA-ES, especially on the 20-dimensional reversed ellipsoid, increased the number of evaluations by almost two times. However, the safe CMA-ES completed the whole optimization process without evaluating unsafe solutions, in contrast to the comparative methods, which continued to increase unsafe solutions. Additionally, the CMA-ES with violation avoidance failed to optimize the reversed ellipsoid and rosenbrock functions. This results show the safety and efficiency of the safe CMA-ES on safe optimization.

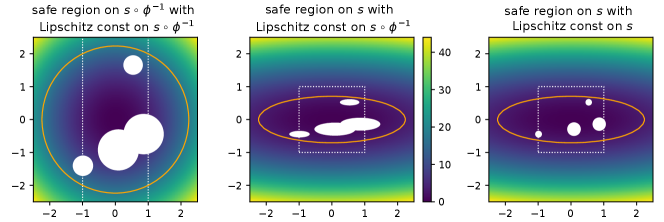

5.5. Result of Performance Comparison with Existing Methods

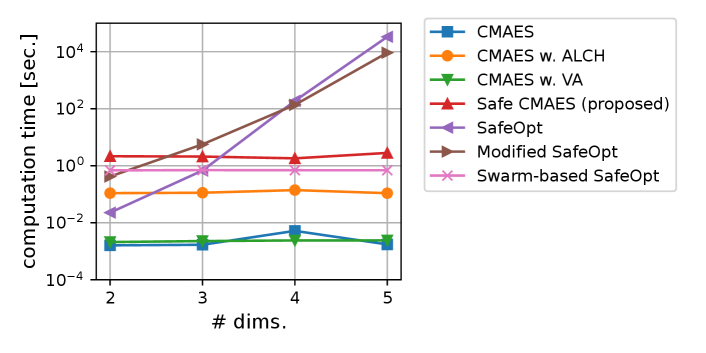

Finally, we compared the safe CMA-ES with existing optimization methods for safe optimization. Before evaluating the optimization performance, we compared the computational cost for updating. Figure 4 shows the computational time for performing five updates varying the number of dimensions as .666 We measured the computational time using AMD EPYC 7763 (2.45GHz, 64 cores). We implemented the safe CMA-ES and violation avoidance using NumPy 1.21.3 (Harris et al., 2020), SciPy 1.7.1 (Virtanen et al., 2020), and GpyTorch 1.10 (Gardner et al., 2018). We observed a significant increase in the computational costs of SafeOpt and modified SafeOpt as the number of dimensions increased, which made the comparison with the safe CMA-ES difficult. We consider that SafeOpt and modified SafeOpt used a grid space dividing the search space into even intervals, and the growing number of grid points resulted in an increased computational cost.777 We used a grid dividing each dimension into 20 points. We did not optimize the hyperparameters using the maximum likelihood method because it deteriorated their performance in our preliminary experiment.

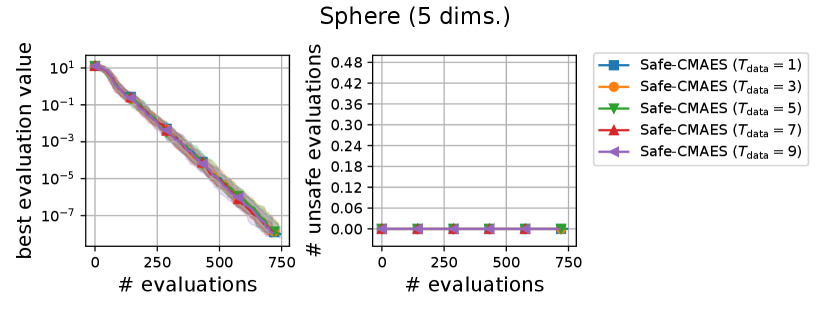

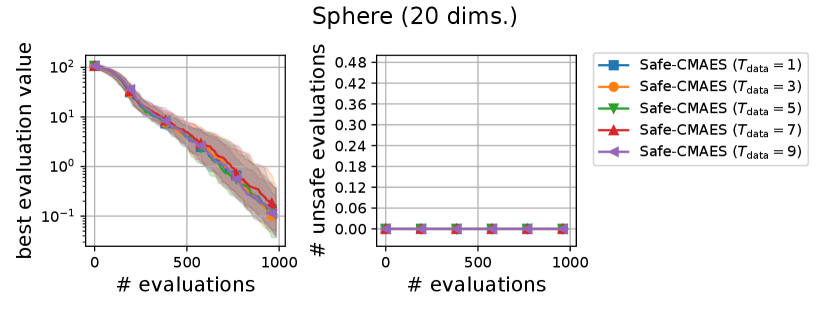

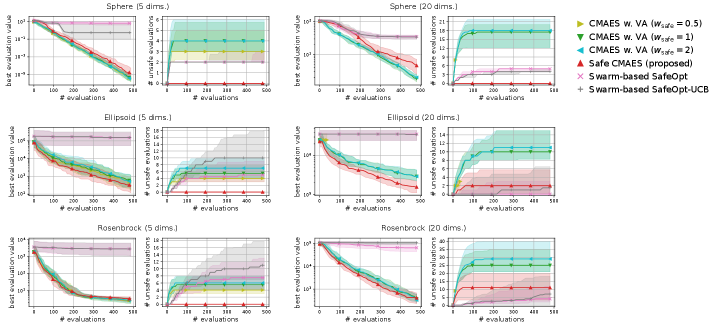

Figure 5 shows the comparison results between the safe CMA-ES, swarm-based SafeOpt, and violation avoidance using the safety constraint (31) as used in the first experiment. We observed that swarm-based SafeOpt failed to reduce the best evaluation value on the ellipsoid and rosenbrock functions. The reason for this failure is that the GPR could not accurately estimate the original landscape of those functions. In contrast, because the safe CMA-ES uses the GPR on the composition function , the safe CMA-ES obtained a reliable estimation realizing the safe optimization efficiently. Furthermore, more unsafe evaluations occurred in the violation avoidance than in the safe CMA-ES. Additionally, in 20-dimensional problems, the violation avoidance with terminated due to the inability to generate solutions whose nearest evaluated solutions were safe. These results reveal that the continued superiority of the safe CMA-ES is not lost even when adjusting the weights of violation avoidance.

6. Conclusion

We proposed the safe CMA-ES as an efficient optimization method tailored for safe optimization. The safe CMA-ES estimates the Lipschitz constants of the transformed safety function using the distribution parameters. The estimation process uses GPR trained with evaluated solutions and the maximum norm of the gradient on the mean of the posterior distribution. Additionally, the safe CMA-ES constructs the safe region and projects the samples to the nearest points in the safe region to reduce the unsafe evaluations. The safe CMA-ES also modifies the initial mean vector and initial step-size using the safe seeds. The numerical simulation shows that the safe CMA-ES optimized the benchmark problems suppressing the unsafe evaluations although the rate of improvement in the best evaluation value was slower compared to other methods. As the safe CMA-ES assumes the existence of the Lipschitz constant of the safety functions, the algorithm improvement for safe optimization with discontinuous safety functions is considered as the future work. Additionally, since we used the synthetic problems in our experiment, the evaluation of the safe CMA-ES in realistic problems is left as a future work.

Acknowledgements.

This work was partially supported by JSPS KAKENHI (JP23H00491, JP23H03466, JP23KJ0985), JST PRESTO (JPMJPR2133), and NEDO (JPNP18002, JPNP20006).References

- (1)

- Atamna et al. (2016) Asma Atamna, Anne Auger, and Nikolaus Hansen. 2016. Augmented Lagrangian Constraint Handling for CMA-ES — Case of a Single Linear Constraint. In Parallel Problem Solving from Nature – PPSN XIV. Springer International Publishing, Cham, 181–191.

- Berkenkamp et al. (2023) Felix Berkenkamp, Andreas Krause, and Angela P. Schoellig. 2023. Bayesian optimization with safety constraints: safe and automatic parameter tuning in robotics. Machine Learning 112, 10 (2023), 3713–3747. https://doi.org/10.1007/s10994-021-06019-1

- Berkenkamp et al. (2016) Felix Berkenkamp, Angela P. Schoellig, and Andreas Krause. 2016. Safe controller optimization for quadrotors with Gaussian processes. In 2016 IEEE International Conference on Robotics and Automation (ICRA). 491–496. https://doi.org/10.1109/ICRA.2016.7487170

- Duivenvoorden et al. (2017) Rikky R.P.R. Duivenvoorden, Felix Berkenkamp, Nicolas Carion, Andreas Krause, and Angela P. Schoellig. 2017. Constrained Bayesian Optimization with Particle Swarms for Safe Adaptive Controller Tuning. IFAC-PapersOnLine 50, 1 (2017), 11800–11807. https://doi.org/10.1016/j.ifacol.2017.08.1991 20th IFAC World Congress.

- Gardner et al. (2018) Jacob R Gardner, Geoff Pleiss, David Bindel, Kilian Q Weinberger, and Andrew Gordon Wilson. 2018. GPyTorch: Blackbox Matrix-Matrix Gaussian Process Inference with GPU Acceleration. In Advances in Neural Information Processing Systems.

- Gonzalez et al. (2016) Javier Gonzalez, Zhenwen Dai, Philipp Hennig, and Neil Lawrence. 2016. Batch Bayesian Optimization via Local Penalization. In Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, Vol. 51. PMLR, 648–657.

- Hansen (2016) Nikolaus Hansen. 2016. The CMA Evolution Strategy: A Tutorial. CoRR abs/1604.00772 (2016). arXiv:1604.00772

- Hansen et al. (2019) Nikolaus Hansen, Youhei Akimoto, and Petr Baudis. 2019. CMA-ES/pycma on Github.

- Hansen and Auger (2014) Nikolaus Hansen and Anne Auger. 2014. Principled Design of Continuous Stochastic Search: From Theory to Practice. Springer Berlin Heidelberg, Berlin, Heidelberg, 145–180. https://doi.org/10.1007/978-3-642-33206-7_8

- Hansen and Ostermeier (1996) Nikolaus Hansen and Andreas Ostermeier. 1996. Adapting arbitrary normal mutation distributions in evolution strategies: The covariance matrix adaptation. In Proceedings of IEEE International Conference on Evolutionary Computation. 312–317. https://doi.org/10.1109/ICEC.1996.542381

- Harris et al. (2020) Charles R. Harris, K. Jarrod Millman, St’efan J. van der Walt, Ralf Gommers, Pauli Virtanen, David Cournapeau, Eric Wieser, Julian Taylor, Sebastian Berg, Nathaniel J. Smith, Robert Kern, Matti Picus, Stephan Hoyer, Marten H. van Kerkwijk, Matthew Brett, Allan Haldane, Jaime Fernández del Río, Mark Wiebe, Pearu Peterson, Pierre Gérard-Marchant, Kevin Sheppard, Tyler Reddy, Warren Weckesser, Hameer Abbasi, Christoph Gohlke, and Travis E. Oliphant. 2020. Array programming with NumPy. Nature 585, 7825 (2020), 357–362. https://doi.org/10.1038/s41586-020-2649-2

- Hutter et al. (2013) Frank Hutter, Holger Hoos, and Kevin Leyton-Brown. 2013. An evaluation of sequential model-based optimization for expensive blackbox functions. In Proceedings of the 15th Annual Conference Companion on Genetic and Evolutionary Computation. Association for Computing Machinery, New York, NY, USA, 1209–1216. https://doi.org/10.1145/2464576.2501592

- Kaji et al. (2009) Hirotaka Kaji, Kokolo Ikeda, and Hajime Kita. 2009. Avoidance of constraint violation for experiment-based evolutionary multi-objective optimization. In 2009 IEEE Congress on Evolutionary Computation. 2756–2763. https://doi.org/10.1109/CEC.2009.4983288

- Kim et al. (2022) Youngmin Kim, Richard Allmendinger, and Manuel López-Ibáñez. 2022. Are Evolutionary Algorithms Safe Optimizers?. In Proceedings of the Genetic and Evolutionary Computation Conference. Association for Computing Machinery, 814–822. https://doi.org/10.1145/3512290.3528818

- Kim et al. (2021) Youngmin Kim, Richard Allmendinger, and Manuel López-Ibáñez. 2021. Safe Learning and Optimization Techniques: Towards a Survey of the State of the Art. In Trustworthy AI - Integrating Learning, Optimization and Reasoning. Springer International Publishing, Cham, 123–139.

- Liu and Nocedal (1989) Dong C Liu and Jorge Nocedal. 1989. On the limited memory BFGS method for large scale optimization. Mathematical Programming 45, 1 (1989), 503–528. https://doi.org/10.1007/BF01589116

- Louis et al. (2022) Maxime Louis, Hector Romero Ugalde, Pierre Gauthier, Alice Adenis, Yousra Tourki, and Erik Huneker. 2022. Safe Reinforcement Learning for Automatic Insulin Delivery in Type I Diabetes. In Reinforcement Learning for Real Life Workshop, NeurIPS 2022.

- Modugno et al. (2016) Valerio Modugno, Ugo Chervet, Giuseppe Oriolo, and Serena Ivaldi. 2016. Learning soft task priorities for safe control of humanoid robots with constrained stochastic optimization. In 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids). 101–108. https://doi.org/10.1109/HUMANOIDS.2016.7803261

- Nomura and Shibata (2024) Masahiro Nomura and Masashi Shibata. 2024. cmaes : A Simple yet Practical Python Library for CMA-ES. arXiv preprint arXiv:2402.01373 (2024).

- Rasmussen et al. (2006) Carl Edward Rasmussen, Christopher KI Williams, et al. 2006. Gaussian Processes for Machine Learning. Vol. 1. Springer.

- Serkan Kiranyaz (2014) Moncef Gabbouj Serkan Kiranyaz, Turker Ince. 2014. Multidimensional Particle Swarm Optimization for Machine Learning and Pattern Recognition (first ed.). Springer. https://doi.org/10.1007/978-3-642-37846-1

- Sui et al. (2015) Yanan Sui, Alkis Gotovos, Joel Burdick, and Andreas Krause. 2015. Safe Exploration for Optimization with Gaussian Processes. In Proceedings of the 32nd International Conference on Machine Learning (Proceedings of Machine Learning Research, Vol. 37). PMLR, 997–1005.

- Toal and Arnold (2020) Lauchlan Toal and Dirk V. Arnold. 2020. Simple Surrogate Model Assisted Optimization with Covariance Matrix Adaptation. In Parallel Problem Solving from Nature – PPSN XVI. Springer International Publishing, 184–197.

- Virtanen et al. (2020) Pauli Virtanen, Ralf Gommers, Travis E. Oliphant, Matt Haberland, Tyler Reddy, David Cournapeau, Evgeni Burovski, Pearu Peterson, Warren Weckesser, Jonathan Bright, Stéfan J. van der Walt, Matthew Brett, Joshua Wilson, K. Jarrod Millman, Nikolay Mayorov, Andrew R. J. Nelson, Eric Jones, Robert Kern, Eric Larson, C J Carey, İlhan Polat, Yu Feng, Eric W. Moore, Jake VanderPlas, Denis Laxalde, Josef Perktold, Robert Cimrman, Ian Henriksen, E. A. Quintero, Charles R. Harris, Anne M. Archibald, Antônio H. Ribeiro, Fabian Pedregosa, Paul van Mulbregt, and SciPy 1.0 Contributors. 2020. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nature Methods 17 (2020), 261–272. https://doi.org/10.1038/s41592-019-0686-2

- Yamada et al. (2023) Yutaro Yamada, Kento Uchida, Shota Saito, and Shinichi Shirakawa. 2023. Surrogate-Assisted (1+1)-CMA-ES with Switching Mechanism of Utility Functions. In Applications of Evolutionary Computation. Springer Nature Switzerland, 798–814.

Appendix A Investigation of Hyperparameter Sensitivity

We investigated the sensitivities of hyperparameters , , and of the safe CMA-ES. The effect of those hyperparameters are summarized as

-

•

The hyperparameter controls the rates of increase and decrease for the coefficient for the estimated Lipschitz constant when the unsafe solutions are evaluated.

-

•

The hyperparameter controls the coefficient for the estimated Lipschitz constant when the number of evaluated solutions used for the Gaussian process regression is small.

-

•

The hyperparameter controls the number of solutions to construct the safe region and the number of training data for Gaussian process regression.

We used the safety constraint used in the first and third experiments in the paper as

| (33) |

We ran the safe CMA-ES changing a single hyperparameter and remaining the other hyperparameters to their recommended settings. We ran 50 independent trials for each setting.

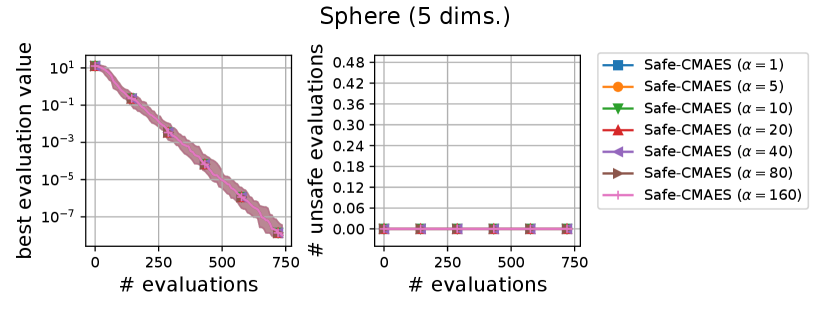

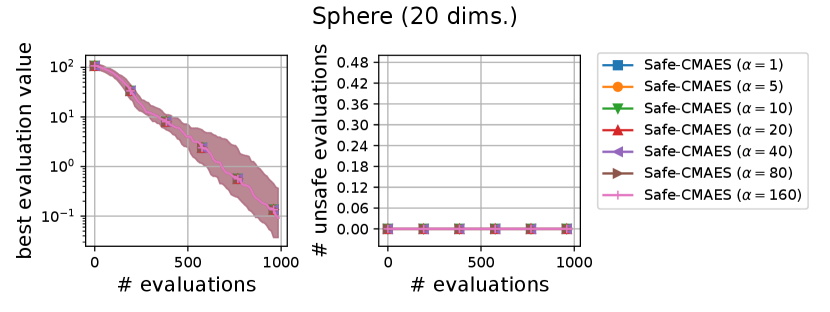

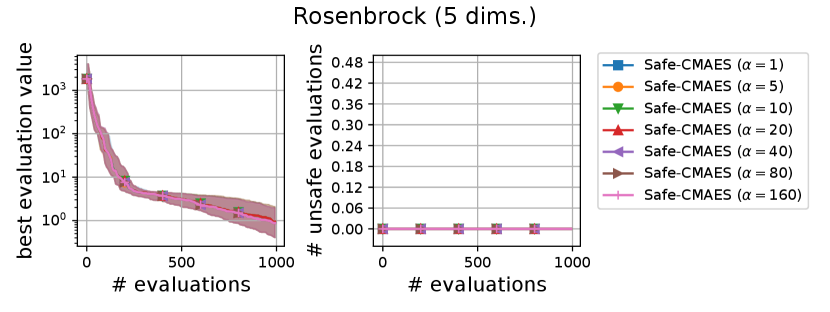

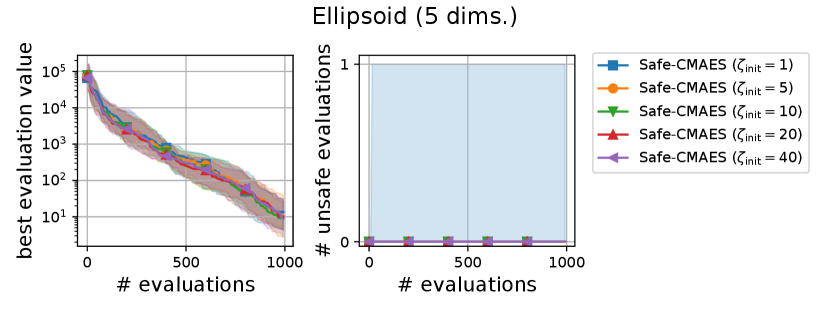

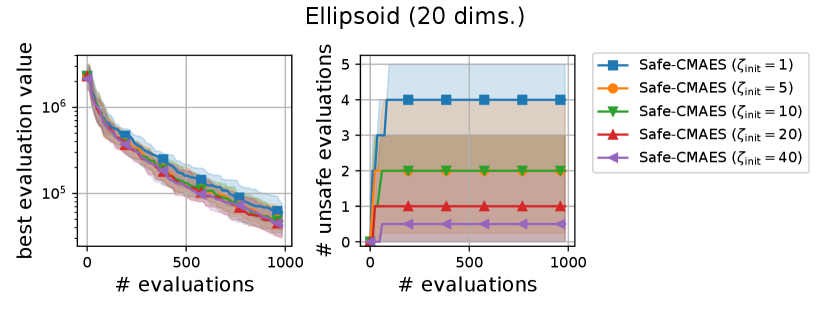

A.1. Result of Sensitivity Experiment of

We varied the setting of as . Figure 6 shows the transitions of the best evaluation value and the number of unsafe evaluations. We note that no unsafe evaluation occured in 5-dimensional functions and the 20-dimensional sphere function. Since the hyperparameter affects the dynamics only when an unsafe evaluation occurs, the dynamics of the safe CMA-ES were not changed in those cases. For the 20-dimensional ellipsoid function, the unsafe evaluations tended to be reduced as the hyperparameter increased, while the best evaluation value slightly stagnated in the first few updates. On the 20-dimensional rosenbrock function, a similar stagnation of the best evaluation value was observed with large , while the number of unsafe evaluations remained unchanged except for the case , i.e., the case without the adaptation of the coefficient . Overall, the recommended setting seems to be a reasonable choice.

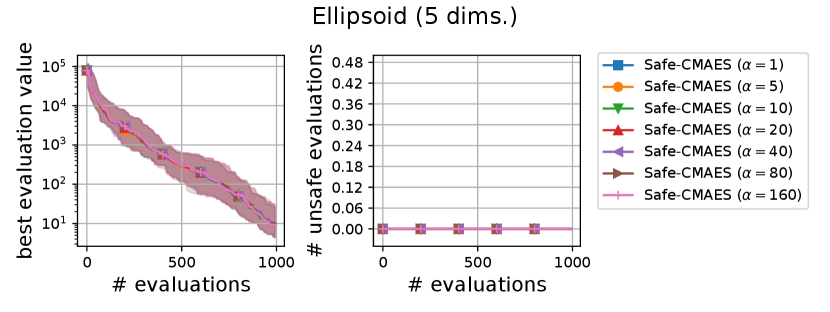

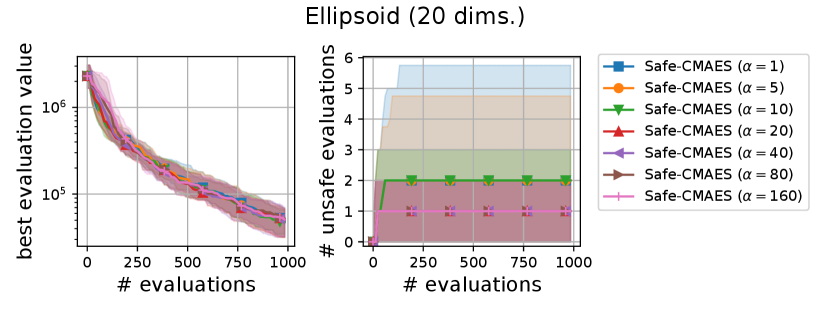

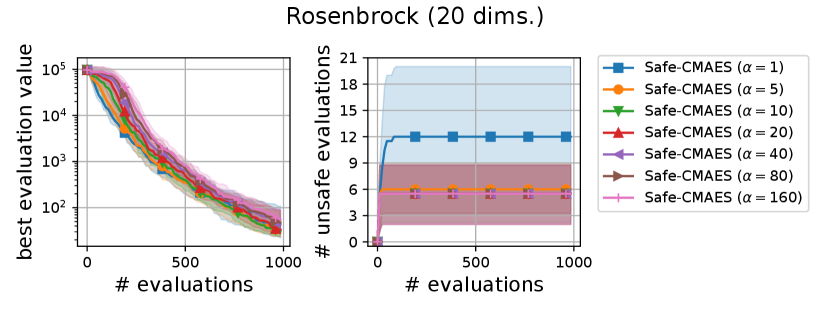

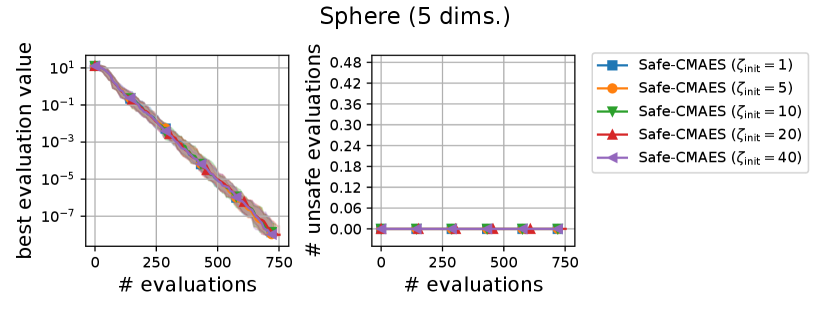

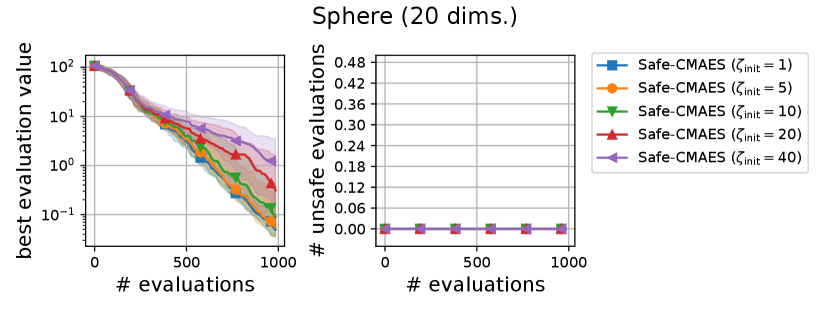

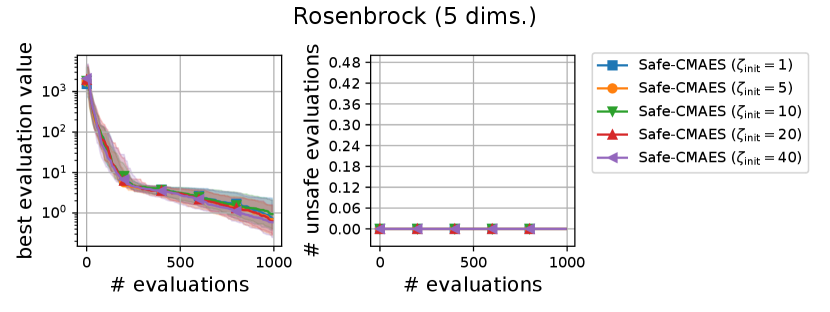

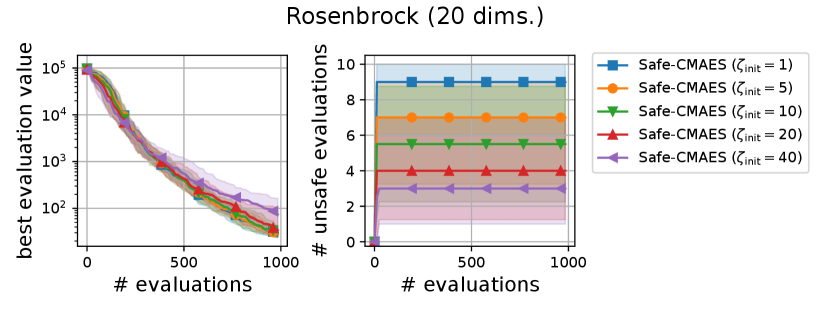

A.2. Result of Sensitivity Experiment of

We varied the setting of as . Figure 7 shows the transitions of the best evaluation value and the number of unsafe evaluations. When , indicating that the adaptation of coefficient is not applied, the unsafe evaluations were occasionally occurred on the 5-dimensional ellipsoid. On the 20-dimensional sphere function, a larger setting of delayed the decrease of the best evaluation value, while unsafe evaluation did not occur in any of the settings. In contrast, on the 20-dimensional ellipsoid function, a larger setting of reduced the number of unsafe evaluations while maintaining the decrease rate in the best evaluation value. We consider the performance of the safe CMA-ES to be a somewhat sensitive to , and the suitable setting of depends on the problem.

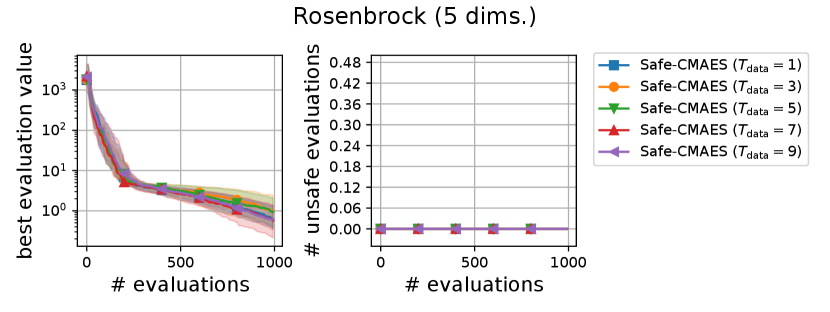

A.3. Result of Sensitivity Experiment of

We varied the setting of as . Figure 8 shows the transitions of the best evaluation value and the number of unsafe evaluations. The setting of did not significantly change the dynamics of the safe CMA-ES significantly on most of the problems. When , the unsafe evaluation occurred in the 5-dimensional ellipsoid function. On the 20-dimensional rosenbrock function, a lager reduced the unsafe evaluations. Overall, the recommended setting performed well across all problems.