CoBra: Complementary Branch Fusing Class and Semantic Knowledge for Robust Weakly Supervised Semantic Segmentation

Abstract

Leveraging semantically precise pseudo masks derived from image-level class knowledge for segmentation, namely image-level Weakly Supervised Semantic Segmentation (WSSS), remains challenging. While Class Activation Maps (CAMs) from CNNs have steadily contributed to the success of WSSS, the resulting activation maps often narrowly focus on class-specific parts (e.g., only the face of a human). On the other hand, recent works based on vision transformers (ViT) have shown promising results by using the self-attention mechanism to capture semantic parts, but they fail to capture complete class-specific details (e.g., they capture entire body parts of humans but also a dog nearby). In this work, we propose Complementary Branch (CoBra), a novel dual-branch framework consisting of two distinct architectures that provide each other with valuable complementary knowledge of class (from CNN) and semantics (from ViT). In particular, we learn a Class-Aware Projection (CAP) for the CNN branch and a Semantic-Aware Projection (SAP) for the ViT branch to explicitly fuse their complementary knowledge and facilitate a new type of extra patch-level supervision. CoBra fuses the complementary outputs of CNN and ViT to create robust pseudo masks that effectively integrate both class and semantic information. Extensive experiments qualitatively and quantitatively investigate how CNN and ViT complement each other on the PASCAL VOC 2012 and MS COCO 2014 datasets, showing state-of-the-art WSSS results. This includes not only the masks generated by our model but also the segmentation results obtained by using these masks as pseudo labels.

1 Introduction

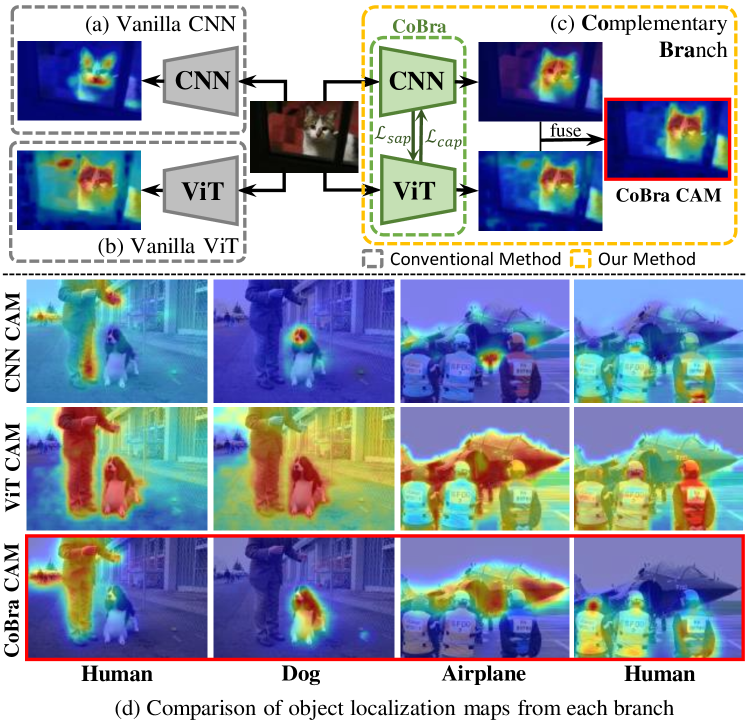

Among contemporary computer vision tasks, semantic segmentation in particular continues to face difficulty in acquiring accurate pixel-level labels, which require a considerable amount of laborious human supervision. In response to this dependence on costly full supervision, weakly supervised semantic segmentation (WSSS) seeks to leverage less costly labels as weak supervision, such as points [4, 17], scribbles [32, 54], and bounding boxes [22, 12, 13]. Of particular interest are image-level labels (e.g., the object classes in an image), which are already prevalent in existing vision datasets such as PASCAL VOC [16]. In this work, we specifically discuss WSSS using existing image-level class labels as weak supervision. Unlike other types of weak supervision, which naturally imply object locations, an image-level class label only tells what the objects are, not where they are. Thus, a line of prior works on (image-level) WSSS explored ways to use Class Activation Maps (CAMs) to generate object localization maps from CNN features [55, 39, 7, 26]. As shown in the first row of Fig. 1d, the objects are accurately localized using the provided image-level class labels (e.g., human, dog, and airplane), with the maps primarily concentrating on the parts that contribute most to the classification task (e.g., the face of the cat in Fig. 1a). While this provides a strong class-specific localization cue, the resulting pixel-level pseudo label often insufficiently covers the entire semantic region of the object (e.g., the full body of the cat in Fig. 1b). Interestingly, the recent surge of transformer-based models, namely the vision transformer (ViT) [14], has produced high-fidelity localization maps [18, 50, 38]. Unlike CNN CAMs, ViT localization maps use the self-attention mechanism to distinctly capture patch semantics, as seen in the middle row of Fig. 1d.
While this results in sufficient coverage of semantically sensible object regions (e.g., the full body of the cat in Fig. 1b) with accurate object boundaries, discriminating between multiple classes and separating foreground from background remain particularly challenging due to the lack of strong class-specific cues [18] (e.g., the cat and the window in Fig. 1b).

In this work, we actively examine the complementary characteristics of the aforementioned localization maps: the class-specific localization map from CNN (e.g., Fig. 1d, first row) and the semantic-aware localization map from ViT (e.g., Fig. 1d, middle row). To this end, we propose a novel Complementary Branch (CoBra) framework consisting of a CNN branch and a ViT branch that effectively exchange complementary knowledge. This dual-branch framework enjoys the best of both worlds, resulting in a localization map with a semantically precise boundary around a correctly identified object (e.g., Fig. 1d, last row).

In practice, as illustrated in Fig. 1c, properly integrating the knowledge of these two completely different architectures (and thus unshared weights) with distinct inductive biases requires special attention beyond simple regularization of the feature space. Related research [35] analytically investigates the complementarity between Multi-head Self-Attention (MSA) and Convolutional Layers (Conv) and modifies the structural design of the model itself to integrate the strengths of both architectures. In contrast, we suggest that the mutual complementarity between ViT and CNN features can be facilitated using a contrastive loss. To derive accurate representations of the desired class and semantic features, we construct a Class-Aware Projection (CAP) from the CNN and a Semantic-Aware Projection (SAP) from the ViT. Specifically, the CAP representation of each CNN CAM patch holds class-specific information about that patch and is subsequently informed by the ViT attention map. Conversely, the SAP representation of each ViT patch holds the semantic relations between patches and is subsequently informed by the CNN CAM features. This well-constructed knowledge guides each branch to minimize its shortcomings (i.e., the CNN's lack of semantic sensitivity and the ViT's lack of class specificity) and to generate class- and semantic-aware localization maps. More precisely, the ViT-based semantic attention map tends to associate semantically relevant patches while often disregarding the class (e.g., in Fig. 1d, each object is semantically well highlighted without knowing which class it belongs to).

Contributions. We provide the following contributions:

- We propose a dual-branch framework, namely CoBra, which fuses the complementary nature of CNN and ViT localization maps.

- We capture class and semantic knowledge as the Class-Aware Projection (CAP) and Semantic-Aware Projection (SAP), respectively, and employ contrastive learning so that they provide effective complementary guidance to the CNN and ViT branches in CoBra.

- We evaluate our model's image-level WSSS performance on the PASCAL VOC 2012 and MS COCO 2014 datasets and analyze the complementary relationship between class and semantic localization maps.

2 Related Works

2.1 CAM and CNN for WSSS

To leverage image-level class labels as weak supervision, CAM [55] has been the most widely used approach for generating pseudo masks. In particular, various CNN-based WSSS approaches have leveraged CAM to generate the initial seeds for pseudo labels [45, 21, 28, 15] and developed post-processing techniques to refine the resulting masks [23, 20, 49, 2, 1]. Some methods focus on expanding the existing seed to sufficiently cover the entire object [23, 20]. Similarly, additional semantic information has been used to propagate the class activation throughout the relevant areas [49, 2, 1]. Further, [26, 46] manipulated the input images in an adversarial manner to expand the seed by referring to the obtained CAMs. To integrate additional weak supervision, off-the-shelf saliency maps have also been used [21, 28]. As seen above, this line of work observed that CNN-based CAMs provide strong localization maps, but these maps primarily serve as seeds that narrowly highlight only the most crucial object parts from the classification perspective.

2.2 ViT in WSSS

Originating from the Transformer [43] in natural language processing, the vision transformer (ViT) [14] has made notable breakthroughs in traditional computer vision tasks [33, 5]. Analogous to word tokens, ViT partitions an image into patches to construct its tokens, and its self-attention mechanism effectively captures the patch-wise relations within the image. This mechanism provides strong semantic cues of objects, which are particularly useful for WSSS [18, 50, 38]. For instance, TS-CAM [18] extracts an attention map that highlights regions of strong attention to the object being classified. Similarly, AFA [38] is an end-to-end framework using ViT as a backbone that refines the initial pseudo labels via semantic affinity from multi-head self-attention. These methods, however, cannot derive an attention map for each class since the underlying ViT relies on a single, generic class token. MCTformer [50] proposed a multi-class token framework to generate class-specific object localization maps, but these maps still tend to include background and incorrect classes.

2.3 CNN & ViT in WSSS

Given the complementary relationship between CNN and ViT demonstrated in prior research [35], it is not surprising that subsequent works have begun integrating both architectures. For instance, straightforward hybrid approaches either use the embedding output of one architecture as the input to the other [36] or simply use the CNN to compute the CAM based on the token outputs of the ViT [50]. On the other hand, few prior works explicitly attempt to make use of the localization maps of both CNN and ViT. In particular, [36, 29] used multiple CNN and transformer branches to retain the representation capability of local features and global representations to the maximum extent. Still, despite these impressive methodological developments, it remains unclear how two such models with vastly distinct inductive biases can bring complementary benefits to image-level WSSS.

3 Methods

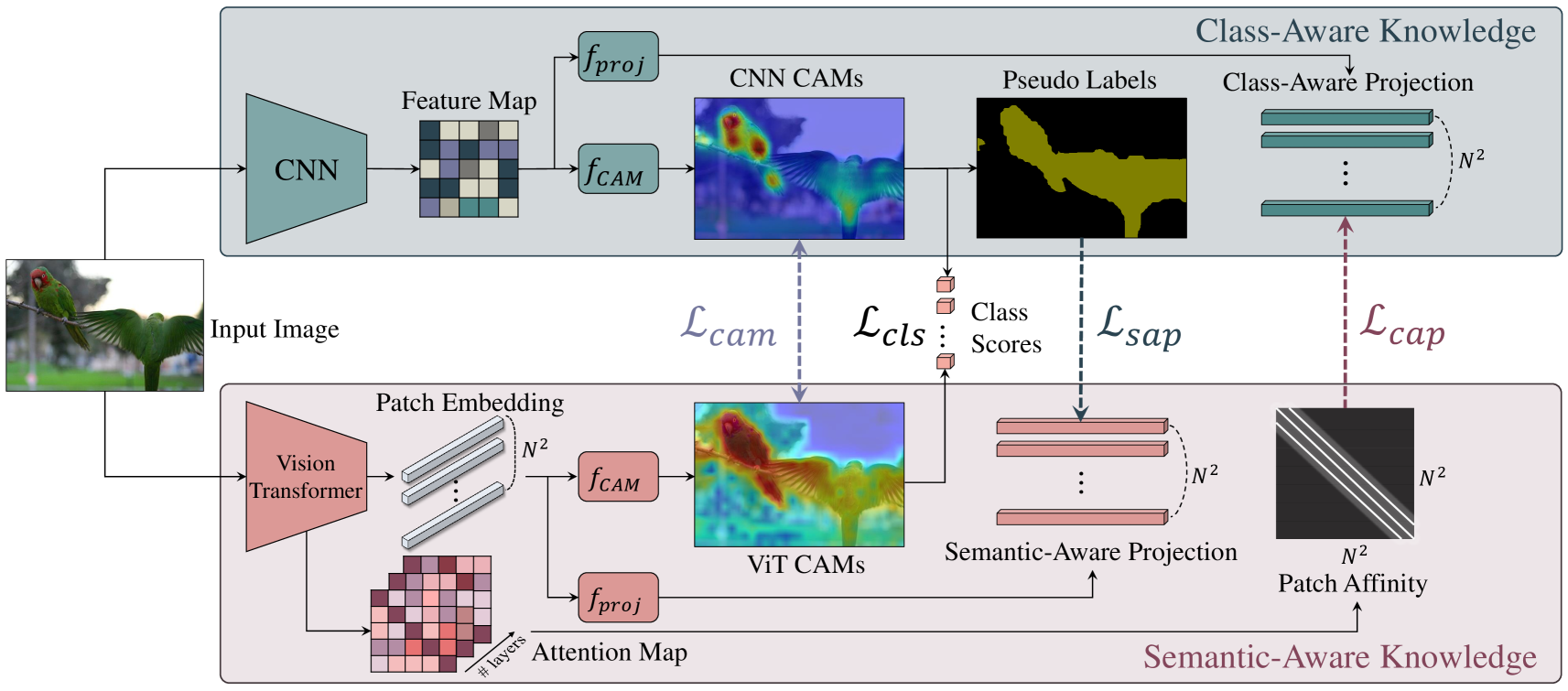

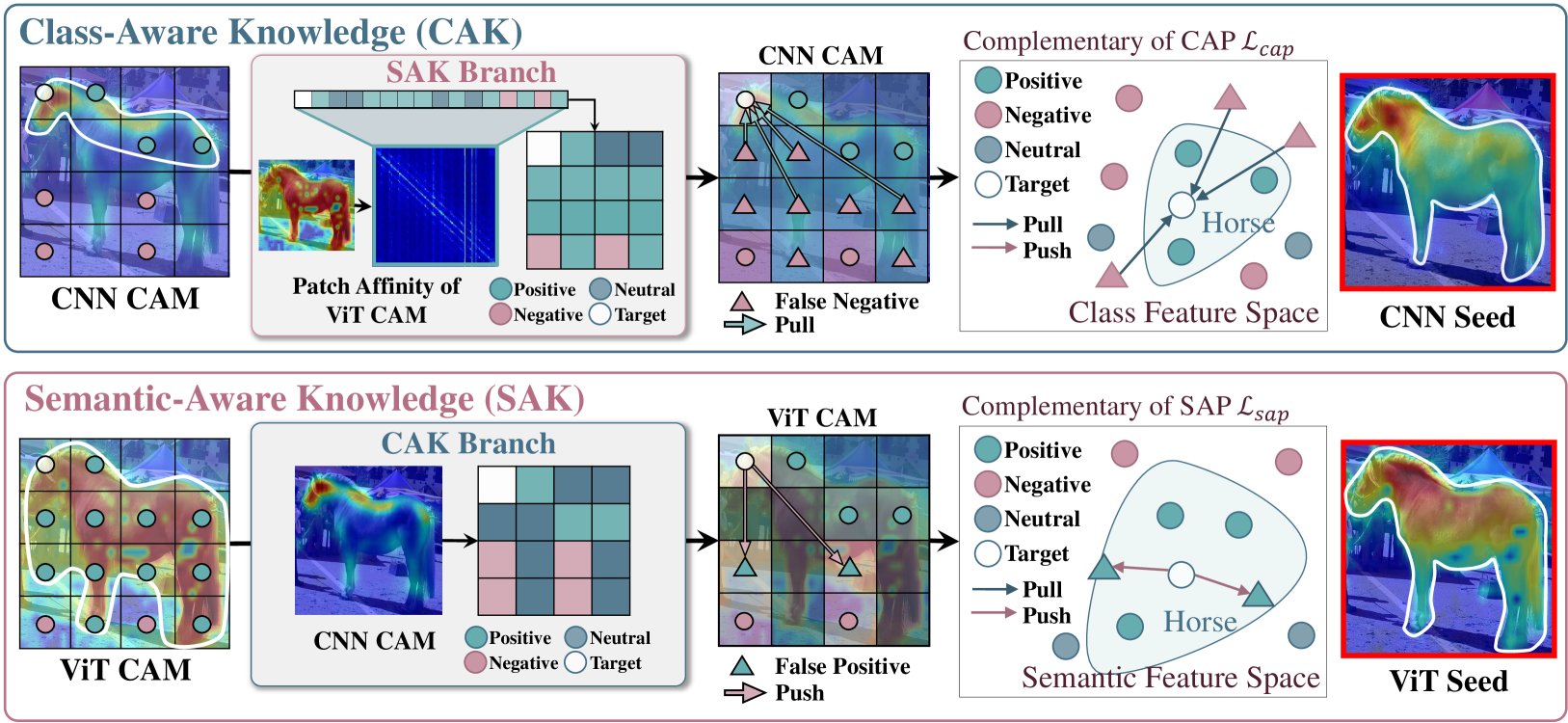

In this section, we describe our model CoBra (shown in Fig. 2). In Sec. 3.1, we provide an overview of our model, motivating the dual-branch setup consisting of the Class-Aware Knowledge (CAK) Branch (Fig. 2, top) and the Semantic-Aware Knowledge (SAK) Branch (Fig. 2, bottom). In Sec. 3.2, we describe the architecture of each branch and the outputs required for complementary knowledge infusion. In Sec. 3.3, we formulate the complementary branch losses involving the Class-Aware Projection (CAP) and Semantic-Aware Projection (SAP). Finally, in Sec. 3.4, we describe a straightforward fusion method that integrates the knowledge of both branches into the final object localization map used for pseudo mask generation.

3.1 Overview and Motivation

We first motivate our Complementary Branch (CoBra) setup consisting of the Class-Aware Knowledge (CAK) Branch with CNN and the Semantic-Aware Knowledge (SAK) Branch with ViT.

Class-Aware Knowledge Branch (CAK). Shown as the top branch in Fig. 2, the CNN forms the basis of several components that carry strong class-related cues. For instance, the CNN CAMs accurately localize the object with very few incorrectly localized regions (e.g., Fig. 1a, with few class false positives). In other words, the CNN-based CAK branch demonstrates high class precision. Contrary to this benefit, the CNN CAMs tend to lack sufficient coverage of the remaining semantically relevant object parts (i.e., few semantic true positives). That is, the CAK branch on its own has low semantic sensitivity for generating pixel-level pseudo labels and thus requires additional semantic cues.

Semantic-Aware Knowledge Branch (SAK). Shown as the bottom branch in Fig. 2, the ViT and its self-attention mechanism form the basis of semantically strong representations. For instance, semantically persistent object regions are thoroughly captured in the ViT CAMs (e.g., Fig. 1b, with vivid boundaries of the localized objects). In other words, the ViT-based SAK branch demonstrates high semantic sensitivity. Contrary to this valuable trait, we also observe considerable falsely localized areas (i.e., many class false positives), such as the background and incorrect classes. In other words, the SAK branch on its own has low class precision for generating localization maps of specific objects and thus requires additional class cues.

Complementary Branch (CoBra). As described above, the CAK branch has high class precision and low semantic sensitivity, while the SAK branch has low class precision and high semantic sensitivity. Thus, the problem boils down to deriving an ideal object localization map with both high class precision and high semantic sensitivity. This motivates the complementary learning of our framework, where each branch passes its beneficial, complementary knowledge to its counterpart. In particular, the CAK branch provides class-aware knowledge from its pseudo labels to the SAP of the SAK branch (Fig. 2). Conversely, the SAK branch provides semantic-aware knowledge from its patch affinity map to the CAP of the CAK branch (Fig. 2). In Sec. 3.3, we show how the InfoNCE loss [34] is adapted for this process. This complementary knowledge guides each branch to minimize its weaknesses while preserving its strengths.

3.2 Branch Details

In this section, we provide details of each branch, including the backbone architecture and its various outputs. Both the CAK and SAK branches take the same input image.

CAK Branch. The CAMs are generated with the last downsampling layer to maintain resolution after passing through four layer blocks. Using the resulting feature map, we generate the following outputs (all shown in the top branch of Fig. 2):

1. CNN CAMs: A classification layer takes the feature map to generate the CNN CAMs. After global average pooling, the CNN CAMs produce the image-level class scores for the classification loss. The CNN CAMs become the basis of the CNN-based object localization map.

2. Pseudo Labels: The CNN CAMs are softmaxed on a class-wise basis to compute the probability of each positive class. The class with the highest probability is then selected via argmax to produce the pseudo labels.

3. Class-Aware Projection (CAP): Using a linear projection head, we generate a CAP representation for each CAM pixel. Each CAP representation thus specifically entails the class-aware knowledge from the CNN CAMs. The semantic knowledge from the SAK branch refines these representations in Sec. 3.3.
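A minimal NumPy sketch of the pseudo-label and CAP outputs above follows. It assumes CAMs of shape (C, H, W) and reads "class-wise softmax" as a softmax across the image's positive classes at each location (one plausible reading). The CAP head is a hypothetical weight matrix `weight` followed by L2 normalization, a common choice for contrastive projections that the text does not state. Function names are illustrative, not the authors' code.

```python
import numpy as np


def cak_pseudo_labels(cams, positive_classes):
    """Assign each location its most probable positive class.

    cams: (C, H, W) CNN CAMs; positive_classes: class ids present in the image.
    Softmax is taken across the positive classes at each location (assumed reading).
    """
    pos = np.asarray(positive_classes)
    scores = cams[pos]                                   # (P, H, W): positive classes only
    scores = scores - scores.max(axis=0, keepdims=True)  # stabilize the softmax
    probs = np.exp(scores)
    probs /= probs.sum(axis=0, keepdims=True)            # per-location class probabilities
    return pos[probs.argmax(axis=0)]                     # map back to original class ids


def class_aware_projection(cams, weight):
    """Hypothetical linear CAP head: project each pixel's C-dim CAM vector, then L2-normalize.

    cams: (C, H, W); weight: (C, D). Returns (H*W, D) unit-norm vectors.
    """
    c, h, w = cams.shape
    pixels = cams.reshape(c, h * w).T                    # one C-dim vector per CAM pixel
    z = pixels @ weight
    norms = np.maximum(np.linalg.norm(z, axis=1, keepdims=True), 1e-8)
    return z / norms
```

Because softmax is monotonic, the argmax equals the argmax of the raw positive-class scores; the probabilities matter only if they are later thresholded or used as confidences.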

SAK Branch. The ViT in our model follows its standard input convention ($N$ patches plus one class token). The resulting tokens, whose embedding dimension is predefined, are transformed into three sets of vectors: queries $Q$, keys $K$, and values $V$. We then compute a new set of vectors with the standard attention function $\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(QK^{\top}/\sqrt{d_k}\right)V$, where $d_k$ is the dimension of the keys. This common self-attention mechanism is the basis of ViT-based models, from which an attention map of size $(N+1)\times(N+1)$ is extracted (Attention Map in Fig. 2). We then generate the following outputs from the SAK branch:

1. ViT CAMs: The final ViT tokens, excluding the class token (Patch Embedding in Fig. 2), are fed to a classification layer to generate the ViT CAMs.

2. Semantic-Aware Projection (SAP): Using a linear projection head in the SAK branch, we generate a SAP representation for each patch. Each SAP representation specifically contains the semantic-aware knowledge of the ViT tokens. The class-aware knowledge from the CAK branch refines these representations in Sec. 3.3.

3. Patch Affinity: A unique product of ViT is the patch affinity (Patch Affinity in Fig. 2), derived from the attention map. Specifically, the patch affinity is a patch-by-patch attention map obtained by averaging the attention maps of the ViT layers and taking the submatrix corresponding to pairwise patches (i.e., excluding the class-token row and column).
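The attention map and patch affinity described above can be sketched in NumPy as follows. This assumes single-head attention per layer (or heads already averaged) and the class token at index 0, as in the standard ViT/DeiT token ordering. The function names are hypothetical, and the sketch only illustrates the scaled dot-product weights, the layer averaging, and the slicing, not a full ViT.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_map(q, k):
    """Attention weights softmax(Q K^T / sqrt(d_k)) for one layer.

    q, k: (N+1, d_k) queries and keys, class token at index 0 (assumed).
    """
    d_k = q.shape[-1]
    return softmax(q @ k.T / np.sqrt(d_k), axis=-1)


def patch_affinity(attn_maps):
    """Average (L, N+1, N+1) attention maps over layers and drop the class token -> (N, N)."""
    mean_attn = attn_maps.mean(axis=0)
    return mean_attn[1:, 1:]  # keep only patch-to-patch entries
```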

3.3 Complementary Branch Losses

In this section, we describe the losses involved in CoBra. We first explain two losses necessary for basic functionality. Then, we discuss the complementary branch losses which explicitly provide class-aware and semantic-aware knowledge to the complementary branch in a “one-way” manner.

3.3.1 Class and CAM Losses

The CNN CAMs and ViT CAMs each make image-level class predictions by minimizing softmax losses, which we combine into a single classification loss. In addition, after the first epoch of training, we enforce a simple consistency between the CNN CAMs and ViT CAMs via an L1 loss between them. In practice, since the localization maps are generated for the positive classes, an extra loss on the CNN and ViT CAMs of the positive classes further emphasizes their consistency.
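A minimal sketch of this CAM consistency term follows. It assumes both CAMs have already been resized to the same (C, H, W) shape, uses mean-reduced L1 distances, and weights the all-class and positive-class terms equally. These choices and the function name are assumptions, not the paper's exact formulation; enabling the term only after the first epoch is left to the training loop.

```python
import numpy as np


def cam_consistency_loss(cnn_cams, vit_cams, positive_classes):
    """L1 consistency between CNN and ViT CAMs, both (C, H, W) at the same resolution.

    Adds an extra L1 term restricted to the image's positive classes (equal weights assumed).
    """
    l1_all = np.abs(cnn_cams - vit_cams).mean()             # consistency over all classes
    pos = np.asarray(positive_classes)
    l1_pos = np.abs(cnn_cams[pos] - vit_cams[pos]).mean()   # emphasize positive classes
    return float(l1_all + l1_pos)
```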

3.3.2 Refining Class-Aware Projection

The key feature of CoBra is its complementary branch losses, which inject knowledge from one branch into the other. We first describe how CAP is refined using the semantic-aware knowledge from the patch affinity in the SAK branch. The patch affinity matrix contains the semantic relations between all pairs of patches; for instance, its $i$-th row shows the semantic relationship between the $i$-th patch and all patches. Thus, the patch affinity allows us to identify, with respect to the $i$-th patch, the semantically most similar patches as positive, the least similar as negative, and the rest as neutral. This is illustrated in the left block of Fig. 3, which shows how the first row of the patch affinity selects the patches semantically closest to the target patch (i.e., parts related to the horse). This becomes an important cue for refining the CNN CAM, which often excludes semantically relevant patches (and thus has low semantic sensitivity). Once each patch is assigned as positive, negative, or neutral, we refine CAP such that, for each positive target patch, (1) the CAP representations of the other positive patches are pulled towards the target's CAP representation and (2) the CAP representations of the negative patches are pushed away from it. Specifically, for each target patch projection, we compute the following CAP loss inspired by InfoNCE [34]:

| (1) |

where the similarities are scaled by a temperature term. As seen in the top row of Fig. 3, the CNN CAM has low semantic sensitivity, e.g., weakly localizing the horse regions other than the head. The CAP loss refines the CAP representations such that the CNN CAM leverages the semantically positive patches (green circles) to further localize the remaining object parts. That is, the semantic-aware knowledge of the SAK branch is provided as semantically relevant patches, and this highly sensitive semantic information refines the CAP representations to reduce false negatives, in effect improving semantic sensitivity.
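To make the CAP refinement concrete, here is a hedged NumPy sketch. For one target patch, positives and negatives are the top-k and bottom-k entries of its patch-affinity row (excluding the target itself). The loss is a standard InfoNCE form averaged over positives, using dot products of L2-normalized projections divided by a temperature `tau`. The exact normalization of Eq. (1) is not reproduced here, so this is an illustrative InfoNCE variant rather than the paper's formula. The names and the `tau=0.1` default are assumptions.

```python
import numpy as np


def select_from_affinity(affinity_row, k_pos, k_neg, target):
    """Return the k_pos most similar and k_neg least similar patches to `target`."""
    order = np.argsort(affinity_row)[::-1]  # indices by descending affinity
    order = order[order != target]          # never pair the target with itself
    return order[:k_pos], order[::-1][:k_neg]


def info_nce(z, target, pos_idx, neg_idx, tau=0.1):
    """InfoNCE-style loss for one target: pull positives toward z[target], push negatives away.

    z: (N, D) L2-normalized projections. The loss is averaged over positives (assumption).
    """
    anchor = z[target]
    pos_sim = z[pos_idx] @ anchor / tau
    neg_sim = z[neg_idx] @ anchor / tau
    losses = []
    for s in pos_sim:
        logits = np.concatenate(([s], neg_sim))
        m = logits.max()                               # stable log-sum-exp
        log_denom = m + np.log(np.exp(logits - m).sum())
        losses.append(log_denom - s)                   # -log softmax of the positive
    return float(np.mean(losses))
```

With well-separated embeddings the loss is near zero when the selected positives point the same way as the target, and it grows when positives and negatives are swapped.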

3.3.3 Refining Semantic-Aware Projection

We now describe how SAP is refined using the class-aware knowledge from the pseudo labels in the CAK branch. Specifically, the pseudo label is a matrix in which each entry holds the class label at the corresponding CNN CAM location. For instance, if a patch in the CNN CAM has the strongest activation response to the class horse, the corresponding entry of the pseudo label is assigned as horse. This allows us to identify the patches corresponding to specific classes. In Fig. 3, the CNN CAM of a horse is used to identify positive patches (i.e., high activation score), negative patches (i.e., low activation score), and neutral patches (i.e., neither high nor low horse score). Analogous to the CAP loss, we define the SAP loss, which pulls the positive SAP (green circles) towards the target SAP (white circle) and pushes the negative SAP (red circles) away from the target:

| (2) |

where the similarities are again scaled by a temperature term. As shown in the bottom row of Fig. 3, for a positive target patch (white patch) in the SAK branch, SAP is updated to pull the positives (green circles) towards, and push the negatives (red circles) away from, the positive target SAP features. The resulting SAP-refined CAM is also shown in Fig. 3, where the false positive ViT CAM patches (i.e., patches in the ViT CAM incorrectly localizing the horse) are adjusted to avoid localizing non-horse regions. In other words, the class-aware knowledge of the CAK branch is provided as class-specific patches, and this high-precision class information refines the SAP representations to reduce false class positives, in effect improving the class precision of the ViT CAM. In Sec. 4.2, we describe how we choose the numbers of positive and negative patches.
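Selecting SAP positives and negatives from the CAK outputs can be sketched in a similar way. The assumptions are as follows. CAM scores are flattened to (C, N) at patch resolution. Positives are the highest-scoring patches that share the target's pseudo label. Negatives are the lowest-scoring patches, for the target's class, among patches with a different pseudo label. The resulting index sets would then enter an InfoNCE-style loss computed on the SAP projections. The function name is hypothetical.

```python
import numpy as np


def select_from_pseudo_labels(cams, pseudo_labels, target, k_pos, k_neg):
    """Pick SAP positives/negatives for `target` from CNN CAM scores and pseudo labels.

    cams: (C, N) CNN CAM scores over N patches; pseudo_labels: (N,) class ids.
    """
    c = pseudo_labels[target]          # class assigned to the target patch
    scores = cams[c]                   # every patch's score for that class
    idx = np.arange(len(scores))
    same = np.flatnonzero((pseudo_labels == c) & (idx != target))
    pos = same[np.argsort(scores[same])[::-1][:k_pos]]   # most confident same-class patches
    other = np.flatnonzero(pseudo_labels != c)
    neg = other[np.argsort(scores[other])[:k_neg]]       # least class-c-like patches
    return pos, neg
```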

Final Loss Function. We aggregate the per-patch CAP and SAP losses over all target patches. The full objective function, combining the classification, consistency, CAP, and SAP losses, is then defined as:

| (3) |

3.4 Seed and Mask Generation

After training our model, we generate a seed (i.e., CAM) for each image, which is then used to create a pseudo mask. This mask serves as the final segmentation label, derived from image-level weak supervision. Note that each branch generates its own CAM; thus, we discuss the available options for the best mask generation.

CNN CAM. The CAM generated by the CAK branch is made after global average pooling. Although it has become much more semantically precise due to the complementary fusion with SAK, it still slightly lacks the precision of the ViT CAM.

ViT CAM. To generate the CAM from the SAK branch, we use the semantic-agnostic attention map [18] derived from the attention map and obtain the localization map as its element-wise product with the ViT CAMs. The complementary guidance from the CAK branch yields more accurate class-specific localization maps in this ViT CAM.
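Below is a minimal sketch of the TS-CAM-style coupling described above. It assumes the semantic-agnostic map is the class token's attention to the patch tokens averaged over layers, and that the ViT CAMs are given per patch as (C, N). The names and shapes are illustrative assumptions.

```python
import numpy as np


def vit_localization_map(vit_cams, attn_maps, grid):
    """Couple per-class ViT CAMs with a semantic-agnostic attention map.

    vit_cams: (C, N) per-class scores over N patch tokens.
    attn_maps: (L, N+1, N+1) attention maps, class token at index 0 (assumed).
    grid: (h, w) with h * w == N.
    """
    cls_attn = attn_maps.mean(axis=0)[0, 1:]          # class-token -> patch attention, (N,)
    coupled = vit_cams * cls_attn[None, :]            # element-wise product, shared by all classes
    return coupled.reshape(vit_cams.shape[0], *grid)  # (C, h, w) localization maps
```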

Fusion CAM. To overcome the intrinsic differences between CNN and ViT while exploiting the better CAMs, we employ a simple CAM fusion mechanism that merges the CNN CAM and the ViT CAM, taking advantage of both. This simple but effective mechanism balances the two sources of complementary knowledge by taking a simple average of the two CAMs together with a maximization, explicitly capturing both strong class and semantic knowledge. The fused CAM is further used to generate the discretized mask via common denseCRF post-processing. More details are in Sec. 4.2.

4 Experiments

| Method | Venue | Seed mIoU (%) | Mask mIoU (%) |
|---|---|---|---|
| PSA [2] | CVPR’18 | 48.0 | 61.0 |
| IRN [49] | CVPR’19 | 48.8 | 66.3 |
| Chang et al. [6] | CVPR’20 | 50.9 | 63.4 |
| CDA [40] | ICCV’21 | 55.4 | 63.4 |
| SEAM [45] | CVPR’20 | 55.4 | 63.6 |
| AdvCAM [26] | CVPR’21 | 55.6 | 68.0 |
| CPN [44] | ICCV’21 | 57.4 | 67.8 |
| MCTformer [50] | CVPR’22 | 61.7 | 69.1 |
| ReCAM [10] | CVPR’22 | 56.6 | 70.5 |
| AEFT [51] | ECCV’22 | 56.0 | 71.0 |
| ACR [25] | CVPR’23 | 60.3 | 72.3 |
| ACR+ViT [25] | CVPR’23 | 65.5 | 70.9 |
| Ours | - | 62.3 | 73.5 |

Dataset. We evaluate our method and other baselines on PASCAL VOC 2012 [16] and MS COCO 2014. PASCAL VOC 2012 contains 20 foreground classes and 1 background class, and consists of 1,464 training, 1,449 validation, and 1,456 test images. Following the widely used convention in the literature, an augmented set of 10,582 images is used as the training set. MS COCO 2014 consists of 80K training and 40K validation images and contains 80 object classes and one background class for semantic segmentation.

Evaluation Metrics. We compute the mean Intersection-over-Union (mIoU) to assess the segmentation performance on the validation (val) and test sets. In particular, the test set is evaluated on the PASCAL VOC online evaluation server.
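For reference, mIoU is typically computed from a confusion matrix accumulated over the whole evaluation set rather than averaged per image. The sketch below assumes integer label maps and the PASCAL VOC convention of marking boundary and unlabeled pixels with 255 as ignored. It also excludes classes absent from both prediction and ground truth from the mean, which is one common convention. The function name is illustrative.

```python
import numpy as np


def mean_iou(pred, gt, num_classes, ignore_index=255):
    """Mean IoU over classes from a confusion matrix; ignore_index pixels are skipped."""
    mask = gt != ignore_index
    # Row = ground-truth class, column = predicted class.
    idx = num_classes * gt[mask].astype(int) + pred[mask].astype(int)
    conf = np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)
    tp = np.diag(conf)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp   # TP + FP + FN per class
    valid = union > 0                                  # skip classes absent everywhere
    return float((tp[valid] / union[valid]).mean())
```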

Implementation Details. We use ResNet152 [19], following IRN [49], and DeiT-S/16 [42], both pre-trained on ImageNet, for the CNN and ViT, respectively. The training images are randomly resized and cropped to a fixed size. Following prior works on semantic segmentation, we use DeepLabV3+ [8] and SegFormer [48] with ResNet101 and MiT-B2 backbones, respectively. During testing, we use multi-scale inference and denseCRF post-processing. All experiments were run on an NVIDIA RTX A6000 GPU. Details are in the supplement.

| Method | Venue | Backbone | Sup. | Val mIoU (%) | Test mIoU (%) |
|---|---|---|---|---|---|
| EDAM | CVPR’21 | ResNet101 | I+S | 70.9 | 70.6 |
| L2G [21] | CVPR’22 | ResNet101 | I+S | 72.1 | 71.7 |
| EPS [28] | CVPR’21 | ResNet101 | I+S | 71.0 | 71.8 |
| OOA* [49] | ICCV’19 | ResNet101 | I | 65.2 | 66.4 |
| IRN [49] | CVPR’19 | ResNet50 | I | 63.5 | 64.8 |
| SEAM [45] | CVPR’20 | ResNet38 | I | 64.5 | 65.7 |
| CONTA [52] | NeurIPS’20 | WideResNet38 | I | 66.1 | 66.7 |
| CDA [40] | ICCV’21 | ResNet38 | I | 66.1 | 66.8 |
| ECS-Net [41] | ICCV’21 | ResNet38 | I | 66.6 | 67.6 |
| CPN [53] | ICCV’21 | WideResNet38 | I | 67.8 | 68.5 |
| AdvCAM [26] | CVPR’21 | ResNet101 | I | 68.1 | 68.0 |
| ReCAM [10] | CVPR’22 | ResNet101 | I | 68.5 | 68.4 |
| MCTformer [50] | CVPR’22 | WideResNet38 | I | 71.9 | 71.6 |
| AMN [27] | CVPR’22 | ResNet101 | I | 70.7 | 70.6 |
| AEFT [51] | ECCV’22 | ResNet101 | I | 70.0 | 71.3 |
| BECO [37] | CVPR’23 | ResNet101 | I | 72.1 | 71.8 |
| OCR+MCTformer [11] | CVPR’23 | WideResNet38 | I | 72.7 | 72.0 |
| ACR [25] | CVPR’23 | WideResNet38 | I | 71.9 | 71.9 |
| ACR+ViT [25] | CVPR’23 | WideResNet38 | I | 72.4 | 72.4 |
| Ours | - | ResNet101 | I | 74.0 | 73.9 |
| AFA [47] | CVPR’22 | MiT-B1 | I | 66.0 | 66.3 |
| BECO [37] | CVPR’23 | MiT-B2 | I | 73.7 | 73.5 |
| Ours | - | MiT-B2 | I | 74.3 | 74.2 |

| CAK branch | SAK branch | mIoU (%) | |||

| 48.8 | |||||

| 41.3 | |||||

| 53.2 | |||||

| 56.4 | |||||

| 59.2 | |||||

| 61.2 | |||||

| 62.3 | |||||

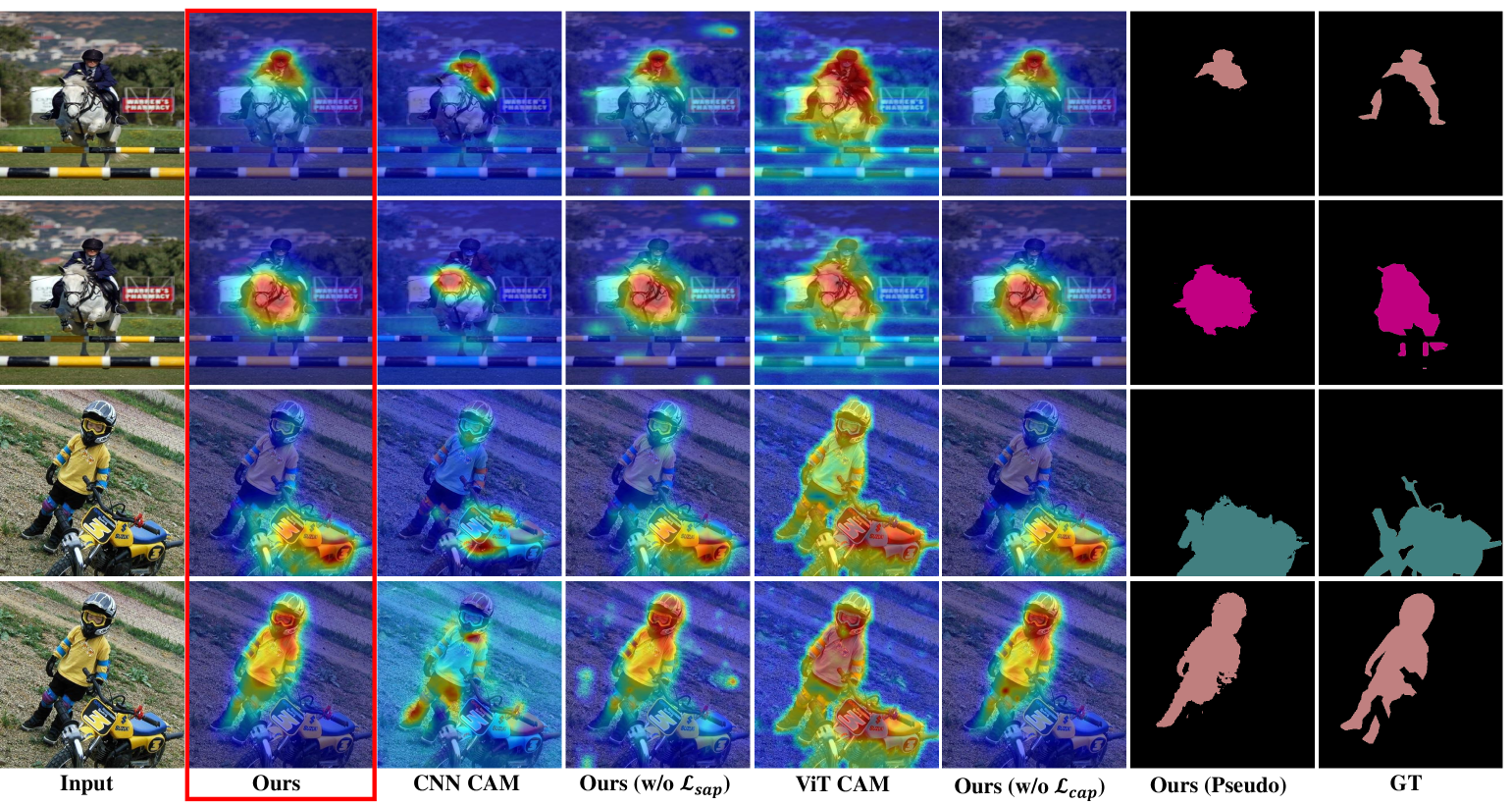

4.1 Evaluations

Seed and Mask. Our work follows the common pipeline that generates object localization maps (seeds) and then produces pseudo ground-truth semantic segmentation labels (masks) with IRN [49]. Thus, we first evaluate the seed and mask quality by computing the mIoU against the ground-truth segmentation labels. In Table 1, CoBra achieves the second-best seed (62.3%) without CRF and the best mask (73.5%) compared to existing state-of-the-art methods. Even without CRF, our mask achieves 72.5% mIoU, which still surpasses other methods that use CRF (see the supplement).

Semantic Segmentation. The generated masks are used as pseudo labels to train a segmentation network, whose performance is then evaluated on the PASCAL VOC 2012 val and test sets. As shown in Table 2, CoBra achieves state-of-the-art results of 74.0% and 73.9% on the val and test sets, respectively, with the ResNet101 backbone, and 74.3% and 74.2% with the MiT-B2 backbone. Detailed results on MS COCO are provided in the supplement.

4.2 Ablation Studies

Effect of Cross Complementary Branch. We highlight the importance of our dual-branch scheme by evaluating the model with only one branch at a time. Specifically, when our model operates with a single branch and without the cross-complementary losses, the CAK and SAK branches correspond closely to IRNet [49] and TS-CAM [18], respectively. We make minimal changes to properly produce the localization maps and assess their quality. As shown in Table 3 and Fig. 4, the single-branch setups yield lower mIoU than our dual-branch setup.

| | 5 | 10 | 15 | 20 |
|---|---|---|---|---|
| 5 | 62.3 | 61.9 | 59.1 | 56.8 |
| 10 | 61.3 | 60.0 | 58.4 | 55.7 |
| 15 | 59.1 | 58.3 | 56.3 | 54.6 |
| 20 | 58.9 | 57.2 | 55.4 | 53.8 |

| Source for Mask | Mask mIoU (%) |

| 71.6 | |

| 71.5 | |

| 72.2 | |

| 72.6 | |

| 73.5 | |

Loss Functions. We examine the impact of the various loss functions in detail. Table 3 shows that the CAP and SAP losses each bring noticeable improvements, while using all three losses (CAP, SAP, and consistency) performs best. This further implies that the complementary class and semantic knowledge provided by the CAP and SAP losses brings significant benefits, while the consistency loss retains the similarity between the CNN and ViT CAMs.

Choosing Positive and Negative Patches. Recall that the SAP loss (Eq. (2)) in the SAK branch learns from the complementary CAK pseudo label patches. Specifically, the SAP positives are the $k^{+}_{\mathrm{SAP}}$ top-scoring positive pseudo label patches from the CAK branch, and the SAP negatives are the $k^{-}_{\mathrm{SAP}}$ bottom-scoring negative pseudo label patches. Similarly, the CAP loss (Eq. (1)) in the CAK branch learns from the complementary SAK patch affinity map: the CAP positives are the $k^{+}_{\mathrm{CAP}}$ top-scoring patches in the patch affinity map, and the CAP negatives are the $k^{-}_{\mathrm{CAP}}$ bottom-scoring ones.

In this study, we analyze how $k^{+}_{\mathrm{CAP}}$, $k^{-}_{\mathrm{CAP}}$, $k^{+}_{\mathrm{SAP}}$, and $k^{-}_{\mathrm{SAP}}$ affect the overall outcome. As observed before, the patches with positive pseudo labels from the CAK branch are highly precise; that is, they are highly likely to be true positives. Thus, we set $k^{+}_{\mathrm{SAP}} = 20$ (approximately 10% of the total patches) to maximally incorporate the confident knowledge about true positives from the CAK branch into the SAP loss.

Similarly, the patches with negative patch affinity from the SAK branch are also highly precise; that is, they are highly likely to be true negatives. Thus, we also set $k^{-}_{\mathrm{CAP}} = 20$ to maximally incorporate the confident knowledge about true negatives from the SAK branch into the CAP loss.

In Table 4, we show the seed mIoU for various combinations of $k^{+}_{\mathrm{CAP}}$ and $k^{-}_{\mathrm{SAP}}$, with $k^{+}_{\mathrm{SAP}}$ and $k^{-}_{\mathrm{CAP}}$ fixed. We observe that $k^{+}_{\mathrm{CAP}} = k^{-}_{\mathrm{SAP}} = 5$ gives the best result (62.3%), implying that leveraging a relatively small number of competent patches brings robustness to our model.

Ensuring Branch Benefits. It is crucial to ensure that the branches benefit each other while suppressing each other's drawbacks. Empirically, the consistency loss (53.2%), which mutually influences both branches, outperforms both single-branch results (48.8% and 41.3%), indicating that the benefits outweigh any potential flow of drawbacks. In addition, the CAP and SAP losses mechanically ensure the “one-way” flow of benefits from one branch to the other by selectively updating only the receiving branch. Together with the use of only the top competent patches, Table 3 quantitatively confirms that these losses robustly bring improvements.

Seed Fusion for Mask Generation. By construction, our model provides two complementary seeds, namely the CNN CAM and the ViT CAM, which may be carefully fused for robust outcomes. As discussed in Sec. 3.4, we experimented with various combinations of the seeds (Table 5) and found that combining the two CAMs yields better results than using either individually. Considering the unique characteristics of each CAM, the best result (73.5%) was obtained with the Fusion CAM described in Sec. 3.4. More details are in the supplement.

5 Conclusion

In this work, we proposed a novel dual-branch framework that fuses complementary knowledge from CNN and vision transformer for WSSS. Quantitative results show state-of-the-art performance on the PASCAL VOC 2012 and MS COCO 2014 datasets, while qualitative results demonstrate the significance of properly exchanging class- and semantic-aware knowledge via the Class-Aware Projection and Semantic-Aware Projection. We particularly hope this work brings new insights toward considering CNN and vision transformers as two equally crucial, complementary counterparts.

References

- Ahn and Kwak [2018a] Jiwoon Ahn and Suha Kwak. Learning pixel-level semantic affinity with image-level supervision for weakly supervised semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4981–4990, 2018a.

- Ahn and Kwak [2018b] Jiwoon Ahn and Suha Kwak. Learning pixel-level semantic affinity with image-level supervision for weakly supervised semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4981–4990, 2018b.

- Bae et al. [2020] Wonho Bae, Junhyug Noh, and Gunhee Kim. Rethinking class activation mapping for weakly supervised object localization. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XV 16, pages 618–634. Springer, 2020.

- Bearman et al. [2016] Amy Bearman, Olga Russakovsky, Vittorio Ferrari, and Li Fei-Fei. What’s the point: Semantic segmentation with point supervision. In European conference on computer vision, pages 549–565. Springer, 2016.

- Carion et al. [2020] Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov, and Sergey Zagoruyko. End-to-end object detection with transformers. In European conference on computer vision, pages 213–229. Springer, 2020.

- Chang et al. [2020] Yu-Ting Chang, Qiaosong Wang, Wei-Chih Hung, Robinson Piramuthu, Yi-Hsuan Tsai, and Ming-Hsuan Yang. Weakly-supervised semantic segmentation via sub-category exploration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8991–9000, 2020.

- Chattopadhay et al. [2018] Aditya Chattopadhay, Anirban Sarkar, Prantik Howlader, and Vineeth N Balasubramanian. Grad-cam++: Generalized gradient-based visual explanations for deep convolutional networks. In 2018 IEEE winter conference on applications of computer vision (WACV), pages 839–847. IEEE, 2018.

- Chen et al. [2018] Liang-Chieh Chen, Yukun Zhu, George Papandreou, Florian Schroff, and Hartwig Adam. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European conference on computer vision (ECCV), pages 801–818, 2018.

- Chen et al. [2022a] Qi Chen, Lingxiao Yang, Jian-Huang Lai, and Xiaohua Xie. Self-supervised image-specific prototype exploration for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4288–4298, 2022a.

- Chen et al. [2022b] Zhaozheng Chen, Tan Wang, Xiongwei Wu, Xian-Sheng Hua, Hanwang Zhang, and Qianru Sun. Class re-activation maps for weakly-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 969–978, 2022b.

- Cheng et al. [2023] Zesen Cheng, Pengchong Qiao, Kehan Li, Siheng Li, Pengxu Wei, Xiangyang Ji, Li Yuan, Chang Liu, and Jie Chen. Out-of-candidate rectification for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 23673–23684, 2023.

- Dai et al. [2015] Jifeng Dai, Kaiming He, and Jian Sun. Boxsup: Exploiting bounding boxes to supervise convolutional networks for semantic segmentation. In Proceedings of the IEEE international conference on computer vision, pages 1635–1643, 2015.

- Dong et al. [2021] Bowen Dong, Zitong Huang, Yuelin Guo, Qilong Wang, Zhenxing Niu, and Wangmeng Zuo. Boosting weakly supervised object detection via learning bounding box adjusters. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 2876–2885, 2021.

- Dosovitskiy et al. [2020] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- Du et al. [2022] Ye Du, Zehua Fu, Qingjie Liu, and Yunhong Wang. Weakly supervised semantic segmentation by pixel-to-prototype contrast. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4320–4329, 2022.

- Everingham et al. [2010] Mark Everingham, Luc Van Gool, Christopher KI Williams, John Winn, and Andrew Zisserman. The pascal visual object classes (voc) challenge. International journal of computer vision, 88(2):303–338, 2010.

- Gao et al. [2022] Shuyong Gao, Wei Zhang, Yan Wang, Qianyu Guo, Chenglong Zhang, Yangji He, and Wenqiang Zhang. Weakly-supervised salient object detection using point supervision. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 670–678, 2022.

- Gao et al. [2021] Wei Gao, Fang Wan, Xingjia Pan, Zhiliang Peng, Qi Tian, Zhenjun Han, Bolei Zhou, and Qixiang Ye. Ts-cam: Token semantic coupled attention map for weakly supervised object localization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 2886–2895, 2021.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- Huang et al. [2018] Zilong Huang, Xinggang Wang, Jiasi Wang, Wenyu Liu, and Jingdong Wang. Weakly-supervised semantic segmentation network with deep seeded region growing. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 7014–7023, 2018.

- Jiang et al. [2022] Peng-Tao Jiang, Yuqi Yang, Qibin Hou, and Yunchao Wei. L2g: A simple local-to-global knowledge transfer framework for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 16886–16896, 2022.

- Khoreva et al. [2017] Anna Khoreva, Rodrigo Benenson, Jan Hosang, Matthias Hein, and Bernt Schiele. Simple does it: Weakly supervised instance and semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 876–885, 2017.

- Kolesnikov and Lampert [2016] Alexander Kolesnikov and Christoph H Lampert. Seed, expand and constrain: Three principles for weakly-supervised image segmentation. In European conference on computer vision, pages 695–711. Springer, 2016.

- Kweon et al. [2021] Hyeokjun Kweon, Sung-Hoon Yoon, Hyeonseong Kim, Daehee Park, and Kuk-Jin Yoon. Unlocking the potential of ordinary classifier: Class-specific adversarial erasing framework for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF international conference on computer vision, pages 6994–7003, 2021.

- Kweon et al. [2023] Hyeokjun Kweon, Sung-Hoon Yoon, and Kuk-Jin Yoon. Weakly supervised semantic segmentation via adversarial learning of classifier and reconstructor. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11329–11339, 2023.

- Lee et al. [2021a] Jungbeom Lee, Eunji Kim, and Sungroh Yoon. Anti-adversarially manipulated attributions for weakly and semi-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4071–4080, 2021a.

- Lee et al. [2022] Minhyun Lee, Dongseob Kim, and Hyunjung Shim. Threshold matters in wsss: Manipulating the activation for the robust and accurate segmentation model against thresholds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4330–4339, 2022.

- Lee et al. [2021b] Seungho Lee, Minhyun Lee, Jongwuk Lee, and Hyunjung Shim. Railroad is not a train: Saliency as pseudo-pixel supervision for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 5495–5505, 2021b.

- Li et al. [2022a] Ruiwen Li, Zheda Mai, Chiheb Trabelsi, Zhibo Zhang, Jongseong Jang, and Scott Sanner. Transcam: Transformer attention-based cam refinement for weakly supervised semantic segmentation. arXiv preprint arXiv:2203.07239, 2022a.

- Li et al. [2021] Yi Li, Zhanghui Kuang, Liyang Liu, Yimin Chen, and Wayne Zhang. Pseudo-mask matters in weakly-supervised semantic segmentation. In Proceedings of the IEEE/CVF international conference on computer vision, pages 6964–6973, 2021.

- Li et al. [2022b] Yi Li, Yiqun Duan, Zhanghui Kuang, Yimin Chen, Wayne Zhang, and Xiaomeng Li. Uncertainty estimation via response scaling for pseudo-mask noise mitigation in weakly-supervised semantic segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 1447–1455, 2022b.

- Lin et al. [2016] Di Lin, Jifeng Dai, Jiaya Jia, Kaiming He, and Jian Sun. Scribblesup: Scribble-supervised convolutional networks for semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3159–3167, 2016.

- Liu et al. [2021] Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10012–10022, 2021.

- Oord et al. [2018] Aaron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748, 2018.

- Park and Kim [2022] Namuk Park and Songkuk Kim. How do vision transformers work? arXiv preprint arXiv:2202.06709, 2022.

- Peng et al. [2021] Zhiliang Peng, Wei Huang, Shanzhi Gu, Lingxi Xie, Yaowei Wang, Jianbin Jiao, and Qixiang Ye. Conformer: Local features coupling global representations for visual recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 367–376, 2021.

- Rong et al. [2023] Shenghai Rong, Bohai Tu, Zilei Wang, and Junjie Li. Boundary-enhanced co-training for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 19574–19584, 2023.

- Ru et al. [2022] Lixiang Ru, Yibing Zhan, Baosheng Yu, and Bo Du. Learning affinity from attention: End-to-end weakly-supervised semantic segmentation with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 16846–16855, 2022.

- Selvaraju et al. [2017] Ramprasaath R Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, pages 618–626, 2017.

- Su et al. [2021] Yukun Su, Ruizhou Sun, Guosheng Lin, and Qingyao Wu. Context decoupling augmentation for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF international conference on computer vision, pages 7004–7014, 2021.

- Sun et al. [2021] Kunyang Sun, Haoqing Shi, Zhengming Zhang, and Yongming Huang. Ecs-net: Improving weakly supervised semantic segmentation by using connections between class activation maps. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 7283–7292, 2021.

- Touvron et al. [2021] Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, and Hervé Jégou. Training data-efficient image transformers & distillation through attention. In International Conference on Machine Learning, pages 10347–10357. PMLR, 2021.

- Vaswani et al. [2017] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- Wang et al. [2021] Wenguan Wang, Tianfei Zhou, Fisher Yu, Jifeng Dai, Ender Konukoglu, and Luc Van Gool. Exploring cross-image pixel contrast for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 7303–7313, 2021.

- Wang et al. [2020] Yude Wang, Jie Zhang, Meina Kan, Shiguang Shan, and Xilin Chen. Self-supervised equivariant attention mechanism for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 12275–12284, 2020.

- Wei et al. [2017] Yunchao Wei, Jiashi Feng, Xiaodan Liang, Ming-Ming Cheng, Yao Zhao, and Shuicheng Yan. Object region mining with adversarial erasing: A simple classification to semantic segmentation approach. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1568–1576, 2017.

- Wu et al. [2022] Tong Wu, Guangyu Gao, Junshi Huang, Xiaolin Wei, Xiaoming Wei, and Chi Harold Liu. Adaptive spatial-bce loss for weakly supervised semantic segmentation. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXIX, pages 199–216. Springer, 2022.

- Xie et al. [2021] Enze Xie, Wenhai Wang, Zhiding Yu, Anima Anandkumar, Jose M Alvarez, and Ping Luo. Segformer: Simple and efficient design for semantic segmentation with transformers. Advances in Neural Information Processing Systems, 34:12077–12090, 2021.

- Xu et al. [2022a] Lian Xu, Wanli Ouyang, Mohammed Bennamoun, Farid Boussaid, and Dan Xu. Multi-class token transformer for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4310–4319, 2022a.

- Xu et al. [2022b] Lian Xu, Wanli Ouyang, Mohammed Bennamoun, Farid Boussaid, and Dan Xu. Multi-class token transformer for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4310–4319, 2022b.

- Yoon et al. [2022] Sung-Hoon Yoon, Hyeokjun Kweon, Jegyeong Cho, Shinjeong Kim, and Kuk-Jin Yoon. Adversarial erasing framework via triplet with gated pyramid pooling layer for weakly supervised semantic segmentation. In European Conference on Computer Vision, pages 326–344. Springer, 2022.

- Zhang et al. [2020a] Dong Zhang, Hanwang Zhang, Jinhui Tang, Xian-Sheng Hua, and Qianru Sun. Causal intervention for weakly-supervised semantic segmentation. Advances in Neural Information Processing Systems, 33:655–666, 2020a.

- Zhang et al. [2021] Fei Zhang, Chaochen Gu, Chenyue Zhang, and Yuchao Dai. Complementary patch for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 7242–7251, 2021.

- Zhang et al. [2020b] Jing Zhang, Xin Yu, Aixuan Li, Peipei Song, Bowen Liu, and Yuchao Dai. Weakly-supervised salient object detection via scribble annotations. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 12546–12555, 2020b.

- Zhou et al. [2016] Bolei Zhou, Aditya Khosla, Agata Lapedriza, Aude Oliva, and Antonio Torralba. Learning deep features for discriminative localization. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2921–2929, 2016.

Supplementary Material

This supplementary material provides additional details on our experiments: results on the MS-COCO 2014 dataset (Section 6), additional visualizations and analyses of the results (Section 7), ablation studies on the k values, the embedding size, and the loss weights (Section 8), further details and examples of fusing the ViT and CNN CAMs when generating masks (Section 9), the experimental setup (Section 10), and a discussion of the methodology for determining thresholds (Section 11). Our results are also presented in an accessible form on our project page: https://micv-yonsei.github.io/cobra2024.

6 Additional Study on Another Dataset

In this section, we report segmentation results on the MS-COCO 2014 dataset.

6.1 MS-COCO 2014

| Method | Venue | Backbone | val mIoU (%) |
|---|---|---|---|
| IRNet [49] | CVPR’19 | ResNet50 | 41.4 |
| SEAM [45] | CVPR’20 | WideResNet38 | 31.9 |
| OC-CSE [24] | ICCV’21 | WideResNet38 | 36.4 |
| PMM [30] | ICCV’21 | WideResNet38 | 36.7 |
| MCTformer [50] | CVPR’22 | WideResNet38 | 42.0 |
| URN [31] | CVPR’22 | WideResNet38 | 40.7 |
| SIPE [9] | CVPR’22 | WideResNet38 | 43.6 |
| Spatial-BCE [47] | ECCV’22 | VGG16 | 35.2 |
| ACR [25] | CVPR’23 | WideResNet38 | 45.3 |
| BECO [37] | CVPR’23 | ResNet101 | 45.1 |
| Ours | - | ResNet101 | 45.5 |

To verify that our method performs consistently across datasets, we conducted segmentation experiments on the MS-COCO 2014 dataset (Table 6). Following previous work [37], we used a ResNet101 backbone. Our method reaches 45.5% mIoU on the val set, the best result among the compared methods, slightly ahead of ACR [25] (45.3%) and BECO [37] (45.1%). This indicates that our approach carries over from PASCAL VOC 2012 to the larger, 80-class MS-COCO 2014 dataset, where it sets a new state of the art.

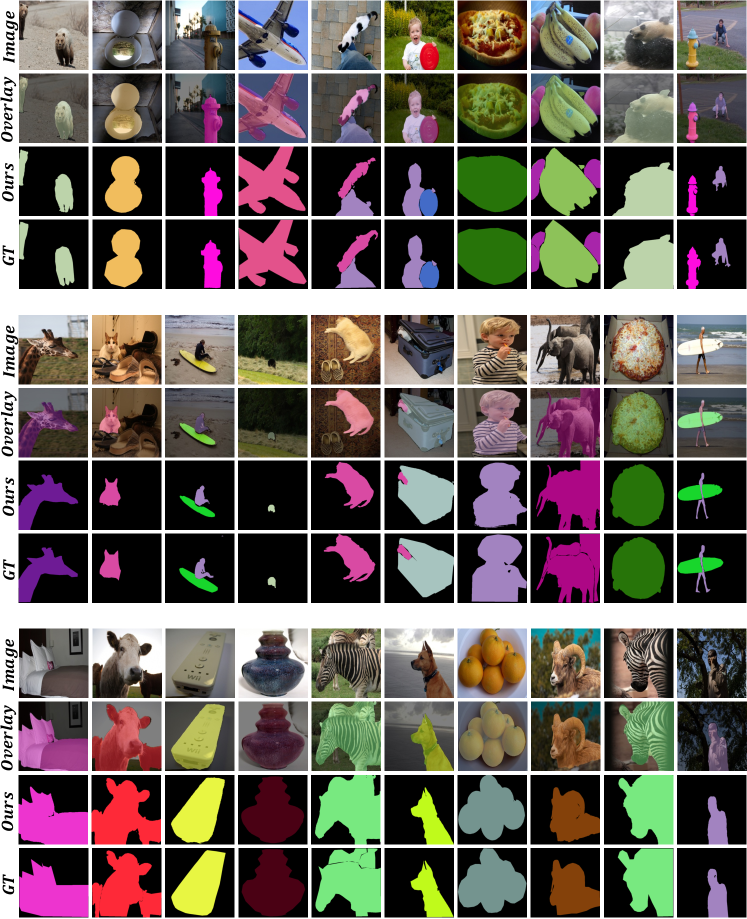

7 Additional Visualization and Analyses of the Results

In this section, we provide additional visualizations and analyses of the results. Section 7.1 presents further visualizations of the segmentation results, Section 7.2 analyzes the impact of CRF (conditional random field) post-processing, a standard step in creating pseudo labels, and Section 7.3 reports the per-class mean Intersection over Union (mIoU) of our seeds, masks, and segmentation results.

7.1 Qualitative Segmentation Results

Fig. 6 shows additional samples from the PASCAL VOC 2012 val set, and Table 7 lists the per-class mIoU on PASCAL VOC 2012. Fig. 7 shows additional samples from the MS-COCO 2014 val set, and Table 8 lists the per-class mIoU and accuracy on MS-COCO 2014.

| Backbone | Result | background | airplane | bike | bird | boat | bottle | bus | car | cat | chair | cow | table | dog | horse | mbk | person | plant | sheep | sofa | train | tv | mIoU(%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| - | seed (train) | 85.26 | 59.90 | 37.03 | 68.79 | 48.30 | 58.55 | 77.13 | 68.00 | 70.89 | 29.19 | 72.04 | 55.53 | 75.68 | 70.55 | 70.74 | 63.74 | 56.70 | 76.71 | 56.86 | 64.81 | 41.41 | 62.28 |
| - | mask (train) | 90.70 | 79.83 | 42.42 | 83.74 | 68.26 | 71.63 | 86.42 | 79.88 | 87.39 | 32.75 | 85.23 | 62.48 | 86.86 | 82.84 | 78.19 | 76.83 | 64.50 | 88.33 | 75.03 | 73.46 | 45.87 | 73.45 |
| ResNet101 | seg (val) | 92.17 | 85.53 | 45.47 | 86.9 | 72.64 | 77.89 | 89.67 | 84.26 | 86.33 | 37.94 | 80.32 | 47.49 | 84.79 | 83.11 | 80.87 | 83.72 | 61.13 | 82.77 | 52.61 | 82.36 | 56.25 | 74.01 |
| ResNet101 | seg (test) | 94.97 | 90.03 | 37.24 | 85.43 | 64.3 | 71.84 | 93.02 | 86.33 | 91.82 | 25.2 | 83.42 | 59.62 | 87.59 | 85.53 | 86.48 | 84.38 | 64.14 | 85.33 | 57.84 | 73.24 | 44.97 | 73.94 |
| MiT-B2 | seg (val) | 94.90 | 87.39 | 36.08 | 89.63 | 72.17 | 74.55 | 92.12 | 84.27 | 92.47 | 28.45 | 92.54 | 45.57 | 88.29 | 87.44 | 80.74 | 83.71 | 64.38 | 93.13 | 48.92 | 78.17 | 45.37 | 74.30 |
| MiT-B2 | seg (test) | 95.79 | 89.88 | 35.74 | 82.82 | 65.33 | 70.51 | 90.87 | 85.75 | 94.08 | 24.92 | 87.13 | 60.80 | 89.87 | 86.52 | 86.50 | 84.17 | 65.83 | 87.55 | 55.58 | 71.50 | 47.08 | 74.20 |

| Class | mIoU (%) | mAcc (%) | Class | mIoU (%) | mAcc (%) |
|---|---|---|---|---|---|
| background | 83.18 | 87.04 | person | 65.23 | 72.44 |
| bicycle | 51.23 | 59.2 | car | 51.1 | 59.21 |
| motorcycle | 65.97 | 72.21 | airplane | 66.68 | 72.13 |
| bus | 66.61 | 72.49 | train | 66.55 | 72.2 |
| truck | 48.95 | 57.35 | boat | 46.69 | 55.3 |
| traffic light | 53.31 | 60.57 | fire hydrant | 74.61 | 79.51 |
| stop sign | 77.83 | 82.69 | parking meter | 66.58 | 72.66 |
| bench | 37.33 | 45.17 | bird | 65.16 | 72.16 |
| cat | 79.27 | 83.71 | dog | 69.95 | 75.22 |
| horse | 67.68 | 73.0 | sheep | 71.67 | 76.6 |
| cow | 69.98 | 75.03 | elephant | 82.45 | 86.55 |
| bear | 81.05 | 85.34 | zebra | 83.72 | 87.34 |
| giraffe | 78.07 | 82.69 | backpack | 17.74 | 22.21 |
| umbrella | 64.55 | 72.31 | handbag | 49.09 | 57.35 |
| tie | 29.62 | 36.7 | suitcase | 50.76 | 58.99 |
| frisbee | 48.08 | 56.63 | skis | 15.8 | 20.03 |
| snowboard | 35.96 | 43.74 | sports ball | 11.54 | 14.74 |
| kite | 30.78 | 38.03 | baseball bat | 1.17 | 1.51 |
| baseball glove | 0.91 | 1.18 | skateboard | 9.84 | 12.61 |
| surfboard | 50.53 | 58.88 | tennis racket | 1.81 | 2.33 |
| bottle | 35.69 | 43.54 | wine glass | 31.49 | 38.81 |
| cup | 33.55 | 41.14 | fork | 16.96 | 21.29 |
| knife | 14.9 | 18.99 | spoon | 8.38 | 10.76 |
| bowl | 26.65 | 33.28 | banana | 65.55 | 71.96 |
| apple | 48.62 | 57.12 | sandwich | 44.28 | 53.02 |
| orange | 65.48 | 72.31 | broccoli | 54.53 | 61.79 |
| carrot | 44.53 | 53.17 | hot dog | 56.24 | 63.55 |
| pizza | 64.9 | 72.29 | donut | 58.77 | 66.02 |
| cake | 51.59 | 59.28 | chair | 27.0 | 33.54 |
| couch | 39.52 | 47.57 | potted plant | 16.52 | 20.79 |
| bed | 52.22 | 59.84 | dining table | 15.88 | 20.08 |
| toilet | 65.52 | 72.13 | tv | 51.66 | 59.53 |
| laptop | 53.05 | 60.62 | mouse | 16.44 | 20.74 |
| remote | 46.34 | 55.04 | keyboard | 53.28 | 60.71 |
| cell phone | 57.26 | 64.51 | microwave | 42.59 | 51.13 |
| oven | 34.46 | 42.15 | toaster | 0.0 | 0.0 |
| sink | 32.52 | 39.98 | refrigerator | 44.57 | 53.07 |
| book | 36.75 | 44.59 | clock | 47.53 | 56.15 |
| vase | 26.82 | 33.41 | scissors | 37.37 | 45.1 |
| teddy bear | 64.68 | 72.25 | hair drier | 0.0 | 0.0 |
| toothbrush | 15.11 | 19.21 | Total | 45.53 | 51.67 |

7.2 Mask Performance With and Without CRF

To assess the quality of our masks more directly, we ran a separate experiment without the CRF post-processing step. As Table 9 shows, our masks without CRF still outperform existing methods that use CRF.

7.3 Per-Class Performance of Seeds, Masks, and Segmentation

Here we break down the performance of CoBra by class, reporting mIoU for seeds, masks, and segmentation (seg). Table 7 lists the per-class mIoU on PASCAL VOC 2012; its seed and mask rows are evaluated on the PASCAL VOC 2012 train set. Table 8 lists the per-class mIoU and accuracy of the segmentation results on MS-COCO 2014.
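For reference, per-class numbers like those in Tables 7 and 8 are usually derived from a pixel-level confusion matrix. The sketch below assumes the standard definitions (IoU = TP / (TP + FP + FN), class accuracy = TP / (TP + FN), means taken over classes with a defined score, and 255 as the ignore label); the function names are ours, and this is not the authors' evaluation code.

```python
from typing import List, Sequence, Tuple

def confusion_matrix(gt: Sequence[int], pred: Sequence[int], num_classes: int,
                     ignore_index: int = 255) -> List[List[int]]:
    """Rows index the ground-truth class, columns the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        if g == ignore_index:
            continue  # ignored pixels (e.g. object boundaries) are not scored
        cm[g][p] += 1
    return cm

def per_class_scores(cm: List[List[int]]) -> Tuple[List[float], List[float]]:
    """Per-class IoU = TP / (TP + FP + FN) and accuracy = TP / (TP + FN)."""
    n = len(cm)
    ious: List[float] = []
    accs: List[float] = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class-c pixels predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp  # other pixels predicted as class c
        ious.append(tp / (tp + fp + fn) if tp + fp + fn else float("nan"))
        accs.append(tp / (tp + fn) if tp + fn else float("nan"))
    return ious, accs

def nanmean(values: List[float]) -> float:
    """Mean over classes, skipping NaN entries (undefined scores)."""
    valid = [v for v in values if v == v]  # NaN is the only value not equal to itself
    return sum(valid) / len(valid) if valid else float("nan")
```

Because FP is never negative, a class's accuracy under these definitions is never below its IoU, which is consistent with mAcc ≥ mIoU in every row of Table 8.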

8 Additional Ablation Study

In this section, we provide additional ablations on the k values (Section 8.1), the embedding size (Section 8.2), and the loss weights λ (Section 8.3).

8.1 Ablation Study for k Values

Table 10 reports the ablation on the two k values, which is not included in the main paper: one sets how many positive patches are taken from the CAK branch, and the other how many negative patches are taken from the affinity map of the SAK branch. The results support our hypothesis that the positive patches selected by the CAK branch are highly precise, and likewise that the negative patches selected from the SAK affinity map are highly precise. We set the maximum k to 20, the largest value in Table 10, because true positives make up only about 10% of all patches; we therefore treat only this top-ranked fraction of patches as the most trustworthy group. Table 10 shows the seed mIoU for combinations of the two k values with the remaining hyperparameters fixed; setting both k values to 20 gives the best result (62.3%).

| Seed mIoU (%) | 5 | 10 | 15 | 20 |
|---|---|---|---|---|
| 5 | 54.1 | 54.8 | 55.1 | 56.3 |
| 10 | 56.4 | 57.0 | 57.4 | 58.5 |
| 15 | 57.8 | 57.4 | 59.9 | 60.5 |
| 20 | 58.1 | 58.6 | 60.1 | 62.3 |
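The patch selection ablated in Table 10 can be pictured with a small sketch. It rests on our own assumptions, not on the CoBra code: each branch provides one score per patch (a class score from the CAK branch and an affinity score from the SAK branch), positives are the k highest-scoring CAK patches, and negatives are the k lowest-affinity SAK patches. The helper name and the rule that a patch cannot be both positive and negative are hypothetical.

```python
from typing import List, Sequence, Tuple

def select_topk_patches(cak_scores: Sequence[float], sak_affinity: Sequence[float],
                        k_pos: int, k_neg: int) -> Tuple[List[int], List[int]]:
    """Hypothetical helper returning (positive, negative) patch indices.

    Positives: the k_pos patches with the highest CAK-branch (CNN) score.
    Negatives: the k_neg patches with the lowest SAK-branch (ViT) affinity.
    """
    if len(cak_scores) != len(sak_affinity):
        raise ValueError("both branches must score the same patches")
    idx = range(len(cak_scores))
    positives = sorted(idx, key=lambda i: cak_scores[i], reverse=True)[:k_pos]
    taken = set(positives)
    # Our own choice: a patch already used as a positive is never also a negative.
    by_affinity = sorted(idx, key=lambda i: sak_affinity[i])
    negatives = [i for i in by_affinity if i not in taken][:k_neg]
    return positives, negatives
```

Sweeping both k values over 5, 10, 15, and 20 gives the grid of settings in Table 10.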

8.2 Ablation Study for embedding size

To choose the embedding size, we compare several sizes in Table 11. All tested sizes give competitive seed mIoU, but the size of 256 used in CoBra achieves the highest value (62.3%).

| Embedding size | 128 | 256 | 384 |
|---|---|---|---|
| seed mIoU (%) | 61.9 | 62.3 | 60.4 |

8.3 Ablation Study on Lambda Values Applied to the Loss Function

The overall loss function is defined as:

| (4) |

We tested several values of the two loss weights λ in Eq. (4) (Table 12) and obtained the best result with both set to 0.1 (62.3% mIoU). Performance is also fairly robust to this choice: all tested settings stay between 59.4% and 62.3%.

| First loss weight λ | Second loss weight λ | mIoU (%) |
|---|---|---|
| 0.05 | 0.05 | 59.5 |
| 0.05 | 0.1 | 60.6 |
| 0.1 | 0.05 | 59.4 |
| 0.1 | 0.1 | 62.3 |
| 0.1 | 0.5 | 62.0 |
| 0.5 | 0.1 | 61.7 |
| 0.5 | 0.5 | 61.8 |

9 Mask Generation

This section details how masks are created from seeds (Section 9.1) and how the seeds are fused to generate masks (Section 9.2).

9.1 Modifying Mask Generation

We refine our seeds into masks with IRNet [49], but not in its original form; we modified it to better suit our seeds. Before training, IRNet [49] conventionally separates foreground from background using thresholds set as hyperparameters: values above the foreground threshold are labeled foreground, values below the background threshold are labeled background, and values in between are labeled unknown. IRNet is then trained on these labels. Instead of fixed hyperparameters, we compute these thresholds dynamically from quantities derived by our model.
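The three-way labeling rule described above can be sketched as follows. This is a minimal illustration rather than IRNet's code: it assumes per-class CAMs normalized to [0, 1], labels background as 0, foreground pixels with their 1-based class index, and unknown pixels with 255 (a common ignore index). The function name and the threshold values used with it are hypothetical.

```python
from typing import List, Sequence

IGNORE = 255  # label for the "unknown" band between the two thresholds

def three_way_labels(cams: Sequence[Sequence[Sequence[float]]],
                     fg_thr: float, bg_thr: float) -> List[List[int]]:
    """cams[c][i][j] is the normalized score of foreground class c at pixel (i, j).

    Returns c + 1 for confident foreground, 0 for confident background and
    IGNORE where the best class score lies between the thresholds.
    """
    if not bg_thr < fg_thr:
        raise ValueError("bg_thr must be smaller than fg_thr")
    h, w = len(cams[0]), len(cams[0][0])
    labels = [[IGNORE] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            scores = [cam[i][j] for cam in cams]
            best = max(range(len(scores)), key=scores.__getitem__)
            if scores[best] > fg_thr:
                labels[i][j] = best + 1  # exceeds the foreground threshold
            elif scores[best] < bg_thr:
                labels[i][j] = 0  # below the background threshold
    return labels
```

With fixed thresholds this mirrors the conventional setup; making `fg_thr` and `bg_thr` depend on each image is the change the text describes.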

TS-CAM [18] observes that in a ViT, the class token's row of the attention matrix helps separate foreground from background; TS-CAM calls this the semantic-agnostic attention map. Inspired by this, we use the map to set the background and foreground thresholds for training IRNet [49]. The background threshold is obtained by applying a weight to the semantic-agnostic attention map, and the foreground threshold is placed at an approximately fixed gap above it. This thresholding strategy improved our results by approximately +0.4 over our earlier results.
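One possible reading of this procedure is sketched below; everything beyond the text is an assumption. The attention matrix is taken as already averaged over heads (and layers, as we understand TS-CAM to do), with index 0 as the class token; the semantic-agnostic map is the class token's attention to the patch tokens, min-max normalized; the background threshold is a weight times the mean of that map; and the foreground threshold sits a fixed gap above it. `weight` and `gap` are hypothetical stand-ins for values not given here.

```python
from typing import List, Sequence, Tuple

def semantic_agnostic_map(attn: Sequence[Sequence[float]], h: int, w: int) -> List[List[float]]:
    """Class-token row of a (1 + h*w) x (1 + h*w) attention matrix,
    reshaped to h x w and min-max normalized to [0, 1]."""
    row = list(attn[0][1:])  # attention from the class token (index 0) to each patch
    if len(row) != h * w:
        raise ValueError("attention size does not match the patch grid")
    lo, hi = min(row), max(row)
    scale = (hi - lo) or 1.0  # avoid division by zero for a flat map
    norm = [(v - lo) / scale for v in row]
    return [norm[r * w:(r + 1) * w] for r in range(h)]

def dynamic_thresholds(sa_map: List[List[float]], weight: float, gap: float) -> Tuple[float, float]:
    """Hypothetical rule: bg = weight * mean(map), fg = bg + gap, both capped at 1."""
    values = [v for row in sa_map for v in row]
    bg = min(1.0, weight * sum(values) / len(values))
    fg = min(1.0, bg + gap)
    return bg, fg
```

Because the map is computed per image, the resulting thresholds adapt to each image instead of being shared across the dataset.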

9.2 Seed Fusion for Mask Generation

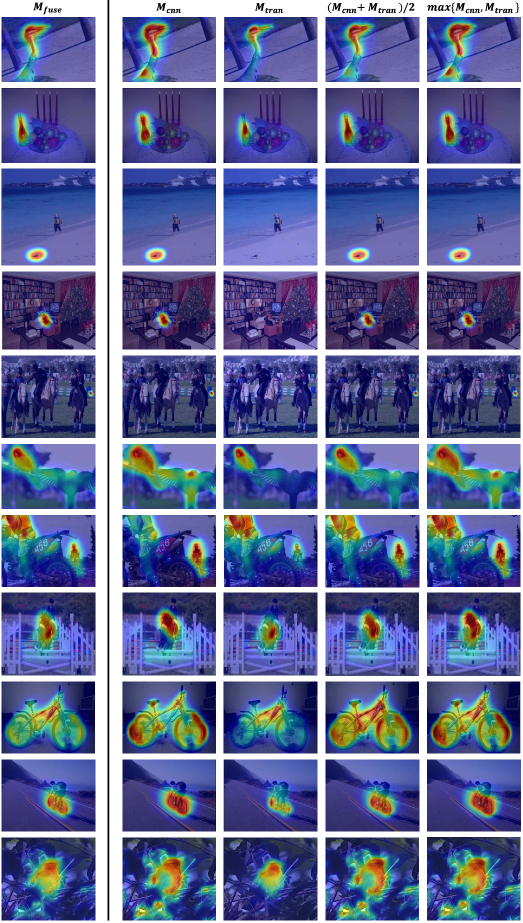

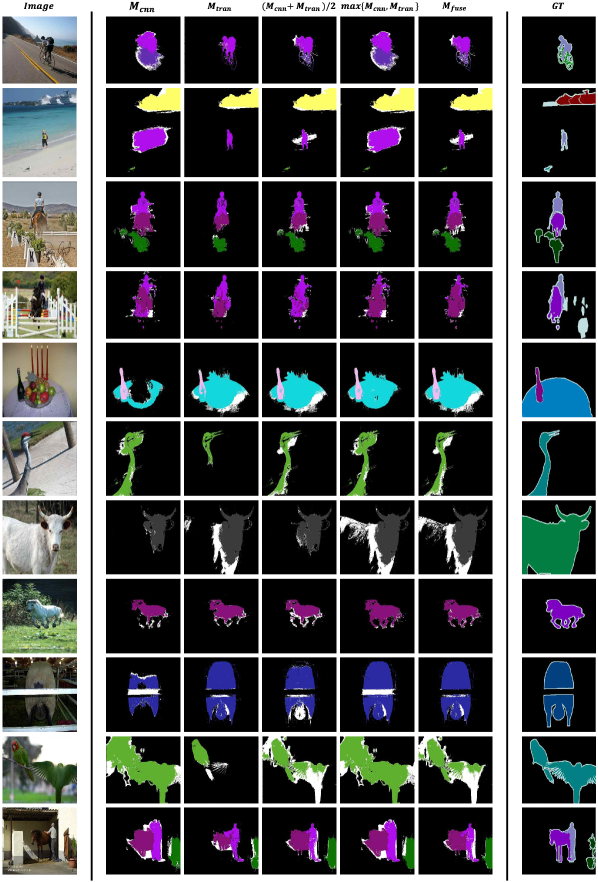

The fused seed and mask combine the CAMs of the two branches; seed fusion and mask fusion are illustrated in Fig. 8 and Fig. 9, respectively. As shown in the main paper (Table 5), this fusion scores higher than any other combination. Fig. 8 demonstrates how fusing the CAMs obtained from the two branches yields a better seed. The second and third columns of Fig. 8 show the localization maps of the two branches, which compensate for each other. For example, in the sixth row of Fig. 8, one branch's map segments both birds, whereas the other's segments only one; notably, the two maps also differ in how precisely they localize objects. The third, fourth, and fifth rows show the localization maps for bird, dog, and chair, respectively. Because the ViT-branch map is computed on patches, it is relatively weak at detecting small objects, and the CNN-branch map compensates for this. Our fused seed therefore recovers parts missed by either branch, as in the examples above.
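To make the idea of mutual compensation concrete, here is a toy sketch. It deliberately uses a simple stand-in rule, not CoBra's fusion formula: each branch's map is min-max normalized and the two are merged by a pixel-wise maximum, so a region activated by either branch survives in the fused seed. The function names are hypothetical; a weighted average of the normalized maps would be an equally simple alternative.

```python
from typing import List

Map = List[List[float]]

def minmax(m: Map) -> Map:
    """Rescale a map to [0, 1]; a constant map becomes all zeros."""
    flat = [v for row in m for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0] * len(row) for row in m]
    return [[(v - lo) / (hi - lo) for v in row] for row in m]

def fuse_max(cnn_map: Map, vit_map: Map) -> Map:
    """Stand-in fusion rule: pixel-wise maximum of the two normalized maps."""
    if len(cnn_map) != len(vit_map) or len(cnn_map[0]) != len(vit_map[0]):
        raise ValueError("maps must have the same shape")
    a, b = minmax(cnn_map), minmax(vit_map)
    return [[max(x, y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]
```

For instance, if the CNN map fires only on one object and the ViT map only on another, `fuse_max` keeps both, which is the behavior illustrated by the two-bird example.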

Fig. 9 shows the labels used to train the mask-generation network discussed in Section 9.1: colored, black, and white areas denote foreground, background, and unknown regions, respectively. These labels are produced by the CoBra framework with the fusion method described above. Because the two branches compensate for each other, the fused labels recover regions that a single branch would miss, and over-activated regions are trimmed so that object boundaries are outlined more accurately.

10 Experimental Setup

Because CoBra adds loss terms to integrate the two branches during training, we train for 20 epochs with a batch size of 32. Both loss weights λ in Eq. (4) are set to 0.1; as shown in Table 12, we tried various λ values to obtain the best score. Input images are resized to a fixed size before training. Localization maps are obtained by summing the outputs computed at multiple scales (0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0). The resulting map is interpolated to two different sizes: one used at inference and one used when refining the map with IRN [49] (see Section 9).
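The multi-scale aggregation can be sketched as below. Assumptions: `cam_fn` is a hypothetical stand-in for one branch's forward pass that returns a CAM of the requested size for the image rescaled by each factor, maps are brought back to the base resolution by nearest-neighbour resizing (the interpolation mode is not specified here), and the summed map is max-normalized afterwards, a common convention rather than something stated above.

```python
from typing import Callable, List, Sequence

Map = List[List[float]]
SCALES = (0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0)  # scales listed in the text

def resize_nearest(m: Map, out_h: int, out_w: int) -> Map:
    """Nearest-neighbour resize of a 2-D map."""
    in_h, in_w = len(m), len(m[0])
    return [[m[(i * in_h) // out_h][(j * in_w) // out_w] for j in range(out_w)]
            for i in range(out_h)]

def multiscale_cam(cam_fn: Callable[[int, int], Map], h: int, w: int,
                   scales: Sequence[float] = SCALES) -> Map:
    """Sum CAMs computed at several input scales, each resized back to h x w."""
    total = [[0.0] * w for _ in range(h)]
    for s in scales:
        sh, sw = max(1, round(h * s)), max(1, round(w * s))
        cam = resize_nearest(cam_fn(sh, sw), h, w)  # CAM of the rescaled image
        for i in range(h):
            for j in range(w):
                total[i][j] += cam[i][j]
    return total

def max_normalize(m: Map, eps: float = 1e-5) -> Map:
    """Divide by the peak value so the strongest activation becomes about 1."""
    peak = max(max(row) for row in m)
    return [[v / (peak + eps) for v in row] for row in m]
```

Summing over scales lets small objects benefit from the upscaled inputs and large objects from the downscaled ones.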

To create masks (refined seeds), IRN [49] is trained for 4 epochs with a batch size of 32 on image crops of size 512. Among the embedding sizes we tested (Table 11), 256 performed best. Our threshold-setting method was inspired by [3], which provides an insightful analysis of thresholding issues.

11 Discussion on the Methodology for Determining Thresholds

In weakly supervised semantic segmentation, it is common practice to set a threshold that separates the foreground from the background [50, 49, 47, 3]. Typically, a single value within the 0 to 1 range of the Class Activation Maps (CAMs) defines the background. This approach has several problems. First, different classes exhibit different confidence levels in the CAMs. For instance, a chair may be confused with a desk, and its slender parts, such as legs, generally receive low confidence scores; a lower background threshold lets the CAM capture the chair more completely. The appropriate threshold can therefore differ from class to class. Second, a single threshold is applied to all images, even though both foreground and background activation values vary across images; current thresholding methods do not adequately account for this variability. Previous papers [27, 3] have also discussed these issues with thresholding. We contribute to this discussion with a new approach to threshold determination. Drawing inspiration from TS-CAM [18], we treat the class token of the attention matrix, available in the SAK branch of our model, as an indicator of the boundary between background and foreground, and derive the background threshold from it. The weight applied to this term was chosen in the same way as in conventional threshold-setting methods. This approach yields better results than traditional fixed thresholds (about +0.4 in our experiments, Section 9.1), demonstrating the effectiveness of our tailored thresholding strategy for weakly supervised semantic segmentation.