Collaborative rover-copter path planning and exploration with temporal logic specifications based on Bayesian update under uncertain environments

Abstract.

This paper investigates a collaborative rover-copter path planning and exploration with temporal logic specifications under uncertain environments. The objective of the rover is to complete a mission expressed by a syntactically co-safe linear temporal logic (scLTL) formula, while the objective of the copter is to actively explore the environment and reduce its uncertainties, aiming at assisting the rover and enhancing the efficiency of the mission completion. To formalize our approach, we first capture the environmental uncertainties by environmental beliefs of the atomic propositions, under an assumption that it is unknown which properties (or, atomic propositions) are satisfied in each area of the environment. The environmental beliefs of the atomic propositions are updated according to the Bayes rule based on the Bernoulli-type sensor measurements provided by both the rover and the copter. Then, the optimal policy for the rover is synthesized by maximizing a belief of the satisfaction of the scLTL formula through an implementation of an automata-based model checking. An exploration policy for the copter is then synthesized by employing the notion of an entropy that is evaluated based on the environmental beliefs of the atomic propositions, and a path that the rover intends to follow according to the optimal policy. As such, the copter can actively explore regions whose uncertainties are high and that are relevant to the mission completion. Finally, some numerical examples illustrate the effectiveness of the proposed approach.

1. Introduction

Autonomous systems play an important role to accomplish complex, high level scientific missions autonomously under uncertain environments. To increase the efficiency of completing the mission, integrating a collaboration of multiple, heterogeneous robots has attracted much attention in recent years, see, e.g., (lewis2014, ). In this paper, we are particularly interested in the situation, where completing the mission will be achieved by the collaboration of an unmanned ground vehicle (UGV), which is called a rover, and an unmanned aerial vehicle (UAV), which is called a (heli)copter. The utilization of the rover-copter collaboration is motivated by the fact that the rover has the role to complete the mission (e.g., search for a target object, etc.), while the copter has the role to assist the rover so as to enhance the efficiency of completing the mission. Specifically, the copter aims at actively exploring the environment and reducing its uncertainties by revealing which properties (obstacles, free space, etc.) are satisfied in each area of the environment. For example, the copter checks if no obstacles are present along with the path that the rover intends to follow in the environment. By doing so, the rover will be able to complete the mission while guaranteeing safety. Employing the rover-copter collaboration is also motivated by the fact that the National Aeronautics and Space Administration (NASA) is launching Mars 2020 mission (balaram2018, ). In particular, to investigate Martian geology and habitability, NASA has decided to send copters to Mars in order to help the rover discover target samples in an efficient way (landau2015, ). Motivated by this fact, several motion planning techniques employing the rover-copter collaboration for the Mars exploration have been investigated in recent years, see, e.g., (nilsson2018, ; bharadwaj2018, ; sasaki2020, ).

As briefly mentioned above, planning under environmental uncertainties has two distinct major problems; the first one is how to synthesize a control policy such that a complex, high level mission specification can be satisfied in an automatic way, and the second one is how to explore the environment so as to effectively reduce its uncertainties. In this paper, we propose a novel algorithm to solve these two problems by making use of the rover-copter collaboration. First, we tackle the former problem by employing temporal logic synthesis techniques (temporalreview1, ; temporalreview2, ; belta2017formal, ). More specifically, we express a mission specification by a syntactically co-safe linear temporal logic (scLTL). In contrast to the simple reach-avoid task, the scLTL formula has the ability to describe various complex specifications that involve logic and temporal constraints (belta2017formal, ). Moreover, the optimal policy that fulfills the scLTL specification can be synthesized using a value iteration algorithm, which is in general more computationally efficient than synthesizing controllers with the LTL (requires to solve the Rabin game) or the STL (requires to solve the (mixed) integer programming). Additionally, the utilization of the scLTL is sometimes natural in practice, since the path planning problem often deals with the mission that terminates in a finite time rather than the infinite time like LTL. To formalize our approach, we first capture the uncertain environment by assuming that, it is unknown which properties or atomic propositions are satisfied in each area of the environment. Specifically, we define environmental beliefs of the atomic propositions, which are described by posterior probabilities, that evaluate their uncertainties based on the sensor measurements in each area of the environment. As will be detailed later, these beliefs are updated according to the Bayes rule based on sensor measurements provided by both the copter and the rover. The optimal policy for the rover is then synthesized by maximizing a belief of the satisfaction of the scLTL formula for the controlled trajectory of the rover. In particular, based on an automata-based model checking (see, e.g., (baier, )), we combine a motion model of the rover described by a Markov decision process (MDP) and a finite state automaton (FSA) that accepts all good prefix satisfying the scLTL formula. This combined model, which is called a product belief MDP, has a transition function induced by the current environmental beliefs of the atomic propositions. The problem for finding the optimal policy is then reduced to a finite-time reachability problem in the product belief MDP, which can be solved via a value iteration algorithm.

The latter problem (i.e., how to explore the environment so as to effectively reduce its uncertainties) will be mainly solved by the copter, since it is able to move more quickly and freely than the rover and is thus suited for the exploration. Roughly speaking, the objective of the copter is to actively explore the environment and reduce its uncertainties by updating the environmental beliefs of the atomic propositions. We first describe the observations by employing a Bernoulli-type sensor model, see, e.g., (bertuccelli2005, ; wang2009, ; hussein2007, ; imai2013, ). The Bernoulli sensor abstracts the complexity of image processing into binary observations. Despite the simplicity, the Bernoulli sensor model is commonly used in the UAV community, due to the following reasons; (i) it is able to capture the erroneous observations; (ii) it is able to capture the limited sensor range; (iii) in contrast to the other sophisticated sensor models such as those that involve the probability density functions, the Bayesian update can be simply computed without any integrals or approximations. In particular, the third feature is well-suited for the copter’s exploration, as the computational power of the CPU and the battery capacity are often limited, and it is desirable to make the belief updates as “computationally light” as possible. The exploration algorithm is given by employing the notion of entropy that is evaluated based on the environmental beliefs of the atomic propositions, and a path that the rover intends to follow according to the current optimal policy. As such, the copter can put an emphasis on actively exploring regions whose uncertainties are high and that are relevant to the mission completion.

Related works and contributions of this paper

Based on the above, the approach presented in this paper is related to the previous works of literature in terms of the following aspects:

-

(1)

Motion planning/exploration employing the rover-copter collaboration;

-

(2)

Temporal logic planning under environmental uncertainties;

In what follows, we discuss how our approach differs from the previous works and highlight our main contributions.

Several motion planning/exploration techniques employing the rover-copter collaboration have been provided, see, e.g., (nilsson2018, ; bharadwaj2018, ; sasaki2020, ). In particular, our approach is closely related to (nilsson2018, ), in the sense that the overall synthesis problem is decomposed into two sub-problems, i.e., the problem of synthesizing a copter’s exploration policy in the uncertain environment, and the problem of synthesizing the optimal policy for the rover so as to satisfy the scLTL formula. Our approach builds upon this previous work in terms of both synthesis for the rover’s optimal policy and the copter’s exploration policy in the following ways. Rather than capturing the environmental uncertainties by the belief MDPs (see Section II.A in (nilsson2018, )), in which the environmental belief states are given in a discrete space, this paper captures the environmental uncertainties by assigning beliefs in a continuous space (i.e., the beliefs can take continuous values in the interval ). This allows us to apply the Bayes rule to update the beliefs according to the sensor measurements provided by both the rover and the copter. Moreover, while the previous work solves a value iteration over the state-space in a product MDP that involves the set of environmental belief states, our approach defines a product MDP that does not involve such states, i.e., the set of states of product MDP combines only the set of states of the MDP motion model of the rover and the set of states of the FSA that accepts all trajectories satisfying the scLTL formula (not including the environmental belief states). Hence, we can alleviate the time complexity of synthesizing the optimal policies for the rover in comparison with the previous work. In addition, we provide a theoretical, convergence analysis of the proposed algorithm, in which the environmental beliefs of the atomic propositions are shown to converge to the appropriate values.

Besides the above, many temporal logic planning schemes under environmental uncertainties have been proposed. Most of the previous works assume that the environment has unknown properties (atomic propositions) (ayala2013, ; fu2016, ; maly2013, ; meng2013a, ; meng2015a, ; meng2018, ; livingston2012, ; wongpiromsarn2012, ), or that the motion model of the robot includes uncertainties (lahijanian2010, ; yoo2016, ; sadigh16, ; vasile2016, ; leahy2019, ; wolff2012, ; ulusoy2014, ; wongpiromsarn2012, ; kazumune2020, ), or that the motion model of the robot is completely unknown (sadigh2014, ; jing2015, ; li2018, ; hasanbeig2019, ). Since this paper assumes that it is unknown which atomic propositions are satisfied in the environment, our approach is particularly related to the first category, i.e., (ayala2013, ; fu2016, ; maly2013, ; meng2013a, ; meng2015a, ; meng2018, ; livingston2012, ; wongpiromsarn2012, ). For example, (ayala2013, ) proposed to combine an automata-based model checking and run-time verification for synthesizing a temporal logic motion planning under an incomplete knowledge about the workspace. (fu2016, ) proposed a temporal logic synthesis under probabilistic semantic maps obtained by simultaneous localization and mapping (SLAM). Moreover, (meng2013a, ) proposed a planning revision scheme under incomplete knowledge about the workspace. Our approach is essentially different from the above previous works, in the sense that we incorporate a sensor failure about observations on the atomic propositions. In particular, as previously mentioned, we employ a Bernoulli-type sensor model to describe erroneous observations, and update the beliefs based on the Bayes rule. Besides, the synthesis approach (e.g., construction of the product MDP) is also different from the above previous works, since we make use of the beliefs to synthesize control policies, see Section 4.3. Moreover, our approach is different from the above previous works, in the sense that we incorporate an explicit algorithm for exploration, so as to reduce environmental uncertainties. Other than the above previous works, a few approaches that take into account sensor failures/noise have been provided, see, e.g., (johnson2013, ; johnson2015, ; nuzzo, ; TIGER2020325, ). For example, in (johnson2015, ), the authors proposed a probabilistic model checking for a reactive synthesis under sensor failures and actuator failures. Moreover, (nuzzo, ) introduced the concept of stochastic signal temporal logic (StSTL), and provided both verification and synthesis techniques using assume/guarantee contracts. In contrast to the above previous works, we here propose a Bayesian approach, in which the beliefs that are assigned in the environment are introduced, and these are updated based on observations provided by the Bernoulli sensors.

In summary, the main novelties of this paper with respect to the related works are as follows: using the copter-rover collaboration, we develop a new approach to synthesizing an optimal policy for the rover so as to satisfy an scLTL formula, and an exploration policy for the copter so as to update the environmental beliefs of the atomic propositions and reduce the environmental uncertainties. In particular:

-

(1)

Using the Bernoulli sensor model and the Bayesian update, we propose a novel exploration algorithm for the copter so as to update the environmental beliefs and effectively reduce the environmental uncertainties (for details, see Section 4.2);

-

(2)

We propose a novel framework to synthesize the optimal policy for the rover. In particular, we solve a value iteration over a product MDP, whose state-space does not involve the set of the states of the environmental beliefs. This leads to the reduction of time complexity of the value iteration in comparisons with the previous work (for details, see Section 4.3);

-

(3)

We provide a theoretical, convergence analysis of the proposed algorithm, where it is shown that the environmental beliefs of the atomic propositions converge to the appropriate values (for details, see Section 5).

The remainder of this paper is organized as follows. In Section 2, we provide some preliminaries of the Markov decision process and syntacticallly co-safe LTL formula.

In Section 3, we formulate a problem that we seek to solve in this paper. In Section 4, we describe the main algorithm that aims to synthesize both an exploration policy to update the environmental beliefs of the atomic propositions and the optimal policy to satisfy an scLTL formula.

In Section 5, we analyze the convergence property of the proposed algorithm.

In Section 6, we illustrate the effectiveness of the proposed approach through a simulation example. We finally conclude in Section 7.

Notation. Let , , , be the set of integers, non-negative integers, positive integers, and the set of integers in the interval , respectively. Let , , , be the set of reals, non-negative reals, positive reals, and the set of reals in the interval , respectively. For a given vector , denote by the -th element of . Given a finite set , let denote the set of all probability distributions on , i.e., the set of all functions such that .

2. Preliminaries

2.1. Markov Decision Process

A Markov Decision Process (MDP) is defined as a tuple , where is the finite set of states, is the initial state, is the finite set of control inputs, and is the transition probability function that associates, for each state and input , the corresponding probability distribution over . For simplicity of presentation, we abbreviate as . Given , a policy sequence is defined as an infinite sequence of the mappings , . Namely, each , represents a policy as a mapping from each state in onto the corresponding control input in . The policy sequence is called stationary if the policy is the invariant for all times, i.e., , . Given a policy sequence , a trajectory induced by is denoted by , where and , .

2.2. Syntactically co-safe LTL

Syntactically co-safe LTL (scLTL for short) is defined by using the set of atomic propositions , Boolean operators, and some temporal operators. Atomic propositions are the Boolean variables taking either true or false. Specifically, the syntax of the scLTL formulas are constructed according to the following grammar:

| (1) |

where is the atomic proposition, (negation), (conjunction), (disjunction) are the Boolean connectives, and (next), (until) are the temporal operators. The semantics of LTL formula is inductively defined over an infinite sequence of sets of atomic propositions . Intuitively, an atomic proposition is satisfied iff is true at (i.e., ). Moreover, is satisfied iff is not true at (i.e., ). is satisfied iff both and are satisfied. is satisfied iff or are satisfied. is satisfied iff is satisfied for the suffix of that begins from the next position, i.e., i.e., . Finally, is satisfied iff is satisfied until is satisfied. Given and an scLTL formula , we denote by iff satisfies . It is known that every that satisfies the scLTL formula contains a finite good prefix for some , such that also satisfies for all .

A finite state automaton (FSA) is defined as a tuple , where is a set of states, is the input alphabet, is the transition function, is the initial state, and is the set of accepting states. Moreover, denote by the successors for each state in , i.e., . It is known that any scLTL formula can be translated into the FSA with , in the sense that all good prefix for can be accepted by . We denote by the FSA corresponding to the scLTL formula . The translation from scLTL formulas to the FSA can be automatically done using several off-the-shelf tools, such as SCHECK2 (latvala2003, ).

3. Problem formulation

In this section we describe an uncertain environment, motion and sensor models of the rover and the copter, and the main problem that we seek to solve in this paper.

3.1. Uncertain environment

We capture an environment as a two-dimensional map consisting of cells. For example, this map is obtained by discretizing a given bounded search area into uniform grids with cells. Let , be the position or the centroid of the cell , and let . Moreover, we denote by the set of atomic propositions, which represents a set of labels or properties that can be satisfied in the states. In addition, we denote by the labeling function, which represents a mapping from each state onto the corresponding set of atomic propositions that are satisfied in . For example, if with , it intuitively means that there is an obstacle in the state . In this paper, it is assumed that the labeling function is unknown due to the uncertainty of the environment, i.e., we do not have a complete knowledge about the properties of states in the environment. Thus, instead of , we make use of the belief or the posterior probability (given the past observations) as follows:

| (2) |

for all and , where denotes that is satisfied in (i.e., ). For example, with intuitively means that, it is for sure that there exists an obstacle in . In addition, intuitively means that, it is completely unknown whether there exists an obstacle in . The belief that is not satisfied in is denoted as , and, from (2), it is computed as . In what follows, the beliefs in (2) are called the environmental beliefs of the atomic propositions. As we will see later, the environmental beliefs of the atomic propositions are updated based on the observations provided by sensors equipped with the copter and the rover.

3.2. Rover and copter model

3.2.1. Motion model

The motion of the rover is modeled by an MDP , where is the set of states (or the environment), is the initial state of the rover, is the finite set of inputs, and is the transition probability function. Similarly, the motion of the copter is modeled by an MDP , where is the set of states, is the initial state of the copter, is the finite set of inputs, and is the transition probability function.

3.2.2. Sensor model

The rover is equipped with sensors that can provide observations on several atomic propositions in . Specifically, let be a set of atomic propositions or the properties that can be observed by the rover’s sensors. For example, if , the rover is equipped with a sensor that can detect a target object. To describe the erroneous observations, we use a Bernoulli-type sensor model (see, e.g. (bertuccelli2005, ; wang2009, ; hussein2007, ; imai2013, )) as follows. Suppose that the rover’s position is , and we would like the rover to know whether an atomic proposition is satisfied at the position . Due to the fact that the rover can provide sensor measurements only in a limited range, it is assumed that , where is a given sensor range for . The corresponding observation is described by the binary variable, which is denoted by . For example, if with , the rover at the position detects an obstacle at by the corresponding sensor. The conditional probabilities that the sensor provides the correct or the false measurement are characterized as follows:

where are given parameters that characterize the precision of the sensor. For example, under the fact that is satisfied in (i.e., ), the probability of making the correct measurement (i.e., ) is . On the other hand, the probability of making the false measurement (i.e., ) is . For simplicity, it is assumed that the probabilities of making the correct measurements are the same, i.e., , and . Regarding , we assume that it is characterized by the fourth-order polynomial function of as follows (wang2009, ; hussein2007, ):

| (3) | |||||

| (4) |

for given . (3) and (4) imply that the reliability of the sensor decreases (and eventually becomes ) as the distance between and becomes larger.

Similarly, let be a set of atomic propositions or the properties that can be observed by the copter’s sensors. For simplicity, it is assumed that . Moreover, we denote by for each a given sensor range for . Suppose that the copter’s position is , and we would like the copter to know whether an atomic proposition is satisfied at the position with . The corresponding observation is denoted by . Moreover, denote by a given parameter that characterizes the precision of the sensor for given as with (3) and (4).

Despite simplicity, the Bernoulli sensor model is commonly used in the UAV community, due to the following reasons; (i) it is able to capture the erroneous observations; (ii) it is able to capture the limited sensor range; (iii) in contrast to the other sophisticated sensor models such as those that involve the probability density functions, the Bayesian update can be simply computed without any integrals or approximations. In particular, the third feature is well-suited for the copter’s exploration, as the computational power of the CPU and the battery capacity are often limited, and it is desirable to make the belief update as computationally light as possible.

3.3. Mission specification and problem formulation

The mission specification that the rover should satisfy is expressed by an scLTL, denoted by , over the set of atomic propositions . The satisfaction relation of the scLTL formula is given over the word generated by the trajectory of the rover. That is, given a policy sequence , we say that the trajectory satisfies , which we denote by , iff the corresponding word satisfies , i.e., . Since the rover aims at achieving the satisfaction of , we would like to derive an optimal policy, such that the probability of satisfying , i.e., , is maximized. However, since the labeling function is unknown, the values of are also unknown (i.e., we do not have direct access to this probability value). Hence, we will instead compute and maximize the belief that the trajectory of the rover satisfies , which we denote by

| (5) |

That is, indicates the posterior that the trajectory of the rover satisfies given the past observations (sensor measurements) provided by the rover and the copter. As we will see later, (5) is computed and maximized based on the environmental beliefs of the atomic propositions in (2). Since the environmental beliefs of the atomic propositions will be updated based on the sensor measurements, the optimal policy that maximizes (5) will be also updated accordingly. Moreover, since we would like to reduce the environmental uncertainties as much as possible (i.e., we would like to make converge to or for all ), it is also necessary to explore the state space so as to collect the sensor measurements and update the environmental beliefs of the atomic propositions. In this paper, the copter has the main role to explore the uncertain environment, since, as previously mentioned in Section 1, it is able to move more quickly and freely than the rover. Therefore, we need to synthesize not only an optimal policy for the rover such that (5) is maximized so as to increase the possibility to satisfy , but also an exploration policy for the copter so as to update the environmental beliefs of the atomic propositions and effectively reduce the environmental uncertainties.

Problem 1.

Consider the MDP motion models of the rover and of the copter , the Bernoulli sensor models as described in Section 3.2.2, and mission specification expressed by the scLTL formula . Then, synthesize for the copter-rover team a policy to increase the possibility to achieve the satisfaction of . Specifically, synthesize an optimal policy for the rover such that (5) is maximized, and an exploration policy for the copter so as to update the environmental beliefs of the atomic propositions in (2).

4. Approach

In this section we provide a solution approach to Problem 1. In Section 4.1, we provide the overview of the approach. Then, we provide the algorithms of the exploration phase and the mission execution phase in Section 4.2 and Section 4.3, respectively.

4.1. Overview of the approach

Following (nilsson2018, ), we consider a sequential approach to solve Problem 1. The overview of the approach is shown in Algorithm 1.

-

(1)

Exploration phase (see Section 4.2): The copter explores the state space for a given time period and update the environmental beliefs of the atomic propositions :

(6) Set and the copter transmits to the rover;

-

(2)

Mission execution phase (see Section 4.3): The rover computes the optimal policy such that (5) is maximized and executes it for a given time period . Moreover, update the environmental beliefs of the atomic propositions as well as the mapping , i.e.,

(7) Set and the rover transmits and to the copter;

With a slight abuse of notation, we denote by in the algorithm the set of all environmental beliefs of the atomic propositions, i.e., . Specifically, the approach mainly consists of the two phases: the exploration phase and the mission execution phase. During the exploration phase, the copter explores the state-space so as to update for a given time period . In (6) (as well as (7)), will denote a mapping from each state onto the corresponding maximum belief that the rover will reach within the time period according to the current optimal policy; for the detailed definition, see Section 4.3.3. As we will see later, the exploration policy is given by making use of the mapping and the entropy that will be derived from the current environmental beliefs of the atomic propositions . Once the exploration is done, the copter transmits the updated environmental beliefs of the atomic propositions to the rover and moves on to the mission execution phase. During the mission execution phase, the rover computes the optimal policy such that (5) is maximized, and executes the policy for a given time period . Moreover, during the execution, the rover provides sensor measurements to update . Once the execution is done, the rover transmits the updated and to the copter and moves back to the exploration phase. The sequential approach as above is motivated by the fact that, before allowing the rover to execute the optimal policy, we can let the copter in advance explore regions around the rover’s (future) path. For example, the copter checks if no obstacles are present along with the path that the rover intends to follow in the future. Then, if the copter finds some obstacles in the path, the rover can re-design the path for avoiding the obstacles and try to find another way to complete the mission. Such scheme is somewhat too careful, but may be necessary to be done especially for safety critical systems, such as the exploration on Mars.

As detailed below, the algorithms for both the exploration and the mission execution phases are significantly different from (nilsson2018, ) in the following three aspects. First, the environmental beliefs of the atomic propositions are updated based on the Bayes rule using the past sensor measurements. This allows us to provide novel copter’s explorations, in which the copter actively explores the environment by evaluating both the level of uncertainty and the relevancy to the mission completion (see Section 4.2). Second, we propose a novel framework to synthesize the optimal policy for the rover. In particular, we solve a value iteration over a product MDP, whose state-space does not involve the set of the states for the environmental beliefs. This leads to the reduction of the time complexity of solving the value iteration algorithm (for details, see Section 4.3). Finally, we provide a theoretical, convergence analysis of the proposed algorithm, where it is shown that the environmental beliefs of the atomic propositions converge to the appropriate values (for details, see Section 5).

4.2. Exploration phase

In this subsection, we propose an algorithm of how the copter explores the environment so as to effectively update the environmental beliefs of the atomic propositions.

4.2.1. Bayesian belief update

Using the sensor model described in Section 3.2.2, the copter updates the environmental beliefs of the atomic propositions based on the Bayes filter (wang2009, ). Suppose that the copter is in the position and, for some and with , it gives the corresponding observation as . Then, using this observation, the belief that is satisfied in , i.e., is updated by applying the Bayes rule as follows:

| (8) |

where , and is computed as

As will be clearer in the overall exploration algorithm given below, if the copter is placed at , it obtains the sensor measurements for all its neighbors, i.e., with and for all atomic propositions , and update the corresponding environmental beliefs of the atomic propositions according to (8).

4.2.2. Acquisition function for exploration

Let us now define an acquisition function to be evaluated for synthesizing the exploration strategy for the copter. First, we define the notion of an entropy as follows (cover2006, ):

| (9) |

for , where is to the base . In essence, for represents the level of uncertainty about whether is satisfied in , and takes the largest value if and the lowest value if or . Hence, by actively exploring the state space where the entropy is large and updating the corresponding beliefs according to (8), it is expected that the environmental uncertainties can be effectively reduced.

However, if the copter would explore the environment only by evaluating the above entropy, it might happen to explore the states that are completely irrelevant to the rover’s mission completion. In other words, since the copter knows the path that the rover intends to follow according to the current optimal policy, it is preferable that the copter should investigate areas around such path (before the rover executes it) so as to update the environmental beliefs of the atomic propositions. For example, the copter checks if no obstacles are present along with the path that the rover intends to follow in the environment, so that the rover will be able to complete the mission while avoiding any obstacles. In order to incorporate the rover’s path for exploration, recall that the current position of the rover is and we have the mapping (see Algorithm 1). As previously described in Section 4.1, for each indicates the belief that the rover will reach from within the time period according to the rover’s current optimal policy (for the detailed definition and the calculation on , see the mission execution phase in Section 4.3.3). Hence, for a large value of (), we have a high belief that the rover will reach within the time period . Combining the entropy (9) and , let us define the acquisition function as follows:

| (10) |

for all , where is the weight associated to .

4.2.3. Exploration algorithm

We now propose an exploration algorithm. In the following, we provide two different exploration strategies so as to take both the efficiency of computation and the coverage of exploration into account.

(Local selection-based policy): The first exploration strategy is the local selection-based policy, in which the copter executes a one step greedy exploration:

| (11) |

for all . (11) implies that the copter greedy selects a control input such that the corresponding next state provides the highest acquisition. The overall exploration algorithm based on the local selection-based policy is summarized in Algorithm 2. As shown in the algorithm, for each step , the copter computes the acquisition function and a control input according to Section 4.2.2 (line 6–line 2). Once is obtained, the copter applies it and samples the next state . Given the new current position, the copter makes the new sensor measurements for all its neighbors and the atomic propositions , and update the corresponding environmental beliefs of the atomic propositions (line 3–line 2). The above procedure is iterated for the copter’s time period . Note that, in order to enhance the exploration, the acquisition function as well as the policy computed in (11) are updated for each time when the copter obtains new observations.

The local selection-based policy is computationally efficient, since the optimal control input (line 2 in Algorithm 2) can be obtained by evaluating the acquisitions only for the next states. A disadvantage of this approach, however, is that it might not guarantee an effective exploration for the whole state space , since the copter evaluates the acquisitions only locally. Another exploration strategy would be therefore to select the optimal state to be visited by evaluating the acquisitions for all states in (instead of only locally for the next states), and then collect the sensor measurements to update the corresponding environmental beliefs. This leads us to a global selection-based approach, and the details are given below.

(Global selection-based policy): In the global selection-based approach, the copter first selects the optimal state that provides the highest acquisition in the whole state-space , i.e.,

| (12) |

Then, the copter computes the optimal policy such that the probability of reaching is maximized, i.e.,

| (13) |

where denotes the state trajectory of the copter by applying the policy , and indicates the property that the state trajectory reaches in finite time (which corresponds to the ”eventually” operator), i.e., iff there exists such that . (13) can be indeed solve via value iteration algorithm; for details, see Section 4.3.2. Then, the copter moves to from the current state by applying so as to collect the corresponding sensor measurements and update the environmental beliefs of the atomic propositions. Once is reached, the copter re-computes the new based on the updated environmental beliefs, and iterate the same procedure as above for the time period . The overall exploration algorithm based on the global selection-based policy is summarized in Algorithm 3. As shown in the algorithm, the copter first finds the optimal state that provides the highest acquisition among the whole state space , and compute the optimal policy to reach (line 3–line 3). The copter applies the optimal policy until it reaches and collects the corresponding sensor measurements (line 3–line 3). Note that the copter collects not only the sensor measurements for , but also the ones for the states that are traversed while reaching to , aiming to enhance the efficiency of exploration. The above procedure is iterated for the time period . The variable in Algorithm 2 counts the number of times when the copter successfully reaches .

The global selection-based approach should require a heavier computation than the local selection-based approach, since it needs to find the optimal state by evaluating the acquisitions for the whole state-space , as well as to compute the optimal policy to reach via a value iteration. However, the advantage of employing this approach is that we can guarantee the coverage of exploration, i.e., by repeating Algorithm 2, the environmental belief of the atomic propositions converge to the appropriate values; for details, see Section 5.

4.3. Mission execution phase

In this subsection, we propose a detailed algorithm of the rover’s mission execution phase.

4.3.1. Product belief MDP

Given the environmental beliefs of the atomic propositions in (2), let for , be the joint belief that all atomic propositions in are satisfied in , i.e., . Moreover, let be the joint belief that all atomic propositions in are satisfied in and all atomic propositions in other than (i.e., ) are not satisfied in , i.e.,

| (14) |

For simplicity of presentation, let . Moreover, let be an FSA corresponding to , and, for each , denote by a subset of input alphabets, for which the transition from to is allowed: . In addition, given and , we let

| (15) |

That is, represents the belief that makes the transition to from the atomic propositions that are satisfied in . Note that we have , since the collection of all events (the set of atomic propositions) corresponding to all outgoing transitions from are all possible events that can occur, i.e., . For this clarification, see an example below.

(Example): Consider the environment with two states and let and assume that the environmental beliefs of the atomic propositions are given by

| (16) |

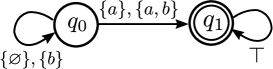

Moreover, the scLTL formula is assumed to be given by . The corresponding FSA that accepts all good prefix for is shown in Fig. 1. For example, since and , we have . Moreover, we have

| (17) |

which implies that, if the position of the rover is , we have a high belief that provides the self-loop, i.e., the belief of reaching the accepting state is low. This is due to the fact that we have a low belief that is satisfied in . Moreover, we have . Note that we have , satisfying the probabilistic nature. This is due to the fact that all outgoing transitions from are only and , and the collection of all the corresponding events (set of atomic propositions) is (), which is indeed all events that can occur. We also have , implying that we have a high belief of reaching the accepting state, which is due to the fact that we have the high belief that is satisfied in .

Based on the above, we define the product belief MDP as a composition of the rover’s motion model and the FSA as follows:

Definition 1.

Let and be the MDP motion model of the rover and the FSA corresponding to , respectively. Moreover, given the environmental beliefs of the atomic propositions (2), let for , be given by (15). Then, the product belief MDP between and is defined as a tuple , where

-

•

is the set of states;

-

•

is the initial state;

-

•

is the set of control inputs;

-

•

is the transition belief function, defined as

(18) -

•

is the set of accepting states, where .

As shown in (18), the transition function is called a belief function instead of a probability function, which is due to that it is computed based on the environmental beliefs of the atomic propositions (i.e., the posterior given the past observations). As previously mentioned, represents the belief that makes the transition to according to the atomic propositions that are satisfied in . Hence, indicates the joint belief that the pair makes the transition to by applying .

4.3.2. Value iteration

Given , we denote by a policy for , which associates a control input from for each state in . Then, let be the corresponding stationary policy sequence. We denote by with , , the trajectory of the product belief MDP , such that (i.e., , ) and for all . Given with , , we can induce the corresponding trajectory of as . If the trajectory of reaches the accepting state in in finite time, it means that the corresponding trajectory of reaches the accepting state in in finite time (i.e., it satisfies ). Hence, the problem of maximizing the belief for the satisfaction of defined by (5), can be reduced to the problem of maximizing the belief that the trajectory of reaches in finite time, i.e.,

| (19) |

The problem (19) can be indeed solved via a value iteration as follows (see, e.g., (abate2008, ; nilsson2018, )). Let be given by , if and otherwise. Then, set , and for all , ,

| (20) | |||

| (21) |

The above computations are given until they reach some fixed point, i.e., for some . Alternatively, one may iterate the above only for a given finite time steps with , i.e., iterate (20) and (21) for all and set . This in turn implies to obtain the optimal policy that maximizes the belief that the trajectory of reaches within the time interval . Hence, it implies that we maximize the belief that the length of the good prefix of the word satisfying is less than . Given the optimal policy computed as above, we can induce the policy sequence for the rover based on the trajectory of , i.e.,

| (22) |

where , .

Remark 1.

As shown in Definition 1, the product MDP involves only and , and does not involve the states of the environmental beliefs as formulated in (nilsson2018, ). In particular, since the environmental beliefs of the atomic propositions are assigned for every state in in our problem setup, the time complexity of solving the value iteration algorithm is in (nilsson2018, ) with being the set of states of the environment belief, while in our approach. This implies that the time complexity of the value iteration algorithm in the previous work is exponential with respect to , while it is polynomial in our approach. Therefore, our approach could alleviate the running time and the memory usage for synthesizing the optimal policies for the rover in contrast to the previous work.

4.3.3. Computing the reachability belief and

Suppose that the current rover’s position and the optimal policy is computed as above. Then, given and , we can compute a belief that the rover will reach from after time steps according to the optimal policy . To this end, we denote the collection of all states of by , where is the number of the states of . Given , we denote by the set of indices, for which the corresponding states of include , i.e., . If the policy is employed, the belief MDP can be viewed as a belief Markov chain induced by , which is denoted by , where is the set of states, is the initial state, and is the transition belief function defined by , .

Now, let for all be recursively given by , where is the transition matrix for the belief Markov chain , and if is the initial state (i.e., ) and if is not the initial state. That is, represents a belief that the state is reached after time steps from the initial state according to the optimal policy . Based on the above, for each , we can compute a belief that is reached after time steps, denoted by , as . Then, let be given by , i.e., indicates the maximum belief that the rover will reach (starting from ) within the time steps . That is, for a large value of (), we have a high belief that the rover will reach at some point in the time interval .

As previously described in Section 4.2.2, the mapping is utilized for the copter’s exploration, so as to effectively search cells that are relevant to the mission execution.

4.3.4. Overall mission execution algorithm

We now summarize the main algorithm of the mission execution phase in Algorithm 4. As shown in the algorithm, the rover computes the optimal policy by solving the value iteration and apply it for the time period . Moreover, while applying this policy, it takes the sensor measurements and updates the environmental beliefs of the atomic propositions (2) (line 4–line 4). Afterwards, the rover computes the mapping according to the procedure described in Section 4.3.3.

Finally, the algorithm returns the current rover’s position , the mapping and the updated belief for the atomic propositions in (2).

5. Convergence analysis

In this section, we analyze convergence property of the proposed algorithm presented in the previous section. In particular, we show that, by executing Algorithm 1 with the exploration phase given by the global selection-based approach (Algorithm 3), the environmental beliefs of the atomic propositions converge to the appropriate values, i.e., for all and , if , and if . Suppose that Algorithm 1 is implemented with the exploration phase given by Algorithm 3. To simplify the analysis, we make the following assumptions:

Assumption 1.

For every execution of Algorithm 3, it follows that .

Assumption 2.

For the sensor model of the copter and the rover, we assume that:

-

(i)

.

-

(ii)

for all .

-

(iii)

for all .

Assumption 1 excludes the case where Algorithm 3 is terminated without reaching any selected states to be explored. Moreover, the first assumption in Assumption 2 means that both the copter and the rover are equipped with all sensors for . The second assumption in Assumption 2 means from (3) and (4) that the copter and the rover can only take the sensor measurements only on their current states. The third assumption in Assumption 2 means that the precision of the sensor is the same for both the rover and the copter.

For simplicity, we let for all . Note that (for this clarification, see (3) and (4)). In addition, let denote the total number of times the copter/rover visits the state and takes the corresponding sensor measurements for each , and let (, ) denote the number of times the corresponding sensor measurements for are . In other words, represents the number of times the corresponding observations for are . Finally, we make the following assumption:

Assumption 3.

There exist with and , such that for all , and , we have if and if .

Assumption 3 implies that the ratio between the number of sensor measurements and the number of making the correct sensor measurements (i.e., if and if ) is -close to if the number of the visits (the sensor measurements) at is sufficiently large. The following theorem shows that, by executing Algorithm 1 with the exploration phase given by Algorithm 3, all the environmental beliefs of the atomic propositions converge to the appropriate values.

Theorem 1.

Recall that is defined in Algorithm 1 and represents the (global) time step during execution of Algorithm 1. Hence, Theorem 1 means that the environmental beliefs of the atomic propositions converge to the appropriate values as the number of the iterations for the exploration/mission execution phase goes to infinity. For the proof of Theorem 1, see Appendix A.

Remark 2.

As shown in Theorem 1, the convergence properties (23), (24) may not hold if in (10). Nevertheless, as previously stated in Section 4.2.2, setting is useful and important for practical applications, since it can avoid the exploration of states that are completely irrelevant to the mission execution. Additionally, (23), (24) may not hold if the exploration phase is given by the local selection-based policy Algorithm 2. Nevertheless, as previously stated in Section 4.2, utilizing Algorithm 2 is useful for practical applications where the computation capacity of the copter is severely limited, since the optimal control input can be obtained by evaluating the acquisitions only for the next states.

Now, let denote the optimal policy sequence that maximizes the probability of satisfying under the assumption that the labeling function is known, i.e., . If is known, can be derived by constructing a product MDP with the knowledge about and solving the value iteration algorithm (for details, see the proof of Corollary 1 in Appendix B). Note that given by (22) is not necessarily equal to , since is derived by maximizing the belief of satisfying based on the sensor measurements (i.e., ). The following Corollary is derived from Theorem 1, showing that converges as .

Corollary 1.

For the proof, see Appendix B.

6. Simulation results

In this section, we illustrate the effectiveness of the proposed algorithm through numerical simulations. The simulation was conducted on Python 3.7.9 with AMD Ryzen 7 3700U with Radeon Vega Mobile Gfx CPU and 16GB RAM.

6.1. Simulation 1

6.1.1. Problem setup

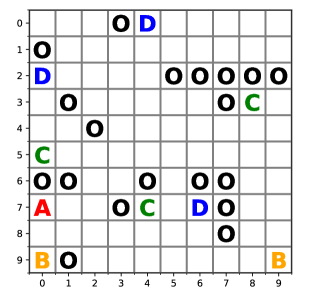

We consider the environmental map consisting of cells as shown in Fig. 2. The set of states of the environment (i.e., the positions or the centroids of the cells) is given by . The set of the atomic propositions is given by , where and represent the atomic propositions of target objects that the rover seeks to discover, and represents the atomic proposition of obstacles that the rover needs to avoid for all times. For both the MDP motion models of the rover and the copter, the set of states is given by . The sets of control inputs for both the rover and the copter, i.e., , , are also the same and consists of components: stay in the same cell, move up, move down, move right, and move left. Each control input drives the rover/copter towards one of the 8 cells adjacent to the current rover/copter’s position. The transition probability for the rover’s MDP is that there is % chance to move from the current cell to the desired cell, and there is the remaining 5% chance to move to one of the cells adjacent to the desired cell (with equal probability). Regarding the copter’s MDP, it is assumed that there is % chance to move from the current cell to the desired cell, and % chance to move to one of the cells adjacent to the desired cell. We assume that the copter is able to move without caring the obstacles (i.e., it can move on all states in ).

The rover is equipped with sensors to detect both the target objects and the obstacles, i.e., , while the copter is equipped with a sensor to detect only the obstacles, i.e., . Moreover, the sensor range is assumed to be given by , which implies from (3), (4), that the rover can provide high reliable sensor measurements only on its current cell and can provide row reliable measurements on its adjacent cells, and , which implies that the copter can detect obstacles within 4 cells far from the current cell. In addition, it is assumed that , which implies from (3), (4) that the rover’s maximum sensor accuracy is 100%. Moreover, , which implies that the copter’s maximum sensor accuracy is 90%. The scLTL specification for the rover is given by

| (25) |

where

| (26) |

with for . Intuitively, means that the rover should eventually discover the target while avoiding the obstacles. Moreover, (resp. ) means that the rover should eventually discover and then (resp. and then ) while avoiding the obstacles. During execution of Algorithm 1, we set , , and in the acquisition function of (10). Moreover, we assume that the mission is (regarded as) complete if the belief of satisfying by the rover’s optimal policy exceeds .

6.1.2. Simulation results

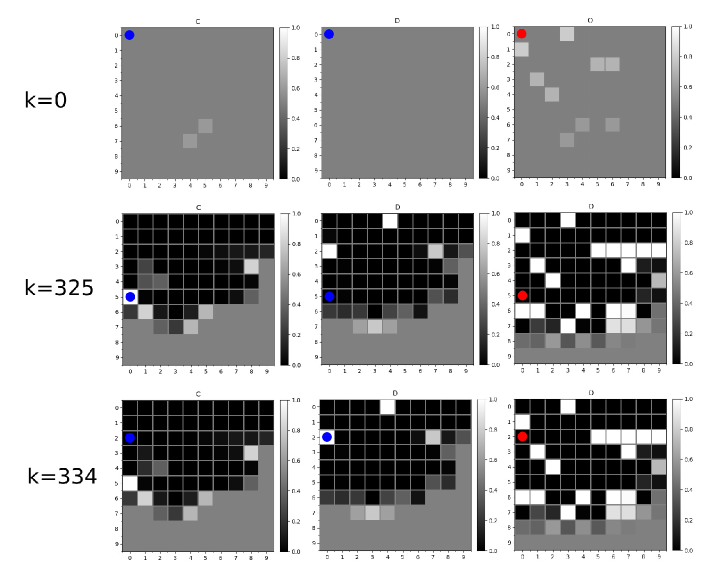

Some snapshots of the simulation result by applying Algorithm 1 are shown in Fig. 3. During execution of Algorithm 1, it is assumed that the copter executes the global selection-based policy (Algorithm 3) until the mission is complete. In the figure, the environmental beliefs of the atomic propositions are illustrated as the color maps, and, for simplicity, only the color maps for are shown. The rover’s position is illustrated as the blue circle (only shown in the figures of ), and the copter’s position is illustrated as the red circle (only shown in the figures of ). It can be shown from the figures that the rover has reached the cell where exists (at ), and then reached the cell where exists (at ), regarding that the mission is complete. The whole behaviors of both the rover and the copter as well as the time elapse of the environmental beliefs of the atomic propositions can be shown in the animation; see (animation, ).

To make comparisons between the local (Algorithm 2) and the global (Algorithm 3) selection-based exploration for the copter, we have iterated the following steps: (i) The initial positions of the rover and the copter are randomly chosen from ; (ii) Using the generated initial positions, execute Algorithm 1 with the local selection-based exploration (Algorithm 2); (iii) Using the generated initial positions, execute Algorithm 1 with the global selection-based exploration (Algorithm 3). The above steps have been iterated for 100 times, and for each exploration policy, we counted the number of times when the mission was successfully complete before . At the same time, we also measured the average running time (in sec) of the local/global exploration policy (i.e., the average execution time of Algorithm 2 and Algorithm 3). Table 1 illustrates the simulation results. The table indicates that the number of completing the mission via the global selection-based exploration is larger than the local one. This may be due to that the global selection-based exploration guarantees the convergence of the environmental beliefs of the atomic propositions, while the local one does not (see Section 4.2.3, Section 5). On the other hand, the table also shows that the global one requires heavier computation than the local one, which is due to that it needs to solve the value iteration algorithm to reach the selected state with the highest acquisition (for details, see (13)).

| Number of completing the mission | Average running time (s) | |

|---|---|---|

| Local (Algorithm 2) | 62 | 3.0 |

| Global (Algorithm 3) | 71 | 31 |

6.2. Simulation 2: comparison with the existing algorithm

In this section, we show that the proposed algorithm is advantageous over the existing algorithm (nilsson2018, ) in terms of the running time and the memory usage of solving the value iteration (see Remark 1 for the detailed explanation). We consider the environment with different size of the state space: . The set of the atomic propositions is given by , where indicates the target object and indicates the obstacle. The mission specification is given by . For each , we randomly generate the initial beliefs of the atomic propositions for all uniformly from the interval and solve the value iteration algorithm to synthesize the optimal policy for the rover according to the proposed approach (see Section 4.3, in particular, (20) and (21)). For the implementation of (nilsson2018, ), we assign the environmental belief for every state in the environment and solve the corresponding value iteration. The copter’s exploration has not been given in this simulation, since we would like to focus on evaluating the running time of synthesizing the optimal policy for the rover. Table 2 shows the resulting running time (in sec) of solving the value iterations, where the symbol ”—” indicates that the optimal policy could not be found due to the overflow of the memory. The table shows that the running time of solving the value iteration with the existing algorithm increases rapidly as increases and, in particular, it becomes infeasible when due to the overflow of the memory 1)1)1)Note that in the numerical simulation in (nilsson2018, ) considers the state space with . This is due to that the atomic propositions are assigned only for some small regions in the state space. Specifically, the numerical simulation in (nilsson2018, ) considers that only or regions in the state-space are of interest to be explored (see Fig. 6 in (nilsson2018, )), so that the reduction of the computational complexity of solving the value iteration is achieved. However, as can be seen in our problem setup, we assume to assign the environmental beliefs for the atomic propositions for every single cell in the state space. Thus, the algorithm in (nilsson2018, ) has becomes infeasible for in our problem setup.. As described in Remark 1, such blowup is due to the fact that the size of the state-space of the product MDP increases exponentially with respect to . Therefore, the proposed approach is shown to be more useful than the existing approach in terms of the running time and the memory usage for synthesizing the optimal policy for the rover.

| 6 | 9 | 12 | 15 | 50 | 100 | |

|---|---|---|---|---|---|---|

| Proposed approach | 0.02 | 0.05 | 0.13 | 0.22 | 3.91 | 20.35 |

| Previous approach in (nilsson2018, ) | 0.02 | 1.07 | 86.02 | — | — | — |

7. Conclusion and future works

In this paper, we investigate a collaborative rover-copter path planning and exploration with temporal logic specifications under uncertain environments. Mainly, the rover has the role to satisfy a mission specification expressed by an scLTL formula, while the copter has the role to assist the rover by exploring the uncertain environment and reduce its uncertainties. The environmental uncertainties are captured by the environmental beliefs of the atomic propositions, which represent the posterior probabilities that evaluate the level of uncertainties based on the sensor measurements. A control policy of the rover is then synthesized by maximizing a belief for the satisfaction of the scLTL formula through the implementation of an automata-based model checking. Then, an exploration policy for the copter is synthesized by evaluating the entropy that represents the level of uncertainties and the rover’s path according to the current optimal policy. Finally, the effectiveness of the proposed approach is validated through several numerical examples. Future works involve investigating safety guarantees (i.e., the rover avoids obstacles for all times during execution of Algorithm 1), as well as utilizing more sophisticated sensor models than the simple Bernoulli-type sensor models considered in this paper. In addition, extending the proposed approach to a more real-time and concurrency-related techniques, such as those that synthesize a supervisor that determines the activity of both the rover and the copter, should be studied for our further investigations.

Acknowledgement

This work was supported by JST ERATO Grant Number JPMJER1603, JST CREST Grant Number JPMJCR2012, Japan and JSPS Grant-in-Aid for Young Scientists Grant Number JP21K14184.

References

- (1) F. L. Lewis, H. Zhang, K. Hengster-Movric, A. Das, Cooperative Control of Multi-Agent Systems: Optimal and Adaptive Design Approaches, Springer, 2014.

- (2) B. Balaram, et al., Mars helicopter technology demonstrator, in: AIAA Atmospheric Flight Mechanics Conference, 2018, pp. 1–18.

- (3) D. Brown, et al., Mars helicopter to fly on nasa’s next red planet rover mission, in: NASA/JPL News Release, 2018.

- (4) P. Nilsson, S. Haesaert, R. Thakker, K. Otsu, C. Vasile, A. Agha-Mohammadi, R. Murray, A. D. Ames, Toward specification-guided active mars exploration for cooperative robot teams, in: Proceedings of Robotics: Science and Systems (RSS), 2018.

- (5) S. Bharadwaj, M. Ahmadi, T. Tanaka, U. Topcu, Transfer entropy in mdps with temporal logic specifications, in: 2018 IEEE Conference on Decision and Control (CDC), 2018, pp. 4173–4180.

- (6) T. Sasaki, K. Otsu, R. Thakker, S. Haesaert, A. Agha-mohammadi, Where to map? iterative rover-copter path planning for mars exploration, IEEE Robotics and Automation Letters 5 (2) (2020) 2123–2130.

- (7) H. Kress-Gazit, M. Lahijanian, V. Raman, Synthesis for Robots: Guarantees and Feedback for Robot Behavior, Annual Review of Control, Robotics, and Autonomous Systems 1 (2018) 211–236.

- (8) C. Belta, A. Bicchi, M. Egerstedt, E. Frazzoli, E. Klavins, G. J. Pappas, Symbolic planning and control of robot motion [Grand Challenges of Robotics], IEEE Robotics and Automation Magazine 14 (1) (2007) 61–70.

- (9) C. Belta, B. Yordanov, E. A. Gol, Formal methods for discrete-time dynamical systems, Vol. 89, Springer, 2017.

- (10) C. Baier, J.-P. Katoen, Principles of model checking, The MIT Press, 2008.

- (11) L. F. Bertuccelli, J. P. How, Robust uav search for environments with imprecise probability maps, in: Proceedings of the 44th IEEE Conference on Decision and Control, 2005, pp. 5680–5685.

- (12) Y. Wang, I. I. Hussein, Bayesian-based decision making for object search and characterization, in: 2009 American Control Conference, 2009, pp. 1964–1969.

- (13) I. I. Hussein, D. M. Stipanovic, Effective coverage control for mobile sensor networks with guaranteed collision avoidance, IEEE Transactions on Control Systems Technology 15 (4) (2007) 642–657.

- (14) K. Imai, T. Ushio, Effective combination of search policy based on probability and entropy for heterogeneous mobile sensors, in: 2013 IEEE International Conference on Systems, Man, and Cybernetics, 2013, pp. 1981–1986.

- (15) A. I. M. Ayala, S. B. Andersson, C. Belta, Temporal logic motion planning in unknown environments, in: 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2013, pp. 5279–5284.

- (16) J. Fu, N. Atanasov, U. Topcu, G. J. Pappas, Optimal temporal logic planning in probabilistic semantic maps, in: 2016 IEEE International Conference on Robotics and Automation (ICRA), 2016, pp. 3690–3697.

- (17) M. Maly, M. Lahijanian, L. E. Kavraki, H. Kress-Gazit, M. Y. Vardi, Iterative temporal motion planning for hybrid systems in partially unknown environments, in: Proceedings of the 16th International Conference on Hybrid Systems: Computation and Control, 2013, p. 353–362.

- (18) M. Guo, K. H. Johansson, D. V. Dimarogonas, Revising motion planning under linear temporal logic specifications in partially known workspaces, in: IEEE International Conference on Robotics and Automation (ICRA), 2013.

- (19) M. Guo, D. V. Dimarogonas, Multi-agent plan reconfiguration under local ltl specifications, The International Journal of Robotics Research 34 (2) (2015) 218–235.

- (20) M. Guo, M. M.Zavlanos, Probabilistic motion planning under temporal tasks and soft constraints, IEEE Transactions on Automatic Control 63 (12) (2018) 4051–4066.

- (21) S. C. Livingston, R. M. Murray, J. W. Burdick, Backtracking temporal logic synthesis for uncertain environments, in: 2012 IEEE International Conference on Robotics and Automation, 2012, pp. 5163–5170.

- (22) T. Wongpiromsarn, E. Frazzoli, Control of probabilistic systems under dynamic, partially known environments with temporal logic specifications, in: 2012 IEEE 51st IEEE Conference on Decision and Control (CDC), 2012, pp. 7644–7651.

- (23) M. Lahijanian, J. Wasniewski, S. B. Andersson, C. Belta, Motion planning and control from temporal logic specifications with probabilistic satisfaction guarantees, in: 2010 IEEE International Conference on Robotics and Automation (ICRA), 2010, pp. 3227–3232.

- (24) C. Yoo, C. Belta, Control with probabilistic signal temporal logic, in: Preprint: available on https://arxiv.org/pdf/1510.08474.pdf, 2015.

- (25) D. Sadigh, A. Kapoor, Safe control under uncertainty with probabilistic signal temporal logic, in: Proceedings of Robotics: Science and Systems, 2016.

- (26) C. Vasile, K. Leahy, E. Cristofalo, A. Jones, M. Schwager, C. Belta, Control in belief space with temporal logic specifications, in: 2016 IEEE 55th Conference on Decision and Control (CDC), 2016, pp. 7419–7424.

- (27) K. Leahy, E. Cristofalo, C. I. Vasile, A. Jones, E. Montijano, M. Schwager, C. Belta, Control in belief space with temporal logic specifications using vision-based localization, The International Journal of Robotics Research 38 (6) (2019) 702–722.

- (28) E. M. Wolff, U. Topcu, R. M. Murray, Robust control of uncertain markov decision processes with temporal logic specifications, in: 2012 IEEE 51st IEEE Conference on Decision and Control (CDC), 2012, pp. 3372–3379.

- (29) A. Ulusoy, T. Wongpiromsarn, C. Belta, Incremental controller synthesis in probabilistic environments with temporal logic constraints, The International Journal of Robotics Research 33 (8) (2014) 1130–1144.

- (30) K. Hashimoto, A. Saoud, M. Kishida, T. Ushio, D. V. Dimarogonas, Learning-based symbolic abstractions for nonlinear control systems, in arxiv, available on https://arxiv.org/abs/2004.01879 (2020).

- (31) D. Sadigh, E. S. Kim, S. Coogan, S. S. Sastry, S. A. Seshia, A learning based approach to control synthesis of markov decision processes for linear temporal logic specifications, in: 53rd IEEE Conference on Decision and Control, 2014, pp. 1091–1096.

- (32) J. Wang, X. Ding, M. Lahijanian, I. Paschalidis, C. Belta, Temporal logic motion control using actor-critic methods, The International Journal of Robotics Research 34 (10) (2015) 1329–1344.

- (33) X. Li, Y. Ma, C. Belta, A policy search method for temporal logic specified reinforcement learning tasks, in: 2018 Annual American Control Conference (ACC), 2018, pp. 240–245.

- (34) M. Hasanbeig, Y. Kantaros, A. Abate, D. Kroening, G. J. Pappas, I. Lee, Reinforcement learning for temporal logic control synthesis with probabilistic satisfaction guarantees, in: 2019 IEEE 58th Conference on Decision and Control (CDC), 2019, pp. 5338–5343.

- (35) B. Johnson, H. Kress-Gazit, Analyzing and revising high-level robot behaviors under actuator error, in: 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2013, pp. 741–748.

- (36) B. Johnson, H. Kress-Gazit, Analyzing and revising synthesized controllers for robots with sensing and actuation errors, The International Journal of Robotics Research 34 (6) (2015) 816–832.

- (37) P. Nuzzo, J. Li, A. L. Sangiovanni-Vincentelli, Y. Xi, D. Li, Stochastic assume-guarantee contracts for cyber-physical system design, ACM Transactions on Embedded Computing Systems 18 (1) (Jan. 2019).

- (38) M. Tiger, F. Heintz, Incremental reasoning in probabilistic signal temporal logic, International Journal of Approximate Reasoning 119 (2020) 325 – 352.

- (39) T. Latvala, Efficient model checking of safety properties, in: 10th International SPIN workshop, 2003, pp. 74–88.

- (40) T. M. Cover, J. A. Thomas, Elements of Information Theory, Wiley Series, 2006.

- (41) A. Abate, M. Prandini, J. Lygeros, S. Sastry, Probabilistic reachability and safety for controlled discrete time stochastic hybrid systems, Automatica 44 (11) (2008) 2724–2734.

- (42) [link to the animation]

Appendix A Proof of Theorem 1

Let us first rewrite the Bayesian update (8) by

| (27) |

for , where we let (resp. ) if the rover (resp. copter) provides the sensor measurement for at . That is, , represents the environmental belief of at computed after times visit (by either the rover or the copter) of . If at the -th visit of , the Bayesian update is given by

| (28) |

After some simple calculations, we then obtain , where we let . On the other hand, if at the -th visit, it follows that . Therefore, we obtain

| (29) | |||||

| (30) |

Suppose that the rover/copter visits the total times and that . From (29), (30), we have

Note that , since the initial belief of the atomic proposition is selected as (see line 2 in Algorithm 1). From Assumption 3, it follows that for all and . Thus, we obtain for all and . Noting that (see Assumption 3), and , we obtain as , which implies that as . Similarly, we obtain , i.e., if as . Hence, for all and , we have

| (31) | |||||

| (32) |

In other words, (23), (24) are satisfied for all and , if for all , the number of visits at goes to infinity as , i.e., as . In what follows, it is shown that as for all . With a slight abuse of notation, let and denote, respectively, the number of total times the rover/copter visits within the time step and the number of times the corresponding observations for are . To show by contradiction, let denote the set of all states at which the number of visits does not go to the infinity as , and assume that is non-empty. In other words, there exists a time step such that all are no more visited after , i.e., for all . Hence, we have for all , and, therefore, for all , which implies that the entropy remains constant and does not converge to . On the other hand, it follows that, for all , as . Hence, the environmental beliefs of the atomic proposition converge to the appropriate values, i.e., for all and , if and if as . Therefore, the entropy converges to , i.e., for all and , as .

Now, since for all , there exist a time step such that the following holds: for all , and ,

| (33) |

Recalling that we set in (10), the inequality (33) implies that, at a certain time step after , the copter would select some at the beginning of execution of Algorithm 3, which means from Assumption 1 that the copter would have visited after . However, this contradicts the assumption that all are not visited after . Overall, such contradiction follows from the fact that we assume is non-empty. Therefore, it follows that is empty, and thus as for all . In summary, (23) and (24) are satisfied for all and .

Appendix B Proof of Corollary 1

Let denote the product MDP between and , where , , and are the same as the product belief MDP defined in Definition 1, and is the transition probability function, defined by (for all and ) iff and otherwise. If is known, the optimal policy is then obtained by solving the value iteration algorithm over the product MDP . This fact, combined with Theorem 1, implies that if the product belief MDP in Definition 1 converges to the product MDP , i.e., as , then as . To show that as , we need to show that (for all and ). Suppose that (resp. ) for all (resp. ), i.e., all the environmental beliefs of the atomic propositions converge to the appropriate values. From (14), it then follows that iff and otherwise. From (15), we then obtain iff and otherwise. Hence, the transition belief function (18) becomes (for all and ) iff and otherwise, which indeed coincides with . Hence, it follows that (for all and ), and, therefore, as . As described above, this directly follows as .