Combinatorial Regularity for Relatively Perfect Discrete Morse Gradient Vector Fields of ReLU Neural Networks

1 Introduction

Much of the recent progress of machine learning is due to exponential growth in available computational power. This growth has enabled neural network models with trillions of parameters to be trained on extremely large datasets, with exceptional results. In contrast, environmental and economic concerns lead to the question of the minimal network architecture necessary to perform a specific task.

The theoretical characterization of the exact capabilities of fixed neural network architectures is still ongoing. To understand the classification capacity of ReLU neural network functions geometrically, researchers have investigated attributes such as the expected number of linear regions [11] and the average curvature of the decision boundary [1]. To describe the topological capacity of such networks, one measure of interest is the achievable Betti numbers of decision regions and decision boundaries for a fixed neural network architecture [3, 10].

In this vein, we continue the development of algorithmic tools for characterizing the topological behavior of fully-connected, feedforward ReLU neural network functions using piecewise linear (PL) Morse and discrete Morse theory. Previous work has established that most ReLU neural networks are PL Morse, and identified conditions for a point in the input space of a ReLU neural network to be regular or have PL Morse index [8]. However, discrete Morse theory provides graph-theoretic algorithms for speeding up computations and narrowing down which computations even need to be performed [13]. Furthermore, discrete Morse theory has tools for canceling critical cells which allow for the simplification of sets of critical cells.

The usefulness of discrete Morse theory in algorithmic computations and understanding the topology of cellular spaces motivates us to bridge the existing gap between PL Morse functions and discrete Morse functions in the context of ReLU neural networks.

1.1 Contributions and Related Work

We follow a framework for the polyhedral decomposition of the input space of a ReLU neural network by the canonical polyhedral complex which we will denote as , as introduced in [7] and defined in Definition 14. This framework is expanded in [8], introducing a PL Morse characterization, and in [18], introducing the combinatorial characterization of the polyhedral decomposition by sign sequences.

Unfortunately, computing the canonical polyhedral complex and topological properties of its level and sublevel sets is expensive and can only be done for small neural networks, in part because has exponentially many vertices in the input dimension of . It is this drawback that is the main motivation for the construction of a discrete function associated to ; such a function will retain the topological information desired, but should ease issues of high computational complexity.

We build on existing literature by providing a translation from the PL Morse characterization from [8] to a discrete Morse gradient vector field by exploiting both the combinatorics from [18] and a technique for generating relatively perfect discrete gradient vector fields from [6]. The main result of this paper (Theorem 3) provides a canonical way to directly construct a discrete Morse function (defined on the input space) which captures the same topological information as the existing PL Morse function. This paper is intended as an intermediary which should allow further computational tools to be developed.

While not the primary goal of this paper, a secondary contribution which we hope to highlight is the development of additional tools for translating from piecewise linear functions on non-simplicial complexes to discrete Morse gradient vector fields. To our knowledge, all current theory relating PL Morse functions to discrete Morse functions on the same complexes is detailed in [6]. We also provide realizability results for certain shallow networks (Theorem 2) - namely, we are able to characterize the possible homotopy types of the decision boundary for a generic PL Morse ReLU neural network by restricting the number and possible indices of the critical points of .

1.2 Outline

In Section 2, we review the mathematical tools we are using. In Section 3, we review the construction of the canonical polyhedral complex and share some novel realizability results for shallow neural networks. Section 4 contains our main result (Theorem 3). This theorem provides a translation from a PL Morse ReLU neural network to a relatively perfect discrete gradient vector field on . In Section 5 we discuss some of the issues surrounding effective computational implementation, and in Section 6, we provide concluding remarks.

2 Background: Polyhedral Geometry and Morse Theories

A ReLU neural network is a piecewise linear function on a polyhedral complex which we call the canonical polyhedral complex. Before developing constructions on this specific object, we review some general constructions in polyhedral and piecewise linear geometry which we will use repeatedly. Readers familiar with these topics may choose to begin at a later section and refer back to this section for notation if necessary.

Notably, we treat polyhedra as closed intersections of finitely many halfspaces in , and allow unbounded polyhedra. In our setting, a polyhedral complex is a set of polyhedra in which is closed under taking faces, such that every pair of polyhedra shares a common face (which may be the empty face). We denote the underlying set of a polyhedral complex by , and take the interior of a polyhedron to be its interior in the relative topology induced by . A function may be defined by functions with domain and codomain given by the respective underlying sets. Such functions are called piecewise linear on if the function on is continuous and, for each polyhedron , is affine. We refer the reader to [7] and [9] for an additional overview of definitions in polyhedral geometry relevant to this work.

2.1 Some piecewise linear and polyhedral constructions

The terms below are defined specifically for polyhedra and polyhedral complexes. We use definitions from [22] and [6]. These definitions for polyhedral complexes are motivated by similar constructions for simplicial complexes. The additional generalizations to local versions of these terms are necessary for working in the polyhedral setting. We will use the local star and link of a vertex to provide combinatorial regularity which we will exploit in the algorithms we describe in Section 4 and Section 5.

Definition 1 (Cone, Cone Neighborhood, cf. [22, 9]).

Let be a point in and a set in . We define and call a cone if each point in can be written uniquely as a linear combination of and an element of . A cone neighborhood of a point in a polyhedral complex is a closed neighborhood of in given by a cone , for a compact set .

Remark 1.

Every open neighborhood of in contains a cone neighborhood of in .

Next, we create local versions of the following constructions which are well known in simplicial complexes. (1-2) are adapted from [9], (3) is adapted from [6], and (4) is, to our knowledge, new:

Definition 2 (Star, Local Star, Lower Star, Local Lower Star).

1. The star of a point in a polyhedral complex is the set of all polyhedra in which contain , that is, .

2. Let be a compact set contained in such that the cone neighborhood satisfies . The local star of with respect to , denoted , is given by the cells .

3. If is a piecewise linear function, the lower star of p relative to is the set

4. Finally, the local lower star of with respect to and relative to , denoted , is given by the restriction of a lower star of to the cone neighborhood : .

Observe that for a given point and any cone neighborhood of , the local star and local lower star of have a poset structure given by containment and induced by that of the star of ; this poset structure is independent of choice of . This justifies calling our construction the local lower star of relative to .

In the simplicial context, the link can be thought of intuitively as the boundary of the star. We introduce a local version of the link in the polyhedral setting. However, in this polyhedral setting, in contrast to the star, the link and the local link may be combinatorially distinct, even though the star and local star are not.

Definition 3 (Link, Local Link, Local Lower Link).

Let be a point in for some polyhedral complex .

1. The link of is the set of all faces of cells in that do not contain , denoted .

2. If is a compact set such that is a cone neighborhood of , then we call a local link of .

3. If is a local link of contained in , then the local lower link of is the restriction of to the lower star of : , denoted .

2.2 -orientations of PL functions

In Section 4 we will translate from piecewise linear Morse functions on certain PL manifolds to discrete Morse gradient vector fields on a corresponding polyhedral complex. A useful intermediary, and furthermore a useful tool for visualization, is what we call the -orientation on the PL manifold’s 1-skeleton.

Definition 4 ( orientation, [7]).

Let be a polyhedral complex and be the 1-skeleton of . Let be a piecewise linear function on . Then the following orientation on the 1-skeleton of is called the -orientation (read “grad-F orientation”) on .

1. Orient the edges of on which is nonconstant in the direction of increase of .

2. Do not assign an orientation to those edges of on which is constant.

We primarily consider the case where is only constant on vertices. For ReLU neural networks, this is not always the case, but is sufficiently common to treat as a distinguished case.

Lemma 1.

The following are properties of a -orientation on a polyhedral complex on which is only constant on vertices.

1. There are no directed cycles in the directed graph.

2. If is a closed, bounded polytopal cell of , then the orientation restricted to the boundary of the cell has a unique source and a unique sink.

3. If a polytope has a source and a sink induced by the orientation on its edges, then there is a hyperplane sweep in that hits vertex first and vertex last.

Proof.

These are standard results in linear programming. (3) is due to the fact that induces a linear projection such that is minimal and is maximal. The preimage of each point in is a hyperplane intersected with . ∎
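As an illustration of Definition 4 and of property (2) in Lemma 1, the following minimal sketch orients the bounded edges of a single polygonal cell from vertex values and then reads off its source and sink. The function names and the example cell (the unit square with the hypothetical affine function F(x, y) = x + 2y) are assumptions made for illustration; unbounded edges are not handled.

```python
def grad_orientation(vertex_values, edges):
    """Orient each bounded edge in the direction of increase of F.

    F is affine on an edge, so it is constant there exactly when the two
    endpoint values agree; such edges are returned unoriented as "flat".
    """
    oriented, flat = [], []
    for u, v in edges:
        fu, fv = vertex_values[u], vertex_values[v]
        if fu < fv:
            oriented.append((u, v))
        elif fv < fu:
            oriented.append((v, u))
        else:
            flat.append((u, v))
    return oriented, flat


def sources_and_sinks(vertices, oriented):
    """Vertices with no incoming edge (sources) and no outgoing edge (sinks)."""
    has_in = {head for _, head in oriented}
    has_out = {tail for tail, _ in oriented}
    sources = [v for v in vertices if v not in has_in]
    sinks = [v for v in vertices if v not in has_out]
    return sources, sinks


# Hypothetical example: F(x, y) = x + 2y on the unit square with corners
# a = (0, 0), b = (1, 0), c = (1, 1), d = (0, 1).
values = {"a": 0.0, "b": 1.0, "c": 3.0, "d": 2.0}
edges = [("a", "b"), ("b", "c"), ("c", "d"), ("d", "a")]

oriented, flat = grad_orientation(values, edges)
sources, sinks = sources_and_sinks(values, oriented)
```

In this example the boundary has exactly one source and one sink, as Lemma 1 predicts for a bounded cell on which the function is nonconstant on every edge.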

Remark 2.

As evidenced by (3), two combinatorially equivalent polyhedral complexes might have different sets of admissible PL gradients on their edges depending on the location of their vertices. The question of how to classify all geometrically realizable polyhedral complexes and corresponding -orientations by a ReLU neural network with a fixed architecture is open.

Lemma 2 (Cf. [8] Lemma 6.1).

If and are two vertices connected by a flat edge , and and are two edges incident to and respectively which bound the same cell , then the gradients on and have the same relative orientation; they must both be pointing away from and respectively, or both be pointing towards.

The above lemma implies the following corollary immediately.

Corollary 1.

If is a dimensional face of a -dimensional cell , and is a continuous affine linear function on and such that is constant, then or , with equality occurring only on .

We also find the following no-zigzags lemma useful in understanding realizable orientations.

Lemma 3 (No-zigzags lemma).

Let be a cell of a polyhedral complex and an affine function on . Let and be unbounded edges of with vertices and . Let be an edge of connecting and . If the orientation on is towards and the orientation on is away from , then the orientation on is from to .

Proof.

First, the existence of a orientation on and implies that is nonconstant on . As is affine, the level sets of in are lines.

Next, is not constant on , because if it were, then by Lemma 2 both and would be oriented in the same direction relative to and .

For the sake of contradiction, if the edge were oriented from to , then consider a level set of that includes at a point on the interior of . The -orientations on and imply that the level set containing also intersects the interiors of and in points and . The points and , on three different faces of , cannot be contained in a line because is a polyhedron, giving a contradiction. ∎

2.3 Piecewise linear Morse critical points

Smooth Morse theory describes the classical relationship between the homotopy type of sublevel sets of a smooth function and what is called the index of its critical points, the number of negative eigenvalues of the Hessian [20]. In non-smooth contexts, alternative tools are needed. One such tool is piecewise linear (PL) Morse theory. No single such theory is generally accepted; we adapt the following definition from [9] due to its ease of use in this context:

Definition 5 (Piecewise Linear Morse Critical Point, Regular Point, Index).

Let be a combinatorial -manifold and let be piecewise affine on cells. Let . Let be the standard cross-polytope in centered at the origin and define by

If there are combinatorially equivalent link complexes for and contained in the stars of and such that and have the same signs at corresponding vertices, then is a PL critical point of with index .

Letting , we call a PL regular point of if, likewise, there is a combinatorially equivalent link complex for and contained in the stars of and such that and have the same signs at corresponding vertices.

If satisfies neither condition at , then is called a degenerate critical point of .

Here, the standard cross-polytope in is the convex hull of the points . One natural simplicial decomposition of consists of those simplices given by the convex hull of the origin, , together with one vertex which is nonzero in the th coordinate direction. This simplicial decomposition is compatible with the piecewise linear structure of for all .

We say that is PL Morse if all vertices are regular or critical with index for some . As in smooth Morse theory, the sublevel set topology of a PL function only changes at PL critical points, and if is PL Morse, the change in homotopy type at a PL critical point of index is consistent with attaching a -cell. Furthermore, if and is the only PL critical point satisfying that condition, the rank of the relative homology is and, for , have rank zero [8].

In most cases, PL critical points only occur at vertices of the polyhedral complex, but this relies on the function being nonconstant on positive-dimensional cells. In [8] and [18] it is shown that all ReLU neural networks which are nonconstant on positive-dimensional cells are PL Morse.

ReLU neural networks often have cells of their Canonical Polyhedral Complex (see Definition 14) on which they are constant (which we call flat cells, in line with [8]), and as a result not all ReLU neural networks are PL Morse. While it is a goal of the authors to extend our construction of a discrete Morse function for networks with flat cells (see Section 6 for a brief discussion on this topic), such networks are not in the scope of the current paper. Therefore, we restrict this paper to ReLU neural networks whose only flat cells are vertices.

2.4 Discrete Morse vector fields

The strength of discrete Morse theory is that it provides algorithmic tools for computing complete sublevel set topology [5].

Such tools, to our knowledge, have not been developed in generality for PL Morse theory. While the majority of discrete Morse theory has been developed in the context of simplicial complexes and CW complexes, the definitions are applicable in the context of polyhedral complexes with few changes, which we discuss at the end of this section. The main difference between polyhedral complexes and cellular complexes is the presence of unbounded polyhedra, which we address in Section 4.1.

We begin by reviewing standard definitions in discrete Morse theory; interested readers can find more details in [6].

Definition 6 (Discrete Morse Function ([5])).

Let be a simplicial [cellular] complex. A function is a discrete Morse function if, for every simplex [cell] ,

and

and at least one of the above inequalities is strict. Simplices [cells] for which both inequalities are strict are called critical.
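A small sketch may help make Definition 6 concrete. It assumes the standard formulation from [5]: a cell may have at most one codimension-one coface with a value that is not larger, and at most one codimension-one face with a value that is not smaller, and it is critical when it has neither. The representation of simplices as frozensets and the example function on the boundary of a triangle are hypothetical choices for illustration.

```python
def facets_of(simplex):
    """Codimension-one faces of a simplex given as a frozenset of vertices."""
    return [simplex - {v} for v in simplex] if len(simplex) > 1 else []


def classify(f):
    """Check the discrete Morse condition for f: {simplex: value}.

    Returns (is_discrete_morse, list_of_critical_simplices).
    """
    critical = []
    for s, fs in f.items():
        # Cofaces one dimension up whose value is not larger than f(s).
        up = [t for t in f if len(t) == len(s) + 1 and s < t and f[t] <= fs]
        # Faces one dimension down whose value is not smaller than f(s).
        down = [n for n in facets_of(s) if f[n] >= fs]
        if len(up) > 1 or len(down) > 1:
            return False, []
        if len(up) == 1 and len(down) == 1:
            return False, []
        if not up and not down:
            critical.append(s)
    return True, critical


# Hypothetical example: the boundary of a triangle (a circle).
fs = frozenset
f = {
    fs({0}): 0, fs({0, 1}): 1, fs({1}): 2,
    fs({1, 2}): 3, fs({2}): 4, fs({0, 2}): 5,
}
is_morse, critical = classify(f)
```

Here one vertex and one edge are critical, matching the Betti numbers of a circle.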

A discrete Morse function has the property that it assigns higher values to higher dimensional simplices, except possibly with one exception locally for each simplex. For a given simplicial complex , there may be many discrete Morse functions; for example, any function which assigns increasing values to simplices with increasing dimension will be discrete Morse, and all simplices will be critical. However, it is usually possible to find a more “efficient” discrete Morse function, in the sense that the complex has fewer critical simplices than simplices overall (see for example [4, 13, 17]).

Sublevel sets of discrete Morse functions are subcomplexes, and provide a way of building the simplicial complex in question by adding simplices in order of increasing function value. As in classical Morse theory, the homotopy type of the sublevel set can only change when a critical simplex is added, and the dimension of the critical simplex indicates that the homotopy type of the sublevel set differs only by the attachment of a cell of the same dimension.

Associated to each discrete Morse function is a discrete gradient vector field. The information contained in the gradient vector field of a discrete Morse function is sufficient to determine the homotopy type of sublevel sets, and gradient vector fields have the benefit of a combinatorial formulation. To define the gradient vector field of a discrete Morse function, we first introduce the definition of a general discrete vector field.

Definition 7 (Discrete Vector Field, [6]).

Let be a simplicial [cellular] complex. A discrete vector field on is a collection of pairs of simplices [cells] of such that:

-

1.

-

2.

Each simplex [cell] of belongs to at most one pair in .

The discrete gradient vector field of a discrete Morse function on is the pairing that arises from as follows:

1. If is critical, then it is unpaired,

2. Otherwise, if there exists a face of with , then is paired with ,

3. Otherwise, there is a coface of with , and is paired with .

It is clear from Definitions 6 and 7 that this collection of pairs of is indeed a discrete vector field.
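The pairing rule above can be sketched as follows for a simplicial complex. This is a minimal illustration rather than the construction used later in the paper; it assumes the usual convention that a cell is paired with a codimension-one coface whose value is not larger, and the example function on the boundary of a triangle is hypothetical.

```python
def gradient_pairs(f):
    """Extract the discrete gradient vector field of f: {simplex: value}.

    A pair (sigma, tau) is recorded when sigma is a codimension-one face
    of tau and f(tau) <= f(sigma). Simplices are frozensets of vertices.
    """
    pairs = []
    for tau, f_tau in f.items():
        for v in tau:
            sigma = tau - {v}
            if sigma and f[sigma] >= f_tau:
                pairs.append((sigma, tau))
    return pairs


def critical_cells(f, pairs):
    """Cells that appear in no pair."""
    paired = {cell for pair in pairs for cell in pair}
    return [cell for cell in f if cell not in paired]


# Hypothetical example: a discrete Morse function on the boundary of a triangle.
fs = frozenset
f = {
    fs({0}): 0, fs({0, 1}): 1, fs({1}): 2,
    fs({1, 2}): 3, fs({2}): 4, fs({0, 2}): 5,
}
pairs = gradient_pairs(f)
unpaired = critical_cells(f, pairs)
```

Each cell appears in at most one pair here, so the output is a discrete vector field in the sense of Definition 7.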

Often, discrete Morse functions are represented only by their gradient vector fields, as opposed to giving the function itself. Therefore, it is valuable to be able to determine when a discrete vector field represents the gradient of a discrete Morse function. The discrete Morse analogue to gradient flow along a discrete vector field is a -path.

Definition 8 (-path, [6]).

Given a discrete vector field on a simplicial [cellular] complex , a -path is a sequence of cells:

such that for each , and .

A classical way of determining whether a smooth vector field can represent a gradient is when it lacks circulation. The analogue in the discrete setting to “lacking circulation” is “no non-trivial closed -paths”. The following theorem therefore indicates when a discrete vector field is the gradient of a discrete Morse function.

Theorem 1 ([5], Thm. 3.5).

A discrete vector field on a simplicial [cellular] complex is the gradient vector field of a discrete Morse function if and only if there are no non-trivial closed -paths.
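In practice, the criterion of Theorem 1 can be tested by cycle detection in a directed graph. The sketch below assumes a simplicial complex with simplices stored as frozensets and uses the standard notion of a path from [5]: from the lower cell of a pair, step to its partner, then to a different codimension-one face of the partner that is itself the lower cell of a pair. The function name and the two example fields on the boundary of a triangle are hypothetical.

```python
def has_closed_v_path(pairs):
    """Return True if the discrete vector field has a non-trivial closed path."""
    head = {sigma: tau for sigma, tau in pairs}

    def successors(sigma):
        tau = head[sigma]
        for v in tau:
            nxt = tau - {v}
            # Continue only through a different face that starts another pair.
            if nxt != sigma and nxt in head:
                yield nxt

    WHITE, GREY, BLACK = 0, 1, 2
    color = {sigma: WHITE for sigma in head}

    def dfs(sigma):
        color[sigma] = GREY
        for nxt in successors(sigma):
            if color[nxt] == GREY:
                return True  # back edge: a closed path exists
            if color[nxt] == WHITE and dfs(nxt):
                return True
        color[sigma] = BLACK
        return False

    return any(color[sigma] == WHITE and dfs(sigma) for sigma in head)


fs = frozenset
# Two pairs on the boundary of a triangle: no closed path.
gradient_field = [(fs({1}), fs({0, 1})), (fs({2}), fs({1, 2}))]
# Every vertex paired with the next edge around the circle: a closed path.
circulating_field = [
    (fs({0}), fs({0, 1})),
    (fs({1}), fs({1, 2})),
    (fs({2}), fs({0, 2})),
]
acyclic_result = has_closed_v_path(gradient_field)
cyclic_result = has_closed_v_path(circulating_field)
```

The recursive search is adequate for small examples; an iterative traversal would be a safer choice for large complexes.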

The critical cells of a discrete gradient vector field can be thought of as tracking an analog of the topology of the polyhedral complex’s sublevel sets under a particular function which would induce said discrete vector field, as seen below.

Definition 9 (Perfect DGVF, Relatively Perfect DGVF [6]).

A discrete gradient vector field on simplicial [cellular] complex is called perfect if the number of critical cells of of dimension is equal to the rank of for all integers .

Let be a piecewise linear function on cells of . Let be restricted to the images of vertices of . For a given value of , define to be the greatest value of on vertices strictly less than .

A discrete gradient vector field on is called relatively perfect with respect to the function if ,

where denotes the number of discrete critical -simplices [cells] in ,

Ultimately, we hope the existence of a relatively perfect discrete gradient vector field will enable improved computation of topological features of the level and sublevel sets of a neural network .

2.5 Challenges in relating PL Morse and discrete Morse functions

Most known relationships between PL Morse and discrete Morse constructions are implicit. In [2, 12, 21], we see that the construction of discrete gradient vector fields for cubical complexes from function data is well-studied; this is in part due to the regularity of their local combinatorics. For cubulations of compact regions of , for example, when simplifying persistent homology computations, there is a discrete Morse function constructed which has an implied analog of a smooth or piecewise linear function on the underlying space.

To our knowledge, there has been little work done to construct a discrete gradient vector field from a PL Morse function in a general setting. In a general setting, [16] compares the PL approximations of a scalar function defined on a triangulated surface to a discrete gradient vector field built using a greedy algorithm - they find that under certain regularity conditions, critical cells in the vector field are adjacent to critical vertices in the PL approximation. Likewise in [6] an algorithm is presented for constructing a discrete gradient vector field which is relatively perfect to a PL Morse function on a simplicial complex which is a combinatorial manifold. However, due to theoretical limitations for algorithms on -spheres for , the algorithm applies only to simplicial complexes of dimension .

As polyhedral complexes have fewer combinatorial restrictions on their structure than simplicial and cubical complexes, a general theory for creating discrete gradient vector fields on an arbitrary polyhedral complex from function values is likely to be similarly intractable.

Fortunately, due to the combinatorial regularity of the canonical polyhedral complex of a ReLU neural network, which we will describe in Section 3, we may follow an approach similar to that in [6] to constructively obtain a relatively perfect discrete gradient vector field when the network has vertices in general position.

3 Background: The canonical polyhedral complex

We now may discuss the specifics of the canonical polyhedral complex, beginning with its construction through a brief description of the combinatorial characterization which enables its topological properties to be studied.

3.1 Construction of

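For $n \in \mathbb{N}$, define the function $\mathrm{ReLU}: \mathbb{R}^n \to \mathbb{R}^n$ as $\mathrm{ReLU}(x_1, \ldots, x_n) = (\max\{0, x_1\}, \ldots, \max\{0, x_n\})$.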

Definition 10 (ReLU Neural Network; [7], Definition 2.1,[18], Definition 3).

Let . A (fully-connected, feed-forward) ReLU neural network with architecture is a collection of affine maps for . Such a collection determines a function , the associated neural network map, given by the composite

We say that this network has depth and width max. The maps are called the th layer maps. By abuse of notation, we often refer to as simply .
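To fix ideas, here is a minimal sketch of a neural network map evaluated from its affine layer maps. It assumes the common convention that ReLU is applied after every affine layer except the last; conventions differ on the final layer, so this should be read as an assumption rather than as the exact composite in Definition 10. The helper names and the small example network are hypothetical.

```python
def relu(x):
    """Coordinate-wise maximum with zero."""
    return [max(0.0, xi) for xi in x]


def affine(weights, bias, x):
    """Apply the affine map x -> Wx + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def network_map(layers, x):
    """Compose the affine layers, with ReLU after all but the last one."""
    *hidden, (last_w, last_b) = layers
    for weights, bias in hidden:
        x = relu(affine(weights, bias, x))
    return affine(last_w, last_b, x)


# Hypothetical example with input dimension 2, one hidden layer of width 2,
# and a single output: F(x, y) = max(0, x) + max(0, y) - 1.
layers = [
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-1.0]),
]
value_a = network_map(layers, [1.0, 2.0])
value_b = network_map(layers, [-1.0, 2.0])
```

In this example the two coordinate axes are the hyperplanes of the first layer, and the network map is affine on each of the four closed quadrants.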

The terms fully-connected and feedforward are machine learning terms which indicate that there are no restrictions on each affine function and that there is no expectation of identical layer maps (i.e. no recurrence), respectively. We will omit these terms in the rest of this paper, but keep them in the definition to disambiguate for readers with a machine learning background.

Note that is a piecewise linear function, and that defines a polyhedral decomposition of its domain, . More specifically, we may decompose into a polyhedral complex by identifying the (maximal) subsets of on which each is affine linear. We call this decomposition the canonical polyhedral complex of , denoted . To give a precise definition of this complex, we first introduce notation concerning partial compositions of the layer maps. In particular, the canonical polyhedral complex can be defined using such partial composites.

Definition 11 ([18], Definition 4).

If is a ReLU neural network with , then we denote the composition of the first layers as ; i.e.

We refer to as ending at the th layer.

Conversely, we denote the composition of the last layers as ; i.e.

We refer to as starting at the th layer.

Clearly, . Furthermore, each has an associated natural polyhedral decomposition of . The canonical polyhedral complex will be defined as the common refinement of these iterative polyhedral decompositions. To formalize the polyhedral decomposition induced by , note that each affine linear map has an associated polyhedral complex.

Definition 12 (Notation ;[7], [18], Definition 4).

Let be an affine function for . Denote by the polyhedral complex associated to the hyperplane arrangement in , induced by the hyperplanes given by the solution set to , where is the projection onto the th coordinate in .

For , is not affine linear, but instead is piecewise linear. Therefore, the solution sets associated to are not necessarily hyperplanes. However, it is still possible to use these solution sets to determine a polyhedral decomposition of the input space, for each .

Definition 13 (Node maps and bent hyperplanes, [7], Definition 8.1, 6.1, [18], Definition 5, 6).

Given a ReLU neural network , the node map is defined by

A bent hyperplane of is the preimage of under a node map, that is, for fixed .

A bent hyperplane is generically a piecewise linear codimension submanifold of the domain (see [7] for more details). It is “bent” in that it is a union of polyhedra, and may not be contractible or even connected. For each , the associated bent hyperplanes induce a polyhedral decomposition of which we denote . The canonical polyhedral complex can then be defined iteratively, by intersecting the regions of with the polyhedral decomposition given at .

Definition 14 (Canonical Polyhedral Complex , [18], Definition 7).

Let be a ReLU neural network with layers. Define the canonical polyhedral complex of , denoted , as follows:

1. Define by .

2. Let be defined in terms of as the polyhedral complex consisting of the following cells:

Then is given by .

The above definition is the “Forward Construction” of in [18]. Alternatively, there is a “Backward Construction” which gives the same complex. This definition originally appeared in [7].

For example, in the special case of a neural network with architecture , the canonical polyhedral complex is a decomposition of by lines, which with full measure will fall in general position. It is immediate that contains unbounded edges, unbounded polyhedra of dimension 2, and vertices.
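The counts in this example follow from standard facts about arrangements of lines in general position in the plane (no two parallel, no three concurrent). The sketch below tabulates them for a hypothetical number of lines n, which stands in for the width of the hidden layer and is an assumption of this illustration; it requires n to be at least 2.

```python
from math import comb


def line_arrangement_counts(n):
    """Cell counts for n >= 2 lines in general position in the plane."""
    if n < 2:
        raise ValueError("the formulas below assume at least two lines")
    vertices = comb(n, 2)          # every pair of lines meets exactly once
    unbounded_edges = 2 * n        # two rays per line
    bounded_edges = n * (n - 2)    # n - 1 crossings cut a line into n pieces
    regions = comb(n, 2) + n + 1
    unbounded_regions = 2 * n
    return {
        "vertices": vertices,
        "unbounded_edges": unbounded_edges,
        "bounded_edges": bounded_edges,
        "regions": regions,
        "unbounded_regions": unbounded_regions,
        "bounded_regions": regions - unbounded_regions,
    }


counts = line_arrangement_counts(3)
edges_total = counts["unbounded_edges"] + counts["bounded_edges"]
# Vertices minus edges plus regions for a decomposition of the plane.
euler_characteristic = counts["vertices"] - edges_total + counts["regions"]
```

For three lines this gives three vertices, six unbounded edges, six unbounded regions and a single bounded triangle.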

3.2 Local characterization of vertices and PL Morse critical points in

For general piecewise linear functions on polyhedral complexes, it is not generally algorithmically decidable whether a vertex is PL critical or regular. However, the canonical polyhedral complex has combinatorial properties which make the question of PL criticality algorithmically decidable.

Following [18], under full-measure conditions called supertransversality and genericity, each cell of can be labeled with a sequence in , where . This construction is not new (for example, it also appears in [14]), but to our knowledge there is no standard reference.

Definition 15 (Sign Sequence, [18]).

The sign is given by the sign of , which is well-defined. The collection of all such for a specific cell is called its sign sequence, and is denoted .

These sign sequences encode the cellular poset of as follows:

Lemma 4 (Sign sequence properties, [18]).

Let be a supertransversal ReLU neural network. The following is true about any two cells and of :

Define by

Then , where is a cell in . Furthermore:

1. is a face of (Lemma 18)

2. if and only if (Lemma 19)

Finally, the cellular coboundary map in can be neatly described:

Lemma 5 (Sign sequence, [18], Lem. 21).

Let be a supertransversal, generic ReLU neural network. Let be a [polyhedral] cell of . Then the cells of which is a facet are given by the set of cells with sign sequence given by for all and except for exactly one, a location for which .

In other words, under supertransversality and genericity conditions each -cell of has exactly entries of its sign sequence equal to zero, and all incident cells can be identified by replacing each zero entry with . Unsurprisingly, this identifies the intersection combinatorics of cells in the local lower star of a vertex with the intersection combinatorics of the coordinate planes in .
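The combinatorial content of Lemma 5 can be sketched purely in terms of sign sequences. The code below assumes sign sequences are stored as tuples with entries in {-1, 0, 1} and that the network is supertransversal and generic, so that every sequence produced this way labels an actual cell. The function names and the example vertex are hypothetical.

```python
from itertools import product


def cofacets(sign_sequence):
    """Sign sequences obtained by replacing exactly one zero entry with -1 or 1."""
    out = []
    for i, entry in enumerate(sign_sequence):
        if entry == 0:
            for sign in (-1, 1):
                out.append(sign_sequence[:i] + (sign,) + sign_sequence[i + 1:])
    return out


def star(sign_sequence):
    """All sign sequences that agree with the input on its nonzero entries."""
    zeros = [i for i, entry in enumerate(sign_sequence) if entry == 0]
    cells = []
    for fill in product((-1, 0, 1), repeat=len(zeros)):
        candidate = list(sign_sequence)
        for i, entry in zip(zeros, fill):
            candidate[i] = entry
        cells.append(tuple(candidate))
    return cells


def codimension(sign_sequence):
    """Number of zero entries, which is the codimension of the cell."""
    return sum(1 for entry in sign_sequence if entry == 0)


# Hypothetical vertex in a two-dimensional input space with three neurons.
vertex = (0, 0, 1)
incident_edges = cofacets(vertex)
vertex_star = star(vertex)
top_cells = [s for s in vertex_star if codimension(s) == 0]
```

For this vertex the star consists of the vertex itself, four edges and four two-dimensional cells, which is the face structure around the origin cut out by the two coordinate axes in the plane.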

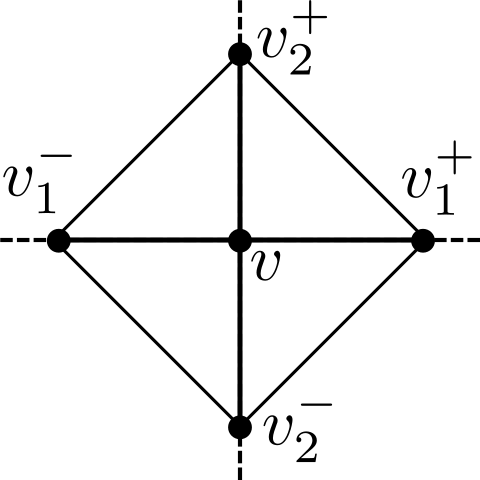

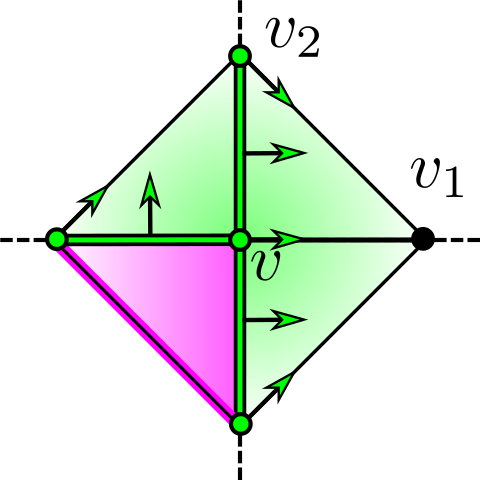

If is a vertex of , we can thus create a simplicial complex whose underlying set is the union of the local star and local link of , and which has the combinatorics of a cross-polytope in . The vertices of this simplicial complex are together with a point selected from each edge incident to . For each from to , replace the th zero entry with a to obtain an edge , then select a point from that edge; likewise select from the edge obtained by replacing the th zero entry with a . The -simplices of this simplicial complex are the convex hulls of the sets consisting of exactly one of and for each , together with .

The arguments in [8] and [19] use this characterization and variants of Definition 5 to show that a vertex of is PL critical if and only if the edges and have opposite -orientations for all , and if so, the index of the critical point is given by the number of these pairs which are oriented towards ([19], Theorem 3.7.3). As a result, is PL Morse if and only if all edges are assigned a -orientation.
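The criterion above can be sketched from function values alone. The code below reads "opposite orientations" as the two edges of a pair pointing in opposite directions along their common line, so that both point towards the vertex or both point away from it; this reading is inferred from how the criterion is used in the proof of Lemma 7 and should be treated as an assumption. An edge is oriented towards the vertex when the function value at a point on that edge is smaller than the value at the vertex. The function name and the example values are hypothetical.

```python
def pl_morse_index(value_at_vertex, pair_values):
    """Classify a vertex from values on its paired incident edges.

    pair_values[i] holds the function values at one sample point on each
    of the two edges of the i-th pair. Returns the PL Morse index if the
    vertex is critical, and None if it is regular.
    """
    index = 0
    for value_plus, value_minus in pair_values:
        if value_plus == value_at_vertex or value_minus == value_at_vertex:
            raise ValueError("flat edge at the vertex: outside the PL Morse setting")
        plus_towards = value_plus < value_at_vertex
        minus_towards = value_minus < value_at_vertex
        if plus_towards != minus_towards:
            return None  # the function passes monotonically through the vertex
        if plus_towards:
            index += 1
    return index


# Hypothetical examples at the origin of the plane, sampling the four axis
# directions: F = |x| - |y|, F = x + |y|, and F = -|x| - |y|.
saddle = pl_morse_index(0.0, [(1.0, 1.0), (-1.0, -1.0)])
regular = pl_morse_index(0.0, [(1.0, -1.0), (1.0, 1.0)])
maximum = pl_morse_index(0.0, [(-1.0, -1.0), (-1.0, -1.0)])
```

The three examples correspond to an index-1 critical point, a regular point and an index-2 critical point respectively.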

3.3 Realizability results for PL Morse ReLU neural networks

As an initial example of the usefulness of the combinatorial description given by sign sequences, we develop an exploration of some realizability results for ReLU networks by classifying all possible PL Morse ReLU neural networks on an architecture, up to -orientation (Theorem 2). These results are new in this context and our techniques are illustrative of sign sequence properties which will be used throughout the remainder of the paper. However, the main results of this paper do not rely on the results of this section.

Lemma 6.

If is a PL-Morse generic ReLU neural network with a architecture, then the -orientations on unbounded edges which share the same vertex are the same, and any set of -orientations on unbounded edges subject to this restriction is realizable.

Proof.

There is a unique generic affine hyperplane arrangement with hyperplanes in , up to affine transformations. It has a single bounded -cell which is, in fact, an -simplex, which we will call . All unbounded cells in this hyperplane arrangement share a face with this -simplex.

If is PL Morse, then none of the faces of is a flat cell. This means none of the -dimensional topes of has the sign sequence.

There are possible sign sequences in , and topes in . In particular, the cell is present. Furthermore, it cannot be an unbounded cell, as the opposite unbounded cell would have the sign sequence, so the cell is . Thus, the image of is contained in the first quadrant.

Next if is an unbounded tope of with a single vertex, then it has a sign sequence with exactly one entry which is a , corresponding with the axis its image is restricted to. That is , where is the index in which the sign sequence of is positive.

All unbounded edges of belong to exactly one tope of this form. An unbounded edge has exactly zero entries, and one nonzero entry, which must be otherwise the edge would be a face of the all region. The tope may be identified in sign sequence by setting all zero-entries of the edge’s sign sequence to .

All edges of are unbounded and share the same vertex. A path away from the vertex of along any edge of maps to a path along away from the origin under , the first layer of . The derivative of the restriction of along any edge of pointing away from the vertex of is a positive multiple of , which we denote .

The -orientation on an (outward-oriented) edge of is given by the sign of for the -dimensional vector giving the linear part of the affine function .

As for all edges of , for a positive , then the -orientation on is outward if and only if the th entry of is positive.

This shows all edges of have the same -orientation. Since there is such a tope for each , to induce an orientation on set to be positive or negative, as desired. This allows for all possible orientations on the unbounded edges of . ∎
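The statement of Lemma 6 can be checked numerically in the smallest case. The sketch below assumes the architecture in question is the one with three hyperplanes in the plane (input dimension 2, three hidden neurons, one output); the specific weights, the names `A_ROWS`, `A_BIAS`, `VERTEX_DATA`, and the helper functions are hypothetical choices for illustration and do not come from the paper.

```python
from itertools import product

# Hypothetical first layer R^2 -> R^3 (not from the paper): the lines
# x = 0, y = 0 and x + y = 1, cooriented so the bounded triangle is (+,+,+).
A_ROWS = [(1.0, 0.0), (0.0, 1.0), (-1.0, -1.0)]
A_BIAS = [0.0, 0.0, 1.0]

# For each triangle vertex: its location, the outward directions of the two
# unbounded edges of the one-vertex tope at that vertex, and the index of the
# single neuron that is positive on that tope.
VERTEX_DATA = [
    ((0.0, 0.0), [(0.0, -1.0), (-1.0, 0.0)], 2),
    ((1.0, 0.0), [(1.0, 0.0), (1.0, -1.0)], 0),
    ((0.0, 1.0), [(0.0, 1.0), (-1.0, 1.0)], 1),
]

def network(point, w, b=0.0):
    """Evaluate F = w . ReLU(A x + c) + b for this small example."""
    hidden = [max(r[0] * point[0] + r[1] * point[1] + c, 0.0)
              for r, c in zip(A_ROWS, A_BIAS)]
    return sum(wi * hi for wi, hi in zip(w, hidden)) + b

def outward_slopes(w):
    """Slope of F along each unbounded edge, moving away from its vertex."""
    slopes = []
    for (vx, vy), directions, _ in VERTEX_DATA:
        per_vertex = []
        for dx, dy in directions:
            near = network((vx + dx, vy + dy), w)
            far = network((vx + 2 * dx, vy + 2 * dy), w)
            per_vertex.append(far - near)  # F is affine along the edge
        slopes.append(per_vertex)
    return slopes

def same_orientation_at_each_vertex(w):
    """True if both unbounded edges at every vertex follow the sign of one weight."""
    return all((s > 0) == (w[idx] > 0)
               for (_, _, idx), per_vertex in zip(VERTEX_DATA, outward_slopes(w))
               for s in per_vertex)
```

Looping over all sign patterns of the output weights, for instance with `product((-2.0, 3.0), repeat=3)`, gives a quick sanity check that every pattern is realized and that edges sharing a vertex always agree, at least for this one arrangement.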

Using both Lemma 6 and Lemma 3, we may further exploit properties specific to the polyhedral complex of a network with architecture to determine which vertices in are PL-critical, as shown in the following lemma.

Lemma 7.

Let be an generic, PL Morse ReLU neural network. Any critical points of are index- or index-, and there is at most one critical point.

Proof.

Any critical points of are vertices of . Let be a vertex of and suppose it is a critical point. Let be the unique unbounded tope of whose only vertex is . Note that has (unbounded) edges, and the -F orientations on these edges of are either all towards or all away from , as seen in Lemma 6. As each edge of is opposite an edge of , in order for to be critical, all edges on containing must be oriented in the opposite direction from their paired edges on [8]. As a result, the edges in incident to are either all oriented towards or all oriented away from ; that is, is either index- or index-.

To see that there is at most one critical point, without loss of generality, assume is critical of index . Then all edges incident to are oriented away from . If is another vertex of , then there is an edge connecting to , and must be oriented away from . There is a unique unbounded cell in which contains and and no other vertices; it also contains . The unbounded edges of which contain must be oriented away from . By the no-zigzags lemma (Lemma 3), the unbounded edges of which contain must be oriented away from (as each unbounded edge of containing shares a -cell with an unbounded edge of containing ). By Lemma 6, all unbounded edges pointing away from are oriented away from . In particular, the edge opposite is oriented away from . Because is oriented towards and its opposite edge is oriented away from , we conclude is a PL-regular point. ∎

Theorem 2.

Let be an generic, PL Morse ReLU neural network. Then the decision boundary of is empty, has the homotopy type of a point, or has the homotopy type of an -sphere.

Proof.

We now classify all possible PL Morse neural networks.

Corollary 2.

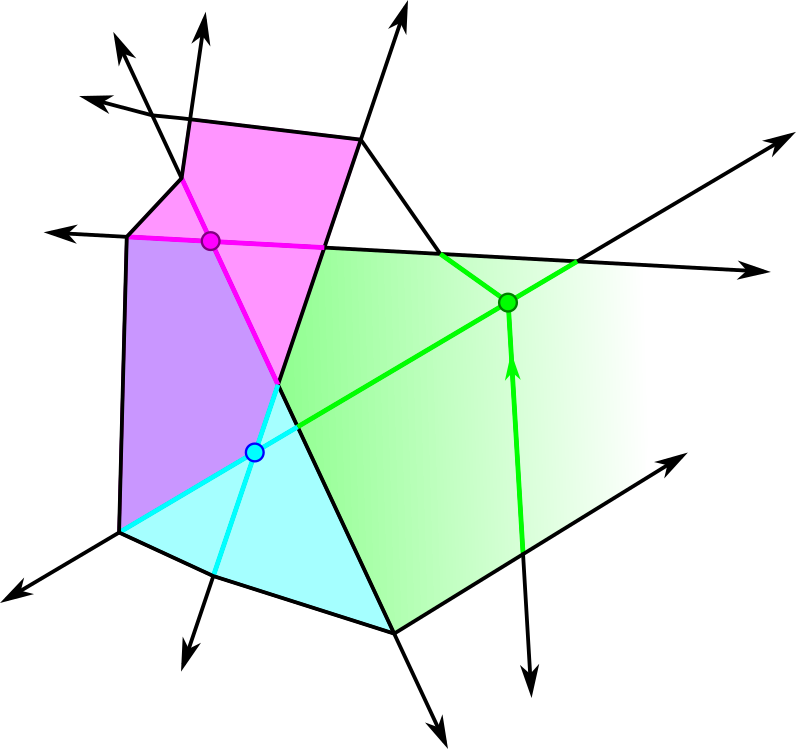

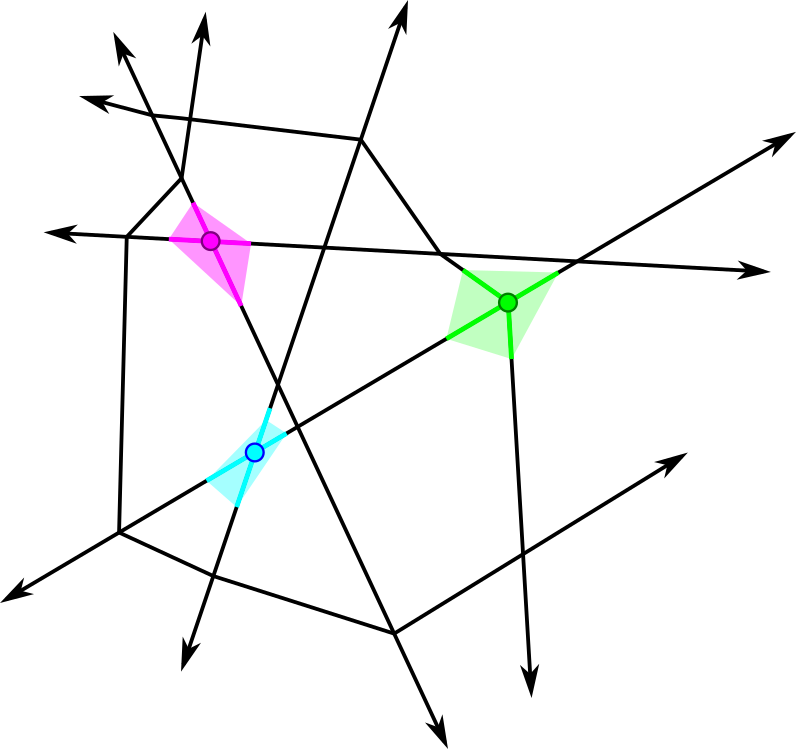

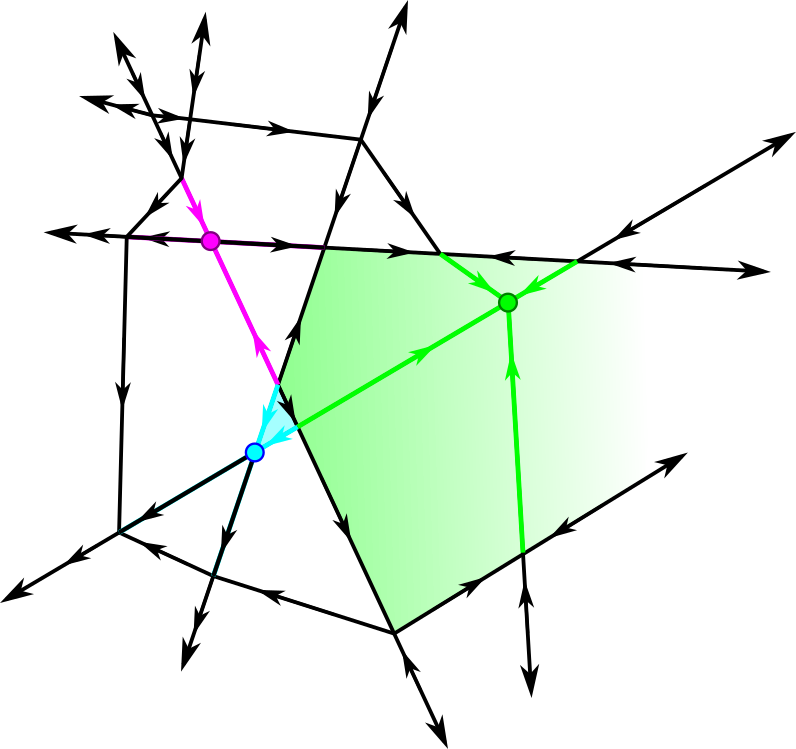

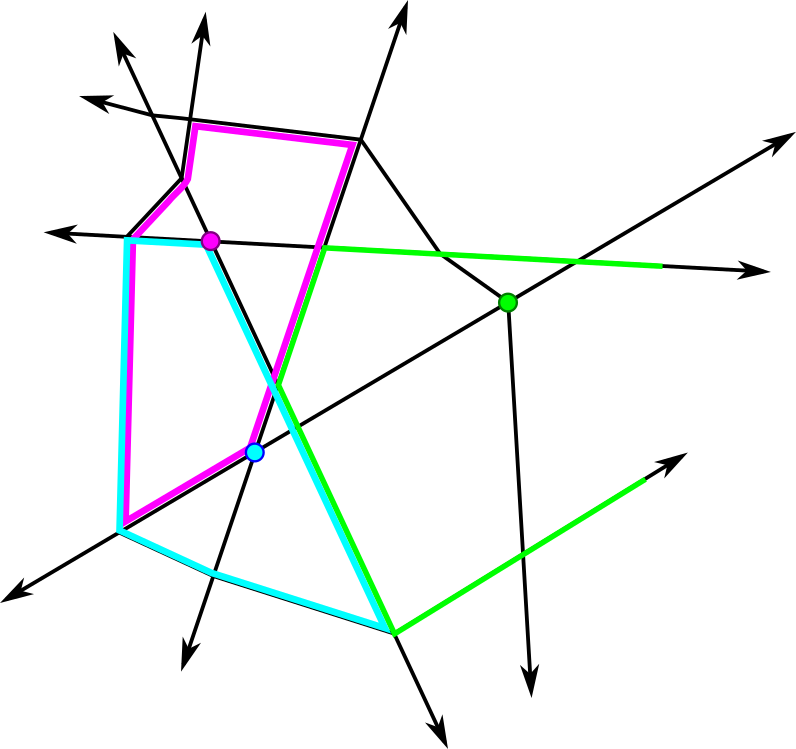

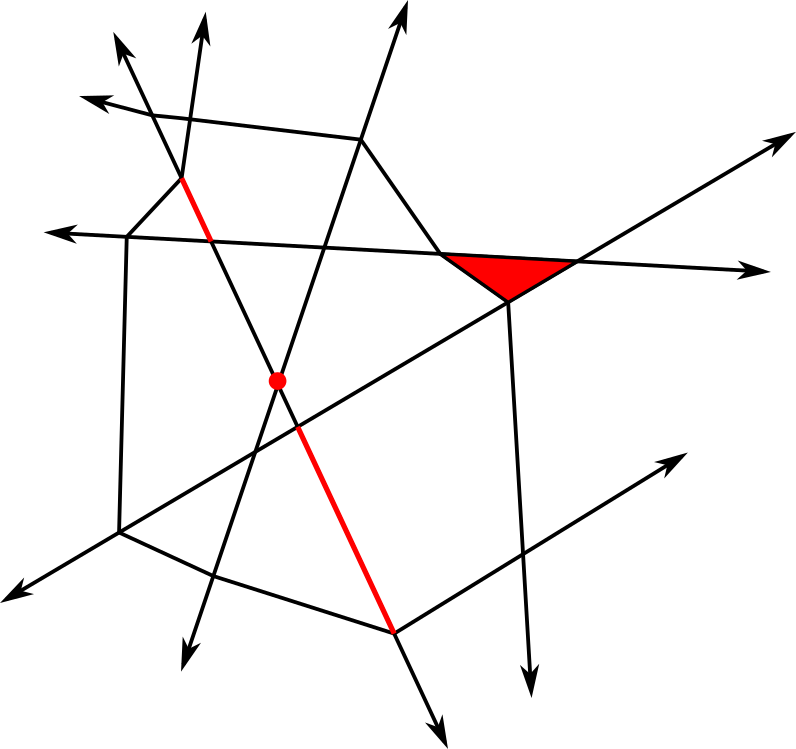

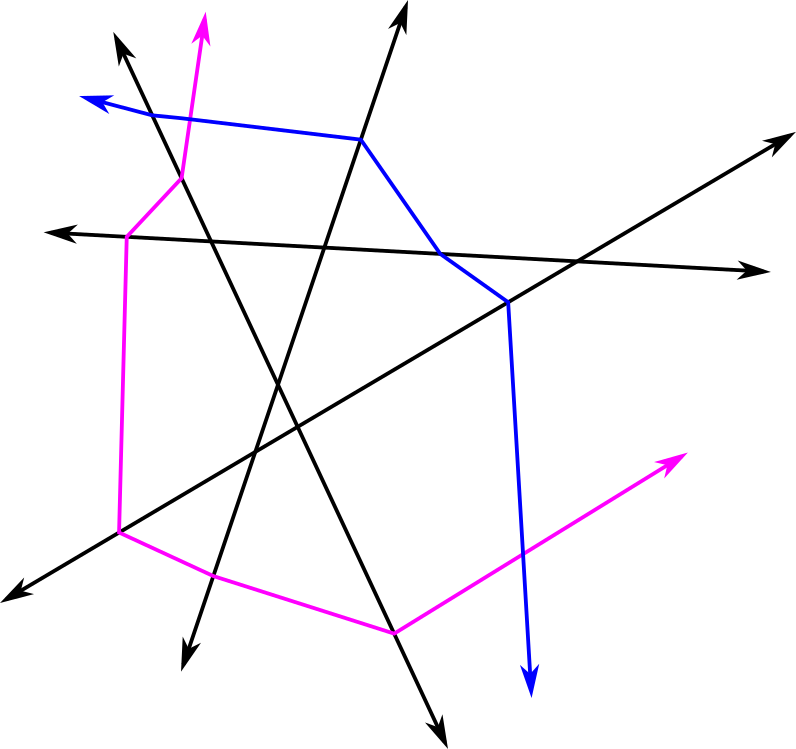

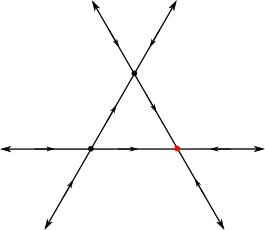

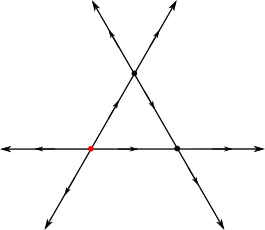

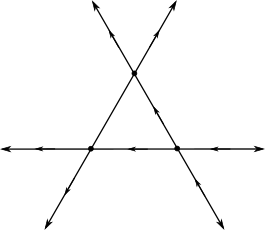

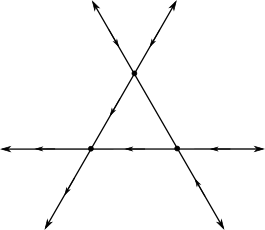

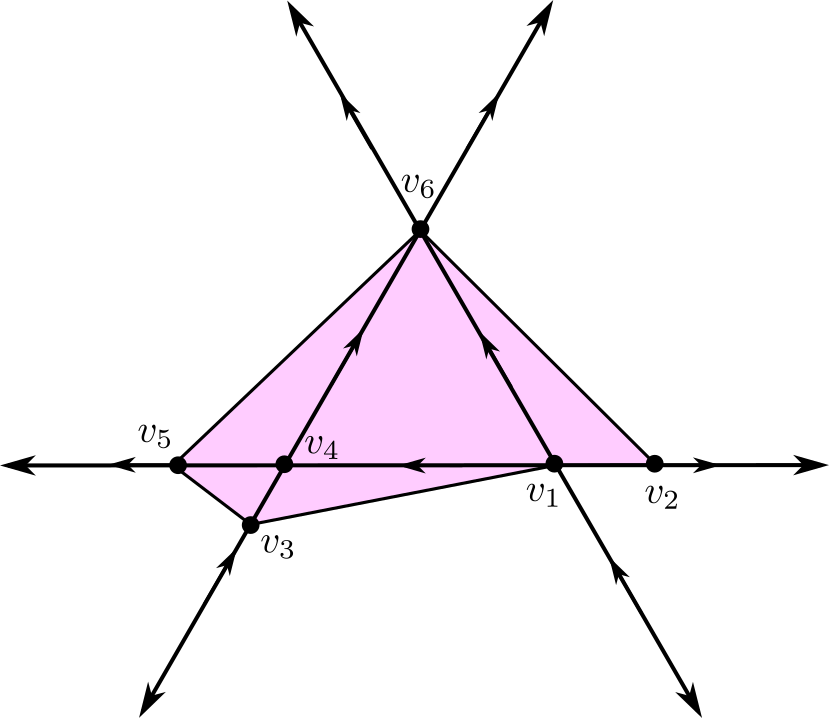

The -orientations depicted in Figure 6 are the only possible -orientations on a generic, supertransversal, PL Morse ReLU neural network, up to combinatorial equivalence.

Proof.

Let be such a network, and denote as the unique bounded -cell of . Following Lemma 6, there are 4 possible scenarios for the -orientations on the unbounded edges of :

1. all unbounded edges are oriented towards (Figure 6(a)),

2. all unbounded edges are oriented away from (Figure 6(b)),

3. the unbounded edges of exactly one vertex of are oriented towards and all other unbounded edges are oriented away from (Figure 6(c)), or

4. the unbounded edges of exactly one vertex of are oriented away from and all other unbounded edges are oriented towards (Figure 6(d)).

If not all unbounded edges have the same orientation with respect to , then Lemma 3 determines the orientation of two of the three edges of . Moreover, the orientations of these bounded edges ensure that none of the vertices in can be PL-critical.

If all unbounded edges have the same orientation with respect to , then Lemma 1 ensures that the orientations on the edges of do not generate a cycle. Therefore, there must be a vertex of for which the orientation of each bounded edge adjacent to is towards , and a vertex of for which the orientation of each bounded edge adjacent to is away from .

If it is the case that all unbounded edges are oriented towards , then is a PL-critical vertex of index , and all other vertices are PL-regular.

If it is the case that all unbounded edges are oriented away from , then is a PL-critical vertex of index , and all other vertices are PL-regular. ∎
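The count of four scenarios can be recovered by a short enumeration. The sketch below assumes, following Lemma 6, that the orientation of the unbounded edges at each of the three vertices of the bounded cell is a free binary choice, and that two choices are considered equivalent when they differ by relabeling the vertices. The function name `orientation_classes` and the encoding of orientations as `+1` and `-1` are hypothetical.

```python
from itertools import product
from collections import defaultdict

def orientation_classes(num_vertices=3):
    """Group sign patterns by how many vertices carry the sign +1.

    Each pattern assigns one sign per vertex of the bounded cell; the sign
    stands for the common orientation of the unbounded edges at that vertex.
    Patterns with the same count differ only by relabeling vertices.
    """
    classes = defaultdict(list)
    for signs in product((-1, 1), repeat=num_vertices):
        count = sum(1 for s in signs if s > 0)
        classes[count].append(signs)
    return dict(classes)
```

For three vertices this yields eight sign patterns falling into four classes of sizes 1, 3, 3 and 1, which matches the four scenarios listed in the proof.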

Given a canonical polyhedral complex (arising from a ReLU neural network), not all functions on that canonical polyhedral complex are realizable as ReLU neural network functions. From [8] we see that a ReLU neural network of the form generally has at most one -cell on which it is constant, for example, but other limitations exist as well.

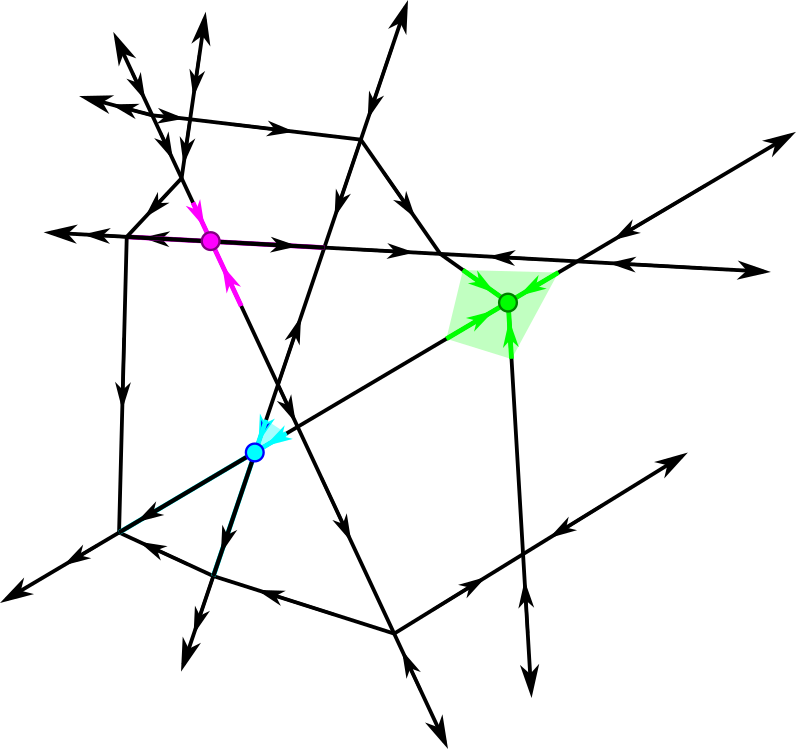

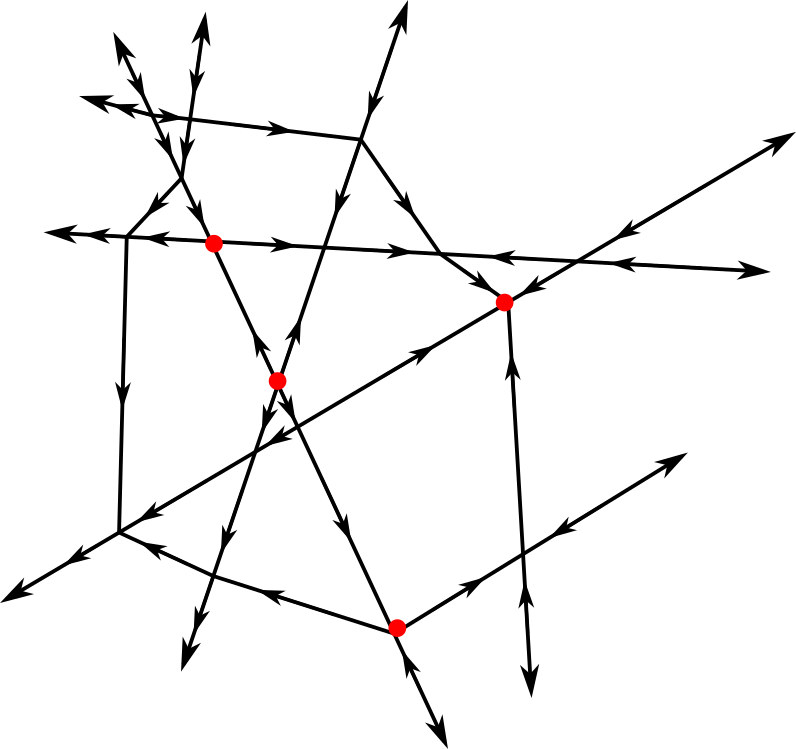

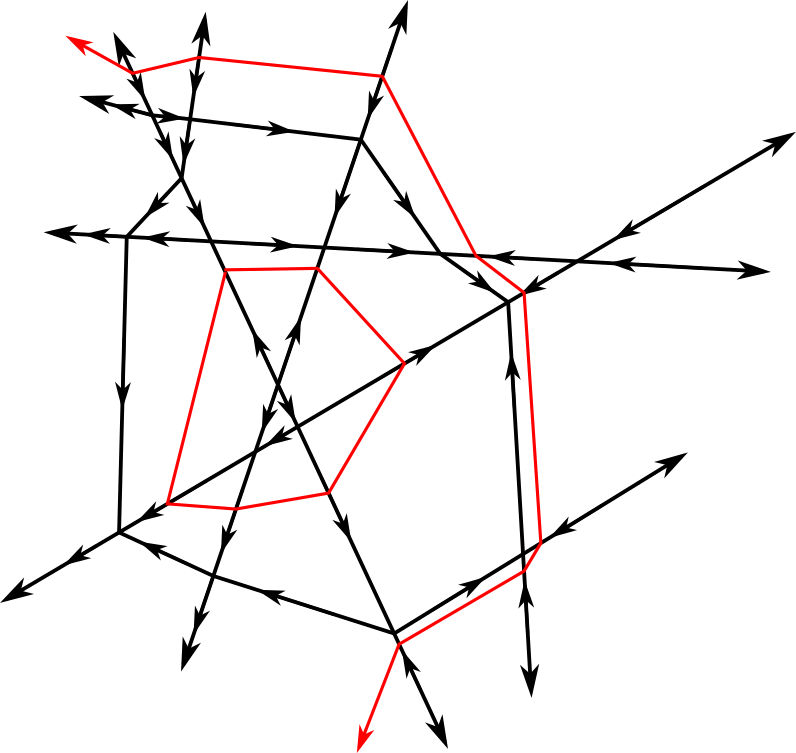

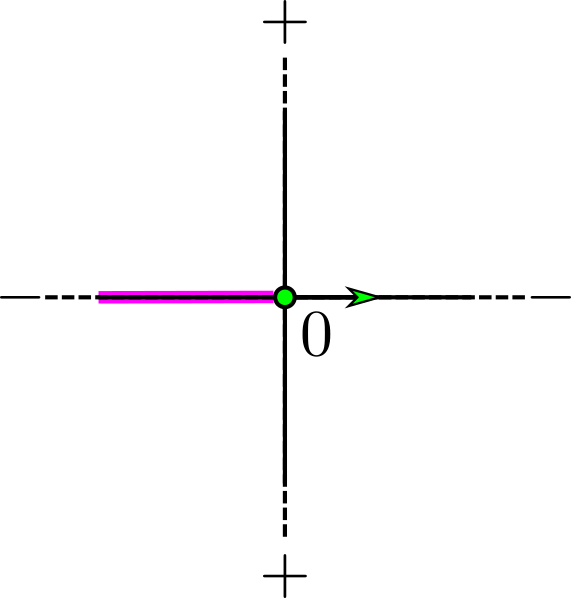

In fact, Figure 7 depicts a orientation on the same 3 hyperplanes which is realizable if is a PL function, but not if is a ReLU neural network of architecture . That it cannot be a ReLU network follows immediately from Corollary 2. That it is realizable can be seen by assigning and observing that on each simplex in the highlighted simplicial complex determines the orientation on the edges of the polyhedral cell which contains it. This results in the orientations pictured.

4 Relatively Perfect Discrete Gradient Vector Fields for ReLU Networks

Because our setup allows us to identify PL critical vertices, we further leverage this information to constructively establish the existence of a discrete gradient vector field on the cells of which are bounded above in . Moreover, this discrete gradient vector field has the property that critical cells are in bijection with PL critical vertices in a way which respects function values; this is a technical property introduced in [6] called relative perfectness (Definition 9). Ideally, the existence and algorithmic constructability of this discrete gradient vector field will enable faster computational measurements of the topology of ReLU neural networks’ decision regions.

In this section, we follow a similar construction to that in [6], where they establish an algorithm for finding a discrete gradient vector field which is relatively perfect to any given PL Morse function on a simplicial combinatorial manifold with dimension . In a similar vein, we construct a discrete gradient vector field by considering the lower stars of individual vertices of .

Some of the key differences in our algorithm are that (a) we are not dimensionally restricted, (b) is not generally a simplicial complex, and (c) we do not rely on nonconstructive existence theorems to assign local gradient vector fields. Instead, we exploit specific combinatorics of (as given in Section 3.2) to constructively produce the desired local pairings. To our knowledge, constructions establishing discrete gradient vector fields on polyhedral complexes with associated PL Morse functions are relatively unexplored.

4.1 Discrete Morse theory on unbounded polyhedral complexes

Before we introduce our construction, we must justify why it is reasonable to use the tools of discrete Morse theory on the polyhedral complex , which is not formally a cellular complex due to the presence of unbounded cells. In fact, we will construct a discrete gradient vector field on an associated -complex with no unbounded cells.

Definition 16 ().

The Complete Lower Star Complex relative to is the subcomplex of containing all cells which are bounded above, i.e.

The one-point compactification of , which we call the one-point compactified complete lower star complex relative to is denoted . The distinguished point is formally assigned a function value .

Remark 3.

is indeed a subcomplex of , as if is a polyhedron satisfying , then this is true of all of its faces as well. Furthermore, even though contains unbounded cells, is a regular -complex.

In Theorem 3 we will identify a discrete Morse function on with a single connected component appearing at . This new discrete Morse function will be relatively perfect to the PL function on the subset of given by . That this construction does not capture gradient information on cells whose image in is not bounded above is not a large problem: the homotopy type of the sublevel sets of only changes at , for a vertex of .

4.2 Networks which are injective on vertices

We start our construction locally, by showing that, for a given vertex in , it is always possible to generate pairings in the local lower star of which reflect the PL-criticality of .

Lemma 8.

Let be a fully-connected, feedforward ReLU neural network which is injective on vertices of . Then for each vertex in , there is a pairing in the local lower star of relative to satisfying exactly one of the two following conditions:

1. If is a vertex of that is PL regular, then there exists a choice of complete acyclic pairing of cells in the local lower star of relative to .

2. If is a vertex of that is PL critical of index , then there exists a choice of acyclic pairings of cells in the local lower star of which leaves exactly one -cell unpaired.

Furthermore, these pairings can be constructed algorithmically.

Proof.

As is injective on vertices, all edges have a -orientation and is PL Morse. For any vertex , following Section 3.2 there exists a compact set for which is a -dimensional cross-polytope, and the cells of this cross polytope are in one to one correspondence with the cells in . Through this correspondence, a pairing on the cells of may be used to induce a pairing on the cells of . This correspondence restricts to a correspondence between and .

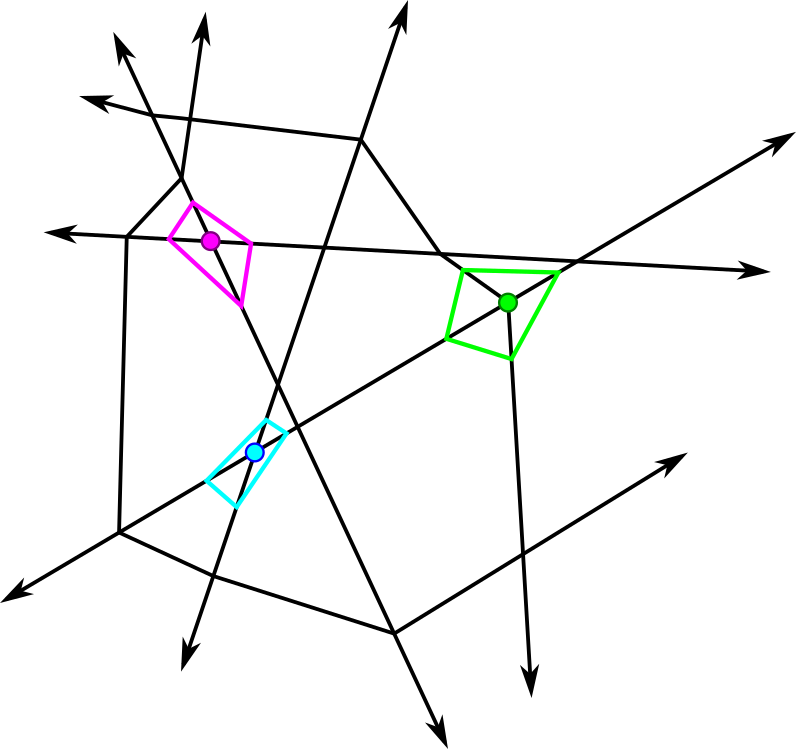

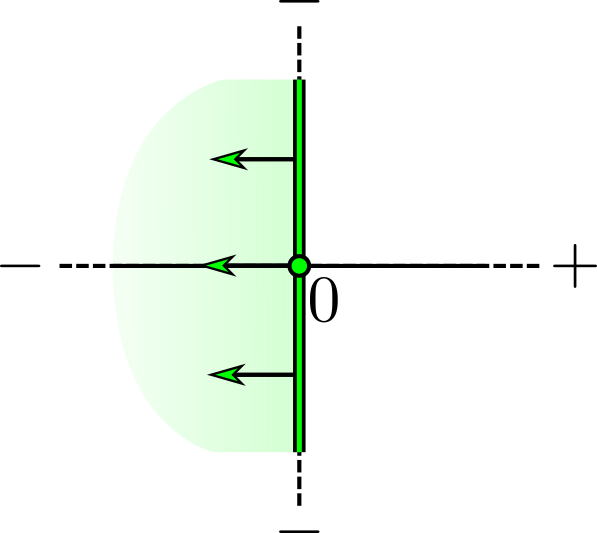

If is regular (Figure 8). Let be a PL regular point. Then there is at least one pair of edges for which and (or vice versa) (due to [19], Theorem 3.7.3). Assume without loss of generality it is (as local relabeling does not change the combinatorics).

Let denote the bounding vertex of in which is not . We may view as the cone of over , where is the -dimensional cross-polytope obtained when the antipodal edges and are removed from : i.e.,

We may obtain a discrete vector field on by pairing a -dimensional cell with the corresponding dimensional cell . This gives a complete pairing of all cells in .

To see that is acyclic, note that, by construction, any path within contains exactly one pair. For any choice of as an initial pair in a path, we have . Any choice of and has the property contains , so that is paired with a codimension one face in . This ensures that any path terminates after one pair.
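The regular case can be sketched in sign-vector terms. The encoding below is an assumption made for illustration, not the paper's notation: a cell in the star of the vertex is a tuple in {-1, 0, 1}^n relative to the n zero entries of the vertex's sign sequence, with the zero tuple standing for the vertex itself, and `descending[i]` records whether the function decreases away from the vertex along the negative and positive edge in coordinate direction i. A cell then lies in the lower star exactly when all of its nonzero entries point along descending edges.

```python
from itertools import product

def lower_star(descending):
    """Cells of the local lower star as sign vectors.

    descending[i] = (minus_descends, plus_descends) for coordinate i.
    """
    n = len(descending)
    return [s for s in product((-1, 0, 1), repeat=n)
            if all(e == 0 or descending[i][(e + 1) // 2]
                   for i, e in enumerate(s))]

def regular_pairing(descending):
    """Pair each lower-star cell with the cell obtained by toggling one entry.

    The toggled coordinate is the first one whose two edges disagree, which
    exists when the vertex is PL regular in this model.
    """
    pivots = [i for i, (minus, plus) in enumerate(descending) if minus != plus]
    if not pivots:
        raise ValueError("no mixed coordinate: vertex is not regular in this model")
    i = pivots[0]
    sigma = 1 if descending[i][1] else -1  # sign of the descending edge
    return [(s, s[:i] + (sigma,) + s[i + 1:])
            for s in lower_star(descending) if s[i] == 0]
```

Every pair consists of a cell and a coface of one dimension higher, and each lower-star cell appears in exactly one pair, so a path in this pairing stops after a single pair, mirroring the argument above.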

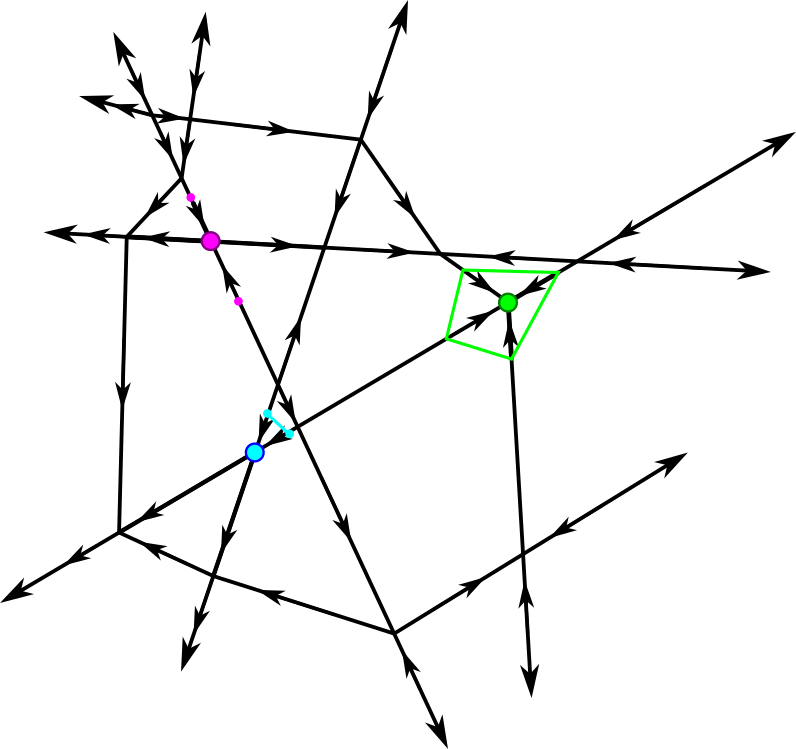

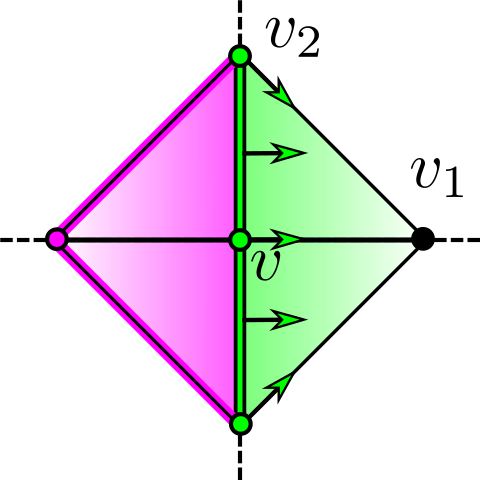

If is critical (Figure 9). Now suppose is critical of index . Let be a local lower link of . Then has the combinatorics of the coordinate axes of , and is the cone of with . Together, form a -dimensional simplicial complex, call it , whose underlying set is a -dimensional cross-polytope.

Selection step. For each coordinate direction , there is a pair of edges and in whose sign sequences differ by opposite signs in a single entry. The choice of labeling of determines . These edges intersect on opposite sides of in vertices . For each , let be a vertex selected from (distinguishing a single quadrant of ). Observe that each has an opposite vertex given by the other vertex in this set, which we will call . Once the choice of ordering coordinate directions and choosing a positive direction in each coordinate direction is complete, no other choices need to be made.

Pairing construction algorithm. We can then recursively assign the following pairings on . Recursively for , for each in (relative to ), add the pairing if has not yet been paired. These pairings restrict to a pairing on .

This operation constructs a discrete vector field. Namely, it does not try to pair any cell twice. Observe that at step if in is in the and has not yet been paired, then we claim has also not yet been paired. This is because each step adds cells which comprise the union of the star and the link of a single vertex in , a simplicial complex; this union is always a simplicial complex. If a cell has not been added to this union, then no cell it is the boundary of could have been added to the union either. Thus each cell is included in at most one pair of cells in the pairing, as is needed.

All cells in get paired except one, of dimension . By construction, the union of the cells of the resulting pairing is the union of the stars and links of the vertices in . Since is precisely all cells in which do not contain (and vice versa), we see that the only cell in which is not paired is the unique cell which contains none of the and is not in the stars of any of the . This is the interior of the simplex given by , which is a -simplex, as desired.

The resulting construction is acyclic. Let be a pair in arising from this algorithm. Then by construction for some satisfying .

Now we consider the possibilities for the next pair in the -path, . We know must be a codimension 1 element of the boundary of that is not . It also must be paired with a higher-dimensional coface.

Let be a candidate for , that is, an arbitrary codimension 1 element of the boundary of that is not . We observe that, as a boundary element of which is not ,

where is a codimension-one boundary element of .

Consider the step at which was added to the pairing.

If had not yet been added to the pairing by step , then contains for all (as otherwise would be in the star or link of ). Thus also contains for all as well, and also must not have been paired by step . If was not a member of a pair by step , then is a -pair, and is added to the pairing at step . Thus, is not the first element of a pairing and cannot be in a -path.

We conclude that if is a -path within the local lower star of , then was paired at a strictly earlier step than . As a result, contains no cycles.

∎

Remark 4.

Observe that the only choice made was in the Selection Step. To make the Selection Step deterministic and dependent on the values of , we observe that can “morally” be selected to be on those edges whose opposing vertex has the lowest value in , or if there is no opposing vertex, whose unbounded edge has the steepest directional derivative. However, the same result follows regardless of whether we made the “moral” choice. In fact, as we discuss in Section 5, it is potentially more computationally convenient to be amoral (in this sense).

We now are able to “stitch together” the local pairings on the lower stars of the vertices for a global discrete vector field which satisfies the desired properties, following a similar approach as in [6].

Theorem 3.

Let be a vertex in and let be an acyclic discrete vector field on obtained by the construction in Lemma 8. Then is a relatively perfect discrete gradient vector field to on , with a critical -cell.

Proof.

The lower stars of each of the vertices of are disjoint, and the union of all the lower stars of all the vertices of is .

is a valid discrete gradient vector field. Suppose we have a -path . If this path consists entirely of cells in the lower star of some fixed vertex then by Lemma 8 it is acyclic.

Otherwise, some pair satisfies the condition that is in the lower star of a different vertex than ; call these and respectively. (Observe that we can make this statement because each -pair is contained in the lower star of a single vertex, by construction.) As is also in the lower star of , is bounded above by . In particular, as is a face of , must also be bounded above by . Since is not in the lower star of (and is in the lower star of ), we conclude that is strictly less than . As a result, no -path can return to the lower star of a vertex once it has left it.

By construction has exactly one critical -cell for each vertex which is a PL critical point of with index , and a critical -cell with -value . Each critical -cell has maximal value given by for its corresponding vertex . As the vertex is an index- PL critical point, is rank 1 ([8]); this is precisely what is needed for to be relatively perfect to (Definition 9).

∎

In summary, the results of this section demonstrate a constructive algorithm for producing a relatively-perfect discrete gradient vector field to on the cells of .

5 Computational considerations

Computational implementation of the algorithm in Theorem 3 would rely upon identifying whether a vertex in is PL Morse, which involves computing the sign of the gradient on each edge incident to a vertex. Gradients are a local computation, but until now, identifying edges and vertices of this polyhedral complex computationally relied upon an algorithm which globally computes the location and sign sequence of all vertices of . This is inefficient if, for example, we wish to follow the local gradient flow and identify reasonable critical cells locally. In this section, we discuss algorithms which may be used to compute the -orientation of edges of locally. This computation allows us to construct the pairing from Theorem 3 locally to a given vertex, including whether this vertex is critical.

5.1 Partial Derivatives along Edges

Here we develop an analytic description of the gradient of restricted to a cell of , determined by the sign sequence of (Lemma 9). This gradient may then be used to explicitly compute a vector in the direction , that is, from a vertex to an incident edge for any vertex-edge pair in (Lemma 10). By multiplication, we may locally obtain the -orientation on (Corollary 3).

Lemma 9.

Let be a supertransversal neural network given by

Let be any top-dimensional cell of . Then,

where , are the linear weight matrices of and , respectively, and is the diagonal matrix with in each diagonal entry, and ranges from to .

Proof.

By construction is affine, and in fact each intermediate is also affine. Recall that each layer map is given by

where is an affine function and ReLU is the function applied coordinatewise.

By definition, if and only if . This is an affine map. If for a weight matrix and bias vector , then we note that is given by

where is the diagonal matrix with in each diagonal entry, where ranges from to (each of the output entries of ). This resulting map is affine. By composing these layer maps, we can express as

where is determined by expanding the composite matrix multiplication (and will ultimately not matter). As a result,

We now have an equation for . Letting (the last layer of ), we obtain the total gradient of given by followed by , for .

where is the weight matrix of the final affine function , as desired.

∎
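The content of Lemma 9 can be checked numerically on a small example. The sketch below assumes the usual reading of the lemma: on a top-dimensional cell, the gradient is the product of the final weight matrix with the hidden weight matrices, each masked by a diagonal 0/1 matrix that records which neurons are active on that cell. The network weights and the function names `forward` and `cell_gradient` are hypothetical.

```python
def matvec(M, x):
    return [sum(a * b for a, b in zip(row, x)) for row in M]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def forward(layers, final, x):
    """Evaluate the network and record the 0/1 activation pattern per layer.

    layers: list of (W, b) hidden layers followed by ReLU; final: affine (W, b).
    """
    pattern = []
    for W, b in layers:
        pre = [p + c for p, c in zip(matvec(W, x), b)]
        pattern.append([1 if p > 0 else 0 for p in pre])
        x = [max(p, 0.0) for p in pre]
    W, b = final
    return [p + c for p, c in zip(matvec(W, x), b)], pattern

def cell_gradient(layers, final, pattern):
    """Product W_final D_m W_m ... D_1 W_1 with D_i = diag(pattern[i])."""
    J = None
    for (W, _), active in zip(layers, pattern):
        DW = [[a * w for w in row] for a, row in zip(active, W)]  # D_i W_i
        J = DW if J is None else matmul(DW, J)
    return matmul(final[0], J)
```

A finite-difference comparison at a point in the interior of a cell is a reasonable sanity check, since the function is affine there and the difference quotient agrees with the product up to rounding.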

We can obtain the gradient of along edges of by first identifying the direction of , which involves solving an system of equations.

Lemma 10.

Let be a supertransversal, generic neural network and let be a vertex of . Let be an edge of incident to . Let be sign sequences of and respectively. Let denote the positive ray of vectors spanned by a vector beginning at and ending at , a point in .

Let , consisting of the tuples for which . Distinguish as the unique coordinate for which .

Denote by the matrix whose th row is given by

Then

where is any positive scalar and is the standard basis vector in with a in the entry and elsewhere.

Proof.

We begin by finding a system of equations that satisfies, determined by its sign sequence.

Letting be the sign sequence of , let be the cell containing and whose sign sequence is obtained by replacing with for all entries that . By Lemma 4, contains (and ).

Since we can express the location of in by the solution of the system of equations given by equations obtained from the th rows of the th intermediate layer maps for each pair where :

| (1) |

Simultaneously, along every point in , we have (assuming without loss of generality that ) that

with all other equations in Equation 1 satisfied exactly.

Now consider the direction , that is, the direction . In each of the pairs for which , we have:

Selecting any value for with the appropriate sign gives a system of equations whose solution is a vector in the same direction , that is, . ∎

Corollary 3.

Let be a supertransversal neural network and be a vertex of . Let be an edge incident to . Let be the sign sequences of and , respectively. Denote by the partial derivative of in the direction .

Then,

where is the layer and neuron, respectively, for which .

Proof.

Multiply.

∎
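Lemma 10 and Corollary 3 together suggest a two-step local computation: solve a small linear system for the edge direction, then take an inner product with the gradient on an adjacent cell. The sketch below is a minimal illustration under stated assumptions: `zero_rows` holds the gradients of the neurons that vanish at the vertex (one row per zero entry of its sign sequence), `j` is the row of the one neuron that becomes nonzero along the edge, and `sign` is that neuron's sign on the edge. The names `solve`, `edge_direction` and `orientation` are hypothetical, and the solver is plain Gauss-Jordan elimination rather than anything prescribed by the paper.

```python
def solve(M, rhs):
    """Solve M x = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [list(map(float, row)) + [float(r)] for row, r in zip(M, rhs)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[p][c]) < 1e-12:
            raise ValueError("singular system: the vertex is not generic")
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(n):
            if r != c:
                f = aug[r][c] / aug[c][c]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[c])]
    return [aug[i][n] / aug[i][i] for i in range(n)]

def edge_direction(zero_rows, j, sign):
    """A vector pointing from the vertex into the edge (any positive scale)."""
    rhs = [0.0] * len(zero_rows)
    rhs[j] = float(sign)
    return solve(zero_rows, rhs)

def orientation(cell_grad, direction):
    """Sign of the directional derivative of the function along the edge."""
    d = sum(g * x for g, x in zip(cell_grad, direction))
    return (d > 0) - (d < 0)
```

As a usage example, take the lines y = 0 and 1 - x - y = 0 meeting at the point (1, 0): `edge_direction([[0.0, 1.0], [-1.0, -1.0]], 1, -1)` gives a vector along the positive x-axis, and `orientation` applied with the gradient of an adjacent cell returns the sign that determines the orientation of that edge.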

Remark 5.

Observe that obtaining the sign of involves a total complexity of matrix multiplications (followed by ReLU coordinatewise), storing rows of the intermediates to obtain a system of equations to solve for . This process is comparable in complexity to evaluating at a point as long as is relatively low.

5.2 Following Gradient Flow

Here we describe an algorithm which can be used to locally compute a discrete gradient vector field at a single vertex for a fully-connected, feedforward ReLU neural network on without having computed all cells of .

In other words, suppose we have a cell with known sign sequence , and we wish to identify a pairing for in a vector field compatible with while relying on a minimal number of evaluations of or at individual points or along individual edges, respectively (as both computations are of similar complexity).

Knowing and the weights of gives an explicit set of linear inequalities which bound . The maximal (or minimal) value of (or any affine function) on can be identified via linear programming. In practice, applying a solver which uses the simplex algorithm [15] will quickly identify not only the vertex where the maximum (or minimum) of on is obtained, but also identifies the equations giving its precise location. Setting the signs of in the entries corresponding to those equations to zero will identify the sign sequence .

The zero entries of have an order determined by the existing parameter order of ; denote as the sign of in the th layer and th neuron (th coordinate direction in ). Ordering the entries of in lexicographic order induces an ordering on edges incident to by first ordering by which entry of does not equal that of , and second by whether the entry is negative or positive.

In order to apply Lemma 8 to we must evaluate the directional derivative of along each of the edges incident to whose sign sequences can be constructed. This can be done analytically via Corollary 3. If is regular, then take to be the first edge (with respect to the ordering ) where is negative but is positive; denote its sole additional nonzero entry the -th entry.

Then if , we have that is paired with its coface obtained by replacing in index with . In the case where the cell is paired with its face obtained by replacing with .
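The ordering and the regular-vertex pairing rule described above can be sketched as follows. This is an illustration under assumptions: zero entries of the vertex's sign sequence are indexed by hypothetical `(layer, neuron)` tuples, the negative edge is listed before the positive one within each entry, and `outward_derivative` is a dictionary of directional derivatives along each incident edge (as could be obtained via Corollary 3). A cell in the lower star is represented by its signs at the zero entries of the vertex.

```python
def ordered_edges(zero_entries):
    """Edges at the vertex in lexicographic order: (layer, neuron, sign)."""
    return [(layer, neuron, sign)
            for layer, neuron in sorted(zero_entries)
            for sign in (-1, 1)]

def pivot_edge(zero_entries, outward_derivative):
    """First edge that descends while its opposite edge ascends, if any."""
    for layer, neuron, sign in ordered_edges(zero_entries):
        along = outward_derivative[(layer, neuron, sign)]
        opposite = outward_derivative[(layer, neuron, -sign)]
        if along < 0 < opposite:
            return (layer, neuron, sign)
    return None  # no such edge: the vertex is not regular in this model

def partner(cell, pivot):
    """Toggle the pivot entry of a lower-star cell between 0 and the pivot sign."""
    layer, neuron, sign = pivot
    entry = cell[(layer, neuron)]
    if entry not in (0, sign):
        raise ValueError("cell is not in the lower star of the vertex")
    paired = dict(cell)
    paired[(layer, neuron)] = sign if entry == 0 else 0
    return paired
```

Applying `partner` twice returns the original cell, so the rule defines a matching on the cells to which it applies; only the derivatives along edges at the one vertex are needed to evaluate it.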

If is critical of index , then take the critical -cell to be the cell obtained by replacing the zero entries of that identify the cells in by . This gives an ordering and labeling of the edges for given by the restriction of to these edges. This completes the Selection Step, and the algorithm may proceed.

In this way, in order to identify a pairing to which belongs, we only need to 1) optimize on , then 2) evaluate directional derivatives of . The pairings are, in this sense, not determined by the value of on neighbors of , but instead the choice of ordering of the coordinates in each layer’s and the relative orientations of their corresponding bent hyperplanes at .

6 Conclusion

In this work, we introduce a schematic for translating between a given piecewise linear Morse function on a canonical polyhedral complex and a compatible (“relatively perfect”) discrete Morse function. Our approach is constructive, producing an algorithm that can be used to determine if a given vertex in a canonical polyhedral complex corresponds to a piecewise linear Morse critical point, and furthermore an algorithm for constructing a consistent pairing on cells in the canonical polyhedral complex which contain this vertex. However, though we discuss the principles necessary, we leave explicit computational implementation and experimental observations for future work.

As discussed in [8], not all ReLU neural networks are piecewise linear Morse, and this is a limitation of our work. Neural networks with “flat” cells (on which is constant) are not addressed by our algorithm. This work also defines homological tools which can be used to describe the local change in sublevel set topology at a subcomplex of flat cells; however, extensive technical work is needed to provide a direct analog between the cellular topology of and the relevant sublevel set topology. We also leave this to future work.

We have reason to believe that our proposed algorithm is applicable to any setting in which the star neighborhoods of the vertices of a PL manifold with the structure of a polyhedral complex are locally combinatorially equivalent to a cross-polytope. The only broad class of functions we are aware of that satisfies these conditions is that of ReLU neural networks and similar networks (for example, leaky ReLU networks or piecewise linear neural networks with activation functions that have several nonlinearities).

We intend that this work be used to develop further theoretical and computational tools for analyzing neural network functions from topological perspectives.

7 Acknowledgements

Many thanks to Eli Grigsby, without whom we may have never put our heads together.

References

- [1] Randall Balestriero, Romain Cosentino, Behnaam Aazhang, and Richard Baraniuk. The geometry of deep networks: Power diagram subdivision. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 32. Curran Associates, Inc., 2019.

- [2] Brian Brost, Jesper Michael Møller, and Pawel Winter. Computing Persistent Homology via Discrete Morse Theory. Technical report, 2013.

- [3] Ekin Ergen and Moritz Grillo. Topological expressivity of ReLU neural networks, 2024.

- [4] Robin Forman. Morse theory for cell complexes. Advances in Mathematics, 134(1):90–145, 1998.

- [5] Robin Forman. A user’s guide to discrete Morse theory. Sém. Lothar. Combin., 48:Art. B48c, 35, 2002.

- [6] Ulderico Fugacci, Claudia Landi, and Hanife Varlı. Critical sets of PL and discrete Morse theory: A correspondence. Computers & Graphics, 90:43–50, 2020.

- [7] J. Elisenda Grigsby and Kathryn Lindsey. On transversality of bent hyperplane arrangements and the topological expressiveness of ReLU neural networks. SIAM Journal on Applied Algebra and Geometry, 6(2):216–242, 2022.

- [8] J. Elisenda Grigsby, Kathryn Lindsey, and Marissa Masden. Local and global topological complexity measures of ReLU neural network functions. Preprint arXiv:2204.06062, 2022.

- [9] Romain Grunert. Piecewise Linear Morse Theory. PhD thesis, 2017.

- [10] William H. Guss and Ruslan Salakhutdinov. On characterizing the capacity of neural networks using algebraic topology. CoRR, abs/1802.04443, 2018.

- [11] Boris Hanin and David Rolnick. Deep ReLU networks have surprisingly few activation patterns. In Advances in Neural Information Processing Systems (NeurIPS), 2019.

- [12] Shaun Harker, Konstantin Mischaikow, and Kelly Spendlove. Morse Theoretic Templates for High Dimensional Homology Computation. May 2021.

- [13] Patricia Hersh. On optimizing discrete Morse functions. Advances in Applied Mathematics, 35(3):294–322, 2005.

- [14] Matt Jordan, Justin Lewis, and Alexandros G Dimakis. Provable certificates for adversarial examples: Fitting a ball in the union of polytopes. Advances in neural information processing systems, 32, 2019.

- [15] Howard Karloff. The Simplex Algorithm, pages 23–47. Birkhäuser Boston, Boston, MA, 1991.

- [16] Thomas Lewiner. Critical sets in discrete Morse theories: Relating Forman and piecewise-linear approaches. Computer Aided Geometric Design, 30(6):609–621, 2013. Foundations of Topological Analysis.

- [17] Thomas Lewiner, Hélio Lopes, and Geovan Tavares. Toward optimality in discrete Morse theory. Experimental Mathematics, 12, 01 2003.

- [18] Marissa Masden. Algorithmic determination of the combinatorial structure of the linear regions of ReLU neural networks. Preprint arXiv:2207.07696, 2022.

- [19] Marissa Masden. Accessing the Topological Properties of Neural Network Functions. PhD thesis, University of Oregon, 2023.

- [20] John Willard Milnor. Morse theory. Number 51. Princeton University Press, 1963.

- [21] Vanessa Robins, Peter John Wood, and Adrian P. Sheppard. Theory and algorithms for constructing discrete Morse complexes from grayscale digital images. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(8):1646–1658, 2011.

- [22] C P Rourke and B J Sanderson. Introduction to piecewise-linear topology. Springer Study Edition. Springer, Berlin, Germany, January 1982.