Communication-Efficient Local SGD with Age-Based Worker Selection

Abstract

A major bottleneck of distributed learning under the parameter-server (PS) framework is the communication cost due to frequent bidirectional transmissions between the PS and workers. To address this issue, local stochastic gradient descent (SGD) and worker selection have been exploited to reduce the communication frequency and the number of participating workers at each round, respectively. However, partial participation can be detrimental to the convergence rate, especially for heterogeneous local datasets. In this paper, to improve communication efficiency and speed up the training process, we develop a novel worker selection strategy named AgeSel. The key enabler of AgeSel is the use of the ages of workers to balance their participation frequencies. The convergence of local SGD with the proposed age-based partial worker participation is rigorously established. Simulation results demonstrate that the proposed AgeSel strategy can significantly reduce the number of training rounds needed to achieve a targeted accuracy, as well as the communication cost. The influence of the algorithm hyper-parameter is also explored to demonstrate the benefit of age-based worker selection.

Index Terms:

Local SGD, communication efficiency, age-based worker selection, distributed learning

I Introduction

The parameter-server (PS) setting is one of the most popular paradigms in distributed machine learning. In this setting, the PS broadcasts the current global model parameter to the workers for gradient computation and aggregates the computed gradients to update the global model. The operation repeats until some targeted convergence criterion is reached [1, 2, 3, 4]. However, the massive communication overhead between the PS and the workers has become the main bottleneck of the overall system performance as the sizes of neural networks grow exponentially [5].

To address the communication issue, [6] proposes a widely studied algorithm, named federated averaging (FedAvg), where the PS randomly selects a subset of workers and sends the global model to the selected workers for a certain number of local updates at each round. The PS then collects the latest local models to update the global model and re-sends it to the re-selected workers for local updates again. By decreasing the communication frequency and the number of participating workers, FedAvg can achieve improved communication efficiency, with its convergence analysis extensively studied under both homogeneous and heterogeneous data distributions in, e.g., [7, 8, 9, 10, 11, 12]. Additionally, [13] proposes a robust aggregation scheme to improve the performance of FedAvg.

To further improve communication efficiency, instead of random selection as in FedAvg, adaptive worker selection has attracted increasing attention recently. One of the representative works on adaptive selection in SGD-based distributed learning is [14], in which a worker is required to upload its gradient only if its contribution (i.e., the change to the global model) is large enough or its local gradient has become overly stale. The asserted optimal client selection strategy (OCS) is further developed in [15] by selecting the workers with larger gradient norms to minimize the variance of the global update. In addition, another metric of “contribution”, i.e., the local loss of workers, is also explored as a criterion for worker selection design [16, 17, 18].

On the other hand, imbalanced participation could render the training unstable or slow, especially under heterogeneous data distribution, since the influence of some parts of the data on the overall training process is weakened [19, 20]. Hence, the age of each worker, indicating the number of consecutive rounds where it has not participated in the computation, could be taken into consideration by adaptive selection techniques. To this end, [22] explores the influence of ages in the gradient descent (GD) scenario. For distributed learning over wireless connections, [21] jointly leverages the channel quality and the ages of workers to improve the communication efficiency. With a perfect lossless channel, the scheme in [21] reduces to the Round Robin policy. Moreover, [23] finds the age-optimal number of workers to select at each round, where the age is defined as the sum of computation time and uplink transmission time.

In this paper, we propose a novel age-based worker selection strategy for local SGD. In the proposed scheme, which we call AgeSel, a simple age-based mechanism is implemented such that workers are forced to be selected if they have not been involved for a certain number of consecutive rounds. Different from existing adaptive worker selection strategies, the proposed AgeSel relies on age information that is readily available at the PS without additional communication and computation overhead. The convergence of AgeSel is also rigorously established to justify the benefit of age-based partial worker participation. The simulation results corroborate the superiority of AgeSel over state-of-the-art schemes in terms of communication efficiency and the number of training rounds required to achieve a targeted accuracy.

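Notations. $\mathbb{R}$ denotes the real number field; $\mathbb{E}$ denotes the expectation operator; $\|\cdot\|$ denotes the norm; $\nabla f$ denotes the gradient of function $f$; $\cup$ denotes the union of sets; $\mathcal{A} \subseteq \mathcal{B}$ represents that set $\mathcal{A}$ is a subset of set $\mathcal{B}$; and $|\mathcal{A}|$ denotes the size of set $\mathcal{A}$.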

II Problem Formulation

II-A System Model

Consider the PS-based framework of distributed learning with heterogeneous data distribution. There are distributed workers in set . Each worker maintains a local dataset of size . It is drawn from the global dataset , i.e., we have . Our objective is to minimize the weighted loss function

| (1) |

where is the -dimension parameter to be optimized, and we have the definition , with being the local loss function of worker and being the sample drawn randomly from its local dataset .

To elaborate on local SGD, we define as the global model parameter at training round , and as the local model of worker before operating local updates. At each training round , a subset of workers are selected to download the global model from the PS prior to computation. After that, each selected worker in sets its local model as and starts operating local iterations, with the updating formula given as

| (2) |

for any local iteration . Here is the stepsize and is the minibatch gradient to be computed by worker at local iteration , where is the minibatch size and is a sample drawn independently across iterations from the local dataset .

After all the workers in set have completed their local computations, a subset of workers are selected to upload their latest models, and the PS aggregates the models

| (3) |

Note that here we employ the unbiased aggregation since the weight of each worker is reflected in the worker selection process, as will be specified later.
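A minimal sketch of one training round may help make the download, local-update, and aggregation steps concrete. It assumes a toy scalar least-squares model, takes the aggregation in (3) to be a plain average of the uploaded models, and lets every worker that computes also upload. The function names (`minibatch_grad`, `local_sgd_round`), the toy datasets, and all numerical values are illustrative assumptions and are not taken from the paper.

```python
import random

def minibatch_grad(theta, batch):
    """Gradient of the mean of 0.5 * (theta * x - y)^2 over a minibatch."""
    return sum((theta * x - y) * x for x, y in batch) / len(batch)

def local_sgd_round(theta_global, datasets, selected, num_local_steps,
                    stepsize, batch_size, rng):
    """One round: each selected worker starts from the global model, runs
    local SGD steps, and the server averages the resulting local models."""
    local_models = []
    for m in selected:
        theta = theta_global  # download the global model
        for _ in range(num_local_steps):
            batch = rng.sample(datasets[m], batch_size)
            theta -= stepsize * minibatch_grad(theta, batch)
        local_models.append(theta)  # upload the latest local model
    # Assumed form of the aggregation: unweighted average of uploaded models.
    return sum(local_models) / len(local_models)

rng = random.Random(0)
# Hypothetical heterogeneous data: each worker sees a different input range,
# all generated from the same underlying slope 2.0.
datasets = {
    m: [(x, 2.0 * x) for x in [rng.uniform(m, m + 1) for _ in range(20)]]
    for m in range(4)
}
theta = 0.0
for _ in range(50):
    selected = rng.sample(range(4), 2)  # placeholder for any selection rule
    theta = local_sgd_round(theta, datasets, selected, num_local_steps=5,
                            stepsize=0.05, batch_size=5, rng=rng)
```

The uniform `rng.sample` call in the loop is only a stand-in; any worker selection rule that returns a list of worker indices can be substituted there.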

The training process ends when some stopping criterion is satisfied, with the total number of rounds denoted as .

II-B Performance Metrics

To gauge the efficiency of the proposed scheme, we are interested in two performance metrics, i.e., the number of training rounds and the communication cost required to reach a targeted test accuracy.

Firstly, the number of training rounds of the algorithm to achieve a targeted test accuracy is used to reflect the training speed, as well as the communication cost of the algorithm.

Secondly, we define the communication cost at training round as the number of communication rounds between the PS and the workers, given as

| (4) |

This is due to the fact that the size of the global parameter downloaded by the workers is the same as that of the latest parameter uploaded by each selected worker. As a result, the total communication cost is given as .
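As a rough illustration of this metric, the sketch below counts one unit of cost per model transmission, so the per-round cost is assumed to be the number of downloading workers plus the number of uploading workers. The function names and the example numbers are hypothetical and serve only to show the bookkeeping.

```python
def round_cost(num_downloading, num_uploading):
    """Per-round cost: one unit per model download plus one unit per upload."""
    return num_downloading + num_uploading

def total_cost(per_round_counts):
    """Total cost: sum of per-round costs over all training rounds."""
    return sum(round_cost(d, u) for d, u in per_round_counts)

# Hypothetical example with 100 workers, of which 10 upload per round.
# Scheme A: only the 10 selected workers download and upload.
# Scheme B: all 100 workers download, but only 10 upload.
cost_a = total_cost([(10, 10)] * 30)   # 30 rounds
cost_b = total_cost([(100, 10)] * 20)  # 20 rounds
```

In this made-up example, scheme B finishes in fewer rounds yet accumulates a larger total cost (2200 versus 600), because every worker downloads the model in every round.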

These two metrics will be used in Section IV to compare the performance of different schemes.

III Adaptive Selection in Local SGD (AgeSel)

In this section, we first develop the novel AgeSel scheme that aims at improving communication efficiency by utilizing the age-based worker selection. Then the convergence of AgeSel is rigorously established.

III-A Algorithm Description

To elaborate on worker selection strategy in the proposed AgeSel, we define an -length vector to collect the ages of the workers, as in [14, 15]. Note that is maintained by the PS and initialized to be a zero vector. Each element of vector measures the number of consecutive rounds that worker has not been selected by the PS. Particularly, at each round , is updated as follows:

| (5) |

To identify the workers with low participation frequency, we pre-define a threshold . Accordingly, worker would be forced to participate when having its . The specific procedure of AgeSel is delineated next.
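The age bookkeeping described above can be sketched as follows. The sketch assumes that a worker's age is reset to zero in a round where it is selected and is incremented by one otherwise, which matches the stated meaning of the age as the number of consecutive rounds without selection. The function name `update_ages` is illustrative.

```python
def update_ages(ages, selected):
    """Return the new age vector after one round.

    ages:     list where ages[m] is the current age of worker m
    selected: iterable of worker indices selected in this round
    """
    selected = set(selected)
    # Assumed rule: reset on selection, otherwise grow by one round.
    return [0 if m in selected else age + 1 for m, age in enumerate(ages)]

ages = [0, 0, 0, 0]               # initialized to a zero vector at the PS
ages = update_ages(ages, [1, 3])  # workers 1 and 3 participate
ages = update_ages(ages, [0])     # worker 0 participates
```

After these two rounds, worker 2 has the largest age because it has not been selected at all; this is the kind of worker the threshold is meant to catch.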

At each round , the PS selects workers to perform computation by checking the vector . More precisely, with vector , we can identify all the infrequent workers with their ages greater than , which would be considered first. Let denote the number of these workers. The set of selected workers can be determined as follows. For the first case with , the PS simply picks the workers in an age-descending order. Note that the workers with larger local datasets are prioritized when there are ties in ages. For the second case with , the PS first picks all the infrequent workers and then chooses the rest without replacement from the workers having ages smaller than with probabilities proportional to the sizes of their datasets.

Once the set is determined, the PS then broadcasts the global parameter to all the selected workers. By initiating its local model with , each worker in then starts iterations of local updates through (2) and sends its latest model to the PS, i.e., we have . Eventually, the PS updates the vector , along with the global model aggregated via (3).
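The two-case selection rule can be sketched as below. This is an illustrative reading of the description, not the authors' implementation: it assumes that the first case applies when the number of infrequent workers is at least the number of workers to select, and that workers whose age equals the threshold belong to the weighted-sampling pool. The names `agesel_select`, `sizes`, `num_select`, and `threshold` are hypothetical.

```python
import random

def agesel_select(ages, sizes, num_select, threshold, rng):
    """Pick num_select workers from their ages and dataset sizes."""
    workers = range(len(ages))
    infrequent = [m for m in workers if ages[m] > threshold]

    if len(infrequent) >= num_select:
        # Case 1: take the oldest workers; larger datasets win ties in age.
        ranked = sorted(infrequent, key=lambda m: (-ages[m], -sizes[m]))
        return ranked[:num_select]

    # Case 2: take every infrequent worker, then fill the remaining slots by
    # sampling without replacement, with probability proportional to size.
    selected = list(infrequent)
    pool = [m for m in workers if ages[m] <= threshold]
    while len(selected) < num_select and pool:
        pick = rng.choices(pool, weights=[sizes[m] for m in pool], k=1)[0]
        selected.append(pick)
        pool.remove(pick)
    return selected

rng = random.Random(0)
ages = [5, 0, 7, 7, 1]
sizes = [10, 20, 30, 40, 50]
case1 = agesel_select(ages, sizes, num_select=2, threshold=3, rng=rng)
case2 = agesel_select(ages, sizes, num_select=4, threshold=3, rng=rng)
```

With these inputs, three workers exceed the threshold. Selecting two of them falls under the first case and returns the two oldest, with the tie in age broken by dataset size. Selecting four falls under the second case: all three infrequent workers are kept and one more is drawn from the remaining two by size-weighted sampling.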

Note that when is very large, there are barely any infrequent workers. The set is then chosen by weights (i.e., the sizes of datasets), as in the FedAvg algorithm [6]; in this case, AgeSel reduces to FedAvg.

Merits of AgeSel: The benefit from the age-based mechanism used in AgeSel is two-fold. First, it has been shown that less participation of some workers can be detrimental to convergence rate due to the lack of gradient diversity [20], especially for heterogeneous local datasets. Our age-based selection strategy can balance the worker participation, thereby preserving gradient diversity and ensuring the fast convergence. Second, generation of age information here incurs no extra communication and computation cost, in contrast to other information such as the (costly) norm of updates [15], used in existing alternatives. Hence, AgeSel is more communication- and computation-efficient.

III-B Convergence Analysis

We next establish the convergence of the proposed AgeSel algorithm under heterogeneous data distribution, with a general (not necessarily convex) objective function. Our analysis is based on the following two assumptions, which are widely adopted in related works such as [7, 8].

Assumption 1 (Smoothness and Lower Boundedness) Each local function is -smooth, i.e.,

| (6) |

. We also assume that the objective function is bounded below by .

Assumption 2 (Unbiasedness and Bounded Variance) For the given model parameter , the local gradient estimator is unbiased, i.e.,

| (7) |

Moreover, both the variance of the local gradient estimator and the variance of the local gradient from the global one are bounded, i.e., there exist two constants , such that

| (8) |

| (9) |

With these assumptions, we can derive an upper bound for the expectation of the average squared gradient norm , to prove the convergence of the proposed AgeSel. We start by presenting the following lemma.

Lemma 1: Under Assumptions 1-2 and is chosen such that , there exists a positive constant , such that

| (10) |

where we have defined , ; and is the cardinality of the set of workers selected by age at round , i.e., .

Proof.

The proof can be found in the appendix. ∎

Lemma 1 depicts the one-step difference of the objective function, from which we can see the impact of the age. Particularly, as grows larger, the variance term is reduced, while the variance term is increased. Therefore, the values of and , deciding jointly, have a significant impact on training speed, which will be further demonstrated in Section IV. Based on Lemma 1, we are able to arrive at the final convergence result.

Theorem 1: With the same conditions as in Lemma 1, we have

| (11) |

where we have defined the constant , and denotes the maximum number of rounds to traverse all the workers in due to the age-based mechanism.

Proof.

The proof can be found in the appendix. ∎

IV Simulation Results

In this section, we evaluate the effectiveness of the proposed AgeSel against state-of-the-art schemes including FedAvg [6], OCS [15] and Round Robin (RR) [25]. Note that AgeSel with being large reduces to FedAvg. Furthermore, when goes to zero, AgeSel is equivalent to RR. Since both and determine the value of , we will also explore the impact of the hyper-parameter on the performance of AgeSel.

We start by briefly introducing the three baseline algorithms. For FedAvg, the PS performs weighted selection according to the sizes of the local datasets and we have with . For OCS, the PS sends the global model to all the workers in to perform local computation and only the workers with larger contribution are selected, i.e., we have and . For RR, the workers are selected in a circular order with and the aggregation of the updates is weighted for fair comparison.

Simulation Setting. The dataset considered here is the EMNIST dataset. We aim to solve the image classification task with a two-layer fully connected neural network. There are workers in total and the data is heterogeneously distributed among them. Particularly, the samples of the dataset are sorted according to their labels and allocated to the workers in order with different sizes. The stepsize is 0.1; the batch size is 100 and the number of local updates performed per round is set to be 5. All the schemes stop training once the test accuracy reaches 80%. For comparison, the number of workers selected to upload their local parameters at each round in all schemes is designated to be . Moreover, we set for AgeSel.
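The label-sorted allocation can be sketched as follows, assuming that samples are sorted by label and then cut into consecutive shards of the requested sizes, one shard per worker. The function name `partition_by_label` and the tiny example are illustrative; the shard sizes used in the experiments are not specified here.

```python
def partition_by_label(labels, sizes):
    """Split sample indices into label-sorted, consecutive shards.

    labels: list of class labels, one per sample
    sizes:  number of samples assigned to each worker, in worker order
    """
    # Stable sort of sample indices by label, so each shard covers few classes.
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    shards, start = [], 0
    for size in sizes:
        shards.append(order[start:start + size])
        start += size
    return shards

labels = [2, 0, 1, 0, 2, 1]
shards = partition_by_label(labels, sizes=[1, 2, 3])
shard_labels = [[labels[i] for i in shard] for shard in shards]
```

In this toy case the first worker only holds samples of class 0 while the last worker holds mostly class 2, which is the kind of label skew that makes the local datasets heterogeneous.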

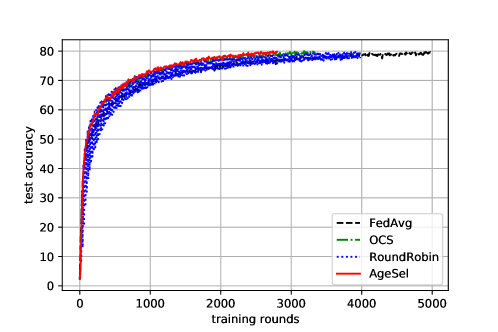

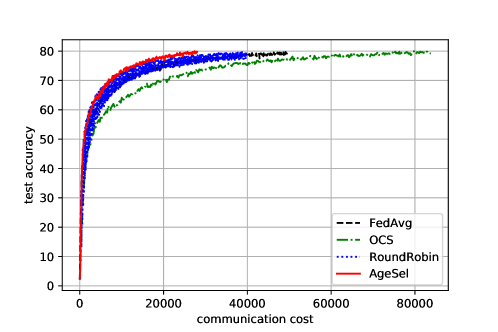

AgeSel Outperforms the State-of-the-Art Schemes in Both Performance Metrics. The performance of FedAvg, OCS, RR and AgeSel in terms of training rounds and communication cost over 10 Monte Carlo runs is depicted in Fig. 1 and Fig. 2, respectively.

As shown in Fig. 1, by considering either the weights (i.e., the sizes of datasets) or the ages only, FedAvg and RR require more training rounds than OCS and AgeSel in the presence of data heterogeneity. By selecting the workers with larger norms, OCS can converge faster. Clearly, AgeSel performs the best by achieving a desirable balance between ages and weights.

As illustrated in Fig. 2, OCS has the largest communication cost because all the workers download the global model while of them are eventually selected. As a result, the reduced training rounds cannot offset the increase of the per-round communication overhead, yielding a larger total cost than the other schemes. Since AgeSel requires fewer training rounds than FedAvg and RR, and they all have the same per-round communication cost, AgeSel achieves better communication efficiency than both FedAvg and RR. Overall, AgeSel is the most communication-efficient selection strategy among all these schemes.

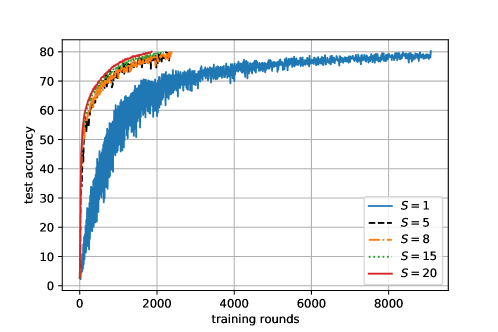

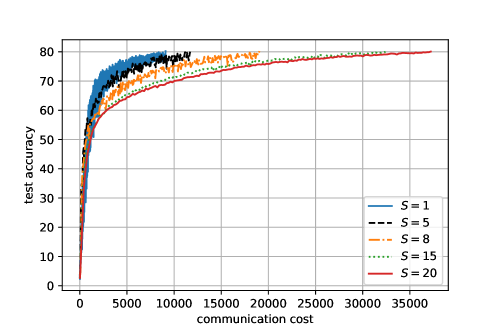

Exploration of . As illustrated in Fig. 3, when is larger than some value (e.g., ), the performance of AgeSel in terms of training rounds with partial participation becomes quite close to that of full participation (i.e., ). This implies that leveraging the age can indeed accelerate the training process. However, as increases, the per-round communication cost also monotonically increases, as shown in Fig. 4. From Figs. 1-4, we can see that, with proper values of and , AgeSel can strike a desirable balance between convergence speed and communication overhead.

V Conclusions

We developed a novel AgeSel strategy to perform adaptive worker selection for PS-based local SGD under heterogeneous data distribution. The convergence of AgeSel scheme was rigorously proved. Simulation results showed that AgeSel is more communication-efficient and converges faster than state-of-the-art schemes.

Note that AgeSel is compatible with other techniques to jointly improve the overall performance of distributed learning. To name a few, it can be combined with straggler-tolerant techniques to render the learning robust to stragglers; it can also be used together with variance-reduction algorithms to further accelerate the training process. These interesting directions will be pursued in future work.

VI Appendix

VI-A Proof of Lemma 1

With the -smoothness of the objective function in Assumption 1, by taking expectation of all the randomness over we have:

| (12) |

where is due to the definitions where and ; and can be obtained directly from (a1).

We then bound the terms and respectively. For the term , with the definition of the weighted global update and the unbiased global update , we have

| (13) |

where splits the selected workers at round into the ones selected by ages in set with and the ones selected by weights in set ; is because the selection by ages is essentially unweighted random selection in expectation and the selection by weights is equivalent to in expectation. The two terms in (13) are then bounded separately.

To bound the first term, we have:

| (14) |

where is derived from the definition of ; and come from direct computation; uses the fact that ; is due to Jensen's inequality and the Cauchy-Schwarz inequality; follows from Assumption 1; and is due to the fact that and [24, Lemma 3], which proves that

| (15) |

under the condition that , where and are two constants defined in Assumption 2.

Likewise, the second term in (13) can be bounded as below, with replaced by :

| (16) |

With denoting the indicator function, and denotes the complementary set of set in set , the term can be bounded as

| (18) |

where is due to the definition of ; comes directly from ; follows from the fact that and ; follows from the Cauchy-Schwarz inequality; is due to the fact that if s are independent with zero mean; is due to the definition that the subset of workers selected by ages at round is and ; is due to the Cauchy-Schwarz inequality.

Next, we bound the term in (18) and (14) as follows:

| (19) |

where is due to the Cauchy-Schwarz inequality and the bounded variance assumption; follows from (15); is due to the definition that and .

| (20) |

where comes from direct substitution; uses the result in (19); follows from direct computation; uses the fact that ; and follows from the fact that there exists a constant such that . The proof of Lemma 1 is then complete.

VI-B Proof of Theorem 1

With the age-based mechanism, we denote the minimum number of rounds to traverse all the workers in as , i.e., we have . By rearranging the terms in (21) and summing from , we can have:

| (22) |

where .

Thus, when is a multiple of , we can then readily write

| (23) |

where we used Assumption 1 and . The proof is then complete.

References

- [1] J. Dean, G. S. Corrado, R. Monga, C. Kai, and A. Y. Ng, “Large scale distributed deep networks,” in Proc. NeurIPS, vol. 25, 2012, pp. 1223–1231.

- [2] M. Li, D. G. Andersen, J. W. Park, A. J. Smola, and B. Y. Su, “Scaling distributed machine learning with the parameter server,” in Proc. USENIX OSDI, 2014, pp. 583–598.

- [3] X. Lian, C. Zhang, H. Zhang, C. J. Hsieh, W. Zhang, and J. Liu, “Can decentralized algorithms outperform centralized algorithms? A case study for decentralized parallel stochastic gradient descent,” in Proc. NeurIPS, vol. 30, 2017, pp. 5336–5346.

- [4] N. Yan, K. Wang, C. Pan, and K. K. Chai, “Performance analysis for channel-weighted federated learning in OMA wireless networks,” IEEE Signal Process. Lett., vol. 29, pp. 772–776, 2022.

- [5] L. Bottou, “Large-scale machine learning with stochastic gradient descent,” in Proc. COMPSTAT, 2010, pp. 177–186.

- [6] H. B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. Arcas, “Communication-efficient learning of deep networks from decentralized data,” in Proc. Artif. Intell. and Statist., 2017, pp. 1273–1282.

- [7] X. Li, K. Huang, W. Yang, S. Wang, and Z. Zhang, “On the convergence of FedAvg on non-IID data,” in Proc. ICLR, 2019.

- [8] H. Yang, M. Fang, and J. Liu, “Achieving linear speedup with partial worker participation in non-IID federated learning,” in Proc. ICLR, 2020.

- [9] F. Zhou and G. Cong, “On the convergence properties of a K-step averaging stochastic gradient descent algorithm for nonconvex optimization,” in Proc. Int. Joint Conf. Artif. Intell., 2018, pp. 3219–3227.

- [10] F. Haddadpour, M. M. Kamani, M. Mahdavi, and V. R. Cadambe, “Local SGD with periodic averaging: Tighter analysis and adaptive synchronization,” in Proc. NeurIPS, vol. 32, 2019, pp. 11082–11094.

- [11] S. Wang, T. Tuor, T. Salonidis, K. K. Leung, C. Makaya, T. He, and K. Chan, “Adaptive federated learning in resource constrained edge computing systems,” IEEE J. Sel. Areas Commun., vol. 37, no. 6, pp. 1205–1221, 2019.

- [12] H. Yu, S. Yang, and S. Zhu, “Parallel restarted SGD with faster convergence and less communication: Demystifying why model averaging works for deep learning,” in Proc. AAAI Conf. Artif. Intell., vol. 33, no. 01, 2019, pp. 5693–5700.

- [13] M. P. Uddin, Y. Xiang, J. Yearwood, and L. Gao, “Robust federated averaging via outlier pruning,” IEEE Signal Process. Lett., vol. 29, pp. 409–413, 2021.

- [14] T. Chen, Z. Guo, Y. Sun, and W. Yin, “CADA: Communication-adaptive distributed Adam,” in Proc. ICAIS, 2021, pp. 613–621.

- [15] W. Chen, S. Horvath, and P. Richtarik, “Optimal client sampling for federated learning,” Trans. Mach. Learn. Res., 2022.

- [16] M. Ribero and H. Vikalo, “Communication-efficient federated learning via optimal client sampling,” arXiv preprint arXiv:2007.15197, 2020.

- [17] Y. J. Cho, S. Gupta, G. Joshi, and O. Yağan, “Bandit-based communication-efficient client selection strategies for federated learning,” in Proc. Asilomar Conf. Signal, Syst., and Comput., 2020, pp. 1066–1069.

- [18] J. Goetz, K. Malik, D. Bui, S. Moon, H. Liu, and A. Kumar, “Active federated learning,” arXiv preprint arXiv:1909.12641, 2019.

- [19] S. Kaul, R. Yates, and M. Gruteser, “Real-time status: How often should one update?” in Proc. IEEE INFOCOM, 2012, pp. 2731–2735.

- [20] D. Yin, A. Pananjady, M. Lam, D. Papailiopoulos, K. Ramchandran, and P. Bartlett, “Gradient diversity: A key ingredient for scalable distributed learning,” in Proc. ICAIS, 2018, pp. 1998–2007.

- [21] H. H. Yang, A. Arafa, T. Q. S. Quek, and H. Vincent Poor, “Age-based scheduling policy for federated learning in mobile edge networks,” in Proc. IEEE ICASSP, 2020, pp. 8743–8747.

- [22] E. Ozfatura, B. Buyukates, D. Gündüz, and S. Ulukus, “Age-based coded computation for bias reduction in distributed learning,” in Proc. IEEE GLOBECOM, 2020, pp. 1–6.

- [23] B. Buyukates and S. Ulukus, “Timely communication in federated learning,” in Proc. IEEE INFOCOM, 2021, pp. 1–6.

- [24] S. J. Reddi, Z. Charles, M. Zaheer, Z. Garrett, K. Rush, J. Konečný, S. Kumar, and H. B. McMahan, “Adaptive federated optimization,” in Proc. ICLR, 2020.

- [25] H. H. Yang, Z. Liu, T. Q. Quek, and H. V. Poor, “Scheduling policies for federated learning in wireless networks,” IEEE Trans. Commun., vol. 68, no. 1, pp. 317–333, 2019.