Compensation Learning

Abstract—The weighting strategy prevails in machine learning. For example, a common approach in robust machine learning is to assign lower weights to samples that are likely to be noisy or quite hard. This study reveals another, under-explored strategy, namely, compensating. Various incarnations of compensating have been used, but the strategy itself has not been explicitly identified. Learning with compensating is called compensation learning, and a systematic taxonomy is constructed for it in this study. In our taxonomy, compensation learning is divided on the basis of the compensation targets, directions, inference manners, and granularity levels. Many existing learning algorithms, including some classical ones, can be viewed or understood at least partially as compensation techniques. Furthermore, a family of new learning algorithms can be obtained by plugging compensation learning into existing learning algorithms. Specifically, two concrete new learning algorithms are proposed for robust machine learning. Extensive experiments on image classification and text sentiment analysis verify the effectiveness of the two new algorithms. Compensation learning can also be used in various other learning scenarios, such as imbalance learning, clustering, and regression.

Index Terms—Sample weighting, Compensation learning, Robust machine learning, Learning taxonomy.

1 Introduction

In supervised learning, a loss function is defined on the training set, and the training goal is to find optimal models by minimizing the training loss. According to their degree of training difficulty, samples can be divided into easy, medium, hard, and noisy samples. Generally, easy and medium samples are indispensable and positively influence training. The training procedure can benefit significantly from medium samples if appropriate learning schemes are used. However, it is vulnerable to noisy samples and to some quite hard samples.

A common practice is to introduce the weighting strategy when hard and noisy samples exist. Low weights are assigned to noisy and quite hard samples to reduce their negative influence during loss minimization. This strategy usually infers the weights first and then conducts training on the basis of the weighted loss [33]. Wang et al. [50] proposed a Bayesian method that infers the sample weights as latent variables. Kumar et al. [26] proposed self-paced learning (SPL), which combines the two steps into one by adding a regularizer. Meta learning [29, 40, 54] has been introduced to alternately infer the weights and the model parameters with an additional validation set.

Various robust learning methods exist that do not rely on the weighting strategy. For example, the classical support vector machine (SVM) [5] introduces slack variables to address possibly noisy samples, and robust clustering [8] introduces additional vectors to cope with noise. However, a unified theory that better explains such methods and inspires new ones is still lacking. In this study, another under-explored yet widely used strategy, namely, compensating, is revealed and further investigated. Mathematically, the compensating strategy adds a perturbation term (called the compensation term in this study) to a feature vector, a logit vector, a loss, etc., whereas weighting multiplies such a quantity by a weight. Many existing learning methods, including some classical ones, can be viewed as wholly or partially based on compensating. Learning with compensating is referred to as compensation learning in this study.

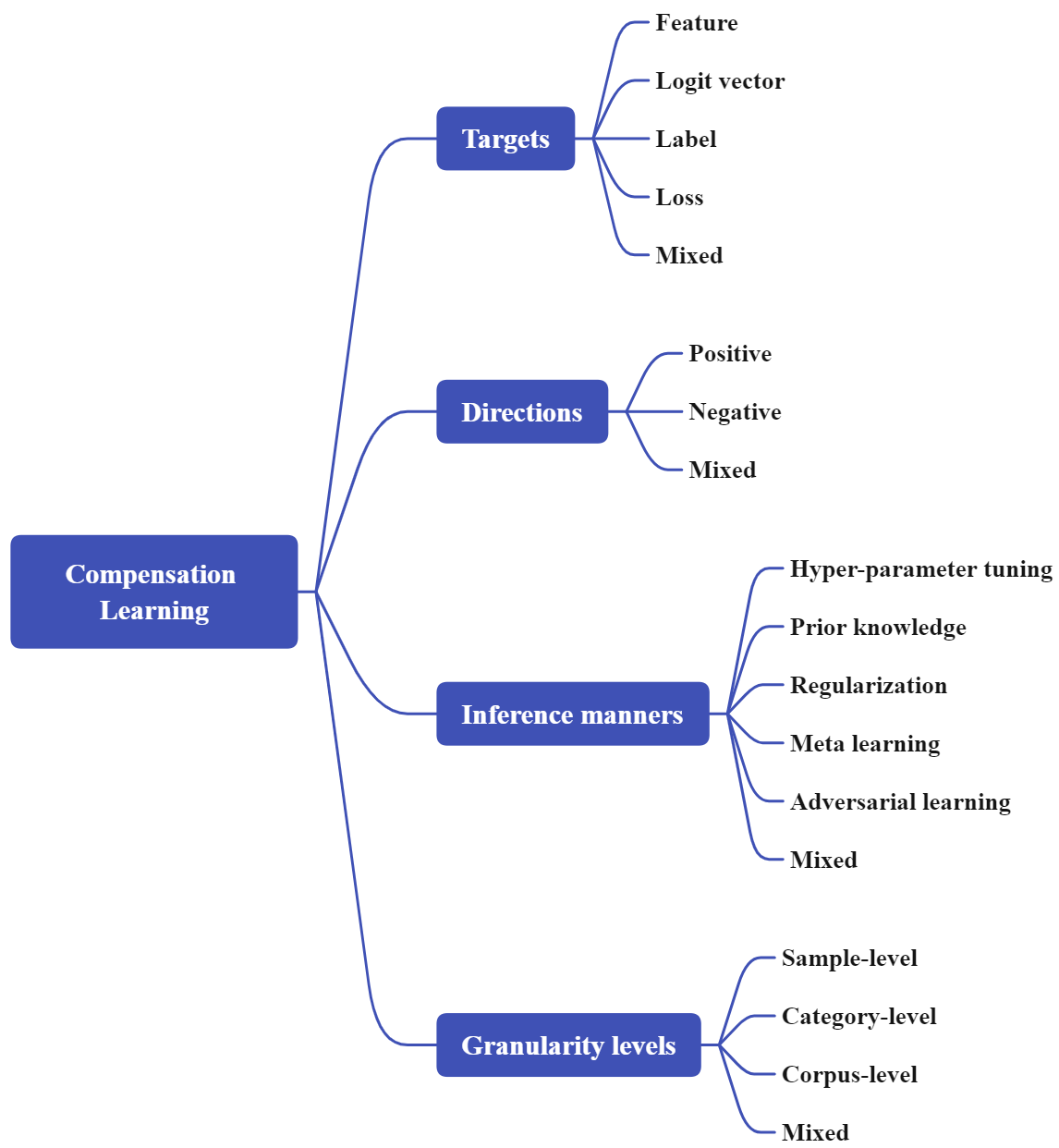

We conduct a pilot study of compensation learning in terms of a theoretical taxonomy, connections with existing classical learning methods, and new concrete compensation learning methods. First, five compensation targets, three directions, five inference manners, and four granularity levels are defined. Second, several existing learning methods are re-explained from the viewpoint of compensation learning. Third, two concrete compensation learning algorithms are proposed, namely, logit compensation with $\ell_1$-regularization (LogComp) and mixed compensation (MixComp). Finally, the two proposed algorithms are evaluated on data corpora from image classification and text sentiment classification.

Our main contributions are summarized as follows:

1) An under-explored yet widely used learning strategy, namely, compensating, is identified and formalized in this study. A new learning paradigm, named compensation learning, is presented, and a taxonomy is constructed for it. In addition to robust learning, the main focus of this paper, other learning scenarios such as imbalance learning can also benefit from compensation learning.

2) Several typical learning methods are re-explained from the viewpoint of compensation learning, which yields new insights into these methods. Theoretically, various new methods can be generated by introducing the idea of compensating into existing methods. Sections 5 and 6.4 present examples.

3) Two concrete new compensation learning methods are proposed. Experiments on robust learning on four benchmark sets verify their effectiveness compared with several existing classical methods.

2 Related Work

2.1 The Weighting Strategy in Machine Learning

Weighting is a widely used machine learning strategy in at least the following five areas: noise-aware learning [38], curriculum learning [1], crowdsourcing learning [7], cost-sensitive learning [4], and imbalance learning [21]. In noise-aware and curriculum learning, weights are sample-wise; in cost-sensitive learning, weights can be sample-wise, category-wise, or mixed; in imbalance learning, weights are usually category-wise.

Intuitively, the weights of medium samples and some hard samples should be kept or enlarged, and the weights of quite hard samples should be kept or reduced. For example, in Focal loss [31], the weights of easy samples are (relatively) reduced and those of hard samples are (relatively) enlarged; in fact, performance improves if the weights of quite hard samples are reduced [28]. Most existing studies do not assume the above division. Instead, samples are usually divided into easy/non-easy or normal/noisy. For example, in Focal loss and Adaboost [9], the weights of non-easy samples are gradually increased.

In cost-sensitive learning, the weights are associated with the pre-determined costs. In imbalance learning, categories with lower proportions are usually negatively affected. Therefore, increasing the weights of samples in the low-proportion categories is a common practice.

The compensating strategy investigated in this study is not intended to replace the weighting strategy. Instead, this study summarizes various existing learning ideas that do not use weighting, investigates them systematically, and attributes them to a new learning paradigm, namely, compensation learning. The two strategies can be mutually beneficial. For example, inspired by our taxonomy of compensation learning, a sample-level weighting method (e.g., Focal loss) can be transformed into a category-level weighting method (e.g., by replacing the sample-level prediction with the category-level average). Theoretically, each concrete weighting-based learning method may correspond to a concrete compensating-based learning method. A solid and deep investigation of the weighting strategy in machine learning will significantly benefit compensation learning.

2.2 Noise-aware Machine Learning

This study investigates compensation learning mainly in the context of learning with noisy labels. The weighting strategy prevails in this area, and there are two common technical solutions.

In the first solution, noise detection is performed, and noisy samples may be assigned lower weights in subsequent model training. Koh and Liang [15] defined an influence function to measure the impact of each sample on model training; samples with higher influence scores are more likely to be noisy. Huang et al. [22] adopted a cyclical pre-training strategy and recorded the training loss of each sample throughout all cycles; samples with higher average training losses are more likely to be noisy.

In the second solution, an end-to-end procedure is used to construct a noise-robust model. Reed et al. [39] proposed a Bootstrapping loss to reduce the negative impact of potentially noisy samples. Goldberger and Ben-Reuven [12] designed a noise adaptation layer to model the relationship between the observed, possibly noisy labels and the true latent labels.

A recent survey can be found in [14]. Compensation learning can replace weighting in both of the above solutions. This study considers only the second solution.

2.3 Robust Machine Learning

There is currently no formal definition of robust machine learning. Two learning scenarios are typical. The first concerns the robustness of the learning process, while the second concerns the robustness of the trained model. In the first scenario, a robust learning method should cope well with training data that may be noisy [44, 56], imbalanced [24], few-shot [53, 6], etc. In the second scenario, a robust trained model should cope well with adversarial attacks [58]. Both scenarios have received increasing attention in recent years. Both the weighting and the compensating strategies are widely used in the first scenario, whereas mainly the compensating strategy is used in the second.

3 A Taxonomy of Compensation Learning

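Compensating can be used in many learning scenarios. This section uses classification as the illustrative example. Let $D = \{(x_i, y_i)\}_{i=1}^{N}$ be a training set, where $x_i$ is the $i$-th sample and $y_i$ is its categorical label (a one-hot vector over $C$ categories). In a standard supervised deep learning context, let $u_i$ be the logit vector output by a deep neural network for $x_i$. The training loss can be written as follows:

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(S(f(x_i)), y_i\big), \tag{1}$$

where $S(\cdot)$ (e.g., the softmax function) transforms the logit vector into a soft label $p_i = S(u_i)$, $f(\cdot)$ represents a deep neural network, and $u_i = f(x_i)$.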
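Below is a minimal sketch of Eq. (1). It assumes that $S(\cdot)$ is the softmax function and that $l$ is the cross-entropy loss with one-hot labels. Both are common choices that the text does not fix. The helpers `softmax`, `cross_entropy`, and `training_loss` are illustrative names, not part of the paper.

```python
import math


def softmax(u):
    """S(u): map a logit vector to a probability vector (max-shifted for stability)."""
    m = max(u)
    exps = [math.exp(v - m) for v in u]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(p, y):
    """l(p, y) = -sum_c y_c log p_c; y may be one-hot or soft."""
    return -sum(yc * math.log(pc) for pc, yc in zip(p, y) if yc > 0)


def training_loss(logits, labels):
    """Eq. (1): average of l(S(u_i), y_i) over the N training samples."""
    return sum(cross_entropy(softmax(u), y) for u, y in zip(logits, labels)) / len(logits)


logits = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]   # u_i = f(x_i) for two samples
labels = [[1, 0, 0], [0, 0, 1]]                 # one-hot y_i
print(training_loss(logits, labels))
```

The same helpers are redefined in later sketches so that each one stays self-contained.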

In the weighting strategy, the loss function is usually defined as follows:

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} w_i\, l\big(S(f(x_i)), y_i\big), \tag{2}$$

where $w_i$ is the weight associated with sample $x_i$. In principle, the more likely a sample is noisy or quite hard, the lower its weight.

The compensating strategy investigated in this study can also increase or reduce the influence of samples in model training according to their degree of training difficulty. For instance, a negative value can be added to reduce the loss incurred by a noisy sample. As a result, the negative influence of this sample is reduced because its impact on the gradients is reduced. Conversely, when the influence should be increased, a positive value can be added to the loss incurred by the sample. In terms of mathematical computation, weighting relies on multiplication, whereas compensating relies on addition.
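The contrast between multiplying and adding can be made concrete with per-sample loss values. The sketch below uses made-up losses together with hand-picked weights and compensation terms. These values are illustrative only and are not inferred by any of the manners in Section 3.3. The example shows that down-weighting a suspicious sample and adding a negative loss compensation can shrink its contribution by the same amount.

```python
def weighted_loss(losses, weights):
    """Weighting: each per-sample loss is multiplied by w_i (cf. Eq. (2))."""
    return sum(w * l for w, l in zip(weights, losses)) / len(losses)


def loss_compensated(losses, compensations):
    """Compensating: an additive term is attached to each per-sample loss."""
    return sum(l + d for l, d in zip(losses, compensations)) / len(losses)


losses = [0.1, 0.3, 2.5]                            # the third sample looks noisy
print(weighted_loss(losses, [1.0, 1.0, 0.2]))       # it now counts as 0.2 * 2.5
print(loss_compensated(losses, [0.0, 0.0, -2.0]))   # it now counts as 2.5 - 2.0
```

Note a design caveat: a compensation term that is a fixed constant changes only the loss value, not the gradient with respect to the model parameters. Feature, logit, and label compensations act inside $l$, so they also change the gradients.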

Fig. 1 shows the constructed taxonomy of the whole compensation learning. This section introduces each item in the taxonomy.

3.1 Compensation Targets

Eq. (1) contains four types of variables for each sample, namely, the raw feature $x_i$, the logit vector $u_i$, the label $y_i$, and the sample loss $l_i$ ($= l(S(u_i), y_i)$). Therefore, the compensation targets can be the feature, the logit vector, the label, and the loss.

(1) Compensation for feature (Feature compensation). In this kind of compensation, the raw feature vector ($x_i$) or a transformed feature vector (e.g., a dense feature) of each sample can have a compensation vector ($\delta x_i$). Eq. (1) becomes

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(S(f(x_i + \delta x_i)), y_i\big). \tag{3}$$

(2) Compensation for logit vector (Logit compensation). In this kind of compensation, the logit vector ($u_i$) of each sample can have a compensation vector ($\delta u_i$). Eq. (1) becomes

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(S(u_i + \delta u_i), y_i\big). \tag{4}$$

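(3) Compensation for label (Label compensation). In this kind of compensation, the label ($y_i$) of each sample can have a compensation label ($\delta y_i$). Let $p_i = S(u_i)$. Eq. (1) becomes

$$\text{(i)}\;\; \mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(p_i,\, y_i + \delta y_i\big), \qquad \text{(ii)}\;\; \mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(p_i + \delta y_i,\, y_i\big). \tag{5}$$

In Eq. (5-i), $\delta y_i$ is added to the observed label $y_i$, while in Eq. (5-ii) it is added to the predicted label $p_i$. Because the labels after compensation should still be (soft) labels, $\delta y_i$ should satisfy the following requirements:

$$\mathbf{1}^{\top}\delta y_i = 0, \qquad 0 \le q_{i,c} + \delta y_{i,c} \le 1 \;\; (c = 1, \dots, C), \tag{6}$$

where $q_i = y_i$ in Eq. (5-i) and $q_i = p_i$ in Eq. (5-ii).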
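When experimenting with hand-designed label compensations, a small check of Eq. (6) can help. The sketch assumes that Eq. (6) requires two things: the compensated label stays a probability vector with entries in $[0, 1]$, and the compensation sums to zero. The function name is hypothetical.

```python
def is_valid_label_compensation(base, delta, tol=1e-9):
    """Check that base + delta is still a (soft) label.

    base: y_i for Eq. (5-i) or p_i for Eq. (5-ii); delta: the compensation delta y_i.
    """
    if len(base) != len(delta):
        return False
    compensated = [b + d for b, d in zip(base, delta)]
    in_range = all(-tol <= c <= 1.0 + tol for c in compensated)
    sums_to_zero = abs(sum(delta)) <= tol
    return in_range and sums_to_zero


y = [0.0, 1.0, 0.0]
print(is_valid_label_compensation(y, [0.3, -0.3, 0.0]))   # moves 0.3 of the mass to class 0
print(is_valid_label_compensation(y, [0.5, 0.0, 0.0]))    # adds mass, so not a label
```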

(4) Compensation for loss (Loss compensation). In this kind of compensation, the loss $l_i$ of each sample can have a compensation loss $\delta l_i$. Eq. (1) becomes

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N}\big(l_i + \delta l_i\big). \tag{7}$$

(5) Compensation for mixed targets (Mix-target compensation). In this kind of compensation, two or more of the aforementioned targets can have their own compensation terms simultaneously. For example, when both feature and label compensations are used, Eq. (3) becomes

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(S(f(x_i + \delta x_i)),\, y_i + \delta y_i\big), \tag{8}$$

where $\delta x_i$ and $\delta y_i$ are the feature and label compensations, respectively. For instance, Lee et al. [27] combine adversarial training and label smoothing, which can be considered mix-target (feature and label) compensation.

Remark: The compensation variables (i.e., $\delta x_i$, $\delta u_i$, $\delta y_i$, and $\delta l_i$) are trainable. They are introduced to reduce the negative impact of some training samples (e.g., noisy or some hard ones) and to increase the positive impact of others (e.g., medium ones). For example, in raw feature-based compensation, let $c_{y_i}$ be the center vector of category $y_i$. Ideally, if $\delta x_i = c_{y_i} - x_i$, the impact of $x_i$ is completely removed when $y_i$ is noisy.

If the loss functions defined in Eqs. (3)–(8) are used directly, without any other constraints on the compensation variables, nothing can be learned because the compensation variables are trainable. For example, when the loss in Eq. (4) is used directly, a random model is produced. During training, $\delta u_i$ can absorb whatever is needed to drive the loss of each sample toward zero, regardless of $u_i$. Section 3.3 describes how to infer the compensation variables and learn with the above loss functions.
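The degenerate case can be reproduced numerically. The sketch below assumes softmax cross-entropy, and all names in it are illustrative. It uses an unconstrained logit compensation $\delta u_i = M y_i - u_i$ with a large constant $M$. This drives every sample's loss close to zero whatever the network outputs, so the network receives almost no training signal.

```python
import math
import random


def softmax_ce(u, label):
    """Cross-entropy of softmax(u) against class index `label` (log-sum-exp form)."""
    m = max(u)
    return m + math.log(sum(math.exp(v - m) for v in u)) - u[label]


def degenerate_compensation(u, label, scale=20.0):
    """Unconstrained delta u_i that moves the logits to scale * one_hot(label)."""
    return [(scale if c == label else 0.0) - uc for c, uc in enumerate(u)]


rng = random.Random(0)
for _ in range(3):
    u = [rng.gauss(0.0, 1.0) for _ in range(5)]   # arbitrary network output
    label = rng.randrange(5)
    delta = degenerate_compensation(u, label)
    compensated = [a + b for a, b in zip(u, delta)]
    print(round(softmax_ce(u, label), 3), "->", softmax_ce(compensated, label))
```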

There may exist other compensation candidates, such as view, structure (e.g., adjacency matrix in GCN), and gradient, which will be explored in future work.

3.2 Compensation Directions

There are three directions according to the loss variation after compensation.

(1) Positive compensation. If the compensation reduces the loss, then it is called positive compensation. Positive compensation can reduce the influence of noisy and quite hard samples during training.

(2) Negative compensation. If the compensation increases the loss, then it is called negative compensation. Negative compensation can increase the influence of easy and medium samples during training. This case will be discussed in the rest of this paper.

(3) Mix-direction compensation. If the compensation simultaneously increases the losses of some training samples and decreases those of others, it is called mix-direction compensation. The logit adjustment method actually uses this type of compensation, which will be discussed in Section 4.

3.3 Compensation Inference

In compensation learning, compensation variables in losses in Eqs. (3)–(7) should be inferred during training. There are five typical manners (maybe not exhaustive) to infer their values and optimize the whole loss.

(1) Inference with prior knowledge. In this manner, the compensation variables are inferred from prior knowledge; that is, they are fixed before the training loss is optimized. Take label compensation as an example. Suppose a predicted label $\hat{y}_i$ can be obtained for each sample $x_i$ from another model. The label compensation can then be defined as

$$\delta y_i = \lambda\,(\hat{y}_i - y_i), \tag{9}$$

where $\lambda \in [0, 1]$ is a hyper-parameter. The $\delta y_i$ defined in Eq. (9) satisfies the condition given by Eq. (6). If $\hat{y}_i$ is trustworthy, $\delta y_i$ is likely to be close to zero when $y_i$ is clean and large when $y_i$ is noisy. If $\hat{y}_i$ is the output of the model in the previous epoch, Eq. (5-i) becomes

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(p_i,\, (1-\lambda)\,y_i + \lambda\,\hat{y}_i\big), \tag{10}$$

which is exactly the Bootstrapping loss [39].
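The link between Eq. (10) and a Bootstrapping-style target can be checked numerically. The sketch below assumes cross-entropy as $l$ and uses made-up values for $y_i$, $\hat{y}_i$, and $p_i$. It builds the compensated label from Eq. (9). Because cross-entropy is linear in its target, the loss then splits into two parts: $(1-\lambda)$ times the loss on the observed label, plus $\lambda$ times the loss on $\hat{y}_i$.

```python
import math


def cross_entropy(p, target):
    """l(p, q) = -sum_c q_c log p_c."""
    return -sum(t * math.log(q) for q, t in zip(p, target))


def compensated_label(y, y_hat, lam):
    """Eq. (9): delta y_i = lam * (y_hat_i - y_i); return y_i + delta y_i."""
    return [t + lam * (h - t) for t, h in zip(y, y_hat)]


y = [0.0, 1.0, 0.0]        # observed (possibly noisy) label
y_hat = [0.7, 0.2, 0.1]    # prediction from the previous epoch (made up)
p = [0.6, 0.3, 0.1]        # current prediction p_i (made up)
lam = 0.4

target = compensated_label(y, y_hat, lam)
lhs = cross_entropy(p, target)
rhs = (1 - lam) * cross_entropy(p, y) + lam * cross_entropy(p, y_hat)
print(target, lhs, rhs)
```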

(2) Inference with hyper-parameter tuning. In this manner, the compensation variables are treated as hyper-parameters, and their values are determined by hyper-parameter tuning.

(3) Inference with regularization. In this manner, a regularization term on the compensation variables is added. For example, a natural assumption is that only a small proportion of samples require compensation. Therefore, the sparsity-inducing $\ell_1$-norm can be used. Take logit compensation as an example. A loss function is defined as follows:

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(S(u_i + \delta u_i), y_i\big) + \lambda \sum_{i=1}^{N}\|\delta u_i\|_1, \tag{11}$$

where $\lambda$ is a hyper-parameter and $\|\delta u_i\|_1$ is the regularizer. This manner is similar to self-paced learning [26]. When $\lambda \to +\infty$, no compensation is allowed, and compensation learning reduces to conventional learning.

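(4) Inference with meta learning. In this manner, the compensation variables are inferred with meta learning on an additional small, clean validation set. Take loss compensation as an example, and let $D^{v} = \{(x_j^{v}, y_j^{v})\}_{j=1}^{M}$ be a validation set of $M$ clean samples. Let $\delta l_i$ be the loss compensation variable for $x_i$ and $\delta l = \{\delta l_i\}_{i=1}^{N}$. We first define

$$l_i(\theta) = l\big(S(f(x_i; \theta)), y_i\big), \tag{12}$$

where $\theta$ is the set of model parameters to be learned. Given $\delta l$, $\theta$ can be optimized on the training set by solving

$$\theta^{*}(\delta l) = \arg\min_{\theta} \frac{1}{N}\sum_{i=1}^{N}\big(l_i(\theta) + \delta l_i\big). \tag{13}$$

After $\theta^{*}(\delta l)$ is obtained, $\delta l$ can be optimized on the validation set by solving

$$\delta l^{*} = \arg\min_{\delta l} \frac{1}{M}\sum_{j=1}^{M} l\big(S(f(x_j^{v}; \theta^{*}(\delta l))), y_j^{v}\big). \tag{14}$$

These two optimizations can be performed alternately until $\theta$ and $\delta l$ are learned. When logit or label compensation is used, the same procedure applies with slight variations.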

The above inference manner is similar to that used in the meta learning-based weighting strategy for robust learning [40]. Meta learning has been widely used in robust learning, and many existing meta learning-based weighting methods [29, 54] can be adapted for compensation learning.

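(5) Inference with adversarial learning. In both feature and logit compensation, the compensation term can be obtained by adversarial learning. Taking feature compensation as an example, the objective function in negative compensation is

$$\min_{\theta} \frac{1}{N}\sum_{i=1}^{N} \max_{\|\delta x_i\| \le \epsilon} l\big(S(f(x_i + \delta x_i; \theta)), y_i\big), \tag{15}$$

where $\epsilon$ is the bound. Likewise, the objective function in positive feature-level compensation can be

$$\min_{\theta} \frac{1}{N}\sum_{i=1}^{N} \min_{\|\delta x_i\| \le \epsilon} l\big(S(f(x_i + \delta x_i; \theta)), y_i\big). \tag{16}$$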
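For intuition about Eqs. (15) and (16), consider a case where the inner problems can be solved exactly. The illustration assumes a linear binary logistic model with an $\ell_\infty$ bound on $\delta x_i$; the text fixes neither the model nor the norm. The loss decreases monotonically in the margin $y\,w^{\top}(x + \delta x)$, which is linear in $\delta x$. Hence the worst case (negative compensation) is $\delta x = -\epsilon\, y\,\mathrm{sign}(w)$ and the best case (positive compensation) is $\delta x = +\epsilon\, y\,\mathrm{sign}(w)$. These shift the margin by $\mp\epsilon\|w\|_1$. All names below are illustrative.

```python
import math


def _sign(v):
    return (v > 0) - (v < 0)


def margin(w, x, y):
    """y * w^T x for a linear binary classifier, y in {-1, +1}."""
    return y * sum(wi * xi for wi, xi in zip(w, x))


def logistic_loss(w, x, y):
    """log(1 + exp(-margin)), a decreasing function of the margin."""
    return math.log1p(math.exp(-margin(w, x, y)))


def feature_compensation(w, y, eps, direction):
    """Exact inner solution under ||delta x||_inf <= eps.

    direction=+1: positive compensation (minimizes the loss, Eq. (16)).
    direction=-1: negative compensation (maximizes the loss, Eq. (15)).
    """
    return [direction * eps * y * _sign(wi) for wi in w]


w, x, y, eps = [1.5, -0.5, 2.0], [0.2, 0.4, -0.1], 1, 0.1
for direction in (+1, -1):
    dx = feature_compensation(w, y, eps, direction)
    x_c = [a + b for a, b in zip(x, dx)]
    print(direction, logistic_loss(w, x, y), "->", logistic_loss(w, x_c, y))
```

For deep networks the inner problems have no closed form and are usually approximated iteratively, for example with projected gradient steps.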

(6) Inference with mixed manners. Two or more of the above five manners can be combined together to infer the compensation term in a learning task.

Remark: An existing compensation-based learning method usually adopts one of the inference manners listed above. Theoretically, the inference manner can be changed from one manner to another and a new method will subsequently be obtained.

3.4 Compensation Granularity

Compensation granularity has four levels.

(1) Sample-level compensation. All the compensation variables discussed above are for samples. Each sample has its own compensation variable.

(2) Category-level compensation. At this level, samples within the same category share the same compensation. Taking logit compensation as an example, when category-level compensation is used, the loss in Eq. (4) becomes

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(S(u_i + \delta u_{y_i}), y_i\big), \tag{17}$$

where $\delta u_{y_i}$ is shared by all samples of category $y_i$. Category-level compensation mainly addresses cases in which the impact of all the samples of a category should be increased. For example, in long-tail classification, the tail categories should be emphasized in learning.

(3) Corpus-level compensation. At this level, all samples in the training corpus share the same compensation. Taking the negative compensation in Eq. (15) as an example, the objective function becomes

$$\min_{\theta} \max_{\|\delta x\| \le \epsilon} \frac{1}{N}\sum_{i=1}^{N} l\big(S(f(x_i + \delta x; \theta)), y_i\big), \tag{18}$$

which means that all samples share the same term $\delta x$. This $\delta x$ is exactly the universal adversarial perturbation [37].

(4) Mix-level compensation. At this level, more than one of the above three levels are used simultaneously. This case occurs in complex contexts, e.g., when noisy labels and category imbalance coexist. Take label compensation as an example. The loss in Eq. (5-i) can be written as

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(p_i,\, y_i + \delta y_i + \delta \bar{y}_{y_i}\big), \tag{19}$$

where $\delta y_i$ is the sample-level label compensation and $\delta \bar{y}_{y_i}$ is the category-level label compensation.

4 Connection with Existing Learning Paradigms

The weighting strategy is straightforward and intuitive; hence, it has been widely used in the machine learning community. Compensating seems less straightforward than weighting. However, it can play the same or a similar role in machine learning. Each strategy has its own strengths. Compensating can be applied to the feature, the logit vector, the label, and the loss, whereas weighting is usually applied to the loss. Weighting is usually efficient, whereas the optimization in some compensating methods (e.g., Eq. (18)) is relatively complex. A theoretical comparison of the two would benefit both strategies; we leave it as future work.

Many classical and newly proposed learning methods, which are on the basis of distinct inspirations and theoretical motivations, can be attributed to compensation learning or explained in the viewpoint of compensation learning. We choose the following methods as illustrative examples.

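(1) Robust clustering [8]. Let $c_k$ be the center of the $k$-th cluster ($k = 1, \dots, K$). Let $z_{ik} \in \{0, 1\}$ denote whether $x_i$ belongs to the $k$-th cluster. The optimization form of conventional data clustering can be written as follows:

$$\min_{\{c_k\}, \{z_{ik}\}} \sum_{i=1}^{N}\sum_{k=1}^{K} z_{ik}\,\|x_i - c_k\|_2^2, \quad \text{s.t.}\; \sum_{k=1}^{K} z_{ik} = 1. \tag{20}$$

Given that outliers may exist, sample-level feature compensation (denoted as $\delta x_i$ for $x_i$) can be introduced with regularization. When the $\ell_2$-norm is used, Eq. (20) becomes

$$\min_{\{c_k\}, \{z_{ik}\}, \{\delta x_i\}} \sum_{i=1}^{N}\sum_{k=1}^{K} z_{ik}\,\|x_i + \delta x_i - c_k\|_2^2 + \lambda \sum_{i=1}^{N}\|\delta x_i\|_2, \quad \text{s.t.}\; \sum_{k=1}^{K} z_{ik} = 1, \tag{21}$$

which is the method proposed by Forero et al. [8].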
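Below is a compact sketch of alternating minimization for a compensated clustering objective. It assumes a group (non-squared $\ell_2$) penalty $\lambda\|\delta x_i\|_2$. Under that penalty, with assignments and centers fixed, the compensation update has a closed form: $\delta x_i = r_i \max(0, 1 - \lambda/(2\|r_i\|_2))$ with $r_i = c_{k(i)} - x_i$, i.e., block soft-thresholding. The function names, the toy data, and the simple three-step loop are illustrative. They are not the algorithm of [8].

```python
import math


def shrink(r, lam):
    """Minimizer of ||d - r||_2^2 + lam * ||d||_2 over d (block soft-thresholding)."""
    norm = math.hypot(*r)
    if norm == 0.0:
        return [0.0] * len(r)
    scale = max(0.0, 1.0 - lam / (2.0 * norm))
    return [scale * v for v in r]


def robust_kmeans(points, init_centers, lam, iters=20):
    """Alternate over assignments z, centers c_k, and feature compensations delta x_i."""
    centers = [list(c) for c in init_centers]
    deltas = [[0.0] * len(p) for p in points]
    assign = [0] * len(points)
    for _ in range(iters):
        compensated = [[a + b for a, b in zip(p, d)] for p, d in zip(points, deltas)]
        # Assignment step: nearest center for each compensated point.
        assign = [min(range(len(centers)), key=lambda k: math.dist(q, centers[k]))
                  for q in compensated]
        # Center step: mean of the compensated points in each cluster.
        for k in range(len(centers)):
            members = [q for q, a in zip(compensated, assign) if a == k]
            if members:
                centers[k] = [sum(col) / len(members) for col in zip(*members)]
        # Compensation step: exact minimizer for fixed assignments and centers.
        deltas = [shrink([c - v for c, v in zip(centers[a], p)], lam)
                  for p, a in zip(points, assign)]
    return centers, assign, deltas


points = [(0.0, 0.0), (0.2, 0.1), (-0.1, 0.2),
          (5.0, 5.0), (5.2, 4.9), (4.8, 5.1),
          (0.1, 9.0)]                        # the last point is an outlier
centers, assign, deltas = robust_kmeans(points, [(0.0, 0.0), (5.0, 5.0)], lam=4.0)
print(centers, assign)
print(deltas)
```

With this toy data, only the outlier receives a nonzero compensation. As a result, the second center sits closer to its inliers than a plain mean including the outlier would.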

(2) Adversarial training. An adversarial sample can be regarded as a negatively compensated version of the original sample. Training with adversarial samples (i.e., adversarial training) has proven useful in many applications, and various methods have been proposed [35].

Shafahi et al. [41] proposed universal adversarial training, which is actually based on corpus-level negative feature compensation. The loss on adversarial samples is

$$\max_{\|\delta x\| \le \epsilon} \frac{1}{N}\sum_{i=1}^{N} l\big(S(f(x_i + \delta x)), y_i\big). \tag{22}$$

Benz et al. [2] observed that universal adversarial perturbations do not attack all classes equally. They proposed a category-wise universal adversarial training method, whose loss on adversarial samples is

$$\max_{\{\|\delta x_{c}\| \le \epsilon\}_{c=1}^{C}} \frac{1}{N}\sum_{i=1}^{N} l\big(S(f(x_i + \delta x_{y_i})), y_i\big), \tag{23}$$

which belongs to category-level negative feature compensation. Motivated by our taxonomy, mixed corpus/category/sample-level adversarial perturbations can be generated. A mixed corpus/sample-level adversarial perturbation is described as an example:

$$\max_{\|\delta x\| \le \epsilon_1,\; \|\delta x_i\| \le \epsilon_2} \frac{1}{N}\sum_{i=1}^{N} l\big(S(f(x_i + \delta x + \delta x_i)), y_i\big), \tag{24}$$

where $\delta x$ and $\delta x_i$ are the corpus-level and sample-level perturbations, respectively. A further statistical analysis of the two levels of adversarial perturbations may help us better understand the adversarial characteristics of the data.

(3) Adversarial label smoothing [11]. Label smoothing is actually a type of sample-level label compensation. Its compensation term for a sample $x_i$ is defined as follows:

$$\delta y_i = \epsilon\left(\frac{1}{C}\mathbf{1} - y_i\right), \tag{25}$$

where $\epsilon \in [0, 1]$ is the smoothing factor and $\mathbf{1}$ is a $C$-dimensional vector whose elements all equal 1. Clearly, the compensation term is determined by pre-definition (i.e., the prior-knowledge manner).

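According to the inference manners in our taxonomy, adversarial learning can be used to obtain the compensation term. Accordingly, the term is

$$\delta y_i = \epsilon\,(v_i - y_i), \tag{26}$$

where

$$v_i = \arg\max_{v \in \Delta^{C}} l\big(p_i,\, (1-\epsilon)\,y_i + \epsilon\, v\big) \tag{27}$$

and $\Delta^{C}$ denotes the probability simplex over the $C$ categories. For the cross-entropy loss, Eq. (27) has an analytic solution: $v_i$ is the one-hot vector of the category with the minimum softmax value in $p_i$.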
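The analytic solution of Eq. (27) can be checked by brute force. The sketch assumes cross-entropy as $l$ and a compensated label of the form $(1-\epsilon)y_i + \epsilon v$, and it uses a made-up prediction $p_i$. The loss is linear in $v$, so its maximum over the simplex is attained at a one-hot vertex. Comparing all vertices is therefore enough. Names are illustrative.

```python
import math


def cross_entropy(p, target):
    """l(p, q) = -sum_c q_c log p_c."""
    return -sum(t * math.log(q) for q, t in zip(p, target))


def smoothed_target(y, v, eps):
    """Compensated label y + eps * (v - y) = (1 - eps) * y + eps * v."""
    return [(1 - eps) * t + eps * vc for t, vc in zip(y, v)]


def adversarial_v(p):
    """Analytic maximizer for cross-entropy: one-hot on the least likely class."""
    worst = min(range(len(p)), key=lambda c: p[c])
    return [1.0 if c == worst else 0.0 for c in range(len(p))]


p = [0.5, 0.3, 0.15, 0.05]   # current prediction p_i (made up)
y = [1.0, 0.0, 0.0, 0.0]     # observed label y_i
eps = 0.1

adv_loss = cross_entropy(p, smoothed_target(y, adversarial_v(p), eps))
vertex_losses = [
    cross_entropy(p, smoothed_target(y, [1.0 if k == c else 0.0 for k in range(4)], eps))
    for c in range(4)
]
uniform_loss = cross_entropy(p, smoothed_target(y, [0.25] * 4, eps))  # ordinary smoothing
print(adv_loss, max(vertex_losses), uniform_loss)
```

The ordinary label-smoothing target (uniform $v$) is a convex combination of the vertices, so its loss never exceeds the adversarial one.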

(4) Logit adjustment-based imbalance learning [36]. In a multi-category classification problem, let $\pi_c$ be the proportion of the training samples in the $c$-th category, and let $\pi = [\pi_1, \dots, \pi_C]^{\top}$. When the proportions are imbalanced, a corpus-level logit compensation can be introduced as follows:

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(S(u_i + g(\pi)), y_i\big), \tag{28}$$

where $g(\cdot)$ is applied element-wise. For the above loss, when $g$ is an increasing function, we conjecture that the influence of samples in the minority categories (i.e., those with small $\pi_c$) on the loss is increased.

As this influence increases, the logit compensation in Eq. (28) can alleviate the imbalance problem. When $g(\pi) = \tau \log \pi$ ($\tau > 0$) and the cross-entropy loss are used, Eq. (28) becomes

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N} \log \frac{\exp\big(u_{i,y_i} + \tau \log \pi_{y_i}\big)}{\sum_{c=1}^{C} \exp\big(u_{i,c} + \tau \log \pi_c\big)}, \tag{29}$$

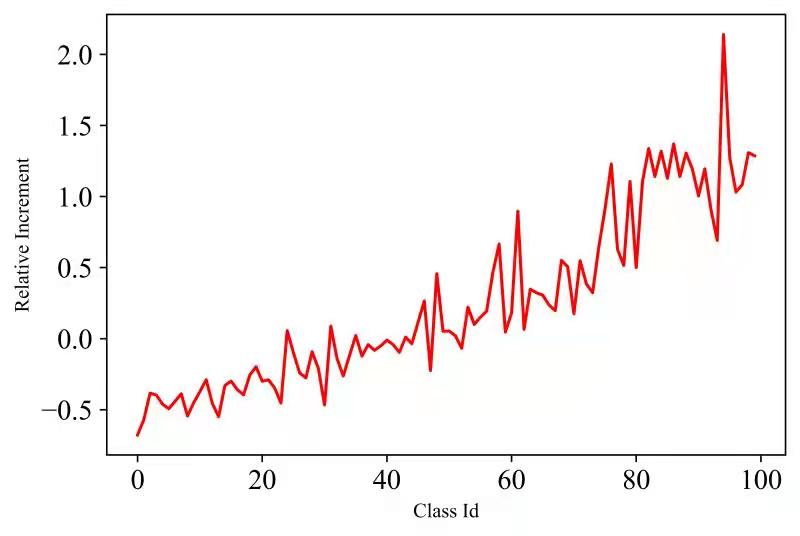

which is exactly the logit-adjusted loss [36]. We computed the relative loss variation incurred by logit adjustment for each category on the imbalanced version of the benchmark image classification data set CIFAR-100 [25]. The results are presented in Fig. 2. The loss variations of the head categories (those with small class IDs) are negative, and those of the tail categories (those with large class IDs) are positive. In other words, both positive and negative compensations exist in logit adjustment. Intuitively, a category-level version can be obtained via meta learning, which is discussed in Appendix A.
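The sign pattern described for Fig. 2 can be reproduced on a toy example. The sketch assumes $g(\pi) = \tau\log\pi$ with cross-entropy, as in Eq. (29), made-up class priors, and a single uninformative logit vector. It is not the CIFAR-100 experiment, and the names are illustrative.

```python
import math


def logit_adjusted_ce(u, label, priors, tau=1.0):
    """Cross-entropy after corpus-level logit compensation g(pi) = tau * log(pi)."""
    adjusted = [uc + tau * math.log(pc) for uc, pc in zip(u, priors)]
    m = max(adjusted)
    log_z = m + math.log(sum(math.exp(a - m) for a in adjusted))
    return log_z - adjusted[label]


priors = [0.7, 0.2, 0.1]   # class 0 is a head class, class 2 a tail class (made up)
u = [0.0, 0.0, 0.0]        # an uninformative logit vector
for label in range(3):
    plain = logit_adjusted_ce(u, label, priors, tau=0.0)   # tau = 0: no compensation
    adjusted = logit_adjusted_ce(u, label, priors, tau=1.0)
    print(label, round(plain, 4), round(adjusted, 4), "variation:", round(adjusted - plain, 4))
```

The head-class sample's loss drops (positive compensation), and the tail-class sample's loss rises (negative compensation).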

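(5) SVM [5]. For binary labels $y_i \in \{-1, +1\}$ and a linear classifier $(w, b)$, this method is based on the following hinge loss:

$$l_i = \max\big(0,\; 1 - y_i\,(w^{\top}x_i + b)\big). \tag{30}$$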

To reduce the negative contributions of noisy or hard samples, the loss can be compensated as follows:

| (31) | ||||

Then, with the max-margin term and the $\ell_1$-norm of the slack variables $\xi_i$, the whole training loss becomes

| (32) |

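The minimization of Eq. (32) is equivalent to the following optimization problem:

$$\min_{w, b, \xi}\; \frac{1}{2}\|w\|_2^2 + \lambda\sum_{i=1}^{N}\xi_i, \quad \text{s.t.}\;\; y_i\,(w^{\top}x_i + b) \ge 1 - \xi_i,\;\; \xi_i \ge 0, \tag{33}$$

where $\lambda > 0$ is the trade-off parameter. This is the standard form of SVM (without kernel). In other words, the slack variable $\xi_i$ can be seen as a loss compensation in SVM. Naturally, other types of compensation (e.g., label compensation) may also be considered in SVM.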
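The step from a compensated hinge loss to the slack-variable form rests on a standard fact. For fixed $(w, b)$, the smallest slack satisfying $y_i(w^{\top}x_i + b) \ge 1 - \xi_i$ and $\xi_i \ge 0$ is exactly the hinge loss of Eq. (30). Eliminating $\xi$ therefore turns the constrained problem into $\frac{1}{2}\|w\|^2 + \lambda\sum_i \text{hinge}_i$. The sketch below checks this on made-up 2-D data with a fixed, non-optimal $(w, b)$. The names are illustrative.

```python
def hinge(w, b, x, y):
    """Eq. (30): max(0, 1 - y (w^T x + b)) with y in {-1, +1}."""
    return max(0.0, 1.0 - y * (sum(wi * xi for wi, xi in zip(w, x)) + b))


def optimal_slacks(w, b, data):
    """For fixed (w, b), the smallest feasible slack of each sample is its hinge loss."""
    return [hinge(w, b, x, y) for x, y in data]


def svm_objective(w, b, data, lam):
    """Objective with xi eliminated: 0.5 ||w||^2 + lam * sum of hinge losses."""
    return 0.5 * sum(wi * wi for wi in w) + lam * sum(optimal_slacks(w, b, data))


data = [([2.0, 1.0], 1), ([0.5, 0.2], 1), ([-1.0, -1.5], -1), ([1.0, 1.0], -1)]
w, b = [1.0, 1.0], -0.5
slacks = optimal_slacks(w, b, data)
print(slacks, svm_objective(w, b, data, lam=1.0))
```

Samples that already satisfy the margin get zero slack (no compensation), while margin violators are compensated exactly by their hinge loss.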

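(6) Knowledge distillation [18]. In knowledge distillation, there are two deep neural networks, called the teacher and the student. The output of the teacher model for $x_i$ is

$$p_i^{t} = S\big(u_i^{t} / T\big), \tag{34}$$

where $u_i^{t}$ is the logit vector from the teacher model and $T$ is the temperature. $p_i^{t}$ can be viewed as prior knowledge about the label compensation for the student model.

Then, according to Eq. (9) with $\hat{y}_i = p_i^{t}$, the training loss of the student model with label compensation becomes

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(p_i^{s},\, (1-\lambda)\,y_i + \lambda\, p_i^{t}\big), \tag{35}$$

where $p_i^{s} = S(u_i^{s})$ and $u_i^{s}$ is the logit vector of the student model. Eq. (35) is exactly the loss function of knowledge distillation.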
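The sketch below shows how the compensated-label loss relates to the usual distillation objective. It assumes cross-entropy as $l$ and made-up logits. It also keeps the student logits at temperature 1; standard distillation additionally softens the student and rescales by $T^2$, which this sketch omits. Under these assumptions, the loss equals $(1-\lambda)$ times the hard-label loss plus $\lambda\,\mathrm{KL}(p_i^{t} \,\|\, p_i^{s})$, up to the constant $\lambda H(p_i^{t})$, which does not depend on the student.

```python
import math


def softmax(u, T=1.0):
    """Softmax with temperature T."""
    m = max(u)
    exps = [math.exp((v - m) / T) for v in u]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(p, target):
    """l(p, q) = -sum_c q_c log p_c."""
    return -sum(t * math.log(q) for q, t in zip(p, target))


def kl(target, p):
    """KL(target || p)."""
    return sum(t * math.log(t / q) for q, t in zip(p, target) if t > 0)


teacher_logits = [4.0, 1.0, 0.5]
student_logits = [2.0, 1.5, 0.2]
y = [1.0, 0.0, 0.0]
T, lam = 4.0, 0.5

p_t = softmax(teacher_logits, T)                            # Eq. (34)
target = [(1 - lam) * a + lam * b for a, b in zip(y, p_t)]  # y_i + lam * (p_t - y_i)
p_s = softmax(student_logits)

compensated = cross_entropy(p_s, target)
entropy_t = -sum(t * math.log(t) for t in p_t)
kd_form = (1 - lam) * cross_entropy(p_s, y) + lam * kl(p_t, p_s)
print(compensated, kd_form + lam * entropy_t)
```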

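(7) Implicit semantic data augmentation (ISDA) [51]. In contrast with previous data augmentation techniques, ISDA does not produce new samples or features. Instead, ISDA transforms the semantic data augmentation problem into the optimization of a new loss defined as

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N} \log \frac{\exp\big(w_{y_i}^{\top} a_i\big)}{\sum_{c=1}^{C} \exp\big(w_c^{\top} a_i + \frac{\lambda}{2}(w_c - w_{y_i})^{\top} \Sigma_{y_i} (w_c - w_{y_i})\big)}, \tag{36}$$

where $\Sigma_{y_i}$ is the covariance matrix for the $y_i$-th category; $W = [w_1, \dots, w_C]$ is the model parameter for the logit vectors and $u_i = W^{\top} a_i$ ($a_i$ is the output of the last feature encoding layer for sample $x_i$); and $\lambda$ is a hyper-parameter.

In Eq. (36), a logit compensation term is observed as follows:

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(S(u_i + \delta u_{y_i}), y_i\big), \tag{37}$$

where

$$\delta u_{y_i, c} = \frac{\lambda}{2}\,(w_c - w_{y_i})^{\top} \Sigma_{y_i} (w_c - w_{y_i}), \quad c = 1, \dots, C. \tag{38}$$

Clearly, the compensation is category-level and determined with prior knowledge. In addition, the compensation direction is negative, as the loss is increased for each training sample. The term $\delta u_{y_i}$ depends heavily on the covariance matrix $\Sigma_{y_i}$. This matrix can be further optimized via meta learning by minimizing the following loss on a clean validation set $\{(x_j^{v}, y_j^{v})\}_{j=1}^{M}$:

$$\{\Sigma_c\}^{*} = \arg\min_{\{\Sigma_c\}} \frac{1}{M}\sum_{j=1}^{M} l\big(S(f(x_j^{v}; \theta^{*}(\{\Sigma_c\}))), y_j^{v}\big), \tag{39}$$

where $\theta^{*}(\{\Sigma_c\})$ denotes the model parameters trained with the given covariance matrices. This is exactly the meta semantic augmentation (MetaSAug) method proposed by Li et al. [30]. MetaSAug is quite effective in long-tail classification.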
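The ISDA compensation term in Eq. (38) can be evaluated directly. The sketch below uses a made-up 2-D feature space, three class weight vectors, and a positive semi-definite covariance, and it drops bias terms for simplicity. It shows that the term is zero for the labeled class and non-negative for the others. This is why the compensation raises the loss, i.e., why its direction is negative. Function and variable names are illustrative.

```python
import math


def isda_compensation(W, sigma_y, label, lam):
    """Eq. (38): lam/2 * (w_c - w_y)^T Sigma_y (w_c - w_y) for every class c."""
    w_y = W[label]
    comp = []
    for w_c in W:
        d = [a - b for a, b in zip(w_c, w_y)]
        sigma_d = [sum(row[k] * d[k] for k in range(len(d))) for row in sigma_y]
        comp.append(0.5 * lam * sum(a * b for a, b in zip(d, sigma_d)))
    return comp


def softmax_ce(u, label):
    """Cross-entropy of softmax(u) against class index `label`."""
    m = max(u)
    return m + math.log(sum(math.exp(v - m) for v in u)) - u[label]


W = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.5]]   # rows: class weight vectors w_c
sigma_y = [[0.5, 0.1], [0.1, 0.3]]          # covariance of the labeled class (PSD)
a_i = [0.8, 0.3]                            # feature from the last encoding layer
label, lam = 0, 0.5

u_i = [sum(wk * ak for wk, ak in zip(w_c, a_i)) for w_c in W]   # u_i = W^T a_i
delta = isda_compensation(W, sigma_y, label, lam)
u_comp = [a + b for a, b in zip(u_i, delta)]
print(delta, softmax_ce(u_i, label), softmax_ce(u_comp, label))
```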

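(8) Arcface [32]. Arcface is a classical face recognition loss defined as follows:

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N} \log \frac{e^{s\cos(\phi_{i,y_i} + m)}}{e^{s\cos(\phi_{i,y_i} + m)} + \sum_{c \ne y_i} e^{s\cos\phi_{i,c}}}, \tag{40}$$

where $\phi_{i,c}$ is the angle between the weight $w_c$ and the feature $a_i$, both defined in the description of ISDA; $s$ is a scale factor; and $m$ is a hyper-parameter. Here the compensated quantity is the angle $\phi_{i,y_i}$, which is not one of the five compensation targets in our taxonomy. The margin $m$ is a corpus-level term determined via hyper-parameter tuning.

Wang et al. [48] proposed a new Arcface loss, namely, Balancedloss, with category-level compensation. The loss is defined as

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N} \log \frac{e^{s\cos(\phi_{i,y_i} + m_{r_i})}}{e^{s\cos(\phi_{i,y_i} + m_{r_i})} + \sum_{c \ne y_i} e^{s\cos\phi_{i,c}}}, \tag{41}$$

where $r_i$ is the skin-tone category of the $i$-th sample. Clearly, $m_{r_i}$ is a category-level term. It can be optimized via meta learning on a clean validation set $\{(x_j^{v}, y_j^{v})\}_{j=1}^{M}$:

$$\{m_r\}^{*} = \arg\min_{\{m_r\}} \frac{1}{M}\sum_{j=1}^{M} l\big(S(f(x_j^{v}; \theta^{*}(\{m_r\}))), y_j^{v}\big), \tag{42}$$

where $\theta^{*}(\{m_r\})$ denotes the model parameters trained with the given margins. This approach has proven quite effective in the experiments conducted by Wang et al. [48].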

5 Two New Learning Method Examples

This section presents two example methods obtained by introducing the idea of compensation learning into existing algorithms.

5.1 $\ell_1$-based Logit Compensation

The following example illustrates how logit compensation works. Assume that the inferred logit vector of a noisy sample $x_i$ is $u_i = [3, 0.8, 0.2]$ and its (noisy) label is $y_i = [0, 1, 0]$. The cross-entropy loss incurred by this training sample is 2.36. This loss negatively affects training because $y_i$ is noisy. To reduce this negative influence, suppose a compensation vector $\delta u_i$ (e.g., $[-1, 2, 0]$) is learned. The new logit vector then becomes $[2, 2.8, 0.2]$. Consequently, the cross-entropy loss of $x_i$ is 0.42, which is much lower than 2.36. When the $\ell_1$-norm is used, the training loss is

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} l\big(S(u_i + \delta u_i), y_i\big) + \lambda \sum_{i=1}^{N}\|\delta u_i\|_1, \tag{43}$$

where $\delta u_i$ is the logit compensation vector, which is trainable during the training stage. If no noisy or quite hard samples are present, $\delta u_i$ will approach zero for all training samples. This method is called LogComp for brevity. The detailed steps are described in Algorithm 1.
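The worked example above and the LogComp objective can be reproduced in a few lines. The sketch assumes softmax cross-entropy as $l$. The helper `logcomp_objective` is a hypothetical name; it evaluates the objective for given compensations but does not implement the training procedure of Algorithm 1.

```python
import math


def softmax_ce(u, label):
    """Cross-entropy of softmax(u) against class index `label`."""
    m = max(u)
    return m + math.log(sum(math.exp(v - m) for v in u)) - u[label]


def logcomp_objective(logits, labels, deltas, lam):
    """LogComp-style objective: mean CE on compensated logits + lam * sum of l1 norms."""
    data_term = sum(softmax_ce([a + b for a, b in zip(u, d)], y)
                    for u, y, d in zip(logits, labels, deltas)) / len(logits)
    reg = lam * sum(sum(abs(v) for v in d) for d in deltas)
    return data_term + reg


u, label = [3.0, 0.8, 0.2], 1        # noisy sample from the example; label index 1
delta = [-1.0, 2.0, 0.0]             # the compensation vector from the example
print(round(softmax_ce(u, label), 2))                                  # loss before
print(round(softmax_ce([a + b for a, b in zip(u, delta)], label), 2))  # loss after
print(logcomp_objective([u], [label], [delta], lam=0.1))
print(logcomp_objective([u], [label], [delta], lam=1.0))
```

The two objective values show the role of $\lambda$ on this single sample. With $\lambda = 0.1$, the $\ell_1$ cost of this $\delta u_i$ is 0.3, so the compensated objective (about 0.72) beats leaving the sample uncompensated (2.36). With $\lambda = 1.0$, the cost is 3.0, and no compensation is preferred.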

5.2 Mixed Positive and Negative Compensation

We observed that large compensations (i.e., ) concentrate in samples with large losses during the running of LogComp in the experiments. Let . Motivated by adversarial training, (43) is modified into the following form

| (44) |

Compared with (43), (44) has one more hyper-parameter. Nevertheless, (44) is more flexible than (43). The results on image classification show that (44) is better than (43) if appropriate and are used.

Further, negative feature compensation can be used to increase the influences of samples whose losses are below the threshold in the optimization. A mixed compensation is subsequently obtained with the following loss:

| (45) | ||||

The main difference between (45) and the adversarial training loss [35] is that the losses of quite hard (including noisy) samples are not increased any more in (45). Instead, the losses of these samples are reduced as in (45). When , only the maximization part exists and the whole loss becomes the adversarial training loss; when , (45) is reduced to (44).

The minimization part in both (44) and (45) can be solved with an optimization approach similar to PGD [35]. This method is called MixComp for brevity. The PGD-like optimization for the minimization part in Eqs. (44) and (45) is as follows. First, we have

| (46) |

where is the one-hot vector of . Therefore, can be calculated by

| (47) |

where is the hyper-parameter. Accordingly, the updating of is

| (48) |

In our implementation, only one updating step is used. Consequently, if -norm is used, then we have

| (49) |

Therefore, we use to control the bound (i.e., ) of . The detailed steps of MixComp are described in Algorithm 2.
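Below is a sketch of a single positive logit-compensation step under stated assumptions. First, $l$ is the cross-entropy loss, whose gradient with respect to the compensated logits is $S(u_i + \delta u_i) - y_i$. Second, the step is a plain gradient-descent step of size $\alpha$ taken from $\delta u_i = 0$. The paper's own update may differ, for instance a PGD-style sign step, and the helper names are hypothetical. Under these assumptions, $\delta u_i = \alpha(y_i - p_i)$. Hence $\|\delta u_i\|_1 = 2\alpha(1 - p_{i,y_i}) \le 2\alpha$ and $\|\delta u_i\|_\infty \le \alpha$, which shows how the step size alone bounds the compensation.

```python
import math


def softmax(u):
    """S(u) with max-shift for numerical stability."""
    m = max(u)
    exps = [math.exp(v - m) for v in u]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_ce(u, label):
    """Cross-entropy of softmax(u) against class index `label`."""
    m = max(u)
    return m + math.log(sum(math.exp(v - m) for v in u)) - u[label]


def one_step_logit_compensation(u, label, alpha):
    """One gradient-descent step on delta u_i, starting from zero.

    d l / d delta = softmax(u + delta) - one_hot(label); at delta = 0 this is p_i - y_i.
    """
    p = softmax(u)
    return [-alpha * (pc - (1.0 if c == label else 0.0)) for c, pc in enumerate(p)]


u, label, alpha = [3.0, 0.8, 0.2], 1, 1.0
delta = one_step_logit_compensation(u, label, alpha)
u_comp = [a + b for a, b in zip(u, delta)]
print(delta, softmax_ce(u, label), softmax_ce(u_comp, label))
```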

6 Experiments

This section evaluates our methods (LogComp and MixComp) in image classification and text sentiment analysis when there are noisy labels.

6.1 Competing Methods

As our proposed methods belong to the end-to-end noise-aware solution, the following methods are compared: soft/hard Bootstrapping [39], label smoothing [45], online label smoothing [57], progressive self label correction (ProSelfLC) [49], and PGD-based adversarial training (PGD-AT) [35].

The parameter settings are detailed in the corresponding subsections. In all experiments, the average classification accuracy and standard deviation of three repeated runs are recorded for each comparison.

6.2 Image Classification

Table 1: Test accuracy (%, mean ± standard deviation over three runs) on CIFAR-10 with ResNet-20.

| Method | 0% | Random 10% | Random 20% | Random 30% | Pair 10% | Pair 20% | Pair 30% |
|---|---|---|---|---|---|---|---|
| Base (ResNet-20) | 91.79 ± 0.31 | 88.78 ± 0.33 | 87.55 ± 0.32 | 85.85 ± 0.37 | 90.32 ± 0.19 | 89.28 ± 0.14 | 87.06 ± 0.23 |
| Soft Bootstrapping | 91.83 ± 0.12 | 89.37 ± 0.18 | 87.52 ± 0.37 | 85.59 ± 0.33 | 90.44 ± 0.23 | 89.16 ± 0.22 | 87.08 ± 0.25 |
| Hard Bootstrapping | 92.06 ± 0.10 | 89.61 ± 0.20 | 88.07 ± 0.32 | 86.37 ± 0.26 | 90.34 ± 0.18 | 89.54 ± 0.25 | 86.86 ± 0.19 |
| Label Smoothing | 92.12 ± 0.14 | 90.15 ± 0.09 | 88.54 ± 0.18 | 86.82 ± 0.16 | 90.63 ± 0.22 | 90.12 ± 0.06 | 88.28 ± 0.42 |
| Online Label Smoothing | 92.18 ± 0.15 | 89.84 ± 0.14 | 88.19 ± 0.15 | 86.08 ± 0.22 | 90.65 ± 0.18 | 89.52 ± 0.08 | 87.68 ± 0.16 |
| ProSelfLC | 91.80 ± 0.16 | 89.90 ± 0.16 | 88.84 ± 0.22 | 86.78 ± 0.31 | 90.40 ± 0.23 | 89.76 ± 0.17 | 87.11 ± 0.20 |
| PGD-AT | 89.90 ± 0.08 | 87.56 ± 0.13 | 86.87 ± 0.13 | 84.80 ± 0.17 | 88.90 ± 0.15 | 88.38 ± 0.07 | 87.44 ± 0.10 |
| LogComp | 92.42 ± 0.09 | 90.99 ± 0.06 | 90.20 ± 0.16 | 88.81 ± 0.18 | 91.17 ± 0.17 | 91.13 ± 0.08 | 89.72 ± 0.15 |
| MixComp | 92.26 ± 0.04 | 91.09 ± 0.11 | 90.63 ± 0.12 | 88.98 ± 0.15 | 91.29 ± 0.03 | 91.15 ± 0.05 | 90.01 ± 0.16 |

Table 2: Test accuracy (%, mean ± standard deviation over three runs) on CIFAR-100 with ResNet-20.

| Method | 0% | Random 10% | Random 20% | Random 30% | Pair 10% | Pair 20% | Pair 30% |
|---|---|---|---|---|---|---|---|
| Base (ResNet-20) | 67.81 ± 0.08 | 63.67 ± 0.29 | 60.63 ± 0.33 | 57.82 ± 0.35 | 63.94 ± 0.29 | 61.22 ± 0.03 | 55.74 ± 0.22 |
| Soft Bootstrapping | 68.38 ± 0.24 | 64.01 ± 0.23 | 60.66 ± 0.28 | 57.97 ± 0.23 | 64.29 ± 0.31 | 60.71 ± 0.23 | 56.27 ± 0.26 |
| Hard Bootstrapping | 67.62 ± 0.29 | 64.28 ± 0.33 | 60.32 ± 0.22 | 58.09 ± 0.19 | 63.96 ± 0.26 | 60.69 ± 0.29 | 56.18 ± 0.17 |
| Label Smoothing | 67.54 ± 0.10 | 65.04 ± 0.18 | 61.84 ± 0.27 | 59.06 ± 0.08 | 65.43 ± 0.24 | 62.71 ± 0.24 | 58.92 ± 0.19 |
| Online Label Smoothing | 67.80 ± 0.19 | 64.55 ± 0.15 | 61.53 ± 0.22 | 59.19 ± 0.13 | 64.70 ± 0.28 | 62.54 ± 0.19 | 57.44 ± 0.25 |
| ProSelfLC | 68.37 ± 0.22 | 64.64 ± 0.28 | 62.14 ± 0.17 | 58.93 ± 0.24 | 65.36 ± 0.18 | 62.57 ± 0.16 | 59.08 ± 0.27 |
| PGD-AT | 64.37 ± 0.17 | 60.39 ± 0.24 | 57.38 ± 0.21 | 54.23 ± 0.16 | 60.41 ± 0.20 | 58.08 ± 0.13 | 54.37 ± 0.22 |
| LogComp | 68.72 ± 0.11 | 65.55 ± 0.16 | 62.56 ± 0.16 | 59.59 ± 0.15 | 66.49 ± 0.19 | 64.74 ± 0.13 | 61.36 ± 0.16 |
| MixComp | 68.71 ± 0.15 | 65.79 ± 0.14 | 62.76 ± 0.20 | 60.17 ± 0.12 | 66.81 ± 0.16 | 64.83 ± 0.11 | 63.55 ± 0.13 |

Two benchmark image classification data sets, namely, CIFAR-10 and CIFAR-100 [25], are used. CIFAR-10 contains 10 categories and CIFAR-100 contains 100 categories. The details of these two data sets are shown in [25].

The synthetic label noise is simulated using the two common schemes in [13, 15, 23]. The first is the random scheme, in which each training sample is assigned a uniformly random label with a given probability (the noise rate). The second is the pair scheme, in which each training sample is assigned, with the same probability, to the category next to its true category in the category list. The noise rate is set to 10%, 20%, and 30%.

The training/testing configuration of [49] is followed. The batch size and learning rate are set to 128 and 0.1, respectively. Other parameter settings are detailed in Appendix B.

Tables 1 and 2 show the results on CIFAR-10 and CIFAR-100, respectively, with ResNet-20 [17] as the base network. Our methods, MixComp and LogComp, achieve the highest accuracy in twelve and two of the fourteen comparisons, respectively. The MixComp results are obtained with the negative-compensation hyper-parameter set to 0, indicating that only positive compensation benefits the clean accuracy under label noise. Indeed, the two hyper-parameters of MixComp balance the trade-off between positive and negative compensation.
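
The two noise schemes can be sketched as follows. This is a minimal illustration rather than the code used in the experiments: labels are assumed to be integers in 0..K-1 ordered as in the category list, the pair scheme is assumed to wrap the last category around to the first (the text does not say how the last category is handled), and the helper `corrupt_labels` is hypothetical.

```python
import random


def corrupt_labels(labels, num_classes, noise_rate, scheme, seed=0):
    """Return a noisy copy of integer labels in [0, num_classes).

    scheme="random": with probability noise_rate, draw a new label uniformly
        from all categories (the draw may coincide with the true label).
    scheme="pair": with probability noise_rate, move label y to the next
        category (y + 1) % num_classes; the wrap-around is an assumption.
    """
    if scheme not in ("random", "pair"):
        raise ValueError(f"unknown scheme: {scheme!r}")
    rng = random.Random(seed)  # local generator for reproducibility
    noisy = []
    for y in labels:
        if rng.random() < noise_rate:
            y = rng.randrange(num_classes) if scheme == "random" else (y + 1) % num_classes
        noisy.append(y)
    return noisy


if __name__ == "__main__":
    clean = [i % 10 for i in range(10000)]  # toy label list with 10 categories
    for scheme in ("random", "pair"):
        noisy = corrupt_labels(clean, 10, 0.2, scheme)
        changed = sum(a != b for a, b in zip(clean, noisy)) / len(clean)
        print(scheme, f"{changed:.3f}")
```

Under the random scheme, the fraction of labels that actually change is about noise_rate × (K − 1)/K (about 18% for a 20% rate with K = 10), whereas every selected label changes under the pair scheme.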
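
To make the two compensation directions concrete, the sketch below shifts a logit vector along the gradient of the cross-entropy loss with respect to the logits, which is softmax(z) − onehot(y). It is a simplified, hypothetical illustration in logit space, not MixComp itself (whose negative compensation is adversarial training): a step against the gradient (positive compensation) lowers the loss on the given label, and a step along it (negative compensation) raises it. The helper `compensate` and the step size `eta` are assumptions.

```python
import math


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(z, y):
    return -math.log(softmax(z)[y])


def compensate(z, y, eta, direction):
    """Shift logits z by eta against (direction=+1, positive compensation)
    or along (direction=-1, negative compensation) the CE gradient."""
    p = softmax(z)
    grad = [p_k - (1.0 if k == y else 0.0) for k, p_k in enumerate(p)]
    return [z_k - direction * eta * g_k for z_k, g_k in zip(z, grad)]


if __name__ == "__main__":
    z, y = [2.0, 0.5, -1.0], 1  # the labeled class is not the top-scoring one
    base = cross_entropy(z, y)
    pos = cross_entropy(compensate(z, y, 0.5, +1), y)
    neg = cross_entropy(compensate(z, y, 0.5, -1), y)
    print(f"original {base:.3f}, positive {pos:.3f}, negative {neg:.3f}")
```

Because cross-entropy is convex in the logits, the step along the gradient always raises the loss, and a small step against it lowers the loss; this mirrors why positive compensation softens the pull of a (possibly wrong) label while negative compensation makes training harder.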

Table 3: Ablation study of MixComp on CIFAR-10 with random noise (ResNet-20).

| Method | 0% | 10% | 20% | 30% |
|---|---|---|---|---|
| Baseline (ResNet-20) | 91.79 ± 0.31 | 88.78 ± 0.33 | 87.55 ± 0.32 | 85.85 ± 0.37 |
| Only pos. comp. | 92.26 ± 0.04 | 91.09 ± 0.11 | 90.63 ± 0.12 | 88.98 ± 0.15 |
| Only neg. comp. | 91.66 ± 0.12 | 88.69 ± 0.22 | 87.33 ± 0.10 | 85.71 ± 0.31 |
| Both directions | 91.91 ± 0.19 | 90.11 ± 0.27 | 89.75 ± 0.17 | 88.17 ± 0.16 |

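Table 4: Clean and adversarial accuracies (%) of MixComp on CIFAR-10 with 10% random noise under different perturbation bounds.

| Perturbation bound | 0 | 2/255 | 4/255 | 6/255 | 8/255 |
|---|---|---|---|---|---|
| Clean accuracy (%) | 91.09 ± 0.11 | 90.11 ± 0.27 | 89.77 ± 0.18 | 88.67 ± 0.15 | 88.30 ± 0.19 |
| Adversarial accuracy (%) | 11.57 ± 0.36 | 53.38 ± 0.31 | 64.95 ± 0.24 | 68.20 ± 0.16 | 70.25 ± 0.14 |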

Table 5: Accuracies (%) of the competing methods with deeper base networks.

| Method | ResNet-32 | ResNet-44 | ResNet-56 | ResNet-110 |
|---|---|---|---|---|
| Base | 92.50 ± 0.26 | 92.82 ± 0.15 | 93.03 ± 0.34 | 93.51 ± 0.18 |
| Soft Bootstrapping | 92.40 ± 0.17 | 92.83 ± 0.16 | 93.43 ± 0.27 | 94.08 ± 0.29 |
| Hard Bootstrapping | 92.19 ± 0.23 | 92.94 ± 0.11 | 93.38 ± 0.25 | 94.02 ± 0.23 |
| Label Smoothing | 92.75 ± 0.24 | 92.89 ± 0.18 | 93.05 ± 0.23 | 93.92 ± 0.43 |
| Online Label Smoothing | 92.61 ± 0.19 | 92.93 ± 0.34 | 93.41 ± 0.20 | 93.54 ± 0.18 |
| ProSelfLC | 92.87 ± 0.22 | 92.98 ± 0.28 | 93.21 ± 0.19 | 93.58 ± 0.37 |
| PGD-AT | 90.66 ± 0.16 | 91.31 ± 0.19 | 91.80 ± 0.22 | 91.98 ± 0.15 |
| LogComp | 93.42 ± 0.11 | 93.59 ± 0.09 | 93.80 ± 0.17 | 94.40 ± 0.12 |
| MixComp | 93.00 ± 0.15 | 93.18 ± 0.13 | 93.38 ± 0.21 | 94.35 ± 0.10 |

Table 6: Accuracies (%) of the competing methods with deeper base networks.

| Method | ResNet-32 | ResNet-44 | ResNet-56 | ResNet-110 |
|---|---|---|---|---|
| Base | 69.16 ± 0.19 | 70.02 ± 0.19 | 70.38 ± 0.34 | 73.18 ± 0.12 |
| Soft Bootstrapping | 69.76 ± 0.25 | 70.76 ± 0.34 | 71.01 ± 0.40 | 74.19 ± 0.24 |
| Hard Bootstrapping | 69.37 ± 0.24 | 70.06 ± 0.29 | 70.26 ± 0.31 | 73.35 ± 0.18 |
| Label Smoothing | 69.91 ± 0.27 | 70.52 ± 0.51 | 71.49 ± 0.29 | 74.01 ± 0.44 |
| Online Label Smoothing | 69.53 ± 0.22 | 70.05 ± 0.79 | 71.06 ± 0.26 | 73.59 ± 0.19 |
| ProSelfLC | 69.54 ± 0.29 | 70.39 ± 0.35 | 70.49 ± 0.32 | 73.42 ± 0.24 |
| PGD-AT | 65.94 ± 0.18 | 66.55 ± 0.26 | 67.58 ± 0.29 | 70.83 ± 0.17 |
| LogComp | 71.41 ± 0.21 | 71.48 ± 0.18 | 72.73 ± 0.24 | 75.54 ± 0.14 |
| MixComp | 70.29 ± 0.17 | 71.24 ± 0.19 | 71.81 ± 0.28 | 74.31 ± 0.16 |

Table 7: Accuracies (%) of the competing methods with deeper base networks.

| Method | ResNet-32 | ResNet-44 | ResNet-56 | ResNet-110 |
|---|---|---|---|---|
| Base | 62.46 ± 0.54 | 62.73 ± 0.64 | 63.37 ± 0.22 | 67.51 ± 0.19 |
| Soft Bootstrapping | 63.09 ± 0.33 | 63.69 ± 0.39 | 64.06 ± 0.28 | 67.87 ± 0.26 |
| Hard Bootstrapping | 63.03 ± 0.41 | 63.57 ± 0.32 | 63.99 ± 0.34 | 67.40 ± 0.23 |
| Label Smoothing | 64.45 ± 0.28 | 65.72 ± 0.27 | 66.50 ± 0.74 | 69.43 ± 0.36 |
| Online Label Smoothing | 63.94 ± 0.66 | 65.18 ± 0.70 | 65.45 ± 0.52 | 68.38 ± 0.34 |
| ProSelfLC | 64.04 ± 0.37 | 65.04 ± 0.44 | 63.94 ± 0.41 | 68.86 ± 0.26 |
| PGD-AT | 60.13 ± 0.31 | 60.58 ± 0.28 | 60.02 ± 0.32 | 65.62 ± 0.20 |
| LogComp | 66.50 ± 0.27 | 66.97 ± 0.29 | 68.57 ± 0.25 | 71.74 ± 0.21 |
| MixComp | 67.14 ± 0.23 | 69.07 ± 0.26 | 68.92 ± 0.21 | 71.86 ± 0.18 |

An ablation study is conducted for MixComp on CIFAR-10 (random noise), as MixComp involves both positive and negative compensation. The results in Table 3 indicate that negative compensation alone (i.e., adversarial training) does not improve on the baseline, compensation in both directions improves it moderately, and positive compensation alone achieves the best performance. Table 4 lists the clean and adversarial accuracies of MixComp under different perturbation bounds on CIFAR-10 (10% random noise). Increasing the bound improves the adversarial accuracy but reduces the clean accuracy. Thus, although negative compensation in MixComp does not improve the clean accuracy, it benefits the adversarial accuracy.

When LogComp and MixComp are used, some original labels with high average (positive) compensation terms are found to be erroneous. Fig. 3 shows two such samples from CIFAR-10, whose labels appear to be wrong.

Comparisons are also conducted with deeper base networks [17], namely, ResNet-32, ResNet-44, ResNet-56, and ResNet-110, and the same conclusions are obtained. Tables 5–7 present the classification accuracies of the competing methods with these four base networks at selected noise rates.
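
The label inspection described above can be sketched as ranking samples by the average magnitude of their compensation terms over training. In this hypothetical helper, each sample's history is a list of per-epoch compensation vectors, magnitude is measured with the L2 norm (an assumption), and the `k` highest-scoring samples are returned for manual review; how the compensation terms are learned is outside the sketch.

```python
import math


def flag_suspicious(comp_history, k):
    """Rank samples by the mean L2 norm of their per-epoch compensation vectors.

    comp_history: dict mapping sample id -> list of compensation vectors,
    one vector per epoch. Returns the k ids with the largest mean norm,
    largest first.
    """
    def mean_norm(vectors):
        return sum(math.sqrt(sum(v * v for v in vec)) for vec in vectors) / len(vectors)

    scores = {sid: mean_norm(vecs) for sid, vecs in comp_history.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


if __name__ == "__main__":
    history = {
        "img_0": [[0.1, 0.0], [0.0, 0.1]],  # rarely compensated
        "img_1": [[3.0, 4.0], [0.0, 5.0]],  # heavily compensated every epoch
        "img_2": [[1.0, 0.0], [0.0, 1.0]],
    }
    print(flag_suspicious(history, 2))
```

A high score only says that the model repeatedly needed compensation for that sample; such samples may be mislabeled or merely hard, so the flagged list still requires human judgment.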

6.3 Text Sentiment Analysis

Two benchmark data sets are used, namely, IMDB and SST-2 [47]. Both are binary sentiment classification tasks; their details can be found in [47]. Two types of label noise are added. In the first type (symmetric), the labels of the first 5%, 10%, and 20% of the training samples (according to their indices in the corpus) are flipped to simulate label noise; in the second type (asymmetric), the labels of the first 5%, 10%, and 20% of the positive samples are flipped to negative. The 300-dimensional GloVe [59] embedding is used. The number of epochs, batch size, learning rate, and dropout rate follow the settings in [19, 52]. The data split and other parameter settings are detailed in Appendix C.

Tables 8 and 9 show the results of the competing methods on IMDB and SST-2, respectively, under symmetric and asymmetric label noise, with BiLSTM with attention [10] as the base network. Our proposed method, MixComp, achieves the best overall results (the highest accuracy in 13 of the 14 comparisons). Even without added label noise (0%), both MixComp and LogComp outperform the base BiLSTM with attention on both data sets.
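
The two text-noise schemes are deterministic given the corpus order, as the following sketch shows. It assumes labels are 0 (negative) and 1 (positive) and that the number of flipped samples is the noise fraction times the relevant count, rounded to the nearest integer; both helper names are hypothetical.

```python
def flip_symmetric(labels, rate):
    """Flip the binary labels (0/1) of the first `rate` fraction of samples by index."""
    n_flip = round(len(labels) * rate)
    return [1 - y if i < n_flip else y for i, y in enumerate(labels)]


def flip_asymmetric(labels, rate, positive=1, negative=0):
    """Flip the first `rate` fraction of positive samples (by index) to negative."""
    pos_idx = [i for i, y in enumerate(labels) if y == positive]
    to_flip = set(pos_idx[: round(len(pos_idx) * rate)])
    return [negative if i in to_flip else y for i, y in enumerate(labels)]


if __name__ == "__main__":
    labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
    print(flip_symmetric(labels, 0.2))   # first two samples flipped in both directions
    print(flip_asymmetric(labels, 0.5))  # first half of the positives become negative
```

Symmetric flipping affects both classes, whereas asymmetric flipping only moves positives to the negative class and therefore also shifts the class balance.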

Table 8: Accuracies (%) on IMDB with BiLSTM+attention as the base network.

| Method | 0% | Sym. 5% | Sym. 10% | Sym. 20% | Asym. 5% | Asym. 10% | Asym. 20% |
|---|---|---|---|---|---|---|---|
| Base (BiLSTM+attention) | 84.39 ± 0.34 | 83.04 ± 0.17 | 81.90 ± 0.61 | 78.13 ± 0.13 | 82.35 ± 0.88 | 79.53 ± 2.68 | 73.74 ± 1.14 |
| Soft Bootstrapping | 84.79 ± 0.87 | 83.87 ± 0.13 | 81.11 ± 0.62 | 79.60 ± 1.78 | 83.36 ± 1.11 | 80.70 ± 2.19 | 73.52 ± 2.65 |
| Hard Bootstrapping | 84.44 ± 0.93 | 84.10 ± 0.54 | 83.01 ± 0.70 | 80.84 ± 1.07 | 82.48 ± 1.72 | 81.42 ± 1.55 | 75.26 ± 1.02 |
| Label Smoothing | 84.62 ± 0.18 | 83.14 ± 0.24 | 82.41 ± 0.51 | 80.73 ± 0.20 | 82.75 ± 0.29 | 82.28 ± 0.33 | 74.70 ± 0.48 |
| Online Label Smoothing | 84.83 ± 0.51 | 84.14 ± 0.37 | 82.09 ± 0.54 | 80.91 ± 1.17 | 83.78 ± 0.77 | 81.35 ± 0.92 | 73.75 ± 1.38 |
| ProSelfLC | 84.79 ± 0.39 | 83.21 ± 0.44 | 82.17 ± 0.47 | 80.42 ± 0.41 | 83.22 ± 0.91 | 81.58 ± 0.85 | 74.96 ± 3.01 |
| PGD-AT | 85.82 ± 0.10 | 84.12 ± 0.37 | 83.53 ± 0.44 | 81.48 ± 0.18 | 82.41 ± 0.98 | 80.75 ± 0.73 | 72.40 ± 2.15 |
| LogComp | 85.17 ± 0.16 | 84.53 ± 0.20 | 83.75 ± 0.46 | 81.64 ± 0.22 | 84.45 ± 0.39 | 81.44 ± 0.36 | 76.87 ± 0.30 |
| MixComp | 85.87 ± 0.08 | 85.12 ± 0.14 | 84.33 ± 0.22 | 82.60 ± 0.19 | 85.12 ± 0.18 | 82.31 ± 0.21 | 77.83 ± 0.25 |

Table 9: Accuracies (%) on SST-2 with BiLSTM+attention as the base network.

| Method | 0% | Sym. 5% | Sym. 10% | Sym. 20% | Asym. 5% | Asym. 10% | Asym. 20% |
|---|---|---|---|---|---|---|---|
| Base (BiLSTM+attention) | 83.85 ± 0.02 | 82.71 ± 0.05 | 81.12 ± 0.29 | 79.72 ± 0.03 | 82.07 ± 0.45 | 81.46 ± 0.19 | 79.49 ± 0.39 |
| Soft Bootstrapping | 83.77 ± 0.33 | 83.25 ± 0.17 | 82.21 ± 0.23 | 80.40 ± 0.42 | 82.78 ± 0.27 | 81.66 ± 0.44 | 79.14 ± 0.25 |
| Hard Bootstrapping | 83.68 ± 0.40 | 83.18 ± 0.22 | 81.45 ± 0.63 | 80.50 ± 0.16 | 82.25 ± 0.54 | 81.73 ± 0.23 | 79.52 ± 0.67 |
| Label Smoothing | 83.87 ± 0.52 | 82.78 ± 0.09 | 82.16 ± 0.32 | 80.57 ± 0.14 | 82.69 ± 0.41 | 81.95 ± 0.24 | 79.63 ± 0.68 |
| Online Label Smoothing | 83.67 ± 0.19 | 83.34 ± 0.14 | 82.03 ± 0.22 | 80.61 ± 0.39 | 82.58 ± 0.33 | 82.20 ± 0.32 | 79.57 ± 0.72 |
| ProSelfLC | 83.81 ± 0.05 | 83.07 ± 0.16 | 81.92 ± 0.28 | 80.28 ± 0.33 | 82.42 ± 0.43 | 82.03 ± 0.21 | 79.27 ± 0.79 |
| PGD-AT | 83.88 ± 0.14 | 82.15 ± 0.23 | 81.81 ± 0.19 | 80.33 ± 0.12 | 82.36 ± 0.23 | 81.69 ± 0.18 | 73.81 ± 0.24 |
| LogComp | 84.10 ± 0.08 | 83.18 ± 0.13 | 81.83 ± 0.15 | 80.42 ± 0.05 | 82.85 ± 0.10 | 82.23 ± 0.15 | 78.87 ± 0.23 |
| MixComp | 84.34 ± 0.05 | 83.46 ± 0.08 | 82.31 ± 0.16 | 80.99 ± 0.13 | 82.75 ± 0.18 | 82.30 ± 0.12 | 79.80 ± 0.21 |

Table 10: Ablation study of MixComp on IMDB with symmetric noise (BiLSTM+attention).

| Method | 0% | 5% | 10% | 20% |
|---|---|---|---|---|
| Baseline (BiLSTM+attention) | 84.39 ± 0.34 | 83.04 ± 0.17 | 81.90 ± 0.61 | 78.13 ± 0.13 |
| Only pos. comp. | 84.65 ± 0.11 | 83.53 ± 0.21 | 82.91 ± 0.33 | 80.10 ± 0.22 |
| Only neg. comp. | 85.84 ± 0.36 | 84.87 ± 0.22 | 83.89 ± 0.27 | 81.73 ± 0.24 |
| Both directions | 85.87 ± 0.08 | 85.12 ± 0.14 | 84.33 ± 0.22 | 82.60 ± 0.19 |

An ablation study is also conducted for MixComp on IMDB; the results are shown in Table 10. Each compensation direction is useful on its own, and their combination achieves the best performance. Because the sentiment of some sentences is difficult to judge, some original samples are inevitably hard or noisy. When LogComp is used, some original labels with high average compensation terms are found to be erroneous. For example, the sentence “Plummer steals the show without resorting to camp as nicholas’ wounded and wounding uncle ralph” is labeled as positive in the original set. More examples are listed in Tables A-1 and A-2 in Appendix D.

LogComp also achieves the second-best results in most comparisons on IMDB. On IMDB, the base model usually converges at the second epoch, whereas LogComp usually converges at the third or fifth epoch. Fig. 4 shows the validation accuracies of the base model and LogComp over the six epochs. LogComp slows down convergence, so that the training data can be exploited more fully.

6.4 Discussion

More extensions and new methods can be obtained based on our taxonomy.

(1) The extension of the logit compensation described in (44). As previously mentioned, each weighting method may correspond to a compensating method. Self-paced learning (SPL) [26] is a classical sample weighting strategy in machine learning. The weights are obtained with the following objective function:

| (50) |

The solution is

| (51) |

which indicates that samples whose losses exceed the threshold receive zero weight. As the threshold increases, more samples participate in model training.

Fig. 5 shows the weight curves of the original SPL and its variants. Logit compensation (44) can implement SPL when its hyper-parameters satisfy the following conditions:
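
For reference, the classical SPL formulation [26] minimizes Σ_i v_i ℓ_i − λ Σ_i v_i over binary weights v_i ∈ {0, 1}; for fixed model parameters, its minimizer sets v_i = 1 when ℓ_i < λ and v_i = 0 otherwise. The symbols ℓ_i, v_i, and λ are our own and may not match those in (50) and (51). A minimal sketch of this hard weighting step (hypothetical helper names) is:

```python
def spl_weights(losses, lam):
    """Hard self-paced weights: v_i = 1 if loss_i < lam else 0.

    This is the closed-form minimizer of sum_i v_i * loss_i - lam * sum_i v_i
    over v_i in {0, 1} for fixed losses (ties loss_i == lam are set to 0).
    """
    return [1 if loss < lam else 0 for loss in losses]


def spl_objective(losses, weights, lam):
    """Value of the SPL objective for given losses and binary weights."""
    return sum(v * l for v, l in zip(weights, losses)) - lam * sum(weights)


if __name__ == "__main__":
    losses = [0.1, 0.4, 0.9, 2.3]
    for lam in (0.5, 1.0, 3.0):  # a growing threshold admits more samples
        print(lam, spl_weights(losses, lam))
```

Each sample contributes ℓ_i − λ to the objective when selected, so it is selected exactly when that contribution is negative; raising λ over the epochs therefore admits harder samples progressively.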

| (52) |

where is the index of the current epoch. A new method is obtained and can be called self-paced logit compensation.

With the settings in (52), similar weight curves are obtained. Fig. 6 shows the ratio of the compensated loss to the original loss on the CIFAR-100 data set. The curve indicates that our strategy likewise assigns higher weights (ratio > 1) to samples with low losses and lower weights (ratio < 1) to samples with high losses.

(2) The extension of MixComp. The two hyper-parameters of MixComp characterize the extent of positive and negative compensation, respectively. Intuitively, an example with a larger loss should receive greater positive compensation, whereas an example with a smaller loss should receive greater negative compensation. Therefore, the constraints on the compensation terms in (26) can be redefined as follows:

| (53) |
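
The intuition behind (53) can be illustrated with an assumed allocation rule rather than the paper's exact constraints: each sample's positive-compensation bound grows linearly with the rank of its loss, and its negative-compensation bound shrinks correspondingly. The linear rank rule, the helper name, and the maxima `pos_max` and `neg_max` are all hypothetical.

```python
def compensation_budgets(losses, pos_max, neg_max):
    """Assign per-sample compensation bounds from loss ranks (hypothetical rule).

    The sample with the largest loss gets the full positive bound pos_max and
    no negative bound; the sample with the smallest loss gets the reverse.
    Returns a list of (positive_bound, negative_bound) pairs.
    """
    n = len(losses)
    order = sorted(range(n), key=lambda i: losses[i])  # ascending loss
    budgets = [None] * n
    for rank, i in enumerate(order):
        r = rank / (n - 1) if n > 1 else 0.5  # 0 for smallest loss, 1 for largest
        budgets[i] = (pos_max * r, neg_max * (1.0 - r))
    return budgets


if __name__ == "__main__":
    print(compensation_budgets([0.3, 2.0, 0.1], pos_max=1.0, neg_max=0.5))
```

Using ranks instead of raw loss values keeps the bounds insensitive to the overall loss scale, which changes considerably during training; a loss-value-based rule would be an equally valid reading of the intuition.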

(3) The extension of Bootstrapping. The Bootstrapping loss and the online label smoothing can be unified into the following new loss:

| (54) |

where is the category-level average prediction in the previous epoch; and are hyper-parameters and are located in [0, 1]. When equals 0, the above loss becomes the soft Bootstrapping loss. When equals 1, the loss becomes the online label smoothing loss with a little difference. Specifically, is defined as follows:

| (55) |

where is the prediction confidence of the prediction , and is the normalizer. Two typical definitions of are

| (56) | ||||

When the second definition is used and , the unified loss becomes the online label smoothing. Nevertheless, the values of obtained by the above two definitions are close to each other when #epoch >5 in most data sets according to our observations. The unified new method can be called mixBootstrapping.
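
A minimal sketch of mixBootstrapping follows, under assumptions that should be checked against (54) to (56): the target is `alpha * onehot(y) + (1 - alpha) * ((1 - beta) * p + beta * q_bar[y])`, where `p` is the current prediction and `q_bar[y]` is the confidence-weighted average prediction of category `y` from the previous epoch. With `beta = 0` this reduces to the soft Bootstrapping target of [39]; with `beta = 1` and the correctness-indicator confidence it matches the online label smoothing target of [57]. The names `alpha`, `beta`, and both helpers are hypothetical, as are the two confidence definitions (predicted probability of the labeled class, and an indicator of a correct prediction).

```python
import math


def class_average_predictions(preds, labels, num_classes, confidence="correct"):
    """Confidence-weighted average prediction per category (previous epoch).

    confidence="correct": weight 1 if argmax(p) == y else 0 (assumed).
    confidence="prob": weight p[y] (assumed).
    A category with zero total weight falls back to the uniform distribution.
    """
    if confidence not in ("correct", "prob"):
        raise ValueError(f"unknown confidence: {confidence!r}")
    sums = [[0.0] * num_classes for _ in range(num_classes)]
    norm = [0.0] * num_classes  # per-category normalizer
    for p, y in zip(preds, labels):
        if confidence == "correct":
            w = 1.0 if max(range(num_classes), key=p.__getitem__) == y else 0.0
        else:
            w = p[y]
        norm[y] += w
        for k in range(num_classes):
            sums[y][k] += w * p[k]
    return [
        [s / norm[c] for s in sums[c]] if norm[c] > 0 else [1.0 / num_classes] * num_classes
        for c in range(num_classes)
    ]


def mix_bootstrapping_loss(p, y, q_bar, alpha, beta):
    """Cross-entropy of prediction p against the mixed target (hypothetical form)."""
    target = [
        alpha * (1.0 if k == y else 0.0)
        + (1.0 - alpha) * ((1.0 - beta) * p[k] + beta * q_bar[y][k])
        for k in range(len(p))
    ]
    return -sum(t * math.log(p_k) for t, p_k in zip(target, p))
```

In practice, `q_bar` would be recomputed once per epoch from the stored predictions and held fixed during the next epoch, so the extra cost is one pass over the saved outputs.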

7 Conclusions

This study reveals a widely used yet under-explored machine learning strategy, namely, compensating. Machine learning methods that fully or partially leverage compensating constitute a new learning paradigm called compensation learning. To solidify the theoretical basis of compensation learning, a systematic taxonomy is constructed on the basis of the compensation target, direction, inference manner, and granularity level. To demonstrate the universality of compensation learning, several existing learning methods are explained within this taxonomy. Furthermore, two concrete compensation learning methods (i.e., LogComp and MixComp) are proposed. Extensive experiments on image classification and text sentiment analysis suggest that the proposed methods are effective in robust learning tasks.

Acknowledgement

We thank Mr. Mengyang Li for his useful suggestions on the experiments.

References

- [1] Yoshua Bengio, Jérôme Louradour, Ronan Collobert, and Jason Weston. Curriculum learning. In ICML, pages 41–48, 2009.

- [2] Philipp Benz, Chaoning Zhang, Adil Karjauv, and In So Kweon. Universal adversarial training with class-wise perturbations. In ICME, pages 1–6, 2021.

- [3] Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, and Tengyu Ma. Learning imbalanced datasets with label-distribution-aware margin loss. In NeurIPS, pages 1565–1576, 2019.

- [4] Kuang-Yu Chang, Chu-Song Chen, and Yi-Ping Hung. Ordinal hyperplanes ranker with cost sensitivities for age estimation. In CVPR, pages 585–592, 2011.

- [5] Corinna Cortes and Vladimir Vapnik. Support-vector networks. Machine Learning, 20(3):273–297, 1995.

- [6] D. Das and C. S. G. Lee. A two-stage approach to few-shot learning for image recognition. IEEE Transactions on Image Processing, 29(99):3336–3350, 2019.

- [7] Jia Deng, Jonathan Krause, and Li Fei-Fei. Fine-grained crowdsourcing for fine-grained recognition. In CVPR, pages 580–587, 2013.

- [8] Pedro A Forero, Vassilis Kekatos, and Georgios B Giannakis. Robust clustering using outlier-sparsity regularization. IEEE Transactions on Signal Processing, 60(8):4163–4177, 2012.

- [9] Yoav Freund and Robert E Schapire. A decision-theoretic generalization of on-line learning and an application to boosting. Journal of Computer and System Sciences, 55(1):119–139, 1997.

- [10] Felix A Gers, Jürgen Schmidhuber, and Fred Cummins. Learning to forget: Continual prediction with LSTM. Neural Computation, 12(10):2451–2471, 2000.

- [11] Morgane Goibert and Elvis Dohmatob. Adversarial robustness via label-smoothing. arXiv preprint arXiv:1906.11567, 2019.

- [12] Jacob Goldberger and Ehud Ben-Reuven. Training deep neural-networks using a noise adaptation layer. In ICLR, 2017.

- [13] Sheng Guo, Weilin Huang, Haozhi Zhang, Chenfan Zhuang, Dengke Dong, Matthew R Scott, and Dinglong Huang. CurriculumNet: Weakly supervised learning from large-scale web images. In ECCV, pages 139–154, 2018.

- [14] Bo Han, Quanming Yao, Tongliang Liu, Gang Niu, Ivor W Tsang, James T Kwok, and Masashi Sugiyama. A survey of label-noise representation learning: Past, present and future. arXiv preprint arXiv:2011.04406, 2020.

- [15] Bo Han, Quanming Yao, Xingrui Yu, Gang Niu, Miao Xu, Weihua Hu, Ivor W Tsang, and Masashi Sugiyama. Co-teaching: Robust training of deep neural networks with extremely noisy labels. In NeurIPS, pages 8536–8546, 2018.

- [16] Jiangfan Han, Ping Luo, and Xiaogang Wang. Deep self-learning from noisy labels. In ICCV, pages 5137–5146, 2019.

- [17] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, pages 770–778, 2016.

- [18] Geoffrey Hinton, Oriol Vinyals, and Jeff Dean. Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531, 2015.

- [19] James Hong and Michael Fang. Sentiment analysis with deeply learned distributed representations of variable length texts. Stanford University Report, pages 1–9, 2015.

- [20] Hidekata Hontani, Takamiti Matsuno, and Yoshihide Sawada. Robust nonrigid ICP using outlier-sparsity regularization. In CVPR, pages 174–181, 2012.

- [21] Chen Huang, Yining Li, Chen Change Loy, and Xiaoou Tang. Learning deep representation for imbalanced classification. In CVPR, pages 5375–5384, 2016.

- [22] Jinchi Huang, Lie Qu, Rongfei Jia, and Binqiang Zhao. O2U-Net: A simple noisy label detection approach for deep neural networks. In ICCV, pages 3325–3333, 2019.

- [23] Lu Jiang, Zhengyuan Zhou, Thomas Leung, Li-Jia Li, and Li Fei-Fei. MentorNet: Learning data-driven curriculum for very deep neural networks on corrupted labels. In ICML, pages 2309–2318, 2018.

- [24] Justin M Johnson and Taghi M Khoshgoftaar. Survey on deep learning with class imbalance. Journal of Big Data, 6(1):1–54, 2019.

- [25] Alex Krizhevsky and Geoffrey Hinton. Learning multiple layers of features from tiny images. Technical report, 2009.

- [26] M Pawan Kumar, Benjamin Packer, and Daphne Koller. Self-paced learning for latent variable models. In NeurIPS, pages 1189–1197, 2010.

- [27] Wonseok Lee, Hanbit Lee, and Sang-goo Lee. Semantics-preserving adversarial training. arXiv preprint arXiv:2009.10978, 2020.

- [28] Buyu Li, Yu Liu, and Xiaogang Wang. Gradient harmonized single-stage detector. In AAAI, pages 8577–8584, 2019.

- [29] Junnan Li, Yongkang Wong, Qi Zhao, and Mohan S Kankanhalli. Learning to learn from noisy labeled data. In CVPR, pages 5051–5059, 2019.

- [30] Shuang Li, Kaixiong Gong, Chi Harold Liu, Yulin Wang, Feng Qiao, and Xinjing Cheng. MetaSAug: Meta semantic augmentation for long-tailed visual recognition. In CVPR, pages 5212–5221, 2021.

- [31] Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Dollár. Focal loss for dense object detection. In ICCV, pages 2999–3007, 2017.

- [32] Hao Liu, Xiangyu Zhu, Zhen Lei, and Stan Z Li. AdaptiveFace: Adaptive margin and sampling for face recognition. In CVPR, pages 11947–11956, 2019.

- [33] Tongliang Liu and Dacheng Tao. Classification with noisy labels by importance reweighting. IEEE Transactions on Pattern Analysis and Machine Intelligence, 38(3):447–461, 2016.

- [34] Xingjun Ma, Yisen Wang, Michael E Houle, Shuo Zhou, Sarah Erfani, Shutao Xia, Sudanthi Wijewickrema, and James Bailey. Dimensionality-driven learning with noisy labels. In ICML, pages 3361–3370, 2018.

- [35] Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. Towards deep learning models resistant to adversarial attacks. In ICLR, 2018.

- [36] Aditya Krishna Menon, Sadeep Jayasumana, Ankit Singh Rawat, Himanshu Jain, Andreas Veit, and Sanjiv Kumar. Long-tail learning via logit adjustment. In ICLR, 2021.

- [37] Seyed-Mohsen Moosavi-Dezfooli, Alhussein Fawzi, Omar Fawzi, and Pascal Frossard. Universal adversarial perturbations. In CVPR, pages 86–94, 2017.

- [38] Nagarajan Natarajan, Inderjit S Dhillon, Pradeep Ravikumar, and Ambuj Tewari. Learning with noisy labels. In NeurIPS, pages 1196–1204, 2013.

- [39] Scott Reed, Honglak Lee, Dragomir Anguelov, Christian Szegedy, Dumitru Erhan, and Andrew Rabinovich. Training deep neural networks on noisy labels with bootstrapping. In ICLR, 2015.

- [40] Mengye Ren, Wenyuan Zeng, Bin Yang, and Raquel Urtasun. Learning to reweight examples for robust deep learning. In ICML, pages 4331–4340, 2018.

- [41] Ali Shafahi, Mahyar Najibi, Zheng Xu, John Dickerson, Larry S Davis, and Tom Goldstein. Universal adversarial training. In AAAI, pages 5636–5643, 2020.

- [42] Jun Shu, Qi Xie, Lixuan Yi, Qian Zhao, Sanping Zhou, Zongben Xu, and Deyu Meng. Meta-Weight-Net: Learning an explicit mapping for sample weighting. In NeurIPS, pages 1917–1928, 2019.

- [43] Martin Slawski, Emanuel Ben-David, et al. Linear regression with sparsely permuted data. Electronic Journal of Statistics, 13(1):1–36, 2019.

- [44] Hwanjun Song, Minseok Kim, Dongmin Park, Yooju Shin, and Jae-Gil Lee. Learning from noisy labels with deep neural networks: A survey. arXiv preprint arXiv:2007.08199, 2020.

- [45] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jon Shlens, and Zbigniew Wojna. Rethinking the inception architecture for computer vision. In CVPR, pages 2818–2826, 2016.

- [46] Sunil Thulasidasan, Tanmoy Bhattacharya, Jeff Bilmes, Gopinath Chennupati, and Jamal Mohd-Yusof. Combating label noise in deep learning using abstention. In ICML, pages 6234–6243, 2019.

- [47] Chenglong Wang, Feijun Jiang, and Hongxia Yang. A hybrid framework for text modeling with convolutional RNN. In KDD, pages 2061–2069, 2017.

- [48] Mei Wang, Yaobin Zhang, and Weihong Deng. Meta balanced network for fair face recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

- [49] Xinshao Wang, Yang Hua, Elyor Kodirov, David A Clifton, and Neil M Robertson. ProSelfLC: Progressive self label correction for training robust deep neural networks. In CVPR, pages 752–761, 2021.

- [50] Yixin Wang, Alp Kucukelbir, and David M Blei. Robust probabilistic modeling with Bayesian data reweighting. In ICML, pages 3646–3655, 2017.

- [51] Yulin Wang, Xuran Pan, Shiji Song, Hong Zhang, Cheng Wu, and Gao Huang. Implicit semantic data augmentation for deep networks. In NeurIPS, pages 12614–12623, 2019.

- [52] Yequan Wang, Aixin Sun, Jialong Han, Ying Liu, and Xiaoyan Zhu. Sentiment analysis by capsules. In WWW, pages 1165–1174, 2018.

- [53] Yaqing Wang, Quanming Yao, James T Kwok, and Lionel M Ni. Generalizing from a few examples: A survey on few-shot learning. ACM Computing Surveys (CSUR), 53(3):1–34, 2020.

- [54] Zhen Wang, Guosheng Hu, and Qinghua Hu. Training noise-robust deep neural networks via meta-learning. In CVPR, pages 4523–4532, 2020.

- [55] Xiaoxia Wu, Ethan Dyer, and Behnam Neyshabur. When do curricula work? In ICLR, 2021.

- [56] J. Yao, J. Wang, I. W. Tsang, Y. Zhang, J. Sun, C. Zhang, and R. Zhang. Deep learning from noisy image labels with quality embedding. IEEE Transactions on Image Processing, 28:1909–1922, 2019.

- [57] Chang-Bin Zhang, Peng-Tao Jiang, Qibin Hou, Yunchao Wei, Qi Han, Zhen Li, and Ming-Ming Cheng. Delving deep into label smoothing. IEEE Transactions on Image Processing, 30:5984–5996, 2021.

- [58] Wei Emma Zhang, Quan Z Sheng, Ahoud Alhazmi, and Chenliang Li. Adversarial attacks on deep-learning models in natural language processing: A survey. ACM Transactions on Intelligent Systems and Technology (TIST), 11(3):1–41, 2020.

- [59] Liang Zhao, Tianyang Zhao, Tingting Sun, Zhuo Liu, and Zhikui Chen. Multi-view robust feature learning for data clustering. IEEE Signal Processing Letters, 27:1750–1754, 2020.

Appendix A Meta Logit Adjustment

In Eq. (29) of the paper, the hyper-parameter is fixed for all categories. A category-wise setting for may be useful. Therefore, a new logit adjustment with meta optimization on is proposed and called Meta logit adjustment. Let be the validation set for meta optimization. According to Eqs. (12–14) in the paper, the new loss is

| (a-1) |

Given a value for , the network parameter can be obtained by solving

| (a-2) |

After is obtained, can be optimized by solving

| (a-3) |
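
The category-wise logit adjustment underlying (a-1) can be sketched as follows, assuming the additive form of [36], in which the logit of category k is shifted by τ_k log π_k with π_k the prior of category k; here every category has its own τ_k instead of a single shared value. The helper and argument names are hypothetical, and the bilevel meta-optimization in (a-2) and (a-3), which alternates between updating the network on the training set and updating τ on the validation set, is not shown.

```python
import math


def logit_adjusted_ce(logits, y, class_priors, tau):
    """Cross-entropy on adjusted logits z_k + tau[k] * log(prior_k).

    tau holds one value per category (category-wise setting); using the same
    value for every category recovers a single shared hyper-parameter.
    """
    adjusted = [z + t * math.log(pi) for z, t, pi in zip(logits, tau, class_priors)]
    m = max(adjusted)  # log-sum-exp with max subtraction for stability
    log_norm = m + math.log(sum(math.exp(a - m) for a in adjusted))
    return log_norm - adjusted[y]


if __name__ == "__main__":
    logits, priors = [1.0, 0.0, -0.5], [0.7, 0.2, 0.1]
    for tau in ([0.0] * 3, [1.0] * 3, [1.0, 0.5, 0.0]):
        print(tau, round(logit_adjusted_ce(logits, 2, priors, tau), 4))
```

With all entries of `tau` set to zero the loss reduces to the ordinary cross-entropy, which gives a simple sanity check before any meta-optimization is added.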

Appendix B Parameter setting in image classification

For the two data sets, the number of epochs is set to 300. In LogComp, one hyper-parameter is searched in {0.25, 0.35}, and the learning rate for the compensation variable is searched in {1.5, 3, 4.5, 6}. In MixComp, the two hyper-parameters are searched in {0.5, 1.5, 2, 3, 4, 5} and {0, 8/255, 10/255, 12/255}, respectively. A further hyper-parameter is determined according to the top percentage of the ordered losses, and this percentage is searched in {0, 5, 7.5, 15, 25, 35, 45, 50}. In Soft/Hard Bootstrapping, Label Smoothing, and online label smoothing, the parameters follow the settings in [57]. In ProSelfLC, the parameters follow the settings in [49]. In PGD-AT, the perturbation bound is searched in {8/255, 10/255, 12/255}.
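
One possible reading of "determined according to the top percentage of the ordered losses" is a loss threshold taken at the k-percent position of the losses sorted in descending order. The sketch below implements that reading only; the helper name, the parameter `k`, and the boundary convention (the loss of the ceil(k% · n)-th largest sample, with k = 0 selecting no sample) are assumptions.

```python
import math


def top_percent_threshold(losses, k):
    """Return (threshold, mask) for the top-k percent largest losses.

    The threshold is the loss of the ceil(k * n / 100)-th largest sample;
    mask[i] is True when losses[i] >= threshold (ties may select a few extra
    samples). k = 0 selects no sample.
    """
    n = len(losses)
    m = math.ceil(k * n / 100.0)  # multiply first to avoid float round-off
    if m == 0:
        return float("inf"), [False] * n
    threshold = sorted(losses, reverse=True)[m - 1]
    return threshold, [loss >= threshold for loss in losses]


if __name__ == "__main__":
    batch_losses = [0.2, 1.5, 0.7, 3.0, 0.1, 0.9, 2.2, 0.4, 0.3, 1.1]
    for k in (0, 7.5, 25, 50):  # values taken from the search grid above
        thr, mask = top_percent_threshold(batch_losses, k)
        print(k, thr, sum(mask))
```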

Appendix C Parameter setting in text sentiment analysis

For the IMDB data set, the batch size is set to 64; the learning rate is set to 0.001; the number of epochs is set to 6; the proportion of train/val/test data is 4:1:5; the embedding dropout is set to 0.5; and the dimension of hidden vectors is 100. In LogComp, the learning rate for the compensation variable is searched in {0.6, 0.7, 0.8, 0.9, 1}, and another hyper-parameter is searched in {0.75, 1}. In MixComp, the two hyper-parameters are searched in {0, 0.075, 0.15, 0.25, 0.5, 0.75, 1} and {0, 0.005, 0.01, 0.015}, respectively. A further hyper-parameter is determined according to the top percentage of the ordered losses, and this percentage is searched in {0, 5, 7.5, 15, 25, 35, 45, 50}.

For the SST-2 data set, the batch size is set to 32; the learning rate is set to 0.0001; the number of epochs is set to 50; the train/val/test division follows the default split; the embedding dropout is set to 0.7; and the dimension of hidden vectors is 256. In LogComp, the learning rate for the compensation variable is searched in {0.02, 0.025, 0.03, 0.035, 0.04}, and another hyper-parameter is searched in {0.75, 1}. In MixComp, the two hyper-parameters are searched in {0, 0.075, 0.15, 0.25, 0.5, 0.75, 1} and {0, 0.005, 0.01, 0.015}, respectively. A further hyper-parameter is determined according to the top percentage of the ordered losses, and this percentage is searched in {0, 5, 7.5, 15, 25, 35, 45, 50}.

Appendix D More sentence examples

Table A-1 shows some samples whose logit compensation has a high norm and whose labels are erroneous. Without context, we believe these labels are wrong. Some readers may consider the labels correct in certain contexts; in our view, however, it is inappropriate to assume that annotators are familiar with these contexts in advance. Table A-2 shows some samples that are difficult for machines to predict; their compensation norms are also high.

Table A-1: Samples with high compensation norms whose original labels appear erroneous (1: positive, 0: negative).

| Sample | Original label | Our label |
|---|---|---|
| the exploitative, clumsily staged violence overshadows everything, including most of the actors. | 1 | 0 |
| .. a fascinating curiosity piece – fascinating, that is, for about ten minutes. | 0 | 1 |
| this is a great movie. I love the series on tv and so I loved the movie. One of the best things in the movie is that Helga finally admits her deepest darkest secret to Arnold!!! that was great. i loved it it was pretty funny too. It’s a great movie! Doy!!! | 0 | 1 |

Table A-2: Samples with high compensation norms that are difficult for machines to predict.

| Sample | Original label |
|---|---|
| it ’s a boring movie about a boring man, made watchable by a bravura performance from a consummate actor incapable of being boring. | 1 |
| she is a lioness, protecting her cub, and he a reluctant villain, incapable of controlling his crew. | 1 |
| it made me want to get made-up and go see this movie with my sisters. | 1 |

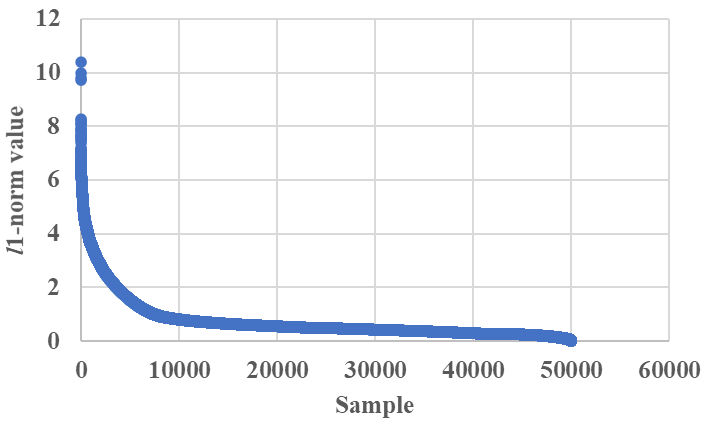

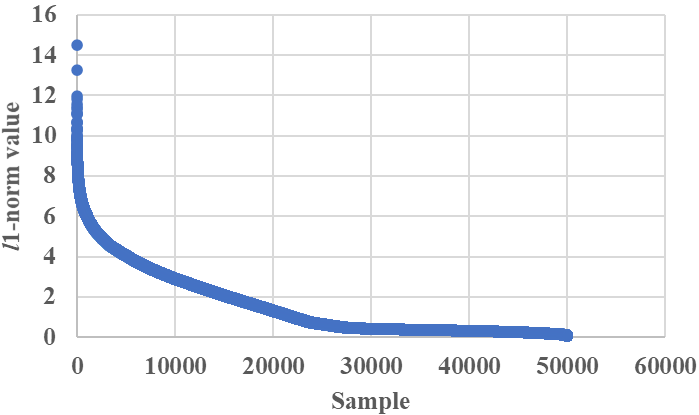

In addition, we plot the distribution of the norms of the compensated logit vectors obtained by LogComp on the CIFAR-10 and CIFAR-100 data sets without added label noise (0%). The results are shown in Figs. A-1 and A-2. Both distributions exhibit a long tail, which is reasonable.