Competitive percolation strategies for network recovery

Abstract

Restoring operation of critical infrastructure systems after catastrophic events is an important issue, inspiring work in multiple fields, including network science, civil engineering, and operations research. We consider the problem of finding the optimal order of repairing elements in power grids and similar infrastructure. Most existing methods either consider only the network structure of the system, potentially ignoring important features, or incorporate component-level details, leading to complex optimization problems with limited scalability. We aim to narrow the gap between the two approaches. Analyzing realistic recovery strategies, we identify over- and undersupply penalties of commodities as the primary contributions to reconstruction cost, and we demonstrate that traditional network science methods, which maximize the largest connected component, are cost inefficient. We propose a novel competitive percolation recovery model that accounts for node demand and supply as well as network structure. Our model closely approximates realistic recovery strategies, suppressing the growth of the largest connected component through a process analogous to explosive percolation. Using synthetic power grids, we investigate the effect of network characteristics on the efficiency of the recovery process. We find that high structural redundancy enables reduced total cost and faster recovery, but requires more information at each recovery step. We also confirm that decentralized supply generally benefits recovery efforts.

Introduction

Resilience of complex networks is one of the most studied topics of network science, with an expanding literature on the spreading of failures, mitigation of damage, and recovery processes [1, 2, 3, 4, 5, 6]. The level of functionality of a network is typically quantified by its connectedness, e.g., the size of the largest component [1], average path length [7, 8], or various centrality metrics [9]. Such simple topology-based metrics ensure mathematical tractability and allow us to analyze and compare networks that can be very different in nature, providing general insights into the organization of complex systems. However, such a perspective necessarily ignores important system-specific details. For example, abstracted topological models of infrastructure networks recovering from damage or catastrophic failure aim to rapidly restore the largest component [10, 11, 12, 13]. Yet extensive connectivity is not a necessary condition for meeting all supply and demand. For instance, consumers of a power grid can be served if they are connected to at least one power source and that source satisfies operational constraints [14]. The concept of “islanding”, a technique of intentionally partitioning the network to avoid cascading failures, is in fact a practical strategy used to improve security and resilience during restoration efforts in power grids [15, 16, 17]. Indeed, after the 2010 earthquake in Chile, the recovery process first created five islands, which were only connected to each other in the final steps of reconstruction [18].

The restoration of critical infrastructure operation after a catastrophic event, such as a hurricane or earthquake, is a problem of great practical importance and the focus of a significant body of work in civil and industrial engineering. The goal of engineering-based models of recovery is to provide system-specific predictions and actionable recommendations. This is achieved by incorporating component-level details and realistic transmission dynamics into the models, often in the form of generalized formulations of the network design problem (NDP) that satisfy network flows. In this context, the objective functions of such NDP-based models aim to minimize the construction and/or operational costs of recovering edges and nodes in a utility network. Basic forms of the NDP have been well studied [19, 20] and have recently been combined with scheduling and resource allocation problems to model the entire restoration process [21, 22, 23]. While these models provide a principled way to obtain optimal, centralized recovery strategies, their complexity (NP-hard in general [19]) limits computation, and therefore interpretation, to small instances. More efficient approximate solutions for NDPs have been found using optimization meta-heuristics such as a hybrid ant system [24] and gradient descent [25]. Such algorithms are generally applicable to global search problems and reduce computational cost by giving up guaranteed optimality; they therefore provide limited insight into the mechanism of network formation during recovery. We will analyze the output of an NDP algorithm and, leveraging these observations, develop a percolation-based model for network recovery with the goal of uncovering important principles of network formation and recoverability.

Percolation processes, often used for studying properties of stochastic network formation, have recently been applied to network recovery [26]. In the kinetic formulation of random percolation [27], we start with $N$ unconnected nodes and consider a discrete time process. At each timestep, an edge is selected uniformly at random from the set of all possible edges and added to the network. Initially the largest connected component (LCC) is sublinear in $N$; above a critical edge density it spans a finite fraction of the network, and the LCC is referred to as the giant component. Controlling the location of the critical point is of great interest in many systems; for instance, suppressing the formation of the giant component may reduce the likelihood of virus spreading in social contact networks. This can be achieved by selecting $m$ candidate edges at each timestep and adding the edge that optimizes some criterion. The general class of models that results from this choice is referred to as competitive percolation or an Achlioptas process [28, 29]. While simple, Achlioptas processes are often scalable and numerically analyzable, and they provide a parameter, $m$, for tuning how closely the formation process matches the desired criterion. Note that when $m$ is equal to the number of possible edges, we always add the edge that is optimal with respect to the selection criterion. Previous percolation-based recovery models typically measure solution quality by how quickly the LCC grows [11, 12], or assume that nodes not connected to the LCC are nonfunctional [10, 13]. However, empirical studies of recovery scenarios suggest that these assumptions do not apply to infrastructure networks after catastrophic events [18].
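The kinetic process and its competitive variant can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in this study: the helper names (`UnionFind`, `competitive_percolation`) are hypothetical, candidate node pairs are drawn with replacement from all pairs (negligible for large $N$), and the product rule from the explosive percolation literature is used only as an example of a criterion that suppresses component growth.

```python
import random


class UnionFind:
    """Disjoint-set forest that tracks the size of every component."""

    def __init__(self, n):
        self.parent = list(range(n))
        self.size = [1] * n

    def find(self, i):
        # Path halving keeps the trees shallow.
        while self.parent[i] != i:
            self.parent[i] = self.parent[self.parent[i]]
            i = self.parent[i]
        return i

    def union(self, i, j):
        """Merge the components of i and j; return the root of the result."""
        ri, rj = self.find(i), self.find(j)
        if ri == rj:
            return ri
        if self.size[ri] < self.size[rj]:
            ri, rj = rj, ri
        self.parent[rj] = ri
        self.size[ri] += self.size[rj]
        return ri


def competitive_percolation(n, steps, m, score, seed=0):
    """Achlioptas-type process on n initially isolated nodes.

    At each step, m candidate node pairs are drawn at random and the pair with
    the highest score(uf, i, j) is added, ties broken uniformly at random.
    With m = 1 this is ordinary random percolation. Returns the LCC size
    after every step.
    """
    rng = random.Random(seed)
    uf = UnionFind(n)
    lcc, trajectory = 1, []
    for _ in range(steps):
        candidates = [tuple(rng.sample(range(n), 2)) for _ in range(m)]
        scores = [score(uf, i, j) for i, j in candidates]
        best = max(scores)
        i, j = rng.choice([c for c, s in zip(candidates, scores) if s == best])
        lcc = max(lcc, uf.size[uf.union(i, j)])
        trajectory.append(lcc)
    return trajectory


def random_rule(uf, i, j):
    # All candidates are equivalent, so the process is random percolation.
    return 0


def product_rule(uf, i, j):
    # Prefer the pair whose components have the smallest size product.
    return -uf.size[uf.find(i)] * uf.size[uf.find(j)]


n = 2000
traj_random = competitive_percolation(n, n, 1, random_rule)
traj_product = competitive_percolation(n, n, 2, product_rule)
```

With $m = 1$ the LCC stays small until roughly $N/2$ edges have been added and then grows continuously; with the product rule and $m = 2$ the same growth is delayed to higher edge densities.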

In this work, we aim to narrow the gap between topology-based recovery approaches and computationally difficult optimization approaches by incorporating features that mirror infrastructure restoration processes. We start by applying a generalized version of a well-studied NDP recovery algorithm [22, 23, 30] to a small case study, and we identify the satisfaction of demand as a key driving force in the initial periods of recovery, outranking operational efficiency and direct repair costs of network elements in importance. Motivated by this finding, we define a simple, competitive percolation-based model of recovery that aims to maximize the satisfaction of consumer demand in a greedy manner. We show that component size anti-correlates with the likelihood of further growth, which leads to islanding and suppresses the emergence of large-scale connectivity, similar to explosive percolation transitions [29] and in contrast with traditional recovery models. We apply our recovery algorithm to synthetic power grids to systematically investigate how realistic structural features of the network affect the efficiency of the recovery process. We find that high structural redundancy (related to the existence of multiple paths between nodes) allows for reduced total cost and faster recovery; however, to benefit from that redundancy, an increasing amount of information needs to be considered at each step of reconstruction. We also study the role of the ratio of suppliers to consumers and find that decentralized supply generally benefits recovery efforts, unless the fraction of suppliers becomes unrealistically high. Our model deepens our understanding of network formation during recovery and of the relationship between network structure and recoverability. We anticipate that our work can lead to efficient approximations of the NDP algorithm by leveraging the important mechanisms uncovered by our competitive percolation model.

Model

Problem statement and the optimal recovery model

We are interested in the problem of restoring the operation of a critical infrastructure system after it has sustained large-scale damage. The infrastructure network is represented by a graph $G = (V, E)$, where $V$ is the set of nodes corresponding to substations and $E$ is the set of edges corresponding to transmission lines, e.g., power lines, water or gas pipes. We introduce the parameter $d_i$ representing the commodity demand of node $i$: if $d_i > 0$, node $i$ is a net consumer; if $d_i < 0$, node $i$ is a net supplier. We normalize $d_i$ such that the total consumption (and production) sums to unity, i.e., $\sum_{i:\,d_i > 0} d_i = -\sum_{i:\,d_i < 0} d_i = 1$. Following a catastrophic event, a subset $G' = (V', E')$ of the network becomes damaged. We study a discrete time reconstruction process: in each timestep we fix one damaged component, and the process ends once the entire network is functional. Our goal is to identify a sequence in which to repair the elements such that the total cost of recovery is minimized. In this manuscript, we focus on the fundamental case where all links are damaged but nodes remain functional, i.e., $E' = E$ and $V' = \emptyset$.
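As a small illustration of the normalization, the sketch below rescales raw net demands. It assumes the sign convention $d_i > 0$ for consumers and $d_i < 0$ for suppliers, and it rescales consumers and suppliers separately, which is one possible choice when raw data are not perfectly balanced; `normalize_demand` is a hypothetical helper.

```python
import numpy as np


def normalize_demand(d):
    """Rescale net demands so that consumption and production each sum to one.

    Assumed sign convention: d_i > 0 is a net consumer, d_i < 0 a net
    supplier, d_i = 0 a junction node. Consumers and suppliers are rescaled
    separately, which also enforces global balance, sum_i d_i = 0.
    """
    d = np.asarray(d, dtype=float)
    consumers, suppliers = d > 0, d < 0
    if not consumers.any() or not suppliers.any():
        raise ValueError("need at least one consumer and one supplier")
    out = np.zeros_like(d)
    out[consumers] = d[consumers] / d[consumers].sum()
    out[suppliers] = d[suppliers] / -d[suppliers].sum()
    return out


d = normalize_demand([3.0, 1.0, 0.0, -2.0, -2.0])
# d -> [0.75, 0.25, 0.0, -0.5, -0.5]
```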

Optimization frameworks are often used to explore the space of possible repair sequences and identify the best solutions. We implement the time-dependent network design problem (td-NDP) algorithm [22, 23, 30], a well-known optimization algorithm for network recovery developed by the civil engineering community. Of the recovery processes we examine in this paper, td-NDP is the most realistic, and therefore the most computationally complex. It is formulated as a mixed integer program that minimizes a cost including reconstruction costs of network components, operational costs, and penalties incurred for unsatisfied demand, while taking constraints on the flows of commodities into account. Mixed integer programs are NP-hard in general, apart from special cases. For the td-NDP this means that problems become exponentially harder as the size of the network to be reconstructed increases; it is therefore common practice to break up the recovery into time windows of length $T$ and find the locally optimal solution in each window. A formal definition of td-NDP is provided in the Methods section.

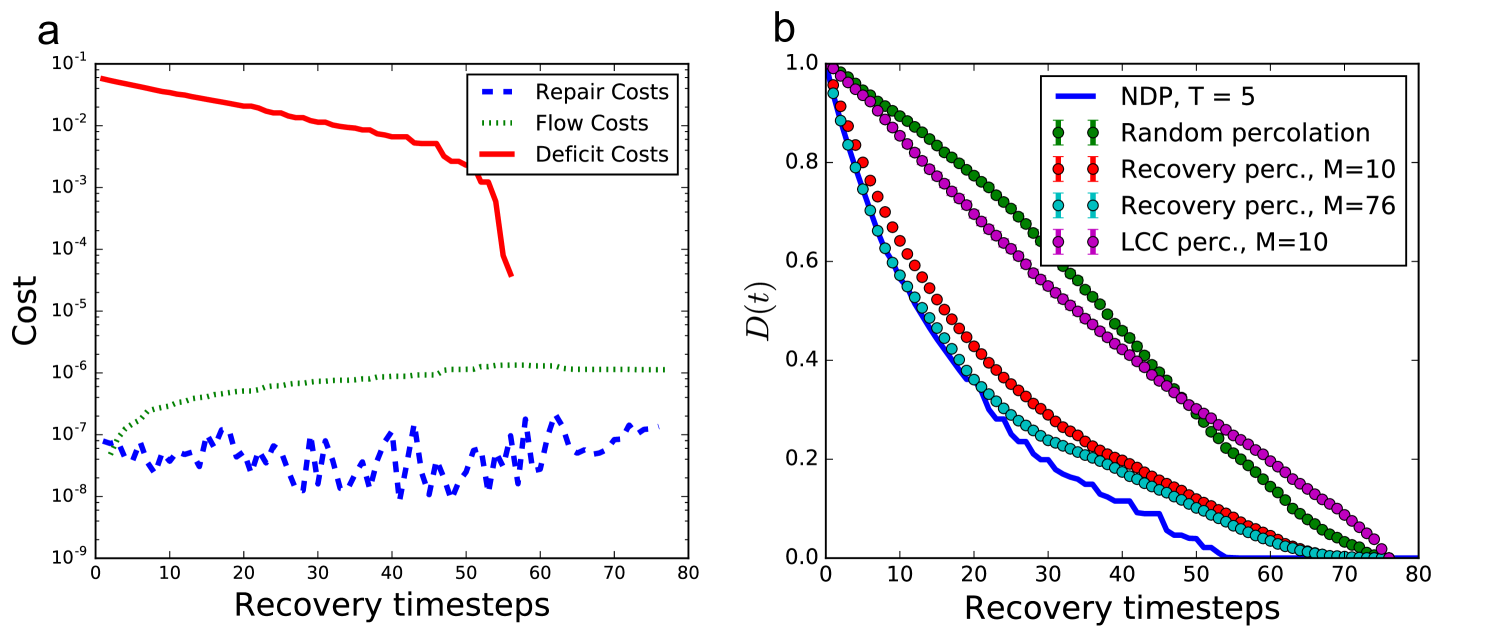

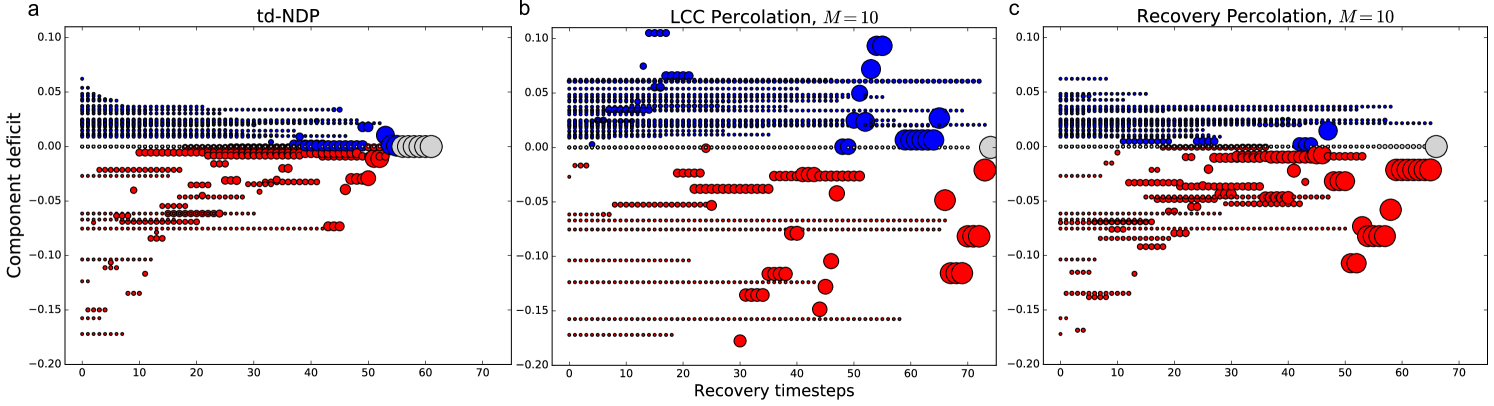

To uncover the key driving factors and properties of the recovery process, we apply the td-NDP to a representative example, the power grid of Shelby County, Tennessee, which consists of suppliers, consumers, and junction nodes (where $d_i = 0$), connected by transmission lines. The network topology and the necessary parameters were obtained from Refs. [22, 23]. Figure 1a shows the total repair cost as a function of time as we perform the td-NDP, with a fixed time window length $T$, on a network that was initially completely destroyed, with the cost broken down by type of expense. We see that the deficit cost (i.e., the penalty accrued for unsatisfied demand) is overwhelmingly dominant and decreases exponentially throughout the initial stages of recovery. To investigate how this affects the growth of the components, Figure 2a shows the commodity deficit or surplus of each connected component throughout the recovery process, with circle sizes representing the component size and colors indicating whether a component is over- (blue) or undersupplied (red). The td-NDP process initially results in many small components with relatively small surplus or deficit, and only towards the end of the process are all components joined. This is consistent with the islanding techniques discussed in engineering practice. Our goal is to develop a simple and computationally efficient model of the recovery process that captures these key features.

Competitive percolation optimizing for LCC growth

Previous topology-based recovery processes prioritize the rapid growth of the Largest Connected Component (LCC) [11, 12, 10, 13]. Models vary in the details, such as the type of failure (random, localized, catastrophic) and additional secondary objectives (such as prioritizing nodes based on population), but the metric for the quality of the solution is directly related to how quickly the LCC grows.

As a representative example of topology-driven recovery strategies, we implement an Achlioptas process using a selection rule that maximizes the size of the resulting component, which we refer to as LCC percolation. In this process, we randomly select $m$ candidate edges out of the set of damaged edges at each discrete timestep. We then examine the impact that each individual edge would have and select the edge that, when added, creates the largest connected component. More specifically, let $S_i$ denote the size of the component to which node $i$ belongs. If nodes $i$ and $j$ belong to separate components, repairing edge $(i, j)$ creates a component of size $S_{ij} = S_i + S_j$; if they belong to the same component, the size of the component does not change and we set $S_{ij} = S_i$. Out of the $m$ candidate edges, we repair the one that maximizes $S_{ij}$; if multiple candidate links have the same maximal $S_{ij}$, we select one of them uniformly at random. If $m = 1$, the process is equivalent to traditional percolation. If $m = |E'|$, i.e., all remaining damaged edges are considered, the process is largely deterministic: we always repair an edge that is optimal with respect to the selection criterion.
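The LCC percolation rule can be sketched as follows. This is an illustrative sketch rather than the code behind the figures: `lcc_percolation` is a hypothetical name, all edges are assumed damaged initially ($E' = E$), and an edge inside an existing component is scored by the unchanged component size, $S_{ij} = S_i$.

```python
import random


def lcc_percolation(n, damaged_edges, m, seed=0):
    """Repair order produced by LCC percolation (a sketch).

    At every timestep, m candidates are drawn from the remaining damaged
    edges; the one maximizing S_ij, the size of the component it would
    create, is repaired, with ties broken uniformly at random.
    """
    rng = random.Random(seed)
    parent, size = list(range(n)), [1] * n

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    def s_ij(edge):
        ri, rj = find(edge[0]), find(edge[1])
        # Edge inside one component: its size is unchanged, S_ij = S_i.
        return size[ri] if ri == rj else size[ri] + size[rj]

    remaining, order = list(damaged_edges), []
    while remaining:
        candidates = rng.sample(remaining, min(m, len(remaining)))
        best = max(s_ij(e) for e in candidates)
        edge = rng.choice([e for e in candidates if s_ij(e) == best])
        remaining.remove(edge)
        order.append(edge)
        ri, rj = find(edge[0]), find(edge[1])
        if ri != rj:  # union by size
            if size[ri] < size[rj]:
                ri, rj = rj, ri
            parent[rj] = ri
            size[ri] += size[rj]
    return order


path_edges = [(0, 1), (1, 2), (2, 3), (3, 4)]
order = lcc_percolation(5, path_edges, m=len(path_edges))
```

On a path graph with every damaged edge considered, each repair after the first one extends the single growing component, which is exactly the greedy LCC growth described above.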

We now apply LCC percolation as a model for the recovery of the Shelby County power grid and compare the results to the benchmark td-NDP process. Figure 2b shows that if we use growth of the LCC as our objective, the LCC, represented by the largest circle, grows rapidly throughout the process, as expected. However, the deficit or surplus of this component fluctuates greatly. As indicated by the magnitude of the total commodity deficit $D$, i.e., the total unsatisfied demand in the network, in Figure 1b, such a recovery algorithm is costly and leaves large portions of the grid without power until the final steps of the recovery. We conclude that a recovery process based on quick growth of the LCC is neither cost efficient nor effective at satisfying consumers' demand as quickly as possible. Therefore, we do not consider this algorithm in further studies.

Competitive percolation optimizing for demand satisfaction

We have shown that the key driving factor in recovery processes is the reduction of the total commodity deficit, i.e., the total unsatisfied demand, and that optimizing for LCC growth fails to capture this. To capture the essence of real recovery strategies, we propose a competitive percolation process, which we refer to as recovery percolation, that aims to directly reduce the unsatisfied demand instead of optimizing for LCC growth. In addition to network topology, this recovery process also takes into account the net demand or production of individual nodes.

We define $D_i$ as the commodity deficit of the connected component to which node $i$ belongs. We assume that the capacities of the transmission lines are sufficient and thus do not limit the flow of commodities during the recovery process, a common practice in the infrastructure recovery literature [14, 22, 23]. Therefore, the commodity deficit of a component is the sum of the demand or supply of the individual nodes belonging to the component, i.e., $D_i = \sum_{k \in \mathcal{C}_i} d_k$, where $\mathcal{C}_i$ is the set of nodes belonging to the component containing node $i$.

We use the commodity deficit of the components as the selection criterion for the competitive percolation model to account for the goal of balancing supply and demand. As in LCC percolation, at each timestep we randomly select $m$ damaged candidate edges, from which one is chosen to be repaired and added to the network as follows. We first consider how much demand would be met by adding each of the $m$ edges individually to the network. More specifically, if nodes $i$ and $j$ belong to components such that $D_i D_j < 0$, i.e., one component is undersupplied and the other oversupplied, then repairing edge $(i, j)$ reduces the total commodity deficit by $\Delta D_{ij} = \min(|D_i|, |D_j|)$; if $D_i D_j \geq 0$, there is no commodity deficit reduction, i.e., $\Delta D_{ij} = 0$. Out of the $m$ candidate edges, we repair the one that maximizes $\Delta D_{ij}$; if multiple candidates have the same maximal $\Delta D_{ij}$, we repair one of them chosen uniformly at random. As $m$ shrinks and approaches 1, the process becomes more stochastic, while for $m = |E'|$ the process is largely deterministic, as we always select an edge that is optimal with respect to the selection criterion.
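If the total commodity deficit is taken to be the total unsatisfied demand and the demands sum to zero, the gain of a single repair follows directly: joining an undersupplied component ($D_i > 0$) to an oversupplied one ($D_j < 0$) leaves $\max(D_i + D_j, 0)$ unmet, so the deficit drops by $D_i - \max(D_i + D_j, 0) = \min(|D_i|, |D_j|)$. The sketch below implements the resulting greedy rule under these assumptions; `recovery_percolation` is a hypothetical name, and candidate sampling and tie-breaking follow the description above.

```python
import random


def recovery_percolation(n, damaged_edges, d, m, seed=0):
    """Recovery percolation sketch: returns (repair order, D after each step).

    Assumed conventions: d_k > 0 is demand, d_k < 0 is supply, sum(d) == 0,
    and D is the total unsatisfied demand, so joining components with
    D_i * D_j < 0 lowers D by min(|D_i|, |D_j|).
    """
    rng = random.Random(seed)
    parent = list(range(n))
    deficit = [float(x) for x in d]  # D_C, stored at each component root

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    def delta(edge):
        Di, Dj = deficit[find(edge[0])], deficit[find(edge[1])]
        # Same component gives Di * Dj = Di**2 >= 0, hence no gain.
        return min(abs(Di), abs(Dj)) if Di * Dj < 0 else 0.0

    total = sum(max(x, 0.0) for x in deficit)
    remaining, order, history = list(damaged_edges), [], []
    while remaining:
        candidates = rng.sample(remaining, min(m, len(remaining)))
        gains = [delta(e) for e in candidates]
        best = max(gains)
        edge = rng.choice([e for e, g in zip(candidates, gains) if g == best])
        remaining.remove(edge)
        order.append(edge)
        total -= best
        ri, rj = find(edge[0]), find(edge[1])
        if ri != rj:
            parent[rj] = ri
            deficit[ri] += deficit[rj]
        history.append(total)
    return order, history


demand = [0.5, 0.5, -0.5, -0.5]                 # two consumers, two suppliers
edges = [(0, 1), (2, 3), (0, 2), (1, 3)]
order, history = recovery_percolation(4, edges, demand, m=len(edges))
```

With all candidates considered, the two consumer-supplier links are repaired first and the deficit falls from 1 to 0.5 to 0; the consumer-consumer and supplier-supplier links bring no further gain.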

Figure 1b shows that for the Shelby County power grid, the total commodity deficit during recovery percolation with large $m$ closely approximates that of the td-NDP, especially at the beginning of the recovery process when costs are much higher. The approximation remains very effective even for small $m$, when only a small fraction of the total edges is considered at each timestep. Figure 2c shows dynamical behavior in recovery percolation similar to that of the td-NDP solution (cf. Figure 2a): the formation of larger components is delayed, and larger components tend to have smaller commodity deficits.

Results

In the following, we apply the recovery percolation model to various network topologies to identify important mechanisms driving network formation and to understand how network structure affects the efficiency of recovery efforts. For each synthetically generated network, the demand distribution is chosen to approximate the demand observed in real power grids (details are provided in the Methods section).

Recovery percolation on complete networks

We have shown that recovery percolation closely follows our benchmark td-NDP solution on a real-world topology. We also observed that the growth of connected components is suppressed in recovery percolation compared to LCC percolation. To understand this behavior, we study large systems of $N$ nodes in which potential edges may exist between any node pair, removing the constraints of an underlying topology. Note that the td-NDP process is intractable for networks of this size.

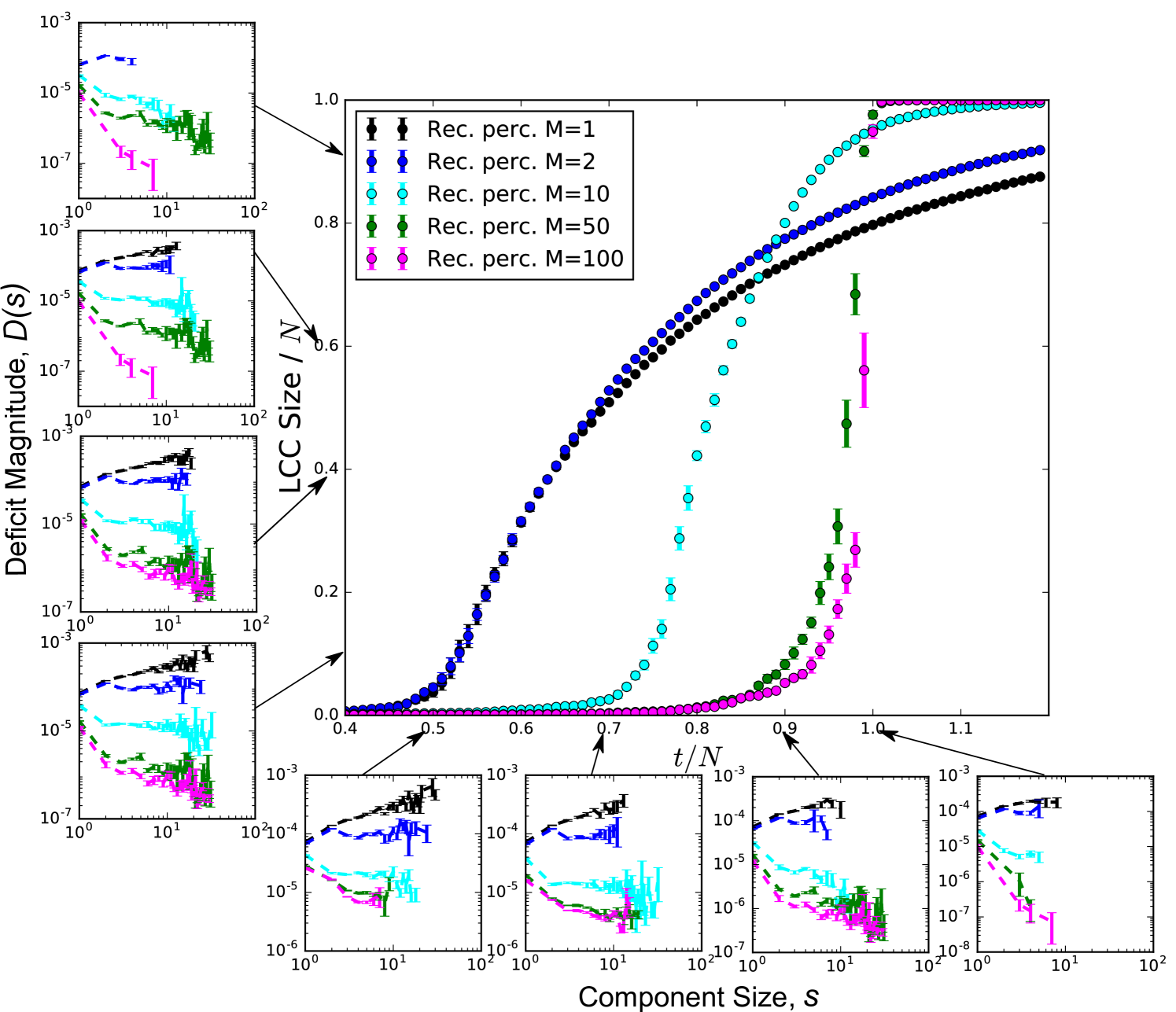

Figure 3 (main panel) shows the growth of the LCC for a range of $m$ values. For $m = 1$, the model reduces to random percolation, which has a continuous (second-order) phase transition once $N/2$ edges have been added (average degree 1); above this critical point the LCC becomes proportional to $N$. As we increase $m$, the apparent transition point shifts to higher edge densities, indicating that the appearance of large-scale connectivity is suppressed; however, once the transition point is reached, the growth of the LCC becomes increasingly abrupt. This observation is analogous to explosive percolation, where links are explicitly chosen to delay component growth [29]. In recovery percolation, by contrast, the delay is an indirect consequence of the restoration strategy.
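One simple way to locate the apparent transition point and quantify its abruptness from a simulated LCC trajectory is to find the largest single-step jump of the LCC. This diagnostic is an illustrative choice, not necessarily the measure behind Figure 3, and `largest_jump` is a hypothetical helper.

```python
def largest_jump(lcc_sizes, n):
    """Locate the largest single-step increase of the LCC.

    Returns (index, jump / n): the index in lcc_sizes of the entry right
    after the biggest jump, a rough marker of the apparent transition point,
    and the jump relative to system size, a rough measure of abruptness.
    """
    jumps = [b - a for a, b in zip(lcc_sizes, lcc_sizes[1:])]
    k = max(range(len(jumps)), key=jumps.__getitem__)
    return k + 1, jumps[k] / n


print(largest_jump([1, 2, 2, 5, 6], 10))  # (3, 0.3)
```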

To understand the underlying mechanism of component growth, we plot the average component sizes and their corresponding average undersupply at various points during the reconstruction process in the bottom row of Figure 3. The average oversupply behaves similarly but is omitted for clarity. The left column of Figure 3 shows the same quantities for a fixed LCC size. The main trend we observe at any given point in time is that, for large enough $m$, component size and undersupply are negatively correlated, and this correlation strengthens as $m$ increases. This means that as components grow, their commodity deficit is reduced and therefore their likelihood of further growth is also reduced, ultimately suppressing the appearance of large-scale connectivity.
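A single number summarizing this trend is the correlation between component size and undersupply among undersupplied components. The sketch below uses NumPy's Pearson coefficient; this summary statistic is an assumption for illustration (Figure 3 reports averages rather than a correlation coefficient), and `size_undersupply_correlation` is a hypothetical helper.

```python
import numpy as np


def size_undersupply_correlation(sizes, deficits):
    """Pearson correlation between component size and undersupply.

    sizes and deficits are parallel sequences with one entry per component;
    only undersupplied components (D_C > 0) are included.
    """
    sizes = np.asarray(sizes, dtype=float)
    deficits = np.asarray(deficits, dtype=float)
    mask = deficits > 0
    if mask.sum() < 2:
        raise ValueError("need at least two undersupplied components")
    return float(np.corrcoef(sizes[mask], deficits[mask])[0, 1])


r = size_undersupply_correlation([1, 2, 4, 8, 16], [0.4, 0.3, 0.2, 0.1, -0.5])
# r < 0: larger undersupplied components carry less unmet demand
```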

These two features also describe islanding, an intentional behavior in resilience planning and recovery of real-world power grids. This islanding behavior is already observed in the early stages of the restoration process and becomes more apparent as the process approaches the transition point.

Recovery percolation on synthetic power grids

So far, we have investigated the recovery process on an underlying graph without topological constraints. To analyze more realistic networks, we now turn our attention to synthetic power grids [31, 32]. This allows us to systematically investigate how typical structural features of power grids affect the efficiency of the recovery process.

Power grids are spatially embedded networks, and physical constraints limit the maximum number of connections a node can have; their degree distributions therefore have an exponential tail, in contrast to many complex networks that display high levels of degree heterogeneity. Power grids typically have a low average degree [32, 33]. An important requirement of power grids is structural redundancy, meaning that the failure of a single link cannot cause the network to fall into disconnected components. A network without redundancy has a tree structure, an average degree of approximately 2 (exactly $2(N-1)/N$), and a unique path connecting each node pair. Any additional link creates a loop and improves redundancy. Structural redundancy can be characterized locally by counting short-range loops; for example, power grids have a relatively high clustering coefficient $C$ [32]. The algebraic connectivity, denoted by $\lambda_2$, is the second smallest eigenvalue of the network's Laplacian matrix and captures global redundancy: it is related to the number of links that have to be removed to break the network into two similarly sized components, with a high value corresponding to high redundancy. The exact value of $\lambda_2$ depends on system size; for a given number of nodes, $\lambda_2$ is low for tree structures and does not decrease as further links are added [34].
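The algebraic connectivity is easy to compute numerically for small graphs. The sketch below builds the Laplacian $L = K - A$ (degree matrix $K$, adjacency matrix $A$) with NumPy and compares a four-node path, which is a tree, with the four-node cycle obtained by adding a single link; `algebraic_connectivity` is a hypothetical helper.

```python
import numpy as np


def algebraic_connectivity(n, edges):
    """Second-smallest eigenvalue lambda_2 of the Laplacian L = K - A."""
    A = np.zeros((n, n))
    for u, v in edges:
        A[u, v] = A[v, u] = 1.0
    L = np.diag(A.sum(axis=1)) - A
    return float(np.linalg.eigvalsh(L)[1])  # eigvalsh sorts ascending


path = [(0, 1), (1, 2), (2, 3)]   # a tree: no redundancy
cycle = path + [(3, 0)]           # one extra link closes a loop
print(algebraic_connectivity(4, path))   # about 0.586 (= 2 - sqrt(2))
print(algebraic_connectivity(4, cycle))  # about 2.0
```

The analytical values are $2 - 2\cos(\pi/4) = 2 - \sqrt{2}$ for the path and $2 - 2\cos(\pi/2) = 2$ for the cycle, illustrating how a single extra link raises $\lambda_2$.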

To generate networks that exhibit these features, we use a simplified version of a practical model developed by Schultz et al. [32]. The model generates spatially embedded networks mimicking the growth of real-world power grids. The process is initiated by randomly placing $N_0$ nodes on the unit square and connecting them with their minimum spanning tree. To increase redundancy, a number of additional links are then added one by one, such that each link is selected to minimize the redundancy-cost trade-off function

$$f(i, j) = \frac{d_{\mathrm{spatial}}(i, j)}{\left(d_G(i, j) + 1\right)^{r}}, \qquad (1)$$

where $i$ and $j$ are two nodes not connected directly, $d_G(i, j)$ is their shortest path distance in the network, and $d_{\mathrm{spatial}}(i, j)$ is their Euclidean distance. The parameter $r$ controls the trade-off between creating long loops to improve redundancy and the cost of building power lines. After the initialization, we add nodes through a growth process. In each time step a new node is added: with probability $1 - s$, the node is placed at a random position and connects to the nearest node; with probability $s$, a randomly selected link $(k, l)$ is split, and a new node is placed halfway between nodes $k$ and $l$ and connected to both of them. To increase redundancy, in each time step an additional link is added with probability $p$, connecting a randomly selected node $i$ to the node $j$ that minimizes $f(i, j)$. Finally, a fraction $\rho$ of the nodes are randomly selected to be suppliers, and the rest are assigned to be consumers.
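The link-selection step of the growth phase can be sketched as follows, assuming the trade-off function $f(i, j) = d_{\mathrm{spatial}}(i, j) / (d_G(i, j) + 1)^r$, a connected graph stored as a dictionary of neighbor sets, and node coordinates in the unit square. The names `hop_distances` and `best_redundant_link` are hypothetical, and the initialization phase (minimum spanning tree plus extra links) is not shown.

```python
import math
from collections import deque


def hop_distances(adj, source):
    """Breadth-first search: shortest path length d_G(source, j) for every j."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def best_redundant_link(adj, pos, i, r):
    """Node j, not adjacent to i, minimizing the assumed trade-off function
    f(i, j) = d_spatial(i, j) / (d_G(i, j) + 1) ** r.

    The graph is assumed connected, as after the spanning-tree initialization.
    """
    dist = hop_distances(adj, i)
    candidates = [j for j in adj if j != i and j not in adj[i]]
    return min(candidates,
               key=lambda j: math.dist(pos[i], pos[j]) / (dist[j] + 1) ** r)


# A chain 0-1-2-3 bent so that node 2 is close to node 0 in space,
# while node 3 is farther away in space and in the network.
adj = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
pos = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (1.0, 1.0), 3: (0.0, 3.0)}
print(best_redundant_link(adj, pos, 0, r=0))  # 2: shortest line wins
print(best_redundant_link(adj, pos, 0, r=3))  # 3: longer loop wins
```

Small $r$ favors the spatially close node, whereas large $r$ favors the node that is farther away in the network and therefore closes a longer loop.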

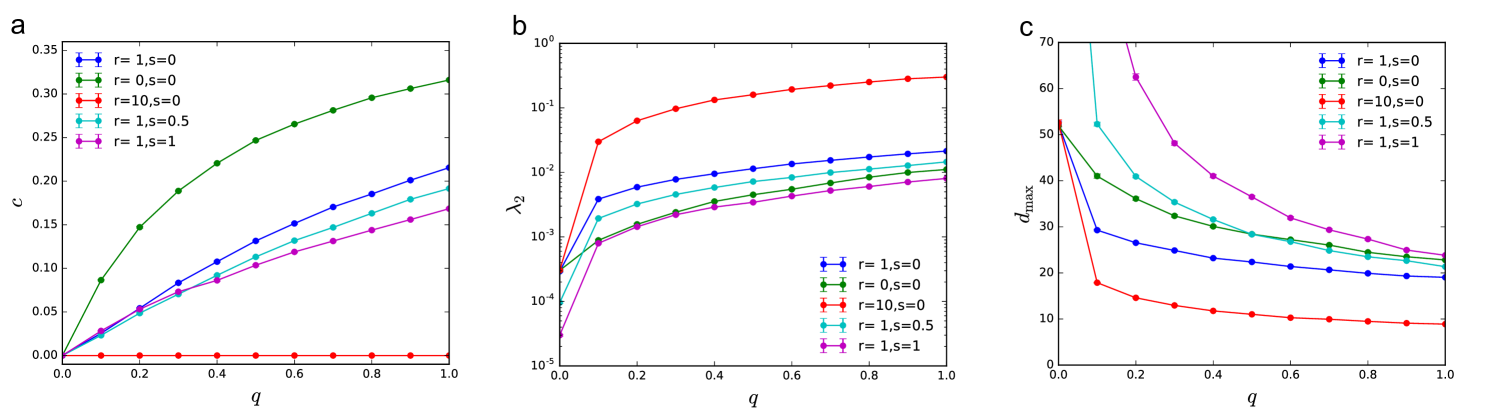

Changing the parameters $p$, $r$, and $s$ allows us to systematically explore how they affect the structure of these model power grids (Fig. 4): $p$ controls the average degree and adds redundancy to the network; $r$ controls how loops are formed, where small $r$ favors short-distance connections, leading to high $C$ and low $\lambda_2$, while large $r$ favors long loops, leading to low $C$ and high $\lambda_2$; and $s$ increases typical distances in the network, lowering both $C$ and $\lambda_2$.

Comparing recovery percolation and td-NDP

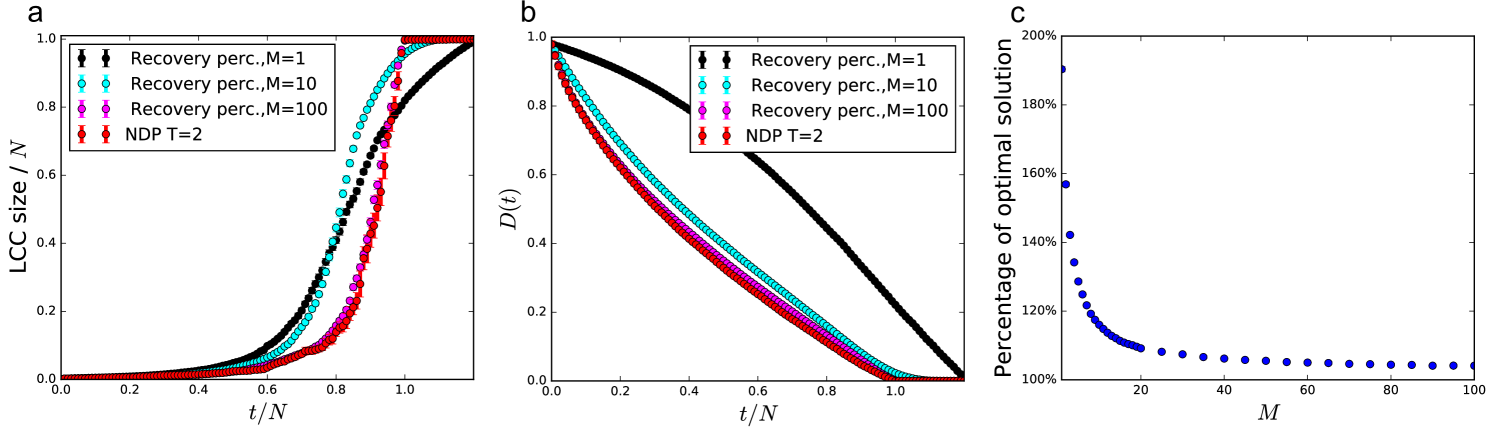

We first consider a set of parameters that yield typical topologies and compare the performance of recovery percolation to that of the locally optimal td-NDP recovery. We choose the parameters to create networks similar to the Western US grid, following the specifications of Ref. [32]. For the td-NDP analysis, we reduce the time window from the length $T$ used in the Shelby County model down to $T = 2$ for tractability, since our synthetic networks are much larger (increasing $T$ causes an exponential increase in complexity). While this results in a suboptimal solution, it still considers future timesteps, a dimension not present in percolation models. Figure 5a shows the growth of the LCC for the td-NDP process and for recovery percolation over a range of $m$ values. For recovery percolation we observe behavior similar to that seen on complete networks: as $m$ is increased, the growth of the LCC is suppressed and the formation of large-scale connectivity is delayed, and once it forms, it grows more rapidly. For large $m$, recovery percolation closely resembles the td-NDP recovery in terms of LCC formation.

As the total commodity deficit $D$ is the dominant cost factor in the recovery of infrastructure networks, it is the most important metric of network recoverability, beyond the size of the LCC. Figure 5b shows the reduction of $D$ throughout the recovery process. As $m$ increases, we see a closer fit with td-NDP, especially in the more expensive early stages of recovery. Surprisingly, even small values of $m$ approximate the total commodity deficit quite well, which is significantly less computationally intensive than td-NDP or the deterministic version of recovery percolation ($m = |E'|$).

To better understand how the choice of $m$ affects the quality of recovery percolation, we calculate the total cost $C$, defined here as the area under the $D(t)$ curve (i.e., $C = \sum_t D(t)$), as a function of $m$. Figure 5c shows that $C(m)$ rapidly approaches $C^*$, its value at $m = |E'|$. For this particular case, considering only a small fraction of the edges at each timestep is enough to get close to the optimal cost.

It is worth highlighting that recovery percolation captures the essential properties of the td-NDP process for large $m$, even though recovery percolation only considers the commodity deficit, while td-NDP takes into account details such as heterogeneous repair costs of individual power lines and operational costs, solves network flows, and selects optimal recovery actions considering two timesteps.

Effect of network structure on recovery percolation

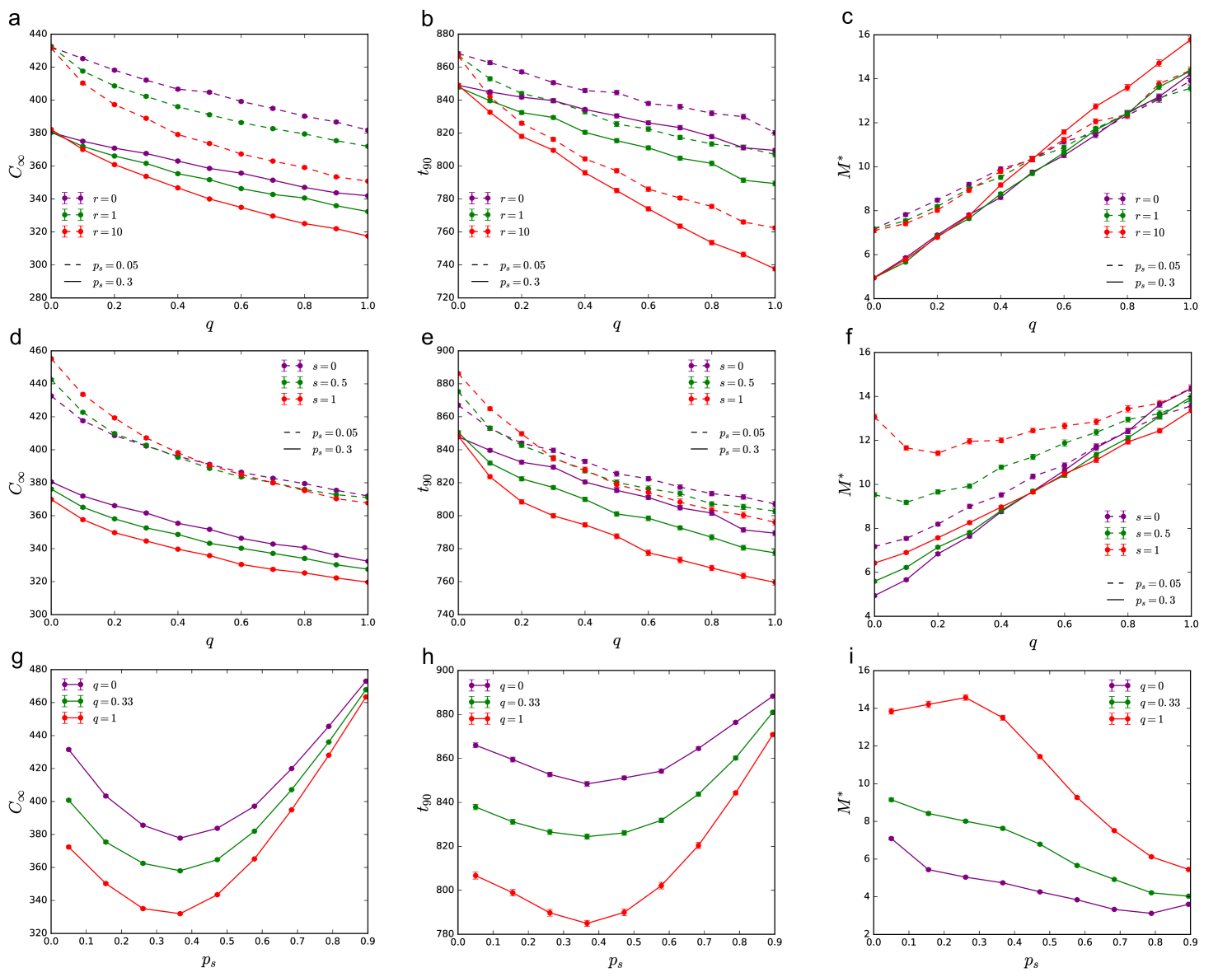

Recovery percolation together with the synthetic power grid networks provides a stylized model for extracting key network features that affect the efficiency of the restoration process. To this end, we systematically investigate how typical structural features affect the following quantities:

1. Total optimal cost of recovery $C^*$, the minimum cost obtainable with recovery percolation ($m = |E'|$).
2. Time to recovery $\tau$, the number of timesteps needed to reduce the total commodity deficit by 90%.
3. Characteristic $m^*$, which captures the approximability of the process. It is defined as the smallest value of $m$ for which $C(m)$ is within a given tolerance of $C^*$.
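The three quantities above can be computed from simulated deficit trajectories with a few helper functions. This is a sketch: the names are hypothetical, `deficit_history[t - 1]` is assumed to hold the total commodity deficit $D$ after $t$ repairs, and the relative tolerance `tol` used to define $m^*$ is a free, hypothetical parameter.

```python
def total_cost(deficit_history):
    """C: area under the D(t) curve, i.e. the sum of D over all timesteps."""
    return float(sum(deficit_history))


def recovery_time(deficit_history, initial_deficit, reduction=0.9):
    """tau: first timestep at which D(t) has fallen by `reduction` (90%)."""
    target = (1.0 - reduction) * initial_deficit
    for t, D in enumerate(deficit_history, start=1):
        if D <= target:
            return t
    return None  # the target reduction is never reached


def characteristic_m(cost_by_m, optimal_cost, tol):
    """m*: smallest sampled m with C(m) <= (1 + tol) * C*.

    cost_by_m maps each simulated m to its total cost C(m); tol is a
    hypothetical relative tolerance chosen by the user.
    """
    for m in sorted(cost_by_m):
        if cost_by_m[m] <= (1.0 + tol) * optimal_cost:
            return m
    return None


history = [0.8, 0.5, 0.05, 0.0]
print(total_cost(history))          # about 1.35
print(recovery_time(history, 1.0))  # 3
print(characteristic_m({1: 2.0, 2: 1.5, 4: 1.2, 8: 1.1}, 1.1, 0.1))  # 4
```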

Simulations show that the redundancy parameter $p$, which controls the average degree, has the strongest effect (Figs. 6a-c). Increasing redundancy lowers both the optimal total cost $C^*$ and the recovery time $\tau$; however, it increases $m^*$, meaning that more edges need to be sampled to approximate the optimal solution. This observation is robust to the choice of the other parameters. Redundancy increases the number of possible ways to reconnect the network, allowing less costly reconstruction strategies, but it also means that more paths must be explored to pick out the optimal one. The effect of $r$ is more subtle: we find that long-range shortcuts (large $r$) further decrease $C^*$ and $\tau$, while short cycles (small $r$) have the opposite effect. The value of $r$ has little effect on $m^*$.

The effect of line splitting $s$ depends on both the fraction of suppliers $\rho$ and the redundancy $p$ (Figs. 6d-f). For centralized supply (low $\rho$), we find that in the case of low redundancy, $s$ increases the cost $C^*$ and the recovery time $\tau$, while in the case of high redundancy, $s$ has the opposite effect, reducing $C^*$ and $\tau$. Independent of the value of $p$, the characteristic $m^*$ is significantly increased. For distributed supply (high $\rho$), we find that both $C^*$ and $\tau$ are increased by $s$ independently of the value of $p$, whereas $m^*$ is increased by $s$ for high $p$ and decreased for low $p$.

Finally, the fraction of suppliers $\rho$ also strongly influences the recovery process (Figs. 6g-i). The total optimal cost $C^*$ and the recovery time $\tau$ are high for very centralized (low $\rho$) and very distributed (high $\rho$) supply, with a minimum in between. If demand and supply follow the same distribution, the roles of suppliers and consumers are symmetric and the minimum is at $\rho = 1/2$. For our choice, the demand is more heterogeneously distributed than the supply, which shifts the location of this minimum. Increasing $\rho$ also makes the optimal solution easier to approximate, i.e., $m^*$ decreases with increasing $\rho$ (with some exceptions, see Figs. 6g-i).

Overall, we find that high structural redundancy reduces the optimal cost and time of recovery; however, more edges need to be sampled to benefit from this reduction. Long-range shortcuts in the network further reduce the cost without significantly increasing $m^*$. Distributed supply is also beneficial, reducing both cost and recovery time, and, depending on the level of redundancy, it may also improve approximability.

Discussion

We investigated the problem of minimum-cost reconstruction of critical infrastructure systems after catastrophic events. We started by analyzing realistic recovery strategies for a small-scale case study, the power grid of Shelby County, TN. We identified the penalty incurred for over- and undersupply of commodities as the main contribution to the cost, outranking operational and repair costs by orders of magnitude in the initial periods of recovery. Motivated by this observation, we introduced the recovery percolation model, a competitive percolation model that, in addition to network structure, also takes into account the demand and supply associated with each node. The advantage of our stylized model is that it is computationally tractable and easy to interpret compared to the complex optimization problems studied in the civil engineering literature, while adequately reproducing important features of realistic recovery processes. This allows us to identify underlying mechanisms of the recovery process. For example, we showed that component size anti-correlates with unsatisfied demand, which suppresses the emergence of large-scale connectivity through a process analogous to explosive percolation. Such suppression of large-scale connectivity can in fact be observed in real recovery events [18]. The model also allowed us to systematically investigate the effect of typical network characteristics on the efficiency of the recovery process using synthetic power grids.

The computational complexity of identifying actionable reconstruction strategies is an open issue, especially for interdependent and decentralized recovery scenarios, where systems are larger and the optimization problem must be solved numerous times [22, 30, 35]. Our stylized model is efficient but still ignores system-specific details. Similar to td-NDP, these strategies provide scenarios that may be useful for developing recovery-operator-based approaches to mathematically model the dynamics of recovery and for enabling data-driven control approaches [36]. Further work is needed to extend our model to the simultaneous recovery of multiple critical infrastructure systems, explicitly taking into account the interdependencies between them. Competitive percolation strategies in general can provide opportunities for modeling real-world processes. For instance, beyond this application to recovery, recent work applies competitive percolation strategies to suppress the outbreak of epidemics via targeted immunization [37].

Optimization problem (2), the td-NDP: minimize the total reconstruction cost (2a), subject to constraints (2b)–(2o).

Methods

Time-dependent NDP

Here, we define our benchmark model for network recovery: the time-dependent network design problem (td-NDP). Our version follows the more general formulation developed by González et al. [22, 23, 30]. The td-NDP takes a graph $G=(N,A)$, where $N$ is the set of nodes and $A$ is the set of edges connecting them. At the beginning of the recovery process, the td-NDP uses the destroyed graph $G'=(N',A')$, where $N' \subseteq N$ and $A' \subseteq A$ represent the nodes and edges, respectively, that are not functioning. The objective function (cf. Equation (2a)) minimizes the total reconstruction cost over a given time domain $\mathcal{T}$, with $t \in \mathcal{T}$. This cost includes the cost to repair nodes, $q_i^t$, the cost to repair edges, $f_{ij}^t$, the cost of flow on each edge, $c_{ij}^t$, and the oversupply and undersupply penalties for each node, $M_i^+$ and $M_i^-$. These costs usually depend on multiple factors, such as the level of damage, the type and size of the components to be restored, their geographical accessibility, the amount of labor and resources required, and the social vulnerability of the affected areas, among others [22, 38, 39]. To keep track of demand satisfaction, each node also has a supply capacity (demand if negative), $b_i$. In the most general formalization of the problem, node supply can depend on time, $b_i^t$, but in this paper we only consider constant values. The variables $\delta_i^+$ ($\delta_i^-$) account for the oversupply (undersupply) of node $i$. We refer to the sum of the absolute values of oversupply and undersupply, $|\delta_i^+| + |\delta_i^-|$, as the commodity deficit of node $i$. The td-NDP includes as decision variables the amount of flow on each edge, $x_{ij}^t$, whether or not a node $i$ [edge $(i,j)$] is chosen to be recovered at timestep $t$, $\tilde{w}_i^t$ ($\tilde{y}_{ij}^t$), and whether or not a node $i$ [edge $(i,j)$] is functional at timestep $t$, $w_i^t$ ($y_{ij}^t$). Constraints (2b)–(2o) ensure that flow is conserved and that only recovered, functional nodes can produce or consume flow.
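To make the cost terms concrete, the following sketch evaluates an objective of the (2a) type for a fixed, hand-written recovery schedule: repair costs for nodes and edges chosen at each timestep, flow costs, and oversupply/undersupply penalties. It is a hedged illustration, not the td-NDP solver. Assumptions: costs are time-invariant, the deficits are read off flow conservation as out-flow minus in-flow equals supply minus oversupply plus undersupply, and feasibility (capacities, which components are functional) is not checked. All names and numbers are hypothetical.

```python
from collections import defaultdict


def commodity_deficits(supply, flows):
    """Per-node (oversupply, undersupply) implied by flow conservation.

    supply: node -> b_i (positive = supply, negative = demand)
    flows:  (i, j) -> x_ij >= 0
    With out_i - in_i = b_i - over_i + under_i, the residual
    r_i = b_i - (out_i - in_i) splits into over_i = max(r_i, 0)
    and under_i = max(-r_i, 0).
    """
    net_out = defaultdict(float)
    for (i, j), x in flows.items():
        net_out[i] += x
        net_out[j] -= x
    deficits = {}
    for i, b in supply.items():
        r = b - net_out[i]
        deficits[i] = (max(r, 0.0), max(-r, 0.0))
    return deficits


def total_cost(schedule, supply, q, f, c, m_plus, m_minus):
    """Sum repair, flow, and penalty costs over all timesteps of `schedule`.

    Each timestep is a dict with keys "repair_nodes" and "repair_edges"
    (components whose repair is chosen at that step) and "flows".
    """
    cost = 0.0
    for step in schedule:
        cost += sum(q[i] for i in step["repair_nodes"])
        cost += sum(f[e] for e in step["repair_edges"])
        cost += sum(c[e] * x for e, x in step["flows"].items())
        for i, (over, under) in commodity_deficits(supply, step["flows"]).items():
            cost += m_plus[i] * over + m_minus[i] * under
    return cost


# Hypothetical toy instance: one generator "g" feeding two loads "a" and "b".
supply = {"g": 10.0, "a": -6.0, "b": -4.0}
q = {"a": 5.0}
f = {("g", "a"): 3.0, ("g", "b"): 3.0}
c = {("g", "a"): 0.5, ("g", "b"): 0.5}
m_plus = {i: 1.0 for i in supply}
m_minus = {i: 100.0 for i in supply}
schedule = [
    {"repair_nodes": {"a"}, "repair_edges": set(), "flows": {}},
    {"repair_nodes": set(), "repair_edges": {("g", "a")}, "flows": {("g", "a"): 6.0}},
    {"repair_nodes": set(), "repair_edges": {("g", "b")},
     "flows": {("g", "a"): 6.0, ("g", "b"): 4.0}},
]
print(total_cost(schedule, supply, q, f, c, m_plus, m_minus))  # prints 1433.0
```

In this invented example, undersupply penalties account for most of the cost in the first timestep, which is the kind of imbalance the penalty terms are meant to capture.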

The td-NDP formulation is a mixed-integer program, which has been shown to be NP-hard in general (and becomes exponentially harder as the problem size grows). The numbers of variables and constraints also grow with the size of the input graph. For many problems of reasonable size, computing a globally optimal solution (i.e., one in which the optimization window covers the entire recovery horizon) is intractable. Therefore, heuristics restrict the length of the optimization window by dividing the total recovery time into smaller windows and finding locally optimal solutions within each window [22]. Such heuristics have been shown to find solutions very close to the optimum; however, their computational cost remains relatively high because each window still requires solving a mixed-integer program.
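The windowing idea can be illustrated on a deliberately simplified stand-in for the td-NDP. Assume a single repair crew restores damaged components one at a time; component `j` needs `duration[j]` timesteps and incurs a penalty `weight[j]` per timestep until restored. This is the classic single-machine weighted completion time problem, not the td-NDP itself, and brute-force enumeration plays the role of the mixed-integer solver. Windows here contain a fixed number of components rather than a fixed number of timesteps. All names are hypothetical.

```python
from itertools import permutations


def weighted_completion_cost(order, weight, duration, start=0):
    """Sum of weight[j] * completion_time[j] when components are repaired in `order`."""
    t, cost = start, 0
    for j in order:
        t += duration[j]
        cost += weight[j] * t
    return cost


def exact_schedule(jobs, weight, duration):
    """Global optimum by brute force; feasible only for a handful of components."""
    return list(min(permutations(jobs),
                    key=lambda o: weighted_completion_cost(o, weight, duration)))


def window_cost(prefix, remaining, t, weight, duration):
    """Cost incurred inside one window: penalties of components repaired in it
    plus penalties still accruing on the components left for later windows."""
    end = t + sum(duration[j] for j in prefix)
    rest = sum(weight[j] for j in remaining if j not in prefix)
    return weighted_completion_cost(prefix, weight, duration, start=t) + rest * end


def rolling_window_schedule(jobs, weight, duration, window):
    """Optimize `window` components at a time, commit them, and move on."""
    remaining, schedule, t = set(jobs), [], 0
    while remaining:
        k = min(window, len(remaining))
        best = min(permutations(sorted(remaining), k),
                   key=lambda p: window_cost(p, remaining, t, weight, duration))
        schedule.extend(best)
        remaining -= set(best)
        t += sum(duration[j] for j in best)
    return schedule


weight = {"a": 3, "b": 1, "c": 4, "d": 2, "e": 5}    # penalty per timestep while damaged
duration = {"a": 2, "b": 1, "c": 3, "d": 2, "e": 4}  # repair time in timesteps
jobs = sorted(weight)
for w in (1, 2, len(jobs)):
    plan = rolling_window_schedule(jobs, weight, duration, w)
    print(w, plan, weighted_completion_cost(plan, weight, duration))
print("exact", weighted_completion_cost(exact_schedule(jobs, weight, duration), weight, duration))
```

A window spanning all components reproduces the global optimum, while shorter windows trade solution quality for smaller subproblems; in this toy, a window of one component ignores the weights entirely and simply picks the shortest remaining repair.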

Supply and demand distribution

For our computational experiments, we generate our demand distribution following the load distribution of the European power grid [39]. This dataset was chosen for its large system size and high resolution. Our goal is not to identify the true analytic form of the load distribution, but to generate statistically similar samples through bootstrapping. We found that an exponentiated Weibull distribution, with cumulative distribution function $F(x) = \left[1 - e^{-(x/\lambda)^{k}}\right]^{\alpha}$ for $x \ge 0$, approximates the features of the demand distribution well. Suppliers' capacities are uniformly distributed so as to balance the total demand. We also take the ratio of suppliers to consumers from this dataset.
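A minimal sketch of this sampling step, assuming inverse-CDF sampling from the exponentiated Weibull form $F(x) = [1 - e^{-(x/\lambda)^k}]^{\alpha}$, which gives $x = \lambda\,[-\ln(1 - u^{1/\alpha})]^{1/k}$ for $u$ uniform on $[0,1)$. The parameter values (`k`, `lam`, `alpha`) and the supplier fraction used below are placeholders, not the fitted values from the European grid data, and "uniformly distributed" supplier capacity is read here as an equal share of the total demand for each supplier; both are assumptions. Function names are hypothetical.

```python
import math
import random


def sample_exponweib(n, k, lam, alpha, rng):
    """Inverse-CDF sampling from F(x) = [1 - exp(-(x/lam)**k)]**alpha, x >= 0."""
    samples = []
    for _ in range(n):
        u = rng.random()  # uniform on [0, 1)
        y = -math.log(1.0 - u ** (1.0 / alpha))
        samples.append(lam * y ** (1.0 / k))
    return samples


def synthetic_supply_demand(n_nodes, supplier_fraction, k, lam, alpha, seed=0):
    """Return node -> b_i (negative = demand, positive = supply) with balanced totals."""
    rng = random.Random(seed)
    n_sup = max(1, round(supplier_fraction * n_nodes))
    nodes = list(range(n_nodes))
    rng.shuffle(nodes)
    suppliers, consumers = nodes[:n_sup], nodes[n_sup:]
    demands = sample_exponweib(len(consumers), k, lam, alpha, rng)
    b = {i: -d for i, d in zip(consumers, demands)}
    # Assumption: total demand is split evenly across suppliers, so supply balances demand.
    share = sum(demands) / n_sup
    b.update({i: share for i in suppliers})
    return b


# Placeholder parameters, chosen only for illustration.
b = synthetic_supply_demand(1000, 0.2, k=1.5, lam=1.0, alpha=2.0, seed=42)
print(sum(v > 0 for v in b.values()), sum(b.values()))
```

Drawing from the fitted parametric form in this way amounts to a parametric bootstrap; resampling the observed loads directly would be the nonparametric alternative.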

References

- [1] Albert, R., Jeong, H. & Barabási, A.-L. Error and attack tolerance of complex networks. Nature 406, 378–382 (2000).

- [2] Cohen, R., Erez, K., Ben-Avraham, D. & Havlin, S. Resilience of the internet to random breakdowns. Physical Review Letters 85, 4626 (2000).

- [3] Motter, A. E. & Lai, Y.-C. Cascade-based attacks on complex networks. Physical Review E 66, 065102 (2002).

- [4] Li, D., Jiang, Y., Kang, R. & Havlin, S. Spatial correlation analysis of cascading failures: Congestions and blackouts. Scientific Reports 4 (2014).

- [5] Zhao, J., Li, D., Sanhedrai, H., Cohen, R. & Havlin, S. Spatio-temporal propagation of cascading overload failures in spatially embedded networks. Nature Communications 7 (2016).

- [6] Zhong, J. Restoration of interdependent network against cascading overload failure. Physica A: Statistical Mechanics and its Applications 514, 884–891 (2018).

- [7] Latora, V. & Marchiori, M. Efficient behavior of small-world networks. Physical Review Letters 87, 198701 (2001).

- [8] Ash, J. & Newth, D. Optimizing complex networks for resilience against cascading failure. Physica A: Statistical Mechanics and its Applications 380, 673–683 (2007).

- [9] Holme, P., Kim, B. J., Yoon, C. N. & Han, S. K. Attack vulnerability of complex networks. Physical Review E 65, 056109 (2002).

- [10] Majdandzic, A. et al. Spontaneous recovery in dynamical networks. Nature Physics 10, 34 (2014).

- [11] Hu, F., Yeung, C. H., Yang, S., Wang, W. & Zeng, A. Recovery of infrastructure networks after localised attacks. Scientific Reports 6 (2016).

- [12] Shang, Y. Localized recovery of complex networks against failure. Scientific Reports 6 (2016).

- [13] Di Muro, M., La Rocca, C., Stanley, H., Havlin, S. & Braunstein, L. Recovery of interdependent networks. Scientific Reports 6 (2016).

- [14] Quattrociocchi, W., Caldarelli, G. & Scala, A. Self-healing networks: Redundancy and structure. PLOS One 9 (2014).

- [15] Panteli, M., Trakas, D. N., Mancarella, P. & Hatziargyriou, N. D. Boosting the power grid resilience to extreme weather events using defensive islanding. IEEE Transactions on Smart Grid 7, 2913–2922 (2016).

- [16] Mureddu, M., Caldarelli, G., Damiano, A., Scala, A. & Meyer-Ortmanns, H. Islanding the power grid on the transmission level: less connections for more security. Scientific Reports 6, 34797 (2016).

- [17] National Research Council. Terrorism and the electric power delivery system (National Academies Press, 2012).

- [18] Rudnick, H., Mocarquer, S., Andrade, E., Vuchetich, E. & Miquel, P. Disaster management. IEEE Power and Energy Magazine 9, 37–45 (2011).

- [19] Johnson, D., Lenstra, J. & Kan, A. The complexity of the network design problem. Networks 8, 279–285 (1978).

- [20] Balakrishnan, A., Magnanti, T. L. & Wong, R. T. A dual-ascent procedure for large-scale uncapacitated network design. Operations Research 37, 716–740 (1989).

- [21] Nurre, S. G., Cavdaroglu, B., Mitchell, J. E., Sharkey, T. C. & Wallace, W. A. Restoring infrastructure systems: An integrated network design and scheduling (INDS) problem. European Journal of Operational Research 223, 794–806 (2012).

- [22] González, A. D., Dueñas-Osorio, L., Sánchez-Silva, M. & Medaglia, A. L. The interdependent network design problem for optimal infrastructure system restoration. Computer-Aided Civil and Infrastructure Engineering 31, 334–350 (2016).

- [23] González, A. D., Dueñas-Osorio, L., Sánchez-Silva, M. & Medaglia, A. L. The time-dependent interdependent network design problem (TD-INDP) and the evaluation of multi-system recovery strategies in polynomial time. In The 6th Asian-Pacific Symposium on Structural Reliability and its Applications, 544–550 (2016).

- [24] Poorzahedy, H. & Rouhani, O. M. Hybrid meta-heuristic algorithms for solving network design problem. European Journal of Operational Research 182, 578–596 (2007).

- [25] Gallo, M., D’Acierno, L. & Montella, B. A meta-heuristic approach for solving the urban network design problem. European Journal of Operational Research 201, 144–157 (2010).

- [26] Li, D., Zhang, Q., Zio, E., Havlin, S. & Kang, R. Network reliability analysis based on percolation theory. Reliability Engineering and System Safety 142, 556–562 (2015).

- [27] Krapivsky, P. L., Redner, S. & Ben-Naim, E. A kinetic view of statistical physics (Cambridge University Press, 2010).

- [28] Achlioptas, D., D’Souza, R. M. & Spencer, J. Explosive percolation in random networks. Science 323, 1453–1455 (2009).

- [29] D’Souza, R. M. & Nagler, J. Anomalous critical and supercritical phenomena in explosive percolation. Nature Physics 11, 531–538 (2015).

- [30] González, A. D., Chapman, A., Dueñas-Osorio, L., Mesbahi, M. & D’Souza, R. M. Efficient infrastructure restoration strategies using the recovery operator. Computer-Aided Civil and Infrastructure Engineering 32, 991–1006 (2017).

- [31] Wang, Z., Scaglione, A. & Thomas, R. J. Generating statistically correct random topologies for testing smart grid communication and control networks. IEEE Transactions on Smart Grid 1, 28–39 (2010).

- [32] Schultz, P., Heitzig, J. & Kurths, J. A random growth model for power grids and other spatially embedded infrastructure networks. The European Physical Journal Special Topics 223, 2593–2610 (2014).

- [33] Li, J., Dueñas-Osorio, L., Chen, C., Berryhill, B. & Yazdani, A. Characterizing the topological and controllability features of U.S. power transmission networks. Physica A: Statistical Mechanics and its Applications 453, 84–98 (2016).

- [34] Van Mieghem, P. Graph spectra for complex networks (Cambridge University Press, 2010).

- [35] Smith, A. M., González, A. D., Dueñas-Osorio, L. & D’Souza, R. M. Interdependent network recovery games. Risk Analysis (2017).

- [36] Chapman, A., González, A. D., Mesbahi, M., Dueñas-Osorio, L. & D’Souza, R. M. Data-guided control: Clustering, graph products, and decentralized control. In Decision and Control (CDC), 2017 IEEE 56th Annual Conference on, 493–498 (IEEE, 2017).

- [37] Clusella, P., Grassberger, P., Pérez-Reche, F. J. & Politi, A. Immunization and targeted destruction of networks using explosive percolation. Physical Review Letters 117, 208301 (2016).

- [38] FEMA. Multi-hazard Loss Estimation Methodology, Earthquake Model - Technical Manual, Hazus - MH 2.1. Tech. Rep., Washington D.C. (2013).

- [39] Hutcheon, N. & Bialek, J. W. Updated and validated power flow model of the main continental European transmission network. In PowerTech (POWERTECH), 1–5 (IEEE, 2013).

Acknowledgements

We gratefully acknowledge support from the U.S. Army Research Laboratory and the U.S. Army Research Office under MURI award number W911NF-13-1-0340, and from DARPA award W911NF-17-1-0077.

Author contributions statement

A.S. was responsible for experimental design and analysis; M.P. was responsible for problem scoping and providing theoretical insights; M.R. provided guidance for power grid analysis and network formation; A.G. and L.D.O. contributed recovery and optimization algorithms and background; R.D. provided percolation and network science guidance and expertise. All authors contributed to the writing of this article.

Competing interests

The author(s) declare no competing interests.