Complexity-aware Large Scale Origin-Destination Network Generation via Diffusion Model

Abstract.

The Origin-Destination (OD) networks provide an estimation of the flow of people from every region to others in the city, which is an important research topic in transportation, urban simulation, etc. Given structural regional urban features, generating the OD network has become increasingly appealing to many researchers from diverse domains. However, existing works are limited in independent generation of each OD pairs, i.e., flow of people from one region to another, overlooking the relations within the overall network. In this paper, we instead propose to generate the OD network, and design a graph denoising diffusion method to learn the conditional joint probability distribution of the nodes and edges within the OD network given city characteristics at region level. To overcome the learning difficulty of the OD networks covering over thousands of regions, we decompose the original one-shot generative modeling of the diffusion model into two cascaded stages, corresponding to the generation of network topology and the weights of edges, respectively. To further reproduce important network properties contained in the city-wide OD network, we design an elaborated graph denoising network structure including a node property augmentation module and a graph transformer backbone. Empirical experiments on data collected in three large US cities have verified that our method can generate OD matrices for new cities with network statistics remarkably similar with the ground truth, further achieving superior outperformance over competitive baselines in terms of the generation realism.

1. Introduction

The ability to model population mobility for traffic control (Arentze and Timmermans, 2004), urban planning (Vuchic, 2002) and resource scheduling (Ruan et al., 2020; Caminha and Furtado, 2017) has critical impacts on everyday functioning and sustainable development of our cities. The Origin-Destination (OD) matrix is a commonly used way of structuring mobility flow information in a city, which includes the number of travelers between every two regions. However, it costs a lot to gather the OD matrix of a new city through traditional travel surveys. This has led to studies that generate the OD matrices for new cities without any flow information based on city characteristics including geographic structure as well as the demographics and urban functions. Unlike OD matrix forecasting (Wang et al., 2019; Shi et al., 2020), this problem of city-wide OD matrix generation aims to generate the OD matrix for a city without any historical flow information.

Existing works for the OD matrix generation can be divided into two categories, i.e., physics-based methods (Zipf, 1946; Simini et al., 2012; Barbosa et al., 2018) and machine learning-based methods (Robinson and Dilkina, 2018; Pourebrahim et al., 2019; Liu et al., 2020; Simini et al., 2021). The physics-based methods draw an analogy between population and physical phenomena (Zipf, 1946; Simini et al., 2012) and model the flow of people between two regions through equations with several parameters. The machine learning-based methods follow the data-driven paradigm and utilize complex models with many parameters trained on a large amount of data to model the nonlinear dependencies between the mobility flow and features of the origin as well as the destination. Because of the complexity of population mobility, physics-based methods have relatively poor performance, while machine learning-based methods have recently achieved better results and wider applications (Adnan et al., 2016; Hörl et al., 2021; Zeng et al., 2022; Karimi et al., 2019) aided by the sophisticated model structure.

Despite their capability of incorporating complex urban factors related to flow generation, existing works neglect the relations between elements in the city-wide OD matrix and instead generate each element, i.e., mobility flow, in an independent manner. In this regard, previous studies (Yan et al., 2017; Saberi et al., 2017) have investigated urban travel demand patterns in a network perspective and empirically observed the scaling behaviour, i.e., power laws, in distributions of node flux and link weight, etc. This important network property of the city-wide OD matrix (Balcan et al., 2009; Barthélemy, 2011; Yan et al., 2017) is vital for its generation realism and thus calls for a graph generative modeling solution that has been untouched in previous works.

Recently, deep generative models (Kingma and Welling, 2013; Goodfellow et al., 2020; Ho et al., 2020; Song and Ermon, 2019) are proposed to model complex joint distribution of data. Among them, the best-performing work is diffusion models (Ho et al., 2020). Diffusion models approximate complex data distributions by gradually removing small noise from simple distributions (Ho et al., 2020). The elaborate denoising process allows diffusion models to effectively fit complex distributions and generate high quality data. Therefore, we propose to solve the problem of city-wide OD matrix generation based on city characteristics via graph denoising diffusion methods.

However, it is hard to directly model joint distribution of the city-wide OD matrix via graph diffusion methods and generate the OD matrix for a new city. There are two challenges as follows.

-

•

How to overcome the difficulty of generating the city-wide OD matrix covering thousands of regions? The city-wide OD matrix is equivalent to a mobility flow network with thousands of nodes, while existing deep generative graphic models mainly focus on the molecular structure (Niu et al., 2020; Haefeli et al., 2022; Jo et al., 2022; Vignac et al., 2022) and can only handle topologies with tens or hundreds of nodes. This means building continuous connections at million-level, which greatly increases the complexity of the joint distribution.

-

•

How to achieve realistic reproduction of network properties contained in the mobility flow pattern in generation? The mobility flow network/OD matrix exhibits sparsity and scaling behaviours. People prefer to travel a short distance, which causes no flow between most regions. Moreover, the spatial distribution of mobility in a city appears highly heterogeneous with scaling behavior on node and edge level (Saberi et al., 2017; Yan et al., 2017). Maintaining these properties in generated city-wide OD matrices is a claim of realism, but very challenging.

Solving above two challenges calls for a decoupling of the topology and weights of the mobility flow network and locally graphic modeling from node and edge level in graph diffusion methods. We propose a cascaded graph denoising diffusion method for soling the problem of city-wide OD matrix generation (DiffODGen). To generate the city-wide OD matrix and overcome the first challenge, we dismember the generation process into two stages, and construct a pipeline comprised by two diffusion models to deal with sub-tasks in each stage. In the first stage, our proposed method determines the existence of flow, i.e., topology structure of the mobility flow network with a topology diffusion model. Then, the flow volumes of region pairs that have flows will be generated via the flow diffusion model. For better integration of the two stages, we design a collaborative training to eliminate the cascading errors. For maintaining network properties of sparsity and scaling behaviors, we utilize the discrete denoising process (Austin et al., 2021; Vignac et al., 2022) in the topology diffusion model and apply a graph transformer-based network parameterization to model the city-wide OD matrix from network perspective respectively. Besides, we specially design node properties augmentation modules in both diffusion models to enhance the denoising networks with the capability of fully modeling the scaling behaviors on node level.

In summary, the contributions can be summarized as follows.

-

•

We propose to leverage the idea of graph generative modeling in learning the joint distribution of mobility flows for generating city-wide OD matrices in new cities. To the best of our knowledge, we are the first to resolve this important urban computing problem of OD matrix generation within the diffusion model paradigm.

-

•

We design a cascaded graph denoising diffusion method and graph transformer network parameterization with a node properties augmentation module to generate city-wide OD matrix for new cities with the network properties retained.

-

•

Experiment results on two real-world dataset collected from two large US cities demonstrate the superiority of our DiffODGen over state-of-the-art baselines in terms of generating realistic city-wide OD matrices. Importantly, the generated OD matrix exhibits excellent network statistics similarities of sparsity and scaling behaviors in both node level and edge level with the real-world data.

2. RELATED WORK

In this section, we will give a comprehensive review of the related works on OD Matrix generation and recent achievements of diffusion models in the field of graph learning.

2.1. OD Flow Generation

Research related to OD matrix generation has a very long history, with a recent boom brought about by the rise of machine learning algorithms. The methods utilized in these works are mainly in two categories. The first kind of methods are inspired by classic physical laws. In 1946, Zipf (Zipf, 1946) introduced Newton’s physics law of Gravitation to model the mobility flow between two regions. The population of one region is considered as the mass while the flow between two regions is modeled as the universal gravitational force between objects. Simini et al. (Simini et al., 2012) compare the movement of people within urban space to the processes of emission and absorption of radiation in solid physics. Due to the limitation of only modeling the complex population mobility based on simple physical models, these physical methods perform poorly.

With the advances in machine learning, a growing spectrum of problems are being tackled in the data-driven paradigm, and population mobility modeling is no exception. Robinson et al. (Robinson and Dilkina, 2018) show that the tree-based machine learning methods perform much better than traditional physics-based methods, especially Gradient Based Regression Trees (GBRT). Pourebrahim et al. (Pourebrahim et al., 2019) compare popular machine learning models and conclude that random forest performs best. Simini et al. (Simini et al., 2021) introduce deep neural networks to enhance the gravity model with richer urban features, granting improved nonlinear modeling capabilities. Liu et al. (Liu et al., 2020) bring the graph neural networks into the domain of OD flow prediction, using multitask learning as a training strategy to learn embedding with stronger representational power to improve the prediction. However, these models all only consider the features of one OD pair while ignoring complicated dependencies between mobility flows. These models cannot model the network statistic properties of the city-wide mobility flow networks (Saberi et al., 2017).

2.2. Diffusion Models

Diffusion models, which consist of a diffusion (noise-adding) process without any learning parameters and a reverse denoising process converting the sampled noise into data following complicated distribution with the help of denoising networks, are a kind of recent emerging generative models (Ho et al., 2020; Song and Ermon, 2019; Nichol and Dhariwal, 2021; Song et al., 2020). Such models have outperformed other deep learning generative models such as Variational Auto-encoders (VAEs) (Kingma and Welling, 2013), Generative Adversarial Networks (GANs) (Goodfellow et al., 2020) etc in many domains such as computer visions (Ho et al., 2020; Saharia et al., 2022), audio generations (Kong et al., 2020; Tashiro et al., 2021) and the graph generation (Niu et al., 2020; Jo et al., 2022; Vignac et al., 2022).

The most relevant works are those that apply diffusion models to graph generation task (Niu et al., 2020; Jo et al., 2022; Vignac et al., 2022). Niu et al. (Niu et al., 2020) are the first to utilize score-based diffusion models which adopt continuous Gaussian noise to construct the diffusion process to generate the adjacency matrix with the capability of permutation invariance. Further, Jo et al. (Jo et al., 2022) explore the possibility of jointly generating graphs with node and edge features employing diffusion models. Recent works (Haefeli et al., 2022; Vignac et al., 2022) have explored the construction of discrete denoising diffusion models with multinomial noise, enabling the generated graphs to retain sparsity and improve the generation quality. Our work explores the feasibility of applying graph diffusion models to generate the city-wide OD matrices (mobility flow networks), where not only the volumes of the mobility flow are generated by denoising continuous noise, but also the sparsity and scaling properties of OD matrices (Saberi et al., 2017; Yan et al., 2017) are ensured by graph denoising diffusion models.

3. Preliminaries

In this section, we will list important notations and definitions. And a brief introduction to principles of diffusion models are presented.

3.1. Definitions and Problem Formulation

Definition 3.1.

Regions. The city is spatially partitioned into several non-overlapping regions denoted as , where is the number of regions. The different regions are located in different parts of the city and perform different urban functions, which are reflected through the urban characteristics , such as demographics and points of interests (POIs).

Definition 3.2.

OD flow. The OD flow denotes the directed mobility flow between a specific region pair that departs from the origin and moves to the destination .

Definition 3.3.

OD Matrix. The OD matrix includes the OD flows between every two regions in the city, where stands for the mobility flow from region to region .

Problem 1. OD Matrix Generation. Given the regional urban characteristics of the city , generate the whole picture of mobility flow of that city, i.e., the OD matrix .

The OD matrix of a city can also be regarded as a directed weighted network , where the nodes represent regions and the directed edges with weights represent mobility flows.

Definition 3.4.

Mobility Flow Network. The OD matrix in this paper has the same meaning as a mobility flow network , except that one is in terms of data organization and the other is from the network perspective. The element is equivalent to , where denotes the directed edge from node to node .

Definition 3.5.

Adjacency Matrix. A adjacency matrix is a 0-1 binary matrix, where means no edge from node to node , i.e., no mobility flow from region to region and is the opposite. This indicates whether there are edges between nodes in the mobility flow network.

3.2. Diffusion Models

The diffusion models, which consist of a diffusion process and a reverse denoising process, aim to learn the sophisticated data distribution by removing noise from simple distributions using a Markov process (Ho et al., 2020). Through the forward process , the model produces a series of noisy data as hidden states by adding a small noise based on the original raw data .

| (1) |

where is the diffusion steps and is variance schedule. When sampling data from learned distribution, we need a neural network to complete the reverse denoising process by predicting hidden states without by neural networks until the last step of the denoising process has been performed:

| (2) |

Different diffusion processes utilize different kinds of noises (Gaussian noise for continuous data (Ho et al., 2020) and multinomial noise for discrete categorical data (Austin et al., 2021)), depending on the specific scenario.

4. Method

In this section, we will detail the cascaded graph denoising diffusion method for city-wide OD matrix generation in new cities (DiffODGen). First, we will introduce the framework of the method and dive into concrete information about each part of it.

4.1. Cascaded Graph Denoising Diffusion

4.1.1. Overall Framework.

As shown in Figure 1, our method includes two stages, which can be treated as separating the city-wide OD matrix generation task into two steps. In the first stage, as we can see in the upper part of Figure 1, the method determines whether there is a mobility flow between regions , i.e., topology structure, with the topology diffusion model. Then, the region pairs without flow will be directly set to zero flow and discarded in the rest of the process. According to the sparsity of the OD matrix, this will greatly reduce the scale of city-wide OD matrix generation and thus solve the first challenge. In the second stage, we use a continuous denoising diffusion model to learn the joint probability distribution of OD flows between the remaining region pairs for generation.

The diffusion models in both the two stages are in the form of classifier-free conditional diffusion models (Ho and Salimans, 2022), where pairwise regional attributes are element-wise assigned to the OD flows between each two regions in the OD matrix. This modeling can make the generated OD matrix not only globally conform to the overall properties, but also retain the advantages of the pairwise predictive model by fully considering the features of OD pairs so that OD flows are also locally reasonable.

4.1.2. Collaborative Training.

A noteworthy issue is that combining the two diffusion models directly has the problem of cascading errors, i.e., the errors generated in the topology generation stage are completely transferred to the flow generation stage, which leads to an amplification of the overall instability. To solve this issue, we adopt the strategy of teacher-force learning to collaborate the training of networks and . The training process is based on data from the source city, where the true value of the OD matrix is known. Not the adjacency matrix generated is applied for training the network parameters in the flow diffusion model, but the union of generated and the ground truth instead, which is computed as follow,

| (3) |

where the denotes the logic operator of OR. then serves as a mask in the flow diffusion model, so that it focuses only on the elements in the OD matrix whose value are not zero. In this way, the flow diffusion model has the capability of tolerating errors arising in the topology diffusion model and remedy them by generating the missing zero flows during the topology generation, thus improving the overall robustness of the method.

4.2. Topology Diffusion Model

Next, we will give a introduction to the topology diffusion model, including discrete denoising process and network parameterization.

4.2.1. Discrete Denoising Process.

To avoid destroying the sparsity of the generated OD matrix, we utilize the reverse denoising process with discrete state spaces to generate the adjacency matrix in the topology diffusion model. We adopt the d3pm proposed by Austin et al (Austin et al., 2021), which works for graph generation task (Vignac et al., 2022), to approximate the data distribution with . In our approach, data , which follows a binary distribution, is encoded with one-hot encoding, where equals two, i.e., the number of classes (zero/non-zero flow). For the convenience of representation, we briefly refer to the element in adjacency matrix as in the following. The noise-addition in the diffusion process is implemented through the matrix product with a series of transition matrix ,

| (4) |

such that denotes the probability of state transiting from class to class . It is worth noting that the noisy data state at diffusion step can be directly obtained by closed-form (Austin et al., 2021) calculations,

| (5) |

where . The transition matrix applied in our method introduce the uniform noise perturbation, which is calculated as follows,

| (6) |

where means the matrix transposition and controls the degree of adding noise. Given , the reverse denoising process can also be computed in closed-form based on Bayes rule (Austin et al., 2021),

| (7) |

where means the element-wise product. Since is unknown, we construct neural networks to fit the probability of given noisy topology matrix and realize the denoising process with predicted when doing generation,

| (8) |

For generating the adjacency matrix based on specific city characteristics, we then extend the above denoising process into a conditional denoising process to model conditional probability . The conditional denoising process is as follows,

| (9) |

In the procedure of generation, a pure noise , which follows the uniform distribution, is first sampled. The data samples that follow the original data distribution are then recovered gradually from the by the iterative calculation of reverse denoising process described by Eq. 9, where is the trained neural networks. It should be emphasized that the whole process of recovering data through latent states is performed on a discrete space, which can fully preserve the sparsity of the OD matrix (Vignac et al., 2022).

4.2.2. Backbone of Network Parameterization.

For better considering the adjacency matrix, i.e., topology structure and fully modeling the network properties (Saberi et al., 2017), the network parameterization of topology diffusion model is designed to build with the graph transformer proposed by Dwivedi et al (Dwivedi and Bresson, 2020). Moreover, we make two improvements to the network, adapting it to classifier-free element-wise conditional schema and integrating a node properties augmentation module to enhance the ability of modeling the network properties of mobility flows (Saberi et al., 2017).

The backbone of the graph transformer receives two inputs, node features and edge features, as shown in Figure 2. The graph transformer consists of several layers. In each layer, all nodes form a position-insensitive sequence. The attention weights between each pair of nodes will be calculated through self-attention. It is worth noting that the calculation of attention weights used for information propagation between nodes also incorporates the influence of edge features. Each node updates its own features by weighted aggregation of neighbor information. Each edge updates itself by fusing its own features with the attention information between its end nodes. The calculation process of each layer in the graph transformer is as follows,

| (10) |

where means the hidden states of node after layers, means the hidden states of the edge from node to node after layers, , , , , and are the learnable parameters, denotes the concatenation, to means the number of attention heads. The output of each layer will be input to the next layer. After the last layer, the output of node features is dropped and the output of edge features is converted into a tensor of the same shape as by a fully-connected layer to predict , as shown in Figure 2.

4.2.3. Node Properties Augmentation for Topology.

Message-passing graph neural networks have a limitation of unable to capture some specific features (Xu et al., 2018; Morris et al., 2019), such as the weighted aggregation schema can hardly model the degree of nodes in a graph. However, the network properties of mobility flow are largely reflected in the region level, i.e., node level in mobility flow network (Saberi et al., 2017). Therefore, we augment the capability of our graph transformer with a node properties augmentation to model the node level flow characteristics by statistically counting the properties of nodes into node features based on the adjacency matrix. The statistic properties of nodes include, but are not limited to, the degree of the node and the centrality, etc. With the help of node properties augmentation, the network can comprehensively model the noisy topology structure from both node and edge level in each diffusion step.

4.2.4. Classifier-free Element-wise Conditions.

As shown in Figure 2, we make an improvement by introduce a CondEmb module on the neural network backbone to make it classifier-free element-wise conditional denoising networks, so that diffusion models can determine whether there is a mobility flow based on its characteristics of origin and destination. First, urban attributes for each region are processed by MLPs to become regional condition embeddings, which have the same dimension as the node features that will be input to the graph transformer, as shown in Figure 2. Then, regional condition embeddings are fused with node features and edge features respectively through cross attention as the final input of the transformer, where the node features are directly fused with the embeddings region by region, while the edge features are fused with the concatenation of the embeddings of origin and destination as well as the distance between them. In this way, each row and column in the adjacency matrix, and each element has corresponding spatial urban features as its unique conditions that affect its generation in the denoising process.

4.2.5. Training of Topology Diffusion Model.

The network introduced above is trained by reducing the cross entropy between the prediction and true value of each element to empower the denoising process represented by Eq. 9. The overall cross entropy loss of the neural networks is calculated as follows,

| (11) |

where LCE means cross entropy loss. The training algorithm of networks is shown as Algorithm 1. Once neural networks are trained, they can be employed to generate topology structure of mobility flow networks for better city-wide OD matrix generation.

-

•

Spatial structure of the training city:

-

•

Regional city characteristics:

4.3. Flow Diffusion Model

Given the adjacency matrix generated by the topology diffusion model, the mask that determines which region pairs the flows should be generated is calculated by Eq. 3. The mask is then used in the flow diffusion model to determine which elements of the OD matrix need flow generation. It is worth noting that since the generated adjacency matrix is not completely accurate, there are still zero flows that will be generated by the flow diffusion model.

4.3.1. Continous Denoising Diffusion Models.

Different from topology generation, we adopt Gaussian noise to construct the diffusion process since OD flows are continuous values. Since DDPM (Ho et al., 2020) was proposed, diffusion models in continuous latent spaces have been explored to a significant extent (Nichol and Dhariwal, 2021). In this part, the process of noise-addition diffusion of our diffusion models is performed on the image-like tensor, i.e., the OD matrix , where equals 1. Differently, the reverse denoising process is performed only at the position, where the elements equal to 1, in the mask , as shown in Figure1. Thanks to the sparsity of the OD matrix, although the OD matrix is large, our denoising process only focuses on the nonzero flow part, so we can effectively model it.

The diffusion process of the flow diffusion model is similar to the classical continuous diffusion models and has no parameters, which is shown in Eq. 1 from right to left. So we will not cover it repeatedly, but rather introduce the denoising process in detail. The computation of each denoising step is shown as follows,

| (12) |

where means the Gaussian distribution, is the noise schedule and .

4.3.2. Network Parameterization.

Similar to the topology diffusion model, we construct a conditional diffusion model by applying city characteristics as conditions to generate OD flows between regions. The network parameterization is also based on the graph transformer, except that we use the geo-contextual embedding learning methods proposed in GMEL (Liu et al., 2020) as the CondEmb module shown in Figure 2 to enhance our condition features representational capabilities. To be specific, urban attributes for each region are processed by two GATs (Veličković et al., 2017) to obtain the embeddings for each region as the origin and the destination, respectively. Then, region embeddings that are fused with the node features input into the graph transformer are composed of the origin embedding and the destination embedding of the region. The edge embeddings consist of the origin embedding of the origin and the destination embedding of the destination, as well as the distance between them. In addition, node properties augmentation is also used for flow generation to enhance the graph modeling capability from both node and edge level.

-

•

Spatial structure of the training city:

-

•

Regional city characteristics:

-

•

Mask from the topology diffusion model

4.3.3. Training of Flow Diffusion Model.

Ho et al. (Ho et al., 2020) show that the denoising process can be trained by predicting the added noise in the diffusion process at the corresponding time step, i.e., optimizing the neural networks through the following objective,

| (13) |

where denotes the noise added during the step in diffusion process and means the norm. The training algorithm is referred to Algorithm 2. The is used in collaborative training schema to reduce the effect of cascade errors of combining the two stages, whcih has been introduced in Sec. 4.1.2. Once the neural network is trained, it can be used to generate the OD flows for given topology of mobility flow network. First, a pure noise is sampled from the standard Gaussian noise . The noise is then sequentially predicted and removed from to obtain . is finally generated via denoising step iteratively. It is worth emphasizing that elements with a mask of 0 in are not involved in the training and generation process because of the mask .

5. Experiments

5.1. Experimental Setup

5.1.1. Data Description

| City | #regions | #commuters | #population | area(km2) | NZR |

|---|---|---|---|---|---|

| NYC | 1,296 | 1,694,884 | 3,004,606 | 451 | 24.9% |

| Cook | 1,319 | 1,776,489 | 1,974,181 | 4,230 | 25.2% |

| Seattle | 721 | 1,595,531 | 3,495,493 | 5,872 | 41.9% |

We choose three major US metropolises for our experiments. The cities are divided into regions at the census tract level. The basic statistic information on the cities is presented in Table 1. The data for each city includes two parts, city characteristics and the OD matrix, which are introduced as follows.

City Characteristics. City characteristics depict the geographic structure of an entire city and the spatially heterogeneous distribution of city functions. In detail, each region carries attributes about demographics and urban functions, which are portrayed through American Community Survey Data collected by the U.S. Census Bureau and POIs distribution from OpenStreetMap (OpenStreetMap contributors, 2017) respectively. Each region aggregates all the POIs therein to obtain a distribution over different categories. The distance between regions is determined in terms of the planar Euclidean distance between the centroids of the regions. Based on the above data processing, we extract features for each region, as a feature vector including demographic information by age, gender, income, etc. and the number of different categories of POIs, which are normally used for OD matrix generation as in previous works (Robinson and Dilkina, 2018; Pourebrahim et al., 2019; Liu et al., 2020; Simini et al., 2021).

Commuting OD Matrix. The OD matrices are constructed based on the commuting flow collected in Longitudinal Employer-Household Dynamics Origin-Destination Employment Statistics (LODES) dataset in 2018. The commuting flows are aggregated into the regions. Each element in the OD matrices records the number of workers who are resident in one region and employed in another.

5.1.2. Baselines.

We compare our proposed DiffODGen with the following six baselines that can be categorized into three categories.

The first category contains two traditional methods.

Gravity Model (GM) (Barbosa et al., 2018). Motivated by Newton’s law of Gravitation, GM assumes that the mobility flow is positively related to the populations of origin and destination, and negatively related to the distance between them.

Random Forest (RF) (Robinson and Dilkina, 2018; Pourebrahim et al., 2019). RF is a widely adopted tree-based machine learning model thanks to its robustness, achieving rather competitive performance in the task of OD flow generation.

The second category contains two state-of-the-art (SOTA) deep-learning methods developed for OD flow generation.

Deep Gravity Model (DGM) (Simini et al., 2021). DGM introduces deep neural networks into the modeling of gravity models to obtain flows by predicting the probability distribution to different regions when the outflow is given. We adapt the model to predict volumes of OD flow directly, making it to generate the OD matrix for new cities.

Geo-contextual Multitask Embedding Layer (GMEL) (Liu et al., 2020). GMEL uses graph neural networks (GNNs) to aggregate information from neighbors of each region (node) to capture its spatial features in a city (graph), which helps learn better-quality regional embeddings to achieve better prediction accuracy.

Finally, we additionally compare two deep generative models to check the validity of our design in terms of generating a realistic mobility flow network, i.e., the OD matrix.

NetGAN (Bojchevski et al., 2018). NetGAN is a GAN-based model that mimics real networks by generating random walking sequences with the same distribution as those sampled from the real networks. We adapt it to generate directed weighted networks, i.e., OD matrices.

Denoising Diffusion Probabilistic Model (DDPM) (Ho et al., 2020). We also adopt the naive diffusion model that directly learns to generate the OD matrix as a baseline. In this baseline, no distinguishing treatment is assigned to zero elements and non-zero elements in the OD matrix.

| Test City | Cook County (=1.3) New York City (=1.3) | Cook County (=1.3) Seattle (=0.72) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Model | Flow Value Error | Network Statistics Similarity | Flow Value Error | Network Statistics Similarity | ||||||||

| RMSE | NRMSE | CPC | RMSE | NRMSE | CPC | |||||||

| GM | 4.806 | 1.066 | 0.196 | 0.684 | 0.399 | 0.407 | 12.709 | 1.021 | 0.217 | 0.592 | 0.370 | 0.482 |

| RF | 5.717 | 1.268 | 0.054 | 22.886 | 1.839 | 0.068 | 0.154 | 0.028 | ||||

| DGM | 4.453 | 0.987 | 0.213 | 0.511 | 0.598 | 0.047 | 0.368 | 0.366 | 0.076 | |||

| GMEL | 0.271 | 0.450 | 0.656 | 12.415 | 0.997 | 0.278 | 0.416 | 0.333 | 0.514 | |||

| NetGAN | 4.546 | 1.007 | 0.290 | 0.478 | 0.396 | 12.617 | 1.007 | 0.297 | 0.396 | 0.315 | 0.426 | |

| DDPM | 4.939 | 1.094 | 0.290 | 0.594 | 0.355 | 0.063 | 13.001 | 1.044 | 0.379 | 0.440 | 0.092 | |

| DiffODGen | 4.288 (+3.14%) | 0.950 (+3.26%) | 0.363 (+0.60%) | 0.051 | 0.064 | 0.020 | 10.109 (+13.9%) | 0.812 (+13.9%) | 0.433 (+11.0%) | 0.023 | 0.029 | |

5.1.3. Evaluation Metrics.

We use two kinds of evaluation metrics to verify the generation realism, including three metrics related to flow value error and three metrics related to network statistics similarity. Specifically, the three error-based metrics are Root Mean Square Error (RMSE), Normalized Root Mean Square Error (NRMSE) and the commonly adopted Common Part of Commuting (CPC).

| (14) |

| (15) |

| (16) |

where the denotes the mean. To calculate the statistical similarity between generated OD matrices and real OD matrices, we adopt Jensen-Shannon Divergence (JSD) and use it to measure the distance between distributions of generated data and real data, with respect to three typical network statistics, i.e., inflow, outflow and OD flow. The computation of JSD is shown as follow,

| (17) |

where KL means Kullback–Leibler divergence and denotes the empirical probability distribution. The inflow and outflow are calculated by summing all flows into and out of regions, respectively.

5.1.4. Parameter Settings.

The number of layers of graph transformer in the topology and flow generation phases are 2 and 3 respectively, while the numbers of channels are both 64. The diffusion steps of two diffusion models in our method are 1000, and both use a cosine noise scheduler proposed by Nichol et al (Nichol and Dhariwal, 2021). The denoising networks in two diffusion models are trained with Adam optimizer (Kingma and Ba, 2014) with a learning rate of 3e-4.

The gravity model follows the way in (Barbosa et al., 2018) with 4 fitting parameters. The of random forest are 100. The number of layers for DGM is 10. The number of layers in GNN-based models is 3 and the number of channels is 64. The DDPM baseline follow the parameter settings with the flow diffusion model in our method.

5.2. Overall Performance

Performance comparison. In Table 2 we compare the performance of DiffODGen with baseline methods by training models on data collected in Cook County (in Chicago) and reporting generation results in two new cities, i.e., New York City and Seattle. From the results, we have the following findings:

-

•

Our proposed cascaded graph denoising diffusion method, i.e., DiffODGen, steadily achieves the best performance. The OD matrix generated by DiffODGen achieves the best realism in terms of both flow value error and network statistics similarity. Specifically, compared with the best baseline, the flow value error (RMSE/NRMSE) is reduced by over 3% and 13% in New York City and Seattle, respectively. Moreover, the statistical distribution similarity between the generated OD matrix and real one is rather high, indicated by a near-zero value of JSD.

-

•

Current deep-learning-based OD flow generation methods perform poorly in terms of network statistics similarity. Aided by the capability of modeling nonlinear relationships between city characteristics and flows, deep-learning-based methods, i.e., DGM and GMEL, achieve next-to-the-best accuracy with respect to flow value error. However, the results of the three JSD metrics indicate that they cannot generate a realistic OD matrix regarding its network statistics, due to their independent modeling of each OD matrix element.

-

•

Deep generative methods cannot compete with traditional methods without considering the unique characteristics of the OD matrix. By comparing NetGAN and DDPM, i.e., two generative models widely used in other domains, with RF, we observe that the former results have much larger JSD values, suggesting a less similar network generated by NetGAN and DDPM. For DDPM in particular, this naive diffusion model is hardly comparable to the rather simple GM due to the lack of the cascaded diffusion designed for handling city-wide OD matrix and the network property augmentation leveraged along the denoising process.

-

•

Despite the large deviation between the train and the test city, DiffODGen shows stable performance in terms of generation realism. From Table 1 we can observe that the deviation between Seattle and Cook is larger than that between New York City. Nevertheless, DiffODGen exhibits much significant advantage when tested in Seattle, which is indeed more difficult. This demonstrates its capability of capturing the underlying mechanism behind the observed city-wide OD matrix, owing to its generative modeling from a network perspective.

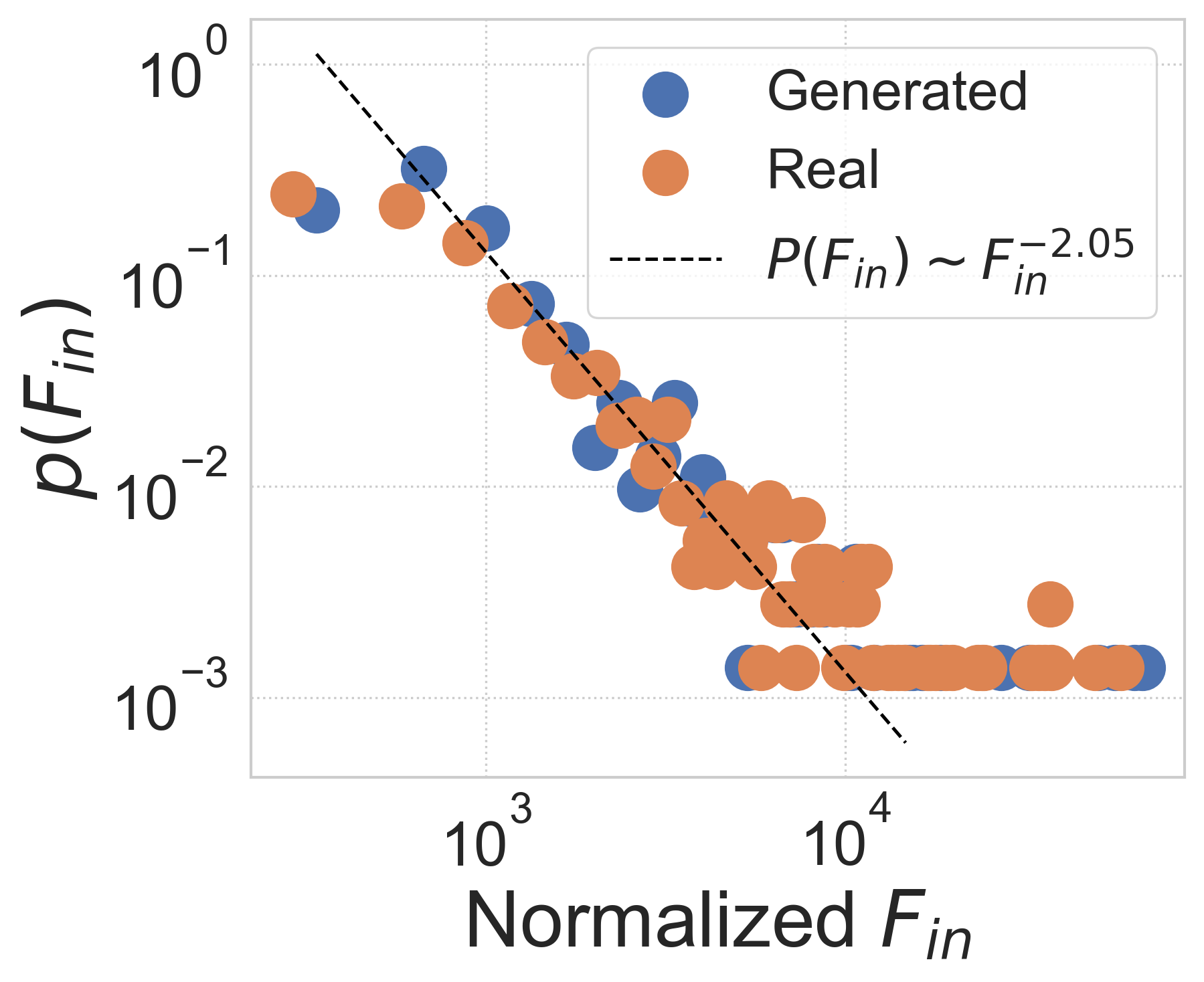

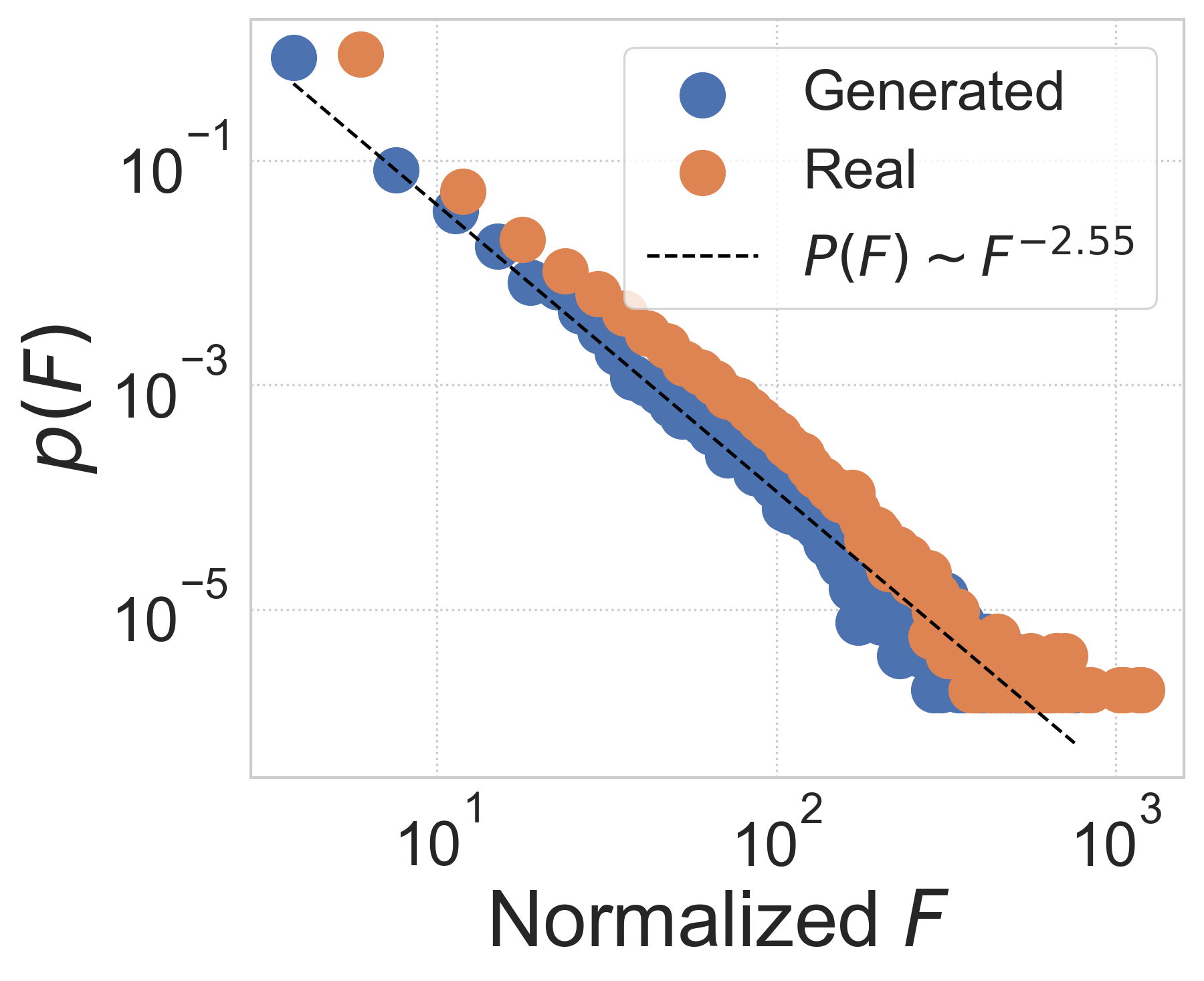

Network statistics analysis. We further investigate the realism of generated city-wide OD matrix from network perspective. Previous works on mobility flow networks have empirically observed scaling behavior in distributions, i.e., a pow-law-like distribution , of node-level inflow (Saberi et al., 2017) and edge-level OD flow (Yan et al., 2017). Correspondingly, in Figure 3 we calculate probability distributions of these two statistics in both generated and real data, finding that the two distributions of inflow and OD flow show a remarkably good match to its power-law fitting, indicated by high values of 0.86-0.98. Note that we normalize two metrics by their means for better visualization. Moreover, as a heavy-tailed distribution like the power law indicates population heterogeneity that is generally hard to capture, the results demonstrate that DiffODGen successfully reproduces the network property contained in the mobility flow pattern by generating realistic city-wide OD matrices.

5.3. Topology Generation Performance

| Test City | New York City (=1.3) | Seattle (=0.72) | ||||

|---|---|---|---|---|---|---|

| Model | NZR | NZR | ||||

| GM | 0.356 | +253.4% | 0.862 | 0.585 | +139.0% | 0.815 |

| RF | +103.6% | 0.647 | 0.064 | |||

| DGM | 0.334 | 0.645 | 0.618 | +52.4% | ||

| GMEL | 0.395 | +300.0% | 0.842 | 0.591 | +143.9% | 0.820 |

| NetGAN | 0.411 | +281.1% | 0.856 | 0.597 | +126.1% | 0.792 |

| DiffODGen | 0.501 | +32.5% | 0.135 | -37.7% | 0.040 | |

To further demonstrate the necessity of leveraging graph generative modeling technique for city-wide OD matrix generation, we investigate the network topology generation performance of DiffODGen by comparing its first-stage results with other baseline methods in both New York City and Seattle.

Performance comparison. We report the performance comparison results with respect to network topology similarity between generated and real OD matrices (here we use the binary version, i.e., adjacency matrix) in Table 3. We choose the binary version of CPC (), the rate of nonzero flows (NZR) and degree distribution similarity (). First, results in New York City demonstrate the superiority of DiffODGen in reconstructing the most realistic network topology given city characteristics in a new city. The generated mobility flow network achieves the highest indicating a good similarity to the real one, and they have close network statistics in terms of sparsity (the smallest gap between NZR) and degree distribution (the lowest ). Comparatively, we observe that other baselines cannot reproduce the realistic network topology. They tend to generate rather too sparse (DGM) or too dense (GMEL) networks. Second, DiffODGen still generates the most realistic network topology in Seattle when evaluating the overall three metrics. Note that RF performs slightly better in , which may be attributed to the smaller scale (=0.72) of Seattle that can be easier for traditional modeling methods.

| Test City | New York City (=1.3) | Seattle (=0.72) | ||||

|---|---|---|---|---|---|---|

| Model | Accuracy | Accuracy | ||||

| GM | 0.276 | 0.183 | 0.900 | 0.419 | 0.984 | |

| RF | 0.605 | 0.271 | 0.434 | 0.451 | 0.222 | |

| DGM | 0.790 | 0.785 | 0.022 | 0.601 | 0.521 | |

| GMEL | 0.249 | 0.001 | 0.995 | 0.419 | 0.000 | 1.000 |

| NetGAN | 0.334 | 0.924 | 0.422 | 0.029 | 0.978 | |

| DiffODGen | 0.321 | 0.738 | 0.339 | 0.370 | ||

Further study of topology similarity and sparsity. Next we investigate further the topology similarity and sparsity of generated OD matrices, which is motivated by the observations of NZR in Table 3. We check three additional metrics, i.e., accuracy, false negative rate (predict a real nonzero flow to be zero) and false positive rate (predict a real zero flow to be nonzero), and report results in Table 4. Note that accuracy is a biased metric as it does not consider the unbalanced ratio between nonzero-zero flows in real data. Unlike baselines that overestimate either nonzero flows (GMEL, near-zero FN and near-one FP) or zero flows (DGM in New York City, near-one FN and near-zero FP), the proposed DiffODGen successfully achieves a trade-off, indicating the necessity of learning to generate realistic network topology in city-wide OD matrix generation.

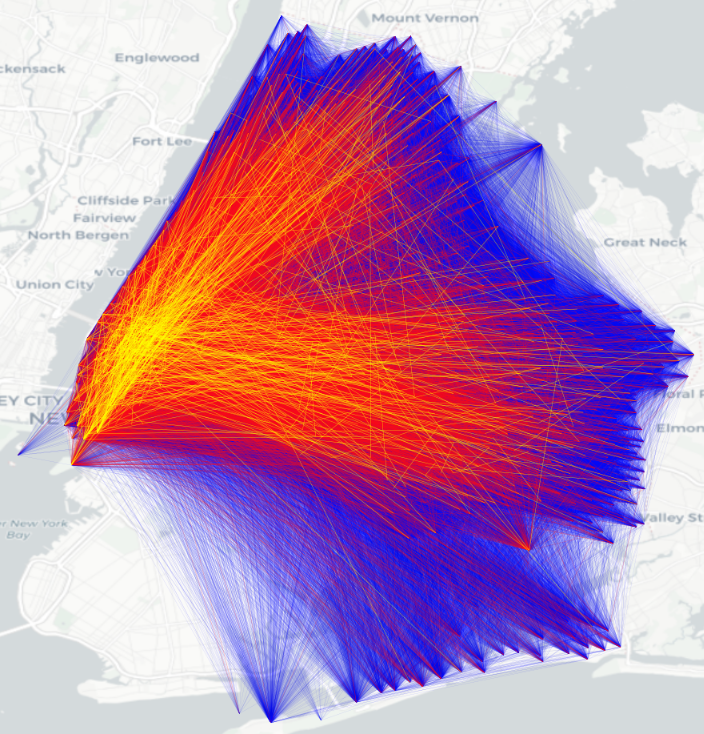

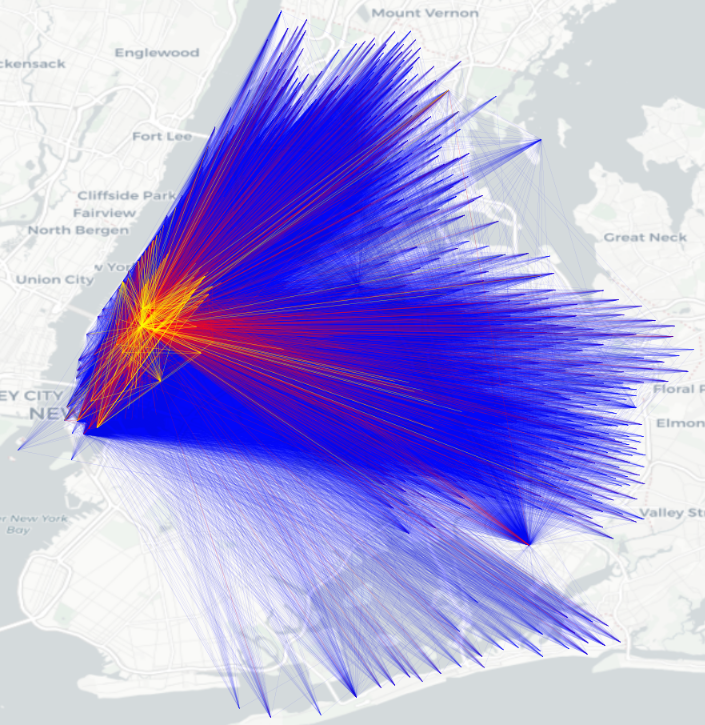

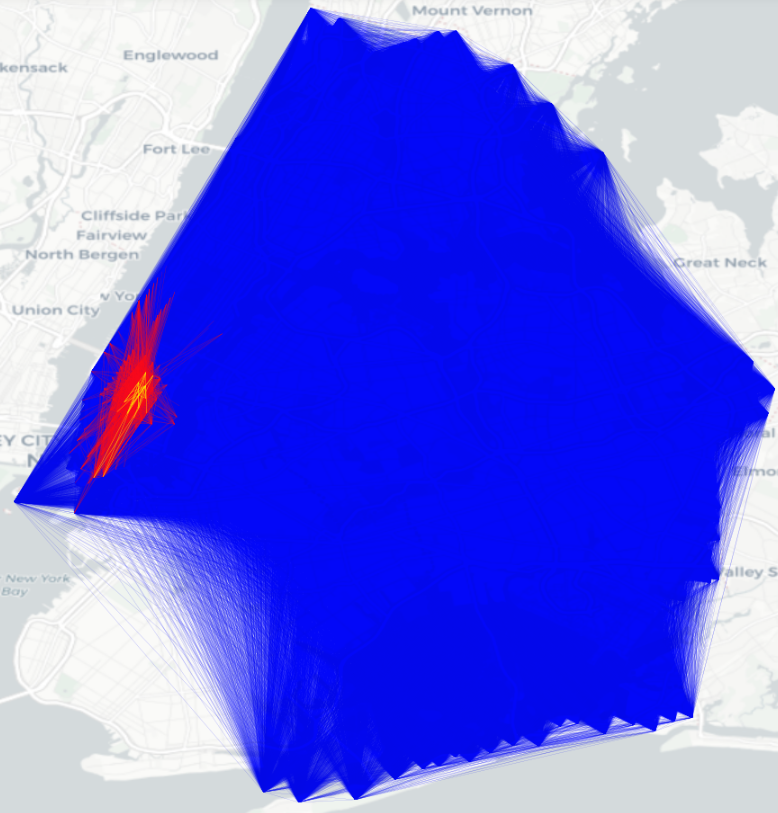

Flow visualization. Finally we visualize the spatial distribution of both real OD flows and generated OD flows in New York City (Figure 4). The results indicate an excellent match to above analysis in Table 3 and Table 4. Compared to real flows, the flows generated by the proposed DiffODGen is with the highest similarity, while those by DGM and GMEL are either too sparse or too dense.

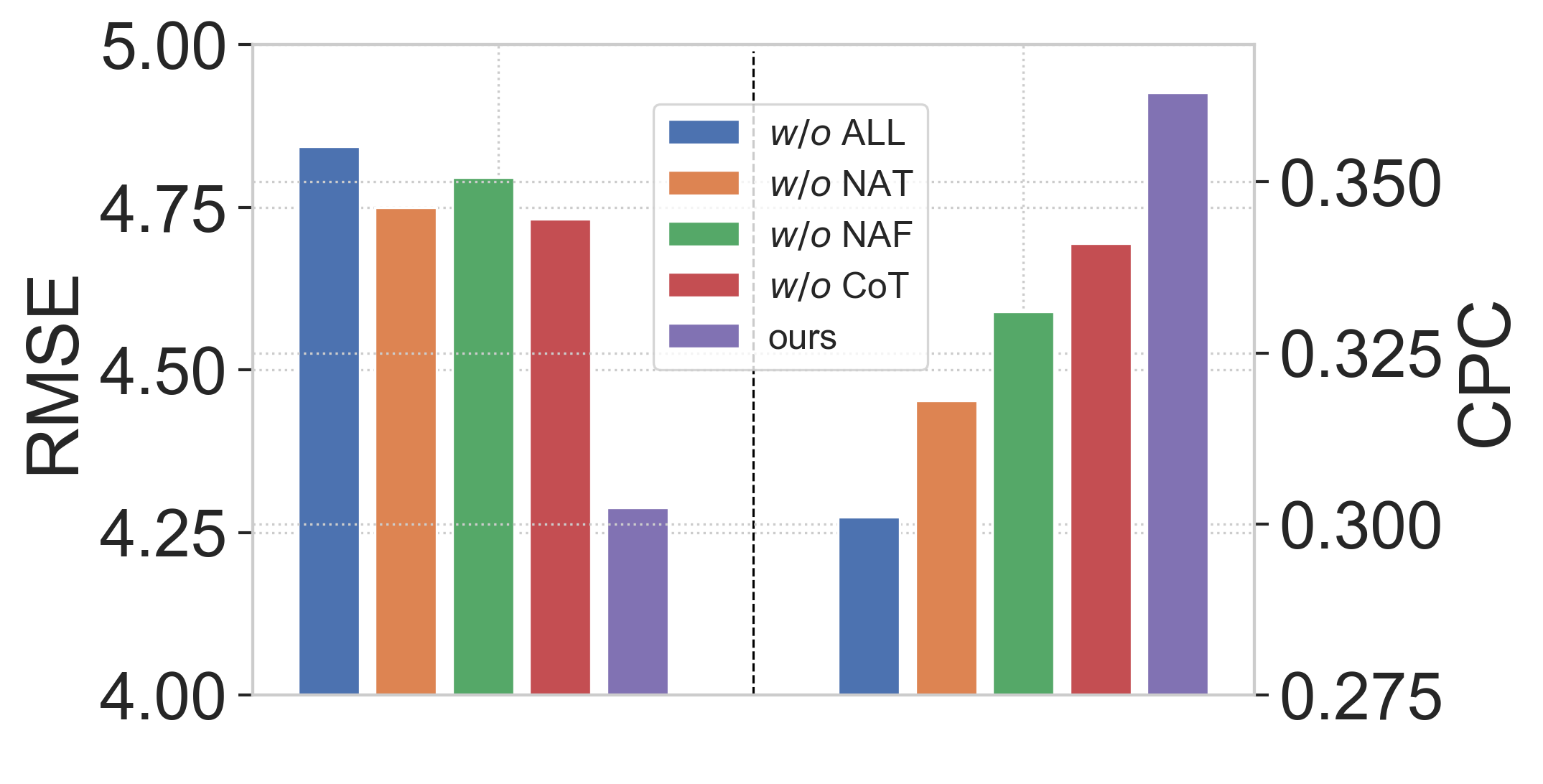

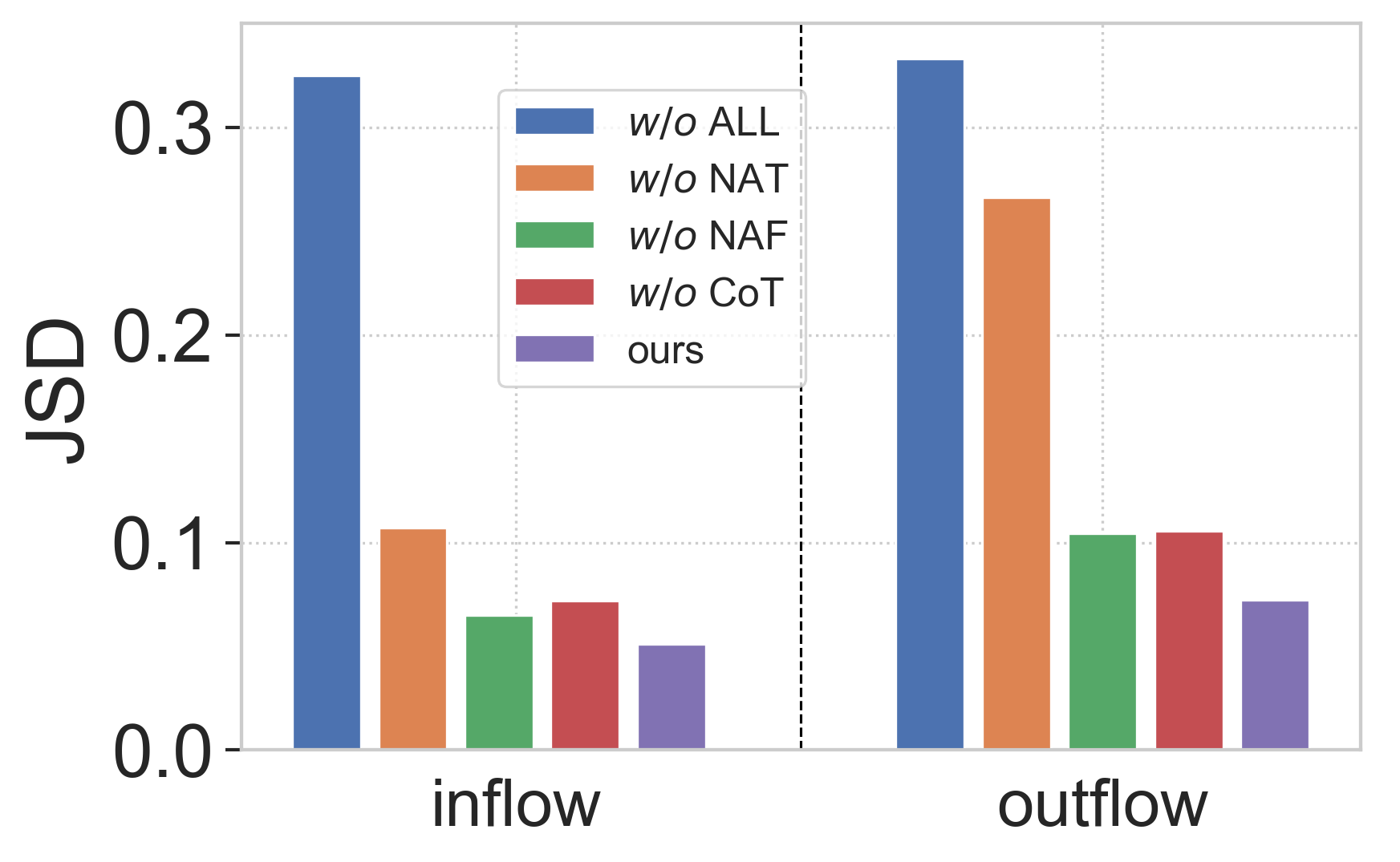

5.4. Ablation Study

Node Properties Augmentation for Topology Generation (NAT). We test the effect of node properties augmentation module utilized in the topology diffusion model, and the results show that the accurate and realistic generation of topology structure of mobility flow network is instrumental. From Figure 5(a), this design will bring up to 14.15% performance improvement according to CPC, which is the largest in magnitude. From Figure 5(b), the performance of the model on network statistics similarity would also be significantly worse, without this design.

Node Properties Augmentation for Topology Generation (NAF). Accordingly, we examine the utility of node properties augmentation applied in the flow diffusion model. The experimental results show that this design also greatly enhances the effect of OD matrix generation with a margin at 9.67%, according to Figure 5(a). This shows that the enhancement of graph transformer is useful from both topology and flow volume perspectives. From Figure 5(b), this design also shows comparable behavior in terms of network statistics similarity..

Collabrative Training (CoT). By experimenting with just the generation of the topology diffusion model, we found that collaborative training can continue to slightly improve performance based on existing designs according to CPC according to Figure 5.

ALL. This part of the experiment is intended to examine the total performance improvement of our model designs compared to the direct application of the cascaded graph denoising diffusion model. From the Figure 5, these model designs are very instrumental. In contrast with naive diffusion models, cascading design can well solve the problem of difficult generation of large-scale OD matrix and improve the effect with a 20.6% improvement on performance.

6. Conclusion

In this work, we propose to investigate the spatial distribution of the OD matrix, i.e. mobility flows between every two regions, of a city from the perspective of networks, and explore the feasibility of introducing the graph denoising diffusion method to model the joint distribution of all elements in the OD matrix. Specifically, we designed a cascaded graph denoising diffusion method (DiffODGen) to generate the city-wide OD matrix for the new city by first generating a topology structure and then mobility flows. By validating in two real-world data scenarios, the DiffODGen can effectively model the joint distribution of the city-wide OD matrix and generate the OD matrix with scaling network behaviours similar with real-world data to improve the precision. This demonstrates the necessity of modeling the joint distribution of all elements in the OD matrix and the feasibility of applying graph diffusion models to solve the problem of OD matrix generation.

References

- (1)

- Adnan et al. (2016) Muhammad Adnan, Francisco C Pereira, Carlos Miguel Lima Azevedo, Kakali Basak, Milan Lovric, Sebastián Raveau, Yi Zhu, Joseph Ferreira, Christopher Zegras, and Moshe Ben-Akiva. 2016. Simmobility: A multi-scale integrated agent-based simulation platform. In 95th Annual Meeting of the Transportation Research Board Forthcoming in Transportation Research Record, Vol. 2. The National Academies of Sciences, Engineering, and Medicine Washington, DC.

- Arentze and Timmermans (2004) Theo A Arentze and Harry JP Timmermans. 2004. A learning-based transportation oriented simulation system. Transportation Research Part B: Methodological 38, 7 (2004), 613–633.

- Austin et al. (2021) Jacob Austin, Daniel D Johnson, Jonathan Ho, Daniel Tarlow, and Rianne van den Berg. 2021. Structured denoising diffusion models in discrete state-spaces. Advances in Neural Information Processing Systems 34 (2021), 17981–17993.

- Balcan et al. (2009) Duygu Balcan, Vittoria Colizza, Bruno Gonçalves, Hao Hu, José J Ramasco, and Alessandro Vespignani. 2009. Multiscale mobility networks and the spatial spreading of infectious diseases. Proceedings of the national academy of sciences 106, 51 (2009), 21484–21489.

- Barbosa et al. (2018) Hugo Barbosa, Marc Barthelemy, Gourab Ghoshal, Charlotte R James, Maxime Lenormand, Thomas Louail, Ronaldo Menezes, José J Ramasco, Filippo Simini, and Marcello Tomasini. 2018. Human mobility: Models and applications. Physics Reports 734 (2018), 1–74.

- Barthélemy (2011) Marc Barthélemy. 2011. Spatial networks. Physics reports 499, 1-3 (2011), 1–101.

- Bojchevski et al. (2018) Aleksandar Bojchevski, Oleksandr Shchur, Daniel Zügner, and Stephan Günnemann. 2018. Netgan: Generating graphs via random walks. In International conference on machine learning. PMLR, 610–619.

- Caminha and Furtado (2017) Carlos Caminha and Vasco Furtado. 2017. Impact of human mobility on police allocation. In 2017 IEEE International Conference on Intelligence and Security Informatics (ISI). IEEE, 125–127.

- Dwivedi and Bresson (2020) Vijay Prakash Dwivedi and Xavier Bresson. 2020. A generalization of transformer networks to graphs. arXiv preprint arXiv:2012.09699 (2020).

- Goodfellow et al. (2020) Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2020. Generative adversarial networks. Commun. ACM 63, 11 (2020), 139–144.

- Haefeli et al. (2022) Kilian Konstantin Haefeli, Karolis Martinkus, Nathanaël Perraudin, and Roger Wattenhofer. 2022. Diffusion Models for Graphs Benefit From Discrete State Spaces. arXiv preprint arXiv:2210.01549 (2022).

- Ho et al. (2020) Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems 33 (2020), 6840–6851.

- Ho and Salimans (2022) Jonathan Ho and Tim Salimans. 2022. Classifier-free diffusion guidance. arXiv preprint arXiv:2207.12598 (2022).

- Hörl et al. (2021) Sebastian Hörl, Felix Becker, and Kay W Axhausen. 2021. Simulation of price, customer behaviour and system impact for a cost-covering automated taxi system in Zurich. Transportation Research Part C: Emerging Technologies 123 (2021), 102974.

- Jo et al. (2022) Jaehyeong Jo, Seul Lee, and Sung Ju Hwang. 2022. Score-based Generative Modeling of Graphs via the System of Stochastic Differential Equations. arXiv preprint arXiv:2202.02514 (2022).

- Karimi et al. (2019) Hadi Karimi, Seyed-Nader Shetab-Boushehri, and Bahador Ghadirifaraz. 2019. Sustainable approach to land development opportunities based on both origin-destination matrix and transportation system constraints, case study: Central business district of Isfahan, Iran. Sustainable cities and society 45 (2019), 499–507.

- Kingma and Ba (2014) Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

- Kingma and Welling (2013) Diederik P Kingma and Max Welling. 2013. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114 (2013).

- Kong et al. (2020) Zhifeng Kong, Wei Ping, Jiaji Huang, Kexin Zhao, and Bryan Catanzaro. 2020. Diffwave: A versatile diffusion model for audio synthesis. arXiv preprint arXiv:2009.09761 (2020).

- Liu et al. (2020) Zhicheng Liu, Fabio Miranda, Weiting Xiong, Junyan Yang, Qiao Wang, and Claudio Silva. 2020. Learning geo-contextual embeddings for commuting flow prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34. 808–816.

- Morris et al. (2019) Christopher Morris, Martin Ritzert, Matthias Fey, William L Hamilton, Jan Eric Lenssen, Gaurav Rattan, and Martin Grohe. 2019. Weisfeiler and leman go neural: Higher-order graph neural networks. In Proceedings of the AAAI conference on artificial intelligence, Vol. 33. 4602–4609.

- Nichol and Dhariwal (2021) Alexander Quinn Nichol and Prafulla Dhariwal. 2021. Improved denoising diffusion probabilistic models. In International Conference on Machine Learning. PMLR, 8162–8171.

- Niu et al. (2020) Chenhao Niu, Yang Song, Jiaming Song, Shengjia Zhao, Aditya Grover, and Stefano Ermon. 2020. Permutation invariant graph generation via score-based generative modeling. In International Conference on Artificial Intelligence and Statistics. PMLR, 4474–4484.

- OpenStreetMap contributors (2017) OpenStreetMap contributors. 2017. Planet dump retrieved from https://planet.osm.org . https://www.openstreetmap.org.

- Pourebrahim et al. (2019) Nastaran Pourebrahim, Selima Sultana, Amirreza Niakanlahiji, and Jean-Claude Thill. 2019. Trip distribution modeling with Twitter data. Computers, Environment and Urban Systems 77 (2019), 101354.

- Robinson and Dilkina (2018) Caleb Robinson and Bistra Dilkina. 2018. A machine learning approach to modeling human migration. In Proceedings of the 1st ACM SIGCAS Conference on Computing and Sustainable Societies. 1–8.

- Ruan et al. (2020) Sijie Ruan, Jie Bao, Yuxuan Liang, Ruiyuan Li, Tianfu He, Chuishi Meng, Yanhua Li, Yingcai Wu, and Yu Zheng. 2020. Dynamic public resource allocation based on human mobility prediction. Proceedings of the ACM on interactive, mobile, wearable and ubiquitous technologies 4, 1 (2020), 1–22.

- Saberi et al. (2017) Meead Saberi, Hani S Mahmassani, Dirk Brockmann, and Amir Hosseini. 2017. A complex network perspective for characterizing urban travel demand patterns: graph theoretical analysis of large-scale origin–destination demand networks. Transportation 44, 6 (2017), 1383–1402.

- Saharia et al. (2022) Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily Denton, Seyed Kamyar Seyed Ghasemipour, Burcu Karagol Ayan, S Sara Mahdavi, Rapha Gontijo Lopes, et al. 2022. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding. arXiv preprint arXiv:2205.11487 (2022).

- Shi et al. (2020) Hongzhi Shi, Quanming Yao, Qi Guo, Yaguang Li, Lingyu Zhang, Jieping Ye, Yong Li, and Yan Liu. 2020. Predicting origin-destination flow via multi-perspective graph convolutional network. In 2020 IEEE 36th International conference on data engineering (ICDE). IEEE, 1818–1821.

- Simini et al. (2021) Filippo Simini, Gianni Barlacchi, Massimilano Luca, and Luca Pappalardo. 2021. A Deep Gravity model for mobility flows generation. Nature communications 12, 1 (2021), 1–13.

- Simini et al. (2012) Filippo Simini, Marta C González, Amos Maritan, and Albert-László Barabási. 2012. A universal model for mobility and migration patterns. Nature 484, 7392 (2012), 96–100.

- Song et al. (2020) Jiaming Song, Chenlin Meng, and Stefano Ermon. 2020. Denoising diffusion implicit models. arXiv preprint arXiv:2010.02502 (2020).

- Song and Ermon (2019) Yang Song and Stefano Ermon. 2019. Generative modeling by estimating gradients of the data distribution. Advances in Neural Information Processing Systems 32 (2019).

- Tashiro et al. (2021) Yusuke Tashiro, Jiaming Song, Yang Song, and Stefano Ermon. 2021. CSDI: Conditional score-based diffusion models for probabilistic time series imputation. Advances in Neural Information Processing Systems 34 (2021), 24804–24816.

- Veličković et al. (2017) Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Lio, and Yoshua Bengio. 2017. Graph attention networks. arXiv preprint arXiv:1710.10903 (2017).

- Vignac et al. (2022) Clement Vignac, Igor Krawczuk, Antoine Siraudin, Bohan Wang, Volkan Cevher, and Pascal Frossard. 2022. DiGress: Discrete Denoising diffusion for graph generation. arXiv preprint arXiv:2209.14734 (2022).

- Vuchic (2002) Vukan R Vuchic. 2002. Urban public transportation systems. University of Pennsylvania, Philadelphia, PA, USA 5 (2002), 2532–2558.

- Wang et al. (2019) Yuandong Wang, Hongzhi Yin, Hongxu Chen, Tianyu Wo, Jie Xu, and Kai Zheng. 2019. Origin-destination matrix prediction via graph convolution: a new perspective of passenger demand modeling. In Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining. 1227–1235.

- Xu et al. (2018) Keyulu Xu, Weihua Hu, Jure Leskovec, and Stefanie Jegelka. 2018. How powerful are graph neural networks? arXiv preprint arXiv:1810.00826 (2018).

- Yan et al. (2017) Xiao-Yong Yan, Wen-Xu Wang, Zi-You Gao, and Ying-Cheng Lai. 2017. Universal model of individual and population mobility on diverse spatial scales. Nature communications 8, 1 (2017), 1639.

- Zeng et al. (2022) Jinwei Zeng, Guozhen Zhang, Can Rong, Jingtao Ding, Jian Yuan, and Yong Li. 2022. Causal Learning Empowered OD Prediction for Urban Planning. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management. 2455–2464.

- Zipf (1946) George Kingsley Zipf. 1946. The P 1 P 2/D hypothesis: on the intercity movement of persons. American sociological review 11, 6 (1946), 677–686.