Compressibility and probabilistic proofs

Abstract

We consider several examples of probabilistic existence proofs using compressibility arguments, including some results that involve Lovász local lemma.

1 Probabilistic proofs: a toy example

There are many well-known probabilistic proofs that objects with some properties exist. Such a proof estimates the probability for a random object to violate the requirements and shows that it is small (or at least strictly less than $1$). Let us look at a toy example.

Consider an $n\times n$ Boolean matrix and its $k\times k$ minor (the intersection of $k$ rows and $k$ columns chosen arbitrarily). We say that the minor is monochromatic if all its elements are equal (either all zeros or all ones).

Proposition.

For large enough $n$ and for $k=O(\log n)$, there exists an $(n\times n)$-matrix that does not contain a monochromatic $(k\times k)$-minor.

Proof.

We repeat the same simple proof three times, in three different languages.

(Probabilistic language) Let us choose matrix elements using independent tosses of a fair coin. For given $k$ columns and $k$ rows, the probability of getting a monochromatic minor at their intersection is $2^{-k^2+1}$. (Both the zero-minor and the one-minor have probability $2^{-k^2}$.) There are at most $n^k$ choices for columns and the same number for rows, so by the union bound the probability of getting at least one monochromatic minor is bounded by

$$n^k\cdot n^k\cdot 2^{-k^2+1} = 2^{2k\log n-k^2+1} = 2^{k(2\log n-k)+1},$$

and the last expression is less than $1$ if, say, $k=3\log n$ and $n$ is sufficiently large.

(Combinatorial language) Let us count the number of bad matrices. For a given choice of columns and rows we have $2$ possibilities for the minor and $2^{n^2-k^2}$ possibilities for the rest, and there are at most $n^k$ choices for rows and the same number for columns, so the total number of matrices with a monochromatic minor is at most

$$n^k\cdot n^k\cdot 2\cdot 2^{n^2-k^2} = 2^{n^2+2k\log n-k^2+1},$$

and this is less than $2^{n^2}$, the total number of Boolean $(n\times n)$-matrices.

(Compression language) To specify a matrix that has a monochromatic minor, it is enough to specify $2k$ numbers between $1$ and $n$ (row and column numbers), the color of the monochromatic minor ($0$ or $1$) and the remaining $n^2-k^2$ bits in the matrix (their positions are already known). So we save $k^2$ bits (compared to the straightforward list of all $n^2$ bits) using $2k\log n+1$ bits instead (each number in the range $1,\ldots,n$ requires $\log n$ bits; to be exact, we may use $\lceil\log n\rceil$), so we can compress the matrix with a monochromatic minor if $2k\log n+1<k^2$, and not all matrices are compressible. ∎

Of course, these three arguments are the same: in the second one we multiply probabilities by $2^{n^2}$, and in the third one we take logarithms. However, the compression language provides a new viewpoint that may help our intuition.
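The arithmetic behind the three versions of the proof can be checked numerically. The following is a minimal sketch, not part of the original argument; the function names `log2_union_bound` and `smallest_safe_k` are chosen here for illustration. It evaluates the logarithm of the union bound $n^k\cdot n^k\cdot 2^{1-k^2}$ and looks for the smallest $k$ that makes the bound less than $1$.

```python
import math

def log2_union_bound(n: int, k: int) -> float:
    """log2 of n^k * n^k * 2^(1 - k^2), the union bound on the probability
    that a random n x n Boolean matrix has a monochromatic k x k minor."""
    return 2 * k * math.log2(n) - k * k + 1

def smallest_safe_k(n: int) -> int:
    """Smallest k for which the union bound drops below 1 (negative log2)."""
    k = 1
    while log2_union_bound(n, k) >= 0:
        k += 1
    return k

if __name__ == "__main__":
    n = 1024                       # log2(n) = 10
    print(smallest_safe_k(n))      # slightly more than 2 * log2(n)
    print(log2_union_bound(n, 30)) # k = 3 * log2(n): many bits are saved
```

A negative value of `log2_union_bound` is also the number of bits saved in the compression version, up to sign.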

2 A bit more interesting example

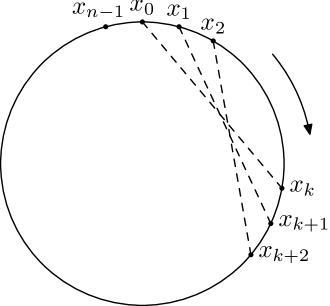

In this example we want to put bits (zeros and ones) around the circle in an “essentially asymmetric” way: each rotation of the circle should change at least a fixed percentage of bits. More precisely, we are interested in the following statement:

Proposition.

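There exists $\varepsilon>0$ such that for every sufficiently large $n$ there exists a sequence $x_0x_1\ldots x_{n-1}$ of bits such that for every $k=1,2,\ldots,n-1$ the cyclic shift by $k$ positions produces a sequence

$$y_0=x_k,\ y_1=x_{k+1},\ \ldots,\ y_{n-1}=x_{k-1}$$

that differs from $x$ in at least $\varepsilon n$ positions (the Hamming distance between $x$ and $y$ is at least $\varepsilon n$).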

Proof.

Assume that some rotation (cyclic shift by $k$ positions) transforms $x$ into a string $y$ that coincides almost everywhere with $x$. We may assume that $k\le n/2$: the cyclic shift by $k$ positions changes as many bits as the cyclic shift by $n-k$ (the inverse one). Imagine that we dictate the string $x$ from left to right. The first $k$ bits we dictate normally. But then the bits start to repeat (mostly) the previous ones ($k$ positions before), so we can just say “the same” or “not the same”, and if $\varepsilon$ is small, we know that most of the time we say “the same”. Technically, we have fewer than $\varepsilon n$ different bits, and at least $n-k\ge n/2$ bits to dictate after the first $k$, so the fraction of “not the same” signals is at most $2\varepsilon$. It is well known that strings of symbols where some symbols appear more often than others can be encoded efficiently. Shannon tells us that a string with two symbols with frequencies $p$ and $q$ (so $p+q=1$) can be encoded using

$$H(p,q)=p\log\frac{1}{p}+q\log\frac{1}{q}$$

bits per symbol and that $H(p,q)=1$ only when $p=q=1/2$. In our case, for small $\varepsilon$, one of the frequencies is close to $0$ (at most $2\varepsilon$), and the other one is close to $1$, so $H(p,q)$ is significantly less than $1$. So we get a significant compression for every string that is bad for the theorem, therefore most strings are good (so good strings do exist).

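More precisely, every string $x$ that does not satisfy the requirements can be described by

- $k$ [$\log n$ bits]

- $x_0,\ldots,x_{k-1}$ [$k$ bits]

- $x_k\oplus x_0,\ x_{k+1}\oplus x_1,\ \ldots,\ x_{n-1}\oplus x_{n-k-1}$ [$n-k$ bits where the fraction of $1$s is at most $2\varepsilon$, compressed to $H(2\varepsilon,1-2\varepsilon)(n-k)$ bits]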

For $\varepsilon<1/4$ and for large enough $n$ the economy in the third part (compared to $n-k$) is more important than the $\log n$ bits in the first part. ∎

Of course, this is essentially a counting argument: the number of strings of length $n-k$ where the fraction of $1$s is at most $2\varepsilon$ is bounded by $2^{H(2\varepsilon,1-2\varepsilon)(n-k)}$, and we show that the bound for the number of bad strings,

$$\sum_{k=1}^{n/2}2^{k}\,2^{H(2\varepsilon,1-2\varepsilon)(n-k)},$$

is less than the total number of strings ($2^n$). Still the compression metaphor makes the proof more intuitive, at least for some readers.
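The three-part description used in the proof can be made concrete. The sketch below is an illustration under our own naming (`shift_distance`, `describe`, `restore` and `binary_entropy` are not from the paper): it computes the Hamming distance between a string and its cyclic shift, builds the description (shift $k$, first $k$ bits, "same / not the same" signals) and restores the string from it. The number of ones in the third part never exceeds the shift distance, which is what makes it compressible for bad strings.

```python
import math

def binary_entropy(p: float) -> float:
    """Shannon entropy H(p, 1 - p) in bits per symbol."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return p * math.log2(1 / p) + (1 - p) * math.log2(1 / (1 - p))

def shift_distance(x: str, k: int) -> int:
    """Hamming distance between x and its cyclic shift by k positions."""
    n = len(x)
    return sum(x[i] != x[(i + k) % n] for i in range(n))

def min_shift_distance(x: str) -> int:
    """Minimum distance over all non-trivial cyclic shifts."""
    return min(shift_distance(x, k) for k in range(1, len(x)))

def describe(x: str, k: int):
    """Description from the proof: k, the first k bits, then the
    'same' (0) / 'not the same' (1) signals for the remaining bits."""
    diff = "".join("1" if x[i] != x[i - k] else "0" for i in range(k, len(x)))
    return k, x[:k], diff

def restore(k: int, prefix: str, diff: str) -> str:
    """Inverse of describe: replay the dictation from left to right."""
    x = list(prefix)
    for signal in diff:
        previous = x[len(x) - k]
        flipped = "1" if previous == "0" else "0"
        x.append(previous if signal == "0" else flipped)
    return "".join(x)

if __name__ == "__main__":
    x = "0110100"
    print(describe(x, 3))
    print(restore(*describe(x, 3)) == x)
    print(binary_entropy(0.1))  # well below 1 bit per symbol
```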

3 Lovász local lemma and Moser–Tardos algorithm

In our examples of probabilistic proofs we proved the existence of objects that have some property by showing that most objects have this property (in other words, that the probability of this property to be true is close to $1$ under some natural distribution). Not all probabilistic proofs go like that. One of the exceptions is the famous Lovász local lemma (see, e.g., [1]). It can be used in situations where the union bound does not work: we have too many bad events, and the sum of their probabilities exceeds $1$ even if the probability of each one is very small. Still the Lovász local lemma shows that these bad events do not cover the probability space entirely, assuming that the bad events are “mainly independent”. The probability of avoiding these bad events is exponentially small, but the Lovász local lemma still provides a positive lower bound for it.

This means, in particular, that we cannot hope to construct an object satisfying the requirements by random trials, so the bound provided by the Lovász local lemma does not give us a randomized algorithm that constructs the object with required properties with probability close to $1$. Much later Moser and Tardos [4, 5] suggested such an algorithm, in fact a very simple one. In other terms, they suggested a different distribution under which good objects form a majority.

We do not discuss the statement of Lovász local lemma and Moser–Tardos algorithm in general. Instead, we provide two examples when they can be used, and the compression-language proofs that can be considered as ad hoc versions of Moser–Tardos argument. These two examples are (1) satisfiability of formulas in conjunctive normal form (CNF) and (2) strings without forbidden factors.

4 Satisfiable CNF

A CNF (conjunctive normal form) is a propositional formula that is a conjunction of clauses. Each clause is a disjunction of literals; a literal is a propositional variable or its negation. For example, CNF

consists of two clauses. The first one prohibits the case when , , ; the second one prohibits the case when , , . A CNF is satisfiable if it has a satisfying assignment (that makes all clauses true, avoiding the prohibited combinations). In our example there are many satisfying assignments. For example, if and , all values of other variables are OK.

We will consider CNF where all clauses include $m$ literals with different variables (from some pool of variables that may contain many more than $m$ variables). For a random assignment (each variable is obtained by an independent tossing of a fair coin) the probability to violate a clause of this type is $2^{-m}$ (one of $2^m$ combinations of values for $m$ variables is forbidden). Therefore, if the number of clauses of this type is less than $2^m$, then the formula is satisfiable. This is a tight bound: using $2^m$ clauses with the same variables, we can forbid all the combinations and get an unsatisfiable CNF.

The following result says that we can guarantee satisfiability for formulas with many more clauses. In fact, the total number of clauses may be arbitrary (but still we consider finite formulas, of course). The only thing we need is the “limited dependence” of clauses. Let us say that two clauses are neighbors if they have a common variable (or several common variables). The clauses that are not neighbors correspond to independent events (for a random assignment). The following statement says that if the number of neighbors of each clause is bounded, then the CNF is guaranteed to be satisfiable.

Proposition.

Assume that each clause in some CNF contains $m$ literals with different variables and has at most $2^{m-3}$ neighbor clauses. Then the CNF is satisfiable.

Note that $2^{m-3}$ is a rather tight bound: to forbid all the combinations for some $m$ variables, we need only $2^m$ clauses.

Proof.

It is convenient to present a proof using the compression language, as suggested by Lance Fortnow. Consider the following procedure $\mathrm{Fix}(C)$ whose argument is a clause $C$ (from our CNF).

    { C is false }
    Fix(C):
        Resample(C)
        for all C' that are neighbors of C:
            if C' is false then Fix(C')
    { C is true; other clauses that were true remain true }

Here $\mathrm{Resample}(C)$ is the procedure that assigns fresh random values to all variables in $C$. The pre-condition (the first line) says that the procedure is called only in the situation where $C$ is false. The post-condition (the last line) says that if the procedure terminates, then $C$ is true after termination, and, moreover, all other clauses of our CNF that were true before the call remain true. (The ones that were false may be true or false.)

Note that up to now we have not said anything about termination: the procedure is randomized and it may happen that it does not terminate (for example, if all Resample calls are unlucky to choose the same old bad values).

Simple observation: if we have such a procedure, we may apply it to all clauses one by one and after all calls (assuming they terminate and the procedure works according to the specification) we get a satisfying assignment.

Another simple observation: it is easy to prove the “conditional correctness” of the procedure $\mathrm{Fix}(C)$. In other words, it achieves its goal assuming that (1) it terminates; (2) all the recursive calls achieve their goals. It is almost obvious: the call $\mathrm{Resample}(C)$ may destroy (i.e., make false) only clauses that are neighbors of $C$, and all these clauses are Fix-ed after that. Note that $C$ is its own neighbor, so the for-loop also includes a recursive call $\mathrm{Fix}(C)$ (when $C$ is still false after resampling), so after all these calls (that terminate and satisfy the post-condition by assumption) the clause $C$ and all its neighbors are true and no other clause is damaged.

Note that the last argument remains valid even if we delete the only line that really changes something, i.e., the line $\mathrm{Resample}(C)$. In this case the procedure never changes anything but still is conditionally correct; it just does not terminate if one of the clauses is false.

It remains to prove that the call $\mathrm{Fix}(C)$ terminates with high probability. In fact, it terminates with probability $1$ if there are no time limits and with probability exponentially close to $1$ in polynomial time. To prove this, one may use a compression argument: we show that if the procedure works for a long time without terminating, then the sequence of random bits used for resampling is compressible. We assume that each call of $\mathrm{Resample}()$ uses $m$ fresh bits from the sequence. Finally, we note that this compressibility may happen only with exponentially small probability.

Imagine that $\mathrm{Fix}(C)$ is called and during its recursive execution performs many calls

$$\mathrm{Resample}(C_1),\ \ldots,\ \mathrm{Resample}(C_N)$$

(in this order) but does not terminate (yet). We stop it at some moment and examine the values of all the variables.

Lemma.

Knowing the values of the variables after these calls and the sequence $C_1,\ldots,C_N$, we can reconstruct all the $mN$ random bits used for resampling.

Proof of the lemma.

Let us go backwards. By assumption we know the values of all variables after the calls. The procedure $\mathrm{Resample}(C_N)$ is called only when $C_N$ is false, and there is only one $m$-tuple of values that makes $C_N$ false. Therefore we know the values of all variables before the last call, and also know the random bits used for the last resampling (since we know the values of variables after resampling).

The same argument shows that we can reconstruct the values of variables before the preceding call $\mathrm{Resample}(C_{N-1})$, and the random bits used for the resampling in this call, etc. ∎
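The procedure and the backward reconstruction from the lemma can be sketched in code. This is an illustrative sketch only: the clause encoding (tuples of `(variable, sign)` pairs with distinct variables), the driver `solve` and the helper `reconstruct` are our assumptions, while `fix` follows the pseudocode for Fix and inlines Resample. The code records the resampled clauses and the random bits, and then recovers both the bits and the initial assignment from the final values and the clause sequence.

```python
import random

# A clause is a tuple of (variable index, sign) pairs with distinct variables;
# sign=True stands for the literal x_v, sign=False for its negation.

def is_false(clause, values):
    """A clause is false only for one tuple of values: every literal fails."""
    return all(values[v] != sign for v, sign in clause)

def are_neighbors(c1, c2):
    """Clauses are neighbors if they share a variable (a clause is its own neighbor)."""
    return bool({v for v, _ in c1} & {v for v, _ in c2})

def fix(clause, clauses, values, rng, used_bits, log):
    # Pre-condition: clause is false under the current values.
    log.append(clause)
    for v, _ in clause:                  # Resample(clause): fresh random bits
        bit = bool(rng.getrandbits(1))
        used_bits.append(bit)
        values[v] = bit
    for other in clauses:                # the loop includes clause itself
        if are_neighbors(clause, other) and is_false(other, values):
            fix(other, clauses, values, rng, used_bits, log)

def solve(clauses, initial, seed=0):
    """Apply fix to all clauses one by one (hypothetical driver)."""
    rng = random.Random(seed)
    values, used_bits, log = list(initial), [], []
    for clause in clauses:
        if is_false(clause, values):
            fix(clause, clauses, values, rng, used_bits, log)
    return values, used_bits, log

def reconstruct(final_values, log):
    """Go backwards as in the lemma: recover the random bits and the start."""
    values, chunks = list(final_values), []
    for clause in reversed(log):
        chunks.append([values[v] for v, _ in clause])  # bits of this resampling
        for v, sign in clause:
            values[v] = not sign         # the unique values making clause false
    bits = [b for chunk in reversed(chunks) for b in chunk]
    return bits, values

if __name__ == "__main__":
    cnf = [((0, True), (1, True), (2, True)),
           ((2, False), (3, True), (4, True)),
           ((4, False), (5, True), (0, False))]
    final, used, log = solve(cnf, [False] * 6, seed=1)
    print(reconstruct(final, log) == (used, [False] * 6))
```

The recursion mirrors the text and is meant for small instances; Python's default recursion limit makes it unsuitable as a general solver.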

Now we need to show that the sequence of clauses used for resampling can be described by fewer bits than $mN$ (the number of random bits used). Here we use the assumption saying that each clause has at most $2^{m-3}$ neighbors and that the clauses for which $\mathrm{Fix}$ is called from $\mathrm{Fix}(C)$ are neighbors of $C$.

One could try to say that since $C_{i+1}$ is a neighbor of $C_i$, we need only $m-3$ bits to specify it (there are at most $2^{m-3}$ neighbors by assumption), so we save $3$ bits per clause (compared to $m$ random bits used by resampling). But this argument is wrong: $C_{i+1}$ is not always a neighbor of $C_i$, since we may return from a recursive call that causes resampling of $C_i$ and then make a new recursive call that resamples $C_{i+1}$.

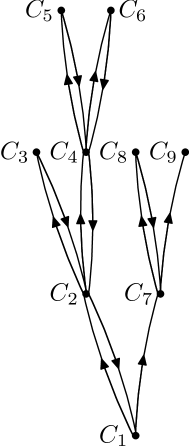

To get a correct argument, we should look more closely at the tree of recursive calls generated by one call $\mathrm{Fix}(C)$ (Fig. 2). In this tree the sons of each vertex correspond to neighbor clauses of the father-clause.

The sequence of calls is determined by a walk in this tree, but we go up and down, not only up (as we assumed in the wrong argument). How many bits do we need to encode this walk (and therefore the sequence of calls)? We use one bit to distinguish between steps up and down. If we are going down, no other information is needed. If we are going up (and resample a new clause), we need one bit to say that we are going up, and $m-3$ bits for the number of the neighbor we are going to. For accounting purposes we combine these bits with a bit needed to encode the step back (this may happen later or not happen at all), and we see that in total we need at most $m-1$ bits per resampling. This is still less than $m$, so we save one bit for each resampling. If $N$ is much bigger than the number of variables, we indeed compress the sequence of random bits used for resampling, and this happens with exponentially small probability.

This argument finishes the proof. ∎

5 Tetris and forbidden factors

The next example is taken from word combinatorics. Assume that a list of binary strings $F_1,\ldots,F_k$ is given. These are considered as “forbidden factors”: this means that we want to construct a (long) string $X$ that does not have any of $F_i$ as a factor (i.e., none of $F_i$ is a substring of $X$). This may be possible or not depending on the list. For example, if we consider two strings $0$, $11$ as forbidden factors, every string of length $2$ or more has a forbidden factor (we cannot use zeros at all, and two ones are forbidden).

The more forbidden factors we have, the more chances that they block the growth in the sense that every sufficiently long string has a forbidden factor. Of course, not only the number of factors matters: e.g., if every forbidden factor contains a zero, then arbitrarily long strings of ones have no forbidden factors. However, now we are interested in quantitative results of the following type: if the number of forbidden factors of length $j$ is $a_j$, and the numbers $a_j$ are “not too big”, then there exists an arbitrarily long string without forbidden factors.

This question can be analyzed with many different tools, including the Lovász local lemma (see [8]) and Kolmogorov complexity. Using a complexity argument, Levin proved that if $a_j=O(2^{\alpha j})$ for some constant $\alpha<1$, then there exists a constant $M$ and an infinite sequence that does not contain forbidden factors of length greater than $M$. (See [9, Section 8.5] for Levin’s argument and other related results.) A nice sufficient condition was suggested by Miller [3]: we formulate the statement for an arbitrary alphabet size.

Proposition.

Consider an alphabet with $m$ letters. Assume that for each $j\ge2$ we have $a_j$ “forbidden” strings of length $j$. Assume that there exists some constant $x>0$ such that

$$\sum_{j\ge2}a_jx^j<mx-1.$$

Then there exist arbitrarily long strings that do not contain forbidden substrings.

Remarks. 1. We do not consider $j=1$, since this means that some letters are deleted from the alphabet.

2. By compactness the statement implies that there exists an infinite sequence with no forbidden factors.

3. The constant $x$ should be at least $1/m$, otherwise the right hand side is negative. This means that $a_j/m^j$ should be small, and this corresponds to our intuition ($a_j$ should be significantly less than $m^j$, the total number of strings of length $j$).

The original proof from [3] uses an ingenious potential function defined on strings: Miller shows that if its value is less than $1$, then one can add some letter preserving this property. It turned out (rather mysteriously) that exactly the same condition can be obtained by a completely different argument (following [2, 6]), so probably the inequality is more fundamental than it may seem! This argument is based on compression.

Proof.

Here is the idea. We start with an empty string and add randomly chosen letters to its right end. If some forbidden string appears as a suffix, it is immediately deleted. So forbidden strings may appear only as suffixes, and only for a short time. After this “backtracking” we continue adding new letters. (This resembles the famous “tetris game” when blocks fall down and then disappear under some conditions.)

We want to show that if this process is unsuccessful in the sense that after many steps we still have a short string, then the sequence of added random letters is compressible, so this cannot always happen, and therefore a long string without forbidden factors exists. Let us consider a “record” (log file) for this process that is a sequence of symbols “$+$” and “$+[s]$” (for each forbidden string $s$ we have a symbol, plus one more symbol without a string). If a letter was added and no forbidden string appears, we just add “$+$” to the record. If we have to delete some forbidden string $s$ after a letter was added, we write this string in brackets after the $+$ sign. Note that we do not record the added letters, only the deleted substrings. (It may happen that several forbidden suffixes appear; in this case we may choose any of them.)

Lemma.

At every stage of the process the current string and the record uniquely determine the sequence of random letters used.

Proof of the lemma.

Having this information, we can reconstruct the configuration going backwards. This reversed process has steps where a forbidden string is added (and we know which one, since it is written in brackets in the record), and also steps when a letter is deleted (and we know which letter is deleted, i.e., which random letter was added when moving forwards). ∎
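The process and the reconstruction from the lemma can be sketched as follows. This is an illustration under our own assumptions: the names `run_process` and `reconstruct_letters` are hypothetical, and a record entry is `None` for a plain “+” and the deleted string for a “+” followed by a bracketed string. The random letters are stored only so that the reconstruction can be checked.

```python
import random

def run_process(forbidden, alphabet, steps, rng):
    """Add random letters on the right; delete a forbidden suffix at once."""
    current, record, letters = "", [], []
    for _ in range(steps):
        letter = rng.choice(alphabet)
        letters.append(letter)           # kept only to verify the lemma
        current += letter
        # If several forbidden suffixes appear, any of them may be chosen.
        hit = next((f for f in forbidden if current.endswith(f)), None)
        if hit is not None:
            current = current[:-len(hit)]
        record.append(hit)               # None for "+", the string for "+[s]"
    return current, record, letters

def reconstruct_letters(current, record):
    """Run the process backwards using the current string and the record."""
    letters = []
    for entry in reversed(record):
        if entry is not None:
            current += entry             # put the deleted forbidden string back
        letters.append(current[-1])      # the last letter is the one added
        current = current[:-1]
    letters.reverse()
    return letters

if __name__ == "__main__":
    rng = random.Random(0)
    current, record, letters = run_process(["00", "111"], "01", 200, rng)
    print(reconstruct_letters(current, record) == letters)
```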

If after many (say, $T$) steps we still have a short current string, then the sequence of random letters can be described by the record (due to the Lemma; we ignore the current string part since it is short). As we will see, the record can be encoded with fewer bits than it should have been (i.e., less than $T\log m$ bits). Let us describe this encoding and show that it is efficient (assuming the inequality $\sum_{j\ge2}a_jx^j<mx-1$).

We use arithmetic encoding for the record. Arithmetic encoding for $M$ symbols starts by choosing positive reals $q_1,\ldots,q_M$ such that $q_1+\ldots+q_M=1$. Then we split the interval $[0,1]$ into parts of length $q_1,\ldots,q_M$ that correspond to these $M$ symbols. Adding a new symbol corresponds to splitting the current interval in the same proportion and choosing the right subinterval. For example, the sequence $(a,b)$ corresponds to the $b$th subinterval of the $a$th interval; this interval has length $q_aq_b$. The sequence $(a,b,\ldots,c)$ corresponds to an interval of length $q_aq_b\cdots q_c$ and can be reconstructed given any point of this interval (assuming $q_1,\ldots,q_M$ are fixed); to specify some binary fraction in this interval we need at most $-\log(q_aq_b\cdots q_c)+O(1)$ bits, i.e., $-\log q_a-\log q_b-\ldots-\log q_c+O(1)$ bits.
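The interval splitting can be illustrated with exact rational arithmetic. The sketch below is a toy version under our own assumptions (the names `encode_interval`, `pick_binary_point` and `decode` are hypothetical, and real arithmetic coders work incrementally with bounded precision). It maps a sequence of symbols to its subinterval, picks a binary fraction inside it, and decodes the sequence from that point.

```python
import math
from fractions import Fraction

def encode_interval(sequence, weights):
    """weights: list of (symbol, weight) pairs in a fixed order, weights sum to 1.
    Returns (low, length) of the subinterval of [0, 1) for the sequence."""
    low, length = Fraction(0), Fraction(1)
    for s in sequence:
        offset = Fraction(0)
        for symbol, weight in weights:
            if symbol == s:
                break
            offset += weight
        else:
            raise ValueError(f"unknown symbol {s!r}")
        low += length * offset
        length *= weight                 # lengths multiply along the sequence
    return low, length

def pick_binary_point(low, length):
    """A binary fraction with t bits inside [low, low + length), where
    t is about -log2(length) plus a constant."""
    t = 0
    while Fraction(1, 2 ** t) > length / 2:
        t += 1
    return Fraction(math.ceil(low * 2 ** t), 2 ** t), t

def decode(point, weights, count):
    """Recover the first `count` symbols from any point of the interval."""
    out, low, length = [], Fraction(0), Fraction(1)
    for _ in range(count):
        offset = Fraction(0)
        for symbol, weight in weights:
            if point < low + length * (offset + weight):
                out.append(symbol)
                low += length * offset
                length *= weight
                break
            offset += weight
    return out

if __name__ == "__main__":
    q = [("a", Fraction(1, 2)), ("b", Fraction(1, 4)), ("c", Fraction(1, 4))]
    low, length = encode_interval("ab", q)
    point, bits = pick_binary_point(low, length)
    print(low, length, point, bits, decode(point, q, 2))
```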

Now let us apply this technique to our situation. For $+$ without brackets we use $\log(1/p_0)$ bits, and for $+[s]$ where $s$ is of length $j$, we use $\log(a_j/p_j)$ bits. Here $p_0,p_2,p_3,\ldots$ are some positive reals to be chosen later; we need $p_0+\sum_{j\ge2}p_j=1$. Indeed, we may split $p_j$ into $a_j$ equal parts (of size $p_j/a_j$) and use these parts as the weights in the description of arithmetic coding above; splitting adds $\log a_j$ to the code length for strings of length $j$.

To bound the total number of bits used for encoding the record, we perform amortised accounting and show that the average number of bits per letter is less than $\log m$. Note that the number of letters is equal to the number of $+$ signs in the record. Each $+$ without brackets increases the length of the string by one letter, and we want to use less than $\log m-c$ bits for its encoding, where $c$ is some constant saying how much is saved as a reserve for amortized analysis. And $+[s]$ for a string $s$ of length $j$ decreases the length by $j-1$, so we want to use less than $\log m+c(j-1)$ bits (using the reserve).

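So we need:

$$\log\frac{1}{p_0}<\log m-c;\qquad \log\frac{a_j}{p_j}<\log m+c(j-1),\quad j=2,3,\ldots$$

together with

$$p_0+\sum_{j\ge2}p_j=1.$$

Technically it is easier to use non-strict inequalities in the first two cases and a strict one in the last case (and then increase the $p_i$ a bit):

$$\log\frac{1}{p_0}\le\log m-c;\qquad \log\frac{a_j}{p_j}\le\log m+c(j-1),\quad j=2,3,\ldots;\qquad p_0+\sum_{j\ge2}p_j<1.$$

Then for a given $c$ we take the minimal possible $p_i$:

$$p_0=\frac{2^{c}}{m},\qquad p_j=\frac{a_j2^{-c(j-1)}}{m},$$

and it remains to show that the sum is less than $1$ for a suitable choice of $c$. Let $x=2^{-c}$, then the inequality can be rewritten as

$$\frac{1}{mx}+\sum_{j\ge2}\frac{a_jx^{j-1}}{m}<1$$

or

$$\sum_{j\ge2}a_jx^j<mx-1,$$

and this is our assumption.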

Now we see the role of this mystical $x$ in the condition: it is just a parameter that determines the constant $c$ used for the amortised analysis. ∎
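The link between the condition on $x$ and the weights used by the encoding can be checked numerically. The following sketch is an illustration only; the function names `miller_condition` and `code_weights` and the dictionary representation of the counts $a_j$ are our assumptions. It computes the minimal weights $p_0=1/(mx)$ and $p_j=a_jx^{j-1}/m$ and confirms that their sum is below $1$ exactly when $\sum a_jx^j<mx-1$.

```python
import math

def miller_condition(a, m, x):
    """a maps a length j >= 2 to the number a_j of forbidden strings of length j.
    Checks sum_j a_j x^j < m x - 1."""
    return sum(count * x ** j for j, count in a.items()) < m * x - 1

def code_weights(a, m, x):
    """Minimal weights from the proof with x = 2^(-c):
    p_0 = 1 / (m x) and p_j = a_j x^(j-1) / m."""
    p0 = 1 / (m * x)
    p = {j: count * x ** (j - 1) / m for j, count in a.items()}
    return p0, p

if __name__ == "__main__":
    a, m, x = {2: 1}, 3, 0.5          # one forbidden string of length 2, 3 letters
    p0, p = code_weights(a, m, x)
    total = p0 + sum(p.values())
    print(miller_condition(a, m, x), total < 1)
    # Multiplying the sum of weights by m*x gives 1 + sum_j a_j x^j.
    print(math.isclose(m * x * total, 1 + sum(n * x ** j for j, n in a.items())))
```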

Acknowledgement. Author thanks his LIRMM colleagues, in particular Pascal Ochem and Daniel Gonçalves, as well as the participants of Kolmogorov seminar in Moscow.

References

- [1] N. Alon, J.H. Spencer, The Probabilistic Method, Wiley, 2004.

- [2] D. Gonçalves, M. Montassier, A. Pinlou, Entropy compression method applied to graph colorings, https://arxiv.org/pdf/1406.4380.pdf.

- [3] J. Miller, Two notes on subshifts, Proceedings of the AMS, 140, 1617-1622 (2012).

- [4] R. Moser, A constructive proof of the Lovász local lemma, https://arxiv.org/abs/0810.4812.

- [5] R. Moser, G. Tardos, A constructive proof of the general Lovász local lemma, Journal of the ACM, 57(2), 11.1–11.15 (2010).

- [6] P. Ochem, A. Pinlou, Application of Entropy Compression in Pattern Avoidance, The Electronic Journal of Combinatorics, 21:2, paper P2.7 (2014).

- [7] A. Rumyantsev, A. Shen, Probabilistic Constructions of Computable Objects and a Computable Version of Lovász Local Lemma, Fundamenta Informaticae, 132, 1–14 (2013), see also https://arxiv.org/abs/1305.1535

- [8] A. Rumyantsev, M. Ushakov, Forbidden substrings, Kolmogorov complexity and almost periodic sequences, STACS 2006 Proceedings, Lecture Notes in Computer Science, 3884, 396–407, see also https://arxiv.org/abs/1009.4455.

- [9] A. Shen, V.A. Uspensky, N. Vereshchagin, Kolmogorov complexity and algorithmic randomness, to be published by the AMS, www.lirmm.fr/~ashen/kolmbook-eng.pdf. (Russian version published by MCCME (Moscow), 2013.)

Appendix

There is one more sufficient condition for the existence of arbitrarily long sequences that avoid forbidden substrings. Here it is. If the power series for

$$\frac{1}{1-mx+a_2x^2+a_3x^3+\ldots}$$

(where $a_j$ is the number of forbidden strings of length $j$) has all positive coefficients, then there exist arbitrarily long strings without forbidden substrings. Moreover, in this case the number of $n$-letter strings without forbidden substrings is at least $b_n$, where $b_n$ is the $n$th coefficient of this inverse series.

(Footnote: A more general algebraic fact about ideals in a free algebra with $m$ generators is sometimes called Golod theorem; N. Rampersad in https://arxiv.org/pdf/0907.4667.pdf gives a reference to Rowen’s book (L. Rowen, Ring Theory, vol. II, Pure and Applied Mathematics, Academic Press, Boston, 1988, Lemma 6.2.7). This more general statement concerns ideals generated not necessarily by strings (products of generators), but by arbitrary uniform elements. The original paper is: Е.С. Голод, И.Р. Шафаревич, О башне полей классов, Известия АН СССР, серия математическая, 1964, 28:2, 261–272, http://www.mathnet.ru/links/f17df1a72a73e5e73887c19b7d47e277/im2955.pdf.)

To prove this result, consider the number $s_n$ of allowed strings of length $n$. It is easy to see that

$$s_{n+1}\ge ms_n-a_2s_{n-1}-a_3s_{n-2}-\ldots-a_{n+1}s_0.$$

Indeed, we can add each of $m$ letters to each of $s_n$ strings of length $n$, and then we should exclude the cases where there is a forbidden string at the end. This forbidden string may have length $2$, then there are at most $a_2s_{n-1}$ possibilities, or length $3$, then there are at most $a_3s_{n-2}$ possibilities, etc. (Note that $s_0=1$ and $s_1=m$; note also that we can get a string with two forbidden suffixes, but this is OK, since we have an inequality.) These inequalities can be rephrased as follows: the product

$$(1+s_1x+s_2x^2+\ldots)(1-mx+a_2x^2+a_3x^3+\ldots)$$

has only non-negative coefficients. Denote the first factor by $S(x)$ and the second factor by $A(x)$; if

$$\frac{1}{A(x)}=b_0+b_1x+b_2x^2+\ldots$$

has only positive coefficients $b_0,b_1,\ldots$ (as our assumption says), then $S(x)=\bigl(S(x)A(x)\bigr)\cdot\frac{1}{A(x)}$ is a product of two series with non-negative coefficients. The first of them ($S(x)A(x)$) starts with $1$, so the $n$th coefficient of the product, i.e., $s_n$, is not less than the $n$th coefficient of the second one, i.e., $b_n$.
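The bound can be checked on a small example. The sketch below is an illustration, not part of the proof; the names `inverse_series` and `count_allowed` and the choice of the single forbidden binary string `000` are our assumptions. It computes the coefficients $b_n$ of the inverse series from the recurrence obtained by multiplying the two series, and compares them with a brute-force count of allowed strings.

```python
from itertools import product

def inverse_series(m, a, terms):
    """First `terms` coefficients b_n of 1 / (1 - m x + sum_j a[j] x^j).
    Comparing coefficients of x^n in (denominator) * (inverse) = 1 gives
    b_n = m b_{n-1} - sum_{j=2..n} a_j b_{n-j} for n >= 1, and b_0 = 1."""
    b = []
    for n in range(terms):
        if n == 0:
            b.append(1)
        else:
            b.append(m * b[n - 1]
                     - sum(a.get(j, 0) * b[n - j] for j in range(2, n + 1)))
    return b

def count_allowed(alphabet, forbidden, n):
    """Brute-force count of strings of length n without forbidden substrings."""
    count = 0
    for letters in product(alphabet, repeat=n):
        word = "".join(letters)
        if not any(f in word for f in forbidden):
            count += 1
    return count

if __name__ == "__main__":
    # Binary alphabet, one forbidden string of length 3: denominator 1 - 2x + x^3.
    b = inverse_series(2, {3: 1}, 8)
    s = [count_allowed("01", ["000"], n) for n in range(8)]
    print(b)
    print(s)
    print(all(sn >= bn > 0 for sn, bn in zip(s, b)))
```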

Surprisingly, this condition is closely related to the one considered above, as shown by Dmitry Piontkovsky (his name has a typo in the publication: Д.И. Пиотковский, О росте градуированных алгебр с небольшим числом определяющих соотношений, УМН, 1993,48:3(291), 199–200, http://www.mathnet.ru/links/6034910939adb12fff0cd8fb9745dfc8/rm1307.pdf):

Proposition.

The series

$$\frac{1}{1-mx+a_2x^2+a_3x^3+\ldots}$$

has all positive coefficients if and only if the series in the denominator has a root on the positive part of the real line.

Proof.

Assume that the series in the denominator does not have a root, but the inverse series has all positive coefficients. In fact, non-negative coefficients are enough to get a contradiction. For a series with all non-negative coefficients, or with finitely many negative coefficients, the radius of convergence is determined by the behavior of the sum on the real line: when the argument approaches the convergence radius, the sum of the series goes to infinity. Now we have the product of two series

$$(1-mx+a_2x^2+a_3x^3+\ldots)(b_0+b_1x+b_2x^2+\ldots)$$

that is equal to $1$. One of these series should have a finite convergence radius, otherwise both are everywhere defined and the product is everywhere $1$, but both are large for large $x$. Look at the minimal convergence radius (of the two); one of the series goes to infinity near the corresponding point on the real line, so the other one converges to zero, so it has a bigger convergence radius and reaches zero on the real line. Finally, note that only the first factor (the denominator) may have a zero, since the other one has all non-negative coefficients.

Now assume that the denominator has a zero; we have to prove that the inverse series has only positive coefficients. In general, the following result is true (D. Piontkovsky): if the series

$$A(x)=c_0+c_1x+c_2x^2+c_3x^3+\ldots$$

has $c_0=1$, and $c_2,c_3,\ldots\ge0$, and for some positive $x$ this series converges to $0$, then the inverse series has all positive coefficients. To prove this statement, let $x_0$ be the root, so $A(x_0)=0$. Recall the long division process that computes the inverse series. It produces the sequence of remainders: the first is $1$; then we subtract from the $k$th remainder

$$R_k(x)=r_{k,k}x^k+r_{k,k+1}x^{k+1}+\ldots$$

the product $r_{k,k}x^kA(x)$ to cancel the first term, and get the next remainder $R_{k+1}$. By induction we prove that for each remainder $R_k$:

- $R_k(x_0)=1$;

- all the coefficients $r_{k,k},r_{k,k+1},\ldots$, except the first one, are negative or zeros;

- the first coefficient $r_{k,k}$ is positive.

The first claim is true, since it was true for $R_{k-1}$ by the induction assumption and we subtract a series that equals zero at $x_0$.

The second claim: by the induction assumption the first coefficient in $R_{k-1}$ was positive, so we subtract a series with positive first and non-negative third, fourth, etc. coefficients. The first term cancels the first term in $R_{k-1}$, the second term does not matter now, but all the subsequent coefficients are negative or zeros, since we subtract non-negative coefficients from non-positive ones.

Finally, the third claim is the consequence of the first two: if the sum is positive (equal to $1$) and all the terms except one are non-positive, then the remaining term is positive.

Therefore, all coefficients in the inverse series are positive. ∎

Note that we have shown that if all coefficients of the series are non-negative, then they are positive. Also note that we get a bit stronger result compared to the entropy argument where we required the series to reach a negative value (now the zero value is enough).