Compressing network populations with modal networks reveals structural diversity

Abstract

Analyzing relational data consisting of multiple samples or layers involves critical challenges: How many networks are required to capture the variety of structures in the data? And what are the structures of these representative networks? We describe efficient nonparametric methods derived from the minimum description length principle to construct the network representations automatically. The methods input a population of networks or a multilayer network measured on a fixed set of nodes and output a small set of representative networks together with an assignment of each network sample or layer to one of the representative networks. We identify the representative networks and assign network samples to them with an efficient Monte Carlo scheme that minimizes our description length objective. For temporally ordered networks, we use a polynomial time dynamic programming approach that restricts the clusters of network layers to be temporally contiguous. These methods recover planted heterogeneity in synthetic network populations and identify essential structural heterogeneities in global trade and fossil record networks. Our methods are principled, scalable, parameter-free, and accommodate a wide range of data, providing a unified lens for exploratory analyses and preprocessing large sets of network samples.

I Introduction

A common way to measure a network is to gather multiple observations of the connectivity of the same nodes. Examples include the mobility patterns of a particular group of students encoded as a longitudinal set of co-location networks eagle2009inferring ; lehmann2019fundamental , measurements of connectivity among the same brain regions for different individuals sporns2010networks , or the observation of protein-protein relationships through a variety of different interaction mechanisms stark2006biogrid . These measurements can be viewed as a multilayer network Kivela14 consisting of one layer for each measurement of all links between the nodes. For generality, we consider them as a population of networks—a set of independent network measurements on the same set of nodes, either over time or across systems with consistent, aligned node labels. There often are regularities among such collections of measurements, but each sample may differ substantially from the next. Summarizing these measurements with robust statistical analyses can separate regularities from noise and simplify downstream analyses such as network visualization or regression butts2003network ; Newman18b ; young2020bayesian ; Peixoto18 ; priebe2015statistical ; arroyo2021inference ; tang2018connectome ; lunagomez2020modeling ; wang2019common ; young2021reconstruction .

Most statistical methods for summarizing populations of networks share a similar approach. They model all the members of a population as realizations of a single representative network banks1994metric ; butts2003network ; Newman18a ; Peixoto18 ; le2018estimating ; lunagomez2020modeling , which can be retrieved by fitting the model in question to the observed population. However, the strong assumption that a single “modal” network best explains the observed populations can lead to a poor representation of the data at hand la2016gibbs ; YKN22Clustering . For instance, accurately modeling a population of networks recording face-to-face interactions between elementary school pupils requires at least two representative networks if the data include networks observed during class and recess stehle2011high . Modeling the measurements with a single network will most likely neglect essential variations in the pupil’s face-to-face interactions, leading to similar oversights from summarizing a multimodal probability distribution with only its mean.

Recent research has examined related problems and led to, for example, methods for detecting abrupt regime changes in temporal series of networks peel2015detecting ; peixoto2018change , pooling information across subsets of layers of multiplex networks de2015structural , and embedding nodes in common subspaces across network layers nielsen2018multiple ; wang2019joint ; arroyo2021inference . Several recent contributions have addressed the problem of summarizing populations of networks when multiple distinct underlying network representations are needed, using mixtures of parametric models stanley2016clustering ; signorelli2020model ; mantziou2021 ; yin2022finite ; YKN22Clustering , latent space models durante2017nonparametric , or generative models based on ad hoc graph distance measures la2016gibbs .

These methods cluster network populations with good performance but have some significant drawbacks. None of the methods discussed, except Ref. la2016gibbs , outputs a single sparse representative network for each cluster but requires handling ensembles of network structures, making downstream applications such as network visualization or regression cumbersome. Most of these methods also require potentially unrealistic modeling assumptions about the structure of the clusters. For example, that stochastic block models or random dot product graphs can model all network structures in the clusters. Specifying a generative model for the modal structures also has the downside of often requiring complex and time-consuming methods to perform the within-cluster estimation. Perhaps most critically, existing approaches require either specifying the number of modes ahead of time or resorting to regularization with ad hoc penalties mantziou2021 ; YKN22Clustering ; de2015structural ; durante2017nonparametric not motivated directly by the clustering objective or approximative information criteria la2016gibbs ; signorelli2020model ; yin2022finite poorly adapted to network problems. Overall, current approaches for clustering network populations do not provide a principled solution for model selection and often demand extensive tuning and significant computational overhead from fitting the model to several choices of the number of clusters.

Here we introduce nonparametric inference methods which overcome these obstacles and provide a coherent framework through which to approach the problem of clustering network populations or multiplex network layers, while extracting a representative modal network to summarize each cluster. Our solution employs the minimum description length principle, which allows us to derive an objective function that favors parsimonious representations in an information-theoretic sense and selects the number and composition of representative modal networks automatically from first principles. We first develop a fast Monte Carlo scheme for identifying the configuration of measurement clusters and modal networks that minimizes our description length objective. We then extend our framework to account for special cases of interest: bipartite/directed networks and contiguous clusters containing all ordered networks from the earliest to the latest. We show how to solve the latter problem in polynomial run time with a dynamic program PAY2022 . We demonstrate our methods in applications involving synthetic and real network data, finding that they can effectively recover planted network modes and clusters even with considerable noise. Our methods also provide a concise and meaningful summary of real network populations from applications in global trade and macroevolutionary research.

II Methods

II.1 Minimum description length objective

For our clustering method, we rely on the minimum description length (MDL) principle: the best model among a set of candidate models is the one that permits the greatest compression—or shortest description—of a dataset rissanen1978 . The MDL principle provides a principled criterion for statistical model selection and has consequently been employed in various applications ranging from regression to time series analysis to clustering hansen2001mdl . A large body of research uses the MDL principle for clustering data, including studies on MDL-based methods for mixture models that accommodate continuous georgieva2011cluster ; tabor2014cross and categorical data li2004entropy , as well as methods that are based on more general probabilistic generative models narasimhan2005q . The MDL approach has also been applied to complex network data, most notably for community detection algorithms to cluster nodes within a network Rosvall07 ; Peixoto14a ; kirkley2022spatial and for decomposing graphs into subgraphs koutra2014vog ; wegner2014subgraph ; bloem2020large ; young2021hypergraph ; bouritsas2021partition , but also for clustering entire partitions of networks Kirkley22Reps . Our methods are similar in spirit to the one presented in ref. Kirkley22Reps for identifying representative community divisions among a set of plausible network partitions. Both approaches involve transmitting first a set of representatives and then the dataset itself by describing how each partition or network differs from its corresponding representative. However, the methods differ substantially in their details since they address fundamentally different questions.

We consider an experiment in which the initial data are a population of networks consisting of undirected, unweighted networks on a set of labeled nodes. The networks record, for instance, the co-location patterns among kids in a class of students over class periods.

We aim to summarize these data with modal networks (also undirected and unweighted) on the same set of nodes, with associated clusters of networks , where comprises networks that are similar to the mode . This summary would allow researchers to, for instance, perform all downstream network analyses on a small set of representative networks—the modes—instead of a large set of networks likely to include measurement errors and from which it is difficult to draw valid conclusions.

We assume for simplicity of presentation that all networks and have no self- or multi-edges, although we can account for them straightforwardly. While can be fixed if desired, we assume that it is unknown and must be determined from regularities in the data.

To select among all the possible modes and assignments of networks to clusters, we employ information theory and construct an objective function that quantifies how much information is needed to communicate the structure of the network population to a receiver. Clustering networks in groups of mostly similar instances allows us to communicate the population efficiently in three steps: first the modes, then the clusters, and finally the networks themselves as a series of small modifications to the modes . The MDL principle tells us that any compression achieved in this way reveals modes and clusters that are genuine regularities of the population rather than noise rissanen1978 .

We first establish a baseline for the code length: the number of bits needed to communicate without using any regularities. One way to do this is to first communicate the parameters of the population at a negligible information cost (size , number of nodes , and the total number of edges in all networks of ) and then transmit the population directly. There are possible edge positions in each of the undirected networks in , or possible edge positions for the whole population. So these networks can be configured in ways. It thus takes approximately

| (1) |

bits to transmit these networks to a receiver. (We use the convention for brevity.) Applying Stirling’s approximation , we obtain

| (2) |

written in terms of the binary Shannon entropy

| (3) |

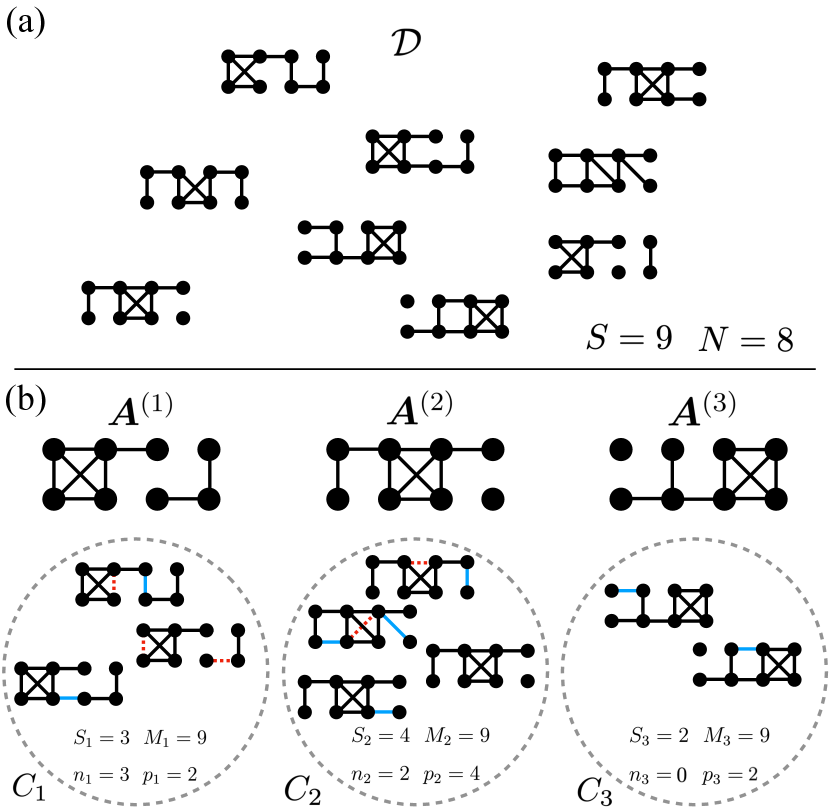

In practice, we expect to need many fewer bits than to communicate , because the population of networks will often have regularities. We propose a multi-part encoding that identifies such regularities by grouping similar networks in clusters with modes , which proceeds as follows. First, we send a small number of modes in their entirety, which ideally captures most of the heterogeneity in the population . This step is costly but will save us information later. We then send the network clusters by transmitting the cluster label of each network . Finally, we transmit the edges of networks in each cluster, using the already transmitted modes as a starting point to compress this part of the encoding significantly. The expected code length can be quantified using simple combinatorial expressions, and the configuration of modes and clusters that minimizes the total expected code length—the MDL configuration—provides a succinct summary of the data . Figure 1 summarizes the transmission process and the individual description length contributions.

The expected length of this multi-part encoding is the sum of the description length of each part of the code that has significant communication costs. The modes are the first objects that incur such costs. Following the same reasoning as before, we denote the number of edges in mode as and conclude that we can transmit the positions of the occupied edges in mode using approximately

| (4) |

bits, where the second expression results from a Stirling approximation as in Eq. (2). We can therefore transmit all the modes with a total code length of

| (5) |

bits.

The next step is to transmit the cluster label of each network in . For this part of the code, we first send the number of networks in each cluster at a negligible cost and then specify a particular clustering compatible with these constraints. The multinomial coefficient gives the total number of possible combinations of these cluster labels. The information content of this step is thus

| (6) |

where we again use the Stirling approximation and where

| (7) |

is the Shannon entropy of a distribution .

Finally, we transmit the network population by sending the differences between the networks in each cluster and their associated mode. To calculate the length of this part of the code, we focus on a particular cluster and count the number of times we will have to remove an edge from the mode when specifying the structure of networks in its cluster using as a reference. We call these edges false negatives, and count them as

| (8) |

where we interpret and as sets of edges, so the summand is the number of edges in mode that are not in the network . Similarly, we also require the number of edges that occur in the networks of cluster but not in the mode—the number of false positives,

| (9) |

Like the cluster sizes and edge counts per cluster , the pairs can be communicated to the receiver at a comparatively negligible cost, and we ignore them in our calculations.

To estimate the information content of this part of the transmission, we count the number of configurations of false-negative and false-positive edges in . Focusing first on the false negatives—the edges that must be deleted—we count that of the edges in the copies of the mode of cluster , will be false-negative edges that can be configured in ways. Similarly, using the shorthand to denote the unoccupied pairs of nodes in the mode , there are locations in which we must place false-positive edges, for a total of possible configurations of false-positive edges. The total information content required for transmitting the locations of the false-negative and false-positive edges of every network in cluster is thus

| (10) |

which we approximate as

| (11) |

Summing over all clusters,

| (12) |

we obtain the total information content of the final step in the transmission process.

We obtain the total description length by adding the contributions of Eqs. (5), (6), and (12), as

| (13) |

This objective function allows for efficient optimization because we can express it as a sum of the cluster-level description lengths

| (14) |

giving

| (15) |

Equations (4) and (10) provide explicit expressions for and .

Equation (15) gives the total description length of the data under our multi-part transmission scheme. By minimizing this objective function we identify the best configurations of modes and clusters . A good configuration will allow us to transmit a large portion of the information in through the modes alone. If we use too many modes, the description length will increase as these are costly to communicate in full. And if we use too few, the description length will also increase because we will have to send lengthy messages describing how mismatched networks and modes differ. Hence, through the principle of parsimony, Eq. (15) favors descriptions with the number of clusters as small as possible but not smaller.

This framework can be modified to accommodate populations of bipartite or directed networks. For the bipartite case, we make the transformations and , where and are the numbers of nodes in each of the two groups. This modification reduces the number of available positions for potential edges. Similarly, for the directed case, we can make the transformations and , which increases the number of available edge positions.

II.2 Optimization and model selection

Since Eq. (15) has large support, is not convex, and has many local optima, a stochastic optimization method is a natural choice for finding reasonable solutions rapidly. We exploit the objective function’s decoupling into a sum over clusters and implement an efficient merge-split Monte Carlo method for the search peixoto2020merge ; Kirkley22Reps . The method greedily optimizes using moves that involve merging and splitting clusters of networks .

Our merge-split algorithm minimizes the description length in Eq. (15) by performing one of the following moves selected uniformly at random and accepting the move as long as it results in a reduction of the description length (15):

-

1.

Reassignment: Pick a network at random and move it from its current cluster to the cluster that results in the greatest decrease in the description length. Compute the modes and that minimize the cluster-level description lengths and using Eq. (14) and the procedure described below, conditioned on the networks in and .

-

2.

Merge: Pick two clusters and at random and merge them into a single cluster . Compute the mode that minimizes the cluster-level description length using Eq. (14) and the procedure described below, conditioned on the networks in . Finally, compute the change in the description length that results from this merge.

-

3.

Split: Pick a cluster at random and split it into two clusters and using the following -means algorithm. First assign every network in to the cluster or at random. Refine the assignments by successively moving every network to the cluster or that results in a greater decrease in the description length and compute the modes and that minimize the cluster-level description lengths and , conditioned on the networks now in and . After convergence of the -means style algorithm, compute the change in the description length that results from this split of cluster .

-

4.

Merge-split: Pick two clusters at random, merge them as in move 2, then perform move 3 on this merged cluster. These two moves in direct succession help reassign multiple networks simultaneously; their addition to the move set improves the algorithm’s performance.

Since these moves modify only one or two clusters, the change in the global description length can be recomputed quickly as updates to the cluster-level description lengths in Eq. (14). Every time a mode is needed for these calculations, we use the mode that minimizes the cluster-level description length in Eq. (14). To find this optimal mode efficiently, we start with the “complete” mode

| (16) |

with an edge between nodes and if at least one network in the cluster contains the edge. We then greedily remove edges from in increasing order of occurrence in the networks of —starting first with edges only found in a single network and going up from there—and update the cluster-level description length as we go. After removing all edges from , the mode giving the lowest cluster-level description length is chosen as the mode for the cluster. This approach is locally optimal under a few assumptions about the sparsity of the networks and the composition of edges in the clusters (see Appendix A for details).

We run the algorithm by starting with initial clusters (this choice has a negligible effect on the results, see Appendix B) and stop when a specified number of consecutive moves all result in rejections, indicating that the algorithm has likely converged. The worst-case complexity of this algorithm is roughly (the worst case is a split move right at the start, see Appendix B). Appendix A details the entire algorithm, and Appendix B provides additional tests of the algorithm, such as its robustness for different choices of .

To diagnose the quality of a solution, we compute the inverse compression ratio

| (17) |

where is the minimum value of over all configurations of , given by the algorithm after termination, and is given in Eq. (2). Equation (17) tells us how much better we can compress the network population by using our multi-step encoding than by using the naïve fixed-length code to transmit all networks individually. If , our model compresses the data , and if , it does not because we waste too much information in the initial transmission steps.

II.3 Contiguous clusters

In Sec. II.2, we described a merge-split Monte Carlo algorithm to identify the clusters and modes that minimize the description length in Eq. (15). This algorithm samples the space of unconstrained partitions of the network population . However, in many applications, particularly in longitudinal studies, we may only be interested in constructing contiguous clusters, where each cluster is now a set of networks where adjacent indexes indicate contiguity of some form (temporal, spatial, or otherwise). Such constraints reduce the space of possible clusterings drastically, and we can minimize the description length exactly (up to the greedy heuristic for the mode construction) using a dynamical program jackson2005algorithm ; bellman2013dynamic ; PAY2022 .

Before we introduce an optimization for this problem, we require a small modification to Eq. (14) for the cluster-level description length to accurately reflect the constrained space of ordered partitions that we are considering. In our derivation of the description length, we assumed that the receiver knows the sizes of the clusters in . If we transmit these sizes in the order of the clusters they describe, the receiver will also know the exact clusters , since knowing the sizes is equivalent to knowing the cluster boundaries in this contiguous case. We can therefore ignore the term in Eq. (14) that tells us how much information is required to transmit the exact cluster configuration. This modification results in a new, shorter description length

| (18) |

and a new global objective

| (19) |

Since the objective in Eq. (19) is a sum of independent cluster-level terms, minimizing this description length for contiguous clusters admits a dynamic programming algorithm solution jackson2005algorithm ; bellman2013dynamic ; PAY2022 that can identify the true optima in polynomial time.

The algorithm is constructed by recursing on , the minimum description length of the first networks in according to Eq. (19). Since the objective function decomposes as a sum over clusters, for any , the MDL can be calculated as

| (20) |

where we set the base case to and define as the description length of the cluster of networks with indices , according to Eq. (18) with the mode computed with the greedy procedure described in Sec. II.2. Once we recurse to , we have found the MDL of our complete dataset, and keeping tab of the minimizing in Eq. (20) for every allows us to reconstruct the clusters.

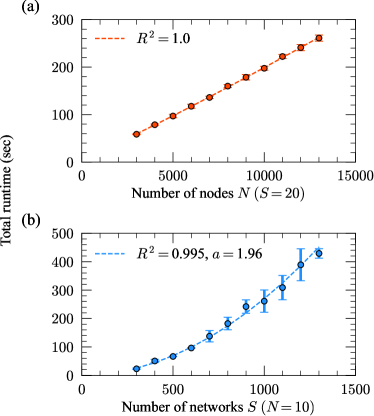

In practice, the recursion can be implemented from the bottom up, starting with , then , and so on. The computational bottleneck for calculating is finding the modes of a cluster times for each evaluation of Eq. (20) (once for each ), leading to an overall complexity for this step. Summing over , the overall time complexity of the dynamic programming algorithm is , which we verify numerically in Appendix B.

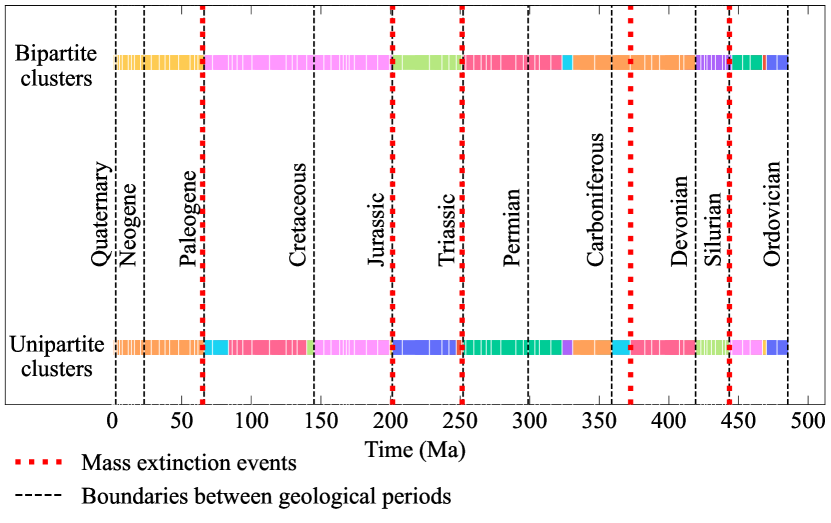

III Results

We test our methods on a range of real and synthetic example network populations. First, we show that our algorithms can recover synthetically generated clusters and modes with high accuracy despite considerable noise levels. Applied to worldwide networks of food imports and exports, we find a strong compression that uses the difference between categories of products and the locations in which they are produced. We then apply our method for contiguous clustering of ordered network populations to a set of networks representing the fossil record from ordered geological stages in the last 500 million years rojas_multiscale_2021 . We examine bipartite and unipartite representations of these systems and find close alignment between our inferred clusters and known global biotic transitions, including those triggered by mass extinction events.

III.1 Reconstruction of synthetic network populations

To demonstrate that the methods presented in Secs. II.2 and II.3 can effectively identify modes and clusters in network populations, we test their ability to recover the underlying modes and clusters generated from the heterogeneous population model introduced in ref. YKN22Clustering . We examine the robustness of these methods under varying noise levels that influence the similarity of the generated networks with the cluster’s mode.

The generative model in YKN22Clustering supposes (using different notation) that we are given modes as well as the cluster assignments of the networks . Each network is generated by first taking each edge independently and adding it to with probability (the true-positive rate). Then, each of possible edges absent from is added to with probability (the false-positive rate). After performing this procedure for all clusters, the end result is a heterogeneous population of networks with underlying modes, with noise in the networks surrounding each mode determined by the rates and . The higher the true-positive rate and the lower the false-positive rate , the closer the networks in cluster resemble their corresponding mode .

Employing Bayesian inference of the modes and cluster assignments as in ref. YKN22Clustering involves adding prior probability distributions over the modes and cluster assignments to the heterogeneous network model YKN22Clustering . With a specific choice of priors on the modes and cluster sizes, Eq. (15) is precisely the equation giving us the Maximum A Posteriori (MAP) estimators of and in this model. We defer the details of this correspondence to Appendix D.

For our experiments, we use two modes, mode 1 and mode 3 from Fig. 1, as the planted modes we aim to recover. To provide a single intuitive parameter quantifying the noise level in the generative model, we choose the true- and false-positive rates to satisfy for each run. Viewing the networks as binary adjacency matrices, the parameter corresponds to the probability of flipping entries of the matrix from 0 to 1 and vice-versa when constructing a network from its assigned cluster. We denote the parameter as the “flip probability” to emphasize this interpretation (same formulation as in ref. YKN22Clustering ). A flip probability corresponds to clusters of networks identical to the cluster modes, and a flip probability of corresponds to completely random networks with no clustering in the population. We thus expect it to be easy to recover the planted modes and clusters for , and the problem becomes more and more difficult as we approach .

We run three separate recovery experiments to test both the unconstrained and contiguous description length objectives in Eq. (15) and Eq (19), respectively. For the unconstrained objective, in each run we generate a population of networks from the model described above, with each network generated from either mode 1 or mode 3 at random with equal probability. We then identify the modes and clusters that minimize the objective in Eq. (15) using the merge-split algorithm detailed in Sec. II.2 and Appendix A. For the recovery of contiguous clusters, in one experiment we generate consecutive networks from each mode so that the population consists of adjacent contiguous clusters. And in another experiment, we generate networks from mode 1, networks from mode 3, and repeat this so that there are adjacent contiguous clusters of the networks generated from the two distinct modes. For these two experiments, we run the dynamic programming algorithm detailed in Sec. II.3 to identify the modes and clusters that minimize the objective in Eq. (19). In all three experiments, we generate a population of networks, each constructed from its corresponding mode using the single flip probability to introduce true- and false-positive edges.

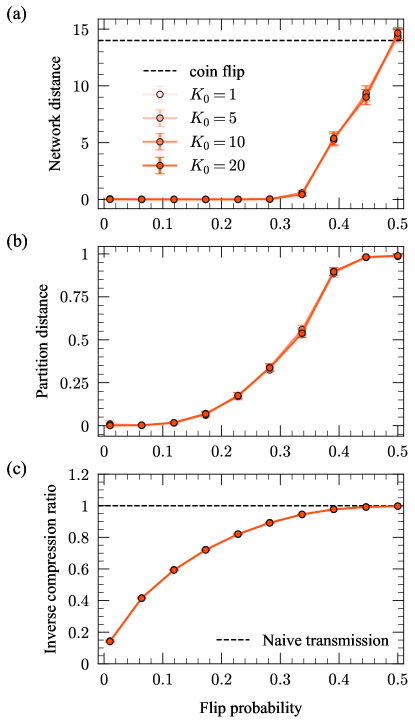

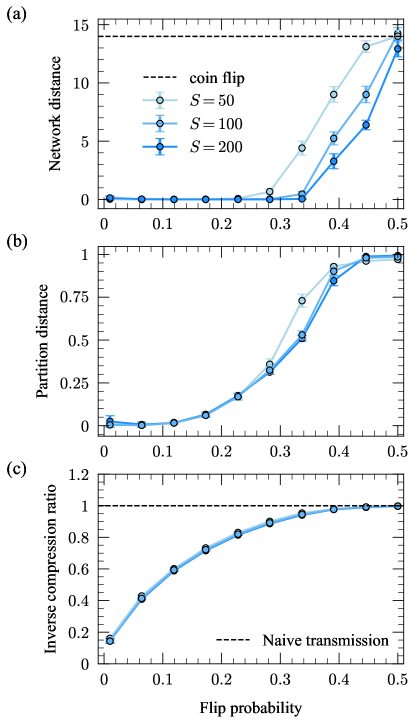

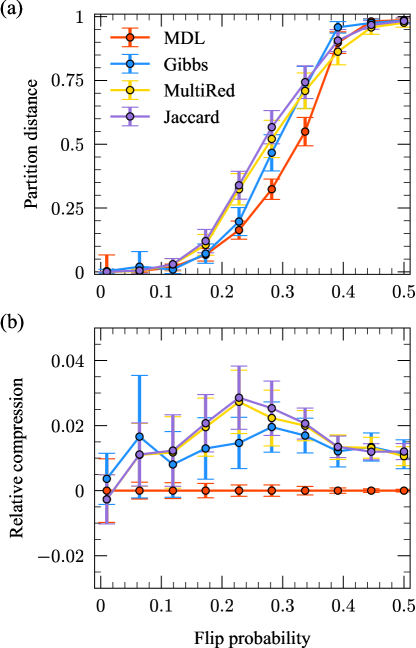

To quantify the mode recovery error, we use the network distance quantified by the average Hamming distance between the inferred modes and the planted modes . As both of our algorithms automatically select the optimal number of clusters , the number of modes we infer can differ from the true number ( or , depending on the experiment). In each experiment, we therefore choose the inferred modes in with the largest corresponding clusters and compute the average Hamming distance between these and the true modes in . (Since there are ways to choose the inferred mode labels, we choose the labeling that produces the smallest Hamming distance.) To measure the error between our inferred clusters and the planted clusters (the “partition distance”), we use one minus the normalized mutual information vinh2010information . We also compute the inverse compression ratio (Eq. (17)) to measure how well the network population can be compressed. We pick a range of values of to tune the noise level in the populations, and at each value of we average these three quantities over realizations of the model to smooth out noise due to randomness in the synthetic network populations. We choose for these experiments, but this choice has little to no effect on the results (see Appendix B).

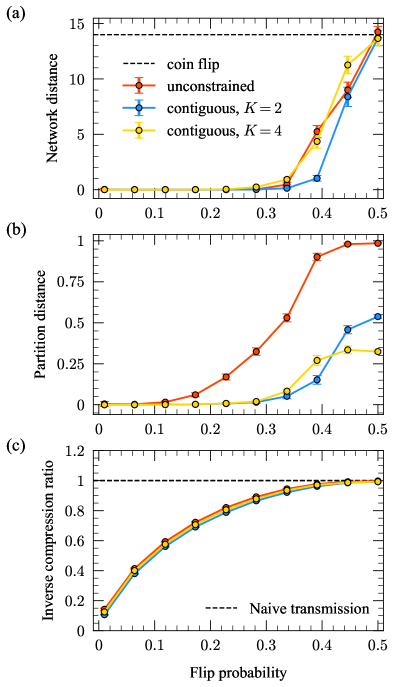

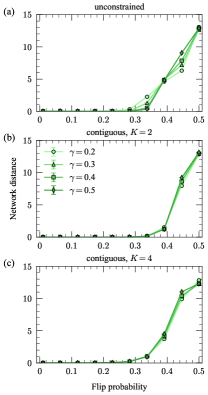

Figure 2 shows the results of our first reconstruction experiment. The reconstruction performance gradually worsens as increases due to the increasing noise level in the sampled networks relative to their corresponding modes (Fig. 2a). In all experiments, the network distance reaches that expected for a completely random guess of the mode networks—a 50/50 coin flip to determine the existence of each edge, denoted by the dashed black line—when . The results in Fig. 2a indicate that in both the unconstrained and contiguous cases, our algorithms are capable of recovering the modes underlying these synthetic network populations with high accuracy, even for substantial levels of noise (up to , corresponding to an average of of the edges/non-edges differing between each mode and networks in its cluster).

The partition distance shows similar gradual performance degradation, with substantial increases in the distance beginning at for the contiguous experiments and for the unconstrained experiment (Fig. 2b). The partition distance levels off at different values across the three experiments, with the unconstrained case exhibiting significantly worse performance than the contiguous cases. We expect this result since contiguity simplifies the reconstruction problem by reducing the space of possible clusterings. Because information-based measures account for the entire space of possible clusterings instead of the highly constrained set produced by contiguous partitions, they overestimate the similarity of partitions in this constrained set. This overestimation intensifies with more clusters kirkley2022spatial .

The inverse compression ratio (Eq. (17)) for these experiments gradually approaches (no compression relative to transmitting each network individually, denoted by the dashed black line) as the noise level increases (Fig. 2c). This result is consistent with the intuition that noisier data will be harder to compress, while data with strong internal regularity will be much easier to compress, as the homogeneities can be exploited for shorter encodings. When is small, we can achieve up to times compression over the naive baseline by using the inferred underlying modes and clusters to transmit these network populations.

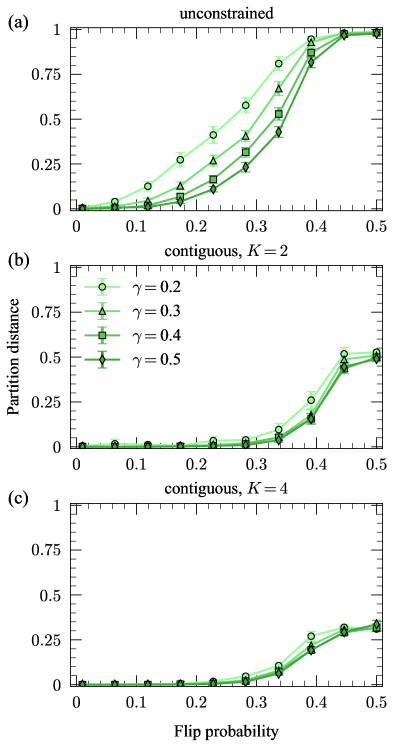

The results in Fig. 2 indicate that our algorithms can recover the underlying modes and their clusters in synthetic network populations. However, these results also depend on how distinguishable the underlying modes are. For identical modes, , it is impossible to recover the cluster labels of the individual network samples . To investigate the dependence of the recovery performance on the modes themselves, we repeat the experiment in Fig. 2, except this time we systematically vary the mode networks for each trial to achieve various levels of distinguishability. In each trial, we set equal to the corresponding mode in Fig. 1 as before, but then generate the edges in from using the flip probability , which we call the “mode separation”. For mode separations , it is challenging to recover the correct cluster labels of the individual sample networks because will closely resemble . On the other hand, for mode separations , the modes will typically be easily distinguished since will have many edges/non-edges that have flipped relative to .

Figure 3 shows the results of this second experiment. The panels show the partition distance between the true and inferred cluster labels for a range of mode separations . In all experiments, the recovery becomes worse for lower values of the separation , but the algorithm still recovers a significant amount of cluster information even for relatively low . As in the previous set of experiments, the recovery performance is substantially worse for the discontiguous case compared with the contiguous cases, again due to the highly constrained ensemble of possible partitions considered by the partition distance in the contiguous cases.

In Appendix B, we show the recovery performance results for the network distance between the true and inferred modes as we vary the mode separation . For the mode recovery, the results are even more robust to the changes in mode separation. This result is consistent with the recovery performance in Fig. 2, where the recovery performance of the partitions starts to worsen at lower noise levels than the recovery of the modes. Thus, small perturbations in the inferred clusters may not affect the inferred modes much, since misclassified networks likely have little in common with the rest of their cluster.

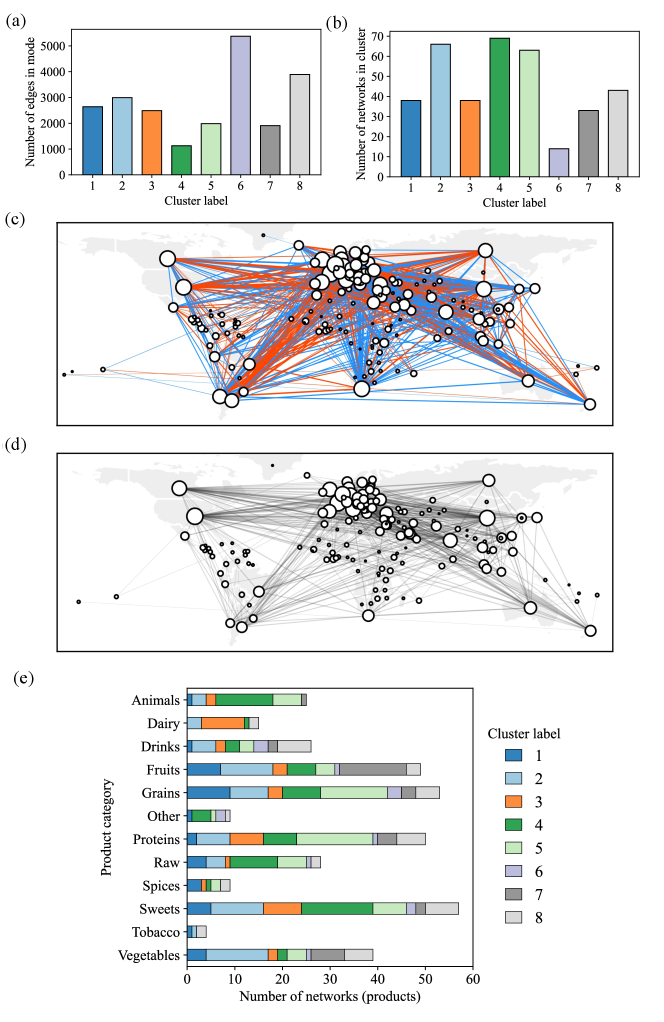

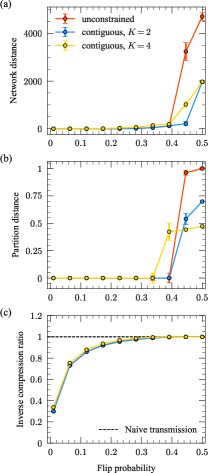

III.2 Unordered network population representing global trade relationships

For our first example with empirical network data, we study a collection of worldwide import/export networks. The nodes represent countries and the edges encode trading relationships. The Food and Agriculture Organization of the United Nations (FAO) aggregates these data, and we use the trades made in 2010, as in de2015structural . Each network in the collection corresponds to a category of products, for example, bread, meat, or cigars. We ignore information about the intensity of trades and merely record the presence or absence of a trading relationship for each category of products. The resulting collection comprises 364 networks (layers) on the same set of 214 nodes with 874.6 edges (average degree of 8.2) on average, with some sparse networks having as little as one edge and the densest containing 6,529 edges. These networks are unordered, so we employ the discontiguous clustering method described in Sec. II.2. We run the algorithm multiple times with varying initial number of clusters to find the best optima, although as with the synthetic reconstruction examples the choice of has little impact on compression. The best compression we find results in 8 modes and achieves a compression ratio of , indicating that it is nearly twice as efficient to communicate the data when we use the modal networks and their clusters. In contrast, in de2015structural a clustering analysis of the same network layers using structural reducibility—a measure of how many layers can be aggregated to reduce pairwise information redundancies among the layers—yielded 182 final aggregated layers, which would poorly compress the data under our scheme and not provide a significant benefit in downstream analyses due to the large final number of clusters. Key properties of the configuration of modes and clusters inferred by our algorithm are illustrated in Fig. 4.

In Fig. 4a we show the number of edges in each inferred mode, which indicates that these modes vary substantially in density to reflect the key underlying structures in networks within their corresponding clusters. The sizes of the clusters, shown in Fig. 4b, also vary substantially, with the most populated cluster (cluster 4) containing nearly 7 times as many networks as the least populated cluster (cluster 6). Some striking geographical commonalities and differences in the structure of the modes can be seen due to the varying composition of their corresponding clusters of networks. Figures 4c and 4d show the differences and similarities respectively between the structure of the modes for clusters 5 and 7, which are chosen as example modes because of their modest densities and distinct distributions of product types (Fig. 4e). Edges that are in mode 7 but not in mode 5 are highlighted in blue, while edges in mode 5 but not in mode 7 are highlighted in red. Meanwhile, the shared edges common to both networks are shown in Fig. 4d in black. Mode 5, which contains a diversity of product types and a relatively large portion of grain and protein products, has a large number of edges connecting the Americas to Europe that are not present in mode 7. On the other hand, mode 7, which is primarily composed of networks representing the trade of fruits and vegetables, has many edges in the global south that are not present in mode 5. However, both modes share a common backbone of edges that are distributed globally.

We categorized the 364 products (the network layers being clustered) into 12 broader categories of product types, plotting their distributions within each cluster in Fig. 4e. There are a few interesting observations we can make about this figure. Nearly all of the dairy products are traded within networks in a single cluster (cluster 3), indicating a high degree of similarity in the trade patterns for dairy products across countries. A similar observation can be made for live animals, which are primarily traded in cluster 4. On the other hand, many of the other products (grains, proteins, sweets, fruits, vegetables, and drinks) are traded in reasonable proportion in all clusters, which may reflect the diversity of these products as well as their geographical sources, which can give rise to heterogeneous trading structures. The densities of the modes and the sizes of the clusters do not have a clear relationship, with cluster 6 containing the smallest number of networks but the densest mode, and clusters 4 and 5 having sparser modes and much larger clusters. This reflects a higher level of heterogeneity in the structure of the trading relationships captured in cluster 6, which requires a denser mode for optimal compression, while the converse is true for clusters 4 and 5.

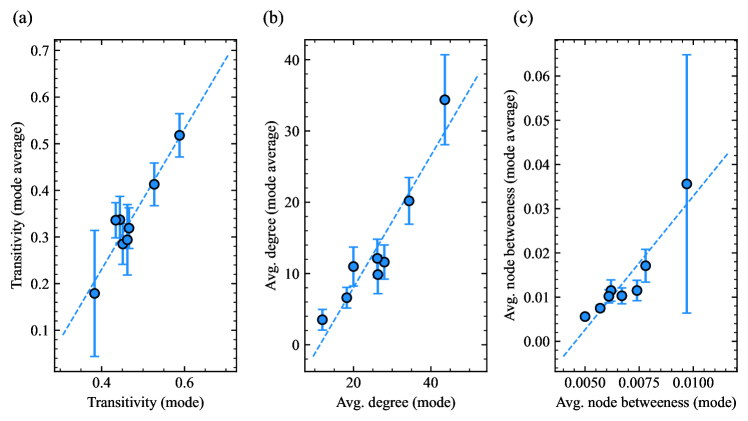

We also identify substantial structural differences in the inferred modes. In Appendix C, we compute summary statistics (average degree, transitivity, and average betweenness) for the modes output in this experiment and the network layers in their corresponding clusters. The statistics vary much more across clusters than within the clusters, suggesting that the MDL optimal mode configuration exemplifies distinct network structures within the dataset. Because the within-cluster average value of each statistic and the corresponding value for the mode network are similar, our method provides an effective preprocessing step for network-level regression tasks.

III.3 Ordered network population representing the fossil record

| Eras | Extinction Events | Periods | MDL Optimal | |

|---|---|---|---|---|

We conclude our analysis with a study of a set of networks representing global marine fauna over the past 500 million years. We aggregate fossil occurrences of the shelled marine animals, including bryozoans, corals, brachiopods, mollusks, arthropods, and echinoderms, into a regular grid covering the Earth’s surface rojas_multiscale_2021 . From these data, we construct unweighted bipartite networks representing ordered time intervals in Earth’s history (geological stages): An edge between a genus and a grid cell indicates that the genus was observed in the grid cell during the network’s corresponding geological stage. We also construct the unipartite projections of these networks: An edge from one genus to another indicates that these two genera were present in the same grid cell during the stage corresponding to the network. In total, there were 18,297 genus nodes, 664 grid cell nodes, 67,371.5 edges on average for the 90 unipartite graphs (average degree of 7.4), and 1462.2 edges on average for the bipartite graphs (average degree of 0.08, corresponding to an average of roughly 10 percent of genera being present at each layer).

In Fig. 5, we show the results of applying our clustering method for contiguous network populations (Sec. II.3) to both the unipartite and bipartite populations representing the post-Cambrian fossil record. We find clusters that capture the known large-scale organization of marine diversity. Major groups of marine animals archived in the fossil record are organized into global-scale assemblages that sequentially dominated oceans and shifted across major biotic transitions. Overall, the bipartite and unipartite fossil record network representations both result in transitions concurrent with the major known geological perturbations in Earth’s history, including the so-called mass extinction events. However, differences in the clusters retrieved from the unipartite and bipartite representations of the underlying paleontological data highlight the impact of this choice on the observed macroevolutionary pattern eriksson_how_2021 .

We also use our methodology to assess the extent to which the standard division of the post-Cambrian rock record in the geological time scale and the well-known mass extinction events compress the assembled networks. Specifically, we evaluate the inverse compression ratio in Eq. (17) on three different partitions of the fossil record networks that are defined by clustering the assembled networks into geological eras (Paleozoic, Mesozoic, and Cenozoic), geological periods (Ordovician to Quaternary), and six time intervals between the five mass extinctions in Fig. 5, with planted modes constructed by placing the networks into each cluster using the algorithm described in Sec. II.2 and Appendix A.

Table 1 shows the results of these experiments. All three partitions compress the fossil record networks almost as much as the optimal partition, which represents a natural division based on major regularities. Accordingly, the planted partition based on mass extinctions is almost as good as this optimal partition because mass extinctions are concurrent with the major geological events shaping the history of marine life. In contrast, partitions based either on standard geological eras or periods are less optimal, likely because they represent, to some extent, arbitrary divisions that are maintained for historical reasons. Our results here provide a complementary perspective to the work in rojas_multiscale_2021 , where a multilayer network clustering algorithm was employed that clusters nodes within and across layers to reveal three major biotic transitions from the fossil data. In Appendix E we review this and other existing multiplex and network population clustering techniques, discussing the similarities and differences with our proposed methods.

IV Conclusion

We have used the minimum description length principle to develop efficient parameter-free methods for summarizing populations of networks using a small set of representative modal networks that succinctly describe the variation across the population. For clustering network populations with no ordering, we have developed a fast merge-split Monte Carlo procedure that performs a series of moves to refine a partition of the networks. For clustering ordered networks into contiguous clusters, we employ a time and memory-efficient dynamic programming approach. These algorithms can accurately reconstruct modes and associated clusters in synthetic datasets and identify significant heterogeneities in real network datasets derived from trading relationships and fossil records. Our methods are principled, non-parametric, and efficient in summarizing complex sets of independent network measurements, providing an essential tool for exploratory and visual analyses of network data and preprocessing large sets of network measurements for downstream applications.

This information-theoretic framework for representing network populations with modal networks can be extended in several ways. For example, a multi-step encoding that allows for hierarchical partitions of network populations would capture multiple levels of heterogeneity in the data. More complex encodings that exploit structural regularities within the networks would allow for simultaneous inference of mesoscale structures—such as communities, core-periphery divisions, or specific informative subgraphs vreeken2022differential —along with the modes and clusters. The encodings can also be adapted to capture weighted networks with multiedges by altering the combinatorial expressions for the number of allowable edge configurations.

Acknowledgments

A.K. was supported in part by the HKU-100 Start Up Grant. M.R. was supported by the Swedish Research Council, Grant No. 2016-00796.

V Code Availability

The algorithm presented in this paper is available at https://github.com/aleckirkley/MDL-network-population-clustering.

VI Data Availability

The data sets used in this paper are available at https://github.com/aleckirkley/MDL-network-population-clustering.

VII Author Contributions

A.K. designed the study and methodology, A.K., A.R., M.R., and J.G.Y. designed the experiments, A.K. and J.G.Y. performed the experiments, A.R. and M.R provided new datasets, and A.K. wrote the manuscript. All authors reviewed, edited and approved the manuscript.

VIII Competing Interests

The authors declare no competing interests.

References

- (1) N. Eagle, A. S. Pentland, and D. Lazer, Inferring friendship network structure by using mobile phone data. Proc. Natl. Acad. Sci. U.S.A. 106(36), 15274–15278 (2009).

- (2) S. Lehmann, Fundamental structures in temporal communication networks. In Temporal Network Theory, pp. 25–48, Springer (2019).

- (3) O. Sporns, Networks of the Brain. MIT Press, Cambridge, MA (2010).

- (4) C. Stark, B.-J. Breitkreutz, T. Reguly, L. Boucher, A. Breitkreutz, and M. Tyers, Biogrid: A general repository for interaction datasets. Nucleic Acids Research 34, D535–D539 (2006).

- (5) M. Kivelä, A. Arenas, M. Barthelemy, J. P. Gleeson, Y. Moreno, and M. A. Porter, Multilayer networks. Journal of Complex Networks 2, 203–271 (2014).

- (6) C. T. Butts, Network inference, error, and informant (in)accuracy: A Bayesian approach. Social Networks 25, 103–140 (2003).

- (7) M. E. J. Newman, Estimating network structure from unreliable measurements. Physical Review E 98, 062321 (2018).

- (8) J.-G. Young, G. T. Cantwell, and M. Newman, Bayesian inference of network structure from unreliable data. J. of Complex Netw. 8(6), cnaa046 (2020).

- (9) T. P. Peixoto, Reconstructing networks with unknown and heterogeneous errors. Physical Review X 8, 041011 (2018).

- (10) C. E. Priebe, D. L. Sussman, M. Tang, and J. T. Vogelstein, Statistical inference on errorfully observed graphs. J. Comput. Graph. Stat 24(4), 930–953 (2015).

- (11) J. Arroyo, A. Athreya, J. Cape, G. Chen, C. E. Priebe, and J. T. Vogelstein, Inference for multiple heterogeneous networks with a common invariant subspace. Journal of machine learning research 22(142) (2021).

- (12) R. Tang et al., Connectome smoothing via low-rank approximations. IEEE Trans. Med. Imaging. 38(6), 1446–1456 (2018).

- (13) S. Lunagómez, S. C. Olhede, and P. J. Wolfe, Modeling network populations via graph distances. J. Am. Stat. Assoc. (116), 536 (2021).

- (14) L. Wang, Z. Zhang, D. Dunson, et al., Common and individual structure of brain networks. Ann. Appl. Stat. 13.

- (15) J.-G. Young, F. S. Valdovinos, and M. Newman, Reconstruction of plant–pollinator networks from observational data. Nature Communications 12(1), 1–12 (2021).

- (16) D. Banks and K. Carley, Metric inference for social networks. Journal of Classification 11, 121–149 (1994).

- (17) M. E. J. Newman, Network structure from rich but noisy data. Nature Physics 14, 542–545 (2018).

- (18) C. M. Le, K. Levin, E. Levina, et al., Estimating a network from multiple noisy realizations. Electron. J. Stat. 12(2), 4697–4740 (2018).

- (19) P. S. La Rosa, T. L. Brooks, E. Deych, B. Shands, F. Prior, L. J. Larson-Prior, and W. D. Shannon, Gibbs distribution for statistical analysis of graphical data with a sample application to fcMRI brain images. Stat. Med. 35, 566–580 (2016).

- (20) J.-G. Young, A. Kirkley, and M. E. J. Newman, Clustering of heterogeneous populations of networks. Physical Review E 105, 014312 (2022).

- (21) J. Stehlé, N. Voirin, A. Barrat, C. Cattuto, L. Isella, J.-F. Pinton, M. Quaggiotto, W. Van den Broeck, C. Régis, B. Lina, et al., High-resolution measurements of face-to-face contact patterns in a primary school. PLOS ONE 6, e23176 (2011).

- (22) L. Peel and A. Clauset, Detecting change points in the large-scale structure of evolving networks. In Proceedings of the 29th International Conference on Artificial Intelligence (AAAI), pp. 2914–2920 (2015).

- (23) T. P. Peixoto and L. Gauvin, Change points, memory and epidemic spreading in temporal networks. Scientific Reports 8, 15511 (2018).

- (24) M. De Domenico, V. Nicosia, A. Arenas, and V. Latora, Structural reducibility of multilayer networks. Nature communications 6(1), 1–9 (2015).

- (25) A. M. Nielsen and D. Witten, The multiple random dot product graph model. Preprint arXiv:1811.12172 (2018).

- (26) S. Wang, J. Arroyo, J. T. Vogelstein, and C. E. Priebe, Joint embedding of graphs. IEEE Trans. Pattern Anal. Mach. Intell. 43, 1324–1336 (2019).

- (27) N. Stanley, S. Shai, D. Taylor, and P. J. Mucha, Clustering network layers with the strata multilayer stochastic block model. IEEE Trans. Netw. Sci. Eng. 3, 95–105 (2016).

- (28) M. Signorelli and E. C. Wit, Model-based clustering for populations of networks. Statistical Modelling 20, 9–29 (2020).

- (29) A. Mantziou, S. Lunagómez, and R. Mitra, Bayesian model-based clustering for multiple network data. Preprint arXiv:2107.03431 (2021).

- (30) F. Yin, W. Shen, and C. T. Butts, Finite mixtures of ERGMs for ensembles of networks. Bayesian Analysis (Advance Publication) pp. 1–39 (2022).

- (31) D. Durante, D. B. Dunson, and J. T. Vogelstein, Nonparametric bayes modeling of populations of networks. J. Am. Stat. Assoc. 112, 1516–1530 (2017).

- (32) A. Patania, A. Allard, and J.-G. Young, Exact and rapid linear clustering of networks with dynamic programming. Preprint arXiv:2301.10403 (2023).

- (33) J. Rissanen, Modeling by the shortest data description. Automatica 14, 465–471 (1978).

- (34) M. H. Hansen and B. Yu, Model selection and the principle of minimum description length. Journal of the American Statistical Association 96, 746–774 (2001).

- (35) O. Georgieva, K. Tschumitschew, and F. Klawonn, Cluster validity measures based on the minimum description length principle. In Proceedings of the International Conference on Knowledge-Based and Intelligent Information and Engineering Systems, pp. 82–89, Springer-Verlag, Berlin (2011).

- (36) J. Tabor and P. Spurek, Cross-entropy clustering. Pattern Recognition 47, 3046–3059 (2014).

- (37) T. Li, S. Ma, and M. Ogihara, Entropy-based criterion in categorical clustering. In Proceedings of the Twenty-first International Conference on Machine Learning, p. 68, Association for Computing Machinery, New York (2004).

- (38) M. Narasimhan, N. Jojic, and J. A. Bilmes, Q-clustering. Advances in Neural Information Processing Systems 18, 979–986 (2005).

- (39) M. Rosvall and C. T. Bergstrom, An information-theoretic framework for resolving community structure in complex networks. Proceedings of the National Academy of Sciences 104, 7327–7331 (2007).

- (40) T. P. Peixoto, Hierarchical block structures and high-resolution model selection in large networks. Physical Review X 4, 011047 (2014).

- (41) A. Kirkley, Spatial regionalization based on optimal information compression. Communications Physics 5(1), 1–10 (2022).

- (42) D. Koutra, U. Kang, J. Vreeken, and C. Faloutsos, Vog: Summarizing and understanding large graphs. In Proceedings of the 2014 SIAM international conference on data mining, pp. 91–99, SIAM (2014).

- (43) A. E. Wegner, Subgraph covers: an information-theoretic approach to motif analysis in networks. Physical Review X 4(4), 041026 (2014).

- (44) P. Bloem and S. de Rooij, Large-scale network motif analysis using compression. Data Mining and Knowledge Discovery 34(5), 1421–1453 (2020).

- (45) J.-G. Young, G. Petri, and T. P. Peixoto, Hypergraph reconstruction from network data. Communications Physics 4(1), 1–11 (2021).

- (46) G. Bouritsas, A. Loukas, N. Karalias, and M. Bronstein, Partition and code: learning how to compress graphs. Advances in Neural Information Processing Systems 34, 18603–18619 (2021).

- (47) A. Kirkley and M. E. J. Newman, Representative community divisions of networks. Communications Physics 5, 1–10 (2022).

- (48) T. P. Peixoto, Merge-split markov chain monte carlo for community detection. Physical Review E 102, 012305 (2020).

- (49) B. Jackson, J. D. Scargle, D. Barnes, S. Arabhi, A. Alt, P. Gioumousis, E. Gwin, P. Sangtrakulcharoen, L. Tan, and T. T. Tsai, An algorithm for optimal partitioning of data on an interval. IEEE Signal Processing Letters 12(2), 105–108 (2005).

- (50) R. Bellman, Dynamic Programming. Princeton University Press (1957).

- (51) A. Rojas, J. Calatayud, M. Kowalewski, M. Neuman, and M. Rosvall, A multiscale view of the Phanerozoic fossil record reveals the three major biotic transitions. Communications Biology 1, 1–2 (2021).

- (52) N. X. Vinh, J. Epps, and J. Bailey, Information theoretic measures for clusterings comparison: Variants, properties, normalization and correction for chance. The Journal of Machine Learning Research 11, 2837–2854 (2010).

- (53) K. M. Cohen, S. C. Finney, P. L. Gibbard, and J.-X. Fan, The ics international chronostratigraphic chart. Episodes Journal of International Geoscience 36, 199–204 (2013).

- (54) D. M. Raup and J. J. S. Jr., Mass extinctions in the marine fossil record. Science 215, 1501–1503 (1982).

- (55) A. Eriksson, D. Edler, A. Rojas, M. de Domenico, and M. Rosvall, How choosing random-walk model and network representation matters for flow-based community detection in hypergraphs. Communications Physics 4, 133 (2021).

- (56) C. Coupette, S. Dalleiger, and J. Vreeken, Differentially describing groups of graphs. Preprint arxiv:2201.04064 (2022).

- (57) D. J. MacKay, Information Theory, Inference and Learning Algorithms. Cambridge University Press, Cambridge (2003).

- (58) P. D. Grünwald and A. Grünwald, The Minimum Description Length Principle. MIT Press, Cambridge, MA (2007).

- (59) L. Peel, D. B. Larremore, and A. Clauset, The ground truth about metadata and community detection in networks. Science Advances 3(5), e1602548 (2017).

- (60) T. P. Peixoto and A. Kirkley, Implicit models, latent compression, intrinsic biases, and cheap lunches in community detection. Preprint arXiv:2210.09186 (2022).

- (61) A. Spanos, Statistical Foundations of Econometric Modelling. Cambridge University Press (1986).

- (62) A. Kirkley, Information theoretic network approach to socioeconomic correlations. Physical Review Research 2, 043212 (2020).

Appendix A Merge-split Monte Carlo algorithm

In this appendix we describe the greedy merge-split Monte Carlo algorithm used to identify the clusters and modes that minimize the description length in Eq. (15). The input to the algorithm is edge sets corresponding to the network population , an initial number of clusters , and a maximum number of consecutive failed moves before termination. ( for all experiments performed in the paper.) Note that the number of true-positive edges, which we denote , will give equivalent results if swapped for in Eq. 14. We will use these true-positive counts in the algorithm below rather than the false-negatives , keeping in mind that they are interchangeable in the description length expressions.

The algorithm is as follows:

-

1.

Initialize clusters by placing each network in in a cluster chosen uniformly at random.

-

2.

For all clusters , create a dictionary mapping all edges in networks within to the number of times occurs in . We do not need to keep track of the edges that never occur in a cluster. This is one computational bottleneck of the algorithm, requiring operations for sparse networks with edges each.

-

3.

For all clusters , compute the mode using the following greedy edge removal algorithm:

-

(a)

Create list of tuples , with items sorted in increasing order of frequency . This is the computational bottleneck of this mode update step, with roughly time complexity assuming the networks in cluster have unique edges.

-

(b)

Initialize:

-

•

-

•

-

•

-

•

-

•

-

•

-

•

-

•

-

•

-

(c)

While :

-

i.

-

ii.

Compute the change in description length

(21) The first term is identical to the second, but with , , and .

-

iii.

-

iv.

-

v.

Increment:

-

•

-

•

-

•

-

•

-

•

-

vi.

If :

-

•

-

•

-

•

-

i.

-

(d)

return with the first edges in removed

-

(a)

-

4.

Initialize counter and compute the total description length for the current cluster and mode configurations using Eq. (15).

-

5.

While , attempt to do one of the following four moves, chosen at random:

-

(a)

Move 1: Reassign a randomly chosen network from its cluster to the cluster that most reduces the description length.

-

i.

For all , compute

The modes are not updated to account for the inclusion/absence of , and so we only require updates to and to account for this reassignment, in order to compute new values of . These edge dictionary updates can be done in operations for sparse graphs.

-

ii.

If for some :

-

•

Move to the cluster that minimizes , and remove from

-

•

-

•

Update to account for the new absence/presence of the edges in

-

•

Update modes and using the new dictionaries

-

•

Update the description length with the corresponding change after the move.

-

•

-

iii.

Else:

-

iv.

Time complexity: for sparse networks, as computing the change in the description length for the move to requires the operation of comparing the edge list of with the edge list of .

-

i.

-

(b)

Move 2: Merge a randomly chosen pair of clusters and to form a new cluster .

-

i.

Compute the dictionary by merging the counts in the dictionaries and .

-

ii.

Compute using the method outlined in Step 3 of the algorithm.

-

iii.

Compute the change in description length

(22) -

iv.

If :

-

•

Add to and delete and

-

•

Keep and delete and

-

•

Keep and delete and

-

•

-

•

-

v.

Else:

-

vi.

Time complexity: roughly , with the bottleneck being the computation of the mode .

-

i.

-

(c)

Move 3: Split a randomly chosen cluster to form new clusters and .

-

i.

Initialize two clusters and their edge dictionaries using the method in Steps 1 and 2 of the algorithm.

-

ii.

Identify the modes of and using the method in Step 3 of the algorithm.

-

iii.

Run a -means style algorithm to compute and and their corresponding modes, which proceeds as follows: While networks are still reassigned at the current move:

-

A.

For all networks in both sub-clusters, compute and , and add to the cluster with the lower of the two values.

-

B.

Update and using the method in Step 2 of the overall algorithm.

-

C.

Update the modes of and using the method in Step 3 of the overall algorithm.

-

A.

-

iv.

Compute the change in description length

(23) -

v.

If :

-

•

Add and to , delete

-

•

Keep and , delete

-

•

Keep and , delete

-

•

-

•

-

vi.

Else:

-

vii.

Time complexity: for roughly equally sized clusters, with the computational bottleneck being the reassignment step in the local -means algorithm.

-

i.

-

(d)

Move 4: Pick two clusters at random to merge, then immediately split following Move 3.

-

•

Time complexity: , similar to Move 3.

-

•

-

(a)

Now that we have laid out the merge-split algorithm, we can show that the greedy mode update in Step 3 of the merge-split algorithm is locally optimal under a few assumptions regarding the sparsity of the networks and the set of edges in the cluster as a whole. The relevant terms of the local description length that we need to consider are those that depend on the choice of mode, or

| (24) |

were we’ve approximated , which will be a good approximation when the modes are sparse. We also note the use of true-positives rather than false-negatives , as discussed at the beginning of the section.

We can first notice that in the sparse network regime, it will never be optimal to include an edge in the mode that does not exist in any of the networks . If , then including an edge in the mode that does not exist in any of the networks will only serve to augment by , increasing the values of the first two terms of in Eq. (24). Thus, we will end up increasing the description length if we add such an edge, and so no such edges will exist in the optimal mode . We can therefore begin our algorithm with the maximally dense mode in Eq. (16) and only consider removing edges, since the optimal mode will be comprised of some subset of these edges.

We can now argue that, for any current iteration of the mode, the best edge to remove from the mode next is the one that occurs the least often in the cluster (i.e. the number of this edge’s occurrences, , is the smallest). will always decrease by regardless of which edge is chosen, but and will decrease and increase by respectively. If we assume that —on average, each network in has at least half of the edges in the mode , which will be true when the networks in have relatively high overlap in their edge sets—then the second term in Eq. (24) will increase its value by the smallest amount under this edge removal when decreases by the least amount. Likewise, the third term in Eq. (24) will also increase its value with this edge removal (since increases by , and we assume that ), and so it will increase by the smallest amount when is smallest. The best edge to remove from the mode in any given step of the algorithm is, therefore, the one with the smallest value of , as it results in the most negative change in . Therefore the greedy edge removal algorithm will reach a locally optimal edge configuration for the mode under these assumptions.

In practice, the total run time of this merge-split algorithm depends on the user’s choice of , the number of consecutive failed moves before algorithm termination. When computation time is not a concern, it is safer to let be as high as possible to ensure no additional merge-split moves will increase the description length. For context, the convergence of the algorithm in the global trade experiment presented here with required on average merge-split steps and a total run time of minutes for a pure Python implementation with no substantial optimizations on an Intel Core i7 CPU. On the other hand, with this same experiment takes on average merge-split steps and a total run time of minutes on the same processor. In the synthetic network experiments, each simulation took on average seconds for MCMC moves with ( and are much smaller, resulting in shorter run times). The contiguous clustering algorithm does not present the same variability in total run time since it is deterministic given the input networks.

Appendix B Additional synthetic reconstruction tests

In this appendix we show the results of additional synthetic reconstruction results for the discontiguous and contiguous network population clustering algorithms of Sec.s II.2 and Sec. II.3. These results verify the robustness of these algorithms as well as their theoretical run time scaling.

In Fig. 6, we plot the results shown in Fig. 2 for different choices of the initial number of clusters in the discontiguous case (the contiguous algorithm always begins with all networks in their own cluster), finding that the results are practically indistinguishable for all choices . In Fig. 7, we repeat the same experiment but this time for different population sizes , finding that the results do depend on , but in an intuitive way: more networks give us more statistical evidence for the clusters and their modes, resulting in better reconstruction.

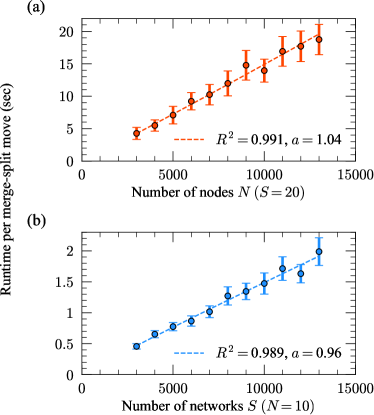

In Fig. 8, we numerically verify the runtime scaling for the MCMC algorithm discussed in Sec. II.2 and Appendix A. For each realization of the experiment, we first constructed two Erdos-Renyi random graphs with nodes and average degree —this value does not matter for the scaling so long as it is constant across —which served as modes for the synthetic population. We then generate a population of networks from these modes using the model of Appendix D, each network having a 50/50 chance of being generated from either mode, with true-positive rates and false positive rates to introduce noise into the population but facilitate relatively simple recovery. The MCMC algorithm of Sec. II.2 was then applied to each population, and the average run time of Move 4 in the algorithm was recorded—this move is the computational bottleneck for since it involves combining a merge and a split move (Moves 2 and 3). Fig. 8 shows the average run time over 100 realizations of synthetic populations for a range of values (at fixed ) and range of values (at fixed ), with error bars corresponding to three standard errors in the mean. The regression was run for the experiments in each panel, and the resulting slopes and coefficients of determination provide strong numerical evidence that the theoretical run time scaling is obeyed for this algorithm. The dependence on is not investigated since the algorithm is constantly updating this parameter. However, at (for Move 3) and (for Moves 2 and 4) the slowest MCMC moves will occur since the largest clusters need to be dealt with, and these will contribute the largest run times to the scaling so in practice the dependence is the primary one of interest.

A similar experiment was run for synthetic contiguous populations with two planted clusters to verify the theoretical run time scaling for the dynamic programming algorithm. The modes for each realization were generated in the same way as for the previous experiment, except this time the first networks were generated from the first mode and the final networks were generated from the second mode. The dynamic programming of Sec. II.3 was then applied to each population, and the average run time of the full algorithm was computed. Fig. 9 shows the average run time over 3 realizations of synthetic populations (each run gave a very consistent run time) for a range of values (at fixed ) and range of values (at fixed ), with error bars corresponding to three standard errors in the mean. The regression was run for the experiment in panel (a) and the regression was run for the experiment in panel (b). The resulting coefficients of determination and slope for the second regression provide strong numerical evidence that the theoretical run time scaling is obeyed for this algorithm.

In Fig. 11, we rerun the reconstruction experiments of Fig. 2 with the same parameters except with the two modes generated from an Erdos-Renyi random graph with nodes and average degree as in the previous experiments. In general, the recovery performance remains qualitatively similar to that of Fig. 2, except with variations in the high noise regime () due to size-dependent effects from the synthetic population generative process. In this regime, there is a very low probability of any substantial overlap among the networks in the population due to the quadratic scaling of the number of possible positions where an edge can be placed. The discontiguous clustering method (red curves) places all networks in a single cluster which is the most parsimonious description of an unstructured population. This outcome results in one nearly complete inferred mode of edges. By the symmetry of the binomial coefficient, it is equally economical in an information-theoretic sense to specify positions of non-edges than edges. Considering the density of the true modes, we then have network hamming distances of roughly , consistent with the red curve in the top panel of the figure.

In the same regime for the case, the contiguous clustering methods tend to do the opposite and place all networks in their own cluster since the corresponding description length does not incur as strong a penalty for extra clusters. Here we then see inferred modes that consist of the individual networks in the population—in other words, random coin flips at each edge position with half of the possible edges occupied. Considering the expected overlap with the true modes and fluctuations, we get mode hamming distances of roughly , as seen in the top panel. Meanwhile, as opposed to the discontiguous case where the NMI equals (i.e. subtracting the NMI from gives a partition distance of ), in the contiguous setting we see NMI values of , resulting in partition distances of for respectively.

Appendix C Analysis of modes

One of the advantages of using a concise set of modes instead of a complete collection of networks is that we can simplify analysis of the whole population greatly. For example, a common task in network machine learning and statistics is network-level regression. In such a task, our goal is to understand how the properties of networks relate to response variables, such as the adoption of a product or practice, or the efficiency of shipping.

To implement a regression, one denotes by the vector of properties of network and by the response for this network, modeling:

| (25) |

where is a random variable modeling deviations from the predictor . Fitting the regression is a matter of identifying a good predictor function given data

Such analysis can become complex and impractical if the set of networks is too large (for example, if computing the network predictors is costly, as is the case of some centrality measures or macroscopic properties like community structure). Hence, one may run the analysis on a smaller set of representative samples. Clustering with an information criterion can achieve this goal. We demonstrate this in Fig. 12, using the FAO Trade Network analyzed in Sec. III.2. The figure shows the relationship between the value of three representative network predictors as computed on the eight identified modes and the average value of these same predictors across the networks of the cluster associated with each mode. One can see that the two quantities are proportional to one another: when, say, the average betweenness centrality of nodes is higher in the mode, it is also higher on average in the network of the associated cluster. This means that we can run a regression analysis on a small set of modes instead of on a complete network—for example, by using the average response of the networks in any given node as the new response variable.

Appendix D Equivalence with Bayesian MAP estimation

In this appendix we establish the correspondence between our minimum description length objective and Bayesian MAP estimation in the heterogeneous network population model of YKN22Clustering . Adapting to the notation in this paper and simplifying the product over network samples in Eq. 17 from YKN22Clustering , the likelihood of the data under the heterogeneous network population generative model is given by

| (26) |

where and are vectors of true- and false-positive rates within the clusters, and where we define as the the number of true-positive edges, as in the previous Appendix.

We can additionally add priors on the modes and clusters to prepare for performing Bayesian inference with the model. As is done in YKN22Clustering , for our prior on the clusters we can assume that each sample is assigned a cluster independently at random with prior probability , giving the following prior over all clusters

| (27) |

We can also suppose that each mode has some prior probability for the existence of each edge , which results in the following prior over all modes

| (28) |

This prior differs from the one used in YKN22Clustering , which couples all modes together through a single edge probability . This prior would result in a similar description length expression to ours when computing MAP estimators, except it does not have the beneficial properties of being a sum of decoupled cluster-level description lengths and allowing the modes to fluctuate independently in their densities. We finally assume (as in YKN22Clustering ) uniform priors over the parameters , which we collectively denote as .

Applying Bayes’ rule, the posterior distribution over modes , clusters , and model parameters is given by

| (29) |

Now, to find the Maximum A Posteriori (MAP) estimators , we can maximize Eq. (D) (or equivalently, its logarithm) using the method of profiling. To do this, we first maximize over the parameters , finding the MAP estimates as a function of the configuration . Then, we can plug the function(s) back into Eq. (D) to obtain an objective function over the configuration , which can then be optimized over the remaining variables to find all the MAP estimators.

The logarithm of the posterior distribution in Eq. (D), which can be maximized to find the MAP estimators, is given by

| (30) | ||||

Maximizing over the model parameters gives the estimators

| (31) | ||||

| (32) | ||||

| (33) | ||||

| (34) |

These can be derived using the method of Lagrange multipliers, with the normalization constraint for the group assignment probabilities. Finally, replacing these expressions back into Eq. (D), and using we find

| (35) |

Thus, maximizing Eq. (35) to find (profiled) MAP estimators of this Bayesian model is equivalent to minimizing the description length . Further, with , the first two lines of Eq. (D) correspond to , the third line corresponds to , and the last line to , which establishes a direct mapping between likelihoods, priors and each part of the multi-part encoding. This correspondence unifies the Bayesian and MDL formulations of network population clustering and explains the good reconstruction performance seen in the experiments of the main text mackay2003information ; grunwald2007minimum .

Appendix E Additional related methods for clustering network populations

The two algorithms proposed in this paper are not the first methods capable of clustering network populations. Indeed, as discussed in the Introduction, there are a variety of existing methods that have tackled this problem using various modeling assumptions. However, directly making an apples-to-apples numerical comparison of the performance of these methods with our own is difficult for a number of reasons. Firstly, as mentioned in the Introduction, most of these methods do not have a unified model selection criterion that allows for automatic inference of the number of clusters. Therefore to make a completely fair numerical comparison with our own methods, we would either have to fix the number of clusters in all methods being compared—removing the additional obstacle of model selection which is one of the key innovations of our method—or add an ad hoc regularization term/Bayesian prior to the existing methods, to which their output may be highly sensitive mantziou2021 . Additionally, class labels of real-world network datasets are often arbitrary and uncorrelated with the structure of the data peel2017ground , so any purely numerical comparison of unsupervised clustering algorithms for network data using label recovery in real-world examples will be ill-posed peixoto2022implicit . Recovery of synthetic clusters, as described in the experiment in Sec. III.1, can serve as a well-posed task for testing the relative performance of existing algorithms if the data can be generated from the model corresponding to each algorithm peixoto2022implicit , but many existing methods do not have explicit generative models and fitting them to other synthetic data may result in severe model misspecification spanos1986statistical .