Computational capabilities at the edge of chaos for one dimensional systems undergoing continuous transitions

Abstract

While there has been a keen interest in studying computation at the edge of chaos for dynamical systems undergoing a phase transition, this has come under question for cellular automata. We show that for continuously deformed cellular automata there is an enhancement of computation capabilities as the system moves towards cellular automata with chaotic spatiotemporal behavior. The computation capabilities are followed by looking into the Shannon entropy rate and the excess entropy, which allows identifying the balance between unpredictability and complexity. Enhanced computation power shows as an increase of excess entropy while the system entropy density has a sudden jump to values near one. The analysis is extended to a system of non-linear locally coupled oscillators that have been reported to exhibit spatiotemporal diagrams similar to cellular automata.

I Introduction

The transition from regular towards chaotic behavior is one of the defining characteristics of complex non-linear dynamical systems. There has been a keen interest in the behavior of a dynamical system as it undergoes such transition, which comes from the fact that it is precisely in such region where enhanced or improved computational capabilities of the system are claimed to be found crutchfield90 ; langton90 ; kauffman92 , this is known as the edge of chaos (EOC) hypothesis. In this context, computation in a dynamical system can have different meanings crutchfield93 . The most common interpretation of computational capacity is related to the ability of the system to perform some “meaningful” task. Meaningful can be understood, given some initial condition, as the system transforming the input in some prescribed way into some output data. More broadly, it can be understood related to the system capability to behave as a universal Turing machine given some proper input and “programming”. A related but different point of view is to associate computation to the generic production, storage, transmission and manipulation of information. It is in this last sense that any dynamical system can be seen as some device amenable to be characterized by its intrinsic computational abilities crutchfield12 . Measures of the computational capabilities of a system have been developed that aim at quantifying the efficiency, in terms of resources, while characterizing the balance between irreducible randomness and pattern production crutchfield12 .

In favor of the EOC hypothesis several systems modeling natural and artificial phenomena exhibit interesting, if not some type of critical behavior, precisely at the edge of chaos su89 ; sole96 ; bertschinger04 ; beggs08 ; boedecker12 . It is not clear, for some one dimensional systems, if the EOC criticality plays a role in the enhancement of computational capabilities, for example in cellular automata. Cellular automata (CA) are discrete space, dynamical systems that act over a spatially extended grid taking values over a finite alphabet, resulting in the evolution of the spatial configuration in discrete time steps. There has been a discussion if meaningful computation abilities in CA are found and should be sought at EOC regions.

Langton langton90 has used the density of quiescent states in the cellular automaton rule, usually denoted by the letter , as the control parameter to explore such questions. The quiescent state is chosen as some arbitrary value in the CA alphabet, and the parameter is the ratio between the number of times the quiescent state does not appear in the CA rule table and the number of entries in the same table. For a binary alphabet CA with nearest neighbor rules (elementary cellular automata or ECA), , where is the number of times the quiescent state (say value ) appears in the rule table. Monte Carlo simulations were performed over the CA space to conclude that, on average, as , increases from , the rules behavior changes from a fixed point regime, to periodic, to complex and finally to chaotic, each regime corresponding to one of Wolfram classification scheme wolfram02 . Packard packard88 further developed these ideas to conclude that CA capable of meaningful computations happens precisely at those critical values of where a transition to the chaotic behavior occurs. Packard results were questioned by Crutchfield et al. crutchfield93 who performed Monte-Carlo simulations similar to the ones made by Packard failing to find EOC enhanced computation capabilities.

In this paper, we show that improvement of computational capabilities indeed occurs at the edge of chaos for some continuously extended CA rules, but not following Langton parameter and therefore, not in the sense of Packard simulations. We will not be seeking to discover useful computational of the CA but instead, improvement of intrinsic computation as measured by entropic based measures of structure and disorder. To support this claim we follow the behavior of a CA as one entry in its rule table is continuously varied between and and use it as control parameter the value of the continuously varying entry. After discussing CA, we turn our attention to a system of one dimensional non-linear locally coupled oscillators that have been found to exhibit dynamical behavior similar to those of CA alonso17 . The quest is to find if in this system with a continuous control variable space, enhanced computation at the EOC can also be found.

II Continuous states cellular automata

To start formalizing some of the expressed ideas, for our purposes it suffices to consider one dimensional CA as a tuple consisting of a set of states , a radius which defines a neighborhood structure from to , and a local rule . The local rule acts simultaneously over a numerable ordered set of cells which can be bi-infinite, but in any case, each cell is properly labeled by natural numbers as in . At a given time step each cell is in a state belonging to and the whole cell array configuration is denoted by . The evolution of the cell array from time to time is given by the global function induced by : such that . is also called the spatial configuration of the cell array at time . The set of is called the spatiotemporal diagram or evolution up to time . The spatiotemporal diagram of the CA can show a wide range of behaviors including the emergence of complex patterns, fractal nature, chaotic behavior, periodic, random evolution, or evolve to homogeneous spatial configurations. CA have been used as models for different natural phenomena as well as a model for computing devices. It is known at least of a one dimensional CA that behaves as a Universal Turing Machine, the so-called rule 110 cook04 ; wolfram02 .

Consider two state cells with a rule defined over a one neighborhood radius such that, a cell will be updated in the next time step according to the current value of the cell and its two nearest neighbors: . Such cellular automata are named elementary cellular automata or ECA wolfram02 . To specify a rule function a look up table can be given, for example as shown in Table 1

| 1 | 1 | 1 | 0 |

| 1 | 1 | 0 | 0 |

| 1 | 0 | 1 | 1 |

| 1 | 0 | 0 | 0 |

| 0 | 1 | 1 | 1 |

| 0 | 1 | 0 | 1 |

| 0 | 0 | 1 | 1 |

| 0 | 0 | 0 | 0 |

When ordered lexicographically the rule has the binary representation equivalent to the decimal number (the reader should notice that according to the definition given in the introducion, the parameter for this rule is ). CA rules are named by their decimal representation. The space of cellular automata with binary alphabet and nearest neighbor interaction will then be a discrete space of eight dimensions or an eight dimensional hypercube where there are rules.

There is no apparent geometry in the CA discrete space, as there is no natural way to define a transition between CA rules in the hypercube allowing for the smooth change of one cellular automaton to a neighboring one while their behavior also changes gradually. One may think in ordering the CA rules as Gray codes savage97 , where successive CA differ by a change of only one entry in the rule table. However, Gray codes ordering does not lead to a corresponding smooth transition in the CA regimes. There is not even any apparent relation in behavior between adjacent rules. Consider rules and with binary representation and , respectively. Both rules differ only in the seventh entry and yet, the former result always in a homogeneous state where each cell in the array has the same value, while the later, as already stated, can perform as a Universal Turing Machine.

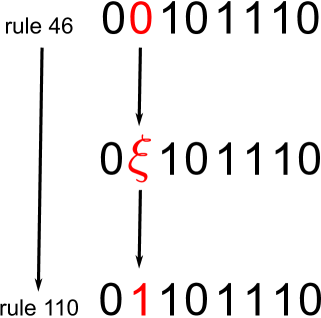

The inability to define a natural ordering among the CA rules along which behavior changes gradually, has hindered the analysis of transitions between CA rules. To overcome this limitation, Pedersen devised a procedure to continuously deform a CA rule into another at the expense of sacrificing the discrete nature of the cells states petersen90 . Consider rule and rule , a continuous transition from the former to the later can be done if we define a CA rule as , where goes from to in a continuous manner (Figure 1), such that for we get rule and for rule is recovered. In order for the transition to work, first a real valued function must be defined, for example , next, an interpolating function is used over the values of , in our case: . The interpolating function is a real valued continuous function that must be consistent with the discrete case for integer values of its argument, but apart from that, it can be chosen in any way. Pedersen used three interpolating functions, one lineal, one quadratic and a third by trigonometric splines, finding that the results were qualitatively similar for every choice. We follow Pedersen and settle for the quadratic interpolating function

| (1) |

where is the largest integer smaller than . As explained, if is an integer number is given by the discrete CA rule.

The price paid in Pedersen extension is that the states of the cells are no longer binary values but any real value in the interval . In order to recover the discrete binary nature of the cell states, we observe the system via a binarizing measuring device: we use the mean value over the whole cell array for a given time step as a threshold value, any cell with a state value over the mean is projected to , otherwise to .

In our simulations, an array of cells were used and the initial random configuration (random binary digits were taken from www.random.org ) was left to evolve in time steps. Simulation results are then presented by averaging over ten simulations for each parameter value, each with different initial configurations. The last ten spatial configurations were taken for each run, so the final averaging was over spatial configurations. For testing the robustness of the result, calculations were occasionally performed for , , and cells, and correspondingly equal time steps, showing no change in the results. Cyclic boundary conditions are used. In all cases, and contrary to Packard Monte Carlo simulations, the variance of the mean values for our simulations is too small even to be represented as error bars.

Before we proceed, we need some measures that can capture the computational capabilities of a system and distinguish from the random portion of the output on the one hand, and data structuring on the other.

III Entropic measures

As a measure of unpredictability we use Shannon entropy rate . When a bi-infinite sequence of emitted symbols has been observed, is the amount of information produced per symbol. If we consider the bi-infinite sequence as a time series, then, it is the amount of new information in an observation of let say cell , considering the state of all previous cells , crutchfield03 . In terms of Shannon entropy

| (2) |

where denotes the Shannon conditional entropy of random variable given variable cover06 .

The block entropy of a bi-infinite string measures the average uncertainty of finding blocks of a given length within the string. Block entropy is calculated by the equation

| (3) |

where the sum goes over all sequences of length drawn from the alphabet , and is the probability of observing one particular string in the bi-infinite string . Entropy density, also known as entropy rate, can be understood as the unpredictability that remains when all that can be seen has been observed, and can also be defined through a limiting process of the normalized block entropies

| (4) |

For a necessarily finite data, the entropy rate has to be estimated. There is a body of literature dealing with the estimation of entropy rate for finite sequences schurmann99 ; rapp01 ; lesne09 . Here will be estimated through Lempel-Ziv factorization procedure that has been extensively discussed in the literature and has been used before in the context of CA kaspar87 ; estevez15 . In short, Lempel-Ziv factorization performs a sequential partition of a finite length string adding a new factor if and only if, it has not been found in the prefix up to the current position of analysis lz76 . In order to emphasize that a finite size estimate of through the Lempel-Ziv procedure is being used, we change to the notation instead.

Formally, if is the substring of consecutive symbols and the ”drop” operator defined as and consequently, , where is a positive number. The Lempel-Ziv factorization is a partition of a finite string of length

in factors such that each factor complies with

-

1.

-

2.

except, perhaps, for the last factor .

The partition is unique for every string. For example the sequence has a Lempel-Ziv factorization , each factor is delimited by a dot. For a data stream of length , is defined by

| (5) |

where is the number of factors in the Lempel-Ziv factorization. The entropy rate for an ergodic source is then given byziv78

| (6) |

For a finite data stream of length where the above limit can not be strictly realized, the entropy rate is then estimated by where we drop the argument when no ambiguity arises. The error in the estimation for a given value of has been discussed beforeamigo06 .

Excess entropy , introduced by Grassberger as effective complexity measuregrassberger86 , has been used in several contexts crutchfield12 including the analysis of CA as a measure of correlation along different scales in a process and related to the intrinsic memory of a system. For a bi-infinite string, the excess entropy measures the mutual information between two infinite halves of the sequence and is related to the correlation of the symbols at all possible scales. Excess entropy can be defined in several equivalent ways, for example as the convergence of the entropy rategrassberger86 ; crutchfield03

| (7) |

is the mutual information between and , and , where is the Shannon entropy of the random variable . As already explained, mutual information is a measure of the amount of information one variable carries regarding the other, and it is a symmetric measure . The information gain measure how much the entropy density is being overestimated as a result of making measurements of the probability of only string blocks up to length , in other words, how much information still has to be learned in order to assert the true entropy of the sourcecrutchfield03 . As entropy decreases with length , if such decrease is fast enough, the sum (7) will converge. is the intrinsic redundancy of the source or apparent memory of the sourcecrutchfield03 . It measures structure in the sense of redundancy being a measure of pattern production. Feldmancrutchfield03 has proven that equation (7) is equivalent, in the ergodic case, to the mutual information between two infinite halves of a bi-infinite string coming from the source output. Structure in this context then means how much information one halve carries about the other and vice-versa, a measure related again with pattern production and context preservation as redundancy.

Numerically, when dealing with finite data streams, excess entropy has to be effectively estimated. Here we use a random shuffle based excess entropy estimate using the Lempel-Ziv estimation of entropy rate and given by melchert15

| (8) |

is a surrogate string obtained by partitioning the string in non-overlapping blocks of length and performing a random shuffling of the blocks. The shuffling for a given block length destroys all correlations between symbols for lengths larger than while keeping the same symbol frequency. is chosen appropriately given the sequence length as to avoid fluctuations. In spite that is not strictly equivalent to the excess entropy as given by equation (7), it is expected to behave in a similar manner melchert15 .

Finally, information distance will be used. Information distance is derived from Kolmogorov randomness theory. The Kolmogorov randomness of a sequence , is the length of the shortest program (), that can reproduce the sequence kolmogorov65 , accordingly, , known as Kolmogorov conditional randomness, is the length of the shortest program able to reproduce if the program , reproducing the string is given. The information distance is defined byli04

| (9) |

is an information based distance measuring, from an algorithmic perspective, how correlated are two sequences: if two sequences can be derived one from the other by a small-sized algorithm, then the corresponding is small.

IV Results

IV.1 Continuously deformed cellular automata

Having defined our entropic measures of randomness and complexity, we first explored the CA space. The logic followed was to focus in rule and all rules that result from flipping a single entry in its rule table (flipping can happen as or depending on the rule entry), which leads to the eight possibilities given by rules , , , , , , and . The rule is called the starting rule, and any of the eight rules related by a state flip in only one entry, the final or ending rule. We find in all cases that the transformation from rule to any of the ending rules proceeds, at a specific value, to a disordered state where entropy rate reaches the maximum value of and then at some other value, the system transforms to a behavior similar to the final rule. For specific ending rules, a clear jump in the excess entropy was found at the verge of falling into the final rule. That is the case for the transformation to ending rule (binary representation ), so the behavior around this rule was studied modifying each entry one at a time.

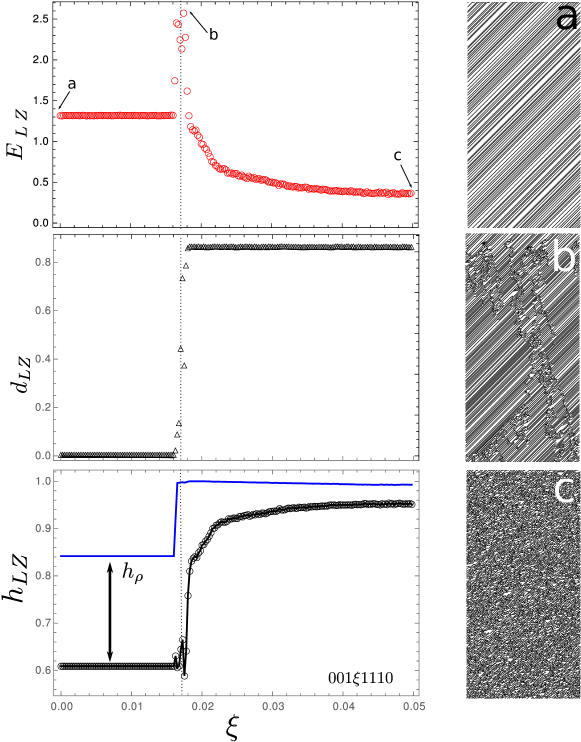

Figure 2 shows the observed entropic measures for values near zero in the fifth entry () of rule 46 () when moving towards rule (). A small range of values is shown to emphasize details of the transition. has a clear peak shaped maximum at a narrow window of values coincident with the jump in . At the starting rule, the spatiotemporal diagram (Fig. 2 left a) shows a shift diagram, the initial random conditions rapidly sets into a dynamic where the site values are spatially shifted with respect to the previous time step. At the value of (Fig. 2 left c) the spatiotemporal diagram is characteristic of cellular automata chaotic behavior as has been reported before (compare, for example, with the spatiotemporal diagram of rule 101 of class 3 in Wolfram automata classification wolfram02 ). The spatiotemporal diagram, at the transformation point, when (Fig. 2 left b), while largely keeping the shift behavior, shows the production of local structures that persist in time while traveling across the cell sites. Also, interactions between the structures are also seen. The value of , for which the peaking of is observed, we believe is a region of enhancement of computation capabilities of the system at the EOC.

Large comes as a result of pattern prevalence over disorder: a high disorder configuration has a large entropy density estimated by and almost zero excess entropy estimated by . This relation between entropy rate and excess entropy has been discussed numerous times in the pastcrutchfield03 ; feldman08 ; crutchfield12 . In a random sequence, there is no correlation between sites values at any length scale, and therefore, no mutual information can be found between two halves of the sequence. An increase in excess entropy can come from the formation of patterns not involving symbol erasure. If we relate computational capability, as stated in the introduction, as the storage, transmission, and manipulation of information, then the excess entropy increase signals an enhancement of such capabilities. Indeed, an increase in excess entropy means larger mutual information between two halves of a sequence which is directly related to storage and, less directly, to transmission of information. As excess entropy is also related to pattern formation, it can also signal the manipulation of information. So, the increase of is related to the enhancement of computation. The second condition that must be tested is that the jump in entropy density is witnessing a phase transition to a chaotic regime.

In order to test the hypothesis of transition to a chaotic regime, we performed the following simulation: the initial configuration was perturbed by changing one cell value in the cell array, and the system was left to evolve the same amount of time steps. We then calculated the Lempel-Ziv distance between the final configuration of the non perturbed and perturbed system. A high value of shows high sensitivity to initial conditions while the opposite is also true, low values of Lempel-Ziv distance is taken as showing low sensitivity to initial conditions. A similar procedure was used by Packard packard88 and Crutchfield et al. crutchfield93 using a different parameter based on Hamming distance and called difference-pattern spreading rate . As discussed above, differs from in that the former is not Hamming distance related but instead, is based in how innovative is one sequence with respect to the other concerning pattern production estevez15 . In all our simulations, does not have the fluctuations reported for . In the two upper plot of figure 2 the excess entropy and the as a function of the parameter are shown. At the same values of where the reaches for a maximum, the also jumps in a step-like manner. For the range of () with low entropy rate, a low sensitivity to perturbation is shown by . High sensitivity to initial conditions is a fingerprint of chaotic behavior. As soon as jumps, so does , so the high entropy region is also a region with chaotic behavior. The region of peaked has intermediate values of entropy rate and .

Low values of entropy rate can come as a result of the erasing of one symbol, say , at the expense of the other. In order to clarify how much of the entropy rate behavior could be due to the erasure (production) of s (s) the entropy rate for random arrangements of cell values, with different symbol density, was calculated for the whole range of possible values. In figure 2b, the curve labeled is the entropy rate for the random arrangement having the same symbol density as the corresponding CA sequence with entropy density for the given value. The difference between both curves is a measure of how much lowering of the entropy rate is due to the structuring of the spatial cell values independent of the production of one symbol. Such a difference has been called multi-information in other contexts melchert15 . It must be noted that just before the entropy jump, in the region of the EOC, multi-information increases significantly as jumps before .

Besides the sensitivity to initial conditions, we looked for an additional criterion that points to the idea that after the peak, the system is indeed in chaotic behavior. A dynamical system is said to be chaotic if and only if it is transitive and its set of periodic points is dense in the phase space dubaq01 . For a system to be transitive, it is not hard to see that surjectivity is a necessary condition. Hedlund hedlund69 proved that a one dimensional discrete CA is surjective if and only if it is -balanced for all . We say that a discrete CA with radius is -balanced if , where for a set , means cardinality. For the binary alphabet used here, -balance means that the rule table will have the same number of s and s, namely . To prove -balance, a random initial configuration is chosen, such that the density of either symbol is , all spatial configurations up to time step , must conserve the same density for the symbols. Rule is -balanced but is not -balanced for . For continuous CA, proving -balance for all can be more involved as time evolution occurs over a continuous state cell array, but the output is the discrete binary projection of the continuous cell array. We use the following criteria: if starting with a random initial binary configuration, after a sufficient number of time steps, the spatial configurations of the discrete projection preserves the density of both symbol at , then the continuous rule is taken to be -balanced. If we assume that Hedlund condition is still necessary, we need to prove that the high entropy states in figure 2 are -balanced, while the low entropy states are not. We performed such test numerically and found it to be true. The compliance of Hedlund rule, together with the sensitivity to the initial conditions points to the idea that certainly the values of where has a peaked maximum, is a region in the EOC.

If -balance of the CA is a necessary condition for chaotic behavior, then CA rules which are not -balanced for more than one entry will never achieve such balance by changing a single entry in the rule table. Of the neighboring CA to rule that is the case for rules , and where indeed we did not find any chaotic regime upon continuous deformation of any entry in the rule table and therefore no EOC enhancement (See supplementary material). Furthermore, it could be hypothesized that non-chaotic rules that are -balanced or can be brought to -balance by the deformation of a single entry in the rule table, are those were a transformation to a chaotic regime could be expected. Such is the case in the set of ECA for thirty-seven rules including rule but also rules and which are also neighbors of rule . We have found a chaotic transition for rule at the rule table entry site corresponding to the triplet entry, and also enhancement at the EOC for this transition. Strong fluctuations in density were found in the EOC region, differing from rule . The chaotic transition was also found for rule together with a smaller but clear peak of at the EOC.

IV.2 Non-linear locally coupled oscillators (NLCO)

Next, we set to explore if the same enhancement of computational capabilities at the EOC could be found in a system of non-linear coupled oscillators introduced by Alonsoalonso17 . Alonso has argued about the similarities in the behavior of the NLCO and the CA. Coupled nonlinear oscillators have been extensively studied in relation with many natural phenomena such as chemical reactions, neural brain activity, insects synchronization mosekilde02 . The model used here consist in a system of locally coupled oscillators using, under the weak coupling assumption, a simply modified Adler equation adler73 . The system exhibits, for certain ranges of values of its control parameters, complex behavior. The local nature of the coupling, where the phase of one oscillator is directly related to two other neighbors, and the rich set of behaviors resembles that of the phenomena found in CA estevez18 . The array of coupled oscillators obeys, for the phase evolution, a system of equations given by

| (11) |

is the proper frequency of the oscillators, and controls the self-feedback of the oscillator relative to the coupling given by the third term in the right hand of equation (11). The number of oscillators is taken even, and the alternating term in the coupling term is to guarantee balance in the interaction of the array of oscillators. Cyclic boundary conditions are enforced. The state of the system is given by the value, at a given time, of the phases of each oscillator which will be observed indirectly by the activity given by . As done with the CA, a similar binary partition scheme is introduced: the mean value of the activity is measured for a given time step and used as a threshold to binarize the data.

Simulations were performed following the same criteria as in the CA case, coupled oscillators were left to evolve for discrete time steps while the activity of the oscillators was recorded. Averaging was performed over different random initial phases configurations. The system of equations (11) was solved numerically using a Runge-Kutta method of order .

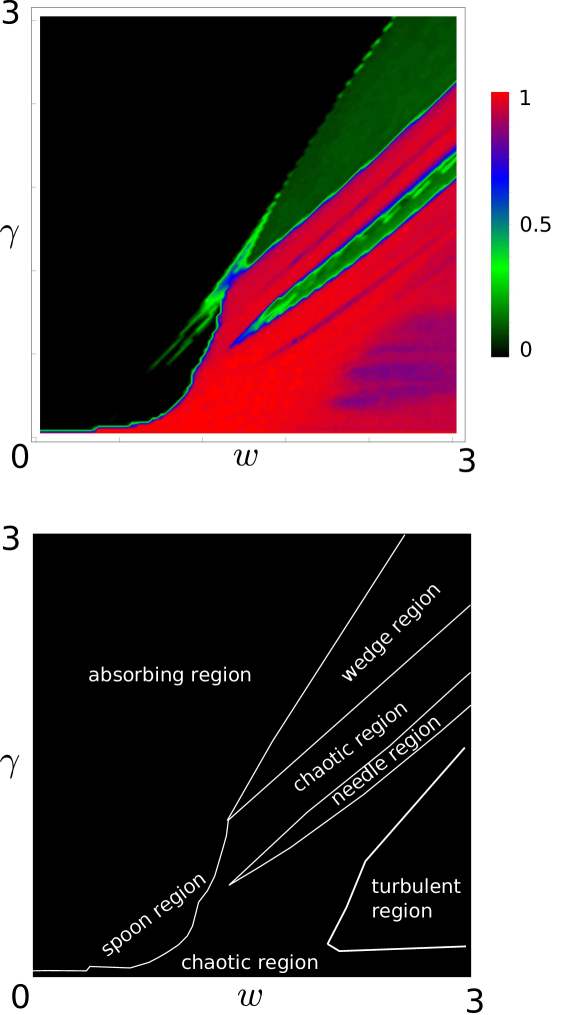

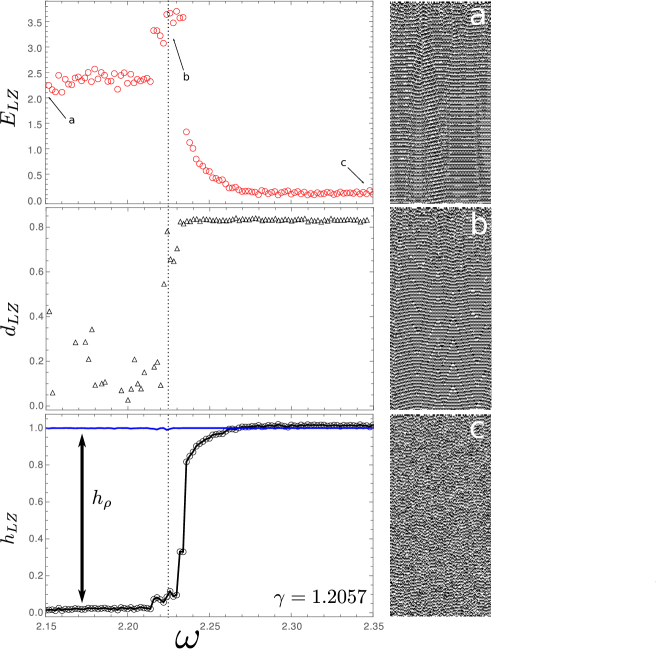

In the case of the oscillators, the variables vary continuously, and the control parameters space is therefore continuous. A detailed study of the “phase” diagram has been done before (Figure 3 shows the phase diagram taken from Ref. estevez18, ). There are several particular regions in the control parameter space. We focused on the needle-shaped region surrounded by a connected chaotic region (Region labeled as needle in Figure 3 below). Previous studies have indicated that the needle region exhibits low values of entropy rate and is insensitive to perturbations in the initial configuration of the oscillators, while the surrounding chaotic region has high entropy rate and high values (See figures 3 and 7 in Estevez et al. estevez18 ). We chose a fixed value of and let vary between and , enough to make a transition from the needle region to the chaotic region. Figure 4 shows the same type of plots as those shown in the CA case. Again a peaked shaped maximum of is witnessed at the same values where the entropy rate has a jump from low values to high disorder attaining the maximum possible value of . The peaked maximum of also coincides with the jump in the values. The spatiotemporal diagrams at the transition point (Fig. 4 left b and supplementary material) shows waves travelling across time and across the cell sites together with some local travelling structures, for smaller values of (Fig. 4 left a and supplementary material) the local structures have disappeared and for larger values (Fig. 4 left c and supplementary material) a chaotic regime can be identified.

In the case of the NLCO, no significant change in the production of one symbol is found, and the density of s is more or less constant; therefore the decrease of for the smaller values of is a result of correlations between the oscillators phases.

Similar to the CA, enhancement of computation at the EOC is also found.

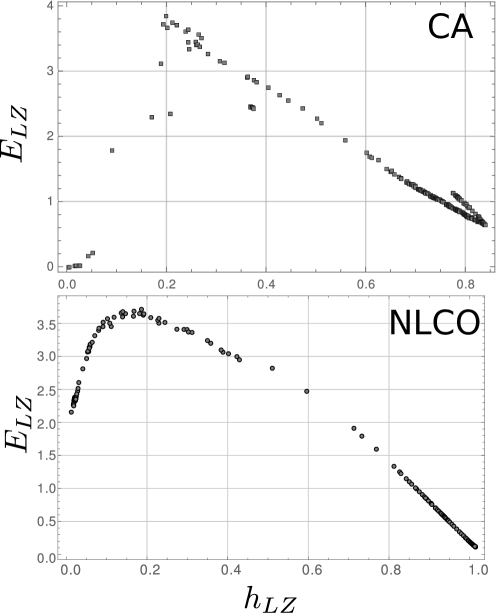

V Complexity-entropy maps

We finally look into the complexity-entropy map crutchfield12 of both systems. The entropy map for cellular automaton rule is shown in figure 5a. Rule has a similar diagram. The map was calculated by continuously varying the sixth entry in the rule table. Feldman et al. feldman08 discussed the interpretation of complexity-entropy diagrams; it measures the system intrinsic computation capabilities in a parameterless way. As already discussed, we also found enhanced computation at the EOC for rule (See supplementary material). The complexity-entropy map of figure 5a is typical of systems transforming from non-chaotic to a chaotic regime, the reader may compare our plot with that reported by Crutchfield and Young for the logistic map exhibiting the well-known period-doubling cascading crutchfield89 . For values of entropy rate near zero, the system is rather simple, and the values are small. It is only with the introduction of some unpredictability that the system can accommodate more complex behavior. It is precisely at those value around where the attains a maximum that corresponds to the EOC. However, it is only to a certain value that a system can accommodate randomness while attaining higher intrinsic computation capability.

Further increase of entropy rate results in the decrease of the system intrinsic computation capability and at , is again at zero. A similar interpretation can be made of the complexity-entropy diagram for the NLCO the main difference is that the curve is smoother pointing to a continuous first derivative. The striking similarity between our plots and those reported by Crutchfield and Young for well-studied systems, further points to the fact that the studied systems undergo a transition to chaotic behavior as the parameter is varied.

VI Conclusions

In conclusion, we have argued for the existence of enhanced computation at the edge of chaos for continuously deformed cellular automata, different from the reports of Langton and Packard. By using a continuously varying parameter that allows transforming from one CA rule to another, geometry in the CA space was recovered. Our approach does not carry the limitations pointed out against Langton studies. The evidence heavily points to the existence, for specific CA rules, of a transition towards a chaotic regime where enhanced intrinsic computation can be found at the EOC. We directly measured such enhancement by looking into a measure of structural complexity while following the behavior of the entropy rate of the system. -balanced analysis seems to be a useful tool to identify other rules where the same phenomena could be expected. The analysis was extended to a system of nonlinear coupled oscillators whose behavior has a strong resemblance to CA spatiotemporal patterns. For this system also improved intrinsic computation at the EOC was identified. The complexity-entropy diagrams show a striking resemblance to the ones reported for well studied non-linear systems were chaotic transitions are known to happen.

VII Acknowledgments

This work was partially financed by FAPEMIG under the project BPV-00047-13. EER which to thank PVE/CAPES for financial support under the grant 1149-14-8. Infrastructure support was given under project FAPEMIG APQ-02256-12.

References

- [1] J. P. Crutchfield and K. Young. Computation at the onset of chaos. In W. H. Zurek, editor, Complexity, entropy, and the physics of information, pages 223–269. Addison Wesley, Redwood City, 1990.

- [2] C. G.Langton. Computation at the edge of chaos. Physica D, 42:12–37, 1990.

- [3] S. A. Kauffman and S. Johnson. Co-evolution to the edge of chaos: Coupled fitness landscapes, pised states, and co-evolutionary avalanches. In C.G Langton, G. Taylor, J. Doyne Farmer, and S. Rasmussen, editors, Artificial Life II, pages 325–368. Addison Wesley, Redwood City, 1992.

- [4] J. P. Crutchfield, P. T. Haber, and M. Mitchell. Revisiting the edge of chaos: evolving cellular automata to perform computations. Comput. Systems, 7:89–130, 1993.

- [5] J. P. Crutchfield. Between order and chaos. Nature, 8:17–24, 2012.

- [6] Z. Su, R. W. Rollins, and E. R. Hunt. Universal properties at the inset of chaos in diode resonator systems. Phys. Rev. A, 40:2689–2697, 1989.

- [7] R. V. Sole, S. C. Manrubia, B. Luque, J. Delgado, and J. Bascompte. Phase transitions and complex systems. Complexity, XX:13–26, 1996.

- [8] N. Bertschinger and T. Natschlager. Real time computation at the edge of chaos inrecurrent neural networks. Neural computation, 16:1413–1436, 2004.

- [9] J. M. Beggs. The criticality hypothesis: how local cortical networks might optimiza information processing. Phil. Trans. of the Royal Soc. of London A: Math, Phys. and Eng. Science, 366:329–343, 2008.

- [10] J. Boedecker, O. Obst, J. T. Lizier, N. M. Mayer, and M. Asada. Information processing in echo state networks at the edge of chaos. Theory in Bioscience, 131:205–213, 2012.

- [11] S. Wolfram. A new kind of science. Wolfram media Inc., Champaign, Illinois, 2002.

- [12] N. H.Packard. Adaptation towards the edge of chaos. In J. A. S. Kelso, A. J. Mandell, and M. F. Shlesinger, editors, Dynamic patterns in complex systems, pages 293–301. World Scientific, Singapore, 1988.

- [13] L. M. Alonso. Complex behavior in chains of nonlinear oscillators. Chaos, DOI: 10.1063/1.4984800, 2017.

- [14] M. Cook. Univrersality in elementary cellular automata. Complex Systems, 15:1–40, 2004.

- [15] C. Savage. A survey of combinatorial gray codes. SIAM Rev., 39:605–629, 1997.

- [16] J. Pedersen. Continous transitions of cellular automata. Complex systems, 4:653–665, 1990.

- [17] J. P. Crutchfield and D. Feldman. Regularities unseen, randomness observed: Levels of entropy convergence. Chaos, 13:25–54, 2003.

- [18] T. M. Cover and J. A. Thomas. Elements of information theory. Second edition. Wiley Interscience, New Jersey, 2006.

- [19] T. Schurmann and P. Grassberg. Entropy estimation of symbol sequence. Chaos, 6:414–427, 1999.

- [20] P. E. Rapp, C. J. Cellucci, K. E. Korslund, T. A. A. Watanabe, and M. A. Jimenez-Montano. Effective normalization of complexity measurements for epoch length and sampling frequency. Phys. Rev. E, 64:016209–016217, 2001.

- [21] A. Lesne, J.L.Blanc, and L. Pezard. Entropy estimation of very short symbolic sequences. Phys. Rev. E, 79:046208–046217, 2009.

- [22] F. Kaspar and H. G. Schuster. Easily calculable measure for the complexity of spatiotemporal patterns. Phys. Rev. A, 36:842–848, 1987.

- [23] E. Estevez-Rams, R. Lora-Serrano, C. A. J. Nunes, and B. Aragón-Fernández. Lempel-Ziv complexity analysis of one dimensional cellular automata. Chaos, 25:123106–123116, 2015.

- [24] A. Lempel and J. Ziv. On the complexity of finite sequences. IEEE Trans. Inf. Th., IT-22:75–81, 1976.

- [25] J. Ziv. Coding theorems for individual sequences. IEEE Trans. Inf. Th., IT-24:405–412, 1978.

- [26] J. M. Amigo and M. B. Kennel. Variance estimators for the lempel ziv entropy rate estimator. Chaos, 16:43102–43108, 2006.

- [27] P. Grassberger. Towards a quantitative theory of self-generated complexity. Int. J. Theo. Phys., 25:907–938, 1986.

- [28] O. Melchert and A. K. Hartmann. Analysis of the phase transition in the two-dimensional ising ferromagnet using a lempel-ziv string-parsing scheme and black-box data-compression utilities. Phys. Rev. E, 91:023306–023317, 2015.

- [29] A. N. Kolmogorov. Three approaches to the concept of the amount of information. Probl. Inf. Transm. (English Trans.)., 1:1–7, 1965.

- [30] M. Li, X. Chen, X. Li, B. Ma, and P. M. B. Vitanyi. The similarity metric. IEEE Trans. Inf. Th., 50:3250–3264, 2004.

- [31] D. P. Feldman, C. S. McTeque, and J. P. Crutchfield. The organization of intrinsic computation: complexity-entropy diagrams and the diversity of natural information processing. Chaos, 18:043106–043121, 2008.

- [32] J.-C Dubacq, B. Durand, and E. Formenti. Kolmogorov complexity and cellular automata classification. Th. Comp. Science, 259:271–285, 2001.

- [33] G. A. Hedlund. Endomorphism and automorphism of the shift dynamical systems. Math. Systems Theory, 3:320–375, 1969.

- [34] E. Mosekilde, Y. MAistrenko, and D. Postnov. Chaotic Synchronization: Application to Living Systems. World Scientific, Singapore, 2006.

- [35] R. Adler. A study of locking phenomena in oscillators. Proc. of the IEEE, 61:1380–1385, 1973.

- [36] E. Estevez-Rams, D. Estevez-Moya, and B. Aragon-Fernandez. Phenomenology of coupled nonlinear oscillators. Chaos, 28:23110–23121, 2018.

- [37] J. P. Crutchfield and K. Young. Inferring statistical complexity. Phys. Rev. Lett, 63:105–108, 1989.