Compute-in-Memory based Neural Network Accelerators for Safety-Critical Systems: Worst-Case Scenarios and Protections

Abstract

Emerging non-volatile memory (NVM)-based Computing-in-Memory (CiM) architectures show substantial promise in accelerating deep neural networks (DNNs) due to their exceptional energy efficiency. However, NVM devices are prone to device variations. Consequently, the actual DNN weights mapped to NVM devices can differ considerably from their targeted values, inducing significant performance degradation. Many existing solutions aim to optimize average performance amidst device variations, which is a suitable strategy for general-purpose conditions. However, the worst-case performance that is crucial for safety-critical applications is largely overlooked in current research. In this study, we define the problem of pinpointing the worst-case performance of CiM DNN accelerators affected by device variations. Additionally, we introduce a strategy to identify a specific pattern of the device value deviations in the complex, high-dimensional value deviation space, responsible for this worst-case outcome. Our findings reveal that even subtle device variations can precipitate a dramatic decline in DNN accuracy, posing risks for CiM-based platforms in supporting safety-critical applications. Notably, we observe that prevailing techniques to bolster average DNN performance in CiM accelerators fall short in enhancing worst-case scenarios. In light of this issue, we propose a novel worst-case-aware training technique named A-TRICE that efficiently combines adversarial training and noise-injection training with right-censored Gaussian noise to improve the DNN accuracy in the worst-case scenarios. Our experimental results demonstrate that A-TRICE improves the worst-case accuracy under device variations by up to 33%.

Index Terms:

Hardware/Software co-design, memory, noise analysis, embedded systemsI Introductions

Deep Neural Networks (DNNs) have achieved unparalleled success in various perception tasks, such as object detection, speech recognition, and image classification. As a result, there is a growing trend to harness DNNs for edge applications, which span smart sensors, smartphones, automobiles, and the like [1, 2]. However, the limited computational resources and power constraints of edge platforms mean that CPUs or GPUs may not always be the optimal choice for deploying computation-intensive DNNs on these devices.

An appealing alternative for edge DNN deployment is the Compute-in-Memory (CiM) DNN accelerators [3]. They offer the advantage of minimizing data movement through in-situ weight data access [4]. By incorporating non-volatile memory (NVM) devices, such as phase-change memories (PCMs), resistive random-access memories (RRAMs), and ferroelectric field-effect transistors (FeFETs), CiM can outperform conventional MOSFET-based designs in terms of memory density and energy efficiency [5]. Nonetheless, the reliability of NVM devices remains a concern. Specifically, there exist issues including device-to-device variations stemming from fabrication defects, and cycle-to-cycle variations due to devices’ inherent unpredictability. This stochastic behavior causes device value deviations from the targeted device value (e.g., targeted device conductance used to represent DNN weights) and finally results in the difference of weight values in DNN inference. If not addressed aptly, the deviation of weight values during computations from their targeted values can lead to a pronounced decline in performance.

To assess the robustness of CiM DNN accelerators, researchers often resort to a Monte Carlo (MC) simulation-based evaluation approach [6]. A device variation model is derived from physical measurements. In every MC iteration, a device instance is sampled from the variation model, and the DNN performance metrics are recorded. This process is repeated multiple times, often thousands until the DNN performance distribution stabilizes. Current methodologies [7, 8, 9, 10, 11] typically involve up to 10,000 MC iterations, making the process highly time-intensive. Some researchers employ Bayesian Neural Networks (BNNs) for robustness evaluation against device variations [12]. However, the variational inference process in BNNs is essentially a type of MC simulation.

Numerous strategies have been introduced to enhance the average performance of CiM DNN accelerators under the influence of device variations. These strategies generally bifurcate into two main categories: (1) minimizing device value deviations, and (2) improving DNN robustness. A widely-used method to reduce device value deviation is write-verify [13], where iterative write and read (verify) operations ensure that the final difference between the weights programmed into the devices and the intended values remains within a pre-defined limit. Employing write-verify has proven effective, reducing weight value differences to below 3% and limiting average DNN performance reduction to less than 0.5% [13]. To enhance DNN robustness, there is a plethora of techniques. Neural architecture search [14, 15], for instance, has been designed to automatically sift through a specified search space to identify more resilient DNN architectures. Variation-aware training [16, 10] introduces device variation-induced weight fluctuations during training, ensuring the resulting DNN weights are fortified against similar perturbations. Additional strategies encompass on-chip in-situ training [17], which directly trains DNNs on inherently noisy devices, and Bayesian Neural Network (BNN) methods that leverage the BNN’s variational training process to enhance DNN robustness [12].

All the evaluation and robustness enhancement methods commonly target the average performance of a DNN with the influence of device variations, suitable for general-purpose conditions. However, in safety-critical applications where failure might lead to loss of life (such as in nuclear systems, aircraft flight control, and medical devices), significant environmental harm, or property damage, relying solely on average performance is insufficient. It is essential to consider the worst-case performance, irrespective of its probability [18]. Addressing this concern presents a challenge: Due to the extremely high dimensionality of the device value deviation space, hoping to capture the worst-case scenario via MC simulations is infeasible. As depicted in Fig. 1, for various DNNs across different datasets, even after convergence is achieved with 100K MC runs, the highest discovered DNN top-1 error rate remains notably higher compared to what our method (to be discussed later in this paper) identifies, where the worst-case error approaches 100%.

Despite the importance of the problem, it remains largely overlooked in existing research. The scarce related work approaches the problem from a security perspective [19], where a weight-projected gradient descent (PGD) method identifies weight perturbations that cause input misclassifications. However, this strategy aims to generate a successful weight perturbation attack rather than pinpoint the worst-case scenario amidst all potential variations.

To fill the gap, we introduce an optimization-based analysis framework in this paper, tailored to efficiently identify the worst-case performance of DNNs in a CiM accelerator, in the scenario where the maximum weight variations are bound by write-verify. Our research highlights that this challenge can be delineated as a constrained optimization problem with a non-differentiable objective. Remarkably, it is amenable to relaxation and the finally relaxed problem can be resolved through gradient-based optimization. Because this optimization problem is solved by a Lagrange multiplier-based method, we name our framework of evaluating the worst-case performance to be Lagrange-based Worst-Case analysis (LWC). Our experiments span various networks and datasets, especially focusing on a prevalent setup where each device encodes 2 bits of data under write-verify, producing a peak weight perturbation magnitude of 0.03 [13]. Notably, Weight PGD [19], the only method of relevance in the literature, can unearth worst-case scenarios where the accuracy mirrors that of a random guess. In contrast, our approach can unveil scenarios where accuracy verges on zero. However, our method (LWC) requires iterative tuning of the Lagrange multiplier, making it inefficient to use if it needs to be called upon multiple times (e.g., in hyper-parameter tuning). Therefore, we further propose a rapid worst-case evaluation scheme named F-LWC, based on the fast gradient sign method (FGSM). F-LWC can efficiently estimate the worst-case performance of DNN models with the influence of device variations, offering an evaluation precision comparable to LWC with a 2x speedup and further offering a 10x speedup with a reasonable evaluation precision.

Moreover, as highlighted in our previous work [20], the existing methods aiming at improving the robustness of DNNs under the influence of device variations do not effectively enhance the worst-case performance. Notably, the two primary solutions, reducing device value deviations through more rigorous write-verify procedures and implementing variation-aware training—either incur significant overheads or fall short in terms of effectiveness, underscoring the need for additional research. Our recent study [21] demonstrates that a unique noise-injection training approach, which introduces right-censored Gaussian (RCG) noise to DNN weights during training, can boost the quantile (e.g., median) performance of DNN models under the influence of device variations. Based on this discovery, in this paper, we introduce a novel worst-case-aware training strategy named Adversarial Training with RIght-Censored Gaussian NoisE (A-TRICE). This strategy effectively improves the worst-case performance of DNN models. We first present a novel adversarial training approach based on our rapid worst-case evaluation method F-LWC. Subsequently, we merge the principles of RCG noise injection training and adversarial training to establish our comprehensive A-TRICE framework.

The major contributions of our study can be encapsulated as:

-

•

We are the first that formulate the problem of finding the worst-case performance in DNN CiM accelerators amidst device variations.

-

•

We introduce an adept gradient-based methodology LWC to navigate the intricacies of the non-differentiable optimization problem and determine the worst-case scenario.

-

•

Our experiments underscore the uniqueness of our framework in identifying the DNN’s worst-case performance.

-

•

We emphasize that, even when maximum weight perturbations are stringently constrained (as with write-verify), DNNs can still undergo a substantial dip in accuracy. Consequently, CiM accelerator deployments in safety-critical environments demand vigilance.

-

•

We propose a novel technique named F-LWC based on the fast-gradient sign method to efficiently estimate the worst-case performance. F-LWC achieves similar worst-case performance evaluation with a 2x speedup.

-

•

We develop a novel training method A-TRICE that improves the worst-case performance by up to 33%.

The structure of this paper unfolds as follows: Section II delves into the background information of CiM DNN accelerators, the robustness challenges spurred by device variations, and the current solutions addressing these concerns. Subsequent to this, in Section III, we formulate the problem of detecting the DNN’s worst-case performance amidst device variations and propose a framework LWC for its resolution, corroborated by experimental evidence. In Section IV, we first propose a fast evaluation framework F-LWC to estimate the worst-case performance so that we can then evaluate the effectiveness of existing methods in improving the worst-case performance. In light of their in-effectiveness, we further discuss our proposed method A-TRICE, and its effectiveness in the same section. We culminate our discourse with conclusive observations in Section V.

II Related Works

In this section, we first describe the overall structure and major building blocks of CiM DNN accelerators, then discuss their robustness challenges spurred by device variations, and finally introduce the current solutions addressing these concerns.

II-A Crossbar-based CiM Engine

The crossbar array serves as the major computational component of CiM DNN accelerators. This array can execute matrix-vector multiplications within a single clock cycle. In such an array, matrix values (e.g., DNN weights) are stored at the intersection of vertical and horizontal lines using NVM devices like RRAMs and FeFETs. Vector values, akin to DNN inputs, are introduced through horizontal data lines as voltage. The resultant output is then channeled through vertical lines in a current form. Computation within the crossbar array operates in the analog domain, following Kirchhoff’s laws. However, for other crucial DNN operations such as pooling and non-linear activation, peripheral digital circuits are employed. Thus, digital-to-analog and analog-to-digital converters (DACs and ADCs) bridge these different components, specifically with DACs transforming digital input data into voltage and ADCs converting analog output currents back to digital values.

Resistive crossbar arrays are susceptible to a variety of variations and noises. The predominant sources include spatial and temporal variations. Spatial variations, stemming from fabrication imperfections, exhibit both localized and widespread correlations across devices. Additionally, due to the unpredictability inherent in device materials, NVM devices experience temporal variations, leading to conductance fluctuations when programmed across different instances. Typically, these temporal variations are distinct between devices and are not influenced by the intended programming value [22]. For the scope of this study, we treat the effects of these non-idealities as independent across each NVM device. Nonetheless, our proposed framework can be adapted to address other variation sources with some alterations.

II-B Evaluating DNN Robustness Against Device Variations

The prevailing approach in current research utilizes Monte Carlo (MC) simulations to evaluate the resilience of CiM DNN accelerators against device variations. Initially, models for device variation and circuitry are established based on physical measurements. The DNN being assessed is then mapped onto the circuit model, facilitating the determination of the desired value for each NVM device. In each MC iteration, for every device, a sample of its non-ideal state is drawn from the variation model to set the actual conductance value. Subsequently, metrics reflecting DNN performance, like classification accuracy, are recorded. This process is repeated, often thousands of times, until a consistent DNN performance distribution is achieved. Conventional methods cited in existing studies [7, 14, 10, 9, 11] typically involve around 10,000 MC iterations, an extremely time-consuming process. Empirical studies [7, 14] suggest that 10k MC cycles are adequate for determining average DNN accuracy, though no concrete theoretical guarantees are provided.

Other scholars have also probed the effects of weight perturbations on the security of neural networks [23, 19]. Termed “Adversarial Weight Perturbation”, this research avenue seeks to relate weight perturbations to the well-researched topic of adversarial examples-based attacks, wherein DNN inputs are deliberately altered to induce misclassifications. One study [23] trained DNNs using adversarial examples to gather data on adversarial weight perturbation. More recently, [19] attempted to identify adversarial weight perturbation using an adapted weight Projected Gradient Descent (PGD) attack. This approach can pinpoint a minor perturbation capable of diminishing DNN accuracy. Nonetheless, while the study concentrates on the attack’s success, it does not provide a guaranteed assessment of the worst-case weight perturbation, as will be evidenced by our forthcoming experimental results.

II-C Addressing the Impact of Device Variations

Several strategies have been introduced to tackle the challenge of device variations in CiM DNN accelerators. This section briefly discusses the two predominant approaches: bolstering DNN robustness and curtailing device variations.

A prevalent technique to bolster DNN robustness in the face of device variations is variation-aware training[16, 6, 10, 24]. This method, sometimes referred to as noise-injection training, integrates variations into DNN weights during the training phase. This integration fosters a DNN model that exhibits statistical resilience to the effects of device variations. In each training iteration, a variation instance, drawn from a predefined distribution, augments the weights during the forward pass, leaving the backpropagation phase unaffected by noise. Upon gradient collection, this variation is discarded, and the pristine weight is updated based on the accrued gradients. Alternatives encompass the design of sturdier DNN architectures [16, 14, 12] and pruning techniques [8, 25].

In efforts to decrease device variations, the write-verify procedure[13, 17] is commonly employed during programming. Initially, an NVM device is programmed to a set state via a designated pulse pattern. Subsequent to this, the device’s value is verified to ascertain if its conductance aligns within a stipulated range of the desired value, essentially assessing its accuracy. If discrepancies arise, a supplemental update pulse is initiated to draw the device conductance nearer to the target. This loop persists until the disparity between the programmed device value and the target diminishes to a satisfactory margin, typically taking a handful of cycles. Cutting-edge research suggests that by selectively applying write-verify to a subset of pivotal devices, one can uphold the average accuracy of a DNN [11]. Additionally, a variety of circuit design initiatives [26, 27] have been put forth to counteract device variations.

III Evaluating Worst-Case Performance of CiM DNN Accelerators

A primary consequence of device variations is the deviation in the conductance of the NVM devices from their intended values. This discrepancy arises from device-to-device and cycle-to-cycle variations during programming. As a result, the weight values of a DNN experience perturbations, which subsequently influence its accuracy. In Section III-A, we start by modeling the effects of NVM device variations on weight perturbation, operating under the assumption that write-verify is employed to limit these variations. Building on this weight perturbation model, Section III-B formulates the challenge of pinpointing the minimum DNN accuracy in the face of weight perturbations, and we introduce a framework named Lagrange-based Worst-Case evaluation (LWC) for its resolution. Section III-C showcases the experimental findings.

III-A Modeling of Weight Perturbation Due to Device Variations

In this section, we present our model that delineates the effects of device variations on DNN weights. Specifically, our primary focus in this paper is on the discrepancies introduced during the programming process, where the conductance value programmed into the NVM devices deviates from the intended value.

For a weight depicted by bits, its desired value, denoted by , is given as

| (1) |

where is the value of the bit of the intended weight. Given that each NVM device can store data equivalent to bits, a single weight value in the DNN requires devices for representation111For simplicity, we assume that M is divisible by K.. This mapping procedure can be articulated as:

| (2) |

Here, denotes the intended conductance of the device encoding a particular weight. It is pertinent to note that the mapping strategy remains consistent even for negative weights.

Assuming that all devices employ the write-verify technique, the deviation between the genuine conductance of each device and its targeted value remains within specified bounds [22]:

| (3) |

In this equation, represents the actual programmed conductance, and indicates the permissible threshold set by write-verify.

When programming a weight, the true value, denoted by , as stored on the devices, can be described as:

| (4) | ||||

Here, is referred to as the weight perturbation bound in this paper.

III-B Problem Definition

Having established our weight perturbation model, we can proceed to delineate the task of determining the worst-case accuracy of a DNN. For simplicity, we denote a neural network by , where refers to its architecture and its set of weights. The forward pass, or computation, of this neural network is symbolized by , with as its inputs.

According to the model detailed in Section III-A, weight perturbations due to device variations are additive and independent. This allows us to represent the forward pass of a neural network under the influence of device variation as . Here, symbolizes the weight perturbation induced by these variations. The perturbed neural network is then defined as .

Based on the above elaborations, we formulate our problem as follows: For a given neural network and a reference dataset , identify the perturbation such that the accuracy of the perturbed neural network on dataset is the lowest, staying within the bounds of permissible weight perturbation. Throughout this document, we will reference this particular perturbation as the worst-case weight perturbation. Similarly, the resulting performance (or accuracy) will be termed the worst-case performance (or accuracy), and the neural network associated with it will be the worst-case neural network.

Given the problem definition, we can represent it as the following optimization problem:

| (5) | ||||

Here, and symbolize the input data and its corresponding classification label from the dataset , respectively. The term represents the maximum magnitude of the weight perturbation, denoted as . The value of is derived from the weight perturbation boundary as discussed in equation (4) from Section III-A. The notation signifies the cardinality (or size) of set . Given that , , and are constants in this scenario, our primary objective is to pinpoint the that diminishes the set of correct classifications to its lowest possible size, thereby leading to the most unfavorable accuracy.

III-C Finding the Worst-Case Performance

The optimization problem defined in equation (5) is challenging to solve directly due to its non-differentiable objective. In this section, we introduce a framework that reformulates the problem, allowing us to tackle it using existing optimization techniques.

Initially, we consider a slight relaxation of the objective. Assuming that there exists a function such that the condition holds true if and only if [28]. Consequently, the optimization objective in equation (5) can be recast as:

| (6) |

Intuitively, minimizing the value in equation (6) can drive the minimization of the objective in equation (5). These two optimization tasks converge in their aims when, under the influence of , all data points in are misclassified.

Several options for satisfy the given criteria. We present some notable examples below.

| (7) |

The function is defined as , and denotes the cross-entropy loss.

Based on empirical findings, in this paper, we opt for

| (8) |

The optimization problem is then relaxed to the form of:

| (9) |

For the relaxed problem, we can employ the Lagrange multiplier to offer an alternative representation:

| (10) |

given that is a carefully selected constant, the optimal solution is achievable. This objective corresponds to the relaxed problem, in which, for some , the optimal solution to the latter matches that of the former.

Consequently, we employ the optimization objective (10) as the relaxed alternative to our originally defined objective (5). Given that the objective (10) is differentiable with respect to , we utilize gradient descent as the optimization algorithm to address this issue.

Methods to determine constant .

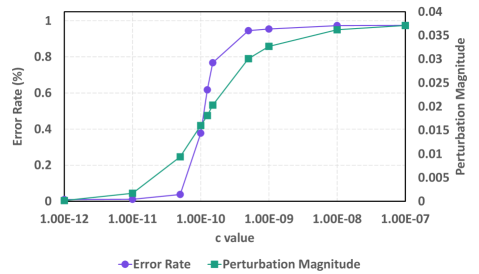

In qualitative terms, when examining objective (10), larger values of indicate a heightened emphasis on reducing accuracy and a diminished emphasis on . This typically leads to a decreased final accuracy and an increased value of . This observation is substantiated by the empirical results illustrated in Fig. 3. Here, we depict how the worst-case error rate and fluctuate based on the chosen , utilizing LeNet for MNIST as an example.

Given that empirical outcomes indicate displays monotonic behavior with respect to , our strategy to determine the optimal value that results in the minimum performance, subject to the weight perturbation constraint , is to employ binary search. Our goal is to identify the largest value that satisfies . The accuracy derived using this particular value represents the worst-case performance of the DNN model when weight perturbations are confined to .

It is worthwhile to note that since the optimization problem outlined in (10) is solvable through gradient descent, both the time and memory complexities of our method align closely with those required for training the DNN. This final framework of evaluating the worst-case performance is named Lagrange-based Worst-Case analysis (LWC).

| Dataset | Model | Ori. Acc. | Worst-case Accuracy (%) | Running Time (Minutes) | ||||

|---|---|---|---|---|---|---|---|---|

| MC | PGD | LWC | MC | PGD | LWC | |||

| MNIST | LeNet | 99.12 | 97.34 | 13.44 | 7.35 | 900 | 3.3 | 5.5 |

| CIFAR-10 | ConvNet | 85.31 | 60.12 | 10.00 | 4.27 | 2700 | 4.2 | 6.0 |

| CIFAR-10 | ResNet-18 | 95.14 | 88.77 | 10.00 | 0.00 | 5400 | 13.3 | 20.0 |

| Tiny ImageNet | ResNet-18 | 65.23 | 25.33 | 0.50 | 0.00 | 14400 | 40.0 | 60.0 |

| ImageNet | ResNet-18 | 69.75 | 43.98 | 0.10 | 0.00 | 231000 | 1980 | 2880 |

| ImageNet | VGG-16 | 71.59 | 66.43 | 0.10 | 0.06 | 313800 | 2530 | 3820 |

| Dataset | Model | c | lr | # of runs |

|---|---|---|---|---|

| MNIST | LeNet | 1E-3 | 1E-5 | 500 |

| CIFAR-10 | ConvNet | 1E-5 | 1E-5 | 100 |

| CIFAR-10 | ResNet-18 | 1E-9 | 1E-4 | 20 |

| Tiny ImgNet | ResNet-18 | 1E-10 | 1E-4 | 20 |

| ImageNet | ResNet-18 | 1E-3 | 1E-3 | 10 |

| ImageNet | VGG-16 | 1E-3 | 1E-3 | 10 |

III-D Experimental Evaluation

In this section, we evaluate the efficacy of our proposed LWC approach in determining the worst-case performance of various DNN models through experiments. We utilized six distinct DNN models across four datasets: LeNet [29] model targeting the MNIST [30] dataset, ConvNet [6] model targeting the CIFAR-10 [31] dataset, ResNet-18 [32] model targeting the CIFAR-10 dataset, ResNet-18 model targeting the Tiny ImageNet [33] dataset, ResNet-18 model targeting the ImageNet [34] dataset, and VGG-16 [35] model targeting the ImageNet dataset. The inference process of LeNet and ConvNet models use a quantization precision of 4 bits, for both weights and layer inputs. On the other hand, the ResNet-18 and VGG-16 models are quantized to 8 bits. For the purposes of this study, we adopt in line with [16, 11]. Following the established procedures detailed in Section II-C, for each weight, the discrepancy between the actual device value and the anticipated value is progressively programmed until it falls below 0.1. Specifically, this means as per [11], resulting in a value of 0.03 (unless stated otherwise). These figures align with those presented in [13], corroborating the authenticity of our modeling and the parameters employed.

Given the absence of prior research for identifying a DNN’s worst-case performance under device variations, besides the basic MC simulations, we also utilize a modified version of the weight PGD attack method [19]. This modified method aims to discern the minimal weight perturbation resulting in a successful attack, serving as an auxiliary reference point. All experiments were executed on Titan-XP GPUs using the PyTorch framework. For the MC simulation baseline, we employed 100,000 runs and leveraged the Adam optimizer for gradient descent. The comprehensive setup for our proposed LWC method is detailed in Table II.

III-D1 Worst-case DNN Accuracy Obtained by Different Methods

Table I reveals that our proposed LWC framework is superior in identifying the worst-case performance when compared to the weight PGD attack and MC simulations. For DNN models like LeNet and ConvNet, our method uncovers weight perturbations that plunge accuracy to below 10%. For ResNet-18 and VGG-16, the accuracy is almost 0%. In contrast, the weight PGD attack can only locate perturbations resulting in accuracy close to what one would expect by random guessing (i.e., 1/N for classifying images to N different classes, translating to 10% in the MNIST and CIFAR-10 dataset, 0.5% in the Tiny ImageNet dataset, and 0.1% in the ImageNet dataset). Methods using MC simulations are the least effective, as even after 100,000 runs, they do not approach the accuracy drops achieved by the other techniques. Given the expansive exploration space created by numerous weights, this outcome is unsurprising.

In terms of computation time, our framework requires slightly more time than the weight PGD attack, primarily due to the gradient descent’s convergence time. Nevertheless, both methods considerably outpace MC simulations in terms of execution time.

These findings indicate a significant vulnerability of DNNs to device variations, even when using the write-verify method and maintaining a maximum weight perturbation of only . Considering that even extensive MC simulations can not indentify the genuine worst-case accuracy, safety-critical applications may necessitate individual inspections of each programmed CiM accelerator to ensure its performance. Relying on random sampling for quality assurance may be too risky.

Furthermore, by comparing our framework’s results for ConvNet and ResNet-18 models on the CIFAR-10 dataset (and also VGG-16 and ResNet-18 models on the ImageNet dataset), it becomes evident that deeper networks are more vulnerable to weight perturbations. This trend aligns with the expectation that greater perturbations can accumulate during forward propagation.

Lastly, the experiments underscore that quantization of both weights and activations is not a reliable strategy for bolstering worst-case DNN performance, considering all the models in this study were quantized as specified in the experimental setup.

III-D2 Analysis of Classification Results

Upon closely analyzing the classification outcomes of the worst-case LeNet model identified by our framework for classifying the MNIST dataset, some intriguing observations emerge. A key aspect we scrutinized was classification confidence, whose distribution is illustrated in Fig. 4. Typically, a DNN’s classification confidence for one certain input image is gauged using the Softmax function applied to its output vector, with the element showcasing the highest score after the Softmax process deemed as the classification result and this score appointed to be the classification confidence. Surprisingly, the figure indicates that the worst-case LeNet confidently misclassifies all inputs, boasting a 0.90 average confidence score for incorrectly classified inputs. Conversely, for correctly classified inputs, its confidence is notably lower, averaging merely 0.47. This starkly contrasts with the original, unperturbed LeNet, which consistently displays confidence levels nearing 1 [29].

Further examination reveals intriguing patterns in classification distribution across different classes, detailed in Table III. The table underscores that a significant chunk of errors stems from images being erroneously categorized into the same class (class 1). Concurrently, a vast majority of images inherently belonging to this class are misclassified into other categories (specifically class 2 and class 3).

We anticipate that these insights will serve as valuable reference points, potentially guiding the creation of innovative algorithms designed to bolster the worst-case performance of DNNs in subsequent studies.

| Model Outputs | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ||

| Ground Truth Labels | 0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1 | 0.0 | 0.4 | 0.5 | 0.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 2 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 3 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 4 | 0.0 | 0.9 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.1 | 0.0 | 0.0 | |

| 5 | 0.0 | 0.9 | 0.0 | 0.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 6 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 7 | 0.0 | 0.6 | 0.0 | 0.4 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 8 | 0.0 | 0.8 | 0.0 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 9 | 0.0 | 0.9 | 0.0 | 0.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

III-D3 Distribution of Worst-Case Weight Perturbation

In this section, we present the distribution of perturbations among the weights. Here we demonstrate an example using the worst-case LeNet model for the MNIST dataset. As illustrated in Fig. 5, a majority (65%) of the weights are either left unperturbed or perturbed to their maximum possible extent, that is, .

Further, in Fig. 6, we detail the count of perturbed weights across different layers. A notable observation is that weights in the convolutional layers, as well as the final fully connected (FC) layer, are more frequently perturbed. This likely stems from their significant influence on the overall accuracy of the DNN.

IV Enhancing Worst-Case Performance of CiM DNN Accelerators

Several strategies have been proposed in existing literature aiming to enhance the average performance of DNNs under the influence of device variations. In this section, we aim to adapt these strategies to optimize the worst-case performance of DNNs and evaluate their efficacy. We will explore two main categories of methods: (1) Restricting device variations and (2) Training DNNs to be more resilient to device variations. From our discussions in Section II, write-verify emerges as a popular strategy in the first category. For the second approach, variation-aware training is commonly adopted. Before assessing the effectiveness of these methods, we introduce a novel evaluation technique designed to efficiently estimate the worst-case performance of DNN models under the influence of device variations. Utilizing this rapid evaluation method, we then appraise the efficacy of the existing approaches and demonstrate that they are either ineffective or overly expensive. As a result, they are unsuitable for enhancing worst-case performance. Based on these observations, we further propose incorporating a novel training method, TRICE [21], in conjunction with the adversarial training approach [19], to improve the worst-case performance. The combined worst-case-aware training method is named A-TRICE.

Unless otherwise indicated, all accuracy metrics presented in this section are derived from training a single DNN architecture with consistent specifications but using three distinct initializations. The accuracy (and error rate) figures are presented as percentages in the format [average standard deviation] for these three iterations. Note that although we use F-LWC to find the optimal hyper-parameters for each protection method, the final worst-case performance results are generated by the more precise LWC method.

IV-A Fast Evaluation Methods

While we can determine the worst-case performance of DNN models under the influence of device variations by using LWC, it still requires multiple gradient descent trials to identify the Lagrange multiplier constant . This introduces a considerable time overhead. Therefore, LWC is not cost-effective for evaluating the effectiveness of different protection methods, especially since this evaluation necessitates extensive hyper-parameter tuning. Each hyper-parameter selection must be assessed independently, so a swifter and more adaptable approach is preferred. To address this challenge, we introduce a relaxed version of LWC to estimate the worst-case performance. Instead of employing the solution from Eq. 10 for the constrained optimization problem in Eq. 9, we use a different approach. While LWC solves the optimization problem in Eq. 9 through the Lagrange multiplier, our proposed rapid evaluation technique employs the fast gradient sign method (FGSM) for this constrained optimization.

Specifically, to minimize Eq. 9 under the constraint , we can start with set to zero and continuously increase (or decrease) by a fixed step size until reaches . Because is relatively small, the convergence is typically reached in iterations. By controlling , a trade-off between execution time and precision can be achieved. The worst-case accuracy of the DNN model is then defined by the accuracy of the model with weights equal to . We term this method F-LWC. Further details of this approach can be found in Algorithm 1.

| Dataset | Model | Ori. | WC Acc. (%) | Time (Min) | ||

|---|---|---|---|---|---|---|

| Acc | LWC | F-LWC | LWC | F-LWC | ||

| MNIST | LeNet | 99.12 | 7.35 | 11.31 | 5.5 | 2.1 |

| CIFAR-10 | ConvNet | 85.31 | 4.27 | 1.51 | 6.0 | 3.0 |

| CIFAR-10 | ResNet-18 | 95.14 | 0.00 | 2.03 | 20.0 | 9.5 |

| Tiny ImageNet | ResNet-18 | 65.23 | 0.00 | 0.00 | 60.0 | 28.7 |

We evaluate the efficacy of F-LWC by contrasting it with LWC. Given that the weight perturbation distance adheres strictly to the constraint , a lower worst-case accuracy implies a more accurate evaluation method. As illustrated in Table IV, both F-LWC and LWCare used to assess the worst-case performance of four distinct DNN models. From an efficiency standpoint, F-LWC boasts a 2x speedup relative to LWC. Regarding accuracy, F-LWC identifies a worst-case scenario closely aligning with that identified by LWC in three models: the LeNet model targeting the MNIST dataset, the ResNet-18 model targeting the CIFAR-10 dataset, and the ResNet-18 model targeting the Tiny ImageNet dataset. Intriguingly, for the ConvNet model for CIFAR-10, F-LWC determines an even lower accuracy than LWC. This discrepancy is rational. LWC demands considerable hyper-parameter tuning, and the values reported in our prior work, which initially introduced LWC [20], may not represent the optimal outcome. However, we maintain this value for consistency with the reported results. Conversely, F-LWC demands minimal hyper-parameter tuning, making it simpler to pinpoint its optimal outcome.

Further illustrating F-LWC’s adaptability, we augment its step size. A larger step size equates to reduced runtime but less accurate estimation, thus presenting a trade-off between these aspects. In Fig. 7, we highlight that, even within a limited runtime, F-LWC still provides a reasonably accurate estimation.

IV-B Conventional Methods for Improving DNN Robustness

In this section, we demonstrate that all of the existing methods used to improve the nvCiM DNN accelerator robustness are not effective in improving the worst-case performance of DNN models. We first discuss the existing conventional methods and then demonstrate their effectiveness.

IV-B1 Stronger Write-Verify

As indicated in Table I, merely relying on the conventional write-verify procedure to restrict the maximum weight perturbation to 0.03 (as specified by in (4)) does not substantially boost the worst-case performance of DNN models. By designating a smaller for the write-verify process, the writing duration may increase. However, this could potentially enhance the DNN’s worst-case performance. More analysis are shown in Fig. 8.

IV-B2 Variation-Aware Training

We have discussed the idea and details of the noise-injection training-based variation-aware training method, where Gaussian noises are injected in the forward and backward process of DNN training to improve the DNN robustness.

IV-B3 Adversarial Training

| Dataset | Model | Regular | VA | ADV |

|---|---|---|---|---|

| MNIST | LeNet | 7.3503.70 | 81.5800.80 | 98.2601.05 |

| CIFAR10 | ConvNet | 4.2700.33 | 3.71 3.76 | 67.0903.85 |

| CIFAR10 | ResNet18 | 0.0000.00 | 2.84 1.20 | 34.8413.20 |

| Tiny IN | ResNet18 | 0.0000.00 | 3.5703.48 | 7.4108.10 |

Here we introduce a modified version of the adversarial training method [19]. Adversarial training is traditionally employed to counteract adversarial input, as summarized in Alg. 2. Drawing parallels to the way adversarial training addresses input perturbations, we inject worst-case perturbations into the weights of a DNN during the training process, aiming to enhance its performance amidst device variations. Specifically, during each training iteration, we first employ LWC to identify the perturbations on the current weights that result in the model’s worst-case accuracy. Subsequently, we integrate these perturbations into the weights and gather the gradient .

Table V demonstrates that both variation-aware training and adversarial training generally yield improvements over regular training. Adversarial training exhibits a slightly superior performance in comparison to variation-aware training. Nevertheless, when benchmarked against the optimal accuracy achievable by these networks in the absence of device variations (refer to the third column in Table I), there remains a notable decline in worst-case accuracy. A notable exception is observed in the LeNet model for MNIST. In this model, adversarial training almost entirely compensates for the accuracy reduction even under worst-case scenarios, a result attributable to the model’s simplicity. Furthermore, as the complexity of the network increases, the enhancements offered by both training methods appear to wane, as evidenced by the mere improvement for ResNet-18 on the Tiny ImageNet dataset.

The blue dashed curve in Fig. 8 shows the effectiveness of stronger write-verify. Stronger write-verify can be effective when the write-verify is done with very strong effort (can provide a very tight bound in device value). Specifically, stronger write-verify can preserve high enough accuracy for the LeNet model when , which would require significant programming time. Stronger write-verify is not effective for larger models like ConvNet and ResNet-18 even with this threshold value. Note that the maximum shown in the figure is , which is greater than previous experiments. This can be viewed as a weaker write-verify scheme.

IV-C A-TRICE Algorithm

As demonstrated in Sect. IV-B, conventional methods are not effective in improving the worst-case performance of DNN models under the influence of device variations. Here we further propose a novel training method named Adversarial Training with RIght-Censored Gaussian NoisE (A-TRICE).

IV-C1 A-TRICE Method

To improve the worst-case performance, we resort to our recent work TRICE [21] which can effectively improve the quantile performance of DNN models. The key to this method is to inject a novel type of noise named Right-Censored Gaussian (RCG) noise instead of the widely used Gaussian noise in the training process. In an RCG distribution, all values follow the Gaussian distribution except that those greater than a certain threshold are set (censored) to be the threshold value. Formally, we have:

| (11) | ||||

where is the use-defined cutoff value and is the standard deviation of the corresponding Gaussian distribution. Although TRICE was originally proposed to improve the percentile performance of DNN models, it is also effective when the percentile is small, mimicking a worst-case scenario.

We incorporate a novel efficient adversarial training method into the TRICE framework so that the resultant A-TRICE framework can efficiently improve the worst-case performance of DNN models. A-TRICE aggregates the adversarial training and noise injection training in one training pass and balances the contribution of these two methods using a coefficient when summing the loss function of the two methods. Specifically, in each iteration of training, A-TRICE first uses the batched input data to approximate a model that would result in the worst-case accuracy using F-LWC. It then performs an adversarial training pass using the worst-case model and collects a resultant . After that, A-TRICE samples a noise instance from RCG distribution, injects this noise into the training process, and collects a resultant . The two resultant losses are then combined using a weighted sum and A-TRICE then use this sum to update the weights through gradient descent. The detailed algorithm is shown in Alg. 3.

IV-C2 Effectiveness of A-TRICE

Here we demonstrate the effectiveness of A-TRICE by comparing it with two baseline methods: vanilla DNN training and variation-aware training that injects Gaussian noise into training. We did not compare A-TRICE with adversarial training because adversarial training is a special case of A-TRICE when the trade-off coefficient .

The comparison result is shown in Fig. 8. Specifically, in Fig. 8(a), we compare A-TRICE with baseline methods in improving the worst-case performance of the LeNet model targeting the MNIST dataset. This experiment shows that variation-aware training is already effective in improving the worst-case performance of this model if provided a tight write-verify bound (). A-TRICE further improves the worst-case accuracy by 30% when is large. Fig. 8(b) demonstrates the comparison between A-TRICE and baseline methods for model ConvNet targeting the CIFAR-10 dataset. We observe that variation-aware training is not effective in improving the worst-case performance of this model, only improving the accuracy by up to 10%. A-TRICE further improves the worst-case accuracy by up to 33%. Finally, we compare A-TRICE with baseline methods in improving the worst-case performance of the ResNet-18 model targeting the CIFAR-10 dataset. The result is shown in Fig. 8(c). We observe that all methods are not fully effective in improving the worst-case performance when is large, but the variation-aware training method is able to improve the worst-case performance by up to 15%, compared with the vanilla training method, and A-TRICE further improves the worst-case performance by up to 31%.

IV-C3 Ablation study

To further evaluate the contribution of introducing adversarial training into the TRICE framework, we perform an ablation study that compares A-TRICE with our previously proposed TRICE framework. We compare A-TRICE with TRICE in improving the worst-case performance of the ConvNet model targeting the CIFAR-10 model in Table VI. We can observe that TRICE and our proposed A-TRICE are both effective in improving the worst-case performance of the ConvNet model. TRICE improves the worst-case accuracy by up to 22% compared with the vanilla training method. Although not shown in the table, this also means a 12% improvement compared with variation-aware training. A-TRICE is even more effective, further improving the worst-case accuracy by up to 21%.

IV-C4 Discussions

In the previous experiments, we demonstrate that A-TRICE is far more effective than all other alternatives and is thus the most promising candidate for improving the worst-case performance. However, we can also observe that even with A-TRICE, the worst-case performance can still be very low for some models when the write-verify bound is reasonably large (e.g., ). Thus, finding more effective methods to address the worst-case performance issue is still an open problem. We also observe that some DNN architectures are more prone to be affected by device variations. For example, when using the same dataset, ResNets have much lower worst-case performance than plain CNNs. We advocate that designers proceed with caution when using ResNets for safety-critical issues.

| Dev. var. | Training Method | ||

|---|---|---|---|

| () | w/o noise | TRICE | A-TRICE |

| 0.00 | 85.93 | 84.52 | 84.17 |

| 0.10 | 12.20 | 34.16 | 56.05 |

| 0.20 | 3.34 | 8.86 | 16.05 |

| 0.30 | 1.51 | 4.30 | 10.13 |

| 0.40 | 0.68 | 2.85 | 10.74 |

V Conclusions

In this paper, instead of focusing on the average performance of DNNs under device variations in CiM accelerators, we introduce an efficient framework to evaluate their worst-case performance, a crucial metric for safety-critical applications. Through our study, we reveal that even with tightly bounded weight perturbations post write-verify, the accuracy of a well-trained DNN can plummet dramatically. Consequently, caution is advised when implementing CiM accelerators in safety-critical scenarios. For instance, during chip quality control after production, it may be prudent to verify the accuracy of each chip individually instead of relying on random sampling. We further evaluate the current techniques aiming at boosting the average DNN performance in CiM accelerators. While doing so, we further propose a fast estimation method of the worst-case scenario to evaluate the influence of using different hyper-parameters for certain robustness-improving methods. Our experiments demonstrate that these methods either impose high costs (as in the case of stronger write-verify) or are ineffective (as seen with training-based methods) when adapted to improve worst-case performance. Thus, we further introduce a novel training method that combines adversarial training and training with right-censored Gaussian noise. Our proposed method significantly improves the worst-case performance of CiM accelerators.

VI Acknowledgement

This project is partially supported by NSF under grants CCF-1919167, CNS-1822099 and CCF-2028879.

References

- [1] L. Yang, Z. Yan, M. Li, H. Kwon, L. Lai, T. Krishna, V. Chandra, W. Jiang, and Y. Shi, “Co-exploration of neural architectures and heterogeneous asic accelerator designs targeting multiple tasks,” in 2020 57th ACM/IEEE Design Automation Conference (DAC). IEEE, 2020, pp. 1–6.

- [2] Y. Sheng, J. Yang, Y. Wu, K. Mao, Y. Shi, J. Hu, W. Jiang, and L. Yang, “The larger the fairer? small neural networks can achieve fairness for edge devices,” 2022.

- [3] A. Shafiee, A. Nag, N. Muralimanohar, R. Balasubramonian, J. P. Strachan, M. Hu, R. S. Williams, and V. Srikumar, “Isaac: A convolutional neural network accelerator with in-situ analog arithmetic in crossbars,” ACM SIGARCH Computer Architecture News, vol. 44, no. 3, pp. 14–26, 2016.

- [4] V. Sze, Y.-H. Chen, T.-J. Yang, and J. S. Emer, “Efficient processing of deep neural networks: A tutorial and survey,” Proceedings of the IEEE, vol. 105, no. 12, pp. 2295–2329, 2017.

- [5] Y.-H. Chen, J. Emer, and V. Sze, “Eyeriss: A spatial architecture for energy-efficient dataflow for convolutional neural networks,” ACM SIGARCH computer architecture news, vol. 44, no. 3, pp. 367–379, 2016.

- [6] X. Peng, S. Huang, Y. Luo, X. Sun, and S. Yu, “Dnn+ neurosim: An end-to-end benchmarking framework for compute-in-memory accelerators with versatile device technologies,” in 2019 IEEE international electron devices meeting (IEDM). IEEE, 2019, pp. 32–5.

- [7] Z. Yan, Y. Shi, W. Liao, M. Hashimoto, X. Zhou, and C. Zhuo, “When single event upset meets deep neural networks: Observations, explorations, and remedies,” in 2020 25th Asia and South Pacific Design Automation Conference (ASP-DAC). IEEE, 2020, pp. 163–168.

- [8] S. Jin, S. Pei, and Y. Wang, “On improving fault tolerance of memristor crossbar based neural network designs by target sparsifying,” in 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE). IEEE, 2020, pp. 91–96.

- [9] T. Liu, W. Wen, L. Jiang, Y. Wang, C. Yang, and G. Quan, “A fault-tolerant neural network architecture,” in 2019 56th ACM/IEEE Design Automation Conference (DAC). IEEE, 2019, pp. 1–6.

- [10] Z. He, J. Lin, R. Ewetz, J.-S. Yuan, and D. Fan, “Noise injection adaption: End-to-end reram crossbar non-ideal effect adaption for neural network mapping,” in Proceedings of the 56th Annual Design Automation Conference 2019, 2019, pp. 1–6.

- [11] Z. Yan, X. S. Hu, and Y. Shi, “Swim: Selective write-verify for computing-in-memory neural accelerators,” in 2022 59th ACM/IEEE Design Automation Conference (DAC). IEEE, 2022.

- [12] D. Gao, Q. Huang, G. L. Zhang, X. Yin, B. Li, U. Schlichtmann, and C. Zhuo, “Bayesian inference based robust computing on memristor crossbar,” in 2021 58th ACM/IEEE Design Automation Conference (DAC). IEEE, 2021, pp. 121–126.

- [13] W. Shim, J.-s. Seo, and S. Yu, “Two-step write–verify scheme and impact of the read noise in multilevel rram-based inference engine,” Semiconductor Science and Technology, vol. 35, no. 11, p. 115026, 2020.

- [14] Z. Yan, D.-C. Juan, X. S. Hu, and Y. Shi, “Uncertainty modeling of emerging device based computing-in-memory neural accelerators with application to neural architecture search,” in 2021 26th Asia and South Pacific Design Automation Conference (ASP-DAC). IEEE, 2021, pp. 859–864.

- [15] Z. Yan, W. Jiang, X. S. Hu, and Y. Shi, “Radars: Memory efficient reinforcement learning aided differentiable neural architecture search,” in 2022 27th Asia and South Pacific Design Automation Conference (ASP-DAC). IEEE, 2022, pp. 128–133.

- [16] W. Jiang, Q. Lou, Z. Yan, L. Yang, J. Hu, X. S. Hu, and Y. Shi, “Device-circuit-architecture co-exploration for computing-in-memory neural accelerators,” IEEE Transactions on Computers, vol. 70, no. 4, pp. 595–605, 2020.

- [17] P. Yao, H. Wu, B. Gao, J. Tang, Q. Zhang, W. Zhang, J. J. Yang, and H. Qian, “Fully hardware-implemented memristor convolutional neural network,” Nature, vol. 577, no. 7792, pp. 641–646, 2020.

- [18] Z. Wang, C. Huang, and Q. Zhu, “Efficient global robustness certification of neural networks via interleaving twin-network encoding,” arXiv preprint arXiv:2203.14141, 2022.

- [19] Y.-L. Tsai, C.-Y. Hsu, C.-M. Yu, and P.-Y. Chen, “Formalizing generalization and adversarial robustness of neural networks to weight perturbations,” Advances in Neural Information Processing Systems, vol. 34, 2021.

- [20] Z. Yan, X. S. Hu, and Y. Shi, “Computing in memory neural network accelerators for safety-critical systems: Can small device variations be disastrous?” 2022 International Conference on Computer-Aided Design (ICCAD), 2022.

- [21] Z. Yan, Y. Qin, X. S. Hu, and Y. Shi, “Improving realistic worst-case performance of nvcim dnn accelerators through training with right-censored gaussian noise,” 2023 International Conference on Computer-Aided Design (ICCAD), 2023.

- [22] B. Feinberg, S. Wang, and E. Ipek, “Making memristive neural network accelerators reliable,” in 2018 IEEE International Symposium on High Performance Computer Architecture (HPCA). IEEE, 2018, pp. 52–65.

- [23] D. Wu, S.-T. Xia, and Y. Wang, “Adversarial weight perturbation helps robust generalization,” Advances in Neural Information Processing Systems, vol. 33, pp. 2958–2969, 2020.

- [24] C.-C. Chang, M.-H. Wu, J.-W. Lin, C.-H. Li, V. Parmar, H.-Y. Lee, J.-H. Wei, S.-S. Sheu, M. Suri, T.-S. Chang et al., “Nv-bnn: An accurate deep convolutional neural network based on binary stt-mram for adaptive ai edge,” in 2019 56th ACM/IEEE Design Automation Conference (DAC). IEEE, 2019, pp. 1–6.

- [25] C.-Y. Chen and K. Chakrabarty, “Pruning of deep neural networks for fault-tolerant memristor-based accelerators,” in 2021 58th ACM/IEEE Design Automation Conference (DAC). IEEE, 2021, pp. 889–894.

- [26] H. Shin, M. Kang, and L.-S. Kim, “Fault-free: A fault-resilient deep neural network accelerator based on realistic reram devices,” in 2021 58th ACM/IEEE Design Automation Conference (DAC). IEEE, 2021, pp. 1039–1044.

- [27] S. Jeong, J. Kim, M. Jeong, and Y. Lee, “Variation-tolerant and low r-ratio compute-in-memory reram macro with capacitive ternary mac operation,” IEEE Transactions on Circuits and Systems I: Regular Papers, 2022.

- [28] N. Carlini and D. Wagner, “Towards evaluating the robustness of neural networks,” in 2017 ieee symposium on security and privacy (sp). IEEE, 2017, pp. 39–57.

- [29] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- [30] L. Deng, “The mnist database of handwritten digit images for machine learning research,” IEEE Signal Processing Magazine, vol. 29, no. 6, pp. 141–142, 2012.

- [31] A. Krizhevsky, G. Hinton et al., “Learning multiple layers of features from tiny images,” 2009.

- [32] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [33] Y. Le and X. Yang, “Tiny imagenet visual recognition challenge,” CS 231N, vol. 7, no. 7, p. 3, 2015.

- [34] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in 2009 IEEE conference on computer vision and pattern recognition. Ieee, 2009, pp. 248–255.

- [35] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f72b35a6-81c9-4ce1-b4cf-18c7b9032d1a/x11.png) |

Zheyu Yan is a Ph.D. student in the Department of Computer Science and Engineering at the University of Notre Dame. He earned his B.S. degree from Zhejiang University in 2019. He is deeply interested in the co-design of software and hardware for deep neural network accelerators, particularly focusing on non-volatile memory-based compute-in-memory platforms. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f72b35a6-81c9-4ce1-b4cf-18c7b9032d1a/x12.png) |

Xiaobo Sharon Hu earned her B.S. degree from Tianjin University in China in 1982, followed by an M.S. from the Polytechnic Institute of New York in 1984, and then a Ph.D. from Purdue University in West Lafayette, IN, USA, in 1989. She currently holds a Professor position at the Department of Computer Science and Engineering at the University of Notre Dame, Notre Dame, IN, USA. Her research primarily revolves around beyond-CMOS technologies computing, low-power system design, and cyber-physical systems. Dr. Hu was honored with the NSF CAREER Award in 1997 and received Best Paper Awards from the Design Automation Conference in 2001 and the ACM/IEEE International Symposium on Low Power Electronics and Design in 2018. In 2018, Dr. Hu took on the role of General Chair for the Design Automation Conference (DAC). She has been an Associate Editor for various publications including IEEE Transactions on Very Large Scale Integration (VLSI) Systems, ACM Transactions on Design Automation of Electronic Systems, and ACM Transactions on Embedded Computing. Currently, she serves as an Associate Editor for the ACM Transactions on Cyber-Physical Systems. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f72b35a6-81c9-4ce1-b4cf-18c7b9032d1a/x13.png) |

Yiyu Shi earned his B.S. degree with honors in electronic engineering from Tsinghua University in Beijing, China, in 2005. He later pursued his M.S. and Ph.D. degrees in electrical engineering at the University of California, Los Angeles, completing them in 2007 and 2009, respectively. He now serves as a Professor in the Departments of Computer Science and Engineering as well as Electrical Engineering at the University of Notre Dame, Notre Dame, IN, USA. His research primarily focuses on 3-D integrated circuits, hardware security, and applications in renewable energy. Prof. Shi has been recognized with multiple best paper nominations at premier conferences. He received the IBM Invention Achievement Award in 2009 and was honored with the Japan Society for the Promotion of Science Faculty Invitation Fellowship, the Humboldt Research Fellowship for Experienced Researchers, and the National Science Foundation CAREER Award. Additionally, he was the recipient of the IEEE Region 5 Outstanding Individual Achievement Award and the Air Force Summer Faculty Fellowship. |