Computing finite index congruences of finitely presented semigroups and monoids

Abstract

In this paper, we describe an algorithm for computing the left, right, or 2-sided congruences of a finitely presented semigroup or monoid with finitely many classes, and an alternative algorithm when the finitely presented semigroup or monoid is finite. We compare the two algorithms presented with existing algorithms and implementations. The first algorithm is a generalization of Sims’ low-index subgroup algorithm for finding the congruences of a monoid. The second algorithm involves determining the distinct principal congruences, and then finding all of their possible joins. Variations of this algorithm have been suggested in numerous contexts by numerous authors. We show how to utilize the theory of relative Green’s relations, and a version of Schreier’s Lemma for monoids, to reduce the number of principal congruences that must be generated as the first step of this approach. Both of the algorithms described in this paper are implemented in the GAP [29] package Semigroups [54], and the first algorithm is available in the C++ library libsemigroups [53] and in its Python bindings libsemigroups_pybind11 [52].

1 Introduction

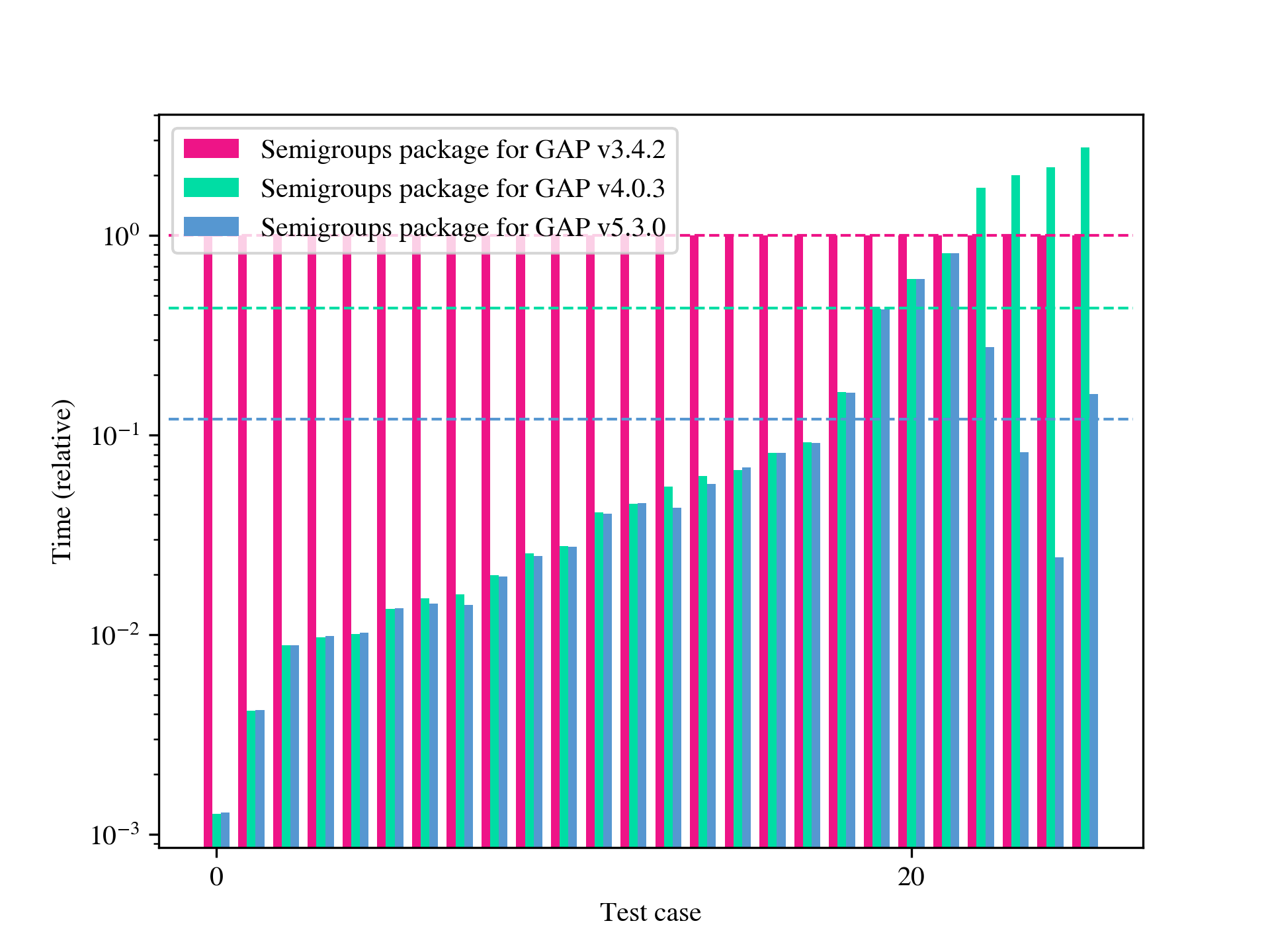

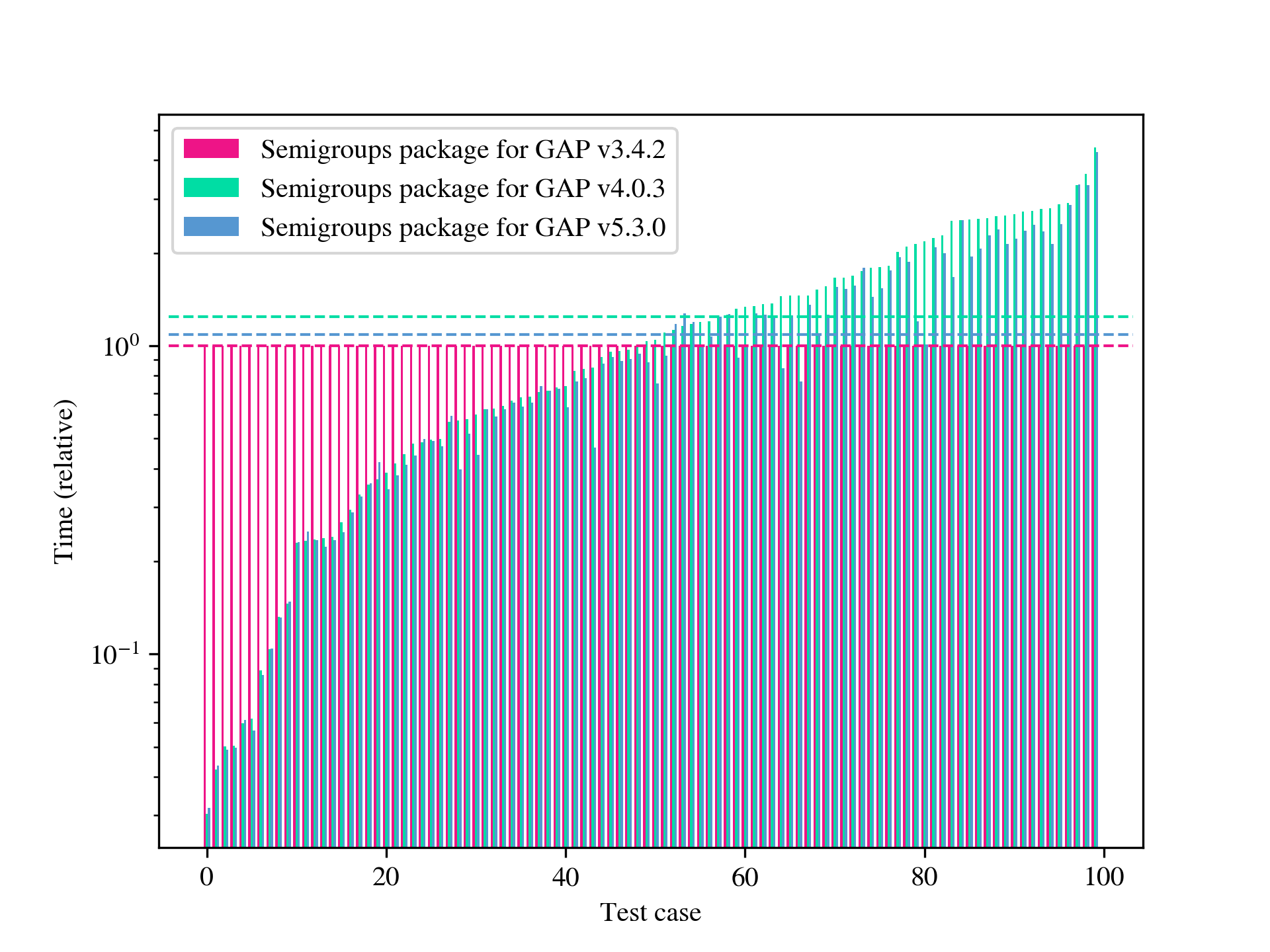

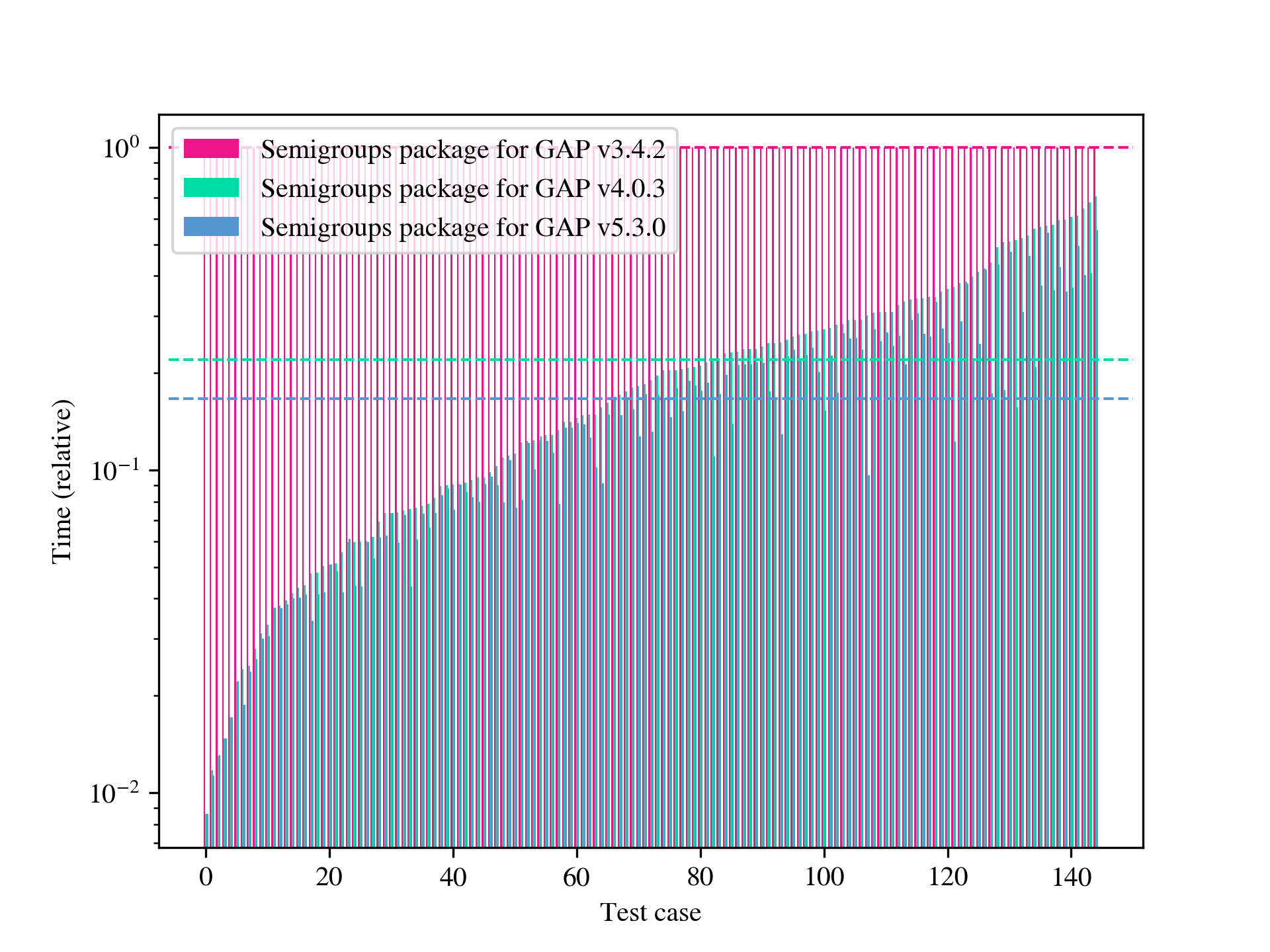

In this paper, we are concerned with the problem of computing finite index congruences of a finitely presented semigroup or monoid. One case of particular interest is computing the entire lattice of congruences of a finite semigroup or monoid. We will present two algorithms that can perform these computations and compare them with each other and to existing algorithms and their implementations. The first algorithm is the only one of its kind, permitting the computation of finite index 1-sided and 2-sided congruences, and a host of other things (see Section 5) of infinite finitely presented semigroups and monoids. Although this first algorithm is not specifically designed to find finite index subgroups of finitely presented groups, it can be used for such computations and is sometimes faster than the existing implementations in 3Manifolds [15] and GAP [29]; see Table A.5. The second algorithm we present is, for many examples, several orders of magnitude faster than any existing method, and in many cases permits computations that were previously unfeasible. Examples where an implementation of an existing algorithm, such as that in [58], is faster are limited to those with total runtime below 1 second; see Table A.6. Some further highlights include: computing the numbers of right/left congruences of many classical examples of finite transformation and diagram monoids, see Appendix B; reproducing and extending the computations from [6] to find the number of congruences in free semigroups and monoids (see Table B.13); computational experiments with the algorithms implemented were crucial in determining the minimum transformation representation of the so-called diagram monoids in [11]; and in classifying the maximal and minimal 1-sided congruences of the full transformation monoids in [12]. In Appendix A, we present significant quantitative data exhibiting the performance of our algorithms. Appendix B provides a wealth of data generated using the implementation of the algorithms described here. For example, the sequences of numbers of minimal 1-sided congruences of a number of well-studied transformation monoids are apparent in several of the tables in Appendix B, such as Table B.12.

The question of determining the lattice of 2-sided congruences of a semigroup or monoid is classical and has been widely studied in the literature; see, for example, [47]. Somewhat more recently, this interest was rekindled by Araújo, Bentz, and Gomes in [2], Young (né Torpey) in [70], and the third author of the present article, which resulted in [23] and its numerous offshoots [7, 16, 18, 19, 20, 21, 22]. The theory of 2-sided congruences of a monoid is analogous to the theory of normal subgroups of a group, and 2-sided congruences play the same role for monoids with respect to quotients and homomorphisms. As such, it is perhaps not surprising that the theory of 2-sided congruences of semigroups and monoids is rather rich. The 2-sided congruences of certain types of semigroup are completely classified, for a small sample among many, via linked triples for regular Rees 0-matrix semigroups [35, Theorem 3.5.8], or via the kernel and trace for inverse semigroups [35, Section 5.3].

The literature relating to 1-sided congruences is less well-developed; see, for example, [7, 51]. Subgroups are to groups what 1-sided congruences are to semigroups. This accounts, at least in part, for the relative scarcity of results in the literature on 1-sided congruences. The number of such congruences can be enormous, and the structure of the corresponding lattices can be wild. For example, the full transformation monoid of degree has size and possesses right congruences111This number was computed for the first time using the algorithm described in Section 4 as implemented in the C++ library libsemigroups [53] whose authors include the authors of the present paper. It was not previously known.. Another example is that of the stylic monoids from [1], which are finite quotients of the well-known plactic monoids [44, 45]. The stylic monoid with generators has size , while the number of left congruences is \@footnotemark.

The purpose of this paper is to provide general computational tools for computing the 1- and 2-sided congruences of a finite, or finitely presented, monoid. There are a number of examples in the literature of such general algorithms; notable examples include [25], [70], and [4], which describes an implementation of the algorithms from [25].

The first of the two algorithms we present is a generalization of Sims’ low-index subgroup algorithm for congruences of a finitely presented monoid; see Section 5.6 in [65] for details of Sims’ algorithm; some related algorithms and applications of the low-index subgroups algorithm can be found in [32], [37], [55, Section 6], and [60]. A somewhat similar algorithm for computing low-index ideals of a finitely presented monoid with decidable word problem was given by Jura in [39] and [40] (see also [61]). We will refer to the algorithm presented here as the low-index congruence algorithm. We present a unified framework for computing various special types of congruences, including: 2-sided congruences; congruences including or excluding given pairs of elements (leading to the ability to compute specific parts of a congruence lattice); solving the word problem in residually finite semigroups or monoids; congruences such that the corresponding quotient is a group; congruences arising from 1- or 2-sided ideals; and 1-sided congruences representing a faithful action of the original monoid; see Section 5 for details. This allows us to, for example, implement a method for computing the finite index ideals of a finitely presented semigroup akin to [39, 40] by implementing a single function (Algorithm 4) which determines if a finite index right congruence is a Rees congruence.

The low-index congruence algorithm takes as input a finite monoid presentation defining a monoid , and a positive integer . It permits the congruences with up to classes to be iterated through without repetition, while only holding a representation of a single such congruence in memory. Each congruence is represented as a certain type of directed graph, which we refer to as word graphs; see Section 2 for the definition. The space complexity of this approach is where is the number of generators of the input monoid, and is the input positive integer. Roughly speaking, the low-index algorithm performs a backtracking search in a tree whose nodes are word graphs. If the monoid is finite, then setting allows us to determine all of the left, right, or 2-sided congruences of . Finding all of the subgroups of a finite group was perhaps not the original motivation behind Sims’ low-index subgroup algorithm from [65, Section 5.6]. In particular, there are likely better ways of finding all subgroups of a finite group; see, for example, [33], [36] and the references therein. On the other hand, in some sense, the structure of semigroups and monoids in general is less constrained than that of groups, and in some cases the low-index congruence algorithm is the best or only available means of computing congruences.

To compute the actual lattice of congruences obtained from the low-index congruences algorithm, we require a mechanism for computing the join or meet of two congruences given by word graphs. We show that two well-known algorithms for finite state automata can be used to do this. More specifically, a superficial modification of the Hopcroft-Karp Algorithm [34], for checking if two finite state automata recognise the same language, can be used to compute the join of two congruences. Similarly, a minor modification of the standard construction of an automaton recognising the intersection of two regular languages can be utilised to compute meets; see for example [68, Theorem 1.25]. For more background on automata theory, see [59].

As described in Section 5.6 of [65], one motivation of Sims’ low-index subgroup algorithm was to provide an algorithm for proving non-triviality of the group defined by a finite group presentation by showing that has a subgroup of index greater than . The low-index congruences algorithm presented here can similarly be used to prove the non-triviality of the monoid defined by a finite monoid presentation by showing that has a congruence with more than one class. There are a number of other possible applications: to determine small degree transformation representations of monoids (every monoid has a faithful action on the classes of a right congruence); to prove that certain relations in a presentation are irredundant; or more generally to show that the monoids defined by two presentations are not isomorphic (if the monoids defined by and have different numbers of congruences with classes, then they are not isomorphic). Further applications are discussed in Section 5.

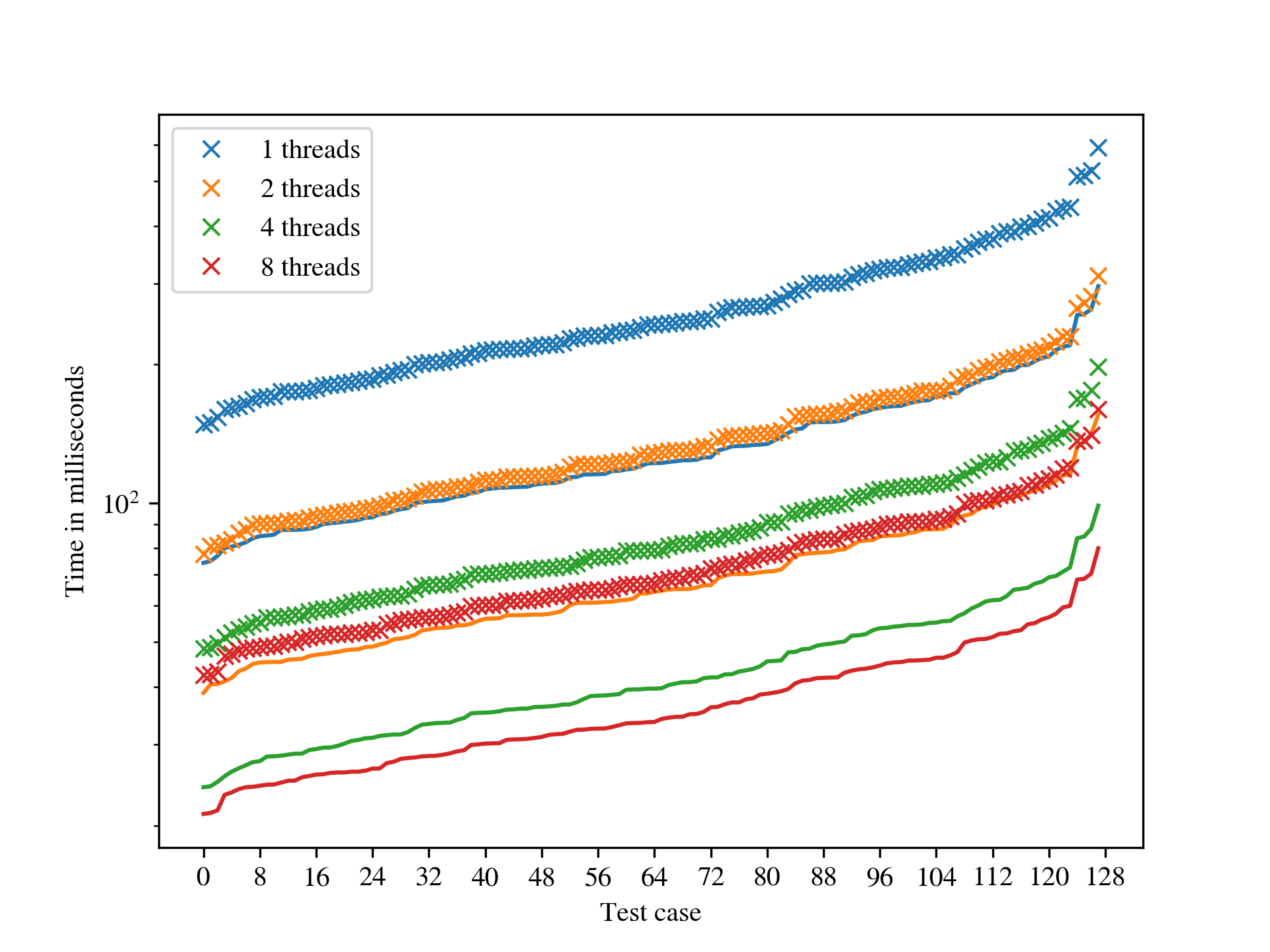

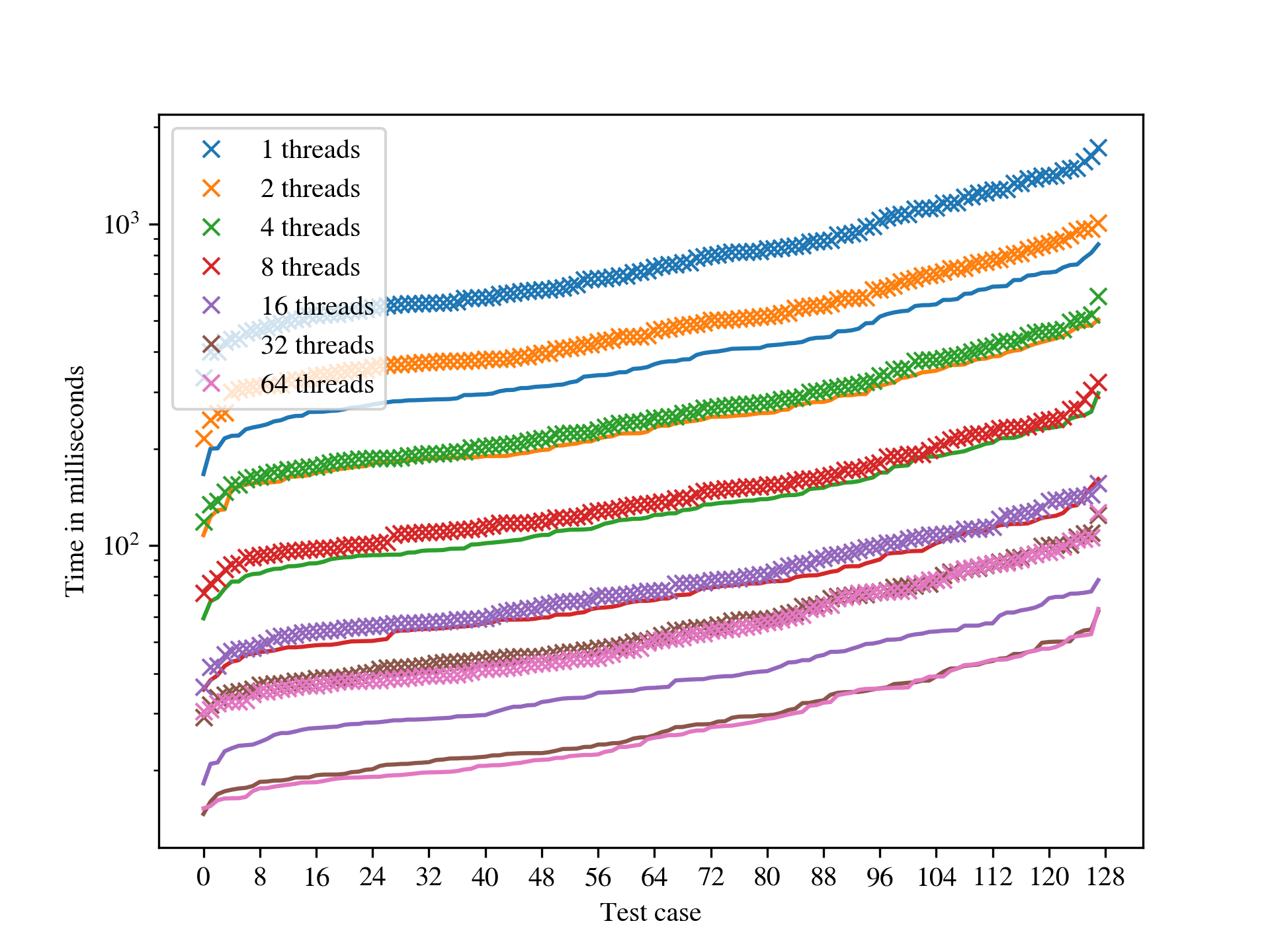

The low-index congruence algorithm is implemented in the open-source C++ library libsemigroups [53], and available for use in the GAP [29] package Semigroups [54], and the Python package libsemigroups_pybind11 [52]. The low-index algorithm is almost embarrassingly parallel, and the implementation in libsemigroups [53] is parallelised using a version of the work stealing queue described in [74, Section 9.1.5]; see Section 4 and Appendix A for more details.

The second algorithm we present is more straightforward than the low-index congruence algorithm, and is a variation on a theme that has been suggested in numerous contexts, for example, in [4, 25, 70]. There are two main steps to this procedure. First, the distinct principal congruences are determined, and, second, all possible joins of the principal congruences are found. Unlike the low-index congruence algorithm, this algorithm cannot be used to compute anything about infinite finitely presented semigroups or monoids. This second algorithm is implemented in the GAP [29] package Semigroups [54].

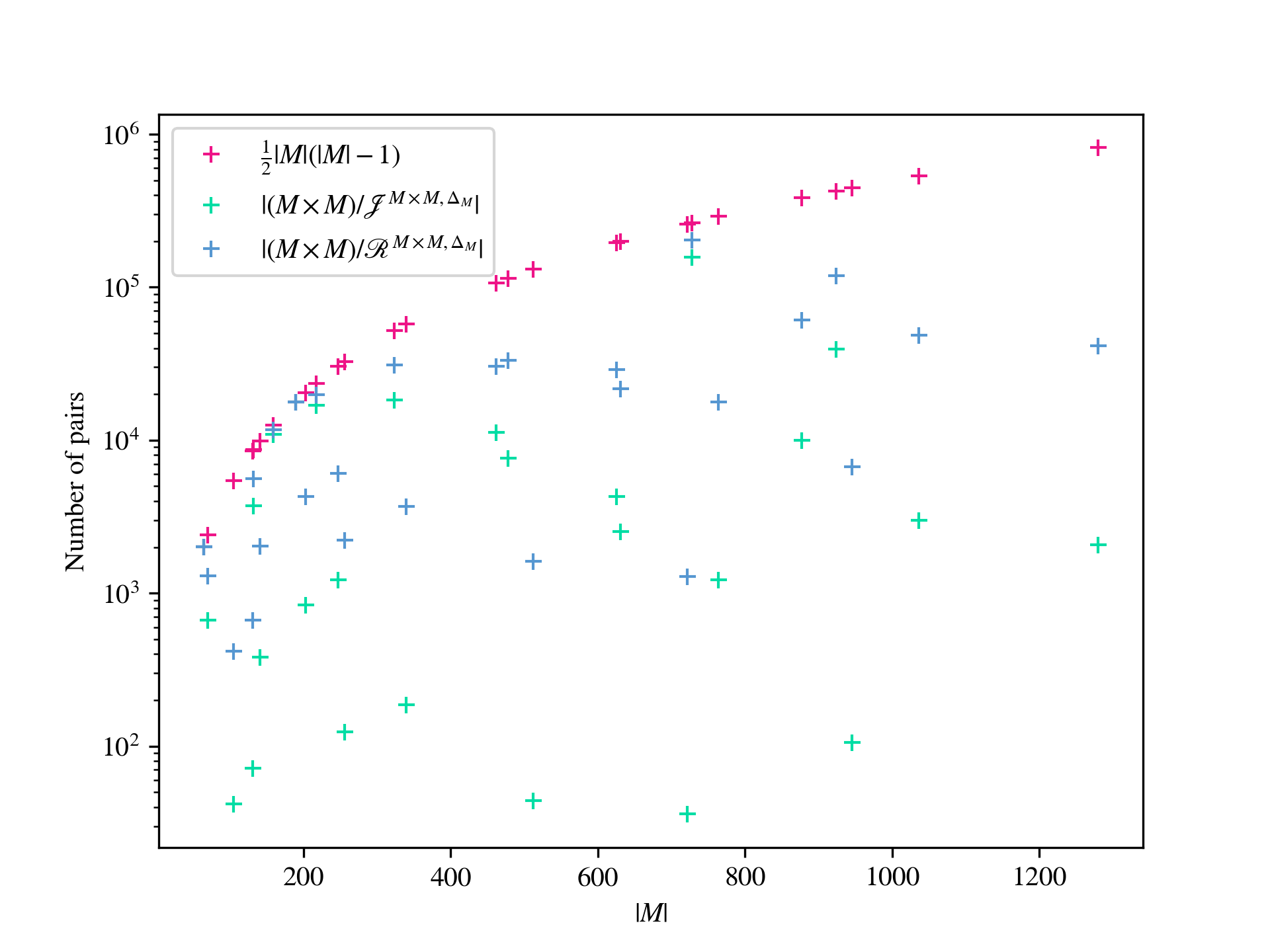

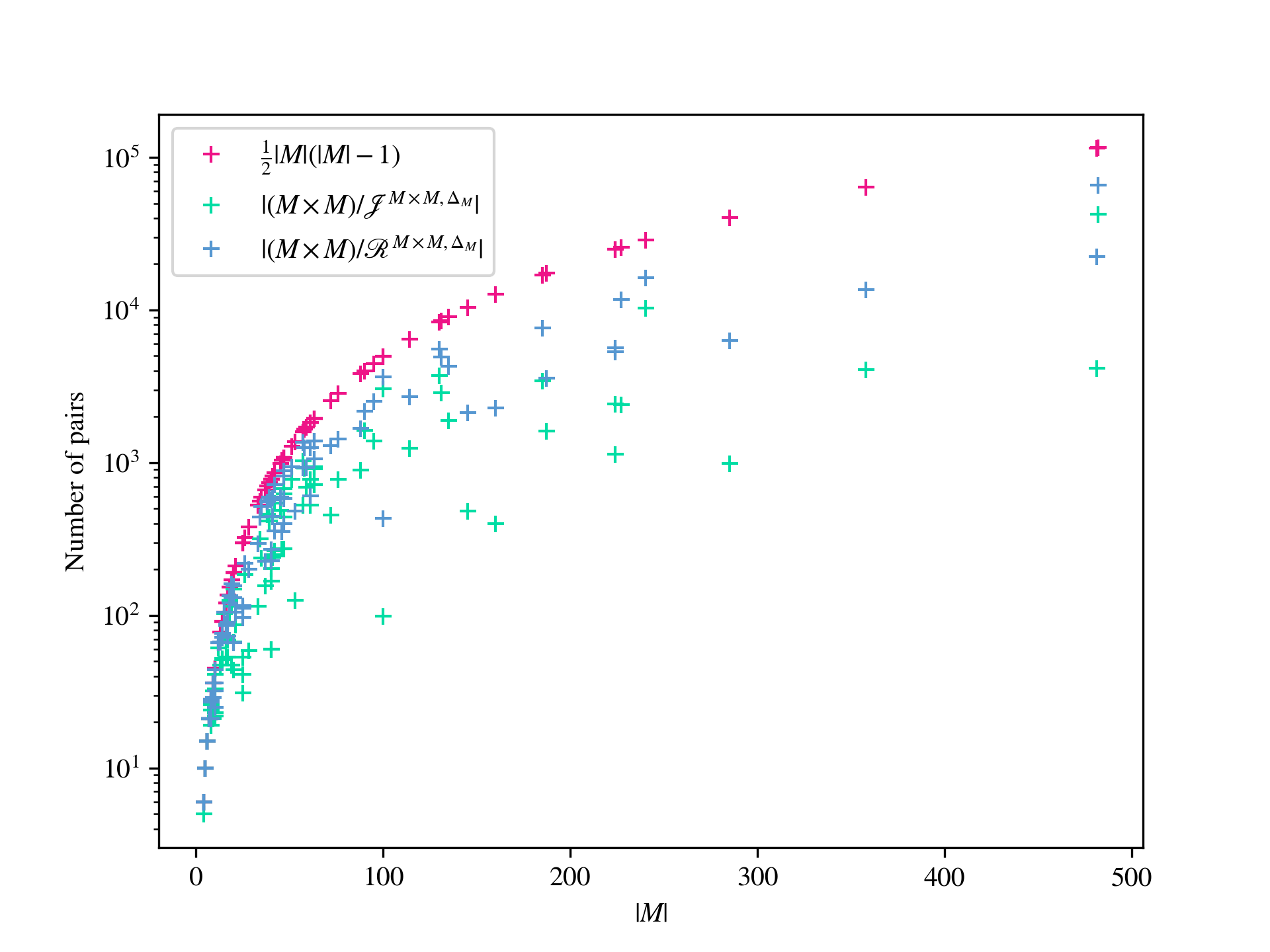

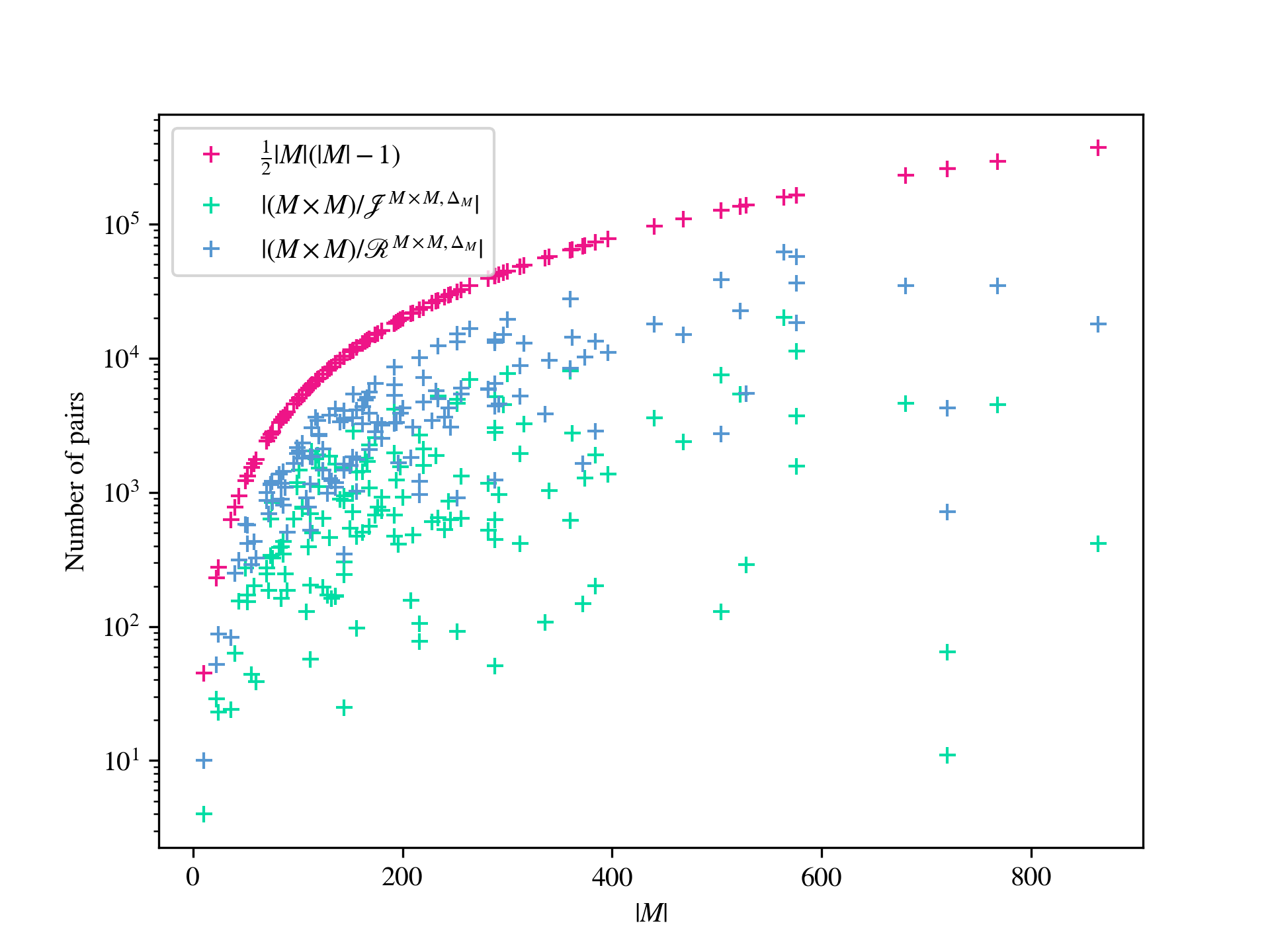

The first step of the second algorithm, as described in, for example, [4, 25, 70], involves computing the principal congruence, of the input monoid , generated by every pair . Of course, in practice, if , then the principal congruences generated by and are the same, and so only principal congruences are actually generated. In either case, this requires the computation of such principal congruences. We will show that certain of the results from [22] can be generalized to provide a sometimes smaller set of pairs required to generate all of the principal congruences of . In particular, we show how to compute relative Green’s -class and -class representatives of elements in the direct product modulo its submonoid . Relative Green’s relations were introduced in [73]; see also [9, 30]. It is straightforward to verify that if are -related modulo , then the principal right congruences generated by and coincide; see 7.1i for a proof. We will show that it is possible to reduce the problem of computing relative -class representatives to the problems of computing the right action of on a set, and membership testing in an associated (permutation) group. The relative -class representatives correspond to strongly connected components of the action of on the relative -classes by left multiplication, and can be found whenever the relative -classes can be. In many examples, the time taken to find such relative -class and -class representatives is negligible when compared to the overall time required to compute the lattice of congruences, and in some examples there is a dramatic reduction in the number of principal congruences that must be generated. For example, if is the general linear monoid of matrices over the finite field of order , then and so . On the other hand, the numbers of relative - and -classes of elements of modulo are and , has and principal 2-sided and right congruences, respectively, and the total number of 2-sided congruences is . Another example: if is the monoid consisting of all matrices over the finite field with elements with determinant or , then and so , but the numbers of relative - and -classes are and , there are and principal 2-sided and right congruences, respectively, and the total number of 2-sided congruences is . Of course, there are other examples where there is no reduction in the number of principal congruences that must be generated, and as such the time taken to compute the relative Green’s classes is wasted; see Appendix A for a more thorough analysis.

One question we have not yet addressed is how to compute a (principal) congruence from its generating pairs. For the purpose of computing the lattice of congruences, it suffices to be able to compare congruences by containment. There are a number of different approaches to this: such as the algorithm suggested in [25, Algorithm 2] and implemented in [4], and that suggested in [70, Chapter 2] and implemented in the GAP [29] package Semigroups [54] and the C++ library libsemigroups [53]. The former is essentially a brute force enumeration of the pairs belonging to the congruence, and congruences are represented by the well-known disjoint sets data structure. The latter involves running two instances of the Todd–Coxeter Algorithm in parallel; see [70, Chapter 2] and [13] for more details.

We conclude this introduction with some comments about the relative merits and de-merits of the different approaches outlined above, and we refer the reader to Appendix A for some justification for the claims we are about to make.

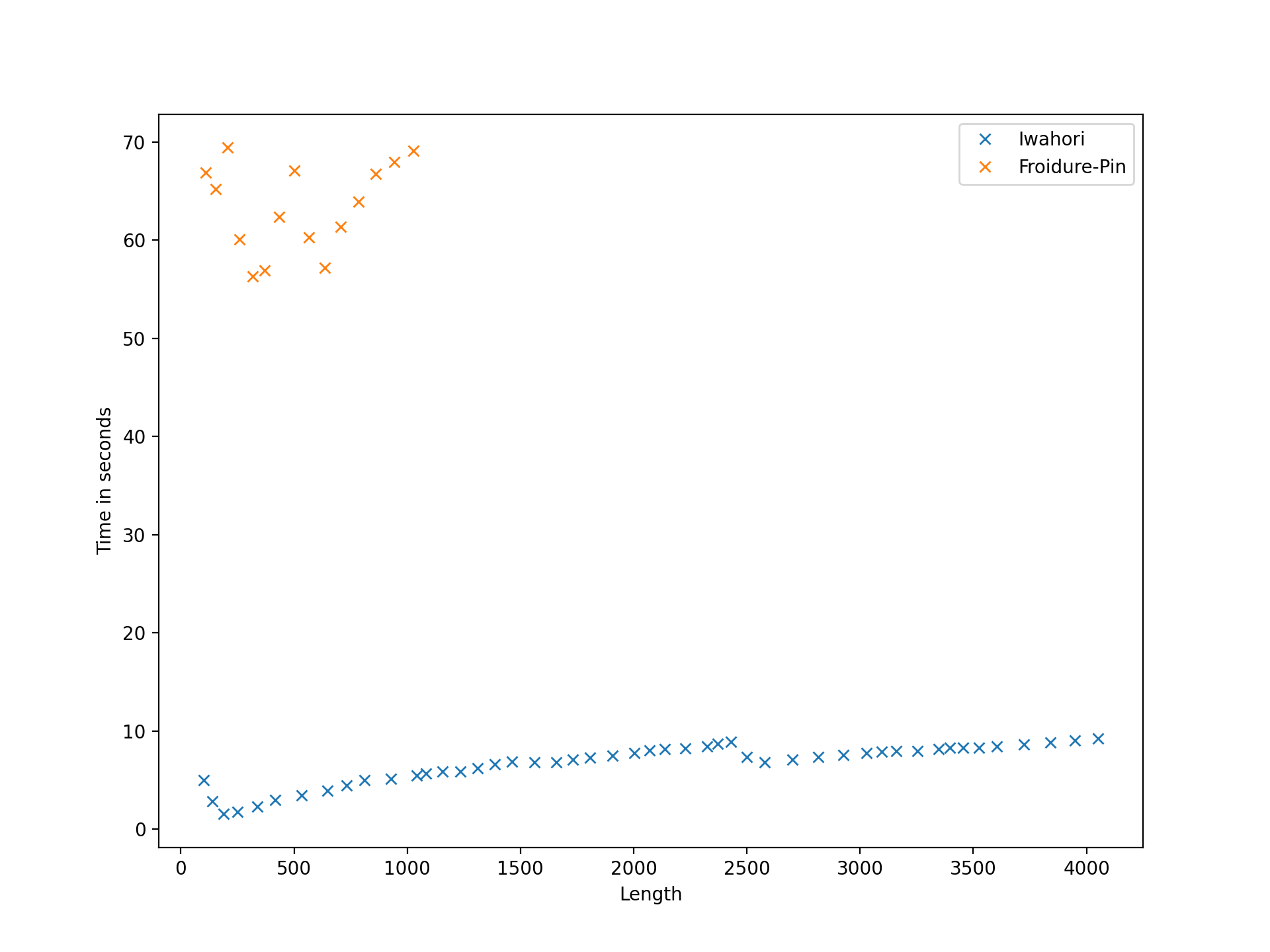

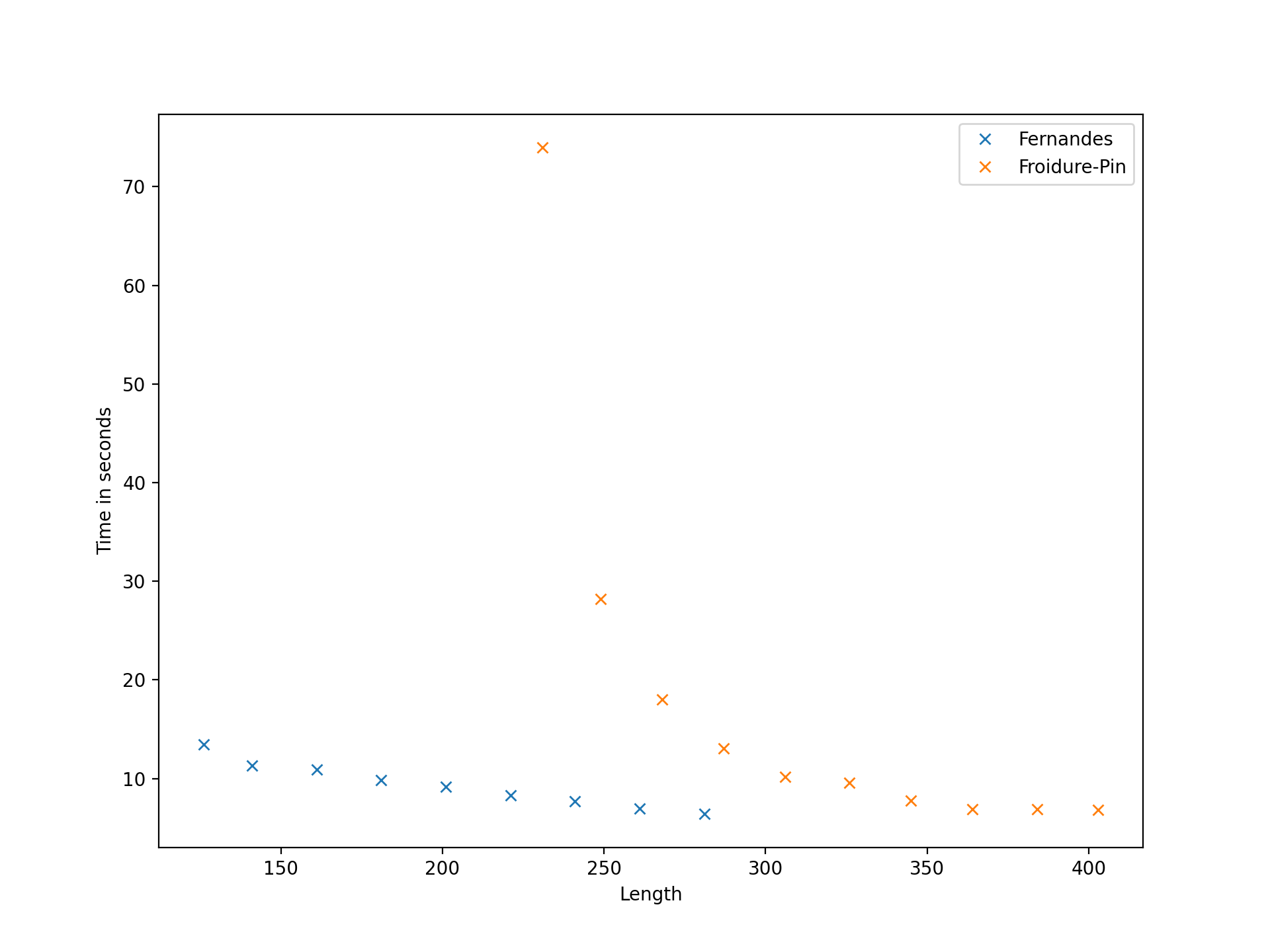

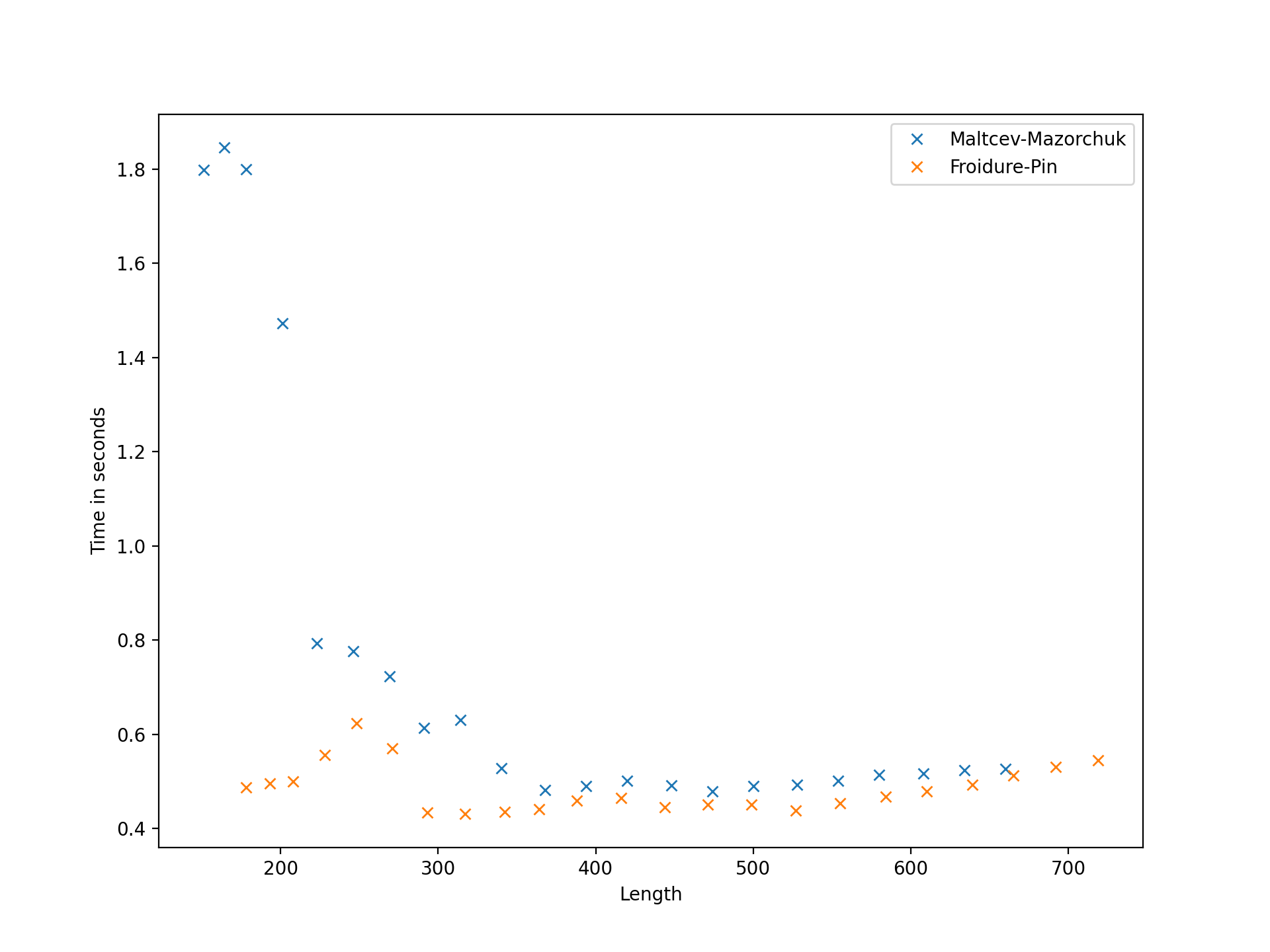

As might be expected, the runtime of the low-index congruence algorithm is highly dependent on the input presentation, and it seems difficult (or impossible) to predict what properties of a presentation reduce the runtime. We define the length of a presentation to be the sum of the lengths of the words appearing in relations plus the number of generators. On the one hand, for a fixed monoid , long presentations appear to have an adverse impact on the performance, but so too do very short presentations. Perhaps one explanation for this is that a long presentation increases the cost of processing each node in the search tree, while a short presentation does not make it evident that certain branches of the tree contain no solutions until many nodes in the tree have been explored. If is finite, then it is possible to find a presentation for using, for example, the Froidure-Pin Algorithm [26]. In some examples, the presentations produced mechanically (i.e. non-human presentations) qualify as long in the preceding discussion. In some examples presentations from the literature (i.e. human presentations) work better than their non-human counterparts, but in other examples they qualify as short in the preceding discussion, and are worse. It seems that in many cases some experimentation is required to find a presentation for which the low-index congruence algorithm works best. On the other hand, in examples where the number of congruences is large, say in the millions, running any implementation of the second algorithm is infeasible because it requires too much space. Having said that, there are still some relatively small examples where the low-index congruences algorithm is faster than the implementations of the second algorithm in Semigroups [54] and in CREAM [58], and others where the opposite holds; see Table A.6.

One key difference between the implementation of the low-index subgroup algorithm in, say, GAP [29], and the low-index congruence algorithm in libsemigroups [53] is that the former finds conjugacy class representatives of subgroups with index at most . As far as the authors are aware, there is no meaningful notion of conjugacy that can be applied to the low-index congruences algorithm for semigroups and monoids in general. Despite the lack of any optimizations for groups in the implementation of the low-index congruence algorithm in libsemigroups [53], its performance is often better than or comparable to that of the implementations of Sims’ low-index subgroup algorithm in [15] and GAP [29]; see Table A.5 for more details.

The present paper is organised as follows: in Section 2 we present some preliminaries required in the rest of the paper; in Section 3 we state and prove some results related to actions, word graphs, and congruences; in Section 4 we state the low-index congruence algorithm and prove that it is correct; in Section 5 we give numerous applications of the low-index congruences algorithm; in Section 6 we show how to compute the joins and meets of congruences represented by word graphs; in Section 7 we describe the algorithm based on [22] for computing relative Green’s relations. In Appendix A, we provide some benchmarks that compare the performance of the implementations in libsemigroups [53], Semigroups [54], and CREAM [58]. Finally in Appendix B we present some tables containing statistics about the lattices of congruences of some well-known families of monoids.

2 Preliminaries

In this section we introduce some notions that are required for the latter sections of the paper.

Throughout the paper we use the symbol to denote an “undefined” value, and note that is not an element of any set except where it is explicitly included.

Let be a semigroup. An equivalence relation is a right congruence if for all and all . Left congruences are defined analogously, and a 2-sided congruence is both a left and a right congruence. We refer to the number of classes of a congruence as its index.

If is any set, and is a function, then is a right action of on if for all and for all . If in addition has an identity element (i.e. if is a monoid), we require for all also. Left actions are defined dually. In this paper we will primarily be concerned with right actions of monoids.

If is a monoid, and and are right actions of on sets and , then we say that is a homomorphism of the right actions and if

for all and all . An isomorphism of right actions is a bijective homomorphism.

Let be any alphabet and let denote the free monoid generated by (consisting of all words over with operation juxtaposition and identity the empty word ). We define a word graph over the alphabet to be a digraph with set of nodes and edges . Word graphs are essentially finite state automata without initial or accept states. More specifically, if is a word graph over , then for any and any , we can define a finite state automaton where: the state set is ; the alphabet is ; the start state is ; the transition function is defined by whenever ; and denotes the accept states.

If is an edge in a word graph , then is the source, is the label, and is the target of . A word graph is complete if for every node and every letter there is at least one edge with source labelled by . A word graph is finite if the sets of nodes and edges are finite. A word graph is deterministic if for every node and every there is at most one edge with source and label .

If , then an -path is a sequence of edges where and and ; is the source of the path; the word labels the path; is the target of the path; and the length of the path is . We say that there exists an -path of length labelled by for all . For , and we will write to mean that labels a -path in , and if does not label a path with source in . When there are no opportunities for ambiguity we will omit the subscript from . If and there is an -path in , then we say that is reachable from . If is a node in a word graph , then the strongly connected component of is the set of all nodes such that is reachable from and is reachable from . If is a word graph and denotes the power set of , then the path relation of is the function defined by . If is a complete word graph and is a node in , then is a right congruence on . If , is a word graph, and is the path relation of , then we say that is compatible with if whenever and for all and for all . Equivalently, if is complete, then is compatible with if and only if for every node in . It is routine to verify that if a word graph is compatible with , then it is also compatible with the least (2-sided) congruence on containing ; we denote this congruence by .

3 Word graphs and right congruences

In this section we establish some fundamental results related to word graphs and right congruences. It seems likely to the authors that the results presented in this section are well-known; similar ideas occur in [38], [42], [65], and probably elsewhere. However, we did not find any suitable references that suit our purpose here, particularly in Section 3.3, and have included some of the proofs of these results for completeness.

This section has three subsections: in Section 3.1 we describe the relationship between right congruences and word graphs; in Section 3.2 we present some results about homomorphisms between word graphs; and finally in Section 3.3 we give some technical results about standard word graphs.

3.1 Right congruences

Suppose that is the monoid defined by the monoid presentation and is a right action of on a set . Recall that is isomorphic to the quotient of the free monoid by . If is the surjective homomorphism with , then we can define a complete deterministic word graph over the alphabet such that whenever . Conversely, if is a complete word graph that is compatible with , then we define by

| (3.1) |

whenever labels an -path in . It is routine to verify that the functions just described mapping a right action to a word graph, and vice versa, are mutual inverses. For future reference, we record these observations in the next proposition.

Proposition 3.1.

Let be the monoid defined by the monoid presentation . Then there is a one-to-one correspondence between right actions of and complete deterministic word graphs over compatible with .

If and are word graphs over the same alphabet , then is a word graph homomorphism if implies ; and we write . An isomorphism of word graphs and is a bijection such that both and are homomorphisms. If is a word graph homomorphism and labels an -path in , then it is routine to verify that labels a -path in .

The correspondence between right actions and word graphs given in 3.1 also preserves isomorphisms, which we record in the next proposition.

Proposition 3.2.

Let be a monoid generated by a set , let and be right actions of , and let and be the word graphs over corresponding to these actions. Then and are isomorphic actions if and only if and are isomorphic word graphs.

Proof.

Assume that and are isomorphic word graphs. Then there exists a bijection . Since and are isomorphic word graphs, labels an -path in if and only if labels a -path in . Hence for all and so is an isomorphism of the actions and .

Conversely, assume that and are isomorphic right actions of on sets and , respectively. Then there exists a bijective homomorphism of right actions such that for all . We will prove that is a word graph isomorphism. It suffices to show that if and only if . If , then and hence . Since is an isomorphism of right actions and so . Similarly, it can be shown that if , then and hence defines a word graph isomorphism. ∎

In Section 4 we are concerned with enumerating the right congruences of a monoid subject to certain restrictions, such as those containing a given set or those with a given number of equivalence classes. If is the monoid defined by the presentation and is a right congruence on , then the function defined by is a right action of on where is the equivalence class of in and . It follows by 3.1 that corresponds to a complete deterministic word graph over compatible with . In particular, the nodes of are the classes , and the edges are .

On the other hand, not every right action of a monoid is an action on the classes of a right congruence. For example, if is a rectangular band with an adjoined identity, then two faithful right actions of are depicted in Fig. 3.1 with respect to the generating set . It can be shown that the action of on shown in Fig. 3.1 corresponds to a right congruence of but that the action of on does not.

In a word graph corresponding to the action of a monoid on a right congruence , if , then there exist such that , and so labels the path from to in . In particular, every node in is reachable from the node . The converse statement, that every complete deterministic word graph compatible with where every node is reachable from corresponds to a right congruence on is established in the next proposition.

If and are semigroups, is a binary relation and is a homomorphism. Then abusing our notation somewhat we write

Proposition 3.3.

Let be the monoid defined by the monoid presentation , let be the unique homomorphism with , and let be a word graph over that is complete, deterministic, compatible with and where every node is reachable from some . Then is a right congruence on , where is the path relation on .

Proof.

Suppose that for some and that is arbitrary. Then and . Hence . Since is complete, , and so . Therefore , and is a right congruence. ∎

It follows from 3.3 that in order to enumerate the right congruences of it suffices to enumerate the word graphs over that are complete, deterministic, compatible with and where every node is reachable from a node . Of course, we only want to obtain every right congruence of once, for which we require the next proposition. Henceforth we will suppose that if is a word graph over , then for some and so, in particular, is always a node in . Moreover, we will assume that the node corresponds to the equivalence class of the identity of .

Proposition 3.4.

Let and be word graphs over corresponding to right congruences of a monoid generated by . Then and represent the same right congruence of if and only if there exists a word graph isomorphism such that .

Proof.

We denote the right congruences associated to and by and , respectively.

Suppose that there exists a word graph isomorphism such that and that the words label -paths in . Then and label -paths and so . Since is a word graph isomorphism satisfying , it follows that , and so , as required.

Conversely, assume that and represent the same right congruence of . We define a word graph homomorphism as follows. For every , fix a word that labels a -path in . We define to be the target of the path with source labelled by in . If , then both and label -paths in , and so . Hence is an edge of . In addition, and it is clear that if , then and hence is a bijection. ∎

It follows from 3.4 that to enumerate the right congruences of a monoid defined by the presentation it suffices to enumerate the complete deterministic word graphs over compatible with where every node is reachable from 0 up to isomorphism. On the face of it, this is not much of an improvement because, in general, graph isomorphism is a difficult problem. However, word graph isomorphism, in the context of 3.4, is trivial by comparison.

If the alphabet and , then we define if or and is less than or equal to in the usual lexicographic order on arising from the linear order on . The order is called the short-lex ordering on . If and , then we write . If is a node in a word graph and is reachable from , then there is a -minimum word labelling a -path; we denote this path by .

The following definition is central to the algorithm presented in Section 4.

Definition 3.5.

A complete word graph over is standard if the following hold:

-

(i)

is deterministic;

-

(ii)

every node is reachable from ;

-

(iii)

for all , if and only if .

Proposition 3.6.

[cf. Proposition 8.1 in [65]] Let and be standard complete word graphs over the same alphabet. Then there exists a word graph isomorphism such that if and only if .

By 3.6, every complete deterministic word graph in which every node is reachable from 0 is isomorphic to a unique standard complete word graph. It is straightforward to compute this standard word graph from the original graph by relabelling the nodes, for example, using the procedure SWITCH from [65, Section 4.7]. We refer to this process as standardizing .

Another consequence of 3.4 and 3.6 is that enumerating the right congruences of a monoid defined by a presentation is equivalent to enumerating the standard complete word graphs over that are compatible with . As mentioned above, the right action of on the classes of a right congruence is isomorphic to the right action represented by the corresponding word graph . We record this in the following theorem.

Theorem 3.7.

Let be a monoid defined by a monoid presentation . Then there is a one-to-one correspondence between the right congruences of and the standard complete word graphs over compatible with .

If is a right congruence on and is the corresponding word graph, then the right actions of on and on are isomorphic; and where is the unique homomorphism with and is the path relation on .

It is possible to determine a one-to-one correspondence between the right congruences of a semigroup and certain word graphs arising from , as a consequence of 3.7.

Corollary 3.8.

Let be a semigroup defined by a semigroup presentation . Then there is a one-to-one correspondence between the right congruences of and the complete word graphs for over compatible with such that for all and all .

If is a right congruence of and is the corresponding word graph for , then the right actions of on and on are isomorphic.

Proof.

By 3.7 it follows that there is a one-to-one correspondence between the right congruences of and the complete word graphs for over compatible with . In addition, there is a one-to-one correspondence between the right congruences of and the right congruences of such that if and only if . Since and coincide it follows that there is a one-to-one correspondence between the right congruences of such that if and only if and the complete word graphs for over compatible with such that for all and all . The argument to prove that the right actions of on and on are isomorphic is identical to the argument in the proof of 3.7. ∎

Given 3.7, we will refer to the standard complete word graph over compatible with corresponding to a given right congruence as the word graph of .

We require the following lemma.

Lemma 3.9.

Let and be semigroup, let , and let be a homomorphism. Then the following hold:

-

(i)

if , is transitive and are such that and for some , then ;

-

(ii)

if is surjective and is contained in the least (left, right, or 2-sided) congruence on containing , then the least (left, right, or 2-sided) congruence on containing is .

Lemma 3.9(i) can be reformulated as follows.

Corollary 3.10.

If and is transitive, then if and only if for all .

A convenient consequence of the correspondence between right congruences and their word graphs allows us to determine a set of generating pairs for from . This method is similar to Stalling’s method for finding a generating set of a subgroup of a free group from its associated coset graph, see, for example, [41, Proposition 6.7].

Lemma 3.11.

Let be a monoid defined by a monoid presentation , let be a right congruence of , and let be the word graph of . Then generates the path relation as a right congruence.

In particular, if is the natural homomorphism with , then

generates as a right congruence.

Proof.

We set where is the short-lex minimum word labelling any -path in for every .

We denote by the right congruence on generated by . If , then since , it follows that both and label -paths in . In particular, and so, since is the least right congruence containing and is a right congruence, also.

For the converse inclusion, we will show that for every that labels a -path in . It will follow from this that .

Suppose that labels a -path in . We write where is the longest prefix of belonging to . We proceed by induction on . If , then , and so . Hence and so by reflexivity. This establishes the base case.

Suppose that for some , for all such that labels a -path and where . Let for some with . Then we may write for some and with . Since is complete, there is an edge for some . Hence by definition.

If we set , then also labels a -path in . Again we write where is the maximal prefix of in and . Since is a prefix of and is maximal, is a (not necessarily proper) prefix of . In particular, and so . Hence by induction . In addition , since and is a right congruence. Therefore by transitivity , as required.

From this point in the paper onwards, for the purposes of describing the time or space complexity of some claims, we assume that we are using the RAM model of computation. The following corollary is probably well-known.

Corollary 3.12.

Let be a monoid defined by a monoid presentation , and let be a right congruence on . If has finite index , then is finitely generated as a right congruence and a set of generating pairs for can be determined in time from the word graph associated to .

Proof.

This follows immediately since the generating set for given in Lemma 3.11 has size , and it can be found by a breadth first traversal of the word graph. The breadth first traversal of the word graph has time complexity . To store the generating pairs for requires at most space and time, yielding the stated time complexity. ∎

3.2 Homomorphisms of word graphs

In this section we state some results relating homomorphisms of word graphs and containment of the associated congruences.

Lemma 3.13.

If and are deterministic word graphs on the same alphabet such that every node in and is reachable from and , respectively, then there exists at most one word graph homomorphism such that .

Proof.

Suppose that are word graph homomorphisms such that and . Then there exists a node such that . If is any word labelling a -path, then, since word graph homomorphisms preserve paths, labels - and -paths in , which contradicts the assumption that is deterministic. ∎

Suppose that and are word graphs. At various points in the paper it will be useful to consider the disjoint union of and , which has set of nodes, after appropriate relabelling, and edges . If , then we will write if it is necessary to distinguish which graph the node belongs to. We will also abuse notation by assuming that and .

Corollary 3.14.

If , , and are word graphs over the same alphabet, and every node in each word graph is reachable from for , then there is at most one word graph homomorphism such that .

Proof.

Suppose that are word graph homomorphisms such that . Then is a word graph homomorphism for and . Hence, by Lemma 3.13, and , and so . ∎

The final lemma in this subsection relates word graph homomorphisms and containment of the associated congruences.

Lemma 3.15.

Let and be word graphs over an alphabet representing congruences and on a monoid . Then there exists a word graph homomorphism such that if and only if .

Proof.

() If is a word graph homomorphism such that , and labels a -path in , then labels a -path in . If , then and label -paths in and so and label -paths in . Hence and so .

() Conversely, assume that . We define as follows. For every , we fix a word labelling a -path. Such a path exists for every because every node is reachable from . We define for all . If , then and both label -paths, and so . Since , it follows that and so and both label -paths in . In particular, is an edge of , and is a homomorphism. ∎

3.3 Standard word graphs

In this section we prove some essential results about standard word graphs.

Suppose that is a word graph where for some and where . The ordering on and induces the lexicographic ordering on and , and the latter orders the edges of any word graph . We write for any and all of these orders.

We defined standard complete word graphs in 3.5, we now extend this definition to incomplete word graphs by adding the following requirement:

-

(iv)

if is a missing edge in , for some , and there exists such that , then .

An edge is a short-lex defining edge in if .

Lemma 3.16.

Let be a deterministic word graph over an alphabet , let , and let

be an -path labelled by . If is the short-lex least word labelling any -path in , then the following hold:

-

(i)

if and only if , hence the -path labelled by does not contain duplicate edges;

-

(ii)

is the short-lex least word labelling any -path for all such that .

Lemma 3.17.

Let be a standard word graph over the alphabet , let , and let

be a -path labelled by . If is the short-lex least word labelling any -path in , then:

-

(i)

every edge is a short-lex defining edge;

-

(ii)

.

Proof.

For (i), let be the short-lex least word labelling a -path for each . By Lemma 3.16(ii), . Hence and so is a short-lex defining edge as required.

For (ii), since , it follows that and so by 3.5(iii), for every . ∎

If is an incomplete word graph over , then we refer to as a missing edge if for all . Recall that the missing edges are ordered lexicographically according to the orders on and as defined at the start of Section 3.3.

Lemma 3.18.

Let be an incomplete standard word graph over the alphabet , and let be a missing edge. Then for all .

Proof.

If , then as required. Assume that . Then and, in particular, there is at least one edge on the -path labelled by . Let be the last such edge. By Lemma 3.16(ii), , and, by 3.5(iv), . If , then is not a missing edge. Hence , and so, either ; or and . If , then, by 3.5(iii), and so , as required. If and , then . ∎

Lemma 3.19.

Let be an incomplete standard word graph over the alphabet , let be a missing edge, let , and let . If , then the following hold:

-

(i)

for all where is the short-lex least word labelling any -path in ;

-

(ii)

is standard.

Proof.

(i). Since every path in is also a path in , for all . Let be arbitrary. If the -path labelled by in does not contain the newly added edge , then also labels a -path in and so .

Seeking a contradiction suppose that -path labelled by in contains the newly added edge . Then we can write where labels a -path and labels a -path in . By Lemma 3.16(ii), labels the short-lex least -path in and so . Since was a missing edge, is deterministic, so by Lemma 3.16(i) in , the -path labelled by does not contain the edge . Hence also labels a -path in and so . By Lemma 3.16(ii) again, . But now by Lemma 3.18 in , , which is a contradiction.

Hence the -path labelled by in does not contain for any , and so .

(ii). Clearly, parts (i) and (ii) of 3.5 hold in . Part (iii) of 3.5 holds by part (i) of this lemma.

To show that 3.5(iv) holds, suppose that is a missing edge of and for some and such that . Note that every missing edge of is a missing edge of also. In particular, is a missing edge of .

If , then, by 3.5(iv) applied to , . But part (i) implies that and , and so also. In particular, satisfies 3.5(iv) in this case.

Applying Lemma 3.18 to and yields . So, if , then . Applying part (i) as in the previous case implies that . ∎

The final lemma in this section shows that the analogue of Lemma 3.19(ii) holds when the target of the missing edge is defined to be a new node.

Lemma 3.20.

Let be an incomplete standard word graph over the alphabet , let be the shortlex least missing edge in , and let . Then is standard.

Proof.

The word graph is deterministic since is a missing edge in . Hence satisfies 3.5(i). Since is standard, every node in is reachable from in and . In particular, is reachable from in and hence so too is . Thus 3.5(ii) holds for .

We set and , so that . As in Lemma 3.19(i), for every , we denote by the short-lex least word labelling any -path in . There are no edges in with source and , and so if , then no -path in contains the newly added edge . Therefore for each . Since is the only edge with target , and from Lemma 3.16(ii), . But then Lemma 3.18 implies that for all . So, if and , then either or . In both cases, and so 3.5(iii) holds for .

To establish that 3.5(iv) holds for , suppose that is a missing edge of and for some and with . There are three cases to consider:

-

(a)

is a missing edge in and ;

-

(b)

is a missing edge in and ;

-

(c)

is not a missing edge in .

If (a) holds, then by 3.5(iv) applied to . But Lemma 3.19 implies that and and so in this case.

We conclude the proof by showing that neither (b) nor (c) holds.

If (b) holds, then . But is the least missing edge of , so , which contradicts the assumption that . Hence (b) does not hold.

If (c) holds, then . Since there are no edges with source in , it follows that and so . But then , which again contradicts the assumption that . ∎

Combining Lemma 3.19(ii) and Lemma 3.20 we obtain the following corollary.

Corollary 3.21.

Let be an incomplete standard word graph over the alphabet , let be the short-lex least missing edge in , and let . Then is also standard.

4 Algorithm 1: the low-index right congruences algorithm

Throughout this section we suppose that: is a fixed finite monoid presentation defining a monoid ; and that is fixed. The purpose of this section is to describe a procedure for iterating through the right congruences of with at most congruence classes. This procedure is based on the Todd–Coxeter Algorithm (see for example [13], [38] or [69]) and is related to Sims’ “low-index” algorithm for computing subgroups of finitely presented groups described in Chapter 5.6 of [65].

As shown in 3.7, there is a bijective correspondence between complete standard word graphs with at most nodes that are compatible with , and the right congruences of with index at most . As we hope to demonstrate, the key advantage of this correspondence is that word graphs are inherently combinatorial objects which lend themselves nicely to various enumeration methods. The algorithm we describe in this section is a more or less classical backtracking algorithm, or depth-first search.

This section is organised as follows. We begin with a brief general description of backtracking algorithms and refining functions in Section 4.1. For a more detailed overview of backtracking search methods see [43, Section 7.2.2]. In Section 4.2 we construct a specific search tree for the problem at hand, whose nodes are standard word graphs; in Section 4.3 we introduce various refining functions that improve the performance of finding right congruences.

4.1 Backtracking search and refining functions

We start with the definition of the search space where we are performing the backtracking search. To do so we require the notion of a digraph where is the set of nodes, and is the set of edges. We denote such digraphs using blackboard fonts to distinguish them from the word graphs defined above.

A multitree is a digraph such that for all there exists at most one directed path from to in . For a node we write to denote the set of all nodes reachable from in .

Given a multitree and a (possibly infinite) set , we say that is a search multitree for if there exists such that . We refer to any such node as a root node of . Recall that the symbol is used to mean “undefined” and does not belong to .

Definition 4.1.

A function is a refining function for if the following hold for all :

-

(i)

,

-

(ii)

if , then ,

-

(iii)

if , then .

For example, the identity function is a refining function for subset of .

If , then we refer to the set

as the children of in . Given algorithms for computing the refining function , testing membership in , and determining the children of any , the backtracking algorithm outputs the set for any . As a consequence if is any root node of . Pseudocode for the algorithm is given in Algorithm 1.

Input: A refining function for and a node .

Output: .

Of course, if is infinite, then will not terminate. In practice, if is finite but is infinite, some care is required when choosing a refining function to ensure that terminates. On the other hand if for only finitely many , then clearly, will terminate.

can be modified to simply count the number of elements in , to apply any function to each element of as it is discovered, or to search for an element of with a particular property by modifying line 5 and 8.

The following properties of search multitrees and refining functions will be useful later:

Proposition 4.2.

Let be a search multitree for . Then

-

(i)

If , then is also a search multitree for ;

-

(ii)

If and and are refining functions for and respectively, then is a refining function for .

4.2 The search multitree of standard word graphs

In this section, we describe the specific search multitree required for the low-index congruences algorithm.

We define to be the set of all standard word graphs over a fixed finite alphabet and we define to consist of the edges if and only if , , is the short-lex least missing edge in , and . Since every word graph over is finite by definition, the set is countably infinite.

We write if and .

Lemma 4.3.

The digraph is a multitree.

Proof.

Suppose that and are paths in such that and . From the definition of it follows that and . Seeking a contradiction suppose that is the least value such that . If is the least missing edge in , then and for some with . It follows that , and so is not deterministic, and hence not standard, which is a contradiction. ∎

We denote the set of all complete standard word graphs over by . Note that, by 3.7, the word graphs in are in bijective correspondence with the right congruences of the free monoid ; see Appendix B. We do not use this correspondence explicitly.

We will now show that every is reachable from the trivial word graph in , so that is a search multitree for .

Lemma 4.4.

Let be any complete standard word graph over . Then there exists a sequence of standard word graphs

such that is an edge in for every . In particular, , and so is a search multitree for .

Proof.

Suppose that we have defined for some such that and is an edge in for every . Let be the least missing edge in . Since is deterministic, there exists such that . We define . Clearly, by definition and .

Since is finite, and the form a strictly increasing sequence of subsets of , it follows that the sequence of is finite. ∎

If is an incomplete standard word graph with least missing edge , then the children of in are:

Clearly, since is finite, it can be computed in linear time in . Also it is possible to check if is complete in constant time by checking whether . Hence we can check whether belongs to in constant time.

We conclude this subsection with some comments about the implementational issues related to for some refining function of and word graph . It might appear that to iterate over in line 7 of Algorithm 1, it is necessary to copy with the appropriate edge added, so that the recursive call to in line 8 does not modify . However, this approach is extremely memory inefficient, requiring memory proportional to the size of the search tree. This is especially bad when is used to count the word graphs satisfying certain criteria or used to find a word graph satisfying a particular property. We briefly outline how to iterate over by modifying inplace, which requires no extra memory (other than that needed to store the additional edge). If the maximum number of nodes in any word graph that will be encountered during the search is known beforehand, then counting and random sampling of within the search multitree can be performed with constant space.

To do this, the underlying datastructure used to store must support the following operations: retrieving the total number of edges, adding an edge (with a potentially new node as its target), removing the most recently added edge (and any incident nodes that become isolated). It is possible to implement a datastructure where each of these operations takes constant time and space, this is the approach used in libsemigroups [53]. Of course, the refining functions may also modify inplace.

Given such a datastructure and refining functions, we can then perform the loop in lines 7-9 of Algorithm 1 as follows:

-

(1)

Let be the total number of edges of , let be the least missing edge of and let .

-

(2)

Add the edge to .

-

(3)

Set , noting that the recursive call takes the modified as input.

-

(4)

If , then repeatedly remove the most recently added edge from until .

-

(5)

Increment . If , then terminate. Otherwise go to Step 2.

The word graph is equal to one of its children after the edge is added in Step 2. After Step 4, is restored to its original state before the recursive call was made. Note that we cannot just remove the last added edge, as the refining function may have added extra edges to in the recursive call, and these extra edges are not removed in the recursive call.

4.3 Refining functions for standard word graphs

We denote the set of complete standard word graphs over

-

•

with at most nodes by ;

-

•

compatible with by .

By 3.7, the word graphs in are precisely the word graphs of the right congruences of the monoid defined by with index at most .

In this section we define the refining functions and for and , respectively. It follows from 4.2(ii) that is a refining function for . We also define two further refining functions for that try to reduce the number of word graphs (or equivalently nodes in the search multitree) visited by ; we will say more about this later.

The first refining function is for any defined by

for every (the set of standard word graphs over and ). It is routine to verify that is a refining function for .

If is a standard word graph, then checking whether (i.e. is complete) can be done in constant time, and checking that (i.e. that ) also has constant time complexity. Moreover, for only finitely many standard word graphs over , and thus terminates and outputs .

It is possible to check if a, not necessarily standard, word graph belongs to in linear time in the length of the presentation . Again since , is a multisearch tree for . This gives us an immediate, if rather inefficient, method for computing all the right congruences with index at most of a given finitely presented monoid: simply run to obtain , and then check each word graph in for compatibility with .

This method would explore just as many nodes of the search tree for the free monoid as it would for the trivial monoid . On the other hand, when considering the trivial monoid, as soon as we define an edge leading to a node other than , both of the relations and are violated and hence there is no need to consider any of the descendants of the current node in the multitree. So that we can take advantage of this observation, we define the function by

Recall from the definition, if , then is compatible with if for every such that and . The non-existence of a path with source labelled by or , however, does not make incompatible with . So it may be possible to extend so that and do label paths with source and common target . Hence does not return just because some relation word does not label a path from some node in . It is straightforward to verify that is a refining function for .

By 4.2(ii), is a refining function for . Therefore the output of is . For comparison, the number of standard word graphs visited by where , for the -generated plactic monoid (with the standard presentation, see [44, 45]) is . On the other hand, the number for is . This example illustrates that it can be significantly faster to check for compatibility with at every node in the search multitree rather than first finding and then checking for compatibility. The extra time spent per word graph (or node in the search multitree) checking compatibility with is negligible in comparison to the saving achieved in this example.

The refiner can be improved. Consider the situation where with for some word and letter . If there is a node such that and , but , then is a missing edge in . There is only one choice for the target of this missing edge which will not break compatibility with . This situation is shown diagrammatically in Fig. 4.1.

So, if , then must be an edge in . Of course, it is not guaranteed that is such that is the least missing edge of any descendent of in the search multitree . However by Lemma 3.19ii, if , then and so is standard.

We now improve the refining function by adding the ability to define edges along paths that are one letter away from fully labelling a relation word in the manner described above. We denote this new refining function for by and define it in Algorithm 2.

Input: A standard word graph or

.

Output: A standard word graph or .

Lemma 4.5.

is a refining function for .

Proof.

We verify the conditions in 4.1.

Clearly, and so 4.1(i) holds. Let . If , then the execution of the algorithm constructs a sequence of word graphs such that is obtained from by adding an edge in line 7 or line 9 of Algorithm 2, where is the final word graph constructed before returning in either line 11 or 14. In the final iteration of the for loop in line 5, is the output word graph, and so the conditions in lines 6 and 8 do not hold because no more edges are added to . The condition in line 10 will only hold if is not compatible with . Therefore if and only if and otherwise. If , then 4.1(ii) and (iii) both hold since is a refining function for .

It suffices to establish that when . The claim can then be established for by straightforward induction. We may also assume without loss of generality is obtained from in line 9 of Algorithm 2. The other case when an edge is added in line 7 of Algorithm 2 is dual.

If we add an edge to in line 9, then the condition in line 8 of Algorithm 2 holds. Therefore, there exist , , , and such that the following hold: ; for some ; for some ; and .

We must show that . Since contains , it is clear that .

Let . It suffices to show that . Since is complete, there exists such that . Since labels an -path in , also labels such a path in . Likewise, labels an -path in . Hence labels an -path in . Since and is compatible with , it follows that and so .

By the definition of there exists a sequence of word graphs

and of edges such that is the least missing edge of and for every .

If is such that , then we consider the sequence of word graphs

Suppose the least missing edge in is . Every missing edge of is also a missing edge of , and so , since is the least missing edge in . Since and differ by the single edge , it follows that either and ; or and . Similarly, if , then by the same argument, the least missing edge in is for every . Therefore

is a path in and so , as required. ∎

It is possible that . To ensure that we add as many edges to as possible we could keep track of whether adds any edges to its input, and run Algorithm 2 again until no more edges are added. We denote this algorithm by ; see Algorithm 3 for pseudocode.

Input: A word graph or .

Output: A word graph or .

That is a refining function for follows by repeatedly applying 4.2(ii). Therefore both and are refining functions for .

While is more computationally expensive than , every edge added to by reduces the number of nodes in that must be traversed in where is by a factor of at least . This tradeoff seems quite useful in practice as can be seen in Appendix A.

Although in line 5 of we loop over all nodes and all relations in , in practice this is not necessary. Clearly, if the word graph is compatible with , then we only need to follow those paths labelled by relations that include any new edges. A technique for doing just this is given in [13, Section 7.2], and it is this that is implemented in libsemigroups [53]. A comparison of the refining functions for presented in this section is given in Table 4.1

| 1 | 2 | 3 | 4 | |

|---|---|---|---|---|

| 6 | 165 | 15,989 | 3,556,169 | |

| 6 | 120 | 1,680 | 29,800 | |

| 6 | 75 | 723 | 6,403 | |

| 6 | 75 | 695 | 6,145 |

5 Applications of Algorithm 1

In this section we describe a number of applications of the low-index right congruences algorithm. Recall that is a monoid defined by the presentation . Each application essentially consists of defining a refining function so that returns a particular subset of right congruences. In brief these subsets are:

-

(a)

left congruences;

-

(b)

2-sided congruences;

-

(c)

right congruences including, or excluding, a given subset of ;

-

(d)

2-sided congruences such that the quotient is a finite group;

-

(e)

non-trivial right Rees congruences when has decidable word problem; and

-

(f)

right congruences where the right action of on the nodes of the word graph of is faithful.

with index up to . A further application of (c) provides a practical algorithm for solving the word problem in finitely presented residually finite monoids; this is described in Section 5.4. Each application enumerated above is described in a separate subsection below.

A further application of the implementations of the algorithms described in this section is to reproduce, and extend, some of the results from [6].

5.1 Left congruences

In Sections 3 and 4, we only considered right congruences, and, in some sense, word graphs are inherently “right handed”. It is possible to state an analogue of 3.7 for left congruences, for which we require the following notation. If , then we write to denote the reverse of . If is arbitrary, then we denote by the set of relations containing for all .

An analogue of 3.1 holds for left actions. More specifically, if is a left action of a monoid on a set , and is as above, then the corresponding word graph is where whenever . Conversely, if is a word graph, then we define a left action by

whenever labels an -path in .

Theorem 5.1.

Let be a monoid defined by a monoid presentation . Then there is a one-to-one correspondence between the left congruences of and the standard complete word graphs over compatible with .

If is a left congruence on and is the corresponding word graph, then the left actions of on (by left multiplication) and on are isomorphic; and where is the unique homomorphism with and is the path relation on .

It follows from 5.1 that the left congruences of a monoid can be enumerated using the same method for enumerating right congruences applied to . Some care is required here, in particular, since the corresponding word graphs are associated to right congruences on the dual of the original monoid (defined by the presentation ), rather than to left congruences on .

5.2 2-sided congruences

The word graph corresponding to a 2-sided congruence of is just the right Cayley graph of with respect to . Therefore characterizing -sided congruences is equivalent to characterizing Cayley graphs. The corresponding question for groups — given a word graph , determine whether is the Cayley graph of a group — was investigated in [41, Theorem 8.14]. An important necessary condition, in our notation, is that for all . In some sense, this condition states that the automorphism group of is transitive. We will next show that the corresponding condition for monoids is that for all . This condition for monoids is, in the same sense as for groups, equivalent to the statement that for every node of the Cayley word graph there is an endomorphism mapping the identity to that node.

Lemma 5.2.

Let be the monoid defined by the monoid presentation , and let be a right congruence on with word graph . Then is a 2-sided congruence if and only if for all .

Proof.

For every , we denote by the short-lex minimum word labelling any -path in . We also denote by the unique surjective homomorphism with . Recall that, since is compatible with , and so, by 3.10, if and only if . Also, by 3.7, .

For the forward implication, suppose that is a 2-sided congruence and that . Then . Since is a 2-sided congruence,

for all . In particular, again by 3.10, . Thus . Since , it follows that and so as required.

We can further refine Lemma 5.2 by using the generating pairs of Lemma 3.11 to yield a computationally testable condition as follows.

Theorem 5.3.

Let be the monoid defined by the monoid presentation , and let be a right congruence on with word graph . Then is a 2-sided congruence if and only if is compatible with where is the short-lex minimum word labelling any -path in .

Proof.

Let be the unique surjective homomorphism with and let denote the set

() If is a 2-sided congruence, then by Lemma 5.2, for all . The relation is contained in by definition. Therefore for all . Hence is compatible with as required.

() Assume that is compatible with . Then and so . Since and is the least right congruence containing , it follows that . Hence by Lemma 3.9ii. Therefore . But is generated as a right congruence by by Lemma 3.11 and so giving equality throughout. In particular, is a 2-sided congruence, as required. ∎

In 5.3 we showed there is a bijection between the 2-sided congruences of the monoid defined by and the complete standard word graphs compatible with both and the set . We denote by the set of complete standard word graphs corresponding to 2-sided congruences of . Recall from 3.12 that we can compute from in time. We can also verify that a given word graph is compatible with in where is the sum of the lengths of the words occurring in , using, for example, .

We define the function by

for all .

Lemma 5.4.

TwoSidedMakeCompatibleRepeatedly is a refining function for .

Proof.

On superficial inspection, it might seem that TwoSidedMakeCompatibleRepeatedly is a refining function because is a refining function. However, this does not follow immediately because the set is dependent on the input word graph .

Clearly, so 4.1(i) holds.

It follows that where is the refining function returns the set of word graphs of the 2-sided congruences of the monoid defined by with index at most . As a practical comparison, the number of word graphs visited by where and for the -generated plactic monoid is . On the other hand, the number of word graphs visited when using the refining function is only .

As an example, in Table B.13 we compute the number of 2-sided congruences with index at most of the free monoid when and are not too large. For example, we compute the number of 2-sided congruences of up to index , , and , when , and , respectively.

5.3 Congruences including or excluding a relation

Given two elements and of the monoid defined by the finite presentation , we might be interested in finding finite index right congruences containing or not containing . Suppose that and are such that where is the unique homomorphism with . By 3.7, if is a right congruence of , then if and only if in the word graph of .

For , we denote by the set of complete standard word graphs such that . Similarly, we denote by the set of complete standard word graphs such that .

We also define refining functions and by

It is routine to verify that and are refining functions for and , respectively.

Composing these refining functions with and any of the refining functions for one or 2-sided congruences from Section 4 and Section 5.2 allows us to find one or 2-sided congruences of with index at most that include or exclude a given relation.

5.4 McKinsey’s algorithm

A monoid is residually finite if for all with there exists a finite monoid and homomorphism such that . In [50], McKinsey gave an algorithm for deciding the word problem in finitely presented residually finite monoids. McKinsey’s Algorithm in [50] is, in fact, more general, and can be applied to residually finite universal algebras.

McKinsey’s algorithm relies on two semidecision procedures — one for testing equality in a finitely presented monoid and the other for testing inequality in a finitely generated residually finite monoid. It is well-known (and easy to show) that testing equality is semidecidable for every finitely presented monoid.

Suppose that is finitely presented by and that is residually finite. There are only finitely many finite monoids of every size, and only finitely many possible functions from to . If is any such function, then it is possible to verify that extends to a homomorphism by checking that satisfies the (finite set of) relation . Clearly, if and for some , then, since is a function, . It follows that it is theoretically possible to verify that by looping over the finite monoids , the functions , and for every that extends to a homomorphism , testing whether . Thus testing inequality in a finitely presented residually finite monoid is also semidecidable.

McKinsey’s algorithm proceeds by running semidecision algorithms for testing equality and inequality in parallel; this is guaranteed to terminate, and so the word problem for finitely presented residually finite monoids is decidable in theory. In practice, checking for equality in a finitely presented monoid with presentation can be done somewhat efficiently by performing a backtracking search in the space of all elementary sequences over . On the other hand, the semidecision procedure given above for checking inequality is extremely inefficient. For example, the number of monoids of size at most up to isomorphism and anti-isomorphism is ; see [17].

The low-index congruences algorithm provides a more efficient algorithm for deciding inequality in a finitely presented residually finite monoid by utilizing the refining function for some . The set consists of exactly the 2-sided congruences on with index at most such that (where is the natural homomorphism). Hence in if and only if for some . Therefore can be used to implement McKinsey’s algorithm with a higher degree of practicality.

5.5 Congruences defining groups

We say that a 2-sided congruence on is a group congruence if the quotient monoid is a group. A 2-sided congruence is a group congruence if and only if for every there exists such that and where is the identity element. If is generated by , then is a group congruence if and only if for every there exists such that and . We say that a word graph is injective if for all and there is at most one edge in with target and label . This is the dual of the definition of determinism. We can decide if a finite word graph corresponds to a group congruence as follows.

Theorem 5.5.

Let be the monoid defined by the monoid presentation , and let be a finite index 2-sided congruence on with word graph . Then is a group congruence if and only if is injective.

Proof.

Let be the unique surjective homomorphism with .

Let for some and . Since defines a group there exists such that . Since is a 2-sided congruence, and so and similarly . But and both label -paths in , and so . Hence by transitivity . Then, by 3.10 and 3.7, and so . We have shown that is injective.

Suppose that . Since the set is infinite but has only finitely many nodes, it follows from the pigeonhole principle that there exists and with such that and both label -paths in . Assume that is the least such value. If , then there exist such that and label - and -paths respectively. It follows that and so by injectivity . In particular, and both label -paths, and this contradicts the minimality of .

Therefore and so . Hence, by 3.7, . In particular, if , then . Since is surjective, and was arbitrary, it follows that is a group congruence. ∎

It is possible to verify if a given word graph over is injective, or not. In particular, in the representation used in libsemigroups [53], this can be verified in time linear in . Furthermore, if is not injective, then neither is any descendent of in the search multitree . Hence the following function is a refining function for the set of all word graphs corresponding to group congruences:

Composing IsInjective, , and any of the refining functions for of word graphs corresponding to 2-sided congruences of , this gives us a method for computing all group congruences with index at most of the monoid presented by .

5.6 Rees congruences

In this section we describe how to use the low-index right congruences algorithm to compute Rees congruences, i.e. those arising from ideals. A related algorithm for finding low-index Rees congruences is given in [39] and [40]. Like the low-index congruences algorithm, Jura’s Algorithm in [39] and [40] also uses some aspects of the Todd–Coxeter Algorithm. The algorithm presented in this section is distinct from Jura’s Algorithm. In general, the problem of computing the finite index ideals of a finitely presented monoid is undecidable; see [40] and [61, Theorem 5.5]. However, if the word problem happens to be decidable for a finitely presented monoid, then so too is the problem of computing the finite index ideals of that monoid.

Given a right ideal of a monoid , the right Rees congruence of is . The trivial congruence is a right Rees congruence if and only if has a right zero; and the trivial congruence has finite index if and only if is finite. As such, we will restrict ourselves, in this section, to considering only non-trivial finite index right Rees congruence.

Let be a standard word graph of a right congruence of and let be the unique homomorphism with . We call a node a sink if for all . We say that a sink is non-trivial if there exists an edge such that , where as usual is the short-lex least word labelling a -path in .

If is compatible with the relations defining , is the right congruence of any complete standard word graph compatible with that contains , and is a non-trivial sink, then the equivalence class of on corresponding to contains at least 2 elements: and . In particular, is non-trivial, which explains why we called a non-trivial sink.

We give a criterion for deciding if a complete standard word graph corresponds to a non-trivial right Rees congruence in the next theorem.

Theorem 5.6.

Let be the monoid defined by the monoid presentation , let be a right congruence on with word graph , and let be the unique homomorphism with . Then is a non-trivial right Rees congruence if and only if the following conditions hold:

-

(i)

there exists a unique non-trivial sink ;

-

(ii)

if and , then .

Proof.

() Let be a right ideal of such that and , and let be the corresponding non-trivial right Rees congruence with complete standard word graph . If is such that , then since is complete, for some .

If for some , then since is a right Rees congruence. By 3.10, it follows that , and so also. Conversely, if , then and hence . Hence labels a -path in if and only if .

In particular and if is arbitrary, then , and so also labels a -path in . It follows that for all and is a sink. To show that (i) holds, it remains to show that is non-trivial and unique.

To show that is non-trivial, consider the set

Since and is surjective, this set is non-empty. We set to be the short-lex least word in . Since , and is the short-lex least such word, and , it follows that . Hence for some and some . If labels a -path in for some , then and so it suffices to show that . If , then . Since , it would follow that and is non-trivial. Hence it suffices to show that .

If , then , and so . But implies that and so since is a Rees congruence, as required. If , then and so . Since and is the least element of , it follows that and so , as required. We have shown that is non-trivial.

To establish the uniqueness of , let be a non-trivial sink. By the definition of non-trivial sinks, there exists such that . Since , it follows that . If or , then, since is a Rees congruence, , which is a contradiction. Hence and so .

To show that (ii) holds, let and . It follows that neither , and so . On the other hand implies and so again since is a right Rees congruence.

() Let be the unique node satisfying condition (i) and let

If , then , and is a non-trivial Rees congruence. Hence we may suppose that .

By assumption for all and so for all . Hence if labels a -path in , then so does for all and so for all and . Since is surjective, this implies that is a right ideal of . It suffices by 3.7 to show that .

Suppose that . If , then there exist such that and . Hence by the definition of , both and label -paths in . This implies and so . Otherwise, if , then by reflexivity since is a right congruence. Hence .

For the converse, suppose that for some such that . We proceed by induction on . If , then and so by reflexivity.

Suppose that for some and for all with , implies . Let be such that and . Without loss of generality there are two cases to consider: when ; and when and .

If , then by assumption. Hence we can write for some and . If , then and so . In particular, and so by induction . Thus . Since and , it follows by (ii) that . Hence by transitivity.

If and , then we can write and for some and . If and , then . Since , it follows that . Similarly . Hence, by induction, and so . If , then by (ii) applied to , it follows that . Thus, by transitivity, , and the proof is complete. ∎

Unlike in the previous subsections, the conditions of 5.6 can only be tested if a method for solving the word problem in the monoid is known. Given an algorithm for solving the word problem, the non-triviality of a sink in 5.6(i) and the condition in 5.6(ii) can both be verified computationally.

Let be the set of all standard complete word graphs corresponding to non-trivial right Rees congruences on the monoid presented by . The function is defined in Algorithm 4. We will show that the function , is a refining function for in Lemma 5.7.

Input: A word graph or .

Output: A word graph or .

Lemma 5.7.

is a refining function for .

Proof.

Suppose that and . Then returns in line 10 or line 20 of Algorithm 4. If returns in line 10, then there exist such that and . If any descendent of has a unique non-trivial sink , then or . In particular, 5.6(ii) does not hold, and so , and so 4.1(ii) holds.

If returns in line 20, then there exists a unique and such that . The node is the unique node with this property for every descendent of also. But, by the condition of line 19, is not a sink in , and hence no descendent of contains a unique non-trivial sink. In other words, , and so 4.1(ii) holds.

Finally assume that returns in line 24 of Algorithm 4. We must show that . Since , certainly . Suppose that . The if statement in line 15 implies that has been assigned a value in and therefore it is the unique non-trivial sink of every descendent of in including . Since edges are only added to in line 18, it follows that and so 4.1(iii) holds. ∎

It follows from Lemma 5.7 that where is .

If is a right ideal of , then the Rees congruence is a 2-sided congruence if and only if is a 2-sided ideal of . Hence is the set of all standard complete word graphs corresponding to Rees congruences by 2-sided ideals. Combining the criteria of 5.6 and 5.3 we can computationally check if a word graph belongs to . Therefore where is .

5.7 Congruences representing faithful actions

If is a (right) monoid action, then every induces a transformation defined by . We say that is faithful if implies that for all . Of course, if is a faithful right action of , then and are isomorphic monoids. Several recent papers have studied transformation representations of various classes of semigroups and monoids; see [3], [10], [11], and [49] and the references therein. The implementations of the algorithms in this paper were crucial in [11] and can be used to verify, in finitely many cases, some of the results in [49].

Recall that to every complete deterministic word graph compatible with we associate the right action given by for all , where is the unique homomorphism with .

We require the following theorem.

Theorem 5.8 (cf. Proposition 1.2 in [71] or Chapter I, Proposition 5.24 in [42]).

Let be a monoid and let be a right congruence on . Then the action of on by right multiplication is faithful if and only if the only 2-sided congruence contained in is trivial.

A 2-sided congruence on a monoid is principal if there exists such that and is the least 2-sided congruence containing , i.e. . We also refer to the pair in the preceding sentence as the generating pair of the principal congruence . Note that if is a principal 2-sided congruence, then by definition.

Clearly, every non-trivial 2-sided congruence contains a principal 2-sided congruence. If is a right congruence not containing any principal 2-sided congruences, then contains no non-trivial 2-sided congruences. Hence, by 5.8, acts faithfully on by right multiplication. This argument establishes one implication of the next theorem.

Theorem 5.9.