Computing the nc-rank via discrete convex optimization on CAT(0) spaces

Abstract

In this paper, we address the noncommutative rank (nc-rank) computation of a linear symbolic matrix

$$A = A_1 x_1 + A_2 x_2 + \cdots + A_m x_m,$$

where each $A_i$ is an $n \times n$ matrix over a field $\mathbb{K}$, and $x_i$ $(i = 1, 2, \ldots, m)$ are noncommutative variables. For this problem, polynomial time algorithms were given by Garg, Gurvits, Oliveira, and Wigderson for $\mathbb{K} = \mathbb{Q}$, and by Ivanyos, Qiao, and Subrahmanyam for an arbitrary field $\mathbb{K}$. We present a significantly different polynomial time algorithm that works on an arbitrary field $\mathbb{K}$. Our algorithm is based on a combination of submodular optimization on modular lattices and convex optimization on CAT(0) spaces.

Keywords: Edmonds’ problem, noncommutative rank, CAT(0) space, proximal point algorithm, submodular function, modular lattice, $p$-adic valuation, Euclidean building

1 Introduction

The present article addresses rank computation of a linear symbolic matrix, that is, a matrix of the following form:

$$A = A_1 x_1 + A_2 x_2 + \cdots + A_m x_m, \tag{1.1}$$

where each $A_i$ is an $n \times n$ matrix over a field $\mathbb{K}$, $x_i$ $(i = 1, 2, \ldots, m)$ are variables, and $A$ is viewed as a matrix over $\mathbb{K}(x_1, x_2, \ldots, x_m)$. This problem, sometimes called Edmonds’ problem, has fundamental importance in a wide range of applied mathematics and computer science; see [36]. Edmonds’ problem (on a large field $\mathbb{K}$) is a representative problem that belongs to RP (the class of problems having a randomized polynomial time algorithm) but is not known to belong to P. The existence of a deterministic polynomial time algorithm for Edmonds’ problem is one of the major open problems in theoretical computer science.
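The randomized approach behind the RP membership can be illustrated by random substitution: replace each variable by a random field element and compute an ordinary rank. The following is a minimal sketch, assuming integer matrices reduced modulo a prime `p`; the helper names (`rank_mod_p`, `substituted_rank`, `randomized_rank`) and the test matrix `SKEW` are illustrative choices, not taken from the paper.

```python
import random

def rank_mod_p(M, p):
    """Rank over GF(p) of an integer matrix M (list of rows), by Gaussian elimination."""
    M = [[v % p for v in row] for row in M]
    rank = 0
    for c in range(len(M[0])):
        # Find a row at or below `rank` with a nonzero entry in column c.
        pivot = next((r for r in range(rank, len(M)) if M[r][c]), None)
        if pivot is None:
            continue
        M[rank], M[pivot] = M[pivot], M[rank]
        inv = pow(M[rank][c], -1, p)  # modular inverse (Python 3.8+)
        M[rank] = [v * inv % p for v in M[rank]]
        for r in range(len(M)):
            if r != rank and M[r][c]:
                factor = M[r][c]
                M[r] = [(a - factor * b) % p for a, b in zip(M[r], M[rank])]
        rank += 1
    return rank

def substituted_rank(As, xs, p):
    """Rank over GF(p) of A_1*x_1 + ... + A_m*x_m for scalar values xs."""
    n = len(As[0])
    M = [[sum(A[i][j] * x for A, x in zip(As, xs)) for j in range(n)]
         for i in range(n)]
    return rank_mod_p(M, p)

def randomized_rank(As, p, trials=20, seed=0):
    """Largest rank seen over random substitutions (a lower bound on the rank over GF(p)(x))."""
    rng = random.Random(seed)
    return max(substituted_rank(As, [rng.randrange(p) for _ in As], p)
               for _ in range(trials))

# Generic 3x3 skew-symmetric matrix x1*A1 + x2*A2 + x3*A3 (illustrative input).
SKEW = [
    [[0, 1, 0], [-1, 0, 0], [0, 0, 0]],
    [[0, 0, 1], [0, 0, 0], [-1, 0, 0]],
    [[0, 0, 0], [0, 0, 1], [0, -1, 0]],
]
print(randomized_rank(SKEW, p=101))
```

For the skew-symmetric example every scalar substitution is singular, so the commutative rank is at most 2, which is what the sketch reports.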

In 2015, Ivanyos, Qiao, and Subrahmanyam [29] introduced a noncommutative formulation of Edmonds’ problem, called the noncommutative Edmonds’ problem. In this formulation, a linear symbolic matrix $A$ is regarded as a matrix over the free skew field $\mathbb{K}(\langle x_1, \ldots, x_m \rangle)$, which is the “most generic” skew field of fractions of the noncommutative polynomial ring $\mathbb{K}\langle x_1, \ldots, x_m \rangle$. The rank of $A$ over the free skew field is called the noncommutative rank, or nc-rank, which is denoted by $\text{nc-rank}\,A$. Contrary to the commutative case, the noncommutative Edmonds’ problem can be solved in polynomial time.

Beyond this result itself, the algorithms for the nc-rank have stimulated subsequent research. The first polynomial time algorithm is due to Garg, Gurvits, Oliveira, and Wigderson [17] for the case of $\mathbb{K} = \mathbb{Q}$. They showed that Gurvits’ operator scaling algorithm [19], which was designed for solving a special class (Edmonds-Rado class) of Edmonds’ problem, can solve nc-singularity testing (i.e., testing whether $\text{nc-rank}\,A < n$) in polynomial time. The operator scaling algorithm has rich connections to various fields of mathematical sciences. In particular, nc-singularity testing can be formulated as a geodesically-convex optimization problem on the Riemannian manifold $GL_n(\mathbb{C})/U_n(\mathbb{C})$, and operator scaling can be viewed as a minimization algorithm on it; see [2]. For the rapid developments after [17], we refer to, e.g., [9] and the references therein.

Ivanyos, Qiao, and Subrahmanyam [29, 30] developed the first polynomial time algorithm for the nc-rank that works on an arbitrary field $\mathbb{K}$. Their algorithm is viewed as a “vector-space generalization” of the augmenting path algorithm in the bipartite matching problem. This indicates a new direction in combinatorial optimization, since Edmonds’ problem generalizes several important combinatorial optimization problems. Inspired by their algorithm, [27] developed a combinatorial polynomial time algorithm for a certain algebraically constrained 2-matching problem in a bipartite graph, which corresponds to the (commutative) Edmonds’ problem for a linear symbolic matrix in [32]. Also, a noncommutative algebraic formulation that captures weighted versions of combinatorial optimization problems was studied in [25, 26, 40].

The main contribution of this paper is a significantly different polynomial time algorithm for computing the nc-rank on an arbitrary field $\mathbb{K}$. While describing the above algorithms and their validity proofs is rather tough work, the algorithm and proof presented in this paper are conceptually simple, elementary, and relatively short. Further, our approach is relevant to the following two cutting-edge issues in discrete and continuous optimization:

• submodular optimization on a modular lattice.

• convex optimization on a CAT(0) space.

A submodular function on a lattice $\mathcal{L}$ is a function $f: \mathcal{L} \to \mathbb{R}$ satisfying $f(p) + f(q) \geq f(p \wedge q) + f(p \vee q)$ for $p, q \in \mathcal{L}$. Submodular functions on a Boolean lattice $\{0,1\}^n$ are well-studied, and have played central roles in the developments of combinatorial optimization; see [15]. They are correspondents of convex functions (discrete convex functions) in discrete optimization; see [37]. Optimization of submodular functions beyond Boolean lattices, particularly on modular lattices, is a new research area that has just started; see [16, 24, 34] on this subject.

A CAT(0) space is a (non-manifold) generalization of nonpositively curved Riemannian manifolds; see [8]. While CAT(0) spaces have been studied mainly in geometric group theory, their effective utilization in applied mathematics has gained attention; see e.g.,[6]. A CAT(0) space is a uniquely-geodesic metric space, and convexity concepts are defined along unique geodesics. Theory of algorithms and optimization on CAT(0) spaces is now being pioneered; see e.g., [3, 4, 5, 22, 41].

Our algorithm is obtained as a combination of these new optimization approaches. We hope that this will bring new interactions to the nc-rank literature. While it is somewhat relevant to the geodesically-convex optimization mentioned above, we deal with optimization on combinatorially-defined non-manifold CAT(0) spaces. The most important implication of our result is that convex optimization algorithms on such spaces can be a tool for showing polynomial time complexity.

Outline.

Let us outline our algorithm. As shown by Fortin and Reutenauer [14], the nc-rank is given by the optimum value of an optimization problem:

Theorem 1.2 ([14]).

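Let $A$ be a matrix of form (1.1). Then $\text{nc-rank}\,A$ is equal to the optimal value of the following problem:

$$\text{FR:} \quad \text{Min. } 2n - r - s \quad \text{s.t. } SAT \text{ has an } r \times s \text{ zero submatrix}, \quad S, T \in GL_n(\mathbb{K}).$$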

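As in [29, 30], our algorithm is designed to solve this optimization problem. This problem FR can also be formulated as an optimization problem on the modular lattice of vector subspaces in $\mathbb{K}^n$, as follows. Regard each matrix $A_i$ as a bilinear form $\mathbb{K}^n \times \mathbb{K}^n \to \mathbb{K}$ by

$$A_i(x, y) := x^\top A_i y \quad (x, y \in \mathbb{K}^n).$$

Then the condition of FR says that there is a pair of vector subspaces $X$ and $Y$ of dimension $r$ and $s$, respectively, that annihilates all bilinear forms, i.e., $A_i(X, Y) = \{0\}$ for all $i$. The objective function is written as $2n - \dim X - \dim Y$. Therefore, up to the additive constant $2n$, FR is equivalent to the following problem (maximum vanishing subspace problem; MVSP):

$$\text{MVSP:} \quad \text{Min. } -\dim X - \dim Y \quad \text{s.t. } A_i(X, Y) = \{0\} \ (i = 1, 2, \ldots, m), \quad X, Y \subseteq \mathbb{K}^n \text{: vector subspaces}.$$

It is a basic fact that the family $\mathcal{L}$ of all vector subspaces in $\mathbb{K}^n$ forms a modular lattice with respect to the inclusion order. Hence, MVSP is an optimization problem over $\mathcal{L} \times \mathcal{L}$. Further, by reversing the order of the second $\mathcal{L}$, it can be viewed as a submodular function minimization (SFM) on the modular lattice $\mathcal{L} \times \mathcal{L}$; see Proposition 3.2 in Section 3.1.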
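For very small instances, the vanishing-subspace formulation can be checked by exhaustive search. The sketch below works over GF(2) only, encodes vectors as bitmasks, and enumerates every subspace $X$; for each $X$ it takes the largest $Y$ annihilated by $X$. All function names are hypothetical, and the enumeration is exponential, so this is an illustration of the definition rather than an algorithm from the paper.

```python
def span(vectors):
    """All GF(2)-linear combinations of the given bitmask vectors."""
    S = {0}
    for v in vectors:
        S |= {s ^ v for s in S}
    return frozenset(S)

def all_subspaces(n):
    """Every subspace of GF(2)^n, each as a frozenset of bitmasks (tiny n only)."""
    found = {span([])}
    frontier = [span([])]
    while frontier:
        S = frontier.pop()
        for v in range(1, 1 << n):
            if v not in S:
                T = span(list(S) + [v])
                if T not in found:
                    found.add(T)
                    frontier.append(T)
    return found

def dim(S):
    """Dimension of a subspace given as a collection of its 2^dim elements."""
    return len(S).bit_length() - 1

def bilinear(A, x, y):
    """x^T A y over GF(2); bit i of a bitmask is coordinate i."""
    n = len(A)
    return sum(A[i][j] for i in range(n) for j in range(n)
               if (x >> i) & 1 and (y >> j) & 1) % 2

def nc_rank_gf2(As):
    """2n minus the maximum of dim X + dim Y over vanishing pairs (X, Y)."""
    n = len(As[0])
    best = 0
    for X in all_subspaces(n):
        # The largest Y with A_i(X, Y) = {0} for all i is itself a subspace.
        Y = [y for y in range(1 << n)
             if all(bilinear(A, x, y) == 0 for A in As for x in X)]
        best = max(best, dim(X) + dim(Y))
    return 2 * n - best

# Generic 3x3 skew-symmetric matrix, read modulo 2 (illustrative input).
SKEW = [
    [[0, 1, 0], [-1, 0, 0], [0, 0, 0]],
    [[0, 0, 1], [0, 0, 0], [-1, 0, 0]],
    [[0, 0, 0], [0, 0, 1], [0, -1, 0]],
]
print(nc_rank_gf2(SKEW))
```

On the skew-symmetric example the three bilinear forms are the coordinates of the cross product, so a vanishing pair forces $X$ and $Y$ to be spanned by one common vector; the best pairs have total dimension 3 and the nc-rank is 3, although every scalar substitution is singular.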

Contrary to the Boolean case, it is not known generally whether a submodular function on a modular lattice can be minimized in polynomial time. The reason for the polynomial-time solvability of SFM on a Boolean lattice is the Lovász extension [35], a piecewise-linear interpolation $\bar{f}: [0,1]^n \to \mathbb{R}$ of a function $f: \{0,1\}^n \to \mathbb{R}$ such that $\bar{f}$ is convex if and only if $f$ is submodular. For SFM on a modular lattice, however, such a good convex relaxation to $\mathbb{R}^n$ is not known.

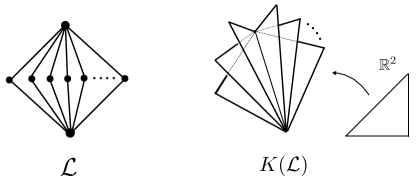

A recent study [24] introduced an approach of constructing a convex relaxation of SFM on a modular lattice, where the domain of the relaxation is a CAT(0) space. The construction is based on the concept of an orthoscheme complex [7]. Consider the order complex $K(\mathcal{L})$ of a modular lattice $\mathcal{L}$, and endow each simplex with a specific Euclidean metric. The resulting metric space $K(\mathcal{L})$ is called the orthoscheme complex of $\mathcal{L}$, and is dealt with as a continuous relaxation of $\mathcal{L}$. The details are given in Section 2.2.2. Figure 1 illustrates the orthoscheme complex of a modular lattice with rank $2$, which is obtained by gluing Euclidean isosceles right triangles along longer edges.

The orthoscheme complex $K(\mathcal{L})$ of a modular lattice $\mathcal{L}$ was shown to be CAT(0) [10]. This enables us to consider geodesic convexity for functions on $K(\mathcal{L})$. In this setting, a submodular function $f: \mathcal{L} \to \mathbb{R}$ is characterized by the convexity of its piecewise linear interpolation $\bar{f}: K(\mathcal{L}) \to \mathbb{R}$, i.e., the Lovász extension [24]. According to this construction, we obtain an exact convex relaxation of MVSP in a CAT(0) space.

Our proposed algorithm is obtained by applying the splitting proximal point algorithm (SPPA) to this convex relaxation. SPPA is a generic algorithm that minimizes a convex function $f$ of a separable form $f = \sum_{i=1}^{N} f_i$, where each $f_i$ is a convex function. Each iteration of the algorithm updates the current point $x$ to its resolvent of $f_i$, that is, a minimizer of $y \mapsto f_i(y) + \frac{1}{2\lambda} d(x, y)^2$ for a step size $\lambda > 0$, where $i$ is chosen cyclically. Bačák [4] showed that SPPA generates a sequence convergent to a minimizer of $f$ (under a mild assumption). Subsequently, Ohta and Pálfia [39] proved a sublinear convergence of SPPA.

The main technical contribution is to show that SPPA is applicable to the convex relaxation of MVSP and becomes a polynomial time algorithm for MVSP: We provide an equivalent convex relaxation of MVSP with a separable objective function $f = \sum_i f_i$, and show that the resolvent of each $f_i$ can be computed in polynomial time. By utilizing the sublinear convergence estimate, a polynomial number of iterations for SPPA identifies an optimal solution of MVSP.

Compared with the existing algorithms, this algorithm has advantages and drawbacks. As mentioned above, our algorithm and its validity proof are relatively simple. In particular, it can be written uniformly for an arbitrary field $\mathbb{K}$, where the only requirement on $\mathbb{K}$ is that arithmetic operations are executable. No care is needed for a small finite field, whereas the algorithm in [29, 30] needs a field extension. On the other hand, our algorithm is very slow; see Theorem 3.3. This is caused by using a generic and primitive algorithm (SPPA) for optimization on CAT(0) spaces. We believe that this will be naturally improved in future developments.

The problematic point of our algorithm is bit-complexity explosion for the case of $\mathbb{K} = \mathbb{Q}$. Our algorithm updates feasible vector subspaces in MVSP, and can cause an exponential increase of the bit-size representing bases of those vector subspaces. To resolve this problem and make use of the advantage in finite fields, we propose a reduction of nc-rank computation on $\mathbb{Q}$ to that on $GF(p)$. This reduction is an application of the $p$-adic valuation on $\mathbb{Q}$. We consider a weighted version of the nc-rank, which was introduced by [25] for the rational function field $\mathbb{K}(t)$ and is definable for an arbitrary field with a discrete valuation. The corresponding optimization problem MVMP is a discrete convex optimization on a representative CAT(0) space, namely the Euclidean building for $GL_n(\mathbb{Q})$ (or $GL_n(\mathbb{Q}_p)$). This may be viewed as a $p$-adic counterpart of the above geodesically-convex optimization approach on $GL_n(\mathbb{C})/U_n(\mathbb{C})$ for nc-singularity testing on $\mathbb{Q}$. By using an obvious relation between the $p$-adic valuation of a nonzero integer and its bit-length in base $p$, we show that nc-singularity testing on $\mathbb{Q}$ reduces to a polynomial number of nc-rank computations over the residue field $GF(p)$, in which the required bit-length is polynomially bounded.

Organization.

The rest of this paper is organized as follows. In Section 2, we present necessary backgrounds on convex optimization on CAT(0) spaces, modular lattices, and submodular functions. In Section 3, we present our algorithm and show its validity. In Section 4, we present the $p$-adic reduction for nc-rank computation on $\mathbb{Q}$.

Original motivation: Block triangularization of a partitioned matrix.

The original version [21] of this paper dealt with block triangularization of a matrix with the following partition structure:

$$A = \begin{pmatrix} A_{11} & A_{12} & \cdots & A_{1\nu} \\ A_{21} & A_{22} & \cdots & A_{2\nu} \\ \vdots & \vdots & \ddots & \vdots \\ A_{\mu 1} & A_{\mu 2} & \cdots & A_{\mu\nu} \end{pmatrix},$$

where $A_{\alpha\beta}$ is an $n_\alpha \times m_\beta$ matrix over field $\mathbb{K}$ for $\alpha = 1, \ldots, \mu$ and $\beta = 1, \ldots, \nu$. Consider the following block triangularization

$$A \mapsto PEAFQ,$$

where $P$ and $Q$ are permutation matrices and $E$ and $F$ are regular transformations “within blocks,” i.e., $E$ and $F$ are block diagonal matrices with block diagonals $E_1, \ldots, E_\mu$ and $F_1, \ldots, F_\nu$, respectively. Such a block triangularization was addressed by Ito, Iwata, and Murota [31], motivated by the analysis of physical systems with (restricted) symmetry. The most effective block triangularization is determined by arranging a maximal chain of maximum-size zero-blocks exposed in $EAF$, where the size of a zero block is defined as the sum of row and column numbers. This generalizes the classical Dulmage-Mendelsohn decomposition for bipartite graphs and Murota’s combinatorial canonical form for layered mixed matrices; see [23, 37].

Finding a maximum-size zero-block is nothing but FR (or MVSP) for the linear symbolic matrix obtained by multiplying variable $x_{\alpha\beta}$ to $A_{\alpha\beta}$; see [25, Appendix] for details. The original version of our algorithm was designed for this zero-block finding. Later, we found that this is essentially nc-rank computation. This new version improves analysis (on Theorem 3.3), simplifies the arguments, particularly the proof of Theorem 3.9, and includes the new section for the $p$-adic reduction.

2 Preliminaries

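Let $[n]$ denote $\{1, 2, \ldots, n\}$. Let $\mathbb{R}$, $\mathbb{Q}$, $\mathbb{Z}$ denote the sets of real, rational, and integer numbers, respectively. For $X \subseteq [n]$, let $1_X$ denote the vector in $\mathbb{R}^n$ such that $(1_X)_i = 1$ if $i \in X$ and zero otherwise. The unit vector $1_{\{i\}}$ is simply written as $1_i$.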

2.1 Convex optimization on CAT(0)-spaces

2.1.1 CAT(0)-spaces

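Let $X$ be a metric space with distance function $d$. A path in $X$ is a continuous map $\gamma: [0,1] \to X$, where its length is defined as $\sup \sum_{i=0}^{N-1} d(\gamma(t_i), \gamma(t_{i+1}))$ over $0 = t_0 < t_1 < \cdots < t_N = 1$ and $N > 0$. If $\gamma(0) = x$ and $\gamma(1) = y$, then we say that a path $\gamma$ connects $x, y$. A geodesic is a path $\gamma$ satisfying $d(\gamma(s), \gamma(t)) = d(\gamma(0), \gamma(1)) |s - t|$ for every $s, t \in [0,1]$. A geodesic metric space is a metric space in which any pair of two points is connected by a geodesic. Additionally, if a geodesic connecting any two points is unique, then $X$ is called uniquely geodesic.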

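We next introduce a CAT(0) space. Informally, it is defined as a geodesic metric space in which any triangle is not thicker than the corresponding triangle in the Euclidean plane. We here adopt the following definition. A geodesic metric space $X$ is said to be CAT(0) if for every point $x \in X$, every geodesic $\gamma: [0,1] \to X$ and $t \in [0,1]$, it holds

$$d(x, \gamma(t))^2 \leq (1-t)\, d(x, \gamma(0))^2 + t\, d(x, \gamma(1))^2 - t(1-t)\, d(\gamma(0), \gamma(1))^2. \tag{2.1}$$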

The following property of a CAT(0) space is a basis of introducing convexity.

Proposition 2.1 ([8, Proposition 1.4]).

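A CAT(0)-space is uniquely geodesic.

Suppose that $X$ is a CAT(0) space. For points $x, y$ in $X$, let $[x, y]$ denote the image of the unique geodesic $\gamma$ connecting $x, y$. For $t \in [0,1]$, the point $p$ on $[x, y]$ with $d(x, p)/d(x, y) = t$ is formally written as $(1-t)x + ty$.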

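A function $f: X \to \mathbb{R}$ is said to be convex if for all $x, y \in X$ and $t \in [0,1]$ it satisfies

$$f((1-t)x + ty) \leq (1-t) f(x) + t f(y).$$

If it satisfies a stronger inequality

$$f((1-t)x + ty) \leq (1-t) f(x) + t f(y) - \frac{\kappa}{2} t(1-t)\, d(x, y)^2$$

for some $\kappa > 0$, then $f$ is said to be strongly convex with parameter $\kappa$. In this paper, we always assume that a convex function is continuous. A function $f$ is said to be $L$-Lipschitz with parameter $L \geq 0$ if for all $x, y \in X$ it satisfies

$$|f(x) - f(y)| \leq L\, d(x, y).$$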

Lemma 2.2.

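For any $z \in X$, the function $x \mapsto d(z, x)^2$ is strongly convex with parameter $\kappa = 2$, and is $L$-Lipschitz with $L = 2 \operatorname{diam} X$, where $\operatorname{diam} X := \sup_{x, y \in X} d(x, y)$ denotes the diameter of $X$.

The former follows directly from the definition (2.1) of CAT(0)-space. The latter follows from $d(z, x)^2 - d(z, y)^2 = (d(z, x) + d(z, y))(d(z, x) - d(z, y)) \leq 2 (\operatorname{diam} X)\, d(x, y)$.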

2.1.2 Proximal point algorithm

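Let $X$ be a complete CAT(0)-space (which is also called an Hadamard space). For a convex function $f: X \to \mathbb{R}$ and $\lambda > 0$, the resolvent of $f$ is a map $J_\lambda^f: X \to X$ defined by

$$J_\lambda^f(x) := \mathop{\rm argmin}_{y \in X} \left( f(y) + \frac{1}{2\lambda} d(x, y)^2 \right).$$

Since the function $y \mapsto f(y) + \frac{1}{2\lambda} d(x, y)^2$ is strongly convex with parameter $1/\lambda > 0$, the minimizer is uniquely determined, and $J_\lambda^f$ is well-defined; see [5, Proposition 2.2.17].

The proximal point algorithm (PPA) is to iterate updates $x_{k+1} := J_{\lambda_k}^f(x_k)$. This simple algorithm generates a sequence converging to a minimizer of $f$ under a mild assumption; see [3, 5]. The splitting proximal point algorithm (SPPA) [4, 5], which we will use, minimizes a convex function $f: X \to \mathbb{R}$ represented as the following form

$$f = \sum_{i=1}^{N} f_i,$$

where each $f_i: X \to \mathbb{R}$ is a convex function. Consider a sequence $(\lambda_k)_{k = 0, 1, \ldots}$ of positive reals satisfying

$$\sum_{k=0}^{\infty} \lambda_k = \infty, \qquad \sum_{k=0}^{\infty} \lambda_k^2 < \infty.$$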

Splitting Proximal Point Algorithm (SPPA)

- Let $x_0 \in X$ be an initial point.

- For $k = 0, 1, 2, \ldots$, repeat the following: $x_{kN+i} := J_{\lambda_k}^{f_i}(x_{kN+i-1})$ for $i = 1, 2, \ldots, N$.

Bačák [4] showed that the sequence $(x_\ell)$ generated by SPPA converges to a minimizer of $f$ if $X$ is locally compact. Ohta and Pálfia [39] proved sublinear convergence of SPPA if $f$ is strongly convex and $X$ is not necessarily locally compact.
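As a toy illustration of the cyclic resolvent updates, the sketch below runs SPPA on the real line (a CAT(0) space) for $f(x) = \sum_i |x - a_i|$, whose minimizers are the medians of the $a_i$. The resolvent of $y \mapsto |y - a|$ has a closed form: move toward $a$ by at most $\lambda$. The step sizes $\lambda_k = 1/(k+1)$ and the function names are assumptions made for this example; they are not the parameters used in the paper.

```python
def resolvent_abs(a, lam, x):
    """argmin over y of |y - a| + (y - x)^2 / (2 * lam): step from x toward a by at most lam."""
    if abs(x - a) <= lam:
        return a
    return x - lam if x > a else x + lam

def sppa_median(anchors, x0, cycles):
    """Cyclic splitting proximal point iteration for f(x) = sum_i |x - a_i| on the real line."""
    x = x0
    for k in range(cycles):
        lam = 1.0 / (k + 1)  # sum of lam diverges, sum of lam^2 converges
        for a in anchors:    # one resolvent step per summand, in cyclic order
            x = resolvent_abs(a, lam, x)
    return x

print(sppa_median([0.0, 1.0, 10.0], x0=5.0, cycles=1000))
```

With anchors 0, 1, 10 the iterates drift toward the median 1 and then stay within one step size of it, which mirrors the slow, sublinear behavior of the method.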

Theorem 2.3 ([39]).

Suppose that is strongly convex with parameter and each is -Lipschitz. Let be the unique minimizer of . Define the sequence by

Then the sequence generated by SPPA satisfies

2.2 Geometry of modular lattices

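We use basic terminologies and facts in lattice theory; see e.g., [18]. A lattice $\mathcal{L}$ is a partially ordered set in which every pair $p, q$ of elements has meet $p \wedge q$ (greatest lower bound) and join $p \vee q$ (least upper bound). Let $\preceq$ denote the partial order, where $p \prec q$ means $p \preceq q$ and $p \neq q$. A pairwise comparable subset of $\mathcal{L}$, arranged as $p_0 \prec p_1 \prec \cdots \prec p_k$, is called a chain (from $p_0$ to $p_k$), where $k$ is called the length. In this paper, we only consider lattices in which any chain has a finite length. Let $0$ and $1$ denote the minimum and maximum elements of $\mathcal{L}$, respectively. The rank $r(p)$ of element $p$ is defined as the maximum length of a chain from $0$ to $p$. The rank of lattice $\mathcal{L}$ is defined as the rank of $1$. For elements $p, q$ with $p \preceq q$, the interval $[p, q]$ is the set of elements $u$ with $p \preceq u \preceq q$. Restricting $\preceq$ to $[p, q]$, the interval $[p, q]$ is a lattice with maximum $q$ and minimum $p$. If $p \prec q$ and $[p, q] = \{p, q\}$, we say that $q$ covers $p$ and denote $p \prec\!\!: q$ or $q :\!\!\succ p$. For two lattices $\mathcal{L}, \mathcal{M}$, their direct product $\mathcal{L} \times \mathcal{M}$ becomes a lattice, where the partial order on $\mathcal{L} \times \mathcal{M}$ is defined by $(p, p') \preceq (q, q') \Leftrightarrow p \preceq q$ and $p' \preceq q'$.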

A lattice $\mathcal{L}$ is called modular if for every triple $x, a, b$ of elements with $x \preceq b$, it holds $x \vee (a \wedge b) = (x \vee a) \wedge b$. A modular lattice satisfies the Jordan-Dedekind chain condition. That is, the lengths of maximal chains of every interval are the same. Also, we often use the following property:

| (2.2) |

This can be seen from the definition of modular lattices, and holds also when replacing by .

A modular lattice is said to be complemented if every element can be represented as a join of atoms, where an atom is an element of rank $1$. It is known that for a complemented modular lattice, every interval is complemented modular, and a lattice obtained by reversing the partial order is also complemented modular. The product of two complemented modular lattices is also complemented modular.

A canonical example of a complemented modular lattice is the family of all subspaces of a vector space $U$, where the partial order is the inclusion order with $\wedge = \cap$ and $\vee = +$. Another important example is a Boolean lattice, that is, a lattice isomorphic to the poset $2^{[n]}$ of all subsets of $[n]$ with respect to the inclusion order $\subseteq$.

2.2.1 Frames—Boolean sublattices in a complemented modular lattice

Let $\mathcal{L}$ be a complemented modular lattice of rank $n$, and let $r$ denote the rank function of $\mathcal{L}$. A complemented modular lattice is equivalent to a spherical building of type A [1]. We consider a lattice-theoretic counterpart of an apartment, which is a maximal Boolean sublattice of $\mathcal{L}$.

A base is a set of $n$ atoms $a_1, a_2, \ldots, a_n$ with $a_1 \vee a_2 \vee \cdots \vee a_n = 1$. The sublattice $\langle a_1, a_2, \ldots, a_n \rangle$ generated by a base is called a frame, which is isomorphic to a Boolean lattice $2^{[n]}$ by the map $X \mapsto \bigvee_{i \in X} a_i$.

Lemma 2.4 (see e.g.,[18]).

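Let $\mathcal{L}$ be a complemented modular lattice of rank $n$.

(1) For chains $\mathcal{C}, \mathcal{D}$ in $\mathcal{L}$, there is a frame $\mathcal{F}$ containing $\mathcal{C}$ and $\mathcal{D}$.

(2) For a frame $\mathcal{F} = \langle a_1, a_2, \ldots, a_n \rangle$ and an ordering $a_1, a_2, \ldots, a_n$ of its basis, define map $\varphi_{\mathcal{F}}: \mathcal{L} \to \mathcal{F}$ by

$$\varphi_{\mathcal{F}}(p) := \bigvee \{ a_i \mid i \in [n]:\ p \wedge (a_1 \vee \cdots \vee a_i) \succ p \wedge (a_1 \vee \cdots \vee a_{i-1}) \}. \tag{2.3}$$

Then $\varphi_{\mathcal{F}}$ is a retraction to $\mathcal{F}$ such that it is rank-preserving (i.e., $r(p) = r(\varphi_{\mathcal{F}}(p))$) and order-preserving (i.e., $p \preceq q \Rightarrow \varphi_{\mathcal{F}}(p) \preceq \varphi_{\mathcal{F}}(q)$).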

This is nothing but a part of the axiom of building, where the map in (2) is essentially a canonical retraction to an apartment.

Proof.

We show (1) by the induction on . Suppose that and . Consider the maximal chains from to , where and consists of . Note that the maximality of follows from (2.2). By induction, there is a frame of the interval (that is a complemented modular lattice of rank ) such that it contains . Consider the first index such that . Then for , and . For , by and modularity, it holds that covers . Again by modularity, it must hold for . By complementality, we can choose an atom such that . Now is a frame as required.

(2). By (2.2), is a maximal chain from to . From this and the chain condition, the rank-preserving property follows. Suppose that and for . Then . By (2.2) and the chain condition from to , it must hold . This means that any index appeared in (2.3) for also appears in that for . Then, the order-preserving property follows. ∎

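Suppose that $\mathcal{L}$ is the lattice of all vector subspaces of $\mathbb{K}^n$, and that we are given two chains $\mathcal{C}$ and $\mathcal{D}$ of vector subspaces, where each subspace $X$ in the chains is given by a matrix $B$ with $X = \operatorname{Im} B$ (or $X = \ker B$). The above proof can be implemented by Gaussian elimination, which yields vectors $a_1, a_2, \ldots, a_n$ with $\mathcal{F} = \langle \mathbb{K} a_1, \mathbb{K} a_2, \ldots, \mathbb{K} a_n \rangle$ in polynomial time.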

2.2.2 The orthoscheme complex of a modular lattice

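Let $\mathcal{L}$ be a modular lattice of rank $n$. Let $K(\mathcal{L})$ denote the geometric realization of the order complex of $\mathcal{L}$. That is, $K(\mathcal{L})$ is the set of all formal convex combinations $x = \sum_{p \in \mathcal{L}} \lambda(p)\, p$ of elements in $\mathcal{L}$ such that the support $\{p \in \mathcal{L} \mid \lambda(p) \neq 0\}$ of $x$ is a chain of $\mathcal{L}$. Here “convex” means that the coefficients $\lambda(p)$ are nonnegative reals and $\sum_{p} \lambda(p) = 1$. A simplex corresponding to a chain $\mathcal{C}$ is the subset of points whose supports belong to $\mathcal{C}$.

We next introduce a metric on $K(\mathcal{L})$. For a maximal simplex $\sigma$ corresponding to a maximal chain $\mathcal{C} = \{p_0 \prec p_1 \prec \cdots \prec p_n\}$, define a map $\varphi_\sigma: \sigma \to \mathbb{R}^n$ by

$$\varphi_\sigma(x) := \sum_{i=1}^{n} \lambda_i 1_{[i]} \quad \left( x = \sum_{i=0}^{n} \lambda_i p_i \in \sigma \right), \tag{2.4}$$

where $1_{[i]}$ is the 0-1 vector whose first $i$ coordinates are one. This is a bijection from $\sigma$ to the $n$-dimensional simplex of vertices $0, 1_{[1]}, 1_{[2]}, \ldots, 1_{[n]}$. This simplex is called the $n$-dimensional orthoscheme. The metric $d_\sigma$ on each simplex $\sigma$ of $K(\mathcal{L})$ is defined by

$$d_\sigma(x, y) := \| \varphi_\sigma(x) - \varphi_\sigma(y) \|_2 \quad (x, y \in \sigma). \tag{2.5}$$

Accordingly, the length $d(\gamma)$ of a path $\gamma: [0,1] \to K(\mathcal{L})$ is defined as the supremum of $\sum_{i=0}^{N-1} d_{\sigma_i}(\gamma(t_i), \gamma(t_{i+1}))$ over all $0 = t_0 < t_1 < \cdots < t_N = 1$ and $N > 0$, in which $\gamma([t_i, t_{i+1}])$ belongs to a simplex $\sigma_i$ for each $i$. Then the metric $d(x, y)$ on $K(\mathcal{L})$ is defined as the infimum of $d(\gamma)$ over all paths $\gamma$ connecting $x, y$. The resulting metric space $K(\mathcal{L})$ is called the orthoscheme complex of $\mathcal{L}$ [7]. By Bridson’s theorem [8, Theorem 7.19], $K(\mathcal{L})$ is a complete geodesic metric space. Basic properties of the orthoscheme complex of a modular lattice are summarized as follows.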

Proposition 2.5.

(1) [10] For a modular lattice $\mathcal{L}$, the orthoscheme complex $K(\mathcal{L})$ is a complete CAT(0) space.

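(2) For modular lattices $\mathcal{L}$ and $\mathcal{M}$, the orthoscheme complex $K(\mathcal{L} \times \mathcal{M})$ is isometric to $K(\mathcal{L}) \times K(\mathcal{M})$.

(3) The orthoscheme complex $K(2^{[n]})$ of a Boolean lattice is isometric to the cube $[0,1]^n$, where the isometry is given by $\sum_i \lambda_i X_i \mapsto \sum_i \lambda_i 1_{X_i}$.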

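(4) [10] For a complemented modular lattice $\mathcal{L}$ of rank $n$ and a frame $\mathcal{F}$ of $\mathcal{L}$ with an ordering of its basis, the map $\varphi_{\mathcal{F}}: \mathcal{L} \to \mathcal{F}$ is extended to $\varphi_{\mathcal{F}}: K(\mathcal{L}) \to K(\mathcal{F})$ by

$$\varphi_{\mathcal{F}}(x) := \sum_i \lambda_i \varphi_{\mathcal{F}}(p_i) \quad \left( x = \sum_i \lambda_i p_i \in K(\mathcal{L}) \right).$$

Then $\varphi_{\mathcal{F}}$ is a nonexpansive retraction from $K(\mathcal{L})$ to $K(\mathcal{F})$. In particular,

(4-1) $K(\mathcal{F})$ is an isometric subspace of $K(\mathcal{L})$, and

(4-2) $\operatorname{diam} K(\mathcal{L}) = \sqrt{n}$.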

For a complemented modular lattice $\mathcal{L}$, the CAT(0)-property of $K(\mathcal{L})$ is equivalent to the CAT(1)-property of the corresponding spherical building, as shown in [20].
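The simplex metric of Section 2.2.2 can be made concrete with a short computation. The sketch below takes the barycentric coefficients $\lambda_0, \ldots, \lambda_n$ of a point on a fixed maximal chain $p_0 \prec \cdots \prec p_n$, maps them to $\mathbb{R}^n$ by sending $p_i$ to the 0-1 vector with $i$ leading ones, and measures Euclidean distance there. The function names are hypothetical, and the code only covers two points in the same maximal simplex, not the path metric across simplices.

```python
import math

def orthoscheme_coords(lam):
    """Image in R^n of the point sum_i lam[i] * p_i, with p_i sent to (1,...,1,0,...,0) having i ones."""
    n = len(lam) - 1
    # Coordinate j (1-based) collects the weight of all p_i with i >= j.
    return [sum(lam[i] for i in range(j, n + 1)) for j in range(1, n + 1)]

def simplex_distance(lam, mu):
    """Distance between two points of the same maximal simplex."""
    return math.dist(orthoscheme_coords(lam), orthoscheme_coords(mu))

# Rank 2: the vertices p_0, p_1, p_2 form an isosceles right triangle.
print(simplex_distance([1, 0, 0], [0, 1, 0]))  # leg p_0 to p_1
print(simplex_distance([0, 1, 0], [0, 0, 1]))  # leg p_1 to p_2
print(simplex_distance([1, 0, 0], [0, 0, 1]))  # longer edge p_0 to p_2
```

The coordinates are always nonincreasing, and in rank 2 the edge from $p_0$ to $p_2$ is the longer edge along which the triangles are glued.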

The isometry between and .

The isometry from to (Proposition 2.5 (2)) is given by

The inverse map is constructed as follows: For , choose maximum with , , set , , repeat it from until . The resulting satisfies .

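The $\mathcal{F}$-coordinate of a frame $\mathcal{F}$.

A frame $\mathcal{F} = \langle a_1, a_2, \ldots, a_n \rangle$ is isomorphic to the Boolean lattice $2^{[n]}$ by $\bigvee_{i \in X} a_i \mapsto X$. Further, the subcomplex $K(\mathcal{F})$ is viewed as an $n$-cube $[0,1]^n$, and a point $x$ in $K(\mathcal{F})$ is viewed as a vector $u \in [0,1]^n$ via the isometry $K(\mathcal{F}) \simeq K(2^{[n]}) \simeq [0,1]^n$. This $n$-dimensional vector is called the $\mathcal{F}$-coordinate of $x$. From the $\mathcal{F}$-coordinate $u$, the original expression of $x$ is recovered by sorting $u$ in decreasing order as $u_{i_1} \geq u_{i_2} \geq \cdots \geq u_{i_n}$, and letting

$$x = \sum_{k=0}^{n} (u_{i_k} - u_{i_{k+1}}) (a_{i_1} \vee a_{i_2} \vee \cdots \vee a_{i_k}), \tag{2.7}$$

where $u_{i_0} := 1$ and $u_{i_{n+1}} := 0$.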

2.2.3 Submodular functions and Lovász extensions

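Let $\mathcal{L}$ be a modular lattice. A function $f: \mathcal{L} \to \mathbb{R}$ is said to be submodular if

$$f(p) + f(q) \geq f(p \wedge q) + f(p \vee q) \quad (p, q \in \mathcal{L}).$$

For a function $f: \mathcal{L} \to \mathbb{R}$, the Lovász extension $\bar{f}: K(\mathcal{L}) \to \mathbb{R}$ is defined by

$$\bar{f}(x) := \sum_i \lambda_i f(p_i) \quad \left( x = \sum_i \lambda_i p_i \in K(\mathcal{L}) \right).$$

In the case of $\mathcal{L} = 2^{[n]}$, this definition of the Lovász extension coincides with the original one [15, 35] by $K(2^{[n]}) \simeq [0,1]^n$ (Proposition 2.5 (3)).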
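In the Boolean case the Lovász extension can be evaluated by sorting the coordinates in decreasing order and interpolating along the resulting chain of sets. The following sketch assumes a set function given as a Python callable on frozensets; the names `lovasz_extension`, `capped` and `squared` are illustrative. It also shows, on one midpoint, how convexity fails for a function that is not submodular.

```python
def lovasz_extension(f, x):
    """Evaluate the Lovász extension of a set function f at a point x of [0,1]^n."""
    n = len(x)
    order = sorted(range(n), key=lambda i: -x[i])       # indices by decreasing coordinate
    levels = [1.0] + [x[i] for i in order] + [0.0]
    value, prefix = 0.0, frozenset()
    for k in range(n + 1):
        # Weight of the k-th set in the chain: gap between consecutive sorted levels.
        value += (levels[k] - levels[k + 1]) * f(prefix)
        if k < n:
            prefix = prefix | {order[k]}
    return value

capped = lambda S: min(len(S), 1)   # submodular; its extension is max_i x_i
squared = lambda S: len(S) ** 2     # not submodular

print(lovasz_extension(capped, [0.2, 0.7, 0.5]))
mid = lovasz_extension(squared, [0.5, 0.5])
ends = 0.5 * (lovasz_extension(squared, [1, 0]) + lovasz_extension(squared, [0, 1]))
print(mid, ends)  # the midpoint value exceeds the average of the endpoint values
```

For `capped` the extension equals the largest coordinate, a convex function, whereas for `squared` the midpoint check violates convexity, in line with the characterization of submodularity by convexity.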

Proposition 2.6.

Let $\mathcal{L}$ be a modular lattice of rank $n$. For a function $f: \mathcal{L} \to \mathbb{R}$, we have the following.

(1) [24] $f$ is submodular if and only if the Lovász extension $\bar{f}$ is convex.

(2) The Lovász extension $\bar{f}$ is $L$-Lipschitz with $L = 2\sqrt{n} \max_{p \in \mathcal{L}} |f(p)|$.

(3) Suppose that $f$ is integer-valued. For $x \in K(\mathcal{L})$, if $\bar{f}(x) - \min_{p \in \mathcal{L}} f(p) < 1$, then a minimizer of $f$ exists in the support of $x$.

Proof.

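(1) [sketch]. For two points $x, y \in K(\mathcal{L})$, there is a frame $\mathcal{F}$ such that $K(\mathcal{F})$ contains $x, y$. Since $K(\mathcal{F})$ is an isometric subspace of $K(\mathcal{L})$ (Proposition 2.5 (4)), the geodesic $[x, y]$ belongs to $K(\mathcal{F})$. Hence, a function on $K(\mathcal{L})$ is convex if and only if it is convex on $K(\mathcal{F})$ for every frame $\mathcal{F}$. For any frame $\mathcal{F}$, the restriction of a submodular function $f: \mathcal{L} \to \mathbb{R}$ to $\mathcal{F}$ is a usual submodular function on the Boolean lattice $\mathcal{F} \simeq 2^{[n]}$. Hence the restriction of $\bar{f}$ to $K(\mathcal{F})$ is viewed as the usual Lovász extension by $K(\mathcal{F}) \simeq [0,1]^n$, and is convex.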

(2). We first show that the restriction of to any maximal simplex is -Lipschitz with . Suppose that corresponds to a chain . Let and be points in . Define by

By (2.4) and (2.5), we have Let . Then we have

where we let and . Thus, is -Lipschitz.

Next we show that is -Lipschitz. For any , choose the geodesic between and , and such that belongs to simplex . Then we have

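(3). Let $x = \sum_i \lambda_i p_i$, and let $f^* := \min_{p \in \mathcal{L}} f(p)$. Suppose to the contrary that all $p_i$’s satisfy $f(p_i) > f^*$. Then $f(p_i) \geq f^* + 1$, since $f$ is integer-valued. Hence $\bar{f}(x) - f^* = \sum_i \lambda_i (f(p_i) - f^*) \geq 1$. However this contradicts $\bar{f}(x) - f^* < 1$. ∎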

3 Algorithm

3.1 Nc-rank is submodular minimization

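Consider MVSP for a linear symbolic matrix $A = \sum_{i=1}^{m} A_i x_i$. Let us formulate MVSP as an unconstrained submodular function minimization over a complemented modular lattice. Let $\mathcal{L}$ and $\mathcal{M}$ denote the lattices of all vector subspaces of $\mathbb{K}^n$, where the partial order of $\mathcal{L}$ is the inclusion order and the partial order of $\mathcal{M}$ is the reverse inclusion order. Let $R_i: \mathcal{L} \times \mathcal{M} \to \mathbb{Z}$ be defined by

$$R_i(X, Y) := \operatorname{rank} A_i|_{X \times Y},$$

where $A_i|_{X \times Y}: X \times Y \to \mathbb{K}$ is the restriction of $A_i$ to $X \times Y$. Then the condition in MVSP can be written as $R_i(X, Y) = 0$ for all $i$. By using $R_i$ as a penalty term, consider the following unconstrained problem: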

Then it is easy to see:

Lemma 3.1.

Any optimal solution of MVSPR is optimal to MVSP.

Proposition 3.2.

The objective function of MVSPR is submodular on $\mathcal{L} \times \mathcal{M}$.

Proof.

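Submodularity of $X \mapsto -\dim X$ and $Y \mapsto -\dim Y$ directly follows from $\dim X + \dim X' = \dim (X \cap X') + \dim (X + X')$. Thus it suffices to verify that $(X, Y) \mapsto \operatorname{rank} A_i|_{X \times Y}$ is submodular: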

Note that an equivalent statement appeared in [32, Lemma 4.2].
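The submodularity of the rank term can be sanity-checked by brute force on tiny instances. The sketch below works over GF(2) with $n = 2$, uses the inclusion order on the first factor and the reverse inclusion order on the second (so the meet of $(X, Y)$ and $(X', Y')$ is $(X \cap X', Y + Y')$ and the join is $(X + X', Y \cap Y')$), and tests the inequality for every pair of pairs. All names are hypothetical, and the check is an illustration, not a proof.

```python
from itertools import product

def span(vectors):
    """All GF(2)-linear combinations of the given bitmask vectors."""
    S = {0}
    for v in vectors:
        S |= {s ^ v for s in S}
    return frozenset(S)

def all_subspaces(n):
    """Every subspace of GF(2)^n as a frozenset of bitmasks (tiny n only)."""
    found, frontier = {span([])}, [span([])]
    while frontier:
        S = frontier.pop()
        for v in range(1, 1 << n):
            T = span(list(S) + [v])
            if T not in found:
                found.add(T)
                frontier.append(T)
    return found

def dim(S):
    return len(S).bit_length() - 1

def bilinear(A, x, y):
    """x^T A y over GF(2); bit i of a bitmask is coordinate i."""
    n = len(A)
    return sum(A[i][j] for i in range(n) for j in range(n)
               if (x >> i) & 1 and (y >> j) & 1) % 2

def restricted_rank(A, X, Y):
    """Rank of A restricted to X x Y: dim X minus the dimension of the left kernel inside X."""
    left_kernel = [x for x in X if all(bilinear(A, x, y) == 0 for y in Y)]
    return dim(X) - dim(left_kernel)

def subspace_sum(X, Z):
    return frozenset(a ^ b for a in X for b in Z)

def rank_term_is_submodular(A):
    subs = list(all_subspaces(len(A)))
    for X, Y, X2, Y2 in product(subs, repeat=4):
        lhs = restricted_rank(A, X, Y) + restricted_rank(A, X2, Y2)
        meet = restricted_rank(A, X & X2, subspace_sum(Y, Y2))
        join = restricted_rank(A, subspace_sum(X, X2), Y & Y2)
        if lhs < meet + join:
            return False
    return True

print(rank_term_is_submodular([[1, 0], [0, 1]]))
```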

Thus, MVSPR has a convex relaxation on the CAT(0) space $K(\mathcal{L} \times \mathcal{M})$ with objective function that is the Lovász extension

| (3.1) |

3.2 Splitting proximal point algorithm for nc-rank

We apply SPPA to the following perturbed version of the convex relaxation:

We regard the objective function as , where is defined by

Theorem 3.3.

Let be the sequence obtained by SPPA applied to with . For , the support of contains a minimizer of MVSP.

Proof.

We first show that is -Lipschitz with By Lemma 2.2, Proposition 2.5 (4-2), and Proposition 2.6 (2), the Lipschitz constants of and are and , respectively. Therefore, if or , then the Lipschitz constant of is . The Lipschitz constant of other is .

The objective function is strongly convex with parameter . Let denote the minimizer of . By Theorem 2.3, we have

Thus, for , it holds .

Thus, after a polynomial number of iterations, a minimizer of MVSP exists in the support of . Our remaining task is to show that the resolvent of each summand can be computed in polynomial time.

3.2.1 Computation of the resolvent for

First we consider the resolvent of . This is an optimization problem over the orthoscheme complex of a single lattice. It suffices to consider the following problem.

where , and .

Lemma 3.4.

Suppose that belongs to a maximal simplex . Then the minimizer of P1 exists in .

Proof.

Let for the maximal chain of . Let be the unique minimizer of P1. Consider a frame containing chains and . Notice . Let and be the -coordinates of and , respectively. By (2.6), it holds , since . Hence the objective function of P1 is written as

We can assume that by relabeling. Then . Suppose that . Then must hold. If , then interchanging the -coordinate and -coordinate of gives rise to another point in having a smaller objective value. This is a contradiction to the optimality of . Suppose that . If , then replace both and by to decrease the objective value, which is a contradiction. Thus . By (2.7), the original coordinate is written as (with and ). This means that belongs to . ∎

As in the proof, to solve P1, consider (implicitly) a frame containing the chain for , and the following Euclidean convex optimization problem:

where and are represented in the -coordinate. Then the optimal solution of P1′ is obtained coordinate-wise. Specifically, is , , or for each . According to (2.7), the expression in is recovered.

Theorem 3.5.

The resolvent of is computed in polynomial time.

3.2.2 Computation of the resolvent for

Next we consider the computation of the resolvent of . It suffices to consider the following problem for :

where , , and . As in the case of P1, we reduce P2 to a convex optimization over by choosing a special frame of .

For , let denote the subspace in defined by

Namely is the orthogonal subspace of with respect to the bilinear form . For , let be defined analogously. Let and denote the left and right kernels of , respectively:

Let . An orthogonal frame is a frame of satisfying the following conditions:

-

•

is a frame of .

-

•

is a frame of .

-

•

.

-

•

( ).

-

•

for .

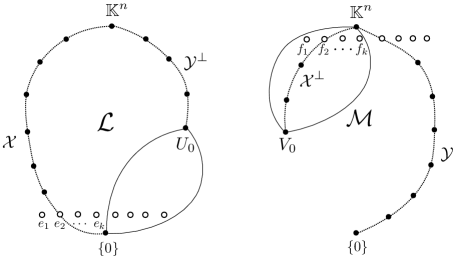

Figure 2 is an intuitive illustration of an orthogonal frame.

Proposition 3.6.

Let be an orthogonal frame. The restriction of the Lovász extension to can be written as

| (3.2) |

where is the -coordinate of and is the -coordinate of .

Proposition 3.7.

Let and be maximal chains of and , respectively. Then there exists an orthogonal frame satisfying

| (3.3) |

Such a frame can be found in polynomial time.

Proposition 3.8.

Let and be maximal chains corresponding to maximal simplices containing and , respectively. For an orthogonal frame satisfying , the minimizer of P2 exists in .

The above three propositions are proved in Section 3.2.3. Assuming these, we proceed to the computation of the resolvent. For an orthogonal frame satisfying (3.3), the problem P2 is equivalent to

Again this problem is easily solved coordinate-wise. Obviously and for . For , is the minimizer of the -dimensional problem. Obviously this can be solved in constant time.

Theorem 3.9.

The resolvent of is computed in polynomial time.

Remark 3.10 (Bit complexity).

In the above SPPA, the required bit-length for coefficients of is bounded polynomially in . Indeed, the transformation between the original coordinate and an -coordinate corresponds to multiplying a triangular matrix consisting of entries; see (2.7). In each iteration , the optimal solution of quadratic problem P1′ or P2′ is obtained by adding (fixed) rational functions in to (current points) and multiplying a (fixed) rational matrix in . Consequently, the bit increase is polynomially bounded.

On the other hand, in the case of $\mathbb{K} = \mathbb{Q}$, we could not exclude the possibility of an exponential increase of the bit-length for the basis of a vector subspace appearing in the algorithm.

3.2.3 Proofs of Propositions 3.6, 3.7, and 3.8

We start with basic properties of , which follow from elementary linear algebra.

Lemma 3.11.

-

(1)

If , then and .

-

(2)

.

-

(3)

.

-

(4)

induces an isomorphism between and with inverse . In particular, .

An alternative expression of by using is given.

Lemma 3.12.

.

Proof.

Consider bases of and of . We can assume that is a base of . Consider the matrix representation of with respect to these bases. Its submatrix of -th columns is a zero matrix. On the other hand, the submatrix of -th columns must have the column-full rank . Thus, the rank of is . The second expression is obtained similarly. ∎

Proof of Proposition 3.6.

An orthogonal frame is naturally identified with Boolean lattice . Notice that if and if . The latter fact follows from . By Lemma 3.11 (2), we have for . By Lemma 3.12 and for (with inclusion order reversed), we have

Identify with by . Then is also written as

Observe that the Lovász extension of is obtained simply by extending the domain to . Hence, we obtain the desired expression.

Proof of Proposition 3.7.

By Lemma 2.4, we can find (in polynomial time) a frame containing two chains and . Suppose that and . We can assume that . Let for . Then holds, since, by Lemma 3.11 (2), we have .

Consider the chain in . Then since each is the join of a subset of . Taking as above, is represented as the join of a subset of . Consider a consecutive pair in . Consider and . Then, by Lemma 3.11 (3), and . Suppose that . Then (by (2.2) and Lemma 3.11 (1)). Thus, for some , it holds . Here must hold. Otherwise , which contradicts . Also, . Thus . Therefore, for each with , we can choose an atom with to add to , and obtain a required frame (containing and ).

Proof of Proposition 3.8.

Consider retractions and ; see Lemma 2.4 (2) for definition. Define a retraction by

Our goal is to show that does not increase the objective value of P2.

First we show

| (3.4) |

Indeed, letting and , we have

The second equality follows from . The third from the modularity: Let and . Then and . Thus we have . The forth follows from for . The fifth follows from Lemma 3.11 (4). Note that by each atom with is taken in the join of the definition (2.3) of .

Next we show

| (3.5) |

Indeed, for , we have In the second equality, we use (3.4) and rank-preserving property of . The inequality follows from order-preserving property .

4 A $p$-adic approach to nc-rank over $\mathbb{Q}$

In this section, we consider nc-rank computation of , where each is a matrix over . Specifically, we assume that each is an integer matrix. As remarked in Remark 3.10, the algorithm in the previous section has no polynomial guarantee for the length of bits representing bases of vector subspaces. Instead of controlling bit sizes, we consider to reduce nc-rank computation over to that over (for small ).

For simplicity, we deal with nc-singularity testing of $A$. Here $A$ is called nc-singular if $\operatorname{nc-rank} A < n$, and nc-regular if $\operatorname{nc-rank} A = n$. We utilize a relationship between the nc-rank and the ordinary rank (over an arbitrary field $\mathbb{K}$). For a positive integer $d$, the $d$-blow up $A^{\{d\}}$ of $A$ is the linear symbolic matrix defined by

$$A^{\{d\}} := \sum_{i=1}^{m} A_i \otimes X_i,$$

where $\otimes$ denotes the Kronecker product and $X_i$ is a $d \times d$ matrix with variable entries $x_{i,jk}$ $(j, k = 1, 2, \ldots, d)$.

Lemma 4.1 ([28, 33]).

A matrix $A$ of form (1.1) is nc-regular if and only if there is a positive integer $d$ such that $A^{\{d\}}$ is regular.

There is an upper bound on such a $d$. Derksen and Makam [13] proved a polynomial (linear) bound by utilizing the regularity lemma in [29]. Such bounds play an essential role in the validity of the algorithms of [17, 29, 30]. Interestingly, our reduction presented below does not use any bound on $d$.

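Fix an arbitrary prime number $p$. Let $v_p : \mathbb{Q} \to \mathbb{Z} \cup \{\infty\}$ denote the $p$-adic valuation:

$$v_p(u) := k \quad \text{if } u = p^{k} a / b,$$

where $a, b$ are nonzero integers prime to $p$, and we let $v_p(0) := \infty$. Every rational $u$ is uniquely represented as the $p$-adic expansion

$$u = \sum_{i=k}^{\infty} a_i p^{i}, \tag{4.1}$$

where $k = v_p(u)$ and $a_i \in \{0, 1, \ldots, p-1\}$. The leading (nonzero) coefficient $a_k$ is given as the solution of $b a_k \equiv a \pmod{p}$. Then $u - a_k p^{k}$ is divisible by $p^{k+1}$. Repeating the same procedure for $u - a_k p^{k}$, we obtain the subsequent coefficients in (4.1).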
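The procedure just described can be sketched in a few lines of Python. The function names `vp` and `padic_digits` are hypothetical, and the modular inverse via `pow(b, -1, p)` assumes Python 3.8 or later.

```python
from fractions import Fraction

def vp(u, p):
    """p-adic valuation of a rational u (infinity for u = 0)."""
    u = Fraction(u)
    if u == 0:
        return float("inf")
    a, b, k = u.numerator, u.denominator, 0
    while a % p == 0:
        a //= p
        k += 1
    while b % p == 0:
        b //= p
        k -= 1
    return k

def padic_digits(u, p, count):
    """Return (k, digits): k = vp(u) and the first `count` coefficients
    of the p-adic expansion of u, starting at the power p^k."""
    u = Fraction(u)
    if u == 0:
        return float("inf"), [0] * count
    k = vp(u, p)
    w = u / Fraction(p) ** k          # numerator and denominator prime to p
    digits = []
    for _ in range(count):
        a, b = w.numerator % p, w.denominator % p
        digit = (a * pow(b, -1, p)) % p   # solves b * digit = a (mod p)
        digits.append(digit)
        w = (w - digit) / p               # numerator is divisible by p here
    return k, digits

# 12 = 1*3 + 1*9, so its 3-adic expansion starts at the power 3^1.
example = padic_digits(12, 3, 3)
```

Negative integers and proper fractions have infinite expansions; for instance `padic_digits(-1, 2, 5)` yields only ones, reflecting the identity that the sum of all powers of 2 equals -1 in the 2-adic sense.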

The $2$-adic expansion of a nonnegative integer $z$ is the same as the binary expression of $z$, where $v_2(z)$ is equal to the number of consecutive zeros starting from the least significant bit. This interpretation holds for an arbitrary prime $p$. In particular, the $p$-adic valuation of a nonzero integer $z$ is bounded by the bit-length in base $p$:

$$v_p(z) \le \log_p |z|. \tag{4.2}$$
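The digit interpretation can be checked directly. The sketch below compares the 2-adic valuation with the number of trailing zero bits and verifies, on a small range, that the valuation stays below the number of base-p digits; the function names are hypothetical.

```python
def vp_int(z, p):
    """p-adic valuation of a nonzero integer z."""
    z, k = abs(z), 0
    while z % p == 0:
        z //= p
        k += 1
    return k

def trailing_zero_bits(z):
    """Number of consecutive zero bits of z, counted from the lowest bit."""
    z = abs(z)
    return (z & -z).bit_length() - 1

def base_p_length(z, p):
    """Number of digits of |z| written in base p."""
    z, n = abs(z), 0
    while z:
        z //= p
        n += 1
    return n

# p^v divides z, hence p^v <= |z|, hence v is smaller than the digit count.
bound_holds = all(vp_int(z, p) < base_p_length(z, p)
                  for p in (2, 3, 5) for z in range(1, 2000))
```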

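The $p$-adic valuation $v_p$ on $\mathbb{Q}$ is extended to $\mathbb{Q}(x_1, x_2, \ldots, x_m)$ as follows. For a polynomial $q = \sum_{z} a_z x^{z}$, define $v_p(q)$ by

$$v_p(q) := \min_{z} v_p(a_z). \tag{4.3}$$

Accordingly, the valuation of a rational function $q/r$ is defined as $v_p(q) - v_p(r)$. This is called the Gauss extension of $v_p$.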
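As a minimal sketch of the Gauss extension, the code below represents a polynomial as a dictionary from exponent tuples to rational coefficients (an encoding assumed here for illustration) and takes the minimum coefficient valuation. It also checks on one example that the valuation of a product is the sum of the valuations, which is what makes the extension to quotients consistent.

```python
from fractions import Fraction

def vp(u, p):
    """p-adic valuation of a rational u (infinity for u = 0)."""
    u = Fraction(u)
    if u == 0:
        return float("inf")
    a, b, k = u.numerator, u.denominator, 0
    while a % p == 0:
        a //= p
        k += 1
    while b % p == 0:
        b //= p
        k -= 1
    return k

def gauss_valuation(poly, p):
    """Minimum of vp over the nonzero coefficients of poly."""
    vals = [vp(c, p) for c in poly.values() if c != 0]
    return min(vals) if vals else float("inf")

def poly_mul(q, r):
    """Product of two polynomials in the dictionary encoding."""
    out = {}
    for e1, c1 in q.items():
        for e2, c2 in r.items():
            e = tuple(a + b for a, b in zip(e1, e2))
            out[e] = out.get(e, 0) + c1 * c2
    return out

def rational_valuation(q, r, p):
    """Valuation of the rational function q / r."""
    return gauss_valuation(q, p) - gauss_valuation(r, p)

# q = 2x + 4y and r = x/2 + 3 in the variables (x, y).
q = {(1, 0): Fraction(2), (0, 1): Fraction(4)}
r = {(1, 0): Fraction(1, 2), (0, 0): Fraction(3)}
```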

Our algorithm for testing nc-singularity is based on the following problem (maximum vanishing submodule problem; MVMP):

This problem is definable for an arbitrary field with a discrete valuation, and the following arguments are applicable to such a field, while [25] introduced MVMP for the rational function field in one variable.

MVMP is also a discrete convex optimization on a CAT(0) space. Indeed, its domain can be viewed as the vertex set (the set of lattices, certain submodules of ) of the Euclidean building for , and the objective function is an L-convex function; see [25, 26]. A Euclidean building is a representative space admitting a CAT(0)-metric.

The optimal value of MVMP is denoted by , where we let if MVMP is unbounded. The motivation behind this notation is explained in Remark 4.6.

For a feasible solution of MVMP, consider the -adic expansion of for each . The leading matrix consists of values and is considered in . Then we can consider the linear symbolic matrix

over .

Lemma 4.2.

For a feasible solution of MVMP, the following hold:

(1) . In particular, .

(2) If is regular, then .

Proof.

They follow from . The inequality holds in equality precisely when the leading matrix is regular. ∎
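For intuition about the role of the leading matrix, consider the simplest commutative situation of a single integer matrix M, which is an assumption made here and not the setting of the lemma itself. Then the determinant has nonnegative p-adic valuation, and the valuation is zero exactly when M reduced modulo p is regular over GF(p). The sketch below checks this on one matrix; all function names are hypothetical.

```python
from fractions import Fraction

def det(M):
    """Exact determinant by Gaussian elimination over the rationals."""
    M = [[Fraction(v) for v in row] for row in M]
    n, result = len(M), Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if M[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            M[c], M[pivot] = M[pivot], M[c]
            result = -result
        result *= M[c][c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n):
                M[r][k] -= factor * M[c][k]
    return result

def vp_int(z, p):
    """p-adic valuation of an integer z (infinity for z = 0)."""
    if z == 0:
        return float("inf")
    z, k = abs(z), 0
    while z % p == 0:
        z //= p
        k += 1
    return k

def rank_mod_p(M, p):
    """Rank of the leading matrix (M mod p) over GF(p); needs Python 3.8+."""
    rows = [[v % p for v in row] for row in M]
    rank = 0
    for c in range(len(rows[0])):
        pivot = next((r for r in range(rank, len(rows)) if rows[r][c]), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        inv = pow(rows[rank][c], -1, p)
        rows[rank] = [(v * inv) % p for v in rows[rank]]
        for r in range(len(rows)):
            if r != rank and rows[r][c]:
                f = rows[r][c]
                rows[r] = [(a - f * b) % p for a, b in zip(rows[r], rows[rank])]
        rank += 1
    return rank

def leading_matrix_is_regular(M, p):
    return rank_mod_p(M, p) == len(M)

M = [[1, 2], [3, 4]]          # determinant -2
```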

The following algorithm for MVMP is due to [25], which originated from Murota’s combinatorial relaxation algorithm [37] and can be viewed as a descent algorithm on the Euclidean building. For an integer vector , let denote the diagonal matrix with diagonals in order.

Algorithm: Val-Det

- 0: Let .

- 1: Solve FR (or MVSP) for , and obtain optimal matrices such that has an zero submatrix in its upper-left corner.

- 2: If is nc-singular, i.e., , then let and go to step 1. Otherwise stop.

The initial in step 0 is feasible with objective value , as each is an integer matrix. In step 2, are regarded as matrices in with entries in . Observe that each entry in the upper-left submatrix of is divisible by . Thus, the update in step 2 keeps the feasibility of . Further, it strictly increases the objective value: . Note that and are not divisible by , since and are invertible modulo . Therefore, nc-regularity of is a necessary condition for optimality of . In fact, it is sufficient.

Proposition 4.3 ([26]).

A feasible solution is optimal if and only if is nc-regular. In this case, it holds for some .

Proof.

From the proof and Lemma 4.1, we have:

Corollary 4.4.

is nc-regular if and only if .

Therefore, Val-Det does not terminate if is nc-singular. A stopping criterion guaranteeing nc-singularity of is obtained as follows:

Proposition 4.5.

Suppose that each consists of integer entries whose absolute values are at most . If is nc-regular, then . Thus, iterations of Val-Det certify nc-singularity of .

Proof.

Suppose that is nc-regular. By Proposition 4.3, for some . We estimate . The following argument is a sharpening of the proof of [26, Lemma 4.9]. Rewrite as

where is an block matrix with block size such that the -th block equals and the other blocks are zero. By the multilinearity of the determinant, we have

where ranges over if belongs to the -th block (i.e., and is the matrix with the -th column chosen from with . A monomial in this expression is written as for a nonnegative vector with . The coefficient is given by

where are taken so that appears times. The total number of such indices is

From Hadamard’s inequality and the fact that each column of has at most nonzero entries with absolute values at most , we have

| (4.4) |

Therefore, the bit length of in base is bounded by . By (4.2), we have . Thus, . ∎

For , the algorithm Val-Det is executed as follows. Instead of updating , update as . Then, is computed as . In step 2, are matrices such that all entries of the corner of each are divisible by . Hence, the next is again an integer matrix. The bit-length bound of each entry in increases by (starting from the initial bound ). Therefore, until detecting nc-singularity of , the required bit-length is .
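The integrality claim for the integer-matrix variant can be sketched for a single matrix as follows. The exact scaling used here (divide the first r rows by p and multiply the last n - s columns by p) is an assumption made for this illustration, as are the function name `scale_step` and the sample data; the row and column transformations from step 1 are taken to be already applied.

```python
def scale_step(M, r, s, p):
    """Scale an integer n x n matrix whose upper-left r x s corner is
    divisible by p: rows 0..r-1 are divided by p and columns s..n-1 are
    multiplied by p. The result is again an integer matrix."""
    n = len(M)
    out = []
    for j in range(n):
        row = []
        for k in range(n):
            x = M[j][k]
            if k >= s:
                x *= p                 # last n - s columns
            if j < r:
                if x % p != 0:
                    raise ValueError("corner entry not divisible by p")
                x //= p                # first r rows
            row.append(x)
        out.append(row)
    return out

# Hypothetical data: n = 3, p = 2, and a 2 x 2 corner of even entries,
# so r + s = 4 > n.
M = [[2, 4, 1],
     [6, 0, 5],
     [3, 7, 9]]
scaled = scale_step(M, r=2, s=2, p=2)
```

Under this scaling the determinant is multiplied by p to the power (n - s) - r, which is negative whenever r + s > n; each entry grows by at most a factor of p per step, so the growth in bit length per iteration is modest.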

Remark 4.6 (Valuations on the free skew field).

As shown by Cohn [11, Corollary 4.6], any valuation on a field is extended to the free skew field . Then we can consider the valuation of the Dieudonné determinant of . If the extension is discrete and coincides with the Gauss extension (4.3) on , then one can show by precisely the same argument as in [25] that is given by MVMP. Such an extension seems to always exist; in this case, . We verified the existence of an extension with the latter property (by adapting Cohn’s argument in [11, Section 4]). However, we could not prove the discreteness. Note that the arguments in this section are independent of the existence issue.

Acknowledgments

We thank Kazuo Murota, Satoru Iwata, Satoru Fujishige, Yuni Iwamasa for helpful comments, and thank Koyo Hayashi for careful reading. The work was partially supported by JSPS KAKENHI Grant Numbers 25280004, 26330023, 26280004, 17K00029, and JST PRESTO Grant Number JPMJPR192A, Japan.

References

- [1] P. Abramenko and K. S. Brown, Buildings—Theory and Applications. Springer, New York, 2008.

- [2] Z. Allen-Zhu, A. Garg, Y. Li, R. Oliveira, and A. Wigderson, Operator scaling via geodesically convex optimization, invariant theory and polynomial identity testing. preprint, 2017, the conference version in STOC 2018.

- [3] M. Bačák, The proximal point algorithm in metric spaces. Israel Journal of Mathematics 194 (2013), 689–701.

- [4] M. Bačák, Computing medians and means in Hadamard spaces. SIAM Journal on Optimization 24 (2014), 1542–1566.

- [5] M. Bačák, Convex Analysis and Optimization in Hadamard Spaces. De Gruyter, Berlin, 2014.

- [6] L. J. Billera, S. P. Holmes, and K. Vogtmann, Geometry of the space of phylogenetic trees. Advances in Applied Mathematics 27 (2001), 733–767.

- [7] T. Brady and J. McCammond, Braids, posets and orthoschemes. Algebraic and Geometric Topology 10 (2010), 2277–2314.

- [8] M. R. Bridson and A. Haefliger, Metric Spaces of Non-positive Curvature. Springer-Verlag, Berlin, 1999.

- [9] P. Bürgisser, C. Franks, A. Garg, R. Oliveira, M. Walter, and A. Wigderson, Towards a theory of non-commutative optimization: geodesic first and second order methods for moment maps and polytopes. preprint, 2019, the conference version in FOCS 2019.

- [10] J. Chalopin, V. Chepoi, H. Hirai, and D. Osajda, Weakly modular graphs and nonpositive curvature. Memoirs of the AMS, to appear.

- [11] P. M. Cohn, The construction of valuations of skew fields. Journal of the Indian Mathematical Society 54 (1989), 1–45.

- [12] P. M. Cohn, Skew fields. Cambridge University Press, Cambridge, 1995.

- [13] H. Derksen and V. Makam, Polynomial degree bounds for matrix semi-invariants. Advances in Mathematics 310 (2017), 44–63.

- [14] M. Fortin and C. Reutenauer, Commutative/non-commutative rank of linear matrices and subspaces of matrices of low rank. Séminaire Lotharingien de Combinatoire 52 (2004), B52f.

- [15] S. Fujishige, Submodular Functions and Optimization, 2nd Edition. Elsevier, Amsterdam, 2005.

- [16] S. Fujishige, T. Király, K. Makino, K. Takazawa, and S. Tanigawa, Minimizing Submodular Functions on Diamonds via Generalized Fractional Matroid Matchings. EGRES Technical Report (TR-2014-14), (2014).

- [17] A. Garg, L. Gurvits, R. Oliveira, and A. Wigderson, Operator scaling: theory and applications. Foundations of Computational Mathematics (2019).

- [18] G. Grätzer, Lattice Theory: Foundation. Birkhäuser, Basel, 2011.

- [19] L. Gurvits, Classical complexity and quantum entanglement. Journal of Computer and System Sciences 69 (2004), 448–484.

- [20] T. Haettel, D. Kielak, and P. Schwer, The 6-strand braid group is CAT(0). Geometriae Dedicata 182 (2016), 263–286.

- [21] M. Hamada and H. Hirai, Maximum vanishing subspace problem, CAT(0)-space relaxation, and block-triangularization of partitioned matrix. preprint, 2017.

- [22] K. Hayashi, A polynomial time algorithm to compute geodesics in CAT(0) cubical complexes. Discrete & Computational Geometry, to appear.

- [23] H. Hirai, Computing DM-decomposition of a partitioned matrix with rank-1 blocks. Linear Algebra and Its Applications 547 (2018), 105–123.

- [24] H. Hirai, L-convexity on graph structures. Journal of the Operations Research Society of Japan 61 (2018), 71–109.

- [25] H. Hirai, Computing the degree of determinants via discrete convex optimization on Euclidean buildings. SIAM Journal on Applied Geometry and Algebra 3 (2019), 523–557.

- [26] H. Hirai and M. Ikeda, A cost-scaling algorithm for computing the degree of determinants. preprint, 2020.

- [27] H. Hirai and Y. Iwamasa, A combinatorial algorithm for computing the rank of a generic partitioned matrix with submatrices. preprint, 2020, the conference version in IPCO 2020.

- [28] P. Hrubeš and A. Wigderson, Non-commutative arithmetic circuits with division. Theory of Computing 11 (2015), 357–393.

- [29] G. Ivanyos, Y. Qiao, and K. V. Subrahmanyam, Non-commutative Edmonds’ problem and matrix semi-invariants. Computational Complexity 26 (2017), 717–763.

- [30] G. Ivanyos, Y. Qiao, and K. V. Subrahmanyam, Constructive noncommutative rank computation in deterministic polynomial time over fields of arbitrary characteristics. Computational Complexity 27 (2018), 561–593.

- [31] H. Ito, S. Iwata, and K. Murota, Block-triangularizations of partitioned matrices under similarity/equivalence transformations. SIAM Journal on Matrix Analysis and Applications 15 (1994), 1226–1255.

- [32] S. Iwata and K. Murota, A minimax theorem and a Dulmage-Mendelsohn type decomposition for a class of generic partitioned matrices. SIAM Journal on Matrix Analysis and Applications 16 (1995), 719–734.

- [33] D. S. Kaliuzhnyi-Verbovetskyi and V. Vinnikov, Noncommutative rational functions, their difference-differential calculus and realizations. Multidimensional Systems and Signal Processing 23 (2012), 49–77.

- [34] F. Kuivinen, On the complexity of submodular function minimisation on diamonds. Discrete Optimization 8 (2011), 459–477.

- [35] L. Lovász, Submodular functions and convexity. In A. Bachem, M. Grötschel, and B. Korte (eds.): Mathematical Programming—The State of the Art (Springer-Verlag, Berlin, 1983), 235–257.

- [36] L. Lovász, Singular spaces of matrices and their application in combinatorics. Boletim da Sociedade Brasileira de Matemática 20 (1989), 87–99.

- [37] K. Murota, Matrices and Matroids for Systems Analysis. Springer-Verlag, Berlin, 2000.

- [38] K. Murota, Discrete Convex Analysis. SIAM, Philadelphia, 2004.

- [39] S. Ohta and M. Pálfia, Discrete-time gradient flows and law of large numbers in Alexandrov spaces. Calculus of Variations and Partial Differential Equations 54 (2015), 1591–1610.

- [40] T. Oki, Computing the maximum degree of minors in skew polynomial matrices. preprint, 2019, the conference version in ICALP 2020.

- [41] M. Owen, Computing geodesic distances in tree space. SIAM Journal on Discrete Mathematics 25 (2011), 1506–1529.