Concave Utility Reinforcement Learning with Zero-Constraint Violations

Abstract

We consider the problem of tabular infinite horizon concave utility reinforcement learning (CURL) with convex constraints. For this, we propose a model-based learning algorithm that also achieves zero constraint violations. Assuming that the concave objective and the convex constraints have a solution interior to the set of feasible occupation measures, we solve a tighter optimization problem to ensure that the constraints are never violated despite the imprecise model knowledge and model stochasticity. We use Bellman error-based analysis for tabular infinite-horizon setups which allows analyzing stochastic policies. Combining the Bellman error-based analysis and tighter optimization equation, for interactions with the environment, we obtain a high-probability regret guarantee for objective which grows as , excluding other factors. The proposed method can be applied for optimistic algorithms to obtain high-probability regret bounds and also be used for posterior sampling algorithms to obtain a loose Bayesian regret bounds but with significant improvement in computational complexity.

1 Introduction

In many applications where a learning agent uses reinforcement learning to find optimal policies, the agent optimizes a concave function of the expected rewards or the agent must satisfy certain constraints while maximizing an objective (Altman & Schwartz, 1991; Roijers et al., 2013). For example, in network scheduling, a controller can maximize fairness of the users using a concave function of the average reward of each of the users (Chen et al., 2021). Consider a scheduler which allocates a resource to users. Each user obtains some reward based on their current state. The goal of the scheduler is to maximize fairness among the users. However, there are certain preferred users for which some service level agreements (SLA) must be made. For this setup, the scheduler aims to find a policy which maximizes the fairness while ensuring the SLA constraints of the preferred users are met. Note that, here, the objective is a non-linear concave utility in the presence of constraints on service level agreement. Setups with constraints also exist in autonomous vehicles where the goal is to reach the destination quickly while ensuring the safety of the surroundings (Le et al., 2019; Tessler et al., 2018). Further, an agent may aim to efficiently explore the environment by maximizing the entropy, which is a concave function of the distribution induced over the state and action space Hazan et al. (2019).

Owing to the variety of the use cases, recently, there has been significant effort to make RL algorithms for setups with constraints, or concave utilties, or both. For episodic setup, works range from model based algorithms (Brantley et al., 2020; Yu et al., 2021) to primal-dual based model-free algorithms (Ding et al., 2021). Recently, there has been a thrust towards developing algorithms which can also achieve zero-constraint violations in the learning phase as well Wei et al. (2022a); Liu et al. (2021); Bai et al. (2022b). However, for the episodic setup, the majority of the current works consider the weaker regret definition specified by Efroni et al. (2020) and only achieve zero expected constraint violations. Further, these algorithms require the knowledge of a safe policy following which the agent does not violate constraints, or the knowledge of the Slater’s gap which determines how far a safe policy is from the constraint boundary.

The definition which considers the average over time makes sense for an infinite horizon setup as the long-term average is naturally defined (Puterman, 2014). For a tabular infinite-horizon setup, Singh et al. (2020) proposed an optimistic epoch-based algorithm. Much recently, Chen et al. (2022) proposed an Optimistic Online Mirror Descent based algorithm. In this work, we consider the problem of maximizing concave utility of the expected rewards while also ensuring that a set of convex constraints of the expected rewards are also satisfied. Moreover, we aim to develop algorithms that can also ensure that the constraints are not violated during the training phase as well. We work with tabular MDP with infinite horizon. For such setup, our algorithm updates policies as it learns the system model. Further, our approach also bounds the accumulated observed constraint violations as compared to the expected constraint violations.

For infinite horizon setups for non-constrained setup, the regret analysis has been widely studied Fruit et al. (2018); Jaksch et al. (2010). However, we note that the dealing with constraints and non-linear setup requires additional attention because of the stochastic policies. Further, unlike episodic setup, the distribution at the epoch is not constant and hence the policy switching cost has to be accounted explicitly. Prior works in infinite horizon also faced this issue and provide some tools to overcome this limitation. Singh et al. (2020) builds confidence intervals for transition probability for every next state given the current state-action pair and obtains a regret bound of . Chen et al. (2022) obtains a regret bound of with constraint violations for ergodic MDPs with mixing time following an analysis which works with confidence intervals on both transition probability vectors and value functions.

To overcome the limitations mentioned in previous analysis and to obtain a tighter result, we propose an optimism based UC-CURL algorithm which proceeds in epochs . At each epoch, we solve for an policy which considers constraints tighter by than the true bounds for the optimistic MDP in the confidence intervals for the transition probabilities. Further, as the knowledge of the model improves with increased interactions with the environment, we reduce this tightness. This -sequence is critical to our algorithm as, if the sequence decays too fast, the constraints violations cannot be bounded by zero. If this sequence decays too slow, the objective regret may not decay fast enough. Further, using the -sequence, we do not require the knowledge of the total time for which the algorithm runs.

We bound our regret by bounding the gap between the optimal policy in the feasible region and the optimal policy for the optimization problem with tight constraints. We bound this gap with a multiplicative factor of , where is Slater’s parameter. Based on our analysis using the Slater’s parameter , we consider a case where a lower bound on the time horizon is known. This knowledge of allows us to relax our assumption on .

Further, for the regret analysis of the proposed UC-CURL algorithm, we use Bellman error for infinite horizon setup to bound the difference between the performance of optimistic policy on the optimistic MDP and the true MDP. Compared to analysis of Jaksch et al. (2010), this allows us to work with stochastic policies. We bound our regret as and constraint violations as , where and are the number of states and actions respectively, is the Lipschitz constant of the objective and constraint functions, is the number of costs the agent is trying to optimize, and is the mixing time of the MDP. The Bellman error based analysis along with Slater’s slackness assumption also allows to develop posterior sampling based methods for constrained RL (see Appendix G) by showing feasibility of the optimization problem for the sampled MDPs.

To summarize our contributions, we improve prior results on infinite horizon concave utility reinforcement learning setup on multiple fronts. First, we consider convex function for objectives and constraints. Second, even with a non-linear function setup, we reduce the regret order to and bound the constraint violations with . Third, our algorithm does not require the knowledge of the time horizon , safe policy, or Slater’s gap . Finally, we provide analysis for posterior sampling algorithm which improves both empirical performance and computational complexity.

2 Related Works

Constrained RL: Altman (1999) builds the formulation for constrained MDPs to study constrained reinforcement learning and provides algorithms for obtaining policies with known transition models. Zheng & Ratliff (2020) considered an episodic CMDP (Constrained Markov Decision Processes) and use an optimism based algorithm to bound the constraint violation as with high probability. Kalagarla et al. (2021) also considered the episodic setup to obtain PAC-style bound for an optimism based algorithm. Ding et al. (2021) considered the setup of -episode length episodic CMDPs with -dimensional linear function approximation to bound the constraint violations as by mixing the optimal policy with an exploration policy. Efroni et al. (2020) proposes a linear-programming and primal-dual policy optimization algorithm to bound the regret as . Wei et al. (2022a); Liu et al. (2021) considered the problem of ensuring zero constraint violations using a model-free algorithm for tabular MDPs with linear rewards and constraints. However, for infinite horizon setups, the analysis from finite horizon algorithms does not directly hold. This is because finite horizon setups can update the policy after every episode. But this policy switch modifies the induced Markov chains which takes time to converge to a stationary distribution.

Xu et al. (2021) considered an infinite horizon discounted setup with constraints and obtain global convergence using policy gradient algorithms. Bai et al. (2022b) proposed a conservative stochastic model-free primal-dual algorithm for infinite horizon discounted setup. Ding et al. (2020); Bai et al. (2023) also considered an infinite horizon discounted setup with parametrization. They used a natural policy gradient to update the primal variable and sub-gradient descent to update the dual variable. In addition to the above results on discounted MDPs, the long-term rewards have also been considered. Singh et al. (2020) considered the setup of infinite-horizon ergodic CMDPs with long-term average constraints with an optimism based algorithm. Gattami et al. (2021) analyzed the asymptotic performance for Lagrangian based algorithms for infinite-horizon long-term average constraints, however they only show convergence guarantees without explicit convergence rates. Chen et al. (2022) provided an optimistic online mirror descent algorithm for ergodic MDPs which obtain a regret bound of , and Wei et al. (2022b) provided a model free SARSA algorithm which obtains a regret bound of for constrained MDPs. Agarwal et al. (2022b) proposed a posterior sampling based algorithm for infinite horizon setup with a regret of and constraint violation of .

| Algorithm(s) | Setup | Regret | Constraint Violation | Non-Linear |

| conRL Brantley et al. (2020) | FH | Yes | ||

| MOMA Yu et al. (2021) | FH | Yes | ||

| TripleQ Wei et al. (2022a) | FH | No | ||

| OptPess-LP Liu et al. (2021) | FH | No | ||

| OptPess-Primal Dual Liu et al. (2021) | FH | No | ||

| UCRL-CMDP Singh et al. (2020) | IH | No | ||

| Chen et al. Chen et al. (2022) | IH | No | ||

| Wei et al. Wei et al. (2022b) | IH | No | ||

| Agarwal et al. Agarwal et al. (2022b) | IH | No | ||

| UC-CURL (This work) | IH | Yes |

Concave Utility RL: Another major research area related to this work is concave utility RL (Hazan et al., 2019). Along this direction, Cheung (2019) considered a concave function of expected per-step vector reward and developed an algorithm using Frank-Wolfe gradient of the concave function for tabular infinite horizon MDPs. Agarwal & Aggarwal (2022); Agarwal et al. (2022a) also considered the same setup using a posterior sampling based algorithm. Recently, Brantley et al. (2020) combined concave utility reinforcement learning and constrained reinforcement learning for an episodic setup. Yu et al. (2021) also considered the case of episodic setup with concave utility RL. However, both (Brantley et al., 2020) and (Yu et al., 2021) consider the weaker regret definition by Efroni et al. (2020), and Cheung (2019); Yu et al. (2021) do not target the convergence of the policy. Further, these works do not target zero-constraint violations. Recently, policy gradient based algorithms have also been studied for discounted infinite horizon setup Bai et al. (2022a).

Another parallel line of work in RL which deals with concave utilities is variational policy gradient (Zhang et al., 2021; 2020). However, they consider discounted MDPs whereas we consider undiscounted setup for our work.

Compared to prior works, we consider the constrained reinforcement learning with convex constraints and concave objective function. Using infinite-horizon setup, we consider the tightest possible regret definition. Further, we achieve zero constraint violations with objective regret tight in using an optimization problem with decaying tightness. A comparative survey of key prior works and our work is also presented in Table 1.

3 Problem Formulation

We consider an ergodic tabular infinite-horizon constrained Markov Decision Process . is finite set of states, and is a finite set of actions. denotes the transition probability distribution such that on taking action in state , the system moves to state with probability . and denotes the average reward obtained and average costs incurred in state action pair , and is the distribution over the initial state.

The agent interacts with in time-steps for a total of time-steps. We note that is possibly unknown and . At each time , the agent observes state and plays action . The agent selects an action on observing the state using a policy , where is the probability simplex on the action space. On following a policy , the long-term average reward of the agent is denoted as:

| (1) |

where denotes the expectation over the state and action trajectory generated from following on transitions . The long-term average reward can also be represented as:

where is the discounted cumulative reward on following policy , and is the steady-state occupancy measure generated from following policy on MDP with transitions Puterman (2014). Similarly, we also define the long-term average costs as follows:

| (2) |

The agent interacts with the CMDP for time-steps in an online manner and aims to maximize a function of the average per-step reward. Further, the agent attempts to ensure that a function of average per-step costs is at most . In the hindsight, the agents wants to play a policy which which satisfies:

| (3) |

Let denote the -step transition probability on following policy in MDP starting from some state where . Let denote the time taken by the Markov chain induced by the policy to hit state starting from state . Further, let be the mixing time of the MDP . We now introduce our assumptions on the MDP .

Assumption 3.1.

For MDP , we have with being the long-term steady state distribution induced by policy , and and are problem specific constants. Additionally, the mixing time of the MDP if finite or . In other words, the MDP, , is ergodic.

Assumption 3.2.

The rewards , the costs , and the functions and are known to the agent.

Assumption 3.3.

The scalarization function is jointly concave and the constraints are jointly convex. Hence for any arbitrary distributions and , the following holds.

| (4) | ||||

| (5) |

Assumption 3.4.

The function and are assumed to be a Lipschitz function, or

| (6) | ||||

| (7) |

Remark 3.5.

Remark 3.6.

For non-Lipshitz continuous functions such as entropy, we can obtain maximum entropy exploration if choose function with for a particular state action pair and choosing to cover all state-action pairs and a regularizer Hazan et al. (2019).

Assumption 3.7.

There exists a policy , and one constant such that

| (8) |

This assumption is again a standard assumption in the constrained RL literature (Efroni et al., 2020; Ding et al., 2021; 2020; Wei et al., 2022a). is referred as Slater’s constant. Ding et al. (2021) assumes that the Slater’s constant is known. Wei et al. (2022a) assumes that the number of iterations of the algorithm is at least for episode length . On the contrary, we simply assume the existence of and a lower bound on the value of which gets relaxed as the agent acquires more time to interact with the environment.

Any online algorithm starting with no prior knowledge will need to obtain estimates of transition probabilities and obtain reward and costs , for each state action pair. Initially, when algorithm does not have good estimate of the model, it accumulates a regret as well as violates constraints as it does not know the optimal policy. We define reward regret as the difference between the average cumulative reward obtained vs the expected rewards from running the optimal policy for steps, or

Additionally, we define constraint regret as the gap between the constraint function and incurred and constraint bounds, or

In the following section, we present a model-based algorithm to obtain this policy , and reward regret and the constraint regret accumulated by the algorithm.

4 Algorithm

We now present our algorithm UC-CURL and the key ideas used in designing the algorithm. Note that if the agent is aware of the true transition , it can solve the following optimization problem for the optimal feasible policy.

| (9) |

with the following set of constraints,

| (10) | |||

| (11) | |||

| (12) |

for all and . Equation (11) denotes the constraint on the transition structure for the underlying Markov Process. Equation (10) ensures that the solution is a valid probability distribution. Finally, Equation (12) are the constraints for the constrained MDP setup which the policy must satisfy. Using the solution for , we can obtain the optimal policy as:

| (13) |

However, the agent does not have the knowledge of to solve this optimization problem, and thus starts learning the transitions with an arbitrary policy. We first note that if the agent does not have complete knowledge of the transition of the true MDP , it should be conservative in its policy to allow room to violate constraints. Based on this idea, we formulate the -tight optimization problem by modifying the constraint in Equation (12) as.

| (14) |

Let be the solution of the -tight optimization problem, then the optimal conservative policy becomes:

| (15) |

We are now ready to design our algorithm UC-CURL which is based on the optimism principle (Jaksch et al., 2010). The UC-CURL algorithm is presented in Algorithm 1. The algorithm proceeds in epochs . The algorithm maintains three key variables , , and for all . stores the number of times state-action pair are visited in epoch . stores the number of times are visited till the start of epoch . stores the number of times the system transitions to state after taking action in state . Another key parameter of the algorithm is where is the start time of the epoch and is a configurable constant. Using these variables, the agent solves for the optimal -conservative policy for the optimistic MDP by replacing the constraints in Equation (11) by:

| (16) | |||

| (17) | |||

| (18) |

for all and and . Equation (18) ensures that the agent searches for optimistic policy in the confidence intervals of the transition probability estimates.

Combining the right hand side of (16) with (10) gives

Thus, joint with (16), we see that equality in (16) will be satisfied at the boundary as for some can never exceed the boundary to compensate for another and hence, for all , will lie on the boundary. In other words, the above constraints give . Further, we note that the region for the constraints is convex. This is because the set is convex when . We note that even though the optimization problem may look non-convex due to constraints having product of two variables, we see Equations (9), (14), and (16)-(18) form a convex optimization problem. We expand more on this in Appendix B. We note that (Rosenberg & Mansour, 2019) provide another approach to obtain a convex optimization problem for optimistic MDP.

Let be the solution for -tight optimization equation for the optimistic MDP. Then, we obtain the optimal conservative policy for epoch as:

| (19) |

The agent plays the optimistic conservative policy for epoch . Note that the conservative parameter decays with time. As the agent interacts with the environment, the system model improves and the agent does not need to be as conservative as before. This allows us to bound both constraint violations and the objective regret. Further, if during the initial iterations of the algorithms a conservative solution is not feasible, we can ignore the constraints completely. We will show that the conservation behavior is required when to compensate for the violations in the initial period of the algorithm E.2.

For the UC-CURL algorithm described in Algorithm 1, we choose . However, if the agent has access to a lower bound (Assumption 3.7) on the time horizon , the algorithm can change the in each epoch as follows. Note that if , becomes as specified in Algorithm 1 and if , becomes constant for all epochs .

5 Regret Analysis

After describing UC-CURL algorithm, we now perform the regret and constraint violation analysis. We note that the standard analysis for infinite horizon tabular MDPs of UCRL2 Jaksch et al. (2010) cannot be directly applied as the policy is possibly stochastic for every epoch. Another peculiar aspect of the analysis of the infinite horizon MDPs is that the regret grows linearly with the number of epochs (or policy switches). This is because a new policy induces a new Markov chain and this chain take time to converge to the stationary distribution. The analysis still bounds the regret by as the number of epochs are bounded by .

Before diving into the details, we first define few important variables which are key to our analysis. The first variable is the standard -value function. We define as the long term expected reward on taking action in state and then following policy for the MDP with transition . Mathematically, we have

We also define Bellman error for the infinite horizon MDPs as the difference between the cumulative expected rewards obtained for deviating from the system model with transition for one step by taking action in state and then following policy . We have:

| (20) |

After defining the key variables, we can now jump into bounding the objective regret . Intuitively, the algorithm incurs regret on three accounts. First source is following the conservative policy which we require to limit the constraint violations. Second source of regret is solving for the policy which is optimal for the optimistic MDP. Third source of regret is the stochastic behavior of the system. We also note that the constraints are violated because of the imperfect MDP knowledge and the stochastic behavior. However, the conservative behavior actually allows us to violate the constraints within some limits which we will discuss in the later part of this section.

We start by stating our first lemma which bounds the regret due to solving for a conservative policy. We define -tight optimization problem as optimization problem for the true MDP with transitions with . We bound the gap between the value of function at the long-term expected reward of the policy for -tight optimization problem and the true optimization problem (Equation (9)-(12)) in the following lemma.

Lemma 5.1.

Let be the long-term average reward following the optimal feasible policy for the true MDP and let be the long-term average rewards following the optimal policy for the tight optimization problem for the true MDP , then for , we have,

| (21) |

Proof Sketch.

We construct a policy for which the steady state distribution is the weighted average of two steady state distributions. First distribution is for the optimal policy for the true optimization problem. Second distribution is for the policy which satisfies Assumption 3.7. We show that this constructed policy satisfies the -tight constraints. Further, using Lipschitz continuity, we convert the difference between function values into the difference between the long-term average rewards to obtain the required result. The detailed proof is provided in Appendix C. ∎

Lemma 5.1 and our construction of sequence allows us to limit the growth of regret because of conservative policy by .

To bound the regret from the second source, we use a Bellman error based analysis. In our next lemma, we show that the difference between the performance of a policy on two different MDPs is bounded by long-term averaged Bellman error. Formally, we have:

Lemma 5.2.

The difference of long-term average rewards for running the optimistic policy on the optimistic MDP, , and the average long-term average rewards for running the optimistic policy on the true MDP, , is the long-term average Bellman error as

| (22) |

Proof Sketch.

We start by writing in terms of the Bellman error. Subtracting from and using the fact that and , we obtain the required result. A complete proof is provided in Appendix D.3. ∎

After relating the gap between the long-term average rewards of policy on the two MDPs, we aim to bound the sum of Bellman error over an epoch. For this, we first bound the Bellman error for a particular state action pair in the following lemma. We have,

Lemma 5.3.

With probability at least , the Bellman error for state-action pair in epoch is upper bounded as

| (23) |

where is the number of visitations to till epoch and is the bias of the MDP with transition probability .

Proof Sketch.

We start by noting that the Bellman error essentially bounds the impact of the difference in value obtained because of the difference in transition probability to the immediate next state. We bound the difference in transition probability between the optimistic MDP and the true MDP using the result from Weissman et al. (2003). This approach gives the required result. A complete proof is provided in Appendix D.3. ∎

We use Lemma 5.2 and Lemma 5.3 to bound the regret because of the imperfect knowledge of the system model. We bound the expected Bellman error in epoch starting from state and action by constructing a Martingale sequence with filtration and using Azuma’s inequality Bercu et al. (2015). Using the Azuma’s inequality, we can also bound the deviations because of the stochasticity of the Markov Decision Process. The result is stated in the following lemma with proof in Appendix D.

Lemma 5.4.

With probability at least , the regret incurred from imperfect model knowledge and process stochastics is bounded by

| (24) |

The regret analysis framework also prepares us to bound the constraint violations as well. We again start by quantifying the reasons for constraint violations. The agent violates the constraint because 1. it is playing with the imperfect knowledge of the MDP and 2. the stochasticity of the MDP which results in the deviation from the average costs. We note that the conservative policy for every epoch does not violate the constraints, but instead allows the agent to manage the constraint violations because of the imperfect model knowledge and the system dynamics.

We note that the Lipschitz continuity of the constraint function allows us to convert the function of averaged costs to the sum of averaged costs. Further, we note that we can treat the cost similar to rewards Brantley et al. (2020). This property allows us to bound the cost incurred incurred in a way similar to how we bound the gap from the optimal reward by . We now want that the slackness provided by the conservative policy should allow constraint violations. This is ensured by our chosen sequence. We formally state that result in the following lemma proven in parts in Appendix D and Appendix E.

Lemma 5.5.

The cumulative sum of the sequence is upper and lower bounded as

| (25) |

After giving the details on bounds on the possible sources of regret and constraint violations, we can formally state the result in the form of following theorem.

Theorem 5.6.

For all and , the regret of UC-CURL algorithm is bounded as

| (26) |

and the constraints are bounded as , with probability at least .

5.1 Posterior Sampling Algorithm

We can also modify the analysis to obtain Bayesian regret for a posterior sampling version of the UC-CURL algorithm using Lemma 1 of Osband et al. (2013). In the posterior sampling algorithm, instead of finding the optimistic MDP, we sample the transition probability using an updated posterior. This sampling allows to reduce the complexity of the optimization problem by eliminating Eq. (17) and Eq. (18). The complete algorithm is described in Appendix G. We note that optimization problem for the UC-CURL algorithm is feasible because the true MDP lies in the confidence interval. However, for the sampled MDP obtaining the feasibility requires a stronger Slater’s condition.

5.2 Further Modifications

The proposed algorithm, and the analysis can be easily extended to convex constraints by applying union bounds. Further, our analysis uses Proposition 1 of Jaksch et al. (2010) to bound the epochs by . However, we can improve the empirical performance of the UC-CURL algorithm by modifying the epoch trigger condition (Line 11 of Algorithm 1). Triggering a new episode whenever becomes for any state-action pair results in a linearly increasing episode length with total epochs bounded by . This modification results in a better empirical performance (See Appendix 6 for simulations) at the cost of a higher theoretical regret bound and computation complexity for obtaining a new policy at every epoch.

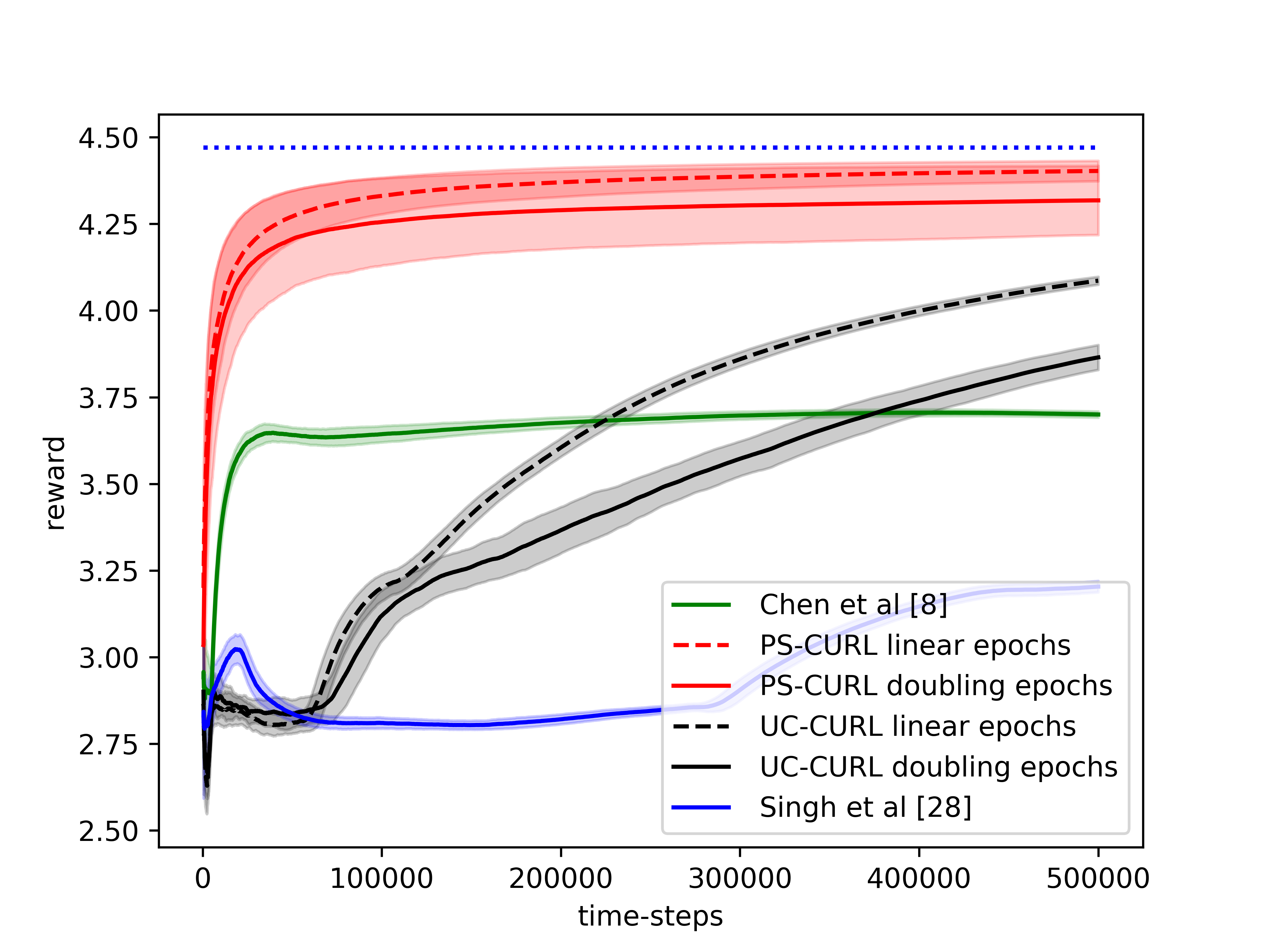

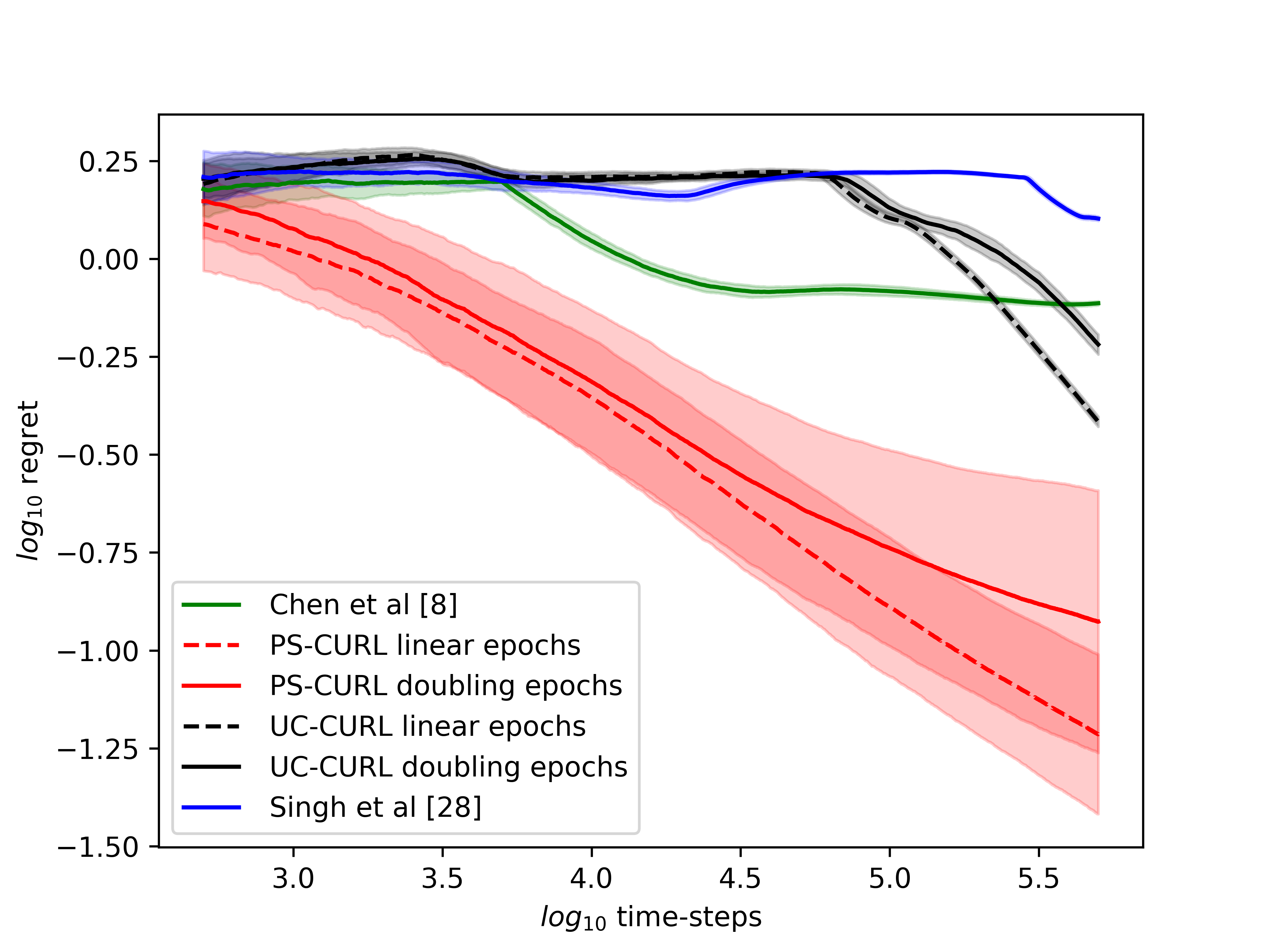

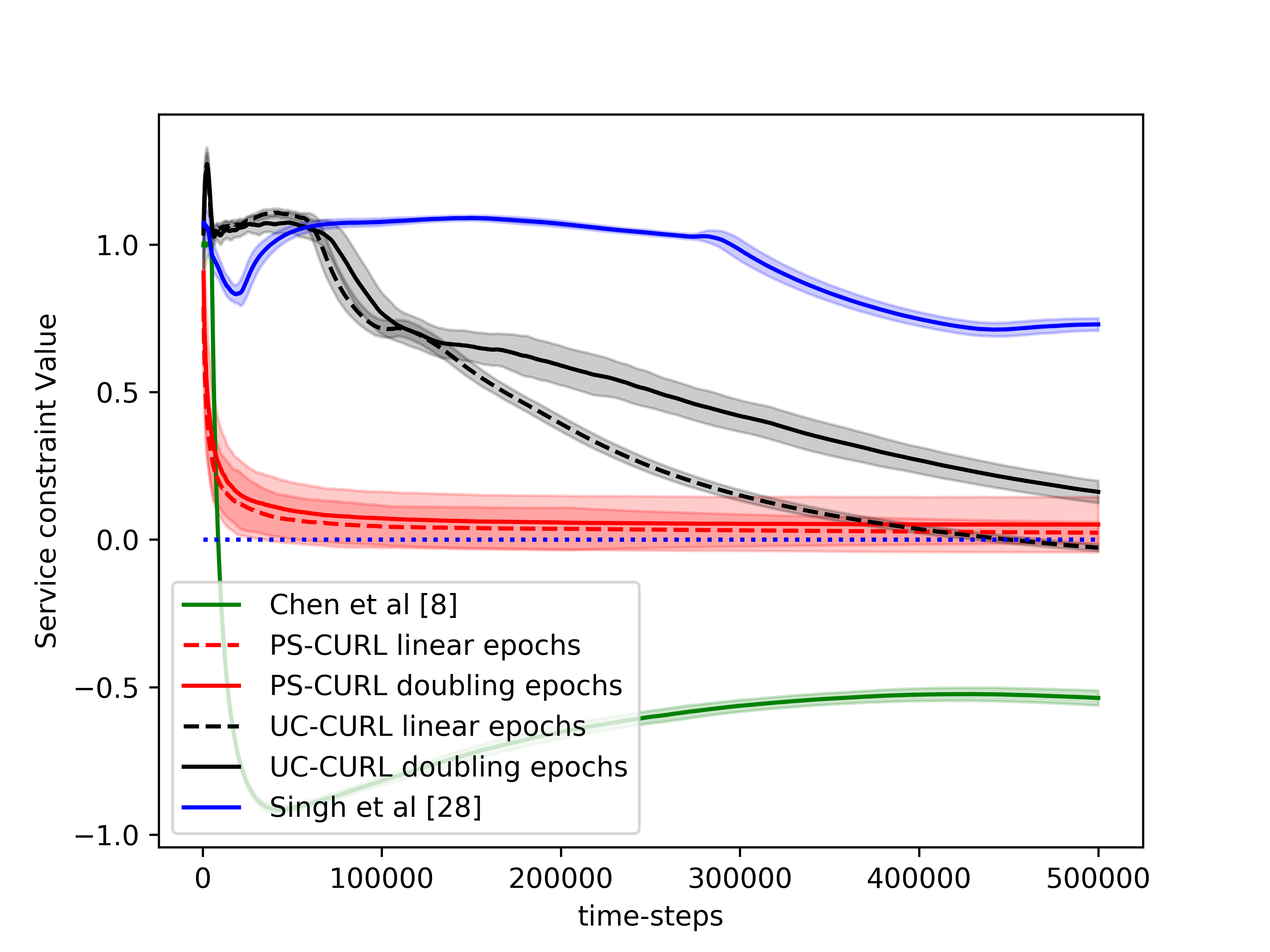

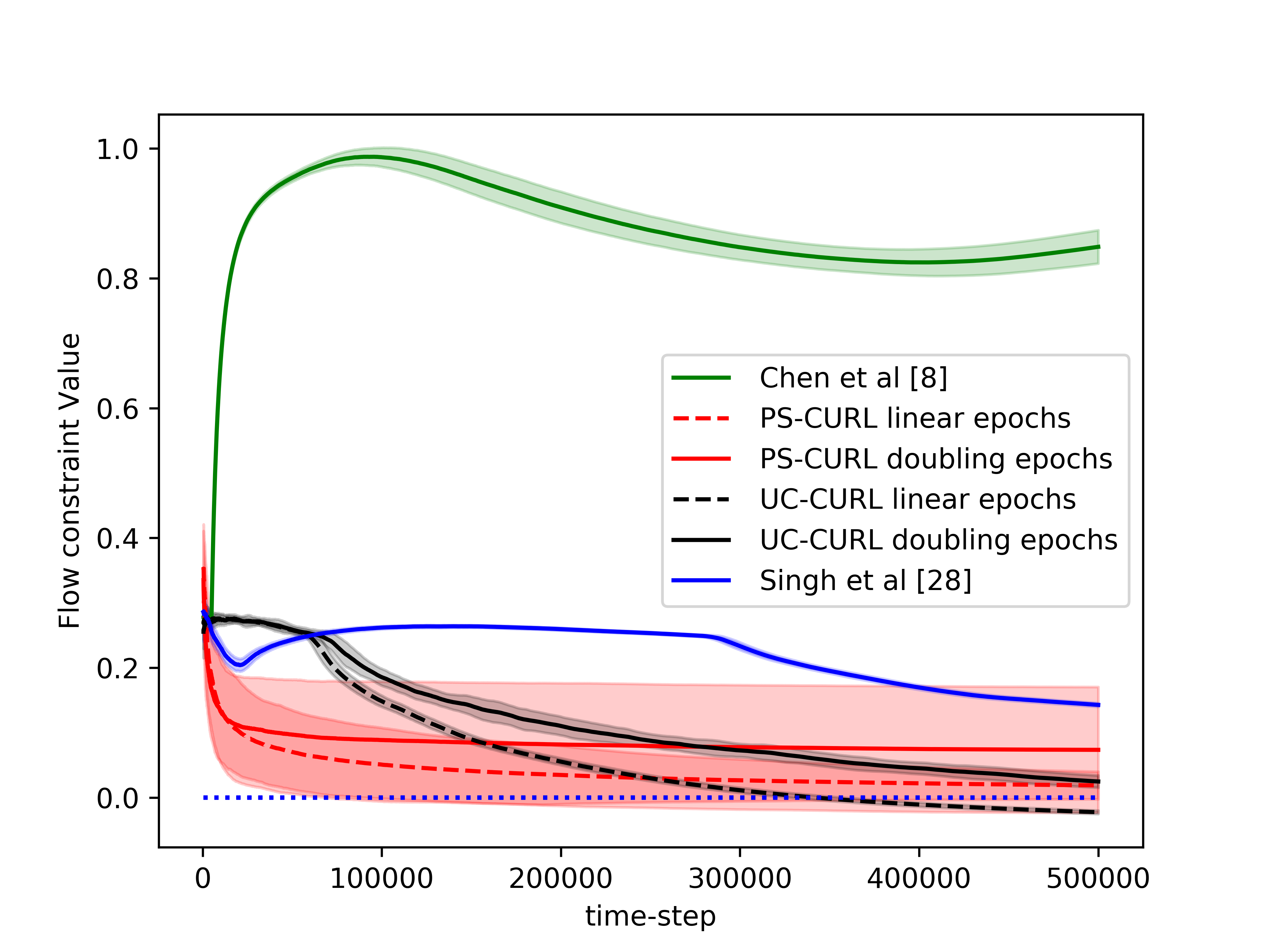

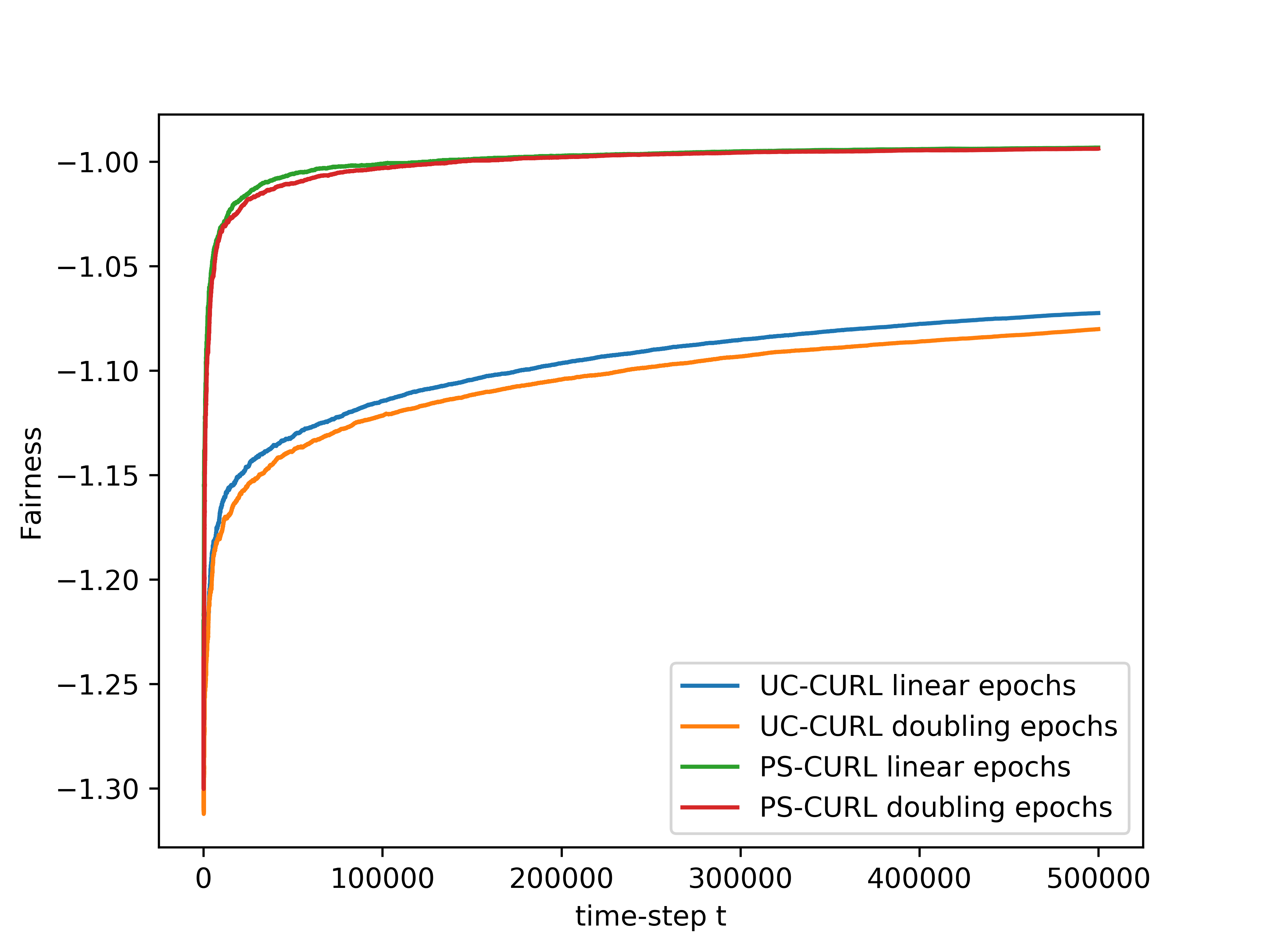

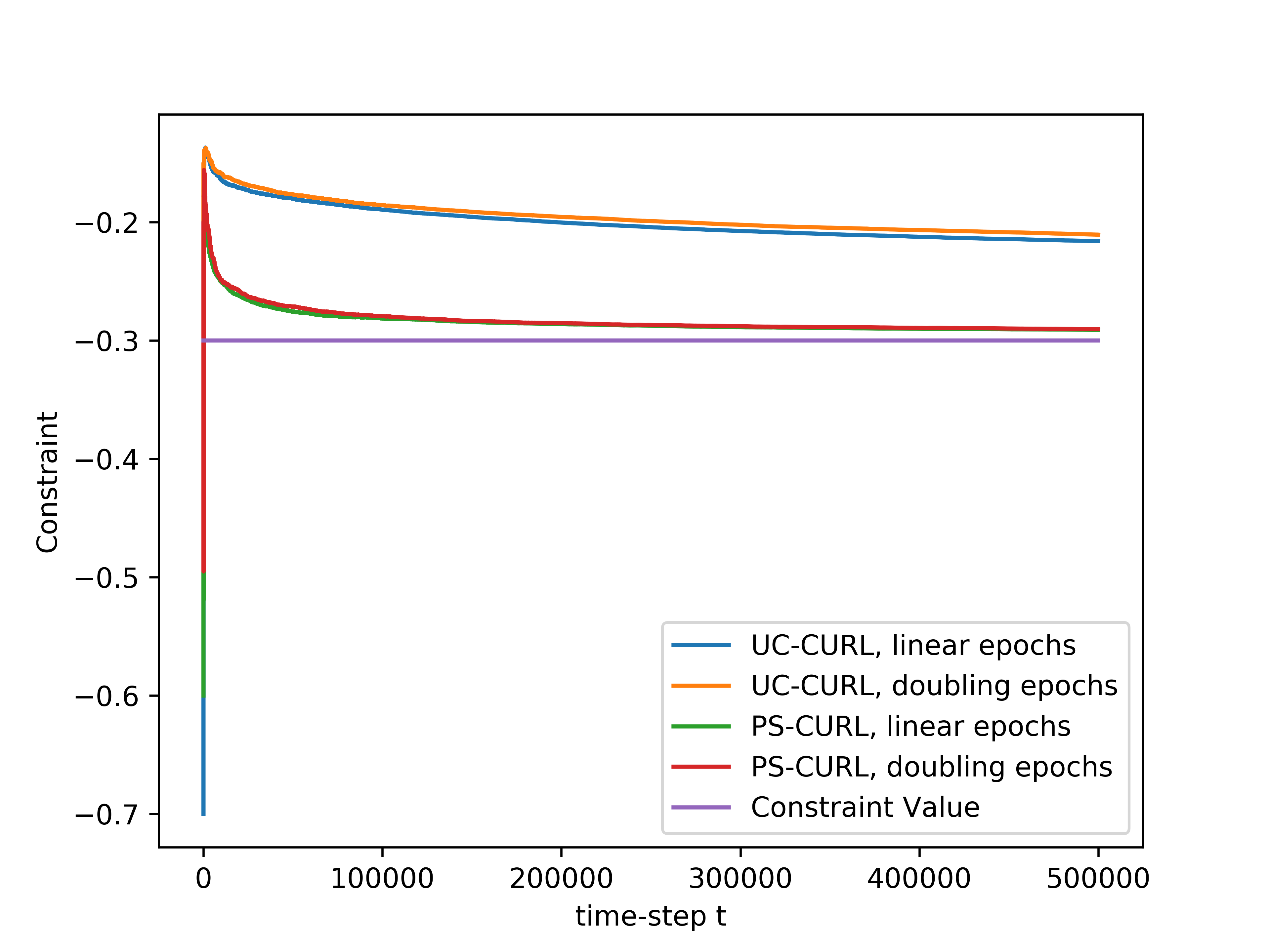

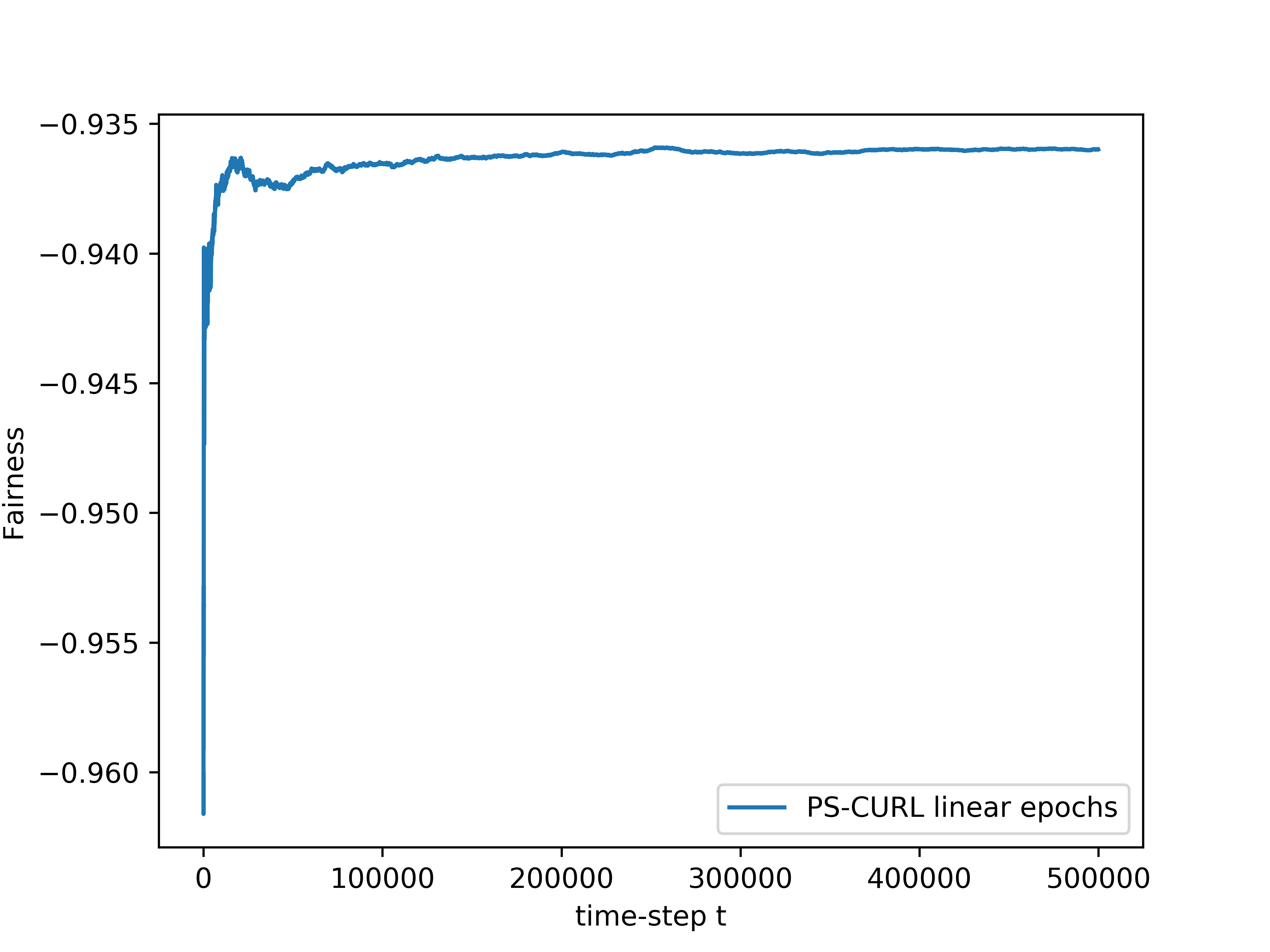

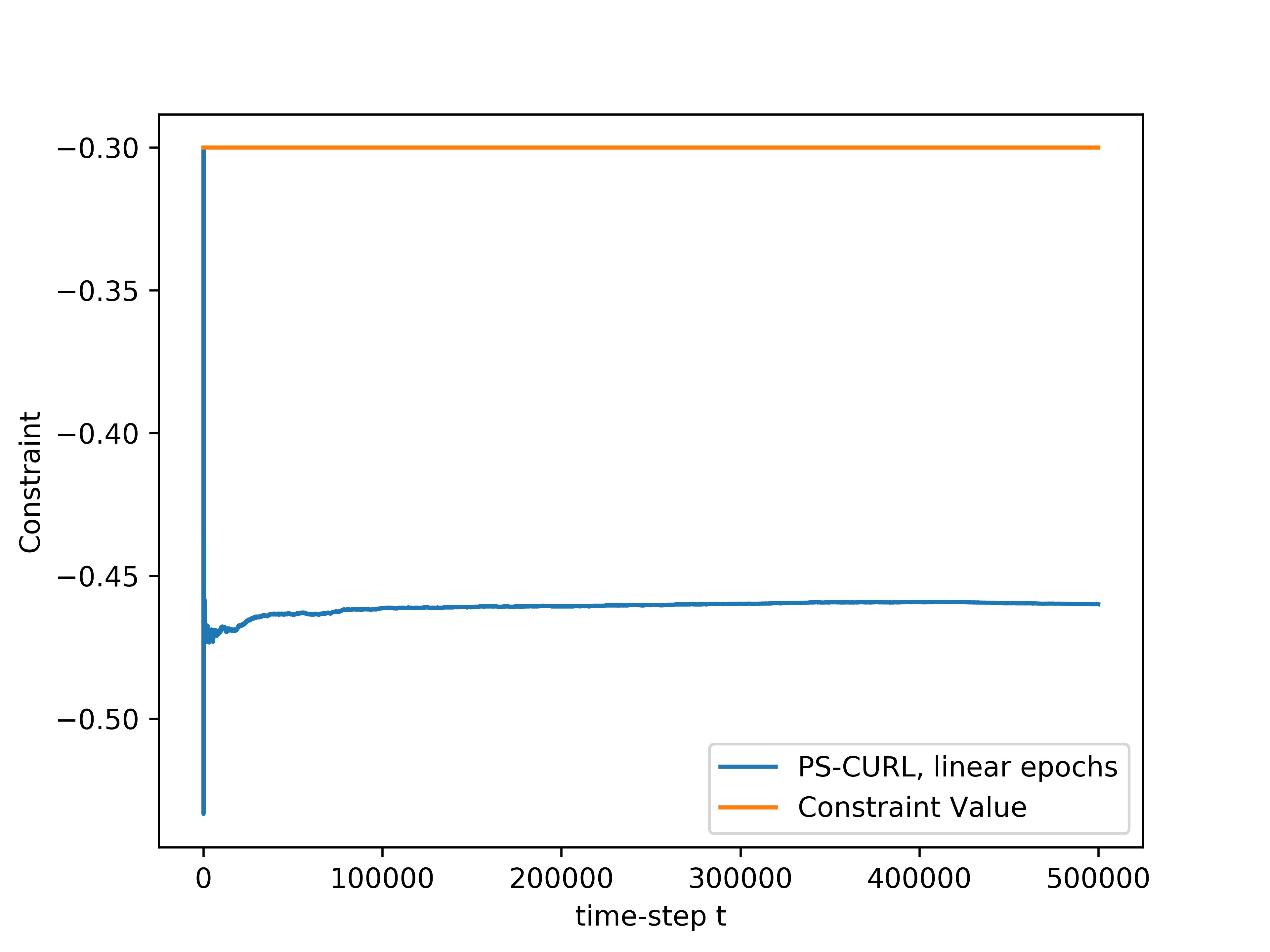

6 Simulation Results

To validate the performance of the UC-CURL algorithm and the PS-CURL algorithm, we run the simulation on the flow and service control in a single-serve queue, which was introduced in (Altman & Schwartz, 1991). Along with validating the performance of the proposed algorithms, we also compare the algorithms against the algorithms proposed in Singh et al. (2020) and in Chen et al. (2022) for model-based constrained reinforcement learning for infinite horizon MDPs. Compared to these algorithms, we note that our algorithm is also designed to handle concave objectives of expected rewards with convex constraints on costs with constraint violations.

In the queue environment, a discrete-time single-server queue with a buffer of finite size is considered. The number of customers waiting in the queue is considered as the state in this problem and thus . Two kinds of the actions, service and flow, are considered in the problem and control the number of customers together. The action space for service is a finite subset in , where . Given a specific service action , the service a customer is successfully finished with the probability . If the service is successful, the length of the queue will reduce by 1. Similarly, the space for flow is also a finite subsection in . In contrast to the service action, flow action will increase the queue by with probability if the specific flow action is given. Also, we assume that there is no customer arriving when the queue is full. The overall action space is the Cartesian product of the and . According to the service and flow probability, the transition probability can be computed and is given in the Table 2.

| Current State | |||

|---|---|---|---|

.

Define the reward function as and the constraints for service and flow as and , respectively. Define the stationary policy for service and flow as and , respectively. Then, the problem can be defined as

| (27) |

According to the discussion in (Altman & Schwartz, 1991), we define the reward function as , which is an decreasing function only dependent on the state. It is reasonable to give higher reward when the number of customers waiting in the queue is small. For the constraint function, we define and , which are dependent only on service and flow action, respectively. Higher constraint value is given if the probability for the service and flow are low and high, respectively.

In the simulation, the length of the buffer is set as . The service action space is set as and the flow action space is set as . We use the length of horizon and run independent simulations of all algorithms. The experiments were run on a core Intel-i9 CPU @ GHz with GB of RAM. The result is shown in the Figure 1(d). The average values of the cumulative reward and the constraint functions are shown in the solid lines. Further, we plot the standard deviation around the mean value in the shadow to show the random error. In order to compare this result to the optimal, we assume that the full information of the transition dynamics is known and then use Linear Programming to solve the problem. The optimal cumulative reward for the constrained optimization is calculated to be 4.48 with both flow constraint and service constraint values to be . The optimal cumulative reward for the unconstrained optimization is 4.8 with service constraint being and flow constraint being .

We now discuss the performance of all the algorithms starting with our algorithms UC-CURL and PS-CURL. In Figure 1(d), we observe that the proposed UC-CURL algorithm in Algorithm 1 does not perform well initially. We observe that this is because the confidence interval radius for any are not tight enough in the initial period. After the algorithms collects sufficient samples to construct tight confidence intervals around the transition probabilities, the algorithm starts converging towards the optimal policy. We also note that the linear epoch modification of the algorithm works better than the doubling epoch algorithm presented in Algorithm 1. This is because the linear epoch variant updates the policy quickly whereas the doubling epoch algorithm works with the same policy for too long and thus looses the advantages of collected samples. For our implementation, we choose the value of parameter in Algorithm 1 as , using which we observe that the constraint values start converging towards zero.

We now analyse the performance of the PS-CURL algorithm. For our implementation of the PS-CURL algorithm, we sample the transition probabilities using Dirichlet distribution. Note that the true transition probabilities were not sampled from a Dirichlet distribution and hence this experiment also shows the robustness against misspecified priors. We observe that the algorithm quickly brings the reward close to the optimal rewards. The performance of the PS-CURL algorithm is significantly better than the UC-CURL algorithm. We suspect this is because the UC-CURL algorithm wastes a large-number of steps to find optimistic policy with a large confidence interval. This observation aligns with the TDSE algorithm Ouyang et al. (2017), where they show that the Thompson sampling algorithm with epochs performs empirically better than the optimism based UCRL2 algorithm Jaksch et al. (2010) with epochs. Osband et al. (2013) also made a similar observation where their PSRL algorithm worked better than the UCRL2 algorithm. Again, we set the value of parameter as and with , the algorithm does not violate constraints. We also observe that the standard deviation of the rewards and constraints are higher for the PS-CURL algorithm as compared to the UC-CURL algorithm as the PS-CURL algorithm has an additional stochastic component which arises from sampling the transition probabilities.

After analysing the algorithms presented in this paper, we now analyse the performance of the algorithm by Chen et al. (2022). They provide an optimistic online mirror descent algorithm which also works with conservative parameter to tightly bound constraint violations. Their algorithm also obtains a regret bound. However, their algorithm is designed for a linear reward/constraint setup with a single constraint, and empirically the algorithm is difficult to tune as it requires additional knowledge of , , , and to fine tune parameters used in their algorithm. We set the value of the learning rate for online mirror descent as with an episode length of . Further, we scale the rewards and costs to ensure that they lie between and . We analyze the behavior of the optimistic online mirror descent algorithm in Figure 1(b). We observe that the algorithm has three phases. The first phase is the first episodes where the algorithm uses a uniform policy which is the initial flat area till first steps. In the second phase, the algorithm updates the policy for the first time and starts converging to the optimal policy with a convergence rate which matches to that of the PS-CURL algorithm. However, after few policy updates, we observe that the algorithm has oscillatory behavior which is because the dual variable updates require online constraint violations.

Finally, we analyze the the algorithm by Singh et al. (2020). They also provide an algorithm which proceeds in epochs and solves an optimization problem at every epoch. The algorithm considers a fixed epoch length . Further, the algorithm considers a confidence interval on each estimate of for all triplet. The algorithm does not perform well even though it updates the policy most frequently because of creating confidence intervals on individual transition probabilities instead of the probability vector .

From the experimental observations, we note that the proposed UC-CURL algorithm is suitable in cases where the parameter tuning is not possible and the system requires tighter bounds on deviation of the performance of the algorithm. The PS-CURL algorithm can be used in cases where the variance in algorithm’s performance can be tolerated or computational complexity is a constraint. Further, for both the algorithms, it is beneficial to use the linear increasing epoch lengths. Additionally, the algorithm by Chen et al. (2022) is suitable for cases where solving an optimization equation is not feasible, for example an embedded system, as the algorithm updates policy using exponential function which can be easily computed. However, this algorithm is only applicable in applications with linear reward/constraint and single constraint.

7 Conclusion

We considered the problem of Markov Decision Process with concave objective and convex constraints. For this problem, we proposed UC-CURL algorithm which works on the principle of optimism. To bound the constraint violations, we solve for a conservative policy using an optimistic model for an -tight optimization problem. Using an analysis based on Bellman error for infinite-horizon MDPs, we show the UC-CURL algorithm achieves constraint violations with a regret bound of . Further, to reduce the computation complexity of finding optimistic MDP, we also propose a posterior sampling algorithm which finds the optimal policy for a sampled MDP. We provide a Bayesian regret bound of for the posterior sampling algorithm by considering a stronger Slater’s condition to solve for constrained optimization for sampled MDPs as well. As part of potential future works, we consider dynamically configuring to be an interesting and important direction to reduce the requirement of problem parameters.

References

- Agarwal & Aggarwal (2022) Mridul Agarwal and Vaneet Aggarwal. Reinforcement learning for joint optimization of multiple rewards. Accepted to Journal of Machine Learning Research, 2022.

- Agarwal et al. (2022a) Mridul Agarwal, Vaneet Aggarwal, and Tian Lan. Multi-objective reinforcement learning with non-linear scalarization. In Proceedings of the 21st International Conference on Autonomous Agents and Multiagent Systems, pp. 9–17, 2022a.

- Agarwal et al. (2022b) Mridul Agarwal, Qinbo Bai, and Vaneet Aggarwal. Regret guarantees for model-based reinforcement learning with long-term average constraints. In The 38th Conference on Uncertainty in Artificial Intelligence, 2022b.

- Altman & Schwartz (1991) E. Altman and A. Schwartz. Adaptive control of constrained markov chains. IEEE Transactions on Automatic Control, 36(4):454–462, 1991. doi: 10.1109/9.75103.

- Altman (1999) Eitan Altman. Constrained Markov decision processes, volume 7. CRC Press, 1999.

- Bai et al. (2022a) Qinbo Bai, Mridul Agarwal, and Vaneet Aggarwal. Joint optimization of concave scalarized multi-objective reinforcement learning with policy gradient based algorithm. Journal of Artificial Intelligence Research, 74:1565–1597, 2022a.

- Bai et al. (2022b) Qinbo Bai, Amrit Singh Bedi, Mridul Agarwal, Alec Koppel, and Vaneet Aggarwal. Achieving zero constraint violation for constrained reinforcement learning via primal-dual approach. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 36, pp. 3682–3689, 2022b.

- Bai et al. (2023) Qinbo Bai, Amrit Singh Bedi, and Vaneet Aggarwal. Achieving zero constraint violation for constrained reinforcement learning via conservative natural policy gradient primal-dual algorithm. In Proceedings of the AAAI Conference on Artificial Intelligence, 2023.

- Bercu et al. (2015) Bernard Bercu, Bernard Delyon, and Emmanuel Rio. Concentration inequalities for sums and martingales. Springer, 2015.

- Brantley et al. (2020) Kianté Brantley, Miro Dudik, Thodoris Lykouris, Sobhan Miryoosefi, Max Simchowitz, Aleksandrs Slivkins, and Wen Sun. Constrained episodic reinforcement learning in concave-convex and knapsack settings. Advances in Neural Information Processing Systems, 33:16315–16326, 2020.

- Bubeck et al. (2015) Sébastien Bubeck et al. Convex optimization: Algorithms and complexity. Foundations and Trends® in Machine Learning, 8(3-4):231–357, 2015.

- Chen et al. (2021) Jingdi Chen, Yimeng Wang, and Tian Lan. Bringing fairness to actor-critic reinforcement learning for network utility optimization. In IEEE INFOCOM 2021-IEEE Conference on Computer Communications, pp. 1–10. IEEE, 2021.

- Chen et al. (2022) Liyu Chen, Rahul Jain, and Haipeng Luo. Learning infinite-horizon average-reward Markov decision process with constraints. In Kamalika Chaudhuri, Stefanie Jegelka, Le Song, Csaba Szepesvari, Gang Niu, and Sivan Sabato (eds.), Proceedings of the 39th International Conference on Machine Learning, volume 162 of Proceedings of Machine Learning Research, pp. 3246–3270. PMLR, 17–23 Jul 2022.

- Cheung (2019) Wang Chi Cheung. Regret minimization for reinforcement learning with vectorial feedback and complex objectives. Advances in Neural Information Processing Systems, 32:726–736, 2019.

- Cui et al. (2019) Wei Cui, Kaiming Shen, and Wei Yu. Spatial deep learning for wireless scheduling. ieee journal on selected areas in communications, 37(6):1248–1261, 2019.

- Ding et al. (2020) Dongsheng Ding, Kaiqing Zhang, Tamer Basar, and Mihailo Jovanovic. Natural policy gradient primal-dual method for constrained markov decision processes. Advances in Neural Information Processing Systems, 33, 2020.

- Ding et al. (2021) Dongsheng Ding, Xiaohan Wei, Zhuoran Yang, Zhaoran Wang, and Mihailo Jovanovic. Provably efficient safe exploration via primal-dual policy optimization. In International Conference on Artificial Intelligence and Statistics, pp. 3304–3312. PMLR, 2021.

- Efroni et al. (2020) Yonathan Efroni, Shie Mannor, and Matteo Pirotta. Exploration-exploitation in constrained mdps. arXiv preprint arXiv:2003.02189, 2020.

- Fruit et al. (2018) Ronan Fruit, Matteo Pirotta, Alessandro Lazaric, and Ronald Ortner. Efficient bias-span-constrained exploration-exploitation in reinforcement learning. In International Conference on Machine Learning, pp. 1578–1586. PMLR, 2018.

- Gattami et al. (2021) Ather Gattami, Qinbo Bai, and Vaneet Aggarwal. Reinforcement learning for constrained markov decision processes. In International Conference on Artificial Intelligence and Statistics, pp. 2656–2664. PMLR, 2021.

- Ghasemipour et al. (2020) Seyed Kamyar Seyed Ghasemipour, Richard Zemel, and Shixiang Gu. A divergence minimization perspective on imitation learning methods. In Conference on Robot Learning, pp. 1259–1277. PMLR, 2020.

- Hazan et al. (2019) Elad Hazan, Sham Kakade, Karan Singh, and Abby Van Soest. Provably efficient maximum entropy exploration. In International Conference on Machine Learning, pp. 2681–2691. PMLR, 2019.

- Jaksch et al. (2010) Thomas Jaksch, Ronald Ortner, and Peter Auer. Near-optimal regret bounds for reinforcement learning. Journal of Machine Learning Research, 11(Apr):1563–1600, 2010.

- Jin et al. (2017) Chi Jin, Rong Ge, Praneeth Netrapalli, Sham M Kakade, and Michael I Jordan. How to escape saddle points efficiently. In International Conference on Machine Learning, pp. 1724–1732. PMLR, 2017.

- Kalagarla et al. (2021) Krishna C Kalagarla, Rahul Jain, and Pierluigi Nuzzo. A sample-efficient algorithm for episodic finite-horizon mdp with constraints. 35(9):8030–8037, 2021.

- Kwan et al. (2009) Raymond Kwan, Cyril Leung, and Jie Zhang. Proportional fair multiuser scheduling in lte. IEEE Signal Processing Letters, 16(6):461–464, 2009.

- Lan et al. (2010) Tian Lan, David Kao, Mung Chiang, and Ashutosh Sabharwal. An axiomatic theory of fairness in network resource allocation. IEEE, 2010.

- Langford & Kakade (2002) J Langford and S Kakade. Approximately optimal approximate reinforcement learning. In Proceedings of ICML, 2002.

- Le et al. (2019) Hoang Le, Cameron Voloshin, and Yisong Yue. Batch policy learning under constraints. In International Conference on Machine Learning, pp. 3703–3712. PMLR, 2019.

- Liu et al. (2021) Tao Liu, Ruida Zhou, Dileep Kalathil, Panganamala Kumar, and Chao Tian. Learning policies with zero or bounded constraint violation for constrained mdps. Advances in Neural Information Processing Systems, 34:17183–17193, 2021.

- Osband et al. (2013) Ian Osband, Daniel Russo, and Benjamin Van Roy. (more) efficient reinforcement learning via posterior sampling. In Advances in Neural Information Processing Systems, pp. 3003–3011, 2013.

- Ouyang et al. (2017) Yi Ouyang, Mukul Gagrani, Ashutosh Nayyar, and Rahul Jain. Learning unknown markov decision processes: A thompson sampling approach. Advances in neural information processing systems, 30, 2017.

- Puterman (2014) Martin L Puterman. Markov decision processes: discrete stochastic dynamic programming. John Wiley & Sons, 2014.

- Roijers et al. (2013) Diederik M Roijers, Peter Vamplew, Shimon Whiteson, and Richard Dazeley. A survey of multi-objective sequential decision-making. Journal of Artificial Intelligence Research, 48:67–113, 2013.

- Rosenberg & Mansour (2019) Aviv Rosenberg and Yishay Mansour. Online convex optimization in adversarial markov decision processes. In International Conference on Machine Learning, pp. 5478–5486. PMLR, 2019.

- Singh et al. (2020) Rahul Singh, Abhishek Gupta, and Ness B Shroff. Learning in markov decision processes under constraints. arXiv preprint arXiv:2002.12435, 2020.

- Tessler et al. (2018) Chen Tessler, Daniel J Mankowitz, and Shie Mannor. Reward constrained policy optimization. In International Conference on Learning Representations, 2018.

- Wei et al. (2022a) Honghao Wei, Xin Liu, and Lei Ying. Triple-q: A model-free algorithm for constrained reinforcement learning with sublinear regret and zero constraint violation. In International Conference on Artificial Intelligence and Statistics, pp. 3274–3307. PMLR, 2022a.

- Wei et al. (2022b) Honghao Wei, Xin Liu, and Lei Ying. A provably-efficient model-free algorithm for infinite-horizon average-reward constrained markov decision processes. In Proceedings of the AAAI Conference on Artificial Intelligence, 2022b.

- Weissman et al. (2003) Tsachy Weissman, Erik Ordentlich, Gadiel Seroussi, Sergio Verdu, and Marcelo J Weinberger. Inequalities for the l1 deviation of the empirical distribution. Hewlett-Packard Labs, Tech. Rep, 2003.

- Wierman (2011) Adam Wierman. Fairness and scheduling in single server queues. Surveys in Operations Research and Management Science, 16(1):39–48, 2011.

- Xu et al. (2021) Tengyu Xu, Yingbin Liang, and Guanghui Lan. Crpo: A new approach for safe reinforcement learning with convergence guarantee. In International Conference on Machine Learning, pp. 11480–11491. PMLR, 2021.

- Yu et al. (2021) Tiancheng Yu, Yi Tian, Jingzhao Zhang, and Suvrit Sra. Provably efficient algorithms for multi-objective competitive rl. In Marina Meila and Tong Zhang (eds.), Proceedings of the 38th International Conference on Machine Learning, volume 139 of Proceedings of Machine Learning Research, pp. 12167–12176. PMLR, 18–24 Jul 2021.

- Zhang et al. (2020) Junyu Zhang, Alec Koppel, Amrit Singh Bedi, Csaba Szepesvari, and Mengdi Wang. Variational policy gradient method for reinforcement learning with general utilities. Advances in Neural Information Processing Systems, 33:4572–4583, 2020.

- Zhang et al. (2021) Junyu Zhang, Chengzhuo Ni, Zheng Yu, Csaba Szepesvari, and Mengdi Wang. On the convergence and sample efficiency of variance-reduced policy gradient method. In A. Beygelzimer, Y. Dauphin, P. Liang, and J. Wortman Vaughan (eds.), Advances in Neural Information Processing Systems, 2021. URL https://openreview.net/forum?id=Re_VXFOyyO.

- Zheng & Ratliff (2020) Liyuan Zheng and Lillian Ratliff. Constrained upper confidence reinforcement learning. In Learning for Dynamics and Control, pp. 620–629. PMLR, 2020.

Appendix A Assumptions and their justification

We first introduce our initial assumptions on the MDP . We assume the MDP is ergodic. Ergodicity is a commonly used assumption in constrained RL literature Singh et al. (2020); Chen et al. (2022). Further, ergodicity is required to obtain stationary Markovian policies which can be tranferred from training setup to test environment. Let denote the -step transition probability on following policy in MDP starting from some state . Also, let denotes the time taken by the Markov chain induced by the policy to hit state starting from state . Building on these variables, and , we make our first assumption as follows:

Assumption A.1.

The MDP is ergodic, or

| (28) |

where is the long-term steady state distribution induced by policy , and and are problem specific constants. Also, we have

| (29) |

where is the finite mixing time of the MDP .

We note that in most of the problems, rewards are engineered according to the problem. However, the system dynamics are stochastic and typically not known. Based on this, we make the following assumption on rewards.

Assumption A.2.

The rewards , the costs and the functions and are known to the agent.

Our next assumption is on the functions and . Many practically implemented fairness objectives are concave (Kwan et al., 2009), or the agent want to explore all possible state action pairs by maximizing the entropy of the long-term state-action distribution (Hazan et al., 2019), or the agent may want to minimize divergence with respect to a certain expert policy (Ghasemipour et al., 2020). Formally, we have

Assumption A.3.

The scalarization function is jointly concave and the constraints are jointly convex. Hence for any arbitrary distributions and , the following holds.

| (30) | ||||

| (31) |

We impose an additional assumption on the functions and . We assume that the functions are continuous and Lipschitz continuity in particular. Lipschitz continuity is a common assumption for optimization literature (Bubeck et al., 2015; Jin et al., 2017; Zhang et al., 2020). Additionally, in practice this assumption is validated, often by adding some regularization. We have,

Assumption A.4.

The function and are assumed to be a Lipschitz function, or

| (32) | ||||

| (33) |

We consider a standard setup of concave and the Lipschitz function as considered by (Cheung, 2019; Brantley et al., 2020; Yu et al., 2021). Note that the analysis in this paper directly works for , where the function takes as input multiple average per-step rewards. We can obtain maximum entropy exploration if choose function with for a particular state action pair and choosing to cover all state-action pairs and a regularizer .

Next, we assume the following Slater’s condition to hold.

Assumption A.5.

There exists a policy , and one constant such that

| (34) |

Further, if there is a (possibly unknown) lower bound on time-horizon, , then we only require . This assumption is again a standard assumption in the constrained RL literature (Efroni et al., 2020; Ding et al., 2021; 2020; Wei et al., 2022a). is referred as Slater’s constant. (Ding et al., 2021) assumes that the Slater’s constant is known. (Wei et al., 2022a) assumes that the number of iterations of the algorithm is at least for episode length . On the contrary, we simply assume the existence of and a lower bound on the value of which can be relaxed as the agent acquires more time to interact with the environment.

Appendix B Efficiently solving the Conservative Optimistic Optimization problem

We now provide the details on efficiently solving the optimistic optimization problem described with constraints in Equation (16)-(18). Similar to the method proposed in Rosenberg & Mansour (2019), we define a new variable which denotes the probability of being is state , taking action , and then moving to state . Now, the transition probability to next state given current state and action is given as:

| (35) |

Further, the occupancy measure of state-action pair is given as

| (36) |

Based on these two observations, at the beginning of epoch , we define the optimization problem as follows:

| (37) |

subject to following constraints

| (38) | |||

| (39) | |||

| (40) | |||

| (41) | |||

| (42) | |||

| (43) |

for all , and . Also, is an auxiliary variable introduced to reduce the complexity of norm constraints and the present the optimization problem in a disciplined convex program which can be coded easily in CVXPY. The Equations (41), (42), (43) jointly describe the confidence interval on the probability estimates.

Appendix C Proof of Lemma 5.1

Proof.

Note that denotes the stationary distribution of the optimal solution which satisfies

| (44) |

Further, from Assumption 3.7, we have a feasible policy for which

| (45) |

We now construct a stationary distribution obtain the corresponding as:

| (46) | |||

| (47) |

For this new policy and convex constraint , we observe that

| (48) | |||

| (49) |

| (50) | |||

| (51) | |||

| (52) |

where Equation (50) follows from the convexity of the constraints. Equation (51) follows from Equation (44) and Equation (45).

Note that the policy corresponding to stationary distribution constructed in Equation (46) satisfies the -tight constraints. Further, we find as the optimal solution for the -tight optimization problem. Hence, we have

| (53) | |||||

| (54) | |||||

| (55) |

| (56) | |||||

| (57) | |||||

| (58) |

where, Equation (54) follows from the Lipschitz assumption on the joint objective . Equation (58) follows from the fact that for all . ∎

Appendix D Objective Regret Bound

In this section, we begin with breaking down the regret in multiple components and then analysis the components individually.

D.1 Regret breakdown

We first break down our regret into multiple parts which will help us bound the regret.

| (59) | ||||

| (60) | ||||

| (61) | ||||

| (62) | ||||

| (63) | ||||

| (64) | ||||

| (65) | ||||

| (66) | ||||

| (67) |

where Equation (62) comes from the fact that the policy is for the optimistic CMDP and provides a higher value of the function . Equation 63 comes from the concavity of the function , and Equation 64 comes from the Lipschitz continuity of the function . The three terms in Equation (67) are now defined as:

| (68) |

denotes the regret incurred from not playing the optimal policy for the true optimization problem in Equation (9) but the optimal policy for the -tight optimization problem in epoch .

| (69) |

denotes the gap between expected rewards from playing the optimal policy for -tight optimization problem on the optimistic MDP instead of the true MDP. For this term, we further consider another modification. We have being the optimistic MDP with optimistic policy as the solutions for the optimization equation solved at the beginning of every epoch. Now consider an MDP in the confidence set, which maximizes the long term expected reward for policy or for all in the confidence interval at epoch . Hence, we have

| (70) | ||||

| (71) |

We relabel as in the remaining analysis to reduce notation clutter.

| (72) |

denotes the gap between obtained rewards from playing the optimal policy for -tight optimization problem the true MDP and the expected per-step reward of playing the optimal policy for -tight optimization problem the true MDP.

D.2 Bounding

D.3 Bounding

We relate the difference between long-term average rewards for running the optimistic policy on the optimistic MDP and the long-term average rewards for running the optimistic policy on the true MDP () with the Bellman error. Formally, we have the following lemma:

Lemma D.1.

The difference of long-term average rewards for running the optimistic policy on the optimistic MDP, , and the average long-term average rewards for running the optimistic policy on the true MDP, , is the long-term average Bellman error as

| (81) |

Proof.

Note that for all , we have:

| (82) | ||||

| (83) |

where Equation (83) follows from the definition of the Bellman error for state action pair .

Similarly, for the true MDP, we have,

| (84) | ||||

| (85) |

Subtracting Equation (85) from Equation (83), we get:

| (86) | ||||

| (87) |

Using the vector format for the value functions, we have,

| (88) |

Now, converting the value function to average per-step reward we have,

| (89) | ||||

| (90) | ||||

| (91) |

where the last equation follows from the definition of occupancy measures by Puterman (2014). ∎

Remark D.2.

We now want to bound the Bellman errors to bound the gap between the average per-step reward , and . From the definition of Bellman error and the confidence intervals on the estimated transition probabilities, we obtain the following lemma:

Lemma D.3.

With probability at least , the Bellman error for state-action pair in epoch is upper bounded as

| (92) |

Proof.

Starting with the definition of Bellman error in Equation (20), we get

| (93) | ||||

| (94) | ||||

| (95) | ||||

| (96) | ||||

| (97) | ||||

| (98) | ||||

| (99) | ||||

| (100) | ||||

| (101) | ||||

| (102) | ||||

| (103) |

where Equation (95) comes from the assumption that the rewards are known to the agent. Equation (99) follows from the fact that the difference between value function at two states is bounded. Equation (100) comes from the definition of bias term Puterman (2014). Equation (101) follows from Hölder’s inequality. In Equation (102), is the bias span of the MDP with transition probabilities for policy . Also, the norm of probability vector is bounded using Lemma F.1 for start time of epoch . ∎

Additionally, note that the norm in Equation (101) is bounded by . Thus the Bellman error is loose upper bounded by for all state-action pairs.

Note that we have converted the difference of average rewards into the average Bellman error. Also, we have bounded the Bellman error of a state-action pair. We now want to bound the average Bellman error of an epoch using the realizations of Bellman error at state-action pairs visited in an epoch. For this, we present the following lemma.

Lemma D.4.

With probability at least , the cumulative expected Bellman error is bounded as:

| (104) |

Proof.

Let be the filtration generated by the running the algorithm for time-steps. Note that conditioned on filtration the two expectations and are not equal as the former is the expected value of the long-term state distribution and the latter is the long-term state distribution condition on initial state . We now use Assumption 3.1 to obtain the following set of inequalities.

| (105) | ||||

| (106) | ||||

| (107) | ||||

| (108) | ||||

| (109) | ||||

| (110) | ||||

| (111) |

where Equation 107 comes from Assumption 3.1 for running policy starting from state for steps and from Lemma 5.3. Equation (111) follows from bounding the total-variation distance for all states and from the fact that .

Using this, and the fact that forms a Martingale difference sequence conditioned on filtration with , we can use Azuma-Hoeffding inequality to bound the summation as

| (112) | |||||

| (113) | |||

| (114) |

where Eq. (114) comes from the Azuma-Hoefdding’s inequality with probability at least . ∎

D.4 Bounding the term

Note that we have for all in the confidence set.

Lemma D.5.

For a MDP with rewards and transition probabilities , using policy , the difference of bias of any two states , and is bounded as .

Proof.

Note that for all in the confidence set. Now, consider the following Bellman equation

where and .

Consider two states . Also, let be a random variable. With , we also define another operator,

Note that for all since maximizes the reward over all the transition probabilities in the confidence set of Eq. (21) including the true transition probability . Further, for any two vectors with , we have . Hence, we have for all . Hence, we have

Taking limit as , we have , thus completing the proof. ∎

We are now ready to bound using Lemma D.1, Lemma D.3, and Lemma D.4. We have the following set of equations:

| (115) | |||||

| (116) | |||||

| (117) |

| (118) | |||

| (119) | |||

| (120) | |||

| (121) | |||

| (122) | |||

| (123) |

D.5 Bounding

Bounding follows mostly similar to Lemma D.4. At each epoch, the agent visits states according to the occupancy measure and obtains the rewards. We bound the deviation of the observed visitations to the expected visitations to each state action pair in each epoch.

Lemma D.6.

With probability at least , the difference between the observed rewards and the expected rewards is bounded as:

| (124) |

Proof.

We note that is a Martingale difference sequence bounded by because the rewards are bounded by . Hence, following the proof of Lemma D.4 we get the required result. ∎

D.6 Bounding the number of episodes

The number of episodes of the UC-CURL algorithm are bounded by from Proposition 18 of Jaksch et al. (2010). We now bound the number of episodes for the modification of the algorithm as described in Section 5.2. We considered to trigger a new episode whenever becomes where is the number of visitations to which triggered a new epoch. In the following lemma, we show that the number of episodes are bounded by with this epoch trigger schedule.

Lemma D.7.

If the UC-CURL algorithm triggers a new epoch whenever for any state-action pair , the total number of epochs are bounded by , where and for all .

Proof.

Let be the number visitations to state-action pair and be the total number of epochs triggered when the trigger condition is met for state action pair . Hence, we have

| (125) | ||||

| (126) | ||||

| (127) |

where considering only epoch triggers for gives Equation (126). Equation (127) is obtained from the fact that which gives .

Now, we have the following,

| (128) | ||||

| (129) | ||||

| (130) | ||||

| (131) |

where Equation (131) is obtained from the convexity of . Hence, we have,

| (132) |

Further, the first epoch is triggered when the algorithm starts. Hence we have . ∎

Appendix E Bounding Constraint Violations

To bound the constraint violations , we break it into multiple components. We can then bound these components individually.

E.1 Constraint breakdown

We first break down our constraint violations into multiple parts which will help us bound the constraint violations.

| (133) | ||||

| (134) | ||||

| (135) | ||||

| (136) | ||||

| (137) | ||||

| (138) | ||||

| (139) | ||||

| (140) |

where Equation (185) comes from the fact the policy is solution of a conservative optimization equation. Equation (186) comes from the convexity of the constraint . Equation (187) follows from the Lipschitz assumption. The three terms in Equation (67) are now defined as:

| (141) |

denotes the gap left by playing the policy for -tight optimization problem on the optimistic MDP.

| (142) |

denotes the difference between long-term average costs incurred by playing the policy on the true MDP with transitions and the optimistic MDP with transitions . This term is bounded similar to the bound of .

| (143) |

denotes the difference between long-term average costs incurred by playing the policy on the true MDP with transitions and the realized costs. This term is bounded similar to the bound of .

E.2 Bounding

Note that allows us to violate constraints by not having the knowledge of the true MDP and allowing deviations of incurred costs from the expected costs. We now want to lower bound to allow us sufficient slackness. With this idea, we have the following set of equations.

| (144) | ||||

| (145) | ||||

| (146) | ||||

| (147) | ||||

| (148) | ||||

| (149) | ||||

| (150) |

where is some epoch for which .

E.3 Bounding , and

We note that costs incurred in , and follows the same bound as and respectively. Thus, replacing with , we obtain constraint violations because of imperfect system knowledge and system stochastics as .

Summing the three terms gives the required bound and choosing gives the required bound on constraint violations.

Appendix F Concentration bound results

We want to bound the deviation of the estimates of the estimated transition probabilities of the Markov Decision Processes . For that we use deviation bounds from (Weissman et al., 2003). Consider, the following event,

| (151) |

where . Then, we have the following result:

Lemma F.1.

The probability that the event fails to occur us upper bounded by .

Proof.

From the result of (Weissman et al., 2003), the distance of a probability distribution over events with samples is bounded as:

| (152) |

This, for gives,

| (153) | ||||

| (154) | ||||

| (155) |

We sum over the all the possible values of till time-step to bound the probability that the event does not occur as:

| (156) |

Finally, summing over all the , we get,

| (157) |

∎

The second lemma is the Azuma-Hoeffding’s inequality, which we use to bound Martingale difference sequences.

Lemma F.2 (Azuma-Hoeffding’s Inequality).

Let be a Martingale difference sequence such that for all , then,

| (158) |

Appendix G Posterior Sampling Algorithm

Note that in the UC-CURL algorithm, the agent solves for an optimistic policy. This convex optimization problem may be computationally intensive with additional variables and additional constraints. We now present the posterior sampling version of the UC-CURL algorithm which reduces this computational complexity by sampling the transition probabilities from the updated posterior. The posterior sampling algorithm is based on Lemma 1 of Osband et al. (2013), which we state formally here.

Lemma G.1.

[Posterior Sampling] If is the distribution of then, for any -measurable function ,

| (159) |

where is the MDP sampled at the beginning of the epoch at time-step .

We now present our posterior sampling based PS-CURL algorithm described in Algorithm 2. Similar to the UC-CURL algorithm, the PS-CURL algorithm proceeds in epochs. At each epoch , the agent samples , it can solve the following optimization problem for the optimal feasible policy.

| (160) |

with the following set of constraints,

| (161) | |||

| (162) | |||

| (163) |

for all and . Using the solution for for -tight optimization equation for the optimistic MDP, we obtain the optimal conservative policy for epoch as:

| (164) |

For the UC-CURL algorithm, the true MDP lies in the confidence interval with high probability, and hence the solution of the optimization problem was guaranteed. However, the same is not true for the MDP with sampled transition probabilities. We want the existence of a policy such that Equation (163) holds. We obtain the condition for existence of such a policy in the following lemma. To obtain the lemma, we first state a tighter Slater assumption as:

Assumption G.2.

There exists a policy , and constants and such that

| (165) |

Lemma G.3.

If there exists a policy , such that

| (166) |

and there exists episodes and with start timesteps and respectively satisfying , then for the policy satisfies,

| (167) |

Proof.

We start with the Lipschitz assumption (Assumption 3.4) to obtain,

| (168) | ||||

| (169) |

where Equation (169) is obtained by choosing the sign of modulo in the previous equation. We now bound the term using Bellman error. We have,

| (170) |

where is the Bellman error for cost . We bound the expectation using Azuma-Hoeffding’s inequality as follows:

| (171) | ||||

| (172) | ||||

| (173) | ||||

| (174) | ||||

| (175) | ||||

| (176) |

| (177) | |||

| (178) |

where Equation (172) is obtained by summing both sides from to . Equation (173) is obtained by summing over the geometric series with ratio . Equation (174) comes from Lemma D.4. Equation (175) comes from the fact that for all , and then replacing the lower bound of . Equation (176) follows from the Cauchy Schwarz inequality. Equation (177) follows from the fact that the epoch length is same as the number of visitations to all state action pairs in an epoch.

From Lemma G.3 we observe that for a tighter Slater condition on the true MDP, we can only guarantee a weaker Slater guarantee. However, we make that assumption to obtain the feasibility of the optimization problem in Equation (160).

The Bayesian regret of the PS-CURL algorithm is defined as follows:

Similarly, we define Bayesian constraint violations, , as the expected gap between the constraint function and incurred and constraint bounds, or

where .

Now, we can use Lemma G.1 to obtain and , and follow the analysis similar to the analysis of Theorem 5.6 to obtain the required regret bounds.

G.1 Bound on constraints

We now bound the constraint violations and prove that using a conservative policy. We can reduce the constraint violations to . We have:

| (183) | ||||

| (184) | ||||

| (185) | ||||

| (186) | ||||

| (187) | ||||

| (188) | ||||

| (189) | ||||

| (190) |

where Equation (186) follows from the convexity of the function , and Equation (187) follows from the Lipschtiz continuity.

We bound similar to the analysis of by

| (191) |

We focus our attention on bounding . For this, note that in Assumption 3.4 we assumed that the cost function is Lipschitz continuous and the gradients are bounded at all points. This implies for a bounded input domain the cost function is bounded. We assume that the upper bound of the cost is . We now obtain the bound on as:

| (192) | ||||

| (193) | ||||

| (194) | ||||

| (195) | ||||

| (196) | ||||

| (197) | ||||

| (198) | ||||

| (199) |

where Equation (194) follows from the bound on . Equation (195) follows from following the conservative policy.

Thus, choosing an appropriate , we can bound constraint violations by .

Appendix H Further Discussions

H.1 Regarding Ergodicity in Assumption 3.1

Regarding the assumption on ergodicity in Assumption 3.1, we make two observations:

For MDPs with constraints, we note that for optimal policy to be stationary, the MDP has to be ergodic. For finite diameter MDPs, the optimal policy can be non-stationary. Consider an MDP with three states, left, middle, right, and two actions left, right. The left action keeps the state unchanged from left, or takes the agent left from middle and to middle from right. Similarly, the right action takes the agent to middle from left and to right from middle, or keep the agent to right. Since there exists different recurrent classes for different policies (For a policy which takes only left action, the recurrent class contains only left state. For policy which takes only right action, the recurrent class contains only right state), the MDP is non-ergodic. Further, the agent obtains a reward of +1 and cost of 0 on taking left action in left state and reward of 0 and cost of +1 on taking right action in the right state. A stationary policy provides an average reward of as the agent stays in all three states with equal probability and takes either actions with equal probability in each state. Whereas, if the agent follows a a non-stationary optimal policy, the agent optimizes both rewards and cost with average reward of . Hence, the agent must stay in state left as much as the state right by only making minimal transitions via the middle state. Thus, the optimal policy is non-stationary for non-ergodic MDPs. This example is provided in detail by Cheung (2019).

The second observation is for finite diameter MDPs, where Chen et al. (2022) provided an algorithm which requires the knowledge of the time horizon and the span of the costs . We note that the two variables might not be known to the agent in advance. Further, the knowledge of the time horizon is required to divide the time horizons to epochs of duration to obtain a regret bound of . This particular epoch length is required to bound the bias-span of the MDP considered in the epoch. Finally, we note that the finite mixing time is also assumed in other works in constrained IH MDP Singh et al. (2020).

We note that even if we use other exploration strategies, we will require the Bellman error analysis to analyze the stochastic policies. In this work, we perform an exploration and exploitation strategy by dividing the time horizon into epochs and then updating the policy in each epoch using the MDP model built using exploration done in previous epochs. The analysis of the regret will still need the impact of stochastic policies and thus the analysis approaching the paper will still be needed for any exploration strategy.

We also note that since the MDP is ergodic, exploration can be done with any policy and the agent does not need an optimistic MDP to explore. However, the agent wants to minimize the regret for the online algorithm, and hence it plays the optimal policy based on the MDP estimated/learned till time . To do so the agent finds the optimistic policy or the policy which provides the highest possible reward in the confidence interval. Note that if agent agent could have played any policy and obtained same regret bound, a policy worse than the true MDP can also exist in the confidence interval and that would not give the same performance. In the following, we provide a simplified problem setup and algorithm to demonstrate that some policy may achieve large regret bound even with ergodic assumption.

Consider a simplified problem setup where with no constraints. Note that this is the classical RL setup. Also consider an algorithm where the agent uses the estimated MDP without considering the confidence intervals. After every epoch, the agent solves for the optimal policy using the following optimization equation.

| (200) |

with the following set of constraints,

| (201) | |||

| (202) |

where is the estimate for transition probability to next state given state action pair after epoch . Let be the solution for optimization problem in Equation (200)-(202) for epoch .

Now the regret , till time horizon , is defined as

| (203) | ||||

| (204) | ||||

| (205) | ||||

| (206) | ||||

| (207) |

is analysed in similarly to the regret analysis of the proposed UC-CURL algorithm. For , we obtain the following analysis:

| (208) | ||||

| (209) |

Now, the second term, , in Equation (209) can be again analyzed using the Bellman error based analysis. We are primarily interested in the first term. Now, note that since the agent does not play the optimistic policy, cannot be upper bounded by the optimal policy of the optimistic MDP in the confidence interval. For this, the average reward satisfies . For a posterior sampling algorithm, the optimal policy for the sampled MDP satisfies . This two properties for the optimistic or posterior sampling algorithm contributes to the key parts in the analysis and design of the RL algorithms.

However, no such relationship can be established for , and and hence the first term of Equation (209) is not trivially upper bounded by . Further, for the optimal policy for the estimated MDP can return a reward lower than the optimal policy on the true MDP or resulting in a trivial regret bound.

H.2 Regarding Optimality

We note the work of Singh et al. (2020) provided a lower bound of , where is the diameter of the MDP, , are the number of actions and states respectively and is the time horizon for which the algorithm runs. Based on the lower bound, the regret results presented in this work are optimal in and . However, to obtain a tighter dependence on using tighter concentration inequalities for stochastic policies remain an open problem. Further, we note that reducing the dependence of to the diameter while also keeping the regret order in as is an open problem.

Appendix I Experiments with Fairness Utility and Constraints