Conformal mapping Coordinates Physics-Informed Neural Networks (CoCo-PINNs): learning neural networks for designing neutral inclusions

Abstract

We focus on designing and solving the neutral inclusion problem via neural networks. The neutral inclusion problem has a long history in the theory of composite materials, and it is exceedingly challenging to identify the precise condition that makes an inclusion of general shape neutral. Physics-informed neural networks (PINNs) have recently become a highly successful approach to addressing both forward and inverse problems associated with partial differential equations. We found that traditional PINNs perform inadequately when applied to the inverse problem of designing neutral inclusions with arbitrary shapes. In this study, we introduce a novel approach, Conformal mapping Coordinates Physics-Informed Neural Networks (CoCo-PINNs), which integrates complex analysis techniques into PINNs. This method exhibits strong performance in solving forward-inverse problems to construct neutral inclusions of arbitrary shapes in two dimensions, where the imperfect interface condition on the inclusion’s boundary is modeled by training neural networks. Notably, we mathematically prove that, under a mild assumption, training with a single linear field is sufficient to achieve neutrality for untrained linear fields in arbitrary directions. We demonstrate that CoCo-PINNs offer enhanced performance in terms of credibility, consistency, and stability.

1 Introduction

Physics-informed neural networks (PINNs) (Raissi et al., 2019; Karniadakis et al., 2021) are specialized neural networks designed to solve partial differential equations (PDEs). Since their introduction, PINNs have been successfully applied to a wide range of PDE-related problems (Cuomo et al., 2022; Hao et al., 2023; Wu et al., 2024). A significant advantage of PINNs is their versatility across different types of PDEs and their ability to handle PDE parameters or initial/boundary constraints while solving forward problems (Akhound-Sadegh et al., 2023; Cho et al., 2023; Rathore et al., 2024; Lau et al., 2024). The conventional approach to solving inverse problems with PINNs involves designing neural networks that converge to the parameters or constraints to be reconstructed, which are typically modeled as constants or functions. We refer to this methodology as classical PINNs. Numerous successful applications of PINNs to inverse problems have been reported; see, for example, Chen et al. (2020); Jagtap et al. (2022); Haghighat et al. (2021). However, as the complexity of the PDE-based inverse problem increases, the neural networks may require additional design to represent the parameters or constraints accurately. For instance, Pokkunuru et al. (2023) used a Bayesian approach to design the loss function, Guo et al. (2022) used Monte Carlo approximation to compute fractional derivatives, Xu et al. (2023) adopted a multi-task learning method to weight the losses and presented neural networks that combine the forward and inverse problems, and Yuan et al. (2022) proposed auxiliary PINNs to solve forward and inverse problems for integro-differential equations. Such increases in network complexity can significantly escalate the computational cost and the volume of data needed to train PINNs. Moreover, directly approximating the parameters to be reconstructed by neural networks gives the model excessive representational flexibility. Because inverse problems are intrinsically ill-posed, this flexibility can make the conventional approach inadequate for some problems.

In this paper, we apply the PINN framework to the inverse problem of designing neutral inclusions, which is described below. Designing neutral inclusions falls within the scope where traditional PINNs tend to perform inadequately. To overcome this limitation, we improve the PINN approach by incorporating analytical methods from complex analysis.

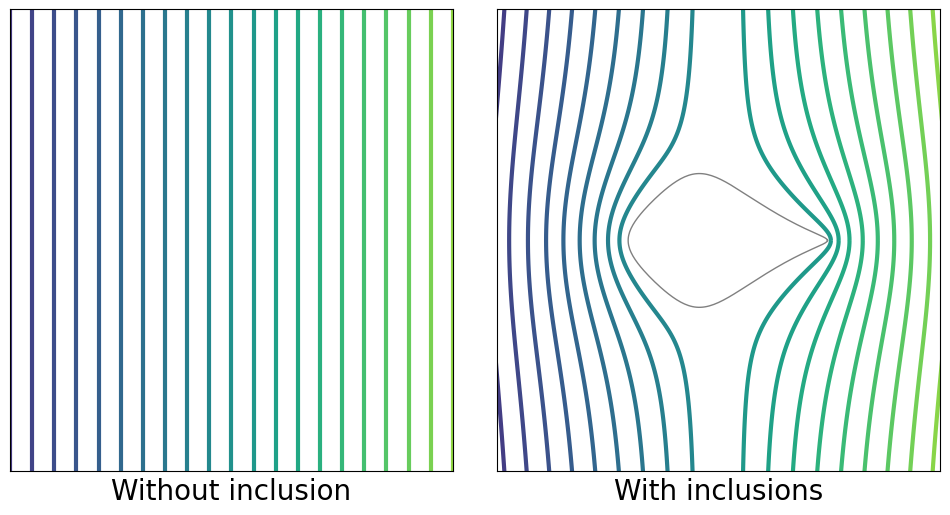

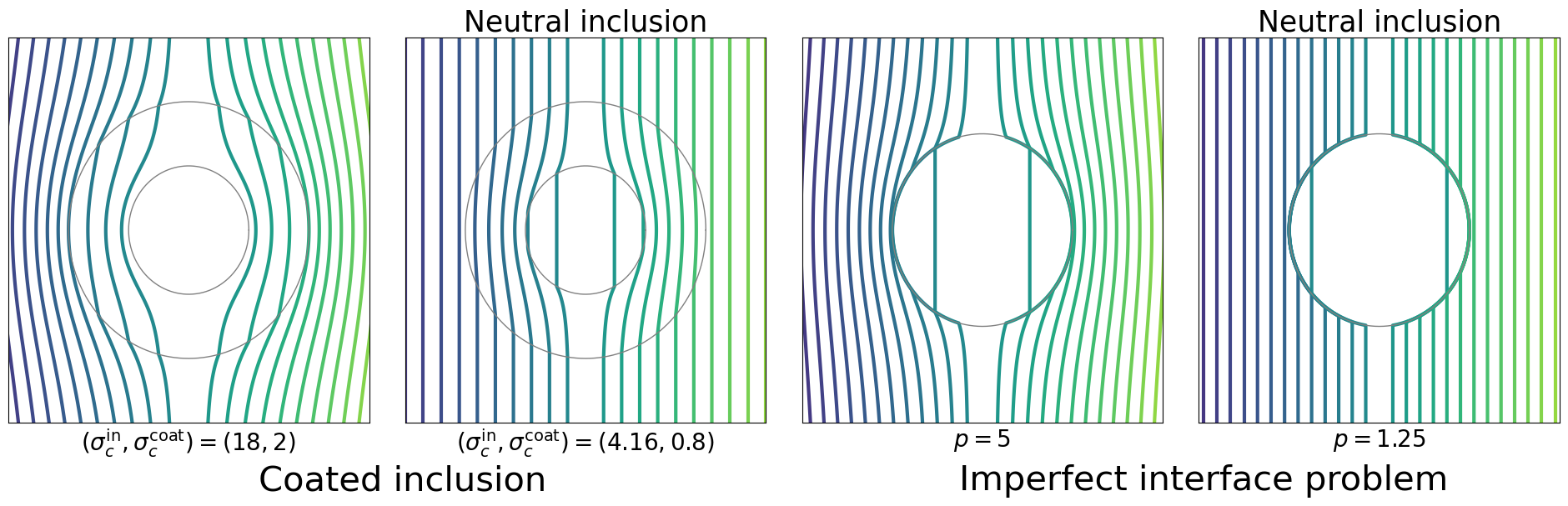

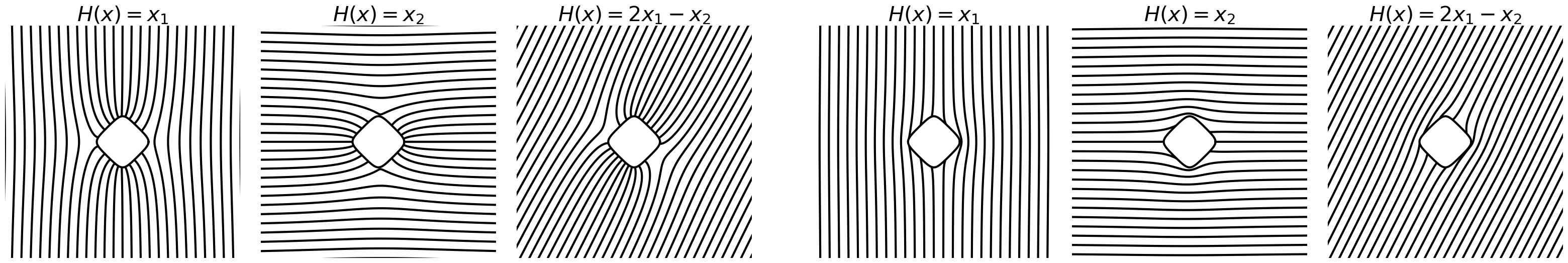

Inclusions whose material properties differ from those of the background medium commonly perturb applied fields when they are inserted into the medium. Analyzing and manipulating the effects of inclusions has attracted significant attention because of its fundamental importance in modeling composite materials, particularly in light of rapid advances in and diverse applications of these materials. Specific inclusions, referred to as neutral inclusions, do not disturb linear fields (see Figure 1.2). The neutral inclusion problem has a long and established history in the theory of composite materials (Milton, 2002). Some of the best-known examples include coated disks and spheres (Hashin, 1962; Hashin & Shtrikman, 1962), as well as coated ellipses and ellipsoids (Grabovsky & Kohn, 1995; Kerker, 1975; Sihvola, 1997). The primary motivation for studying neutral inclusions is to design reinforced or embedded composite materials such that the stress field remains unchanged from that of the material without inclusions, thereby avoiding stress concentration. Extensive research has been conducted on neutral inclusions and related concepts, such as invisibility cloaking involving wave propagation, in fields including acoustics, elasticity, and electromagnetic waves in the microwave range (Alù & Engheta, 2005; Ammari et al., 2013; Landy & Smith, 2013; Liu et al., 2017; Zhou & Hu, 2006, 2007; Zhou et al., 2008; Yuste et al., 2018).

Designing neutral inclusions with general shapes presents an inherent challenge. In the context of the conductivity problem, which is the focus of this paper, mathematical theory shows that only coated ellipses and ellipsoids can remain neutral to linear fields in all directions (Kang & Lee, 2014; Kang et al., 2016; Milton & Serkov, 2001). In contrast, non-elliptical shapes can remain neutral only for a single linear field direction (Jarczyk & Mityushev, 2012; Milton & Serkov, 2001). To address the difficulty of designing neutral inclusions of general shape, relaxed versions of the problem have been studied (Choi et al., 2023; Kang et al., 2022; Lim & Milton, 2020). Unlike the above examples, where perfectly bonded boundaries are assumed, imperfect interfaces introduce discontinuities in either the flux or the potential boundary conditions of the PDEs. Ru (1998) found interface parameters for typical inclusion shapes in two-dimensional elasticity. Benveniste & Miloh (1999) found neutral inclusions under a single linear field. The interface parameters, which characterize these discontinuities, may be non-constant functions defined along the boundaries of the inclusions, so that, in theory, they have infinitely many degrees of freedom. Hence, we expect to overcome the inherent challenge of designing neutral inclusions with general shapes by considering neutral inclusions with imperfect interfaces. A powerful technique for dealing with planar inclusion problems of general shape has been to use conformal mappings to define orthogonal curvilinear coordinates (Movchan & Serkov, 1997; Cherkaev et al., 2022; Ammari & Kang, 2004; Jung & Lim, 2021), where the existence of the conformal mapping for a simply connected bounded domain is guaranteed by the Riemann mapping theorem.
Using these coordinates, Kang & Li (2019) and Choi & Lim (2024) constructed weakly neutral inclusions that yield zero coefficients for the leading-order terms in the asymptotic expansions of the PDE solutions (see also Milton (2002); Choi et al. (2023); Lim & Milton (2020) for neutral inclusion problems using the conformal mapping technique). However, such asymptotic approaches cannot achieve complete neutrality within this framework. Moreover, the requirement for analytic asymptotic expressions limits the generalizability of this approach.

In this paper, by adopting a deep learning approach, we focus on the exact values of the solution rather than on its asymptotic expansion. Unlike the asymptotic approaches in Kang & Li (2019) and Choi & Lim (2024), the proposed method does not rely on an analytic expansion formula and incorporates the actual solution values directly into the loss function. More precisely, we introduce a novel forward-inverse PINN framework that combines complex analysis techniques with PINNs, namely Conformal mapping Coordinates Physics-Informed Neural Networks (CoCo-PINNs). We define the loss function to include evaluations of the solution at sample points exterior to the inclusion. Furthermore, we leverage the conformal mapping to effectively sample collocation points for PDEs involving inclusions of general shape. We found that classical PINNs, which treat the interface parameter as a function approximated by a neural network, perform inadequately when applied to designing imperfect interface parameters that achieve neutrality. Instead, we propose training the Fourier series coefficients of the interface parameter rather than approximating the function directly. We test the performance of the proposed method in finding the forward solution by using the analytic results for the forward solution presented in Choi & Lim (2024), where the exact solutions are expressed as products of infinite-dimensional matrices whose entries depend on the expansion coefficients of the interface parameter. Additionally, we leverage these analytic results to explain why it is possible to train the PINN for the neutral inclusion using only a single applied field (see Subsection 3.2).

Many PINN approaches to solving PDEs focus primarily on the forward problem and are typically validated through comparisons with numerical methods such as the Finite Element Method (FEM) and the Boundary Element Method (BEM). In contrast, our problem addresses the forward and inverse problems simultaneously, which adds complexity, especially for complex-shaped inclusions, and makes accurate forward solutions harder to achieve. Consequently, “reliability” becomes a critical factor when applying PINNs to our problem. Compared to classical PINNs, the proposed CoCo-PINNs provide more accurate forward solutions together with improved identification of the inverse parameters. They demonstrate greater consistency across repeated experiments and improved stability with respect to different conductivity values. We conduct experiments to assess the “reliability” of CoCo-PINNs in terms of credibility, consistency, and stability.

Notably, by using Fourier series expansions to represent the inverse parameters, CoCo-PINNs offer deeper analytic insight into these parameters, making the solutions not only more accurate but also more explainable. Furthermore, our method requires no training data for the neutral inclusion problem because of its structure: the constraints at exterior points effectively serve as data. Another notable feature is that the proposed method identifies inverse parameters that remain effective for general first-order (linear) background fields, including those not used in training. This result is supported by a rigorous mathematical analysis.

In summary, this paper makes the following contributions:

- We develop a novel approach to PINNs, namely CoCo-PINNs, which offer enhanced credibility, consistency, and stability compared to classical PINNs.
- We adopt exterior conformal mappings in PINNs so that they can be trained on problems posed in arbitrarily shaped domains.
- Owing to the nature of the neutral inclusion problem, it can be shown mathematically (under a mild assumption) that once the networks are trained with a given background solution $H(x) = x_1$ or $H(x) = x_2$, a similar effect is obtained for arbitrary linear background solutions $H(x) = a \cdot x$ for any $a \in \mathbb{R}^2$ (see Section 3.2 for more details).

2 Inverse problem of neutral inclusions with imperfect conditions

We set $x = (x_1, x_2)$ to be a two-dimensional vector in $\mathbb{R}^2$. On occasion, we regard $x$ as a point of $\mathbb{C}$, whereby $z = x_1 + i x_2$ will be used. We assume that $\Omega$ is a nonempty simply connected bounded domain with an analytic boundary (see Appendix B). We assume the interior region $\Omega$ has the constant conductivity $\sigma_c$ while the background medium $\mathbb{R}^2 \setminus \overline{\Omega}$ has the constant conductivity $\sigma_m$. We set $\sigma = \sigma_c \chi(\Omega) + \sigma_m \chi(\mathbb{R}^2 \setminus \overline{\Omega})$, where $\chi$ is the characteristic function. We further assume that the boundary of $\Omega$ is not perfectly bonded, resulting in a discontinuity in the potential. This discontinuity is represented by a nonnegative real-valued function $\beta$ on $\partial\Omega$, referred to as the interface parameter or interface function. Specifically, we consider the following potential problem:

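$$
\begin{cases}
\nabla \cdot \sigma \nabla u = 0 & \text{in } \mathbb{R}^2 \setminus \partial\Omega,\\[2pt]
\sigma_c \dfrac{\partial u}{\partial \nu}\Big|^{-} = \sigma_m \dfrac{\partial u}{\partial \nu}\Big|^{+} & \text{on } \partial\Omega,\\[2pt]
\beta\,\big(u|^{+} - u|^{-}\big) = \sigma_c \dfrac{\partial u}{\partial \nu}\Big|^{-} & \text{on } \partial\Omega,\\[2pt]
u(x) - H(x) = O(|x|^{-1}) & \text{as } |x| \to \infty,
\end{cases}
\tag{2.1}
$$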

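where $H$ is an applied background potential, $\partial u/\partial\nu$ denotes the normal derivative with the unit exterior normal vector $\nu$ to $\partial\Omega$, and the superscripts $+$ and $-$ indicate the limits to $\partial\Omega$ from the exterior and interior of $\Omega$, respectively.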

We now define a neutral inclusion precisely.

Definition 1.

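We define $\Omega$ as a neutral inclusion for the imperfect interface problem (2.1) if $u(x) - H(x) = 0$ for all $x$ in the exterior region $\mathbb{R}^2 \setminus \overline{\Omega}$, where $H$ is any linear field, i.e., $H(x) = a \cdot x$ for any $a \in \mathbb{R}^2$.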

We aim to develop neural networks that find a specific interface function $\beta$ making $\Omega$ a neutral inclusion, given the inclusion $\Omega$ and the conductivities $\sigma_c$ and $\sigma_m$, while simultaneously providing the forward solution $u$.
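As a sanity check of Definition 1, the simplest case can be worked out by hand: a disk of radius $R$ centered at the origin with a constant interface parameter $\beta$. The sketch below is our own separation-of-variables calculation, not taken from this paper, and it assumes that the interface conditions in (2.1) are continuity of flux, $\sigma_c \partial_\nu u|^- = \sigma_m \partial_\nu u|^+$, and the potential jump $\beta(u|^+ - u|^-) = \sigma_c \partial_\nu u|^-$. For $H = x_1$, the ansatz $u = A r\cos\theta$ inside and $u = (r + B/r)\cos\theta$ outside turns these conditions into a $2\times 2$ linear system. The exterior perturbation $u - H = B\cos\theta/r$ vanishes identically exactly when $\beta = \sigma_c\sigma_m / (R(\sigma_c - \sigma_m))$, which is admissible (nonnegative) only for $\sigma_c > \sigma_m$. The function names and parameter values are illustrative.

```python
import numpy as np

def disk_coefficients(beta, R, sigma_c, sigma_m):
    """Coefficients (A, B) of u_in = A r cos(t) and u_ex = (r + B / r) cos(t) for H = x_1.

    Assumed interface conditions at r = R (standard imperfect-interface form):
      sigma_c * A = sigma_m * (1 - B / R**2)        # continuity of flux
      beta * (R + B / R - A * R) = sigma_c * A      # potential jump proportional to flux
    """
    M = np.array([[sigma_c, sigma_m / R**2],
                  [-(beta * R + sigma_c), beta / R]])
    rhs = np.array([sigma_m, -beta * R])
    A, B = np.linalg.solve(M, rhs)
    return A, B

def neutral_beta(R, sigma_c, sigma_m):
    """Constant beta that makes B = 0, i.e. u_ex = H exactly (requires sigma_c > sigma_m)."""
    return sigma_c * sigma_m / (R * (sigma_c - sigma_m))

beta_star = neutral_beta(R=1.0, sigma_c=3.0, sigma_m=1.0)   # 1.5
A, B = disk_coefficients(beta_star, 1.0, 3.0, 1.0)          # B vanishes up to rounding
```

By rotational symmetry, the same constant $\beta$ makes the disk neutral for $H(x) = a \cdot x$ in every direction, which is the situation described by Definition 1 and, for general shapes, by Theorem 3.1.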

2.1 Series solution for the governing equation via conformal mapping

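By the Riemann mapping theorem (see Appendix B), there exist a unique $\gamma > 0$ and a unique conformal mapping $\Psi$ from $\{w \in \mathbb{C} : |w| > \gamma\}$ onto $\mathbb{C} \setminus \overline{\Omega}$ such that $\Psi(\infty) = \infty$, $\Psi'(\infty) = 1$, and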

$$
\Psi(w) = w + a_0 + \sum_{n=1}^{\infty} \frac{a_n}{w^n}, \qquad |w| > \gamma.
\tag{2.2}
$$

We set $\rho_0 = \ln\gamma$. We use modified polar coordinates $(\rho, \theta)$ via $z = \Psi(e^{\rho + i\theta})$, so that $\partial\Omega$ corresponds to $\rho = \rho_0$. One can numerically compute $\gamma$ and the conformal mapping coefficients $a_n$ for a given domain (Jung & Lim, 2021; Wala & Klöckner, 2018). In the following, we assume $\Psi$ is given. Choi & Lim (2024) obtained an expression for the solution $u$ for an arbitrary analytic domain $\Omega$ using geometric function theory for complex analytic functions:
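The modified polar coordinates can be illustrated with a truncated version of (2.2). The sketch below assumes that only finitely many coefficients $a_n$ are nonzero (the helper names and the single coefficient $a_1 = 0.3$ are illustrative choices, not one of the shapes used later) and maps $(\rho, \theta)$ to $z = \Psi(e^{\rho + i\theta})$. With $\gamma = 1$, $a_0 = 0$, and $a_1 = 0.3$, the curve $\rho = \ln\gamma$ is the ellipse with semi-axes $1.3$ and $0.7$, and curves with larger $\rho$ are confocal ellipses lying outside it.

```python
import numpy as np

def psi(w, a0, a):
    """Truncated exterior map Psi(w) = w + a0 + sum_{n>=1} a[n-1] / w**n."""
    w = np.asarray(w, dtype=complex)
    out = w + a0
    for n, an in enumerate(a, start=1):
        out = out + an / w**n
    return out

def to_cartesian(rho, theta, a0, a):
    """Modified polar coordinates: z = Psi(exp(rho + i*theta)), returned as (x1, x2)."""
    z = psi(np.exp(rho + 1j * np.asarray(theta)), a0, a)
    return z.real, z.imag

# Illustrative map: gamma = 1, a0 = 0, a1 = 0.3 gives the ellipse with semi-axes 1.3 and 0.7.
gamma, a0, a = 1.0, 0.0, [0.3]
rho0 = np.log(gamma)
theta = np.linspace(0.0, 2 * np.pi, 400, endpoint=False)
x1_b, x2_b = to_cartesian(rho0, theta, a0, a)        # boundary of Omega (rho = rho0)
x1_e, x2_e = to_cartesian(rho0 + 0.5, theta, a0, a)  # a level curve outside Omega
```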

Theorem 2.1 (Analytic solution formula).

Let be the Faber polynomials associated with and the applied field is given by , the solution satisfies

| (2.3) | |||

| (2.4) |

where is a diagonal matrix whose entries are , and denotes the real part of a complex number. The matrices and are determined by the conductivities, the shape of inclusion, and the coefficients of the expansion formula of the interface function ; refer to Appendix B for the concept of Faber polynomials and explicit definitions.
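Faber polynomials depend only on the coefficients of $\Psi$. A standard recursion from geometric function theory (stated here as background; the paper's own definitions are in Appendix B) is $F_0 = 1$ and $F_{n+1}(z) = (z - a_0)F_n(z) - \sum_{k=1}^{n} a_k F_{n-k}(z) - n a_n$, which guarantees $F_n(\Psi(w)) = w^n + O(w^{-1})$ as $w \to \infty$. The sketch below builds them with `numpy.polynomial.Polynomial`, assuming a truncated list of coefficients $a_1, a_2, \dots$; the coefficient values are illustrative.

```python
import numpy as np
from numpy.polynomial import Polynomial

def faber_polynomials(a0, a, n_max):
    """Faber polynomials F_0..F_n_max of Psi(w) = w + a0 + sum_{k>=1} a[k-1] w**(-k).

    Uses F_0 = 1 and F_{n+1} = (z - a0) F_n - sum_{k=1}^{n} a_k F_{n-k} - n a_n,
    with a_k = 0 for k > len(a).
    """
    def coeff(k):
        return complex(a[k - 1]) if 1 <= k <= len(a) else 0.0

    shift = Polynomial([-a0, 1.0])                  # the polynomial z - a0
    F = [Polynomial([1.0])]                         # F_0 = 1
    for n in range(n_max):
        nxt = shift * F[n] - n * coeff(n)           # n * a_n term (zero for n = 0)
        for k in range(1, n + 1):
            nxt = nxt - coeff(k) * F[n - k]
        F.append(nxt)
    return F

a0, a = 0.0, [0.3, 0.1]
F = faber_polynomials(a0, a, 4)
# For a0 = 0: F[2] = z^2 - 2 a_1 and F[3] = z^3 - 3 a_1 z - 3 a_2.
```

For $|w| = 50$ the remainder $F_3(\Psi(w)) - w^3$ is of order $10^{-5}$ with these coefficients, which gives a quick numerical check of the recursion.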

From Theorem 2.1, the exact solution to (2.1) can be obtained analytically. We refer to it as the analytic solution and compare it with the forward solution trained by neural networks. This analytic solution is used to evaluate the credibility of the PINN forward solver, as discussed in Section 4.2.

2.2 Series representation of the interface function

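In this subsection, based on complex analysis, we present the series expansion formula used to design CoCo-PINNs. We assume that the interface function $\beta$ is nonnegative, bounded, and continuous on $\partial\Omega$. By introducing a parametrization $x(t)$ of $\partial\Omega$ with $t \in [0, 2\pi)$, the interface function admits a Fourier series expansion with respect to $t$:

$$
\beta(x(t)) = p_0 + \sum_{k=1}^{\infty} \bigl(p_k \cos kt + q_k \sin kt\bigr),
$$

where $p_k$ and $q_k$ are real constant coefficients. In particular, we take $x(\theta) = \Psi(e^{\rho_0 + i\theta})$, $\theta \in [0, 2\pi)$, where $\Psi$ is the conformal mapping in (2.2). In this case, we have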

$$
\beta\bigl(\Psi(e^{\rho_0 + i\theta})\bigr) = \sum_{k=-\infty}^{\infty} \beta_k e^{ik\theta}
\tag{2.5}
$$

for some complex-valued constants $\beta_k$ satisfying $\beta_{-k} = \overline{\beta_k}$, since $\beta$ is real-valued. Note that we can similarly express the boundary conditions in (2.1) in terms of the variable $\theta$, which enables us to handle these boundary conditions effectively.

We further assume that $\beta$ is represented by a finite series, truncated at the $N$-th term for some $N \in \mathbb{N}$. Specifically, we define

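$$
\beta_N(\theta) = \sum_{k=-N}^{N} \beta_k e^{ik\theta} = \beta_0 + \sum_{k=1}^{N} \bigl(\beta_k e^{ik\theta} + \overline{\beta_k}\, e^{-ik\theta}\bigr).
\tag{2.6}
$$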

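At this stage, the reconstruction parameters are reduced to $\beta_0 \in \mathbb{R}$ and $\beta_1, \dots, \beta_N \in \mathbb{C}$, that is, $2N+1$ real numbers. Imposing the constraint $u = H$ at many sample points exterior to $\Omega$ then makes the inverse problem of determining the interface parameter over-determined.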
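To see concretely why (2.6) leaves only $2N+1$ real unknowns, note that with $\beta_0$ real and $\beta_k = (p_k - i q_k)/2$ one has $\beta_N(\theta) = \beta_0 + \sum_{k=1}^{N}(p_k\cos k\theta + q_k \sin k\theta)$. The sketch below is a toy analogue of the over-determined structure only: it recovers these $2N+1$ parameters from $M = 500 \gg 2N+1$ noisy samples by linear least squares. In CoCo-PINNs the coefficients are learned through the PINN loss rather than fitted to samples of $\beta$; the target coefficients and noise level here are hypothetical.

```python
import numpy as np

def beta_N(theta, beta0, beta_k):
    """Evaluate beta_0 + sum_k (beta_k e^{ik theta} + conj(beta_k) e^{-ik theta}), a real array."""
    k = np.arange(1, len(beta_k) + 1)
    phase = np.exp(1j * np.outer(theta, k))                  # shape (M, N)
    return beta0 + 2.0 * np.real(phase @ np.asarray(beta_k, dtype=complex))

def fit_coefficients(theta, values, N):
    """Least-squares fit of the 2N+1 real parameters (beta_0, p_1..p_N, q_1..q_N)."""
    k = np.arange(1, N + 1)
    design = np.hstack([np.ones((len(theta), 1)),
                        np.cos(np.outer(theta, k)),
                        np.sin(np.outer(theta, k))])         # shape (M, 2N+1)
    params, *_ = np.linalg.lstsq(design, values, rcond=None)
    beta0, p, q = params[0], params[1:N + 1], params[N + 1:]
    return beta0, (p - 1j * q) / 2.0                         # complex beta_k as in (2.6)

rng = np.random.default_rng(0)
theta = rng.uniform(0.0, 2 * np.pi, 500)                     # M = 500 samples, 2N+1 = 7 unknowns
true_beta0, true_bk = 1.0, np.array([0.2 - 0.1j, 0.05j, -0.03])
samples = beta_N(theta, true_beta0, true_bk) + 1e-3 * rng.standard_normal(theta.size)
est_beta0, est_bk = fit_coefficients(theta, samples, N=3)
```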

A fundamental characteristic of inverse problems is that they are inherently ill-posed, and the existence or uniqueness of the inverse solution–the interface function in this paper–is generally not guaranteed. When solving a minimization problem, neural networks may struggle if the problem admits multiple minimizers. In particular, the ability of neural networks to approximate a wide range of functions can lead them to converge to suboptimal solutions, corresponding to local minimizers of the loss function. As a result, the classical approach in PINNs, which allows for flexible function representation, can be highly sensitive to the initial parameterization. In contrast, the series expansion approach constrains the solution to well-behaved functions, reducing sensitivity to the initial parameterization and ensuring the regularity of the target function. Additionally, since the series approximation method requires fewer parameters, it can be treated as an over-determined problem, helping to mitigate the ill-posedness of inverse problems.

3 The proposed method: CoCo-PINNs

This section introduces CoCo-PINNs, their advantages, and the mathematical reasoning behind why neutral inclusions designed by training remain effective even for untrained background fields. We begin with the loss design corresponding to the imperfect interface problem (2.1), whose solution exhibits a discontinuity across $\partial\Omega$ due to the imposed boundary conditions. We denote the solutions inside and outside $\Omega$ by $u_{\mathrm{in}}$ and $u_{\mathrm{ex}}$, respectively, and their neural network approximations by $u^{\mathrm{NN}}_{\mathrm{in}}$ and $u^{\mathrm{NN}}_{\mathrm{ex}}$. We call these approximations the trained forward solutions. We aim to train the interface function, represented by $\beta_N$ for the truncated series approximation and by $\beta_{\mathrm{NN}}$ for the fully connected neural network approximation. The method using $\beta_N$ is referred to as CoCo-PINNs, while the approach using $\beta_{\mathrm{NN}}$ to represent the interface function is called classical PINNs.

3.1 Model design for the forward-inverse problem

We utilize three finite sets of collocation points, $X_{\mathrm{in}}$, $X_{\mathrm{ex}}$, and $\partial\Omega$, corresponding to the interior, exterior, and boundary of $\Omega$, respectively, with a slight abuse of notation for $\partial\Omega$. We select collocation points based on conformal mapping theory to handle PINNs in arbitrarily shaped domains; the detailed methodology is given in Section D.1. To address the boundary conditions at $x \in \partial\Omega$, we use $x^{+} = x + \epsilon\nu(x)$ and $x^{-} = x - \epsilon\nu(x)$ to approximate the limits to the boundary from the exterior and interior, respectively, with small $\epsilon > 0$ and unit normal vector $\nu(x)$.
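One way to turn the conformal map into collocation points is sketched below; it is our illustration, and the scheme actually used in the experiments is the one in Section D.1. Boundary points are $z = \Psi(\gamma e^{i\theta})$ and exterior points use $\rho > \ln\gamma$. Since $\partial z/\partial\rho = w\Psi'(w)$ for $w = e^{\rho + i\theta}$, the unit exterior normal at a boundary point is $\nu = w\Psi'(w)/|w\Psi'(w)|$, which gives the shifted points $x^{\pm} = x \pm \epsilon\nu$ used for the interface conditions. Interior points are not generated here because the exterior map does not parametrize $\Omega$. The map coefficients, sample sizes, radial range, and $\epsilon$ are hypothetical.

```python
import numpy as np

def psi_and_derivative(w, a0, a):
    """Truncated Psi(w) = w + a0 + sum_n a_n w^{-n} and its derivative Psi'(w)."""
    w = np.asarray(w, dtype=complex)
    val, der = w + a0, np.ones_like(w)
    for n, an in enumerate(a, start=1):
        val = val + an * w ** (-n)
        der = der - n * an * w ** (-n - 1)
    return val, der

def sample_collocation(gamma, a0, a, n_bdry=256, n_ext=1024, rho_span=1.0, eps=1e-3, seed=0):
    """Boundary points, shifted points x^{+/-}, and exterior points (as complex numbers)."""
    rng = np.random.default_rng(seed)
    rho0 = np.log(gamma)
    theta_b = np.linspace(0.0, 2 * np.pi, n_bdry, endpoint=False)
    w_b = np.exp(rho0 + 1j * theta_b)
    z_b, dpsi = psi_and_derivative(w_b, a0, a)
    nu = w_b * dpsi / np.abs(w_b * dpsi)        # dz/drho = w Psi'(w) points away from Omega
    z_plus, z_minus = z_b + eps * nu, z_b - eps * nu
    # Exterior points: rho in (rho0, rho0 + rho_span], theta uniform in [0, 2*pi).
    rho_e = rho0 + rho_span * (1.0 - rng.random(n_ext))
    theta_e = rng.uniform(0.0, 2 * np.pi, n_ext)
    z_ext, _ = psi_and_derivative(np.exp(rho_e + 1j * theta_e), a0, a)
    return {"boundary": z_b, "normal": nu, "plus": z_plus, "minus": z_minus, "exterior": z_ext}

pts = sample_collocation(gamma=1.0, a0=0.0, a=[0.3])   # ellipse with semi-axes 1.3 and 0.7
```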

The loss functions corresponding to the governing equation and the design of a neutral inclusion are defined as follows:

| (3.1) | |||||

| (3.2) | |||||

| (3.3) | |||||

| (3.4) | |||||

| (3.5) |

with . In the case where we train using classical PINNs, we replace the interface function with . To enforce the non-negativity of the interface function, we introduce an additional loss function .

By combining all the loss functions with weight variables , we define the total loss by

| (3.6) |

Here, $|X|$ denotes the number of elements in a set $X$. We then consider the following loss with a regularization term:

| (3.7) |

where represents the weights of the neural networks , is the Frobenius norm, and the -norm is used for , that is,

This type of regularization is commonly used to address ill-posed problems. We used the loss in eq. 3.7 for all experiments.
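The bookkeeping behind (3.6) and (3.7) can be sketched as follows. This is a hedged illustration, not the paper's code: it assumes each of (3.1) to (3.5) is a sum of squared pointwise residuals over its collocation set, divided by the set size as described for (3.6). The squared-negative-part form of the non-negativity penalty, the squared Frobenius norm of the network weights, the default norm order for the interface coefficients, and all names and weight values are placeholders.

```python
import numpy as np

def total_loss(residuals, weights, beta_on_boundary, nn_weights, beta_coeffs,
               lam_w=1e-6, lam_beta=1e-6, coef_norm_order=2):
    """Weighted, size-normalized PINN loss with regularization (hypothetical forms).

    residuals:        dict name -> array of pointwise residuals on that term's collocation set
    weights:          dict name -> weight for that term (key "nonneg" weights the beta >= 0 penalty)
    beta_on_boundary: interface-function values at boundary points
    nn_weights:       list of network weight matrices
    beta_coeffs:      real vector of interface coefficients
    """
    loss = 0.0
    for name, r in residuals.items():
        r = np.asarray(r)
        loss += weights[name] * np.sum(np.abs(r) ** 2) / r.size    # sum over set / |X|
    neg = np.minimum(np.asarray(beta_on_boundary, dtype=float), 0.0)  # only negative parts
    loss += weights.get("nonneg", 1.0) * np.sum(neg ** 2) / neg.size
    loss += lam_w * sum(np.linalg.norm(W, "fro") ** 2 for W in nn_weights)
    loss += lam_beta * np.linalg.norm(np.asarray(beta_coeffs, dtype=float), ord=coef_norm_order)
    return float(loss)
```

Keeping the per-term normalization separate from the weights makes it easy to rebalance terms whose collocation sets have very different sizes.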

CoCo-PINNs are designed using complex geometric function theory to address the interface problem. While classical PINNs rely on neural network approximations justified by the universal approximation theorem, CoCo-PINNs use Fourier series expansions, which help overcome the ill-posedness of the neutral inclusion inverse problem and ensure that the inverse solution remains smooth. Additionally, this approach allows the initial coefficients of the interface function to be selected using mathematical results. The results of CoCo-PINNs can be explained on a solid mathematical foundation, as discussed in Section 3.2. In Section 4, we examine the advantages of CoCo-PINNs in terms of credibility, consistency, and stability.

3.2 Neutral inclusion effects for untrained linear fields

In this section, we briefly explain why CoCo-PINNs can yield the neutral inclusion effect for untrained background fields. Since the governing equation (2.1) is linear with respect to $H$, the following holds trivially by the properties of linear PDEs:

Theorem 3.1.

Consider a domain $\Omega$ of arbitrary shape whose boundary is given by an exterior conformal mapping $\Psi$. If there exists an interface function $\beta$ that makes $\Omega$ a neutral inclusion for the background fields $H(x) = x_1$ and $H(x) = x_2$ simultaneously, then $\Omega$ is also neutral for all linear fields $H(x) = a \cdot x$ of arbitrary direction $a \in \mathbb{R}^2$.

By Theorem 3.1, one can expect to find a function $\beta$ that makes $\Omega$ neutral to all linear fields by training with only two background fields, assuming such a $\beta$ exists. Although the existence of this function has not yet been verified theoretically, experiments in this paper with various shapes suggest that, for a given $\Omega$, there exists a $\beta$ that produces the neutral inclusion effect, meaning that the perturbation $u - H$ is negligible for all directions $a$.
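The linearity argument behind Theorem 3.1 fits in one line. For a fixed interface function $\beta$, let $u_j$ denote the solution of (2.1) with $H(x) = x_j$, $j = 1, 2$. Since the solution depends linearly on $H$, the solution for $H(x) = a \cdot x$ is $u_a = a_1 u_1 + a_2 u_2$, and therefore

$$
u_a(x) - a \cdot x = a_1\bigl(u_1(x) - x_1\bigr) + a_2\bigl(u_2(x) - x_2\bigr) = 0 \qquad \text{for } x \in \mathbb{R}^2 \setminus \overline{\Omega},
$$

because both brackets vanish when $\Omega$ is neutral for $x_1$ and $x_2$.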

Remark 1.

In all examples, we successfully identified a $\beta$ with the neutral inclusion effect by training with only a single linear field $H$. These results can be explained by the following theorem.

Theorem 3.2.

Let with an interface function be neutral for a single background field . If the first rows of and given in eq. 2.4 are linearly independent, then is neutral also for all linear fields of arbitrary directions .

The proof of Theorem 3.2 can be found in Appendix C. As a future direction, it would be interesting to either prove that the first rows of and are linearly independent for any , or to find counterexamples–namely, inclusions that are neutral in only one direction.
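In computations, the hypothesis of Theorem 3.2 can only be tested on truncated matrices. A hedged sketch: stack the truncated first rows of the two matrices from (2.4) and check, via singular values, whether the stacked $2 \times M$ matrix has numerical rank 2. Independence is tested over $\mathbb{C}$ and the relative tolerance is a user choice; both are our assumptions, and the rows themselves would have to be computed from the formulas in Appendix B, which are not reproduced here.

```python
import numpy as np

def rows_independent(row_1, row_2, rel_tol=1e-10):
    """Numerical test that two (possibly complex) vectors are linearly independent over C."""
    stacked = np.vstack([np.asarray(row_1, dtype=complex),
                         np.asarray(row_2, dtype=complex)])
    s = np.linalg.svd(stacked, compute_uv=False)     # singular values in descending order
    return bool(s[-1] > rel_tol * s[0])              # rank 2 iff the smaller one is not negligible
```

Because truncation changes the matrices, a positive result for one truncation order is evidence rather than proof; repeating the test for increasing orders is a cheap robustness check.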

Remark 2.

According to the universal approximation theorem, for a given interface function $\beta$, the analytic solution can be approximated by neural networks on bounded subsets of $\Omega$ and of $\mathbb{R}^2 \setminus \overline{\Omega}$. Additionally, by Fourier analysis, $\beta$ can be approximated by a truncated Fourier series $\beta_N$. In light of Theorem 3.2 and Remark 1, we train using only a single linear field $H$.

Figure 3.1 illustrates the operating principles of the neural networks we designed. We use ‘Univ.’ to represent the universal approximation theorem, ‘Fourier’ for the Fourier series expansion, ‘Thm. 2.1’ for the analytic solution derived from the mathematical result, and ‘Cred.’ for credibility. Here, the analytic solution is the solution to (2.1) associated with the interface function obtained either as $\beta_N$ via CoCo-PINNs or as $\beta_{\mathrm{NN}}$ via classical PINNs, and the trained forward solution is credible if its difference from this analytic solution is small. Credibility is crucial for the proposed PINN schemes for identifying neutral inclusions with imperfect boundary conditions. If the trained forward solution, obtained together with $\beta_N$ or $\beta_{\mathrm{NN}}$, is close to the analytic solution, we can conclude that the neural networks have successfully identified the interface function, ensuring that $\Omega$ exhibits the neutral inclusion effect. This is because, by construction of the loss functions in Section 3.1, the difference between the exterior trained forward solution and $H$ is small at the exterior collocation points. In other words, if the interface function provided by the neural networks makes both the credibility error and this exterior residual small, then it is the desired interface function. However, if the credibility error is not small, it becomes unclear whether the neural networks have failed to solve the inverse problem or the forward problem.

AI research currently faces significant challenges regarding the efficacy and explainability of solutions generated by neural networks, and there is a pressing need to establish the “reliability”, in particular the credibility, of these solutions. Notably, the trained forward solution deviates from the analytic solution defined with the trained interface function in several examples, particularly for inclusions with complicated shapes (see Section 4.2). This discrepancy raises concerns about the credibility of neural networks.

4 Experiments

We present successful outcomes in designing neutral inclusions with CoCo-PINNs, together with experimental results verifying their “reliability” in terms of credibility, consistency, and stability. Credibility indicates whether the trained forward solution closely approximates the analytic solution, which we assess by comparing the trained forward solution with the analytic solution derived in Choi & Lim (2024). Consistency concerns whether the interface functions obtained by classical PINNs and by CoCo-PINNs, respectively, converge to the same result when the experiment is repeated under identical settings. Even if the neural networks succeed in fitting the forward solution and identifying the interface parameter in a specific experiment, this success may occur only occasionally. Consistency therefore determines whether training outcomes are steady or merely the result of chance, and it serves as an indicator of the steadiness of the training model. Lastly, stability refers to the sensitivity of a training model, that is, how its output changes in response to variations in the PDE setting.

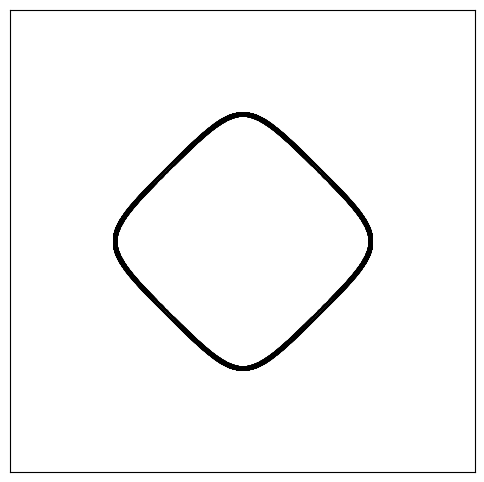

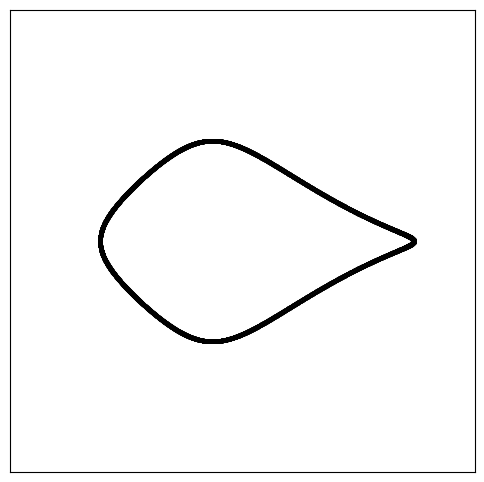

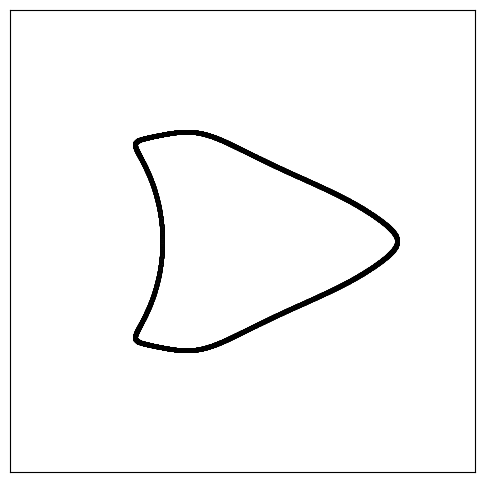

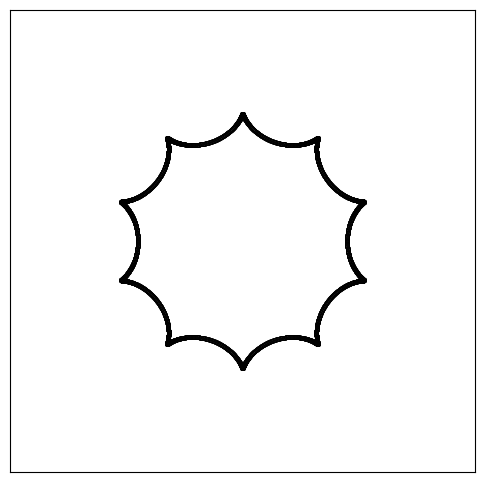

The inclusions with the shapes illustrated in Figure 4.1 are used throughout this paper. Each inclusion is defined by the conformal map given by eqs. D.1, D.2, D.3, and D.4 in Section D.3. We refer to these shapes as ‘square’, ‘fish’, ‘kite’, and ‘spike’.

We introduce the quantities to validate the credibility and the neutral inclusion effect as follows:

| (4.1) | ||||

| (4.2) | ||||

| (4.3) |
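Because the precise normalizations of (4.1) to (4.3) are not reproduced here, the following is only a generic sketch of the two kinds of error on which the reasoning of Section 3.2 relies: a credibility error comparing the trained forward solution with the analytic solution at exterior points, and a neutrality error measuring how far the analytic solution deviates from $H$ there. The discrete relative $L^2$ form, the tolerance, and the function names are our assumptions.

```python
import numpy as np

def relative_l2(approx, reference):
    """Discrete relative L2 discrepancy ||approx - reference|| / ||reference||."""
    approx, reference = np.asarray(approx), np.asarray(reference)
    return np.linalg.norm(approx - reference) / np.linalg.norm(reference)

def diagnose(u_trained_ex, u_analytic_ex, h_ex, tol=1e-2):
    """Interpret the two errors following the logic of Section 3.2 (tol is hypothetical)."""
    cred = relative_l2(u_trained_ex, u_analytic_ex)   # trained vs analytic solution
    neutral = relative_l2(u_analytic_ex, h_ex)        # perturbation of H by the inclusion
    if cred < tol and neutral < tol:
        verdict = "neutral inclusion found and forward solution is credible"
    elif cred >= tol:
        verdict = "inconclusive: forward or inverse problem may have failed"
    else:
        verdict = "credible forward solution, but inclusion is not neutral"
    return cred, neutral, verdict
```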

4.1 Neutral inclusion

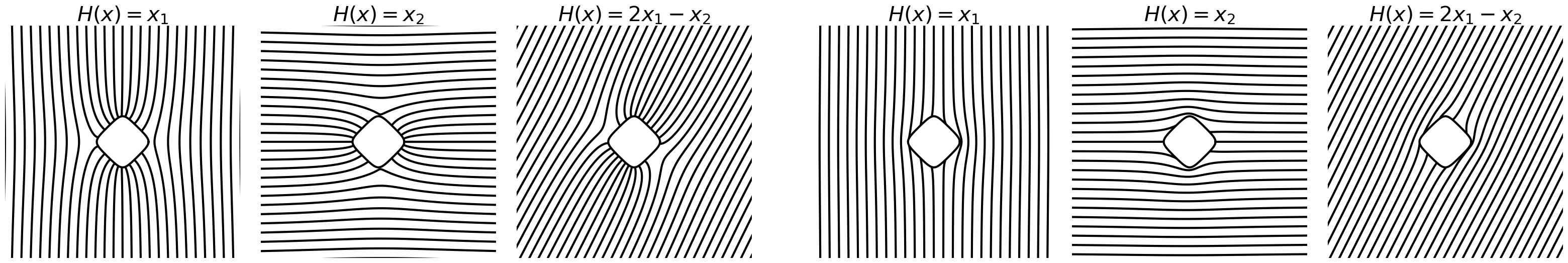

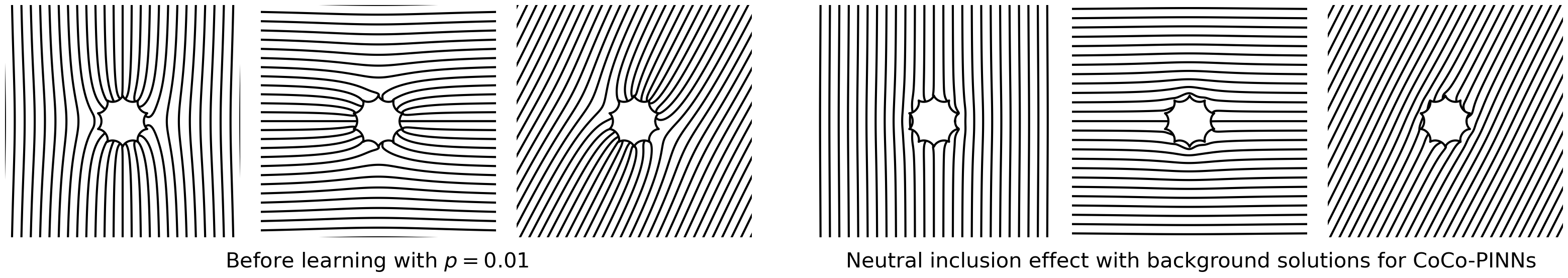

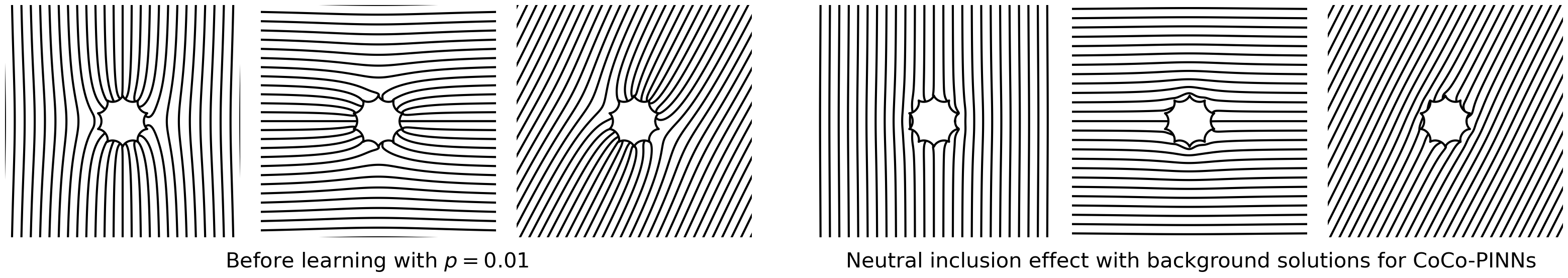

We present experimental results demonstrating the successful achievement of the neutral inclusion effect using CoCo-PINNs. For training, we use only a single background solution, , and illustrate the neutral inclusion effects for three background solutions and ; see Theorem 3.2 for a theoretical explanation.

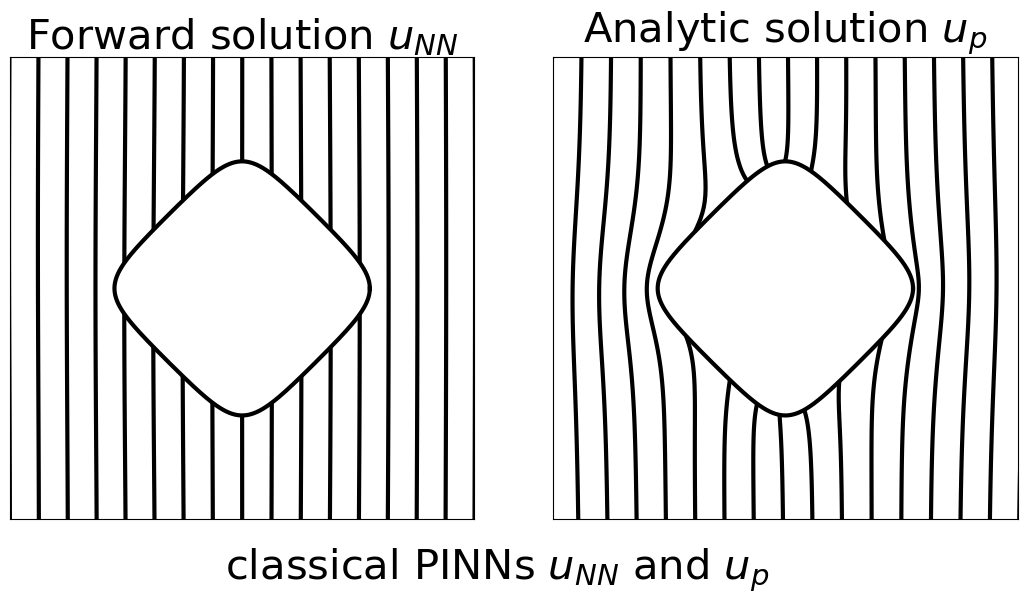

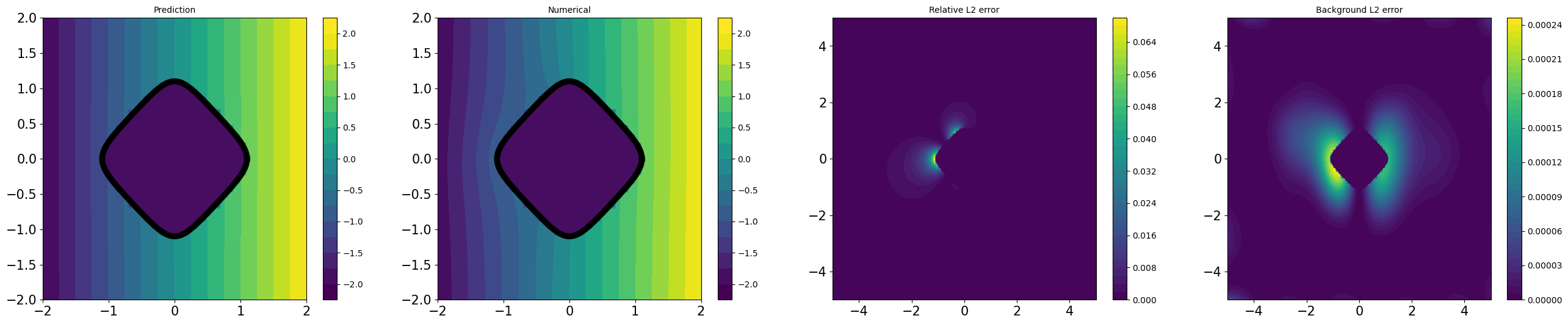

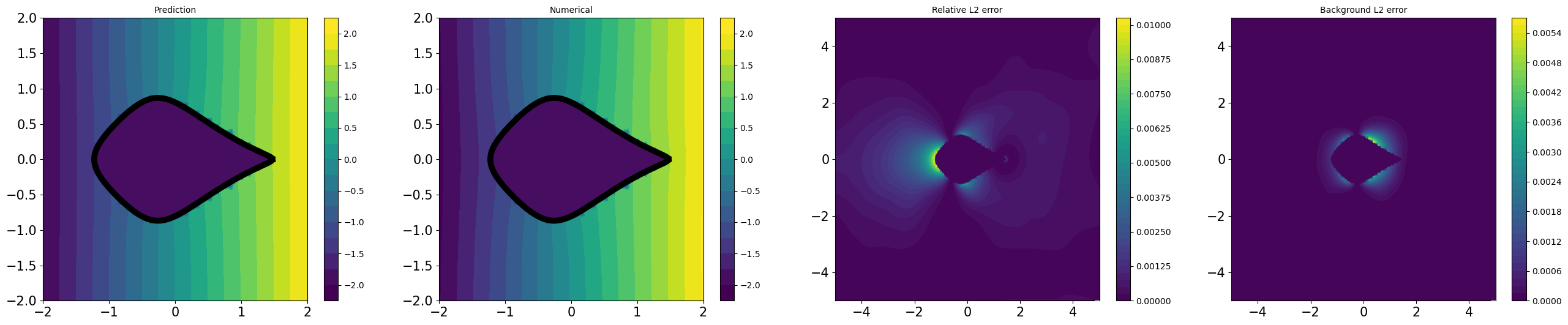

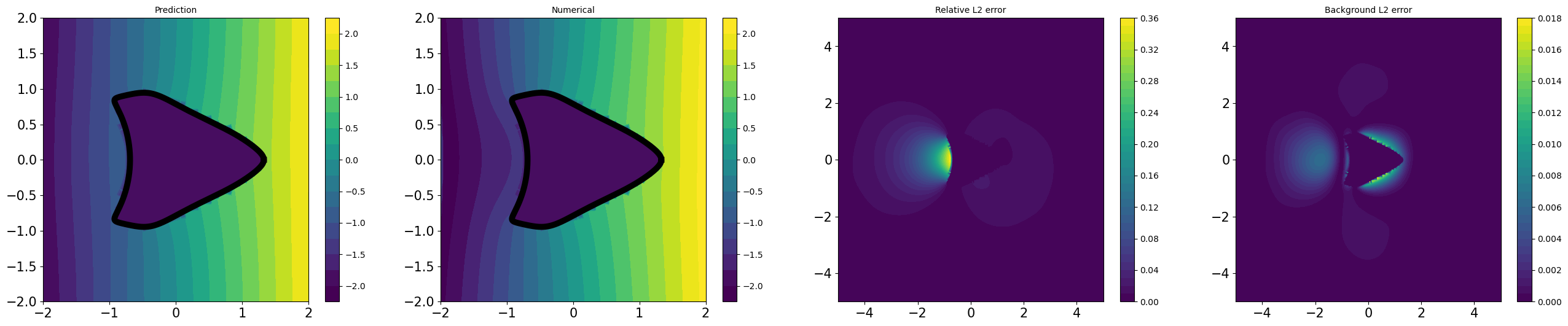

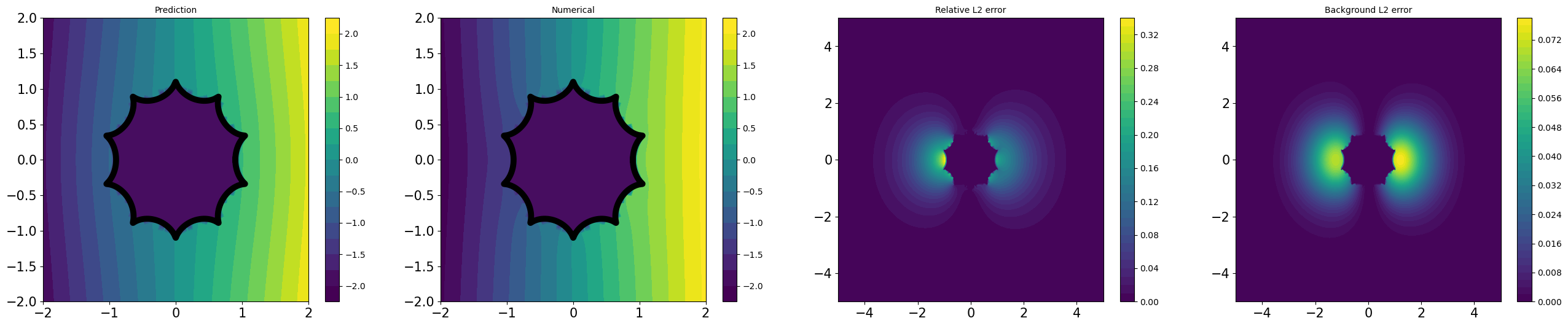

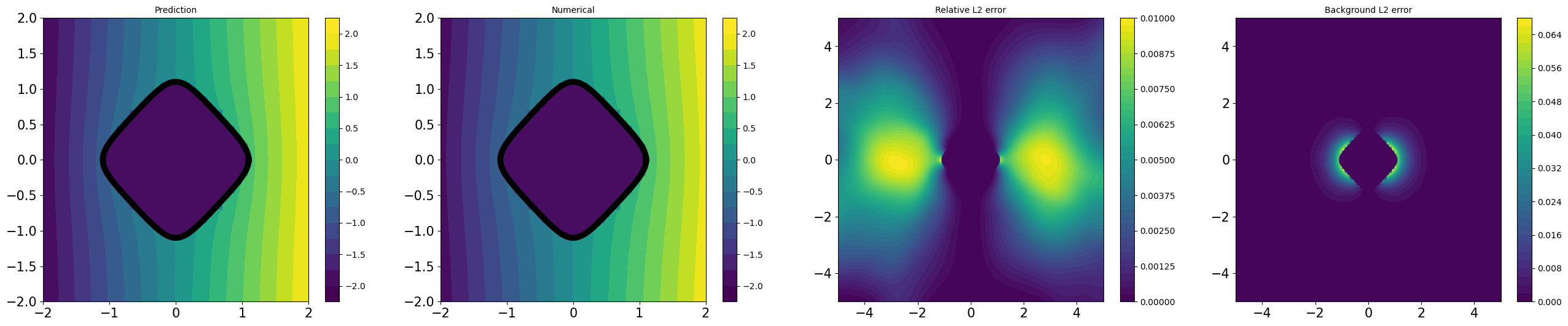

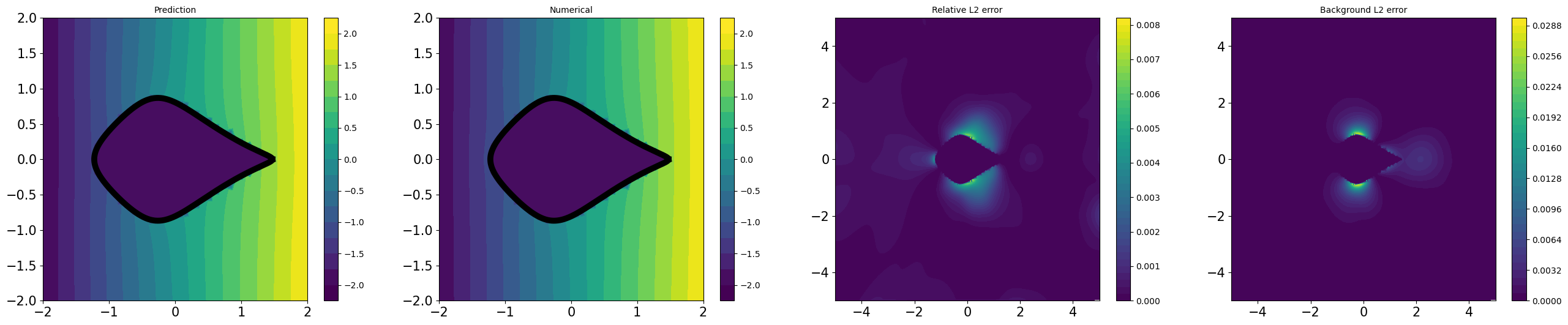

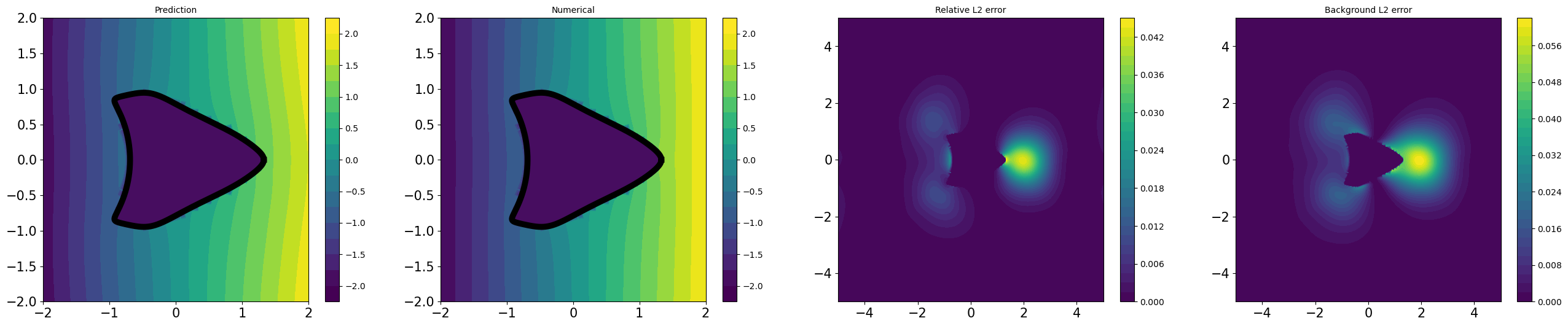

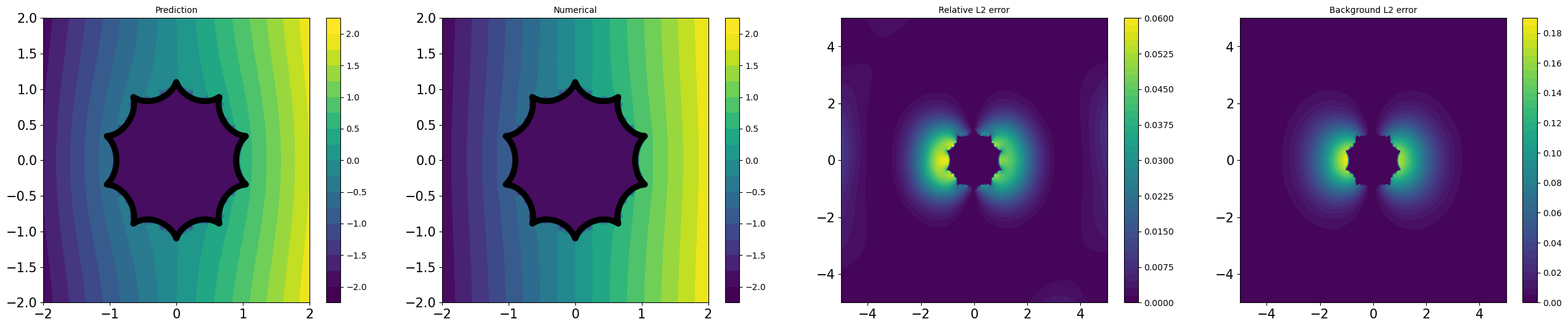

Inclusions generally yield perturbations in the applied background fields. However, the domain , with the interface function trained from CoCo-PINNs, achieves the neutral inclusion effects across all three test background fields, as shown by the level curves of the analytic solutions in Figure 4.2. All results are presented in Figure D.2 of Section D.5.

4.2 Credibility of classical PINNs and CoCo-PINNs

| Method | Shape | Credibility | |
|---|---|---|---|
| Classical PINNs | square | 7.241e-04 | 2.617e-01 |
| | fish | 4.704e-04 | 1.005e-01 |
| | kite | 8.790e-03 | 5.999e-01 |
| | spike | 1.371e-02 | 5.798e-01 |
| CoCo-PINNs | square | 3.197e-03 | 9.958e-02 |
| | fish | 2.505e-04 | 8.960e-02 |
| | kite | 1.947e-03 | 2.117e-01 |
| | spike | 3.969e-03 | 2.431e-01 |

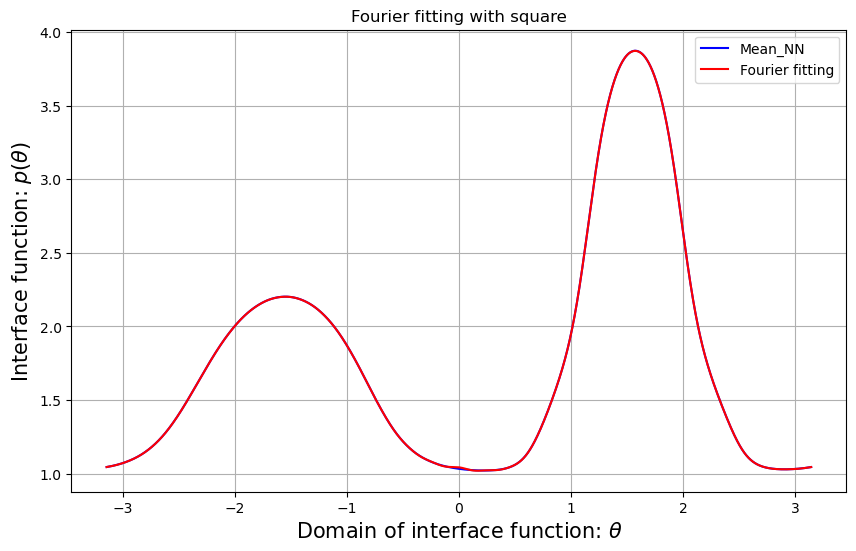

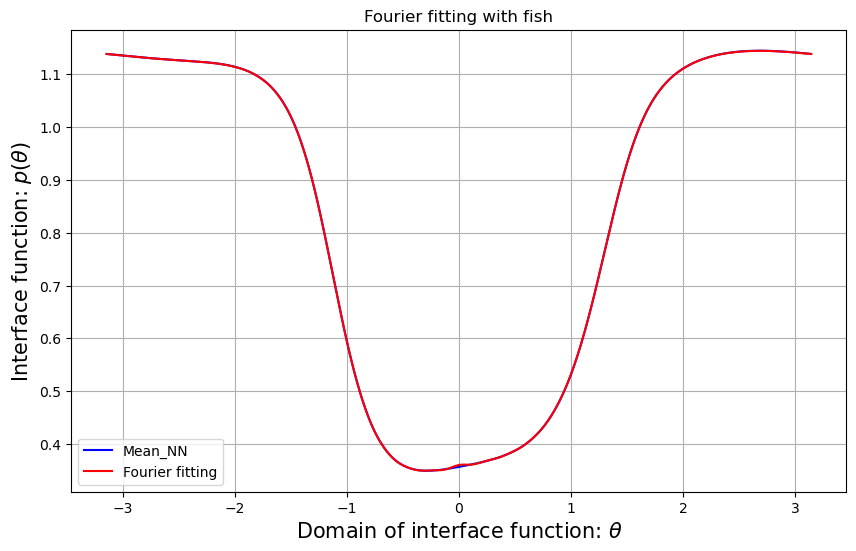

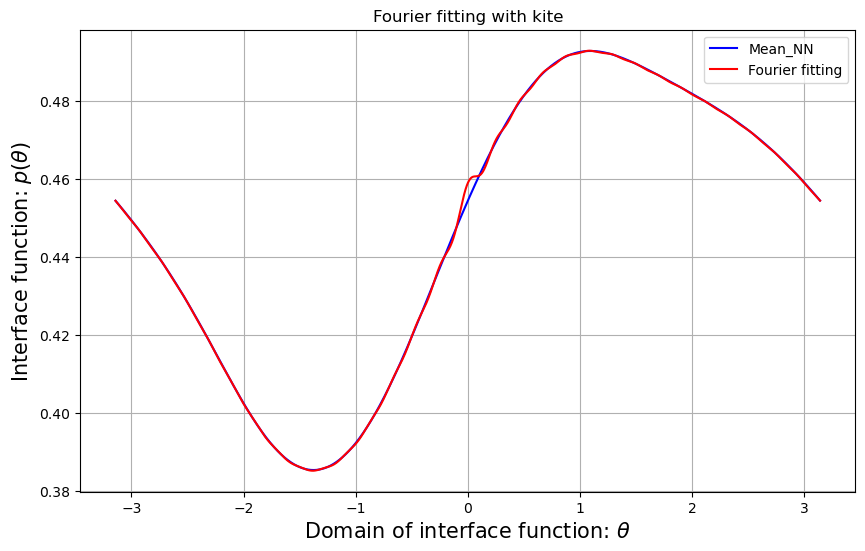

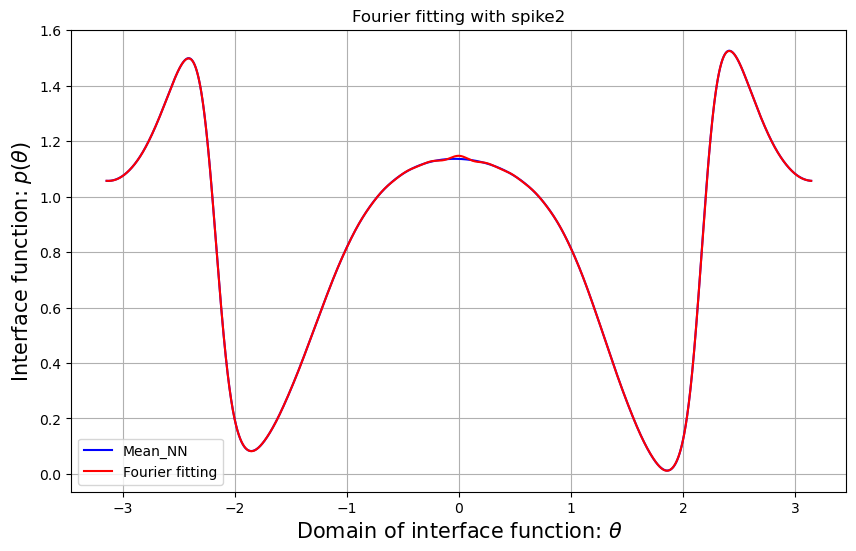

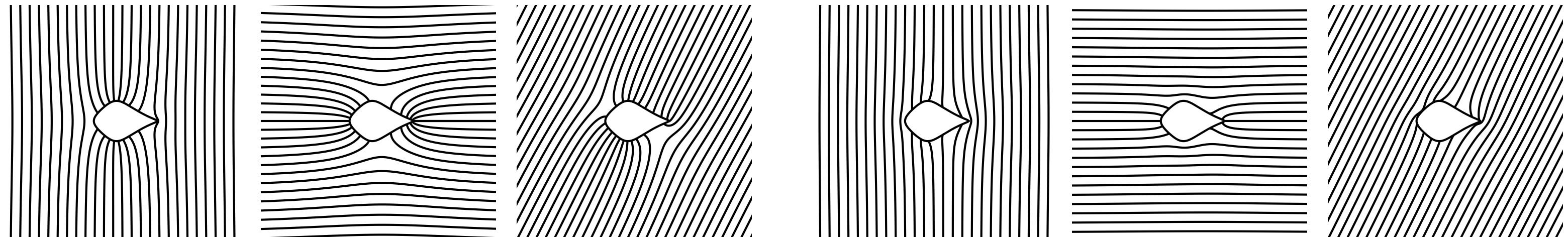

We investigate the credibility of the two methods by examining whether the exterior part of the trained forward solution matches the analytic solution. Recall that the analytic solution is computed from the interface-function coefficients obtained by training. Once training is complete, CoCo-PINNs provide the expansion coefficients of the interface function directly. For classical PINNs, where the interface function is represented by a neural network, we compute the Fourier coefficients of the trained network. We use the Fourier series expansion up to a sufficiently high order to ensure that the difference between the neural-network-designed interface function and its Fourier series is small (see Figure D.1 in Section D.4). We experiment with the four inclusion shapes in Figure 4.1. Detailed experimental settings are given in Appendix D.
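For classical PINNs, the Fourier coefficients of the trained interface network can be approximated from its values on a uniform $\theta$-grid, since $\beta_k \approx \frac{1}{M}\sum_{j=0}^{M-1}\beta(\theta_j)e^{-ik\theta_j}$ with $\theta_j = 2\pi j/M$, which is what `numpy.fft.rfft` computes up to the factor $1/M$. The sketch below uses a smooth stand-in function in place of a trained network (an assumption) and measures the maximum gap between the function and its truncated series, in the spirit of the check in Figure D.1.

```python
import numpy as np

def fourier_coefficients(beta_fn, N, M=1024):
    """Approximate beta_0..beta_N in beta(theta) = sum_k beta_k e^{ik theta} via the FFT."""
    theta = 2 * np.pi * np.arange(M) / M              # uniform grid, endpoint excluded
    samples = np.asarray(beta_fn(theta), dtype=float)
    c = np.fft.rfft(samples) / M                       # c[k] ~ beta_k for 0 <= k < M/2
    return c[: N + 1]

def truncation_error(beta_fn, N, M=1024):
    """Max deviation on the grid between beta and its truncated series (2.6)."""
    theta = 2 * np.pi * np.arange(M) / M
    c = fourier_coefficients(beta_fn, N, M)
    k = np.arange(1, N + 1)
    series = c[0].real + 2 * np.real(np.exp(1j * np.outer(theta, k)) @ c[1:])
    return np.max(np.abs(series - beta_fn(theta)))

# Hypothetical stand-in for a trained interface network: smooth and positive.
beta_nn = lambda t: 1.0 + 0.3 * np.cos(t) + 0.1 * np.sin(3 * t) + 0.05 * np.exp(np.cos(2 * t))
```

For smooth interface functions the coefficients decay quickly, so a moderate truncation order already makes the gap negligible.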

Table 1 shows that CoCo-PINNs outperform classical PINNs for the ‘fish’, ‘kite’, and ‘spike’ shapes. Although the credibility error of classical PINNs appears smaller than that of CoCo-PINNs for the ‘square’ shape in Table 1, the trained forward solution of classical PINNs deviates substantially from the analytic solution derived from its inverse parameter, as shown in Figure 4.3. This indicates that, despite minimizing the loss function well, the classical PINN approach fails to function effectively as a forward solver.

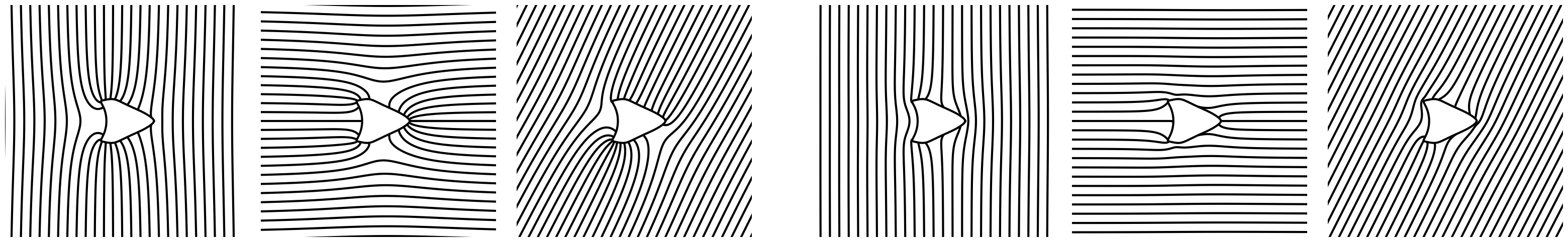

4.3 Consistency of classical PINNs and CoCo-PINNs

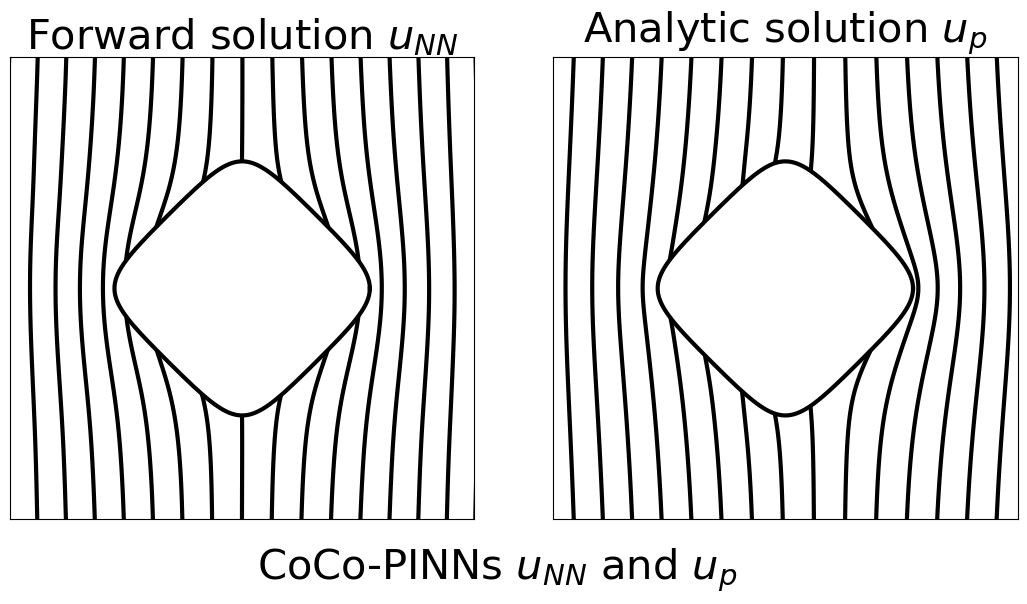

In this subsection, we examine whether repeated experiments consistently yield similar results. Figure 4.4 shows the interface functions obtained by training classical PINNs and CoCo-PINNs independently multiple times. We repeat the test times under the same conditions and plot the interface function pointwise along the boundary of the unit disk. The blue dashed and red bold lines represent the mean interface functions produced by classical PINNs and CoCo-PINNs, respectively, while the blue and red shaded regions indicate the corresponding pointwise standard deviations. Each column corresponds to an experiment with the ‘square’, ‘fish’, ‘kite’, or ‘spike’ shape.
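The consistency statistics described above can be computed as in the sketch below, assuming the interface functions from $R$ repeated runs have been evaluated on a common $\theta$-grid and stored as an $R \times M$ array (names and shapes are illustrative). The pointwise mean and standard deviation correspond to the curves and shaded bands in Figure 4.4, and averaging the pointwise standard deviation over the grid yields a single summary number like those in the ‘Mean of S.D.’ column of Table 2. We use the population standard deviation (`ddof=0`); the convention behind the reported values is not stated here.

```python
import numpy as np

def consistency_stats(runs):
    """runs: array of shape (R, M), the interface function of each run on a shared theta grid."""
    runs = np.asarray(runs, dtype=float)
    mean = runs.mean(axis=0)          # pointwise mean curve over runs
    std = runs.std(axis=0)            # pointwise standard deviation over runs (ddof=0)
    return mean, std, std.mean()      # last value: mean of the pointwise S.D. over the grid
```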

As shown in Figure 4.4, the interface functions trained by classical PINNs are inconsistent, whereas CoCo-PINNs produce consistent results. The mean of the pointwise standard deviations is given in the ‘Interface function’ column of Table 2, and the remaining columns present the means and standard deviations of the three quantities in eqs. 4.1, 4.2, and 4.3, which measure the credibility and the neutral inclusion effect. These results clearly demonstrate that CoCo-PINNs exhibit superior credibility compared to classical PINNs. Furthermore, CoCo-PINNs outperform classical PINNs in achieving the neutral inclusion effect, particularly for complex shapes, as verified by the quantity . In some cases, the trained forward solution and the inverse solution produced by classical PINNs show a significant discrepancy, with the trained forward solution differing substantially from the analytic solution derived from the trained interface function (see Figure D.3 in Section D.6).

| Method | Shape | Interface function: mean of S.D. | Eq. (4.1): mean | Eq. (4.1): S.D. | Eq. (4.2): mean | Eq. (4.2): S.D. | Eq. (4.3): mean | Eq. (4.3): S.D. |
|---|---|---|---|---|---|---|---|---|
| Classical PINNs | square | 2.614e-01 | 1.071e-03 | 1.348e-04 | 3.185e-01 | 2.601e-02 | 1.102e-03 | 1.043e-04 |
| | fish | 4.011e-01 | 1.685e-03 | 1.375e-03 | 1.223e-01 | 1.606e-02 | 2.220e-03 | 1.895e-03 |
| | kite | 5.358e-01 | 1.779e-02 | 2.412e-02 | 1.173e+00 | 1.082e+00 | 1.676e-02 | 2.454e-02 |
| | spike | 2.554e-01 | 1.094e-02 | 2.511e-03 | 5.188e-01 | 7.791e-02 | 1.636e-03 | 1.048e-03 |
| CoCo-PINNs | square | 1.357e-01 | 3.190e-03 | 1.515e-04 | 1.014e-01 | 2.152e-03 | 5.045e-03 | 2.443e-04 |
| | fish | 3.264e-02 | 2.482e-04 | 1.554e-05 | 9.028e-02 | 5.617e-03 | 4.065e-04 | 6.261e-06 |
| | kite | 2.258e-02 | 1.314e-03 | 4.500e-04 | 1.800e-01 | 2.625e-02 | 4.267e-04 | 1.990e-05 |
| | spike | 1.432e-01 | 3.512e-03 | 1.505e-04 | 2.336e-01 | 3.575e-03 | 1.367e-03 | 3.073e-04 |

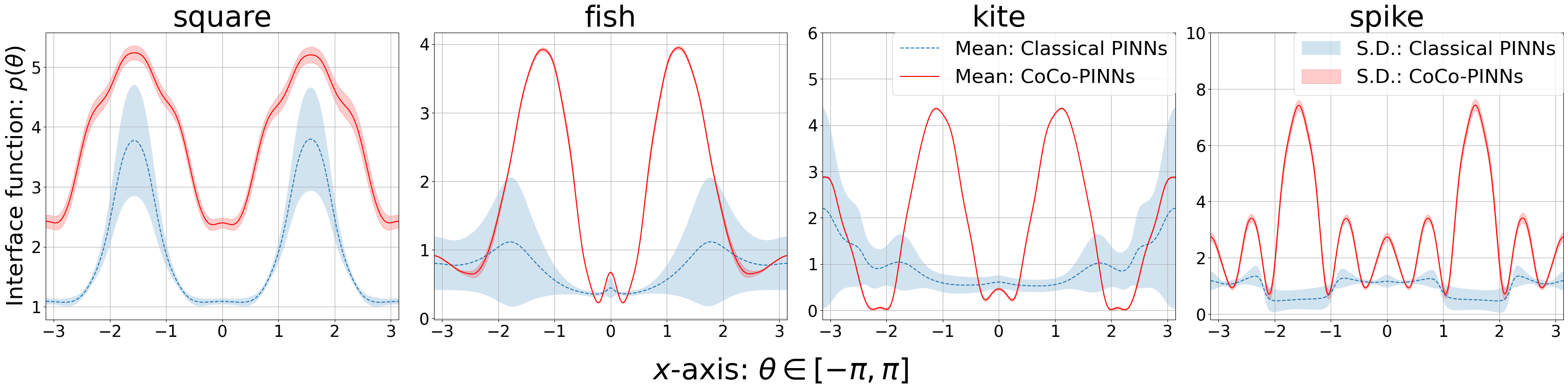

4.4 Stability of classical PINNs and CoCo-PINNs

In this subsection, we assess the stability of the interface function with respect to changes in the PDE setting. Since both classical PINNs and CoCo-PINNs are trained for a fixed domain and a fixed background field, we focus on stability with respect to different conductivities. In Figure 4.5, we present experimental results obtained for and , where the inclusion shapes are ‘square’, ‘fish’, ‘kite’, and ‘spike’. Recall that the ill-posed nature of inverse problems can lead to significant instability, causing the inverse solution to deviate widely in response to environmental changes or repeated experiments. Classical PINNs for neutral inclusions with imperfect conditions are such an unstable case, as demonstrated in Figure 4.5. In contrast, CoCo-PINNs are stable across repeated experiments, and we confirmed that they remain stable under slight changes of the conductivities. Table 3 provides the mean of standard deviations for all experiments used in Figure 4.5. As shown in Table 3, CoCo-PINNs are considerably more consistent and stable than classical PINNs.

| | Shape | Conductivities for interior | | | | |
|---|---|---|---|---|---|---|
| Classical PINNs | square | 3.060e-01 | 2.784e-01 | 2.614e-01 | 2.840e-01 | 1.289e-01 |
| | fish | 4.421e-01 | 4.416e-01 | 4.056e-01 | 4.218e-01 | 3.734e-01 |
| | kite | 6.282e-01 | 6.062e-01 | 4.703e-01 | 4.722e-01 | 4.597e-01 |
| | spike | 3.505e-01 | 2.855e-01 | 2.469e-01 | 2.588e-01 | 2.420e-01 |
| CoCo-PINNs | square | 2.082e-01 | 1.565e-01 | 1.357e-01 | 1.775e-01 | 1.457e-01 |
| | fish | 2.841e-02 | 3.077e-02 | 2.760e-02 | 2.611e-02 | 3.105e-02 |
| | kite | 1.874e-02 | 2.346e-02 | 2.095e-02 | 2.325e-02 | 2.420e-02 |
| | spike | 1.142e-01 | 1.709e-01 | 1.313e-01 | 1.468e-01 | 1.513e-01 |
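The stability metric in Table 3 is a "mean of standard deviations" whose formula is not restated in this section. The sketch below shows one plausible reading, under stated assumptions: each repeated run yields the interface function sampled at the same boundary points, the standard deviation is taken pointwise across runs (population convention), and these values are averaged over the sample points. The function name `mean_of_sd` and the example data are hypothetical.

```python
import math
import statistics
from typing import Sequence


def mean_of_sd(runs: Sequence[Sequence[float]]) -> float:
    """Average over sample points of the across-run standard deviation.

    runs[k][j] is the interface function learned in run k, evaluated at the
    j-th boundary sample point (the same points are assumed for every run).
    """
    n_points = len(runs[0])
    if any(len(r) != n_points for r in runs):
        raise ValueError("all runs must be sampled at the same points")
    # Population standard deviation across runs, one value per sample point.
    pointwise_sd = [statistics.pstdev([r[j] for r in runs]) for j in range(n_points)]
    return statistics.fmean(pointwise_sd)


if __name__ == "__main__":
    # Three hypothetical runs whose learned functions differ only in amplitude.
    thetas = [2 * math.pi * j / 128 for j in range(128)]
    runs = [[5.0 + s * math.cos(t) for t in thetas] for s in (0.9, 1.0, 1.1)]
    print(f"mean of S.D. = {mean_of_sd(runs):.4f}")
```

Using the sample standard deviation instead would only mean replacing `statistics.pstdev` with `statistics.stdev`; which convention the reported tables use is an open assumption here.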

5 Conclusion

We focus on the inverse problem of identifying an imperfect interface function that makes a given simply connected inclusion neutral. We introduce a novel approach, Conformal mapping Coordinates Physics-Informed Neural Networks (CoCo-PINNs), based on complex analysis and PDEs. CoCo-PINNs solve the forward and inverse problems simultaneously and much more effectively than the classical PINNs approach. While classical PINNs may occasionally find an interface function with a strong neutral inclusion effect, the reliability of this performance remains uncertain. In contrast, CoCo-PINNs exhibit high credibility, consistency, and stability, with the additional advantage of being explainable through analytical results. Potential applications of this method include analyzing and manipulating the interaction between embedded inhomogeneities and the surrounding medium, such as finding inclusions with uniform fields in their interiors. Several questions remain open, including the generalization to multiple inclusions and to three-dimensional problems, as well as proving the existence of an interface function that achieves neutrality.

References

- Akhound-Sadegh et al. (2023) Tara Akhound-Sadegh, Laurence Perreault-Levasseur, Johannes Brandstetter, Max Welling, and Siamak Ravanbakhsh. Lie point symmetry and physics-informed networks. In A. Oh, T. Naumann, A. Globerson, K. Saenko, M. Hardt, and S. Levine (eds.), Advances in Neural Information Processing Systems, volume 36, pp. 42468–42481. Curran Associates, Inc., 2023. URL https://proceedings.neurips.cc/paper_files/paper/2023/file/8493c860bec41705f7743d5764301b94-Paper-Conference.pdf.

- Alù & Engheta (2005) A. Alù and N. Engheta. Achieving transparency with plasmonic and metamaterial coatings. Phys. Rev. - Stat. Nonlinear Soft Matter Phys., 72(1), 2005. doi: 10.1103/PhysRevE.72.016623. URL https://link.aps.org/doi/10.1103/PhysRevE.72.016623.

- Ammari et al. (2013) H. Ammari, H. Kang, H. Lee, M. Lim, and S. Yu. Enhancement of near cloaking for the full Maxwell equations. SIAM J. Appl. Math., 73(6):2055–2076, 2013. doi: 10.1137/120903610.

- Ammari & Kang (2004) Habib Ammari and Hyeonbae Kang. Reconstruction of small inhomogeneities from boundary measurements, volume 1846 of Lecture Notes in Mathematics. Springer-Verlag, Berlin, 2004. ISBN 3-540-22483-1. doi: 10.1007/b98245.

- Benveniste & Miloh (1999) Y. Benveniste and T. Miloh. Neutral inhomogeneities in conduction phenomena. J. Mech. Phys. Solids, 47(9):1873–1892, 1999. ISSN 0022-5096. doi: 10.1016/S0022-5096(98)00127-6. URL https://www.sciencedirect.com/science/article/pii/S0022509698001276.

- Chen et al. (2020) Yuyao Chen, Lu Lu, George Em Karniadakis, and Luca Dal Negro. Physics-informed neural networks for inverse problems in nano-optics and metamaterials. Opt. Express, 28(8):11618–11633, Apr 2020. doi: 10.1364/OE.384875.

- Cherkaev et al. (2022) Elena Cherkaev, Minwoo Kim, and Mikyoung Lim. Geometric series expansion of the Neumann-Poincaré operator: application to composite materials. European J. Appl. Math., 33(3):560–585, 2022. ISSN 0956-7925,1469-4425. doi: 10.1017/s0956792521000127.

- Cho et al. (2023) Junwoo Cho, Seungtae Nam, Hyunmo Yang, Seok-Bae Yun, Youngjoon Hong, and Eunbyung Park. Separable physics-informed neural networks. In Thirty-seventh Conference on Neural Information Processing Systems, 2023. URL https://openreview.net/forum?id=dEySGIcDnI.

- Choi & Lim (2024) Doosung Choi and Mikyoung Lim. Construction of inclusions with vanishing generalized polarization tensors by imperfect interfaces. Stud. Appl. Math., 152(2):673–695, 2024. ISSN 0022-2526,1467-9590.

- Choi et al. (2023) Doosung Choi, Junbeom Kim, and Mikyoung Lim. Geometric multipole expansion and its application to semi-neutral inclusions of general shape. Z. Angew. Math. Phys., 74(1):Paper No. 39, 26, 2023. ISSN 0044-2275,1420-9039. doi: 10.1007/s00033-022-01929-z.

- Cuomo et al. (2022) Salvatore Cuomo, Vincenzo Schiano Di Cola, Fabio Giampaolo, Gianluigi Rozza, Maziar Raissi, and Francesco Piccialli. Scientific machine learning through physics–informed neural networks: Where we are and what’s next. Journal of Scientific Computing, 92(3):88, 2022.

- Grabovsky & Kohn (1995) Yury Grabovsky and Robert V. Kohn. Microstructures minimizing the energy of a two phase elastic composite in two space dimensions. I. The confocal ellipse construction. J. Mech. Phys. Solids, 43(6):933–947, 1995. ISSN 0022-5096,1873-4782. doi: 10.1016/0022-5096(95)00016-C. URL https://doi.org/10.1016/0022-5096(95)00016-C.

- Guo et al. (2022) Ling Guo, Hao Wu, Xiaochen Yu, and Tao Zhou. Monte Carlo fPINNs: Deep learning method for forward and inverse problems involving high dimensional fractional partial differential equations. Computer Methods in Applied Mechanics and Engineering, 400:115523, 2022. ISSN 0045-7825. doi: 10.1016/j.cma.2022.115523. URL https://www.sciencedirect.com/science/article/pii/S0045782522005254.

- Haghighat et al. (2021) Ehsan Haghighat, Maziar Raissi, Adrian Moure, Hector Gomez, and Ruben Juanes. A physics-informed deep learning framework for inversion and surrogate modeling in solid mechanics. Computer Methods in Applied Mechanics and Engineering, 379:113741, 2021. ISSN 0045-7825. doi: 10.1016/j.cma.2021.113741. URL https://www.sciencedirect.com/science/article/pii/S0045782521000773.

- Hao et al. (2023) Zhongkai Hao, Chengyang Ying, Hang Su, Jun Zhu, Jian Song, and Ze Cheng. Bi-level physics-informed neural networks for PDE constrained optimization using Broyden’s hypergradients. In The Eleventh International Conference on Learning Representations, 2023. URL https://openreview.net/forum?id=kkpL4zUXtiw.

- Hashin & Shtrikman (1962) Z. Hashin and S. Shtrikman. A Variational Approach to the Theory of the Effective Magnetic Permeability of Multiphase Materials. Journal of Applied Physics, 33(10):3125–3131, 10 1962. ISSN 0021-8979. doi: 10.1063/1.1728579. URL https://doi.org/10.1063/1.1728579.

- Hashin (1962) Zvi Hashin. The elastic moduli of heterogeneous materials. Trans. ASME Ser. E. J. Appl. Mech., 29:143–150, 1962. ISSN 0021-8936,1528-9036.

- Jagtap et al. (2022) Ameya D. Jagtap, Zhiping Mao, Nikolaus Adams, and George Em Karniadakis. Physics-informed neural networks for inverse problems in supersonic flows. Journal of Computational Physics, 466:111402, 2022. ISSN 0021-9991. doi: 10.1016/j.jcp.2022.111402. URL https://www.sciencedirect.com/science/article/pii/S0021999122004648.

- Jarczyk & Mityushev (2012) Paweł Jarczyk and Vladimir Mityushev. Neutral coated inclusions of finite conductivity. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 468(2140):954–970, 2012. doi: 10.1098/rspa.2011.0230. URL https://royalsocietypublishing.org/doi/abs/10.1098/rspa.2011.0230.

- Jung & Lim (2021) Younghoon Jung and Mikyoung Lim. Series expansions of the layer potential operators using the Faber polynomials and their applications to the transmission problem. SIAM J. Math. Anal., 53(2):1630–1669, 2021. ISSN 0036-1410,1095-7154. doi: 10.1137/20M1348698.

- Kang & Lee (2014) H. Kang and H. Lee. Coated inclusions of finite conductivity neutral to multiple fields in two-dimensional conductivity or anti-plane elasticity. Eur. J. Appl. Math., 25(3):329–338, 2014. doi: 10.1017/S0956792514000060.

- Kang et al. (2016) H. Kang, H. Lee, and S. Sakaguchi. An over-determined boundary value problem arising from neutrally coated inclusions in three dimensions. Ann. Sc. Norm. Super. Pisa - Cl. Sci., 16(4):1193–1208, 2016.

- Kang & Li (2019) Hyeonbae Kang and Xiaofei Li. Construction of weakly neutral inclusions of general shape by imperfect interfaces. SIAM J. Appl. Math., 79(1):396–414, 2019. ISSN 0036-1399,1095-712X. doi: 10.1137/18M1185818. URL https://doi.org/10.1137/18M1185818.

- Kang et al. (2022) Hyeonbae Kang, Xiaofei Li, and Shigeru Sakaguchi. Existence of weakly neutral coated inclusions of general shape in two dimensions. Appl. Anal., 101(4):1330–1353, 2022. ISSN 0003-6811,1563-504X. doi: 10.1080/00036811.2020.1781821. URL https://doi.org/10.1080/00036811.2020.1781821.

- Karniadakis et al. (2021) George Em Karniadakis, Ioannis G Kevrekidis, Lu Lu, Paris Perdikaris, Sifan Wang, and Liu Yang. Physics-informed machine learning. Nature Reviews Physics, 3(6):422–440, 2021.

- Kerker (1975) M. Kerker. Invisible bodies. J. Opt. Soc. Am., 65(4):376–379, 1975. doi: 10.1364/JOSA.65.000376.

- Landy & Smith (2013) N. Landy and D. R. Smith. A full-parameter unidirectional metamaterial cloak for microwaves. Nat. Mater., 12(1):25–28, 2013. doi: 10.1038/nmat3476.

- Lau et al. (2024) Gregory Kang Ruey Lau, Apivich Hemachandra, See-Kiong Ng, and Bryan Kian Hsiang Low. PINNACLE: PINN adaptive collocation and experimental points selection. In The Twelfth International Conference on Learning Representations, 2024. URL https://openreview.net/forum?id=GzNaCp6Vcg.

- Lim & Milton (2020) Mikyoung Lim and Graeme W. Milton. Inclusions of general shapes having constant field inside the core and nonelliptical neutral coated inclusions with anisotropic conductivity. SIAM J. Appl. Math., 80(3):1420–1440, 2020. ISSN 0036-1399,1095-712X. doi: 10.1137/19M1246225. URL https://doi.org/10.1137/19M1246225.

- Liu et al. (2017) H. Liu, Y. Wang, and S. Zhong. Nearly non-scattering electromagnetic wave set and its application. Z. Angew. Math. Phys., 68(2):35, 2017. ISSN 0044-2275. doi: 10.1007/s00033-017-0780-1.

- Milton (2002) G. W. Milton. The Theory of Composites, volume 6 of Cambridge Monographs on Applied and Computational Mathematics. Cambridge University Press, Cambridge, 2002. doi: 10.1017/CBO9780511613357.

- Milton & Serkov (2001) G. W. Milton and S. K. Serkov. Neutral coated inclusions in conductivity and anti-plane elasticity. Proc. R. Soc. A: Math. Phys. Eng. Sci., 457(2012):1973–1997, 2001. doi: 10.1098/rspa.2001.0796.

- Movchan & Serkov (1997) A. B. Movchan and S. K. Serkov. The Pólya-Szegö matrices in asymptotic models of dilute composites. European J. Appl. Math., 8(6):595–621, 1997. ISSN 0956-7925,1469-4425. doi: 10.1017/S095679259700315X.

- Pokkunuru et al. (2023) Akarsh Pokkunuru, Pedram Rooshenas, Thilo Strauss, Anuj Abhishek, and Taufiquar Khan. Improved training of physics-informed neural networks using energy-based priors: a study on electrical impedance tomography. In The Eleventh International Conference on Learning Representations, 2023. URL https://openreview.net/forum?id=zqkfJA6R1-r.

- Pommerenke (1992) C. Pommerenke. Boundary Behaviour of Conformal Maps, volume 299 of Grundlehren der Mathematischen Wissenschaften. Springer-Verlag Berlin Heidelberg, 1992. doi: 10.1007/978-3-662-02770-7.

- Raissi et al. (2019) M. Raissi, P. Perdikaris, and G.E. Karniadakis. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378:686–707, 2019. ISSN 0021-9991. doi: 10.1016/j.jcp.2018.10.045. URL https://www.sciencedirect.com/science/article/pii/S0021999118307125.

- Rathore et al. (2024) Pratik Rathore, Weimu Lei, Zachary Frangella, Lu Lu, and Madeleine Udell. Challenges in training PINNs: A loss landscape perspective. In Ruslan Salakhutdinov, Zico Kolter, Katherine Heller, Adrian Weller, Nuria Oliver, Jonathan Scarlett, and Felix Berkenkamp (eds.), Proceedings of the 41st International Conference on Machine Learning, volume 235 of Proceedings of Machine Learning Research, pp. 42159–42191. PMLR, 21–27 Jul 2024. URL https://proceedings.mlr.press/v235/rathore24a.html.

- Ru (1998) C.-Q. Ru. Interface design of neutral elastic inclusions. Int. J. Solids Struct., 35(7):559–572, 1998. ISSN 0020-7683. doi: 10.1016/S0020-7683(97)00072-3. URL https://www.sciencedirect.com/science/article/pii/S0020768397000723.

- Sihvola (1997) A. Sihvola. On the dielectric problem of isotrophic sphere in anisotropic medium. Electromagnetics, 17(1):69–74, 1997. doi: 10.1080/02726349708908516.

- Wala & Klöckner (2018) M. Wala and A. Klöckner. Conformal mapping via a density correspondence for the double-layer potential. SIAM J. Sci. Comput., 40(6):A3715–A3732, 2018. ISSN 1064-8275. doi: 10.1137/18M1174982.

- Wu et al. (2024) Haixu Wu, Huakun Luo, Yuezhou Ma, Jianmin Wang, and Mingsheng Long. RoPINN: Region optimized physics-informed neural networks. arXiv preprint arXiv:2405.14369, 2024.

- Xu et al. (2023) Chen Xu, Ba Trung Cao, Yong Yuan, and Günther Meschke. Transfer learning based physics-informed neural networks for solving inverse problems in engineering structures under different loading scenarios. Computer Methods in Applied Mechanics and Engineering, 405:115852, 2023. ISSN 0045-7825. doi: 10.1016/j.cma.2022.115852. URL https://www.sciencedirect.com/science/article/pii/S0045782522008088.

- Yuan et al. (2022) Lei Yuan, Yi-Qing Ni, Xiang-Yun Deng, and Shuo Hao. A-PINN: Auxiliary physics informed neural networks for forward and inverse problems of nonlinear integro-differential equations. Journal of Computational Physics, 462:111260, 2022. ISSN 0021-9991. doi: 10.1016/j.jcp.2022.111260. URL https://www.sciencedirect.com/science/article/pii/S0021999122003229.

- Yuste et al. (2018) Pedro Yuste, Juan M. Rius, Jordi Romeu, Sebastian Blanch, Alexander Heldring, and Eduard Ubeda. A microwave invisibility cloak: The design, simulation, and measurement of a simple and effective frequency-selective surface-based mantle cloak. IEEE Antennas and Propagation Magazine, 60(4):49–59, 2018. doi: 10.1109/MAP.2018.2839903.

- Zhou & Hu (2006) X. Zhou and G. Hu. Design for electromagnetic wave transparency with metamaterials. Phys. Rev. - Stat. Nonlinear Soft Matter Phys., 74(2), 2006. doi: 10.1103/PhysRevE.74.026607.

- Zhou & Hu (2007) X. Zhou and G. Hu. Acoustic wave transparency for a multilayered sphere with acoustic metamaterials. Phys. Rev. - Stat. Nonlinear Soft Matter Phys., 75(4), 2007. doi: 10.1103/PhysRevE.75.046606.

- Zhou et al. (2008) X. Zhou, G. Hu, and T. Lu. Elastic wave transparency of a solid sphere coated with metamaterials. Phys. Rev. B - Condens. Matter Mater. Phys., 77(2), 2008. doi: 10.1103/PhysRevB.77.024101.

Appendix A Notations

Table 4 provides the list of notations used throughout the paper.

| Notation | Meaning |
|---|---|
| | Background fields |
| | Interface function |
| | Inverse solution via classical PINNs |
| | Truncated representation of interface function and inverse solution via CoCo-PINNs |
| | Analytic solution with given interface function |
| | Trained forward solution |

Appendix B Geometric function theory

Geometric function theory is the area of mathematics that studies the geometric properties of analytic functions. One of its fundamental results is the Riemann mapping theorem. We briefly review this theorem and related results.

B.1 Riemann mapping, Faber polynomials, and Grunsky coefficients

A connected open set in the complex plane is called a domain. We say that a domain is simply connected if its complement in the extended complex plane $\mathbb{C}\cup\{\infty\}$ is connected.

Theorem B.1 (Riemann mapping theorem).

If $\Omega \subsetneq \mathbb{C}$ is a nonempty simply connected domain, then there exists a conformal map from the unit disk $\mathbb{D}=\{z\in\mathbb{C}: |z|<1\}$ onto $\Omega$.

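We assume that $\Omega$ is a nonempty simply connected bounded domain. Then, by the Riemann mapping theorem, there exist a unique $\gamma>0$ and a unique conformal mapping $\Psi$ from $\{w\in\mathbb{C}: |w|>\gamma\}$ onto $\mathbb{C}\setminus\overline{\Omega}$ such that $\Psi(\infty)=\infty$, $\Psi'(\infty)=1$, and

$$\Psi(w) = w + a_0 + \frac{a_1}{w} + \frac{a_2}{w^2} + \cdots, \qquad |w|>\gamma. \tag{B.1}$$

The quantity $\gamma$ in eq. B.1 is called the conformal radius of $\Omega$. One can obtain eq. B.1 by applying Theorem B.1 together with the power series expansion of an analytic function and its reflection with respect to a circle; see, for instance, Pommerenke (1992, Chapter 1.2) for the derivation.

We further assume that $\Omega$ has an analytic boundary, that is, $\Psi$ can be conformally extended to $\{|w|>r\}$ for some $r<\gamma$.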

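The exterior conformal mapping in eq. B.1 defines the Faber polynomials $\{F_m\}_{m\ge0}$ by the relation

$$\frac{\Psi'(w)}{\Psi(w)-z} = \sum_{m=0}^{\infty} \frac{F_m(z)}{w^{m+1}}, \qquad z\in\overline{\Omega},\ |w|>\gamma. \tag{B.2}$$

The Faber polynomial $F_m$ is a monic polynomial of degree $m$, and its coefficients are uniquely determined by the coefficients $a_0, a_1, \ldots$ of $\Psi$. In particular, one can determine $F_m$ by the following recursive relation:

$$F_0(z)=1, \qquad F_{m+1}(z) = (z-a_0)F_m(z) - \sum_{n=1}^{m} a_n F_{m-n}(z) - m\,a_m, \quad m\ge0. \tag{B.3}$$

For example, $F_1(z)=z-a_0$ and $F_2(z)=(z-a_0)^2-2a_1$. A core feature of the Faber polynomials is that $F_m(\Psi(w))$ has only a single positive-order term $w^m$. In other words, for $m\ge1$ we have

$$F_m(\Psi(w)) = w^m + \sum_{k=1}^{\infty} c_{mk}\, w^{-k}, \qquad |w|>\gamma,$$

where the $c_{mk}$ are known as the Grunsky coefficients.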
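The recursion and the single-positive-term property can be checked numerically. The sketch below assumes the normalization $\Psi(w) = w + a_0 + a_1 w^{-1} + \cdots$ on $|w|>\gamma$ and the recursion $F_0=1$, $F_{m+1}(z) = (z-a_0)F_m(z) - \sum_{n=1}^{m} a_n F_{m-n}(z) - m a_m$, which follows from the generating relation $\Psi'(w)/(\Psi(w)-z) = \sum_m F_m(z) w^{-m-1}$. Polynomials are stored as coefficient lists in increasing powers of $z$, and Laurent coefficients of $F_m(\Psi(w))$ are approximated by the trapezoidal rule on a circle $|w|=R>\gamma$. The ellipse-type map $\Psi(w)=w+0.3/w$ (with $\gamma=1$) and all function names are illustrative assumptions.

```python
import cmath
from typing import Callable, List, Sequence

Poly = List[complex]  # polynomial coefficients in increasing powers of z


def poly_add(p: Poly, q: Poly) -> Poly:
    n = max(len(p), len(q))
    return [(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0) for i in range(n)]


def poly_scale(p: Poly, c: complex) -> Poly:
    return [c * x for x in p]


def poly_eval(p: Poly, z: complex) -> complex:
    result = 0j
    for c in reversed(p):  # Horner's rule
        result = result * z + c
    return result


def faber_polynomials(a: Sequence[complex], m_max: int) -> List[Poly]:
    """F_0, ..., F_{m_max} for Psi(w) = w + a[0] + a[1]/w + a[2]/w**2 + ...

    Uses F_{m+1} = (z - a_0) F_m - sum_{n=1}^{m} a_n F_{m-n} - m a_m, F_0 = 1;
    coefficients a[n] with n >= len(a) are treated as zero.
    """
    coef = lambda n: a[n] if n < len(a) else 0j
    F: List[Poly] = [[1 + 0j]]
    for m in range(m_max):
        nxt = poly_add([0j] + F[m], poly_scale(F[m], -coef(0)))  # (z - a_0) F_m
        for n in range(1, m + 1):
            nxt = poly_add(nxt, poly_scale(F[m - n], -coef(n)))
        nxt = poly_add(nxt, [-m * coef(m)])
        F.append(nxt)
    return F


def laurent_coeff(f: Callable[[complex], complex], k: int, radius: float, n: int = 256) -> complex:
    """Coefficient of w**k in the Laurent series of f, by the trapezoidal rule on |w| = radius."""
    total = 0j
    for j in range(n):
        w = radius * cmath.exp(2j * cmath.pi * j / n)
        total += f(w) * w ** (-k)
    return total / n


if __name__ == "__main__":
    a = [0.0, 0.3]  # hypothetical map Psi(w) = w + 0.3/w, gamma = 1
    psi = lambda w: w + a[0] + a[1] / w
    F = faber_polynomials(a, 3)
    for m in (1, 2, 3):
        g = lambda w, m=m: poly_eval(F[m], psi(w))
        print(m, laurent_coeff(g, m, 2.0), laurent_coeff(g, -m, 2.0))
```

For this particular map one has $F_m(\Psi(w)) = w^m + 0.3^m w^{-m}$, so the only nonzero Grunsky coefficients are $c_{mm}=0.3^m$; the printed coefficients should reproduce $1$ and $0.3^m$ up to round-off.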

Remark 3.

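The Faber polynomials $\{F_m\}_{m\ge0}$ form a basis for complex analytic functions in $\overline{\Omega}$. This means that a function $f$ analytic in $\overline{\Omega}$ can be expanded as

$$f(z) = \sum_{m=0}^{\infty} \alpha_m F_m(z)$$

for some complex coefficients $\alpha_m$.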

Appendix C Proof of main theorem

Since the background field is an entire harmonic function, it is the real part of an entire analytic function. As the Faber polynomials form a basis for complex analytic functions (see Remark 3), the background field satisfies

for some complex coefficients . Choi & Lim (2024) showed that, for some , the solution to eq. 2.1 admits the expression

where the coefficients and depend on , and . Recall that are the Grunsky coefficients.

We define semi-infinite matrices:

| (C.1) | |||

| (C.2) |

where is the Kronecker delta function, and .

Define and denote the scale factor as , that is,

For , we have for . We consider the Fourier series of in :

where are the Fourier coefficients. Since is a real-valued function, one can show that for each and, hence,

| (C.3) |
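Because the interface function is real-valued, its Fourier coefficients satisfy the conjugate symmetry $\beta_{-n}=\overline{\beta_n}$, so a truncation of order $N$ is fixed by one real number $\beta_0$ and $N$ complex numbers $\beta_1,\dots,\beta_N$; this is consistent with the "one real number and complex numbers" inverse parameters described in Section D.2. The sketch below evaluates such a truncated series and recovers its coefficients from equispaced samples. The symbols $\beta_n$, $\theta$, $N$ and the helper names are illustrative assumptions, not the paper's notation.

```python
import cmath
import math
from typing import List, Sequence


def interface_function(beta0: float, beta: Sequence[complex], theta: float) -> float:
    """beta(theta) = sum_{|n|<=N} beta_n e^{i n theta} with beta_{-n} = conj(beta_n).

    The negative-index terms are folded into 2*Re(...), so the result is real.
    """
    s = sum(b * cmath.exp(1j * n * theta) for n, b in enumerate(beta, start=1))
    return beta0 + 2.0 * s.real


def fourier_coefficients(samples: Sequence[float], n_max: int) -> List[complex]:
    """Trapezoidal-rule approximation of beta_n for n = -n_max, ..., n_max.

    samples[j] = beta(2*pi*j/M); the rule is exact for trigonometric
    polynomials of degree below M/2.
    """
    M = len(samples)
    return [
        sum(f * cmath.exp(-1j * n * 2 * math.pi * j / M) for j, f in enumerate(samples)) / M
        for n in range(-n_max, n_max + 1)
    ]
```

With, say, $\beta_0=5$ and $(\beta_1,\beta_2)=(0.2-0.1i,\,0.05i)$ sampled at 32 points, the recovered list contains $\beta_0$ in the middle, $\beta_n$ to its right, and $\overline{\beta_n}$ to its left, illustrating why only the nonnegative-index coefficients need to be trained.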

We denote

If , we can use .

Recall that is given by the background field, and is determined by . Choi & Lim (2024) showed that

| (C.4) |

and, using this relation, derived the expression of in terms of , , and as follows:

| (C.5) |

where

We set

Proof of Theorem 3.2. The neutral inclusion means that where for all . This implies that . In other words, .

If the background field is , with and so that the matrix is

| (C.6) |

Thus, has nonzero entries only in its first row. Consequently, is neutral to the linear field if and only if By the assumption of the theorem, the first rows of and are linearly independent. Hence, This implies that is neutral to linear fields of all directions.
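Why a linear background field involves only the first Faber coefficient can be seen from a short computation. It assumes the normalization $\Psi(w)=w+a_0+a_1w^{-1}+\cdots$ of the exterior conformal map, so that $F_1(z)=z-a_0$, and writes a linear field with direction $(d_1,d_2)$ in the complex variable $z=x_1+ix_2$; these symbols are illustrative and may differ from the paper's notation.

$$
\begin{aligned}
H(x) &= d_1x_1 + d_2x_2 = \operatorname{Re}\big[(d_1-id_2)\,z\big], \qquad z=x_1+ix_2,\\
     &= \operatorname{Re}\big[(d_1-id_2)\,F_1(z)\big] + \operatorname{Re}\big[(d_1-id_2)\,a_0\big].
\end{aligned}
$$

Hence, up to an additive constant that does not affect the gradient of the field, the Faber coefficients of a linear field are $\alpha_1=d_1-id_2$ and $\alpha_m=0$ for $m\ge2$, and changing the direction of the field changes only the single complex number $\alpha_1$.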

Appendix D Experimental Details

D.1 Collocation points

We denote the set of collocation points as

for interior, exterior, boundary, and the limit to the boundary from interior and exterior components, respectively. We define as follows: and are the images of the exterior conformal map under the uniform grid of its restricted domain, and the boundary of the disk with radius by

for some fixed . The limits to the boundary from exterior and interior are defined by

with a small and a unit normal vector to the boundary . To select the interior points, we recall that can be represented as . Now, we fix the angle and divide the radius uniformly. In other words, we collect such that and , that is,
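The construction of collocation points above can be sketched as follows, under explicit assumptions: an exterior conformal map $\Psi(w)=w+a_0+\sum_{n\ge1}a_nw^{-n}$ on $|w|>\gamma$; boundary points $\Psi(\gamma e^{i\theta_j})$ on a uniform angular grid; the outward unit normal taken along $w\Psi'(w)$ at $w=\gamma e^{i\theta}$ (the image of the radial direction); exterior points as images of a uniform polar grid with $\gamma<\rho\le\rho_{\max}$; and interior points obtained by fixing the angle and scaling each boundary point uniformly toward the origin, which presumes the shape is star-shaped about the origin. The square-box restriction mentioned in Section D.2 is omitted. All parameter values and function names are hypothetical.

```python
import cmath
import math
from typing import Sequence


def make_map(a: Sequence[complex]):
    """Psi(w) = w + a[0] + sum_{n>=1} a[n] w**(-n) and its derivative."""
    def psi(w: complex) -> complex:
        return w + a[0] + sum(a[n] * w ** (-n) for n in range(1, len(a)))

    def dpsi(w: complex) -> complex:
        return 1 - sum(n * a[n] * w ** (-n - 1) for n in range(1, len(a)))

    return psi, dpsi


def collocation_points(a, gamma=1.0, n_theta=64, n_rho=8, rho_max=2.0, n_r=8, eps=1e-2):
    """Return (interior, exterior, boundary, inner_limit, outer_limit) as lists of complex points."""
    psi, dpsi = make_map(a)
    thetas = [2 * math.pi * j / n_theta for j in range(n_theta)]
    ws = [gamma * cmath.exp(1j * t) for t in thetas]

    # Boundary points are images of the circle |w| = gamma.
    boundary = [psi(w) for w in ws]
    # Outward unit normal of Omega at Psi(w): direction of w * Psi'(w).
    normals = [w * dpsi(w) / abs(w * dpsi(w)) for w in ws]
    outer = [z + eps * nu for z, nu in zip(boundary, normals)]  # approach from the exterior
    inner = [z - eps * nu for z, nu in zip(boundary, normals)]  # approach from the interior

    # Exterior points: images of a uniform polar grid with gamma < rho <= rho_max.
    rhos = [gamma + (rho_max - gamma) * (i + 1) / n_rho for i in range(n_rho)]
    exterior = [psi(r * cmath.exp(1j * t)) for r in rhos for t in thetas]

    # Interior points (assumes Omega is star-shaped about 0): fix the angle,
    # then divide the radius uniformly between 0 and the boundary point.
    scales = [(i + 1) / (n_r + 1) for i in range(n_r)]
    interior = [s * z for s in scales for z in boundary]
    return interior, exterior, boundary, inner, outer
```

For the hypothetical map $\Psi(w)=w+0.3/w$ with $\gamma=1$, the boundary is the ellipse with semi-axes $1.3$ and $0.7$, which makes it easy to check that the inner and outer limit points fall on the correct sides.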

D.2 Experimental setup and parameters

In common settings for experiments, we set and are also used. The conformal radius and . which determines the domain of the conformal map, and sample points are located in the square box of . We generate the collocation points , and once and train for a single epoch, so that all collocation points remain fixed. For the network architecture, we use 4 hidden layers of width 20 with the Tanh activation, chosen for its smoothness. We use the Adam optimizer for 25,000 iterations with two learning rates, lr pinn for all neural networks and lr inv for the interface (inverse) parameters, each decayed by a factor of every 1000 iterations by a learning rate scheduler. We fix both learning rates as and . We use CoCo-PINNs with , which take one real number and complex numbers as inverse parameters. To verify the classical PINNs’ results, we use Fourier fitting of order ; the Fourier fitting is explained in Section D.4. Note that using too high an order introduces singularities and therefore yields poor credibility. We set the initial value of the interface function to 5 for the ‘square’ and ‘spike’ shapes, which makes it easier to keep the interface function positive.

Remark on the environments. Hyperparameter tuning is traditionally important for good PINN performance, and balancing the hyperparameters is harder here because of the difficulty of the PDEs, so appropriate values must be chosen carefully. Our settings follow these guidelines: 1) Balancing the numbers of collocation points: although the exterior domain is much larger than the interior, fitting the background field in the exterior is designed to be less complex, so we use four times as many exterior points as interior points. Since the boundary behavior is essential for the neutral inclusion effect, we also place as many points on the boundary as in the interior. 2) Re-sampling collocation points can make the learned interface function uncertain. If we re-sample at every epoch, PINNs hardly adapt to the new sample points, especially for the boundary condition loss, which is crucial for the neutral inclusion effect. Our experiments also showed that re-sampling gives worse results than fixed sampling. Hence, we fix the points and train for a single epoch. 3) We use the Adam optimizer, whose adaptive per-parameter step sizes help handle the sensitivity of the Fourier coefficients; Adam is also known for fast convergence and robustness in practice.
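The architecture, schedule, and point ratios described above can be sketched in NumPy as follows: a fully connected network with 4 hidden layers of width 20 and tanh activations, a learning rate multiplied by a constant factor every 1000 iterations (the behaviour of a step scheduler such as PyTorch's `StepLR`), and collocation counts with exterior equal to four times interior and boundary equal to interior. The input dimension 2, scalar output, Glorot-normal initialization, and the example learning rate and decay factor are hypothetical placeholders, since the values used in the experiments are not reproduced here; the Adam update itself is omitted.

```python
import numpy as np


def init_mlp(in_dim=2, width=20, depth=4, out_dim=1, seed=0):
    """`depth` hidden layers of size `width`; Glorot-normal weights, zero biases."""
    rng = np.random.default_rng(seed)
    sizes = [in_dim] + [width] * depth + [out_dim]
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        W = rng.normal(0.0, np.sqrt(2.0 / (n_in + n_out)), size=(n_in, n_out))
        params.append((W, np.zeros(n_out)))
    return params


def mlp(params, x):
    """Forward pass: tanh on every hidden layer, linear output layer."""
    h = x
    for W, b in params[:-1]:
        h = np.tanh(h @ W + b)
    W, b = params[-1]
    return h @ W + b


def step_decay_lr(lr0, gamma, iteration, step_size=1000):
    """lr0 * gamma ** floor(iteration / step_size): one decay every `step_size` iterations."""
    return lr0 * gamma ** (iteration // step_size)


def collocation_budget(n_interior):
    """Point counts from the stated ratios: exterior = 4 x interior, boundary = interior."""
    return {"interior": n_interior, "exterior": 4 * n_interior, "boundary": n_interior}


if __name__ == "__main__":
    params = init_mlp()
    print(sum(W.size + b.size for W, b in params))  # 1341 trainable values
    print(mlp(params, np.zeros((5, 2))).shape)      # (5, 1)
    print([step_decay_lr(1e-3, 0.9, it) for it in (0, 999, 1000, 2500)])
```

Keeping two separate rates, as in the text, would amount to calling `step_decay_lr` with different `lr0` values for the network weights and for the interface parameters.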

The following algorithm outlines the training procedure of CoCo-PINNs and classical PINNs.

Input: Background fields: ; Interface function: or

Output:

D.3 Conformal maps for various shapes

The shapes shown in Figure 4.1 are defined by the conformal map as follows:

| (D.1) | ||||

| (D.2) | ||||

| (D.3) | ||||

| (D.4) |

Equations D.1, D.2, D.3, and D.4 define the ‘square’, ‘fish’, ‘kite’, and ‘spike’ shapes, respectively.

D.4 Fourier fittings

To check whether a trained forward solution is accurate, we need a Fourier series that is sufficiently close to the learned interface function, from which the exact analytic solution can be computed. We therefore approximate each interface function by a Fourier series. Figure D.1 presents the difference between the interface function and the Fourier series we used. We denote as the Fourier series corresponding to . Given that is sufficiently close to , it is reasonable to use the analytic solution obtained from to assess the credibility of the classical PINNs results.

The relative error between the neural-network-designed interface function and its Fourier series is given by

The relative errors and the Fourier fittings for each shape are given by Tables 5 and D.1, respectively.

| square | fish | kite | spike |
|---|---|---|---|
| 5.013e-07 | 2.915e-07 | 1.729e-06 | 2.443e-06 |
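The Fourier-fitting check can be sketched as follows, assuming the learned interface function is available at $M$ equally spaced boundary parameters, that the order-$N$ fit is the least-squares trigonometric polynomial (which, on an equispaced grid with $2N+1\le M$, coincides with keeping the discrete Fourier coefficients for $|n|\le N$), and that the relative error is measured in the discrete $\ell^2$ norm over the samples. The choice of norm and the helper names are hypothetical; the paper's exact error formula is not reproduced here.

```python
import cmath
import math
from typing import List, Sequence


def fourier_fit(samples: Sequence[float], order: int) -> List[float]:
    """Least-squares trigonometric fit of the given order, evaluated at the sample points."""
    M = len(samples)
    if 2 * order + 1 > M:
        raise ValueError("need at least 2*order + 1 samples")
    thetas = [2 * math.pi * j / M for j in range(M)]
    coeffs = {
        n: sum(f * cmath.exp(-1j * n * t) for f, t in zip(samples, thetas)) / M
        for n in range(-order, order + 1)
    }
    # For real samples c_{-n} = conj(c_n), so the imaginary parts cancel.
    return [sum(c * cmath.exp(1j * n * t) for n, c in coeffs.items()).real for t in thetas]


def relative_error(samples: Sequence[float], fitted: Sequence[float]) -> float:
    """Discrete l2 relative error ||beta - beta_N|| / ||beta||."""
    num = math.sqrt(sum((f - g) ** 2 for f, g in zip(samples, fitted)))
    den = math.sqrt(sum(f ** 2 for f in samples))
    return num / den
```

A function that is already a trigonometric polynomial of degree at most $N$ is reproduced to round-off, while any higher-frequency content shows up directly in the relative error.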

D.5 Neutral inclusion effect for arbitrary fields

In this subsection, we present the neutral inclusion effect for each shape under the different fields , and . The results of a single randomly chosen experiment are given in Table 6.

| | Shape | | | |
|---|---|---|---|---|
| CoCo-PINNs | square | 5.004e-03 | 8.164e-03 | 2.801e-02 |
| | fish | 4.050e-04 | 2.694e-04 | 1.868e-03 |
| | kite | 4.358e-04 | 1.654e-04 | 1.928e-03 |
| | spike | 2.230e-03 | 5.847e-03 | 1.498e-02 |

After extensive experiments comparing CoCo-PINNs and classical PINNs under the same settings, we concluded that CoCo-PINNs are superior. We then tested the neutral inclusion effect for each shape using CoCo-PINNs; Figure D.2 presents the results. For the unit disk inclusion, an exactly neutral inclusion was obtained. For the other shapes we used, an interface function yielding an exactly neutral inclusion may not exist. Nevertheless, the neutral inclusion effects achieved by CoCo-PINNs are reasonably satisfactory.

D.6 Illustrations for credibility

All experiments described in Table 1 are illustrated in Figures D.4 and D.3 for CoCo-PINNs and classical PINNs, respectively. We illustrate the pairs of

for each shape in Figure 4.1.

As shown in Figures D.4 and D.3, the forward solutions trained by CoCo-PINNs agree more closely with the analytic solutions computed from their learned interface parameters than those trained by classical PINNs do.