Constrained Attack-Resilient Estimation of Stochastic Cyber-Physical Systems

Abstract

In this paper, a constrained attack-resilient estimation algorithm (CARE) is developed for stochastic cyber-physical systems. The proposed CARE simultaneously estimates the compromised system states and the attack signals, and it improves estimation accuracy and attack detection performance when physical constraints and operational limitations are available. In particular, CARE performs simultaneous input and state estimation that provides minimum-variance unbiased estimates, and these estimates are subsequently projected onto the constrained space defined by inequality constraints. We prove that the estimation errors and their covariances from CARE are no larger than those from unconstrained algorithms, and we show that this property further reduces the false negative rate in attack detection. We also show that the estimation errors of CARE are practically exponentially stable in mean square. Finally, an illustrative example of attacks on a vehicle demonstrates the improved estimation accuracy and detection performance compared to an existing unconstrained algorithm.

keywords:

Detection, Kalman filtering, Recursive estimation, Stability, State estimation

1 Introduction

Cyber-Physical Systems (CPS) play a vital role in a wide range of applications, from large-scale industrial systems to critical infrastructures such as smart grids, transportation networks, precision agriculture, and industrial control systems rajkumar2010cyber . Recent developments in CPS and their safety-critical applications have led to a renewed interest in CPS security. The interaction between information technology and physical systems has made the control components of CPS vulnerable to malicious attacks cardenas2008research . Recent attacks on CPS have clearly illustrated this susceptibility and raised awareness of the security challenges in these systems. Examples include attacks on large-scale critical infrastructures, such as the German steel mill cyber attack lee2014german and the Maroochy Water breach slay2007lessons . Malicious attacks on avionics and automotive vehicles have also been reported, such as the capture of the U.S. RQ-170 drone in Iran peterson2011iran , and the disabling of the brakes and stopping of the engine on civilian cars koscher2010experimental ; checkoway2011comprehensive .

Related work

Traditionally, most works in the field of attack detection focused only on monitoring misbehavior in cyberspace raiyn2014survey . With the emergence of CPS, it has become vitally important to monitor physical misbehavior as well, because the impact of attacks on physical systems also needs to be addressed cardenas2008secure . In the last decade, attention has turned to control-theoretic approaches that exploit prior information on the system dynamics for attack detection and attack-resilient control. For instance, a unified modeling framework for CPS and attacks is proposed in pasqualetti2013attack . A typical control architecture for networked systems under both cyber and physical attacks is proposed in teixeira2012attack ; attack scenarios such as Denial-of-Service (DoS) and false-data injection (FDI) are then analyzed using this architecture in teixeira2015secure .

In recent years, model-based detection has been studied extensively. Attack detection has been formulated as an $\ell_0/\ell_\infty$ optimization problem, which is NP-hard pajic2014robustness . A convex relaxation has been studied in fawzi2014secure , and the worst-case estimation error has been analyzed in pajic2017attack . Multirate sampled-data controllers have been studied to guarantee detectability in NAGHNAEIAN201912 and to detect zero-dynamics attacks in 8796181 . A residual-based detector against false-data injection attacks has been designed for power systems, and the impact of such attacks has been analyzed, in liu2011false .

In addition, some papers have studied active detection, such as mo2009secure ; mo2014detecting , where the control input is watermarked with a pre-designed scheme at the expense of optimality. A common limitation of the aforementioned methods is that the state estimate is not resilient to the attack signal; consequently, incorrect state estimates make it more challenging for defenders to respond to malicious attacks.

Attack-resilient estimation and detection problems have been studied to address the above challenge in yong2015resilients ; forti2016bayesian ; kim2020simultaneous , where attack detection has been formulated as a simultaneous input and state estimation problem and the minimum-variance unbiased estimation technique has been applied. More specifically, the approach has been applied to linear stochastic systems in yong2015resilients , to stochastic random set methods in forti2016bayesian , and to nonlinear systems in kim2020simultaneous . These detection algorithms rely on statistical thresholds, such as the $\chi^2$ test, which is widely used in attack detection mo2014detecting ; teixeira2010cyber . Since the detection accuracy improves as the covariance decreases, a smaller covariance is desirable.

On top of the minimum-variance estimation approach, the covariance can be further reduced by incorporating information on the input and state in the form of constraints. There have been several investigations on Kalman filtering with state constraints simon2002kalman ; ko2007state ; simon2010kalman ; kong2021kalman . State constraints arise from unmodeled dynamics and operational processes; examples include vision-aided inertial navigation mourikis2007multi , target tracking wang2002filtering , and power systems yong2015simultaneous . Constraints on inputs have also been considered, for example to avoid reachable dangerous states under the assumption that the attack input is constrained kafash2018constraining , and to design a resilient controller based on partial knowledge of the attacker expressed as inequality constraints djouadi2015finite . The methods in kafash2018constraining ; djouadi2015finite can efficiently handle a class of attacks when input inequality constraints are available, but they cannot resiliently address the estimation problem under false-data injection. Resilient estimation with a stability guarantee in the presence of inequality constraints therefore remains an open problem. In the current paper, we solve the resilient estimation problem and investigate the stability and performance of an algorithm design that integrates information aggregation. To the best of our knowledge, this is the first investigation that considers both state and input inequality constraints for attack-resilient estimation with guaranteed stability.

Contributions

The main contributions of this work are summarized as follows. i) We propose a constrained attack-resilient estimation algorithm (CARE) that simultaneously estimates the compromised system states and the attack signals. CARE first provides minimum-variance unbiased estimates, which are then projected onto the constrained space induced by information aggregation. ii) The proposed CARE has better estimation performance: we show that the projection reduces the estimation errors and covariances, strictly under the conditions stated in Theorem 6. iii) We are the first to investigate the stability of an estimation algorithm with inequality constraints, and we prove that the estimation errors are practically exponentially stable in mean square. iv) The proposed CARE has better attack detection performance: we provide a rigorous analysis showing that, under Assumption 7, the false negative rate of the proposed algorithm is no larger than that of the unconstrained algorithm. v) The proposed algorithm is compared with a state-of-the-art method to show the improved estimation and attack detection performance.

Paper organization

The rest of the paper is organized as follows. Section 2 introduces the notation, the $\chi^2$ test for detection, and the problem formulation. The high-level idea of the proposed algorithm is presented in Section 3.1, and Section 3.2 gives a detailed derivation of the algorithm. Section 4 demonstrates the performance improvement and presents the stability analysis of the proposed algorithm. Section 5 presents an illustrative example of vehicle attacks. Finally, Section 6 draws conclusions.

2 Preliminaries

Notations

We use the subscript $k$ to denote the time index. For the real set $\mathbb{R}$, $\mathbb{R}^n_{>0}$ denotes the set of positive elements in the $n$-dimensional Euclidean space and $\mathbb{R}^{n\times m}$ denotes the set of all $n\times m$ real matrices. For a matrix $A$, $A^\top$, $A^{-1}$, $A^\dagger$, $\mathrm{diag}(A)$, $\mathrm{tr}(A)$ and $\mathrm{rank}(A)$ denote the transpose, inverse, Moore-Penrose pseudoinverse, diagonal, trace and rank of $A$, respectively. For a symmetric matrix $S$, $S \succ 0$ ($S \succeq 0$) indicates that $S$ is positive (semi)definite. The matrix $I$ denotes the identity matrix with an appropriate dimension. We use $\|\cdot\|$ to denote the standard Euclidean norm for vectors or the induced matrix norm if not otherwise specified, $\mathbb{E}[\cdot]$ to denote the expectation operator, and $\times$ to denote matrix multiplication when the multiplied terms are on different lines. For a vector $x$, $[x]_i$ denotes the $i$-th element of $x$. Finally, $x$, $\hat x$ and $\tilde x = x - \hat x$ denote the ground truth, estimate and estimation error of $x$, respectively.

Test for Detection

Given a sample $\hat d$ of a Gaussian random variable with unknown mean $d$ and known covariance $P$, the $\chi^2$ test provides statistical evidence of whether $d = 0$ or not. In particular, the sample is normalized as $\hat d^\top P^{-1}\hat d$, and the normalized value is compared with $\chi^2_{\mathrm{df}}(\alpha)$, the $\chi^2$ value with $\mathrm{df}$ degrees of freedom and statistical significance level $\alpha$. We reject the null hypothesis $H_0: d = 0$ if $\hat d^\top P^{-1}\hat d > \chi^2_{\mathrm{df}}(\alpha)$ and accept the alternative hypothesis $H_1: d \neq 0$, i.e., there is significant statistical evidence that $d$ is non-zero. Otherwise, we accept $H_0$, i.e., there is no significant evidence that $d$ is non-zero.
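The decision rule above can be sketched in a few lines of Python. This is a minimal illustration assuming NumPy; the function names are hypothetical, and the closed-form threshold applies only to two degrees of freedom (for other values of $\mathrm{df}$ one would take $\chi^2_{\mathrm{df}}(\alpha)$ from a table or a statistics library).

```python
import math

import numpy as np


def chi2_statistic(d_hat, P):
    """Normalized test value d_hat^T P^{-1} d_hat."""
    d_hat = np.asarray(d_hat, dtype=float)
    # Solve P z = d_hat instead of forming P^{-1} explicitly.
    z = np.linalg.solve(np.asarray(P, dtype=float), d_hat)
    return float(d_hat @ z)


def chi2_threshold_df2(alpha):
    """Upper-tail chi-square critical value for df = 2.

    For df = 2 the chi-square survival function is exp(-x / 2), so the
    critical value has the closed form -2 ln(alpha).
    """
    return -2.0 * math.log(alpha)


def chi2_test(d_hat, P, threshold):
    """Return True if H0: d = 0 is rejected, i.e., an attack is flagged."""
    return chi2_statistic(d_hat, P) > threshold


threshold = chi2_threshold_df2(0.05)  # about 5.99 for alpha = 0.05
```

For instance, with $P = I$ a sample $\hat d = (3, 0)$ gives a normalized value of 9 and is flagged, whereas $\hat d = (1, 1)$ gives 2 and is not.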

False negative rate

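Given a set of vectors $\mathcal{D} = \{d_k\}_{k=1}^{N}$ with estimates $\hat d_k$ and error covariances $P_k$, the false negative rate of the $\chi^2$ test is defined as the ratio of the number of false negative test results to the number of non-zero vectors in the set:

$$\mathrm{FNR} = \frac{\sum_{k=1}^{N} F(d_k, \hat d_k, P_k)}{\left|\{d_k \in \mathcal{D} : d_k \neq 0\}\right|}, \tag{1}$$

where

$$F(d_k, \hat d_k, P_k) = \begin{cases} 1, & \text{if } d_k \neq 0 \text{ and } \hat d_k^\top P_k^{-1}\hat d_k \le \chi^2_{\mathrm{df}}(\alpha), \\ 0, & \text{otherwise}. \end{cases} \tag{2}$$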
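Definitions (1) and (2) translate directly into a counting loop. The sketch below assumes NumPy and uses a hypothetical function name; raising an error when the set contains no non-zero vectors is a design choice made here, since (1) is undefined in that case.

```python
import numpy as np


def false_negative_rate(d_true, d_hat, covs, threshold):
    """Empirical false negative rate (1)-(2) of a chi-square detector.

    d_true, d_hat: sequences of ground-truth and estimated attack vectors.
    covs: sequence of estimation error covariances, one per sample.
    threshold: chi-square critical value chi2_df(alpha).
    """
    n_attacks = 0  # number of non-zero ground-truth vectors (denominator)
    n_missed = 0   # number of false negatives (numerator)
    for d, dh, P in zip(d_true, d_hat, covs):
        if not np.any(np.asarray(d, dtype=float) != 0.0):
            continue  # d_k = 0 can never yield a false negative
        n_attacks += 1
        dh = np.asarray(dh, dtype=float)
        stat = float(dh @ np.linalg.solve(np.asarray(P, dtype=float), dh))
        if stat <= threshold:  # H0 accepted although d_k != 0
            n_missed += 1
    if n_attacks == 0:
        raise ValueError("FNR is undefined when no vector is non-zero")
    return n_missed / n_attacks
```

With two non-zero attack vectors, one whose estimate falls below the threshold and one above it, the function returns 0.5.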

Problem Formulation

Consider the following linear time-varying (LTV) discrete-time stochastic system¹

$$x_{k+1} = A_kx_k + B_ku_k + G_kd_k + w_k, \tag{3a}$$

$$y_k = C_kx_k + v_k, \tag{3b}$$

where $x_k \in \mathbb{R}^n$, $u_k \in \mathbb{R}^m$ and $y_k \in \mathbb{R}^l$ are the state, the control input and the sensor measurement, respectively. The attack signal $d_k \in \mathbb{R}^p$ is modeled as a simultaneous input, which is unknown to the defender. System matrices $A_k$, $B_k$, $G_k$ and $C_k$ are known and bounded with appropriate dimensions. We assume that $\mathrm{rank}(C_{k+1}G_k) = \mathrm{rank}(G_k) = p$ for all $k$. This is a typical assumption, as in yong2016unified ; kim2017attack ; it means that the impact of the attack on the system dynamics can be observed through the measurement $y_{k+1}$. The process noise $w_k$ and the measurement noise $v_k$ are assumed to be i.i.d. Gaussian random variables with zero means and covariances $Q_k \succeq 0$ and $R_k \succ 0$, respectively. Moreover, the measurement noise $v_k$, the process noise $w_k$, and the initial state $x_0$ are uncorrelated with each other.

¹ The current paper considers a general formulation for the attack input matrix $G_k$. If $d_k$ is injected into the control input, then $G_k = B_k$. If $d_k$ is directly injected into the system, then $G_k = I$.

The attack model adopted in (3) is known as the FDI attack. It is a very general type of attack that includes physical attacks, Trojans, replay attacks, overflow bugs, packet injection, etc. guo2018roboads . Because of this generality, this attack model has been widely used in the CPS security literature (e.g., pasqualetti2013attack ; teixeira2015secure ; yong2015resilients ).

In cyberspace, digital attack signals could be unconstrained, but their impact on the physical world is restricted by physical and operational constraints (i.e., $d_k$ and $x_k$ are constrained). For example, a vehicle has limits on acceleration, velocity, steering angle, and steering rate. Such physical constraints and ability limitations on attack signals and states are represented by the inequality constraints

$$A^d_kd_k \le b^d_k, \qquad A^x_kx_k \le b^x_k, \tag{4}$$

where matrices $A^d_k$, $A^x_k$, and vectors $b^d_k$, $b^x_k$ are known and bounded with appropriate dimensions. Throughout this paper, we assume that the feasible sets of the constraints in (4) are non-empty.

Remark 1

Gaussian noise in (3) is a common way to model physical systems, and filtering algorithms use this model to track the level of uncertainty. Therefore, many works consider Gaussian noise even in the presence of bounded constraints teixeira2009state ; simon2002kalman ; simon2010kalman .

Problem statement

Given the stochastic system in (3), we aim to design an attack-resilient estimation algorithm that simultaneously estimates the compromised system state $x_k$ and the attack signal $d_k$. In addition, we seek to improve estimation accuracy and detection performance, with a stability guarantee, by incorporating the input and state information expressed by the constraints in (4).

3 Algorithm Design

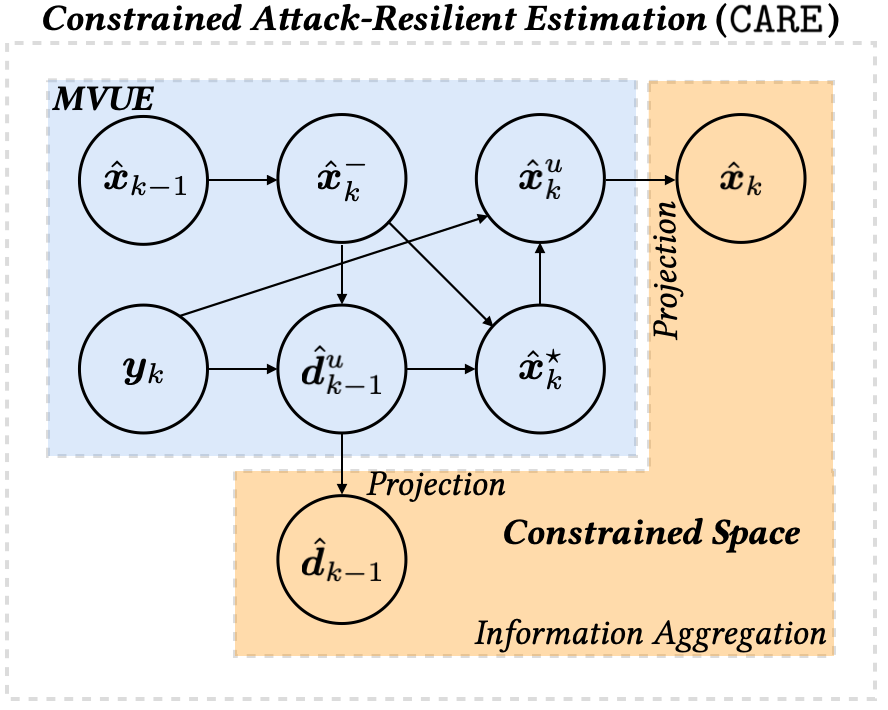

To address the problem described in Section 2, we propose a constrained attack-resilient estimation algorithm (CARE), as sketched in Fig. 1, which consists of a minimum-variance unbiased estimator (MVUE) and an information aggregation step via projection. In particular, the MVUE provides minimum-variance unbiased estimates, and these estimates are then projected onto the constrained space in the information aggregation step. We outline the essential steps of CARE in Section 3.1 and provide a detailed derivation of the algorithm in Section 3.2.

3.1 Algorithm Statement

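The proposed CARE can be summarized as follows:

$$\hat x_{k|k-1} = A_{k-1}\hat x_{k-1} + B_{k-1}u_{k-1}, \tag{5}$$

$$\hat d^u_{k-1} = M_k\,(y_k - C_k\hat x_{k|k-1}), \tag{6}$$

$$\hat x^\star_k = \hat x_{k|k-1} + G_{k-1}\hat d^u_{k-1}, \tag{7}$$

$$\hat x^u_k = \hat x^\star_k + L_k\,(y_k - C_k\hat x^\star_k), \tag{8}$$

projection update:

$$\hat d_{k-1} = \arg\min_{d}\ (d - \hat d^u_{k-1})^\top W^d_{k-1}(d - \hat d^u_{k-1}) \quad \text{s.t.}\quad A^d_{k-1}d \le b^d_{k-1}, \tag{9}$$

$$\hat x_k = \arg\min_{x}\ (x - \hat x^u_k)^\top W^x_k(x - \hat x^u_k) \quad \text{s.t.}\quad A^x_kx \le b^x_k. \tag{10}$$

Given the previous state estimate $\hat x_{k-1}$ and its error covariance $P^x_{k-1}$, the current state is predicted by $\hat x_{k|k-1}$ in (5) under the assumption that the attack signal is absent. The unconstrained attack estimate $\hat d^u_{k-1}$ is obtained from the difference between the predicted output $C_k\hat x_{k|k-1}$ and the measured output $y_k$ in (6), where $M_k$ is the optimal filter gain obtained by applying the Gauss-Markov theorem, as shown later in Proposition 3. The state prediction is updated with the unconstrained attack estimate in (7). The output $y_k$ is then used to correct the current state estimate in (8), where $L_k$ is the filter gain obtained by minimizing the trace of the state error covariance $P^u_k$. In the information aggregation step (projection update), we apply the input constraint in (9) by projecting $\hat d^u_{k-1}$ onto the constrained space, which yields the constrained attack estimate $\hat d_{k-1}$. Similarly, the state constraint is applied in (10) to obtain the constrained state estimate $\hat x_k$. The complete algorithm is presented in Algorithm 1.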

3.2 Algorithm Derivation

Prediction

The current state is predicted by (5) under the assumption that the attack signal $d_{k-1} = 0$. The prediction error covariance is

$$P_{k|k-1} = A_{k-1}P^x_{k-1}A_{k-1}^\top + Q_{k-1}. \tag{11}$$

Attack estimation

The linear attack estimator in (6) utilizes the difference between the measured output $y_k$ and the predicted output $C_k\hat x_{k|k-1}$. Substituting (3) and (5) into (6), we have

$$\hat d^u_{k-1} = M_k\left(C_kG_{k-1}d_{k-1} + C_kA_{k-1}\tilde x_{k-1} + C_kw_{k-1} + v_k\right),$$

which is a linear function of the attack signal $d_{k-1}$. Under the assumption that there is no projection update, i.e., the state and attack estimates are unconstrained, we design the optimal gain matrix $M_k$ such that $\hat d^u_{k-1}$ becomes the best linear unbiased estimate (BLUE), as established in the following two propositions.

Proposition 2

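Assume that there is no projection update and $\mathbb{E}[\tilde x_0] = 0$. The state estimates $\hat x_k$ and the unconstrained attack estimates $\hat d^u_{k-1}$ are unbiased for all $k$, i.e., $\mathbb{E}[\tilde x_k] = 0$ and $\mathbb{E}[\tilde d^u_{k-1}] = 0$, if and only if $M_kC_kG_{k-1} = I$.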

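Proof: Sufficiency: Assuming that $M_kC_kG_{k-1} = I$, the statement can be proved by induction. First, we show that the statement holds for $k = 1$ as a base case. By definition, the errors of the time update and the measurement update in (7) and (8) are given by

$$\tilde x^\star_k = A_{k-1}\tilde x_{k-1} + G_{k-1}\tilde d^u_{k-1} + w_{k-1}, \tag{12}$$

$$\tilde x^u_k = (I - L_kC_k)\tilde x^\star_k - L_kv_k, \tag{13}$$

and the error of the unconstrained attack estimate is

$$\tilde d^u_{k-1} = (I - M_kC_kG_{k-1})\,d_{k-1} \tag{14a}$$

$$\qquad\qquad - M_k\left(C_kA_{k-1}\tilde x_{k-1} + C_kw_{k-1} + v_k\right). \tag{14b}$$

Under the assumptions that $\mathbb{E}[\tilde x_0] = 0$ and that the process and measurement noises are zero-mean Gaussian, i.e., $\mathbb{E}[w_{k-1}] = \mathbb{E}[v_k] = 0$, the expectation of term (14b) is zero at $k = 1$. Since $d_{k-1}$ is deterministic for all $k$, i.e., $\mathbb{E}[d_{k-1}] = d_{k-1}$, we have $\mathbb{E}[(I - M_1C_1G_0)d_0] = 0$ if $M_1C_1G_0 = I$, i.e., the expectation of term (14a) is zero at $k = 1$. Then we have $\mathbb{E}[\tilde x^\star_1] = \mathbb{E}[\tilde x^u_1] = 0$ by applying the expectation operator to (12) and (13). In the inductive step, suppose $\mathbb{E}[\tilde x_{k-1}] = 0$; then $\mathbb{E}[\tilde d^u_{k-1}] = 0$ if $M_kC_kG_{k-1} = I$. Similarly, we have $\mathbb{E}[\tilde x^\star_k] = \mathbb{E}[\tilde x^u_k] = 0$ by (12) and (13). Since there is no projection update, we have $\mathbb{E}[\tilde x_k] = \mathbb{E}[\tilde x^u_k] = 0$.

Necessity: Assuming that $\mathbb{E}[\tilde x_k] = 0$ and $\mathbb{E}[\tilde d^u_{k-1}] = 0$ for all $k$, the statement can also be proved by induction. In (14), if $M_kC_kG_{k-1} \neq I$ for some $k$, we have $\mathbb{E}[\tilde d^u_{k-1}] = (I - M_kC_kG_{k-1})d_{k-1} \neq 0$ in general. Therefore, following a similar procedure, we can show that the necessity holds.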

Proposition 3

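Assume that there is no projection update and $\mathbb{E}[\tilde x_0] = 0$. The unconstrained attack estimates $\hat d^u_{k-1}$ are BLUE if

$$M_k = \left(G_{k-1}^\top C_k^\top\tilde R_k^{-1}C_kG_{k-1}\right)^{-1}G_{k-1}^\top C_k^\top\tilde R_k^{-1}, \tag{15}$$

where $\tilde R_k = C_kP_{k|k-1}C_k^\top + R_k$.

Proof: Substituting (3a) into (3b), we have

$$y_k = C_kA_{k-1}x_{k-1} + C_kB_{k-1}u_{k-1} + C_kG_{k-1}d_{k-1} + C_kw_{k-1} + v_k. \tag{16}$$

Subtracting $C_k\hat x_{k|k-1}$ from both sides of (16) yields

$$y_k - C_k\hat x_{k|k-1} = C_kG_{k-1}d_{k-1} + e_k, \qquad e_k = C_kA_{k-1}\tilde x_{k-1} + C_kw_{k-1} + v_k. \tag{17}$$

Since the covariances of the process noise and the measurement noise are known, using (11), the covariance of the error term $e_k$ in (17) can be expressed as $\mathbb{E}[e_ke_k^\top] = C_kP_{k|k-1}C_k^\top + R_k = \tilde R_k$. Applying the Gauss-Markov theorem (see Appendix A), we obtain the minimum-variance unbiased linear estimator (BLUE) of $d_{k-1}$ in (6) with $M_k = (F_k^\top\tilde R_k^{-1}F_k)^{-1}F_k^\top\tilde R_k^{-1}$, where $F_k = C_kG_{k-1}$.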

Remark 4

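The error covariance of $\hat d^u_{k-1}$ can be found by $P^{d,u}_{k-1} = \mathbb{E}[\tilde d^u_{k-1}\tilde d^{u\top}_{k-1}] = M_k\tilde R_kM_k^\top = (G_{k-1}^\top C_k^\top\tilde R_k^{-1}C_kG_{k-1})^{-1}$. The cross error covariance of the state estimate and the attack estimate is $P^{xd}_{k-1} = \mathbb{E}[\tilde x_{k-1}\tilde d^{u\top}_{k-1}] = -P^x_{k-1}A_{k-1}^\top C_k^\top M_k^\top$.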
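The gain (15) and the identities in Proposition 2 and Remark 4 can be checked numerically. The sketch below assumes NumPy; the function name and the random matrices (4 states, 3 outputs, 2 attack channels) are hypothetical and serve only to verify that $M_kC_kG_{k-1} = I$ and $M_k\tilde R_kM_k^\top = P^{d,u}_{k-1}$.

```python
import numpy as np


def attack_gain(C_k, G_prev, P_pred, R_k):
    """BLUE gain M_k of (15) and covariance P^{d,u}_{k-1} of Remark 4."""
    R_tilde = C_k @ P_pred @ C_k.T + R_k   # covariance of e_k in (17)
    F = C_k @ G_prev                       # F_k = C_k G_{k-1}
    R_inv = np.linalg.inv(R_tilde)
    P_du = np.linalg.inv(F.T @ R_inv @ F)  # (F^T R~^{-1} F)^{-1}
    M = P_du @ F.T @ R_inv                 # Gauss-Markov (BLUE) gain
    return M, P_du, R_tilde


# Hypothetical random example: n = 4 states, l = 3 outputs, p = 2 attacks.
rng = np.random.default_rng(0)
C = rng.standard_normal((3, 4))
G = rng.standard_normal((4, 2))
X = rng.standard_normal((4, 4))
P_pred = X @ X.T + np.eye(4)   # a symmetric positive definite P_{k|k-1}
R = 0.1 * np.eye(3)
M, P_du, R_tilde = attack_gain(C, G, P_pred, R)
```

The rank assumption $\mathrm{rank}(C_kG_{k-1}) = p$ is what makes $F_k^\top\tilde R_k^{-1}F_k$ invertible; for random matrices it holds almost surely.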

Time update

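Given the unconstrained attack estimate $\hat d^u_{k-1}$, the state prediction is updated as in (7). Substituting (14) with $M_kC_kG_{k-1} = I$ into (12), we derive the error covariance of $\hat x^\star_k$ as

$$P^\star_k = \bar A_{k-1}P^x_{k-1}\bar A_{k-1}^\top + \bar Q_{k-1},$$

where $\bar A_{k-1} = (I - G_{k-1}M_kC_k)A_{k-1}$ and $\bar Q_{k-1} = (I - G_{k-1}M_kC_k)Q_{k-1}(I - G_{k-1}M_kC_k)^\top + G_{k-1}M_kR_kM_k^\top G_{k-1}^\top$.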

Measurement update

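In this step, the measurement $y_k$ is used to update the propagated estimate $\hat x^\star_k$ as shown in (8). Since $\tilde x^\star_k$ is correlated with $v_k$ through (14b), i.e., $\mathbb{E}[\tilde x^\star_kv_k^\top] = -G_{k-1}M_kR_k$, the covariance of the state estimation error is

$$P^u_k = (I - L_kC_k)P^\star_k(I - L_kC_k)^\top + L_kR_kL_k^\top + (I - L_kC_k)G_{k-1}M_kR_kL_k^\top + L_kR_kM_k^\top G_{k-1}^\top(I - L_kC_k)^\top.$$

The gain matrix $L_k$ is obtained by minimizing the trace of $P^u_k$, i.e., $L_k = \arg\min_{L}\mathrm{tr}(P^u_k)$. The solution is given by $L_k = (P^\star_kC_k^\top - G_{k-1}M_kR_k)(\tilde R^\star_k)^\dagger$, where $\tilde R^\star_k = C_kP^\star_kC_k^\top + R_k - C_kG_{k-1}M_kR_k - R_kM_k^\top G_{k-1}^\top C_k^\top$. The pseudoinverse is used because $\tilde R^\star_k$ is in general rank deficient.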

Projection update

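We are now in a position to project the estimates onto the constrained space. Applying the first constraint in (4) to the unconstrained attack estimate $\hat d^u_{k-1}$, the attack estimation problem can be formulated as the following constrained convex optimization problem

$$\hat d_{k-1} = \arg\min_{d}\ (d - \hat d^u_{k-1})^\top W^d_{k-1}(d - \hat d^u_{k-1}) \quad \text{s.t.}\quad A^d_{k-1}d \le b^d_{k-1}, \tag{18}$$

where $W^d_{k-1}$ can be any positive definite symmetric weighting matrix. In the current paper, we select $W^d_{k-1} = (P^{d,u}_{k-1})^{-1}$, which results in the smallest error covariance, as shown in simon2002kalman . From the Karush-Kuhn-Tucker (KKT) optimality conditions, we can find the corresponding active constraints. We denote by $\breve A^d_{k-1}$ and $\breve b^d_{k-1}$ the rows of $A^d_{k-1}$ and the elements of $b^d_{k-1}$ corresponding to the active constraints of (18). Then (18) becomes

$$\hat d_{k-1} = \arg\min_{d}\ (d - \hat d^u_{k-1})^\top W^d_{k-1}(d - \hat d^u_{k-1}) \quad \text{s.t.}\quad \breve A^d_{k-1}d = \breve b^d_{k-1}. \tag{19}$$

The solution of (19) is $\hat d_{k-1} = \hat d^u_{k-1} - K^d_{k-1}(\breve A^d_{k-1}\hat d^u_{k-1} - \breve b^d_{k-1})$, where

$$K^d_{k-1} = P^{d,u}_{k-1}\breve A^{d\top}_{k-1}\left(\breve A^d_{k-1}P^{d,u}_{k-1}\breve A^{d\top}_{k-1}\right)^{-1}. \tag{20}$$

The attack estimation error is

$$\tilde d_{k-1} = (I - K^d_{k-1}\breve A^d_{k-1})\tilde d^u_{k-1} + K^d_{k-1}(\breve A^d_{k-1}d_{k-1} - \breve b^d_{k-1}). \tag{21}$$

The error covariance can be found by

$$P^d_{k-1} = (I - K^d_{k-1}\breve A^d_{k-1})P^{d,u}_{k-1}(I - K^d_{k-1}\breve A^d_{k-1})^\top \tag{22}$$

under the assumption that $\breve A^d_{k-1}d_{k-1} = \breve b^d_{k-1}$ holds. Notice that this assumption holds when the ground truth $d_{k-1}$ satisfies the active constraint. From (20), it can be verified that $K^d_{k-1}\breve A^d_{k-1}P^{d,u}_{k-1} = P^{d,u}_{k-1}(K^d_{k-1}\breve A^d_{k-1})^\top = K^d_{k-1}\breve A^d_{k-1}P^{d,u}_{k-1}(K^d_{k-1}\breve A^d_{k-1})^\top$. Therefore, from (22) we have

$$P^d_{k-1} = (I - K^d_{k-1}\breve A^d_{k-1})P^{d,u}_{k-1}. \tag{23}$$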
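Finding the active set from the KKT conditions is left implicit above. For small problems, one exact way to do it (swapped in here for illustration, not the authors' procedure) is to enumerate candidate active sets, apply the equality-constrained solution (20) to each, and keep the feasible candidate with the smallest weighted cost. The sketch assumes NumPy and $W = P^{-1}$; its cost grows exponentially with the number of constraints.

```python
import itertools

import numpy as np


def project_eq(z, P, A_act, b_act):
    """Equality-constrained projection (19)-(20) with weight W = P^{-1}."""
    K = P @ A_act.T @ np.linalg.inv(A_act @ P @ A_act.T)
    return z - K @ (A_act @ z - b_act)


def project_ineq(z, P, A, b, tol=1e-9):
    """argmin (x - z)^T P^{-1} (x - z) s.t. A x <= b, by active-set enumeration."""
    z = np.asarray(z, dtype=float)
    if np.all(A @ z <= b + tol):
        return z.copy(), np.zeros(0, dtype=int)  # no constraint is active
    P_inv = np.linalg.inv(P)
    best, best_cost, best_set = None, np.inf, None
    n_con = A.shape[0]
    for r in range(1, min(n_con, z.size) + 1):
        for idx in itertools.combinations(range(n_con), r):
            idx = list(idx)
            A_act = A[idx]
            if np.linalg.matrix_rank(A_act @ P @ A_act.T) < r:
                continue  # dependent rows: covered by a smaller subset
            x = project_eq(z, P, A_act, b[idx])
            if np.all(A @ x <= b + tol):  # keep feasible candidates only
                cost = float((x - z) @ P_inv @ (x - z))
                if cost < best_cost:
                    best, best_cost, best_set = x, cost, idx
    if best is None:
        raise ValueError("constraint set appears to be infeasible")
    return best, np.array(best_set)


# Box |x_i| <= 1 written as A x <= b.
A = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]])
b = np.ones(4)
P = np.array([[2.0, 1.0], [1.0, 2.0]])
x_hat, active = project_ineq(np.array([2.0, 0.5]), P, A, b)
```

With this correlated $P$, projecting $(2, 0.5)$ onto the box gives $(1, 0)$ rather than the Euclidean answer $(1, 0.5)$: the weighting moves the second coordinate along with the first.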

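Similarly, applying the second constraint in (4) to the unconstrained state estimate $\hat x^u_k$, we formulate the state estimation problem as follows:

$$\hat x_k = \arg\min_{x}\ (x - \hat x^u_k)^\top W^x_k(x - \hat x^u_k) \quad \text{s.t.}\quad A^x_kx \le b^x_k, \tag{24}$$

where we select $W^x_k = (P^u_k)^{-1}$ for the smallest error covariance. We denote by $\breve A^x_k$ and $\breve b^x_k$ the rows of $A^x_k$ and the elements of $b^x_k$ corresponding to the active constraints of (24). Using the active constraints, we reformulate (24) as follows:

$$\hat x_k = \arg\min_{x}\ (x - \hat x^u_k)^\top W^x_k(x - \hat x^u_k) \quad \text{s.t.}\quad \breve A^x_kx = \breve b^x_k. \tag{25}$$

The solution of (25) is given by $\hat x_k = \hat x^u_k - K^x_k(\breve A^x_k\hat x^u_k - \breve b^x_k)$, where

$$K^x_k = P^u_k\breve A^{x\top}_k\left(\breve A^x_kP^u_k\breve A^{x\top}_k\right)^{-1}. \tag{26}$$

Under the assumption that $\breve A^x_kx_k = \breve b^x_k$ holds, the state estimation error covariance can be expressed as

$$P^x_k = (I - K^x_k\breve A^x_k)P^u_k, \tag{27}$$

which follows from the same argument as (23). Notice that this assumption holds when the ground truth $x_k$ satisfies the active constraint.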
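The simplification from (22) to (23), used again for (27), can be confirmed numerically. The sketch below assumes NumPy and a hypothetical random covariance and active-constraint matrix; it also checks two consequences: the constrained covariance has no variance along the active constraint directions, and its trace does not exceed that of the unconstrained covariance.

```python
import numpy as np


def constrained_covariance(P, A_act):
    """Gain K of (26) and the constrained covariance (I - K A_act) P of (27)."""
    K = P @ A_act.T @ np.linalg.inv(A_act @ P @ A_act.T)
    return K, (np.eye(P.shape[0]) - K @ A_act) @ P


# Hypothetical example: 4 states with 2 active constraints.
rng = np.random.default_rng(1)
X = rng.standard_normal((4, 4))
P_unc = X @ X.T + 4.0 * np.eye(4)    # unconstrained covariance P^u_k
A_act = rng.standard_normal((2, 4))  # active rows of the constraint matrix
K, P_con = constrained_covariance(P_unc, A_act)
J = np.eye(4) - K @ A_act
P_sandwich = J @ P_unc @ J.T         # form analogous to (22)
```

The sandwich form and the one-sided form coincide, which is why (23) and (27) only need a single matrix product.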

4 Performance and Stability Analysis

In Section 4.1, we show that the projection induced by inequality constraints improves attack-resilient estimation accuracy and detection performance by decreasing the estimation errors and the false negative rate in attack detection. Notice that the estimate $\hat d_{k-1}$ satisfies the active constraint in (19), whereas the ground truth $d_{k-1}$ satisfies the inequality constraint in (4). However, it is uncertain whether the ground truth satisfies the active constraints. In this case, from (21) we have

| (28) |

A similar statement holds for the state estimation error:

| (29) |

These considerations indicate that the projection potentially induces biased estimates, which renders the traditional stability analysis for unbiased estimation invalid. In this context, we prove in Section 4.2 that the estimation errors of CARE are practically exponentially stable in mean square.

4.1 Performance Analysis

For the analysis of the performance through the projection, we first decompose the state estimation error into two orthogonal spaces as follows:

| (30) |

We will show that the errors in the space remain identical after the projection, while the errors in the space reduce through the projection, as in Lemma 5.

Lemma 5

The decomposition of in the space is equal to that of , and the decomposition of in the space is equal to that of scaled by , i.e.

| (31) | ||||

| (32) |

where , and

for . Similarly, it holds that and , where , and for .

Proof: The relationship in (31) can be obtained by applying to

which implies (31). The solution of defines a closed convex set . The point is not an element of the convex set. The point has the minimum distance from with metric in the convex set by (25). Since the solution is in the closed set , and is a weighted projection with weight , the relationship (32) holds. The statements for attack estimation errors can be proven by a similar procedure, which is omitted here.

With the results from Lemma 5, we can show that the projection reduces the estimation errors and the error covariances, as formulated in Theorem 6.

Theorem 6

CARE reduces the state and attack estimation errors and their error covariances from the unconstrained algorithm, i.e., and , and . Strict inequality holds if , and , respectively.

Proof: The statement for is the direct result of Lemma 5, where strict inequality holds if for some . The statement for can be proved by a similar procedure. To show the rest of the properties, we first identify the equality

| (33) |

Since we have by (26), it holds that , and , i.e. . Then, we have , which implies . Notice that (33) holds for as well. Similar to (23), we have . Given that is positive definite, we have the desired result . The relation for can be obtained by a similar procedure.
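A simple Monte Carlo experiment illustrates the error-reduction part of Theorem 6 in the easiest possible setting. It assumes a diagonal covariance and the box constraint $[-1, 1]^3$, for which the weighted projection (24) reduces to coordinate-wise clipping; the noise level, dimension, and seed are hypothetical. When the ground truth is feasible, clipping never moves the estimate farther from the truth, and it strictly helps whenever a bound is active.

```python
import numpy as np


def projection_never_hurts(trials=1000, dim=3, seed=2):
    """Check ||x_c - x|| <= ||x_u - x|| for clipping onto a box.

    Returns (property_held, number_of_strict_reductions).
    """
    rng = np.random.default_rng(seed)
    lo, hi = -1.0, 1.0
    n_strict = 0
    for _ in range(trials):
        x_true = rng.uniform(lo, hi, size=dim)         # feasible ground truth
        x_u = x_true + rng.normal(0.0, 0.8, size=dim)  # unconstrained estimate
        x_c = np.clip(x_u, lo, hi)                     # constrained estimate
        e_u = np.linalg.norm(x_u - x_true)
        e_c = np.linalg.norm(x_c - x_true)
        if e_c > e_u + 1e-12:
            return False, n_strict
        if e_c < e_u - 1e-12:
            n_strict += 1  # some bound was active and the error shrank
    return True, n_strict


ok, n_strict = projection_never_hurts()
```

For a general $P$ and polyhedral constraints, the corresponding guarantee holds in the weighted norm induced by $W = P^{-1}$, which is the metric the projection minimizes.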

The properties in Theorem 6 are desirable for accurate estimation as well as for attack detection. More specifically, since the false negative rate of a $\chi^2$ attack detector is a function of the estimate and the covariance as in (1), more accurate estimates can reduce the false negative rate under the following assumption.

Assumption 7

In the presence of the attack (), the following two conditions hold: (i) , and (ii) the ground truth satisfies the condition .

Remark 8

Assumption 7 implies that the unconstrained attack estimation error is small with respect to the ground truth , and the normalized ground truth attack signal is larger than ; otherwise, it cannot be distinguished from the noise. Notice that Assumption 7 is only considered for smaller false negative rates (Theorem 9), but not for the estimation performance (Theorem 6) and stability analysis in Section 4.2, where we will show the stability of the attack estimation error (Theorem 13) which renders the stability of .

According to (1), we denote the false negative rates of the proposed CARE and the unconstrained algorithm as and , respectively. The following Theorem 9 demonstrates that the false negative rate of CARE is less than or equal to that of the unconstrained algorithm.

Theorem 9

Under Assumption 7, given a set of attack vectors , the following inequality holds

| (34) |

Proof: Proving (34) is equivalent to showing that the number of false negative test results of CARE is less than or equal to that of the unconstrained algorithm:

| (35) |

If there is no projection (), it holds that and . And, if there is no attack (), it holds that . Therefore, we have

| (36) |

where . In the rest of the proof, we consider the case for . Rewriting the normalized test value from CARE by substituting with according to (22), we have the following:

| (37) |

where has been applied. Now we expand and rearrange (37), resulting in the following:

| (38) |

Applying (22) and (23) to (38), we have

| (39) |

Since satisfies the input active constraint, we can substitute with . Then the residue defined in (39) can be written as follows:

| (40) |

Expanding and rearranging (40), we have the following:

| (41) | |||

| (42) | |||

| (43) |

where . Using the result from Theorem 6 and the first inequality in Assumption 7, we obtain . Then we have , since (41) to (43) are positive, respectively. Therefore, from (39), we have

| (44) |

Considering the condition in (44), we can divide the set into three partitions as follows:

According to (2), we have

| (45) | ||||

| (46) |

Therefore, from (36), (45) and (46) we conclude that (35) holds, which completes the proof.

4.2 Stability Analysis

Although the projection reduces the estimation errors and their error covariances as shown in Theorem 6, it sacrifices unbiasedness according to (28) and (29). In the absence of the projection, Algorithm 1 reduces to the algorithm in yong2016unified , which is an unbiased estimator, whereas the traditional stability analysis for unbiased estimation becomes invalid once the projection is applied.

To prove the recursive stability of the biased estimation, it is essential to construct a recursive relation between the current estimation error and the previous estimation error . However, the construction is not straightforward compared to that in filtering with equality constraints simon2002kalman ; yong2015simultaneous or filtering without constraints anderson1981detectability ; yong2016unified . Especially, it is difficult to find the exact recursive relation between and , since is also a function of , i.e. . Then, we have , since the inequality holds. To address this issue, we decompose the estimation error into two orthogonal spaces as in (30). By Lemma 5, (30) becomes where . Note that is an unknown matrix and thus cannot be used for the algorithm. We use it only for analytical purposes. Now under the following assumptions, we present the stability analysis of the proposed Algorithm 1.

Assumption 10

We have . There exist , , , , , , , such that the following holds for all :

Remark 11

In Assumption 10, it is assumed that , i.e., the number of state constraints is less than the number of state variables. The remaining conditions of Assumption 10 are widely used in the literature on extended Kalman filtering kluge2010stochastic and nonlinear input and state estimation kim2017attack .

To show the boundedness of the unconstrained state error covariance , we first define the matrices and .

Theorem 12

Let the pair be uniformly detectable (see anderson1981detectability for the definition of uniform detectability). Then the unconstrained state error covariance is bounded, i.e., there exist non-negative constants and such that for all .

Proof: The unconstrained state estimation error can be found by

| (47) |

where , and . Therefore, the update law of unconstrained covariance is calculated from (47) and (27) as follows:

| (48) |

where . The covariance update law (48) is identical to the covariance update law of the Kalman filtering solution of the transformed system

| (49) | ||||

where . However, in the transformed system, the process noise and measurement noise are correlated, i.e., . To decouple the noises, we add a zero term to the state equation in (49), and obtain the following:

where , is the known input, and is the new process noise. The new process noise and the measurement noise could be decoupled by choosing the gain such that . The solution can be found by . Then, the system (49) becomes

Since the pair is uniformly detectable, by Theorem 5.2 in anderson1981detectability , the statement holds.

Theorem 12 shows that the uniform detectability of the transformed system is a sufficient condition for the boundedness of . Under the boundedness of from Theorem 12, we show in Theorem 13 that the constrained estimation errors and are practically exponentially stable in mean square.

Theorem 13

Consider Assumption 10 and assume that there exist non-negative constants and such that holds for all . Then the estimation errors and are practically exponentially stable in mean square, i.e., there exist constants such that for all

Proof: Consider the Lyapunov function After substituting (47) into the Lyapunov function, we obtain

| (50) |

By the uncorrelatedness property papoulis2002probability of , and , the Lyapunov function (50) becomes

| (51) |

The following statements are formulated to deal with each term in (51).

Claim 14

There exists a constant , such that .

Proof: Since , it holds that and thus . Therefore, because is a projection matrix. From Assumption 10 and Theorem 12, we have , and . Since is upper bounded by , we can have . Then we have

| (52) |

Substitution of (52) into (48) yields

| (53) |

where the inequality holds because . As , the inverse of the left-hand side of (53) exists and is symmetric positive definite. By the matrix inversion lemma tylavsky1986generalization , it follows that

| (54) |

Since is a positive definite matrix, and , we have

which implies . Since and , inequality (54) proves the claim.

Claim 15

There exists a positive constant , such that

Proof: The first term is bounded by

where we apply . Likewise, the second term is bounded by

These complete the proof.

Through Claims 14 and 15, (51) becomes

By recursively applying the above relation, we have

which implies practical exponential stability of the estimation error

where and have been applied. Constants are defined by

Since is a linear transformation of , the same stability holds for . Likewise, the same stability holds for in (21) because it is a linear transformation of . We omit its details.
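The intermediate recursion above is not reproduced here, so the sketch below only illustrates the generic mechanism behind practical exponential stability, assuming the bound takes the common form $V_k \le (1-a)V_{k-1} + c$ with $0 < a < 1$ and $c \ge 0$. Unrolling it gives $V_k \le (1-a)^kV_0 + c/a$: an exponentially decaying term plus a constant ultimate bound. The constants are hypothetical and are not the ones defined in the proof.

```python
def worst_case_sequence(V0, a, c, steps):
    """Iterate V_k = (1 - a) V_{k-1} + c, the worst case of the recursion."""
    V = [V0]
    for _ in range(steps):
        V.append((1.0 - a) * V[-1] + c)
    return V


def practical_bound(V0, a, c, k):
    """Closed-form bound (1 - a)^k V0 + c / a from summing a geometric series."""
    return (1.0 - a) ** k * V0 + c / a


# Hypothetical constants: the sequence decays to the ultimate bound c / a = 2.5.
V = worst_case_sequence(10.0, 0.2, 0.5, 50)
```

The additive constant is why the errors are only practically (not asymptotically) stable: they converge exponentially fast to a neighborhood of size $c/a$ rather than to zero.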

5 Illustrative Example

In this example, we test Algorithm 1 on a vehicle model with input and state constraints and compare its estimation accuracy and detection performance with those of an unconstrained algorithm.

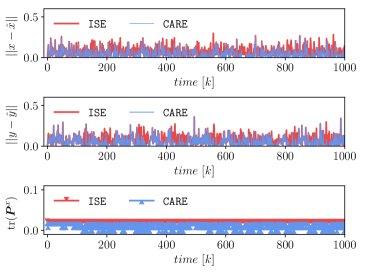

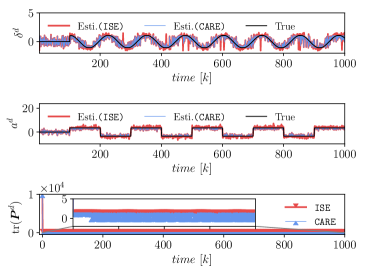

| CARE | 88.928 | 672.914 | 0.455 | 27.351∗ |

| ISE | 123.623 | 1041.837 | 0.613 | 40.577∗ |

∗ The summation ranges from to due to the large initialization (-scale), as shown in Fig. 4.

5.1 Experimental Setup

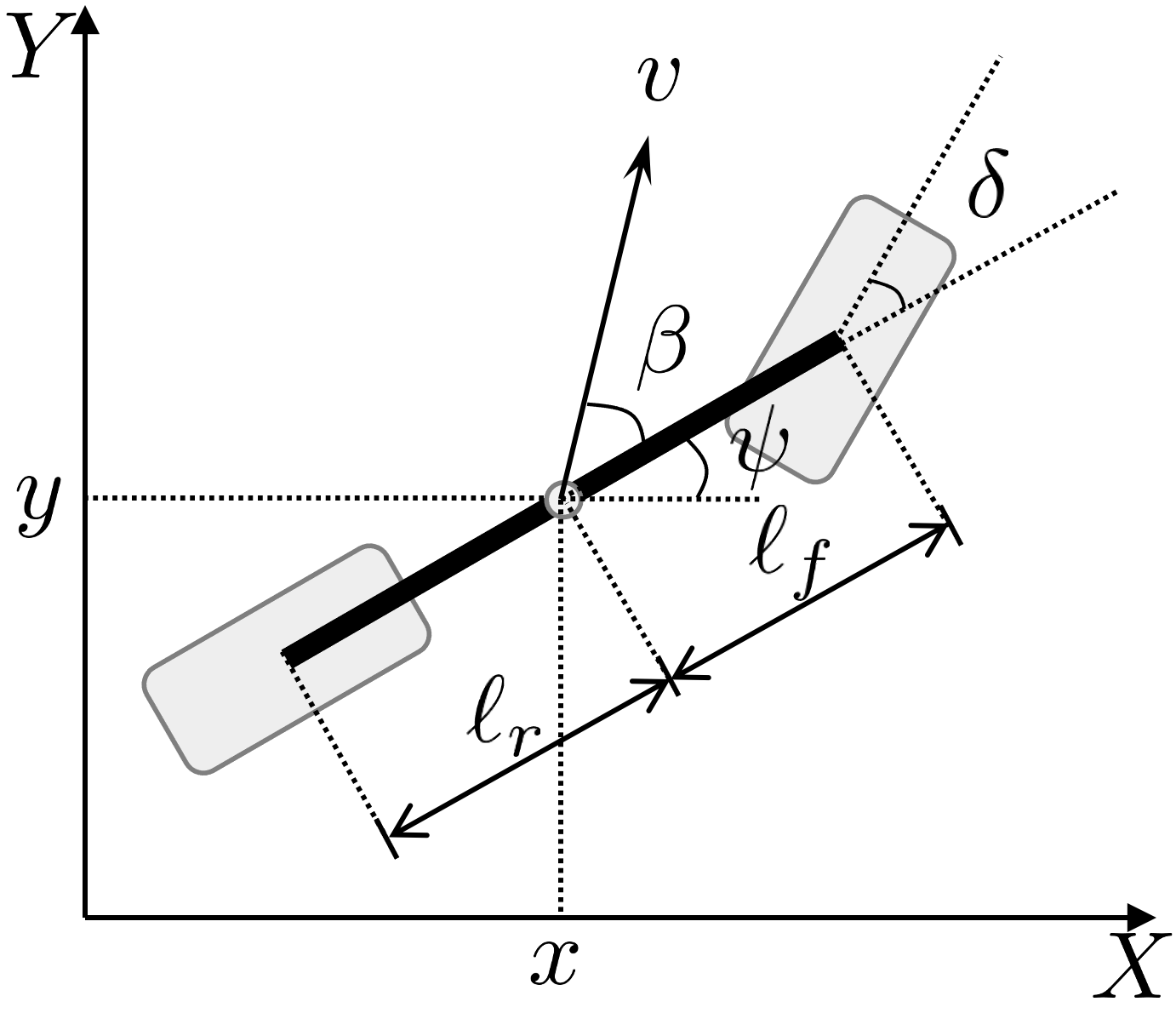

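We consider the kinematic bicycle model (Fig. 2) in rajamani2011vehicle . The nonlinear continuous-time model is given as

$$\dot{\bar x} = v\cos(\psi + \beta), \qquad \dot{\bar y} = v\sin(\psi + \beta), \qquad \dot\psi = \frac{v}{l_r}\sin\beta, \qquad \dot v = a, \qquad \beta = \tan^{-1}\!\left(\frac{l_r}{l_f + l_r}\tan\delta_f\right),$$

where $\bar x$ and $\bar y$ are the coordinates of the center of mass, $v$ is the velocity of the center of mass, $\beta$ is the angle of the velocity with respect to the longitudinal axis of the vehicle, $a$ is the acceleration, $\psi$ is the heading angle of the vehicle, $\delta_f$ is the steering angle of the front wheel, and $l_f$ and $l_r$ represent the distances from the center of mass of the vehicle to the front and rear axles, respectively.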

Since the proposed algorithm is designed for linear discrete-time systems, we linearize and discretize the model as in law2018robust with sampling time . We rewrite the system in the form of (3), where is the state vector, is the input vector, and is the attack input vector. We consider the scenario in which the attack is injected into the control input, i.e., $G_k = B_k$. The system matrices are given as follows:

and . The noise covariances and are considered as diagonal matrices with and .
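The linearization and discretization step can be sketched as follows. This is an illustrative assumption, not necessarily the scheme of law2018robust: it uses forward-Euler discretization and central-difference Jacobians, orders the state as $(\bar x, \bar y, \psi, v)$ and the input as $(a, \delta_f)$, and uses hypothetical values for the sampling time, $l_f$, $l_r$, and the operating point.

```python
import numpy as np


def bicycle_rhs(s, u, lf, lr):
    """Kinematic bicycle model; s = [x, y, psi, v], u = [a, delta_f]."""
    _, _, psi, v = s
    a, delta = u
    beta = np.arctan(lr / (lf + lr) * np.tan(delta))  # velocity angle beta
    return np.array([
        v * np.cos(psi + beta),
        v * np.sin(psi + beta),
        v / lr * np.sin(beta),
        a,
    ])


def euler_step(s, u, dt, lf, lr):
    """Forward-Euler discretization with sampling time dt."""
    return s + dt * bicycle_rhs(s, u, lf, lr)


def linearize(s0, u0, dt, lf, lr, eps=1e-6):
    """Central-difference Jacobians A = df/ds and B = df/du of the Euler map."""
    n, m = s0.size, u0.size
    A = np.zeros((n, n))
    B = np.zeros((n, m))
    for i in range(n):
        ds = np.zeros(n)
        ds[i] = eps
        A[:, i] = (euler_step(s0 + ds, u0, dt, lf, lr)
                   - euler_step(s0 - ds, u0, dt, lf, lr)) / (2 * eps)
    for j in range(m):
        du = np.zeros(m)
        du[j] = eps
        B[:, j] = (euler_step(s0, u0 + du, dt, lf, lr)
                   - euler_step(s0, u0 - du, dt, lf, lr)) / (2 * eps)
    return A, B


# Hypothetical operating point and parameters (not taken from the paper).
dt, lf, lr = 0.1, 1.2, 1.6
s0 = np.array([0.0, 0.0, 0.0, 10.0])  # straight driving at 10 m/s
u0 = np.array([0.0, 0.0])
A, B = linearize(s0, u0, dt, lf, lr)
G = B.copy()  # attack injected into the control input, G_k = B_k
```

At this straight-line operating point the Jacobians can also be derived by hand, which makes it easy to check the finite differences; along a curved trajectory the matrices become time-varying, matching the LTV form of (3).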

The vehicle is assumed to have state constraints on the location , and the velocity , and input constraints on the steering angle and the acceleration .

The unknown attack signals are

where .

The constraints on the vehicle can be formulated by inequality constraints as in (4):

To reduce the effect of instantaneous noise, the cumulative sum (CUSUM) algorithm is adopted lai1995sequential , in which the $\chi^2$ test is used in cumulative form. The CUSUM detector is characterized by the detector state :

| (55) |

where is the pre-determined forgetting rate. At each time step, the CUSUM detector (55) is used to update the detector state and detect the attack. In particular, we conclude that an attack is present if

| (56) |

All values are in standard SI units: (meter) for , , , and ; for , , , , and ; for ; for and .
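Because the exact forms of (55) and (56) are not reproduced here, the following sketch assumes a common forgetting-factor accumulation of the normalized $\chi^2$ values, $S_k = \rho S_{k-1} + z_k$ with $z_k = \hat d_k^\top P_k^{-1}\hat d_k$, and treats the alarm threshold as a given number. The update rule, the function name, and the parameter values are all assumptions for illustration; the detector in the paper may differ.

```python
def cusum_detector(stats, rho, threshold):
    """Forgetting-factor cumulative sum of chi-square statistics (assumed form).

    stats: per-step normalized values z_k = d_hat_k^T P_k^{-1} d_hat_k.
    rho: forgetting rate in [0, 1).
    threshold: alarm level for the detector state.
    Returns the detector states and the per-step alarm flags.
    """
    S = 0.0
    states, alarms = [], []
    for z in stats:
        S = rho * S + z  # assumed update S_k = rho * S_{k-1} + z_k
        states.append(S)
        alarms.append(S > threshold)
    return states, alarms


states, alarms = cusum_detector([1.0, 1.0, 1.0], rho=0.5, threshold=1.6)
# states == [1.0, 1.5, 1.75]; only the third step raises an alarm
```

Accumulating evidence in this way lets a persistent but small attack cross the threshold even when no single-step $\chi^2$ value does, at the cost of a short detection delay.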

5.2 Results

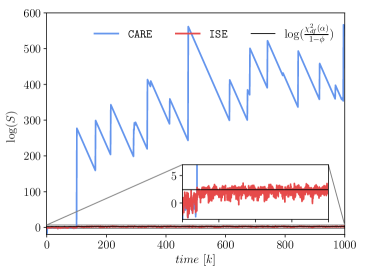

We compare the proposed algorithm (CARE) with the unified linear input and state estimator (ISE) introduced in yong2016unified . Figure 3 shows the estimation errors of the constrained states ( and ) and the traces of the state error covariances, and Fig. 4 shows the unknown attack signals, their estimates, and the traces of the attack estimation error covariances. As expected, CARE produces smaller state estimation errors and lower covariances. When the attack starts after , the estimates obtained by CARE are closer to the true values and have lower error covariances (cf. Table 1).

The estimates are used to compute the detector state in (55), and the statistical significance of the attack is tested with the CUSUM detector. The threshold is calculated by in (56) with the significance level and the forgetting rate . The detector states and the threshold are plotted in scale (Fig. 5). While the attack is present, CARE detects it, producing detector state values well above the threshold, whereas the detector state values from ISE oscillate around the threshold, resulting in a high false negative rate of .
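A false negative rate of this kind can be computed from the detector states once the attack interval is known. A minimal sketch, assuming the rate is the fraction of attack-present time steps on which the detector state does not exceed the threshold (the paper's exact definition is not shown in this section); `false_negative_rate` is a hypothetical helper:

```python
import numpy as np


def false_negative_rate(detector_states, threshold, attack_present):
    """Fraction of attack-present time steps on which no alarm is raised."""
    states = np.asarray(detector_states, dtype=float)
    attack = np.asarray(attack_present, dtype=bool)
    if not attack.any():
        raise ValueError("no attack-present time steps")
    # A miss is an attack-present step whose detector state stays at or below the threshold
    missed = (states <= threshold) & attack
    return float(missed.sum() / attack.sum())
```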

6 Conclusion

In this paper, we presented a constrained attack-resilient estimation algorithm (CARE) for linear stochastic cyber-physical systems. CARE first computes minimum-variance unbiased estimates of the states and attack signals and then projects these estimates onto the constrained space defined by physical constraints and operational limitations, which improves estimation accuracy and detection performance. We formally proved that the estimation errors and their covariances from CARE are less than those from unconstrained algorithms, and showed that this property reduces the false negative rate in attack detection. Moreover, we proved that the estimation errors of CARE are practically exponentially stable in mean square. A simulation of attacks on a vehicle demonstrates the effectiveness of the proposed algorithm and shows better attack resilience than an existing unconstrained algorithm.

Appendix A Gauss-Markov Theorem

Theorem 16 (Gauss-Markov Theorem sayed2003fundamentals )

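Given the linear model $y = Hx + v$, where $v$ is a zero-mean random variable with positive-definite covariance matrix $R$ and $H \in \mathbb{R}^{m \times n}$ is a full-rank matrix with $m \ge n$, the minimum-variance unbiased linear estimator of $x$ given $y$ is $\hat{x} = (H^\top R^{-1} H)^{-1} H^\top R^{-1} y$.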
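The estimator in Theorem 16 is the generalized least-squares estimator. The sketch below uses its own symbols: for $y = Hx + v$ with $\operatorname{cov}(v) = R \succ 0$ and $H$ of full column rank, it returns $\hat{x} = (H^\top R^{-1} H)^{-1} H^\top R^{-1} y$ together with its error covariance $(H^\top R^{-1} H)^{-1}$. It solves linear systems with $R$ instead of forming $R^{-1}$ explicitly, which is numerically preferable. The function name is hypothetical.

```python
import numpy as np


def gauss_markov_estimate(y, H, R):
    """Minimum-variance unbiased linear estimate of x from y = H x + v, cov(v) = R > 0.

    Returns x_hat = (H^T R^{-1} H)^{-1} H^T R^{-1} y and its error covariance
    (H^T R^{-1} H)^{-1}. H must have full column rank.
    """
    y = np.asarray(y, dtype=float)
    H = np.asarray(H, dtype=float)
    R = np.asarray(R, dtype=float)
    # Apply R^{-1} by solving linear systems rather than inverting R
    Rinv_H = np.linalg.solve(R, H)
    Rinv_y = np.linalg.solve(R, y)
    information = H.T @ Rinv_H  # H^T R^{-1} H
    x_hat = np.linalg.solve(information, H.T @ Rinv_y)
    covariance = np.linalg.inv(information)
    return x_hat, covariance
```

With $H = (1, 1, 1)^\top$, $R = \operatorname{diag}(1, 4, 4)$ and $y = (1, 2, 3)$, the estimate is the inverse-variance weighted mean $\hat{x} = 1.5$ with variance $2/3$.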

Acknowledgements

This work has been supported by the National Science Foundation (ECCS-1739732 and CMMI-1663460).

References

- (1) R. Rajkumar, I. Lee, L. Sha, J. Stankovic, Cyber-physical systems: the next computing revolution, in: IEEE Design Automation Conference, 2010, pp. 731–736.

- (2) A. A. Cárdenas, S. Amin, S. Sastry, Research challenges for the security of control systems, HotSec 5 (2008) 15.

- (3) R. M. Lee, M. J. Assante, T. Conway, German steel mill cyber attack, Industrial Control Systems 30 (2014) 22.

- (4) J. Slay, M. Miller, Lessons learned from the Maroochy water breach, in: Springer International Conference on Critical Infrastructure Protection, 2007, pp. 73–82.

- (5) S. Peterson, P. Faramarzi, Iran hijacked US drone, says Iranian engineer, Christian Science Monitor 15 (2011).

- (6) K. Koscher, A. Czeskis, F. Roesner, S. Patel, T. Kohno, S. Checkoway, D. McCoy, B. Kantor, D. Anderson, H. Shacham, et al., Experimental security analysis of a modern automobile, in: IEEE Symposium on Security and Privacy, 2010, pp. 447–462.

- (7) S. Checkoway, D. McCoy, B. Kantor, D. Anderson, H. Shacham, et al., Comprehensive experimental analyses of automotive attack surfaces, in: USENIX Security Symposium, Vol. 4, 2011, pp. 447–462.

- (8) J. Raiyn, A survey of cyber attack detection strategies, International Journal of Security and Its Applications 8 (1) (2014) 247–256.

- (9) A. A. Cardenas, S. Amin, S. Sastry, Secure control: Towards survivable cyber-physical systems, in: 28th International Conference on Distributed Computing Systems Workshops, 2008, pp. 495–500.

- (10) F. Pasqualetti, F. Dörfler, F. Bullo, Attack detection and identification in cyber-physical systems, IEEE Transactions on Automatic Control 58 (11) (2013) 2715–2729.

- (11) A. Teixeira, D. Pérez, H. Sandberg, K. H. Johansson, Attack models and scenarios for networked control systems, in: Proceedings of the 1st international conference on High Confidence Networked Systems, 2012, pp. 55–64.

- (12) A. Teixeira, I. Shames, H. Sandberg, K. H. Johansson, A secure control framework for resource-limited adversaries, Automatica 51 (2015) 135–148.

- (13) M. Pajic, J. Weimer, N. Bezzo, P. Tabuada, O. Sokolsky, I. Lee, G. J. Pappas, Robustness of attack-resilient state estimators, in: ACM/IEEE International Conference on Cyber-Physical Systems, 2014, pp. 163–174.

- (14) H. Fawzi, P. Tabuada, S. Diggavi, Secure estimation and control for cyber-physical systems under adversarial attacks, IEEE Transactions on Automatic Control 59 (6) (2014) 1454–1467.

- (15) M. Pajic, I. Lee, G. J. Pappas, Attack-resilient state estimation for noisy dynamical systems, IEEE Transactions on Control of Network Systems 4 (1) (2017) 82–92.

- (16) M. Naghnaeian, N. H. Hirzallah, P. G. Voulgaris, Security via multirate control in cyber–physical systems, Systems & Control Letters 124 (2019) 12–18.

- (17) N. H. Hirzallah, P. G. Voulgaris, N. Hovakimyan, On the estimation of signal attacks: A dual rate SD control framework, in: IEEE European Control Conference (ECC), 2019, pp. 4380–4385.

- (18) Y. Liu, P. Ning, M. K. Reiter, False data injection attacks against state estimation in electric power grids, ACM Transactions on Information and System Security 14 (1) (2011) 21–32.

- (19) Y. Mo, B. Sinopoli, Secure control against replay attacks, in: 47th Annual Allerton Conference on Communication, Control, and Computing, 2009, pp. 911–918.

- (20) Y. Mo, R. Chabukswar, B. Sinopoli, Detecting integrity attacks on SCADA systems, IEEE Transactions on Control Systems Technology 22 (4) (2014) 1396–1407.

- (21) S. Z. Yong, M. Zhu, E. Frazzoli, Resilient state estimation against switching attacks on stochastic cyber-physical systems, in: IEEE Conference on Decision and Control (CDC), 2015, pp. 5162–5169.

- (22) N. Forti, G. Battistelli, L. Chisci, B. Sinopoli, A Bayesian approach to joint attack detection and resilient state estimation, in: IEEE Conference on Decision and Control (CDC), 2016, pp. 1192–1198.

- (23) H. Kim, P. Guo, M. Zhu, P. Liu, Simultaneous input and state estimation for stochastic nonlinear systems with additive unknown inputs, Automatica 111 (2020) 108588.

- (24) A. Teixeira, S. Amin, H. Sandberg, K. H. Johansson, S. S. Sastry, Cyber security analysis of state estimators in electric power systems, in: IEEE Conference on Decision and Control (CDC), 2010, pp. 5991–5998.

- (25) D. Simon, T. L. Chia, Kalman filtering with state equality constraints, IEEE Transactions on Aerospace and Electronic Systems 38 (1) (2002) 128–136.

- (26) S. Ko, R. R. Bitmead, State estimation for linear systems with state equality constraints, Automatica 43 (8) (2007) 1363–1368.

- (27) D. Simon, Kalman filtering with state constraints: A survey of linear and nonlinear algorithms, IET Control Theory & Applications 4 (8) (2010) 1303–1318.

- (28) H. Kong, M. Shan, S. Sukkarieh, T. Chen, W. X. Zheng, Kalman filtering under unknown inputs and norm constraints, Automatica 133 (2021) 109871.

- (29) A. I. Mourikis, S. I. Roumeliotis, A multi-state constraint Kalman filter for vision-aided inertial navigation, in: IEEE International Conference on Robotics and Automation (ICRA), 2007, pp. 3565–3572.

- (30) L. Wang, Y. Chiang, F. Chang, Filtering method for nonlinear systems with constraints, IEE Proceedings - Control Theory and Applications 149 (6) (2002) 525–531.

- (31) S. Z. Yong, M. Zhu, E. Frazzoli, Simultaneous input and state estimation of linear discrete-time stochastic systems with input aggregate information, in: IEEE Conference on Decision and Control (CDC), 2015, pp. 461–467.

- (32) S. H. Kafash, J. Giraldo, C. Murguia, A. A. Cardenas, J. Ruths, Constraining attacker capabilities through actuator saturation, in: IEEE American Control Conference (ACC), 2018, pp. 986–991.

- (33) S. M. Djouadi, A. M. Melin, E. M. Ferragut, J. A. Laska, J. Dong, A. Drira, Finite energy and bounded actuator attacks on cyber-physical systems, in: IEEE European Control Conference (ECC), 2015, pp. 3659–3664.

- (34) S. Z. Yong, M. Zhu, E. Frazzoli, A unified filter for simultaneous input and state estimation of linear discrete-time stochastic systems, Automatica 63 (2016) 321–329.

- (35) H. Kim, P. Guo, M. Zhu, P. Liu, Attack-resilient estimation of switched nonlinear cyber-physical systems, in: IEEE American Control Conference (ACC), 2017, pp. 4328–4333.

- (36) P. Guo, H. Kim, N. Virani, J. Xu, M. Zhu, P. Liu, RoboADS: Anomaly detection against sensor and actuator misbehaviors in mobile robots, in: 48th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), 2018, pp. 574–585.

- (37) B. O. Teixeira, J. Chandrasekar, L. A. Tôrres, L. A. Aguirre, D. S. Bernstein, State estimation for linear and non-linear equality-constrained systems, International Journal of Control 82 (5) (2009) 918–936.

- (38) B. D. O. Anderson, J. B. Moore, Detectability and stabilizability of time-varying discrete-time linear systems, SIAM Journal on Control and Optimization 19 (1) (1981) 20–32.

- (39) S. Kluge, K. Reif, M. Brokate, Stochastic stability of the extended Kalman filter with intermittent observations, IEEE Transactions on Automatic Control 55 (2) (2010) 514–518.

- (40) A. Papoulis, S. U. Pillai, Probability, random variables, and stochastic processes, Tata McGraw-Hill Education, 2002.

- (41) D. J. Tylavsky, G. R. Sohie, Generalization of the matrix inversion lemma, Proceedings of the IEEE 74 (7) (1986) 1050–1052.

- (42) R. Rajamani, Vehicle dynamics and control, Springer Science & Business Media, 2011.

- (43) C. K. Law, D. Dalal, S. Shearrow, Robust model predictive control for autonomous vehicles/self driving cars, arXiv preprint arXiv:1805.08551 (2018).

- (44) T. L. Lai, Sequential changepoint detection in quality control and dynamical systems, Journal of the Royal Statistical Society. Series B (Methodological) (1995) 613–658.

- (45) A. H. Sayed, Fundamentals of adaptive filtering, John Wiley & Sons, 2003.