Contextual Bandits with Large Action Spaces: Made Practical

Abstract

A central problem in sequential decision making is to develop algorithms that are practical and computationally efficient, yet support the use of flexible, general-purpose models. Focusing on the contextual bandit problem, recent progress provides provably efficient algorithms with strong empirical performance when the number of possible alternatives (“actions”) is small, but guarantees for decision making in large, continuous action spaces have remained elusive, leading to a significant gap between theory and practice. We present the first efficient, general-purpose algorithm for contextual bandits with continuous, linearly structured action spaces. Our algorithm makes use of computational oracles for (i) supervised learning, and (ii) optimization over the action space, and achieves sample complexity, runtime, and memory independent of the size of the action space. In addition, it is simple and practical. We perform a large-scale empirical evaluation, and show that our approach typically enjoys superior performance and efficiency compared to standard baselines.

1 Introduction

We consider the design of practical, theoretically motivated algorithms for sequential decision making with contextual information, better known as the contextual bandit problem. Here, a learning agent repeatedly receives a context (e.g., a user’s profile), selects an action (e.g., a news article to display), and receives a reward (e.g., whether the article was clicked). Contextual bandits are a useful model for decision making in unknown environments in which both exploration and generalization are required, but pose significant algorithm design challenges beyond classical supervised learning. Recent years have seen development on two fronts: On the theoretical side, extensive research into finite-action contextual bandits has resulted in practical, provably efficient algorithms capable of supporting flexible, general-purpose models (Langford and Zhang, 2007; Agarwal et al., 2014; Foster and Rakhlin, 2020; Simchi-Levi and Xu, 2021; Foster and Krishnamurthy, 2021). Empirically, contextual bandits have been widely deployed in practice for online personalization and recommendation tasks (Li et al., 2010; Agarwal et al., 2016; Tewari and Murphy, 2017; Cai et al., 2021), leveraging the availability of high-quality action slates (e.g., subsets of candidate articles selected by an editor).

The developments above critically rely on the existence of a small number of possible decisions or alternatives. However, many applications demand the ability to make contextual decisions in large, potentially continuous spaces, where actions might correspond to images in a database or high-dimensional embeddings of rich documents such as webpages. Contextual bandits in large (e.g., million-action) settings remains a major challenge—both statistically and computationally—and constitutes a substantial gap between theory and practice. In particular:

-

•

Existing general-purpose algorithms (Langford and Zhang, 2007; Agarwal et al., 2014; Foster and Rakhlin, 2020; Simchi-Levi and Xu, 2021; Foster and Krishnamurthy, 2021) allow for the use of flexible models (e.g., neural networks, forests, or kernels) to facilitate generalization across contexts, but have sample complexity and computational requirements linear in the number of actions. These approaches can degrade in performance under benign operations such as duplicating actions.

- •

- •

As a result of these algorithmic limitations, empirical aspects of contextual decision making in large action spaces have remained relatively unexplored compared to the small-action regime (Bietti et al., 2021), with little in the way of readily deployable out-of-the-box solutions.

Contributions

We provide the first efficient algorithms for contextual bandits with continuous, linearly structured action spaces and general function approximation. Following Chernozhukov et al. (2019); Xu and Zeevi (2020); Foster et al. (2020), we adopt a modeling approach, and assume rewards for each context-action pair are structured as

| (1) |

Here is a known context-action embedding (or feature map) and is a context embedding to be learned online, which belongs to an arbitrary, user-specified function class . Our algorithm, SpannerIGW, is computationally efficient (in particular, the runtime and memory are independent of the number of actions) whenever the user has access to (i) an online regression oracle for supervised learning over the reward function class, and (ii) an action optimization oracle capable of solving problems of the form

for any . The former oracle follows prior approaches to finite-action contextual bandits (Foster and Rakhlin, 2020; Simchi-Levi and Xu, 2021; Foster and Krishnamurthy, 2021), while the latter generalizes efficient approaches to (non-contextual) linear bandits (McMahan and Blum, 2004; Dani et al., 2008; Bubeck et al., 2012; Hazan and Karnin, 2016). We provide a regret bound for SpannerIGW which scales as , and—like the computational complexity—is independent of the number of actions. Beyond these results, we provide a particularly practical variant of SpannerIGW (SpannerGreedy), which enjoys even faster runtime at the cost of slightly worse (-type) regret.

Our techniques

On the technical side, we show how to efficiently combine the inverse gap weighting technique (Abe and Long, 1999; Foster and Rakhlin, 2020) previously used in the finite-action setting with optimal design-based approaches for exploration with linearly structured actions. This offers a computational improvement upon the results of Xu and Zeevi (2020); Foster et al. (2020), which provide algorithms with -regret for the setting we consider, but require enumeration over the action space. Conceptually, our results expand upon the class of problems for which minimax approaches to exploration (Foster et al., 2021b) can be made efficient.

Empirical performance

As with previous approaches based on regression oracles, SpannerIGW is simple, practical, and well-suited to flexible, general-purpose function approximation. In extensive experiments ranging from thousands to millions of actions, we find that our methods typically enjoy superior performance compared to existing baselines. In addition, our experiments validate the statistical model in Eq. 1 which we find to be well-suited to learning with large-scale language models (Devlin et al., 2019).

1.1 Organization

This paper is organized as follows. In Section 2, we formally introduce our statistical model and the computational oracles upon which our algorithms are built. Subsequent sections are dedicated to our main results.

-

•

As a warm-up, Section 3 presents a simplified algorithm, SpannerGreedy, which illustrates the principle of exploration over an approximate optimal design. This algorithm is practical and oracle-efficient, but has suboptimal -type regret.

- •

Section 5 presents empirical results for both algorithms. We close with discussion of additional related work (Section 6) and future directions (Section 7). All proofs are deferred to the appendix.

2 Problem Setting

The contextual bandit problem proceeds over rounds. At each round , the learner receives a context (the context space), selects an action (the action space), and then observes a reward , where is the underlying reward function. We assume that for each round , conditioned on , the reward is sampled from a (unknown) distribution . We allow both the contexts and the distributions to be selected in an arbitrary, potentially adaptive fashion based on the history.

Function approximation

Following a standard approach to developing efficient contextual bandit methods, we take a modeling approach, and work with a user-specified class of regression functions that aims to model the underlying mean reward function. We make the following realizability assumption (Agarwal et al., 2012; Foster et al., 2018; Foster and Rakhlin, 2020; Simchi-Levi and Xu, 2021).

Assumption 1 (Realizability).

There exists a regression function such that for all and .

Without further assumptions, there exist function classes for which the regret of any algorithm must grow proportionally to (e.g., Agarwal et al. (2012)). In order to facilitate generalization across actions and achieve sample complexity and computational complexity independent of , we assume that each function is linear in a known (context-dependent) feature embedding of the action. Following Xu and Zeevi (2020); Foster et al. (2020), we assume that takes the form

where is a known, context-dependent action embedding and is a user-specified class of context embedding functions.

This formulation assumes linearity in the action space (after featurization), but allows for nonlinear, learned dependence on the context through the function class , which can be taken to consist of neural networks, forests, or any other flexible function class a user chooses. For example, in news article recommendation, might correspond to an embedding of an article obtained using a large pre-trained language-model, while might correspond to a task-dependent embedding of a user , which our methods can learn online. Well-studied special cases include the linear contextual bandit setting (Chu et al., 2011; Abbasi-Yadkori et al., 2011), which corresponds to the special case where each has the form for some fixed , as well as the standard finite-action contextual bandit setting, where and .

We let denote the embedding for which . We assume that and . In addition, we assume that for all .

Regret

For each regression function , let denote the induced policy, and define as the optimal policy. We measure the performance of the learner in terms of regret:

2.1 Computational Oracles

To derive efficient algorithms with sublinear runtime, we make use of two computational oracles: First, following Foster and Rakhlin (2020); Simchi-Levi and Xu (2021); Foster et al. (2020, 2021a), we use an online regression oracle for supervised learning over the reward function class . Second, we use an action optimization oracle, which facilitates linear optimization over the action space (McMahan and Blum, 2004; Dani et al., 2008; Bubeck et al., 2012; Hazan and Karnin, 2016)

Function approximation: Regression oracles

A fruitful approach to designing efficient contextual bandit algorithms is through reduction to supervised regression with the class , which facilitates the use of off-the-shelf supervised learning algorithms and models (Foster and Rakhlin, 2020; Simchi-Levi and Xu, 2021; Foster et al., 2020, 2021a). Following Foster and Rakhlin (2020), we assume access to an online regression oracle , which is an algorithm for online learning (or, sequential prediction) with the square loss.

We consider the following protocol. At each round , the oracle produces an estimator , then receives a context-action-reward tuple . The goal of the oracle is to accurately predict the reward as a function of the context and action, and we evaluate its prediction error via the square loss . We measure the oracle’s cumulative performance through square-loss regret to .

Assumption 2 (Bounded square-loss regret).

The regression oracle guarantees that for any (potentially adaptively chosen) sequence ,

for some (non-data-dependent) function .

We let denote an upper bound on the time required to (i) query the oracle’s estimator with and receive the vector , and (ii) update the oracle with the example . We let denote the maximum memory used by the oracle throughout its execution.

Online regression is a well-studied problem, with computationally efficient algorithms for many models. Basic examples include finite classes , where one can attain (Vovk, 1998), and linear models (), where the online Newton step algorithm (Hazan et al., 2007) satisfies 2 with . More generally, even for classes such as deep neural networks for which provable guarantees may not be available, regression is well-suited to gradient-based methods. We refer to Foster and Rakhlin (2020); Foster et al. (2020) for more comprehensive discussion.

Large action spaces: Action optimization oracles

The regression oracle setup in the prequel is identical to that considered in the finite-action setting (Foster and Rakhlin, 2020). In order to develop efficient algorithms for large or infinite action spaces, we assume access to an oracle for linear optimization over actions.

Definition 1 (Action optimization oracle).

An action optimization oracle takes as input a context , and vector and returns

| (2) |

For a single query to the oracle, We let denote a bound on the runtime for a single query to the oracle. We let denote the maximum memory used by the oracle throughout its execution.

The action optimization oracle in Eq. 2 is widely used throughout the literature on linear bandits (Dani et al., 2008; Chen et al., 2017; Cao and Krishnamurthy, 2019; Katz-Samuels et al., 2020), and can be implemented in polynomial time for standard combinatorial action spaces. It is a basic computational primitive in the theory of convex optimization, and when is convex, it is equivalent (up to polynomial-time reductions) to other standard primitives such as separation oracles and membership oracles (Schrijver, 1998; Grötschel et al., 2012). It also equivalent to the well-known Maximum Inner Product Search (MIPS) problem (Shrivastava and Li, 2014), for which sublinear-time hashing based methods are available.

Example 1.

Let be a graph, and let represent a matching and be a vector of edge weights. The problem of finding the maximum-weight matching for a given set of edge weights can be written as a linear optimization problem of the form in Eq. 2, and Edmonds’ algorithm (Edmonds, 1965) can be used to find the maximum-weight matching in time.

Action representation

We define as the number of bits used to represent actions in , which is always upper bounded by for finite action sets, and by for actions that can be represented as vectors in . Tighter bounds are possible with additional structual assumptions. Since representing actions is a minimal assumption, we hide the dependence on in big- notation for our runtime and memory analysis.

2.2 Additional Notation

We adopt non-asymptotic big-oh notation: For functions , we write (resp. ) if there exists a constant such that (resp. ) for all . We write if , if . We use only in informal statements to highlight salient elements of an inequality.

For a vector , we let denote the euclidean norm. We define for a positive definite matrix . For an integer , we let denote the set . For a set , we let denote the set of all Radon probability measures over . We let denote the set of all finitely supported convex combinations of elements in . When is finite, we let denote the uniform distribution over all the elements in . We let denote the delta distribution on . We use the convention and .

3 Warm-Up: Efficient Algorithms via Uniform Exploration

In this section, we present our first result: an efficient algorithm based on uniform exploration over a representative basis (SpannerGreedy; Algorithm 1). This algorithm achieves computational efficiency by taking advantage of an online regression oracle, but its regret bound has sub-optimal dependence on . Beyond being practically useful in its own right, this result serves as a warm-up for Section 4.

Our algorithm is based on exploration with a G-optimal design for the embedding , which is a distribution over actions that minimizes a certain notion of worse-case variance (Kiefer and Wolfowitz, 1960; Atwood, 1969).

Definition 2 (G-optimal design).

Let a set be given. A distribution is said to be a G-optimal design with approximation factor if

where .

The following classical result guarantees existence of a G-optimal design.

Lemma 1 (Kiefer and Wolfowitz (1960)).

For any compact set , there exists an optimal design with .

Algorithm 1 uses optimal design as a basis for exploration: At each round, the learner obtains an estimator from the regression oracle , then appeals to a subroutine to compute an (approximate) G-optimal design for the action embedding . Fix an exploration parameter , the algorithm then samples an action from the optimal design with probability (“exploration”), or plays the greedy action with probability (“exploitation”). Algorithm 1 is efficient whenever an approximate optimal design can be computed efficiently, which can be achieved using Algorithm 5. We defer a detailed discussion of efficiency for a moment, and first state the main regret bound for the algorithm.

Theorem 1.

With a -approximate optimal design subroutine and an appropriate choice for , Algorithm 1, with probability at least , enjoys regret

In particular, when invoked with Algorithm 5 (with ) as a subroutine, the algorithm enjoys regret

and has per-round runtime and maximum memory .

Computational efficiency

The computational efficiency of Algorithm 1 hinges on the ability to efficiently compute an approximate optimal design (or, by convex duality, the John ellipsoid (John, 1948)) for the set . All off-the-shelf optimal design solvers that we are aware of require solving quadratic maximization subproblems, which in general cannot be reduced to a linear optimization oracle (Definition 1). While there are some special cases where efficient solvers exist (e.g., when is a polytope (Cohen et al. (2019) and references therein)), computing an exact optimal design is NP-hard in general (Grötschel et al., 2012; Summa et al., 2014). To overcome this issue, we use the notion of a barycentric spanner, which acts as an approximate optimal design and can be computed efficiently using an action optimization oracle.

Definition 3 (Awerbuch and Kleinberg (2008)).

Let a compact set of full dimension be given. For , a subset of points is said to be a -approximate barycentric spanner for if every point can be expressed as a weighted combination of points in with coefficients in .

The following result shows that any barycentric spanner yields an approximate optimal design.

Lemma 2.

If is a -approximate barycentric spanner for , then is a -approximate optimal design.

Using an algorithm introduced by Awerbuch and Kleinberg (2008), one can efficiently compute the -approximate barycentric spanner for the set using an action optimization oracle; their method is restated stated as Algorithm 5 in Appendix A.

Key features of Algorithm 1

While the regret bound for Algorithm 1 scales with , which is not optimal, this result constitutes the first computationally efficient algorithm for contextual bandits with linearly structured actions and general function approximation. Additional features include:

-

•

Simplicity and practicality. Appealing to uniform exploration makes Algorithm 1 easy to implement and highly practical. In particular, in the case where the action embedding does not depend on the context (i.e., ) an approximate design can be precomputed and reused, reducing the per-round runtime to and the maximum memory to .

-

•

Lifting optimal design to contextual bandits. Previous bandit algorithms based on optimal design are limited to the non-contextual setting, and to pure exploration. Our result highlights for the first time that optimal design can be efficiently combined with general function approximation.

Proof sketch for Theorem 1

To analyze Algorithm 1, we follow a recipe introduced by Foster and Rakhlin (2020); Foster et al. (2021b) based on the Decision-Estimation Coefficient (DEC),111The original definition of the Decision-Estimation Coefficient in Foster et al. (2021b) uses Hellinger distance rather than squared error. The squared error version we consider here leads to tighter guarantees for bandit problems where the mean rewards serve as a sufficient statistic. defined as , where

| (3) |

Foster et al. (2021b) consider a meta-algorithm which, at each round , (i) computes by appealing to a regression oracle, (ii) computes a distribution that solves the minimax problem in Eq. 3 with and plugged in, and (iii) chooses the action by sampling from this distribution. One can show (Lemma 7 in Appendix A) that for any , this strategy enjoys the following regret bound:

| (4) |

More generally, if one computes a distribution that does not solve Eq. 3 exactly, but instead certifies an upper bound on the DEC of the form , the same result holds with replaced by . Algorithm 1 is a special case of this meta-algorithm, so to bound the regret it suffices to show that the exploration strategy in the algorithm certifies a bound on the DEC.

Lemma 3.

For any , by choosing , the exploration strategy in Algorithm 1 certifies that .

4 Efficient, Near-Optimal Algorithms

In this section we present SpannerIGW (Algorithm 2), an efficient algorithm with regret (Algorithm 2). We provide the algorithm and statistical guarantees in Section 4.1, then discuss computational efficiency in Section 4.2.

4.1 Algorithm and Statistical Guarantees

Building on the approach in Section 3, SpannerIGW uses the idea of exploration with an optimal design. However, in order to achieve regret, we combine optimal design with the inverse gap weighting (IGW) technique. previously used in the finite-action contextual bandit setting (Abe and Long, 1999; Foster and Rakhlin, 2020).

Recall that for finite-action contextual bandits, the inverse gap weighting technique works as follows. Given a context and estimator from the regression oracle , we assign a distribution to actions in via the rule

where and is chosen such that . This strategy certifies that , which leads to regret . While this is essentially optimal for the finite-action setting, the linear dependence on makes it unsuitable for the large-action setting we consider.

To lift the IGW strategy to the large-action setting, Algorithm 2 combines it with optimal design with respect to a reweighted embedding. Let be given. For each action , we define a reweighted embedding via

| (5) |

where and is a reweighting parameter to be tuned later. This reweighting is action-dependent since term appears on the denominator. Within Algorithm 2, we compute a new reweighted embedding at each round using , the output of the regression oracle .

Algorithm 2 proceeds by computing an optimal design with respect to the reweighted embedding defined in Eq. 5. The algorithm then creates a distribution by mixing the optimal design with a delta mass at the greedy action . Finally, in Eq. 6, the algorithm computes an augmented version of the inverse gap weighting distribution by reweighting according to . This approach certifies the following bound on the Decision-Estimation Coefficient.

Lemma 4.

For any , by setting , the exploration strategy used in Algorithm 2 certifies that .

This lemma shows that the reweighted IGW strategy enjoys the best of both worlds: By leveraging optimal design, we ensure good coverage for all actions, leading to (rather than ) scaling, and by leveraging inverse gap weighting, we avoid excessive exploration, leading rather than scaling. Combining this result with Lemma 7 leads to our main regret bound for SpannerIGW.

| (6) |

Theorem 2.

Let be given. With a -approximate optimal design subroutine and an appropriate choice for , Algorithm 2 ensures that with probability at least ,

In particular, when invoked with Algorithm 3 (with ) as a subroutine, the algorithm has

and has per-round runtime and the maximum memory .

Algorithm 2 is the first computationally efficient algorithm with -regret for contextual bandits with general function approximation and linearly structured action spaces. In what follows, we show how to leverage the action optimization oracle (Definition 1) to achieve this efficiency.

4.2 Computational Efficiency

The computational efficiency of Algorithm 2 hinges on the ability to efficiently compute an optimal design. As with Algorithm 1, we address this issue by appealing to the notion of a barycentric spanner, which serves as an approximate optimal design. However, compared to Algorithm 1, a substantial additional challenge is that Algorithm 2 requires an approximate optimal design for the reweighted embeddings. Since the reweighting is action-dependent, the action optimization oracle cannot be directly applied to optimize over the reweighted embeddings, which prevents us from appealing to an out-of-the-box solver (Algorithm 5) in the same fashion as the prequel.

To address the challenges above, we introduce ReweightedSpanner (Algorithm 3), a barycentric spanner computation algorithm which is tailored to the reweighted embedding . To describe the algorithm, let us introduce some additional notation. For a set of actions, we let denote the determinant of the -by- matrix whose columns are . ReweightedSpanner adapts the barycentric spanner computation approach of Awerbuch and Kleinberg (2008), which aims to identify a subset with that approximately maximizes . The key feature of ReweightedSpanner is a subroutine, IGW-ArgMax (Algorithm 4), which implements an (approximate) action optimization oracle for the reweighted embedding:

| (7) |

IGW-ArgMax uses line search reduce the problem in Eq. 7 to a sequence of linear optimization problems with respect to the unweighted embeddings, each of which can be solved using . This yields the following guarantee for Algorithm 3.

Theorem 3.

Suppose that Algorithm 3 is invoked with parameters , , and , and that the initialization set satisfies . Then the algorithm returns a -approximate barycentric spanner with respect to the reweighted embedding set , and does so with runtime and memory.

We refer to Section C.1 for self-contained analysis of IGW-ArgMax.

On the initialization requirement

The runtime for Algorithm 3 scales with , where is such that for the initial set . In Section C.3, we provide computationally efficient algorithms for initialization under various assumptions on the action space.

5 Empirical Results

In this section we investigate the empirical performance of SpannerGreedy and SpannerIGW through three experiments. First, we compare the spanner-based algorithms to state-of-the art finite-action algorithms on a large-action dataset; this experiment features nonlinear, learned context embeddings . Next, we study the impact of redundant actions on the statistical performance of said algorithms. Finally, we experiment with a large-scale large-action contextual bandit benchmark, where we find that the spanner-based methods exhibit excellent performance.

Preliminaries

We conduct experiments on three datasets, whose details are summarized in Table 1. oneshotwiki (Singh et al., 2012; Vasnetsov, 2018) is a named-entity recognition task where contexts are text phrases preceding and following the mention text, and where actions are text phrases corresponding to the concept names. amazon-3m (Bhatia et al., 2016) is an extreme multi-label dataset whose contexts are text phrases corresponding to the title and description of an item, and whose actions are integers corresponding to item tags. Actions are embedded into with specified in Table 1. We construct binary rewards for each dataset, and report 90% bootstrap confidence intervals (CIs) of the rewards in the experiments. We defer other experimental details to Section D.1. Code to reproduce all results is available at https://github.com/pmineiro/linrepcb.

| Dataset | |||

|---|---|---|---|

| oneshotwiki-311 | 622000 | 311 | 50 |

| oneshotwiki-14031 | 2806200 | 14031 | 50 |

| amazon-3m | 1717899 | 2812281 | 800 |

Comparison with finite-action baselines

We compare SpannerGreedy and SpannerIGW with their finite-action counterparts -Greedy and SquareCB (Foster and Rakhlin, 2020) on the oneshotwiki-14031 dataset. We consider bilinear models in which regression functions take the form where is a matrix of learned parameters; the deep models of the form , where is a learned two-layer neural network and contains learned parameters as before.222Also see Section D.1 for details. Table 2 presents our results. We find that SpannerIGW performs best, and that both spanner-based algorithms either tie or exceed their finite-action counterparts. In addition, we find that working with deep models uniformly improves performance for all methods. We refer to Table 4 in Section D.3 for timing information.

| Algorithm | Regression Function | |

|---|---|---|

| Bilinear | Deep | |

| best constant | ||

| -Greedy | ||

| SpannerGreedy | ||

| SquareCB | ||

| SpannerIGW | ||

| supervised | ||

Impact of redundancy

Finite-action contextual bandit algorithms can explore excessively in the presence of redundant actions. To evaluate performance in the face of redundancy, we augment oneshotwiki-311 by duplicating action the final action. Table 3 displays the performance of SpannerIGW and its finite-action counterpart, SquareCB, with a varying number of duplicates. We find that SpannerIGW is completely invariant to duplicates (in fact, the algorithm produces numerically identical output when the random seed is fixed), but SquareCB is negatively impacted and over-explores the duplicated action. SpannerGreedy and -Greedy behave analogously (not shown).

| Duplicates | SpannerIGW | SquareCB |

|---|---|---|

| 0 | ||

| 16 | ||

| 256 | ||

| 1024 |

Large scale exhibition

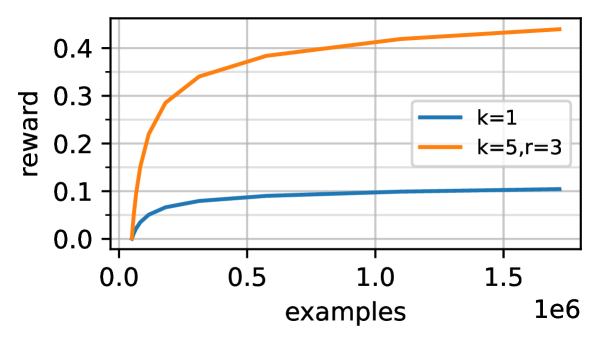

We conduct a large scale experiment using the amazon-3m dataset. Following Sen et al. (2021), we study the top- setting where actions are selected at each round. Out of the total number of actions sampled, we let denote the number of actions sampled for exploration. We apply SpannerGreedy for this dataset and consider regression functions similar to the deep models discussed before. The setting corresponds to running our algorithm unmodified, and corresponds to selecting 5 actions per round and using 3 exploration slots. Fig. 1 in Section D.4 displays the results. For the final CI is , and for the final CI is .

In the setup with , our results are directly comparable to Sen et al. (2021), who evaluated a tree-based contextual bandit method on the same dataset. The best result from Sen et al. (2021) achieves roughly 0.19 reward with , which we exceed by a factor of 2. This indicates that our use of embeddings provides favorable inductive bias for this problem, and underscores the broad utility of our techniques (which leverage embeddings). For , our inference time on a commodity CPU with batch size 1 is 160ms per example, which is slower than the time of 7.85ms per example reported in Sen et al. (2021).

6 Additional Related Work

In this section we highlight some relevant lines of research not already discussed.

Efficient general-purpose contextual bandit algorithms

There is a long line of research on computationally efficient methods for contextual bandits with general function approximation, typically based on reduction to either cost-sensitive classification oracles (Langford and Zhang, 2007; Dudik et al., 2011; Agarwal et al., 2014) or regression oracles (Foster et al., 2018; Foster and Rakhlin, 2020; Simchi-Levi and Xu, 2021). Most of these works deal with a finite action spaces and have regret scaling with the number of actions, which is necessary without further structural assumptions (Agarwal et al., 2012). An exception is the works of Foster et al. (2020) and Xu and Zeevi (2020), both of which consider the same setting as the present paper. Both of the algorithms in these works require solving subproblems based on maximizing quadratic forms (which is NP-hard in general (Sahni, 1974)), and cannot directly take advantage of the linear optimization oracle we consider. Also related is the work of Zhang (2021), which proposes a posterior sampling-style algorithm for the setting we consider. This algorithm is not fully comparable computationally, as it requires sampling from specific posterior distribution; it is unclear whether this can be achieved in a provably efficient fashion.

Linear contextual bandits

The linear contextual bandit problem is a special case of our setting in which is constant (that is, the reward function only depends on the context through the feature map ). The most well-studied families of algorithms for this setting are UCB-style algorithms and posterior sampling. With a well-chosen prior and posterior distribution, posterior sampling can be implemented efficiently (Agrawal and Goyal, 2013), but it is unclear how to efficiently adapt this approach to accomodate general function approximation. Existing UCB-type algorithms require solving sub-problems based on maximizing quadratic forms, which is NP-hard in general (Sahni, 1974). One line of research aims to make UCB efficient by using hashing-based methods (MIPS) to approximate the maximum inner product (Yang et al., 2021; Jun et al., 2017). These methods have runtime sublinear (but still polynomial) in the number of actions.

Non-contextual linear bandits

For the problem of non-contextual linear bandits (with either stochastic or adversarial rewards), there is a long line of research on efficient algorithms that can take advantage of linear optimization oracles (Awerbuch and Kleinberg, 2008; McMahan and Blum, 2004; Dani and Hayes, 2006; Dani et al., 2008; Bubeck et al., 2012; Hazan and Karnin, 2016; Ito et al., 2019); see also work on the closely related problem of combinatorial pure exploration (Chen et al., 2017; Cao and Krishnamurthy, 2019; Katz-Samuels et al., 2020; Wagenmaker et al., 2021). In general, it is not clear how to lift these techniques to contextual bandits with linearly-structured actions and general function approximation. We also mention that optimal design has been applied in the context of linear bandits, but these algorithms are restricted to the non-contextual setting (Lattimore and Szepesvári, 2020; Lattimore et al., 2020), or to pure exploration (Soare et al., 2014; Fiez et al., 2019). The only exception we are aware of is Ruan et al. (2021), who extend these developments to linear contextual bandits (i.e., where ), but critically use that contexts are stochastic.

Other approaches

Another line of research provides efficient contextual bandit methods under specific modeling assumptions on the context space or action space that differ from the ones we consider here. Zhou et al. (2020); Xu et al. (2020); Zhang et al. (2021); Kassraie and Krause (2022) provide generalizations of the UCB algorithm and posterior sampling based on the Neural Tangent Kernel (NTK). These algorithms can be used to learn context embeddings (i.e., ) with general function approximation, but only lead to theoretical guarantees under strong RKHS-based assumptions. For large action spaces, these algorithms typically require enumeration over actions. Majzoubi et al. (2020) consider a setting with nonparametric action spaces and design an efficient tree-based learner; their guarantees, however, scale exponentially in the dimensionality of action space. Sen et al. (2021) provide heuristically-motivated but empirically-effective tree-based algorithms for contextual bandits with large action spaces, with theoretical guarantees when the actions satisfy certain tree-structured properties. Lastly, another empirically-successful approach is the policy gradient method (e.g., Williams (1992); Bhatnagar et al. (2009); Pan et al. (2019)). On the theoretical side, policy gradient methods do not address the issue of systematic exploration, and—to our knowledge—do not lead to provable guarantees for the setting considered in our paper.

7 Discussion

We provide the first efficient algorithms for contextual bandits with continuous, linearly structured action spaces and general-purpose function approximation. We highlight some natural directions for future research below.

-

•

Efficient algorithms for nonlinear action spaces. Our algorithms take advantage of linearly structured action spaces by appealing to optimal design. Can we develop computationally efficient methods for contextual bandits with nonlinear dependence on the action space?

-

•

Reinforcement learning. The contextual bandit problem is a special case of the reinforcement learning problem with horizon one. Given our positive results in the contextual bandit setting, a natural next step is to extend our methods to reinforcement learning problems with large action/decision spaces. For example, Foster et al. (2021b) build on our computational tools to provide efficient algorithms for reinforcement learning with bilinear classes.

Beyond these directions, natural domains in which to extend our techniques include pure exploration and off-policy learning with linearly structured actions.

References

- Abbasi-Yadkori et al. (2011) Yasin Abbasi-Yadkori, Dávid Pál, and Csaba Szepesvári. Improved algorithms for linear stochastic bandits. In NIPS, volume 11, pages 2312–2320, 2011.

- Abe and Long (1999) Naoki Abe and Philip M Long. Associative reinforcement learning using linear probabilistic concepts. In ICML, pages 3–11. Citeseer, 1999.

- Agarwal et al. (2012) Alekh Agarwal, Miroslav Dudík, Satyen Kale, John Langford, and Robert Schapire. Contextual bandit learning with predictable rewards. In Artificial Intelligence and Statistics, pages 19–26. PMLR, 2012.

- Agarwal et al. (2014) Alekh Agarwal, Daniel Hsu, Satyen Kale, John Langford, Lihong Li, and Robert Schapire. Taming the monster: A fast and simple algorithm for contextual bandits. In International Conference on Machine Learning, pages 1638–1646. PMLR, 2014.

- Agarwal et al. (2016) Alekh Agarwal, Sarah Bird, Markus Cozowicz, Luong Hoang, John Langford, Stephen Lee, Jiaji Li, Dan Melamed, Gal Oshri, Oswaldo Ribas, Siddhartha Sen, and Aleksandrs Slivkins. Making contextual decisions with low technical debt. arXiv:1606.03966, 2016.

- Agrawal and Goyal (2013) Shipra Agrawal and Navin Goyal. Thompson sampling for contextual bandits with linear payoffs. In International Conference on Machine Learning, pages 127–135. PMLR, 2013.

- Atwood (1969) Corwin L Atwood. Optimal and efficient designs of experiments. The Annals of Mathematical Statistics, pages 1570–1602, 1969.

- Awerbuch and Kleinberg (2008) Baruch Awerbuch and Robert Kleinberg. Online linear optimization and adaptive routing. Journal of Computer and System Sciences, 74(1):97–114, 2008.

- Bergstra and Bengio (2012) James Bergstra and Yoshua Bengio. Random search for hyper-parameter optimization. Journal of machine learning research, 13(2), 2012.

- Bhatia et al. (2016) K. Bhatia, K. Dahiya, H. Jain, P. Kar, A. Mittal, Y. Prabhu, and M. Varma. The extreme classification repository: Multi-label datasets and code, 2016. URL http://manikvarma.org/downloads/XC/XMLRepository.html.

- Bhatnagar et al. (2009) Shalabh Bhatnagar, Richard S Sutton, Mohammad Ghavamzadeh, and Mark Lee. Natural Actor–Critic algorithms. Automatica, 45(11):2471–2482, 2009.

- Bietti et al. (2021) Alberto Bietti, Alekh Agarwal, and John Langford. A contextual bandit bake-off. Journal of Machine Learning Research, 22(133):1–49, 2021.

- Bubeck et al. (2012) Sébastien Bubeck, Nicolo Cesa-Bianchi, and Sham M Kakade. Towards minimax policies for online linear optimization with bandit feedback. In Conference on Learning Theory, pages 41–1. JMLR Workshop and Conference Proceedings, 2012.

- Cai et al. (2021) William Cai, Josh Grossman, Zhiyuan Jerry Lin, Hao Sheng, Johnny Tian-Zheng Wei, Joseph Jay Williams, and Sharad Goel. Bandit algorithms to personalize educational chatbots. Machine Learning, pages 1–30, 2021.

- Cao and Krishnamurthy (2019) Tongyi Cao and Akshay Krishnamurthy. Disagreement-based combinatorial pure exploration: Sample complexity bounds and an efficient algorithm. In Conference on Learning Theory, pages 558–588. PMLR, 2019.

- Cesa-Bianchi and Lugosi (2012) Nicolo Cesa-Bianchi and Gábor Lugosi. Combinatorial bandits. Journal of Computer and System Sciences, 78(5):1404–1422, 2012.

- Chen et al. (2017) Lijie Chen, Anupam Gupta, Jian Li, Mingda Qiao, and Ruosong Wang. Nearly optimal sampling algorithms for combinatorial pure exploration. In Conference on Learning Theory, pages 482–534. PMLR, 2017.

- Chernozhukov et al. (2019) Victor Chernozhukov, Mert Demirer, Greg Lewis, and Vasilis Syrgkanis. Semi-parametric efficient policy learning with continuous actions. Advances in Neural Information Processing Systems, 32:15065–15075, 2019.

- Chu et al. (2011) Wei Chu, Lihong Li, Lev Reyzin, and Robert Schapire. Contextual bandits with linear payoff functions. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, pages 208–214. JMLR Workshop and Conference Proceedings, 2011.

- Cohen et al. (2019) Michael B Cohen, Ben Cousins, Yin Tat Lee, and Xin Yang. A near-optimal algorithm for approximating the John Ellipsoid. In Conference on Learning Theory, pages 849–873. PMLR, 2019.

- Dani and Hayes (2006) Varsha Dani and Thomas P Hayes. Robbing the bandit: Less regret in online geometric optimization against an adaptive adversary. In SODA, volume 6, pages 937–943, 2006.

- Dani et al. (2008) Varsha Dani, Thomas P Hayes, and Sham M Kakade. Stochastic linear optimization under bandit feedback. Conference on Learning Theory (COLT), 2008.

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. In NAACL-HLT (1), 2019.

- Dudik et al. (2011) Miroslav Dudik, Daniel Hsu, Satyen Kale, Nikos Karampatziakis, John Langford, Lev Reyzin, and Tong Zhang. Efficient optimal learning for contextual bandits. In Proceedings of the Twenty-Seventh Conference on Uncertainty in Artificial Intelligence, pages 169–178, 2011.

- Edmonds (1965) Jack Edmonds. Paths, trees, and flowers. Canadian Journal of mathematics, 17:449–467, 1965.

- Fiez et al. (2019) Tanner Fiez, Lalit Jain, Kevin G Jamieson, and Lillian Ratliff. Sequential experimental design for transductive linear bandits. Advances in neural information processing systems, 32, 2019.

- Foster and Rakhlin (2020) Dylan Foster and Alexander Rakhlin. Beyond UCB: Optimal and efficient contextual bandits with regression oracles. In International Conference on Machine Learning, pages 3199–3210. PMLR, 2020.

- Foster et al. (2018) Dylan Foster, Alekh Agarwal, Miroslav Dudik, Haipeng Luo, and Robert Schapire. Practical contextual bandits with regression oracles. In International Conference on Machine Learning, pages 1539–1548. PMLR, 2018.

- Foster et al. (2021a) Dylan Foster, Alexander Rakhlin, David Simchi-Levi, and Yunzong Xu. Instance-dependent complexity of contextual bandits and reinforcement learning: A disagreement-based perspective. In Conference on Learning Theory, pages 2059–2059. PMLR, 2021a.

- Foster and Krishnamurthy (2021) Dylan J Foster and Akshay Krishnamurthy. Efficient first-order contextual bandits: Prediction, allocation, and triangular discrimination. Advances in Neural Information Processing Systems, 34, 2021.

- Foster et al. (2020) Dylan J Foster, Claudio Gentile, Mehryar Mohri, and Julian Zimmert. Adapting to misspecification in contextual bandits. Advances in Neural Information Processing Systems, 33, 2020.

- Foster et al. (2021b) Dylan J Foster, Sham M Kakade, Jian Qian, and Alexander Rakhlin. The statistical complexity of interactive decision making. arXiv preprint arXiv:2112.13487, 2021b.

- Grötschel et al. (2012) Martin Grötschel, László Lovász, and Alexander Schrijver. Geometric algorithms and combinatorial optimization, volume 2. Springer Science & Business Media, 2012.

- Hazan and Karnin (2016) Elad Hazan and Zohar Karnin. Volumetric spanners: An efficient exploration basis for learning. The Journal of Machine Learning Research, 17(1):4062–4095, 2016.

- Hazan et al. (2007) Elad Hazan, Amit Agarwal, and Satyen Kale. Logarithmic regret algorithms for online convex optimization. Machine Learning, 69(2-3):169–192, 2007.

- Ito et al. (2019) Shinji Ito, Daisuke Hatano, Hanna Sumita, Kei Takemura, Takuro Fukunaga, Naonori Kakimura, and Ken-Ichi Kawarabayashi. Oracle-efficient algorithms for online linear optimization with bandit feedback. Advances in Neural Information Processing Systems, 32:10590–10599, 2019.

- John (1948) F John. Extremum problems with inequalities as subsidiary conditions. R. Courant Anniversary Volume, pages 187–204, 1948.

- Jun et al. (2017) Kwang-Sung Jun, Aniruddha Bhargava, Robert Nowak, and Rebecca Willett. Scalable generalized linear bandits: Online computation and hashing. Advances in Neural Information Processing Systems, 30, 2017.

- Kassraie and Krause (2022) Parnian Kassraie and Andreas Krause. Neural contextual bandits without regret. In International Conference on Artificial Intelligence and Statistics, pages 240–278. PMLR, 2022.

- Katz-Samuels et al. (2020) Julian Katz-Samuels, Lalit Jain, Kevin G Jamieson, et al. An empirical process approach to the union bound: Practical algorithms for combinatorial and linear bandits. Advances in Neural Information Processing Systems, 33, 2020.

- Kiefer and Wolfowitz (1960) Jack Kiefer and Jacob Wolfowitz. The equivalence of two extremum problems. Canadian Journal of Mathematics, 12:363–366, 1960.

- Krishnamurthy et al. (2020) Akshay Krishnamurthy, John Langford, Aleksandrs Slivkins, and Chicheng Zhang. Contextual bandits with continuous actions: Smoothing, zooming, and adapting. Journal of Machine Learning Research, 21(137):1–45, 2020.

- Langford and Zhang (2007) John Langford and Tong Zhang. The epoch-greedy algorithm for contextual multi-armed bandits. Advances in neural information processing systems, 20(1):96–1, 2007.

- Lattimore and Szepesvári (2020) Tor Lattimore and Csaba Szepesvári. Bandit algorithms. Cambridge University Press, 2020.

- Lattimore et al. (2020) Tor Lattimore, Csaba Szepesvari, and Gellert Weisz. Learning with good feature representations in bandits and in RL with a generative model. In International Conference on Machine Learning, pages 5662–5670. PMLR, 2020.

- Lebret and Collobert (2014) Rémi Lebret and Ronan Collobert. Word embeddings through Hellinger PCA. In Proceedings of the 14th Conference of the European Chapter of the Association for Computational Linguistics, pages 482–490, 2014.

- Li et al. (2010) Lihong Li, Wei Chu, John Langford, and Robert E Schapire. A contextual-bandit approach to personalized news article recommendation. In Proceedings of the 19th international conference on World wide web, pages 661–670, 2010.

- Mahabadi et al. (2019) Sepideh Mahabadi, Piotr Indyk, Shayan Oveis Gharan, and Alireza Rezaei. Composable core-sets for determinant maximization: A simple near-optimal algorithm. In International Conference on Machine Learning, pages 4254–4263. PMLR, 2019.

- Majzoubi et al. (2020) Maryam Majzoubi, Chicheng Zhang, Rajan Chari, Akshay Krishnamurthy, John Langford, and Aleksandrs Slivkins. Efficient contextual bandits with continuous actions. Advances in Neural Information Processing Systems, 33, 2020.

- McMahan and Blum (2004) H Brendan McMahan and Avrim Blum. Online geometric optimization in the bandit setting against an adaptive adversary. In International Conference on Computational Learning Theory, pages 109–123. Springer, 2004.

- Meyer (2000) Carl D Meyer. Matrix analysis and applied linear algebra, volume 71. Siam, 2000.

- Pan et al. (2019) Feiyang Pan, Qingpeng Cai, Pingzhong Tang, Fuzhen Zhuang, and Qing He. Policy gradients for contextual recommendations. In The World Wide Web Conference, pages 1421–1431, 2019.

- Reimers and Gurevych (2019) Nils Reimers and Iryna Gurevych. Sentence-BERT: Sentence embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing. Association for Computational Linguistics, 11 2019. URL http://arxiv.org/abs/1908.10084.

- Ruan et al. (2021) Yufei Ruan, Jiaqi Yang, and Yuan Zhou. Linear bandits with limited adaptivity and learning distributional optimal design. In Proceedings of the 53rd Annual ACM SIGACT Symposium on Theory of Computing, pages 74–87, 2021.

- Sahni (1974) Sartaj Sahni. Computationally related problems. SIAM Journal on computing, 3(4):262–279, 1974.

- Schrijver (1998) Alexander Schrijver. Theory of linear and integer programming. John Wiley & Sons, 1998.

- Sen et al. (2021) Rajat Sen, Alexander Rakhlin, Lexing Ying, Rahul Kidambi, Dean Foster, Daniel N Hill, and Inderjit S Dhillon. Top-k extreme contextual bandits with arm hierarchy. In International Conference on Machine Learning, pages 9422–9433. PMLR, 2021.

- Sherman and Morrison (1950) Jack Sherman and Winifred J Morrison. Adjustment of an inverse matrix corresponding to a change in one element of a given matrix. The Annals of Mathematical Statistics, 21(1):124–127, 1950.

- Shrivastava and Li (2014) Anshumali Shrivastava and Ping Li. Asymmetric LSH (ALSH) for sublinear time maximum inner product search (MIPS). Advances in neural information processing systems, 27, 2014.

- Simchi-Levi and Xu (2021) David Simchi-Levi and Yunzong Xu. Bypassing the monster: A faster and simpler optimal algorithm for contextual bandits under realizability. Mathematics of Operations Research, 2021.

- Singh et al. (2012) Sameer Singh, Amarnag Subramanya, Fernando Pereira, and Andrew McCallum. Wikilinks: A large-scale cross-document coreference corpus labeled via links to Wikipedia. Technical Report UM-CS-2012-015, University of Massachusetts, Amherst, 2012.

- Soare et al. (2014) Marta Soare, Alessandro Lazaric, and Rémi Munos. Best-arm identification in linear bandits. Advances in Neural Information Processing Systems, 27, 2014.

- Summa et al. (2014) Marco Di Summa, Friedrich Eisenbrand, Yuri Faenza, and Carsten Moldenhauer. On largest volume simplices and sub-determinants. In Proceedings of the twenty-sixth annual ACM-SIAM symposium on Discrete algorithms, pages 315–323. SIAM, 2014.

- Tewari and Murphy (2017) Ambuj Tewari and Susan A Murphy. From Ads to interventions: Contextual bandits in mobile health. In Mobile Health, pages 495–517. Springer, 2017.

- Vasnetsov (2018) Andrey Vasnetsov. Oneshot-wikilinks. https://www.kaggle.com/generall/oneshotwikilinks, 2018.

- Vovk (1998) Vladimir Vovk. A game of prediction with expert advice. Journal of Computer and System Sciences, 56(2):153–173, 1998.

- Wagenmaker et al. (2021) Andrew Wagenmaker, Julian Katz-Samuels, and Kevin Jamieson. Experimental design for regret minimization in linear bandits. In International Conference on Artificial Intelligence and Statistics, pages 3088–3096, 2021.

- Williams (1992) Ronald J Williams. Simple statistical gradient-following algorithms for connectionist reinforcement learning. Machine learning, 8(3):229–256, 1992.

- Xu et al. (2020) Pan Xu, Zheng Wen, Handong Zhao, and Quanquan Gu. Neural contextual bandits with deep representation and shallow exploration. arXiv preprint arXiv:2012.01780, 2020.

- Xu and Zeevi (2020) Yunbei Xu and Assaf Zeevi. Upper counterfactual confidence bounds: a new optimism principle for contextual bandits. arXiv preprint arXiv:2007.07876, 2020.

- Yang et al. (2021) Shuo Yang, Tongzheng Ren, Sanjay Shakkottai, Eric Price, Inderjit S Dhillon, and Sujay Sanghavi. Linear bandit algorithms with sublinear time complexity. arXiv preprint arXiv:2103.02729, 2021.

- Zhang (2021) Tong Zhang. Feel-good thompson sampling for contextual bandits and reinforcement learning. arXiv preprint arXiv:2110.00871, 2021.

- Zhang et al. (2021) Weitong Zhang, Dongruo Zhou, Lihong Li, and Quanquan Gu. Neural Thompson sampling. In International Conference on Learning Representation (ICLR), 2021.

- Zhou et al. (2020) Dongruo Zhou, Lihong Li, and Quanquan Gu. Neural contextual bandits with ucb-based exploration. In International Conference on Machine Learning, pages 11492–11502. PMLR, 2020.

Appendix A Proofs and Supporting Results from Section 3

This section is organized as follows. We provide supporting results in Section A.1, then give the proof of Theorem 1 in Section A.2.

A.1 Supporting Results

A.1.1 Barycentric Spanner and Optimal Design

Algorithm 5 restates an algorithm of Awerbuch and Kleinberg (2008), which efficiently computes a barycentric spanner (Definition 3) given access to a linear optimization oracle (Definition 1). Recall that, for a set of actions, the notation (resp. ) denotes the determinant of the -by- matrix whose columns are the (resp. ) embeddings of actions.

Lemma 5 (Awerbuch and Kleinberg (2008)).

For any , Algorithm 5 computes a -approximate barycentric spanner for within iterations of the while-loop.

Lemma 6.

Fix any constant . Algorithm 5 can be implemented with runtime and memory .

Proof of Lemma 6.

We provide the computational complexity analysis starting from the while-loop (line 5-12) in the following. The computational complexity regarding the first for-loop (line 1-3) can be similarly analyzed.

-

•

Outer loops (lines 5-6). From Lemma 5, we know that Algorithm 5 terminates within iterations of the while-loop (line 5). It is also clear that the for-loop (line 6) is invoked at most times.

-

•

Computational complexity for lines 7-10. We discuss how to efficiently implement this part using rank-one updates. We analyze the computational complexity for each line in the following.

-

–

Line 7. We discuss how to efficiently compute the linear function through rank-one updates. Fix any . Let denote the invertible (by construction) matrix whose -th column is (with ). Using the rank-one update formula for the determinant (Meyer, 2000), we have

(8) We first notice that since one can take . We can then write

where . Thus, whenever and are known, compute takes time. The maximum memory requirement is , following from the storage of .

-

–

Line 8. When is computed, we can compute by first compute and and then compare the two. This process takes two oracle calls to , which takes time. The maximum memory requirement is , following from the memory requirement of and the storage of .

-

–

Line 9. Once and are computed, checking the updating criteria takes time. The maximum memory requirement is , following from the storage of and .

-

–

Line 10. We discuss how to efficiently update and through rank-one updates. If an update is made, we can update the determinant using rank-one update (as in Eq. 8) with runtime and memory ; and update the inverse matrix using the Sherman-Morrison rank-one update formula (Sherman and Morrison, 1950), i.e.,

which can be implemented in time and memory. Note that the updated matrix must be invertible by construction.

Thus, using rank-one updates, the total runtime adds up to and the maximum memory requirement is . We also remark that the initial matrix determinant and inverse can be computed cheaply since the first iteration of the first for-loop (i.e., line 2 with ) is updated from the identity matrix.

-

–

To summarize, Algorithm 5 has runtime and uses at most units of memory. ∎

The next proposition shows that a barycentric spanner implies an approximate optimal design. The result is well-known (e.g., Hazan and Karnin (2016)), but we provide a proof here for completeness.

See 2

Proof of Lemma 2.

Assume without loss of generality that spans . By Definition 3, we know that for any , we can represent as a weighted sum of elements in with coefficients in the range . Let be the matrix whose columns are the vectors in . For any , we can find such that . Since is invertible (by construction), we can write , which implies the result via

∎

A.1.2 Regret Decomposition

Fix any . We consider the following meta algorithm that utilizes the online regression oracle defined in 2.

For :

-

•

Get context from the environment and regression function from the online regression oracle .

-

•

Identify the distribution that solves the minimax problem (defined in Eq. 3) and play action .

-

•

Observe reward and update regression oracle with example .

The following result bounds the contextual bandit regret for the meta algorithm described above. The result is a variant of the regret decomposition based on the Decision-Estimation Coefficient given in Foster et al. (2021b), which generalizes Foster and Rakhlin (2020). The slight differences in constant terms are due to the difference in reward range.

Lemma 7 (Foster and Rakhlin (2020); Foster et al. (2021b)).

Suppose that 2 holds. Then probability at least , the contextual bandit regret is upper bounded as follows:

In general, identifying a distribution that exactly solves the minimax problem corresponding to the DEC may be impractical. However, if one can identify a distribution that instead certifies an upper bound on the Decision-Estimation Coefficient (in the sense that ), the regret bound in Lemma 7 continues to hold with replaced by .

A.1.3 Proof of Lemma 3

See 3

Proof of Lemma 3.

Fix a context . In our setting, where actions are linearly structured, we can equivalently write the Decision-Estimation Coefficient as

| (9) |

Recall that within our algorithms, is obtained from the estimator output by . We will bound the quantity in Eq. 9 uniformly for all and with . Recall that we assume .

Denote and . For any , let , where is any -approximate optimal design for the embedding . We have the following decomposition.

| (10) |

For the first term in Eq. 10, we have

Next, since

by AM-GM inequality, we can bound the second term in Eq. 10 by

We now turn our attention to the third term. Observe that since is optimal for , . As a result, defining , we have

Here, the third line follows from the AM-GM inequality, and the last line follows from the (-approximate) optimal design property and the definition of .

Combining these bounds, we have

Since , taking gives

whenever . On the other hand, when , this bound holds trivially. ∎

A.2 Proof of Theorem 1

See 1

Proof of Theorem 1.

Consider . Combining Lemma 3 with Lemma 7, we have

The regret bound in Theorem 1 immediately follows by choosing

In particular, when Algorithm 5 is invoked as a subroutine with parameter , Lemma 2 implies that we may take .

Computational complexity. We now bound the per-round computational complexity of Algorithm 1 when Algorithm 5 is used as a subroutine to compute the approximate optimal design. Outside of the call to Algorithm 5, Algorithm 1 uses calls to to obtain and to update , and uses a single call to to compute . With the optimal design returned by Algorithm 5 (represented as a barycentric spanner), sampling from takes at most time, since . outside of Algorithm 5 adds up to . In terms of memory, calling and takes units, and maintaining the distribution (the barycentric spanner) takes units, so the maximum memory (outside of Algorithm 5) is . The stated results follow from combining the computational complexities analyzed in Lemma 6. ∎

Appendix B Proofs and Supporting Results from Section 4.1

In this section we provide supporting results concerning Algorithm 2 (Section B.1), and then give the proof of Theorem 2 (Section B.2).

B.1 Supporting Results

Lemma 8.

In Algorithm 2 (Eq. (6)), there exists a unique choice of such that , and its value lies in .

Proof of Lemma 8.

Define . We first notice that is continuous and strictly decreasing over . We further have

and

As a result, there exists a unique normalization constant such that . ∎

See 4

Proof of Lemma 4.

As in the proof of Lemma 3, we use the linear structure of the action space to rewrite the Decision-Estimation Coefficient as

Where is such that . We will bound the quantity above uniformly for all and .

Denote , and be a -approximate optimal design with respect to the reweighted embedding ). We use the setting throughout the proof. Recall that for the sampling distribution in Algorithm 2, we set and define

| (11) |

where is a normalization constant (cf. Lemma 8).

We decompose the regret of the distribution in Eq. 11 as

| (12) |

Writing out the expectation, the first term in Eq. 12 is upper bounded as follows.

where we use that in the second inequality (with the convention that ).

The second term in Eq. 12 can be upper bounded as in the proof of Lemma 3, by applying the AM-GM inequality:

The third term in Eq. 12 is the most involved. To begin, we define and apply the following standard bound:

| (13) |

where the second line follows from the AM-GM inequality. The second term in Eq. 13 matches the bound we desired, so it remains to bound the first term. Let be the following sub-probability measure:

and let . We clearly have from the definition of (cf. Eq. 11). We observe that

where the last line uses that . Since is positive-definite by construction, we have that . As a result,

| (14) |

where the last line uses that , since is a -approximate optimal design for the set . Finally, we observe that the second term in Eq. 14 is cancelled out by the forth term in Eq. 12.

B.2 Proof of Theorem 2

See 2

Proof.

Combining Lemma 4 with Lemma 7, we have

The theorem follows by choosing

In particular, when Algorithm 3 is invoked as the subroutine with parameter , we may take .

Computational complexity. We now discuss the per-round computational complexity of Algorithm 2. We analyze a variant of the sampling rule specified in Section D.2 that does not require computation of the normalization constant. Outside of the runtime and memory requirements required to compute the barycentric spanner using Algorithm 3, which are stated in Theorem 3, Algorithm 2 uses calls to the oracle to obtain and update , and uses a single call to to compute . With and , we can compute in time for any ; thus, with the optimal design returned by Algorithm 3 (represented as a barycentric spanner), we can construct the sampling distribution in time. Sampling from takes time since . This adds up to runtime . In terms of memory, calling and takes units, and maintaining the distribution (the barycentric spanner) takes units, so the maximum memory (outside of Algorithm 3) is . The stated results follow from combining the computational complexities analyzed in Theorem 3 , together with the choice of described above. ∎

Appendix C Proofs and Supporting Results from Section 4.2

This section of the appendix is dedicated to the analysis of Algorithm 3, and organized as follows.

-

•

First, in Section C.1, we analyze Algorithm 4, a subroutine of Algorithm 3 which implements a linear optimization oracle for the reweighted action set used in the algorithm.

-

•

Next, in Section C.2, we prove Theorem 3, the main theorem concerning the performance of Algorithm 3.

-

•

Finally, in Section C.3, we discuss settings in which the initialization step required by Algorithm 3 can be performed efficiently.

Throughout this section of the appendix, we assume that the context and estimator —which are arguments to Algorithm 3 and Algorithm 4—are fixed.

C.1 Analysis of Algorithm 4 (Linear Optimization Oracle for Reweighted Embeddings)

A first step is to construct an (approximate) argmax oracle (after taking absolute value) with respect to the reweighted embedding . Recall that the goal of Algorithm 4 is to implement a linear optimization oracle for the reweighted embeddings constructed by Algorithm 3. That is, for any , we would like to compute an action that (approximately) solves

Define

| (15) |

The main result of this section, Theorem 4, shows that Algorithm 4 identifies an action that achieves the maximum value in Eq. 15 up to a multiplicative constant.

Theorem 4.

Fix any , . Suppose for some . Then Algorithm 4 identifies an action such that , and does so with runtime and maximum memory .

Proof of Theorem 4.

Recall from Eq. 5 that we have

where ; note that the denominator is at least . To proceed, we use that for any and , we have

Taking and above, we can write

| (16) | ||||

| (17) |

The key property of this representation is that for any fixed , Eq. 17 is a linear function of the unweighted embedding , and hence can be optimized using . In particular, for any fixed , consider the following linear optimization problem, which can be solved by calling :

| (18) |

Define

| (19) |

If was known (which is not the case, since is unknown), we could set in Eq. 18 and compute an action using a single oracle call. We would then have , which follows because is the maximizer in Eq. 16 for .

To get around the fact that is unknown, Algorithm 4 performs a grid search over possible values of . To show that the procedure succeeds, we begin by bounding the range of . With some rewriting, we have

Since , we have

Algorithm 4 performs a -multiplicative grid search over the intervals and , which uses grid points. It is immediate to that the grid contains such that and . Invoking Lemma 9 (stated and proven in the sequel) with implies that . To conclude, recall that Algorithm 4 outputs the maximizer

where is the set of argmax actions encountered by the grid search. Since , we have as desired.

Computational complexity. Finally, we bound the computational complexity of Algorithm 4. Algorithm 4 maintains a grid of points, and hence calls the oracle in total; this takes time. Computing the final maximizer from the set , which contains actions, takes time (compute each takes time). Hence, the total runtime of Algorithm 4 adds up to . The maximum memory requirement is , follows from calling , and storing and other terms such as . ∎

C.1.1 Supporting Results

Lemma 9.

Let be defined as in Eq. 19. Suppose has and . Then, if , we have .

Proof of Lemma 9.

First observe that using the definition of , along with Eq. 16 and Eq. 18, we have , where the second inequality uses that . Since , we have . If , then since , we have

where we use that for the first inequality and use the definition of for the second equality.

On the other hand, when , we similarly have

Summarizing both cases, we have . ∎

C.2 Proof of Theorem 3

See 3

Proof of Theorem 3.

We begin by examining the range of used in Theorem 4. Note that the linear function passed as an argument to Algorithm 3 takes the form , i.e., , where . For the upper bound, we have

by Hadamard’s inequality and the fact that the reweighting appearing in Eq. 5 enjoys . This shows that . For the lower bound, we first recall that in Algorithm 3, the set is initialized to have , and thus , where accounts for the reweighting in Eq. 5. Next, we observe that as a consequence of the update rule in Algorithm 3, we are guaranteed that across all rounds. Thus, whenever Algorithm 4 is invoked with the linear function described above, there must exist an action such that , which implies that and we can take in Theorem 4.

We next bound the number of iterations of the while-loop before the algorithm terminates. Let . At each iteration (beginning from line 3) of Algorithm 3, one of two outcomes occurs:

-

1.

We find an index and an action such that , and update .

-

2.

We conclude that and terminate the algorithm.

We observe that (i) the initial set has with (as discussed before), (ii) by Hadamard’s inequality, and (iii) each update of increases the (absolute) determinant by a factor of . Thus, fix any , we are guaranteed that Algorithm 3 terminates within iterations of the while-loop.

We now discuss the correctness of Algorithm 3, i.e., when terminated, the set is a -approximate barycentric spanner with respect to the reweighted embedding . First, note that by Theorem 4, Algorithm 4 is guaranteed to identify an action such that as long as there exists an action such that . As a result, by Observation 2.3 in Awerbuch and Kleinberg (2008), if no update is made and Algorithm 3 terminates, we have identified a -approximate barycentric spanner with respect to embedding .

Computational complexity. We provide the computational complexity analysis for Algorithm 3 in the following. We use to denote the matrix whose -th column is with .

-

•

Initialization. We first notice that, given and , it takes time to compute for any . Thus, computing and takes time, where we use (with ) to denote the time of computing matrix determinant/inversion. The maximum memory requirement is , following from the storage of and .

-

•

Outer loops (lines 1-2). We have already shown that Algorithm 5 terminates within iterations of the while-loop (line 2). It is also clear that the for-loop (line 2) is invoked at most times.

-

•

Computational complexity for lines 3-7. We discuss how to efficiently implement this part using rank-one updates. We analyze the computational complexity for each line in the following. The analysis largely follows from the proof of Lemma 6.

-

–

Line 3. Using rank-one update of the matrix determinant (as discussed in the proof of Lemma 6), we have

where . Thus, whenever and are known, compute takes time. The maximum memory requirement is , following from the storage of .

-

–

Line 4. When is computed, we can compute by invoking IGW-ArgMax (Algorithm 4). As discussed in Theorem 4, this step takes runtime and maximum memory (by taking as discussed before).

-

–

Line 5. Once and are computed, checking the updating criteria takes time. The maximum memory requirement is , following from the storage of and .

-

–

Line 6. As discussed in the proof of Lemma 6, if an update is made, we can update and using rank-one updates with time and memory.

Thus, using rank-one updates, the total runtime for line 3-7 adds up to and maximum memory requirement is .

-

–

To summarize, Algorithm 5 has runtime and uses at most units of memory. ∎

C.3 Efficient Initializations for Algorithm 3

In this section we discuss specific settings in which the initialization required by Algorithm 3 can be computed efficiently. For the first result, we let denote the ball of radius in .

Example 2.

Suppose that there exists such that . Then by choosing , we have .

The next example is stronger, and shows that we can efficiently compute a set with large determinant whenever such a set exists.

Example 3.

Suppose there exists a set such that for some . Then there exists an efficient algorithm that identifies a set with for , and does so with runtime and memory .

Proof for Example 3.

The guarantee is achieved by running Algorithm 5 with . One can show that this strategy achieves the desired approximation guarantee by slightly generalizing the proof of a similar result in Mahabadi et al. (2019). In more detail, Mahabadi et al. (2019) study the problem of identifying a subset such that and is (approximately) maximized, where denotes the matrix whose columns are for . We consider the case when , and make the following observations.

-

•

We have . Thus, maximizing is equivalent to maximizing .

-

•

The Local Search Algorithm provided in Mahabadi et al. (2019) (Algorithm 4.1 therein) has the same update and termination condition as Algorithm 5. As a result, one can show that the conclusion of their Lemma 4.1 also applies to Algorithm 5.

∎

Appendix D Other Details for Experiments

D.1 Basic Details

Datasets

oneshotwiki (Singh et al., 2012; Vasnetsov, 2018) is a named-entity recognition task where contexts are text phrases preceding and following the mention text, and where actions are text phrases corresponding to the concept names. We use the python package sentence transformers (Reimers and Gurevych, 2019) to separately embed the text preceding and following the reference into , and then concatenate, resulting in a context embedding in . We embed the action (mentioned entity) text into and then use SVD on the collection of embedded actions to reduce the dimensionality to . The reward function is an indicator function for whether the action corresponds to the actual entity mentioned. oneshotwiki-311 (resp. oneshotwiki-14031) is a subset of this dataset obtained by taking all actions with at least 2000 (resp. 200) examples.

amazon-3m (Bhatia et al., 2016) is an extreme multi-label dataset whose contexts are text phrases corresponding to the title and description of an item, and whose actions are integers corresponding to item tags. We separately embed the title and description phrases using sentence transformers, which leads to a context embedding in . Following the protocol used in Sen et al. (2021), the first 50000 examples are fully supervised, and subsequent examples have bandit feedback. We use Hellinger PCA (Lebret and Collobert, 2014) on the supervised data label cooccurrences to construct the action embeddings in . Rewards are binary, and indicate whether a given item has the chosen tag. Actions that do not occur in the supervised portion of the dataset cannot be output by the model, but are retained for evaluation: For example, if during the bandit feedback phase, an example consists solely of tags that did not occur during the supervised phase, the algorithm will experience a reward of 0 for every feasible action on the example. For a typical seed, this results in roughly 890,000 feasible actions for the model. In the setup, we take the top- actions as the greedy slate, and then independently decide whether to explore for each exploration slot (the bottom slots). For exploration, we sample from the spanner set without replacement.

Regression functions and oracles

For bilinear models, regression functions take the form , where is a matrix of learned parameters. For deep models, regression functions pass the original context through 2 residual leaky ReLU layers before applying the bilinear layer, , where is a learned two-layer neural network, and is a matrix of learned parameters. For experiments with respect to oneshotwiki datasets, we add a learned bias term for regression functions (same for every action); for experiments with respect to the amazon-3m dataset, we additionally add an action-dependent bias term that is obtained from the supervised examples. The online regression oracle is implemented using PyTorch’s Adam optimizer with log loss (recall that rewards are 0/1).

Hyperparameters

For each algorithm, we optimize its hyperparameters using random search (Bergstra and Bengio, 2012). Speccifically, hyperparameters are tuned by taking the best of 59 randomly selected configurations for a fixed seed (this seed is not used for evaluation). A seed determines both dataset shuffling, initialization of regressor parameters, and random choices made by any action sampling scheme.

Evaluation

We evaluate each algorithm on 32 seeds. All reported confidence intervals are 90% bootstrap CIs for the mean.

D.2 Practical Modification to Sampling Procedure in SpannerIGW

For experiments with SpannerIGW, we slightly modify the action sampling distribution so as to avoid computing the normalization constant . First, we modify the weighted embedding scheme given in Eq. 5 using the following expression:

We obtain a -approximate optimal design for the reweighted embeddings by first computing a -approximate barycentric spanner , then taking . To proceed, let and . We construct the sampling distribution as follows:

-

•

Set for each .

-

•

Assign remaining probability mass to .

With a small modification to the proof of Lemma 4, one can show that this construction certifies that . Thus, the regret bound in Theorem 2 holds up to a constant factor. Similarly, with a small modification to the proof of Theorem 3, we can also show that —with respect to this new embedding—Algorithm 3 has runtime and memory.

D.3 Timing Information

Table 4 contains timing information the oneshotwiki-14031 dataset with a bilinear model. The CPU timings are most relevant for practical scenarios such as information retrieval and recommendation systems, while the GPU timings are relevant for scenarios where simulation is possible. Timings for SpannerGreedy do not include the one-time cost to compute the spanner set. Timings for all algorithms use precomputed context and action embeddings. For all but algorithms but SpannerIGW, timings reflect the major bottleneck of computing the argmax action, since all subsequent steps take time with respect to . In particular, SquareCB is implemented using rejection sampling, which does not require explicit construction of the action distribution. For SpannerIGW, the additional overhead is due to the time required to construct an approximate optimal design for each example.

| Algorithm | CPU | GPU |

|---|---|---|

| -Greedy | 2 ms | 10 s |

| SpannerGreedy | 2 ms | 10 s |

| SquareCB | 2 ms | 10 s |

| SpannerIGW | 25 ms | 180 s |

D.4 Additional Figures