Continuous-mixture Autoregressive Networks for efficient variational calculation of many-body systems

Abstract

We develop deep autoregressive networks with multi channels to compute many-body systems with continuous spin degrees of freedom directly. As a concrete example, we embed the two-dimensional XY model into the continuous-mixture networks and rediscover the Kosterlitz-Thouless (KT) phase transition on a periodic square lattice. Vortices characterizing the quasi-long range order are accurately detected by the autoregressive neural networks. By learning the microscopic probability distributions from the macroscopic thermal distribution, the neural networks compute the free energy directly and find that free vortices and anti-vortices emerge in the high-temperature regime. As a more precise evaluation, we compute the helicity modulus to determine the KT transition temperature. Although the training process becomes more time-consuming with larger lattice sizes, the training time remains unchanged around the KT transition temperature. The continuous-mixture autoregressive networks we developed thus can be potentially used to study other many-body systems with continuous degrees of freedom.

Introduction.—Machine learning techniques are attracting widespread interest in different fields of science and technology because of its power to extract and express structures inside complex data. In particular, physicists have employed it to do projects including classification, regression, and pattern generation Buchanan (2019); Carleo et al. (2019). It was found that the neural networks can classify the phase structures of many-body systems Wang (2016); Carrasquilla and Melko (2017); Pang et al. (2018); Fujimoto et al. (2018, 2020). As for the regression, it was successfully applied to the event selection in a large data set (e.g., from the LHCb) Metodiev and Thaler (2018); Kasieczka et al. (2019), the spinodal decomposition in heavy ion collisions Steinheimer et al. (2019), and the molecular structure prediction Smith et al. (2017). Furthermore, it also sheds light on the innovation of the methods of first-principle calculations of many-body systems Carleo and Troyer (2017); Nagy and Savona (2019); Hartmann and Carleo (2019); Pfau et al. (2019); Vicentini et al. (2019); Yoshioka and Hamazaki (2019). The restricted Boltzmann machine was applied to solve quantum many-body systems Carleo and Troyer (2017). A deep neural network was constructed to derive the solution of the many-electron schrödinger equation Pfau et al. (2019). It was found that proper neural networks can work as an efficient to characterize many-body systems. Another interesting direction is modification of the classical algorithms with machine learning Shen et al. (2018); Mori et al. (2018); Carleo et al. (2019), which may improve or assist the conventional first-principle computations.

In addition, it is natural to apply neural networks to many-body systems on the lattices, since they share similar discrete architectures. In some pioneer attempts Alexandru et al. (2017); Broecker et al. (2017); Urban and Pawlowski (2018); Mori et al. (2018); Zhou et al. (2019), the training data were generated by the classical Markov Chain Monte Carlo (MCMC) method. However, its expandability and efficiency are limited because of the critical slowing down near the critical point Urban and Pawlowski (2018) and the sign-problem Broecker et al. (2017). Recently, a new method based on an autoregressive neural network was proposed and applied to discrete spin systems (e.g., the Ising model) Wu et al. (2019); Sharir et al. (2020). This new method uses a variational Ansatz that decomposes the macroscopic probability distribution into microscopic distributions on the lattice sites. It was demonstrated that a higher computational accuracy can be achieved in solving several Ising-type systems Ou (2019).

However, it still remains challenging to solve a general many-body system with continuous degrees of freedom, e.g., a continuous spin system which may exhibit a topological phase transition. Previous attempts failed when one applied methods that work well for discrete spin systems to continuous cases Cristoforetti et al. (2017). Being different from the classical phase transition, the topological phase transition occurs with topological defects emerging. This has been attracting the attention from various fields of physics Background (2016). Some related efforts using both supervised learning and unsupervised learning have been made to handle this problem Wang and Zhai (2017); Beach et al. (2018); Suchsland and Wessel (2018); Zhang et al. (2018); Carvalho et al. (2018); Hu et al. (2019); Fukushima et al. (2019). The winding numbers were recognized by a neural network with supervised training for an one-dimensional insulator model Zhang et al. (2018). By generating the configurations with MCMC sampling and supplying feature engineered vortex configurations as the input, neural networks could detect the topological phase transition from well-preprocessed configurations Beach et al. (2018). While the unsupervised learning was applied to identify the topological orders Cristoforetti et al. (2017); Rodriguez-Nieva and Scheurer (2019); Scheurer and Slager (2020), an efficient variational approach combined with powerful autoregressive neural networks is still missing.

In this Letter, we propose Continuous-mixture Autoregressive Networks (CANs) to solve the continuous spin systems efficiently, which can be further applied to continuous field systems. As a concise reference example, we study the two-dimensional (2D) XY model on a square lattice, which exhibits the so-called Kosterlitz-Thouless (KT) phase transition Gupta et al. (1988); Kosterlitz (1974); Weber and Minnhagen (1988). The CANs are introduced to recognize the topological phase transition with continuous variables in an unsupervised manner. In such autoregressive neural networks, the microscopic state at each lattice site is modeled by a conditional probability, which constructs a joint probability for the whole configuration Wu et al. (2019); Sharir et al. (2020). In this work, we introduce a generic framework for CANs and construct suitable neural networks to study the XY model. The vortices, serving as the signal of the KT transition, are automatically generated by the neural networks. Correspondingly, the KT transition temperature of the 2D XY model can be more accurately determined by calculating the helicity modulus Weber and Minnhagen (1988). As for the computing time cost, the training process becomes undoubtedly more time-consuming for larger lattice sizes. However, the training time remains unchanged around the transition point. Considering the advantages of the CANs, we propose further potential applications to other many-body systems with continuous degrees of freedom in the final part of this work.

Continuous-mixture Autoregressive Networks.—Let us consider a many-body system on a lattice with continuous spin degrees of freedom at each lattice site. Because the orientation of each spin changes continuously, the conditional probability for spin at site must also be continuous. We propose that a proper mixture of the beta distribution is the prior probability to ensure that the continuous variables distribute randomly in a finite interval bet . The beta distribution is continuously defined in a finite interval with two positive shape parameters . Thus the output layers of neural networks are designed to be of two channels for each Beta component, and the conditional probabilities are derived as

| (1) |

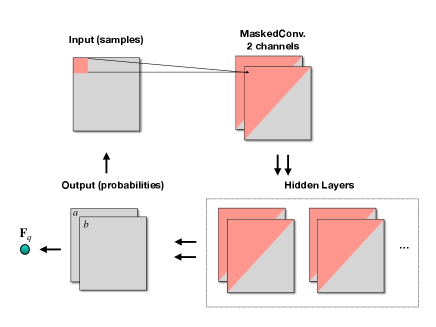

where is the gamma function, is a set of parameters of the networks, and . The outputs of the hidden layers are and , which can be realized by Fig. 1 as an autoregressive neural networks.

As a practical example, we consider the 2D XY model on the lattice. The Hamiltonian is expressed in terms of spins living at the lattice sites with nearest-neighbor interactions,

| (2) |

where indicates that the sum is taken over all nearest-neighbor pairs and the angle denotes the spin orientation at site . The Mermin-Wagner theorem forbids long-range order (LRO) in 2D systems with continuous degrees of freedom, since the strong fluctuations in 2D break the order Wagner and Schollwoeck (2010). Nevertheless, the formation of topological defects (i.e., vortices and anti-vortices) in the XY model distinguishes phases with and without quasi-LRO, which characterizes the global properties of the many-body system.

To detect the KT phase transition in the XY model, we study the free energy according to statistical mechanics, where , with being the temperature. The free energy is obtained from the partition function , which contains all information of the system. The summation runs over all possible configurations of the system. Monte Carlo algorithms can be routinely applied to generate the configurations and can achieve proper relative importance among different configurations. However, the free energy cannot be computed directly from the Monte Carlo algorithms. Variational approaches can be employed to obtain a variational free energy. In this work, the variational target function is set to be the joint probability of all configurations and equals the Boltzmann distribution . We use a variational for the joint distribution, denoted by . It is parametrized by a set of variational parameters , which are tuned to approach the target distribution . The Kullback-Leibler divergence MacKay and Kay (2003) between the variational and the target distributions, , provides the measure of the closeness from to . The corresponding variational free energy can be derived from . We obtain

| (3) |

Since is non-negative, the variational free energy gives an upper bound of the true free energy . Thus the minimization of and the variational free energy are actually equivalent. Meanwhile, as pointed out in previous works Carleo and Troyer (2017); Wu et al. (2019); Sharir et al. (2020), it is straightforward to map the parameters onto the weights of an Artificial Neural Network (ANN). Correspondingly, the variational free energy becomes the loss function. Using the log-derivative trick Williams (1992) we obtain

| (4) |

where the gradient is weighted by the reward signal .

Once the parameters are mapped onto ANN, the variational problem becomes nothing but training the networks. Using autoregressive networks such as CANs for the parametrization, we can decompose the variational distribution into a product of the conditional probabilities,

| (5) |

which provides the variational by parametrizing each conditional probability as neural networks. Considering the symmetry, we employ the PixelCNNVan Den Oord et al. (2016) that can naturally preserve the locality and the translational symmetry on a square lattice. In addition, the autoregressive property is guaranteed by putting a mask on the convolution kernel Germain et al. (2015); mas . As shown in Fig. 1, the input layer takes in the configurations on the lattice. After passing it through several masked convolution layers, the parameters of the beta distribution at each site are obtained in the output layer. Then the configuration probability can be derived and the variational free energy can be further calculated via Eq.(3) after such a forward propagation for a batch of independent configurations. If we specify the channels in the convolution layers to represent the parameters of each beta component, we find that it greatly saves the training time and speeds up the sampling.

Training the neural networks here is the key to perform the variational approach. We use a classical back-propagation algorithm. The nuts-and-bolts computation with CANs is with the following procedures: (i) With the randomly initialized network, sample a batch of independent configurations to be the training set; (ii) Pass the training set forward to evaluate the log-probability and the variational free energy ; (iii) Estimate the gradient and update the network weights via back-propagation; (iv) With the updated network, resample a batch of configurations to be the new training set, which actually follow the current joint probability ; (v) Repeat the above procedures until the loss function becomes eventually convergent; (vi) Sample an ensemble of independent configurations from site by site at once; (vii) Calculate the thermodynamic observables. On the square lattice with sites, the convergence is reached if the change of the variational energy per site is much smaller than the superior limit, Chung (1999).

Rediscovery of KT Transition.—Now we study the KT transition and calculate the thermodynamic quantities of the 2D XY model using CANs. In the calculations, the default width and depth of the network we adopted in CANs are set to be , with lattice sites being the input. The multi-channel feature is used to construct a mixture of the beta distributions, which makes the networks more expressive Salimans et al. (2017). The Adam optimizer is applied to minimize the loss function in Pytorch. The CANs can be implemented on GitHub Wang (2020) and the corresponding hyper-parameters are consistent with previous works Wu et al. (2019).

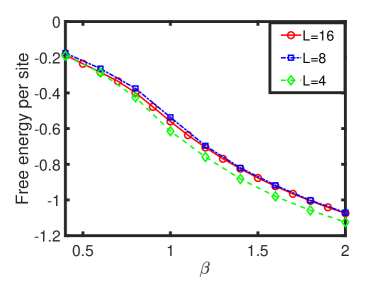

The thermodynamic observables of the 2D XY model have been computed with the MCMC method Gupta et al. (1988); Hasenbusch (2005); Komura and Okabe (2012); Weber and Minnhagen (1988). It is interesting to compare the results from CANs and MCMC. The advantage of CANs is that the free energy per site can be directly calculated. It is presented Fig. 2 for three different lattice sizes, . The results for and indicate that the free energy converges rapidly with increasing lattice sizes, which ensures that the size effect can be avoided. In the following discussions we use the results for .

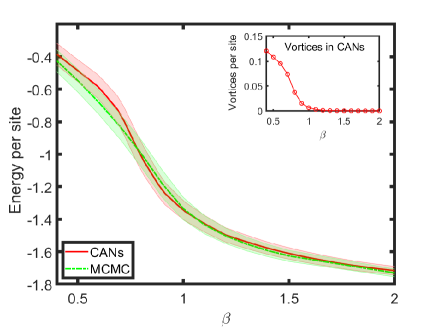

Fig. 3 shows the energy per site as a function of obtained from CANs and MCMC with the same number of configurations. Here the MCMC calculation was implemented with a classical algorithm Tobochnik and Chester (1979); Teitel and Jayaprakash (1983). In the low temperature regime (), the result from CANs agrees with that from MCMC despite the statistical error from thermal fluctuations. In the high temperature regime (), however, the two results do not match perfectly. We can understand this deviation from the comparison of the number of free vortices (or anti-vortices) shown in Table 1. Since a larger number of free vortices and anti-vortices indicates a larger entropy in the XY model, configurations with more vorticity vor lead to a lower free energy. This is the reason why the energy per site from CANs is larger than that from MCMC at , because the entropy from the free vortices and anti-vortices balances the free energy. The situation is reversed at for the same reason. The rapid increase of the number of free vortices per site around indicates that there exists a topological phase transition, i.e., the KT transition.

| 0.4 | 0.6 | 0.8 | 1.0 | 1.2 | ||

|---|---|---|---|---|---|---|

| Energy | MCMC | -0.424 | -0.682 | -0.996 | -1.336 | -1.502 |

| CANs | -0.384 | -0.588 | -1.017 | -1.346 | -1.497 | |

| Vortices | MCMC | 0.114 | 0.080 | 0.042 | 0.010 | 0.002 |

| CANs | 0.121 | 0.096 | 0.038 | 0.007 | 0.001 | |

The KT transition is a transition from bound vortex-antivortex pairs at low temperatures to unpaired vortices and anti-vortices at high temperature. In previous works Gupta et al. (1988); Komura and Okabe (2012); Bighin et al. (2019), the KT transition temperature was reported as . In our method, the CANs capture the global property of the 2D XY model since the trained neural networks help achieve a good evaluation of the free energy. Nevertheless, at high temperature, the disorder of the configurations due to the thermal fluctuations results in a slight mismatch between CANs and MCMC. The temperature effect attenuates the long-range correlation exponentially Kosterlitz (1974), which also slightly weakens the expressive ability of CANs for a finite-size system. Additionally, we emphasize that the elementary conditional distribution at each site, , should be carefully chosen since the spin in the XY model is periodically valued. We find that, as a common test, the choice of the normal distribution for can hardly make the the loss function converge to a reasonable value.

KT Transition Point.—To recognize the KT transition point quantitatively, we may introduce the spin stiffness , which reflects the change of the free energy in response to an infinitesimally slow twist on the spins. In the continuum limit, it is . In practice, we consider another quantity, the helicity modulus Weber and Minnhagen (1988); Gupta et al. (1988); Komura and Okabe (2012); Hasenbusch (2005), which is equivalent to in the limit . It can be expressed as

| (6) |

where is the size of the square lattice, is the vector pointing from site to site , and is an arbitrary unit vector in the 2D plane. The Kosterlitz renormalization group Kosterlitz (1974) predicts that jumps from the value to zero at the critical temperature, and hence the helicity modulus gives a reliable prediction of the KT transition point.

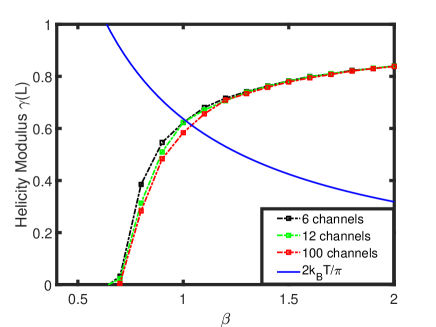

In Fig. 4, we show the helicity modulus for a large lattice size . The markers are the numerical results evaluated from CANs with several multi-channel mixtures of the beta distribution and the dashed lines are the corresponding interpolation curves. With increasing number of channels, the crossing point with the curve predicts the KT transition temperature. For a sufficiently large number of channels (100), we observe that the crossing point is located at . Since the helicity modulus depends on the correlation function that needs a higher order statistics than the energy (i.e., the mean square of the energy), here we only consider a large lattice size that can give a precise evaluation of . The result is consistent with that from the standard Monte Carlo simulations Komura and Okabe (2012); Beach et al. (2018).

We find that the computational time cost is around 0.2 seconds per training step and is almost independent of the temperature. This indicates that the Critical Slowing Down (CSD) is hopefully avoided. Even though the burden due to the increasing auto-correlation time Goodman and Sokal (1989); Urban and Pawlowski (2018) in MCMC does not appear in CANs, the relation between the training time cost and the lattice size should be mentioned here. The training time per step in CANs with the default network setup for different lattice sizes at the KT transition temperature can be recorded. We find that as a function of the lattice size it can be well fitted as , with and . Thus the cost of bypassing the CSD is such a training time that has an approximate polynomial dependence on . However, it is just one-off and the following configuration sampling procedure can take on the parallel advantage of GPU for a large ensemble generation. Therefore, a more powerful GPU can reduce the time cost efficiently. As a reference, all results presented in this work were obtained on a Nvidia RTX 2080 GPU.

Summary.—We have proposed continuous-mixture autoregressive networks (CANs) for an efficient variational calculation of many-body systems with continuous degrees of freedom. Specifically, a CAN can be designed as an Ansatz for the variational approach to the topological phase transition in the 2D XY model. The CANs are able to learn to construct microscopic states of the system, in which vortices and anti-vortices emerge automatically. We can evaluate the energy and the vorticity using configurations generated from the networks and compare them to the results from the MCMC calculations. The autoregressive structure of the neural networks is beneficial to study the long-range correlations even beyond the phase transition point. It sheds light on the investigation of more latent topological structures, such as a coupled XY model Bighin et al. (2019) and a twisted bilayer graphene Julku et al. (2020), where novel long-range correlations may emerge. Besides, a straightforward determination of the KT transition point in CANs is shown to be consistent with previous works using the standard Monte Carlo methods.

Although the increasing time cost with the lattice size is unavoidable, it becomes more economical in searching for the critical point in the limit of a large ensemble of configurations, because of the direct GPU usage. With the help of CANs, the CSD problem occurring in MCMC is expected to be remarkably alleviated, which may reduce the computational difficulties in more complicated many-body systems, e.g., lattice simulation for the possible critical end point in the QCD phase diagram. The model could be a practical step Urban and Pawlowski (2018), where the computational accuracy and practicability could be rigorously examined. Therefore, the deep learning approach, especially with well-designed neural networks, can be applied to specific physical problems Mehta and Schwab (2014); Iten et al. (2020). This inspires us to explore the techniques from a more physical viewpoint, which will help us open the black boxes of the deep learning and the nature.

Acknowledgements.

We thank Giuseppe Carleo and Junwei Liu for useful discussions. The work is supported by the BMBF under the ErUM-Data project (K. Z.), the AI grant of SAMSON AG, Frankfurt (K. Z.), the National Natural Science Foundation of China with Grant No. 11875002 (Y. J.) and No.11775123 (L. H. and L. W.), the Zhuobai Program of Beihang University (Y. J.), and the National Key R&D Program of China with Grant No. 2018YFA0306503 (L. H.). K. Z. also thanks the donation of NVIDIA GPUs from NVIDIA Corporation.References

- Buchanan (2019) M. Buchanan, Nat. Phys. 15, 1208 (2019).

- Carleo et al. (2019) G. Carleo, I. Cirac, K. Cranmer, L. Daudet, M. Schuld, N. Tishby, L. Vogt-Maranto, and L. Zdeborová, Rev. Mod. Phys. 91, 045002 (2019).

- Wang (2016) L. Wang, Phys. Rev. B 94, 195105 (2016).

- Carrasquilla and Melko (2017) J. Carrasquilla and R. G. Melko, Nat. Phys. 13, 431 (2017).

- Pang et al. (2018) L.-G. Pang, K. Zhou, N. Su, H. Petersen, H. Stöcker, and X.-N. Wang, Nat. Commun 9, 210 (2018).

- Fujimoto et al. (2018) Y. Fujimoto, K. Fukushima, and K. Murase, Phys. Rev. D 98, 023019 (2018).

- Fujimoto et al. (2020) Y. Fujimoto, K. Fukushima, and K. Murase, Phys. Rev. D 101, 054016 (2020).

- Metodiev and Thaler (2018) E. M. Metodiev and J. Thaler, Phys. Rev. Lett. 120, 241602 (2018).

- Kasieczka et al. (2019) G. Kasieczka, T. Plehn, A. Butter, K. Cranmer, D. Debnath, B. M. Dillon, M. Fairbairn, D. A. Faroughy, W. Fedorko, C. Gay, L. Gouskos, J. F. Kamenik, P. Komiske, S. Leiss, A. Lister, S. Macaluso, E. Metodiev, L. Moore, B. Nachman, K. Nordström, J. Pearkes, H. Qu, Y. Rath, M. Rieger, D. Shih, J. Thompson, and S. Varma, SciPost Phys. 7, 014 (2019).

- Steinheimer et al. (2019) J. Steinheimer, L.-G. Pang, K. Zhou, V. Koch, J. Randrup, and H. Stoecker, J. High Energ. Phys. 2019 (12), 122.

- Smith et al. (2017) J. S. Smith, O. Isayev, and A. E. Roitberg, Chem. Sci. 8, 3192 (2017).

- Carleo and Troyer (2017) G. Carleo and M. Troyer, Science 355, 602 (2017).

- Nagy and Savona (2019) A. Nagy and V. Savona, Phys. Rev. Lett. 122, 250501 (2019).

- Hartmann and Carleo (2019) M. J. Hartmann and G. Carleo, Phys. Rev. Lett. 122, 250502 (2019).

- Pfau et al. (2019) D. Pfau, J. S. Spencer, A. G. d. G. Matthews, and W. M. C. Foulkes, ArXiv190902487 Phys. (2019), arXiv:1909.02487 [physics] .

- Vicentini et al. (2019) F. Vicentini, A. Biella, N. Regnault, and C. Ciuti, Phys. Rev. Lett. 122, 250503 (2019).

- Yoshioka and Hamazaki (2019) N. Yoshioka and R. Hamazaki, Phys. Rev. B 99, 214306 (2019).

- Shen et al. (2018) H. Shen, J. Liu, and L. Fu, Phys. Rev. B 97, 205140 (2018).

- Mori et al. (2018) Y. Mori, K. Kashiwa, and A. Ohnishi, Prog Theor Exp Phys 2018, 10.1093/ptep/ptx191 (2018).

- Alexandru et al. (2017) A. Alexandru, P. Bedaque, H. Lamm, and S. Lawrence, Phys. Rev. D 96, 094505 (2017), arXiv:1709.01971 .

- Broecker et al. (2017) P. Broecker, J. Carrasquilla, R. G. Melko, and S. Trebst, Sci. Rep. 7, 8823 (2017).

- Urban and Pawlowski (2018) J. M. Urban and J. M. Pawlowski, ArXiv181103533 Hep-Lat Physicsphysics (2018), arXiv:1811.03533 [hep-lat, physics:physics] .

- Zhou et al. (2019) K. Zhou, G. Endrődi, L.-G. Pang, and H. Stöcker, Phys. Rev. D 100, 011501 (2019).

- Wu et al. (2019) D. Wu, L. Wang, and P. Zhang, Phys. Rev. Lett. 122, 080602 (2019).

- Sharir et al. (2020) O. Sharir, Y. Levine, N. Wies, G. Carleo, and A. Shashua, Phys. Rev. Lett. 124, 020503 (2020).

- Ou (2019) Z. Ou, ArXiv180801630 Cs Stat (2019), arXiv:1808.01630 [cs, stat] .

- Cristoforetti et al. (2017) M. Cristoforetti, G. Jurman, A. I. Nardelli, and C. Furlanello, ArXiv170509524 Cond-Mat Physicshep-Lat (2017), arXiv:1705.09524 [cond-mat, physics:hep-lat] .

- Background (2016) S. Background, Topological Phase Transitions and Topological Phases of Matter, Tech. Rep. (the Royal Swedish Academy of Sciences, 2016).

- Wang and Zhai (2017) C. Wang and H. Zhai, Phys. Rev. B 96, 144432 (2017).

- Beach et al. (2018) M. J. S. Beach, A. Golubeva, and R. G. Melko, Phys. Rev. B 97, 045207 (2018), arXiv:1710.09842 .

- Suchsland and Wessel (2018) P. Suchsland and S. Wessel, Phys. Rev. B 97, 174435 (2018), arXiv:1802.09876 .

- Zhang et al. (2018) P. Zhang, H. Shen, and H. Zhai, Phys. Rev. Lett. 120, 066401 (2018).

- Carvalho et al. (2018) D. Carvalho, N. A. García-Martínez, J. L. Lado, and J. Fernández-Rossier, Phys. Rev. B 97, 115453 (2018).

- Hu et al. (2019) H.-Y. Hu, S.-H. Li, L. Wang, and Y.-Z. You, ArXiv190300804 Cond-Mat Physicshep-Th (2019), arXiv:1903.00804 [cond-mat, physics:hep-th] .

- Fukushima et al. (2019) K. Fukushima, S. S. Funai, and H. Iida, ArXiv190800281 Hep-Th (2019), arXiv:1908.00281 [hep-th] .

- Rodriguez-Nieva and Scheurer (2019) J. F. Rodriguez-Nieva and M. S. Scheurer, Nat. Phys. 15, 790 (2019).

- Scheurer and Slager (2020) M. S. Scheurer and R.-J. Slager, ArXiv200101711 Cond-Mat Physicsphysics (2020), arXiv:2001.01711 [cond-mat, physics:physics] .

- Gupta et al. (1988) R. Gupta, J. DeLapp, G. G. Batrouni, G. C. Fox, C. F. Baillie, and J. Apostolakis, Phys. Rev. Lett. 61, 1996 (1988).

- Kosterlitz (1974) J. M. Kosterlitz, J. Phys. C: Solid State Phys. 7, 1046 (1974).

- Weber and Minnhagen (1988) H. Weber and P. Minnhagen, Phys. Rev. B 37, 5986 (1988).

- (41) Intuitively, in the Bayesian inference the beta distribution is the conjugate prior probability distribution of the Bernoulli distribution, which has been proven to be a proper distribution for the Ising model case.

- Van Den Oord et al. (2016) A. Van Den Oord, N. Kalchbrenner, and K. Kavukcuoglu, in Proceedings of the 33rd International Conference on International Conference on Machine Learning - Volume 48, ICML’16 (JMLR.org, New York, NY, USA, 2016) pp. 1747–1756.

- Wagner and Schollwoeck (2010) H. Wagner and U. Schollwoeck, Scholarpedia 5, 9927 (2010).

- MacKay and Kay (2003) D. J. C. MacKay and D. J. C. M. Kay, Information Theory, Inference and Learning Algorithms (Cambridge University Press, 2003).

- Williams (1992) R. J. Williams, Mach Learn 8, 229 (1992).

- Germain et al. (2015) M. Germain, K. Gregor, I. Murray, and H. Larochelle, in ICML (2015).

- (47) This also ensures that the weights are nonzero for half of the kernel and each conditional probability is independent of with for a prechosen ordering.

- Chung (1999) S. G. Chung, Phys. Rev. B 60, 11761 (1999).

- Salimans et al. (2017) T. Salimans, A. Karpathy, X. Chen, and D. P. Kingma, ArXiv170105517 Cs Stat (2017), arXiv:1701.05517 [cs, stat] .

- Wang (2020) L. Wang, Code for recognizing the topological phase transition by the continuous-mixture autoregressive networks (2020), https://github.com/Anguswlx/CAN2XY.

- Hasenbusch (2005) M. Hasenbusch, J. Phys. A: Math. Gen. 38, 5869 (2005).

- Komura and Okabe (2012) Y. Komura and Y. Okabe, J. Phys. Soc. Jpn. 81, 113001 (2012).

- Tobochnik and Chester (1979) J. Tobochnik and G. V. Chester, Phys. Rev. B 20, 3761 (1979).

- Teitel and Jayaprakash (1983) S. Teitel and C. Jayaprakash, Phys. Rev. B 27, 598 (1983).

- (55) The number of free vortices (which equals to the number of free anti-vortices) is given by , where the vorticity is given by in the continuum limit.

- Bighin et al. (2019) G. Bighin, N. Defenu, I. Nándori, L. Salasnich, and A. Trombettoni, Phys. Rev. Lett. 123, 100601 (2019).

- Goodman and Sokal (1989) J. Goodman and A. D. Sokal, Phys. Rev. D 40, 2035 (1989).

- Julku et al. (2020) A. Julku, T. J. Peltonen, L. Liang, T. T. Heikkilä, and P. Törmä, Phys. Rev. B 101, 060505 (2020).

- Mehta and Schwab (2014) P. Mehta and D. J. Schwab, ArXiv14103831 Cond-Mat Stat (2014), arXiv:1410.3831 [cond-mat, stat] .

- Iten et al. (2020) R. Iten, T. Metger, H. Wilming, L. del Rio, and R. Renner, Phys. Rev. Lett. 124, 010508 (2020), arXiv:1807.10300 .