Continuous-Time Path-Dependent Exploratory Mean-Variance Portfolio Construction

Abstract

In this paper, we present an extended exploratory continuous-time mean-variance framework for portfolio management. Our strategy involves a new clustering method based on simulated annealing, which allows for more practical asset selection. Additionally, we consider past wealth evolution when constructing the mean-variance portfolio. Empirically, the strategy learns from past wealth paths and, in tests on S&P 500 stocks, outperformed the index over three 30-day trading windows.

1 Introduction

The seminal work of Markowitz (1952) is widely regarded as the beginning of modern portfolio management; it proposes a framework for constructing portfolios over a single period. Much research on portfolio management has followed. Li and Ng (2000) consider the portfolio selection problem in a multi-period setting, and Zhou and Li (2000) study it in a continuous-time setting, which makes high-frequency portfolio management possible. Wang et al. (2018) first introduce an exploratory continuous-time mean-variance framework. It replaces the deterministic policy with a stochastic policy for adjusting asset holdings, which balances exploration against exploitation and is considered more robust than a deterministic policy. This exploratory framework is studied further in a series of subsequent papers: Wang (2019) extends it to a multi-asset setting, and Jia and Zhou (2022a, 2022b, 2022c) develop a more general framework for policy evaluation and policy improvement.

In this paper, we further extend the exploratory continuous-time mean-variance framework by first clustering assets into several groups and then constructing a mean-variance portfolio that takes past wealth processes into consideration. In other words, our framework has two phases: a clustering phase followed by a portfolio construction phase. The motivation for the clustering phase is to exclude similar assets and thus reduce complexity. For small retail and institutional investors, holding thousands of stocks at one time is neither possible nor necessary. In the portfolio construction phase, the motivation for considering the past wealth process, or past performance, is that past experience should matter for a current decision. A current decision should be based on past lessons together with the current wealth level, not on the current wealth level alone. As an intuitive example, a person who entered the stock market in mid-2020 probably concluded that making money in the stock market is easy and may have started using high leverage in the expectation of higher gains; such a person probably suffered a large loss in 2022. A person who entered the stock market in 2008, by contrast, probably became more cautious and more aware of the market environment, such as the Federal Reserve's interest rate policy.

Many asset clustering methods have been developed in the past decades. As pointed out in Tang et al. (2022), one necessary criterion that was missing from the previous literature is that two assets from the same cluster should have similar correlations with any other asset. In the spirit of that paper, we use the similarity metric proposed in Dolphin et al. (2021) instead of correlation. The reason is that correlation sometimes fails to capture the similarity between two assets: as pointed out in Dolphin et al. (2021), one asset can have negative returns while another has high returns, and yet the two can still be highly correlated. The similarity metric we use takes cumulative returns into consideration and can therefore capture pattern similarities between assets. The clustering method in this paper is based on simulated annealing and is inspired by Ludkovski et al. (2022). We define an energy function that depends on asset similarities within a group. To the best of our knowledge, this is the first work that uses this similarity metric to cluster assets with a simulated annealing method. In our empirical results, the method performs well and may even help investors find assets that offer statistical arbitrage opportunities, which requires further study.

There is a large literature on portfolio management and portfolio optimization, but very few papers consider the problem in a path-dependent setting. Path dependence in portfolio management means that asset positions should depend on the performance of past investments. Learning from the past is an important principle that should not be ignored. The path-dependent case poses technical difficulties, and for a long time no suitable tools were available. The groundbreaking paper Dupire (2019) introduces functional Ito calculus, which defines a new type of calculus on path space and yields path versions of the Ito formula and the Feynman-Kac formula. However, the path-dependent Hamilton-Jacobi-Bellman equation is very hard to solve numerically. Recent developments in deep learning make solving such equations feasible. Several papers use neural networks to solve path-dependent PDEs, such as Saporito and Zhang (2020) and Sabate-Vidales et al. (2020). In this paper, we solve the PDE numerically with PDGM, a neural network architecture whose main components are LSTM and feed-forward networks. LSTM, one of the most classical neural networks, can process sequential information and is naturally suited to summarizing past history. The feed-forward network can then model a given functional using the summaries produced by the LSTM. It is worth pointing out that LSTM is designed to forget some information from the past. In the deep learning community, the transformer has largely replaced the LSTM for processing sequential information. We believe that replacing the LSTM component with a transformer may give better results in solving path-dependent PDEs, which requires further study.

2 Simulated Annealing Clustering

Subsection 2.1 introduces a new similarity metric, and subsection 2.2 introduces the clustering method based on the proposed similarity metric via simulated annealing.

2.1 Similarity Metric of Financial Time Series

As pointed out in Dolphin et al. (2021), two assets can be perfectly correlated and yet perform very differently. Because conventional correlation measures similarity relative to mean returns, it cannot be the only criterion for clustering assets with similar patterns. In this paper, we use the metric proposed in Dolphin et al. (2021) to measure the similarity of two assets.

Assume there are two time-series , and , which represent returns of two assets, and the similarity between these two time-series is defined to be

| (1) |

| (2) |

| (3) |

In the above definition of similarity, measures the distance between the cumulative returns of two assets and is a modified version of correlation that measures the similarity of absolute performance instead of relative performance to mean returns. is a hyper-parameter to be determined. Empirically, the metric performs best when is between 0.4 and 0.6.
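The two ingredients described above can be illustrated with a minimal Python sketch. It is not the exact formula of Dolphin et al. (2021): the function names, the map from distance to a score in (0, 1], and the convex weighting with `weight` are all assumptions made for illustration.

```python
import math

def cumulative_returns(returns):
    """Cumulative simple return after each period: prod(1 + r) - 1."""
    out, level = [], 1.0
    for r in returns:
        level *= 1.0 + r
        out.append(level - 1.0)
    return out

def uncentered_correlation(x, y):
    """Correlation without subtracting the means (cosine similarity),
    so it compares absolute rather than mean-relative performance."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm > 0 else 0.0

def similarity(x, y, weight=0.5):
    """Hypothetical blend of the two ingredients (assumed form)."""
    cx, cy = cumulative_returns(x), cumulative_returns(y)
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(cx, cy)))
    closeness = 1.0 / (1.0 + dist)  # assumed map from distance to (0, 1]
    return weight * closeness + (1.0 - weight) * uncentered_correlation(x, y)
```

Under these assumptions, two return series that differ by a constant shift have a Pearson correlation of 1 but a similarity strictly below that of a series with itself, which is the behaviour the text motivates.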

2.2 Simulated Annealing Clustering

The clustering procedure is based on simulated annealing, and its key ingredient is the energy function. An ideal energy function should control the size of each cluster and place similar assets in the same group. Let be a partition of assets. Inspired by Ludkovski et al. (2022), we propose a similar energy function

| (4) |

In the above definition, is the number of clusters, which should never be 1; otherwise, no clustering takes place. is a hyper-parameter to be determined, which puts a soft constraint on the number of clusters.

The simulated annealing clustering method works as follows. Initially, all assets are in the same group. In each iteration, a perturbation moves one asset from one cluster to another, and the perturbation is accepted or rejected according to the change in the energy function. If the energy decreases, the perturbation is accepted. If the energy increases, the perturbation is accepted only with a probability that depends on the energy change and the current temperature.

The exact simulated annealing clustering method is as follows:

Initialization: Choose an initial temperature: , final temperature: , cooling rate: , and place all assets in the same cluster.

Step 1: At the current step, denote the current partition as , and apply the perturbation operation to it, resulting in a new partition, denoted as . Note that the current temperature is

Step 2: .

If: , accept partition as the partition for next iteration

Else:

is accepted as with probability

is accepted as with probability

Step 3: Lower the temperature based on the cooling rate: .

Step 4: If , end the clustering procedure. Otherwise, repeat steps 1-3.
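The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the energy used here (within-cluster dissimilarity plus a per-cluster `penalty`) is a hypothetical stand-in for equation (4), the acceptance rule is the standard Metropolis form, and all function and parameter names are made up for the example.

```python
import math
import random

def energy(clusters, sim, penalty):
    """Assumed energy: within-cluster dissimilarity plus a per-cluster penalty."""
    total = penalty * len(clusters)
    for members in clusters:
        for a in range(len(members)):
            for b in range(a + 1, len(members)):
                total += 1.0 - sim[members[a]][members[b]]
    return total

def perturb(clusters, rng):
    """Move one randomly chosen asset to another (possibly new) cluster."""
    new = [list(c) for c in clusters]
    src = rng.randrange(len(new))
    asset = new[src].pop(rng.randrange(len(new[src])))
    dst = rng.choice([k for k in range(len(new) + 1) if k != src])
    if dst == len(new):
        new.append([asset])       # open a new cluster
    else:
        new[dst].append(asset)
    return [c for c in new if c]  # drop a cluster that became empty

def anneal(sim, t_init=1.0, t_final=1e-3, cooling=0.995, penalty=0.5, seed=0):
    rng = random.Random(seed)
    clusters = [list(range(len(sim)))]  # all assets start in one cluster
    current = energy(clusters, sim, penalty)
    temp = t_init
    while temp >= t_final:
        candidate = perturb(clusters, rng)
        delta = energy(candidate, sim, penalty) - current
        # Metropolis rule: always accept a decrease; accept an increase
        # with probability exp(-delta / temp).
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            clusters, current = candidate, current + delta
        temp *= cooling                 # Step 3: cool down
    return clusters, current
```

Calling `anneal(sim)` on a symmetric similarity matrix `sim` (a list of lists) returns the final partition as lists of asset indices together with its energy. Recomputing the full energy at every step keeps the sketch short; an incremental update would be preferable for hundreds of assets.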

3 Exploratory Mean-Variance Framework

The exploratory mean-variance framework was first proposed in Wang et al. (2018) for one-dimensional asset dynamics and extended to multi-dimensional asset dynamics in Wang (2019). We follow Wang (2019) in introducing the framework in this section.

The portfolio management problem is to form a portfolio that minimizes the variance of terminal wealth subject to a target expected return.

| (5) | |||

| (6) |

Assume the clustering phase is already completed and there are clusters. We randomly select assets by choosing one asset from each cluster, whose dynamics are denoted as . For each asset , assume the underlying dynamics are

Let , , and denote the vector of drift rates, the covariance matrix, and the vector of n-dim independent Brownian motions respectively. Let denote the holdings (in dollars) of those assets at time . The wealth process is therefore

| (7) |

In this paper, the holdings/control is path-dependent, which means that it depends on the past history of the wealth process. To distinguish the current wealth level from the path of the wealth process, we use lowercase letters for the current wealth level and uppercase letters for the path of the wealth process. For example, means the wealth level at time under holdings/control , while means a path of the wealth process from time to time . The policy is now a probability density, meaning that, given a realized wealth path , the holdings/control at time has probability density of being at .

Under the exploratory framework proposed in Wang et al. (2018), a new stochastic process describes the average performance of the wealth process:

| (8) |

Here, we assume that policy is applied at time . is an independent Brownian motion, and is a positive definite matrix. , and are as follows,

| (9) |

| (10) |

The goal is to identify a policy that minimizes the following objective function, where is the Lagrangian multiplier

| (11) |

This cost function is very similar to the one in Wang (2019), except that it is path-dependent. The superscript indicates that wealth processes are generated under the policy . Because both the control and the cost function are path-dependent, classical stochastic control results do not apply directly; a functional version of stochastic control is needed.

4 Path Dependent Stochastic Control

4.1 Functional Ito Calculus

Functional Ito calculus is a powerful tool developed in Dupire (2019) for studying path-dependent stochastic calculus problems. The following is a quick introduction to functional Ito calculus and some important results from Dupire (2019).

Let denote the set of cadlag paths up to time , and specifically, is the space of cadlag paths that takes values in . denotes the space of two cadlag paths, one takes values in and the other takes value in . In general, , and is a functional.

Let be a path that takes values in , and let denote its value at time . We define the vertical and flat extensions as follows (for a path that takes values in , the vertical and flat extensions are applied to each dimension individually):

Let be a functional; its partial derivatives are defined as follows (for , the definition applies to each dimension individually):
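To make the two extensions concrete, here is a small Python sketch for a one-dimensional path stored on a uniform time grid. It follows the usual definitions from Dupire's functional Ito calculus (a vertical extension bumps only the terminal value; a flat extension prolongs the path in time with its terminal value frozen) and approximates the functional derivatives by finite differences. The list representation, the step sizes, and the two example functionals are assumptions for illustration.

```python
def vertical_extension(path, h):
    """Bump only the terminal value of the path by h."""
    return path[:-1] + [path[-1] + h]

def flat_extension(path, steps=1):
    """Extend the path in time, holding its terminal value fixed."""
    return path + [path[-1]] * steps

def space_derivative(f, path, dt, h=1e-5):
    """Finite-difference functional space derivative (vertical bump)."""
    return (f(vertical_extension(path, h), dt) - f(path, dt)) / h

def time_derivative(f, path, dt):
    """Finite-difference functional time derivative (flat extension by dt)."""
    return (f(flat_extension(path), dt) - f(path, dt)) / dt

def running_integral(path, dt):
    """Left-endpoint approximation of the integral of the path over [0, t]."""
    return sum(path[:-1]) * dt

def terminal_square(path, dt):
    """A functional that depends only on the terminal value."""
    return path[-1] ** 2
```

For the running integral, the time derivative recovers the terminal value and the space derivative vanishes; for the squared terminal value, the roles are reversed. This is the behaviour the continuous-time definitions predict.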

To understand the functional Ito formula, we need to introduce the metric on the path space . Let , and be two paths in , and without loss of generality, assume . Their distance is defined to be

| (12) |

After defining the metric, we can define the continuity of a functional.

A functional is -continuous at if , , and such that , there is . A functional is -continuous if it is -continuous at all paths.

A functional is in if it is -continuous, in x, and in t, with its partial derivatives also being -continuous.

Theorem 4.1 (Functional Ito Formula)

Let be a continuous semi-martingale process, , and is a path of process . Then, for any ,

| (13) |
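For orientation, the one-dimensional form of the functional Ito formula in Dupire (2019) is usually written as below. The symbols are assumptions about notation rather than a transcription of equation (13): $X_t$ is the path up to time $t$, $x_t$ its terminal value, and $\Delta_t$, $\Delta_x$, $\Delta_{xx}$ are the functional time derivative and the first and second functional space derivatives.

```latex
f(X_T) = f(X_0)
  + \int_0^T \Delta_t f(X_t)\,dt
  + \int_0^T \Delta_x f(X_t)\,dx_t
  + \frac{1}{2}\int_0^T \Delta_{xx} f(X_t)\,d\langle x\rangle_t
```

The formula has the same shape as the classical Ito formula, with ordinary partial derivatives replaced by functional derivatives evaluated along the path.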

4.2 Functional Feynman-Kac Formula and Functional HJB equation

Now, assume that is a path of the wealth process. If we use policy for the remaining time, the cost functional is as follows,

| (14) |

To simplify our computation, denote the following functional as

| (15) |

| (16) |

| (17) |

Thus, the dynamics, and the cost functional are

| (18) |

| (19) |

Let , and consider the following,

| (20) |

Consider the following,

Therefore, the functional is a martingale, and by the functional Ito formula, the following can be derived

| (21) |

To simplify the notation, assume the policy is applied at time , then at time , and there is a path . , and we have the functional Feynman-Kac formula,

| (22) |

Now, let , the following is an informal derivation of the path-dependent HJB equation. A rigorous derivation for portfolio management problems faces many technical challenges and is beyond the scope of this paper.

| (23) | ||||

| (24) | ||||

| (25) | ||||

| (26) | ||||

| (27) |

The first term in the above equation depends on the policy applied from time , which is independent of the policy applied during the interval . In other words, the policy that appears in the above equation is a combination of the policy applied in the interval , and the policy applied after time . Therefore, the above equation becomes

| (28) |

Moving the left-hand side to the right, and letting , the above equation becomes,

| (29) | ||||

| (30) | ||||

| (31) |

Dividing both sides by , we obtain the following path-dependent HJB equation

| (32) |

Consider the formula inside the , plug in , , and , we have the following formula,

| (33) |

To find a candidate for the optimal policy, the following equation has to be independent of and only depend on

| (34) |

| (35) |

Assume depend on the , and by the fact that is continuous at every point , then without loss of generality, there exist two regions , and such that for points , and . Without loss of generality, assume the volume of these two regions is equal to . Now, let be sufficiently small, and consider the upgraded that for , and for , and is same for the rest space. Therefore, the change of for regions is

| (36) | ||||

| (37) | ||||

| (38) | ||||

| (39) |

Here, since the is sufficiently small, we use Taylor expansion for the to the (37). Similarly, for region , the change of the is

| (40) | ||||

| (41) | ||||

| (42) | ||||

| (43) |

Therefore, the change of (31) is

| (44) | ||||

| (45) | ||||

| (46) | ||||

| (47) |

From the above derivation, one can see that if the policy depends on , there is always an improved policy by slightly adjusting the probability density.

| (48) |

Thus, the optimal policy is of the following form, where is constant

| (49) |

By the fact that is a probability distribution, we have

| (50) |

Thus, is a Gaussian distribution; more specifically,

| (51) |

Plugging the policy back into the HJB equation, as shown in Wang (2019), the HJB equation becomes

| (52) |

In the path-dependent case, no analytic solution of equation is available in general. Solving equation numerically is therefore the practical route and should be sufficient for practitioners. Neural networks are a natural choice for solving path-dependent PDEs numerically, because the deep learning community has developed effective ways to handle sequential information.

5 Deep Learning PDE Solver

To solve numerically, it is important to approximate the value function, , where is a path of wealth process up to time . However, there is no magic in deep learning. None of the deep learning algorithms can directly deal with continuous sample paths and output values. Therefore, we have to discretize the time and sample path, plug the discretized version into a neural network, and get the output value.

Perhaps the best-known neural network for capturing long-term dependence is Long Short-Term Memory (LSTM), a special type of recurrent neural network designed to mitigate the vanishing-gradient problem that plain recurrent networks suffer from on long sequences (exploding gradients are a related but distinct problem). Feeding our discretized sample path into an LSTM yields outputs that summarize the path information compactly. After capturing the path information, one more step remains: modeling the value function based on those outputs. This step is easier, because by the universal approximation theorem a feed-forward neural network suffices. In the rest of this section, we follow the framework proposed in Saporito and Zhang (2020) to solve our equation.

5.1 Long Short-Term Memory

First, LSTM is not a specific neural network structure, but more like a building block instead. The structure of an LSTM block is as follows,

In the above block diagram, there are three inputs, , , and , and there are two outputs , and . Intuitively, , as a single number, is a compact representation of all the information from the first steps, so it is called "long-term memory". The short-term memory , as the name indicates, is influenced more by the information from the most recent steps. Finally, represents the information from the current step. These three quantities interact to generate the new long-term and short-term memory, , and .

Returning to our equation, assume we have a sample path of the wealth process , where . We discretize the time interval into equal periods, , and , which will be useful later. Let , where be the wealth at time . We thus have the following network, which consists of blocks of LSTM. Here, , and represent that there is no memory initially.

In summary, we discretize a sample path into pieces and feed it into a neural network that consists of LSTM blocks to obtain an output vector . The next step is to use the output vector to model the value function .
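The recursion through the chain of LSTM blocks can be sketched in plain Python. This is an illustration with scalar memories and hand-picked weights, not the trained network used in the paper: the gate equations are the standard LSTM ones, while the parameter layout `params[name] = (w_x, w_h, b)` and the function names are assumptions made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One scalar LSTM block: returns (short-term memory, long-term memory)."""
    pre = {}
    for name in ("forget", "input", "output", "candidate"):
        w_x, w_h, b = params[name]
        pre[name] = w_x * x + w_h * h_prev + b
    f = sigmoid(pre["forget"])       # how much old long-term memory to keep
    i = sigmoid(pre["input"])        # how much new information to write
    o = sigmoid(pre["output"])       # how much memory to expose
    g = math.tanh(pre["candidate"])  # candidate information from this step
    c = f * c_prev + i * g           # new long-term memory
    h = o * math.tanh(c)             # new short-term memory
    return h, c

def encode_path(wealth_path, params):
    """Run a discretized wealth path through the chain of LSTM blocks."""
    h, c = 0.0, 0.0                  # no memory initially
    outputs = []
    for x in wealth_path:
        h, c = lstm_step(x, h, c, params)
        outputs.append(h)
    return outputs
```

Each entry of the returned list summarizes the path up to the corresponding time point, which is the quantity the feed-forward network takes as an input. In practice one would use a vector-valued LSTM from a deep learning library and learn the weights by gradient descent.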

5.2 Value Function Approximation

To approximate the value function , where , we use the most well-known neural network, the feed-forward (fully connected) neural network, with three inputs, , , and , and one output, denoted as , where denotes the parameters of the feed-forward network. The exact structure of the feed-forward network (the number of hidden layers, the width of each layer, and the activation function) is discussed in the empirical studies and is not important for the rest of the section.

To summarize the above procedure, denote , where denotes the parameters of the LSTM network. The partial derivatives of the value function can thus be represented as (here, we adopt the notation in Saporito and Zhang (2020))

| (53) | |||

| (54) | |||

| (55) | |||

| (56) | |||

| (57) |

In this way, with the "right" parameters , and , we can approximate by . To obtain the "right" parameters, we need to train the above model on simulated sample paths.

Consider the equation , to simplify the notations, let

| (58) | |||

| (59) | |||

| (60) |

Thus, equation becomes

| (61) |

Suppose there are simulated paths and each sample path is discretized into pieces. Denote by the simulated path. To save space, let . Then the loss function to minimize is

| (62) |

Here, is a hyper-parameter to be chosen depending on the situation; the last term pushes the average of the final values of the simulated paths as close to the expected return as possible.
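The structure of such a loss can be sketched in Python as follows. This is an assumed, PDGM-style form, not a transcription of equation (62): it supposes that the loss combines a mean squared PDE residual over all path points, a mean squared terminal-condition error, and the weighted penalty on the gap between the average terminal wealth and the target described above. The function and argument names are hypothetical.

```python
def pdgm_style_loss(pde_residuals, terminal_errors, terminal_wealth,
                    target, weight):
    """Assumed three-term loss for a batch of simulated paths.

    pde_residuals:   one list of PDE residuals per path (one per time step)
    terminal_errors: terminal-condition mismatch of the network, per path
    terminal_wealth: final wealth of each simulated path
    target:          expected terminal wealth to be matched
    weight:          hyper-parameter on the mean-matching penalty
    """
    n_paths = len(pde_residuals)
    n_points = sum(len(path) for path in pde_residuals)
    interior = sum(r * r for path in pde_residuals for r in path) / n_points
    terminal = sum(e * e for e in terminal_errors) / n_paths
    mean_gap = sum(terminal_wealth) / n_paths - target
    return interior + terminal + weight * mean_gap ** 2
```

A larger `weight` enforces the expected-return constraint more strictly, at the cost of giving the PDE residual relatively less influence during training.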

6 Empirical Studies

There are two phases of empirical studies, the first is the clustering phase, and the second is the portfolio construction phase.

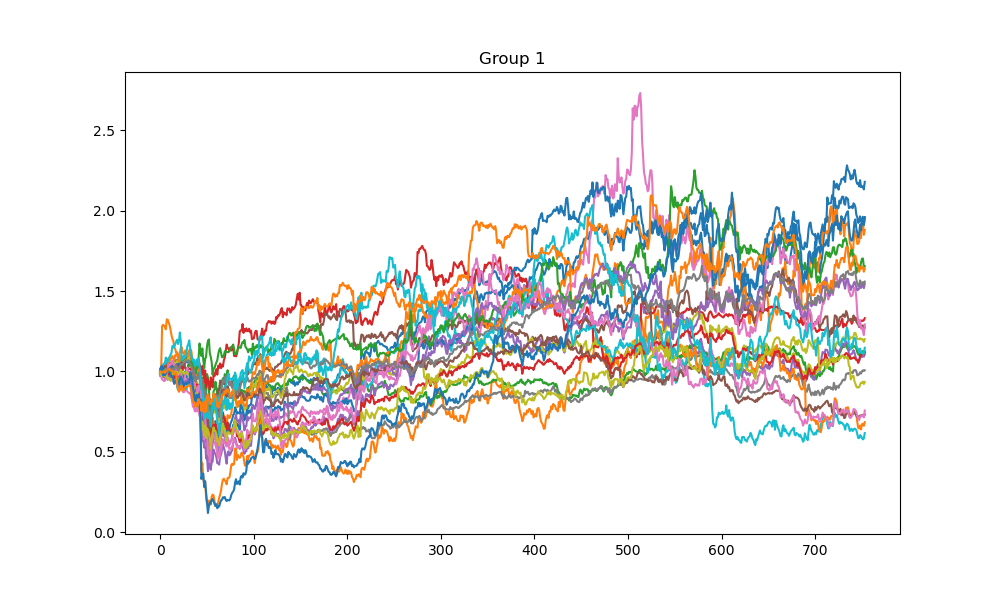

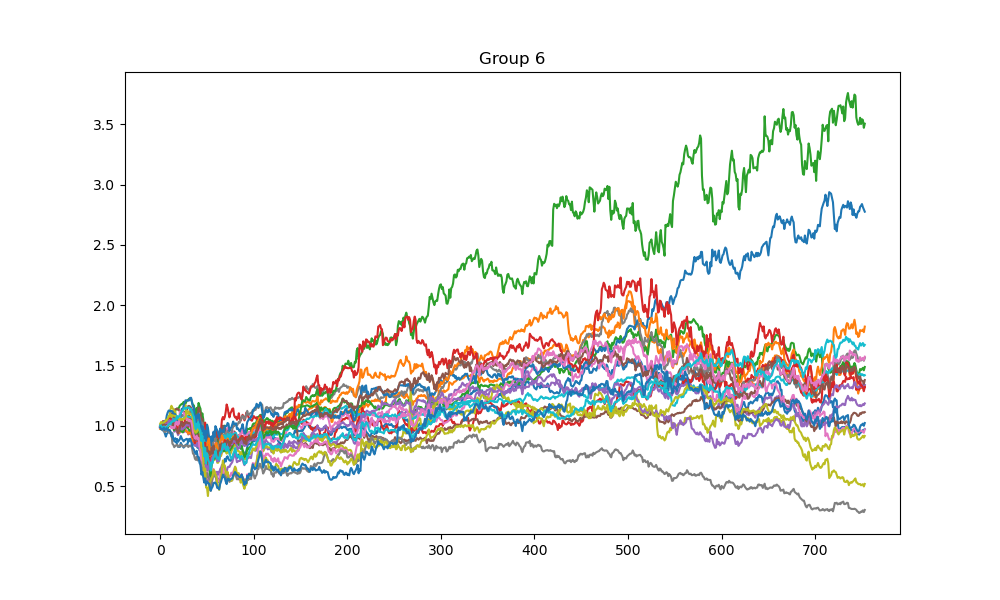

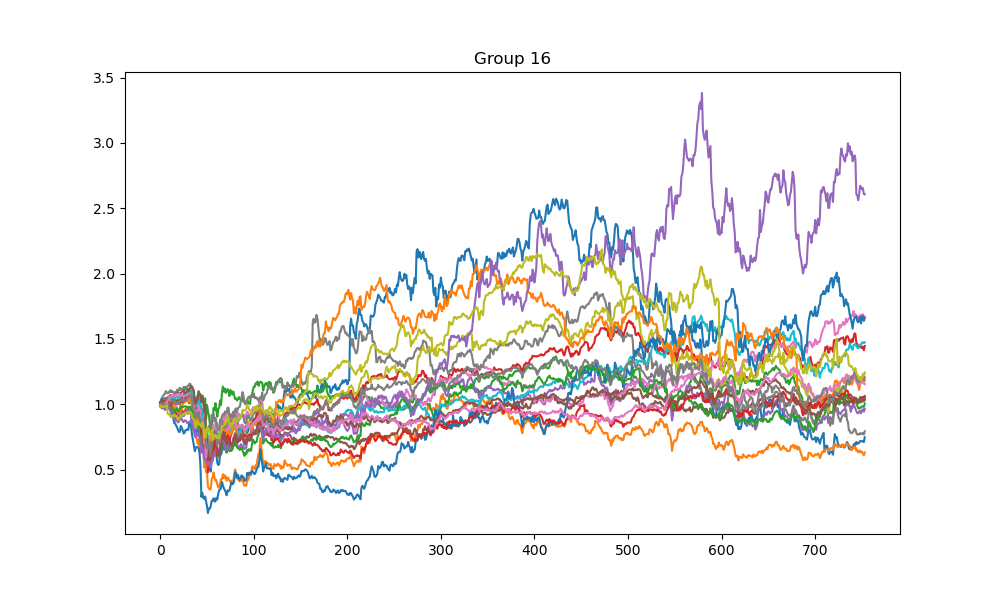

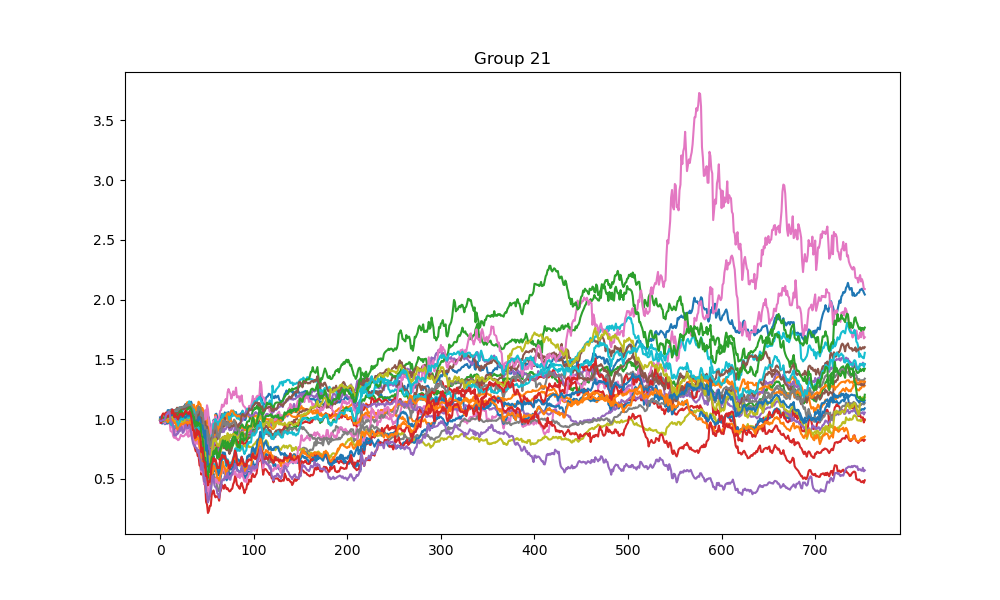

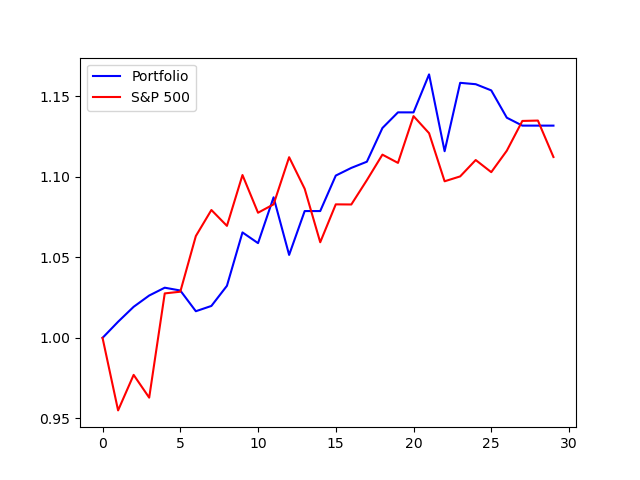

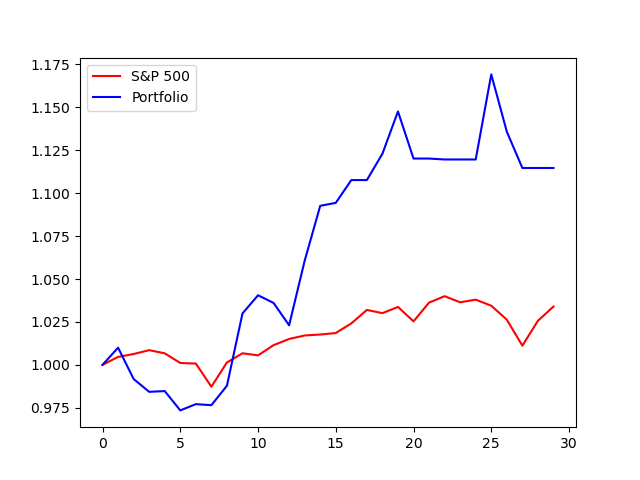

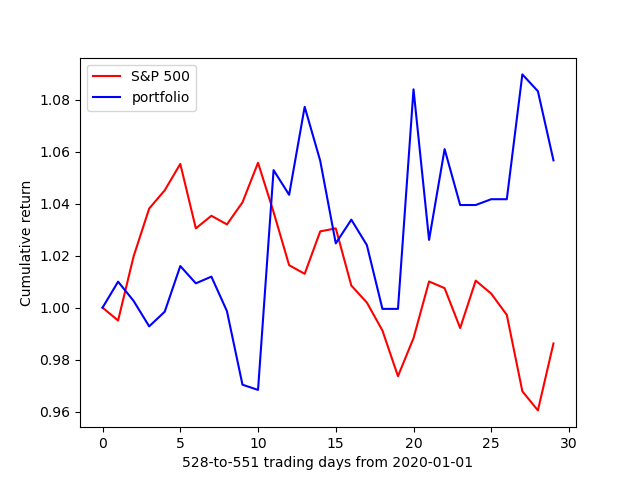

In the clustering phase, the simulated annealing clustering method with , , , , and is used to cluster stocks in the S&P 500 index into 25 groups based on 2020-2022 historical data. The procedure is repeated 100 times to minimize the energy function. Figures 1-4 show the cumulative returns of stocks from four different groups. The results indicate that most stocks in a group perform similarly, although some exceptional stocks perform differently. This finding suggests that the clustering method could be used in pairs trading to identify statistical arbitrage opportunities if the size of each cluster is limited to 2-5 stocks. However, this research direction is not explored in this paper and is left for future study.

In the second phase, 25 stocks are randomly selected, one from each group, and a mean-variance portfolio is constructed based on their historical data. Before constructing the portfolio, the drift rate and covariance matrix for each day must be estimated. In this paper, the estimation is based on the past 75 days of stock returns, which may not be the most accurate method. More accurate estimates can be obtained with high-frequency data and sufficient computational resources.
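A minimal Python sketch of such a rolling-window estimate is given below. It assumes the plain sample mean and the unbiased sample covariance over the most recent `window` days of returns; the paper does not state which estimator it uses, and the function name and data layout are hypothetical.

```python
def rolling_estimates(returns, window=75):
    """Estimate per-period drift and covariance from the last `window` days.

    returns: list of days, each a list with one return per asset.
    """
    recent = returns[-window:]
    n_days, n_assets = len(recent), len(recent[0])
    # Sample mean of each asset's return over the window.
    mean = [sum(day[j] for day in recent) / n_days for j in range(n_assets)]
    # Unbiased sample covariance (divides by n_days - 1).
    cov = [[sum((day[a] - mean[a]) * (day[b] - mean[b]) for day in recent)
            / (n_days - 1)
            for b in range(n_assets)]
           for a in range(n_assets)]
    return mean, cov
```

The estimates are per trading day. With 25 assets and 75 observations the sample covariance is noisy, which is consistent with the caveat above about estimation accuracy.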

The parameters used in the trading strategy were set as follows: , , and since the interest rate has been zero for most of the past two years. The initial wealth path was and the trading strategy began with 1 dollar. At the start of each period, the current wealth path was used to generate hundreds of simulated paths with the same drift rate and volatility. These simulated paths were then used to train the neural network, which produced the policy parameters for generating holdings for the next period. After each iteration, a new wealth point was added to the current wealth path, so a new neural network had to be trained. Due to limited computational resources, the trading strategy was tested for only 30 consecutive trading days (30 iterations) in each of three different periods, covering bull, bear, and volatile markets. Figures 5-7 show that the trading strategy outperformed the S&P 500 index in these test periods.

7 Conclusion

This paper introduces a novel asset clustering method and extends the exploratory mean-variance framework to the path-dependent case. Possible improvements to this framework include replacing the LSTM with a transformer to improve the neural network's structure, using high-frequency data to estimate model parameters, and adding constraints on leverage or portfolio asset percentages to make the framework more robust.

References

- Dolphin, R., Smyth, B., Xu, Y., & Dong, R. (2021). Measuring financial time series similarity with a view to identifying profitable stock market opportunities. In International Conference on Case-Based Reasoning (pp. 64–78).

- Dupire, B. (2019). Functional Itô calculus. Quantitative Finance, 19(5), 721–729.

- Jia, Y., & Zhou, X. Y. (2022a). Policy evaluation and temporal-difference learning in continuous time and space: A martingale approach. Journal of Machine Learning Research, 23(154), 1–55.

- Jia, Y., & Zhou, X. Y. (2022b). Policy gradient and actor-critic learning in continuous time and space: Theory and algorithms. Journal of Machine Learning Research, 23(154), 1–55.

- Jia, Y., & Zhou, X. Y. (2022c). q-Learning in continuous time. arXiv preprint arXiv:2207.00713.

- Li, D., & Ng, W.-L. (2000). Optimal dynamic portfolio selection: Multiperiod mean-variance formulation. Mathematical Finance, 10(3), 387–406.

- Ludkovski, M., Swindle, G., & Grannan, E. (2022). Large scale probabilistic simulation of renewables production. arXiv preprint arXiv:2205.04736.

- Markowitz, H. (1952). Portfolio selection. The Journal of Finance, 7(1), 77–91.

- Sabate-Vidales, M., Šiška, D., & Szpruch, L. (2020). Solving path dependent PDEs with LSTM networks and path signatures. arXiv preprint arXiv:2011.10630.

- Saporito, Y. F., & Zhang, Z. (2020). PDGM: A neural network approach to solve path-dependent partial differential equations. arXiv preprint arXiv:2003.02035.

- Tang, W., Xu, X., & Zhou, X. Y. (2022). Asset selection via correlation blockmodel clustering. Expert Systems with Applications, 195, 116558.

- Wang, H. (2019). Large scale continuous-time mean-variance portfolio allocation via reinforcement learning. arXiv preprint arXiv:1907.11718.

- Wang, H., Zariphopoulou, T., & Zhou, X. (2018). Exploration versus exploitation in reinforcement learning: A stochastic control approach. arXiv preprint arXiv:1812.01552.

- Zhou, X. Y., & Li, D. (2000). Continuous-time mean-variance portfolio selection: A stochastic LQ framework. Applied Mathematics and Optimization, 42, 19–33.