Contrastive explainable clustering with differential privacy

Abstract

This paper presents a novel approach in Explainable AI (XAI) that integrates contrastive explanations with differential privacy in clustering methods. For several basic clustering problems, including $k$-median and $k$-means, we give efficient differentially private contrastive explanations that achieve essentially the same utility as explanations obtained from non-private clustering. We define a contrastive explanation as the difference between the utility of the original clustering and the utility of a clustering with one centroid fixed at a specified position. In each contrastive scenario, we designate a specific data point as the fixed centroid position, which lets us measure the impact of this constraint on clustering utility under differential privacy. Extensive experiments across various datasets show that our method provides meaningful explanations without significantly compromising data privacy or clustering utility. This demonstrates the feasibility of balancing privacy and utility when explaining clustering tasks.

1 Introduction

Different notions of clustering are fundamental primitives in several areas, including machine learning, data science, and operations research Reddy (2018). Clustering objectives (e.g., $k$-median and $k$-means) are crucial in facility location planning and have been applied in pandemic response strategies, such as determining optimal sites for COVID-19 vaccination and testing centers Bertsimas et al. (2022); Mehrab et al. (2022). The distance to a cluster center is a significant factor in these scenarios, as highlighted during the COVID-19 pandemic, where accessible vaccine locations played a pivotal role Rader et al. (2022). Individuals, especially those distant from a cluster center, therefore often seek explanations for their specific assignment or location in the clustering plan. For example, consider a resident questioning why a COVID-19 testing center isn’t located next to their home. Such queries reflect the need for explainability in clustering decisions, where factors like population density, resource allocation, and public health considerations affect site selection. The resident’s inquiry underscores the importance of transparent clustering methodologies that can provide understandable explanations for facility placements, especially in critical health scenarios.

Such questions fall within the area of Explainable AI, which is a rapidly growing and vast area of research Finkelstein et al. (2022); Sreedharan et al. (2020); Miller (2019); Boggess et al. (2023). We focus on post-hoc explanations, especially contrastive explanations, e.g., Miller (2019), which address “why P instead of Q?” questions. Such methods have been used in many applications, including multi-agent systems, reinforcement learning, and contrastive analysis, e.g., Boggess et al. (2023); Sreedharan et al. (2021); Madumal et al. (2020); Sreedharan et al. (2020). In the context of the clustering problems mentioned above, a possible explanation for a resident is the increase in the cost of the clustering if a center is placed close to the resident, compared to the cost of an optimal solution, which is relatively easy to compute. This increased cost reflects a trade-off, where optimizing for one resident’s convenience could result in worse centroid positions for other residents, highlighting the complex decision-making process in clustering for fair resource distribution.

Data privacy is a crucial concern across various fields, and Differential Privacy (DP) is one of the most widely used and rigorous models for privacy Dwork and Roth (2014). We focus on the setting where the set of data points is private; for instance, in the vaccine center deployment problem, each data point corresponds to an individual who is seeking vaccines and would like to remain private. There has been a lot of work on the design of differentially private solutions to clustering problems like $k$-median and $k$-means under such a privacy model Ghazi et al. (2020); Gupta et al. (2010); Balcan et al. (2017); Stemmer and Kaplan (2018). These methods guarantee privacy of the data points and output a set $S$ of centers (which are public) such that its cost, denoted by $\mathrm{cost}(S)$, is within multiplicative and additive factors $w$ and $t$ of the optimal cost $OPT$, i.e., $\mathrm{cost}(S) \le w \cdot OPT + t$.

While there has been significant progress in various domains of differential privacy, the intersection of explainability and differential privacy remains largely unexplored. In clustering problems, a potential private contrastive explanation for agent $i$ is the difference $\mathrm{cost}(S_i^{\epsilon}) - \mathrm{cost}(S^{\epsilon})$, where $S^{\epsilon}$ is a set of centers generated by a private algorithm (with privacy budget $\epsilon$), and $\mathrm{cost}(S_i^{\epsilon})$ is the cost of the clustering solution with one center fixed at agent $i$’s location. However, naively giving such a private contrastive explanation to each agent by rerunning a $(w,t)$-approximate private clustering would split the privacy budget across all agents and lead to a considerably weaker approximation guarantee, even if we use advanced composition techniques for privacy. The central question of our research is: can we offer each user a private contrastive explanation with a limited overall privacy budget?

Our contributions.

1. We introduce the PrivEC problem, designed to formalize private contrastive explanations to all agents in clustering using $k$-median and $k$-means objectives.

2. We present an $\epsilon$-DP mechanism, PrivateExplanations, which provides a contrastive explanation to each agent while ensuring the same utility bounds as private clustering in Euclidean spaces Ghazi et al. (2020).

We use the private coreset technique of Ghazi et al. (2020), which is an intermediate private data structure that preserves similar clustering costs as the original data.

The main technical challenge is to derive rigorous bounds on the approximation factors for all the contrastive explanations.

3. We evaluate our methods empirically on realistic datasets used in the problems of vaccine center deployment in Virginia Mehrab et al. (2022).

Our results show that the privacy-utility trade-offs are similar to those in private clustering, and the errors are quite low even for reasonable privacy budgets, demonstrating the effectiveness of our methods.

Our research stands out by seamlessly integrating differential privacy into contrastive explanations, maintaining the quality of explanations even under privacy constraints. This work bridges the gap between privacy and explainability, marking a significant advancement in privacy-aware machine learning. Due to space limitations, we present many technical details and experimental evaluations in the Appendix.

2 Related Work

Our work considers differential privacy for explainable AI in general (XAI) and Multi-agent explanations (XMASE) in particular, focusing on post-hoc contrastive explanations for clustering. We summarize some of the work directly related to our paper; additional discussion is presented in the Appendix, due to space limitations.

Extensive experiments presented in Saifullah et al. (2022) demonstrate non-negligible changes in explanations of black-box ML models through the introduction of privacy.

Nguyen et al. (2023) consider feature-based explanations (e.g., SHAP) that can expose the most important features that a black-box model focuses on. To prevent such exposure, they introduce the concept of achieving local differential privacy (LDP) in the explanations and, based on it, establish a defense called XRAND against such attacks. They show that their mechanism restricts the information that the adversary can learn about the most important features while maintaining the faithfulness of the explanations.

Goethals et al. (2022) study the security of contrastive explanations, and introduce the concept of the “explanation linkage attack”, a potential vulnerability that arises when employing strategies to derive contrastive explanations. To address this concern, they put forth the notion of k-anonymous contrastive explanations. As the degree of privacy constraints increases, a discernible trade-off comes into play: the quality of explanations and, consequently, transparency are compromised.

Closer to our application is the work of Georgara et al. (2022), which investigates the privacy aspects of contrastive explanations in the context of team formation. They present a comprehensive framework that integrates team formation solutions with their corresponding explanations, while also addressing potential privacy concerns associated with these explanations. Additional evaluations are needed to determine the privacy of such heuristic-based methods.

There has been a lot of work on private clustering and facility location, starting with Gupta et al. (2010), followed by work on other clustering problems in different privacy models, e.g., Huang and Liu (2018); Stemmer (2020); Stemmer and Kaplan (2018); Nissim and Stemmer (2018); Feldman et al. (2017). Gupta et al. (2010) demonstrated that the additive error bound for points in a metric space involves a term proportional to $\Delta$, where $\Delta$ is the space’s diameter. Consequently, all subsequent work, including ours, assumes points are restricted to a unit ball.

We note that our problem has not been considered in any prior work in the XAI or differential privacy literature. The formulation we study here will likely be useful for other problems requiring private contrastive explanations.

3 Preliminaries

Let $X$ denote a dataset consisting of $d$-dimensional points (referred to sometimes as agents). We consider the notion of $(k,p)$-clustering, as defined by Ghazi et al. (2020).

Definition 1.

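($(k,p)$-Clustering Ghazi et al. (2020)). Given $k \in \mathbb{N}$, and a multiset $X = \{x_1, \ldots, x_n\}$ of points in the unit ball, a $(k,p)$-clustering is a set of $k$ centers $\{c_1, \ldots, c_k\}$ minimizing $\mathrm{cost}^p_X(c_1, \ldots, c_k) = \sum_{i \in [n]} \min_{j \in [k]} \|x_i - c_j\|^p$.

For $p = 1$ and $p = 2$, this corresponds to the $k$-median and $k$-means objectives, respectively. We drop the subscript $X$ and superscript $p$ when they are clear from the context, and refer to the cost of a feasible clustering solution $S$ by $\mathrm{cost}(S)$.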
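As a small illustration of this objective, the sketch below evaluates the clustering cost of a given set of centers for $p = 1$ and $p = 2$. The function name `clustering_cost` and the toy points are assumptions made for this example; they do not come from the paper.

```python
import math

def clustering_cost(points, centers, p=2):
    """Sum over all points of (Euclidean distance to the nearest center) ** p."""
    return sum(min(math.dist(x, c) for c in centers) ** p for x in points)

# Toy instance inside the unit ball (hypothetical data).
points = [(0.0, 0.0), (0.0, 0.6), (0.8, 0.0)]
centers = [(0.0, 0.0), (0.8, 0.0)]

k_median_cost = clustering_cost(points, centers, p=1)  # p = 1: k-median objective
k_means_cost = clustering_cost(points, centers, p=2)   # p = 2: k-means objective
```

Only the point $(0, 0.6)$ is not itself a center here, so it alone contributes to the cost: its distance to the nearest center under $p = 1$ and the square of that distance under $p = 2$.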

Definition 2.

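($(w,t)$-approximation). Given $k \in \mathbb{N}$, and a multiset $X = \{x_1, \ldots, x_n\}$ of points in the unit ball, let $OPT^{p,k}_X = \min_{c_1, \ldots, c_k \in \mathbb{R}^d} \mathrm{cost}^p_X(c_1, \ldots, c_k)$ denote the cost of an optimal $(k,p)$-clustering. We say $c_1, \ldots, c_k$ is a $(w,t)$-approximation to a $(k,p)$-optimal clustering if $\mathrm{cost}^p_X(c_1, \ldots, c_k) \le w \cdot OPT^{p,k}_X + t$.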

A coreset (of some original set) is a set of points such that, for any $k$ centers, the cost of clustering the original set is “roughly” the same as that of the coreset Ghazi et al. (2020).

Definition 3.

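For $\gamma, t > 0$, a set $X'$ is a $(p, k, \gamma, t)$-coreset of $X$ if for every $C = \{c_1, \ldots, c_k\}$, we have $(1 - \gamma) \cdot \mathrm{cost}^p_X(C) - t \le \mathrm{cost}^p_{X'}(C) \le (1 + \gamma) \cdot \mathrm{cost}^p_X(C) + t$.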

Privacy model. We use the notion of differential privacy (DP), introduced in Dwork and Roth (2014), which is a widely accepted formalization of privacy. A mechanism is DP if its output doesn’t differ too much on “neighboring” datasets; this is formalized below.

Definition 4.

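A mechanism $M$ is $(\epsilon, \delta)$-differentially private if for any neighboring datasets $X$ and $X'$, and any $S \subseteq \mathrm{Range}(M)$,

$$\Pr[M(X) \in S] \le e^{\epsilon} \Pr[M(X') \in S] + \delta.$$

If $\delta = 0$, we say $M$ is $\epsilon$-differentially private.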
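To make the definition concrete, the sketch below checks the $\epsilon$-DP inequality for randomized response on a single private bit, a standard textbook mechanism that is not part of this paper's algorithms. The two neighboring datasets are the two possible values of the bit; the function names are assumptions made for this example.

```python
import math

def randomized_response_probs(bit, eps):
    """Output distribution (P[output = 0], P[output = 1]) for one private bit.

    The true bit is reported with probability e^eps / (1 + e^eps).
    """
    keep = math.exp(eps) / (1.0 + math.exp(eps))
    return (keep, 1.0 - keep) if bit == 0 else (1.0 - keep, keep)

def max_privacy_ratio(eps):
    """Largest ratio Pr[M(X) = o] / Pr[M(X') = o] over outputs o and both orderings."""
    p0 = randomized_response_probs(0, eps)
    p1 = randomized_response_probs(1, eps)
    return max(max(a / b, b / a) for a, b in zip(p0, p1))

eps = 0.5
ratio = max_privacy_ratio(eps)  # equals e^eps up to rounding, so the bound is tight
```

The ratio matches $e^{\epsilon}$ with $\delta = 0$, which is exactly the condition of the definition above for this mechanism.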

We operate under the assumption that the data points in $X$ represent the private information of users or agents. We say $X, X'$ are neighboring (denoted by $X \sim X'$) if they differ in one data point. When a value is disclosed to an individual agent $i$, it is imperative to treat the remaining agents in $X \setminus \{i\}$ as private entities. To address this necessity, we adopt the following privacy model:

Definition 5.

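A mechanism $M$ is $\epsilon$-$i$-exclusive DP if, for all pairs of datasets $X, X'$ that differ in a single data point other than that of agent $i$, and for all $S \subseteq \mathrm{Range}(M)$:

$$\Pr[M(X) \in S] \le e^{\epsilon} \Pr[M(X') \in S].$$

Note that if $M$ is $\epsilon$-DP, it is $\epsilon$-$i$-exclusive DP for any $i$.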

Relation to Joint-DP model. Kearns et al. (2014) define Joint-DP, a privacy model in which “for each agent $i$, knowledge of the other agents does not reveal much about agent $i$”. It contrasts with our privacy model, where each explanation to agent $i$ uses direct and private knowledge of agent $i$ (that private knowledge is returned only to agent $i$) and such knowledge does not reveal much about other agents.

In clustering scenarios such as $k$-means or $k$-median, it is beneficial for an individual data point to be positioned near a cluster center, as this reduces its distance. This concept has practical applications in fields such as urban planning and public health. For example, clustering algorithms like $k$-means and $k$-median have been used to optimize the placement of mobile vaccine centers, as noted in Mehrab et al. (2022). However, once the cluster centers are calculated, residents might wonder why a vaccine clinic has not been positioned nearer to their homes. Our objective is to provide explanations for each data point that is not near a cluster center. Following the approach suggested by Miller (2019), one way to provide an explanation is to re-run the clustering algorithm, this time setting the resident’s location as a fixed centroid. The resulting change in clustering cost serves as the explanation: an increase shows why placing a center at that location is less optimal.

The focus of this paper is twofold: residents not only need explanations but also wish to maintain their privacy, preferring not to disclose their precise locations/data publicly. Traditional private clustering methods struggle to offer these private explanations because they accumulate the privacy budget by continuously applying private clustering to answer numerous inquiries, ultimately resulting in explanations that are not private. Thus, we have developed a framework that delivers the necessary explanations to residents while protecting their confidentiality.

In essence, our framework is data-agnostic and capable of offering contrastive explanations for any private data within clustering algorithms, effectively balancing the need for privacy with the demand for transparency in decision-making.

Definition 6.

Private and Explainable Clustering problem (PrivEC)

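Given an instance $X$, clustering parameters $k, p$, and a subset of data points $V_s \subseteq X$, the goal is to output:

Private: An $\epsilon$-DP clustering solution $S^{\epsilon}$ (available to all).

Explainable: For each $i \in V_s$, output $\mathrm{cost}(S_i^{\epsilon}) - \mathrm{cost}(S^{\epsilon})$, which is $\epsilon$-$i$-exclusive DP.

$S_i^{\epsilon}$ is a private solution computed by the clustering algorithm while fixing one centroid to the position of agent $i$.

We assume that $S_i^{\epsilon}$ is not revealed to any agent, but $\mathrm{cost}(S_i^{\epsilon}) - \mathrm{cost}(S^{\epsilon})$ is released to agent $i$ as a contrastive explanation.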
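The quantity released to each agent can be illustrated without any privacy machinery. The sketch below is a non-private toy baseline, not the PrivEC mechanism: it restricts centers to the data points and uses exhaustive search, both simplifying assumptions made only for this example, and computes for every agent the cost increase caused by fixing one center at that agent's location.

```python
import math
from itertools import combinations

def cost(points, centers, p=1):
    """(k,p)-clustering cost of `centers` on `points`."""
    return sum(min(math.dist(x, c) for c in centers) ** p for x in points)

def best_cost(points, k, p=1, fixed=None):
    """Exhaustive search over centers chosen among the data points (toy sizes only).

    If `fixed` is given, it is always one of the k centers.
    """
    others = [x for x in points if x != fixed]
    free = k if fixed is None else k - 1
    forced = [] if fixed is None else [fixed]
    return min(cost(points, list(combo) + forced, p)
               for combo in combinations(others, free))

# Hypothetical agents on a small 2D instance.
points = [(0.0, 0.0), (0.1, 0.0), (0.9, 0.0), (1.0, 0.0), (0.5, 0.5)]
opt_cost = best_cost(points, k=2)

# Contrastive explanation for each agent: fixed-center cost minus optimal cost.
explanations = {x: best_cost(points, 2, fixed=x) - opt_cost for x in points}
```

An agent whose location already hosts an optimal center gets an explanation of zero, while an outlying agent such as `(0.5, 0.5)` gets a strictly positive value, which quantifies what the other agents would lose if a center were moved there.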

4 peCluster Mechanism

The PrivateClustering algorithm Ghazi et al. (2020) consists of dimension reduction, forming a private coreset, applying an approximation clustering algorithm on the coreset, and then reversing the dimension reduction, ultimately yielding an $\epsilon$-DP clustering solution.

The PrivateExplanations algorithm receives the original private clustering cost from PrivateClustering and reuses the PrivateClustering framework, but runs a fixed-centroid clustering algorithm on the coreset instead of a regular approximation clustering algorithm, followed by the dimension reversal. After computing the fixed-centroid clustering and determining its cost in the original dimension, we subtract the original private clustering cost obtained from the PrivateClustering algorithm, outputting $\mathrm{cost}(S_i^{\epsilon}) - \mathrm{cost}(S^{\epsilon})$.

To elaborate further, PrivateClustering first reduces the dataset’s dimensionality using DimReduction (Algorithm 1) to enable efficient differentially private coreset construction. It then uses a DP Exponential Mechanism to build a PrivateCoreset (Algorithm 7 of Ghazi et al. (2020)), which is clustered using any approximation algorithm for clustering, which need not be private. The low-dimensional clustering cost is scaled to obtain the original high-dimensional cost, and the centroids are recovered in the original space.

Multiplying the low-dimensional clustering cost by the corresponding scaling factor gives the clustering cost of the original high-dimensional instance Makarychev et al. (2019).

By running DimReverse using FindCenter (Algorithm 10 of Ghazi et al. (2020)), we can find the centroids in the original space.

The output is the private centroids, their cost, the private coreset, and the low-dimensional dataset.

We employ DimReduction because the running time of PrivateCoreset is exponential in the dimension of its input. Reducing the dimension first confines this exponential dependence to the reduced dimension $d'$, which brings the overall running time from exponential to polynomial in the input size.

The following summarizes Algo. 3 - PrivateClustering:

Input: Agents’ locations ($X$), original and reduced dimension ($d$, $d'$). Output: Private clustering: centers and cost.

The algorithm relies on the following building blocks:

DimReduction (Algorithm 1). Output: low-dimensional space dataset.

DimReverse. Output: private centroids in high dimension.

PrivateClustering (Algorithm 3). Output: $\epsilon$-differentially private centers with their cost, the private coreset, and the low-dimensional space dataset.

PrivateExplanations (Algorithm 4). Output: DP explanations for each agent $i$ (the private contrastive explanation).

The following summarizes Algorithm 4 - PrivateExplanations:

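Input: Private coreset, private clustering cost ($\mathrm{cost}(S^{\epsilon})$), agents looking for a contrastive explanation ($V_s$).

Output: Explanation for each agent $i$ in $V_s$ ($\mathrm{cost}(S_i^{\epsilon}) - \mathrm{cost}(S^{\epsilon})$).

1. Run the fixed-centroid clustering algorithm (NonPrivateApproxFC) on the private coreset, with one centroid fixed at the agent’s location.

2. Calculate clustering cost by multiplication - Algorithm 4, line 2.

3. Return utility decrease as an explanation - Algorithm 4, line 4.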
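The three steps above can be sketched as a short loop. This is a minimal sketch under stated assumptions, not the paper's Algorithm 4: the fixed-centroid solver is passed in as a callable, the coreset is modeled as a list of weighted points, and `scale` stands in for the factor that maps low-dimensional costs back to the original space. All names are hypothetical.

```python
def private_explanations(coreset, private_cost, agents, fixed_center_cost, scale=1.0):
    """Return {agent: scaled fixed-centroid cost on the coreset - private clustering cost}.

    coreset           : list of (point, weight) pairs (assumed already private)
    private_cost      : cost of the private clustering released to everyone
    fixed_center_cost : callable (coreset, agent_location) -> cost with one center fixed
    scale             : assumed factor mapping low-dimensional cost to the original space
    """
    explanations = {}
    for agent in agents:
        low_dim_cost = fixed_center_cost(coreset, agent)  # step 1: cluster with fixed centroid
        cost_fixed = scale * low_dim_cost                 # step 2: rescale the cost
        explanations[agent] = cost_fixed - private_cost   # step 3: utility decrease
    return explanations

def one_center_cost(coreset, location):
    """Toy solver for k = 1 in one dimension: the only center is the fixed one."""
    return sum(w * abs(x[0] - location[0]) for x, w in coreset)

coreset = [((0.0,), 2.0), ((1.0,), 1.0)]
agents = [(0.0,), (1.0,)]
result = private_explanations(coreset, private_cost=1.0, agents=agents,
                              fixed_center_cost=one_center_cost)
```

The loop touches only the coreset and the requesting agent's own location, which is the property the privacy argument relies on.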

After the completion of PrivateClustering, which yields private centroids, specific users within $V_s$ may request an explanation for the absence of a facility at their particular location. We want to provide each agent with the overall cost overhead that a facility constrained to their specific location would incur. To address these queries, we use our peCluster mechanism. This algorithm takes the previously computed coreset and runs NonPrivateApproxFC (a tailored non-private approximation algorithm that we design and describe in the following sections) to output the cost of $(k,p)$-clustering with a fixed centroid.

The output of our algorithm is the cost difference $\mathrm{cost}(S_i^{\epsilon}) - \mathrm{cost}(S^{\epsilon})$. It captures the contrast between the cost of the original private clustering and the cost of the clustering obtained when agent $i$ asks why no facility is placed at their location.

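The privacy analysis, stated in Theorem 1, establishes the privacy guarantees of PrivateClustering and peCluster. The private coreset is differentially private because it is the output of a DP algorithm. Consequently, both the low-dimensional centers computed from it and their cost remain differentially private by the post-processing property.

Applying FindCenter to find the centers in the original space keeps the overall output $\epsilon$-DP by the composition theorem. For each agent $i$, $\mathrm{cost}(S_i^{\epsilon})$ is produced by post-processing the private coreset together with only the data of agent $i$; hence $\mathrm{cost}(S_i^{\epsilon}) - \mathrm{cost}(S^{\epsilon})$ satisfies $\epsilon$-$i$-exclusive DP.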

Theorem 1.

A critical component of our algorithm involves a detailed utility analysis. In the following sections, we will present rigorous upper bounds and specific constraints for k-means and k-median.

Utility Analyses. PrivateCoreset is set up with an accuracy parameter (in addition to the privacy budget $\epsilon$), chosen in Line 1 of Algorithm 3. As a result, the cost of every clustering on the coreset differs from its cost on the low-dimensional dataset by at most a multiplicative factor plus an additive factor. Then, by applying the Dimension Reduction lemma (Appendix section B), which states that the cost of a specific clustering in the reduced $d'$-dimensional space is within a constant factor of the cost of the same clustering in the original $d$-dimensional space, we can bound $\mathrm{cost}(S_i^{\epsilon})$ in terms of the cost of the optimal clustering with a center fixed at agent $i$’s location.

Lemma 1.

(Full proof in Lemma 7) With probability at least , (clustering cost) is a -approximation of , where111We use the notation to explicitly ignore factors of :

| (1) | ||||

| (2) |

We note that is chosen carefully by composing (1) the factor of , which will scale to due to operator and cancel out the scaling factor occurs when mapping the cost of clustering from the lower dimensional space to the original space and (2) the factor of that guarantees each with probability at least (and for all simultaneously with probability ).

Theorem 2.

Lemma 2.

Running Time Analysis. Assume that polynomial-time algorithms exist for -clustering and for -clustering with a fixed center. Setting (that satisfies Dimension-Reduction-Lemma(Appendix section B)), algorithm PrivateCoreset takes time (Lemma 42 of Ghazi et al. (2020)), which is equivalent to . FindCenter takes time (Corollary 54 of Ghazi et al. (2020)) and is being called times in total. Finally, we run instance of -clustering and instances of -clustering with a fixed center. It follows that the total running time of our algorithm is . This means the computational complexity of our algorithm is polynomial in relation to the size of the input data.

Tight Approximation Ratios. Corollaries 1 and 2 conclude this section by stating the specific approximation ratios achieved after applying NonPrivateApproxFC for $k$-median and $k$-means, respectively.

4.1 NonPrivateApproxFC for $k$-median

As previously mentioned, we have developed a non-private fixed-centroid clustering algorithm, referred to as NonPrivateApproxFC.

We prove that our algorithm is an 8-approximation algorithm.

We obtain our results by adapting Charikar et al. (1999) to work with a fixed centroid (from here on, we refer to the fixed centroid as $C$).

To see how we adapt the algorithm, it is essential to recall the definitions used in Charikar et al. (1999). They introduce a demand $d_j$ for each location $j$ as a weight reflecting the location’s importance. $N = \{1, \ldots, n\}$ represents the list of agents.

For the conventional $k$-median problem, each $d_j$ is initially set to 1 for all $j \in N$. The term $c_{ij}$ represents the cost of assigning any $j$ to $i$, and $x_{ij}$ indicates whether location $j$ is assigned to center $i$.

We first assume that the fixed center is one of the input data points to formulate the -median problem with a fixed center as the solution of the integer program (in the Appendix).

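We can then relax the IP condition to get the following LP, where $y_i$ indicates whether location $i$ is opened as a center:

$$
\begin{aligned}
\text{minimize} \quad & \sum_{i, j \in N} d_j c_{ij} x_{ij} && (5)\\
\text{subject to} \quad & \sum_{i \in N} x_{ij} = 1 \quad \text{for each } j \in N && (6)\\
& x_{ij} \le y_i \quad \text{for each } i, j \in N && (7)\\
& \sum_{i \in N} y_i = k && (8)\\
& y_C = 1, \quad x_{ij} \ge 0, \; y_i \ge 0 \quad \text{for each } i, j \in N && (9)
\end{aligned}
$$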

Let $(\bar{x}, \bar{y})$ be a feasible solution of the LP relaxation and let $\bar{C}_j = \sum_{i \in N} c_{ij} \bar{x}_{ij}$ for each $j \in N$ be the total (fractional) cost of client $j$.

The first step. We group nearby locations by their demands without increasing the cost of a feasible solution $(\bar{x}, \bar{y})$, such that locations with positive demands are relatively far from each other. By re-indexing, we get $\bar{C}_1 \le \bar{C}_2 \le \cdots \le \bar{C}_n$.

We will show that it is always possible to position $C$ as the first element of the list, i.e., $\bar{C}_C$ is equal to the minimum value of all $\bar{C}_j$. Recall that $\bar{C}_C = 0$, since we know that $\bar{x}_{CC} = 1$ and $c_{CC} = 0$.

The remaining work of the first step follows Charikar et al. (1999). We first set the modified demands $d'_j \leftarrow d_j$. For each $j \in N$, we move all demand of location $j$ to a location $i < j$ such that $d'_i > 0$ and $c_{ij} \le 4\bar{C}_j$, i.e., we transfer all of $j$’s demand to a nearby location with existing positive demand. The demand shift occurs as follows: $d'_i \leftarrow d'_i + d'_j$, $d'_j \leftarrow 0$. Since we initialize $d'_C = d_C = 1$ and never move its demand away, it follows that $d'_C > 0$.

Let $N'$ be the set of locations with positive demands, $N' = \{j \in N : d'_j > 0\}$. A feasible solution to the original demands is also a feasible solution to the modified demands. By Lemma 11, for any pair $i, j \in N'$: $c_{ij} > 4 \max(\bar{C}_i, \bar{C}_j)$.
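The demand-consolidation step can be sketched in a few lines. This follows the rule as described in Charikar et al. (1999), with the fixed center placed first in the processing order; the function name, the argument layout, and the toy numbers are assumptions made for this example.

```python
def consolidate_demands(frac_cost, dist, demands, fixed):
    """Move each location's demand to an earlier, nearby location with positive demand.

    frac_cost[j] : fractional LP cost of location j (Cbar_j)
    dist[i][j]   : metric distance c_ij
    demands[j]   : original demand d_j
    fixed        : index of the fixed center, processed first and never emptied
    """
    # Process locations by increasing fractional cost, with the fixed center first.
    order = sorted(range(len(demands)), key=lambda j: (j != fixed, frac_cost[j]))
    new = list(demands)
    for pos, j in enumerate(order):
        if j == fixed:
            continue
        for i in order[:pos]:
            if new[i] > 0 and dist[i][j] <= 4 * frac_cost[j]:
                new[i] += new[j]  # d'_i <- d'_i + d'_j
                new[j] = 0        # d'_j <- 0
                break
    return new

# Hypothetical 1D instance: locations 0.0, 0.1, 1.0 with unit demands.
locs = [0.0, 0.1, 1.0]
dist = [[abs(a - b) for b in locs] for a in locs]
frac_cost = [0.0, 0.05, 0.1]
modified = consolidate_demands(frac_cost, dist, [1, 1, 1], fixed=0)
```

In this toy instance the demand of the second location moves to the fixed center, and the surviving locations with positive demand are farther apart than four times their fractional costs, as the separation property requires.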

Lemma 3.

The cost of the fractional solution $(\bar{x}, \bar{y})$ for the input with modified demands is at most its cost for the original input.

Proof.

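The costs of the LP solution under the original and the modified demands are $\bar{C}_{LP} = \sum_{j \in N} d_j \bar{C}_j$ and $\bar{C}'_{LP} = \sum_{j \in N} d'_j \bar{C}_j$, respectively. Since we move the demand from $j$ to a location $i$ with lower cost $\bar{C}_i \le \bar{C}_j$, the contribution of each moved demand to $\bar{C}'_{LP}$ is at most its contribution to $\bar{C}_{LP}$; it follows that $\bar{C}'_{LP} \le \bar{C}_{LP}$. ∎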

The second step. We analyze the problem with modified demands $d'$. We group fractional centers from the solution $(\bar{x}, \bar{y})$ to create a new solution $(x', y')$ with cost at most $2\bar{C}_{LP}$ such that $y'_j = 0$ for each $j \notin N'$ and $y'_j \ge 1/2$ for each $j \in N'$. We also ensure that $C$ keeps its center mass in this step, i.e., $C$ remains a (fractional) center after this.

The next lemma states that for each $j \in N'$, at least $1/2$ of the total fractional centers lie within distance $2\bar{C}_j$ of $j$.

Lemma 4.

For any $\frac{1}{2}$-restricted solution $(x', y')$ there exists a $\{\frac{1}{2}, 1\}$-integral solution with no greater cost.

Proof.

The cost of the $\frac{1}{2}$-restricted solution (by Lemma 7 of Charikar et al. (1999)) is:

$$\sum_{j \in N'} d'_j c_{s(j)j} - \sum_{j \in N'} d'_j c_{s(j)j} y'_j \qquad (10)$$

Let $s(j)$ be $j$’s closest neighbor location in $N'$. The first term above is independent of $y'$, and the minimum value of $y'_j$ is $1/2$. We now construct a $\{\frac{1}{2}, 1\}$-integral solution $(\hat{x}, \hat{y})$ with no greater cost. Sort the locations $j \in N'$ in decreasing order of the weight $d'_j c_{s(j)j}$ and put $C$ first in the sequence; set $\hat{y}_j = 1$ for the first $2k - |N'|$ locations and $\hat{y}_j = 1/2$ for the rest. By doing so, we minimize the cost by assigning the heaviest weights to the maximum multiplier (i.e., $1$) while assigning the lightest weights to the minimum multiplier (i.e., $1/2$) for each $j \in N'$. Any feasible $\frac{1}{2}$-restricted solution must have $y'_C = 1$ to satisfy the fixed-center constraint, so the contribution of $C$ is the same in $(x', y')$ as in $(\hat{x}, \hat{y})$. It follows that the cost of $(\hat{x}, \hat{y})$ is no more than the cost of $(x', y')$. ∎

The third step. This step is similar to the part of Step 3 of Charikar et al. (1999) that converts a $\{\frac{1}{2}, 1\}$-integral solution to an integral solution while increasing the cost by at most a factor of $2$. We note that there are two types of centers, those with $\hat{y}_j = 1/2$ and those with $\hat{y}_j = 1$, hence there are two different processes. All centers with $\hat{y}_j = 1$ are kept, while more than half of the centers with $\hat{y}_j = 1/2$ are removed. Since we showed that $\hat{y}_C = 1$ in the previous step, $C$ is always chosen by this step, which guarantees the fixed-center constraint $y_C = 1$.

Theorem 3.

For the metric $k$-median problem with a fixed center, the algorithm above outputs an 8-approximation solution.

Proof.

The optimal value of the LP relaxation is a lower bound on the optimal value of the integer program. While constructing an integer solution for the LP relaxation with the modified demands, Lemma 13 incurs a cost of $2\bar{C}_{LP}$ and the third step multiplies the cost by a factor of $2$, making the cost of the solution (to the LP) at most $4\bar{C}_{LP}$. Transforming the integer solution of the modified demands to a solution of the original input adds an additive cost of $4\bar{C}_{LP}$ by Lemma 12, for a total of at most $8\bar{C}_{LP}$, and the theorem follows. ∎

Corollary 1.

Running peCluster with NonPrivateApproxFC be the above K-median algorithm, with probability at least , is a -approximation of –the optimal K-median with a center fixed at position , in which:

4.2 NonPrivateApproxFC for $k$-means

In this section, we describe a constant-factor approximation algorithm for the $k$-means problem with a fixed center. We adapt the work by Kanungo et al. (2002) by adding a fixed center constraint to the single-swap heuristic algorithm. Similar to Kanungo et al. (2002), we need to assume that we are given a discrete set of candidate centers from which we choose $k$ centers. Optimality is defined over the space of all feasible solutions drawn from the candidate set, i.e., over all subsets of size $k$ of the candidate set. We then present how to remove this assumption, at the cost of a small constant additive factor, in the Appendix.

Definition 7.

Let be the optimal clustering with be the cluster with the fixed center . A set is a -approximate candidate center set if there exists , such that:

| (11) |

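Given $u, v \in \mathbb{R}^d$, let $\Delta(u, v)$ denote the squared Euclidean distance between $u$ and $v$. Let $S \subset \mathbb{R}^d$, $\Delta(S, v) = \sum_{u \in S} \Delta(u, v)$. Let $P \subset \mathbb{R}^d$, $\Delta_P(S) = \sum_{q \in P} \Delta(q, s_q)$, where $s_q$ is the closest point of $S$ to $q$. We drop $P$ when the context is clear.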

Let be the fixed center that must be in the output. Let be the set of candidate centers, that . We define stability in the context of -means with a fixed center as follows. We note that it differs from the definition of Kanungo et al. (2002) such that we never swap out the fixed center :

Definition 8.

A set of k centers that contains the fixed center is called -stable if: for all , .

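Algorithm. We initialize $S^{(0)}$ as a set of $k$ centers from the candidate set that contains the fixed center $C$. For each set $S^{(i)}$, we perform the swapping iteration:

• Select one center $s \in S^{(i)} \setminus \{C\}$.

• Select one replacement center $s'$ from the candidate set, with $s' \notin S^{(i)}$.

• Let $S' = S^{(i)} \setminus \{s\} \cup \{s'\}$.

• If $S'$ reduces the distortion, $S^{(i+1)} = S'$. Else, $S^{(i+1)} = S^{(i)}$.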

We repeat the swapping iteration until , i.e., after iterations, is a -stable. Theorem 4 states the utility of an arbitrary -stable set, which is also the utility of our algorithm since it always outputs an -stable set. We note that if is created with some errors to the actual optimal centroids, the utility bound of our algorithm is increased by the factor , i.e., ours is a -approximation to the actual optimal centroids.
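The swapping iteration can be sketched as a plain local search in which the fixed center is never swapped out. This is a minimal sketch, assuming the candidate set is given as a list of points and scanning swaps in list order; the function names and the toy instance are hypothetical, and no attempt is made to match the running time of the actual algorithm.

```python
import math

def distortion(points, centers):
    """k-means distortion: sum of squared distances to the nearest center."""
    return sum(min(math.dist(x, c) ** 2 for c in centers) for x in points)

def single_swap_fixed(points, candidates, k, fixed):
    """Single-swap local search that always keeps `fixed` among the k centers."""
    others = [c for c in candidates if c != fixed]
    centers = [fixed] + others[:k - 1]  # arbitrary initial set containing the fixed center
    improved = True
    while improved:
        improved = False
        for s in centers:
            if s == fixed:  # the fixed center is never swapped out
                continue
            for o in candidates:
                if o in centers:
                    continue
                trial = [o if c == s else c for c in centers]
                if distortion(points, trial) < distortion(points, centers):
                    centers, improved = trial, True
                    break
            if improved:
                break
    return centers

# Hypothetical instance: candidates are the data points themselves.
points = [(0.0, 0.0), (0.1, 0.0), (1.0, 0.0), (1.1, 0.0), (2.0, 0.0)]
fixed = (2.0, 0.0)
centers = single_swap_fixed(points, points, k=2, fixed=fixed)
```

The search stops when no single swap of a non-fixed center lowers the distortion, which is the stability condition used in the analysis. Termination is guaranteed because the distortion strictly decreases with every accepted swap and there are finitely many center sets.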

Theorem 4.

If is an -stable -element set of centers, then . Furthermore, if is a -approximate candidate center set, then is a -approximate of the actual optimal centroids in the Euclidean space.

Kanungo et al. (2002)’s analysis depends on the fact that all centers in the optimal solution are centroidal, hence they can apply centroidal-Lemma in the Appendix. Our analysis must take into account that is a fixed center, which is not necessarily centroidal. Therefore, we need to adapt and give special treatment for as an element of the optimal solution. Also, in the swapping iteration above, we never swap out , since the desired output must always contain , which also alters the swap pairs mapping as in Kanungo et al. (2002).

We adapt the mapping scheme of Kanungo et al. (2002), described in the Appendix with one modification: we set up a fixed capturing pair between and . It makes sense since , as the closest center in to is itself. With it, we always generate a swap partition of and that both contain . The reason we must have this swap partition (even though we never actually perform this swap on ) is that we will sum up the cost occurred by every center in and by pair-by-pair from which the total costs and are formed (in Lemma 5). For this partition, there are two cases:

-

•

, which means the partitions contain only , and hence when they are swapped, nothing changes from to and hence do not violate the constraint we set above about not swapping out .

-

•

, which means the partitions contain some that . By the design of the swap pairs mapping, all will be chosen to be swapped out instead, and will never be swapped out.

For each swap pair of and , we analyze the change in the distortion. For the insertion of , all points in are assigned to , leads to the change of distortion to be: .

For all points in that lost , they are assigned to new centers, which changes the distortion to be:

and by the property of swap pairs, , since ; the inequality is because for all , .

Lemma 5.

(Full proof in Lemma 15) Let be -stable set and be the optimal set of centers, we have , where .

Lemma 6.

(Full proof in Lemma 17) With and defined as above: .

Using the same argument with Kanungo et al. (2002), we have or or which means , and the Theorem follows.

In Section D.2, we describe how to create a -approximation candidate center set for any constant . Using it to create as a -approximation candidate center set and perform the above algorithm on , the final output will be a -approximation.

Corollary 2.

Running peCluster with NonPrivateApproxFC be the above -means algorithm, with probability at least , is a -approximation of –the optimal -means with a center fixed at position , in which:

5 Experiments

In this part of our study, we focus on understanding the impact of the privacy budget $\epsilon$ on the trade-off between privacy and accuracy. Additionally, we explore how $\epsilon$ influences the quality of differentially private explanations.

To assess the impact of $\epsilon$ on privacy and accuracy, we employ a set of metrics: 1) PO (Private Optimal) to evaluate the clustering cost using the differentially private algorithm, 2) PC (Private Contrastive) to determine the cost in fixed centroid differentially private clustering, 3) RO (Regular Optimal) to measure clustering cost in regular, non-private data, and 4) RC (Regular Contrastive) to analyze the cost of fixed centroid clustering in non-private data.

Measurements. To measure the quality of explanations, we define two key metrics: 1) APE (Average Private Explanation), which averages the difference between PC and PO and represents the decrease in clustering utility given to agents as an explanation, and 2) AE (Average Explanation), which averages the difference between RC and RO and serves as a baseline to assess the extent to which differential privacy affects the explanations. These metrics let us quantify the explanatory power of our approach.
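As a worked example of these two metrics, the sketch below averages the per-agent cost differences. The cost values are made-up numbers chosen only to show the arithmetic, and the helper name `average_explanation` is an assumption for this example.

```python
def average_explanation(contrastive_costs, optimal_cost):
    """Average over agents of (fixed-centroid cost - optimal clustering cost)."""
    return sum(c - optimal_cost for c in contrastive_costs) / len(contrastive_costs)

# Hypothetical costs for three agents (not experimental results).
pc = [12.0, 13.5, 11.5]  # Private Contrastive cost per agent
po = 10.0                # Private Optimal cost
rc = [10.5, 12.0, 10.5]  # Regular Contrastive cost per agent
ro = 9.0                 # Regular Optimal cost

ape = average_explanation(pc, po)  # Average Private Explanation
ae = average_explanation(rc, ro)   # Average Explanation (non-private baseline)
gap = abs(ape - ae)                # how far privacy shifts the average explanation
```

Comparing `ape` with `ae` across values of $\epsilon$ is one simple way to read the privacy-utility trade-off for explanations.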

We study the trade-offs between privacy and utility for the above metrics. The value of $\epsilon$ balances privacy and utility: lower values prioritize privacy but may compromise utility.

Datasets Our study focuses on activity-based population datasets from Charlottesville City and Albemarle County, Virginia, previously used for mobile vaccine clinic deployment Mehrab et al. (2022). The Charlottesville dataset includes 33,000 individuals, and Albemarle County has about 74,000. Due to our algorithm’s intensive computational demands, we sampled 100 points for contrastive explanations.

The computational intensity of our algorithm stems primarily from the k-median algorithm we employ, which formulates the problem as a linear program. For large inputs, the running time of this k-median algorithm increases significantly. The implementation we use is one of the more straightforward ways to solve k-median with a fixed centroid, and faster algorithms could be substituted to reduce this cost. In this study we did not optimize computation time and instead sampled the data points. We chose 100 points as a balance between computational efficiency and the readability of the results. The number of sampled points does not substantially affect the outcome of our analysis.

Furthermore, our method is data agnostic and does not rely on the s-sparse feature for sample points. It is sufficiently adaptable to handle sample points from any distribution. This flexibility ensures that our approach is robust and versatile, capable of effectively managing diverse datasets regardless of their sparsity or the specific characteristics of their distribution.

Dimensionality It is important to note that our experiments were conducted on a 2D dataset. This choice was driven by the fact that the initial step of our method involves reducing dimensionality, as outlined in DimReduction (Algorithm 1). This process entails scaling down and normalizing the data to produce a lower-dimensional dataset. By implementing this step at the outset, we avoid the challenges associated with managing high-dimensional datasets, which simplifies the data preparation phase.

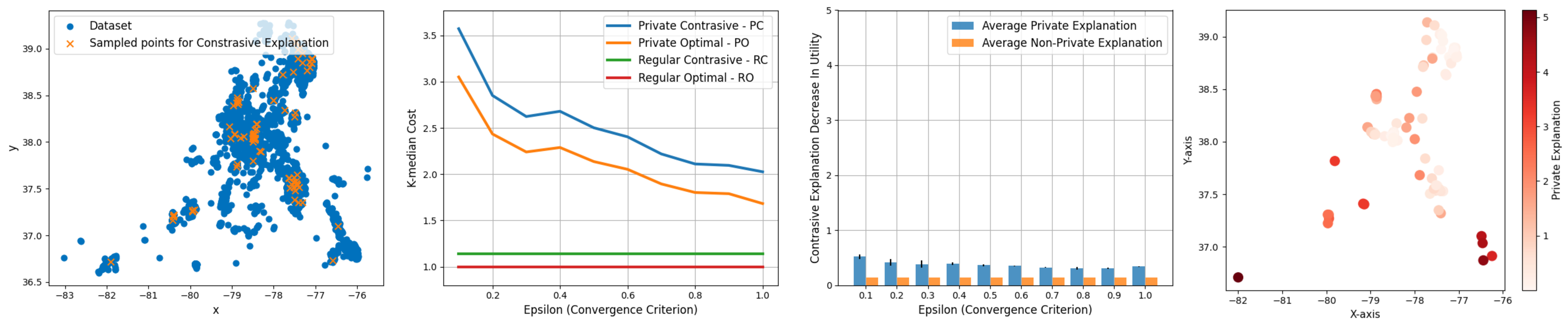

Experimental results. In this section, we focus solely on the Albemarle County dataset; additional analyses and datasets are presented in the Appendix. Figure 1(a) displays the dataset, with the original data points shown as blue dots and the sampled points used for contrastive explanations as orange 'X' markers. Figure 1(b) shows the k-median clustering costs in both the private and non-private settings. In the private setting, both the Private Optimal (PO) and Private Contrastive (PC) costs trend downward: as the privacy budget ε increases, the clustering cost decreases, which reflects the trade-off between privacy and accuracy. The non-private costs appear as flat lines because they do not depend on the privacy budget; they are included for comparison.

Our analysis shows a consistent gap between the Private Optimal (PO) and Private Contrastive (PC) costs across different levels of ε, marking a stable contrastive error that does not fluctuate with changes in the privacy budget. This stability is pivotal because it supports our goal of providing dependable explanations to agents regardless of the ε value, so that our approach balances privacy considerations with the demand for meaningful explainability.

The distinction between PO and PC arises because PO is derived from implementing the optimal k-median/k-means algorithm, while PC results from enforcing a centroid’s placement at a specific agent’s location, inherently producing a less optimal outcome due to this constraint. Initially, agents are presented with the optimal outcome (PO). When an agent inquires why a centroid is not positioned at their specific location, we offer a contrastive explanation (PC), which is naturally less optimal than the original result as it evaluates the utility change from hypothetically placing a centroid at that specific location. In this scenario, a higher score signifies lower utility, emphasizing the balance between providing personalized explanations and adhering to privacy constraints.
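The relationship between the optimal cost and the fixed-centroid cost can be illustrated on a toy instance. The sketch below uses brute-force enumeration over centers drawn from the data points; it is non-private and is not the LP-based algorithm used in the paper. The function names and the five sample points are assumptions for illustration, and the agent is assumed to be one of the data points.

```python
from itertools import combinations
import math

def kmedian_cost(points, centers):
    """Sum over points of the Euclidean distance to the nearest center."""
    return sum(min(math.dist(p, c) for c in centers) for p in points)

def best_cost(points, k, fixed=None):
    """Brute-force discrete k-median with centers drawn from `points`.

    If `fixed` is given, that location is forced to be one of the k centers.
    """
    free = k if fixed is None else k - 1
    forced = [] if fixed is None else [fixed]
    return min(
        kmedian_cost(points, forced + list(chosen))
        for chosen in combinations(points, free)
    )

def contrastive_explanation(points, k, agent):
    """Cost increase caused by forcing a center at the agent's location."""
    return best_cost(points, k, fixed=agent) - best_cost(points, k)

points = [(0.0, 0.0), (0.0, 1.0), (5.0, 0.0), (5.0, 1.0), (2.5, 4.0)]
gap_outlier = contrastive_explanation(points, k=2, agent=(2.5, 4.0))
gap_central = contrastive_explanation(points, k=2, agent=(0.0, 1.0))
```

On this instance, forcing a center at the isolated point raises the cost, while forcing one at a point that already belongs to an optimal solution leaves the cost unchanged.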

Figure 1(c) displays the average private explanation (APE) and average explanation (AE) with variance across different explanations. The average values of APE across multiple runs are relatively stable, irrespective of the privacy budget, reinforcing the robustness of our methodology against the constraints imposed by differential privacy.

Lastly, the heatmap in Figure 1(d) provides insights into how the relative spatial positioning affects the contrastive explanation. It vividly shows that the greater the distance a fixed centroid is from the distribution center, the larger the contrastive error, further illustrating the intricate dynamics of our clustering approach.
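A worked special case makes this trend plausible. For non-private k-means with $k = 1$, write $\mu$ for the centroid of a dataset $X$ with $n$ points (these symbols are introduced here only for illustration). Fixing the single center at an agent location $p$ gives, by the centroid identity of Kanungo et al. [2002, Lemma 2.1]:

```latex
\begin{align*}
\sum_{x \in X} \lVert x - p \rVert^2
  &= \sum_{x \in X} \lVert x - \mu \rVert^2 + n \, \lVert p - \mu \rVert^2, \\
\underbrace{\sum_{x \in X} \lVert x - p \rVert^2}_{\text{fixed-center cost}}
  - \underbrace{\sum_{x \in X} \lVert x - \mu \rVert^2}_{\text{optimal cost}}
  &= n \, \lVert p - \mu \rVert^2 .
\end{align*}
```

In this special case the contrastive gap grows quadratically with the distance from $p$ to the data centroid, which is consistent with the qualitative pattern in the heatmap.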

6 Discussion

Our work explores the design of private explanations for clustering, particularly focusing on the k-median and k-means objectives for Euclidean datasets.

We formalize this as the PrivEC problem, which gives each agent a contrastive explanation equal to the change in clustering utility when a cluster centroid is fixed at that agent's location.

The accuracy bounds of our algorithm match private clustering methods, even as they offer explanations for every user, within a defined privacy budget.

The related work in this domain has seen the development of algorithms for contrastive explanations, but our contribution stands out by integrating differential privacy guarantees.

Our experiments demonstrate the resilience of our approach. Despite the added layer of providing differentially private explanations on top of differentially private clustering, the quality of our explanations remains uncompromised.

References

- Balcan et al. [2017] Maria-Florina Balcan, Travis Dick, Yingyu Liang, Wenlong Mou, and Hongyang Zhang. Differentially private clustering in high-dimensional euclidean spaces. In Doina Precup and Yee Whye Teh, editors, Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6-11 August 2017, volume 70 of Proceedings of Machine Learning Research, pages 322–331. PMLR, 2017. URL http://proceedings.mlr.press/v70/balcan17a.html.

- Barrett et al. [2009] Christopher L Barrett, Richard J Beckman, Maleq Khan, VS Anil Kumar, Madhav V Marathe, Paula E Stretz, Tridib Dutta, and Bryan Lewis. Generation and analysis of large synthetic social contact networks. In Proceedings of the 2009 Winter Simulation Conference (WSC), pages 1003–1014. IEEE, 2009.

- Bertsimas et al. [2022] Dimitris Bertsimas, Vassilis Digalakis Jr, Alexander Jacquillat, Michael Lingzhi Li, and Alessandro Previero. Where to locate covid-19 mass vaccination facilities? Naval Research Logistics (NRL), 69(2):179–200, 2022.

- Boggess et al. [2023] Kayla Boggess, Sarit Kraus, and Lu Feng. Explainable multi-agent reinforcement learning for temporal queries. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence (IJCAI), 2023.

- Charikar et al. [1999] Moses Charikar, Sudipto Guha, Éva Tardos, and David B Shmoys. A constant-factor approximation algorithm for the k-median problem. In Proceedings of the thirty-first annual ACM symposium on Theory of computing, pages 1–10, 1999.

- Chen et al. [2021] Jiangzhuo Chen, Stefan Hoops, Achla Marathe, Henning Mortveit, Bryan Lewis, Srinivasan Venkatramanan, Arash Haddadan, Parantapa Bhattacharya, Abhijin Adiga, Anil Vullikanti, Aravind Srinivasan, Mandy L Wilson, Gal Ehrlich, Maier Fenster, Stephen Eubank, Christopher Barrett, and Madhav Marathe. Prioritizing allocation of covid-19 vaccines based on social contacts increases vaccination effectiveness. medRxiv, 2021.

- Dasgupta and Gupta [2003] Sanjoy Dasgupta and Anupam Gupta. An elementary proof of a theorem of johnson and lindenstrauss. Random Structures & Algorithms, 22(1):60–65, 2003.

- Dwork and Roth [2014] Cynthia Dwork and Aaron Roth. The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci., 9(3–4):211–407, aug 2014. ISSN 1551-305X. doi: 10.1561/0400000042. URL https://doi.org/10.1561/0400000042.

- Feldman et al. [2017] Dan Feldman, Chongyuan Xiang, Ruihao Zhu, and Daniela Rus. Coresets for differentially private k-means clustering and applications to privacy in mobile sensor networks. In Proceedings of the 16th ACM/IEEE International Conference on Information Processing in Sensor Networks, pages 3–15, 2017.

- Finkelstein et al. [2022] Mira Finkelstein, Lucy Liu, Yoav Kolumbus, David C Parkes, Jeffrey S Rosenschein, Sarah Keren, et al. Explainable reinforcement learning via model transforms. Advances in Neural Information Processing Systems, 35:34039–34051, 2022.

- Georgara et al. [2022] Athina Georgara, Juan Antonio Rodríguez-Aguilar, and Carles Sierra. Privacy-aware explanations for team formation. In International Conference on Principles and Practice of Multi-Agent Systems, pages 543–552. Springer, 2022.

- Ghazi et al. [2020] Badih Ghazi, Ravi Kumar, and Pasin Manurangsi. Differentially private clustering: Tight approximation ratios. In Proceedings of the 34th International Conference on Neural Information Processing Systems, NIPS’20, Red Hook, NY, USA, 2020. Curran Associates Inc. ISBN 9781713829546.

- Goethals et al. [2022] Sofie Goethals, Kenneth Sörensen, and David Martens. The privacy issue of counterfactual explanations: explanation linkage attacks. arXiv preprint arXiv:2210.12051, 2022.

- Gupta et al. [2010] Anupam Gupta, Katrina Ligett, Frank McSherry, Aaron Roth, and Kunal Talwar. Differentially private combinatorial optimization. In Proceedings of the Twenty-First Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2010, Austin, Texas, USA, January 17-19, 2010, pages 1106–1125. SIAM, 2010. doi: 10.1137/1.9781611973075.90. URL https://doi.org/10.1137/1.9781611973075.90.

- Huang and Liu [2018] Zhiyi Huang and Jinyan Liu. Optimal differentially private algorithms for k-means clustering. In Proceedings of the 37th ACM SIGMOD-SIGACT-SIGAI Symposium on Principles of Database Systems, pages 395–408, 2018.

- Johnson and Lindenstrauss [1984] William B Johnson and Joram Lindenstrauss. Extensions of lipschitz mappings into a hilbert space. In Conference on Modern Analysis and Probability, volume 26, pages 189–206. American Mathematical Society, 1984.

- Kanungo et al. [2002] Tapas Kanungo, David M Mount, Nathan S Netanyahu, Christine D Piatko, Ruth Silverman, and Angela Y Wu. A local search approximation algorithm for k-means clustering. In Proceedings of the eighteenth annual symposium on Computational geometry, pages 10–18, 2002.

- Kearns et al. [2014] Michael Kearns, Mallesh Pai, Aaron Roth, and Jonathan Ullman. Mechanism design in large games: Incentives and privacy. In Proceedings of the 5th conference on Innovations in theoretical computer science, pages 403–410, 2014.

- Madumal et al. [2020] Prashan Madumal, Tim Miller, Liz Sonenberg, and Frank Vetere. Explainable reinforcement learning through a causal lens. In Proceedings of the AAAI conference on artificial intelligence, volume 34, pages 2493–2500, 2020.

- Makarychev et al. [2019] Konstantin Makarychev, Yury Makarychev, and Ilya Razenshteyn. Performance of johnson-lindenstrauss transform for k-means and k-medians clustering. In Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing, pages 1027–1038, 2019.

- Matoušek [2000] Jiří Matoušek. On approximate geometric k-clustering. Discrete & Computational Geometry, 24(1):61–84, 2000.

- Mehrab et al. [2022] Zakaria Mehrab, Mandy L Wilson, Serina Chang, Galen Harrison, Bryan Lewis, Alex Telionis, Justin Crow, Dennis Kim, Scott Spillmann, Kate Peters, et al. Data-driven real-time strategic placement of mobile vaccine distribution sites. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 36, pages 12573–12579, 2022.

- Miller [2019] Tim Miller. Explanation in artificial intelligence: Insights from the social sciences. Artificial intelligence, 267:1–38, 2019.

- Nguyen et al. [2023] Truc Nguyen, Phung Lai, Hai Phan, and My T Thai. Xrand: Differentially private defense against explanation-guided attacks. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 37, pages 11873–11881, 2023.

- Nissim and Stemmer [2018] Kobbi Nissim and Uri Stemmer. Clustering algorithms for the centralized and local models. In Algorithmic Learning Theory, pages 619–653. PMLR, 2018.

- Patel et al. [2022] Neel Patel, Reza Shokri, and Yair Zick. Model explanations with differential privacy. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, pages 1895–1904, 2022.

- Rader et al. [2022] Benjamin Rader, Christina M Astley, Kara Sewalk, Paul L Delamater, Kathryn Cordiano, Laura Wronski, Jessica Malaty Rivera, Kai Hallberg, Megan F Pera, Jonathan Cantor, et al. Spatial modeling of vaccine deserts as barriers to controlling sars-cov-2. Communications Medicine, 2(1):141, 2022.

- Reddy [2018] Chandan K Reddy. Data clustering: algorithms and applications. Chapman and Hall/CRC, 2018.

- Saifullah et al. [2022] Saifullah Saifullah, Dominique Mercier, Adriano Lucieri, Andreas Dengel, and Sheraz Ahmed. Privacy meets explainability: A comprehensive impact benchmark. arXiv preprint arXiv:2211.04110, 2022.

- Sreedharan et al. [2020] Sarath Sreedharan, Utkarsh Soni, Mudit Verma, Siddharth Srivastava, and Subbarao Kambhampati. Bridging the gap: Providing post-hoc symbolic explanations for sequential decision-making problems with inscrutable representations. arXiv preprint arXiv:2002.01080, 2020.

- Sreedharan et al. [2021] Sarath Sreedharan, Siddharth Srivastava, and Subbarao Kambhampati. Using state abstractions to compute personalized contrastive explanations for ai agent behavior. Artificial Intelligence, 301:103570, 2021.

- Stemmer [2020] Uri Stemmer. Locally private k-means clustering. In Shuchi Chawla, editor, Proceedings of the 2020 ACM-SIAM Symposium on Discrete Algorithms, SODA 2020, Salt Lake City, UT, USA, January 5-8, 2020, pages 548–559. SIAM, 2020. doi: 10.1137/1.9781611975994.33. URL https://doi.org/10.1137/1.9781611975994.33.

- Stemmer and Kaplan [2018] Uri Stemmer and Haim Kaplan. Differentially private k-means with constant multiplicative error. Advances in Neural Information Processing Systems, 31, 2018.

Appendix A Related work: additional details

Our work considers differential privacy for explainable AI (XAI) in general and multi-agent explanations (XMASE) in particular, focusing on post-hoc contrastive explanations for clustering.

Extensive experiments presented in Saifullah et al. [2022] demonstrate non-negligible changes in explanations of black-box ML models through the introduction of privacy. The findings in Patel et al. [2022] corroborate these observations regarding explanations for black-box feature-based models. These explanations involve creating local approximations of the model’s behavior around specific points of interest, potentially utilizing sensitive data. In order to safeguard the privacy of the data used during the local approximation process of an eXplainable Artificial Intelligence (XAI) module, the researchers have devised an innovative adaptive differentially private algorithm. This algorithm is designed to determine the minimum privacy budget required to generate accurate explanations effectively. The study undertakes a comprehensive evaluation, employing both empirical and analytical methods, to assess how the introduction of randomness inherent in differential privacy algorithms impacts the faithfulness of the model explanations.

Nguyen et al. [2023] consider feature-based explanations (e.g., SHAP) that can expose the most important features that a black-box model focuses on. To prevent such exposure, they introduced a new concept of achieving local differential privacy (LDP) in the explanations and, building on it, established a defense called XRAND against such attacks. They showed that their mechanism restricts the information that the adversary can learn about the most important features while maintaining the faithfulness of the explanations.

The analysis presented in Goethals et al. [2022] considers security concerning contrastive explanations. The authors introduced the concept of the "explanation linkage attack", a potential vulnerability that arises when employing instance-based strategies to derive contrastive explanations. To address this concern, they put forth the notion of k-anonymous contrastive explanations. Furthermore, the study highlights the intricate balance between transparency, fairness, and privacy when incorporating k-anonymous explanations. As the privacy constraints are tightened, a discernible trade-off comes into play: the quality of explanations and, consequently, transparency are compromised.

Amongst the three types of eXplainable AI mentioned earlier, the maintenance of privacy during explanation generation incurs a certain cost. This cost remains even if an expense was previously borne during the creation of the original model. However, in our proposed methodology for generating contrastive explanations in clustering scenarios, once the cost of upholding differential privacy in the initial solution is paid, no additional expenses are requisite to ensure differential privacy during the explanation generation phase.

Closer to our application is the study that investigates the privacy aspects of contrastive explanations in the context of team formation Georgara et al. [2022]. In this study, the authors present a framework that integrates team formation solutions with their corresponding explanations, while also addressing potential privacy concerns associated with these explanations. To accomplish this, the authors introduce a privacy breach detector (PBD) that evaluates whether the provision of an explanation might lead to privacy breaches. The PBD consists of two main components: (a) a belief updater (BU), which calculates the posterior beliefs that a user is likely to form after receiving the explanation, and (b) a privacy checker (PC), which examines whether the user's expected posterior beliefs surpass a specified belief threshold, indicating a potential privacy breach. However, the research is still in its preliminary stages and needs a detailed evaluation of the privacy breach detector.

Our contribution includes the development of algorithms for generating contrastive explanations with differential privacy guarantees. We demonstrate their effectiveness by providing rigorous proofs of their privacy guarantees and conducting extensive experiments on their accuracy and utility. In particular, we show the validity of our private explanations for clustering based on the k-median and k-means objectives for Euclidean datasets. Moreover, our algorithms have the same accuracy bounds as the best private clustering methods, even though they provide explanations for all users within a bounded privacy budget. Notably, our experiments show that the epsilon budget has minimal impact on the explainability of our results, further highlighting the robustness of our approach.

There has been extensive work on private clustering and facility location, starting with Gupta et al. [2010], followed by work on other clustering problems in different privacy models, e.g., Huang and Liu [2018], Stemmer [2020], Stemmer and Kaplan [2018], Nissim and Stemmer [2018], Feldman et al. [2017]. Gupta et al. [2010] demonstrated that the additive error bound for points in a metric space involves an term, where is the space's diameter. Consequently, all subsequent work, including ours, assumes points are restricted to a unit ball.

Appendix B Additional proofs for PECluster

Theorem 5.

Proof.

It follows that is a direct result (using no private information) of , which is a -differentially private coreset. By the post-processing property, is -DP (which implies -DP).

is the output of DimReverse that is -DP w.r.t. the input . By composition, is -DP since the input is calculated partially from , which is -DP.
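For reference, the two standard facts behind the post-processing and composition steps above can be stated as follows (see Dwork and Roth [2014]). The symbols $M$, $M_1$, $M_2$, $f$, and $X$ are generic placeholders introduced for this note and are not the paper's notation.

```latex
\begin{align*}
&\textbf{Post-processing.} \text{ If } M \text{ is } \epsilon\text{-DP and } f \text{ is any (possibly randomized) map that does not access the data,} \\
&\qquad \text{then } f \circ M \text{ is } \epsilon\text{-DP.} \\
&\textbf{Basic composition.} \text{ If } M_1 \text{ is } \epsilon_1\text{-DP and } M_2 \text{ is } \epsilon_2\text{-DP,} \\
&\qquad \text{then } X \mapsto \bigl(M_1(X), M_2(X)\bigr) \text{ is } (\epsilon_1 + \epsilon_2)\text{-DP.}
\end{align*}
```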

For each explanation , let , let be the value of with input dataset (and as the private coreset of respectively) and a fixed center , let , we have and therefore:

| (15) | ||||

| (16) | ||||

| (17) |

which implies that is --exclusive DP, since is -DP (which implies --exclusive DP). ∎

Lemma 7.

(Full version of Lemma 1) With probability at least , is a -approximation of , where:

| (18) | ||||

| (19) |

Proof.

Lemma 8.

Proof.

By the result of Lemma 9, with probability we have:

| (20) | ||||

| (21) | ||||

| (22) | ||||

| (23) |

Lemma 9.

(Johnson-Lindenstrauss (JL) Lemma Johnson and Lindenstrauss [1984], Dasgupta and Gupta [2003]) Let be any -dimensional vector. Let denote a random -dimensional subspace of and let denote the projection from to . Then, for any we have

| (24) |
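The norm-preservation behavior described by the JL Lemma can be observed numerically. The sketch below uses a Gaussian random matrix, a common variant of the JL transform, rather than the scaled projection onto a random subspace stated in the lemma; the dimensions, the seed, and all function names are assumptions for illustration.

```python
import math
import random

def gaussian_projection(d, d_prime, rng):
    """Random d_prime x d matrix with N(0, 1/d_prime) entries."""
    sigma = 1.0 / math.sqrt(d_prime)
    return [[rng.gauss(0.0, sigma) for _ in range(d)] for _ in range(d_prime)]

def project(matrix, v):
    """Apply the projection matrix to a vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in matrix]

def norm(v):
    """Euclidean norm of v."""
    return math.sqrt(sum(x * x for x in v))

rng = random.Random(0)
d, d_prime = 500, 100
v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
projected = project(gaussian_projection(d, d_prime, rng), v)
ratio = norm(projected) / norm(v)  # concentrates around 1 as d_prime grows
```

With this scaling the squared norm is preserved in expectation, and the deviation of `ratio` from 1 shrinks as `d_prime` increases.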

Lemma 10.

(Dimensionality Reduction for -Cluster Makarychev et al. [2019]) For every , there exists . Let be a random d-dimensional subspace of and denote the projection from to . With probability , the following holds for every partition of :

| (25) |

where denotes the partition .

Theorem 6.

(Full version of Theorem 2) Fix an . With probability at least , cost() released by Algorithm 4 is a -approximation of the optimal cost , in which:

and is the optimal -clustering cost with a center fixed at position .

Proof.

Let and , setting , applying Lemma 10, we have:

| (26) |

By standard concentration, it can be proved that with probability as follows:

Using Lemma 9, we have:

| (27) |

Since is in the unit ball, , which leads to:

| (28) |

Setting , , we have:

| (29) | ||||

| (30) | ||||

| (31) |

By union bound on all , then with probability at least , for all . Since is the output of PrivateCoreset with input , then by Theorem 38 of Ghazi et al. [2020], is a -coreset of (with probability at least ), with as:

| (32) |

Let be the solution of NonPrivateApproxFC in peCluster for a fixed , be the optimal solution of the clustering with fixed center at on , be the optimal cost of the clustering with fixed center at on . By the -approximation property of NonPrivateApproximationFC, we have:

| (33) | ||||

| (34) | ||||

| (35) | ||||

| (36) |

Composing with Lemma 10, we have:

| (37) | ||||

| (38) | ||||

| (39) | ||||

| (40) |

Finally, since

| (41) | ||||

| (42) | ||||

| (43) | ||||

| (44) | ||||

| (45) | ||||

| (46) |

where in we substitute the value of , and is because , and is because , and the Lemma follows. ∎

Set and the Lemma follows. ∎

Appendix C Additional proofs for k-median algorithm

Definition 9.

The solution of the k-median problem (with demands and a center fixed at a location ) can be formulated as finding the optimal solution of the following integer program (IP):

| minimize | (48) | |||

| (49) | ||||

| (50) | ||||

| (51) | ||||

| (52) | ||||

| (53) | ||||

| (54) | ||||

| (55) |
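For readers who want explicit symbols, one standard way to write a k-median integer program with demands and one forced center, in the style of Charikar et al. [1999], is sketched below. The notation is an assumption made for this sketch and may differ in detail from the numbered program above: $N$ is the set of locations, $d_j$ the demand at $j$, $c_{ij}$ the distance between $i$ and $j$, $y_i$ indicates that $i$ is opened as a center, $x_{ij}$ indicates that $j$ is assigned to $i$, and $C$ is the fixed location.

```latex
\begin{align*}
\text{minimize}\quad & \sum_{i, j \in N} d_j \, c_{ij} \, x_{ij} \\
\text{subject to}\quad
 & \sum_{i \in N} x_{ij} = 1 && \forall j \in N, \\
 & x_{ij} \le y_i && \forall i, j \in N, \\
 & \sum_{i \in N} y_i = k, \\
 & y_C = 1, \\
 & x_{ij} \in \{0, 1\} && \forall i, j \in N, \\
 & y_i \in \{0, 1\} && \forall i \in N.
\end{align*}
```

Relaxing the integrality constraints to $0 \le x_{ij}, y_i \le 1$ yields a linear program of the kind that LP-rounding approaches start from.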

Lemma 11.

Locations satisfy: .

Proof.

The lemma follows the demands moving step (in the first step of the algorithm): for every to the right of (which means ) and within the distance of (that also covers all points within distance ), we move all demands of to , hence will not appear in . ∎

Lemma 12.

(Lemma 4 of Charikar et al. [1999]) For any feasible integer solution for the input with modified demands, there is a feasible integer solution for the original input with cost at most plus the cost of with demands .

Lemma 13.

(Theorem 6 of Charikar et al. [1999]) There is a -restricted solution of cost at most .

Appendix D Additional proofs for k-means algorithm

Lemma 14.

(Lemma 2.1 of Kanungo et al. [2002]) Given a finite subset of points in , let be the centroid of . Then for any , .
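Lemma 2.1 of Kanungo et al. [2002] is the centroid identity: the sum of squared distances from a point set to any center equals the sum of squared distances to the centroid plus the set size times the squared distance between the center and the centroid. The following minimal sketch checks it numerically on a small example; the helper names and the sample points are assumptions for illustration.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def centroid(points):
    """Coordinate-wise mean of the points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))

def cost_to(points, center):
    """Sum of squared distances from all points to one center."""
    return sum(sq_dist(p, center) for p in points)

S = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
c = centroid(S)            # (1.0, 1.0)
c_prime = (4.0, 5.0)       # an arbitrary alternative center

lhs = cost_to(S, c_prime)                            # 108.0
rhs = cost_to(S, c) + len(S) * sq_dist(c_prime, c)   # 8.0 + 4 * 25.0
```

Both sides evaluate to 108.0 on this example.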

Lemma 15.

(Full version of Lemma 5) Let be -stable set and be the optimal set of centers, we have , where .

Proof.

Since is -stable, we have for each swap pair:

| (56) | ||||

| (57) |

We will sum up the inequality above for all swap pairs. For the left term, the sum is over all :

| (58) | ||||

| (59) |

Since each will appear exactly once, and will cover all points in .

For the right term, the sum is over all that is being swapped out. We note that each can be swapped out at most twice, hence:

| (60) | ||||

| (61) |

When we combine the two terms, we have:

| (62) | ||||

| (63) | ||||

| (64) |

and the Lemma follows. ∎

Lemma 16.

(Proof in Lemma 2.2 & 2.3 of Kanungo et al. [2002]) Let , we have

Lemma 17.

(Full version of Lemma 6) With and defined as above: .

D.1 Swap pairs mapping

In this section, we describe the swap pairs mapping scheme for the k-means with a fixed center algorithm. We adapt the scheme of Matoušek [2000] to accommodate the fixed center. We discuss the modifications in Section 4.2. Here we discuss the complete mapping scheme.

At the last iteration of the algorithm, we always have a candidate set of centers that is -stable, i.e., no single feasible swap can decrease its cost. We then analyze some hypothetical swapping schemes, in which we try to swap a center with an optimal center . We utilize the fact that such single swaps do not decrease the cost to create some relationships between and –the optimal cost. Particularly, these relationships are stated in Lemma 15 and Lemma 17.

Let be the fixed center. We note that and . Let be the closest center in for an optimal center , which means is captured by . It follows that . A center may capture no optimal center (we call it lonely). We partition both and into and that for all .

We construct each pair of partitions as follows: let be a non-lonely center, , i.e., is the set of all optimal centers that are captured by . Now, we compose with lonely centers (which are not partitioned into any group from ) to form . It is clear that .

We then generate swap pairs for each pair of partitions by the following cases:

- : let , generate a swap pair .

- : let in which are lonely centers, let , generate swap pairs for . Also, we generate a swap pair of . Please note that does not belong to any swap pair, each belongs to exactly one swap pair, and each belongs to at most two swap pairs.

We then guarantee the following properties of our swap pairs:

1. each is swapped in exactly once

2. each is swapped out at most twice

3. for each swap pair , either captures only , or is lonely (captures nothing).
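The two cases can be sketched in code for a single pair of partitions. This is an illustrative reading of the scheme in which the indexing of lonely and optimal centers is assumed; the function name and the string labels are hypothetical, and the special handling of the fixed center is not modeled.

```python
from collections import Counter

def swap_pairs_for_group(captor, lonely, optimal):
    """Swap pairs (center_out, optimal_in) for one pair of partitions.

    captor  -- the non-lonely center of the group
    lonely  -- the lonely centers added to the group
    optimal -- the optimal centers captured by `captor`
    The group sizes must match: 1 + len(lonely) == len(optimal).
    """
    if 1 + len(lonely) != len(optimal):
        raise ValueError("group sizes must match")
    if len(optimal) == 1:
        # Case 1: the captor captures exactly one optimal center.
        return [(captor, optimal[0])]
    # Case 2: pair each lonely center with one optimal center, then reuse
    # the first lonely center for the remaining optimal center.
    pairs = list(zip(lonely, optimal))
    pairs.append((lonely[0], optimal[-1]))
    return pairs

pairs = swap_pairs_for_group("s", ["s1", "s2"], ["o1", "o2", "o3"])
swapped_out = Counter(out for out, _ in pairs)
swapped_in = Counter(inn for _, inn in pairs)
```

In the second case the captor never appears in a swap pair, every optimal center is swapped in once, and no lonely center is swapped out more than twice.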

D.2 -approximate candidate center set for fixed-center -means.

We describe how to generate a -approximate candidate center set for -means with fixed center for a dataset . From the result of Matoušek [2000], we create a set which is a -approximation centroid set of . We will prove that forms a -approximate candidate center set for -means with fixed center .

Definition 10.

Let be a finite set with its centroid . A -tolerance ball of is the ball centered at and has radius of .

Definition 11.

Let be a finite set. A finite set is a -approximation centroid set of if intersects the -tolerance ball of each nonempty .

Lemma 18.

(Theorem 4.4 of Matoušek [2000]) We can compute –a -approximation centroid set of that has size of in time .

Theorem 7.

Let , in which is a -approximation centroid set computed as Lemma 18, then is a -approximate candidate center set for -means with fixed center .

Proof.

Let be the optimal clustering in which is the cluster whose center is (we denote it as ). For any , we define and in which is the centroid of . By Definition 7, we will prove that there exists a set and such that . We adapt the analysis of Matoušek [2000] for the special center –which is not a centroid as other centers in -means.

First, we analyze the optimal cost. For any cluster except , its center is also the centroid of the cluster, while must have center :

| (77) | ||||

| (78) |

Now, we construct as follows: setting , for , is the candidate center that intersects the -tolerance ball of cluster . For , . For other clusters, as below:

| (79) | ||||

| (80) | ||||

| (81) | ||||

| (82) | ||||

| (83) | ||||

| (84) | ||||

| (85) |

Let be the Voronoi partition with centers , i.e., are points in the Voronoi region of in the Voronoi diagram created by , we have:

| (86) | ||||

| (87) | ||||

| (88) | ||||

| (89) | ||||

| (90) |

where is because implies its minimal cost for any center, is because s are picked by Voronoi partition which minimizes the cost over partitions of selected centers, and is because as we proved above, and the Theorem follows. ∎

D.3 Additional details on experiments

D.3.1 Datasets and Experimental Setup

Beyond the Albemarle County dataset discussed in the main body of the paper, we also conducted experiments on other datasets, including the Charlottesville city dataset and synthetic activity-based populations. Here, we provide a detailed analysis of these experiments.

Charlottesville City Dataset:

Synthetic 2D Dataset:

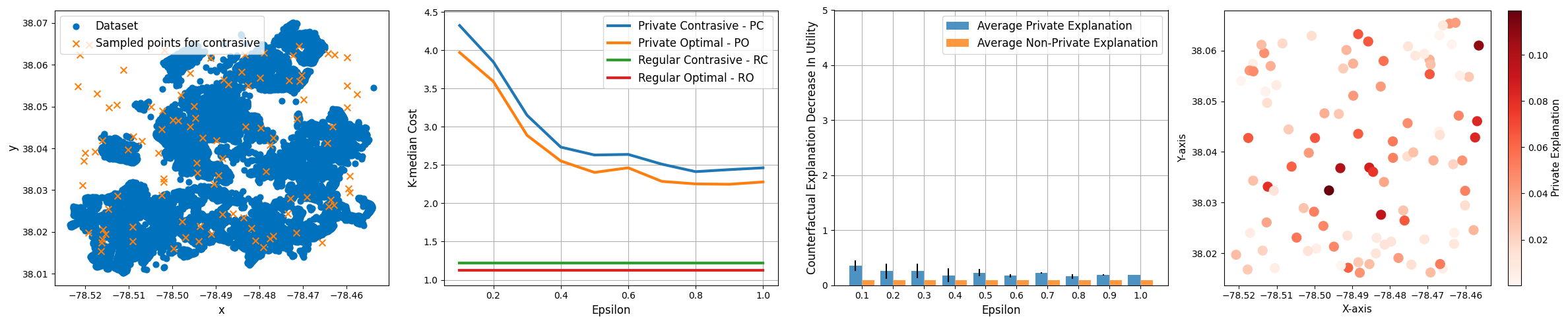

The synthetic dataset was meticulously crafted to emulate the distribution and characteristics of real-world datasets, ensuring a balance between realism and controlled variability. Comprising 100 data points in a 2D space, this dataset was uniformly distributed, mirroring the range observed in the real datasets we analyzed.
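A dataset of this kind could be generated along the following lines. This is a minimal sketch: the unit-square range, the seed, and the function name are assumptions, since the exact range used in the experiments is not stated here.

```python
import random

def make_synthetic_2d(n=100, low=0.0, high=1.0, seed=0):
    """Draw n points uniformly at random from the square [low, high]^2."""
    rng = random.Random(seed)
    return [(rng.uniform(low, high), rng.uniform(low, high)) for _ in range(n)]

synthetic = make_synthetic_2d()
```

Fixing the seed keeps the sample reproducible across runs, which helps when comparing results at different privacy budgets.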

The primary motivation behind this synthetic dataset is to provide a sandbox environment, free from the unpredictable noise and anomalies of real-world data. This controlled setting is pivotal in understanding the core effects of differential privacy mechanisms, isolating them from external confounding factors. The dataset serves as a foundational tool in our experiments, allowing us to draw comparisons and validate our methodologies before applying them to more complex, real-world scenarios.

D.3.2 Experimental Results

Impact of ε on Private Optimal (PO) and Private Contrastive (PC):

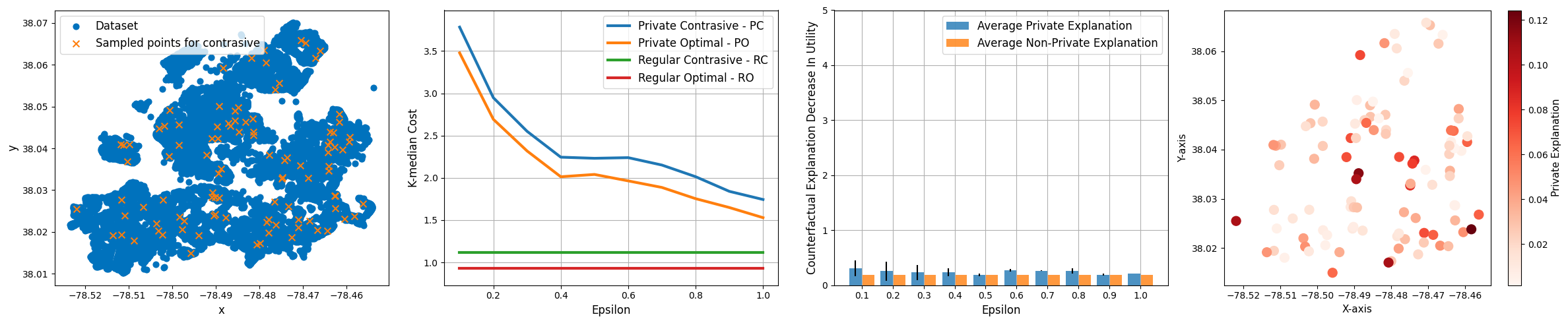

For the Charlottesville city dataset, we observed trends similar to those in the Albemarle county dataset. As the ε value increased, prioritizing accuracy, there was a slight compromise in privacy. However, consistent with our hypothesis, the influence of the epsilon budget on the explainability of our outcomes was negligible.

Effect of Privacy on Explainability:

The contrastive explanations for the Charlottesville city dataset also showcased the resilience of our approach. Even with differential privacy perturbations, the quality of explanations remained consistent, emphasizing the robustness of our method.

Visualization:

Figure 2 - (a) showcases the Charlottesville city dataset. Blue dots represent the original dataset, while orange 'X' markers indicate the points sampled for contrastive explanations. The distribution of these points provides a visual representation of the data's diversity and the regions we focused on for our contrastive analysis.

D.3.3 Performance Evaluation

For each ε value, we conducted 25 different runs on the Charlottesville city dataset. The average results were consistent with our findings from the Albemarle County dataset. These multiple invocations were solely for performance evaluation. In real-world applications, invoking private algorithms multiple times could degrade the privacy guarantee.
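The degradation from repeated invocations follows from basic sequential composition, under which the budgets of independent releases on the same data add up. A minimal accounting sketch is given below; the function names and the per-run value of 0.5 are hypothetical and chosen only for illustration.

```python
def total_budget(epsilons):
    """Basic sequential composition: budgets of releases on the same data add up."""
    if any(e <= 0 for e in epsilons):
        raise ValueError("each epsilon must be positive")
    return sum(epsilons)

def per_run_budget(total_epsilon, runs):
    """Split a total budget evenly so that `runs` releases stay within it."""
    return total_epsilon / runs

# 25 releases at a hypothetical epsilon of 0.5 each consume 12.5 in total.
spent = total_budget([0.5] * 25)
# To stay within a total budget of 1.0, each of 25 releases gets 0.04.
each = per_run_budget(1.0, 25)
```

Tighter accounting is possible with advanced composition, but the additive bound is enough to show why evaluation-style repetition should not be carried over to deployment.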

Consistency in Contrastive Explanations across Datasets:

Despite the distinct scales of the Charlottesville city and Albemarle county datasets, we observed consistent patterns in the contrastive explanations. Specifically, as illustrated in Figure 2 - (b) and Figure 2 - (c), the contrastive explanations remained largely unaffected by variations in the ε budget. This consistency further reinforces our hypothesis that the epsilon budget has a negligible influence on the explainability of our outcomes, even when applied to datasets of different scales.

Impact of Fixed Centroids on Contrastive Error:

In Figure 2 - (d), we examine the effect of selecting different points as fixed centroids. The choice of fixed centroid has a pronounced impact on the contrastive error, which underscores the importance of the centroid's position relative to the data distribution. Fixed centroids closer to data-dense regions tend to produce lower contrastive errors, while those near sparser regions or outliers result in higher errors. This observation highlights the relationship between centroid positioning and the magnitude of the resulting contrastive explanation.

D.3.4 Conclusion

The extended experiments on the Charlottesville city dataset further validate our approach's efficacy. The balance between privacy and utility, the robustness of contrastive explanations, and the negligible impact of ε on explainability were consistent across datasets. These findings underscore the potential of our method for diverse real-world applications.