Control software analysis, Part I

Open-loop properties

Abstract: As the digital world enters further into everyday life, questions are raised about the increasing challenges brought by the interaction of real-time software with physical devices. Many accidents and incidents encountered in areas as diverse as medical systems, transportation systems or weapon systems are ultimately attributed to “software failures”. Since real-time software that interacts with physical systems might as well be called control software, the long litany of accidents due to real-time software failures might be taken as an equally long list of opportunities for control systems engineering. In this paper, we are interested only in run-time errors in those pieces of software that are a direct implementation of control system specifications: For well-defined and well-understood control architectures such as those present in standard textbooks on digital control systems, the current state of theoretical computer science is well-equipped enough to address and analyze control algorithms. It appears that a central element to these analyses is Lyapunov stability theory, which translate into invariant theory in computer implementations.

1 Introduction

1.1 Preliminaries: An example system

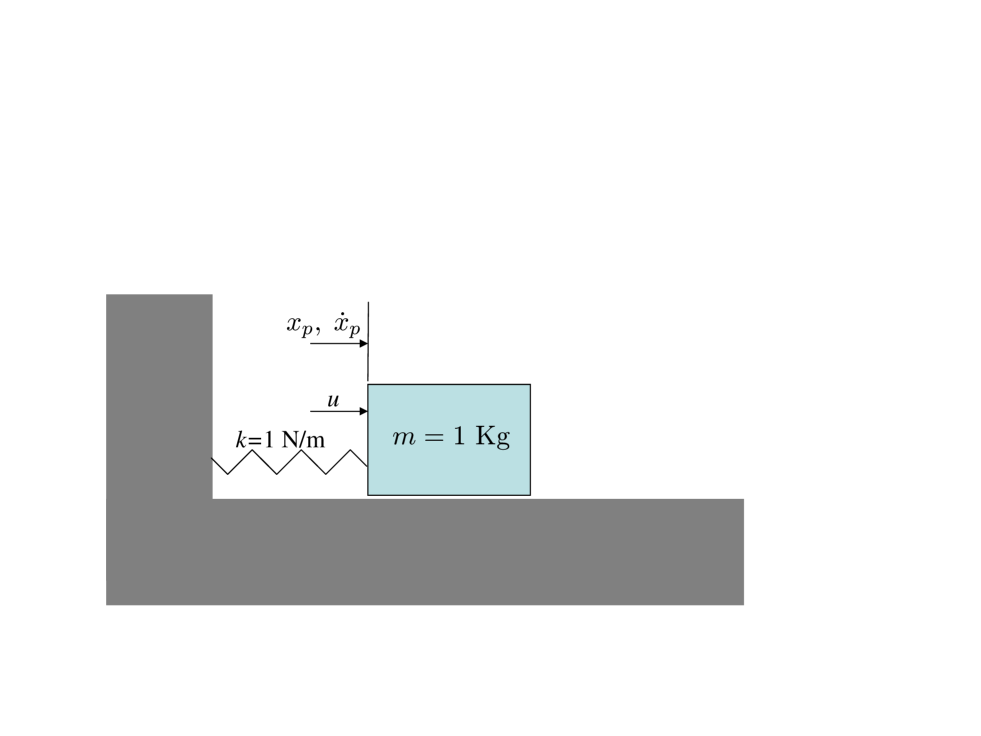

We consider a standard control system, in this case a one-mass, one spring system. such as that shown in Fig. 1.

The system is modeled via the following differential equation

| (1) |

In these equations, and are measured in meters and meters per second, respectively, while is expressed in Newtons. We assume that the output is available for feedback purposes. The chosen controller is of the lead-lag type,

| (2) |

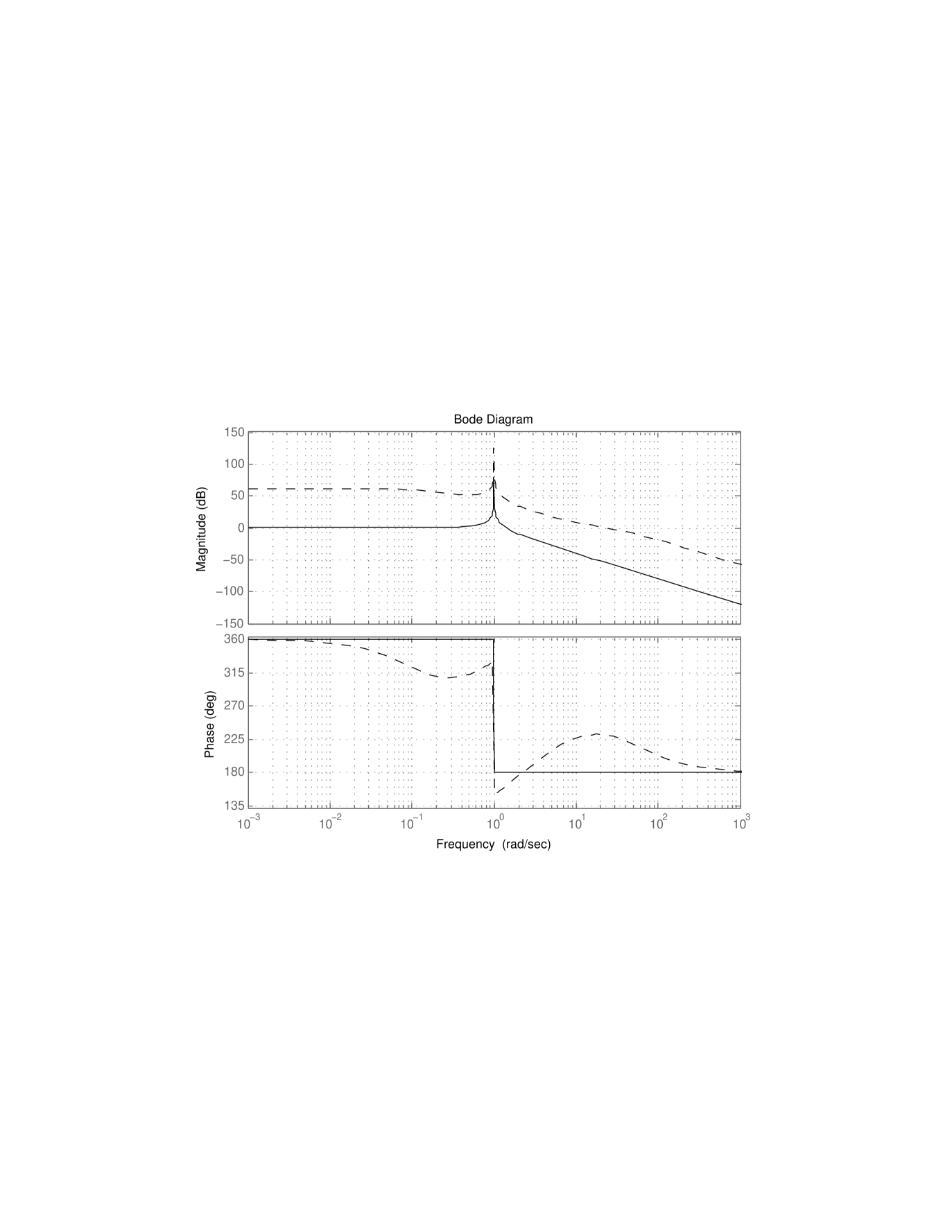

which guarantees good closed-loop system bandwidth (in excess of 20 rad/sec) and good steady-state disturbance rejection properties; following common practices, the input to the controller is first passed through a saturation function to avoid variable overflow in the controller. The essential properties of the closed-loop system may be easily extracted from the Bode plot of the open-loop transfer function of the plant mounted in series with the controller, shown in Fig. 2.

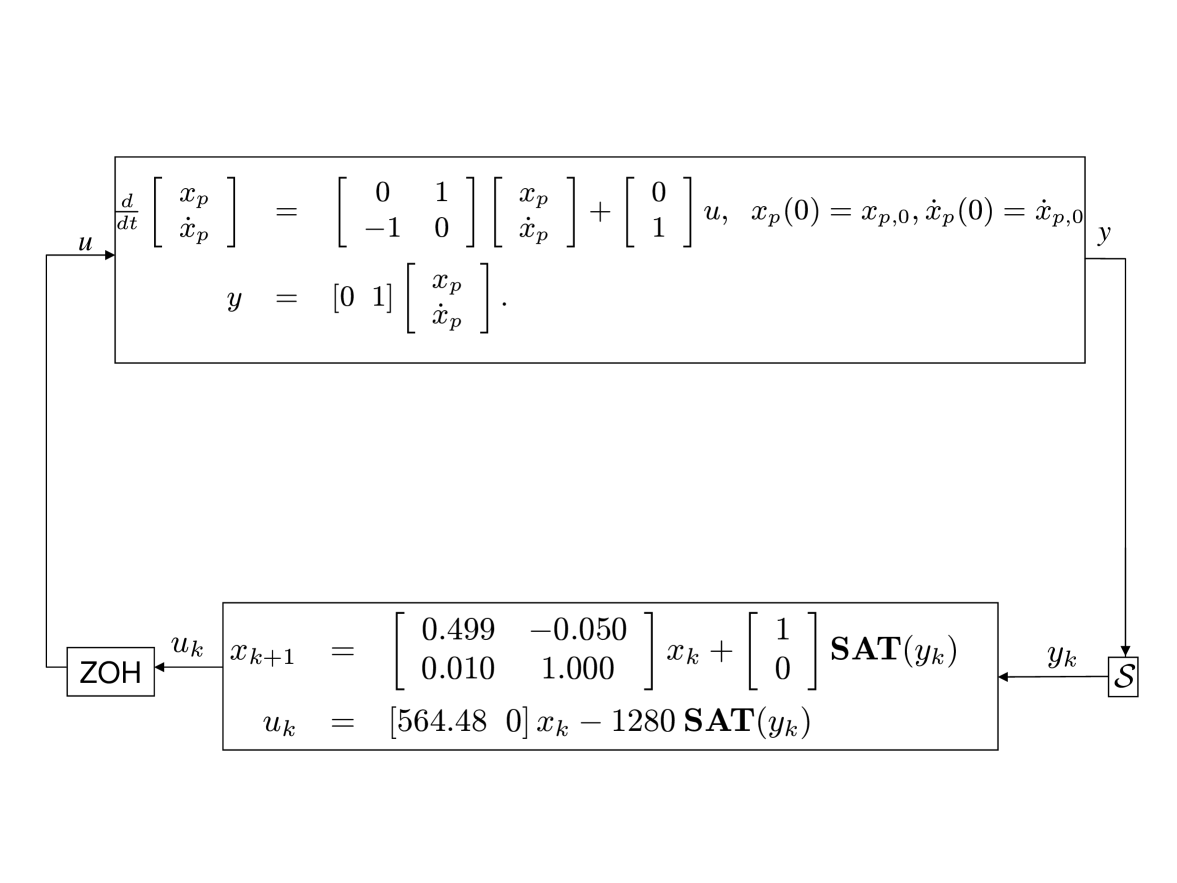

For example, the phase margin can be easily seen to exceed 50 degrees. This controller is then implemented on a computer by considering a sample-and-hold architecture running at 100 Hz, by implementing the state-space model

| (3) |

which is no more than a first-order Euler discretization of the following state-space realization of the controller (2)

In the expression for the compensator, is , the output of the system sampled at time . Likewise, is the compensator output fed to the plant during the time interval , resulting in the closed-loop description of the system as shown in Fig. 3.

This figure includes the sampling process and the zero-order hold ZOH. An implementation of the compensator may then be realized by means of the simplified, but functional C code shown in Fig. 4.

1: int main(int argc, char *argv[])

2: {

3: double *x[2], *xb[2], y, u;

4: x[0] = 0;

5: x[1] = 0;

6: while(1){

7: fscanf(stdin,"%f",&y);

8: if (y >1){

9: y=1

10:Ψ }

11:Ψ if (y<-1){

12: y=-1

13:Ψ }

14: u = 564.48*x[0]-1280*y;

15: fprintf(stdout,"%f\n",u);

16: xb[0]=x[0];

17: xb[1]=x[1];

18: x[0]:= 0.4990*xb[0]-0.0500*xb[1]+y;

19: x[1]:= 0.01*xb[0]+xb[1];

20: }

21:}

The final step involves the compilation of the C code to executable code, and the eventual operation of the system in closed-loop.

1.2 Performance and stability questions

Considering the entire system, made of the physical plant and its controller, we are led to asking whether “the system works”. In this paper, we are interested in asking this question about the software implementation of the control law shown in Fig. 4 and the corresponding executable binary code.

Many control engineers and researchers may wonder whether this question makes any sense at all: After all, control system textbooks suggest that the task of verifying whether the closed-loop system “works” amounts to establishing, in some way or some other, that the closed-loop “system” that consists of the system (1) combined with either the continuous-time controller (2) or the discrete-time controller (3) is stable, which requires no more than computing the roots of a low-order polynomial. Further, control systems manufacturers may require the controller itself to be open-loop stable. Other questions about system performance, including disturbance rejection properties and robustness against modeling inaccuracies, may also be easily answered by using algebraic or graphical methods.

Note, however, that the process of generating C code from the continuous or discrete control laws is often done automatically, by means of commercial autocoders whose implementation details are not fully known. Likewise, proprietary or public-domain compilers are then used to produce binary executables from the C code. While autocoders and compilers can be assumed to operate in good faith, they cannot just be trusted blindly. In addition, the codes generated out of the specifications often contain detailed computations whose details are not known at the level of control law specifications. Thus the produced C code and the binary executable must be examined in detail for adequate performance by following a process that is independent from the original code generation process. The amount of work involved in such an endeavour may appear formidable. However, the alternative which is to certify the correctness of autocoders and compilers is even more daunting [Rin99, AR04, RM99].

The rest of this paper is devoted to bringing forward the elements that make the static analysis of code that implements control system specification not only possible, but also necessary given how easy it is to perform.

2 Code Analysis

2.1 Performance analysis and Hoare logic

Analyzing the software that implements a control system consists of answering several questions:

-

•

Does the control function keep executing properly and within acceptable execution time limits?

-

•

Is the implemented controller stable and what is the range of values explored by each variable?

-

•

Is the closed-loop system stable?

-

•

Does the software implement the specifications?

These questions may be extended to include further concerns about system performance. However, the problem remains fundamentally the same: That is, to reach the same level of confidence about system stability and performance when considering the controller code in Fig. 4 together with the plant (1) as that reached when analyzing the controller specification (3), together with the plant (1). To reach that goal, two options exist:

The first option is to follow a “macroscopic pattern matching” approach, whereby the controller specification (3) is reconstructed from the code in Fig. 4. This solution, adopted in [CCF+05, Fer04], is particularly advantageous when the code structure is relatively repeatable from one instance to the other. However, pattern matching can become more challenging if codes that implement controllers do not have well-identified and rigid structures.

The second option, which we call code-level analysis, is to develop proofs that directly identify the code semantics. Code-level analysis is, in principle, supported by an elegant logical environment that closely matches available control oriented methodologies. This environment, developed by Floyd and Hoare in the late 1960s [Flo67, Hoa69], intends to describe the code semantics by concatenating elementary knowledge about each line of code [Pel01, Ch.7]: Each line of code is annotated with one precondition pre satisfied by the program state prior to the line execution, and one postcondition post satisfied by the program state after the execution of the line. Thus each line of code loc is transformed into a Hoare triple of the kind {pre}loc{post }, where the pre and post are compatible with loc. Global program properties can then be inferred by concatenating Hoare triples, as follows: Considering two consecutive Hoare triples {pre_1}loc_1{post_1 } and {pre_2}loc_2{post_2 } and the property {post_1} {pre_2}, whe have {pre_1}loc_1, loc_2{post_2 }. Further developments of Hoare logic will be used thereafter.

2.2 Hoare logic and Lyapunov functions

We now examine how Hoare logic can be used to formalize the analysis of control system stability requirements for the control system specifications and their implementation. Informally speaking, we know that the system that implements the controller is stable and all states are bounded because the matrix

is stable, that is, its two eigenvalues have modulus less than one, and the input is effectively bounded in absolute value by one. While exact, this proof does not carry over easily to the software implementation. Moreover, it is somewhat incomplete since it does not tell anything related to the range covered by the variables involved. These issues may be addressed by means of Lyapunov stability theory: Consider the Lyapunov function , where

and is a positive definite matrix. Then it is easy to show that the value of decays along the trajectories of

that is if and . That alone indicates stability of the controller, yet it does not bring any indication about the size of the states of the system. For that purpose, and following well-known practices [BEFB94], it is possible to show that defines not only a valid Lyapunov function, but also an invariant ellipsoid

for any input , and this ellipsoid defines a bounded region for . In particular, if is included in (and it does since ), then the state will stay in the ellipsoid forever. A simple eigenvalue computation on shows that each entry of the state is bounded in absolute value by 7.

Let us now examine how this knowledge can be included in the controller’s executable code so as to establish the code’s correctness. We may begin our effort with analyzing a Matlab script that implements the controller, given in Fig. 5. The reason for doing so is that a Matlab code structure is sufficiently close to typical control system specifications to make the correspondence between traditional proofs and code proof transparent, while remaining executable by Matlab’s interpreter.

1: A = [0.4990, -0.0500; 0.0100, 1.0000]; 2: C = [564.48, 0]; 3: B = [1;0];D = -1280; 4: x = zeros(2,1); 5: while 1 6: y = fscanf(stdin,"%f"); 7: y = max(min(y,1),-1); 8: u = C*x + D*y; 9: fprintf(stdout,"%f\n",u); 10: x = A*x + B*y; 11: end

Identifying as the controller’s state, we now proceed with establishing the stability of the controller’s Matlab implementation by using Hoare logic. For that, we may begin the program annotation at any line of choice, for example at line 8: u = C*x + Dy. This assignment does not influence the state but determines the value of . It also introduces the controller input . Thus we propose the following triple:

| (4) |

Much about this statement may appear to be rather arbitrary at first sight, and indeed requires global knowledge of the program (or its specifications, described earlier) to be established. However, the statement can be easily figured out from the value of and the statements . From there on, it is possible to obtain statements about the evolution of the state-space by means of backwards propagation [Pel01]. To begin with, line 7: y = max(min(y,1),-1) implicitly indicates that after this assignment, and can be commented via the triple

| {} |

| 7: y = max(min(y,1),-1); |

At this point, the assignment in line 6: y = fscanf(stdin,"%f") can simply be surrounded by the same statement to yield the triple

| 6: y = fscanf(stdin,"%f") |

Line 5: while 1 may not be separated from line 11: end. Following standard Hoare logic [Pel01, Mon03], these two lines may be documented as follows:

| 5: while 1 |

| ⋮ |

| 11: end |

Moving backwards, we see that the two lines that must be worked on are lines 4 and 10. Considering first the assignment 4: x = zeros(2,1), we are led to the triple

| 4: x = zeros(2,1) |

| . |

Moving further backwards yields no changes concerning the state variable , and triples may be constructed for lines 1 through 3 according to the template

| loc |

| . |

Line 10: x = A*x + B*y introduces the controller input and performs an important transformation on the state . Using insight from line 7: y = max(min(y,1),-1) we introduce the condition . We also propagate the statement backwards: Replacing by its value yields the statement . Thus the triple

| 10: x = A*x + B*y; |

Moving to line 9: fprintf(stdout,"%fn",u), we see that it really does not act in any way on the state of the program. However, it introduces the controller output value , whose numerical range must be known. At this point, we postulate the property and we are led to the triple

| 9: fprintf(stdout,"%fn",u) |

| . |

For this triple to be “compatible” with the triple (4) associated with line 8, we must prove the statement

| (5) |

For the specific instances of , and under consideration, this statement is indeed true, yet not trivial. A simple proof relies on the -procedure [AG64], which amounts to introducing the auxiliary (and true) statement

| (6) |

leading to

which may now be checked much more easily than (5) alone. Thus a preferrable triple for line 8 is

| 8: u = C*x+D*y; |

|---|

Concatenating statements about all individual instructions together leads us to the following annotated Matlab code:

| 1: A = [0.4990, -0.0500; 0.0100, 1.0000]; |

| 2: C = [-564.48, 0]; |

| 3: B = [1;0];D=1280 |

| 4: x = zeros(2,1); |

| 5: while 1 |

| 6: y = fscanf(stdin,"%f") |

| 7: y = max(min(y,1),-1); |

| 8: u = C*x+D*y; |

| skip |

| 9: fprintf(stdout,"%fn",u) |

| 10: x = A*x + B*y; |

| 11: end |

This annotated program contains the instruction whose purpose is only to separate two consecutive logical statements.

3 Forward vs. backwards propagation of constraints

Although the backwards constraint propagation described in the previous section is easy to do and best matches the well-known procedures used to establish stability properties of dynamical systems, forward constraint propagation provides key insights about how these constraints may otherwise be formulated.

We now reconsider the Matlab code shown in Fig. 5, with a forward exploration perspective. Once again, ellipsoids are used to bound all state variables and inputs involved in this program. Forward propagation allows us to begin at line 1: A = [0.4990, -0.0500; 0.0100, 1.0000], which can simply be surrounded by statements to produce the Hoare triple

| 1: A = [0.4990, -0.0500; 0.0100, 1.0000]; |

Lines 2 and 3 can be treated similarly. Line 4: x = zeros(2,1) introduces the variable , and since , line 4 can be followed by the post-condition to yield the triple

| 4: x = zeros(2,1); |

which is the same as that given in the backwards analysis. Lines 5 and 11 introduce the controller loop, and we postulate the pre-condition , which then leads to commented set of commands:

| 5: while 1 |

| 11: end |

Line 6: y = fscanf(stdin,"%f"); merely introduces the variable with no particular property associated with it. Thus the triple

| 6: y = fscanf(stdin,"%f"); |

The next line 7: y = max(min(y,1),-1); is much more informative since it forces to be bounded by one in absolute value. This information may be encoded in various ways. One is to incorporate this information verbatim, therefore leading to the triple

| 7: y = max(min(y,1),-1); |

However, the postcondition will not be used as the preconditon for line 8: u = C*x + D*y;. Instead, we introduce the weaker pre-condition

Asking what the precondition becomes after passing through line 8 is equivalent to asking what the image of an ellipsoid through a linear mapping is. Since it is also an ellipsoid (in this case 1-dimensional), we conclude that a valid clause in the postcondition of line 8 is , while keeping the other clausal elements identical. The resulting triple is therefore

| 8: u = C*x + D*y; |

which propagates the set to which and belong, while providing a bound on the output variable . Line 9: fprintf(stdout,"%fn",u); merely writes the output of the controller to the appropriate memory location for further processing. Since is not used afterwards, its range may be dropped from the postcondition, thus leading to the triple

| 9: fprintf(stdout,"%fn",u); |

We finally consider line 10: x = A*x + B*y. Again, this line transforms the ellipsoid into the ellipsoid , where

| (7) |

To conclude, thus line 10 leads to the following triple

| 10: x = A*x + B*y; |

The documentation of the program by means of Hoare triple is now complete. It is necessary, however, to check that the post condition of line 10 implies the precondition of line 11, that is:

This is equivalent to showing that

| (8) |

which is indeed true for the given values of , and . Moreover, it can be shown that the clause (8) is equivalent to (6) for generic values of , , and , using standard matrix manipulations [BEFB94], thus demonstrating the “equivalence” of the forward and backwards approaches to analyze this program. The forward analysis eventually yields the annotated program

| 1: A = [0.4990, -0.0500; 0.0100, 1.0000]; |

| 2: C = [-564.48, 0]; |

| 3: B = [1;0];D=1280 |

| 4: x = zeros(2,1); |

| 5: while 1 |

| 6: y = fscanf(stdin,"%f") |

| 7: y = max(min(y,1),-1); |

| skip |

| 8: u = C*x+D*y; |

| 9: fprintf(stdout,"%fn",u) |

| 10: x = A*x + B*y; |

| skip |

| 11: end |

4 C code analysis

The latter analysis may now be extended to the original C code given in Fig. 4. The main difference between the C code and its Matlab counterpart is the level of detail reached by the instructions; while Matlab uses vectors, matrices, and direct operations on these, the C program only performs scalar operations. In addition, some 1-line functions now extend over several lines. It is therefore interesting to see how the proofs developed for the earlier Matlab code apply to this more detailed C code.

4.1 Forward analysis

We will perform only the forward analysis for the sake of brevity. Skipping variable declarations, the first relevant line of code is 4: x[0]=0. We may therefore write the triple

| 4: x[0] = 0 |

Line completes the initialization of , leading to the triple

| 5: x[1] = 0 |

| . |

Doing as precedently, line 6: while(1){, together with line 20: } leads to the set of commented lines

| 6: while(1){ |

| 20: } |

Line 7: fscanf(stdin,"%f",&y); likewise leads to the triple

| 7: fscanf(stdin,"%f",&y); |

| . |

The test 8: if (y >1){ 10: } may be commented using insight from line 9 as

| 8: if (y >1){ |

| ⋮ |

| 10: } |

| . |

which is of course compatible with the assignment 9: y = 1

| 9: y = 1 |

| . |

The second test 11: if (y<-1){ 13: } is documented the same way

| 11: if (y<-1){ |

| ⋮ |

| 13: } |

and line 12: y=-1 yields the triple

| 12: y=-1 |

| . |

The latter postcondition is clearly the same as that of line 7 of the Matlab program shown in Fig. 5. Following the same process as for the Matlab program, the precondition for line 14: u = 564.48*x[0]-1280*y is chosen to be weaker than the post-condition of line 13; we choose the precondition

to generate the triple

| 14: u = 564.48*x[0]-1280*y |

Line 15: fprintf(stdout,"%fn",u); may in turn be instrumented as the triple

| 15: fprintf(stdout,”%fn”,u); |

Lines 16 and 17 utilise a buffer variable aimed at performing the controller state update. The effect of these lines is easier to figure out by means of matrix operations: For example, line 16: xb[0]=x[0] can be seen as the matrix operation

Given this matrix operation, it is easy to figure out the image of the ellipsoid through this mapping. The parameterization of the resulting ellipsoid needs changing, however, because it is singular (it is flat along one of its semi-axes). Whenever the matrix is invertible, the following define the same ellipsoid:

When is not invertible, then only one of the statements above defines an ellipsoid. For the sake of conciseness, we will name the ellipsoid defined as

With such an alternative ellipsoid definition we may write a concise triple for line 16 as

| 16: xb[0]=x[0] |

with

Line 17: xb[1]=x[1]; may be treated likewise, and yield the triple

| 17: xb[1]=x[1]; |

where

Continuing along the same lines the triple for 18: x[0]:= 0.4990*xb[0]-0.0500*xb[1]+y; is

| 18: x[0]:= 0.4990*xb[0]-0.0500*xb[1]+y; |

with

and the triple for line 19: x[1]:= 0.01*xb[0]+xb[1]; is

| 19: x[1]:= 0.01*xb[0]+xb[1]; |

with

Explicit calculations then show , which ensures consistency between the postcondition of line 19 and the precondition of line 20. Moreover, it is easy to show that , where is defined in Eq. (7).

4.2 Remarks

Bringing the forward analyses of the Matlab code, on the one hand, and of the C code, on the other hand, shows how the higher level-of-detail present in the C code instructions yields considerably more detailed information about the behavior of each variable. This hgher level of detail is precisely what makes the analysis of the C code necessary beyond that of the Matlab code. The C code analysis is, however, not complete and still contains ambiguities. Consider for example Line 18: x[0]:= 0.4990*xb[0]-0.0500*xb[1]+y;. Depending on the compiler used, this line may be executed as x[0]:= (0.4990*xb[0]+(-0.0500*xb[1]+y)); or x[0]:=((0.4990*xb[0]-0.0500*xb[1])+y);, for example. The resulting intermediate values may differ widely, yet must be computed in advance to offer a complete analysis of the real time computations that take place during comntroller operations. This justifies a further, more detailed analysis of the code that is produced by compiling that given in Fig. 4.

In addition, it is clear that the analyses shown on a simple second-order controller example easily extend to linear controllers of arbitrary order (possibly subject to input saturation).

5 Conclusions

In the first part of this report, we have advocated the necessity of documenting not only the specifications, but also the implementation of control systems. Using well-known proofs about the behaviors of controller specifications, we have shown how such proofs may be translated as proofs about various levels of implementation of such controllers.

References

- [AG64] M. A. Aizerman and F. R. Gantmacher. Absolute stability of regulator systems. Information Systems. Holden-Day, San Francisco, 1964.

- [AR04] K. Arkoudas and M. Rinard. Deductive runtime certification. In Proceedings of the 2004 Workshop on Runtime Verification (RV 2004), Barcelona, Spain, April 2004.

- [BEFB94] S. Boyd, L. El Ghaoui, E. Feron, and V. Balakrishnan. Linear Matrix Inequalities in System and Control Theory, volume 15 of SIAM Studies in Applied Mathematics. SIAM, 1994.

- [CCF+05] P. Cousot, R. Cousot, J. Feret, A. Miné, D. Monniaux, L. Mauborgne, and X. Rival. The ASTRÉE analyzer. In In S. Sagiv (Ed.), Programming Languages and Systems, 14th European Symposium on Programming, ESOP 2005, Held as Part of the Joint European Conferences on Theory and Practice of Software, ETAPS 2005, Lecture Notes in Computer Science 3444, pages 21–30, Edinburgh, UK, April 4–8, 2005, 2005. Springer.

- [Fer04] J. Feret. Static analysis of digital filters. In Lecture Notes in Computer Science, Programming Languages and Systems, volume 2986/2004, pages 33–48. Springer-Verlag, 2004.

- [Flo67] R.W. Floyd. Assigning meanings to programs. In J. T. Schwartz, editor, Mathematical Aspects of Computer Science, Proceedings of Symposia in Applied Mathematics, volume 19, pages 19–32, Providence, RI, december 1967. American Mathematical Society.

- [Hoa69] C.A.R. Hoare. An axiomatic basis for computer programming. Communications of the ACM, 12(10):576–583, October 1969.

- [Mon03] J. F. Monin. Software Reliability Methods. Springer, 2003.

- [Pel01] D. A. Peled. Software Reliability Methods. Springer, 2001.

- [Rin99] M. Rinard. Credible compilation. Technical Report MIT-LCS-TR-776, Massachusetts Institute of Technology, Laboratory for Computer Science, Cambridge, MA, 1999.

- [RM99] Martin Rinard and Darko Marinov. Credible compilation with pointers. In FLoC Workshop on Run-Time Result Verification, Trento, Italy, 1999.