ControlBurn: Feature Selection by Sparse Forests

Tree ensembles distribute feature importance evenly amongst groups of correlated features. The average feature ranking of the correlated group is suppressed, which reduces interpretability and complicates feature selection. In this paper we present ControlBurn, a feature selection algorithm that uses a weighted LASSO-based feature selection method to prune unnecessary features from tree ensembles, just as low-intensity fire reduces overgrown vegetation. Like the linear LASSO, ControlBurn assigns all the feature importance of a correlated group of features to a single feature. Moreover, the algorithm is efficient and only requires a single training iteration to run, unlike iterative wrapper-based feature selection methods. We show that ControlBurn performs substantially better than feature selection methods with comparable computational costs on datasets with correlated features.

1. Introduction

Tree ensembles are well-loved in machine learning for balancing excellent predictive performance with model interpretability. Importantly, tree ensemble models tend not to overfit even when the number of features is large. And even complex boosting ensembles admit feature importance scores that are cheap to compute. These properties make tree ensembles a popular choice for feature selection: simply train a model on all the features, and select the most important features according to the feature importance score.

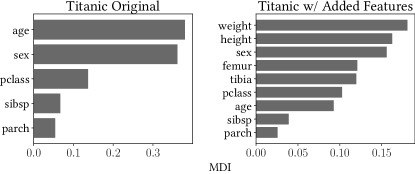

However, when tree ensembles are trained on data with correlated features, the resulting feature rankings can be unreliable. This correlation bias arises because tree ensembles split feature importance scores evenly amongst groups of correlated features. Figure 2 shows an example. On the left plot, feature importance is calculated for a random forest classifier fit on the Titanic dataset. The forest identifies age, sex, and passenger class as the most influential features. As an experiment, we modify the Titanic dataset by adding the synthetic features “height”, “weight”, “femur” length, and “tibia” length, which we generate from a plausible distribution that depends on age and sex, following measurements from (howell2000demography, ). As a result, “height”, “weight”, “sex”, “age”, “femur”, and “tibia” are highly correlated. While these features together are important, the feature importance of each variable in this cluster drops. More generally, when large groups of correlated features are present in a dataset, important features may appear much less influential than they actually are. Simple feature selection methods may select the whole group, omit the entire group, or pick the wrong features from the group. Here, “weight” and “height”, synthetic noisy features, are now elevated above more important features like “age” and “sex”. In addition, “pclass” is elevated above “age”.

In this paper we develop ControlBurn, a feature selection algorithm that eliminates correlation bias by sparsifying the forest, just as fire is used in forest management to maintain a healthy (biological) forest by reducing density. We first grow a forest using techniques such as bagging and boosting. Then we reduce the number of trees by choosing sparse weights for each tree via a group-lasso penalized objective that balances feature sparsity with predictive performance.

Importantly, our algorithm is efficient and scalable. We only need to fit a tree ensemble once and solve a group LASSO problem, which scales linearly with the number of data points, to select a sparse subset of features.

On our modified Titanic dataset, ControlBurn selects “sex”, “pclass”, and either “age” or a continuous proxy for age (“weight”, “height”, “femur” or “tibia”) as the three most important features. Since ControlBurn is robust to correlation bias, these selected features align with the three most important features in the original data.

1.1. Applications

1.1.1. Interpretable machine learning

Correlation bias reduces the interpretability of feature rankings. When features importance scores are split evenly amongst groups of correlated features, influential features are harder to detect: feature ranks can become cluttered with many features that represent similar relationships. ControlBurn allows a data scientist to quickly identify a sparse subset of influential features for further analysis.

1.1.2. Optimal experimental design

In many experimental design problems, features are costly to observe. For example, in medical settings, certain tests are expensive and risky to the patient. In these situations it is crucial to avoid redundant tests. In this setting, ControlBurn can be used to identify a subset of features (for any integer ) with (nearly the) best model performance, eliminating redundancy.

1.2. Main contributions

The main contributions of this paper follow.

-

•

We develop ControlBurn, a feature selection algorithm robust to correlation bias that can recover a sparse set of features from highly intercorrelated data.

-

•

We present empirical evidence that ControlBurn outperforms traditional feature selection algorithms when applied to datasets with correlated features.

1.3. Paper organization

We first examine related work concerning tree ensembles and feature selection techniques. Following this literature review, we explain in detail our implementation of ControlBurn. We then describe our experimental procedure to evaluate ControlBurn against traditional feature selection methods. Finally we present our findings and discuss interesting directions for future research.

2. Background

2.1. Tree ensembles

The following section provides an overview of tree ensembles and details how feature importance is computed in tree models.

2.1.1. Decision trees

form the base learners used in tree ensembles. We give a quick overview of classification trees below. A classification tree of depth separates a dataset into at most distinct partitions. Within each partition, all data points are predicted as the majority class. Classification trees are grown greedily, and splits are selected to minimize the Gini impurity, misclassification error, or deviance in the directly resulting partitions. The textbook (friedman2001elements, , Chapter 9) provides a comprehensive reference on how to construct decision trees.

2.1.2. Bagging

reduces the variance of tree ensembles through averaging the predictions of many trees. We sample the training data uniformly with replacement to create bags (bootstrapped datasets with the same size as the original) and fit decision trees on each bag. The final prediction is the average (or majority vote, for classification) of the predictions from all the trees. Reducing correlation between trees improves the effectiveness of bagging. To do so, we can restrict the number of features considered each time a tree is built or a split is selected (friedman2001elements, , Chapter 15).

2.1.3. Boosting

fits an additive sequence of trees to reduce the bias of a tree ensemble. Each decision tree is fit on the residuals of its predecessor; weak learners are sequentially combined to improve performance. Popular boosting algorithms include AdaBoost (freund1999short, ), gradient boosting (friedman2001greedy, ), and XGBoost (chen2016xgboost, ). The textbook (friedman2001elements, , Chapter 10) provides a comprehensive reference on boosting algorithms.

2.1.4. Feature importance scores

can be computed from decision trees by calculating the decrease in node impurity for each tree split. Gini index and entropy are two commonly used metrics to measure this impurity. Averaging this metric over all the splits for each feature yields that feature’s importance score. This score is known as Mean Decrease Impurity (MDI); features with larger MDI scores are considered more important. MDI scores can be calculated for tree ensembles by averaging over all the trees in the ensemble (2001.04295, ).

2.2. Feature selection

Machine learning algorithms are sensitive to the underlying structure of the data. In datasets with linear relationships between the features and the response, linear regression, logistic regression, and support vector machines with linear kernels (cortes1995support, ) are all suitable candidates for a model. For nonlinear datasets, tree ensembles such as random forests, gradient boosted trees, and explainable boosting machines (EBMs) (nori2019interpretml, ) are commonly used.

Feature selection is fairly straightforward in the linear setting. Methods such as LASSO (tibshirani1996regression, ), regularized logistic regression (lee2006efficient, ), and SVMs with no kernels (lee2006efficient, ) can select sparse models. Our work aims to extend regularization techniques commonly used in linear models to the non-linear setting. Existing feature selection algorithms for nonlinear models can be divided into the following categories: filter-based, wrapper-based, and embedded (chandrashekar2014survey, ).

2.2.1. Filter-based

feature selection algorithms remove features suspected to be irrelevant based on metrics such as correlation or chi-square similarity (ambusaidi2016building, ; lee2011filter, ). Features that are correlated with the response are preserved while uncorrelated features are removed. Groups of intercorrelated features correlated with the response are entirely preserved.

Filter-based algorithms are model-agnostic, their performance depends on the relevancy of the similarity metric used. For example, using Pearson’s correlation to select features from highly non-linear data will yield poor results.

2.2.2. Wrapper-based

feature selection algorithms iteratively select features through retraining the model across different feature subsets. (mafarja2018whale, ; maldonado2009wrapper, ).

For example, in recursive feature elimination (RFE) the model is initially trained on the full feature set. The features are ranked by feature importance scores and the least important feature is pruned. The model is retrained on the remaining features and the procedure is repeated until the desired number of features is reached. Other wrapper-based feature selection algorithms include sequential and stepwise feature selection. All of these algorithms are computationally expensive since the model must be retrained several times.

2.2.3. Embedded

feature selection algorithms select important features as the model is trained. (langley1994selection, ; blum1997selection, ). For example in LASSO regression, the regularization parameter controls the number of coefficients forced to zero, i.e. the number of features excluded from the model. For tree ensembles, MDI feature importance scores are computed during the training process. These scores can be used to select important features, however this algorithm is highly susceptible to correlation bias since MDI feature importance is distributed evenly amongst correlated features. ControlBurn extends LASSO to the nonlinear setting and is an embedded feature selection algorithm robust to correlation bias.

2.3. Sparsity vs. stability

Like LASSO in the linear setting, ControlBurn trades stability for sparsity. To build a sparse model on a datasets with correlated features, ControlBurn selects a subset of features to represent the group. The algorithm is useful for selecting an independent set of features, but the selected set is not stable. Indeed, an arbitrarily small perturbation to the dataset can lead the algorithm to select a different set of features when the features are highly correlated.

The tradeoff between sparsity and stability is fundamental to machine learning (xu2011sparse, ). Feature selection and explanation algorithms such as LASSO regression, best-subset selection (bertsimas2016best, ), and LIME (ribeiro2016should, ) encourage sparsity, while algorithms such as ridge regression and SHAP (lundberg2017unified, ) are more stable but not sparse. To achieve both sparsity and stability, the dataset may need to be very large (zhou2021, ).

3. ControlBurn algorithm

3.1. Overview

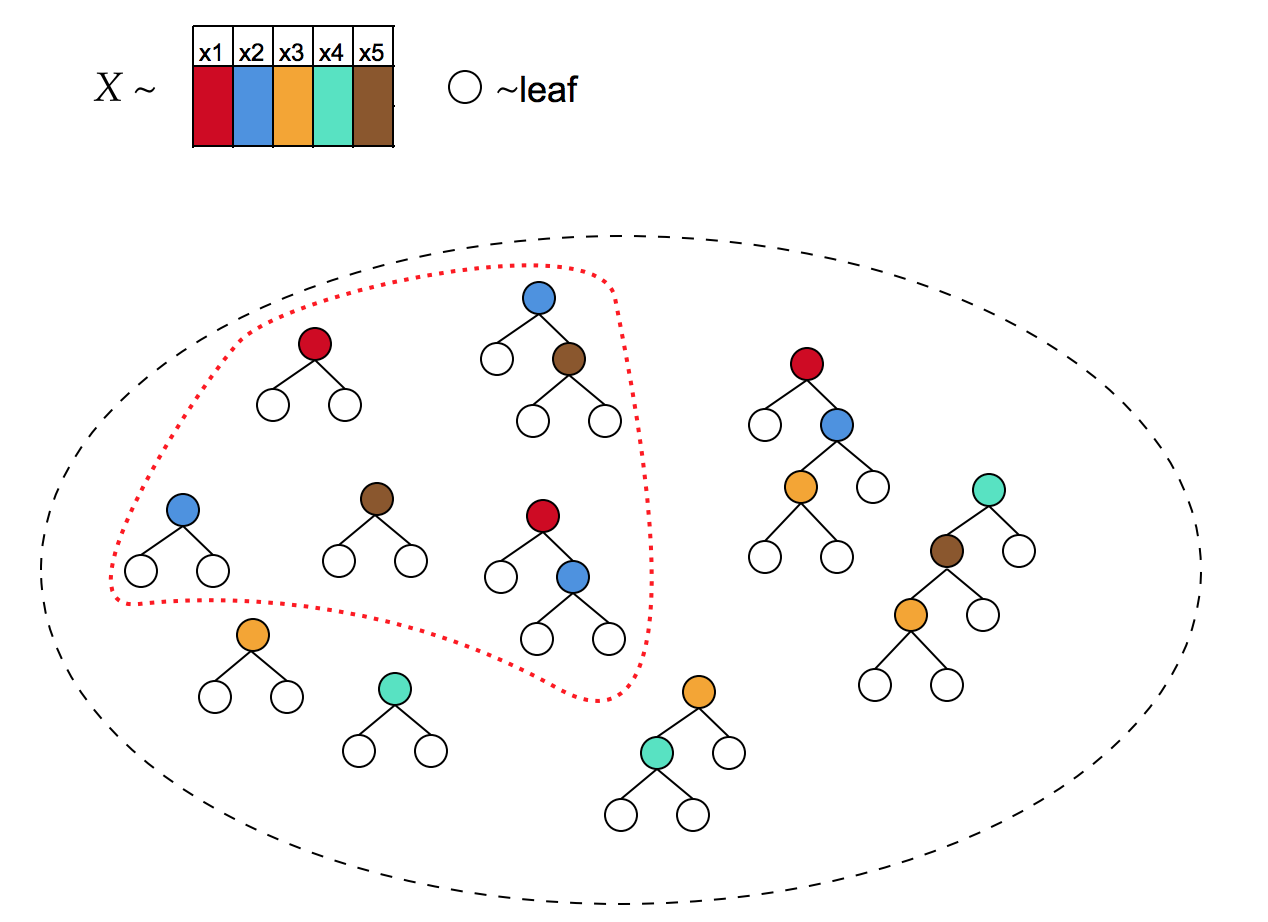

The ControlBurn algorithm works as follows. Given feature matrix and response , we build a forest consisting of decision trees to predict from . We will describe in detail in the next section various approaches to build this forest. Each tree for generates a vector of predictions . For each tree , let be a binary indicator vector, with if feature generates a split in tree .

Fix a regularization parameter . We introduce a non-negative variable to represent the weight of each tree in the forest; a tree with plays no role in prediction and can be pruned. Let be the matrix with vectors as columns and let be the matrix with vectors as columns. Hence gives the predictions of the forest on each instance given weights , while the elements of give the total weight of each feature in the forest.

We seek a feature-sparse forest (sparse ) that yields good predictions. To this end, define a loss function for the prediction problem at hand, for example square loss for regression , or logistic or hinge loss for classification.

Inspired by the group LASSO (tibshirani1996regression, ), our objective is to minimize the loss together with a sparsifying regularizer, over all possible weights for each tree:

| (1) |

Since for all , we equivalently express this problem as

| (2) |

where is the number of features used in tree . This objective encourages sparse models, as the regularization penalty increases as more features are used in the ensemble. The hyperparameter controls the sparsity of the solution.

Let denote the solution to Problem 2. A tree with a non-zero weight is used by the ensemble, while a tree with weight is omitted. We say feature was selected by the ensemble if is non-zero.

The final step of our algorithm fits a new tree ensemble model on the selected features. This step improves solution quality, just as refitting a least squares regression model on features selected from LASSO regression reduces the bias of the final model (chzhen2019lasso, ).

Our algorithm operates in two major steps: first we grow a forest, and then we sparsify the forest by solving Problem (2). We discuss each step in detail below.

3.2. Growing a forest

ControlBurn works best when executed on a diverse forest, with trees that use different sets of features. Traditional forest-growing methods such as bagging often fail to generate sufficient diversity. Using standard methods to grow the forest, we often find that no subset of the trees yields a feature-sparse model, or that the resulting model has poor accuracy.

For example, if trees are grown to full depth, every tree in the forest generally uses the full feature set. Hence the only sparse solution to Problem 2, for any , is : either all or none of the features will be selected.

We have developed two algorithms to grow diverse forests: incremental depth bagging and incremental depth bag-boosting. Both are simple to tune and match the accuracy of other state-of-the-art tree ensembles.

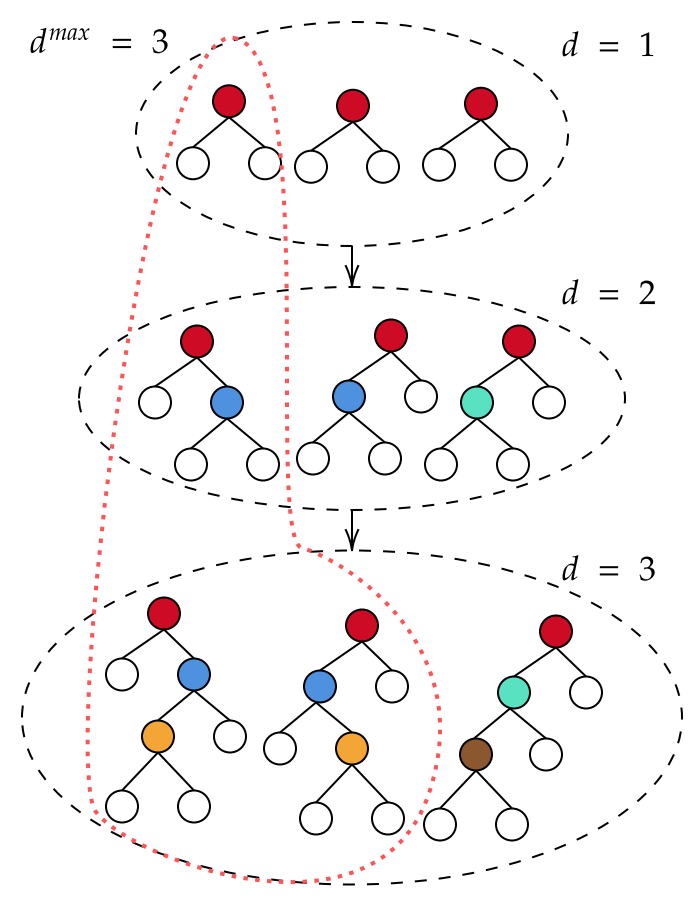

3.2.1. Incremental depth bagging

(Algorithm 1) increases forest diversity by incrementally increasing the depth of trees grown. A tree of depth can use no more than features. As we increase , the number of features used per tree increases, allowing the model to represent more complex feature interactions.

The algorithm has a single hyperparameter, , the maximum depth of trees to grow. This hyperparameter is easy to choose: bagging prevents overfitting, so bigger is better, and is allowed.

We represent a tree ensemble as a set of trees }. With some abuse of notation, we let be the output of the tree ensemble given input .

Incremental depth bagging increments the depth of trees grown when the performance of the model saturates at the lower depths. At each depth level, the training loss is recorded each time a new tree is added to the ensemble. When the training loss converges, the algorithm increments the depth and begins adding deeper trees. We say the loss converges if the tail elements of the sequence fall within an tube. Experimentally, choosing and yields good results. This methodology differs from typical early stopping algorithms used in machine learning, since it stops based on training loss rather than test loss. We use training loss because it is fast to evaluate and overfitting is not a concern, since bagged tree ensembles tend not to overfit with respect to the number of trees used.

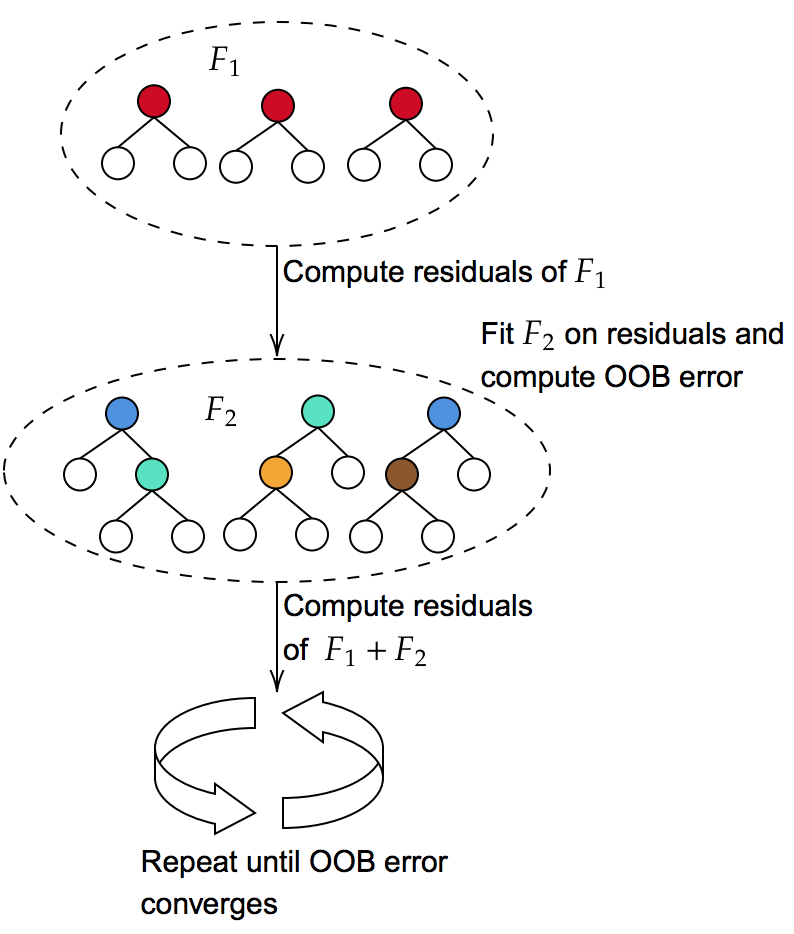

3.2.2. Incremental depth bag-boosting

(Algorithm 2)

uses out-of-bag (OOB) error to automatically determine the maximum depth of trees to grow, and requires no hyperparameters. The algorithm uses bagging ensembles as the base learner in gradient boosting to increase diversity without sacrificing accuracy.

The number of trees grown per boosting iteration is determined by how fast the training error of the ensemble converges, as in §3.2.1. Each boosting iteration increments the depth of trees grown and updates the pseudo-residuals. This is done by setting for .

For each row in , the OOB prediction is obtained by only using trees in fit on bags . Let be the improvement in OOB error between subsequent boosting iterations. The algorithm terminates when .

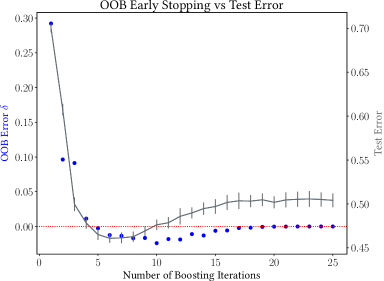

In Figure 6, incremental depth bag-boosting is conducted on the abalone dataset from UCI MLR (Dua:2019, ). The scatterplot shows how the improvement in OOB error, , changes as the number of boosting iterations increase. The procedure will terminate at the sixth boosting iteration, when . The lineplot shows how out-of-sample (OOS) test error varies with the number of boosting iterations. OOS error is minimized at the seventh boosting iteration. This is remarkably close to the OOB error stop, which is determined on-the-fly.

3.2.3. Theoretical guarantees

The following lemma shows that the average performance improvement calculated using OOB samples is a good estimator of the true performance improvement for the data distribution. The lemma applies to any pair of ensembles and where every element in ensemble appears in ensemble .

Lemma 3.1.

Let be an ensemble with trees , and let be an ensemble with one more tree . Suppose the data (including the feature and label ) follows the distribution . Define the improvement . Let be the expected improvement of the model over , and be the set of OOB samples of tree . Suppose is finite and each independently follows the distribution . Then

If the labels are all bounded, is a sub-exponential random variable with some parameters , and for all ,

We found empirically that incremental depth bag-boosting performs better than incremental depth bagging when used in ControlBurn. In addition, the OOB early stopping rule eliminates all hyperparameters and limits the size of the forest. This reduces computation time in the following optimization step. Forests grown by incremental depth bag-boosting are more feature diverse than those grown via incremental depth bagging. Consider the case where a single highly influential binary feature dominates a dataset. While each iteration of incremental depth bagging will include this feature, the feature will only appear in the first boosting iteration in incremental depth bag-boosting. Boosting algorithms fit on the errors of previous iterations, so once the relationship between the feature and the outcome is learned, the feature may be ignored in subsequent iterations.

3.2.4. Other forest growing algorithms

include feature-sparse decision trees and explainable boosting machines (EBM). These algorithms do not perform as well as incremental depth bag-boosting, but may be more interpretable

The procedure to build feature-sparse decision trees is a simple extension of the tradition tree building algorithm. For classification, decision trees typically choose splits to minimize the Gini impurity of the resulting nodes. Sparse decision trees add an additional cost to this impurity function when the tree splits on a feature that has not been used before. As a result, when groups of correlated features are present, the algorithm consistently splits on a single feature from each group. The cost parameter controls the sparsity of each tree. Ensembles of feature-sparse trees are not necessarily sparse, so ControlBurn must be used to obtain a final sparse model.

Explainable boosting machines are generalized additive models composed of boosted trees trained on a single feature (lou2013accurate, ). Each tree in an EBM is feature-sparse with sparsity . The additive nature of EBMs improve interpretability as the contribution of each feature in the model can be easily obtained. However, this comes at a cost of predictive performance since EBMs cannot capture higher order interactions. When ControlBurn is used on EBMs, the matrix in the optimization step is the identity matrix.

3.3. Optimization variants

3.3.1. Feature groupings

In many applications, it may be necessary to restrict feature selection to a set of features rather than individual ones. For example, in medical diagnostics, a single blood test provides multiple features for a fixed cost. We can easily incorporate this feature grouping requirement into our framework by redefining . Suppose features are partitioned into various groups. Instead of defining to be the number of features tree uses, we now define

where is the cost of the group of features and is the set of feature groups tree uses. Our original formulation corresponds to the case where all groups are singleton.

3.3.2. Non-homogeneous feature costs

In some problems the cost of obtaining one feature may be very different from the cost of obtaining another. We can easily accommodate this non-homogeneous feature costs requirement into our framework as well. Instead of defining to be the number of features used by tree , we define

where is the cost of feature and is the set of features tree uses. Our original formulation corresponds to the case where all .

3.3.3. Sketching

The optimization step of ControlBurn scales linearly with the number of rows in our data. We can sketch to improve runtime. Let be the sampled (e.g., Gaussian) sketching matrix. Our optimization problem becomes:

| (3) |

A relatively small , around , can provide a good solution (pilanci2015randomized, ).

4. Experimental setup

We will evaluate how well ControlBurn selects features for binary classification problems.

4.1. Data

We report results on three types of datasets.

4.1.1. Semi-synthetic

To evaluate how well ControlBurn handles correlation bias, we synthetically add correlated features to existing data by duplicating columns and adding Gaussian white noise to each replicated column. This procedure experimentally controls the degree of feature correlation in the data.

4.1.2. Benchmark

We consider a wide range of benchmark datasets selected from the Penn Machine Learning Benchmark Suite (olson2017pmlb, ). This curated suite aggregates datasets from well-known sources such as the UCI Machine Learning Repository (Dua:2019, ). We select all datasets in this suite with a binary target, 43 datasets in total.

4.1.3. Case studies

Finally, we select several real-world datasets known to contain correlated features, based on machine learning threads on Stack Overflow and Reddit. We select the Audit dataset, where risk scores are co-linear, and the Adult Income dataset, where age, gender, and marital status form a group of correlated features (Dua:2019, ). We also evaluate ControlBurn on the DNA microarray data used in West (2001) (West:2001ft, ), since gene expression levels are highly correlated.

4.2. ControlBurn for experiments

We first generate a forest of classification trees using incremental depth bag-boosting. The algorithm requires no hyperparameters and uses out-of-bag error to determine how many trees to grow. We use logistic loss as in optimization problem 2.

After solving the optimization problem,

we eliminate all features with weight 0 and refit a random forest classifier on the remaining features. We evaluate the test ROC-AUC of this final model.

4.2.1. Bisection

The regularization parameter in Problem 2 controls the number of features selected by ControlBurn, . We use bisection to find the value of that yields the desired number of selected features . Our goal in feature selection is to produce an interpretable model, so we mandate that in our experiments. Tree ensembles with more features can be difficult to interpret.

In fact, we can use bisection with ControlBurn to generate feature sets of any sparsity between and . Start with a value of large enough to guarantee . Let denote the number of features we want ControlBurn to select in the following iteration and initialize . Bisect and solve Problem 2 to obtain . If , update ; otherwise if , update . If , update . Repeat until .

4.3. Baseline feature selection

We will evaluate ControlBurn against the following baseline, an embedded feature selection method we call the random forest baseline. Given feature matrix and response , fit a random forest classifier and select the top features ranked by MDI feature importance scores. This feature selection algorithm is embedded since MDI feature importance scores are computed during training.

We fit a random forest classifier on the features selected by ControlBurn and the features selected by our random forest baseline and compare the difference in test performance. We use a 5-fold cross validation to estimate test error.

Our results in Section 5 omit comparisons between ControlBurn and filter-based and wrapper-based feature selection algorithms. We comment on comparisons here.

Experimentally, ControlBurn consistently outperforms filter-based algorithms across all datasets.

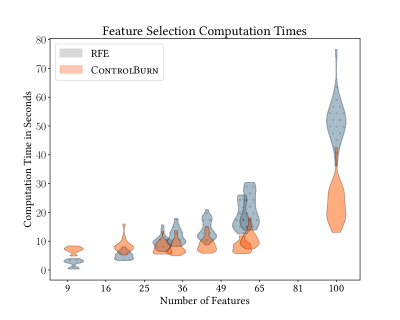

Wrapper-based algorithms are computationally expensive compared to ControlBurn since the model must be retrained each time a new subset of features is evaluated. For example, selecting features using Recursive Feature Elimination (RFE) from a dataset with features requires training iterations. In Best Subset Selection, the number of iterations required scales exponentially with . In contrast, like all embedded feature selection algorithms, ControlBurn only requires a single training iteration to select features. To illustrate this advantage, we subsample our PMLB datasets to 1000 rows and compare computation time between ControlBurn and RFE. Figure 7 demonstrates that RFE runtime drastically increases with compared to ControlBurn.

4.4. Implementation

We use scikit-learn (scikit-learn, ) to implement all random forest and decision tree classifiers in our experiment, and we use CVXPY (diamond2016cvxpy, ) and MOSEK (mosek, ) to formulate and solve optimization problems.

The code and data to reproduce our experiments can be found in this git repository.

5. Results

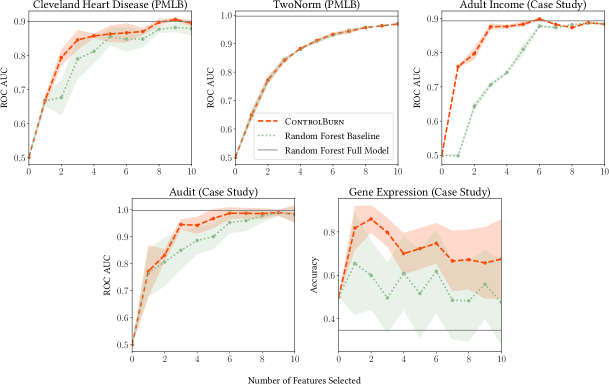

ControlBurn performs better than our random forest baseline on datasets that contain correlated features. In Figure 10, we plot the performance of our selected models against the number of features selected by our two algorithms. On datasets with correlated features, such as the Cleveland Heart Disease dataset, ControlBurn performs better than the random forest baseline. On datasets where all of the features are independent, such as Breiman’s synthetic TwoNorm dataset, there is no difference in performance.

In the following sections, we first discuss how ControlBurn performs when correlated features are added to semi-synthetic data. We then examine our results across our survey of PMLB datasets. Finally, we present several case studies that show the benefit of using ControlBurn on real world data.

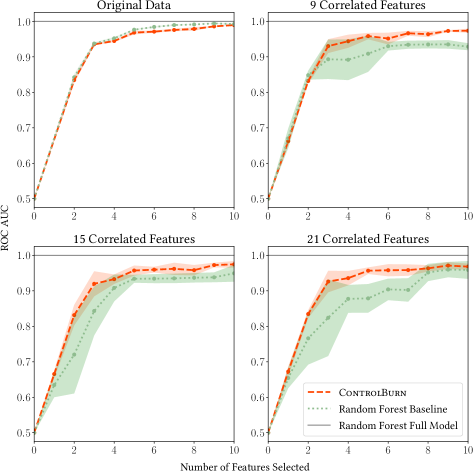

5.1. Semi-synthetic dataset

To create our semi-synthetic dataset, we start with the Chess dataset from UCI MLR (Dua:2019, ). This dataset contains very few correlated features, since all features correspond to chessboard positions. We observe in figure 8 that ControlBurn performs nearly identically to our random forest baseline on the original data. We take the 3 most influential features, determined by random forest MDI importance, and create noisy copies by adding Gaussian noise with . We repeat this process, duplicating the influential features 3, 5, and 7 times, which adds 9, 15, and 21 highly correlated features. Figure 8 shows that ControlBurn performs substantially better than the random forest baseline as the number of correlated features increases: MDI feature importance is diluted amongst the groups of correlated features, depressing the “importance” rank of any feature in the correlated group. This semi-synthetic example illustrates that ControlBurn can effectively select features even when groups of strongly correlated features exist.

5.2. PMLB suite

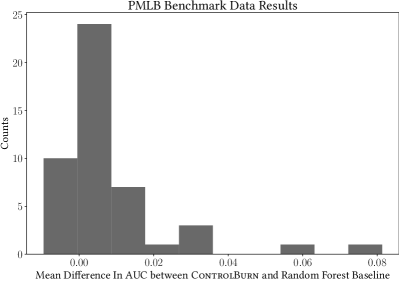

We evaluate ControlBurn on all binary classification datasets in the PMLB suite. On each dataset, we select features using ControlBurn and our random forest baseline and record the difference in ROC-AUC between the random forest classifiers fit on the selected features. We average these differences across sparsity levels and present the distribution as Figure 9.

In this histogram, we see that ControlBurn performs similarly to the random forest baseline for most of these datasets: most of the distribution is supported on , since most of the datasets in PMLB do not contain correlated features. Still, ControlBurn performs better than our random forest baseline on average: the mean of the distribution is positive, and the distribution is right-skewed. Most of the datasets along the right tail, such as Musk, German, and Sonar, contain correlated features.

5.3. Case studies

5.3.1. Adult income dataset

We use ControlBurn to build a classification model to predict whether an adult’s annual income is above $50k. The Adult Income plot in figure 10 shows how well ControlBurn performs compared to our random forest baseline. We see that ControlBurn performs substantially better than our baseline when features are selected. ControlBurn selects “EducationNum”, “CapitalGain”, and “MaritalStatus_Married-civ-spouse” while our random forest baseline selects “fnlwgt”, “Age” and “CapitalGain”.

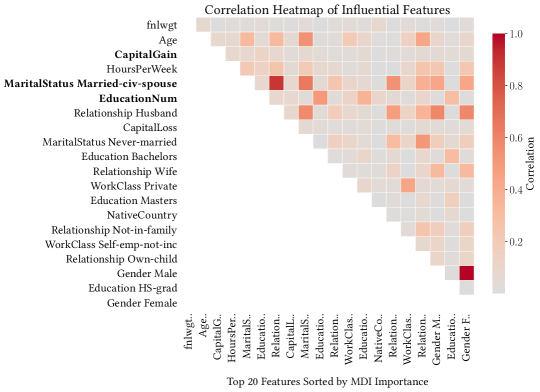

Figure 11 shows a correlation heat map of the top 20 features in the Adult Income dataset ranked by MDI importance. The top 3 features selected by ControlBurn are in bold. From this plot, it is apparent that the MDI feature importance of “MaritalStatus_Married-civ-spouse” is diluted because the feature is correlated with many other influential features. ControlBurn is able to select this feature while our random forest baseline ignores it.

It is also interesting to note that the feature “fnlwgt” is uninformative: it represents the population size of a row and is unrelated to income. This feature is given high importance by our random forest baseline but ignored by ControlBurn. We discuss this phenomenon further in Section 6.1.

5.3.2. Audit dataset

The Audit dataset demonstrates that highly correlated and even duplicated features sometimes appear in real-world data. Many of these risk scores are highly correlated and several scores are linear transformations of existing scores. We use ControlBurn to build a classification model to predict whether a firm is fradulent based on risk scores. Figure 10 shows that ControlBurn outperforms our baseline for any sparsity level .

5.3.3. Microarray gene expression

In this experiment, we use ControlBurn to determine which gene expressions are the most influential towards predicting the hormone receptor status of breast cancer tumors. Our data contains 49 tumor samples and over 7000 distinct gene expressions. We formulate this task as a binary classification problem to predict receptor status using gene expressions as features. The Gene Expression plot in Figure 10 shows the difference in accuracy between a model selected using ControlBurn and our random forest baseline. ControlBurn outperforms our baseline for every sparsity . These preliminary findings suggest that ControlBurn is effective at finding a small number of genes with important effects on tumor growth. We discuss implications and extensions appropriate for gene expression data further in Section 6.2.

6. Discussion and future work

6.1. Bias from uninformative continuous features

Brieman et. al (1984) (brieman1984classification, ) and Strobl et. al (2007) (strobl2007bias, ) note that MDI feature importance is biased towards features with more potential splits. The feature importance of continuous features, which can be split on multiple values, is inflated compared to binary features.

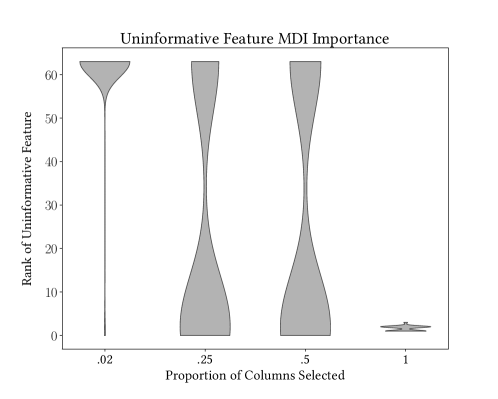

We attempt to quantify whether ControlBurn suffers bias from uninformative continuous features. We replicate the experiment conducted by Zhou and Hooker (2019) (zhou2019unbiased, ) by adding a random continuous feature to a classification dataset. We use ControlBurn to select a relevant subset of features and compute the feature rank, via MDI importance, of the uninformative feature in the model. Figure 12 shows that when the full feature set is selected, the uninformative feature is often present in the top feature rankings due to bias. As we increase and select fewer features, our uninformative feature is demoted. This experiment demonstrates that ControlBurn mitigates bias from uninformative continuous features. It would be interesting to compare ControlBurn against other feature importance algorithms robust to this bias, such as Unbiased Feature Importance (zhou2019unbiased, ).

6.2. Limits of LASSO: big , small

Zou and Hastie (2005) (zou2005regularization, ) note a limitation of LASSO: the number of features selected by LASSO is bounded by the number of rows . When ControlBurn is used for a problem with more features than rows (), the number of nonzero entries in is bounded by . At most trees can be included in the selected model. If all trees are grown to full depth, the selected model can use at most features. In our gene expression example, while . The upper-bound on the number of features that can selected via ControlBurn is much higher than the corresponding upper-bound for LASSO regression, however ControlBurn may still be unable to select the entire feature set if . ControlBurn also selects a single feature from a group of correlated features. This makes ControlBurn robust to correlation bias but ineffective for gene selection, where it is desirable to include entire groups of correlated gene expressions.

We propose extending ControlBurn by adding an regularization term to our optimization problem, just as the elastic net extends LASSO regression in the linear setting. Group elastic nets for gene selection have been explored in Munch (2018) (munch2018adaptive, ); using this approach to select tree ensembles presents an interesting direction for future research.

7. Conclusion

In this work, we develop ControlBurn, a weighted LASSO-based feature selection algorithm for tree ensembles. Our algorithm is robust to correlation bias; when presented with a group of correlated features, ControlBurn will assign all importance to a single feature to represent the group. This improves the interpretability of the selected model, as a sparse subset of features can be recovered for further analysis. ControlBurn is flexible and can incorporate various feature groupings and costs. In addition, the algorithm is computationally efficient and scalable.

Our results indicate that ControlBurn performs better than traditional embedded feature selection algorithms across a wide variety of datasets. We also found our algorithm to be robust to bias from uninformative continuous features. ControlBurn is an effective and useful algorithm for selecting important features from correlated data.

References

- (1) Ambusaidi, M. A., He, X., Nanda, P., and Tan, Z. Building an intrusion detection system using a filter-based feature selection algorithm. IEEE transactions on computers 65, 10 (2016), 2986–2998.

- (2) ApS, M. MOSEK Fusion API for Python 9.2.37, 2021.

- (3) Bertsimas, D., King, A., Mazumder, R., et al. Best subset selection via a modern optimization lens. Annals of statistics 44, 2 (2016), 813–852.

- (4) Blum, A. L., and Langley, P. Selection of relevant features and examples in machine learning. Artificial intelligence 97, 1-2 (1997), 245–271.

- (5) Brieman, L., Friedman, J. H., Olshen, R. A., and Stone, C. J. Classification and regression trees. wadsworth. Inc. Monterey, California, USA (1984).

- (6) Chandrashekar, G., and Sahin, F. A survey on feature selection methods. Computers & Electrical Engineering 40, 1 (2014), 16–28.

- (7) Chen, T., and Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd acm sigkdd international conference on knowledge discovery and data mining (2016), pp. 785–794.

- (8) Chzhen, E., Hebiri, M., Salmon, J., et al. On lasso refitting strategies. Bernoulli 25, 4A (2019), 3175–3200.

- (9) Cortes, C., and Vapnik, V. Support-vector networks. Machine learning 20, 3 (1995), 273–297.

- (10) Diamond, S., and Boyd, S. CVXPY: A Python-embedded modeling language for convex optimization. Journal of Machine Learning Research 17, 83 (2016), 1–5.

- (11) Dua, D., and Graff, C. UCI machine learning repository, 2017.

- (12) Freund, Y., Schapire, R., and Abe, N. A short introduction to boosting. Journal-Japanese Society For Artificial Intelligence 14, 771-780 (1999), 1612.

- (13) Friedman, J., Hastie, T., and Tibshirani, R. The elements of statistical learning, vol. 1. Springer series in statistics New York, 2001.

- (14) Friedman, J. H. Greedy function approximation: a gradient boosting machine. Annals of statistics (2001), 1189–1232.

- (15) Howell, N. Demography of the dobe! kung, 2nd edn. new brunswick, 2000.

- (16) Langley, P., et al. Selection of relevant features in machine learning. In Proceedings of the AAAI Fall symposium on relevance (1994), vol. 184, Citeseer, pp. 245–271.

- (17) Lee, I.-H., Lushington, G. H., and Visvanathan, M. A filter-based feature selection approach for identifying potential biomarkers for lung cancer. Journal of clinical Bioinformatics 1, 1 (2011), 11.

- (18) Lee, S.-I., Lee, H., Abbeel, P., and Ng, A. Y. Efficient l~ 1 regularized logistic regression. In Aaai (2006), vol. 6, pp. 401–408.

- (19) Lou, Y., Caruana, R., Gehrke, J., and Hooker, G. Accurate intelligible models with pairwise interactions. In Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining (2013), pp. 623–631.

- (20) Lundberg, S., and Lee, S.-I. A unified approach to interpreting model predictions. arXiv preprint arXiv:1705.07874 (2017).

- (21) Mafarja, M., and Mirjalili, S. Whale optimization approaches for wrapper feature selection. Applied Soft Computing 62 (2018), 441–453.

- (22) Maldonado, S., and Weber, R. A wrapper method for feature selection using support vector machines. Information Sciences 179, 13 (2009), 2208–2217.

- (23) Münch, M. M., Peeters, C. F., Van Der Vaart, A. W., and Van De Wiel, M. A. Adaptive group-regularized logistic elastic net regression. Biostatistics (2018).

- (24) Nori, H., Jenkins, S., Koch, P., and Caruana, R. Interpretml: A unified framework for machine learning interpretability. arXiv preprint arXiv:1909.09223 (2019).

- (25) Olson, R. S., La Cava, W., Orzechowski, P., Urbanowicz, R. J., and Moore, J. H. Pmlb: a large benchmark suite for machine learning evaluation and comparison. BioData mining 10, 1 (2017), 1–13.

- (26) Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., and Duchesnay, E. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research 12 (2011), 2825–2830.

- (27) Pilanci, M., and Wainwright, M. J. Randomized sketches of convex programs with sharp guarantees. IEEE Transactions on Information Theory 61, 9 (2015), 5096–5115.

- (28) Ribeiro, M. T., Singh, S., and Guestrin, C. ” why should i trust you?” explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining (2016), pp. 1135–1144.

- (29) Scornet, E. Trees, forests, and impurity-based variable importance, 2020.

- (30) Strobl, C., Boulesteix, A.-L., Zeileis, A., and Hothorn, T. Bias in random forest variable importance measures: Illustrations, sources and a solution. BMC bioinformatics 8, 1 (2007), 25.

- (31) Tibshirani, R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological) 58, 1 (1996), 267–288.

- (32) West, M. M., Blanchette, C. C., Dressman, H. H., Huang, E. E., Ishida, S. S., Spang, R. R., Zuzan, H. H., Olson, J. A. J., Marks, J. R. J., and Nevins, J. R. J. Predicting the clinical status of human breast cancer by using gene expression profiles. Proceedings of the National Academy of Sciences of the United States of America 98, 20 (Sept. 2001), 11462–11467.

- (33) Xu, H., Caramanis, C., and Mannor, S. Sparse algorithms are not stable: A no-free-lunch theorem. IEEE transactions on pattern analysis and machine intelligence 34, 1 (2011), 187–193.

- (34) Zhou, Z., and Hooker, G. Unbiased measurement of feature importance in tree-based methods. arXiv preprint arXiv:1903.05179 (2019).

- (35) Zhou, Z., Hooker, G., and Wang, F. S-lime: Stabilized-lime for model explanation. In Proceedings of the 27th ACM SIGKDD international conference on knowledge discovery and data mining (2021).

- (36) Zou, H., and Hastie, T. Regularization and variable selection via the elastic net. Journal of the royal statistical society: series B (statistical methodology) 67, 2 (2005), 301–320.

Appendix A Benchmark Datasets

| dataset | Rows | Columns |

|---|---|---|

| analcatdata_cyyoung8092 | 97 | 10 |

| australian | 690 | 14 |

| biomed | 209 | 8 |

| breast_cancer_wisconsin | 569 | 30 |

| churn | 5000 | 20 |

| cleve | 303 | 13 |

| colic | 368 | 22 |

| diabetes | 768 | 8 |

| glass2 | 163 | 9 |

| Hill_Valley_without_noise | 1212 | 100 |

| horse_colic | 368 | 22 |

| ionosphere | 351 | 34 |

| ring | 7400 | 20 |

| spambase | 4601 | 57 |

| tokyo1 | 959 | 44 |