Convergence of a Human-in-the-Loop Policy-Gradient Algorithm With Eligibility Trace Under Reward, Policy, and Advantage Feedback

Abstract

Fluid human–agent communication is essential for the future of human-in-the-loop reinforcement learning. An agent must respond appropriately to feedback from its human trainer even before they have significant experience working together. Therefore, it is important that learning agents respond well to various feedback schemes human trainers are likely to provide. This work analyzes the COnvergent Actor–Critic by Humans (COACH) algorithm under three different types of feedback: policy feedback, reward feedback, and advantage feedback. For these three feedback types, we find that COACH can behave sub-optimally. We propose a variant of COACH, episodic COACH (E-COACH), which we prove converges for all three types. We compare our COACH variant with two other reinforcement-learning algorithms: Q-learning and TAMER.

1 Introduction

We study the algorithm COACH (MacGlashan et al., 2017a), designed to learn from evaluative feedback. We would like for the algorithm to find an optimal policy under different feedback schemes, since a human trainer is apt to select from several possible approaches and we do not know which will be chosen a priori.

We present a proof of convergence for three natural feedback schemes. 1) Feedback can take the form of an economic incentive in which the learner gets an immediate reward for moving into a state based on the state’s desirability—one-step reward. 2) Feedback can be a binary signal that tells the learner whether the action it took was correct (1) or not (0) with respect to the trainer’s intended policy. And, 3) feedback can reveal how good an action was relative to the agent’s recent behavior—the action’s advantage. It is desirable for a learning algorithm to perform appropriately in all three of these settings.
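As a rough illustration of the three schemes, the sketch below writes each one as a small Python function. The function names, the dictionary-based representation, and the choice to key the reward by (state, action) are assumptions made for this example only.

```python
from typing import Dict, Hashable, Tuple

State = Hashable
Action = Hashable


def reward_feedback(R: Dict[Tuple[State, Action], float], s: State, a: Action) -> float:
    """One-step reward: the trainer reports the task reward for (s, a)."""
    return R[(s, a)]


def policy_feedback(target_policy: Dict[State, Action], s: State, a: Action) -> float:
    """Binary signal: 1 if the action matches the trainer's target policy, else 0."""
    return 1.0 if target_policy[s] == a else 0.0


def advantage_feedback(Q: Dict[Tuple[State, Action], float],
                       V: Dict[State, float], s: State, a: Action) -> float:
    """Advantage of a under the agent's current policy: Q(s, a) - V(s)."""
    return Q[(s, a)] - V[s]
```

Only the advantage signal depends on the agent's current policy (through `Q` and `V`); the other two are fixed functions of the state and action.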

E-COACH (Algorithm 1) is such a learning algorithm. It takes as input a policy $\pi_{\theta_0}$, a discount factor $\gamma$, and a learning rate $\alpha$.

E-COACH (Episodic COACH) is a close variant of the original COACH with a few differences. 1) It explicitly keeps track of the number of steps elapsed in the current episode. 2) Most notably, E-COACH uses a decay factor, which emphasizes information from temporally closer decisions over more distant ones. 3) In addition, E-COACH does not use a parameter $\lambda$ to decay the eligibility trace $e$. This makes E-COACH’s treatment of eligibility traces like setting $\lambda = 1$ in the original COACH algorithm.

We propose E-COACH instead of COACH because COACH does not take advantage of the discount factor, $\gamma$. As a result, COACH incorrectly estimates the expected reward and performs poorly in the given environment. We provide an example of such a scenario in Section 6.1. In contrast to COACH, we show that E-COACH converges under all three feedback schemes described above.

2 Background

A Markov Decision Process (MDP) is a five-tuple: $\langle S, A, T, R, \gamma \rangle$. Here, $S$ is a set of reachable states, $A$ is the set of actions an agent might use, $T(s' \mid s, a)$ is the probability that the agent moves to state $s'$ from the given state $s$ having taken action $a$, $R(s, a)$ is the reward obtained for taking action $a$ from state $s$, and $\gamma$ is a discount factor indicating the importance of immediate rewards as opposed to rewards received in the distant future.

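A stochastic policy $\pi_\theta$, where $\pi_\theta : S \times A \to [0, 1]$, defines an agent’s behavior via $\pi_\theta(s, a) = \Pr(a_t = a \mid s_t = s)$. Note that $\theta$ is a vector parameter of the policy, and we assume that $\pi_\theta$ is differentiable with respect to this parameter. For brevity, we will denote $\pi_\theta$ as $\pi$ when the parameter vector is clear from context.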

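The value functions $V^\pi$ and $Q^\pi$ measure the performance of policy $\pi$:

$$V^\pi(s) = \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^t r_t \,\middle|\, s_0 = s\right]$$

and

$$Q^\pi(s, a) = \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^t r_t \,\middle|\, s_0 = s,\ a_0 = a\right],$$

where $r_t$ is the reward received at time step $t$.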

When an agent’s policy , then we call that policy optimal. We will denote optimal policies as and use the shorthand . Also, for sake of brevity, we will write simply as from now on. Note that all expectations we consider are conditioned on the policy. If not specified otherwise , is an expectation over where and .

3 E-COACH Under Reward Feedback

A simple form of feedback a trainer may choose to give a learner is the one-step reward obtained from the MDP for the action the agent just took. Such reward feedback is convenient since it is myopic and does not require the trainer to consider future rewards. It assumes a direct analogy between the rewards that define the task and the feedback provided by the trainer; it is the simplest extension of standard reinforcement learning to the interactive setting. For the following theoretical results to hold, we assume the human trainer gives consistent reward, as per the definition of our feedback, $f_t$. Here $f_t$ represents our feedback, which we will redefine in Sections 3, 4.2, and 5.

We look at an MDP $M = \langle S, A, T, R, \gamma \rangle$. Under reward feedback, when an agent takes an action $a_t$ in state $s_t$, the trainer gives feedback $f_t = R(s_t, a_t)$.

Theorem 3: E-COACH converges under reward feedback $f_t = R(s_t, a_t)$.

Proof: Consider the sequence of updates on and at each time step :

where, for brevity, we define .

To better understand what the updates mean, consider some terminal time . The value may refer to the time at which an agent reaches the goal or a pre-decided time at which the trainer stops the agent. We use only for the purpose of elucidation and the analysis below also extends to the infinite horizon case when is unbounded. We ignore the in the update above for sake of clarity. By linearity of the updates, it is trivial to incorporate into the calculations below.

Rearranging the order of summation

Taking expectation

3.1 E-COACH Objective Function

In this section we show that the gradient of the objective function is what REINFORCE performs gradient ascent on. Note that this quantity is what E-COACH is estimating (via parameter) and performing gradient ascent on.

Consider the REINFORCE algorithm (Algorithm 2). Here, is a Monte Carlo estimate of . Hence, at any terminal time , it is clear that the expected value of obtained by REINFORCE is equal to that of E-COACH.
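The agreement between the two algorithms can be checked numerically on a single trajectory. The sketch below assumes a particular form for the updates, since it is not taken from Algorithms 1 and 2: the trace accumulates the log-policy gradients, each E-COACH step adds `gamma**t * f_t * trace`, and REINFORCE adds `gamma**k * G_k * g_k` with `G_k` the discounted return from step `k`. The learning rate is omitted, and the gradients and feedback are random placeholders.

```python
import random


def ecoach_update(grads, feedback, gamma):
    """Trace-based accumulation: trace += g_t, then delta += gamma**t * f_t * trace."""
    dim = len(grads[0])
    trace = [0.0] * dim
    delta = [0.0] * dim
    for t, (g, f) in enumerate(zip(grads, feedback)):
        trace = [e + gi for e, gi in zip(trace, g)]
        delta = [d + (gamma ** t) * f * e for d, e in zip(delta, trace)]
    return delta


def reinforce_update(grads, feedback, gamma):
    """Return-based accumulation: delta += gamma**k * G_k * g_k for every step k."""
    dim = len(grads[0])
    delta = [0.0] * dim
    G = 0.0
    for k in reversed(range(len(grads))):
        G = feedback[k] + gamma * G  # discounted return from step k onward
        delta = [d + (gamma ** k) * G * gi for d, gi in zip(delta, grads[k])]
    return delta


rng = random.Random(0)
grads = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(6)]
feedback = [rng.uniform(-1, 1) for _ in range(6)]
ecoach_delta = ecoach_update(grads, feedback, 0.9)
reinforce_delta = reinforce_update(grads, feedback, 0.9)
```

Under these assumptions the two results coincide up to floating-point error, because swapping the order of the double sum over steps turns one expression into the other.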

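Consider the unnormalised state visitation distribution $d^\pi$, such that $d^\pi(s) = \sum_{t=0}^{\infty} \gamma^t \Pr(s_t = s \mid s_0, \pi)$, where $\Pr(s_t = s \mid s_0, \pi)$ denotes the probability of arriving in state $s$ at time $t$ following policy $\pi$. The objective to maximize, as described in the Policy Gradient Theorem (Sutton et al., 2000), is

$$\rho(\pi) = V^\pi(s_0)$$

and its gradient is

$$\nabla_\theta \rho(\pi) = \sum_{s} d^\pi(s) \sum_{a} \nabla_\theta \pi(s, a)\, Q^\pi(s, a).$$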

The gradient can be rewritten as .

Expanding with respect to yields

which is exactly what E-COACH and REINFORCE are estimating.
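To make the unnormalised state visitation distribution concrete, the sketch below computes it for a small Markov chain. It reads the distribution as the discounted sum over time of the probability of occupying each state, which is an assumption about the omitted formula. The transition matrix `P` (the chain induced by some fixed policy) and the function name are hypothetical.

```python
def discounted_visitation(P, start, gamma, horizon=2000):
    """d(s) = sum_t gamma**t * Pr(s_t = s | s_0 = start), truncated at `horizon`."""
    n = len(P)
    p = [0.0] * n          # distribution over states at the current time step
    p[start] = 1.0
    d = [0.0] * n
    weight = 1.0           # gamma**t
    for _ in range(horizon):
        d = [di + weight * pi for di, pi in zip(d, p)]
        p = [sum(p[i] * P[i][j] for i in range(n)) for j in range(n)]
        weight *= gamma
    return d


# Two states: state 0 moves to the absorbing state 1 with probability 0.5 per step.
P = [[0.5, 0.5],
     [0.0, 1.0]]
d = discounted_visitation(P, start=0, gamma=0.9)
```

The entries of `d` sum to roughly `1 / (1 - gamma)` rather than to 1, which is why the distribution is called unnormalised. States reached later in an episode receive geometrically smaller weight.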

Since E-COACH is performing gradient ascent on the policy gradient objective, we can use results from Agarwal et al. (2020) to say that E-COACH converges to local optima or saddle points. Moreover, recent work has shown that policy gradient methods can escape saddle points under mild assumptions on the rewards and minor modifications to existing algorithms (Zhang et al., 2020; Jin et al., 2017).

Note, however, that behavioral evidence indicates that one-step reward is not a typical choice of human trainers (Ho et al., 2019).

4 E-COACH Under Policy Feedback

To argue that E-COACH converges under policy feedback, we first consider a more general form of feedback and then show policy feedback is a special case.

4.1 E-COACH with a More General Type of Feedback

Let us start by considering two similar MDPs and . Note the differing reward functions and in the two MDPs.

We will denote the value functions for and as and , respectively. We will say that the starting state for both of our MDPs is . Define , .

The theorem below relies on the following assumptions:

- 1.

-

2.

We also assume that for the case where the MDP has an infinite horizon, which we will justify later on.

Theorem 4.1 requires the condition that all optimal policies for are also optimal for . We will later show that this condition holds true for the case of policy feedback in theorem 4.2, allowing us to leverage these results.

Theorem 4.1: If all optimal policies for are also optimal for (optimal policies of are a subset of those for ), then running E-COACH on will result in a policy that is close to an optimal policy on , which will also be close to an optimal policy for . Let’s define . Then we find that,

Proof: We have to show that running E-COACH in will yield a policy that is not too far off from an optimal policy for . We would like to run E-COACH on , using the alternate form of feedback as the reward function, and for any good policy (as per assumption 1) we get from E-COACH on , we would like for that policy to also be good on , the original MDP we are trying to solve.

The lower-bound in the theorem statement is immediate.

For the upper-bound, let’s let denote a policy that follows/simulates for the first time-steps and for the rest. Hence, on the time-step, will follow/simulate and not . Let denote the value of policy . Therefore, we can say that and . Remember that is optimal on and by the condition above, and thus has value .

We’ll start by considering . Both and accumulate the same expected reward for the first steps and so these rewards cancel out. Note that the we use below is the same as that defined in section 3.1. We find the following:

Now we’ll use the above fact when considering .

As , we have that .

Note that our theorem says something different than the Simulation Lemma (Kearns & Singh, 1998) as we make no assumptions about how close the reward functions of and are. Instead our theorem requires optimal policies in be optimal in and bounds the return of a policy learnt by E-COACH in .

4.2 E-COACH Under Policy Feedback

Let be an MDP without any specific reward function. Under policy feedback, a trainer has a target stationary deterministic policy in mind and delivers feedback based on whether the agent’s decision agrees with . When an agent takes an action in state , the trainer will give feedback

with defined as,

Theorem 4.2: E-COACH converges under feedback .

Proof: Consider the case of replacing the reward function with in MDP , constructing a new MDP . We would like to show that, in this setting, the E-COACH algorithm converges to the optimal solution. and satisfy the prerequisites for theorem 4.1.

Consider the optimal policy for . The best policy will select the best action in every state. We have that . The optimal policy for will achieve this value function because, if not, then we have a policy such that , where and for some . Take the smallest value such that , then so then the policy achieving is sub-optimal. We can conclude that is the value function for the optimal policy. Therefore, the policy that always chooses the action that gives a value of one is optimal. Also, note that always choosing the action that results in a feedback of one corresponds exactly to the decision of by construction of . So, we obtain that . In other words, an optimal policy in the new domain is equivalent to the target policy from the original one.

We can leverage Theorem 4.1 to show that the algorithm converges under policy feedback.

5 E-COACH Under Advantage Feedback

COACH (MacGlashan et al., 2017a) was originally motivated by the observation that human feedback is policy dependent: if a decision improves over the agent’s recent decisions, trainers provide positive feedback. If it is worse, the trainer is more likely to provide negative feedback. As such, feedback is well modeled by the advantage function of the agent’s current policy.
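A small numerical sketch can illustrate how the advantage function compares an action against the agent's current behavior. The two-state MDP, the uniform policy, and all names below are hypothetical. The advantage is taken as $Q^\pi(s, a) - V^\pi(s)$, computed by iterative policy evaluation.

```python
def evaluate(policy, T, R, gamma, sweeps=2000):
    """Iterative policy evaluation for an MDP with deterministic transitions."""
    V = {s: 0.0 for s in policy}
    for _ in range(sweeps):
        V = {s: sum(p * (R[(s, a)] + gamma * V[T[(s, a)]])
                    for a, p in policy[s].items())
             for s in policy}
    Q = {(s, a): R[(s, a)] + gamma * V[T[(s, a)]] for (s, a) in R}
    return V, Q


# Hypothetical two-state, two-action MDP.
T = {("x", "stay"): "x", ("x", "go"): "y", ("y", "stay"): "y", ("y", "go"): "x"}
R = {("x", "stay"): 0.0, ("x", "go"): 1.0, ("y", "stay"): 2.0, ("y", "go"): 0.0}
policy = {"x": {"stay": 0.5, "go": 0.5}, "y": {"stay": 0.5, "go": 0.5}}

V, Q = evaluate(policy, T, R, gamma=0.9)
A = {(s, a): Q[(s, a)] - V[s] for (s, a) in Q}
```

In state `x`, the action `go` has positive advantage and `stay` has negative advantage, matching the intuition of positive feedback for better-than-usual decisions. Averaged over the policy's own action probabilities, the advantage in each state is zero.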

In advantage feedback, when an agent takes action in state , the trainer will give feedback

with defined as,

Theorem 5: E-COACH converges under feedback .

Proof: Since , we have that

By the equation above we can say the following

| (1) |

We also know that

| (2) |

Using the same approach as in theorem 3, we look at the sequence of updates made to the policy parameter until some terminal time .

Rearranging the order of summation

Therefore, taking the expectation

Using equations 1 and 2, we can say that

Using the fact that (Thomas & Brunskill, 2017)

Thus, using the argument in Section 3.1, we can state that E-COACH converges under advantage feedback.

6 Original COACH

This section assesses the convergence of the original COACH algorithm (MacGlashan et al., 2017a) under the three different types of feedback defined in this paper. Recall the main differences between E-COACH and COACH:

1. COACH makes use of an eligibility decay factor $\lambda$.

2. COACH does not discount the feedback by the discount factor $\gamma$ as part of the algorithm.

Note that $\lambda$ and $\gamma$ are not interchangeable: $\lambda$ can only be used to discount stored gradients and thereby discount future rewards. On the other hand, $\gamma$ is used both to discount future rewards and to estimate the unnormalised state visitation distribution described in Section 3.1.

As a result, the original COACH is incapable of estimating the state visitation distribution. Hence, the updates made at and would be weighted equally by COACH.

This property goes against what policy-gradient algorithms would do. By not using $\gamma$, COACH is effectively drawing from a state visitation distribution different from the one that is part of the objective function described in Section 3.1. As a result, the updates made by COACH do not estimate the gradient of that objective. Although COACH may learn to do reasonably well, we cannot say that it will behave optimally.

6.1 COACH Under One-Step Reward

The algorithm will converge, but the policy it converges to will be suboptimal for the MDP because COACH does not estimate the gradient of the policy-gradient objective. Because COACH does not incorporate the discount factor, it behaves as if the domain has $\gamma = 1$, even when that is not the case. If the domain has $\gamma < 1$, the learned policy will still take a long-term view because COACH ignores this discount factor. Ignoring discounts can lead to suboptimal behavior.

Consider the five-state domain in Figure 2. It shows how the optimal decision in a state can change with the discount factor.
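The dependence of the optimal decision on the discount factor can also be seen in a much smaller, hypothetical example. This is not the domain of Figure 2. From the start state, action `near` earns a small reward immediately, while action `far` earns a larger reward only after a delay. All names and numbers below are assumptions chosen for illustration.

```python
def best_first_action(gamma, near_reward=1.0, far_reward=5.0, delay=3):
    """Compare the discounted value of an immediate reward and a delayed one."""
    values = {
        "near": near_reward,                    # received at step 0
        "far": (gamma ** delay) * far_reward,   # received after `delay` steps
    }
    return max(values, key=values.get), values


patient_choice, patient_values = best_first_action(gamma=0.9)
myopic_choice, myopic_values = best_first_action(gamma=0.5)
```

With `gamma = 0.9` the delayed reward is worth about 3.645 and `far` is preferred. With `gamma = 0.5` it is worth 0.625 and `near` is preferred. A learner that ignores the discount factor would choose `far` in both cases.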

6.2 Policy Feedback

COACH will converge, but to a poorly performing policy for the same reason given in Section 6.1.

6.3 Advantage Feedback

COACH should converge under this feedback type as per the argument given by MacGlashan et al. (2017a).

7 Comparison With Other Algorithms

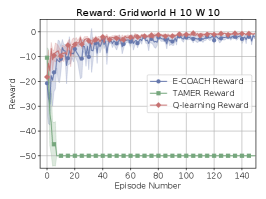

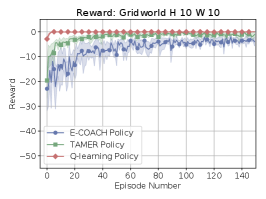

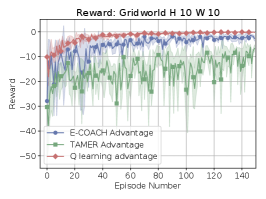

We now know that E-COACH converges under several types of feedback. The three highlighted in this paper are Policy, Advantage, and Reward feedback. In this section, we compare E-COACH to TAMER (Knox & Stone, 2008) and Q-learning under these three types of feedback.

7.1 TAMER

TAMER expects the human trainer to take each action’s long-term implications into account when providing feedback. TAMER learns the trainer’s feedback function, then returns the policy that maximizes one-step feedback in each state.

Algorithm 3 gives pseudocode for TAMER; see Knox & Stone (2008) for more details. The pseudocode tracks the time step, the weights used for the reward model, and state feature vectors. It takes a learning rate as input.

Note that there are several different versions of TAMER. The one we are analyzing is the original by Knox & Stone (2008).
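The idea of learning the trainer's feedback function and acting greedily on it can be sketched in a simplified tabular form. This is not the algorithm of Knox & Stone (2008), which fits a model over state features. The class name, the table `H`, and the incremental update rule are assumptions made for illustration.

```python
from collections import defaultdict


class TabularFeedbackModel:
    """Incremental estimate of trainer feedback for each (state, action) pair."""

    def __init__(self, actions, alpha=0.5):
        self.actions = list(actions)
        self.alpha = alpha            # step size for the feedback estimate
        self.H = defaultdict(float)   # predicted feedback, initially 0

    def update(self, s, a, feedback):
        # Move the estimate toward the observed feedback.
        self.H[(s, a)] += self.alpha * (feedback - self.H[(s, a)])

    def act(self, s):
        # Greedy with respect to predicted one-step feedback; no lookahead.
        return max(self.actions, key=lambda a: self.H[(s, a)])


model = TabularFeedbackModel(["left", "right"])
model.update("s0", "left", 1.0)
model.update("s0", "right", 0.0)
chosen = model.act("s0")
```

Because `act` maximizes the predicted feedback for the current step only, any long-term reasoning has to be carried by the feedback itself.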

7.1.1 Reward Feedback

Because TAMER will maximize over the learned function, it will result in a bad policy for this form of feedback. TAMER does not take future rewards into account and instead will greedily maximize for immediate reward. TAMER assumes the trainer has taken future rewards into account already. See figure 1.

7.1.2 Policy Feedback

TAMER expects policy feedback and chooses correct actions assuming sufficient exploration. See figure 1.

7.1.3 Advantage Feedback

It is not known precisely how TAMER responds to advantage feedback. Knox & Stone (2008) claim that TAMER should work under moving feedback. That is, TAMER should behave properly even when feedback changes over time because the algorithm expects the human trainer to be inconsistent and continues to update its choices even in the face of changes. The advantage function assigns different values to actions as the policy is updated, so at different times it gets different values. Assuming TAMER is able to learn this moving function, then a greedy one-step policy should be optimal because the maximal value of the advantage function is always the optimal action for the given state. See figure 1.

7.2 Q-learning

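Q-learning (Watkins, 1989) is an algorithm that expects feedback in the form of immediate reward and calculates long-term value from these signals. Specifically, $Q(s, a)$ is its estimate of long-term value and, when it is informed of a transition from $s$ to $s'$ via action $a$ and feedback $f$, it makes the update:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left( f + \gamma \max_{a'} Q(s', a') - Q(s, a) \right),$$

where $\alpha$ is a learning rate.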
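In tabular form, the standard Q-learning step can be sketched as follows, with the trainer's feedback `f` playing the role of the reward. The function name, the default step size and discount, and the state and action labels are placeholders for this example.

```python
from collections import defaultdict


def q_update(Q, s, a, f, s_next, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step, treating trainer feedback f as the reward."""
    target = f + gamma * max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += alpha * (target - Q[(s, a)])
    return Q[(s, a)]


Q = defaultdict(float)  # all estimates start at 0
new_value = q_update(Q, "s0", "right", 1.0, "s1", ["left", "right"])
```

Starting from an all-zero table, a single update with feedback 1 moves the estimate for `("s0", "right")` a fraction `alpha` of the way toward the target.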

7.2.1 Reward Feedback

Q-learning is typically defined to expect the feedback to be the expected one-step reward $R(s, a)$ or a value whose expectation is $R(s, a)$. It has been proven to converge to optimal behavior under this type of feedback (Watkins & Dayan, 1992; Littman & Szepesvári, 1996; Singh et al., 2000; Melo, 2001). See figure 1.

7.2.2 Policy Feedback

Given policy feedback, Q-learning will optimize the expected sum of future “rewards”, which, in this case, are indicators of whether the agent’s selected action matches the trainer’s target policy.

Policy feedback depends on only the previous state and action, and, as such, Q-learning can treat this feedback as a reward function and converge on the behavior that optimizes the sum of these feedbacks.

Interestingly, the policy that optimizes the sum of policy feedbacks is exactly the target policy. This observation follows from the fact that matching the trainer’s target policy at every step results in a value of $\sum_{t=0}^{\infty} \gamma^t = 1/(1-\gamma)$. On the other hand, selecting even a single action that does not match the trainer’s target policy results in the removal of one of these terms and therefore lower value. Under policy feedback, Q-learning thus converges to the policy that matches the trainer’s target policy. See figure 1.
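The effect of a single mismatch can be checked with a short calculation. The sketch assumes that each matching action earns feedback 1, each mismatch earns 0, values are discounted sums of feedback, and the horizon is long but finite. The helper name and the numbers are illustrative.

```python
def discounted_sum(feedbacks, gamma):
    """Sum of gamma**t * f_t over a finite feedback sequence."""
    return sum((gamma ** t) * f for t, f in enumerate(feedbacks))


gamma, horizon = 0.9, 500
always_match = [1.0] * horizon
one_miss = [1.0] * horizon
one_miss[3] = 0.0  # a single action that disagrees with the target policy

v_match = discounted_sum(always_match, gamma)
v_miss = discounted_sum(one_miss, gamma)
```

Here `v_match` is close to `1 / (1 - gamma) = 10`, and the single mismatch at step 3 lowers the value by `gamma**3 = 0.729`, so any deviation from the target policy is strictly worse.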

7.2.3 Advantage Feedback

Q-learning is not designed to work with advantage feedback because the advantage function is policy dependent and can cause its reward signals to change as it updates its value. Nevertheless, advantage feedback does provide a signal for how values should change and, empirically, we often see Q-learning handling advantage feedback well. The analytical challenge is that the changes in the policy influence the reward and the changes in the reward influence the policy, so these two functions need to converge together for Q-learning to handle advantage feedback successfully.

We conjecture that careful annealing of Q-learning’s learning rate could provide a mechanism for stabilizing these two different adaptive processes. Resolving this question is a topic for future work. We believe the work done by Konda & Borkar (1999) could provide greater insight. See figure 1, where Q-learning appears to converge for a simple GridWorld domain.

8 Conclusion

In this paper, we analyzed the convergence of COnvergent Actor-Critic by Humans (COACH; MacGlashan et al., 2017a) under three types of feedback: one-step reward, policy, and advantage feedback. These are all examples of feedback a human trainer might give.

We defined a COACH variant called E-COACH and demonstrated its convergence under these types of feedback. Original COACH, unfortunately, does not necessarily converge to an optimal policy under the feedback types defined in this paper. In addition, we compared the new E-COACH with two algorithms: Q-learning and TAMER. TAMER does poorly under one-step-reward feedback. Q-learning appears to converge to optimal behavior under one-step-reward and policy feedback, but future work is required to determine its performance under advantage feedback.

References

- Abel (2019) Abel, D. simple_rl: Reproducible reinforcement learning in python. In ICLR Workshop on Reproducibility in Machine Learning, 2019.

- Agarwal et al. (2020) Agarwal, A., Kakade, S. M., Lee, J. D., and Mahajan, G. On the theory of policy gradient methods: Optimality, approximation, and distribution shift. 2020. arXiv:1908.00251v5.

- Ho et al. (2019) Ho, M. K., Cushman, F., Littman, M. L., and Austerweil, J. L. People teach with rewards and punishments as communication, not reinforcements. Journal of Experimental Psychology: General, pp. 520–549, 2019.

- Jin et al. (2017) Jin, C., Ge, R., Netrapalli, P., Kakade, S. M., and Jordan, M. I. How to escape saddle points efficiently. 2017. arXiv:1703.00887v1.

- Kearns & Singh (1998) Kearns, M. and Singh, S. Near-optimal reinforcement learning in polynomial time. pp. 12–16, 1998.

- Knox & Stone (2008) Knox, W. B. and Stone, P. TAMER: Training an agent manually via evaluative reinforcement. In 2008 7th IEEE International Conference on Development and Learning, pp. 292–297. IEEE, 2008.

- Konda & Borkar (1999) Konda, V. R. and Borkar, V. S. Actor-critic–type learning algorithms for Markov decision processes. SIAM Journal on Control and Optimization, 1999.

- Littman & Szepesvári (1996) Littman, M. L. and Szepesvári, C. A generalized reinforcement-learning model: Convergence and applications. In Saitta, L. (ed.), Proceedings of the Thirteenth International Conference on Machine Learning, pp. 310–318, 1996.

- MacGlashan et al. (2017a) MacGlashan, J., Ho, M. K., Loftin, R., Peng, B., Wang, G., Roberts, D. L., Taylor, M. E., and Littman, M. L. Interactive learning from policy-dependent human feedback. In Proceedings of the Thirty-Fourth International Conference on Machine Learning, 2017a.

- MacGlashan et al. (2017b) MacGlashan, J., Ho, M. K., Loftin, R., Peng, B., Wang, G., Roberts, D. L., Taylor, M. E., and Littman, M. L. Interactive learning from policy-dependent human feedback. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pp. 2285–2294. JMLR.org, 2017b.

- Melo (2001) Melo, F. S. Convergence of Q-learning: A simple proof. Institute Of Systems and Robotics, Tech. Rep, pp. 1–4, 2001.

- Singh et al. (2000) Singh, S., Jaakkola, T., Littman, M. L., and Szepesvári, C. Convergence results for single-step on-policy reinforcement-learning algorithms. Machine Learning, 39:287–308, 2000.

- Sutton et al. (2000) Sutton, R. S., McAllester, D., Singh, S., and Mansour, Y. Policy gradient methods for reinforcement learning with function approximation. In Neural Information Processing Systems, pp. 1057–1063, 2000.

- Thomas & Brunskill (2017) Thomas, P. S. and Brunskill, E. Policy gradient methods for reinforcement learning with function approximation and action-dependent baselines. arXiv:1706.06643v1, 2017.

- Watkins (1989) Watkins, C. J. C. H. Learning from Delayed Rewards. PhD thesis, King’s College, Cambridge, UK, 1989.

- Watkins & Dayan (1992) Watkins, C. J. C. H. and Dayan, P. Q-learning. Machine Learning, 8(3):279–292, 1992.

- Zhang et al. (2020) Zhang, K., Koppel, A., Zhu, H., and Basar, T. Global convergence of policy gradient methods to (almost) locally optimal policies. 2020. arXiv:1906.08383v3.