1 Introduction

Forward-backward stochastic differential equations (FBSDEs) and partial differential equations (PDEs) of parabolic type are ubiquitous modeling tools with numerous applications in stochastic control, finance, physics, and other fields. In most situations encountered in practice, the equations cannot be solved analytically and require numerical algorithms to provide approximate solutions. On the one hand, the dominant numerical algorithms for PDEs are mesh-based methods, such as finite differences and finite elements. On the other hand, FBSDEs can be tackled directly through probabilistic means, with appropriate methods for approximating conditional expectations. Since these two kinds of equations are intimately connected through the nonlinear Feynman–Kac formula [1], the algorithms designed for one kind of equation can often be used to solve the other.

However, the aforementioned numerical algorithms become more and more difficult, if not impossible, when the dimension increases.

They are doomed to run into the so-called “curse of dimensionality” [2] when the dimension is high, namely, the computational complexity grows exponentially as the dimension grows.

The classical mesh-based algorithms for PDEs require a mesh of size $O(N^d)$, where $N$ is the number of grid points per coordinate direction and $d$ is the dimension.

The simulation of FBSDEs faces a similar difficulty in the general nonlinear cases, due to the need to compute conditional expectation in high dimension.

The conventional methods, including the least squares regression [3], Malliavin approach [4], and kernel regression [5], are all of exponential complexity. There are a limited number of cases where practical high-dimensional algorithms are available. For example, in the linear case, Feynman–Kac formula and Monte Carlo simulation together provide an efficient approach to solving PDEs and associated BSDEs numerically.
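The linear case mentioned above can be illustrated with a minimal sketch. It assumes the simplest linear problem, the heat equation $u_t + \frac{1}{2}\Delta u = 0$ with terminal condition $u(T,x) = g(x)$, for which the Feynman–Kac formula gives $u(0,x_0) = E[g(x_0 + W_T)]$. The function name `feynman_kac_mc` and the test function $g(x) = |x|^2$ (with exact value $|x_0|^2 + dT$) are choices made here for illustration only.

```python
import numpy as np

def feynman_kac_mc(g, x0, T, n_samples, rng):
    """Monte Carlo estimate of u(0, x0) = E[g(x0 + W_T)], where u solves
    u_t + 0.5 * Laplacian(u) = 0 with terminal condition u(T, x) = g(x)."""
    d = x0.shape[0]
    # W_T is Gaussian with mean 0 and covariance T * I, so no time stepping is needed.
    w_T = np.sqrt(T) * rng.standard_normal((n_samples, d))
    values = g(x0 + w_T)
    estimate = values.mean()
    std_error = values.std(ddof=1) / np.sqrt(n_samples)
    return estimate, std_error

rng = np.random.default_rng(0)
d, T = 100, 1.0                      # a 100-dimensional problem
x0 = np.ones(d)
g = lambda x: np.sum(x**2, axis=1)   # illustrative terminal condition
estimate, std_error = feynman_kac_mc(g, x0, T, 20_000, rng)
exact = np.sum(x0**2) + d * T        # closed form for this particular g
print(estimate, exact, std_error)
```

The cost is linear in the dimension and in the number of samples, and the statistical error decays like the inverse square root of the sample size, which is why no mesh in $x$ is needed here.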

In addition, methods based on the branching diffusion process [6, 7] and multilevel Picard iteration [8, 9, 10] overcome the curse of dimensionality in their considered settings.

We refer to [9] for a detailed discussion of the complexity of the algorithms mentioned above.

Overall, no numerical algorithm in the literature has so far been proved to overcome the curse of dimensionality for general quasilinear parabolic PDEs and the corresponding FBSDEs.

A recently developed algorithm, called the deep BSDE method [11, 12], has shown astonishing power in solving general high-dimensional FBSDEs and parabolic PDEs [13, 14, 15]. In contrast to conventional methods, the deep BSDE method employs neural networks to approximate unknown gradients and reformulates the original equation-solving problem as a stochastic optimization problem. Thanks to the universal approximation capability and parsimonious parameterization of neural networks, in practice the objective function can be effectively optimized in high-dimensional cases, and the function values of interest are obtained quite accurately.

The deep BSDE method was initially proposed for decoupled FBSDEs.

In this paper, we extend the method to deal with coupled FBSDEs and a broader class of quasilinear parabolic PDEs. Furthermore, we present an error analysis of the proposed scheme, including decoupled FBSDEs as a special case.

Our theoretical result consists of two theorems. Theorem 1 provides a posteriori error estimation of the deep BSDE method. As long as the objective function is optimized to be close to zero under fine time discretization, the approximate solution is close to the true solution. In other words, in practice, the accuracy of the numerical solution is effectively indicated by the value of the objective function.

Theorem 2 shows that such a situation is attainable, by relating the infimum of the objective function to the expressive power of neural networks. As an implication of the universal approximation property (in the $L^2$ sense), there exist neural networks with suitable parameters such that the obtained numerical solution is approximately accurate.

To the best of our knowledge, this is the first theoretical result for the deep BSDE method for solving FBSDEs and parabolic PDEs.

Although our numerical algorithm is based on neural networks, the theoretical result provided here is equally applicable to the algorithms based on other forms of function approximations.

The article is organized as follows. In section 2, we precisely state our numerical scheme for coupled FBSDEs and quasilinear parabolic PDEs and give the main theoretical results of the proposed numerical scheme.

In section 3, the basic assumptions and some useful results from the literature are given for later use. The proofs of the two main theorems are provided in section 4 and section 5, respectively.

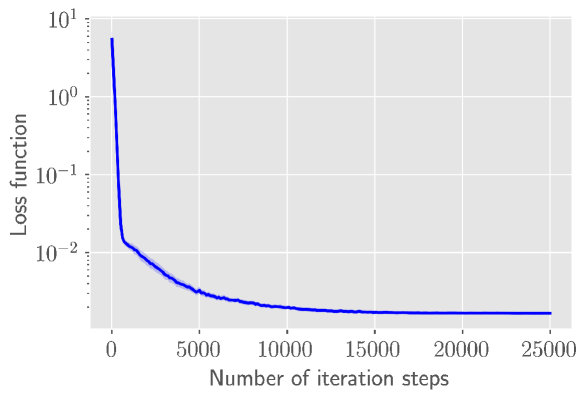

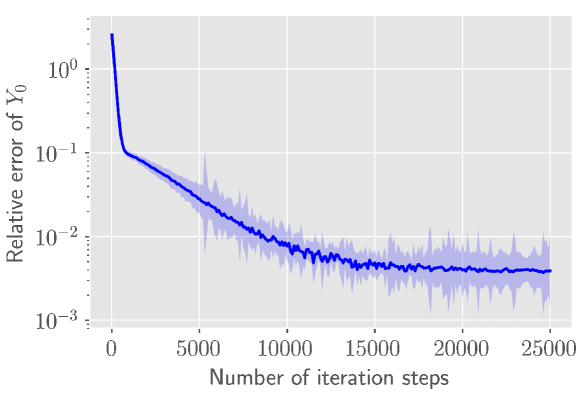

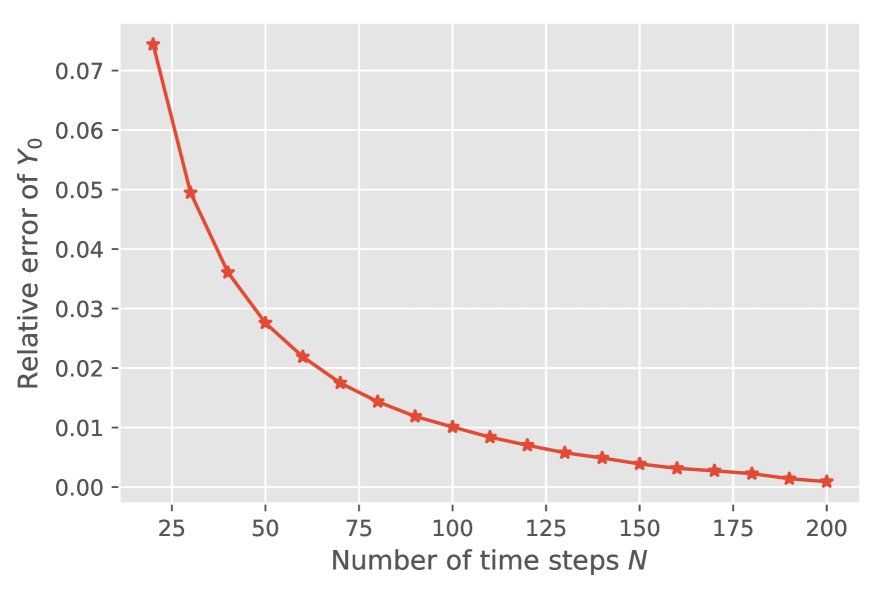

Some numerical experiments with the proposed scheme are presented in section 6.

2 A Numerical Scheme for Coupled FBSDEs and Main Results

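Let $T \in (0, +\infty)$ be the terminal time and let $(\Omega, \mathbb{F}, \{\mathcal{F}_t\}_{0 \le t \le T}, \mathbb{P})$ be a filtered probability space equipped with a $d$-dimensional standard Brownian motion $\{W_t\}_{0 \le t \le T}$ starting from $0$. Let $\xi$ be a square-integrable random variable independent of $\{W_t\}_{0 \le t \le T}$. We use the same notation $(\Omega, \mathbb{F}, \{\mathcal{F}_t\}_{0 \le t \le T}, \mathbb{P})$ to denote the filtered probability space generated by $\{W_t + \xi\}_{0 \le t \le T}$.

The notation $|x|$ denotes the Euclidean norm of a vector $x$ and $\|A\| = \sqrt{\operatorname{trace}(A^{\mathrm{T}} A)}$ denotes the Frobenius norm of a matrix $A$.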

Consider the following coupled FBSDEs

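$$X_t = \xi + \int_0^t b(s, X_s, Y_s)\,\mathrm{d}s + \int_0^t \sigma(s, X_s, Y_s)\,\mathrm{d}W_s, \tag{2.1}$$

$$Y_t = g(X_T) + \int_t^T f(s, X_s, Y_s, Z_s)\,\mathrm{d}s - \int_t^T (Z_s)^{\mathrm{T}}\,\mathrm{d}W_s, \tag{2.2}$$

in which $X_t$ takes values in $\mathbb{R}^m$, $Y_t$ takes values in $\mathbb{R}$, and $Z_t$ takes values in $\mathbb{R}^d$.

Here we assume $Y_t$ to be one-dimensional to simplify the presentation. The result can be extended without any difficulty to the case where $Y_t$ is multi-dimensional. We say $(X_t, Y_t, Z_t)$ is a solution of the above FBSDEs if all its components are $\mathcal{F}_t$-adapted and square-integrable, and together satisfy equations (2.1)(2.2).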

Solving coupled FBSDEs numerically is more difficult than solving decoupled FBSDEs. Except for the Picard iteration method developed in [16], most methods exploit the relation to quasilinear parabolic PDEs via the four-step scheme in [17]. Methods of this type suffer from high dimensionality due to the spatial discretization of PDEs. In contrast, our strategy, starting from simulating the coupled FBSDEs directly, is a new purely probabilistic scheme. To state the numerical algorithm precisely, we consider a partition of the time interval $[0, T]$, $\pi: 0 = t_0 < t_1 < \cdots < t_N = T$, with $h = T/N$ and $t_i = ih$. Let $\Delta W_i = W_{t_{i+1}} - W_{t_i}$ for $i = 0, 1, \dots, N-1$.

Inspired by the nonlinear Feynman–Kac formula that will be introduced below, we view $Y_0$ as a function of $X_0$ and view $Z_t$ as a function of $X_t$ and $Y_t$.

Equipped with this viewpoint, our goal becomes finding appropriate functions $\mu_0^\pi: \mathbb{R}^m \to \mathbb{R}$ and $\phi_i^\pi: \mathbb{R}^m \times \mathbb{R} \to \mathbb{R}^d$ for $i = 0, 1, \dots, N-1$ such that $\mu_0^\pi(\xi)$ and $\phi_i^\pi(X_{t_i}^\pi, Y_{t_i}^\pi)$ can serve as good surrogates of $Y_0$ and $Z_{t_i}$, respectively. To this end, we consider the classical Euler scheme

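$$\begin{cases} X_0^\pi = \xi, \quad Y_0^\pi = \mu_0^\pi(\xi), \\ X_{t_{i+1}}^\pi = X_{t_i}^\pi + b(t_i, X_{t_i}^\pi, Y_{t_i}^\pi)\,h + \sigma(t_i, X_{t_i}^\pi, Y_{t_i}^\pi)\,\Delta W_i, \\ Z_{t_i}^\pi = \phi_i^\pi(X_{t_i}^\pi, Y_{t_i}^\pi), \\ Y_{t_{i+1}}^\pi = Y_{t_i}^\pi - f(t_i, X_{t_i}^\pi, Y_{t_i}^\pi, Z_{t_i}^\pi)\,h + (Z_{t_i}^\pi)^{\mathrm{T}}\,\Delta W_i. \end{cases} \tag{2.3}$$

Without loss of clarity, here we use the notation $X_T^\pi$ as $X_{t_N}^\pi$, $Y_T^\pi$ as $Y_{t_N}^\pi$, etc.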
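The recursion in (2.3) can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the standard Euler form of the scheme (forward step for $X$, forward step for $Y$ driven by a user-supplied $Z$); the function name `euler_forward`, the argument conventions, and the toy coefficients ($b = 0$, $\sigma = I$, $f = 0$, $g(x) = |x|^2$, for which $u(t,x) = |x|^2 + d(T-t)$ and $Z_t = 2X_t$) are assumptions made here, not part of the original scheme description.

```python
import numpy as np

def euler_forward(b, sigma, f, g, mu0, phi, xi, T, N, rng):
    """Run the discretized forward pass for all samples and return a
    Monte Carlo estimate of E|g(X_T) - Y_T|^2.
    xi has shape (n, m); sigma(t, x, y) returns shape (n, m, d)."""
    h = T / N
    x = xi.copy()
    y = mu0(x)                                   # Y_0 = mu_0(xi), shape (n,)
    n = x.shape[0]
    for i in range(N):
        t = i * h
        s = sigma(t, x, y)
        dw = np.sqrt(h) * rng.standard_normal((n, s.shape[2]))
        z = phi(i, x, y)                         # Z_{t_i} = phi_i(X_{t_i}, Y_{t_i})
        x_next = x + b(t, x, y) * h + np.einsum("nmd,nd->nm", s, dw)
        y = y - f(t, x, y, z) * h + np.sum(z * dw, axis=1)
        x = x_next
    return np.mean((g(x) - y) ** 2)

# Toy problem (illustrative): b = 0, sigma = I, f = 0, g(x) = |x|^2.
m = d = 2
T, N, n_samples = 1.0, 50, 20_000
b = lambda t, x, y: np.zeros_like(x)
sigma = lambda t, x, y: np.broadcast_to(np.eye(m), (x.shape[0], m, d))
f = lambda t, x, y, z: np.zeros(x.shape[0])
g = lambda x: np.sum(x**2, axis=1)
phi_exact = lambda i, x, y: 2.0 * x                       # exact Z for this toy problem
mu0_exact = lambda x: np.sum(x**2, axis=1) + d * T        # exact Y_0 = u(0, x)
mu0_wrong = lambda x: np.zeros(x.shape[0])                # deliberately poor guess
xi = np.tile(np.array([1.0, 0.0]), (n_samples, 1))

loss_exact = euler_forward(b, sigma, f, g, mu0_exact, phi_exact, xi, T, N,
                           np.random.default_rng(0))
loss_wrong = euler_forward(b, sigma, f, g, mu0_wrong, phi_exact, xi, T, N,
                           np.random.default_rng(0))
print(loss_exact, loss_wrong)
```

With the exact surrogates the terminal mismatch is small and caused only by time discretization, whereas a poor initial guess produces a large mismatch; this is the quantity that the method treats as its objective.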

Following the spirit of the deep BSDE method, we employ a stochastic optimizer to solve the following stochastic optimization problem

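$$\inf_{\mu_0^\pi \in \mathcal{N}_0',\ \phi_i^\pi \in \mathcal{N}_i} F(\mu_0^\pi, \phi_0^\pi, \dots, \phi_{N-1}^\pi) := E\,|g(X_T^\pi) - Y_T^\pi|^2, \tag{2.4}$$

where $\mathcal{N}_0'$ and $\mathcal{N}_i$ ($0 \le i \le N-1$) are parametric function spaces generated by neural networks.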
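To make the role of the stochastic optimizer concrete, the following sketch minimizes the terminal mismatch over a single scalar parameter `theta` standing in for $Y_0$, using plain mini-batch stochastic gradient descent. It is a deliberately reduced illustration and not the neural-network parametrization used by the method: the toy coefficients ($b = 0$, $\sigma = I$, $f = 0$, $g(x) = |x|^2$), the fixed choice $Z_{t_i} = 2X_{t_i}$, and the helper name `sample_residual` are all assumptions made here.

```python
import numpy as np

def sample_residual(theta, x0, T, N, batch, rng):
    """Return g(X_T) - Y_T for each sample in a mini-batch, for the toy problem
    b = 0, sigma = I, f = 0, g(x) = |x|^2, with Y_0 = theta and Z_{t_i} = 2 X_{t_i}."""
    h = T / N
    d = x0.shape[0]
    x = np.tile(x0, (batch, 1))
    y = np.full(batch, theta)
    for _ in range(N):
        dw = np.sqrt(h) * rng.standard_normal((batch, d))
        y = y + np.sum(2.0 * x * dw, axis=1)   # Y step (f = 0), uses X at the left endpoint
        x = x + dw                             # X step (b = 0, sigma = I)
    return np.sum(x**2, axis=1) - y

rng = np.random.default_rng(0)
x0, T, N = np.array([1.0, 0.0]), 1.0, 50
theta, lr = 0.0, 0.1
for step in range(200):
    r = sample_residual(theta, x0, T, N, 256, rng)
    grad = -2.0 * r.mean()     # gradient of mean(r^2) in theta, since dY_T/dtheta = 1
    theta -= lr * grad
print(theta)                   # should be close to u(0, x0) = |x0|^2 + d*T = 3
```

In the full method both $\mu_0^\pi$ and all $\phi_i^\pi$ are trainable, and the gradient is obtained by automatic differentiation through the whole recursion rather than by the closed-form expression used here.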

To see intuitively where the objective function (2.4) comes from, we consider the following variational problem:

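$$\inf_{Y_0,\ \{Z_t\}_{0 \le t \le T}} E\,|g(X_T) - Y_T|^2, \tag{2.5}$$

$$\text{s.t.} \quad X_t = \xi + \int_0^t b(s, X_s, Y_s)\,\mathrm{d}s + \int_0^t \sigma(s, X_s, Y_s)\,\mathrm{d}W_s,$$

$$\phantom{\text{s.t.}} \quad Y_t = Y_0 - \int_0^t f(s, X_s, Y_s, Z_s)\,\mathrm{d}s + \int_0^t (Z_s)^{\mathrm{T}}\,\mathrm{d}W_s,$$

where $Y_0$ is $\mathcal{F}_0$-measurable and square-integrable, and $Z_t$ is an $\mathcal{F}_t$-adapted square-integrable process.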

The solution of the FBSDEs (2.1)(2.2) is a minimizer of the above problem since the loss function attains zero when it is evaluated at the solution.

In addition, the well-posedness of the FBSDEs (under some regularity conditions) ensures the existence and uniqueness of the minimizer.

Therefore, we expect that (2.4), as a discretized counterpart of (2.5), defines a benign optimization problem and that the associated near-optimal solution provides a good approximate solution of the original FBSDEs. The reason we do not represent $Z_{t_i}^\pi$ as a function of $X_{t_i}^\pi$ only is that the process $\{X_{t_i}^\pi\}_{0 \le i \le N}$ is not Markovian, while the process $\{X_{t_i}^\pi, Y_{t_i}^\pi\}_{0 \le i \le N}$ is Markovian, which facilitates our analysis considerably. If $b$ and $\sigma$ are both independent of $y$, then the FBSDEs (2.1)(2.2) are decoupled and we can take $Z_{t_i}^\pi$ as a function of $X_{t_i}^\pi$ only, as in the numerical scheme introduced in [11, 12].

Our two main theorems regarding the deep BSDE method are the following, mainly on the justification and property of the objective function (2.4) in the general coupled case, regardless of the specific choice of parametric function spaces.

An important assumption for the two theorems is the so-called weak coupling or monotonicity condition, which will be explained in detail in section 3.

The precise statement of the theorems can be found in Theorem 1′ (section 4) and Theorem 2′ (section 5), respectively.

Theorem 1.

Under some assumptions, there exists a constant C, independent of h, d, and m, such that for sufficiently small h,

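$$\sup_{t \in [0,T]} \big( E|X_t - \hat{X}_t^\pi|^2 + E|Y_t - \hat{Y}_t^\pi|^2 \big) + \int_0^T E|Z_t - \hat{Z}_t^\pi|^2\,\mathrm{d}t \le C\big[ h + E|g(X_T^\pi) - Y_T^\pi|^2 \big], \tag{2.6}$$

where $\hat{X}_t^\pi = X_{t_i}^\pi$, $\hat{Y}_t^\pi = Y_{t_i}^\pi$, $\hat{Z}_t^\pi = Z_{t_i}^\pi$ for $t \in [t_i, t_{i+1})$.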
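As a sanity check of the shape of this bound, consider the following toy case. It is an illustration added under stated assumptions and is not taken from the analysis: $b = 0$, $\sigma = I$ with $m = d$, $f = 0$, $g(x) = |x|^2$, and a deterministic initial value $\xi = x_0$. The associated PDE solution is $u(t,x) = |x|^2 + d(T-t)$, so $Y_0 = |x_0|^2 + dT$ and $Z_t = 2X_t$. Suppose the Euler scheme is run with the exact map $\phi_i^\pi(x,y) = 2x$ but with a perturbed initial value $\mu_0^\pi = Y_0 + \delta$. Since $|X_{t_{i+1}}^\pi|^2 = |X_{t_i}^\pi|^2 + 2 (X_{t_i}^\pi)^{\mathrm{T}} \Delta W_i + |\Delta W_i|^2$, a direct computation gives

$$g(X_T^\pi) - Y_T^\pi = \sum_{i=0}^{N-1} \big( |\Delta W_i|^2 - dh \big) - \delta, \qquad E\,|g(X_T^\pi) - Y_T^\pi|^2 = \delta^2 + 2dTh,$$

where the second identity uses $\operatorname{Var}(|\Delta W_i|^2) = 2dh^2$ and the independence of the increments. The initial error $E|Y_0 - Y_0^\pi|^2 = \delta^2$ is therefore controlled by the value of the objective function, and the objective cannot be driven below a term of order $h$, which matches the two contributions on the right side of the estimate.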

Theorem 2.

Under some assumptions, there exists a constant C, independent of h, d and m, such that for sufficiently small h,

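$$\inf_{\mu_0^\pi \in \mathcal{N}_0',\ \phi_i^\pi \in \mathcal{N}_i} E\,|g(X_T^\pi) - Y_T^\pi|^2 \le C \Big\{ h + \inf_{\mu_0^\pi \in \mathcal{N}_0',\ \phi_i^\pi \in \mathcal{N}_i} \Big[ E|Y_0 - \mu_0^\pi(\xi)|^2 + \sum_{i=0}^{N-1} E\big| E[\tilde{Z}_{t_i} \mid X_{t_i}^\pi, Y_{t_i}^\pi] - \phi_i^\pi(X_{t_i}^\pi, Y_{t_i}^\pi) \big|^2 h \Big] \Big\},$$

where $\tilde{Z}_{t_i} = h^{-1} E\big[ \int_{t_i}^{t_{i+1}} Z_t\,\mathrm{d}t \mid \mathcal{F}_{t_i} \big]$. If $b$ and $\sigma$ are independent of $Y$, the term $E[\tilde{Z}_{t_i} \mid X_{t_i}^\pi, Y_{t_i}^\pi]$ can be replaced with $E[\tilde{Z}_{t_i} \mid X_{t_i}^\pi]$.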

Briefly speaking, Theorem 1 states that the simulation error (left side of equation (2.6)) can be bounded through the value of the objective function (2.4).

To the best of our knowledge, this is the first result for the error estimation of the coupled FBSDEs, concerning both time discretization error and terminal distance.

Theorem 2 states that the optimal value of the objective function can be small if the approximation capability of the parametric function spaces ( and above) is high.

Neural networks are a promising candidate for such a requirement, especially in high-dimensional problems.

There are numerous results, dating back to the 90s (see, e.g., [18, 19, 20, 21, 22, 23, 24, 25, 26, 27]), in regard to the universal approximation and complexity of neural networks.

There are also some recent analyses [28, 29, 30, 31] of approximating the solutions of certain parabolic partial differential equations with neural networks.

However, the problem is still far from resolved. Theorem 2 implies that if the involved conditional expectations can be approximated by neural networks whose numbers of parameters grow at most polynomially both in the dimension and in the reciprocal of the required accuracy, then the solutions of the considered FBSDEs can be represented in practice without the curse of dimensionality. Under what conditions this assumption holds is beyond the scope of this work and remains for further investigation.

The above-mentioned scheme in (2.3)(2.4) is for solving FBSDEs. The so-called nonlinear Feynman–Kac formula, connecting FBSDEs with the quasilinear parabolic PDEs, provides an approach to numerically solve quasilinear parabolic PDEs (2.7) below through the same scheme.

We recall a concrete version of the nonlinear Feynman–Kac formula in Theorem 3 below and refer interested readers to, e.g., [32] for more details. According to this formula, the term $Y_0$ can be interpreted as $u(0, \xi)$. Therefore, we can choose the random variable $\xi$ with a delta distribution, a uniform distribution in a bounded region, or any other distribution we are interested in. After solving the optimization problem, we obtain $\mu_0^\pi(\xi)$ as an approximation of $u(0, \xi)$. See [11, 12] for more details.

Theorem 3.

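Assume

1. $m = d$ and $b(t,x,y)$, $\sigma(t,x,y)$, $f(t,x,y,z)$ are smooth functions with bounded first-order derivatives with respect to $x, y, z$.

2. There exist a positive continuous function $\nu$ and a constant $\mu$, satisfying that

$$\nu(|y|)\, I \le \sigma \sigma^{\mathrm{T}}(t,x,y) \le \mu I,$$

$$|b(t,x,0)| + |f(t,x,0,z)| \le \mu.$$

3. There exists a constant $\alpha \in (0,1)$ such that $g$ is bounded in the Hölder space $C^{2,\alpha}(\mathbb{R}^m)$.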

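Then the following quasilinear PDE has a unique classical solution $u(t,x)$ that is bounded with bounded $u_t$, $\nabla_x u$, and $\nabla_x^2 u$,

$$\begin{cases} u_t + \frac{1}{2} \operatorname{trace}\big( \sigma \sigma^{\mathrm{T}}(t,x,u)\, \nabla_x^2 u \big) + b^{\mathrm{T}}(t,x,u)\, \nabla_x u + f\big(t, x, u, \sigma^{\mathrm{T}}(t,x,u)\, \nabla_x u\big) = 0, \\ u(T,x) = g(x). \end{cases} \tag{2.7}$$

The associated FBSDEs (2.1)(2.2) have a unique solution $(X_t, Y_t, Z_t)$ with $Y_t = u(t, X_t)$, $Z_t = \sigma^{\mathrm{T}}(t, X_t, u(t, X_t))\, \nabla_x u(t, X_t)$, and $X_t$ is the solution of the following SDE

$$X_t = \xi + \int_0^t b(s, X_s, u(s, X_s))\,\mathrm{d}s + \int_0^t \sigma(s, X_s, u(s, X_s))\,\mathrm{d}W_s.$$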

Remark.

The statement regarding FBSDEs (2.1)(2.2) in Theorem 3 is developed through a PDE-based argument, which requires $m = d$, uniform ellipticity of $\sigma$, and high-order smoothness of $b$, $\sigma$, $f$, and $g$. An analogous result through a probabilistic argument is given below in Theorem 4 (point 4). In that case, we only need the Lipschitz condition for all of the involved functions, in addition to some weak coupling or monotonicity conditions demonstrated in Assumption 3. Note that the Lipschitz condition alone does not guarantee the existence of a solution to the coupled FBSDEs, even in the situation when $b$, $\sigma$, $f$, $g$ are linear (see [16, 32] for a concrete counterexample).

Remark.

Theorem 3 also implies that the assumption that the drift function $b$ only depends on $(t, x, y)$ is general. If $b$ depends on $z$ as well, one can move the associated term in (2.7) into the nonlinearity $f$ and apply the nonlinear Feynman–Kac formula back to obtain an equivalent system of coupled FBSDEs, in which the new drift function is independent of $z$.
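The substitution described in this remark can be written out as follows. This is a sketch under the setting of Theorem 3 (in particular, square and invertible diffusion coefficient), and the symbols $\tilde{b}$, $b^{\circ}$, and $\tilde{f}$ are introduced here for illustration only. Suppose the first-order term of the PDE has the form $\tilde{b}^{\mathrm{T}}(t, x, u, z)\, \nabla_x u$ with $z = \sigma^{\mathrm{T}}(t,x,u)\, \nabla_x u$, so that $\nabla_x u = (\sigma^{\mathrm{T}})^{-1} z$. For any reference drift $b^{\circ}(t,x,y)$ that does not depend on $z$, for instance $b^{\circ}(t,x,y) = \tilde{b}(t,x,y,0)$,

$$\tilde{b}^{\mathrm{T}}(t,x,u,z)\, \nabla_x u + f(t,x,u,z) = (b^{\circ})^{\mathrm{T}}(t,x,u)\, \nabla_x u + \underbrace{f(t,x,u,z) + \big[ \tilde{b}(t,x,u,z) - b^{\circ}(t,x,u) \big]^{\mathrm{T}} \big( \sigma^{\mathrm{T}}(t,x,u) \big)^{-1} z}_{=:\ \tilde{f}(t,x,u,z)}.$$

The PDE then has the same form as before with drift $b^{\circ}$ and nonlinearity $\tilde{f}$. Whether $\tilde{f}$ still satisfies the regularity and growth conditions required elsewhere has to be checked for the problem at hand.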

3 Preliminaries

In this section, we introduce our assumptions and two useful results from [16].

We use the notation $\Delta x = x_1 - x_2$, $\Delta y = y_1 - y_2$, $\Delta z = z_1 - z_2$.

Assumption 1.

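(i) There exist (possibly negative) constants $k_b$, $k_f$ such that

$$[b(t, x_1, y) - b(t, x_2, y)]^{\mathrm{T}} \Delta x \le k_b |\Delta x|^2,$$

$$[f(t, x, y_1, z) - f(t, x, y_2, z)]\, \Delta y \le k_f |\Delta y|^2.$$

(ii) $b$, $\sigma$, $f$, $g$ are uniformly Lipschitz continuous with respect to $(x, y, z)$. In particular, there are non-negative constants $K$, $b_y$, $\sigma_x$, $\sigma_y$, $f_x$, $f_z$, and $g_x$ such that

$$|b(t, x_1, y_1) - b(t, x_2, y_2)|^2 \le K |\Delta x|^2 + b_y |\Delta y|^2,$$

$$\|\sigma(t, x_1, y_1) - \sigma(t, x_2, y_2)\|^2 \le \sigma_x |\Delta x|^2 + \sigma_y |\Delta y|^2,$$

$$|f(t, x_1, y_1, z_1) - f(t, x_2, y_2, z_2)|^2 \le f_x |\Delta x|^2 + K |\Delta y|^2 + f_z |\Delta z|^2,$$

$$|g(x_1) - g(x_2)|^2 \le g_x |\Delta x|^2.$$

(iii) $b(t, 0, 0)$, $\sigma(t, 0, 0)$, and $f(t, 0, 0, 0)$ are bounded. In particular, there are constants $b_0$, $\sigma_0$, $f_0$, and $g_0$ such that

$$|b(t, x, y)|^2 \le b_0 + K |x|^2 + b_y |y|^2,$$

$$\|\sigma(t, x, y)\|^2 \le \sigma_0 + \sigma_x |x|^2 + \sigma_y |y|^2,$$

$$|f(t, x, y, z)|^2 \le f_0 + f_x |x|^2 + K |y|^2 + f_z |z|^2,$$

$$|g(x)|^2 \le g_0 + g_x |x|^2.$$

We note here that $b_y$, $\sigma_x$, etc. are all constants, not partial derivatives.

For convenience, we use $\mathscr{L}$ to denote the set of all the constants mentioned above and assume $K$ is an upper bound of $\mathscr{L}$.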

Assumption 2.

$b$, $\sigma$, $f$ are uniformly Hölder-$\frac{1}{2}$ continuous with respect to $t$. We assume the same constant $K$ to be an upper bound of the square of the Hölder constants as well.

Assumption 3.

One of the following five cases holds:

1. Small time duration, that is, $T$ is small.

2. Weak coupling of $Y$ into the forward SDE (2.1), that is, $b_y$ and $\sigma_y$ are small. In particular, if $b_y = \sigma_y = 0$, then the forward equation does not depend on the backward one and, thus, equations (2.1)(2.2) are decoupled.

3. Weak coupling of $X$ into the backward SDE (2.2), that is, $f_x$ and $g_x$ are small. In particular, if $f_x = g_x = 0$, then the backward equation does not depend on the forward one and, thus, equations (2.1)(2.2) are also decoupled. In fact, in this case, $Z = 0$ and (2.2) reduces to an ODE.

4. $f$ is strongly decreasing in $y$, that is, $k_f$ is very negative.

5. $b$ is strongly decreasing in $x$, that is, $k_b$ is very negative.

The assumptions stated above are usually called weak coupling and monotonicity conditions in the literature [16, 33, 34].

To make it more precise, we define

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Then, a specific quantitative form of the above five conditions can be summarized as:

|

|

|

(3.1) |

In other words, if any of the five weak coupling and monotonicity conditions holds to a certain extent, the two inequalities in (3.1) hold. Below, we refer to (3.1) as Assumption 3 and to the five general qualitative conditions described above as the weak coupling and monotonicity conditions.

The above three assumptions are basic assumptions in [16], which we need in order to use the results from [16], as stated in Theorems 4 and 5 below.

Theorem 4 gives the connections between coupled FBSDEs and quasilinear parabolic PDEs under weaker conditions. Theorem 5 provides the convergence of the implicit scheme for coupled FBSDEs. Our work primarily uses the same set of assumptions, except that we assume some further quantitative restrictions related to the weak coupling and monotonicity conditions, which will be made transparent through the extra constants we define in the proofs. Our aim is to provide explicit conditions under which our results hold and to present more clearly the relationship between these constants and the error estimates. As will be seen in the proofs, roughly speaking, the weaker the coupling (resp., the stronger the monotonicity, the smaller the time horizon) is, the more easily the condition is satisfied, and the smaller the constants related to the error estimates are.

Theorem 4.

Under Assumptions 1, 2, and 3, there exists a function u: that satisfies the following statements.

-

1.

.

-

2.

with some constant C depending on and .

-

3.

u is a viscosity solution of the PDE (2.7).

-

4.

The FBSDEs (2.1)(2.2) have a unique solution and . Thus, satisfies decoupled FBSDEs

|

|

|

|

|

|

|

|

Furthermore, the solution of the FBSDEs satisfies the path regularity with some constant C depending on and T

|

|

|

(3.2) |

in which , , for . If is càdlàg, we can replace with .

Remark.

Several conditions can guarantee admits a càdlàg version, such as and with some , see e.g., [35].

Theorem 5.

Under Assumptions 1, 2, and 3, for sufficiently small h, the following discrete-time equation ()

|

|

|

(3.3) |

has a solution such that and

|

|

|

(3.4) |

where , , for , and C is a constant depending on and T.

Remark.

In [16], the above result (existence and convergence) is proved for the explicit scheme, which is formulated as replacing with in the last equation of (3.3).

The same techniques can be used to prove the implicit scheme, as we state in Theorem 5.
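The practical difference between the two variants is that the implicit step defines the new value of $Y$ only through an equation of the form $y = a + h f(y)$, which has to be solved. The sketch below shows one common way to do this, Picard (fixed-point) iteration, for a scalar deterministic stand-in: it ignores the conditional expectation and the dependence on $X$ and $Z$, and the function name `implicit_step` and the choice $f = \cos$ are assumptions made here for illustration. The iteration is a contraction whenever $h$ times the Lipschitz constant of $f$ is below one, which is one reason such statements are made for sufficiently small $h$.

```python
import math

def implicit_step(rhs, h, f, y_init, tol=1e-12, max_iter=100):
    """Solve y = rhs + h * f(y) by Picard iteration.
    Converges geometrically when h * Lip(f) < 1."""
    y = y_init
    for k in range(max_iter):
        y_new = rhs + h * f(y)
        if abs(y_new - y) < tol:
            return y_new, k + 1
        y = y_new
    raise RuntimeError("fixed-point iteration did not converge")

# Illustrative values: rhs plays the role of the (conditional expectation of the)
# next-step value, and f = cos is a Lipschitz driver with constant 1.
y_implicit, n_iter = implicit_step(rhs=1.0, h=0.1, f=math.cos, y_init=1.0)

# The explicit variant evaluates f at the already known value instead.
y_explicit = 1.0 + 0.1 * math.cos(1.0)
print(y_implicit, y_explicit, n_iter)
```

The two values differ by a term of order $h^2$ per step in this example, so the choice between them does not change the order of the time discretization error.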

Finally, to make sure the system in (2.3) is well-defined, we restrict our parametric function spaces $\mathcal{N}_0'$ and $\mathcal{N}_i$ as in Assumption 4 below.

Note that neural networks with common activation functions, including ReLU and the sigmoid function, satisfy this assumption.

Under Assumptions 1 and 4, one can easily prove by induction that $\{X_{t_i}^\pi\}_{0 \le i \le N}$, $\{Y_{t_i}^\pi\}_{0 \le i \le N}$, and $\{Z_{t_i}^\pi\}_{0 \le i \le N-1}$ defined in (2.3) are all measurable and square-integrable random variables.

Assumption 4.

and are subsets of measurable functions from to and to with linear growth, namely, and in (2.3) satisfy and for .

4 A Posteriori Estimation of the Simulation Error

We prove Theorem 1 in this section. Comparing the statements of Theorem 1 and Theorem 5, we wish to bound the differences between the processes defined in (2.3) and the solution of (3.3) with the objective function (2.4).

Recalling the definition of the system of equations (2.3), we have

|

|

|

|

(4.1) |

|

|

|

|

(4.2) |

Taking the expectation on both sides of (4.2), we obtain

|

|

|

Right multiplying on both sides of (4.2) and taking the expectation again, we obtain

|

|

|

The above observation motivates us to consider the following system of equations

|

|

|

(4.3) |

Note that (4.3) is defined just like the FBSDEs (2.1)(2.2), where the component is defined forwardly and the components are defined backwardly. However, since we do not specify the terminal condition of , the system of equations (4.3) has infinitely many solutions. The following lemma gives an estimate of the difference between two such solutions.

Lemma 1.

For , suppose are two solutions of (4.3),

with , .

For any , and sufficiently small h, denote

|

|

|

|

(4.4) |

|

|

|

|

|

|

|

|

|

|

|

|

Let , then we have, for ,

|

|

|

|

|

|

|

|

To prove Lemma 1, we need the following lemma to handle the component.

Lemma 2.

Let , given , by the martingale representation theorem, there exists an -adapted process such that and .

Then we have .

Proof.

Consider the auxiliary stochastic process for . By Itô’s formula,

|

|

|

Noting that , we have

|

|

|

∎

Proof of Lemma 1.

Let

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Then we have

|

|

|

|

(4.5) |

|

|

|

|

(4.6) |

|

|

|

|

(4.7) |

By the martingale representation theorem, there exists an -adapted square-integrable process such that

|

|

|

or, equivalently,

|

|

|

|

(4.8) |

From equations (4.5) and (4.8), noting that , , , , and are all -measurable, and , ,

we have

|

|

|

|

|

|

|

|

(4.9) |

|

|

|

|

(4.10) |

From equation (4.9), by Assumptions 1, 2 and the root-mean square and geometric mean inequality (RMS-GM inequality), for any , we have

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Recall , , . By induction we can obtain that, for ,

|

|

|

Similarly, from equation (4.10), for any , we have

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

(4.11) |

To deal with the integral term in (4.11), we apply Lemma 2 to (4.6)(4.8) and get

|

|

|

|

which implies, by the Cauchy inequality,

|

|

|

|

|

|

|

|

|

|

|

|

where denotes the -th component of the vector.

Plugging it into (4.11) gives us

|

|

|

(4.12) |

Then for any and sufficiently small satisfying , we have

|

|

|

Recall , . By induction we obtain that, for ,

|

|

|

∎

Now we are ready to prove Theorem 1, whose precise statement is given below. Note that its conditions are satisfied if any of the five cases in the weak coupling and monotonicity conditions holds.

Theorem 1′.

Suppose Assumptions 1, 2, 3, and 4 hold true and there exist such that , where

|

|

|

|

(4.13) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Then there exists a constant , depending on , , , , and , such that for sufficiently small ,

|

|

|

(4.14) |

where , , for .

Remark.

The above theorem also implies the coercivity of the objective function (2.4) used in the deep BSDE method. Formally speaking, the coercivity means that if , we have , which is a direct result from Theorem 1′.

Remark.

If any of the weak coupling and monotonicity conditions introduced in Assumption 3 holds to a sufficient extent, there must exist constants satisfying the conditions in Theorem 1′. We discuss the five cases in what follows.

-

1.

Suppose all other constants and are fixed, if is sufficiently small, then the second factor of could be sufficiently close to 0 such that .

-

2.

Suppose all other constants and are fixed, if and are sufficiently small, then could be sufficiently small such that .

-

3.

Suppose all other constants and are fixed, if and are sufficiently small, then and thus the last factor in could be sufficiently close to 0 such that .

-

4.

Suppose all constants except and are fixed.

Let and rewrite as

|

|

|

It is straightforward to check that there exists a negative constant such that when , .

By the definition of , if is sufficiently negative, there exists such that and is sufficiently large to ensure

|

|

|

Combining these two estimates gives .

-

5.

Noting that and play the same role in , we use the same argument as above to show that when is sufficiently negative, there exists such that .

Proof of Theorem 1′.

From the proof of this theorem and throughout the remainder of the paper, we use to generally denote a constant that only depends on , , and , whose value may change from line to line when there is no need to distinguish. We also use to generally denote a constant depending on , , and the constants represented by .

We use the same notations as Lemma 1. Let , (defined in system (2.3)) and , , (defined in system (3.3)). It can be easily checked that both , , , satisfy the system of equations (4.3). Our proof strategy is to use Lemma 1 to bound the difference between two solutions

through the objective function . This allows us to apply Theorem 5 to derive the desired estimates.

To begin with, note that for any , the RMS-GM inequality yields

|

|

|

Let

|

|

|

Lemma 1 tells us

|

|

|

and

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Therefore by definition of and , we have

|

|

|

|

|

|

|

|

Consider the function

|

|

|

|

When , we have

|

|

|

|

|

|

|

|

Let

|

|

|

(4.15) |

Recall

|

|

|

and note that

|

|

|

When , comparing and , we know that, for any , there exists and sufficiently small such that

|

|

|

|

(4.16) |

|

|

|

|

(4.17) |

By fixing and choosing suitable , we obtain our error estimates of and as

|

|

|

|

(4.18) |

|

|

|

|

(4.19) |

To estimate , we consider estimate (4.12), in which can take any value no smaller than . If , we choose

and obtain

|

|

|

Summing from 0 to gives us

|

|

|

|

|

|

|

|

(4.20) |

The case can be dealt with similarly by choosing and the same type of estimate can be derived.

Finally, combining estimates (4.18)(4.19)(4.20) with Theorem 5, we prove the statement in Theorem 1′.

∎

5 An Upper Bound for the Minimized Objective Function

We prove Theorem 2 in this section. We first state three useful lemmas. Theorem 2′, as a detailed statement of Theorem 2, and Theorem 6, as a variation of Theorem 2′ under stronger conditions, are then provided, followed by their proofs. The proofs of the three lemmas are given at the end of the section.

The main process we analyze is (2.3). Lemma 3 gives an estimate of the final distance provided by (2.3) in terms of the deviation between the approximated variables and the true solutions.

Lemma 3.

Suppose Assumptions 1, 2, and 3 hold true. Let , be defined as in system (2.3) and . Given ,

there exists a constant depending on , , , and , such that for sufficiently small ,

|

|

|

where ,

, and .

Lemma 3 is close to Theorem 2, except that is not a function of and defined in (2.3). To bridge this gap, we need the following two lemmas.

First, similar to the proof of Theorem 1′, an estimate of the distance between the process defined in (2.3) and the process defined in (3.3) is also needed here. Lemma 4 is a general result to serve this purpose, providing an estimate of the difference between two backward processes driven by different forward processes.

Lemma 4.

Let for , . Suppose and satisfy

|

|

|

(5.1) |

for , . Let , , then for any , and sufficiently small , we have

|

|

|

where .

Lemma 5 shows that, similar to the nonlinear Feynman–Kac formula, the discrete stochastic process defined in (2.3) can also be linked to some deterministic functions.

Lemma 5.

Let be defined in (2.3). When , there exist deterministic functions for such that , satisfy

|

|

|

(5.2) |

for .

If and are independent of , then there exist deterministic functions for such that , satisfy (5.2).

Now we are ready to prove Theorem 2, with a precise statement given below. As with Theorem 1′, the conditions below are satisfied if any of the five cases of the weak coupling and monotonicity conditions holds to a certain extent.

Theorem 2′.

Suppose Assumptions 1, 2, 3, and 4 hold true. Given any , , and , let be defined in (4.4) and

|

|

|

|

(5.3) |

|

|

|

|

|

|

|

|

|

|

|

|

If there exist satisfying and , then there exists a constant C depending on , , , , , , and , such that for sufficiently small ,

|

|

|

(5.4) |

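where $\tilde{Z}_{t_i} = h^{-1} E\big[ \int_{t_i}^{t_{i+1}} Z_t\,\mathrm{d}t \mid \mathcal{F}_{t_i} \big]$. If $Z_t$ is càdlàg, we can replace $\tilde{Z}_{t_i}$ with $Z_{t_i}$. If $b$ and $\sigma$ are independent of $y$, we can replace $E[\tilde{Z}_{t_i} \mid X_{t_i}^\pi, Y_{t_i}^\pi]$ with $E[\tilde{Z}_{t_i} \mid X_{t_i}^\pi]$.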

Remark.

If we take the infimum within the domains of and on both sides, we recover the original statement in Theorem 2.

Remark.

If any of the weak coupling and monotonicity conditions introduced in Assumption 3 holds to a sufficient extent, there must exist constants satisfying the conditions in Theorem 2′. The arguments are very similar to those provided in the corresponding remark following Theorem 1′. Hence, we omit the details here for the sake of brevity.

Proof of Theorem 2′.

Using Lemma 3 with , we obtain

|

|

|

(5.5) |

Splitting the term and applying the generalized mean inequality, we have (recall is defined in Theorem 5)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

(5.6) |

From equations (3.2)(3.4), we know that

|

|

|

|

|

|

|

|

|

|

|

|

(5.7) |

Plugging estimates (5.6)(5.7) into (5.5) gives us

|

|

|

|

|

|

|

|

|

|

|

|

(5.8) |

It remains to estimate the term , to which we intend to apply Lemma 4.

Let and . The associated and are then defined according to equation (5.1). Note that but is not necessarily equal to , due to the possible violation of the terminal condition.

From Lemma 5, we know can be represented as with being a deterministic function. By the property of conditional expectation, we have

|

|

|

for any . Therefore we have the estimate

|

|

|

|

|

|

|

|

(5.9) |

Recall that .

Similar to the derivation of estimate (4.16) (using a given without final specification) in the proof of Theorem 1′, when , we have

|

|

|

|

(5.10) |

in which .

Plugging (5.10) into (5.9), and then into (5.8), we get

|

|

|

|

and

|

|

|

|

|

|

|

|

|

|

|

|

(5.11) |

for sufficiently small . Here is defined as

|

|

|

|

|

|

|

|

The forms of inequalities (5.4) and (5.11) are already very close.

When ,

there exists such that for sufficiently small , we have .

Rearranging the term in inequality (5.11) yields our final estimate.

∎

We shall briefly discuss how the universal approximation theorem can be applied based on Theorem 2′. For instance, Theorem 2.1 in [22] states that every continuous and piecewise linear function with -dimensional input can be represented by a deep neural network with rectified linear units and at most depth. Now we view as a target function with input and as another target function with input . Since and , we know that both target functions can be approximated in the sense by continuous and piecewise linear functions with arbitrary accuracy. Then the aforementioned statement implies that the two target functions can be approximated by two neural networks with rectified linear units and at most depth, although the width might go to infinity as the approximation error decreases to 0. Therefore, according to Theorem 2′, there exist good neural networks such that the value of the objective function is small.
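The representation result quoted above can be illustrated on two elementary continuous piecewise linear functions. The sketch below is not the construction from [22]; it only checks numerically two well-known identities, $|x| = \mathrm{ReLU}(x) + \mathrm{ReLU}(-x)$ and $\max(a,b) = \mathrm{ReLU}(a-b) + \mathrm{ReLU}(b) - \mathrm{ReLU}(-b)$, each of which is a one-hidden-layer ReLU network. The function names are chosen here for illustration.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def abs_net(x):
    # One hidden layer with two ReLU units: |x| = relu(x) + relu(-x).
    return relu(x) + relu(-x)

def max_net(a, b):
    # One hidden layer with three ReLU units:
    # max(a, b) = relu(a - b) + b, and b = relu(b) - relu(-b).
    return relu(a - b) + relu(b) - relu(-b)

rng = np.random.default_rng(0)
a = rng.standard_normal(1000)
b = rng.standard_normal(1000)
err_abs = np.max(np.abs(abs_net(a) - np.abs(a)))
err_max = np.max(np.abs(max_net(a, b) - np.maximum(a, b)))
print(err_abs, err_max)   # both at the level of floating-point round-off
```

Since every continuous piecewise linear function can be assembled from finitely many maxima and minima of affine functions, compositions of such building blocks give an intuition for why depth, rather than a special activation, is what the representation result constrains.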

Note that there still exist some concerns about the result in Theorem 2′. First, the function changes when changes for . Second, the function may depend on . Even if the FBSDEs are decoupled so that the above two concerns do not exist, we know nothing a priori about the property of . In the next theorem, we replace with , which can resolve these problems. However, meanwhile we require more regularity for the coefficients of the FBSDEs.

Theorem 6.

Suppose Assumptions 1, 2, 3, 4 and the assumptions in Theorem 3 hold true. Let be the solution of corresponding quasilinear PDEs (2.7) and be the squared Lipschitz constant of with respect to x. With the same notations of Theorem 2′, when and

|

|

|

there exists a constant depending on , , , , , , and , such that for sufficiently small ,

|

|

|

(5.12) |

where .

Proof.

By Theorem 3, we have , in which is the solution of

|

|

|

Using Lemma 3 again with gives us

|

|

|

Given the continuity of , we know admits a continuous version. Hence the term in can be replaced with , i.e.,

|

|

|

(5.13) |

Similar to the arguments in inequalities (5.6)(5.7), we have

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

where the last equality uses the convergence result (3.4). Plugging it into (5.13), we have

|

|

|

|

|

|

|

|

(5.14) |

for sufficiently small .

We employ the estimate (5.10) again to rewrite inequality (5.14) as

|

|

|

|

|

|

|

|

(5.15) |

where

|

|

|

Arguing in the same way as that in the proof of Theorem 2′, when is strictly bounded above by for sufficiently small , we can choose small enough and rearrange the terms in inequality (5.15) to obtain the result in inequality (5.12).

Remark.

The Lipschitz constant used in Theorem 6 may be further estimated a priori. Denote the Lipschitz constant of function with respect to as , and the bound of function as . Loosely speaking, we have

|

|

|

Here can be estimated from the first point of Theorem 4 and can be estimated through the Schauder estimate (see, e.g., [32, Chapter 4, Lemma 2.1]). Note that the resulting estimate may depend on the dimension .

5.1 Proof of Lemmas

Proof of Lemma 3.

We construct continuous processes as follows. For , let

|

|

|

|

|

|

|

|

From system (2.3), we see this definition also works at . We are interested in again the estimates of the following terms

|

|

|

|

For , let

|

|

|

|

|

|

|

|

|

|

|

|

By definition,

|

|

|

|

|

|

|

|

Then by Itô’s formula, we have

|

|

|

|

|

|

|

|

Thus,

|

|

|

|

|

|

|

|

For any , using Assumptions 1, 2 and the RMS-GM inequality, we have

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

(5.16) |

By the RMS-GM inequality, we also have

|

|

|

|

(5.17) |

|

|

|

|

(5.18) |

in which we choose and . The path regularity in Theorem 4 tells us

|

|

|

(5.19) |

Plugging inequalities (5.17)(5.18)(5.19) into (5.16) with simplification, we obtain

|

|

|

|

|

|

|

|

(5.20) |

Then, by Grönwall's inequality, we have

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

(5.21) |

where , , and is sufficiently small.

Similarly, with the same type of estimates in (5.16)(5.20), for any , we have

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Arguing in the same way as for (5.21), by Grönwall's inequality, for sufficiently small $h$, we have

|

|

|

|

|

|

|

|

with , .

Choosing and using

|

|

|

where and , we furthermore obtain

|

|

|

(5.22) |

with .

Define

|

|

|

Combining inequalities (5.21)(5.22) together yields

|

|

|

|

|

|

|

|

|

|

|

|

Letting ,

we have

|

|

|

(5.23) |

We start from and apply inequality (5.23) repeatedly to obtain

|

|

|

(5.24) |

in which for the last term we use the fact from inequality (3.2).

Note that

|

|

|

|

|

|

|

|

Given any , we can choose small enough such that

|

|

|

|

This condition and inequality (5.24) together give us

|

|

|

|

|

|

|

|

(5.25) |

Finally, by decomposing the objective function, we have

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

(5.26) |

We complete our proof by combining inequalities (5.25)(5.26) and choosing .

∎

Proof of Lemma 4.

We use the same notations as in the proof of Lemma 1. As derived in (4.12), for any , we have

|

|

|

(5.27) |

Multiplying both sides of (5.27) by gives us

|

|

|

|

|

|

|

|

|

|

|

|

(5.28) |

Summing (5.28) up from to , we obtain

|

|

|

(5.29) |

Note that by Assumption 1.

Plugging it into (5.29), we arrive at the desired result.

∎

Proof of Lemma 5.

We prove the statement by backward induction.

Let for convenience. It is straightforward to see that the statement holds for .

Assume the statement holds for . For , we know .

Recalling the definition of in (2.3), we can rewrite , with being a deterministic function. Note . Since is independent of , there exists a deterministic function such that .

Next we consider . Let , where denotes the -algebra generated by . We know is a Banach space and another equivalent representation is

|

|

|

Consider the following map defined on :

|

|

|

By Assumption 3, is square-integrable. Furthermore, following the same argument for , can also be represented as a deterministic function of . Hence, . Note that Assumption 1 implies . Therefore is a contraction map on when . By the Banach fixed-point theorem, there exists a unique fixed-point satisfying . We choose to validate the statement for .

When and are independent of , all of the arguments above can be made similarly with also being independent of .

∎