COREclust: a new package for a robust and scalable analysis of complex data

2 Institut de Mathématiques de Toulouse (UMR 5219), CNRS, France

)

Abstract

In this paper, we present a new R package COREclust dedicated to the detection of representative variables in high dimensional spaces with a potentially limited number of observations. Variable sets detection is based on an original graph clustering strategy denoted CORE-clustering algorithm that detects CORE-clusters, i.e. variable sets having a user defined size range and in which each variable is very similar to at least another variable. Representative variables are then robustely estimate as the CORE-cluster centers. This strategy is entirely coded in C++ and wrapped by R using the Rcpp package. A particular effort has been dedicated to keep its algorithmic cost reasonable so that it can be used on large datasets. After motivating our work, we will explain the CORE-clustering algorithm as well as a greedy extension of this algorithm. We will then present how to use it and results obtained on synthetic and real data.

1 Introduction

Discovering representative information in high dimensional spaces with a limited number of observations is a recurrent problem in data analysis. Heterogeneity between the variables behaviour and multiple similarities between variable subsets make the analysis of complex systems an unobvious task. In this context, we developped a R package that wraps C++ functions dedicated to the detection of CORE-clusters, i.e. of variable sets having a controled size range and with similar observed behaviours. As developped hereafter, these CORE-clusters are of particular interest in complex systems as they represent different types of behaviours that can be robustely detected in the observations.

Complex systems are typically modeled as undirected weighted graphs ([4], [16]) where the nodes (vertices) represent the variables and the edge weights are a measure of similarity between the variables. They cover a wide range of applications in various fields: social science [27], technology [1] or biology [2]. Cluster structures in such complex networks are non-trivial topological features that are estimated using graph clustering methods. The choice of a specific graph clustering algorithm is often application dependent on the nature (structure, size) of the data.

A wide variety of traditional methods were developed to treat the problem of graph clustering, which include graph partitioning, hierarchical clustering, partitional clustering and spectral clustering [14], [15], [17], [19], [26], [25], [12] . The first technique [10] aims to find partitions such that interpartition edges are minimized and is based on strong assumptions (need to know the number of groups and their size). The second standard category of methods called hierarchical clustering [25] can be divided into two types of algorithms: agglomerative algorithms (start with a cluster by node, then iteratively merge the clusters) and divisive algorithms (start with a single cluster, then iteratively split it). Such techniques make little assumptions on the number and the size of the groups. However, they do not allow to select the best graph partition. Moreover, based on a pairwise similarity matrix, their computational cost increases quickly with the number of nodes. Partitional clustering methods include k-means ([12]), k-median strategy, and enable to form a fixed number of groups based on a distance function. Lately, the last approach [26] that can have highly accurate results on different data types, imposes a high cost of computing results. Those algorithms are mostly based on the eigen-decomposition of Laplacian matrices of either weighted or unweighted graphs.

A common issue when using those methods on complex system is that each node/variable will be set in a cluster. For instance, a large amount of clusters will be computed if the clustering granularity is high. Some clusters will then have a high similarity rate between their nodes/observations and other ones will contain no pertinent information. Quite often, interesting relations will also be split into several clusters, making it tricky to identify a sparse number of pertinent variables. Conversely, a low granularity will lead to few large clusters with a high internal heterogeneous behaviours making it even harder to identify representative variables. In the important context where the number of observations is lower that the observations dimension , an even more critical issue is that traditional graph clustering techniques suffer from this high dimensionality manifested through an instability due to high complexity. Selected variable clusters will then be likely to be meaningless. A regularization strategy is therefore mandatory and requires the detection of pertinent clusters of representative variables.

In our approach, we gather a controled number of variables which present similarities. Those tightly groups, called cores, constitute highly connected subnetworks and do not necessarily cover all nodes/variables. Those cores all support a central variable position charcterized by the lowest average distance between the other variables in the same core. Note that the notion of core in graph clustering is already used in k-means clustering algorithms [20]. As we will develop in section 2, a major difference between k-means and our proposed approach is that we do not need to tune the total number of clusters but their minimal size, so only similar variables will be gathered in CORE-clusters. Other graph clustering algorithms, based on k-core decomposition, also partition the graph into a maximal group of entities which are connected to at least k other entities in the group, were also developed: In [9], the detection of such a partition involves repeatedly deleting all the nodes whose degree is less than k until no more such nodes exist. In particular, based on the concept of degeneracy of a network meaning that the largest value of k for which a k-core exists, it aims to decrease the computational time of high computational complexity graph clustering methods , where is the number of nodes/variables without any significant loss of accuracy, applying them on main subgraphs of the network. The algorithm takes as input a clustering coefficient that indicates the degree to which nodes tend to cluster together. The method of [3], that is implemented in the package igraph, is also related to the notion of coreness as it calculates hierarchically the core number for each node with a complexity in the order of . A node’s coreness is k if it belongs to a k-core but not (k+1)-core. Unlike those articles, CORE-clustering algorithm seeks to directly control the core size from an optimized parameter (minimal core size) and not the number of cores to be detected.

Our strategy then requires the tuning of a single and intuitive parameter: the minimum number of nodes/variables in each core. Thus, the heart of our approach is a new graph clustering algorithm, called CORE Clustering, that robustly identifies groups of representative variables (CORE-clusters) of a high dimensional system with potentially few observations having similarities. Only highly connected nodes are clustered together according to a limited level of granularity. The algorithmic cost of our strategy is additionnaly limited and scales well to large datasets. This point of view has been originally considered in [5] and we present here an improved different version of this initial idea. The package COREclust is implemented in C++ and is interfaced with R in order to make it possible to select clusters of leading players within a potentally large complex system. Our methodology is described in Section 2. More details about the package and its use are then given in Section 4. Section 5 finally presents results obtained using our package and concludes this paper.

2 Statistical Methodology

2.1 Interactions model

We consider the set of observations of the studied system. Each observation is described by quantitative variables, so for . The representative variables in the system will be identified based on a quantitative description of the variable interactions. This requires to define a notion of distance between the observed variables. In this paper, we use standard Pearson correlations but other distance measures could be alternatively used. The interactions are then modelled as an undirected weigted graph where the nodes represent the variables and the edges represent the variable pairs having a non-negligible similarity score. Each edge has also a weight equals to the similarity score between the nodes/variables it connects.

We denote the undirected weigthed graph modeling the observed system. The set contains nodes, each of them representing an observed variable, and the set contains the weighted edges that model the similarity level of the variable pairs.

2.2 CORE-clusters

As mentioned section 1, the representative variables of the studied system are indirectely detected as the centers of CORE-clusters. In our work, this central variable is simply the one that has the lowest average distance to all other variables in the CORE-cluster. The central part of our strategy is then the detection of CORE-clusters of variables based on the graph . CORE-clusters are groups of nodes/variables that are densely connected to each other. The notion of -core introduced firstly by Seidman [20] for unweighted graphs in 1983, formally describe those cohesive groups. To this end, let us consider the degree of a node as the number of edges incident to itself.

Definition 1

A subgraph H of a graph G(N,E) is a -core or a core of degree k if and only if H is a maximal subgraph such that where is the minimum degree of (we say that k is the rank of such a core).

One major limitation of the graph decomposition into cores is its design to work on unweigthed graphs. However, real networks are quite frequently weighted, and their weights describe important properties of the underlying systems. In such networks, nodes have (at least) two properties that can characterize them, their degree and their weight. In our approach, we will consider links with major weights.

2.3 CORE-clusters detection

In this paper, we developed the CORE-clustering algorithm based on the notion of CORE-clusters. In order to prevent instability in the process of core detection from one run to the other, the user has to tune the coefficient so that the size of the CORE-clusters to be detected will be in . Thus, the -cores to be detected directly depends on this coefficient. The latter controls the level of regularisation when estimating the representative variables: Large values of leads to the detections of large sets of strongly related variables. In that case, the representative variables of these sets is likely to be meaningful even if . However each CORE-cluster may also contain several variables that would have ideally been representative. On the contrary, too small values of will be likely to detect all meaningful representative variables but also a non-negligible number of false positive representative variables, especially if the observations are noisy or if . Note that another optional parameter is the threshold on the minimal similarity observed between two variable to define an edge in . Using it with values stricly higher than allows to reduce the graph size.

An important characteristic of our algorithm is that it first strongly simplifies using a maximum spanning tree strategy. The resulting graph has the same nodes as and the tree structure instead of the initial edges , where is a subset of . All node pairs are then still connected to each other but there is only a single path between each node pair. This strongly sparsifies the graph and makes possible the use of fast and scalable algorithms as described section 3. CORE-clusters detection strategy, which estimates strongly interconnected variable sets also makes sense on as computing a maximum spanning tree aims to find a tree sturcture out of the edges with the highest possible weights in average.

3 Computational Methodology

3.1 Main interactions estimation

The very first step of our strategy is to quantify the similarity between the different observed variables. The similarity is first computed and represented as a dense graph , where contains nodes, each of them representing an observed variable, and contains undirected weighted edges that model a similarity level between all pairs of variables. Various association measures can be used to quantify the similarity between variables and , . The most popular ones are Spearman or Pearson correlation measures, different notions of distances, or smooth correlation coefficients. We used the absolute value of Pearson’s correlation coefficient in our package, so the weight between the nodes and is the Pearson’s correlation coefficient between the absolute values of the observed variables and . In this approach, we consider the variables with no connection in between which will have a correlation coefficient set to zero. The algorithmic cost of this estimation using a naive technique is but it can be easily parallelized using divide and conquer algorithms for reasonably large datasets, as in [18]. For large to very large datasets, correlations should be computed on sparse matrices, using e.g. [7] to make this task computationally tractable.

Once is computed, its complexity is strongly reduced to make possible the efficient use of scalable clustering strategies on our data.

The maximum spanning tree of is built by using Kruskal’s algorithm [11]. We denote the resulting graph, where has a tree-like structure. Details of the algorithm are given in Alg. 1. The algorithmic cost of the sort procedure (line 1) is . Then, the for loop (lines 4 to 10) only scans one time the edges. In this for loop, the most demanding procedure is the propagation of label , line 8. Fortunately, the nodes on which the labels are propagated are connex to in . We can then use a Depth-First Search algorithm [23] for that task, making the average performance of the for loop .

3.2 CORE-clusters detection

In contrast with the other clustering techniques, our CORE-clustering approach detects clusters having an explicitely controled granularity level, and only gathers nodes/variables with a behaviour considered as similar (see section 3.1). Core structures are detected from the maximal spanning tree by gathering iteratively its nodes in an order that depends on the edge weights in . CORE-structures are identified when a group of gathered nodes has a size in , where is the parameter that controls the granularity level. Algorithm details are given Alg. 2.

To understand how Alg. 2 detects clusters of coherent nodes, we emphasize that the CORE-clustering algorithm is applied on the maximum spanning tree and not on . Only the most pertinent relations of each node are then taken into account, so the nodes with no pertinent relation will be randomly linked to each other in . As Alg. 2 will first gather these nodes, they will generate many small and meaningless clusters. Parameter should then not be too small to avoid considering these clusters as CORE-clusters. Then, the first pertinent clusters will be established using the edges of with larger weights, leading to pertinent node groups. Finally, the largest weights of are treated in the end of Alg. 2 in order to split into several CORE-clusters the nodes related to the most influent nodes/variables.

Remark that the algorithmic structures of Alg. 1 and Alg. 2 are similar. However Alg. 2 runs on and not on . The number of edges in is much lower than since has a tree structure and not a complete graph structure. It should indeed be slightly higher than [22], which is much lower than . Moreover the propagation algorithm (lines 9 and 11) will never propagate then labels on more than nodes. The algorithmic cost of the sort procedure (line 1) is then and the average performance of the for loop .

3.3 A greedy alternative for CORE-clusters detection

We propose an alternative strategy for the CORE-clusters detection algorithms: The edge treatment queue may be ordered by following decreasing edge weights instead of increasing edge weights. The nearest edges will then be first gathered making coherent CORE-clusters as in Alg. 2, although one CORE-cluster may contain several key players. To avoid gathering noisy information, the for loop on the edges (line 6 of Alg. 2) should also stop before meaningless edges are treated. This strategy has a key interest: It can strongly reduce the computational time dedicated to Algs. 1 and 2. By doing so, Alg. 1 and modified Alg. 2 are purely equivalent to Alg. 3.

Again, the structure of this algorithm is very similar to the structure of the Maximum Spanning Tree strategy section 3.1. The algorithmic cost of the sort procedure (line 1) is . Then, the loop lines 4 to 13 scans edges where should be sufficiently high to capture the main CORE-clusters but also where . Labels propagations in this loop (lines 7 and 9) is also limited to nodes. The average performance of the for loop is therefore .

3.4 Central variables selection in CORE-clusters

Once a CORE-cluster is identified, we use a straighforward strategy to select its central variable: the distance between all pairs of variables in each CORE-cluster is computed using a Dijkstra’s algorithm [6], [28] in . The central variable is then the one that has the lowest average distance to all other variables in the CORE-cluster. As the CORE-clusters have less than nodes, the algorithmic cost of this procedure is times the number of detected CORE-clusters, which should remain low even for large datasets.

4 Package description

The package COREclust introduced in this paper can be freely downloaded at the url https://sourceforge.net/projects/core-clustering/files/Rpackage_CoreClustering.tar and is based on a R script calling C++ functions. It only requires preinstalling the Rcpp package [8] to automatically compile and call C++ functions. Note that the C++ algorithm only depends on standard libraries so it requires a standard C++ compiler and no specific library. After its installation, the package can be loaded in R by typing library(”COREclust”).

The latter contains a set of C++ and R functions for detecting the main core structures within a large complex system as well as different tests for measuring the accuracy and the robustness of the algorithm developed for that task.

4.1 C++ functions

The main C++ function of COREclust called by R is principalFunction. Its first inputs are the name of the file containing the similarity level between the variables (arg_InputFile), the minimal size of the cores (arg_MinCoreSize) and the coefficient (arg_Gamma). Note that is only used when performing the greedy algorithm. The next input is the name of the file containing the output variable labels (arg_OutputFile). The last one is finally a coefficient or that controls whether the standard or the greedy algorithm will be performed (greedyOrNot). It is worth mentionning that the input csv file is a correlation matrix generated by R before executing the C++ code. Then the structure of principalFunction is as described in Alg. 4.

Remark that the C++ code heavily depends on the class CompactGraph, that is summarized in file CoreClust.h. This class contains all variables to store undirected weighted graphs and node Labels. It also contains different methods (i.e. class functions) to read undirected weighted graphs in a list of weighted edges or a squared matrix, to write a graph and its labels, as well as to perform the CORE-clustering strategies described in Alg. 2 and Alg. 3. As mentioned section 2 the scalability of our algorithms directly depend on the algorithm that sorts the graph edges depending of their weights. To make this step computationally efficient, we have used in the SortByIncreasingWeight and SortByDecreasingWeight methods of CompactGraph the reference C++ function std::sort. For elements, this function performs comparisons in average until C++11, and comparisons after C++11.

4.2 R functions

User interactions are made through a R script algo_clust.R that executes the C++ code of Alg. 4. It first takes as inputs the name of a Rdata or a csv file (arg_InputFile). The remaining arguments are the minimal size of the cores (arg_MinCoreSize), the name of the file containing the output variable labels (arg_OutputFile) and optionally the coefficient (arg_Gamma) that controls the edge number to be scanned to capture the main cores. If it is not called as argument, is set to zero and the fifth input argument of the principalFunction is set to 1 (CORE-clustering algorithm will be applied on submit data). Otherwise, greedyOrNot is set to 2.

Note that the script algo_clust.R contains a R function corr_Mat that computes the similarity matrix of the observed data. It takes as arguments the name of the file containing the dataset Obs_file_name and a coefficient FileType equals to if Obs_file_name is a csv file and otherwise. In the first case, the absolute value of Pearson’s correlation is computed using the function cor of package stat ([24]) and the associated adjacency matrix is built. In the second case, the input matrix that contains the observations is of type sparseMatrix (out of the package Matrix [13]). Then, the absolute value of Pearson’s correlation is computed on its coefficients using the function corSparse of package qlcMatrix [7].

It also includes the function Rcpp::sourceCpp from the package Rcpp that parses the specified C++ file or source code. A shared library is then built and its exported functions and Rcpp modules are made available in the specified environment.

In the R file, the similarity matrix is first computed and saved in the csv file corrMat.csv that will be loaded by the principalFunction of the C++ code.

5 Results on synthetic data

In order to study the ability of our algorithm to detect core structures and clusters of nodes from the graph, we simulated correlated data with clusters of varying size, density and level of noise, many different situations which may be found in any system. We also tested the accuracy and the robustness of our algorithm based on a specific similarity measure.

5.1 Simulated networks

Many complex networks are known to be scale free, meaning that the network has a little amount of highly connected nodes (hubs) and many poorly connected nodes. Simulating such networks can be seen as a process through which we first generate the profile of leader actors (hubs) and then the profile of the remaining ones around those hubs.

We consider here that the simulated individual profiles are divided in different clusters of size ,,…,. A simulated expression data set is then composed of actor profiles generated for the clusters and has the structure:

| (1) |

Strategy for simulating actor profiles in a single cluster of size is:

-

1.

Generate the profile of one variable from a normal distribution, . This profile defines the leader or hub profile.

-

2.

Choose a minimum correlation and a maximum correlation between the leader actor and other actors in the cluster.

-

3.

Generate profiles such that the correlation of the j-th profile with the leader actor profile is forall close to

where . The correlation is close to and the -th correlation is equal to . The j-th profile is also generated by adding a Gaussian noise term to the leader profile, to allow a correlation with the leader profile close to the required correlation

where .

From the matrix including the profile of each actor of the system , the coefficients of the matrix of Pearson correlation will be computed and finally represent the edges of the simulated network .

5.2 Performance evaluation on synthetic data

Clustering results can be validated using two scores: The external validation compares the clustering result with a ground truth result. Internal validation also evaluates the clustering quality using the result itself plus the graph properties, and no ground truth data. It can therefore also be used on real-life data. The latter will be introduced in 6.

5.2.1 External evaluation of cores quality on synthetic data

Let , be the variables and () be the ground-truth CORE-clusters of variables, typically on synthetic data. We also denote () the CORE-clusters predicted by our algorithm. In order to evaluate the quality of the prediction, we compute a score defined as:

| (2) |

where and .

To compute this score, each is equal to if there is no overlap between and any , and is equal to the number of variables in if a contains all the variables of . We emphasize that a CORE-cluster contains between and variables so, if the synthetic CORE-clusters have an appropriate size, no should match with more than one . A score equals to then means that a perfecly accurate estimation of the was reached, and the closer to 0 its values are the less accurate the CORE-clusters detection is.

Note that other scores, such as the Gini index could have potentially been used. Contrary to standard external validation scores used in supervised clustering, we do not evaluate a clustering quality with a label given to all variables/nodes. Here we assess the detection of clusters, potentially on a subset of variables and do not want to penalize or favor the fact that some variables have no label. We then use the score Eq. (2).

5.3 Simulations

Standard and greedy algorithms were applied on four different simulations repeated times, to study their performances and robustness. On the basis of the built dataset in section 4.1, each simulation was generated with well-defined clusters inside which correlations between actor profiles decrease linearly from to . Let simulation be the name of the function modeling the dataset introduced above.

-

a)

The first simulation matches the generation of the standard dataset of size defined in section 4.1 with and clusters of size and ;

-

b)

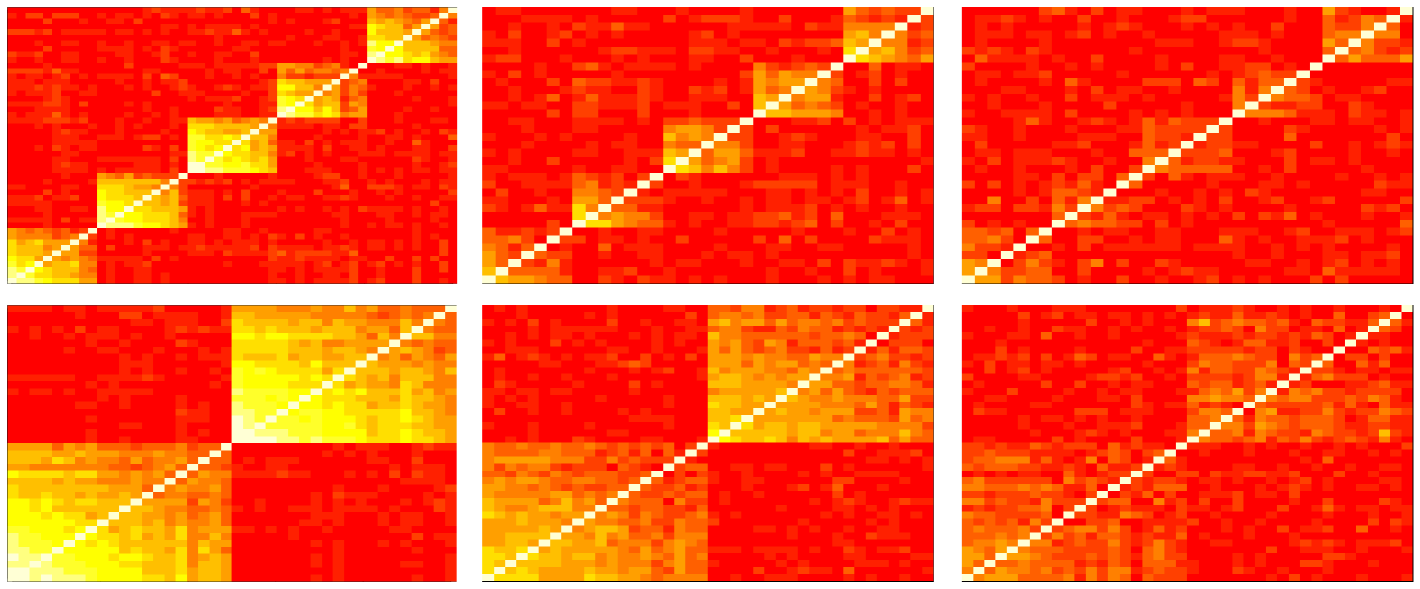

The second one consisted in creating noisy data and testing the CoreClustering algorithms on it. To this end, we simulated the standard dataset, and gradually added to each variable an increasing gaussian noise term drawn from a normal distribution depending on a coefficient . Examples of simulated matrices with this noise term are shown in Fig. 1;

-

c)

The third simulation aims at changing the minimal size of core structures detected i.e the level of granularity by the algorithms along the process taking values from and checking their accuracy. The number of clusters was fixed at with sizes randomly drawn from the set ;

-

d)

The last simulation consists in varying the size of the sample from 5 to 30 and setting and the minimal size of core structures to .

5.4 Results

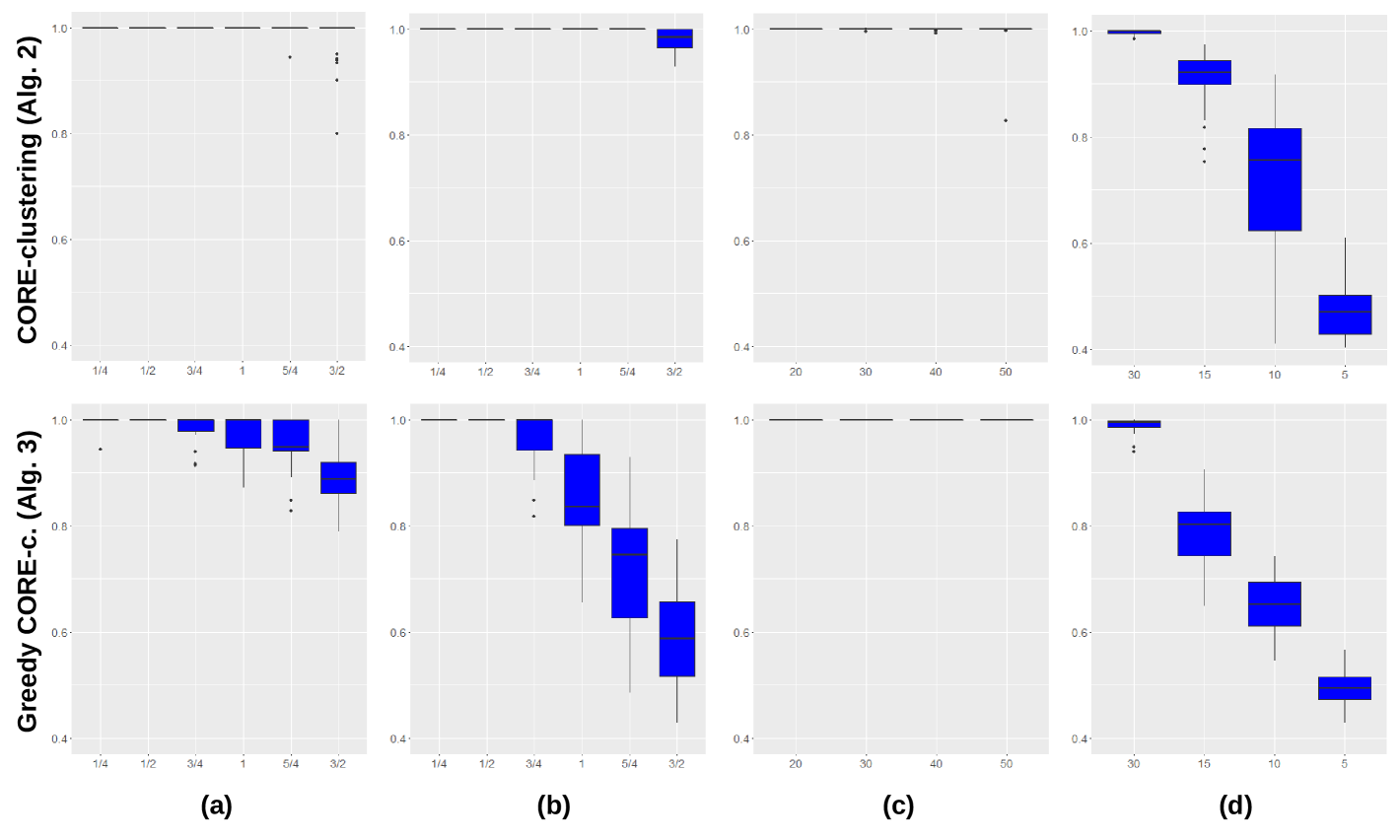

For all the simulated datasets, CoreClustering and CoreClustering_Greedy were applied on their matrices of correlation and then, the cores detected were compared to the real cluster groups thanks to the quality measurement. The results are shown in Fig. 2 in the form of boxplots. The first four figures (a) and (b) show that the standard CoreClustering is slightely more robust to noisy dataset than the CoreClustering_Greedy . The opposite effect can be observed when the sample size of the dataset decreases ((d)). Moreover, it should be noticed that increasing the number of clusters in the dataset slightly declines the accuracy of the detected cores. However, the two algorithms are really effective when varying the granularity coefficient ((c)) which differentiate it from other clustering techniques mentioned in the introduction. Nevertheless, it must be noted that the CoreClustering_Greedy is really more effective in terms of processing time than the other one.

6 Package application on real data

The COREclust package is distributed with example datasets, in particular a classic [21] dataset that can be found on the UCI Machine Learning Repository111https://archive.ics.uci.edu/ml/datasets/Yeast. We present in this section results obtained using the COREclust algorithms on this dataset.

6.1 Data yeast

Using simulated data, we showed that CORE-clustering algorithms performs well to detect core structures in a high dimensional network. This section illustrates the use of Core-Clustering algorithms on the well-known synchronized yeast cell cycle data set of [21], which includes 77 samples under various time during the cell cycle and a total of 6179 genes, of which 1660 genes are retained for this analysis after preprocessing and filtering. The goal of this analysis is then to detect core structures among the correlation patterns in the time series of gene expressions of yeast measured along the cell cycle. From this dataset introduced as a matrix, a measure of similarity between all gene pairs was measured with the absolute value of Pearson’s correlation. A total of about weighted edges will then be considered when representing the variable correlations in a graph.

6.2 Sparse data yeast

The second application of the two developed algorithms is based on a sparse version of the data yeast mentioned above. From the dataset built, of the information on it is set to zero, making the data sparse. The matrix containing those data was then converted to a sparse matrix type using the package Matrix of R and a measure of similarity suitable for it was quantified using e.g. [7].

6.3 Command lines

Those similarity matrices are then saved in a CSV format and passed as the first argument of the functions algo_clust and algo_clust_greedy. The latter are finally run using the following command line Rscript algo_clust.R in_InputFile in_MinCore_Size in_Outputfile or Rscript algo_clust_greedy.R in_InputFile in_MinCore_Size in_Outputfile, where the in_MinCoreSize and in_Outputfile arguments are fixed by the user.

6.4 Internal evaluation of the strength of the connections quality in cores

We are interested here in evaluating the performances of the core detection algorithms using internal criteria. Let us intoduce the following evaluation metrics which will quantify the internal quality, i.e, the minimal property of the density of a core.

Let be the correlation matrix between each variable . Let the number of cores detected. Each variable is assigned to a unique core (). The quality measurement of internal connections strength in cores is defined as:

| (3) |

where we suppose that a weight equals to zero means that there is no connection between the two nodes involved. For each node of a given core, this score first determines the maximal strength of the edges that connect this node to its adjacent nodes. Over all those coefficents, the minimal one is then divided by the maximal strength of all edges in the core. As the last coefficient is very closed to one, the final score emphasizes the minimal strength of all vertices in each core between its internal nodes. A score closed to one then means that the algorithm reveals a core with highly connected nodes.

6.5 Result

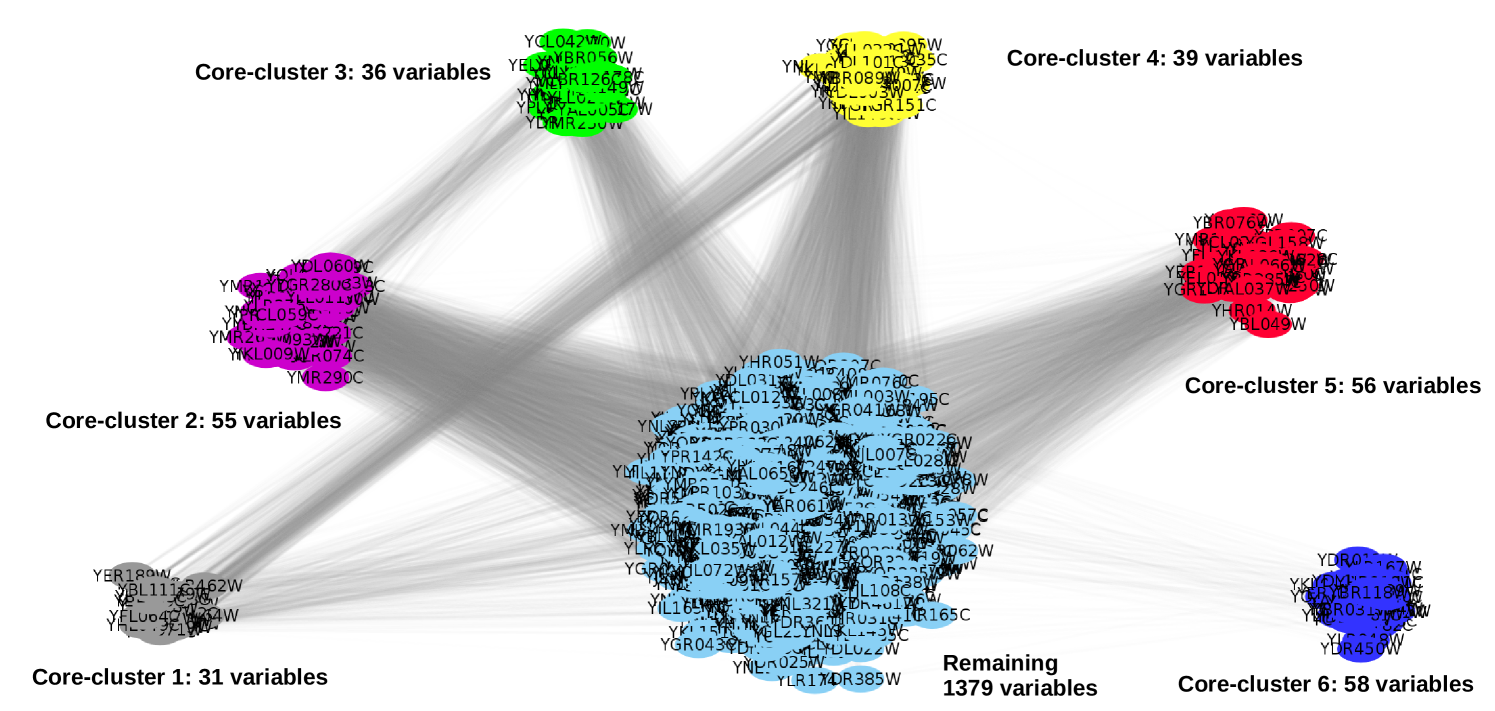

In this context, the standard and greedy Core-Clustering algorithms were applied on the matrices of similarity introduced above with the aim of proving their performances on a real complex dataset. Applied on the original one, they detected respectively and core structures whereas and were catched when it comes to their sparse version. The minimal size of core structures was set at . A visualization of the core clusters detected by the standard algorithm was made (Figure 3) using Cytoscape222http://www.cytoscape.org/ where the transparency on the edges linearly depends on the correlation between the variables. Transparency for correlation 0.5 is and transparency for correlation 1 is . The quality of the connections strength in core structures detected in each dataset were then internally evaluated using the quality measurement introduced in section 5. The mean of the scores obtained for each cores when the standard algorithm is applied on the first type of data is in whereas it is in for the greedy algorithm. Thus, the assumption that CORE-clusters are sets of highly connected variables is verified. For the sparse version of the data yeast, the scores are respectively in and in . Those low scores could be dued to the limited number of information in the sparse data. Additionnaly, the core structures detected in each dataset were compared using the quality measurement of clusters introduced in Section 5. The scoring was about for the first type of data and for its sparse version. However, in view of the different number of core structures detected by each algorithm, this measurement actually compares the first cores recognized by its greedy version with all of the standard one. Thus, the resultant scores show that the two algorithms are both robust when they are applied on a complex dataset and performs efficiently even on very sparse dataset. The computational times for the standard and greedy version of the clustering algorithm applied on the original dataset were respectively about and seconds on a Core i5 computer with a RAM of Go. Then, when they were tested on a sparse dataset, the computational times were about 255.92 and 31.78 seconds.

7 Conclusion

Although complex systems in high dimensional spaces with a limited number of observations are quite common across many fields, efficient methods to treat the associated problem of graph clustering is an unobvious task. Some of these techniques, based on assumptions in view of controlling the variables contribution to the global clustering, often do not allow to select the best graph partition. In reply to this issue, we developped the R package COREclust introduced in this paper. This package offers two strategies to robustly identify groups of representative variables of the studied system by tuning a single intuitive parameter: the minimum number of variables in each core. Its effectiveness as a graph clustering technique was further illustrated by simulations and applications on real dataset.

References

- Alcade Cuesta et al. [2016] Alcade Cuesta F, Gonzalez Sequeiros P, Lozano Rojo A (2016). “Exploring the topological sources of robustness against invasion in biological and technological networks.” Scientific Reports, 6.

- Barabasi and Oltvai [2004] Barabasi A, Oltvai Z (2004). “Network biology: understanding the cell’s functional organization.” Nature Reviews Genetics, 5, 101–113.

- Batagelj and Zaversnik [2002] Batagelj V, Zaversnik M (2002). “An O(m) Algorithm for Cores Decomposition of Networks.” Preprint, 5, 129–145.

- Boccaletti et al. [2006] Boccaletti S, Latora V, Moreno Y, Chavez M, Hwang DU (2006). “Complex networks: Structure and dynamics.” Physics Reports, 424, 175–308.

- BRUNET et al. [Dec. 3 2015] BRUNET A, LOUBES JM, AZAIS JM, Courtney M (Dec. 3 2015). “Method of identification of a relationship between biological elements.” WO Patent App. PCT/EP2015/060,779.

- Cormen et al. [2001] Cormen T, Leiserson C, Rivest R, C S (2001). “Introduction to Algorithms.” MIT Press and McGraw-Hill.

- Cysouw [2018] Cysouw M (2018). “R function cor.sparse.” https://www.rdocumentation.org/packages/qlcMatrix/versions/0.9.2/topics/cor.sparse. Accessed: 2018-02-02.

- Eddelbuettel and François [2011] Eddelbuettel D, François R (2011). “Rcpp: Seamless R and C++ Integration.” Journal of Statistical Software, 40(8), 1–18.

- Giatsidis et al. [2016] Giatsidis C, Malliaros F, Tziortziotis N, Dhanjal C, Kiagias A, Thilikos D, Vazirgiannis M (2016). “A k-core Decomposition Framework for Graph Clustering.” Preprint.

- Kernighan and Lin [1970] Kernighan B, Lin S (1970). “An efficient heuristic procedure for partitioning graphs.” The Bell System Technical Journal, 42, 291–307.

- Kruskal [1956] Kruskal JB (1956). “On the shortest spanning subtree of a graph and the traveling salesman problem.” Proceedings of the American Mathematical Society, 7, 48–50.

- MacQueen [1967] MacQueen J (1967). Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, volume 1. University of California Press.

- Maechler [2017] Maechler M (2017). “R package Matrix.” https://www.rdocumentation.org/packages/Matrix/versions/1.2-12. Accessed: 2017-11-16.

- Newman [2004a] Newman M (2004a). “Detecting community structure in networks.” The European Physical Journal B-Condensed Matter and Complex Systems, 38, 321–330.

- Newman [2004b] Newman M (2004b). “Fast algorithm for detecting community structure in networks.” Physical review E, 69.

- Newman [2009] Newman M (2009). Networks: An Introduction. Oxford University Press.

- Newman and Girvan [2004] Newman M, Girvan M (2004). “Finding and evaluating community structure in networks.” Physical review E, 69, 15.

- Randall [1998] Randall KH (1998). Cilk: Efficient Multithreaded Computing. Ph.D. thesis, Massachusetts Institute of Technology.

- Schaeffer [2007] Schaeffer S (2007). “Graph clustering.” Computer Science Review, 1, 27–64.

- Seidman [1983] Seidman S (1983). “Network structure and minimum degree.” Social Networks, 5, 269–287.

- Spellman et al. [1998] Spellman P, Sherlock G, Zhang M, Vishwanath I, Anders K, Eisen M, Brown P, Botstein D, Futcher B (1998). “Comprehensive identification of cell-cycle-regulated genes of yeast saccharomyces cerevisiae by microarray hybridization.” Molecular biiology of the cell, 9(12), 3273–3297.

- Steele [2002] Steele JM (2002). “Minimal Spanning Trees for Graphs with Random Edge Lengths.” In Mathematics and Computer Science II, pp. 223–245. Springer.

- Tarjan [1972] Tarjan R (1972). “Depth first search and linear graph algorithms.” SIAM JOURNAL ON COMPUTING, 1(2).

- Team [2015] Team RC (2015). “R package stats.” https://www.R-project.org/.

- Tibshirani and Friedman [2001] Tibshirani T, Friedman J (2001). “The Elements of Statistical Learning.” Between Data Science and Applied Data Analysis.

- Von Luxburg [2007] Von Luxburg U (2007). “A tutorial on spectral clustering.” Statistics and computing, 17, 395–416.

- Wasserman and Faust [1994] Wasserman U, Faust K (1994). Social Network Analysis: Methods and Applications. Cambridge University Press.

- Zan and Noon [1998] Zan B, Noon C (1998). “Shortest Path Algorithms: An Evaluation Using Real Road Networks.” Transportation Science, 32, 65–73.