Counting from Sky: A Large-scale Dataset for Remote Sensing Object Counting and A Benchmark Method

Abstract

Object counting, whose aim is to estimate the number of objects from a given image, is an important and challenging computation task. Significant efforts have been devoted to addressing this problem and achieved great progress, yet counting the number of ground objects from remote sensing images is barely studied. In this paper, we are interested in counting dense objects from remote sensing images. Compared with object counting in a natural scene, this task is challenging in the following factors: large scale variation, complex cluttered background, and orientation arbitrariness. More importantly, the scarcity of data severely limits the development of research in this field. To address these issues, we first construct a large-scale object counting dataset with remote sensing images, which contains four important geographic objects: buildings, crowded ships in harbors, large-vehicles and small-vehicles in parking lots. We then benchmark the dataset by designing a novel neural network that can generate a density map of an input image. The proposed network consists of three parts namely attention module, scale pyramid module and deformable convolution module to attack the aforementioned challenging factors. Extensive experiments are performed on the proposed dataset and one crowd counting datset, which demonstrate the challenges of the proposed dataset and the superiority and effectiveness of our method compared with state-of-the-art methods.

Index Terms:

Object counting, remote sensing, attention mechanism, scale pyramid module, deformable convolution layer.I Introduction

Object counting, whose aim is to estimate the number of objects in a still image or video frame, is an important yet challenging computer vision task. It has been attracting increasing research interest because of its practical applications in various fields, such as crowd counting [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13], cell microscopy [14, 15, 16], counting animals for ecologic studies [17], vehicle counting [18, 19, 20] and environment survey [21, 22]. Albeit great progress has been made in many domains of object counting, only a few works have been done to address the problem of ground object counting in remote sensing community over the past few years, for example counting palms or olive trees from remotely sensed images [23, 24, 25].

In recent years, with the growing population and rapid development of urbanization, geographic objects such as buildings, cars have become more and more gathering and easily densely crowded. This impels an increasing number of researchers to study the scene understanding from the perspective of object counting. There are emerging studies that analyze crowd scenes from an airborne platform on a helicopter [26], and detecting and evaluating the number of cars from drones [27] or even satellites [28]. However, the dominant ground objects in remote sensing images such as buildings, ships are ignored by the community, estimating the number of which could be beneficial for many practical applications, to name a few urban planning [29], environment control and mapping [30], digital urban model construction [31] and disaster response and assessment [32].

One major reason for this is that there is a lack of large-scale remote sensing object counting dataset available for the community. Although aerial image understanding has gained much attention and there are many datasets built for tasks such as object detection [33, 34] or scene classification [35, 36], these datasets are not intended for counting and the number of objects in which is too small to support the counting task. Another reason that limits the progress and applications of counting in remote sensing field lies in that the well-developed counting models may not work well in remote sensing images, since the objects captured overhead possess distinct features from that of captured by surveillance or consumer cameras.

To be specific, compared with the counting task in other fields, e.g., crowd counting, object counting in remote sensing images faces several challenges in the following aspects:

-

•

Scale variation. Objects (e.g., buildings) in remote sensing images have large scale variations ranging from only a few pixels to thousands of pixels. This extreme scale change makes it difficult to infer an accurate number of objects.

-

•

Complex clutter background. The size of crowded small objects in remote sensing images is so small that it is difficult to detect and recognize them. The objects are often submerged in complex clutter backgrounds, which will distract the models from the region of interest and make them unable to predict the true count.

-

•

Orientation arbitrariness. Objects in remote sensing images are taken overhead, thus the orientation is not certain, which is different from the objects in natural images such as crowds have an upright orientation.

-

•

Dataset scarcity. As mentioned above, object counting datasets in remote sensing filed are extremely lacking. Although some datasets for object detection in remote sensing images such as SpaceNet111https://aws.amazon.com/public-datasets/spacenet/ attempt to alleviate this issue, they are not initially designed for the counting task, thus making them not a good choice for the counting research. The recently released datasets such as DLR-ACD [26] and CARPK [27] are acquired using UAV platforms and constructed for crowd or car counting, which is lack of typical geographic objects such as buildings and ships.

Intuitively, object counting can be achieved in a straightforward way with the aid of well-designed detectors, such as Faster RCNN [37], YOLO [38] and SSD [39], when the objects are identified and located, the counting itself is trivial. However, this solution could be successful in the condition that objects are with large sizes and sparsely located but may fail in the dense cases, especially for adjoining dense buildings, densely crowded ships in the harbors and small vehicles in the parking lots.

To overcome the aforementioned problems and facilitate future research, we prepare to begin with two routes, one is methodology, and the other is data source. For the methodology, we design a deep supervised network to estimate the count of different geographic objects under complex scenes. Following Lempitsky et al.’s pioneer work [16] that casts the object counting as density estimation, our method also accomplishes the counting task by first predicting the density maps of input images and then integrating them to obtain the final counts. The proposed model is comprised of three stages. The first stage is a truncated VGG-16 [40] serving as a feature extractor that extracts features from the input image, following which is an Attention module used to highlight informative features and suppress backgrounds. The subsequent stage is a Scale Pyramid module with different dilation rates. This module captures the multi-scale information of the objects. The final part is a Deformable convolution layer which we hope the proposed method could be robust to orientation changing. For convenience, we name the proposed method ASPD-Net.

Regarding the data source, we have constructed a large-scale Remote Sensing Object Counting dataset (abbreviated as RSOC). The dataset contains a total of 3057 images and 286,539 instances covered by four widely studied and concerned object types involving buildings, ships, large vehicles, and small vehicles. All instances are accurately annotated with pixel-level center points. To our best knowledge, this is the largest dataset designed for the counting task in remote sensing images. We hope this dataset will motivate researchers of the remote sensing community to pay attention to the counting topic and promote research on aerial scene understanding.

In summary, we make the following contributions.

-

1.

We propose a specially designed deep neural network ASPD-Net to attack the challenges of object counting in remote sensing images. The proposed ASPD-Net addresses the problems of scale and rotation variation by introducing a feature pyramid module and deformable convolution module. In addition, the attention mechanism is incorporated to jointly aggregate multi-scale features and suppress clutter backgrounds.

-

2.

A large-scale remote sensing object counting dataset, termed RSOC, is constructed and released to the community to boost the development of object counting in the field of remote sensing. The RSOC dataset consists of 3,057 images with 286,539 annotations and covers four categories, including buildings, ships, large-vehicles, and small-vehicles. To the best of our knowledge, this is the first attempt to build and release a dataset that facilitates research of object counting in remote sensing images.

-

3.

We set a benchmark for the RSOC dataset by conducting extensive experiments, which demonstrates the effectiveness of our proposed method. We also make comparisons with several state-of-the-art counting methods, and the proposed method achieves superior performance on the RSOC dataset. Additionally, experiments on one widely used crowd counting dataset demonstrate the robustness and generalization ability of the proposed approach.

This paper extends and makes further improvement compared with its previous version [41] in the following aspects: 1) More details and descriptions of the dataset, including the collection of the data, annotation manner and statistical information are added into this paper; 2) We give a brief review of related work to enable readers to have a comprehensive understanding of our work; 3) More experiments including ablation studies and comparisons with state-of-the-art counting methods are included to demonstrate the effectiveness and superiority of our approach.

The remainder of this paper is organized as follows. In Section II we briefly review some works related to this paper. The dataset is presented in Section IV. We give detailed structures of the proposed method and experiments in Section III and Section V, respectively. Finally, this paper is concluded in Section VI.

II Related work

II-A Object counting

Contemporary works for object counting can be roughly classified into three categories: 1) detection-based; 2) regression-based and 3) density map estimation based methods. Detection-based methods are inchoate counting techniques, which estimate the number of objects from the detection of object instances. The performance of these methods relies on sophisticated detectors and is not satisfactory owing to the fact that object detection is still in its primitive stage in the early years. In recent years, with the advent of deep learning techniques, object detection has achieved significant progress. Object detectors such as Faster RCNN [37], SSD [39], YOLO [38] have shown remarkable performance in various object detections such as face [42], pedestrian [43] and so on. Counting is completed spontaneously after the detection by simply summarizing the number of detection instances. However, these approaches can only work well in the easy case, i.e., objects are sparsely located and in relatively large scales, they may show poor performance in densely congested scenes, particularly in remote sensing images, since ground objects such as dense buildings, small-vehicles, large-vehicles, and ships are far beyond current detection performance.

Regression-based methods, also known as global-regression based methods, count objects via mapping the high-dimension images into the real number space through regression models such as linear regression [44] or Gaussian mixture regression [45]. Elaborately designed hand-crafted features such as SIFT [46], GLCM [47] are usually employed to represent images. These methods are successful when dealing with objects with homogeneity scales and uniform distributions, however, may fail when objects are with varying scales and cluster distributions.

Lempitsky et al. [16] set a milestone for subsequent counting researches by casting the visual object counting problem as image density estimation, in which the integral of the density map gives the count of objects within it. Following this work, Pham et al. [48] learn a non-linear mapping function by using random forest regression. Recently, with the entering of the deep learning era, many CNN based counting methods have been proposed and achieved great success, especially in crowd counting. Zhang et al. [1] propose a multi-column neural network (MCNN) with three branches, each of which constructed with different kernel sizes for capturing varisized objects. Sindagi et al. [49] improve the MCNN by jointly learning crowd count classification and density map estimation, and integrate them into an end-to-end cascaded network. Sam et al. [5] propose a switching CNN to select the most suitable regressor by training a switch classifier. Instead of designing a wider multi-column network, Li et al. [12] propose to devise a deeper single column network that utilizes dilated convolution to enlarge the receptive fields so as to boost counting performance. In this paper, we also intend to design a CNN based model for estimating density maps of remote sensing images by taking advantage of recently developed techniques such as attention mechanism.

II-B Attention mechanism

Attention mechanism has been incorporated into deep neural networks for improving performance in various computer vision tasks, including image captioning [50, 51], image question answering [52], video analysis [53], image classification [54], object counting [7, 55] and countless others. Bahdanau et al. [56] are among the first to introduce the attention mechanism and successfully apply it to machine translation. Chen et al. [57] propose to encode the spatial- and channel-wise attention sequentially for improving image captioning performance. Wang et al. [58] put forward a non-local neural network to compute the response at a position as a weighted sum of feature maps. Woo et al. [59] devise a convolution block attention module (CBAM) to enrich feature representations. It can be plugged into many feed-forward convolutional networks. The attention mechanism has also been introduced into the counting field. For instance, Liu et al. [7] incorporate an attention module to adaptively decide the appropriate counting mode for different locations on the image based on its real density conditions. Zhang et al. [55] propose an attentional neural field network, in which they introduce the no-local attention module [58] to expand the receptive field to the entire image such that the method can deal with large scale variations. Our work arranges the channel and spatial attention modules in different manners. The rational arrangement will be demonstrated in Section V-C. With the attention module incorporated in our proposed remote sensing object counting framework, we can achieve the goal of highlighting the region of interest and alleviating the interference of cluttered backgrounds.

II-C Dilated convolutions

Dilated convolution has been widely used in a bunch of vision tasks, due to its prominent characteristics of enlarging the receptive field of networks without increasing the computation complexity. Yu et al. [60] design a dilated convolution-based network to capture multi-scale contextual features for better segmenting objects. Song et al. [61] leverage pyramid dilated convolution for video salient object detection. Li et al. [62] utilize dilated convolution for image de-raining. In counting community, CSRNet [12] also employs dilated convolution to design a deep convolutional neural network for crowd counting in congested scenes.

Different from previous approaches, our proposed work incorporates the attention mechanism to capture more contextual features and then concatenates several parallel dilated convolutions with different dilation rates to extract multi-scale features. Furthermore, a set of deformable convolution layers is leveraged to generate high-quality density maps to accurately locate the position of objects, to address the issue that orientation arbitrariness due to overhead perspective in the remote sensing images.

III Methodology

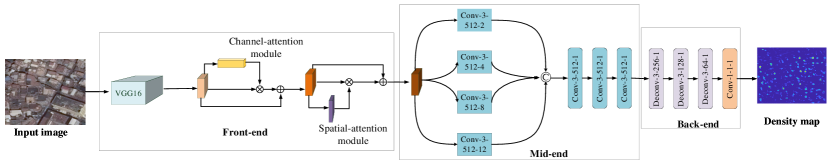

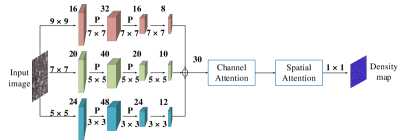

The architecture of the proposed ASPD-Net is illustrated in Fig. 1. It comprises of three stages. The front-end is a truncated VGG-16 [40] incorporated with an attention module to extract features of an input image. The mid-end is a scale pyramid module (SPM) followed by four dilated convolution layers to deal with scale variation. Finally, we equip the back-end with a set of deformable convolution layers for better capturing orientation information and add a convolution layer to generate density map.

III-A Feature extraction with attention (front-end)

For a given remote sensing image with an arbitrary size, we first feed it into a feature extractor, which is composed of the first 10 convolution layers of the pre-trained VGG-16 [40]. The VGG-16 is widely used in counting tasks as backbone to build models for its strong generalization ability [5, 6, 12], thus in this work we also employ VGG-16 as the basis to construct our network. VGGs were initially designed and trained for image classification task [63]. Although it has been proved that VGGs could generalize well to remote sensing images after fun-tuning [64], additional designs should be considered to address counting tasks, since there is a significant gap between counting and classification. The features should be enhanced to better represent crowded objects.

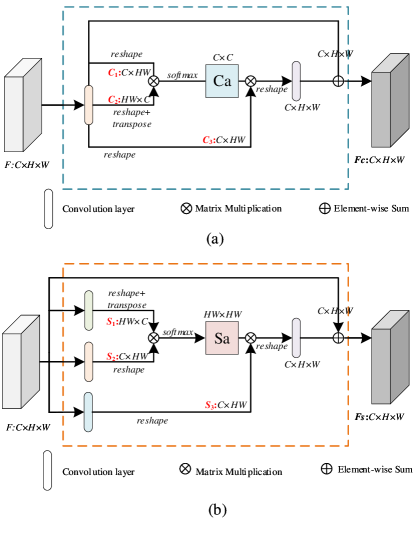

Inspired by recent development in attention mechanism, especially [59] and [65], we incorporate attention modules to achieve the goal of capturing more contextual and high-level semantics. We consider both channel- and spatial-attention, which are described below:

III-A1 Channel-attention

In the extremely dense scenes, textures of the foregrounds are hard to distinguish from that of the backgrounds. Channel-attention could alleviate this problem. The architecture of channel-attention is depicted in Fig. 2(a).

Concretely, for a given intermediate feature map , where represents the number of the channels, and denote the height and width of the feature map. First of all, one convolution layer is executed, and then through reshaping or transpose operations, two feature maps and are obtained. To generate the channel attention map, a matrix multiplication and softmax operations are applied on and . In this way, we obtain a channel attention map with a size of . Specifically, the process can be formulated as follows:

| (1) |

where means the influence of th channel on the th channel. The final weighted feature maps with channel attention module with size of is calculated as:

| (2) |

where is a learnable parameter, which can be leaned from a convolution operation.

III-A2 Spatial-attention

Observing that there have different densities in different locations of the feature map, we further encode long-range dependencies from spatial dimension, which is effective for the performance of the spatial locations. Similar to the operations in the channel-attention aforementioned, the architecture of the spatial-attention is illustrated in Fig. 2(b). However, spatial-attention has some differences from channel-attention in two folds: 1) Instead of only one convolution layer adopted in channel-attention, it has three in the spatial attention operations; 2) In contrast to the size of the channel attention map () is , the size of spatial attention map () is . Specifically, can be computed as follows:

| (3) |

where denotes the influence at the position of the th pixel on the th pixel of the feature map. More similar of the positions means stronger correlations between them. Then the final weighted feature map with spatial attention whose size is can be obtained as below:

| (4) |

where is a learnable parameter, which is leaned with the same operations as in Equ. 2.

III-B Scale pyramid module (mid-end)

There are multiple max-pooling operations in the front-end stage, leading to dramatically decrease in the size of the feature map. The size of the output map is only 1/64 of the input image. This will bring two drawbacks. Firstly, a density map indicates the localization of objects in the image. Pooling operation makes the features invariance to local translations thus is good for classification however is harmful for object localization, in here is a barrier to generating high-quality density map. Secondly, the information of small objects is weakened as the spatial resolution of the feature map decreases, making the network blind to the small objects.

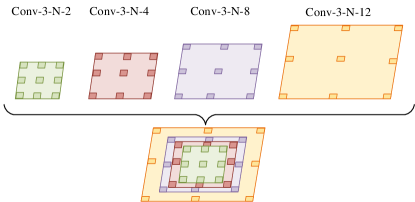

To address these problems, inspired by [66], we introduce a scale pyramid module (SPM) that is built with four parallel dilated convolution layers. The dilated convolution is convolution with holes as illustrated in Fig. 3. It was first introduced by Yu et al. [60] in the segmentation task to expand the receptive fields without losing spatial resolution of feature maps. In addition, there are no extra parameters or computations, making it an excellent solution for dense prediction tasks, e.g., scene parsing [67] and object counting [12, 66].

In this work, all the four layers have the same channels however different dilation rates to capture different scale information. We use dilation rates 2, 4, 8 and 12 as suggested in [66]. In this way, we build a pyramid with different receptive fields that can not only keep the spatial resolution of feature maps unchanged but also can be invariant to scale variations. The structure of the SPM is depicted in Fig. 3.

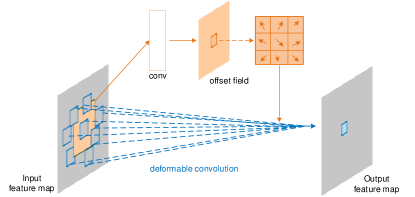

III-C Deformable convolution module (back-end)

Deformable convolution [68] is an operation that adds an offset, whose magnitude can be learnable, on each point in the receptive field of the feature map. After the offset, the shape of the receptive field matches the actual shape of the object rather than a square. The advantage of the offset is that no matter how deformable the object is, the region of convolution always covers the object. The diagram and the visualization of deformable convolution are illustrated in Fig. 4 and Fig. 5, respectively.

For a normal convolution, a given sampling location with convolution kernel of dilation 1, which can be represented as . And then the output feature map on the location can be formulated as

| (5) |

where represents weighted parameters and means the input feature map.

In contrast with normal convolution, deformable convolution adds an adaptive learnable offset , which can be optimized via training [68]. Therefore, the deformable convolution of feature map can be represented as

| (6) |

Specifically, we adopt three deformable convolution layers with the kernel size followed by ReLU activation after each layer. And then a convolution is leveraged to generate the density map. The final counting number of objects can be computed by summing all the pixel values of the density map. With the dynamic sampling scheme in deformable convolution, the orientation arbitrariness due to the overhead perspective in the remote sensing imagery can be well addressed.

III-D Ground truth generation

We generate the ground truth density maps following the procedure of density map generation in previous works [1, 12, 66]. Assuming that there is an object instance at pixel , it can be represented by a delta function . Therefore, for an image with annotations, it can be represented as follows:

| (7) |

To generate the density map , we convolute with a Gaussian kernel, which can be defined as follows:

| (8) |

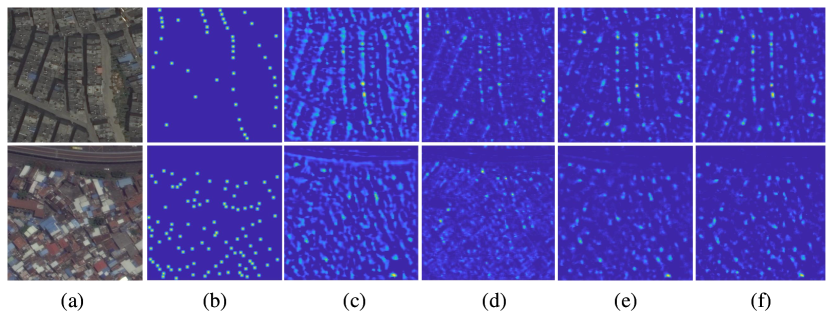

where represents the standard deviation. Empirically, we adopt the fixed kernel with for all the experiments. The ground truth density maps after Gaussian convolution operation are visualized in Fig. 6.

III-E Loss function

We adopt Euclidean distance as loss function to evaluate the difference between the predicted density maps and the ground truths, which is widely adopted by many other counting works [1, 3, 5]. The loss function is defined as follows:

| (9) |

where means the batch size, represents the input image and indicates the trainable parameters, and are an estimated density map and its corresponding ground truth, respectively.

IV Remote sensing object counting (RSOC) dataset

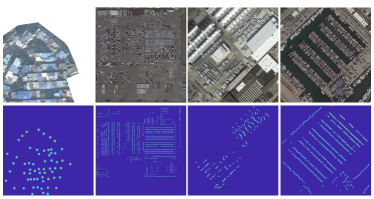

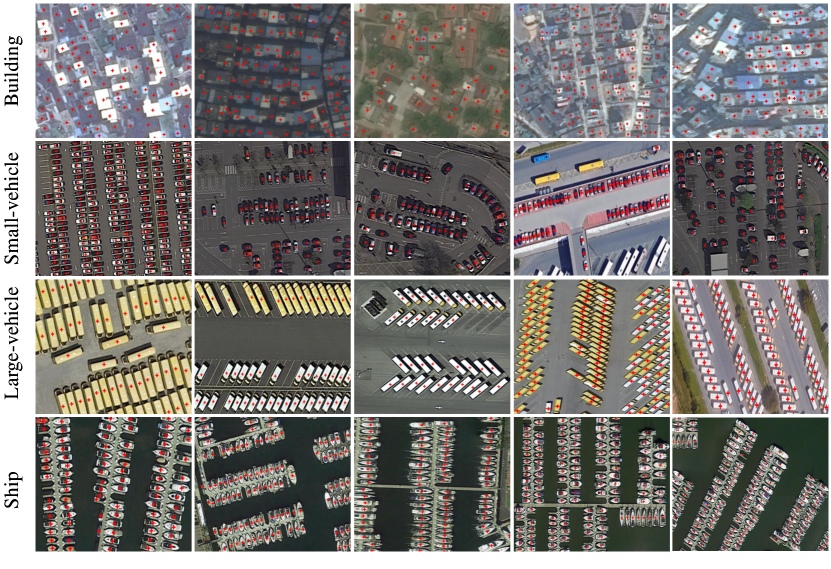

The absence of publicly available dataset especially large-scale datasets seriously limits the progress of object counting in the remote sensing field. Nowadays, there are only a few datasets available for the community. These datasets are either in a small scale or counting can be achieved easily even using an off-the-shelf detector. For example, the oil trees dataset [24] has only 10 images and 1251 instances in total. The few number of instances easily leads to overfitting for deep learners. In spite of 32,716 cars the COWC dataset [28] has, too much contextual information it contains, making it more suitable for detection tasks. CARPK [27] is a newly collected drone-based dataset, which has nearly 90,000 cars with bounding box annotations. The distributes of these objects in this dataset are scattered, thus making it more suitable for detection task rather than counting. More recently, a large-scale aerial image based dataset, named DLR-ACD [26], is proposed for counting task. This dataset is quite large, which contains 226,291 instances. However, there are only 33 images in it. And more importantly, it is annotated for crowd counting and thus cannot be used for geographic object counting tasks. A simple statistics information of these four datasets is given in Table I. To facilitate counting research in remote sensing community, we construct a large-scale remote sensing object counting dataset, termed RSOC. It consists of 3057 images and 286,539 instances in total. To our best knowledge, this is the largest dataset available for the remote sensing object counting task. Some representative samples are presented in Fig. 7.

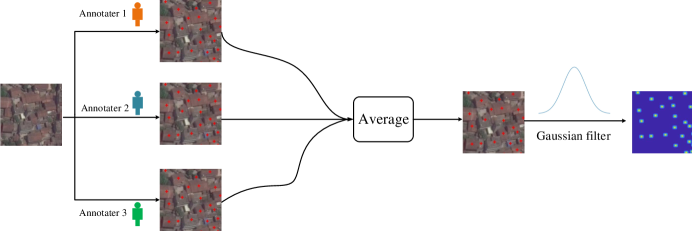

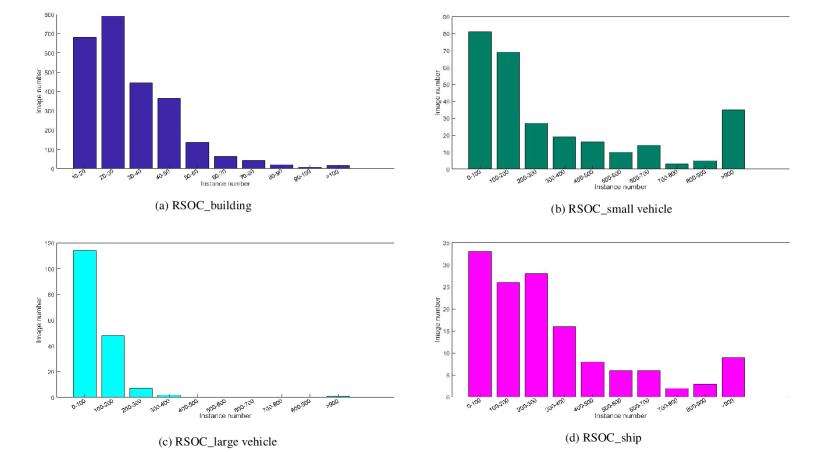

Data collection. Four types of objects involving buildings, small vehicles, large vehicles, and ships are included in our RSOC dataset, these four types are among the main concerns of researches in the remote sensing community. The images of buildings are collected from Google Earth, while the other three categories are collected from the DOTA dataset [33], which is a very large dataset built for object detection in aerial images. During collection, the easy cases, i.e., images containing only disperse distributed objects are removed from the RSOC, since we only focus on cluster instances and the count of those disperse ones can be easily inferred from an off-the-shelf detector. As a consequence, there leaves only hard samples such as crowded inshore ships and intensively packed vehicles in the parking lots. Finally, there are 280 images for small vehicles, 172 images for large vehicles, 137 images for ships are included in our dataset. Incorporating 2468 images collected from Google Earth for buildings, the ROSC dataset has 3057 images in total. For each subset, we divide it into training and testing sets as illustrated in Table I. The instance distribution of each subset is plotted in Fig. 9.

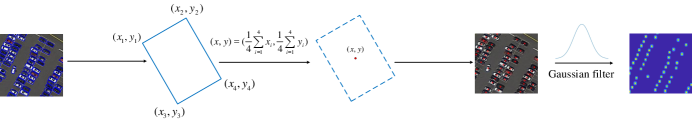

Annotation. To reduce the workload and speed up the annotation, the buildings are annotated with center points. Images from DOTA [33] are labeled with rotated quadrilateral bounding boxes enclosing the objects. The labels are denoted as , where indicates the positions of the vertices of the boxes in the image. We take the centroid of the bounding box as the central location, which can be calculated as follows,

| (10) |

The specific annotation process of our constructed dataset is depicted in Fig. 8.

Statistics. More information on this dataset is given in Table I, from which we can observe that the RSOC dataset has distinct features from other datasets: 1) large data capacity: as mentioned before, the RSOC dataset consists of four categories, 3057 images, and 286,539 instances. It is the largest counting dataset for remote sensing image understanding up to today; 2) large scale variation: the size of objects in the dataset ranges from a dozens of pixels to thousands of pixels, making it extremely challenging for counting; 3) diverse scenes and categories: the dataset covers a variety of scenes including parking lots, towns, villages, harbors and so on. Each scene contains specific annotations such as buildings, vehicles, ships.

| Dataset | Platform | Images | Training/test | Average Resolution | Annotation Format | Count Statistics | ||||

| Total | Min | Average | Max | |||||||

| Olive trees [24] | UAV | 10 | – | 40003000 | circle | 1251 | 107 | 125.1 | 143 | |

| COWC [28] | aerial | – | – | low | center point | 32,716 | – | – | – | |

| CARPK [27] | drone | 1448 | 989/459 | 1280720 | bounding box | 89,777 | 1 | 62 | 188 | |

| DLR-ACD [26] | aerial | 33 | 19/14 | 36195226 | center point | 226,291 | 285 | 6857 | 24,368 | |

| RSOC | Building | satellite | 2468 | 1205/1263 | 512512 | center point | 76,215 | 15 | 30.88 | 142 |

| Small-vehicle | satellite | 280 | 222/58 | 2473 2339 | oriented bounding box | 148,838 | 17 | 531.56 | 8531 | |

| Large-vehicle | satellite | 172 | 108/64 | 1552 1573 | oriented bounding box | 16,594 | 12 | 96.48 | 1336 | |

| Ship | satellite | 137 | 97/40 | 2558 2668 | oriented bounding box | 44,892 | 50 | 327.68 | 1661 | |

V Experiments

In this section, we benchmark the RSOC dataset by conducting extensive experiments on it. In addition, the ASPD-Net is evaluated on the RSOC and compared with previous state-of-the-art counting methods to demonstrate its superiority. Beyond comparison, ablation studies are also provided to verify the effectiveness of ASPD-Net. Besides, we conduct experiments on one popular crowd counting dataset, i.e., ShanghaiTech dataset [1], to further demonstrate the robustness and generalization ability of our proposed approach.

V-A Evaluation metrics

Two widely used metrics, Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE), are employed to evaluate the performance of the proposed and comparison methods. MAE measures the accuracy of the model, while RMSE measures the robustness. These two metrics are defined as follows:

| (11) |

| (12) |

where is the number of test images, denotes the predicted count for the th image and indicates the ground truth count.

V-B Implementation details

We implement the proposed ASPD-Net in PyTorch [69], train and test it on an NVIDIA 2080Ti GPU. The first 10 convolution layers are fine-tuned from VGG-16 [40], and the other layers are initialized through a Gaussian noise with 0.01 standard deviation. ASPD-Net is trained in an end-to-end manner. During training, we employ stochastic gradient descent (SGD) to optimize the network and set the learning rate as le-5. For the building subset, we adopt a batch size of 32 and set a batch size of 1 for the other three subsets. For ShanghaiTech crowd counting dataset, we inherit the training manner in [12]. All training will reach convergence in 400 epochs.

We apply data augmentation to generate more training samples. For each image from the training set, 9 sub-images with 1/4 size of the original image are cropped, of which four are adjacent non-overlapping sub-images and the other five are cropped randomly. After then, a mirror flip is applied to double them. Since the ship, large-vehicle and small-vehicle images are with large sizes, which will lead to out-of-memory of GPU in the training phase, therefore, we resize all the large images into 1024 768 pixels before data augmentation.

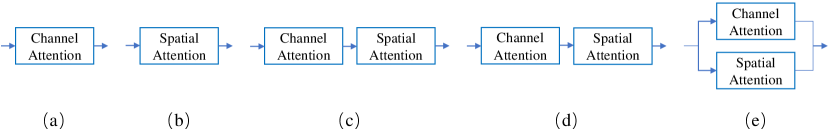

V-C Arrangement of the channel- and spatial-attention

There are several configurations when integrating channel- and spatial-attention, as shown in Fig. 10. Here we test all the combinations on top of the MCNN [1] by embedding attention modules into the MCNN. We choose MCNN because it is easy to implement and train. An example test model is shown in Fig. 11. We conduct experiments on the RSOC_building subset. The results are illustrated in Table II. We can find that incorporating attention module, either spatial or channel attention, could significantly improve the performance by a large margin. Channel attention performs slightly better than spatial attention. Combining two attentions together could further boost the performance. And sequential assemblies are superior to parallel one. This is consistent with [59]. The ‘channel+spatial’ configuration obtains the best result, thus in the following experiments, we employ it as the attention module to embed into the proposed ASPD-Net.

| Method | RSOC_building | |

|---|---|---|

| MAE | RMSE | |

| MCNN [1] | 13.65 | 18.56 |

| MCNN [1]+spatial | 11.41 | 16.09 |

| MCNN [1]+channel | 11.20 | 15.92 |

| MCNN [1]+channelspatial in parallel | 11.21 | 15.83 |

| MCNN [1]+spatial+channel | 11.11 | 15.77 |

| MCNN [1]+channel+spatial | 10.18 | 14.52 |

| Method | RSOC_building | RSOC_small-vehicle | RSOC_large-vehicle | RSOC_ship | ||||

|---|---|---|---|---|---|---|---|---|

| MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | |

| Baseline | 8.00 | 11.78 | 443.72 | 1252.22 | 34.10 | 46.42 | 240.01 | 394.81 |

| Baseline+Att | 7.92 | 11.67 | 439.51 | 1248.95 | 28.75 | 41.63 | 228.45 | 365.37 |

| Baseline+Att+SPM | 7.85 | 11.58 | 436.84 | 1243.73 | 24.38 | 36.54 | 211.58 | 334.53 |

| Baseline+Att+SPM+DCM (ours) | 7.59 | 10.66 | 433.23 | 1238.61 | 18.76 | 31.06 | 193.83 | 318.95 |

V-D Ablation study

To better quantify the contribution of different modules of our method, we conduct an ablation study on the RSOC_building subset with simplified models.

Baseline: We set a baseline method similar to CSRNet [12], which is composed of 10 convolution layers carved from VGG-16 and 6 dilated convolution layers with dilation rate 2.

Baseline+Att: A sequential channel-spatial attention module (Att) is added on top of the baseline method.

Baseline+Att+SPM: The Att module and the scale pyramid module (SPM) are added on the top of the baseline method.

Baseline+Att+SPM+DCM: the proposed ASPD-Net.

The results of the ablation experiments are tabulated in Table III. From the table, we can see that each component in our network contributes to performance improvement. Specifically, the naive baseline method does not perform the most optimal performance. Using the attention module captures the global and local dependencies of the feature maps. The use of scale pyramid module further improves the performance by capturing the multi-scale information. A toy example for the visualization of density maps on RSOC_building subset is depicted in Fig. 12. It can be intuitively observed that by incorporating attention module, scale pyramid module, and deformable convolution module into the unified framework, our proposed ASPDNet can obtain the superior counting performance and accurate predicts.

V-E Comparison with state-of-the-arts on RSOC dataset

We make comparison with several state-of-the-art counting methods, which are first raised for crowed counting, however, they can be applicable for object counting in remote sensing images. We report the results in Table IV. It can be observed that our approach achieves the best performance on the RSOC dataset. Specifically, compared with the second best state-of-the-art method, ASPDNet improves the performance with 1.94% MAE and 7.14% RMSE on RSOC_building subset, 0.47% MAE and 0.77% RMSE on RSOC_small vehicle subset, 35.40% MAE and 33.09% RMSE on RSOC_large vehicle subset and 3.86% MAE and 4.18% RMSE on RSOC_ship subset, respectively. From the evaluation metrics, we can find that even though we have achieved the best performance on the proposed RSOC dataset, there is still a large margin to be improved, especially for small objects such as small-vehicles and ships. This is consistent with other counting tasks that higher congested the scene is, more challenging the counting task will be. This also indicates the challenging nature of the proposed RSOC is and we hope this will encourage more research efforts to put on our dataset.

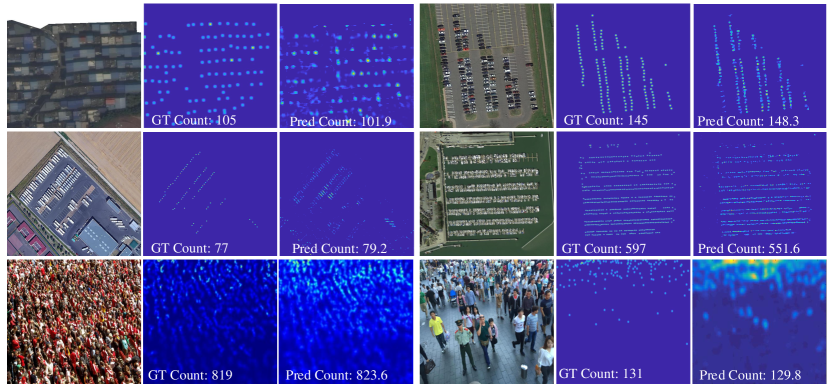

Figs. 13 depicts the visualization results for some sample images from RSOC_building, RSOC_small vehicle, RSOC_large vehicle and RSOC_ship subsets. It can be observed that our proposed method obtains high-quality density maps with small count errors. It also indicates even under the extreme conditions of scale variation, complex clutter backgrounds, and orientation arbitrariness, our proposed approach still performs strong robustness capacity. Meanwhile, from the generated density maps, we can find that our model has accurate localization ability to some extent.

| Building | Small vehicle | Large vehicle | Ship | |||||

|---|---|---|---|---|---|---|---|---|

| MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | |

| MCNN [1] | 13.65 | 16.56 | 488.65 | 1317.44 | 36.56 | 55.55 | 263.91 | 412.30 |

| CMTL [49] | 12.78 | 15.99 | 490.53 | 1321.11 | 61.02 | 78.25 | 251.17 | 403.07 |

| CSRNet [12] | 8.00 | 11.78 | 443.72 | 1252.22 | 34.10 | 46.42 | 240.01 | 394.81 |

| SANet [11] | 29.01 | 32.96 | 497.22 | 1276.66 | 62.78 | 79.65 | 302.37 | 436.91 |

| SFCN [70] | 8.94 | 12.87 | 440.70 | 1248.27 | 33.93 | 49.74 | 240.16 | 394.81 |

| SPN [66] | 7.74 | 11.48 | 445.16 | 1252.92 | 36.21 | 50.65 | 241.43 | 392.88 |

| SCAR [65] | 26.90 | 31.35 | 497.22 | 1276.65 | 62.78 | 79.64 | 302.37 | 436.92 |

| CAN [71] | 9.12 | 13.38 | 457.36 | 1260.39 | 34.56 | 49.63 | 282.69 | 423.44 |

| SFANet [72] | 8.18 | 11.75 | 435.29 | 1284.15 | 29.04 | 47.01 | 201.61 | 332.87 |

| ASPDNet (proposed) | 7.59 | 10.66 | 433.23 | 1238.61 | 18.76 | 31.06 | 193.83 | 318.95 |

V-F Comparison with state-of-the-arts on crowd counting dataset

To further demonstrate the effectiveness and generalization ability of our proposed method, we apply it on one crowd counting dataset, i.e., ShanghaiTech dataset [1]. This dataset is composed of 1,198 annotated images with a total of 330,165 presidents, which is split into two parts, Part_A and Part_B. Part_A contains 482 highly congested images which are randomly crawled from the Internet, where 400 images serve as training and the remaining are for testing. Part_B contains 716 image with relatively sparse people which are taken from the busy streets of metropolitan areas in Shanghai, China, where 400 images are for training and the rest 316 are for testing.

We report the comparison results in Table V. It can be seen that compared with the state-of-the-art methods, our proposed method can obtain the best results, which demonstrates great robustness and generalization ability. Specifically, compared with the baseline method, CSRNet [12], it gains 10.85% / 16.35% (MAE / RMSE) on Part_A, and 32.08% / 34.38% (MAE / RMSE) on Part_B, respectively. Some visualizations of density maps are depicted in Fig. 13, which shows the proposed method still can generate accurate predicts for diverse objects from sparse to dense scenarios.

| ShanghaiTech Part_A | ShanghaiTech Part__B | |||

| MAE | RMSE | MAE | RMSE | |

| MCNN [1] | 110.2 | 173.2 | 26.4 | 41.3 |

| CMTL [49] | 101.3 | 152.4 | 20.0 | 31.1 |

| Switch-CNN [5] | 90.4 | 135.0 | 21.6 | 33.4 |

| IGCNN [73] | 72.5 | 118.2 | 13.6 | 21.1 |

| CSRNet [12] | 68.2 | 115.0 | 10.6 | 16.0 |

| SANet [11] | 67.0 | 104.5 | 8.4 | 13.6 |

| SFCN [70] | 64.8 | 107.5 | 7.6 | 13.0 |

| SPN [66] | 61.7 | 99.5 | 9.4 | 14.4 |

| SCAR [65] | 66.3 | 114.1 | 9.5 | 15.2 |

| ADCrowdNet [74] | 70.9 | 115.2 | 7.7 | 12.9 |

| BL [75] | 62.8 | 101.8 | 7.7 | 12.7 |

| ASPDNet (proposed) | 60.8 | 96.2 | 7.2 | 10.5 |

V-G Standard deviations experiments

To validate the stability of our proposed method, following [76], we also report the standard deviations of our methods on the constructed dataset. See Table VI for detail, note that the standard deviations are computed using 5 trials.

| Building | Small vehicle | Large vehicle | Ship | |||||

| MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | |

| Ours | 7.590.8 | 10.661.7 | 433.230.6 | 1238.611.3 | 18.760.5 | 31.061.2 | 193.830.7 | 318.951.5 |

VI Conclusion and future work

Counting the object instance in remote sensing images is a remarkable significant yet scientifically challenging topic. To achieve this, we devise an ASPD-Net by incorporating attention mechanism, scale pyramid module, and deformable convolution module into a unified framework. Moreover, considering that the development of this field has been limited mainly due to the scarcity of large-scale datasets with accurately annotated. To remedy this, we present a new large-scale remote sensing object counting dataset which encompasses 4 object categories with 3057 images, and 286,539 instances in total. Extensive experimental results including quantitative and qualitative demonstrate the effectiveness and superiority of our proposed approach compared with the off-the-shelf state-of-the-art methods for crowd counting. In addition, to further validate the effectiveness of each component and the generalization ability of our designed ASPD-Net, some ablation studies on our constructed RSOC dataset and experiments on one widely used crowd counting dataset are also implemented. We expect that our contribution can bridge the gap and guide the new developments on the object counting in remote sensing imagery.

Nevertheless, there are some drawbacks such as class unbalanced in our proposed RSOC dataset, suboptimal performance on small object counting of the proposed method. Therefore, in the future, we plan to collect more images from various platforms, and devise better model to alleviate the small object counting problems. Meanwhile, we intend to design dedicated class-agnostic algorithms for remote sensing object counting.

References

- [1] Y. Zhang, D. Zhou, S. Chen, S. Gao, and Y. Ma, “Single-image crowd counting via multi-column convolutional neural network,” in CVPR, 2016, pp. 589–597.

- [2] D. Onoro-Rubio and R. J. López-Sastre, “Towards perspective-free object counting with deep learning,” in ECCV. Springer, 2016, pp. 615–629.

- [3] L. Boominathan, S. S. Kruthiventi, and R. V. Babu, “Crowdnet: A deep convolutional network for dense crowd counting,” in ACM MM. ACM, 2016, pp. 640–644.

- [4] D. Kang and A. Chan, “Crowd counting by adaptively fusing predictions from an image pyramid,” arXiv preprint arXiv:1805.06115, 2018.

- [5] D. B. Sam, S. Surya, and R. V. Babu, “Switching convolutional neural network for crowd counting,” in CVPR. IEEE, 2017, pp. 4031–4039.

- [6] V. A. Sindagi and V. M. Patel, “Generating high-quality crowd density maps using contextual pyramid cnns,” in ICCV, 2017, pp. 1861–1870.

- [7] J. Liu, C. Gao, D. Meng, and A. G. Hauptmann, “Decidenet: Counting varying density crowds through attention guided detection and density estimation,” in CVPR, 2018, pp. 5197–5206.

- [8] M. Hossain, M. Hosseinzadeh, O. Chanda, and Y. Wang, “Crowd counting using scale-aware attention networks,” in WACV. IEEE, 2019, pp. 1280–1288.

- [9] L. Zhang, M. Shi, and Q. Chen, “Crowd counting via scale-adaptive convolutional neural network,” in WACV. IEEE, 2018, pp. 1113–1121.

- [10] J. Sang, W. Wu, H. Luo, H. Xiang, Q. Zhang, H. Hu, and X. Xia, “Improved crowd counting method based on scale-adaptive convolutional neural network,” IEEE Access, 2019.

- [11] X. Cao, Z. Wang, Y. Zhao, and F. Su, “Scale aggregation network for accurate and efficient crowd counting,” in ECCV, 2018, pp. 734–750.

- [12] Y. Li, X. Zhang, and D. Chen, “CSRNet: Dilated convolutional neural networks for understanding the highly congested scenes,” in CVPR, 2018, pp. 1091–1100.

- [13] R. R. Varior, B. Shuai, J. Tighe, and D. Modolo, “Scale-aware attention network for crowd counting,” CVPR, 2019.

- [14] Y. Wang and Y. Zou, “Fast visual object counting via example-based density estimation,” in ICIP. IEEE, 2016, pp. 3653–3657.

- [15] E. Walach and L. Wolf, “Learning to count with cnn boosting,” in ECCV. Springer, 2016, pp. 660–676.

- [16] V. Lempitsky and A. Zisserman, “Learning to count objects in images,” in NIPS, 2010, pp. 1324–1332.

- [17] C. Arteta, V. Lempitsky, and A. Zisserman, “Counting in the wild,” in ECCV. Springer, 2016, pp. 483–498.

- [18] H. Zhang, Z. Kyaw, S.-F. Chang, and T.-S. Chua, “Visual translation embedding network for visual relation detection,” in CVPR, 2017, pp. 5532–5540.

- [19] S. Zhang, G. Wu, J. P. Costeira, and J. M. Moura, “Fcn-rlstm: Deep spatio-temporal neural networks for vehicle counting in city cameras,” in ICCV, 2017, pp. 3667–3676.

- [20] R. Guerrero-Gómez-Olmedo, B. Torre-Jiménez, R. López-Sastre, S. Maldonado-Bascón, and D. Onoro-Rubio, “Extremely overlapping vehicle counting,” in PRIA. Springer, 2015, pp. 423–431.

- [21] G. French, M. Fisher, M. Mackiewicz, and C. Needle, “Convolutional neural networks for counting fish in fisheries surveillance video,” MVAB, pp. 1–7, 2015.

- [22] B. Zhan, D. N. Monekosso, P. Remagnino, S. A. Velastin, and L.-Q. Xu, “Crowd analysis: a survey,” MVA, vol. 19, no. 5-6, pp. 345–357, 2008.

- [23] Y. Bazi, H. Al-Sharari, and F. Melgani, “An automatic method for counting olive trees in very high spatial remote sensing images,” in IGARSS, vol. 2. IEEE, 2009, pp. 125–128.

- [24] E. Salamí, A. Gallardo, G. Skorobogatov, and C. Barrado, “On-the-fly olive trees counting using a uas and cloud services,” Remote Sens., vol. 11, no. 3, p. 316, 2019.

- [25] N. A. Mubin, E. Nadarajoo, H. Z. M. Shafri, and A. Hamedianfar, “Young and mature oil palm tree detection and counting using convolutional neural network deep learning method,” IJRS, vol. 40, no. 19, pp. 7500–7515, 2019.

- [26] R. Bahmanyar, E. Vig, and P. Reinartz, “Mrcnet: Crowd counting and density map estimation in aerial and ground imagery,” in BMVC-ODRSS, 2019, pp. 1–12.

- [27] M.-R. Hsieh, Y.-L. Lin, and W. H. Hsu, “Drone-based object counting by spatially regularized regional proposal network,” in ICCV, 2017, pp. 4145–4153.

- [28] T. N. Mundhenk, G. Konjevod, W. A. Sakla, and K. Boakye, “A large contextual dataset for classification, detection and counting of cars with deep learning,” in ECCV. Springer, 2016, pp. 785–800.

- [29] M. M. Rathore, A. Ahmad, A. Paul, and S. Rho, “Urban planning and building smart cities based on the internet of things using big data analytics,” Computer Networks, vol. 101, pp. 63–80, 2016.

- [30] J.-F. Pekel, A. Cottam, N. Gorelick, and A. S. Belward, “High-resolution mapping of global surface water and its long-term changes,” Nature, vol. 540, no. 7633, p. 418, 2016.

- [31] L. Guan, Y. Ding, X. Feng, and H. Zhang, “Digital beijing construction and application based on the urban three-dimensional modelling and remote sensing monitoring technology,” in IGARSS. IEEE, 2016, pp. 7299–7302.

- [32] Y. Fan, Q. Wen, W. Wang, P. Wang, L. Li, and P. Zhang, “Quantifying disaster physical damage using remote sensing data—a technical work flow and case study of the 2014 ludian earthquake in china,” International Journal of Disaster Risk Science, vol. 8, no. 4, pp. 471–488, 2017.

- [33] G.-S. Xia, X. Bai, J. Ding, Z. Zhu, S. Belongie, J. Luo, M. Datcu, M. Pelillo, and L. Zhang, “Dota: A large-scale dataset for object detection in aerial images,” in CVPR, 2018, pp. 3974–3983.

- [34] K. Li, G. Wan, G. Cheng, L. Meng, and J. Han, “Object detection in optical remote sensing images: A survey and a new benchmark,” ISPRS P&RS, vol. 159, pp. 296–307, 2020.

- [35] G.-S. Xia, J. Hu, F. Hu, B. Shi, X. Bai, Y. Zhong, L. Zhang, and X. Lu, “Aid: A benchmark data set for performance evaluation of aerial scene classification,” TGRS, vol. 55, no. 7, pp. 3965–3981, 2017.

- [36] G. Cheng, J. Han, and X. Lu, “Remote sensing image scene classification: Benchmark and state of the art,” Proc. of the IEEE, vol. 105, no. 10, pp. 1865–1883, 2017.

- [37] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” in NIPS, 2015, pp. 91–99.

- [38] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: Unified, real-time object detection,” in CVPR, 2016, pp. 779–788.

- [39] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu, and A. C. Berg, “Ssd: Single shot multibox detector,” in ECCV. Springer, 2016, pp. 21–37.

- [40] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- [41] G. Gao, Q. Liu, and Y. Wang, “Counting dense objects in remote sensing images,” in ICASSP, 2020.

- [42] S. Yang, P. Luo, C.-C. Loy, and X. Tang, “Wider face: A face detection benchmark,” in CVPR, 2016, pp. 5525–5533.

- [43] P. Dollar, C. Wojek, B. Schiele, and P. Perona, “Pedestrian detection: An evaluation of the state of the art,” TPAMI, vol. 34, no. 4, pp. 743–761, 2012.

- [44] N. Paragios and V. Ramesh, “A mrf-based approach for real-time subway monitoring,” in CVPR, vol. 1. IEEE, 2001, pp. I–I.

- [45] Y. Tian, L. Sigal, H. Badino, F. De la Torre, and Y. Liu, “Latent gaussian mixture regression for human pose estimation,” in ACCV. Springer, 2010, pp. 679–690.

- [46] D. G. Lowe et al., “Object recognition from local scale-invariant features.” in ICCV, vol. 99, no. 2, 1999, pp. 1150–1157.

- [47] R. M. Haralick, K. Shanmugam et al., “Textural features for image classification,” IEEE Trans. Syst., Man, Cybern., no. 6, pp. 610–621, 1973.

- [48] V.-Q. Pham, T. Kozakaya, O. Yamaguchi, and R. Okada, “Count forest: Co-voting uncertain number of targets using random forest for crowd density estimation,” in ICCV, 2015, pp. 3253–3261.

- [49] V. A. Sindagi and V. M. Patel, “Cnn-based cascaded multi-task learning of high-level prior and density estimation for crowd counting,” in AVSS. IEEE, 2017, pp. 1–6.

- [50] K. Xu, J. Ba, R. Kiros, K. Cho, A. Courville, R. Salakhudinov, R. Zemel, and Y. Bengio, “Show, attend and tell: Neural image caption generation with visual attention,” in ICML, 2015, pp. 2048–2057.

- [51] Q. You, H. Jin, Z. Wang, C. Fang, and J. Luo, “Image captioning with semantic attention,” in CVPR, 2016, pp. 4651–4659.

- [52] Z. Yang, X. He, J. Gao, L. Deng, and A. Smola, “Stacked attention networks for image question answering,” in CVPR, 2016, pp. 21–29.

- [53] Y. Huang, X. Cao, X. Zhen, and J. Han, “Attentive temporal pyramid network for dynamic scene classification,” in AAAI, vol. 33, 2019, pp. 8497–8504.

- [54] F. Wang, M. Jiang, C. Qian, S. Yang, C. Li, H. Zhang, X. Wang, and X. Tang, “Residual attention network for image classification,” in CVPR, 2017, pp. 3156–3164.

- [55] A. Zhang, L. Yue, J. Shen, F. Zhu, X. Zhen, X. Cao, and L. Shao, “Attentional neural fields for crowd counting,” in ICCV, 2019, pp. 5714–5723.

- [56] D. Bahdanau, K. Cho, and Y. Bengio, “Neural machine translation by jointly learning to align and translate,” arXiv preprint arXiv:1409.0473, 2014.

- [57] L. Chen, H. Zhang, J. Xiao, L. Nie, J. Shao, W. Liu, and T.-S. Chua, “Sca-cnn: Spatial and channel-wise attention in convolutional networks for image captioning,” in CVPR, 2017, pp. 5659–5667.

- [58] X. Wang, R. Girshick, A. Gupta, and K. He, “Non-local neural networks,” in CVPR, 2018, pp. 7794–7803.

- [59] S. Woo, J. Park, J.-Y. Lee, and I. So Kweon, “CBAM: Convolutional block attention module,” in ECCV, 2018, pp. 3–19.

- [60] F. Yu and V. Koltun, “Multi-scale context aggregation by dilated convolutions,” ICLR, 2015.

- [61] H. Song, W. Wang, S. Zhao, J. Shen, and K.-M. Lam, “Pyramid dilated deeper convlstm for video salient object detection,” in ECCV, 2018, pp. 715–731.

- [62] X. Li, J. Wu, Z. Lin, H. Liu, and H. Zha, “Recurrent squeeze-and-excitation context aggregation net for single image deraining,” in ECCV, 2018, pp. 254–269.

- [63] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein et al., “Imagenet large scale visual recognition challenge,” IJCV, vol. 115, no. 3, pp. 211–252, 2015.

- [64] X. Liu, M. Chi, Y. Zhang, and Y. Qin, “Classifying high resolution remote sensing images by fine-tuned vgg deep networks,” in IGARSS. IEEE, 2018, pp. 7137–7140.

- [65] J. Gao, Q. Wang, and Y. Yuan, “Scar: Spatial-/channel-wise attention regression networks for crowd counting,” Neurocomputing, vol. 363, pp. 1–8, 2019.

- [66] X. Chen, Y. Bin, N. Sang, and C. Gao, “Scale pyramid network for crowd counting,” in WACV. IEEE, 2019, pp. 1941–1950.

- [67] H. Zhao, J. Shi, X. Qi, X. Wang, and J. Jia, “Pyramid scene parsing network,” in CVPR, 2017, pp. 2881–2890.

- [68] J. Dai, H. Qi, Y. Xiong, Y. Li, G. Zhang, H. Hu, and Y. Wei, “Deformable convolutional networks,” in ICCV, 2017, pp. 764–773.

- [69] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga et al., “Pytorch: An imperative style, high-performance deep learning library,” in NeurIPS, 2019, pp. 8024–8035.

- [70] Q. Wang, J. Gao, W. Lin, and Y. Yuan, “Learning from synthetic data for crowd counting in the wild,” in CVPR, 2019, pp. 8198–8207.

- [71] W. Liu, M. Salzmann, and P. Fua, “Context-aware crowd counting,” CVPR, 2019.

- [72] L. Zhu, Z. Zhao, C. Lu, Y. Lin, Y. Peng, and T. Yao, “Dual path multi-scale fusion networks with attention for crowd counting,” arXiv preprint arXiv:1902.01115, 2019.

- [73] D. Babu Sam, N. N. Sajjan, R. Venkatesh Babu, and M. Srinivasan, “Divide and grow: capturing huge diversity in crowd images with incrementally growing cnn,” in CVPR, 2018, pp. 3618–3626.

- [74] N. Liu, Y. Long, C. Zou, Q. Niu, L. Pan, and H. Wu, “Adcrowdnet: An attention-injective deformable convolutional network for crowd understanding,” CVPR, 2019.

- [75] Z. Ma, X. Wei, X. Hong, and Y. Gong, “Bayesian loss for crowd count estimation with point supervision,” in ICCV, 2019, pp. 6142–6151.

- [76] V. A. Sindagi, R. Yasarla, D. S. Babu, R. V. Babu, and V. M. Patel, “Learning to count in the crowd from limited labeled data,” in ECCV, 2020.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ec423bc6-b8f1-49c5-a5bf-1f0b2cd3cdd3/x15.png) |

Guangshuai Gao received the B.Sc. degree in applied physics from college of science and the M.Sc. degree in signal and information processing from the School of Electronic and Information Engineering, from the Zhongyuan University of Technology, Zhengzhou, China, in 2014 and 2017, respectively. He is currently pursuing the Ph.D. degree with the Laboratory of Intelligent Recognition and Image Processing, Beijing Key Laboratory of Digital Media, School of Computer Science and Engineering, Beihang University. His research interests include image processing, pattern recognition, remote sensing image analysis and digital machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ec423bc6-b8f1-49c5-a5bf-1f0b2cd3cdd3/x16.png) |

Qingjie Liu received the B.S. degree in computer science from Hunan University, Changsha, China and the Ph.D. degree in computer science from Beihang University, Beijing, China. He is currently an Assistant Professor with the School of Computer Science and Engineering, Beihang University. He is also a Distinguished Research Fellow with the Hangzhou Institute of Innovation, Beihang University, Hangzhou. His current research interests include remote sensing image analysis, pattern recognition, and computer vision. He is a member of the IEEE. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/ec423bc6-b8f1-49c5-a5bf-1f0b2cd3cdd3/x17.png) |

Yunhong Wang received the B.S. degree from Northwestern Polytechnical University, Xi’an, China, in 1989, and the M.S. and Ph.D. degrees from the Nanjing University of Science and Technology, Nanjing, China, in 1995 and 1998, respectively, all in electronics engineering. She was with the National Laboratory of Pattern Recognition, Institute of Automation, Chinese Academy of Sciences, Beijing, China, from 1998 to 2004. Since 2004, she has been a Professor with the School of Computer Science and Engineering, Beihang University, Beijing, where she is currently the Director of Laboratory of Intelligent Recognition and Image Processing, Beijing Key Laboratory of Digital Media. Her research results have published at prestigious journals and prominent conferences, such as the IEEE TRANSACTIONS ON PATTERN ANALYS IS AND MACHINE INTELLIGENCE (TPAMI), TRANSACTIONS ON IMAGE PROCESSING (TIP), TRANSACTIONS ON INFORMATION FORENSICS AND SECURITY (TIFS), Computer Vision and Pattern Recognition (CVPR), International Conference on Computer Vision (ICCV), and European Conference on Computer Vision (ECCV). Her research interests include biometrics, pattern recognition, computer vision, data fusion, and image processing. She is a Fellow of the IEEE. |