Credit migration: Generating generators

Abstract

Markovian credit migration models are a reasonably standard tool nowadays, but there are fundamental difficulties with calibrating them. We show how these are resolved using a simplified form of matrix generator and explain why risk-neutral calibration cannot be done without volatility information. We also show how to use elementary ideas from differential geometry to make general inferences about calibration stability.

This is the longer version of a paper published in RISK (Feb 2021).

Introduction

Credit markets face uncertain times, and the current upset caused by the coronavirus pandemic is likely to result in deterioration of the credit fundamentals for many issuers. Although there has been a huge rally since the end of the Global Financial Crisis, which now seems a distant memory, there have been upsets in the Eurozone (2011), and in commodity and energy markets (2014–16), while in emerging markets there have been downgrades in the sovereigns of Brazil, Russia and Turkey. Even during the benign year of 2019, the great majority of LatAm corporate names on rating-watch were potential downgrades rather than upgrades (see e.g. the Credit Suisse Latin America Chartbook). It is worthwhile, we think, to revisit the subject of credit migration.

A standard modelling framework, introduced by Jarrow et al. [Jarrow97] over twenty years ago, uses a Markov chain, parametrised by a generator matrix, to represent the evolution of the credit rating of an issuer. A good overview of subsequent developments, including its extension to multivariate settings, is given by [Bielecki11]; in terms of how it relates to the credit markets and default history, an excellent series of annual reports is produced by Standard & Poor's, of which the most recent is [SandP19]. In the taxonomy of credit models, the ratings-based Markov chain model is best understood as a sort of reduction of the credit barrier models, in the sense that the firm value, or distance to default, is replaced by the credit rating. This reduces the dimensionality from a continuum of possible default distances to a small number of rating states, a natural idea as credit rating is a convenient way of thinking about a performing issuer.

The Markov chain model can be used in a number of ways, making it of general interest:

• Econometrically, to tie potential credit losses to econometric indicators and thence to bank capital requirements [Fei13];

• Risk management of vanilla and exotic credit portfolios;

• Valuation of structured credit products. It is worth bearing in mind that although the synthetic CDO market is a fraction of its former self, the CLO market is still thriving, with new CLOs being issued frequently. Further, the ‘reg-cap’ sector (trades providing regulatory capital relief for banks) is growing, and requires the pricing and risk management of contingent credit products;

• Understanding the impact of rating-dependent collateral calls in XVA;

• Stripping the credit curve from bond prices, as a way of constructing sensibly-shaped yield curves for different ratings and tenors.

The model can, therefore, be used in both subjective (historical) and market-implied (risk-neutral) modelling, and we shall consider both here. Nevertheless there are several difficulties that have not been discussed in the literature, detracting from the utility of the model and limiting its application hitherto:

(I) The number of free parameters is uncomfortably high (with $n$ states plus default it is $n^2$);

(II) Computation of transition probabilities requires the generator matrix to be exponentiated, but this is a trappy subject [Moler03];

(III) There are algebraic hurdles associated with trying to make a generator matrix time-varying.

Additionally the following fundamental matters are insufficiently understood:

(IV) What is the best way to calibrate to an empirical (historical) transition matrix, and what level of accuracy may reasonably be asked for?

(V) Model calibration is one of the most important disciplines in quantitative finance, and yet no serious attempt has been made to answer the following: Can we uniquely identify the risk-neutral generator from the term structure of credit spreads for each rating? If not, what extra information is needed?

We address all of these here, solving most of the above problems using a new parametrisation in which a tridiagonal generator matrix is coupled with a Lévy stochastic time change (we call this TDST).

1 Tridiagonal–stochastic time change (TDST) model

We consider an $n$-states-plus-default continuous-time Markov chain and write $G$ for the generator matrix with the $(n+1)$th state denoting default (we will write this state as $D$). The off-diagonal elements of $G$ must be nonnegative and the rows sum to zero; the last row is all zero because default is an absorbing state. The probability of default in time $t$, conditional on starting at state $i$, is the $i$th element of the last column of the matrix $e^{Gt}$: in other words $[e^{Gt}]_{iD}$.

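Let us begin by writing the generator matrix in the form

$$G = \begin{pmatrix} A & a \\ 0 & 0 \end{pmatrix} \tag{1}$$

where $A$ is an $n\times n$ matrix, and the column vector $a$ is such that the rows of $G$ sum to zero; the offdiagonal elements of $A$, and the elements of $a$, must of course be nonnegative. The $t$-period transition matrix is given by

$$e^{Gt} = \begin{pmatrix} e^{At} & t\,h(At)\,a \\ 0 & 1 \end{pmatrix}$$

where the function $h$ is defined by $h(z) = (e^z-1)/z$. As is apparent, we have used a matrix exponential, and also the function $h$, and it is worth making some general remarks about analytic functions of matrices.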

Let $f$ be a function analytic on a domain $\Omega\subseteq\mathbb{C}$. Let $B(z_0,r)$ denote the open disc of centre $z_0$ and radius $r$, and assume that this lies inside $\Omega$. Then $f$ possesses a Taylor series $f(z)=\sum_{k=0}^{\infty} c_k (z-z_0)^k$ convergent for $|z-z_0| < r$. It is immediate, proven for example by reduction to Jordan normal form [Cohn84], that the expansion

$$f(M) = \sum_{k=0}^{\infty} c_k\,(M - z_0 I)^k$$

enjoys the same convergence provided that all the eigenvalues of $M$ lie in $B(z_0,r)$. We can therefore write $f(M)$ without ambiguity, and will use this notation henceforth. This construction establishes the existence of the matrix exponential ($\Omega=\mathbb{C}$ and $r=\infty$), though it does not at all make it a good computational method, because roundoff errors can be substantial: for example, in computing $e^{x}$ for large negative $x$ the roundoff error is far in excess of the correct answer. Taylor series expansion is one of the many bad ways of calculating the matrix exponential [Higham08, Moler03], a point that is routinely ignored. A better idea is

$$e^{M} = \lim_{m\to\infty}\Big(I+\frac{M}{m}\Big)^{m} = \lim_{m\to\infty}\Big(I-\frac{M}{m}\Big)^{-m} \tag{2}$$

and in each case computation is easiest when $m$ is a power of two because it can be accomplished by repeated squaring. The second expression is slightly harder to calculate as it requires a matrix inverse, but it has an advantage: for any positive integer $m$, $(I-Gt/m)^{-m}$ is a valid transition matrix whereas $(I+Gt/m)^{m}$ may not be.
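The repeated-squaring idea can be sketched in a few lines. This is a minimal illustration, taking (2) to be the pair of limits $(I+M/m)^m$ and $(I-M/m)^{-m}$; the function name `expm_limit` and the 3-states-plus-default generator are hypothetical and chosen only for illustration.

```python
import numpy as np

def expm_limit(M, k=20, implicit=True):
    """Approximate exp(M) using m = 2**k steps.

    implicit=True  uses (I - M/m)^(-m)  (one matrix inverse, then squaring)
    implicit=False uses (I + M/m)^m
    """
    m = 2 ** k
    I = np.eye(M.shape[0])
    P = np.linalg.inv(I - M / m) if implicit else I + M / m
    for _ in range(k):          # k squarings raise P to the power 2**k
        P = P @ P
    return P

# Hypothetical 3-states-plus-default generator (illustrative numbers only).
G = np.array([[-0.11, 0.10, 0.00, 0.01],
              [0.05, -0.20, 0.12, 0.03],
              [0.00, 0.10, -0.40, 0.30],
              [0.00, 0.00, 0.00, 0.00]])

P1 = expm_limit(G)                                          # one-period matrix
coarse_explicit = expm_limit(10 * G, k=0, implicit=False)   # I + 10G
coarse_implicit = expm_limit(10 * G, k=0, implicit=True)    # (I - 10G)^(-1)

print(P1.round(4))
print("smallest entry, explicit form:", coarse_explicit.min())
print("smallest entry, implicit form:", coarse_implicit.min())
```

With a deliberately coarse step ($m=1$ on a ten-period horizon) the explicit form has negative diagonal entries, whereas the implicit form is still a valid transition matrix, which is the advantage noted above.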

Having discussed the matrix exponential, we also have something to say about its inverse. It is in principle possible to write down a $t$-period (commonly one year) transition matrix $P$ and ask if it comes from a generator $G$, which amounts to finding the matrix logarithm of $P$. If such a generator exists then $P$ can be raised to any real positive power, and is sometimes said to be embeddable. (‘Embedding’: there exists a smooth map $\Phi$ from $[0,\infty)$ into the space of valid transition matrices, obeying $\Phi(u+v)=\Phi(u)\Phi(v)$ and $\Phi(t)=P$. It is, of course, $\Phi(u)=e^{Gu}$.) Not all valid transition matrices are embeddable. By identifying a generator at the outset we avoid this problem.

Another important thread is the use of diagonalisation, implicit in the above discussion because we have already mentioned eigenvalues. If $M=V\Lambda V^{-1}$ for some invertible $V$ and diagonal $\Lambda$ then $f(M)=Vf(\Lambda)V^{-1}$. However, while a real matrix is generically diagonalisable (meaning that if it isn’t, it is arbitrarily close to one that is), it may be that $V$ is almost singular. There is also the minor irritation that $V$ and $\Lambda$ may not be real. This method is another popular way of exponentiating matrices, but again one that is only reliable in certain contexts, for example symmetric matrices: more of this presently.

A simplification of the model is to assume that $A$, the $n\times n$ block of the generator governing transitions between non-default states, is tridiagonal: that is to say the only nonzero elements are those immediately above, below, or on the leading diagonal. We define a transitive generator to be one in which all the super- and sub-diagonal elements of $A$ are strictly positive. An intransitive generator does not permit certain transitions to neighbouring states to occur: informally this causes the rating to ‘get stuck’ and can be ruled out on fundamental grounds, though it does retain some interest as a limiting case. Also, we define a restricted generator to be one in which all the rows of $A$, except for the $n$th, sum to zero: equivalently, the vector $a$ of transition rates to default is zero except for its bottom element. Both these simplified models have a clear connection to credit modelling as discretisations of the structural (Merton) model, in which the firm value is replaced by the credit rating as a distance-to-default measure. A restricted tridiagonal generator corresponds to a Brownian motion hitting a barrier, while an unrestricted tridiagonal generator has an additional dynamic of the firm suddenly jumping to default. The transitivity condition simply ensures that the volatility always exceeds zero.

It is clear that the space of restricted tridiagonal generators has $2n-1$ degrees of freedom, and the unrestricted one $3n-2$. These are substantially lower than the $n^2$ needed to define a general model. Also, tridiagonality is useful not just for dimensionality reduction. A transitive generator can always be diagonalised, and has real eigenvalues. This is because $A=\Delta^{-1}S\Delta$ with $\Delta$ a positive diagonal matrix and $S$ a symmetric tridiagonal matrix; then $S$ can be diagonalised via an orthogonal change of basis as $S=U\Lambda U'$ with ′ denoting transpose. (The method is particularly fast for tridiagonal matrices: see e.g. [NRC, §11.3].) Thus

$$e^{Gt} = \begin{pmatrix} \Delta^{-1}U e^{\Lambda t} U'\Delta & \ast \\ 0 & 1 \end{pmatrix}.$$

The right-hand column of the matrix, written as “$\ast$”, need not be computed directly because it can be obtained from the rows summing to unity. Note that the eigenvalues of $A$, i.e. the (diagonal) elements of $\Lambda$, are real and negative. This makes computation of the matrix exponential straightforward. (Technically, the limit of an intransitive generator will cause $\Delta$ to become singular, and so the construction then fails, but this seems to be a theoretical issue only.) As an aside we note the connection between tridiagonal matrices, orthogonal polynomials and Riemann-Hilbert problems [Deift00, Ch.2].
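The symmetrisation argument can be sketched as follows. This is an illustrative implementation under stated assumptions, not the author's code: the function name `tridiag_transition`, the argument names and the numerical rates are all hypothetical. It scales the tridiagonal block to a symmetric matrix, uses a symmetric eigensolver, and fills in the default column from the unit row sums.

```python
import numpy as np

def tridiag_transition(sub, sup, a, t):
    """Return (P, lam): t-period transition matrix and eigenvalues of A.

    sub[i] = rate from state i+1 to state i   (upgrade),   all > 0
    sup[i] = rate from state i to state i+1   (downgrade), all > 0
    a[i]   = rate from state i directly to default
    """
    sub, sup, a = (np.asarray(x, float) for x in (sub, sup, a))
    n = len(a)
    diag = -a.copy()
    diag[:-1] -= sup            # outflow to the next-worse state
    diag[1:] -= sub             # outflow to the next-better state
    # Delta = diag(d), d[i+1]/d[i] = sqrt(sup[i]/sub[i]), makes
    # S = Delta A Delta^{-1} symmetric with off-diagonals sqrt(sub*sup).
    d = np.concatenate(([1.0], np.cumprod(np.sqrt(sup / sub))))
    off = np.sqrt(sub * sup)
    S = np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)
    lam, U = np.linalg.eigh(S)                  # real eigenvalues, orthogonal U
    expS = (U * np.exp(lam * t)) @ U.T          # U exp(Lambda t) U'
    expA = expS * d[None, :] / d[:, None]       # Delta^{-1} exp(S t) Delta
    P = np.zeros((n + 1, n + 1))
    P[:n, :n] = expA
    P[:n, n] = 1.0 - expA.sum(axis=1)           # default column from row sums
    P[n, n] = 1.0                               # default is absorbing
    return P, lam

# Hypothetical 3-state example (illustrative numbers only).
sub, sup, a = [0.05, 0.10], [0.10, 0.12], [0.01, 0.03, 0.30]
G = np.array([[-0.11, 0.10, 0.00, 0.01],
              [0.05, -0.20, 0.12, 0.03],
              [0.00, 0.10, -0.40, 0.30],
              [0.00, 0.00, 0.00, 0.00]])
P, lam = tridiag_transition(sub, sup, a, 1.0)
print(P.round(4))
print("eigenvalues of A:", lam)
```

A dense eigensolver is used here for brevity; a dedicated tridiagonal routine would be the natural choice for larger state spaces.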

The problem with tridiagonal generators is that they do not permit multiple downgrades over an infinitesimal time period, and so the probability of such events over short (non-infinitesimal) periods, while positive, is not high enough. In this respect, the presence of a jump-to-default transition achieves little, because that is not the main route to default: far more likely is the occurrence of multiple downgrades, often by several notches at a time. So how do we make these sudden multiple downgrades occur?

To retain the structure above we employ a stochastic time change, replacing $t$ in the above equation by $\tau_t$, with $t$ denoting ‘real’ time and $\tau_t$ ‘business’ time. We are going to make $\tau_t$ a pure jump process, with the idea that business time occasionally takes a big leap, causing multiple transitions, and we call this model TDST (tridiagonal with stochastic time change). Formally the process $\tau_t$ is to be a monotone-increasing Lévy process (see e.g. [Schoutens03] for a general introduction) described by the generator $\psi$, i.e.:

$$\mathbf{E}\big[e^{s\tau_t}\big] = e^{\psi(s)\,t}.$$

The $t$-period transition matrix is obtained by integrating over all paths of $\tau$. As the dependence on $\tau_t$ is through an exponential, this is straightforward, and the effect is to replace $A$ by $\psi(A)$ in (1), adjusting the last column as necessary. (In context $\psi$ will be regular in the left half-plane, so we can legitimately write the matrix function $\psi(A)$.) Accordingly the generator and $t$-period transition matrix are

$$G = \begin{pmatrix} \psi(A) & -\psi(A)\mathbf{1} \\ 0 & 0 \end{pmatrix}, \qquad e^{Gt} = \begin{pmatrix} e^{\psi(A)t} & \big(I-e^{\psi(A)t}\big)\mathbf{1} \\ 0 & 1 \end{pmatrix}, \tag{3}$$

with $\mathbf{1}$ denoting a column of ones.

We can impose that $\tau_t$ have unit mean rate, i.e. $\mathbf{E}[\tau_t]=t$ (as otherwise the model is over-specified because $A$ and $\psi$ can be scaled in opposite directions), so $\psi'(0)=1$. An obvious choice is the CMY process

| (4) |

which has as special cases the Inverse Gaussian process and the Gamma process, respectively ():

This retains the connection with structural (Merton) modelling, in that we have a discretisation of a Lévy process hitting a barrier—a standard idea in credit risk modelling [Lipton02c, Martin09a, Martin10b, Dalessandro11]. An incidental remark about the Gamma process is that it allows the matrix exponential to be calculated in an alternative simple way, as noted in the Appendix.

It is perhaps worth emphasising that despite our using the term ‘stochastic time change’ this model specification still has a static generator. The stochastic time change serves only as a mechanism for generating multiple downgrades in an infinitesimal time period using a tridiagonal generator matrix. The number of parameters for this model is , where ( here) is the number needed to specify .
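To illustrate how the time change generates multi-notch moves over short horizons, here is a minimal sketch for the Gamma special case only. It assumes the unit-mean Gamma generator $\psi(s) = -\ln(1-\nu s)/\nu$, so that $e^{\psi(A)t} = (I-\nu A)^{-t/\nu}$; the variance parameter `nu`, the helper names and the tridiagonal block `A` are illustrative assumptions rather than anything taken from the paper's Appendix.

```python
import numpy as np

def matrix_function(A, f):
    """f(A) = V f(Lambda) V^{-1}; adequate for small, well-conditioned examples."""
    lam, V = np.linalg.eig(A)
    return ((V * f(lam)) @ np.linalg.inv(V)).real

def tdst_block(A, t, nu):
    """exp(psi(A) t) for a unit-mean Gamma time change with variance rate nu."""
    return matrix_function(A, lambda s: (1.0 - nu * s) ** (-t / nu))

# Hypothetical tridiagonal block for 3 non-default states (illustrative only).
A = np.array([[-0.11, 0.10, 0.00],
              [0.05, -0.20, 0.12],
              [0.00, 0.10, -0.40]])
t, nu = 0.01, 1.0

plain = matrix_function(A, lambda s: np.exp(s * t))   # no time change
changed = tdst_block(A, t, nu)                        # with Gamma time change

print("two-notch downgrade probability over t = 0.01")
print("  tridiagonal only :", plain[0, 2])
print("  with time change :", changed[0, 2])
```

Over a short horizon the two-notch probability is of order $t^2$ for the plain tridiagonal chain but of order $t$ once the time change is applied, which is the point of the construction.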

2 Calibration to historical transition matrix

Israel et al. [Israel00] spend some time showing that empirical transition matrices are not necessarily embeddable (q.v.). In fact it is only necessary to test whether the empirical matrix is statistically likely to be so. Taking S&P’s data in [SandP19, Tables 21,23], we ask if we can find a TDST model that fits well enough.

There are a number of issues to consider. The first is the right measure of closeness of fit. From the perspective of statistical theory the most appropriate is the Kullback–Leibler divergence (a Taylor series expansion around $p=\hat p$ gives the well-known chi-squared measure of “$(O-E)^2/E$, summed”, with $O$ observed and $E$ expected; this can also be used to define the fitting error, and it gives similar results):

$$\mathcal{D}(\hat p\,\|\,p) = \sum_{i}\sum_{j} \hat p_{ij}\,\ln\frac{\hat p_{ij}}{p_{ij}} \tag{5}$$

where $\hat p_{ij}$ are the historical probabilities of transition from state $i$ to $j$ (with $j=D$ denoting default) and $p_{ij}$ the model ones. This is essentially the log likelihood ratio statistic, i.e. the logarithm of the ratio of the likelihood of the model compared with that of the maximum likelihood estimator [Cox74, Ch.4]. Note that $\mathcal{D}$ is not symmetrical in $(\hat p, p)$: nor should it be. To see why, consider first the effect of letting $p_{ij}\to0$ with $\hat p_{ij}>0$: this means that the event has been observed but that the model probability is zero, an error that should be heavily penalised. On the other hand, $\hat p_{ij}=0$ with $p_{ij}>0$ simply means that the model is attaching positive probability to an event that has not been observed, which is not a major objection.
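The asymmetry of the divergence is easy to see numerically. The sketch below assumes the unweighted form $\sum \hat p \ln(\hat p/p)$ with the convention $0\ln 0 = 0$; the function name `kl_divergence` and the toy probabilities are illustrative only.

```python
import numpy as np

def kl_divergence(p_hat, p):
    """Sum of p_hat * ln(p_hat / p) over all entries, with 0 * ln(0) = 0."""
    p_hat = np.asarray(p_hat, float)
    p = np.asarray(p, float)
    mask = p_hat > 0                      # unobserved events contribute nothing
    with np.errstate(divide="ignore"):    # p = 0 where p_hat > 0 gives +inf
        terms = p_hat[mask] * np.log(p_hat[mask] / p[mask])
    return float(np.sum(terms))

# Observed event given zero model probability: infinite penalty.
print(kl_divergence([0.5, 0.5], [1.0, 0.0]))
# Model gives positive probability to an unobserved event: finite penalty.
print(kl_divergence([1.0, 0.0], [0.5, 0.5]))
```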

Empirical transition data reveal a problem: the probability of transiting from rated to unrated (‘NR’) is quite high. A standard idea is to distribute this probability pro rata amongst the elements of each row, excepting the transition to default: doing so retains, as it must, the property that each row sum to unity. However, this is not the only way of making the adjustment, and although the ‘NR’ problem is unfortunate, it allows for, or cannot rule out, considerable leeway in the fitting—which is why attempts to exactly fit the matrix are misguided. Another issue is that the standard deviation of low empirical probabilities is proportionally quite high. The finiteness of the samples used to construct empirical transition matrices introduces considerable relative uncertainties.

We then minimise the divergence (5) with respect to the elements of the tridiagonal block of the generator (and the bottom element of its default column) and the parameters in (4). Optimisation of the 7-state model takes a fraction of a second and the results are shown in Figure 2. It is seen that the fit is good, and furthermore the fitting errors are small by comparison with the uncertainties introduced by the ‘NR’ problem. The errors are also typically smaller than the standard errors given by S&P, which are in [SandP19, Table 21] but omitted here. (One has to be careful in interpreting these, for a reason that we have already touched on. Certain transitions have never been observed, and for these S&P show the probability and standard error as zero. Now if the true frequency is zero, then the mean and standard deviation of the observed frequency will be zero. But what we really want is an estimate of the true frequency and the standard deviation of that estimate. Clearly if Bayesian inference is followed then the latter is not zero, because the true frequency may be positive.)

If we pass to an 18-state model (AAA, AA+, AA, …, CCC+, CCC) we still have a large number of parameters even after the reduction to a TDST model (324 to around 36). It is possible to fit these, but some further simplification proves advantageous. Suppose that the free parameters in the matrix are the sub/superdiagonal elements for the following transitions only: , , , , , , and also the bottom element of , giving . (Hence there are 12 in all.) The values for intermediate states ( and so on) are found by logarithmic interpolation101010For convenience is taken to be the same as , and and the same as .. A minor complication is that the S&P data group CCC+, CCC and lower ratings into one. We have ignored any rating lower than CCC because in practice transition through these states is very rapid, and default almost always ensues [SandP19, Chart 10]. Again the results are good: see Figure 2.

[Figure 2: panels (i)–(iv) for the 7-state model, showing transition matrices (%) over the ratings AAA, AA, A, BBB, BB, B, CCC together with parameter values by rating; followed by the fitted 18-state transition matrix (%) over the ratings AAA, AA+, AA, …, CCC+, CCC.]

3 Making the generator time-varying

3.1 Theoretical considerations

We start by pointing out a trap that is very easy to fall into. When the generator $G_t$ varies with time, the transition matrix is not given by the following expression (or its expectation conditional on information known at time $0$, for a stochastically varying $G_t$):

$$\exp\Big(\int_0^t G_s\,ds\Big). \tag{6}$$

Instead, the so-called ordered exponential, sometimes written ‘$\mathcal{T}\exp$’, must be used. (To see where the problem is, suppose that $G_s$ equals $G_1$ for $0\le s < t_1$ and $G_2$ for $t_1\le s\le t$, both constant matrices. Then the period-$t$ transition matrix is $e^{G_1t_1}e^{G_2(t-t_1)}$, which differs from $e^{G_1t_1+G_2(t-t_1)}$ because one needs commutativity but might not have it. See Wikipedia page on ‘ordered exponential’.) This can be thought of as

$$\lim_{m\to\infty} e^{G_{0}\,t/m}\; e^{G_{t/m}\,t/m}\cdots e^{G_{(m-1)t/m}\,t/m}.$$

What is beguiling about (6) is its simplicity, its ‘obvious’ connection to standard ideas on rates modelling, and that its RHS is a valid transition matrix, albeit the wrong one.
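A two-state-plus-default toy case makes the trap concrete. In this sketch the generators `G1` and `G2` are hypothetical: in the first period the only possible move is a downgrade from state 1 to state 2, and in the second the only possible move is a default from state 2. `scipy.linalg.expm` is used for the exponentials.

```python
import numpy as np
from scipy.linalg import expm

# Period 1: state 1 can only be downgraded to state 2.
G1 = np.array([[-0.5, 0.5, 0.0],
               [0.0, 0.0, 0.0],
               [0.0, 0.0, 0.0]])
# Period 2: state 2 can only default.
G2 = np.array([[0.0, 0.0, 0.0],
               [0.0, -0.5, 0.5],
               [0.0, 0.0, 0.0]])

ordered = expm(G1) @ expm(G2)     # correct: earlier period on the left
reversed_ = expm(G2) @ expm(G1)   # the two periods applied in the wrong order
naive = expm(G1 + G2)             # the 'beguiling' expression

print("P(state 1 -> default), ordered :", ordered[0, 2])
print("P(state 1 -> default), reversed:", reversed_[0, 2])
print("P(state 1 -> default), naive   :", naive[0, 2])
```

All three matrices are valid transition matrices, but only the first is the right one: the naive expression gives a materially different default probability for state 1.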

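We can invoke the Feynman-Kac representation, which is that if $X_t$ obeys the SDE

$$dX_t = \mu(X_t)\,dt + \sigma(X_t)\,dW_t$$

and $G_t$ is a function of $X_t$ only, then the matrix $P(t,x)$ of transition probabilities from time $t$ to a fixed horizon $T$, conditional on $X_t=x$, obeys the backward equation

$$\frac{\partial P}{\partial t} + \mu\,\frac{\partial P}{\partial x} + \tfrac12\sigma^2\,\frac{\partial^2 P}{\partial x^2} + G(x)\,P = 0, \qquad P(T,x)=I,$$

but this equation is unlikely to have a closed-form solution, so has to be solved numerically e.g. on a trinomial tree.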

Another route that is likely to cause difficulties is an over-reliance on diagonalisation methods. We have said that if we can write $G=V\Lambda V^{-1}$ then it may be easy to exponentiate $G$, depending on how well-conditioned $V$ is. To make $G$ time-varying it is superficially attractive to allow $\Lambda$ to vary while fixing $V$. The problem with that is that there is no guarantee that a valid transition matrix is thereby produced.

There is one case which, interestingly, links to both these ideas. That is where the effect of time variation is simply to scale $G$ by a factor, i.e. $G_t=\lambda_t\bar G$ with $\bar G$ a static matrix and $\lambda_t$ some scalar positive process which may as well have unit mean. The commutativity problem disappears, so that (6) is now correct, and only the eigenvalues vary over time. This model may or may not be appropriate, depending on the context, as we discuss later.

An obvious choice of dynamics is the CIR process,

$$d\lambda_t = \kappa(\theta-\lambda_t)\,dt + \sigma\sqrt{\lambda_t}\,dW_t, \tag{7}$$

with $\kappa$ the mean-reversion speed, $\theta$ the long-run mean and $\sigma$ the volatility parameter.

Importantly, the joint distribution of and is known via the double Laplace transform [Lamberton12, Prop. 2.5]:

| (8) |

with

and . Accordingly the -period transition matrix conditional on is121212The functions and are regular for , so can be evaluated ‘at’ .

| (9) |

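the unconditional one is obtained by integrating the initial value of the CIR process out, which is immediate because its unconditional distribution is Gamma, with mean equal to the long-run mean $\theta$ and shape parameter $2\kappa\theta/\sigma^2$ (where $\kappa$ is the mean-reversion speed and $\sigma$ the volatility parameter of the CIR process).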

3.2 Historical calibration with a time-varying generator

Default rates fluctuate over time, and we wish to capture this effect in a model that can then be linked to suitable econometric indicators [Fei13]. Thus we need a stochastically-driven model, as opposed to a deterministic but time-inhomogeneous one as considered in [Bluhm07].

As just mentioned, a simple scaling of the generator matrix can be done easily. However, it is unrealistic, because in benign years there are more upgrades and fewer downgrades, and the reverse in bad ones: it is not correct simply to scale both by the same factor. Returning to (3), write

$$G = G^{\uparrow} + G^{\downarrow}$$

in which the terms on the RHS are valid generator matrices that are, respectively, lower- and upper-triangular. These are generators for an upgrade-only and a downgrade-only Markov chain, and so this decomposition is unique. Except in trivial cases we will always have $G^{\uparrow}G^{\downarrow}\ne G^{\downarrow}G^{\uparrow}$. Now scale these two terms by different positive variables $\lambda^{\uparrow}_t$ and $\lambda^{\downarrow}_t$ representing the contribution from upgrades and downgrades respectively, so that

$$G_t = \lambda^{\uparrow}_t\,G^{\uparrow} + \lambda^{\downarrow}_t\,G^{\downarrow}. \tag{10}$$

It is easiest to do this in a discrete-time setting, because our natural source of data for calibration is annual. Then the one-year transition matrix is the exponential of $G_t$, with $t$ indexing the year, but we no longer have a convenient closed-form calculation for it, and have to resort to (2).

We do not have yearly transition matrices to calibrate to: even if we did, they would be quite ‘noisy’, so that for example we might have an instance of a transition A to BB, but none to BB+. However two pieces of annual information are available [SandP19, Table 6]: the downgrade:upgrade ratio and the default rate, both of which are averaged across rating states. Roughly speaking the former quantity gives information about the ratio of the downgrade and upgrade scaling factors, and the latter about the downgrade factor. They can be matched exactly, and uniquely, by a simple numerical search, except in singular cases where there are no upgrades/downgrades/defaults. Doing this for each year gives Figure 3.

The sample statistics of the two scaling factors are: mean 0.95, 0.95; standard deviation 0.42, 0.65; correlation 0.55. This shows, as anticipated, that it is necessary to have two factors rather than just a single one. In fact, this is a similar conclusion to that in [Andersson00, §2]. It is perhaps surprising that the correlation is positive, because ‘good years’ should see a high upgrade factor and a low downgrade factor, and oppositely in bad years, which was the whole point of having two factors. The author is indebted to M. van Beek for pointing out that over shorter time scales, data suggest a negative correlation. In other words, looking at all of 2009 is unhelpful as it is a year of two parts: first multiple downgrades and defaults, then multiple upgrades. It is hard to be precise about what stochastic process the two factors follow, as we have only 38 data points, but a sensible model choice for each of them is the exponential of an autoregressive (AR) process. That is to say, we write $y_t$ for the logarithm of the factor in year $t$, with the mean subtracted; then the AR($p$) model is

$$y_t = \sum_{k=1}^{p} a_k\,y_{t-k} + \varepsilon_t$$

where $\varepsilon_t$ is a white noise process and the $a_k$ are coefficients to be determined. The properties of these processes, and the fitting of their coefficients, are well understood, e.g. [Makhoul75, Marple87]. An AR(1) process captures mean-reversion. An AR(2) process allows more subtlety, and allows the possibility of rating momentum, in the sense that next year’s up/downgrade rate is likely to be higher than this year’s if this year’s was higher than last year’s, or in equations

$$\operatorname{Cov}\big(y_{t+1}-y_t,\; y_t-y_{t-1}\big) > 0,$$

a condition that can be expressed in terms of the autocovariance function and thence of the $a_k$ by the Yule-Walker equations. However, there is little evidence for this in the data used here, suggesting that if rating momentum exists it is likely to be observed over a faster time scale than a year, and is possibly more of an idiosyncratic effect, in the sense that troubled issuers often suffer successive downgrades over a period of months (which does occur).
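Fitting AR coefficients by the Yule-Walker equations can be sketched as below. Two assumptions should be flagged: the series is synthetic (a simulated AR(1) path standing in for the 38 annual observations, which are not reproduced here), and rating momentum is quantified as the covariance of successive differences, $2\gamma_1-\gamma_0-\gamma_2$ in terms of the autocovariances $\gamma_k$, which is one possible reading of the condition rather than a formula taken from the source. The function name `yule_walker` is hypothetical.

```python
import numpy as np

def yule_walker(y, p):
    """Fit AR(p) coefficients a_1..a_p from sample autocovariances."""
    y = np.asarray(y, float) - np.mean(y)
    N = len(y)
    gamma = np.array([y[:N - k] @ y[k:] / N for k in range(p + 1)])
    R = np.array([[gamma[abs(i - j)] for j in range(p)] for i in range(p)])
    a = np.linalg.solve(R, gamma[1:])     # Yule-Walker: R a = (gamma_1..gamma_p)
    return a, gamma

# Synthetic AR(1) path, y_t = 0.6 y_{t-1} + noise (placeholder data).
rng = np.random.default_rng(1)
phi, N = 0.6, 20000
eps = rng.standard_normal(N)
y = np.zeros(N)
for t in range(1, N):
    y[t] = phi * y[t - 1] + eps[t]

a, gamma = yule_walker(y, 2)
momentum = 2 * gamma[1] - gamma[0] - gamma[2]   # Cov of successive differences
print("AR(2) coefficients:", a)
print("momentum measure  :", momentum)
```

For a pure AR(1) process this momentum measure is negative, so a positive value would need a genuinely second-order effect.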

4 Risk-neutral calibration

4.1 Theoretical considerations

Risk-neutral calibration is the identification of a generator matrix that fits a given set of CDS spreads or bond prices, via the survival curve (the valuation of CDS and bonds in terms of the survival curve is well understood, e.g. [OKane08]). This is an entirely different problem from fitting to a prescribed historical transition matrix.

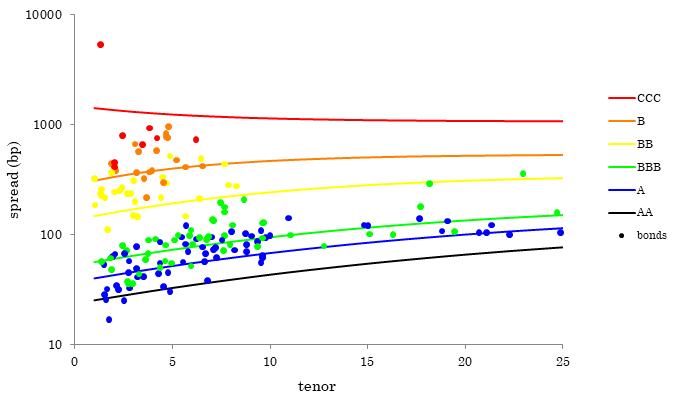

The first point we make is that in reality we should not expect anywhere near a perfect fit in doing this: there is no reason why the market should trade a credit in line with its public rating, as the market provides a current view of the firm’s future solvency, and the rating may not be up to date. Thus it is quite possible to have two different BBB firms trading at 80bp and 140bp at the 5y tenor; once we get down to CCC+ we can have names trading anywhere between 500bp and 5000bp. Also there may be no bonds of a particular rating: this will almost certainly be true when rating modifiers are used. All these examples can be found in the dataset we used. Therefore we must be prepared for very ‘noisy’ data, and the objective of fitting must be to come up with sensibly-shaped curves that give a reasonable fit.

It is superficially attractive to describe this as a ‘bond pricing’ model. However, bonds are quoted on price or spread-to-Treasury, so there is no need for a pricing model as such. Arguably it is suitable for ‘matrix pricing’ of illiquid bonds, but an illiquid bond is likely to trade at a significant yield premium to a liquid one of the same rating and tenor, so this would have to be borne in mind. In reality, the model is most suited to relative value analysis or for a parsimonious representation of a certain sector, e.g. “Where do US industrials trade for different rating and maturity?” Another application is as an input to the evaluation of EM corporate bonds, coupling the developed market spread to the country spread [Martin18c].

Our next point is different, but no less fundamental. Even in a hypothetical world of bond/CDS spreads exactly lining up with their public ratings, and trading with great liquidity and low bid-offer, does the term structure, for each rating, allow us to uniquely identify the generator? There is a clear intuition about why the term structure on its own does not suffice. Knowing the full transition matrix allows us to value any contingent claim, including claims that require us to know about rating (and hence price) volatility. But such information cannot be gleaned from bond prices alone.

The term structure provides (in principle) clearly-interpretable pieces of information, in the form of the short-term spread and the gradient of the spread curve at the short end, but in practice observations of these quantities will be ‘noisy’. Denoting by $Q_i(t)$ the survival probability for time $t$, conditional on being in state $i$ at time zero, and writing $D$ for the default state, we have for small $t$,

$$Q_i(t) = 1 - G_{iD}\,t - \tfrac12\,[G^2]_{iD}\,t^2 + O(t^3).$$

If we convert to a par CDS spread $s_i(T)$ then the PV of the payout leg and the coupon leg are, for small maturity $T$ and neglecting discounting (which does not alter the result at this order), respectively

$$(1-R)\big(G_{iD}\,T + \tfrac12\,[G^2]_{iD}\,T^2\big)$$

(with $R$ the recovery rate), and

$$s_i(T)\,\big(T - \tfrac12\,G_{iD}\,T^2\big)$$

and the CDS spread is the ratio of the two:

$$s_i(T) = (1-R)\Big(G_{iD} + \tfrac12\big([G^2]_{iD} + G_{iD}^2\big)\,T\Big) + O(T^2).$$

Alternatively we can use the continuously-compounded zero-coupon Z-spread, and the result is the same. Writing the matrix square as an explicit sum, we obtain after some elementary algebra:

$$s_i(0) = (1-R)\,G_{iD}, \qquad \frac{\partial s_i}{\partial T}\bigg|_{T=0} = \frac{1-R}{2}\sum_{j\ne i,\,D} G_{ij}\,\big(G_{jD}-G_{iD}\big). \tag{11}$$

The first of these is obvious: the short-term spread is explained by the instantaneous probability of transition to default. The second expresses extra loss arising from transition to riskier states ($j$ with $G_{jD}>G_{iD}$) balanced off against transition to less-risky states ($G_{jD} < G_{iD}$): if the former exceeds the latter, the spread curve is upward-sloping, and vice versa. In particular, the highest rating necessarily has an upward-sloping curve and the lowest a downward-sloping one.
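The short-end level and gradient can be checked numerically. The sketch below rests on several assumptions: the short-end gradient is taken to be $\tfrac12(1-R)\sum_{j\ne i,D}G_{ij}(G_{jD}-G_{iD})$, the par spread is computed without discounting, the generator and recovery rate are hypothetical, and a truncated power series is used for $e^{GT}$ only because $T$ is tiny here (it is not a recommended general method).

```python
import numpy as np

def short_end(G, R):
    """Short-term spread and short-end gradient for each non-default state."""
    n = G.shape[0] - 1
    g = G[:n, n]                                   # default intensities G_iD
    s0 = (1 - R) * g
    slope = np.array([
        0.5 * (1 - R) * sum(G[i, j] * (g[j] - g[i]) for j in range(n) if j != i)
        for i in range(n)])
    return s0, slope

def par_spread(G, R, T, terms=12):
    """Undiscounted par spread: (1-R) * P(default by T) / integral of survival."""
    P = np.zeros_like(G)
    intP = np.zeros_like(G)
    term = np.eye(G.shape[0])                      # (G T)^k / k!
    for k in range(terms):
        P = P + term
        intP = intP + term * T / (k + 1)           # integral of (G t)^k / k!
        term = term @ G * T / (k + 1)
    pd = P[:-1, -1]
    return (1 - R) * pd / (T - intP[:-1, -1])

# Hypothetical 3-states-plus-default generator and recovery rate.
G = np.array([[-0.11, 0.10, 0.00, 0.01],
              [0.05, -0.20, 0.12, 0.03],
              [0.00, 0.10, -0.40, 0.30],
              [0.00, 0.00, 0.00, 0.00]])
R = 0.4
s0, slope = short_end(G, R)
T = 1e-4
fd_slope = (par_spread(G, R, T) - s0) / T          # finite-difference gradient

print("short-term spreads :", s0)
print("gradient (formula) :", slope)
print("gradient (numeric) :", fd_slope)
```

In this example the best state has an upward-sloping curve and the worst a downward-sloping one, in line with the remark above.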

Now suppose we have two term structures that agree on the short-term spread and the short-end gradient for each rating. They might look different at the long end ($T\to\infty$), but how many extra parameters would such a difference entail? So it seems plausible that the term structure provides a little over $2n$ pieces of information, and certainly nowhere near $n^2$. A ‘toy’ example shows this well (Figure 4; the letter codes A, B, C do not correspond to S&P ratings: they are just labels of convenience, with A the best and C the worst). The term structures are not identical, but are so close that there is no realistic way of telling the generators apart: we chose, as is easily done, matrices for which the information in (11) is identical. Rating B clearly has higher volatility in the second matrix.

4.2 Spectral theory

It is possible to use basic differential geometry to provide some quantitative precision about invertibility. There exists a smooth pricing map $F$ from the space of generator matrices $\mathcal{G}$ to the space of term structures $\mathcal{S}$, that is, a set of curves of spread vs maturity, one for each rating. At any point $G$ it has a derivative that relates, linearly, infinitesimal changes in generator matrix to infinitesimal changes in term structure, and is represented by the jacobian $J_F$ (the jacobian of a map $F$ at $x$ is the matrix whose $(k,l)$th element is $\partial F_k/\partial x_l$ evaluated at $x$). To construct it, take $G$ and perform, one by one, $n^2$ bumps on it, in which each bump consists in increasing the element $G_{ij}$, for $j\ne i$, by a small amount $\epsilon$ while adding $-\epsilon$ to $G_{ii}$ so as to keep the $i$th row sum zero. Compute the new term structure, written as a set of $mn$ points (maturities 1y, 2y, …, $m$y and $n$ ratings). The difference between the new and the original term structure, divided by $\epsilon$, estimates the directional derivative, and repeating this for each possible bump gives the jacobian, which is of dimension $mn\times n^2$, containing the sensitivity of all points on the term structure to all bumps. The degree to which $F$ is locally invertible can be understood by examining the singular value decomposition (SVD, [NRC, §2.6]) of the jacobian, that is, $J_F=U\Sigma V'$, in which $U,V$ are orthogonal and $\Sigma=\mathrm{diag}(\sigma_k)$ with $\sigma_1\ge\sigma_2\ge\cdots\ge0$: these elements are the roots of the characteristic equation $\det(J_F'J_F-\sigma^2I)=0$, and are the singular values. In attempting to locally invert $F$ we have to divide by $\sigma_k$, so small singular values cause a problem.

(The singular values of $J_F$ only tell us about local invertibility: it is easy to construct an $F$ that causes the domain to be folded on itself in such a way that $J_F$ is nonsingular but $F$ is not one-to-one. A simple example is given by $F(x,y)=(e^x\cos y,\,e^x\sin y)$: the singular values of $J_F$ are identical, being $e^x$, but $F$ is periodic in $y$, and so obviously not one-to-one. In practice, therefore, establishing that a function is invertible is hard if the dimension exceeds one. In context this means that we might have two totally different generator matrices giving precisely the same term structure, though this is perhaps unlikely.)

As an overall scaling of the matrix does not affect its conditioning, standard procedure is to plot $\sigma_k/\sigma_1$, for $k=1,2,\ldots$, on a logarithmic scale, and we call this the normalised spectrum of $J_F$. In well-conditioned problems the $\sigma_k$ are all roughly equal; in ill-conditioned problems some are very small, and in these coordinate directions inversion will be impossible without grossly amplifying any observation noise.
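The bump-and-revalue construction can be sketched as follows. The pricing map used here is a stand-in, not the paper's: it takes the spread for rating $i$ and maturity $T$ to be $-(1-R)\ln Q_i(T)/T$, with $Q_i$ the survival probability. The generator, recovery rate, maturities and function names are all illustrative assumptions.

```python
import numpy as np
from scipy.linalg import expm

def term_structure(G, maturities, R=0.4):
    """Stand-in pricing map: -(1-R) ln Q_i(T) / T for each maturity and rating."""
    out = []
    for T in maturities:
        Q = 1.0 - expm(G * T)[:-1, -1]          # survival probabilities
        out.append(-(1 - R) * np.log(Q) / T)
    return np.concatenate(out)

def jacobian_by_bumping(G, maturities, eps=1e-6):
    """Bump each off-diagonal G_ij by eps, compensating on the diagonal."""
    n = G.shape[0] - 1
    base = term_structure(G, maturities)
    cols = []
    for i in range(n):                          # default row is never bumped
        for j in range(n + 1):
            if j == i:
                continue
            Gb = G.copy()
            Gb[i, j] += eps
            Gb[i, i] -= eps                     # keep the i-th row sum at zero
            cols.append((term_structure(Gb, maturities) - base) / eps)
    return np.column_stack(cols)                # shape (m*n, n*n)

G = np.array([[-0.11, 0.10, 0.00, 0.01],
              [0.05, -0.20, 0.12, 0.03],
              [0.00, 0.10, -0.40, 0.30],
              [0.00, 0.00, 0.00, 0.00]])
maturities = np.arange(1, 11)                   # 1y, 2y, ..., 10y
J = jacobian_by_bumping(G, maturities)
sv = np.linalg.svd(J, compute_uv=False)
spectrum = sv / sv[0]                           # normalised spectrum
print(J.shape)
print(spectrum)
```

Plotting `spectrum` on a logarithmic scale gives the normalised spectrum described above; a rapid roll-off indicates directions in generator space that the term structure cannot resolve.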

Taking one further step we also want to examine whether a particular parametrised subspace of $\mathcal{G}$ permits stable inversion: in context this is, of course, the TDST parameters. Let $\Theta$ be the space of parameters, with a map $H:\Theta\to\mathcal{G}$ taking a particular parameter set to its associated generator. We can construct $J_H$ and $J_{F\circ H}$ by bumping the parameters and seeing the effect on the generator and the term structure. Notionally the singular values of the restricted $F$ (i.e. the restriction of $J_F$ to the tangent space of the subspace) are simply those of $J_{F\circ H}J_H^{-1}$, but this is not meaningful as $J_H$ is a rectangular matrix: instead, the values we seek are the roots of the more general characteristic equation

$$\det\big(J_{F\circ H}'\,J_{F\circ H} - \sigma^2\,J_H'\,J_H\big) = 0. \tag{12}$$

This construction is invariant under local reparametrisation: if we have two different parametrisations of the same subspace of $\mathcal{G}$, i.e. $H_1$ and $H_2=H_1\circ K$, with $K$ locally invertible, then the above equation does not depend on the choice $H_1$ or $H_2$: in other words it just depends on $F$ and on the subspace of $\mathcal{G}$.
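The invariance under reparametrisation can be verified numerically. The sketch below assumes that the restricted singular values are the roots $\sigma$ of $\det(J_{F\circ H}'J_{F\circ H}-\sigma^2J_H'J_H)=0$, and uses random stand-in jacobians of hypothetical sizes in place of those of an actual pricing map and parametrisation.

```python
import numpy as np

def restricted_singular_values(J_H, J_FH):
    """Roots sigma of det(J_FH' J_FH - sigma^2 J_H' J_H) = 0, largest first."""
    A = J_H.T @ J_H
    B = J_FH.T @ J_FH
    sig2 = np.linalg.eigvals(np.linalg.solve(A, B)).real
    return np.sqrt(np.clip(np.sort(sig2)[::-1], 0.0, None))

rng = np.random.default_rng(0)
J_F = rng.standard_normal((12, 9))   # stand-in: 12 term-structure points, 9 generator elements
J_H = rng.standard_normal((9, 4))    # stand-in: 4 model parameters
K = np.triu(np.ones((4, 4))) + np.eye(4)   # jacobian of an invertible reparametrisation

# Chain rule: the jacobian of F o H is J_F J_H; reparametrising multiplies both by K.
s1 = restricted_singular_values(J_H, J_F @ J_H)
s2 = restricted_singular_values(J_H @ K, J_F @ J_H @ K)
print(s1)
print(s2)
```

The two sets of values agree to rounding error, because reparametrising replaces the matrix $(J_H'J_H)^{-1}J_{F\circ H}'J_{F\circ H}$ by a similar one.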

4.3 Empirical work

We fitted an 18-state matrix to US bond data from the manufacturing sector on 05-Feb-20. To reduce the dimensionality of the problem further we used the same trick as in §2, specifying sub- and super-diagonal elements for AA, A, BBB, BB, B, CCC and dealing with rating modifiers by interpolation: this gives 14 parameters. The performance surface was quite flat: different parameter sets gave almost the same quality of fit. We have more to discuss, but the reader may wish to glance at Figure 5, which shows the end result. To avoid cluttering the plot, rating modifiers are not shown, so that for example BBB+, BBB, BBB– are all coloured the same as BBB. One obvious feature is the high degree of dispersion of bond spreads around their fitted curves. We can also see from Figure 6(a) that there is no realistic way of fitting anywhere near $n^2=324$ parameters, as the singular values roll off so rapidly. This first graph can be constructed for any generator so it has nothing to do with TDST. Turning next to Figure 6(b), which pertains to TDST, we see from the green trace that some instability is likely as the smallest normalised singular values are quite small. This points to the conclusion that further constraining is required. We have already anticipated that volatility assumptions need to be incorporated, and so we address that next.

4.4 Adding spread volatility

If we wish to capture spread volatility in a realistic way, we should augment the model to allow for the generator to be stochastic, thereby capturing day-to-day fluctuations in spread that are not associated with rating transition. Let us therefore return to §3.1 and revisit the idea of a CIR process to drive this. Equation (8) giving the joint law of and allows a wide range of contingent claims to be valued. However, single-name CDS options scarcely trade (see [Martin11c] for a review and discussion of this subject), so there would be nothing to calibrate to.

A more practical idea is to compute the instantaneous spread volatility and attempt to match it to historical data. As the effect of is approximately to scale the credit spreads proportionally, the instantaneous volatility of the period- spread for rating is

| (13) |

where is the volatility parameter in the CIR process.

Turning now to empirical matters, we make some simple observations, based on time series analysis of some 1500 bond and CDS spreads going back 10–20 years. The first is that credit spread volatility is roughly 40%, and that this does not depend strongly on rating, tenor, or spread level. The second is that there is little evidence of mean reversion, and so the mean-reversion speed in (7) needs to be quite low: we have fixed it at 0.1 per year, though the model calibration is not sensitive to this choice. Thus, at the expense of adding one more parameter, the volatility parameter of the CIR process (only one, as can be set to unity), we can penalise the deviation of each instantaneous spread volatility from our assumed value of 0.4. This was found to stabilise the calibration.
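A minimal sketch of how such a penalty might enter the calibration objective follows. The quadratic form of the penalty, the name `penalised_objective` and the `weight` argument are assumptions made for illustration; only the target value of 0.4 comes from the text.

```python
def penalised_objective(fit_error, model_vols, target_vol=0.4, weight=1.0):
    """Spread-fit error plus a quadratic penalty on volatility deviations.

    fit_error  : error of the fit to observed spreads (any non-negative number)
    model_vols : model instantaneous spread volatilities, one per rating/tenor
    target_vol : assumed historical spread volatility (0.4 in the text)
    weight     : hypothetical relative weight of the penalty term
    """
    penalty = sum((v - target_vol) ** 2 for v in model_vols)
    return fit_error + weight * penalty

# No penalty is incurred when every model volatility equals the target.
unpenalised = penalised_objective(1.0, [0.4, 0.4, 0.4])
# Deviations of +0.1 and -0.1 each contribute 0.01 to the objective.
penalised = penalised_objective(1.0, [0.5, 0.3])
```

The weight controls how strongly the optimiser is pulled towards the assumed volatility relative to the fit to spreads; its value here is arbitrary.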

We can now redo the spectral analysis of Figure 6, augmenting the pricing map so that it takes a given generator to the term structure of spread and also of instantaneous volatility, notated . The singular values, as expected, roll off more slowly (blue trace), showing that new information is being supplied by the volatility term structure. By Figure 6(a) this is, unsurprisingly, still not enough to allow 324 parameters to be fitted, but Figure 6(b) suggests that enough stability may be conferred within the TDST parametrisation, which is what we found in practice.

A final point about this kind of model is that it is capable of capturing the effects seen in early 2008, when high-grade credits had inverted yield curves even at modest spreads of 100–200bp. As we said earlier, a static generator cannot deal with this, as the spread curve for the best rating state cannot possibly be inverted, and in any realistic parametrisation none of the investment-grade curves will be either. But with a dynamic generator this is now possible, if one introduces the current level of the CIR process into the calibration. When it is very much higher than its long-term mean, short-term spreads will be elevated, as happened in that time period.

[Figure 6: singular-value analysis of the pricing map. Panel (a): general generator. Panel (b): TDST parametrisation.]

5 Conclusions

We have presented a new model (TDST) based on a tridiagonal generator matrix coupled with a stochastic time change, which allows multiple downgrades to occur with a probability that is not unrealistically low. The model may be calibrated historically or to bond/CDS spreads, and the relevant conclusions are given below. An incidental advantage is that the matrix exponential is generally easy to calculate.

With regard to historical calibration, the presence of a ‘WR/NR’ state (withdrawn or unrated), and the fact that some transitions are very rare, give rise to considerable uncertainty as to the true underlying transition probabilities. The TDST model as described here is sufficiently flexible to calibrate within these uncertainties, without having too many parameters. If the model is made time-varying, two factors are needed to capture the volatility of upgrades and downgrades.

With regard to risk-neutral calibration, it is impossible to unambiguously identify a generator matrix from term structure, and two ingredients are essential: (i) dimensionality reduction e.g. through TDST and (ii) information about volatility. Once this is provided the calibration works well even in the presence of real data which must be expected to be very ‘noisy’.

Using the theory of §4.2 to analyse the derivative of the pricing map should be a standard procedure in any calibration exercise. It is not at all restricted to the application in this paper.

Acknowledgements

I thank Di Wang and Huong Vu for their work in earlier stages of this project, and Roland Ordovàs and Misha van Beek for helpful discussions.

Appendix A

A.1 Comment on the Gamma process

In the limit of one or more super- or sub-diagonal elements of being zero, i.e. an intransitive generator, the construction will no longer work, because becomes singular. However, in the case of the Gamma model the transition matrix is simply a matrix power, as it is

Going back to (2), we can now see why the second expression is a valid transition matrix. It can be computed directly, as follows. First, compute , which is most easily done by LU factorisation of . Next, raise this to the power . As raising a matrix to an integer power is easy (by successive squaring and using the binary representation of ), we only have to deal with the case where . Applying the binomial theorem twice and interchanging the order of summation:

where denotes the truncation error. It is easy to see that is a polynomial of degree that vanishes at , while . (See [Gradshteyn94, §0.154].) Therefore this method writes as the Lagrange interpolant between ; the simplest formula is which gives a linear interpolation between and . The expansion is convergent because in context the eigenvalues of lie in .
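The two-stage computation can be sketched as follows, for a generic square matrix called `m` here (a stand-in name). The integer part uses successive squaring over the binary digits of the exponent, as described above. For the fractional part the sketch uses a plain truncated binomial series in m - I rather than the rearranged interpolation form of the text, and it assumes that the eigenvalues of m - I lie strictly inside the unit circle. The function names and the default of 60 terms are illustrative choices.

```python
def mat_mul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def int_power(m, k):
    """m**k for integer k >= 0, by successive squaring over the bits of k."""
    result = identity(len(m))
    base = m
    while k > 0:
        if k & 1:                      # this binary digit of k is set
            result = mat_mul(result, base)
        base = mat_mul(base, base)     # m, m^2, m^4, ...
        k >>= 1
    return result

def frac_power(m, alpha, terms=60):
    """Truncated binomial series sum_k C(alpha, k) (m - I)^k for 0 <= alpha < 1."""
    n = len(m)
    d = [[m[i][j] - (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    total = identity(n)
    term = identity(n)                 # holds C(alpha, k) (m - I)^k
    for k in range(1, terms + 1):
        coeff = (alpha - (k - 1)) / k  # C(alpha, k) / C(alpha, k - 1)
        term = [[coeff * x for x in row] for row in mat_mul(term, d)]
        total = [[total[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return total

def power(m, p, terms=60):
    """m**p for real p >= 0: integer part by squaring, remainder by series."""
    k = int(p)
    return mat_mul(int_power(m, k), frac_power(m, p - k, terms))

# Two-state example with an absorbing second state.
m = [[0.9, 0.1], [0.0, 1.0]]
m_pow = power(m, 2.5)
```

For this example the exact answer has 0.9 raised to the power 2.5 in the top-left entry, and each row of `m_pow` sums to one, so the result is again a transition matrix.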