Cross Learning in Deep Q-Networks

Abstract

In this work, we propose a novel cross Q-learning algorithm, aim at alleviating the well-known overestimation problem in value-based reinforcement learning methods, particularly in the deep Q-networks where the overestimation is exaggerated by function approximation errors. Our algorithm builds on double Q-learning, by maintaining a set of parallel models and estimate the Q-value based on a randomly selected network, which leads to reduced overestimation bias as well as the variance. We provide empirical evidence on the advantages of our method by evaluating on some benchmark environment, the experimental results demonstrate significant improvement of performance in reducing the overestimation bias and stabilizing the training, further leading to better derived policies.

1 Introduction

Overestimation has been identified as one of the most severe problems in value-based reinforcement learning (RL) algorithms such as Q-learning [1], where the maximization of value estimates induces a consistent positive bias, and the error of the estimates is accumulated by the nature of temporal difference (TD) learning. In the function approximation setting such as deep Q-networks (DQN), the issue of value overestimation is more severe, given the noise induced by the inaccuracy of the approximation. As a result, learning DQN tends to have instability and variability for estimated Q-values, the derived policies accroding to the overestimated Q-values tend to be not optimal and often diverge.

To overcome this issue, double Q-learning [2] has become a standard approach for training DQNs. The main purpose of double Q-learning is to avoid the overestimation problem for the target Q-value, by introducing negative bias from the double estimates. The usual way to realize it in DQN is to maintain a target network which is a copy of the policy DQN which is either frozen for a period of time, or softly updated with exponential moving average. The target network then is used to estimate the TD target. This may alleviate the issue, however, double DQN still often suffer from overestimation in practice, partially because the policy and target estimates of -values are usually too similar, while the noise from high variance is propagated through the network and occasional large reward can produce great overestimation in the future. Another approach sometimes proposed is to impose a bias-correction term on the estimates for Q-learning [3], however, the error correction term is complicated to derive for deep networks, in which the finiteness of state space is no longer true. A more recent modification over double DQN favors underestimation and clips the Q-value estimates [4], that is, always chooses the minimum of the estimated targets over the two networks. The clipped double Q-learning is used on the critics in actor-critic methods for the deterministic policy gradient, which is referred to as TD3 (twin delayed deep deterministic policy gradient) and has shown state-of-the-art results on multiple tasks. However, the intentionally engineered underestimation lacks of rigorous theoretical guide, in addition, it may induce bias in the other direction, e.g., the underestimation can also accumulate through TD learning and derive suboptimal policies. Further, excessive underestimation can naturally lead to slower convergence.

Another direction to alleviate overestimation is through reducing the variance during training. For example, [5] uses the average of the learned estimated Q-values from multiple networks, which is designed to help reduce the target approximation error. There also exist various variance reduction techniques [6, 7, 8, 9] that focus on the general non-convex optimization procedure for accelerating the stochastic gradient descent, or their direct application on DQNs [10], in which the agent could obtain smaller approximated gradient errors. Reducing the variance can effectively stabilize the DQN training procedure, and overestimation alleviation can be seen as a by-product. However, these are indirect methods for overestimation control, and the positive bias due to the max operator in TD update are not taken care of.

To address these concerns, we propose a cross DQN algorithm, which can be seen as a direct extension of an earlier variant of double DQN, but can be more flexible. In cross DQN, we maintain more than two networks, and update them one at a time based on the estimation from another randomly selected one. As mentioned above, the averaged DQN [5] calculates the average of estimated Q-values, with the primary purpose of the overall variance reduction. For all networks, each step of TD updates as well as action selections are based on combining the estimates. Consequently, the networks are tangled together and cannot be implemented with a parallel simulation. In bootstrapped DQN [11], one of the networks (or heads) is bootstrapped for each action selection step during training, aiming at encouraging exploration early on. Thus the simulation is not independent among networks, while the TD updates are totally independent within each of the networks, by using its own estimation of Q-values as in standard (double) DQN. [12] investigates more general applications of traditional ensemble reinforcement learning on policies, i.e., majority voting, rank voting, Boltzmann addition, etc. to combine the different policies derived from multiple networks, by which they called the target ensembles, in addition to the averaged DQN which they called the temporal ensemble. All of the above-mentioned work that maintain multiple networks have achieved better performance by addressing different issues through some particular settings. Our method focuses on the variation of TD updates, in which the target Q-values are estimated with a bootstrapped network for calculating the gradients, with the direct goal of reducing overestimation. Each of the networks would perform its own TD updates, while maintaining flexibility in action selections: the networks can either interact with the environment independently, or through any other ensemble strategy. The detailed implementation options would be discussed in Section 3.

In supervised learning, ensemble strategies such as bagging, boosting, stacking, and hierarchical mixture of experts, etc. are commonly applied to achieve better performance, by simultaneously learning and combining multiple models. All of the abovementioned algorithms that maintain multiple models, including ours, can be seen as special cases of general ensemble DQNs. But our method has a deeper root in resampling and model selection. By bootstrapping another model to assess the values of current model, we introduce model bias for in-sample estimations, but reduce the variance of out-of-sample estimations (i.e., the squares of out-of-sample bias), in other words, the trained model can generalize better and alleviate overfitting. For squared errors, this can be expressed as the well-known bia-variance trade-off: In value-based reinforcement learning, the model easily overfits due to overestimation (which is caused by the max operator) during learning. Cross Q-learning introduces underestimation bias, and further reduces the variance, thus improves the generalization of the trained model.

Like in [4], our work can be naturally extended to the state of the art actor-critic methods in continuous action space, such as the deep deterministic policy gradient [13], in which the critic network(s) are learned to give an estimate of the Q-value for the actor network to update its gradient and derive policies. Usually multiple critic networks are applied, however, rather than accumulating their learned gradients (either synchronously or asynchronously [14]) and optionally sharing network layers, no other information is shared among the critics. The extension of our method allows the critics to share their value estimates and utilize that of others, which leads to more accurate estimation of each critics, thus can improve the performance of these models. Similar to these actor-critic algorithms, our work can be implemented for parallel training easily, and the exchange of information among networks could take place either synchronously or asynchronously like the accumulation of gradients, as there is always tradeoff between synchronous and asynchronous update.

The rest of this paper is organized as follows. In Section 2, we resume the basics of value-based RL, and go through some recent related research. In Section A, we formally define the estimators for the maximum expected values, along with their theoretical properties. The convergence of our cross estimator is shown in Section B. Section 3 illustrates our cross DQN algorithm directly derived from the double DQN in details. We show some empirical results in Section 4. Finally, Section 5 draws conclusions and discusses future work.

2 Background

2.1 Value-based Reinforcement Learning

A natural abstraction for many sequential decision-making problems is to model the system as a Markov Decision Process (MDP) [15], in which the agent interacts with the environment over a sequence of discrete time steps. It is often represented as a 5-tuple: , where is a set of states; is a set of actions that can be taken; is the transition function such that , which denotes the (stationary) probability distribution over of reaching a new state , after taking action in state ; is the reward function, which can take the form of either , , or ; and is the discount factor.

A policy defines the conditional probability distribution of choosing each action while in state . For an MDP, once a stationary policy is fixed, the distribution of the reward sequence is then determined. Thus to evaluate a policy , it is natural to define the action value function under as the expected cumulative discounted reward by taking action starting from state and following thereafter:

| (1) |

The goal of solving an MDP is to find an optimal policy that maximizes the expected cumulative discounted reward in all states. The corresponding optimal action values satisfy , and Banach’s fixed-point theorem ensures the existence and uniqueness of the fixed-point solution of Bellman optimality equations [15]:

| (2) |

from which we can derive a deterministic optimal policy by being greedy with respect to , i.e., .

In reinforcement learning problems, the agent must interact with the environment to learn the information about the transition and reward functions, meanwhile trying to produce an optimal policy. While interacting with the environment, at each time step , the agent senses some representation of current state , selects an action , then receives an immediate reward from the environment and finds itself in a new state . The experience tuple summarizes the observed transition for a single step. Based on the experiences through interacting with the environment, the agent can either learn the MDP model first by approximating the transition probabilities and reward functions, and then plan in the MDP to obtain an optimal policy (this is called the model-based approach in reinforcement learning); or without learning the model, directly learn the optimal value functions and upon which the optimal policy is derived (this is called the model-free approach).

As a model-free approach, Q-learning [16] updates one-step bootstrapped estimation of Q-values from the experience samples over time steps. The update rule upon observing is

| (3) |

in which is the learning rate, serves as the update target of the Q-value, which can be seen as a sample of the expected value of one-step look-ahead estimation for state-action pair , based on the the maximum estimated value over next state , and the last term is simply the current estimation. The difference is referred to as temporal difference (TD) error, or Bellman error. Note that one can bootstrap more than one step when estimating the target, often by using the eligibility trace as in [17]. Q-learning is guaranteed to converge to the optimal values in probability as long as each action is executed in each state infinitely often, is sampled following the distribution , is sampled with mean , variance is bounded and given appropriately decaying .

2.2 DQN and Double DQN

For environments with large state spaces, the Q-values are often represented by a function of state-action pairs rather than the tabular form, i.e., , where is a parameter vector. We consider Q-learning with function approximation in this paper. To update parameter vector , first-order gradient methods are usually applied to minimize the mean squared error (MSE) loss: However, with function approximation, the convergence guarantee can no longer be established in general. Neural networks, while attractive as a powerful function approximator, were well known to be unstable and even to diverge when applied for reinforcement learning until deep Q-network (DQN) [18] was introduced to show great success, in which several important modifications were made. Experience replay [19] was used to address the non-stationary data problem, by storing and mixing the samples (i.e., experiences) into a replay memory for the updates. During training a batch of experiences is randomly sampled each time and the gradient descent is performed on the sampled batch. This way the temporal correlations could be alleviated. In addition, a separate target network, which is a copy of the learned network parameters () is employed. This copy is frozen for a period of time and is only updated periodically (denoted as ), and is applied to calculate the TD error, with the aim of improving stability.

A variety of extensions and generalizations have been proposed and shown successes in the literature. Overestimation due to the max operator in Q-learning may significantly hurt the performance. To reduce the overestimation error, double DQN (DDQN) [2] decouples the action selection from estimation of the target, that is, choosing the maximizing action according to the original network (), and evaluate the current value using the other one ( from the target network), i.e., The procedures of double DQN is shown in Algorithm 1.

2.3 Dueling DQN

[20] proposed the dueling network architecture, in which lower layers of a deep neural network are shared and followed by two streams of fully-connected layers, that are used to represent two separate estimators, one for the state value function and the other for the associated state-dependent action advantage function . The two outputs are then combined to estimate the action value :

| (4) |

Note here the average of advantage values across all possible actions are used to achieve better stability, instead of the max operator in the other form proposed in [20], i.e.,

| (5) |

The dueling factoring often leads to faster convergence and better policy evaluation, especially in the presence of similar-valued actions. The deployment of advantage values is more robust to noise, since it emphasizes the gaps between -values of different actions given the same state, which are usually tiny thus small amount of noise may results in reordering of actions. In addition, the subtraction of an action-irrelevant baseline in Equation (4) also effectively reduces variance, which helps stabilize learning and thus is more often used. The shared feature learning module also generalizes learning across actions, in which more frequent updating of the value stream leads to more efficient learning of state values, contrasts with that in DQNs of a single stream output, only one of the action values is updated while other action values remain untouched.

2.4 Bootstrapped DQN

The main purpose of Bootstrapped DQN [11] is to provide efficient “deep” exploration inspired by Thompson sampling or as probability matching in Bayesian reinforcement learning [21], but instead of maintaining a distribution over possible values and intractable exact posterior update, it takes a single sample from the posterior. Bootstrapped DQN maintains a -ensemble, represented by a multi-head deep neural network in order to parameterize a set of different -value functions. The lower layers are shared by the “heads”, and each head represents an independent estimate of the action value . For each episode at training, Bootstrapped DQN picks a single head uniformly at random, and follows the greedy policy with respect to the selected -value estimates, i.e., , until the end of the episode.

Bootstrapped DQN diversifies the -estimates and improves exploration through independent initialization of the heads as well as the fact that each head is trained with different experience samples. The heads can be trained together with the help of so-called bootstrap mask , which decides whether the -th head should be trained, i.e., the transition experience updates only if is nonzero. In addition, bootstrapped DQN adapts double DQN in order to avoid overestimation, i.e., the the estimates of TD targets are calculated using the target network . The loss backpropagated to -the head is then

| (6) |

Note the gradients should be further aggregated and normalized for updating the lower layers of the network.

3 Cross DQN

In this section, we elaborate our proposed cross Q-learning method and its variants. Cross DQN serves as an extension to the double DQN algorithm [2], which has been used as the default setting for most state-of-art DQN training.

Double DQN was proposed in the aim of reducing overestimation bias, in which the target network simply is a delayed-updated copy of the current network. Note that the original vanilla DQN also uses two networks, the purpose of periodic frozen and update of the target network is to stabilize learning. Specifically, in vanilla DQN, the target network is used to evaluate both the action and the value, i.e.,

| (7) |

On the other hand, in double DQN, the current network is used to evaluate the action and select , while the target network is used for evaluate the value, so that action selection is decoupled from estimation of the target:

| (8) |

In practice however, it is common the case that little improvement can be gained by using double DQN, since the current and target networks are usually too similar due to slowly changed parameters in neural network models with SGD optimization. We can neither set the period of updating target too long, otherwise the derived policy would not exhibit learning and progress. As a result, double DQN does not entirely eliminate the overestimation bias. In Section 4, we will further experimentally show the elimination of overestimation is not effective nor sufficient in double DQN.

Instead of maintaining only two separate networks, we will use a set of models for estimating Q-values and selecting actions in our cross Q-learning. While update each network’s parameters, we will calculate its TD target Q-value using one of the other models. More specifically, let the network with parameters we are about to adjust be our current network (), and we randomly pick another network to be our target network (, e.g., ). To compute the target Q-value, we will use the current network to evaluate the actions and select in the next state , while the value is evaluated by using the target network, i.e.,

| (9) |

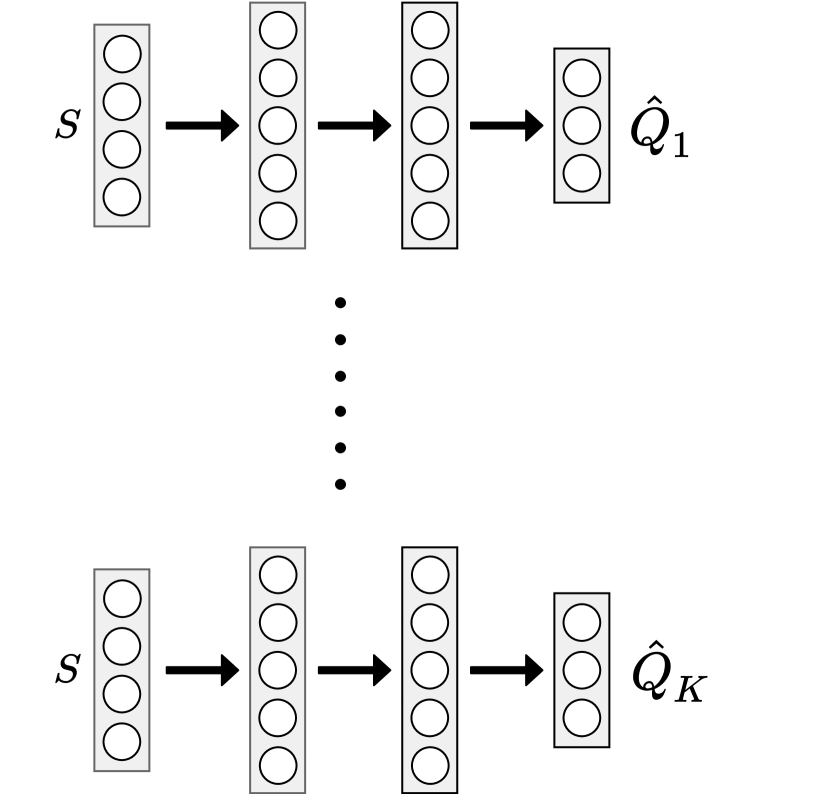

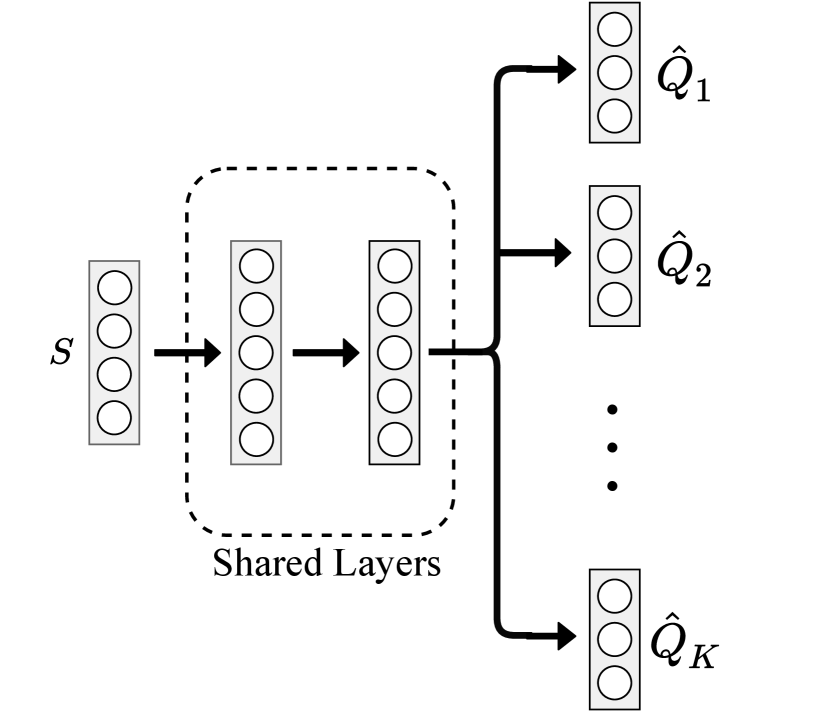

In implementation, we have flexibility and various options in how to utilize the different Q-networks. There always exist tradeoffs among different choices that we need to consider in order to pick the one that meets our goal most. For example, we can have different design of neural network architectures. A natural choice of having independent models is to maintain a list of separate neural networks with the same architecture, the difference between their outputs (i.e., streams of Q-values derived from the same ()-pair as the input) comes from different random parameter initialization of each model, also is due to that different data that each model is trained upon, i.e., for each step of backpropagation, each model randomly samples a mini-batch of experiences and performs SGD optimization with the mini-batch. Maintaining copies of models implies that not only the storage for the models would be times large as a single network, also the forward propagation would take times amount of computations. Instead, we can utilize the shared network design for the models, in which the models shared their weights except for the last layer, which consists of value function heads from which the value functions are derived, and the weights on the last layer are generally different. Thus we have much less parameters in total to be trained, and the computational burden can be greatly alleviated. Moreover, as recent deep learning research reveals, the first few layers of neural network are mainly about representations learning, the shared layers provide the same features expressed for computing , this can be seen as online transfer of learned knowledge among models. Note that in shared learning settings, in order to avoid premature learning and suboptimal convergence, the gradients of the network except the last layer are usually normalized by , but this also results in slower learning early on. On the other hand, the separate models are simpler yet provide more variability in -values, also are more stable during training. In addition, when we train the networks in distributed system, the separate networks do not depend on others’ weights thus can be learned independently, which requires much less information exchange and this could be a huge advantage for distributed learning. The comparison of the separate and shared network architectural design is shown in Figure 1.

With different models (or heads), while each could derive a possibly different policy, there is no doubt that during test phase we should take advantage of ensembles, for instance by choosing the action with the majority votes across the outputs. However, we can make choices on how to combine action selections into a single policy during training. With ensemble action selection such as majority voting, the derived policy is often superior than any individual one, thus greatly reduces the variance during training, as we will experimentally show in Section 4. This in turn refines exploitation, results in great variance reduction of -values and speeds up learning. Note that to deal with exploration-exploitation dilemma, -greedy strategy is needed to encourage exploration. On the other hand, we may also randomly pick a single network from the models, and act as it suggests during training. This falls into the paradigm of Bootstrapped DQN [11], which encourages exploration, in the cost of slower early learning (see Section 4), but may learn better policy later with more exploration. Another advantage of bootstrapped action selection is that it can slightly reduce computational burden, since instead of forward passing and computing all of the -values for action selection, we can calculate only one of them. The procedure of bootstrapped version of cross DQN is presented in Algorithm 3.

Another choice we can make is the training frequency. In our cross DQN settings, when backpropagation occurs, we can either choose to train on a single network (e.g., the single model that provides the action selection), or each of the networks could independently sample a mini-batch of experiences and perform SGD optimization. The latter would increase the sample efficiency and speed up learning, while the former would reduce the computational burden, in which the number of backpropagation (which is the most computational expensive) remains the same as in a single DQN. In addition, with the former setting, our cross Q-learning does not require maintaining copies of the networks as the target. Experimentally, we found that freezing targets merely has any effect on stabilization of learning, but only costs doubled memory for model storage. This is due to two reasons. First, we bootstrap a model that is different than the current one, when , the variety of models ensures the difference in parameter initialization, as well as the difference of mini-batch data their learning based upon, which in turn ensures the independence of Q-value estimates. Secondly, with less frequent update of each network, the bootstrapped target -value changes less as well, also helps stabilize learning.

4 Experimental Results

In this paper, we conducted experiments on two classical control problems, CartPole and LunarLander, for extended tests. We selected these testbeds in the aim of covering different challenges, especially in terms of complexity. As both environments interfaced through OpenAI gym environment [22], unless specified otherwise. The neural networks have a number of hyperparameters. The combinatorial space of hyperparameters is too large for an exhaustive search, therefore we have performed limited tuning. For each component, we started with the same settings as in [23] in order to make comparisons with states of the art results.

4.1 CartPole

4.1.1 Experimental Setup

The CartPole, also known as an inverted pendulum, in which a pole (or pendulum) is attached by an un-actuated joint to a cart (i.e., the pivot point). The pendulum starts upright at the center of a 2D track but is unstable since the center of gravity is above the pivot point. The goal of this task is to keep the pole balanced and prevent it from falling over, by applying appropriate force to the pivot point, while the force could move the cart along the frictionless track with finite length of 4.8 units. An immediate reward of is provided for every timestep that the pole remains not falling over, and the maximum cumulative rewards in an episode are clipped to 200. An episode also ends when the pole is slanted with degree from vertical, or the cart moves out of the track [24]. In each timestep, the agent is provided with current state , which represents cart position, cart velocity, pole angle, and pole angular velocity, respectively. A unit force either from left or right can be applied, thus the actions are discrete with .

As in [23], we approximate the -values using a neural network with two fully-connected hidden layers (which consist of 64 and 32 neurons, respectively). We train each of the neural networks for 1000 episodes (approximately a little less than 200000 steps), with a FIFO memory of size transitions for experience replay. A target network is updated every 500 steps to further stabilize learning. The adaptive moment estimation (Adam) optimizer with learning rate is used to train the network, since it is in general less sensitive to the choice of the learning rate than other stochastic gradient descent algorithms [25]. The optimization is performed on mini-batches of size 32, sampled uniformly from the experience replay. The discount factor is set to 0.99, and -greedy policy is used for choosing actions throughout interacting with the environment, which starts with exploration , and annealed to in the first 10000 steps.

After every 20 training episodes, we conduct a performance test that plays 10 full episodes using the greedy policy deterministically derived from the current network. For the models with , majority voting is used for the action selection disregard whether or not bootstrapped -value head is used during training. The cumulative rewards of each test episode are used for comparison among different models. Moreover, in order to comparing the estimation of -values among models, every 20 training episodes, we randomly sample a batch of historical -pairs from the replay buffer and compute their -values using current network. More than one thousand samples ensure that their mean is somewhat representative for -values under current model.

4.1.2 Analysis of Cross Q-learning Effects

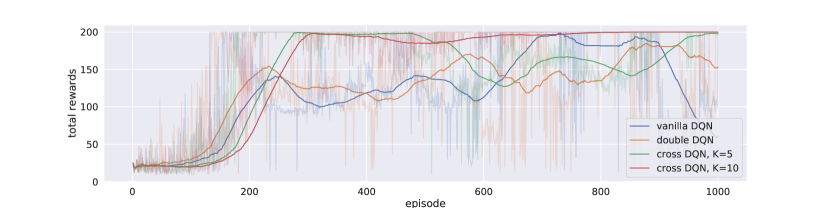

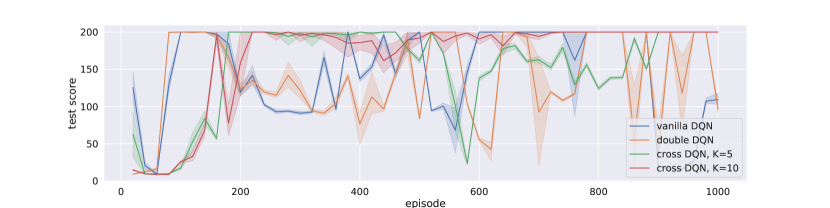

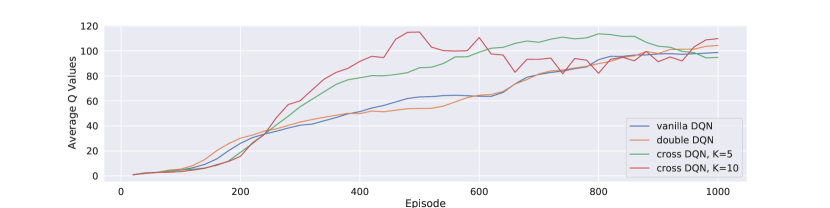

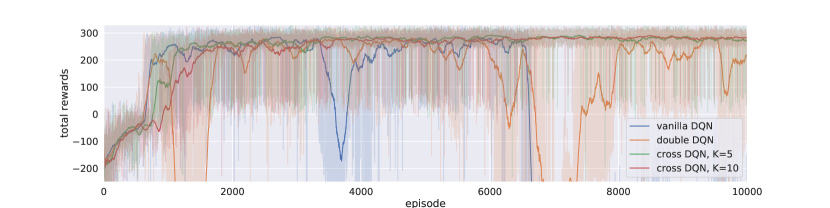

We compared our cross Q-learning algorithms with vanilla DQN and double DQN. Note that vanilla DQN uses single estimators, while double DQN uses double estimators, and our cross DQN uses cross estimators. and are used in cross DQNs. Figure 2(a) illustrate the training history of episodic total rewards of the four models, from which we can see that although with a single network (vanilla and double DQNs), the agent starts to learn early on with less samples, in particular, double Q-learning helps the single network to learn even faster, however, the learned models are not stable. With cross Q-learning, although the networks learn slower at the beginning, in particular, cross DQN with started to learn even later than cross DQN with , once cross DQNs start to learn, the performance improvement is substantial. Not only the total rewards are higher, the learning is also much more stable. After 300 episodes, the training total rewards converge to 200 for cross DQN, with little variation (due to exploration). cross DQN has more variation, but it also seems to converge after 900 episodes, while vanilla DQN and double DQN are easily deteriorated, and have much larger variations.

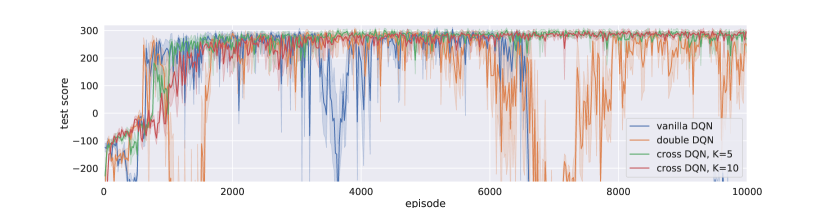

The performance improvement can be more clearly seen in Figure 2(b). After 300 episodes of training, the policies derived cross DQN with become more and more stable, the variance of test total rewards become zero close to the end of training. Cross DQN with deteriorates after 500 episodes of training, but later it also learns to derive stable policy that has total rewards of 200 with tiny variances. Whereas the policies derived from vanilla DQN and double DQN can only get score which is approximately half of cross DQNs, and with large variances. The policy derived from double DQN seems to be a little better than that from vanilla DQN, but the improvement is not as significant as that of using cross Q-learning.

Furthermore, part of the reason for slower start of cross DQN is due to our learning settings, in which we only perform SGD optimization on one of the networks (or heads). In other words, we reduce the learning frequency of each network (or head) down to to alleviate the computational effort, at the cost of slower start on learning. If we increase the learning frequency (i.e., backpropagate for each of the networks/heads every time), the learning should be faster.

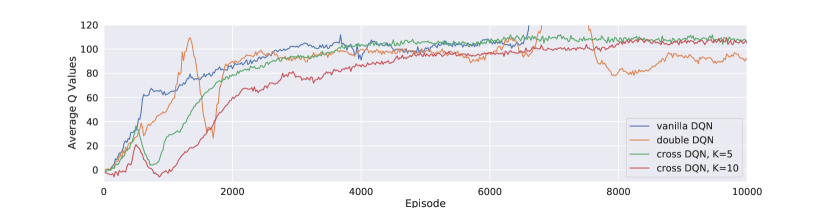

We also plot the average -values from bootstrapped 1024 -pairs as shown in Figure 2(c). We observe that the beginning of learning, vanilla DQN has highest estimates of -values, which is an evidence of overestimation. The estimates from double DQN is lower, but only for limited amount, therefore we say that double Q-learning may have not solve the overestimation problem completely. Cross DQNs have quite smaller estimations at the beginning, in particular, as gets larger, the estimates of -values become even lower. Overestimation is clearly an obstacle of effective learning, as a result, the estimated -values from cross DQNs are substantially higher than that from vanillar or double DQNs, since cross DQNs has derived better policies and obtained higher rewards. The -values estimates from cross DQNs start to converge after the derived policies stabilized, At the end of training, the estimated -values from the four different models are about at the same level, however, note that the estimates from vanilla and double DQNs continue increasing, and their derived policies are not stable, also have lower rewards. Our cross Q-learning algorithm has addressed the overestimation problem better.

4.1.3 Effects of dueling DQN & Bootstrapped DQN

As the cross learning architecture shares the same input-output interface with standard DQN, we can recycle many recent advances in DQN research. We have mentioned one variant in Section 3 that it can combined with Bootstrapped DQN for action selection during training, while in Secction 4.1.2, our experiments for cross DQN are based on majority voting from different -functions. Furthermore, it is convenient to combine the dueling architecture into each of the networks. The goal of dueling DQN is to reduce variance for -value estimation, by subtracting a baseline and emphasizing the advantages among different actions, thus accelerates learning effectively. The variance reduction is performed on a single network’s estimation, while our cross Q-learning reduces variance from a different perspective. For each network, the target values were calculated with other models by bootstrapping from multiple -values, thus introduces some bias. Due to the bias-variance tradeoff, however, the variance of our estimates decreases, and thus the overall error becomes smaller. In addition, the maximum operator induces overestimation bias, while cross-estimator tends to introduce bias in the other direction, thus greatly alleviates overestimation problem.

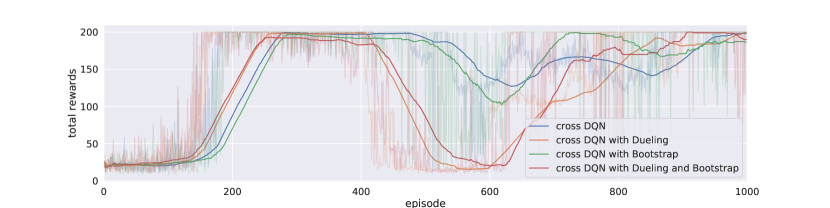

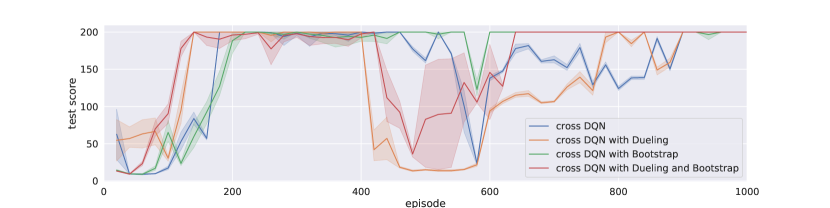

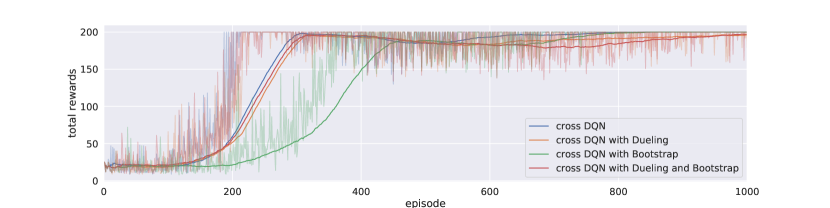

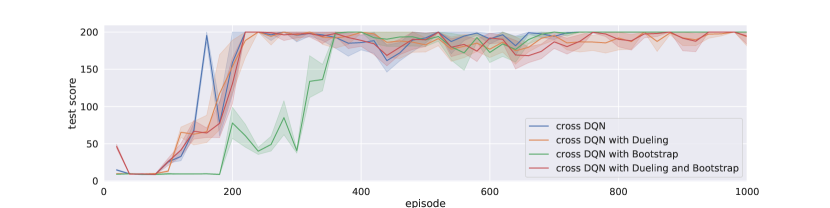

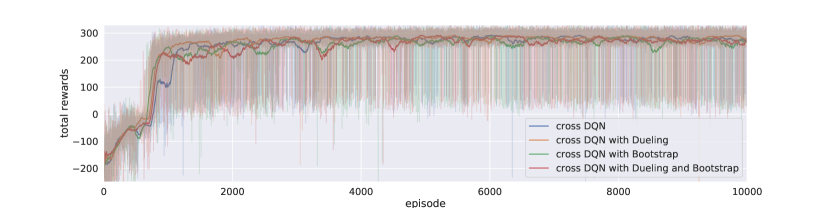

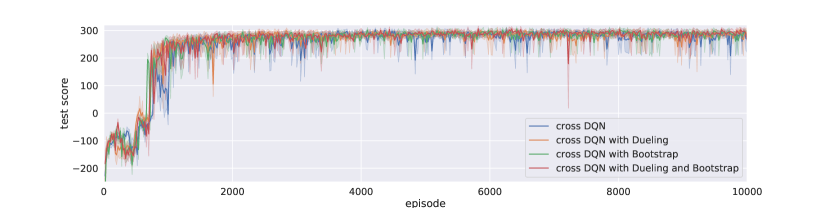

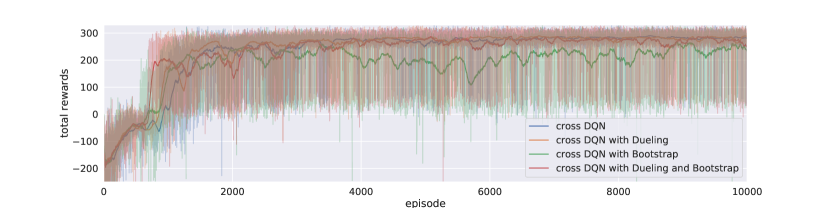

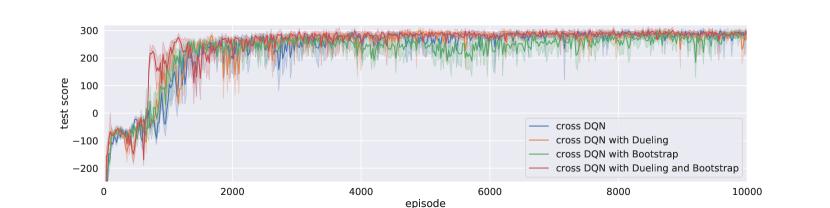

Figure 3 and Figure 4 illustrate the training and testing performance of cross DQN with different architectures, for the cases of and , respectively. We can see that dueling architecture speeds up early on learning effectively, without hurting the model performance later in general. On the other hand, Bootstrapped DQN slows learning at beginning, especially when is large, since the selected actions varies among networks at beginning quite a bit. For example, the cross DQN with bootstrap converges around 400 episodes while the other cross learning agents converges before 200 episodes. But after learned something, the bootstrapped action selection won’t hurt the model. In fact, it might help learning for more complicated tasks because of more exploration early on. At least, using bootstrapped DQN can help our cross DQN agent make faster action selection during training and reduce computational burden slightly, since instead of calculate all Q-values, we can calculate only one of them. Moreover, by comparing the learning curves of bootstrapped cross DQNs with different s, we can conclude that it is primarily our cross Q-learning rather than policy ensemble that greatly reduces the variance, as with the variations are much smaller that that with , though policy ensemble further reduces the variance greatly, and during testing phase, our agent can definitely benefit from ensemble of multiple models. Naturally combined crossed Q-learning with dueling and bootstrapped DQN, our model aggregates the merits from all three perspectives.

4.2 Lunar Lander

The task of Lunar Lander in Box2D [26] is to land the spaceship between the flags smoothly. In each step, the agent is provided with the current state of the lander in , in which 6 of the dimensions are in continuous space whereas the other 2 are dummy variables in discrete space, and the agent is allowed to make one of the 4 possible actions (i.e., the action space is discrete): fire the left, right, or down throttle so that the lander could obtain a force toward the opposite direction, or do nothing. At the end of each step, the agent receives a reward and moves to a new state . An episode finishes if the lander rest on the ground at zero speed (receives additional reward of ), or hits the ground and crashes (receives additional reward), or flies outside the screen, or reaches the maximum of 1000 time steps of one episode. The agent aims for successful landing which is defined as reaching the landing pad (between two flags) centered at the ground at the speed of zero, and receives an additional reward in range , while landing outside the pad would cause some penalty.

We built each network with two fully-connected hidden layers, which consist of 128 and 64 neurons, respectively. We train each of the neural networks for 10000 episodes for the LunarLander task, with a much larger replay buffer of size . The target network update is set to every 1000 steps for vanilla and double DQN, and learning rate and batch size of 64 are used for Adam optimizer to train all the models. The discount factor is again 0.99, and exploration rate is set to annealed to in the first 100000 steps. And again, -values for bootstrapped 1024 -pairs are evaluated and 10 episodes of performance tests with current policy are conducted every 20 training episodes.

In Figure 5, We compared our cross Q-learning algorithms with vanilla DQN and double DQN. With slower learning in the first a few hundreds of episodes due to our experimental design of the learning frequencies, cross DQNs learned much better and more stable policies, while vanilla and double DQN have large variances in both learning curves and performance testing. Figure 5(c) clearly shows that from the beginning, vanilla DQN optimistically gathers the occasional large rewards which are due to the high variance, and produces great overestimations. Double DQN slightly allivates the problem, but cannot avoid the overestimation effectively. The derived policies from these two networks are then not optimal nor stable. As learning going on, the estimated -values from both vanilla and double DQN explode, resulting in that the derived policies are no better than random actions. On the other hand, cross DQNs have much lower -value estimations at the beginning, and the estimates from model with are even lower than that from model with .

After 1000 episodes, the estimates continue growing until convergence, and their values converge to a same level at about . The derived policies are very stable, with total rewards close to 300 and also have little variance. Note that double DQN has lower estimates of -values than cross DQNs after 8000 episodes of training. The reason is that the corresponding policies from double DQN are much worse, and it does not indicate that double DQN addresses overestimation better.

Comparing Figure 6 and Figure 7, seems works even better than for most of time. Especially for bootstrapped cross DQN, both the learning curve and the test scores are lower than other cross DQN models. This indicates that it is not always the larger the better, since cross estimator would induce underestimate bias, and too much underestimation may also hide the real better actions and thus hurt the model performance. In fact, cross DQN might have too much underestimation at the beginning, which slows down the learning process significantly. But overall, the bootstrapped cross learning with dueling architecture performs best among all models, including all cross DQNs. We say that the DQN architectures are too complicated, and the aggregated effect may significantly change the performance of a particular model. Generally speaking, our cross DQNs favor underestimation, which should be much better than overestimation if no unbiased estimation can be achieved, since underestimations do not tend to propagate too much during training, as lower valued actions are avoided by the greedy action selection mechanism. And the bias-variance tradeoff tells us that the overall error can be reduced when the variance of our estimates is greatly decreased, by introducing slight negative bias, this in tern leads to better model performance.

Note that the derived policies from cross DQNs are much more stable in general, and hard to deteriorate. There are at least two reasons for this phenomena. First, cross Q-learning effectively addressed overestimation problem, thus premature policy would be more difficult to derived from cross DQN. In addition, we always ensemble policies using methods such as majority voting during test time, which in general is superior and has a stabilizing effect for action selections. The improved stability comes from larger barrier for altering the decision boundaries, and we could care much less about the early termination as an additional hyperparameter during training. This is yet another advantage of using multiple networks as in cross DQN.

5 Conclusions and Future Work

In this paper, we have presented the cross Q-learning algorithm, an extension to DQN that effectively reduces overestimation, stabilizes training, and improves performance. Cross DQN is a simple extension that can be easily integrated with other algorithmic improvement such as dueling network and bootstrapped DQN, leads to dramatic performance enhancement. We have both shown in theory and demonstrated in several experiments of classical control problems that the proposed scheme is superior in reducing overestimation and leads to better policies derivation, compared to widely used approaches such as double DQN. Cross learning favors underestimation, the introduced negative bias can greatly help variance reduction. We analyze this effect from the famous bias-variance tradeoff point of view. However, this also indicates that it is not the case the larger the better model performance in cross DQN. Nevertheless, DQN models tolerate underestimation much more than overestimation, as lower valued actions can be avoided by the greedy action selection mechanism.

It is noted that the computation complexity of cross DQN is generally higher, comparing with that of single network DQNs. We can, however, greatly reduce the complexity given the flexibility provided by our model. In addition, ensemble policies from multiple networks help stabilize the decision space, which can be utilized optionally in stablizing learning and definitely during testing.

As future work, we would apply cross learning to the state-of-the-art actor-critic methods in continuous control, further reduce the overestimation and stabilize those algorithms. Also, analysis from statistical learning theory could be helpful for us to derive more advanced cross learning strategies, for instance, better bootstrap estimations may be obtained by mimicking the -fold cross validation [27], or from Bayesian perspective [28].

Moreover, it worth noting that in each step of Q-learning (and more general value-based RL), we utilize -values in several different places. Now that a set of different -functions are applied, we can make different choices for picking particular one to use. We call them generalized cross learning in DQNs, and some existing work can be fell into a particular subclass of our generalized method. The first place that -values are utilized is when the agent makes decision for choosing an action at time step while observing . We can pick a random -function for action selection, and this is exactly what bootstrapped DQN [11] does. We say the bootstrapped DQN is a special case of our generalized cross DQN. The next place is at TD update when the target Q-values need to be evaluated for choosing the next action , which might not be executed, but is used to evaluate the current target Q-value and derive the operator. Recall in Q-learning we use the maximum estimator. Finally, after picking the next action , its value can be evaluated, again we have choices here for picking a -function to use. In the version of our cross DQN we presented in this work, which is directly derived from double DQN, we decoupled the selection and evaluation of the next action , where the current network is used for evaluating while another target network is used for selecting . We could try to do the opposite in certain circumstances, i.e., select with the current network and bootstrap another network to evaluate , which should have the effect of decrease bias but increase variance due to bias-variance tradeoff in general statistical learning scheme. One can further analyze and experiment with other generalized cross Q-learning variants.

References

- [1] Sebastian Thrun and Anton Schwartz. Issues in using function approximation for reinforcement learning.

- [2] Hado Van Hasselt, Arthur Guez, and David Silver. Deep reinforcement learning with double q-learning. In AAAI, pages 2094–2100, 2016.

- [3] Donghun Lee, Boris Defourny, and Warren B Powell. Bias-corrected q-learning to control max-operator bias in q-learning. In 2013 IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning (ADPRL), pages 93–99. IEEE, 2013.

- [4] Scott Fujimoto, Herke Van Hoof, and David Meger. Addressing function approximation error in actor-critic methods. arXiv preprint arXiv:1802.09477, 2018.

- [5] Oron Anschel, Nir Baram, and Nahum Shimkin. Averaged-dqn: Variance reduction and stabilization for deep reinforcement learning. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pages 176–185. JMLR. org, 2017.

- [6] Rie Johnson and Tong Zhang. Accelerating stochastic gradient descent using predictive variance reduction. In Advances in neural information processing systems, pages 315–323, 2013.

- [7] Aaron Defazio, Francis Bach, and Simon Lacoste-Julien. Saga: A fast incremental gradient method with support for non-strongly convex composite objectives. In Advances in neural information processing systems, pages 1646–1654, 2014.

- [8] Mark Schmidt, Nicolas Le Roux, and Francis Bach. Minimizing finite sums with the stochastic average gradient. Mathematical Programming, 162(1-2):83–112, 2017.

- [9] Zeyuan Allen-Zhu. Katyusha: The first direct acceleration of stochastic gradient methods. The Journal of Machine Learning Research, 18(1):8194–8244, 2017.

- [10] Zengqiang Chen, Beibei Qin, Mingwei Sun, and Qinglin Sun. Q-learning-based parameters adaptive algorithm for active disturbance rejection control and its application to ship course control. Neurocomputing, 2019.

- [11] Ian Osband, Charles Blundell, Alexander Pritzel, and Benjamin Van Roy. Deep exploration via bootstrapped dqn. In Advances in neural information processing systems, pages 4026–4034, 2016.

- [12] Xi-liang Chen, Lei Cao, Chen-xi Li, Zhi-xiong Xu, and Jun Lai. Ensemble network architecture for deep reinforcement learning. Mathematical Problems in Engineering, 2018.

- [13] Timothy P Lillicrap, Jonathan J Hunt, Alexander Pritzel, Nicolas Heess, Tom Erez, Yuval Tassa, David Silver, and Daan Wierstra. Continuous control with deep reinforcement learning. arXiv preprint arXiv:1509.02971, 2015.

- [14] Volodymyr Mnih, Adria Puigdomenech Badia, Mehdi Mirza, Alex Graves, Timothy Lillicrap, Tim Harley, David Silver, and Koray Kavukcuoglu. Asynchronous methods for deep reinforcement learning. In International conference on machine learning, pages 1928–1937, 2016.

- [15] ML Puterman. Markov decision processes. 1994. Jhon Wiley & Sons, New Jersey, 1994.

- [16] Christopher JCH Watkins and Peter Dayan. Q-learning. Machine learning, 8(3-4):279–292, 1992.

- [17] Richard S Sutton. Learning to predict by the methods of temporal differences. Machine learning, 3(1):9–44, 1988.

- [18] Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A Rusu, Joel Veness, Marc G Bellemare, Alex Graves, Martin Riedmiller, Andreas K Fidjeland, Georg Ostrovski, et al. Human-level control through deep reinforcement learning. Nature, 518(7540):529–533, 2015.

- [19] Long-Ji Lin. Self-improving reactive agents based on reinforcement learning, planning and teaching. Machine learning, 8(3-4):293–321, 1992.

- [20] Ziyu Wang, Tom Schaul, Matteo Hessel, Hado Van Hasselt, Marc Lanctot, and Nando De Freitas. Dueling network architectures for deep reinforcement learning. arXiv preprint arXiv:1511.06581, 2015.

- [21] Malcolm Strens. A bayesian framework for reinforcement learning.

- [22] Greg Brockman, Vicki Cheung, Ludwig Pettersson, Jonas Schneider, John Schulman, Jie Tang, and Wojciech Zaremba. Openai gym, 2016.

- [23] Prafulla Dhariwal, Christopher Hesse, Oleg Klimov, Alex Nichol, Matthias Plappert, Alec Radford, John Schulman, Szymon Sidor, Yuhuai Wu, and Peter Zhokhov. Openai baselines. https://github.com/openai/baselines, 2017.

- [24] Andrew G Barto, Richard S Sutton, and Charles W Anderson. Neuronlike adaptive elements that can solve difficult learning control problems. IEEE transactions on systems, man, and cybernetics, 5:834–846, 1983.

- [25] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [26] Erin Catto. Box2d: A 2d physics engine for games, 2011.

- [27] Hado Van Hasselt. Estimating the maximum expected value: an analysis of (nested) cross validation and the maximum sample average. arXiv preprint arXiv:1302.7175, 2013.

- [28] Carlo D’Eramo, Marcello Restelli, and Alessandro Nuara. Estimating maximum expected value through gaussian approximation. In International Conference on Machine Learning, pages 1032–1040, 2016.

- [29] Satinder Singh, Tommi Jaakkola, Michael L Littman, and Csaba Szepesvári. Convergence results for single-step on-policy reinforcement-learning algorithms. Machine learning, 38(3):287–308, 2000.

- [30] Hado V Hasselt. Double q-learning. In Advances in Neural Information Processing Systems, pages 2613–2621, 2010.

Appendix A Estimating the Maximum Expected Values

For Q-learning, the action is selected according to the estimated target Q-values. This is an instance of a more general maximum expected value estimation problem, which is formed as follows. Consider a set of random variables , we are interested in finding the maximum expected value among the set of variables, which is defined as

while each is usually estimated from samples. Let denote the sample space for estimating , for , and we further assume that the samples in are i.i.d. The sample mean is then an unbiased estimator for .

Let be the probability density function (PDF) for the variable , and be the cumulative density function (CDF). The maximum expected value is then

| (10) |

A.1 (Single) Maximum Estimator

The most straightforward way to approximate is to take the maximum over the sample mean for each , i.e., . Note that the sample means are unbiased estimates of the true means, thus is an unbiased estimate for , however, it is a biased estimate for .

Consider its CDF , we can write

| (11) |

Comparing equations (10) and (11), clearly and are note equivalent. Moreover, the product term in the integral introduces positive bias (since CDFs are monotonically increasing, the sum of their derivatives will be positive, the integral value would be monotonically increasing while more product terms are added). Therefore, we say that the expected value of the single estimator for the maximum is an overestimation of the maximum expected value.

A.2 Double Estimator

Consider the case that we use two sets of estimators and , in which each and is estimated from a set of samples independent of the one to estimate , i.e., , , and . For all , both and are unbiased estimator for , assuming all the samples in both sets are independently drawn from the population. That means for all , including , the action that maximizes the sample mean . Therefore, can be used to estimate as well as , i.e.,

The same argument holds for the opposite way considering the best action over and the sample mean . The selection of means that all other gives lower estimation, i.e., . Let and be the PDF and CDF of , respectively. Then

The expected value of double estimator is a weighted sum of the sample means’ expected values in one sample space, weighted by the probability of each sample mean to be the maximum in the other sample space, i.e.,

Double estimator gives us negative bias, since the weights are probabilities, which are positive and sum to 1, the maximum expected value then serves as an upper bound for the weighted sum, as some weights may also be given to variables whose expected value is less than the maximum.

A.3 Cross Estimator

We can easily extend the double estimator to a more general case, in which instead of using two sets of estimators, suppose now we have independent sets of estimators . We call it the cross estimator. The double estimator can be seen as a special case of the more general cross estimator. Similar argument as analyzing the double estimator can be applied here, for any two estimators and , as

The cross estimator finally uses a convex combination of the sample means,

thus also underestimates the maximum expected value.

Theorem 1.

[27] There does not exist an unbiased estimator for maximum expected values.

Appendix B Convergence in the Limit

In this section, we first present a lemma which claims the convergence of SARSA from [29], and then use it to prove convergence of cross Q-learning. Note that this part heavily borrows the proof of the convergence of double Q-learning [30], but serves as a more general case.

Lemma 2.

[29] . Consider a stochastic process , where and satisfy the equation

Let be a sequence of increasing -fields such that and are -measureable and and are -measurable, for .

converges to zero with probability one (w.p.1) if the following hold:

-

1.

the set is finite.

-

2.

, and w.p. 1.

-

3.

, where and converges to zero w.p. 1.

-

4.

, where is a constant.

in which denotes the maximum norm.

Theorem 3.

In a given ergodic MDP, suppose that we have a set of Q-value functions, , as updated by cross Q-learning, will converge to the optimal value function with probability 1, if the following conditions hold:

-

1.

The MDP is finite, i.e., .

-

2.

.

-

3.

The -values are stored in a lookup table.

-

4.

Each state-action pair is visited infinitely often.

-

5.

Each receives an infinite number of updates, for all .

-

6.

, and w.p. 1. Moreover, .

-

7.

Proof.

Let are randomly picked with . Apply Lemma 2 by letting , , , and , where . The first two conditions of Lemma 2 hold immediately from conditions 1 and 6 of Lemma 3, respectively. And since condition 7 of Theorem 3 gives us the bounds for the variance of rewards, the fourth condition of Lemma 2 holds.

To show the third condition of Lemma 2, we write

in which we define as the estimated -value for under the standard (single) Q-learning. While the convergence of standard Q-learning in finite MDP is well-known, i.e., , it suffices to show that , so that the condition on the expected contraction of holds.

Let . It is important to note that at each step, the choice of is random, all with equal probability . Consider the case that is updated using at time , the update of is

Or, again with probability , we use to update , in this case we have

Otherwise, this particular pair is not selected at time , and the update of is then zero. Then

Clearly converges to 0 since the coefficient on the R.H.S. is less than 1. Therefore we have shown that since in expectation and are randomly chosen. It in turn ensures condition 3 of Lemma 2 holds, which completes our proof. ∎

Finally, we rephrase Theorem 3 as follows:

Proposition 4.

Cross estimation converges in the limit, given finite and ergodic MDP.