Cross-Scope Spatial-Spectral Information Aggregation for Hyperspectral Image Super-Resolution

Abstract

Hyperspectral image super-resolution has attained widespread prominence to enhance the spatial resolution of hyperspectral images. However, convolution-based methods have encountered challenges in harnessing the global spatial-spectral information. The prevailing transformer-based methods have not adequately captured the long-range dependencies in both spectral and spatial dimensions. To alleviate this issue, we propose a novel cross-scope spatial-spectral Transformer (CST) to efficiently investigate long-range spatial and spectral similarities for single hyperspectral image super-resolution. Specifically, we devise cross-attention mechanisms in spatial and spectral dimensions to comprehensively model the long-range spatial-spectral characteristics. By integrating global information into the rectangle-window self-attention, we first design a cross-scope spatial self-attention to facilitate long-range spatial interactions. Then, by leveraging appropriately characteristic spatial-spectral features, we construct a cross-scope spectral self-attention to effectively capture the intrinsic correlations among global spectral bands. Finally, we elaborate a concise feed-forward neural network to enhance the feature representation capacity in the Transformer structure. Extensive experiments over three hyperspectral datasets demonstrate that the proposed CST is superior to other state-of-the-art methods both quantitatively and visually. The code is available at https://github.com/Tomchenshi/CST.git.

Index Terms:

Hyperspectral image, super-resolution, deep learning, Transformer.I Introduction

Hyperspectral images provide abundant spectral information on a variety of contiguous spectral bands from the target scene [1]. Unlike traditional imaging techniques that capture color information in limited channels, they possess the remarkable diagnostic ability to distinguish subtle spectral differences and inherent characteristics of various materials [2]. Therefore, hyperspectral images have been widely employed in diverse fiels, such as target detection [3, 4], geological exploration [5], and medical diagnosis [6]. However, an inherent trade-off between spatial and spectral resolution exists in the obtained hyperspectral images due to hardware limitations. Consequently, they frequently suffer from low spatial resolution. Researchers have endeavored to solve these challenges without relying solely on hardware advances.

Super-resolution (SR) seeks to reconstruct high-resolution (HR) images from their low-resolution (LR) counterparts [7]. Recently, the development of SR techniques has extended to hyperspectral images. According to whether auxiliary images are used, hyperspectral image SR can be categorized into fusion-based hyperspectral image super-resolution [8, 9, 10] and single hyperspectral image super-resolution (SHSR) [11, 12]. The fusion-based methods merge the LR hyperspectral images and HR auxiliary images such as multispectral and panchromatic image to reconstruct HR hyperspectral images. Currently, these methods often show superior performance compared to SHSR and have become the dominant approach. Nevertheless, these HR auxiliary images are often difficult to obtain and require precise registration with the target hyperspectral image[13]. These challenges hinder the implementation of fusion-based methods in practical scenarios.

Without additional auxiliary information, SHSR has a very favourable perspective and it has been widely investigated recently [14, 15]. In the past, traditional methods typically employ handcrafted priors [16, 17, 18, 19] such as sparse representation, low-rank matrix approximation, and dictionary learning to reconstruct high-resolution hyperspectral images. Nevertheless, these techniques are intricate and inflexible, resulting in limited performance. With the rapid development of deep learning, convolutional neural networks have shown surprising performance in the field of computer vision. By considering the deep SR networks for natural images [20, 21, 22], hyperspectral image SR has achieved remarkable developments [23]. However, these RGB methods are not directly applicable to hyperspectral images. Some researchers treat hyperspectral images as combinations of different spectra and then employ deep SR networks to process them in a band-by-band manner, which ignores the inherent high-dimensional spectral properties in hyperspectral images. Another intuitive approach is to feed the entire hyperspectral image directly into the deep model for three-channel RGB images, which exponentially increases the parameters and computational cost of the network [11].

Despite the convolutional neural networks (CNN) tailored for hyperspectral images that have shown good performance [11, 24], most of them utilize 2D or 3D convolutions to reveal latent spatial-spectral features in hyperspectral images. Specifically, 2D convolution can only exploit the spatial information in a confined area and is unable to delve into spectral features, which may result the spectral distortion. While 3D convolution can exploit local-range spectral correlations, it cannot model long-range spectral dependencies. Additionally, 3D convolution substantially escalates the network complexity [25]. The grouping-based methods [26, 14, 27] contemplate spectral correlations within local groups. Although the researchers have endeavored to fortify the connections between neighboring spectral groups by designing specialized modules [28], they have not fully exploited the long-range spectral correlations among global spectra.

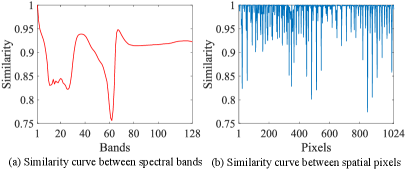

Recently, Transformers have become increasingly prevalent in SR tasks due to their capacity to capture long-range dependencies [29, 30]. Nevertheless, few researchers have employed dedicated transformers to model global spatial-spectral dependencies for hyperspectral image SR. Evidently, the quadratically growing computational cost with the number of tokens limits the application of Transformer-based methods. Several works for natural images [31, 32], have investigated specific strategies to mitigate this phenomenon, most of which are not compatible with hyperspectral images. The window-based Transformers [31] limit the long-range spatial information in a regional range, which does not adequately focus on the global spatial dependencies. The channel-wise self-attention [32] emphasizes the interactions between high-dimensional channels. Some schemes [12, 33] for hyperspectral image SR adopt the self-attention mechanism in the spectral dimension and leverage 3D convolutions to extract spatial information. However, on the one hand, these methods have not taken into account the long-range spatial dependencies. On the other hand, the low-rank based approaches [34, 19] illustrate that excessive redundancy across high-dimensional channels will adversely impact hyperspectral image SR performance. As shown in Fig. 1, we can first observe in Fig. 1 (a) that the first spectral band exhibits strong similarity not only with the adjacent spectral bands but also with the distant spectral bands. Secondly, in Fig. 1 (b), the first pixel shows strong similarity with both the neighboring pixels and the pixels at remote positions. Finally, we can notice that in both pixel and spectral curves, there are some regions with relatively weak similarity. Therefore, it can be inferred that there is redundancy in both spatial and spectral dimensions when extracting features. How to effectively model the spatial and spectral long-range dependencies for single hyperspectral image SR remains a significant challenge.

Motivated by these factors, we propose a cross-scope spatial-spectral Transformer (CST) network to model the long-range spatial and spectral dependencies. In the Transformer module, we individually devise the cross-scope spatial self-attention (CSA) and cross-scope spectral self-attention (CSE). In the spatial dimension, to facilitate the long-range spatial dependencies over the window-based self-attention [35, 36], we explore the similarities between local features within rectangular windows and aggregated global features. CSA not only preserves the linear complexity of the window-based attention mechanism but also thoroughly establishes the global dependencies. In the spectral dimension, to alleviate the negative influence of the redundant high-dimensional spectra, we explore the interactions between refined spatial-spectral features and global features. CSE can effectively mitigate the burdens involved in computing long-range spectral dependencies among high-dimensional spectra and achieve the dominant global spectral interactions. Inspired by [32], we construct a concise feed-forward neural network behind the cross-scope self-attentions to reinforce the representation capability of long-range spatial-spectral features. Finally, we aggregate the inductive bias of convolutions with the Transformer layer by merging a parallel channel attention with the cross-scope attentions. Extensive experiments on three common benchmark datasets show that the proposed CST outperforms the state-of-the-art approaches both quantitatively and visually. In summary, the contributions of this paper are summarized as follows:

-

1)

We propose a novel cross-scope spatial-spectral Transformer for SHSR to adequately capture the long-range dependencies in both spatial and spectral dimensions, which enhances the SR performance in linear computational complexity.

-

2)

To actualize the long-range spatial dependencies, we design a cross-scope spatial self-attention to investigate the similarities between local features within rectangular windows and the aggregated global features.

-

3)

To mitigate the effect of redundant high-dimensional spectra and capture the long-range spectral dependencies, we formulate a cross-scope spectral self-attention through the interactions between the characteristic spatial-spectral features and global features.

The remaining sections of this paper are organized as follows. Section II briefly reviews existing hyperspectral images SR methods. Section III presents the the proposed method in detail. Section IV shows the settings and experimental results and ablation analysis. Finally, Section V concludes this paper.

II Related Works

II-A CNN-based single hyperspectral image SR

Single hyperspectral image super-resolution has attracted considerable attention owing to its diverse application prospects. Due to the excellent performance of CNNs for natural image SR [20, 21, 22, 37], a large number of CNN-based architectures have emerged for addressing hyperspectral image super-resolution. Yuan [38] integrated the transfer learning and collaborative non-negative matrix factorization to guide the reconstruction of hyperspectral image from the natural images. To utilize the high-dimensional spectral characteristics, Mei [11] proposed a 3D fully CNN network (3DFCNN) to directly extract the spatial-spectral features by the 3D convolutions. To reduce the high computational and memory complexity of 3D convolutions, Li [39] decomposed the 3D kernel into the combination of 1D-2D convolutional kernels. Then, Li [24] designed a mixed convolutional network (MCNet) to extract the spatial and spectral information by mixing the 2D and separable 3D convolution. Furthermore, Li [40] alternately employed 2D and 3D units to boost the spatial-spectral representation capacity, which fully explored the relationship between 2D/3D convolutions (ERCSR). Then, replacing 3D Convolution with a grouping mechanism, Li [26] further designed a grouped deep recursive residual network (GDRRN) by introducing the group-wise convolution into the recursive residual module. Similarly, Jiang [14] proposed a progressive multi-branch network to learn a spatial-spectral prior of the grouping spectra (SSPSR). To maintain the spatial–spectral consistency, Wang [27] proposed a recurrent feedback network (RFSR) with the regularization strategy to strengthen the interaction between the adjacent spectral grouping. Then, Wang [28] explored the similar and complementary information within adjacent spectral groups to reconstruct more details. Li [41] designed a dual-stage network from the coarse stage to the fine stage to exploit the spatial-spectral similarity between adjacent bands. Nevertheless, these convolution-based methods were restricted to excavating the local spatial-spectral features, which overlooked the long-range spatial-spectral dependencies.

II-B Transformer-based single hyperspectral image SR

Transformer has received widespread application in SR tasks [42, 43, 32] due to its exceptional performance in exploring non-local similarity. Liu [33] designed a parallel branch network called Interactformer, which incorporated both Transformer modules and 3D convolutions. Hu [12] proposed a multilevel progressive network (MPNet) to learn the fine details by the progressive learning and non-local channel attention. Wang [44] also combined the spectrum-wise self-attention and 3D convolutions named 3D-THSR to learn the spatial-spectral features in global receptive field. However, there were several limitations with the aforementioned approach. Firstly, in order to reduce the computational complexity, these methods only explored long-range dependencies in the spectral dimension, thereby failing to fully harness the global spatial information. Secondly, based on the previous analysis, these methods ignored the redundancy when calculating self-attention from the deep features with expanded spectral dimension. Lastly, the utilization of 3D convolutions for extracting local information required substantial memory consumption. Hence, to efficiently capture long-range dependencies in both spectral and spatial dimensions with low complexity, we introduce a cross-scope method to leverage local-global spatial-spectral information.

III Proposed Method

In this section, we first elaborate the overall architecture of the proposed network CST. Subsequently, we present the spatial and spectral cross-scope self-attention mechanisms, and the concise feed-forward neural network, respectively. Finally, we describe the used loss functions in this paper.

III-A Overall Architecture

Motivated by the limitations of current CNNs and Transformer methods in capturing long-range spatial-spectral dependencies [31, 33], this paper aims to address the shortcomings of the existing CNN and Transformer in revealing latent spatial-spectral features. While 2D convolutions are confined to local areas and neglect spectral features, 3D convolutions lack the capability to model long-range dependencies and escalate network complexity. Despite the rising popularity of Transformers in SR, few studies have focused on dedicated Transformers for global spatial-spectral dependencies in hyperspectral image SR. Current strategies for natural images are not readily adaptable to hyperspectral data [14]. The proposed approach seeks to overcome these challenges by introducing a novel cross-scope strategy that leverages local-global spatial-spectral information efficiently.

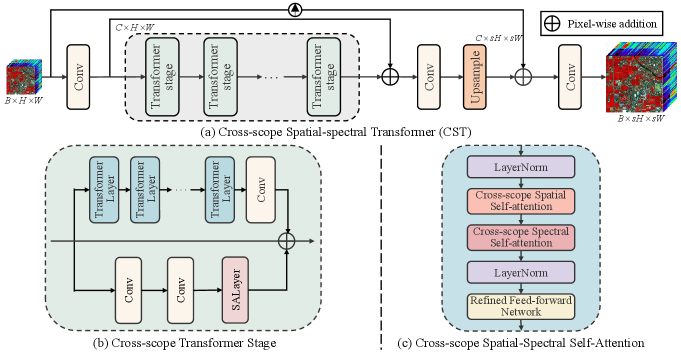

The overall architecture of the proposed CST is shown in Fig. 2, consisting of three parts: shallow feature extraction, deep feature extraction, and image reconstruction. Given the input LR hyperspectral image and the SR scale factor , where and are the height and width in the spatial dimension and is the number of spectral bands, our network outputs the HR hyperspectral image . The CST first extracts the shallow features from through a convolution, which expands the spectral dimensions to obtain more feature maps. The shallow feature extraction can be denoted as

| (1) |

where is the shallow feature extraction layer, and is the extracted shallow feature. Then, the shallow features pass through a sequence of Transformer stages to extract the deep features. As shown in Fig. 2 (b), the designed cross-scope Transformer stage contains successive Transformer layers and one convolution layer. And each Transformer stage includes two parallel branches, Transformer layer and spectral attention module, to adaptively aggregate the features from the the non-local or local regions. The deep feature extraction can be denoted as

| (2) |

where is the function of -th Transformer stage and denotes the -th spatial-spectral feature from the Transformer stage. Subsequently, the deep feature is obtained by the skip connection and one convolution layer, which can be denoted as

| (3) |

where represents the extracted deep feature. Finally, the deep feature is fed into the reconstruction layer to improve the spatial resolution, which can be denoted as

| (4) |

where represents an upsampling operation implemented using pixelshuffle and denotes the upsampled feature. The final reconstruction SR image can be attained by long skip connection and one convolution layer, which can be denoted as

| (5) |

where is the bicubic upsampling operation. Through the final residual structure, the network can directly learn the significant textures and details for HR hyperspectral image.

As shown in Fig. 2 (c), the designed Transformer layer adopts the common the architecture that consists of some essential blocks: LayerNorm (LN) modules, distinctive cross-scope multi-head self-attention modules, and concise feed-forward neural network. Let denote the input feature of the -th Transformer layer. The outputs of Transformer layer can be expressed as

| (6) | ||||

where represents the cross-scope multi-head self-attention, represents the function of layer normalization, and represents the designed concise feed-forward neural network. The details of CMSA are shown in Fig.1 (c), which contains a cross-Scope spatial attention and cross-scope spectral attention.

III-B Cross-Scope Spatial Self-attention

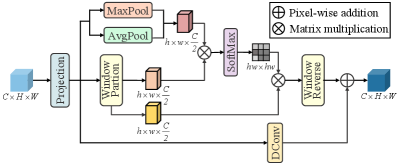

Traditional convolutional approaches struggle to capture extended spatial dependencies, while standard Transformers are constrained by computational complexity. Drawing from these factors, we further introduce the cross-scope spatial self-attention to address the inherent drawback of window-based attention mechanisms that can only account for information within local regions. As shown in Fig. 3, the spatial cross-attention integrates the local and global information within the window-based attention mechanism, aiming to establish comprehensive global spatial dependencies while maintaining linear computational complexity.

Following [36], for the input feature after layer normalization, CSA is first divide it into two segments along the spectral dimension, where and . Then, and are fed into horizontal and vertical cross-scope spatial multi-head self-attention mechanisms, respectively. Finally, the output of CSA is calculated by aggregating the results from the horizontal and vertical dimensions, which can be denoted as

| (7) | ||||

where and represents the function of mutli-head self-attention along horizontal and vertical dimensions, respectively.

Specifically, we calculate the multi-head attention in parallel, and here we take the single head as an example for the sake of simplicity. When the size of rectangle window is and , we partition the feature as vertically, where and . Then, the local features are calculated in the vertical windows. To establish long-range spatial dependencies within linear complexity, we directly apply two pooling operations to the input features to obtain global spatial information. Subsequently, the local and global features are reshaped and linearly mapped as query (), key (), and value () to compute the cross-attention, which can be denoted as

| (8) | ||||

where and represent the learnable parameters, and represent the function of adaptive average and max poolings, is the relative position encoding, and is the feature dimension. Finally, the output of the vertical CSA is obtained as

| (9) |

Similarly, for the horizontal cross-scope spatial multi-head self-attention, the size of rectangle window is and we can obtain the horizontal output . Additionally, between consecutive cross-scope spatial self-attention, we introduce the shift operation along different directions to enhance interactions between different windows. The previous works have demonstrate that the rectangle windows are advantageous for capturing repetitive textures in various directions.

The computational complexity of CSA can be denoted as

| (10) |

where and are the constants. In summary, our CSA can calculate the spatial cross-attention with linear computational complexity on the spatial size of .

III-C Cross-Scope spectral self-attention

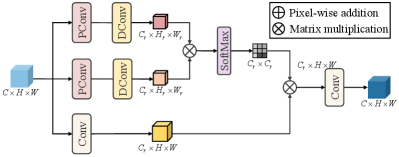

Traditional convolution-based methods adopt the 3D convolution to emphasize local spectral features, disregarding the potential long-range connections. Although some Transformer-based approaches have combined spectral self-attention mechanisms and 3D convolutions, they often suffer from increased memory costs and high complexity due to the high-dimensional feature space. To tackle these issues, we design a cross-scope spectral self-attention mechanism that efficiently captures characteristic spatial-spectral correlations and global interactions. As shown in Fig. 4, the spectral cross-attention models the global spectral dependencies in linear computational complexity.

For the input feature from the CSA, CSE first normalizes and reshapes it as . Then, we sequentially employ the point-wise convolution and depth-wise convolution to extract the characteristic features containing comprehensive spatial-spectral information. We also achieve the multi-head attention mechanism, and here we take the single head as an example for the simplicity. To avoid excessive redundancy across high-dimensional channels, the characteristic features, (i.e. query () and key ()), are adaptively calculated through the reduction in both spatial and spectral dimensions. In this paper, we define , and . We exploit the cross-attention between the characteristic features and the global feature to achieve the global spatial-spectral interactions, which can be denoted as

| (11) | ||||

where is the function of point-wise convolution, is the function of depth-wise convolution, is the standard convolution, is the feature dimension, and is the output of CSE. Through CSE, we facilitate the interactions between essential representative features and global features, capturing long-range spectral similarities with low computational cost.

The standard computational complexity of self-attention on spectral dimension is . The computational complexity of the designed CSE can be denoted as

| (12) |

where , , and . In summary, our CSA can calculate the spectral cross-attention with low computational cost compared with the original spectral self-attention.

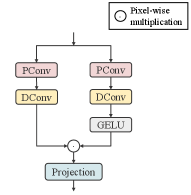

III-D Concise Feed-Forward Neural Network

Traditional feed-forward neural network treat the pixels at each position independently and equally without considering the relationships between them. Following the gating mechanism [32], we design a concise feed-forward neural network (CFN) to improve the representative captivity of the model. As shown in Fig. 5, CFN comprises two parallel branches to process the input spatial-spectral features. In detail, we sequentially utilize point-wise convolution and depth-wise convolution in two branches to extract details beneficial for image reconstruction. Additionally, a non-linear transformation using the GELU activation function is applied in one of the branches, enabling the network to flexibly focus on crucial information. Finally, the advantageous context is learned through the element-wise product of the linear and non-linear branches. Given an input feature , the process of CFN can be denoted as

| (13) |

Compared with the gating structure in [32], our CFN focuses on exploring internal spatial correlations to uncover more spatial information conducive to image restoration.

III-E Loss Function

Following the previous works, three losses, i.e. loss, spectral angle mapper (SAM) loss, and gradient loss in both spatial and spectral domains, are employed to optimize the proposed network.

Firstly, the loss is widely used in SR for natural image [22]. It calculates the absolute pixel-wise difference between the reconstructed SR image and the original HR image. While the loss tends to generate overly smoothed results, the loss provides a more equitable distribution of errors and enhances the convergence of training. This property encourages the model to reconstruct sharper and more detailed results. In our approach, we adopt the loss to measure the accuracy of the reconstructed hyperspectral images and preserve the spatial details during the super-resolution process. Secondly, The SAM loss is introduced to ensure the spectral consistency of the reconstruction images. Unlike traditional loss functions that focus solely on pixel-wise differences, the SAM loss takes into account the spectral characteristics of hyperspectral data. Finally, inspired by [27, 28], we integrate gradient information to further enhance the sharpness of the reconstructed images. The gradient loss focuses on the differences between adjacent pixels. In conclusion, the total loss for the proposed network can be formulated as

| (14) | |||

| (15) | |||

| (16) | |||

| (17) |

where is the number of the input in a training batch, and represents the -th HR and SR hyperspectral images, and represents the horizontal, vertical, and spectral gradients of the input, respectively. and denote the hyper-parameters that control the balance between the different losses for excellent SR performance. The analysis of the loss functions is shown in the section of the experiment. In this paper, is set to 0.3 and is set to 0.1, empirically.

IV Experiments and Results

IV-A Datasets

The experiments are conducted on three common real-scenario hyperspectral image datasets, i.e., Chikusei [45], Pavia Center [46], and Houston 111https://hyperspectral.ee.uh.edu/?page_id=1075.

IV-A1 Chikusei Dataset

The Chikusei dataset is a hyperspectral remote sensing image, acquired by the Headwall Hyperspec-VNIR-C imaging sensor over agricultural and urban areas in Chikusei, Ibaraki, Japan. The image contains 128 spectral bands ranging from 363 to 1018 nm with a spatial resolution of . The ground sampling distance is 2.5 m.

IV-A2 Houston Dataset

The Houston 2018 dataset is the part of the 2018 IEEE GRSS Data Fusion Contes, acquired by the ITRES CASI 1500 spectral imager over the University of Houston campus and its surrounding urban area in Houston, Texas, America. The image contains 48 spectral bands ranging from 380 to 1050 nm with a spatial resolution of . The ground sampling distance is 1 m.

IV-A3 Pavia Centre Dataset

The Pavia Centre dataset is a hyperspectral remote sensing image, taken by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor during a flight campaign over the center area of Pavia, northern Italy, in 2001. The image contains 103 bands ranging from 430 to 860 nm with a spatial resolution of . The ground sampling distance is 1.3 m.

IV-B Implementation Details

In the proposed network CST, we set the kernel size of the standard convolution and depth-wise convolution as , and the size of others for point-wise convolution and spectral attention is . Zero padding is adopted to maintain the spatial size of feature maps. Following the settings in [22], we set the reduction ratio in CA as 16. We set the number of channels to , the number of Transformer stages as , and the number of Transformer layers as . In general, the rectangle window size is set to . In the image reconstruction, we adopt the progressive upsampling strategy by PixelShuffle [47] to upsample the input LR hyperspectral images for reducing the parameters (e.g., upsampling 3 times for scale factor 8). For the loss function, we set and . In the training procedure, the Adam optimizer with the default setting is employed to train the network for 500 epochs, and the size of the mini-batch is set to 32. The initial learning rate is set to and halves every 100 epochs until 300 epochs. The proposed model is implemented by Pytorch on NVIDIA RTX 3090 GPU.

We compare the proposed CST with other state-of-the-art methods at three scale factors, including one interpolation method, one Transformer-based SR SwinIR [31] for natural image and deep learning-based SHSR methods, i.e. Bicubic, GDRRN [26], SSPSR [14], RFSR [27], and GELIN [28]. For SwinIR, We directly feed the whole hyperspectral image into the network for training and modify the dimensions of the input and output. For the aforementioned methods, we strive to achieve their optimal performance. Six popular evaluation metrics are used for the comparison experiments to entirely demonstrate the performance of the models in both spatial and spectral dimensions, including peak signal-to-noise ratio (PSNR), structure similarity (SSIM), spectral angle mapper (SAM), cross correlation (CC), root-mean-squared error (RMSE), and erreur relative global adimensionnellede synthese (ERGAS). While PSNR, SSIM, and RMSE are typically employed to assess the quality of natural image restoration, the remaining three metrics, CC, SAM, and ERGAS, are common evaluation measures in hyperspectral image fusion tasks.

IV-C Experiments on the Chikusei dataset

| Method | Scale | PSNR | SSIM | SAM | CC | RMSE | ERGAS |

|---|---|---|---|---|---|---|---|

| bicubic | 43.2125 | 0.9721 | 1.7880 | 0.9781 | 0.0082 | 3.5981 | |

| GDRRN [26] | 46.4286 | 0.9869 | 1.3911 | 0.9885 | 0.0056 | 2.6049 | |

| SwinIR [31] | 47.3018 | 0.9889 | 1.2228 | 0.9898 | 0.0051 | 2.4146 | |

| SSPSR [14] | 47.4073 | 0.9893 | 1.2035 | 0.9906 | 0.0051 | 2.3177 | |

| RFSR [27] | 47.6258 | 0.9898 | 1.1335 | 0.9910 | 0.0049 | 2.2862 | |

| Gelin [28] | - | - | - | - | - | - | |

| Ours | 48.0852 | 0.9908 | 1.1057 | 0.9918 | 0.0047 | 2.1685 | |

| bicubic | 37.6377 | 0.8954 | 3.4040 | 0.9212 | 0.0156 | 6.7564 | |

| GDRRN [26] | 39.0864 | 0.9265 | 3.0536 | 0.9421 | 0.0130 | 5.7972 | |

| SwinIR [31] | 39.5366 | 0.9364 | 2.8327 | 0.9456 | 0.0122 | 5.6280 | |

| SSPSR [14] | 39.9797 | 0.9393 | 2.4864 | 0.9528 | 0.0119 | 5.1905 | |

| RFSR [27] | 39.8950 | 0.9382 | 2.4656 | 0.9517 | 0.0120 | 5.2334 | |

| Gelin [28] | 40.1573 | 0.9410 | 2.4266 | 0.9543 | 0.0118 | 5.0314 | |

| Ours | 40.2406 | 0.9431 | 2.3453 | 0.9554 | 0.0116 | 5.0123 | |

| bicubic | 34.5049 | 0.8069 | 5.0436 | 0.8314 | 0.0224 | 9.6975 | |

| GDRRN [26] | 34.7395 | 0.8199 | 5.0967 | 0.8381 | 0.0213 | 9.6464 | |

| SwinIR [31] | 34.8785 | 0.8307 | 5.0413 | 0.8465 | 0.0210 | 9.4743 | |

| SSPSR [14] | 35.1643 | 0.8299 | 4.6911 | 0.8560 | 0.0206 | 9.0504 | |

| RFSR [27] | 35.5049 | 0.8405 | 4.2785 | 0.8661 | 0.0199 | 8.6338 | |

| Gelin [28] | 35.6496 | 0.8464 | 4.1354 | 0.8707 | 0.0197 | 8.4520 | |

| Ours | 35.7902 | 0.8522 | 3.9915 | 0.8753 | 0.0192 | 8.3636 |

After removing the blurred edges, the size of the remaining central region in the Chikusei dataset is . Following the previous work [14, 28], the four non-overlapping images with the size of are cropped from the top region for testing. The remaining area is cropped into overlapping HR images for training (10% of the training data is randomly selected for validation). Specifically, the spatial resolution of LR training patches is , and the sizes of corresponding HR patches for scale factors , , and are , , and , respectively. All the LR patches from the hyperspectral image are generated through bicubic downsampling at different scales.

The quantitative results of our method and other compared methods at different scale factors on the Chikusei dataset are shown in Table I. The best results are indicated in bold, and the second-best results are underlined. We can observe that the interpolation-based method Bicubic exhibits ordinary SR performance, while learning-based methods achieve significant improvements. While the Transformer-based method SWINIR can slightly enhance spatial recovery results, this approach designed for natural image super-resolution does not consider the spectral characteristics of hyperspectral images, leading to spectral distortion. Grouping-based methods designed for hyperspectral images restore the HR images in both spatial and spectral dimensions but do not explore the non-local spatial-spectral similarities. Notably, through capturing the global spatial-spectral dependencies, our proposed CST achieves the best performance for all metrics at scale factors , , and , which demonstrates the effectiveness and superiority of our method.

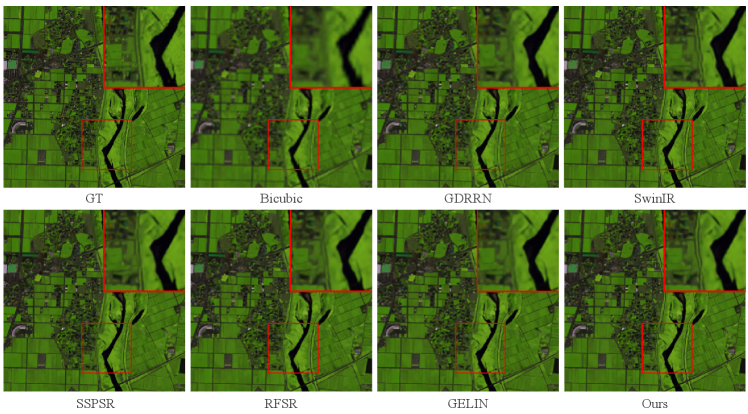

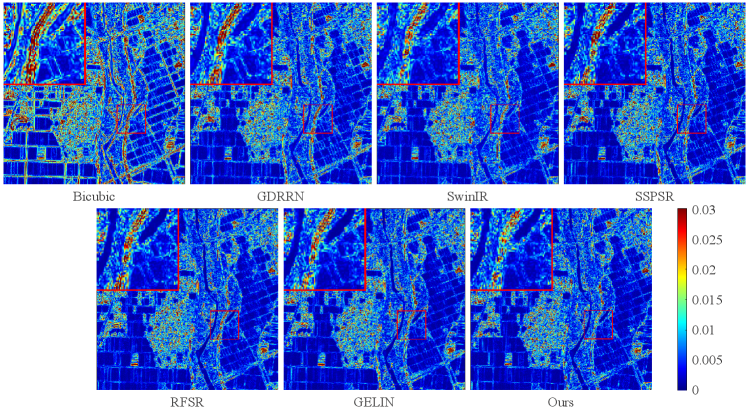

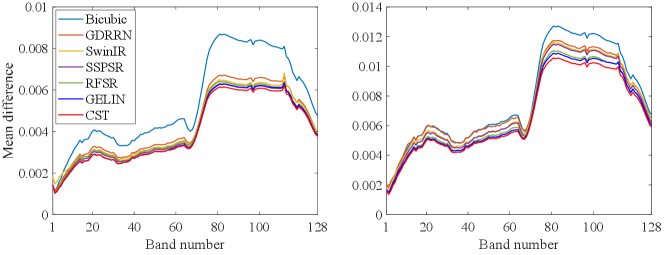

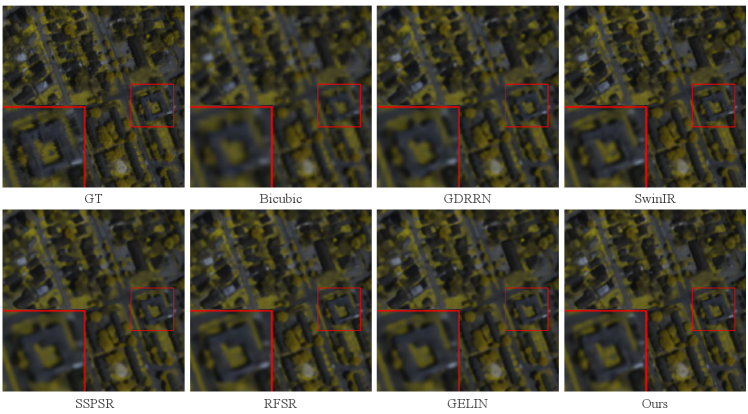

As shown in Fig. 6, the qualitative results of all methods at scale factor on the Chikusei dataset are presented. Especially, the 70th, 100th, and 36th bands of the test image are selected as the R-G-B channels for visual comparison. We can observe that while interpolation-based method Bicubic upsampling can achieve hyperspectral image SR, it often introduces blur and overly smooth details. Recent methods like SSPSR and GELIN have made progress in restoring overall structure, but they still suffer from unclear boundaries and severe artifacts. In particular, the visual results demonstrate that the proposed CST can reconstruct the HR hyperspectral images with clearer and sharper details compared with the other methods. Moreover, the advantages of our method can be better observed based on the enlarged view of the details in the red box. Additionally, we also visualize the mean error images among all spectra in Fig. 7 to provide an intuitive view of the accuracy of the reconstruction for individual pixels. In the error images, a bluer color indicates a higher accuracy for the reconstruction results. According to the highlighted red box region in error maps, our method exhibits minimal errors, which illustrates the superiority of the proposed method. Finally, the mean spectral difference curves of the test images at the scale factors and from the testing dataset are shown in Fig. 8 to evaluate the SR results from a spectral perspective. Compared to analyzing the reflectance of random pixels in the reconstructed image, the mean spectral difference offers a comprehensive measure of the accuracy of spectral reconstruction quality across the entire image. The lower the curve, the fewer spectral differences and distortion between the SR results and the ground-truth. Clearly, our method achieves the best spectral reconstruction results at different scale factors.

IV-D Experiments on the Houston dataset

| Method | Scale | PSNR | SSIM | SAM | CC | RMSE | ERGAS |

|---|---|---|---|---|---|---|---|

| bicubic | 49.4735 | 0.9915 | 1.2707 | 0.9940 | 0.0040 | 1.3755 | |

| GDRRN [26] | 51.5205 | 0.9949 | 1.1241 | 0.9957 | 0.0031 | 1.0723 | |

| SwinIR [31] | 52.2118 | 0.9957 | 1.0863 | 0.9964 | 0.0028 | 0.9898 | |

| SSPSR [14] | 52.1246 | 0.9954 | 1.0101 | 0.9965 | 0.0029 | 1.0035 | |

| RFSR [27] | 52.5131 | 0.9958 | 0.9491 | 0.9968 | 0.0028 | 0.9571 | |

| Gelin [28] | - | - | - | - | - | - | |

| Ours | 53.4784 | 0.9967 | 0.8803 | 0.9974 | 0.0025 | 0.8570 | |

| bicubic | 43.0272 | 0.9613 | 2.5453 | 0.9741 | 0.0086 | 2.9085 | |

| GDRRN [26] | 44.2964 | 0.9730 | 2.5347 | 0.9760 | 0.0069 | 2.4700 | |

| SwinIR [31] | 46.0971 | 0.9808 | 1.9463 | 0.9864 | 0.0059 | 2.0039 | |

| SSPSR [14] | 45.6017 | 0.9778 | 1.9650 | 0.9850 | 0.0063 | 2.1380 | |

| RFSR [27] | 45.8677 | 0.9792 | 1.8304 | 0.9858 | 0.0060 | 2.0659 | |

| Gelin [28] | 45.8715 | 0.9790 | 1.8759 | 0.9859 | 0.0061 | 2.0778 | |

| Ours | 46.9957 | 0.9839 | 1.6508 | 0.9892 | 0.0054 | 1.8236 | |

| bicubic | 38.1083 | 0.8987 | 4.6704 | 0.9177 | 0.0152 | 5.1229 | |

| GDRRN [26] | 38.2592 | 0.9085 | 4.9045 | 0.9138 | 0.0140 | 4.9135 | |

| SwinIR [31] | 39.4013 | 0.9194 | 4.0586 | 0.9370 | 0.0127 | 4.3333 | |

| SSPSR [14] | 39.2844 | 0.9164 | 4.2673 | 0.9346 | 0.0130 | 4.4212 | |

| RFSR [27] | 39.4899 | 0.9211 | 3.8403 | 0.9379 | 0.0126 | 4.2967 | |

| Gelin [28] | 39.6387 | 0.9216 | 3.9231 | 0.9393 | 0.0125 | 4.2453 | |

| Ours | 39.9786 | 0.9261 | 3.5802 | 0.9442 | 0.0121 | 4.0958 |

Following the above settings, the eight non-overlapping images from the Houston dataset with the size of are cropped from the top region for testing. The remaining area is cropped into overlapping HR patches for training (10% of the training data is randomly selected for validation). Specifically, the spatial resolution of LR training patches is , and the sizes of corresponding HR patches for scale factors , , and are , , and , respectively.

In Table II, the quantitative results of all methods at different scale factors on the Houston dataset are shown. It can be observed that our CST method outperforms other methods for all metrics at different scale factors. Moreover, compared to the results on other datasets, our method shows greater improvements on the Houston dataset. This is attributed to the Transformer-based structure of our method, which requires a substantial amount of training data to enhance the capabilities of the model, and the Houston dataset offers more training samples. The results further substantiate the effectiveness of our method, and emphasize the significance of long-range spatial and spectral similarities in hyperspectral image SR.

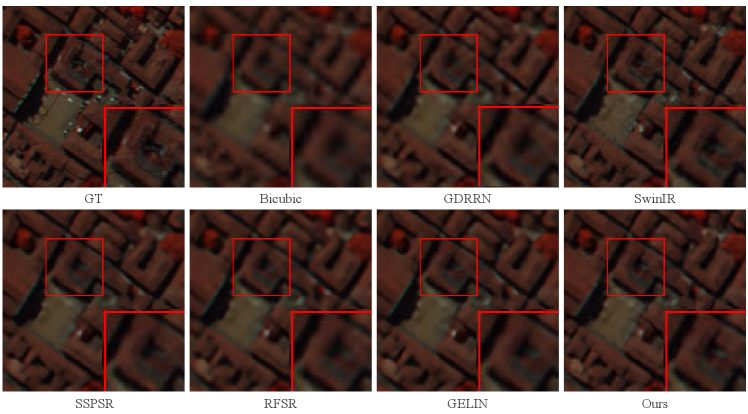

Similarly, as shown in Fig. 9, we also present the qualitative results of all methods at scale factor on the Houston dataset. Specifically, the 29th, 26th, and 19th bands of the test image are treated as the R–G–B channels for visual comparison. It can be observed that other methods inevitably produce many blurry edges and artificial artifacts, while our method is capable of recovering more low-frequency and high-frequency information in the SR hyperspectral images. Moreover, the enlarged view of the details in the red box presents the advantages of our method.

IV-E Experiments on the Pavia dataset

| Method | Scale | PSNR | SSIM | SAM | CC | RMSE | ERGAS |

|---|---|---|---|---|---|---|---|

| bicubic | 32.5489 | 0.9143 | 4.4086 | 0.9491 | 0.0242 | 4.0984 | |

| GDRRN [26] | 34.3982 | 0.9484 | 4.1618 | 0.9681 | 0.0182 | 3.1716 | |

| SwinIR [31] | 36.6070 | 0.9620 | 3.4767 | 0.9765 | 0.0151 | 2.6903 | |

| SSPSR [14] | 36.5800 | 0.9627 | 3.4717 | 0.9766 | 0.0152 | 2.6856 | |

| RFSR [27] | 35.7468 | 0.9564 | 3.6104 | 0.9724 | 0.0167 | 2.9409 | |

| Gelin [28] | - | - | - | - | - | - | |

| Ours | 37.3427 | 0.9677 | 3.2840 | 0.9795 | 0.0139 | 2.5000 | |

| Bicubic | 27.8623 | 0.7240 | 6.1325 | 0.8522 | 0.0424 | 6.8670 | |

| GDRRN [26] | 28.7845 | 0.7889 | 6.6320 | 0.8776 | 0.0377 | 6.2168 | |

| SwinIR [31] | 29.5134 | 0.8227 | 5.6553 | 0.8966 | 0.0346 | 5.7325 | |

| SSPSR [14] | 29.5355 | 0.8223 | 5.4462 | 0.8975 | 0.0346 | 5.7045 | |

| RFSR [27] | 29.4013 | 0.8152 | 5.5216 | 0.8938 | 0.0351 | 5.8014 | |

| Gelin [28] | 29.4854 | 0.8204 | 5.4265 | 0.8967 | 0.0348 | 5.7317 | |

| Ours | 29.7451 | 0.8311 | 5.3885 | 0.9021 | 0.0337 | 5.5829 | |

| bicubic | 24.9874 | 0.4744 | 7.6196 | 0.6892 | 0.0603 | 9.5021 | |

| GDRRN [26] | 25.1226 | 0.5030 | 8.3504 | 0.6931 | 0.0591 | 9.3617 | |

| SwinIR [31] | 25.3301 | 0.5229 | 7.9841 | 0.7102 | 0.0577 | 9.1353 | |

| SSPSR [14] | 25.5094 | 0.5410 | 7.4538 | 0.7242 | 0.0566 | 8.9497 | |

| RFSR [27] | 25.4987 | 0.5332 | 7.3518 | 0.7227 | 0.0565 | 8.9615 | |

| Gelin [28] | 25.4975 | 0.5418 | 7.3091 | 0.7234 | 0.0566 | 8.9651 | |

| Ours | 25.5692 | 0.5450 | 7.2958 | 0.7284 | 0.0561 | 8.8889 |

After removing the noise region without information, the size of the available part in the Pavia dataset is . For scale factors and , the four non-overlapping images with the size of are cropped from the left region for testing. Additionally, for the scale factor , the non-overlapping images with the size of are cropped from the left region for testing. The remaining area is cropped into overlapping HR images for training (10% of the training data is randomly selected for validation). Specifically, the spatial resolution of LR training patches is , and the sizes of corresponding HR patches for scale factors , , and are , , and , respectively.

In Table III, the quantitative results of all methods at different scale factors on the Houston dataset are shown. Compared to the other datasets, the Pavia dataset has a lower spatial resolution. Consequently, it is a challenge for our Transformer-based method CST due to the limited training samples. In addition, all methods perform poorly relatively on this dataset. Moreover, Nonetheless, our method still manages to outperform other methods across all metrics on this dataset. In summary, our method achieves competitive performance on the dataset with limited samples, which demonstrates the effectiveness of non-local spatial and spectral similarities.

As shown in Fig. 10, we visualize the qualitative results of all methods from the testing image at the scale factor ×4. Especially, the 100th, 30th, and 12th bands of the testing image are selected as the R–G–B channels for visual comparison. The observations for the previous dataset are also applicable to the Pavia dataset. Similarly, other methods generate unclear contours and textures, while our approach can restore more fine details. Based on the region in the red box, we can observe the superiority of our approach.

IV-F Ablation study

In this section, we provide the performance of different variants at the scale factor on Chikusei dataset to verify the effectiveness of our method. We calculate the model parameters and the floating point operations (Flops) of all methods when the size of input is .

IV-F1 Effectiveness of the proposed cross-scope self-attention

over the Chikusei testing dataset at scale factor 4

| Variant | Params.() | FLOPs() | PSNR | SSIM | SAM | CC | RMSE | ERGAS |

|---|---|---|---|---|---|---|---|---|

| w/o CSA | 8.992 | 19.135 | 40.1703 | 0.9420 | 2.3736 | 0.9547 | 0.0116 | 5.0560 |

| w/o CSE | 10.062 | 20.223 | 39.9529 | 0.9391 | 2.4104 | 0.9524 | 0.0119 | 5.1921 |

| CSA-CSA | 12.189 | 22.376 | 40.0703 | 0.9408 | 2.3742 | 0.9536 | 0.0118 | 5.1140 |

| CSE-CSE | 10.049 | 20.199 | 40.0298 | 0.9407 | 2.4209 | 0.9533 | 0.0118 | 5.1556 |

| CSA-CSE(Ours) | 11.119 | 21.287 | 40.2406 | 0.9431 | 2.3453 | 0.9554 | 0.0116 | 5.0123 |

In the proposed cross-scope multi-head self-attention, we devise a cross-scope spatial self-attention and a cross-scope spectral self-attention to model the spatial and spectral long-range dependencies separately. To verify the effectiveness of the proposed CST, CSA, and the structure of the cross-scope self-attention, we design various variants of CST. By removing CSA or CSE independently, we denote the variants as “Ours w/o CSA” or “Ours w/o CSE”. To investigate the impact of self-attention mechanisms in different dimensions, we employ two consecutive CSAs by replacing CSE with CSA in the variant and denote it as “Ours with 2CSA”. Similarly, another variant containing two consecutive CSEs is denoted as “Ours with 2CSE”. As shown in Table IV, when using a single CSA or CSE, the performance of the model significantly deteriorates. When using two consecutive CSAs, the PSNR decreases by dB compared to “w/o CSA”. The possible reason is the excessive redundancy in exploring spatial information, making it difficult for the model to learn effectively. Compared with the other variants, our model achieves the best performance, which demonstrates the higher efficiency of the proposed cross-scope multi-head self-attention.

over the Chikusei testing dataset at scale factor 4

| Variant | Params.() | FLOPs() | PSNR | SSIM | SAM | CC | RMSE | ERGAS |

|---|---|---|---|---|---|---|---|---|

| w/o spe | 12.170 | 22.352 | 40.2147 | 0.9427 | 2.3633 | 0.9551 | 0.0116 | 5.0321 |

| w/o spa | 11.133 | 21.311 | 40.1936 | 0.9425 | 2.3627 | 0.9550 | 0.0116 | 5.0481 |

| Ours | 11.119 | 21.287 | 40.2406 | 0.9431 | 2.3453 | 0.9554 | 0.0116 | 5.0123 |

| Window Size | Params.() | FLOPs() | PSNR | SSIM | SAM | CC | RMSE | ERGAS |

|---|---|---|---|---|---|---|---|---|

| (2,2) | 11.119 | 21.286 | 40.1888 | 0.9425 | 2.3719 | 0.9549 | 0.0116 | 5.0403 |

| (2,4) | 11.119 | 21.286 | 40.1374 | 0.9421 | 2.3883 | 0.9544 | 0.0117 | 5.0881 |

| (2,8) | 11.119 | 21.287 | 40.1756 | 0.9424 | 2.3596 | 0.9548 | 0.0116 | 5.0558 |

| (2,16) | 11.119 | 21.287 | 40.2406 | 0.9431 | 2.3453 | 0.9554 | 0.0116 | 5.0123 |

IV-F2 Effectiveness of the reduction strategy

As we analyze in Fig. 1 in the introduction, there is a significant amount of redundancy in both spatial and spectral dimensions, which can be a computational burden when calculating self-attention. Therefore, in CSA, we reduce the computation of features in both spatial and spectral dimensions. Additionally, we study the effect of this dimensionality reduction strategy in Table V. We can see that when not using dimensionality reduction in either spatial or spectral dimensions, the network’s computation and complexity increase significantly, and its performance does not improve but, in fact, slightly decreases. This demonstrates the effectiveness of our reduction strategy.

IV-F3 Analysis of the rectangle window in CSA

In order to investigate the impact of different sizes of rectangular windows in CSA, we conducted a large amount of experiments with various sizes of rectangular windows. Table VI presents the relationship between the window size and the experimental results. We can observe that as the spatial self-attention window size increases, our proposed network can capture more spatial information in larger regions, and both spatial and spectral metrics improve accordingly without extra computational cost. This demonstrates the importance of long-range spatial dependencies in hyperspectral image SR. Therefore, for better results, we adopt a rectangle window with the size of (2, 16) in this study.

IV-F4 Effectiveness of different losses

images of Chikusei dataset at the scale factor . Bold represents represents the best

| PSNR | SSIM | SAM | |||

|---|---|---|---|---|---|

| ✓ | 40.0777 | 0.9413 | 2.4751 | ||

| ✓ | ✓ | 40.1939 | 0.9425 | 2.3579 | |

| ✓ | ✓ | 40.1229 | 0.9418 | 2.4951 | |

| ✓ | ✓ | ✓ | 40.2406 | 0.9431 | 2.3453 |

We study the effectiveness of different losses in our experiments. The performance of different combinations of all losses is shown in Table VII. When considering only the loss, our method achieves the poorest results, especially in terms of PSNR and SSIM. By only incorporating the single spectral loss, our approach obtains the second-best results in both spatial and spectral metrics, indicating that leveraging spectral information can enhance performance and assist in the construction of HR hyperspectral image in spatial and spectral dimensions. Moreover, with only the gradient loss, our method improves the PSNR in terms of spatial dimensions while sacrificing the spectral accuracy. We can conclude that the gradient loss enhances the sharpness of high-resolution images, resulting in clearer details. When all loss functions are employed, our method achieves the best results, suggesting the effectiveness of using multiple loss functions in our approach. Under the constraints of the loss functions used in this paper, our method effectively explores global spectral similarity.

IV-F5 Analysis of the number of Transformer stages

| Number () | PSNR | SSIM | SAM |

|---|---|---|---|

| 40.1189 | 0.9416 | 2.3967 | |

| 40.2406 | 0.9431 | 2.3453 | |

| 40.2312 | 0.9430 | 2.3523 | |

| 40.2080 | 0.9428 | 2.3404 |

Our CST model consists of multiple consecutive Transformer stages, and here we investigate the impact of the number of Transformer stages on the experimental results in Table VIII. It can be observed that when using fewer modules (), our method produces the worst results. As we increase to 4 and 6, the quantitative metrics improve accordingly. However, when we further set to 8, the experimental results deteriorate. The main reason is that as the network depth increases, the Transformer architecture requires more data for training, and the network is prone to overfitting with poor generalization ability.

V Conclusion

In this study, we propose a new method CST to addresses the challenges in hyperspectral image super-resolution. In essence, CST primarily leverages the designed cross-scope spatial-spectral self-attention to effectively capture both long-range spatial and spectral dependencies. Through aggregating the global representative features with the rectangle window self-attention mechanism in the spatial dimension, the devised CSA efficiently explores the interactions between the local and global features in linear complexity. By introducing the reduction strategy in spectral self-attention, the designed CSE significantly integrates the comprehensive features and refined spatial-spectral features, which reduces the computational cost without sacrificing the SR performance. Moreover, we adopt a concise feed-forward neural network to further process the long-range spatial-spectral features, which can enhance the representational capacity and generalization of the network. Finally, we demonstrate the effectiveness and superiority of CST through extensive experiments on various datasets. CST consistently outperforms state-of-the-art methods quantitatively and visually, thanks to its ability to capture long-range spatial and spectral similarities.

References

- [1] R. O. Green, M. L. Eastwood, C. M. Sarture et al., “Imaging spectroscopy and the airborne visible/infrared imaging spectrometer (aviris),” Remote sens. Environ., vol. 65, no. 3, pp. 227–248, Sep. 1998.

- [2] L. Fang, Y. Jiang, Y. Yan, J. Yue, and Y. Deng, “Hyperspectral image instance segmentation using spectral-spatial feature pyramid network,” IEEE Trans. Geosci. Remote. Sens., vol. 61, pp. 1–13, Jan. 2023.

- [3] Y. Xu, L. Zhang, B. Du, and L. Zhang, “Hyperspectral anomaly detection based on machine learning: An overview,” IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens., vol. 15, pp. 3351–3364, Apr. 2022.

- [4] L. Gao, X. Sun, X. Sun, L. Zhuang, Q. Du, and B. Zhang, “Hyperspectral anomaly detection based on chessboard topology,” IEEE Trans. Geosci. Remote. Sens., vol. 61, pp. 1–16, Feb. 2023.

- [5] Y. Tan, L. Lu, L. Bruzzone, R. Guan, Z. Chang, and C. Yang, “Hyperspectral band selection for lithologic discrimination and geological mapping,” IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens., vol. 13, pp. 471–486, Jan. 2020.

- [6] G. Lu and B. Fei, “Medical hyperspectral imaging: a review,” J. Biomed. Opt., vol. 19, no. 1, pp. 010 901–010 901, Jan. 2014.

- [7] Z. Wang, J. Chen, and S. C. H. Hoi, “Deep learning for image super-resolution: A survey,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 43, no. 10, pp. 3365–3387, Oct. 2021.

- [8] R. Dian and S. Li, “Hyperspectral image super-resolution via subspace-based low tensor multi-rank regularization,” IEEE Trans. Image Process., vol. 28, no. 10, pp. 5135–5146, May 2019.

- [9] J. Li, D. Hong, L. Gao, J. Yao, K. Zheng, B. Zhang, and J. Chanussot, “Deep learning in multimodal remote sensing data fusion: A comprehensive review,” Int. J. Appl. Earth Obs. Geoinformation, vol. 112, p. 102926, Aug. 2022.

- [10] W. Dong, J. Qu, S. Xiao, T. Zhang, Y. Li, and X. Jia, “Noise prior knowledge informed bayesian inference network for hyperspectral super-resolution,” IEEE Trans. Image Process., vol. 32, pp. 3121–3135, May 2023.

- [11] S. Mei, X. Yuan, J. Ji, Y. Zhang, S. Wan, and Q. Du, “Hyperspectral image spatial super-resolution via 3d full convolutional neural network,” Remote. Sens., vol. 9, no. 11, p. 1139, Nov. 2017.

- [12] J. Hu, Y. Liu, X. Kang, and S. Fan, “Multilevel progressive network with nonlocal channel attention for hyperspectral image super-resolution,” IEEE Trans. Geosci. Remote. Sens., vol. 60, pp. 1–14, Dec. 2022.

- [13] K. Zheng, L. Gao, D. Hong, B. Zhang, and J. Chanussot, “Nonregsrnet: A nonrigid registration hyperspectral super-resolution network,” IEEE Trans. Geosci. Remote. Sens., vol. 60, pp. 1–16, Dec. 2022.

- [14] J. Jiang, H. Sun, X. Liu, and J. Ma, “Learning spatial-spectral prior for super-resolution of hyperspectral imagery,” IEEE Trans. Computational Imaging, vol. 6, pp. 1082–1096, May 2020.

- [15] K. Wang, X. Liao, J. Li, D. Meng, and Y. Wang, “Hyperspectral image super-resolution via knowledge-driven deep unrolling and transformer embedded convolutional recurrent neural network,” IEEE Trans. Image Process., vol. 32, pp. 4581–4594, Jul. 2023.

- [16] R. Dian, L. Fang, and S. Li, “Hyperspectral image super-resolution via non-local sparse tensor factorization,” in IEEE Conf. Comput. Vis. Pattern Recognit. CVPR), Jul. 2017, pp. 3862–3871.

- [17] L. Fang, H. Zhuo, and S. Li, “Super-resolution of hyperspectral image via superpixel-based sparse representation,” Neurocomputing, vol. 273, pp. 171–177, Aug. 2018.

- [18] Y. Xu, Z. Wu, J. Chanussot, and Z. Wei, “Nonlocal patch tensor sparse representation for hyperspectral image super-resolution,” IEEE Trans. Image Process., vol. 28, no. 6, pp. 3034–3047, Jun. 2019.

- [19] L. Gao, D. Hong, J. Yao, B. Zhang, P. Gamba, and J. Chanussot, “Spectral superresolution of multispectral imagery with joint sparse and low-rank learning,” IEEE Trans. Geosci. Remote. Sens., vol. 59, no. 3, pp. 2269–2280, Mar. 2021.

- [20] B. Lim, S. Son, H. Kim, S. Nah, and K. M. Lee, “Enhanced deep residual networks for single image super-resolution,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), Jul. 2017, pp. 1132–1140.

- [21] Y. Zhang, Y. Tian, Y. Kong, B. Zhong, and Y. Fu, “Residual dense network for image super-resolution,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2018, pp. 2472–2481.

- [22] Y. Zhang, K. Li, K. Li, L. Wang, B. Zhong, and Y. Fu, “Image super-resolution using very deep residual channel attention networks,” in Proc. Eur. Conf. Comput. Vis. (ECCV), vol. 11211, Sep. 2018, pp. 294–310.

- [23] D. Liu, J. Li, and Q. Yuan, “A spectral grouping and attention-driven residual dense network for hyperspectral image super-resolution,” IEEE Trans. Geosci. Remote. Sens., vol. 59, no. 9, pp. 7711–7725, Sep. 2021.

- [24] Q. Li, Q. Wang, and X. Li, “Mixed 2d/3d convolutional network for hyperspectral image super-resolution,” Remote. Sens., vol. 12, no. 10, p. 1660, May 2020.

- [25] Q. Wang, Q. Li, and X. Li, “Hyperspectral image superresolution using spectrum and feature context,” IEEE Trans. Ind. Electron., vol. 68, no. 11, pp. 11 276–11 285, Nov. 2021.

- [26] Y. Li, L. Zhang, C. Ding, W. Wei, and Y. Zhang, “Single hyperspectral image super-resolution with grouped deep recursive residual network,” in Proc. IEEE Int. Conf. Multimedia Big Data (BigMM), Sep. 2018, pp. 1–4.

- [27] X. Wang, J. Ma, and J. Jiang, “Hyperspectral image super-resolution via recurrent feedback embedding and spatial-spectral consistency regularization,” IEEE Trans. Geosci. Remote. Sens., vol. 60, pp. 1–13, Mar. 2022.

- [28] X. Wang, Q. Hu, J. Jiang, and J. Ma, “A group-based embedding learning and integration network for hyperspectral image super-resolution,” IEEE Trans. Geosci. Remote. Sens., vol. 60, pp. 1–16, Oct. 2022.

- [29] D. Hong, Z. Han, J. Yao, L. Gao, B. Zhang, A. Plaza, and J. Chanussot, “Spectralformer: Rethinking hyperspectral image classification with transformers,” IEEE Trans. Geosci. Remote. Sens., vol. 60, pp. 1–15, Nov. 2022.

- [30] M. Li, J. Liu, Y. Fu, Y. Zhang, and D. Dou, “Spectral enhanced rectangle transformer for hyperspectral image denoising,” in IEEE/CVF Conf. Comput. Vis. Pattern Recognit. CVPR). IEEE, Jun. 2023, pp. 5805–5814.

- [31] J. Liang, J. Cao, G. Sun, K. Zhang, L. V. Gool, and R. Timofte, “Swinir: Image restoration using swin transformer,” in Proc. IEEE/CVF Int. Conf. Comput. Vis. Workshops (ICCVW), Oct. 2021, pp. 1833–1844.

- [32] S. W. Zamir, A. Arora, S. Khan, M. Hayat, F. S. Khan, and M. Yang, “Restormer: Efficient transformer for high-resolution image restoration,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2022, pp. 5718–5729.

- [33] Y. Liu, J. Hu, X. Kang, J. Luo, and S. Fan, “Interactformer: Interactive transformer and CNN for hyperspectral image super-resolution,” IEEE Trans. Geosci. Remote. Sens., vol. 60, pp. 1–15, Jun. 2022.

- [34] L. Zhang, L. Song, B. Du, and Y. Zhang, “Nonlocal low-rank tensor completion for visual data,” IEEE Trans. Cybern., vol. 51, no. 2, pp. 673–685, Feb. 2021.

- [35] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” in Proc. IEEE/CVF Int. Conf. Comput. Vis. (ICCV), Oct. 2021, pp. 9992–10 002.

- [36] Z. Chen, Y. Zhang, J. Gu, Y. Zhang, L. Kong, and X. Yuan, “Cross aggregation transformer for image restoration,” in Advances in Neural lnf. Process. Syst. (NeurIPS), Jun. 2022.

- [37] T. Dai, J. Cai, Y. Zhang, S. Xia, and L. Zhang, “Second-order attention network for single image super-resolution,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2019, pp. 11 065–11 074.

- [38] Y. Yuan, X. Zheng, and X. Lu, “Hyperspectral image superresolution by transfer learning,” IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens., vol. 10, no. 5, pp. 1963–1974, May 2017.

- [39] J. Li, R. Cui, B. Li, R. Song, Y. Li, and Q. Du, “Hyperspectral image super-resolution with 1d-2d attentional convolutional neural network,” Remote. Sens., vol. 11, no. 23, p. 2859, Dec. 2019.

- [40] Q. Li, Q. Wang, and X. Li, “Exploring the relationship between 2d/3d convolution for hyperspectral image super-resolution,” IEEE Trans. Geosci. Remote. Sens., vol. 59, no. 10, pp. 8693–8703, Jan. 2021.

- [41] Q. Li, Y. Yuan, X. Jia, and Q. Wang, “Dual-stage approach toward hyperspectral image super-resolution,” IEEE Trans. Image Process., vol. 31, pp. 7252–7263, Nov. 2022.

- [42] Y. Mei, Y. Fan, Y. Zhou, L. Huang, T. S. Huang, and H. Shi, “Image super-resolution with cross-scale non-local attention and exhaustive self-exemplars mining,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2020, pp. 5689–5698.

- [43] H. Chen, Y. Wang, T. Guo, C. Xu, Y. Deng, Z. Liu, S. Ma, C. Xu, C. Xu, and W. Gao, “Pre-trained image processing transformer,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2021, pp. 12 299–12 310.

- [44] Y. Wu, R. Cao, Y. Hu, J. Wang, and K. Li, “Combining global receptive field and spatial spectral information for single-image hyperspectral super-resolution,” Neurocomputing, vol. 542, p. 126277, Apr. 2023.

- [45] N. Yokoya and A. Iwasaki, “Airborne hyperspectral data over chikusei,” Space Appl. Lab., Univ. Tokyo, Tokyo, Japan, Tech. Rep. SAL-2016-05-27, May 2016.

- [46] X. Huang and L. Zhang, “A comparative study of spatial approaches for urban mapping using hyperspectral rosis images over pavia city, northern italy,” Int. J. Remote Sens., vol. 30, no. 12, pp. 3205–3221, Mar. 2009.

- [47] W. Shi, J. Caballero, F. Huszar, J. Totz, A. P. Aitken, R. Bishop, D. Rueckert, and Z. Wang, “Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2016, pp. 1874–1883.