Cutting Sequence Diffuser: Sim-to-Real Transferable Planning

for Object Shaping by Grinding

Abstract

Automating object shaping by grinding with a robot is a crucial industrial process that involves removing material with a rotating grinding belt. This process generates removal resistance depending on such process conditions as material type, removal volume, and robot grinding posture, all of which complicate the analytical modeling of shape transitions. Additionally, a data-driven approach based on real-world data is challenging due to high data collection costs and the irreversible nature of the process. This paper proposes a Cutting Sequence Diffuser (CSD) for object shaping by grinding. The CSD, which only requires simple simulation data for model learning, offers an efficient way to plan long-horizon action sequences transferable to the real world. Our method designs a smooth action space with constrained small removal volumes to suppress the complexity of the shape transitions caused by removal resistance, thus reducing the reality gap in simulations. Moreover, by using a diffusion model to generate long-horizon action sequences, our approach reduces the planning time and allows for grinding the target shape while adhering to the constraints of a small removal volume per step. Through evaluations in both simulation and real robot experiments, we confirmed that our CSD was effective for grinding to different materials and various target shapes in a short time.

Index Terms:

Model Learning for Control, Manipulation Planning, Machine Learning for Robot ControlI INTRODUCTION

Object-shape manipulation is an important skill in industry and our daily lives [1]. A shaping method that gradually removes unnecessary material from a base stock is called a removal process. As a common industrial removal process, this paper focuses on the grinding process in which a rotating grinding belt removes material and explores the automation of this process with a robot.

The grinding process generates removal resistance depending on the process conditions (e.g., material type, removal volume, robot grinding posture, etc.). Such removal resistance drags the robot’s end-effector and leads to shaping errors, complicating the analytical modeling of the objects’ shape transitions. Therefore, modeling shape transitions with a data-driven approach using real-world data may be reasonable. In particular, object-shaping by Model-Based Reinforcement Learning (MBRL) has shown its effectiveness by iteratively optimizing both shape transition models by learning and action plans using Model Predictive Control (MPC) [2]. However, due to the irreversible nature of the removal process, the high cost of data collection remains a severe issue, emphasizing the importance of addressing this problem from a practical standpoint.

This study utilizes simulations to explore an action-planning method that requires no data collection in the real world. Ignoring the removal resistance, the shape transition of objects due to grinding can be viewed as a geometric splitting of the shape with a cutting surface (belt) and simulated by algebraic operations. However, removal resistance creates a reality gap between the real world and simulations.

The grinding resistance theory [3], which states that removal resistance is proportional to the removal volume of one step, suggests that reducing the removal volume in the grinding action decreases resistance and thus the reality gap. This approach should enable the direct transfer of simulated action sequences to the real world. However, such an approach lengthens task steps and increases computational costs, necessitating a long-horizon action sequence planner. Recent generative models, such as diffusion models [4, 5, 6], have shown the ability to learn long-horizon trajectory distributions [7, 8]. This suggests that sampling from these distributions could serve as an effective long-horizon action sequence planner.

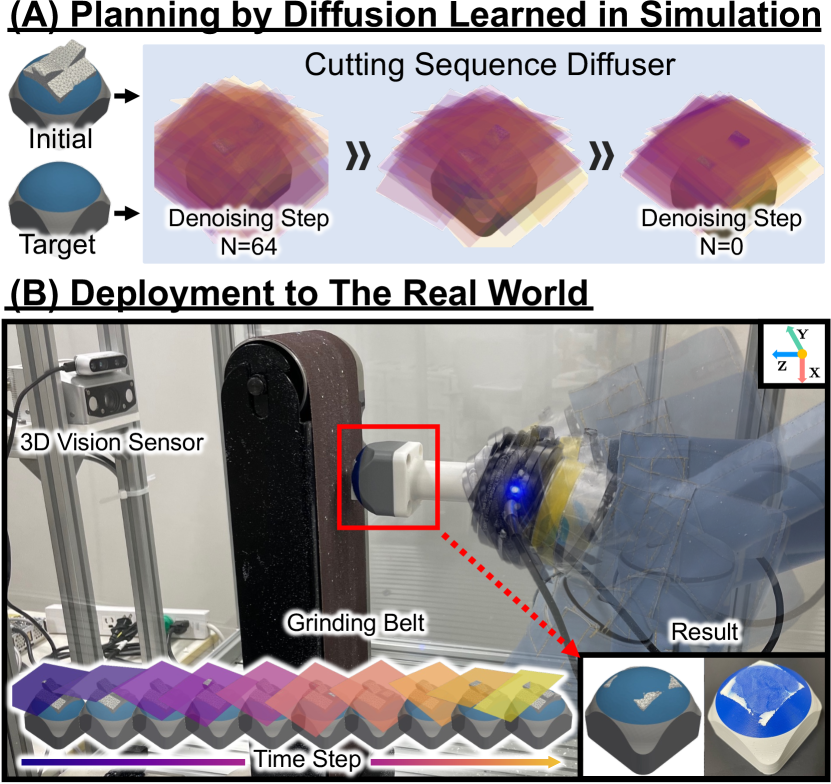

With the above in mind, we propose a Cutting Sequence Diffuser (CSD) for object shaping by grinding (Figure1). Our method constrains the robot’s action with a small removal volume, which reduces removal resistance to simplify the shape transition as a geometric cutting model. This transition model is then applied to data collection for an action sequence planner by a diffusion model. Diffusion models can represent flexible distributions because data distributions are learned by gradually removing noise from Gaussian noise [5]. Therefore, sampling from a distribution conditioned on the cost of the target behavior (trajectory) enables long-horizon action sequences to be planned quickly, which has been difficult to accomplish with conventional optimization-based action planning methods [7, 8].

We evaluate the proposed method in both simulation and real robot experiments. These results indicate that our method enables a sim-to-real transfer due to action constraints that reduce the removal resistance. Moreover, an action plan by a diffusion model quickly provides flexible long-horizon action sequences for different materials and various target shapes.

The following are the main contributions of this paper:

-

•

We propose a sim-to-real transferable shape transition model that constrains a robot’s action with a small removal volume to reduce removal resistance.

-

•

We propose a novel cutting sequence planning framework, CSD, which utilizes a diffusion model for object shaping by grinding.

-

•

We validate that CSD enables direct transfer of the planned action sequence to the real world through simulation and real robot experiments.

II RELATED WORK

II-A Learning to Plan for Object-shape Manipulation

Many studies have been conducted on object-shaping tasks with robots. The reinforcement learning approach is practical because there is no risk of injury or physical burden on the human demonstrators compared to imitation learning which uses demonstration data. The deformation process is a typical method of shaping objects by bending or pressing as if forming dough. Since this process is reversible and can revert to its original shape, data collection costs are low. Some studies have been conducted using collected data from the real world. Matl et al. automated a dough-shaping task using MBRL [9]. In addition, since the deformation process does not generate removal resistance, learned policies can be easily transferred on a simulator to the real world [10, 11].

In contrast, the removal process is irreversible and cannot revert to its original shape, which causes high data collection costs. For the grinding process, CSA-MBRL [2] has been proposed to plan actions by learning a shape transition model that considers removal resistance using real data. The finite element or particle methods are used as simulators for removal processes but still suffer from a reality gap due to removal resistance. Another method has been proposed to identify the dynamics parameters of a simulator using real-world data [12]. Heiden et al. proposed a cutting simulator to replicate the force applied to a knife when cutting ingredients using real-world data [13]. However, such methods only identify the dynamics parameters of the simulator and do not plan robot actions for object shaping.

Thus, the automation of removal processes has been limited to approaches that either learn from real data or identify simulator parameters. In contrast, the novelty of our proposed method is its ability to transfer the planned actions in a simulator to the real world. In other words, our method does not require real data, unlike CSA-MBRL.

II-B Robot Control with Diffusion Models

Diffusion models [4, 5, 6] are a type of generative model known for their high expressiveness and flexibility. They generate data through a denoising process and can conduct conditional sampling via guide (cost) functions. This capability allows for various applications, such as text-conditioned image generation [6] and video editing [14].

As examples of applying diffusion models for robots, Mishra et al. proposed a task and motion planning method that combines individual task skills [15]. In the context of action (motion) planning, Janner et al. proposed a diffuser [8]. They show that long-horizon trajectories conditioned on such guide functions as rewards can be planned using a diffusion model. In addition, Chi et al. proposed a visuomotor policy that plans robot actions from image inputs using a diffusion model [16, 17]. Learned policy has been validated across 11 tasks that require multi-modal action. Diffusion models have also been applied to contact-rich manipulation tasks such as wiping [18]. In another study, Urain et al. proposed a SE(3) diffusion model for 6-DoF grasping that optimizes a robot’s motion and grasping posture [19].

Our method follows the features of flexible planning for long-horizon action sequences in a short time compared to conventional optimization-based action planning methods. Consequently, we propose a novel object-shaping method that is the first to apply a diffusion model as an action planner for the grinding process. Furthermore, through long-horizon action sequence planning using a diffusion model conditioned by guide (cost) functions, our method enables shaping different materials and various target shapes.

III Preliminary

In preparation for the proposed method, §III-A describes shape transition modeling using a cutting surface, which can be constructed by constraining a robot’s action to a small removal volume. Additionally, we formulate the action planning problem. §III-B, introduces a trajectory generation (action planning) method using a diffusion model [7, 8].

III-A Object Shaping Planning for Grinding

III-A1 Shape Transition Modeling by Cutting Surface

The grinding process is performed by local surface contact between the tool surface (belt) and the object shape. Ignoring removal resistance, shape transition can be viewed as a geometric splitting by the cutting surface (Figure2, top). Here, the robot’s action is equivalent to specifying the cutting surface. This step allows us to represent the shape transition model as a Geometric-Cutting Model (GCM), where cutting surface (robot action) geometrically splits current shape into next time-step shape and removal shape . The shape transition by the cutting surface is defined as the following functions [2]:

| (1) |

These functions split the shape using geometric collision detection, simplifying their implementation. Figure2 (bottom) shows shape deformation by cutting surface .

In the actual grinding process, removal resistance is generated depending on the process conditions. The removal resistance generated by grinding is called grinding resistance , which is basically proportional to removal volume and inversely proportional to belt rotation speed , [3]. Here is a coefficient, derived from the material type and the belt’s grinding performance. If the belt rotation speed is constant, the grinding resistance is proportional to the removal volume. Thus, a cutting action with a large removal volume increases the grinding resistance and the actual shape transition contradicts the GCM.

III-A2 Cutting Sequence Optimization Problem

Given a shape transition model, we denote trajectory and cost function , optimal robot action sequences can be formulated as follows:

| (2) |

Here is the planning horizon and is the task index. Deriving action sequences that satisfy Eq. 2 using conventional optimization-based action planning methods (e.g., random shooting method [20]), significantly increases the computational cost depending on the planning horizon. Hence, long-horizon action planning is time-consuming [7, 8].

III-B Trajectory Generation with Diffusion Models

III-B1 Denoising Diffusion Probabilistic Models

Our framework generates trajectory using diffusion models. They consist of a forward diffusion process that transforms the trajectory from data distribution into Gaussian noise and a denoising (inverse) process that transforms the Gaussian noise back to data distribution. Given that original data and denote the data after adding times noise, the forward diffusion process is defined: where is a noise scheduler that controls the noise scale.

The denoising process is parametrized as a Gaussian form, and the mean and covariance are defined by a model with parameter which takes noise data and diffusion steps as input:

| (3) | ||||

| (4) | ||||

| (5) |

Covariance denotes and independent of parameter . Where , , and . In the learning process, instead of directly optimizing , noise added to data , can be learned by simplified loss function , since can be represented by as [5].

III-B2 Diffusion Models with Guided Sampling

By introducing, a binary variable to indicate the trajectory as optimal by and non-optimal by , and using the Control as Inference, trajectory optimization can be formulated as a stochastic inference problem that samples the optimal trajectory from distribution by Here is a prior distribution of the trajectory, and is the likelihood for the optimality of the trajectory. As a common assumption, since belongs to an exponential family, we can arbitrarily write .

The posterior distribution of the denoising process can be expressed in Gaussian form for each denoising process . Therefore, an approximate formulation is obtained as follows [4, 6]:

| (6) |

where and are given by Eq. 4: . is derived from the gradient of the cost function:

| (7) |

Thus, by shifting mean in the denoising process based on the gradient of a cost function designed for the task (e.g., state constraints, trajectory smoothness), we can generate trajectories guided by the cost function.

In addition, diffusion models are trained for the accuracy of the generated trajectories rather than single-step errors. Hence, they can quickly plan long-horizon action sequences without suffering from the significant increase in computational cost that is common in conventional optimization-based action planning methods [7, 8].

IV Proposed Method

This section explains our planning method for cutting sequences using a diffusion model. §IV-A shows the framework of our proposed method, CSD. The following sections describe the components of the framework: §IV-B describes the action constraints used for data collection, §IV-C describes a method for reducing high-dimensional shape information to lower dimensions, and §IV-D describes the design and usage of the cost functions for guides during trajectory generation.

IV-A Cutting Sequence Diffuser Framework

The proposed method consists of the following three major phases (Figure3): 1) Data Collection: We collect episodes of random state action sequences constrained by a small removal volume. Data collection is conducted by a simulator that implements a geometric cutting model. The details of the action constraints are described in §IV-B. 2) Cutting Sequence Diffuser Training: A diffusion model is trained to generate trajectories with planning horizon using the collected data. During the training, high-dimensional shapes are reduced by a variational auto-encoder, as described in §IV-C. 3) Deployment: The planned cutting sequences are integrated with closed-loop control to ensure robust action execution, similar to MPC. CSD plans -horizon cutting sequences based on the current observed shape, the cost function, and the two-step guidance described in §IV-D. Then, -step actions from the planned cutting sequence are executed as control inputs for the robot. By repeating this process until task horizon , object-shaping can be achieved by grinding with a diffusion model.

IV-B Sim-to-real Transferable Data Collection

A geometric cutting model is only applicable when the removal volume in one step is sufficiently small. Therefore, random state action sequences for diffusion model learning are collected under the following constraints:

| sample | ||||

| subject to | (8a) | |||

| (8b) | ||||

Where returns 0 if no removal volume exists; otherwise it returns the removal volume. is the upper bound of the removal volume at one step. Eq. 8b constrains the cutting action that overcuts reference shape to improve the efficiency of data collection. is the volume threshold that overcuts the reference shape.

IV-C Latent Encoding of Shape States

In grinding processes, shape representations such as point clouds and depth images used as states are high-dimensional. Directly using such high-dimensional states increases the training costs of a diffusion model. Therefore, a Variational Auto-Encoder (VAE) with parameters is used to compress state into a latent feature . Where is the dimension of the latent features. Thus, trajectory used in a diffusion model is defined as . In our experiments shown below, we employed point cloud as the state, where is the number of points and is the -th particle position.

IV-D Guided Sampling for Cutting Sequence Generation

IV-D1 Cost Design

This section describes the types of cost functions used as guides for trajectory generation by a diffusion model. Total cost for to is calculated by summing each cost: , . Where are the weights of each cost.

State constrain cost: This cost generates a trajectory that satisfies the state constraint , such as current shape and target shape . Observed state distributions in diffusion models are represented by a Dirac delta function. Hence, state constraint cost is expressed as follows:

| (9) |

In the implementation, if satisfies the state constraint, we directly replace with a state constraint value (e.g., or ) in all the denoising processes, as in [8].

Over-cutting cost: Since the grinding process is irreversible, over-cutting target shape is undesirable. Therefore, we introduce over-cutting cost :

| (10) |

Cut volume limit cost: This cost constrains the removal volume at one step. If removal volume exceeds , the following cost is imposed:

| (11) |

Action smoothness cost: To suppress the vibrations of the robot’s end-effector and promote the planning of smooth cutting sequences, we constrain the travel length of the cutting surface as follows:

| (12) |

Where is the -th action value at time step and is the upper bound of the travel length for each action dimension. The variable indicates the action index.

In the general grinding process, overcutting the target shape and exceeding the volume limit that can be removed in one step is fatal. Therefore, it would be preferable to set the weights to ensure that the and costs are dominant.

IV-D2 Trajectory Generation with Two-step Guide

The proposed method increases task horizon by constraining the removal volume to reduce the reality gap. Therefore, as shown in §IV-A, the task horizon is divided into practical planning horizon lengths . However, the grinding process is a goal-conditioned trajectory planning problem where only current shape and final target shape can be used as state constraints. Thus, if we naively set the final target shape as the end of planning horizon constraint , CSD tries to achieve object shaping, which normally requires steps, within a planning horizon . This typically occurs when the planning horizon is shorter than the task horizon (i.e., ). Hence, such an approach can generate cutting sequences that remove a large volume at once, potentially causing significant removal resistance.

To address this issue, we execute trajectory planning with two-step guidance. Initially, at , we set planning horizon equal to task horizon and generate a trajectory for the entire task horizon to obtain the reference shape states for each time step. Next, we plan the -horizon trajectory by referring to the obtained shape as the state constraint at the end of the planning horizon, . Thus, we use the shape state obtained by planning the trajectory with the entire task horizon as the state constraint at the end of the planning horizon. This approach allows for the suppression of cutting actions with large removal volumes at once. As a result, it may reduce removal resistance and promote smooth trajectory generation.

V Robotic Rough-Grinding Environments

Figure 1 shows our robotic rough-grinding system. A grinding belt is fixed in the environment, and the object is mounted on the 6-DoF robot’s end-effector. The robot moves to the front of the 3D vision sensor and captures a point cloud as the object’s shape. Robot action manipulates the coordinates of the object around the work origin, which is an arbitrary distance from the grinding belt. It is equivalent to specifying the cutting surface of the object. The 2-DoF of the parallel translation and the 1-DoF rotation around a perpendicular axis can be ignored because the grinding belt is sufficiently wide. As a result, the robot’s action becomes .

Figure 4 shows the initial and target shapes used for the experiments, all of which were fabricated by a 3D printer. The gray part is the base, the blue part is the target shape, and the white part is the shape to be removed by grinding. We prepared white parts printed with Acrylonitrile Styrene Acrylate (ASA) filament and PolyCarbonate (PC) filament, the latter of which is a harder material than ASA.

| Shape A with ASA | Shape A with PC | Shape B with ASA | Shape C with ASA | |||||

| Method | Shape Error | Planning Time [s] | Shape Error | Planning Time [s] | Shape Error | Planning Time [s] | Shape Error | Planning Time [s] |

| Const-RS | 1.42 | 691.82 | 1.42 | 691.73 | 1.89 | 435.41 | 1.60 | 289.75 |

| CSA-MBRL | 0.96 | 140.21 | 1.07 | 140.94 | 1.52 | 93.89 | 1.40 | 70.87 |

| CSD (Ours) | 0.94 | 46.98 | 0.95 | 47.55 | 1.31 | 39.39 | 1.18 | 28.17 |

| Shape A with ASA | Shape A with PC | |||||||

| Method | Shape Error | Over-cutting | Action Smoothness | Cut Volume Limit | Shape Error | Over-cutting | Action Smoothness | Cut Volume Limit |

| CSD w/o Guide | 0.97 | 16.17 | 1.52 | 0.09 | 0.98 | 20.17 | 1.51 | 0.10 |

| CSD w/ Guide | 0.94 | 5.67 | 0.76 | 0.05 | 0.95 | 3.83 | 0.64 | 0.04 |

| Cut Volume Limit | ||

| Method | Shape A with ASA | Shape A with PC |

| CSD w/ One-step Guide | 0.074 | 0.077 |

| CSD w/ Two-step Guide | 0.051 | 0.044 |

| Shape A with ASA | ||

| Planning Method | Shape Error | Planning Time [s] |

| CVAE | 1.08 | 23.37 |

| Diffusion | 0.94 | 46.98 |

VI SIMULATION EXPERIMENT

In our simulation experiment, we evaluated the proposed method with an environment that implemented a virtual grinding resistance as a proxy for the real world. This experiment had four objectives. First, we compared the shape error after the processing and the action planning time to the baseline methods. Second, we compared the action planner used in the proposed method. Third, we investigated its applicability for environments with different grinding resistances and different initial and target shapes. Fourth, we evaluated the effectiveness of the guided trajectory generation with a designed cost function. Additional experiments and parameter settings are available on our project page: https://t-hachimine.github.io/csd/.

VI-A Simulation Environments

We used Open3D [21] and Pyvista [22] for the rendering. The initial and target shapes were created by CAD and converted to the point cloud. We implemented a virtual grinding resistance model, depending on the robot’s action and the removal volume. In the simulation experiment, the shape deformation due to the grinding resistance was reproduced as the deviation of the cutting surface. We prepared two virtual grinding resistance models (ASA and PC) to reproduce different grinding resistances with the material. The removal volume was calculated by the sum of the Euclidean distance between the removal shape and the cutting surface divided by the number of removal shape point clouds.

VI-B Baseline Methods

Const-RS: This method samples action with a small removal volume similar to the proposed method and executes action planning by MPC. We used a random shooting method [20] to optimize the cost function of MPC. CSA-MBRL: The MBRL method learns a shape transition model by considering the grinding resistance using real data and executes action planning by MPC [2]. CVAE: This method uses Conditional VAE (CVAE) [7, 23] as an action planner instead of a diffusion model. The encoder and decoder networks used the same structure as a diffusion model. During training, the conditions were set as the start and end states of the planning horizon. At the planning time, trajectories were conditioned by the current and target states. Finally, action sequences were derived by optimizing the likelihood against other costs using the gradient descent method.

VI-C Data Collection and Action Planning Settings

We randomly sampled actions with and did not overcut the target shape of Shape A as a reference shape by more than . We prepared 9K trajectories with 251 steps per episode. Shape state was compressed into latent features by a VAE with a PointNet structure [24]. Following the same learning procedure as in [7, 8], we calculated the training loss of a 2D-Unet diffusion model using . Finally, we trained it in 2M steps with batch size , , and diffusion step .

| Shape A with ASA | Shape A with PC | Shape B with ASA | Shape C with ASA | |||||

| Method | Shape Error | Planning Time [s] | Shape Error | Planning Time [s] | Shape Error | Planning Time [s] | Shape Error | Planning Time [s] |

| Const-RS | 3.05 | 1087.00 | 2.58 | 1090.55 | 1.90 | 900.00 | 2.00 | 662.28 |

| CSA-MBRL | 1.40 | 89.13 | 1.68 | 87.48 | 1.54 | 90.33 | 1.52 | 68.02 |

| CSD (Ours) | 1.28 | 49.61 | 1.27 | 45.87 | 1.21 | 35.46 | 1.16 | 28.63 |

| Shape A with ASA | Shape A with PC | |||||||

| Method | Shape Error | Over-cutting | Action Smoothness | Cut Volume Limit | Shape Error | Over-cutting | Action Smoothness | Cut Volume Limit |

| CSD w/o Guide | 1.52 | 20.00 | 1.42 | 0.11 | 2.46 | 26.00 | 1.31 | 0.12 |

| CSD w/ Guide | 1.28 | 4.00 | 0.62 | 0.05 | 1.27 | 4.00 | 0.73 | 0.05 |

| Cut Volume Limit | ||

| Method | Shape A with ASA | Shape A with PC |

| CSD w/ One-step Guide | 0.094 | 0.086 |

| CSD w/ Two-step Guide | 0.046 | 0.048 |

VI-D Simulation Results

VI-D1 Evaluation with Baseline Methods

Figure 5 (a) shows an example of the shape after processing Shape A with ASA. TABLE I compares the shape error and the action planning time for six trials of each method. In the following experiment, we used Chamfer discrepancy [25] for the shape error calculation:

| (13) |

where and are the positions of the individual points in the point clouds. This metric evaluates the error using the Euclidean distance between the nearest neighbor points of two point clouds. It indicates that the shape error is rarely zero unless the two point clouds are perfectly aligned. Const-RS and CSD were performed with task steps, a planning horizon of , and control inputs. CSA-MBRL was performed with , , and . CSA-MBRL defines a single-step action as follows: 1) Transitioning from the work origin to the target cutting surface, 2) Holding the target cutting surface for a specific duration, and 3) Returning to the work origin. This definition allows each step to cover a larger range of tasks, leading to shorter , , and . Additionally, , , and were set based on [2] to maintain consistency with prior experiments and ensure comparability. These results show that our proposed method can quickly plan long-horizon action sequences and achieve comparable shape error as CSA-MBRL, which learns the shape transition model from real data.

VI-D2 Comparison of Action Planning Methods within the Proposed Method

TABLE IV compares the action planner within the proposed method. The former with CVAE had a shorter planning time than the diffusion model. However, it had larger shape error, indicating the suitability of employing a diffusion model for the proposed method, where grinding accuracy is important even if action planning takes some time.

VI-D3 Applicability of Proposed Method

Figures 5 (b)-(d) and TABLE I show the results of grinding Shape A with PC and Shapes B and C with ASA. These results indicate that the proposed method can be applied to environments with different grinding resistances and different initial and target shapes by combining actions with a small removal volume through flexible trajectory generation using a diffusion model.

VI-D4 Effects of Guided Diffusion

TABLE II compares the task performances with/without a guide. Four evaluation metrics were used for the task performance. Over-cutting is the number of times the target shape was excessively cut. The cut volume limit is obtained by dividing the cumulative sum of the volumes that exceed the removal volume threshold by the number of task steps. Refer to Eqs. 12–13 concerning shape error and action smoothness. These results show that a guided trajectory generation with a cost function improves each evaluation metric.

TABLE III compares the cut volume limit with/without the two-step guide. Figure6 shows the transition of the cumulative sum of the volumes that exceeded the cut volume limit. In the case of a one-step guide, planning actions exceeded the cut volume limits around the initial step. However, the two-step guide suppressed the excesses of the cut volume limits.

VII REAL ROBOT EXPERIMENT

We evaluated the proposed method in a real robot environment because the grinding resistance was basically proportional to the removed volume, however, in the actual grinding process, it was also generated from other effects (e.g., friction heat). In the robot experiment, cutting sequences were generated by a diffusion model trained with the same settings as the simulation experiment. The robot followed each linearly interpolated action (cutting surface) at a constant velocity by position control. The shape coordinate error between the simulation and the real world was calibrated in advance.

VII-A Results

VII-A1 Evaluation with Baseline Methods

Figure 7 (a) shows an example of the shape after processing Shape A with ASA. TABLE V compares the shape error and the action planning time for three trials of each method. Const-RS and CSD were performed with task steps, a planning horizon of , and control inputs. CSA-MBRL was performed with , , and . CSA-MBRL observed the shape every two steps to reduce the task execution time. The shape observation in the real robot experiments required the robot to be moved in front of the 3D vision sensor (Figure1) and to take multiple measurements to obtain an accurate shape, which was time-consuming. Therefore, the predicted shape (one step ahead) was used as the observed shape where no shape observation was performed, and the action was planned again. These results show that, similar to the simulation results, the proposed method can quickly plan long-horizon action sequences and achieve comparable shape error as CSA-MBRL, which learns the shape transition model from real data.

Figure 8 compares the observations, action planning, and action execution times required to process Shape A with ASA. Const-RS required longer time to optimize a long-horizon action. CSA-MBRL, required frequent shape observations to reduce the shape error. In contrast, the proposed method required less task execution time due to its ability to quickly plan long-horizon action sequences.

VII-A2 Applicability of Proposed Method

Figures 7 (b)-(d) and TABLE V show the results of grinding Shape A with PC and Shapes B and C with ASA. The proposed method can be applied to different materials and different initial and target shapes by combining actions with a small removal volume through flexible trajectory generation using a diffusion model.

VII-A3 Effects of Guided Diffusion

TABLE VI compares the task performances with/without a guide. These results show that a guided trajectory generation with a cost function improves each evaluation metric. Additionally, TABLE VII compares the cut volume limit with/without the two-step guide. We confirmed that it suppressed the excesses of the cut volume limits.

VIII DISCUSSION

The evaluation of this study focused on applicability to different materials and initial and target shapes. Therefore, investigating the applicability of different belt rotation speeds and abrasive grain types is interesting future work. Since the proposed method reduced removal resistance by constraining the removal volume, it may be adaptable by appropriately setting the cut volume limit cost used for action planning.

One limitation of the proposed method is that the trajectory generated by a diffusion model sometimes included actions that did not remove the shape. Optimizing the trajectory based on the grinding resistance during processing is a further extension to reduce the task execution time. We may study a method that adjusts the trajectory with a small amount of real data by black-box optimization based on a trajectory generated by a diffusion model.

IX CONCLUSION

We proposed novel sim-to-real transferable object shaping for grinding. A novel technique that constrained a robot’s action based on removal resistance theory reduced the sim-to-real gap. This technique enables data collection on a simplified geometric simulator and action planning with a diffusion model. Experimental results show that our method enables a sim-to-real transfer and an action plan with a diffusion model quickly provides flexible long-horizon action sequences for different materials and various target shapes. We found that the task execution times can be reduced in the grinding process, where shape observation is time-consuming.

References

- [1] V. E. Arriola-Rios, P. Guler, F. Ficuciello, D. Kragic, B. Siciliano, and J. L. Wyatt, “Modeling of deformable objects for robotic manipulation: A tutorial and review,” Frontiers in Robotics and AI, vol. 7, p. 82, 2020.

- [2] T. Hachimine, J. Morimoto, and T. Matsubara, “Learning to shape by grinding: Cutting-surface-aware model-based reinforcement learning,” IEEE Robotics and Automation Letters, vol. 8, pp. 6235–6242, 2023.

- [3] J. Tang, J. Du, and Y. Chen, “Modeling and experimental study of grinding forces in surface grinding,” Journal of Materials Processing Technology, vol. 209, pp. 2847–2854, 2009.

- [4] J. Sohl-Dickstein, E. Weiss, N. Maheswaranathan, and S. Ganguli, “Deep unsupervised learning using nonequilibrium thermodynamics,” in Int. Conf. on Machine Learning, pp. 2256–2265, 2015.

- [5] J. Ho, A. Jain, and P. Abbeel, “Denoising diffusion probabilistic models,” in Advances in Neural Information Processing Systems, vol. 33, pp. 6840–6851, 2020.

- [6] P. Dhariwal and A. Nichol, “Diffusion models beat gans on image synthesis,” in Advances in Neural Information Processing Systems, vol. 34, pp. 8780–8794, 2021.

- [7] J. Carvalho, A. T. Le, M. Baierl, D. Koert, and J. Peters, “Motion planning diffusion: Learning and planning of robot motions with diffusion models,” in IEEE/RSJ Int. Conf. on Intelli. Robots and Sys., pp. 1916–1923, 2023.

- [8] M. Janner, Y. Du, J. Tenenbaum, and S. Levine, “Planning with diffusion for flexible behavior synthesis,” in Int. Conf. on Machine Learning, vol. 162, pp. 9902–9915, 2022.

- [9] C. Matl and R. Bajcsy, “Deformable elasto-plastic object shaping using an elastic hand and model-based reinforcement learning,” in IEEE/RSJ Int. Conf. on Intelli. Robots and Sys., pp. 3955–3962, 2021.

- [10] J. Liang, V. Makoviychuk, A. Handa, N. Chentanez, M. Macklin, and D. Fox, “Gpu-accelerated robotic simulation for distributed reinforcement learning,” in Conf. on Robot Learning, pp. 270–282, 2018.

- [11] Z. Huang, Y. Hu, T. Du, S. Zhou, H. Su, J. Tenenbaum, and C. Gan, “Plasticinelab: A soft-body manipulation benchmark with differentiable physics,” in Int. Conf. on Learning Representations, 2021.

- [12] C. C. Beltran-Hernandez, N. Erbetti, and M. Hamaya, “Sliceit!–a dual simulator framework for learning robot food slicing,” in IEEE Int. Conf. on Robot. and Autom., pp. 4296–4302, 2024.

- [13] E. Heiden, M. Macklin, Y. S. Narang, D. Fox, A. Garg, and F. Ramos, “Disect: A differentiable simulation engine for autonomous robotic cutting,” in Robotics: Science and Systems, 2021.

- [14] N. Cohen, V. Kulikov, M. Kleiner, I. Huberman-Spiegelglas, and T. Michaeli, “Slicedit: Zero-shot video editing with text-to-image diffusion models using spatio-temporal slices,” in Int. Conf. on Machine Learning, vol. 235, pp. 9109–9137, 2024.

- [15] U. Mishra, S. Xue, Y. Chen, and D. Xu, “Generative skill chaining: Long-horizon skill planning with diffusion models,” in Conf. on Robot Learning, pp. 2905–2925, 2023.

- [16] C. Chi, S. Feng, Y. Du, Z. Xu, E. Cousineau, B. Burchfiel, and S. Song, “Diffusion policy: Visuomotor policy learning via action diffusion,” in Robotics: Science and Systems, 2023.

- [17] C. Chi, Z. Xu, C. Pan, E. Cousineau, B. Burchfiel, S. Feng, R. Tedrake, and S. Song, “Universal manipulation interface: In-the-wild robot teaching without in-the-wild robots,” in Robotics: Science and Systems, 2024.

- [18] M. Okada, M. Komatsu, and T. Taniguchi, “A contact model based on denoising diffusion to learn variable impedance control for contact-rich manipulation,” IEEE/RSJ Int. Conf. on Intelli. Robots and Sys, 2024.

- [19] J. Urain, N. Funk, J. Peters, and G. Chalvatzaki, “Se (3)-diffusionfields: Learning smooth cost functions for joint grasp and motion optimization through diffusion,” in IEEE Int. Conf. on Robot. and Autom., pp. 5923–5930, 2023.

- [20] A. Nagabandi, G. Kahn, R. S. Fearing, and S. Levine, “Neural network dynamics for model-based deep reinforcement learning with model-free fine-tuning,” in IEEE Int. Conf. on Robot. and Autom., pp. 7559–7566, 2018.

- [21] Q. Zhou, J. Park, and V. Koltun, “Open3D: A modern library for 3D data processing,” in arXiv:1801.09847, 2018.

- [22] C. B. Sullivan and A. Kaszynski, “PyVista: 3d plotting and mesh analysis through a streamlined interface for the visualization toolkit (VTK),” Journal of Open Source Software, vol. 4, p. 1450, 2019.

- [23] B. Ichter, J. Harrison, and M. Pavone, “Learning sampling distributions for robot motion planning,” in IEEE Int. Conf. on Robot. and Autom, pp. 7087–7094, 2018.

- [24] C. R. Qi, H. Su, K. Mo, and L. J. Guibas, “Pointnet: Deep learning on point sets for 3d classification and segmentation,” in Conf. on Computer Vision and Pattern Recognition, pp. 652–660, 2017.

- [25] T. Nguyen, Q. Pham, T. Le, T. Pham, N. Ho, and B. Hua, “Point-set distances for learning representations of 3d point clouds,” in Int. Conf. on Computer Vision, pp. 10458–10467, 2021.