[]6338_supple

CXR Segmentation by AdaIN-based Domain Adaptation and Knowledge Distillation

Abstract

As segmentation labels are scarce, extensive researches have been conducted to train segmentation networks with domain adaptation, semi-supervised or self-supervised learning techniques to utilize abundant unlabeled dataset. However, these approaches appear different from each other, so it is not clear how these approaches can be combined for better performance. Inspired by recent multi-domain image translation approaches, here we propose a novel segmentation framework using adaptive instance normalization (AdaIN), so that a single generator is trained to perform both domain adaptation and semi-supervised segmentation tasks via knowledge distillation by simply changing task-specific AdaIN codes. Specifically, our framework is designed to deal with difficult situations in chest X-ray radiograph (CXR) segmentation, where labels are only available for normal data, but the trained model should be applied to both normal and abnormal data. The proposed network demonstrates great generalizability under domain shift and achieves the state-of-the- art performance for abnormal CXR segmentation.

Keywords:

Chest X-ray, Segmentation, Domain adaptation, Knowledge distillation, Self-supervised learning1 Introduction

High-accuracy image segmentation often serves as the first step in various medical image analysis tasks [6, 20]. Recently, deep learning (DL) approaches have become the state-of-the-art (SOTA) techniques for medical image segmentation tasks thanks to their superior performance compared to the classical methods [6].

The performance of DL-based segmentation algorithm usually depends on large amount of labels, but segmentation masks are scarce due to expensive and time-consuming annotation procedures. Another difficulty in DL-based segmentation is the so-called domain shift, i.e., a segmentation network trained with data in a specific domain often undergoes drastic performance degradation when applied to unseen test domains.

For example, in the field of chest X-ray radiograph (CXR) analysis [2], segmentation networks trained with normal CXR data often produce under-segmentation when applied to abnormal CXRs with severe infectious diseases such as viral or bacterial pneumonia [18, 27]. The missed regions from under-segmentation mostly contain crucial features, such as pulmonary consolidations or ground-glass opacity, for classifying the infectious diseases. Thus, highly-accurate lung segmentation results without under-segmentation are required to guarantee that DL-based classification algorithms fully learn crucial lung features, while alleviating irrelevant factors outside the lung to prevent shortcut learning [7].

To solve the label scarcity and domain shift problems, there have been extensive researches to train segmentation networks in a semi-supervised manner using limited training labels, or in a self-supervised or unsupervised manner even without labeled dataset [1, 28, 29, 23, 32, 19, 17, 3]. However, these approaches appear different from each other, and there exist no consensus in regard to how these different approaches can be synergistically combined. Inspired by success of StarGANv2 for transferring style between various domains [4], here we propose a style transfer-based knowledge distillation framework that can synergistically combine supervised segmentation, domain adaptation and self-supervised knowledge distillation tasks to improve unsupervised segmentation performance. Specifically, our framework is designed to deal with difficult but often encountered situations where segmentation masks are only available for normal data, but the trained method should be applied to both normal and abnormal images.

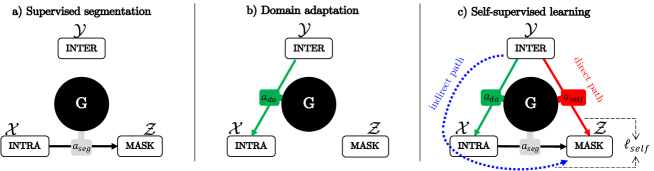

The key idea is that a single generator trained along with the adaptive instance normalization (AdaIN) [11] can perform supervised segmentation as well as domain adaptation between normal and abnormal domains by simply changing the AdaIN codes, as shown in Fig. 1(a) and (b), respectively. The network is also trained in a self-supervised manner using another AdaIN code in order to force direct segmentation results (illustrated as a red arrow in Fig. 1(c)) to be matched to indirect segmentation results through domain adaptation and subsequent segmentation (illustrated as a blue arrow in Fig. 1(c)). Since a single generator is used for all these tasks, the network can synergistically learn common features from different dataset through knowledge distillation.

To validate the concept of the proposed framework, we train our network using labeled normal CXR dataset and unlabeled pneumonia CXR dataset, and test the model performance on unseen dataset composed of COVID-19 pneumonia CXRs [27, 13]. We further evaluate the network performance on domain-shifted CXR datasets for both normal and abnormal cases, and compared the results with other SOTA techniques. Experimental results confirm that our method has great promise in providing robust segmentation results for both in-domain and out-of-domain dataset. We release our source code 111https://github.com/yjoh12/CXR-Segmentation-by-AdaIN-based-Domain-Adaptation-and-Knowledge-Distillation.git for being utilized in various CXR analysis tasks presented in Supplementary Section S1.

2 Related Works

To make this paper self-contained, here we review several existing works that are necessary to understand our method.

2.0.1 Image Style Transfer

The aim of the image style transfer is to convert a content image into a certain stylized image. Currently, two types of approaches are often used for image style transfer.

First, when a pair of content image and a reference style image is given, the goal is to convert the content image to imitate the reference style. For example, the adaptive instance normalization (AdaIN) has been proposed as a simple but powerful method [11] for image style transfer. Specifically, AdaIN layers of a network estimate means and variance of the given reference style features and use the learned parameters to adjust those of the content image.

On the other hand, unsupervised style transfer approaches such as CycleGAN [33] learn target reference style distribution rather than individual style given a single image. Unfortunately, the CycleGAN approach requires generators to translate between domains. To deal with this, multi-domain image translation approaches have been proposed. In particular, StarGANv2 [4] introduces an advanced single generator-based framework, which transfers styles over multiple domains by training domain-specific style codes of AdaIN.

2.0.2 Semi and Self-supervised Learning

For medical image segmentation, a DL-based model trained with labeled dataset in a specific domain (e.g. normal CXR) is often needed to be refined for different domain dataset in semi-supervised, self-supervised or unsupervised manners [28, 29, 23, 32, 19, 17, 3]. These approaches try to take advantage of learned features from a specific domain to generate pseudo-labels or distillate the learned knowledge to another domain.

Specifically, Tang et al. [28] applies a semi-supervised learning approach by generating pseudo CXRs via an image-to-image style transfer framework, and achieves improved segmentation performance by training a segmentation network with both labeled and pseudo-labeled dataset.

Self-supervised learning approaches can also bring large improvements in image segmentation tasks, by promoting consistency between model outputs given a same input with different perturbations or by training auxiliary proxy tasks [32, 19, 17]. In general, DL models trained with auxiliary self-consistency losses are proven to achieve better generalization capability as well as better primary task performance, especially when training with abundant unlabeled dataset.

2.0.3 Teacher-Student Approaches

Teacher-student approaches can be utilized for semi or self-supervised learning framework. These methods consist of two individual networks, i.e., a student and a teacher model. The student model is trained in a supervised manner, as well as in a self-supervised manner which enforces the student model outputs to be consistent with outputs from the teacher model [17, 23, 29].

Specifically, Li et al. [17] introduce a dual-teacher framework on segmentation task, which consists of two teacher models: a traditional teacher model for transferring intra-domain knowledge and an additional teacher model for transferring inter-domain knowledge. To leverage inter-domain dataset, images from different domain are firstly style-transferred as pseudo-images using CycleGAN [33]. Then the student network is trained to predict consistent outputs with those of the inter-domain teacher given the style-transferred images, so that the acquired inter-doamin knowledge can be integrated into the student network.

3 Methods

3.1 Key Idea

One of the unique features of StarGANv2 [4] is that it can synergistically learn common features across multiple image domains via shared layers to fully utilize all different datasets, but still allow domain-specific style transfer using different style codes. Inspired by this idea, our framework adapts AdaIN layers to perform style transfer between normal and abnormal domains and further utilizes an additional style code for self-supervised learning.

Specifically, our framework categorizes the training data into three distinct groups: the segmentation mask [MASK], their matched input image domain [INTRA], and domain-shifted input images with no segmentation labels [INTER]. Due to the domain shift between [INTRA] and [INTER] domains, a network trained in a supervised manner using only [INTRA] dataset does not generalize well for [INTER] domain. To mitigate this problem, we propose a single generator to perform supervised segmentation (see Fig. 1(a)) as well as domain adaptation between [INTRA] and [INTER] domains using different AdaIN codes (see Fig. 1(b)).

Similar to the existing teacher-student approaches, we then introduce a self-consistency loss between different model outputs given learned task-specific AdaIN codes. The teacher network considers the indirect segmentation through domain adaptation followed by segmentation (indicated as the blue arrow in Fig. 1(c)), and the student network considers the direct segmentation (indicated as the red arrow in Fig. 1(c)). The network is trained in a self-supervised manner that enforces the direct segmentation results to be consistent with the indirect segmentation results, so that unlabeled domain images can be directly segmented. This enables knowledge distillation from learned segmentation and domain adaptation tasks to the self-supervised segmentation task.

Once the network is trained, only a single generator and pre-built AdaIN codes can be simply utilized at the inference phase, which makes the proposed method more practical.

3.2 Overall Framework

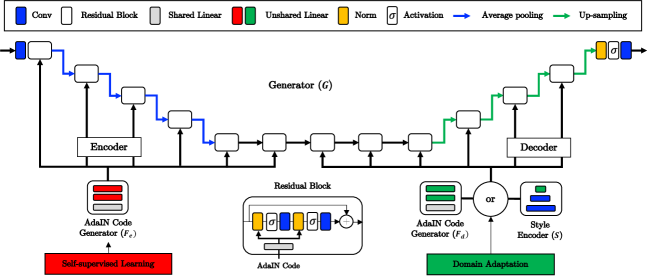

Overall architecture of our network is shown in Fig. 2, which is composed of a single generator , AdaIN code generators for the encoder and decoder, and , respectively, a style encoder , and a multi-head discriminator .

The generator is composed of encoder and decoder modules. Specifically, the encoder part is composed of four downsampling residual blocks and two intermediate residual blocks. The decoder part is composed of two intermediate residual blocks and four up-sampling residual blocks. Each residual block is composed of AdaIN layers, activation layers, and convolution layers. All the AdaIN layers are connected to the code generators and , and the style encoder is also connected to the decoder module. Detailed network specification is provided in Supplementary Section S2.

One of key ideas of our framework is introducing independent code generators and to each encoder and decoder module. Thanks to the two separate code generators, the generator can perform segmentation, domain adaptation and self-supervised learning tasks, by simply changing combinations of the AdaIN codes, as shown in Table 1.

| AdaIN code | [Source] | [Target] | Task | ||

| (mean, var) | (mean, var) | ||||

| [INTRA] | [MASK] | (0, 1) | (0, 1) | segmentation | |

| [INTRA] | [MASK] | (0, 1) | learnable | dummy segmentation | |

| [INTER] | [INTRA] | (0, 1) | learnable | domain adaptation | |

| [INTRA] | [INTER] | (0, 1) | learnable | domain adaptation | |

| [INTER] | [MASK] | learnable | (0, 1) | self-supervised |

Specifically, let and refer to the [INTRA], [INTER] and [MASK] domains associated with the probability distribution , and . Then, our generator is defined by

| (1) |

where is the input image either in or , refers the AdaIN code, as shown in Table 1, and and indicate the code generators for the encoder and the decoder, respectively. Given the task-specific code , AdaIN layers of the generator efficiently shift weight distribution to desirable style distribution, by adjusting means and variances. Detailed style transfer mechanism of AdaIN is provided in Supplementary Section S3. Hence, the generator can generate output either in , or domain, depending on different codes combination.

The style encoder is introduced by StyleGANv2 to impose an additional constraint, so that the learned AdaIN codes should reflect style of the given reference images [4]. In our framework, the style encoder encodes the generated output into a code for imposing code-level cycle-consistency. Another role of the style encoder is to generate reference-guided codes given reference images. By alternatively generating codes using the style encoder or the AdaIN code generator , as illustrated as the module in Fig. 2, the learned codes can be regularized to reflect the reference styles.

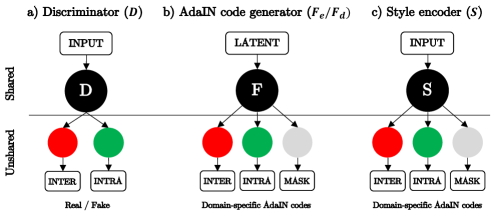

The discriminator is composed of shared convolution layers followed by multi-headed unshared convolution layers for each image domain, as shown in Fig. 3(a). In the discriminator, the input image can be classified as 1 or 0 for each domain separately, where 1 indicates real and 0 indicates fake. The AdaIN code generator and the style encoder are also composed of shared layers followed by domain-specific unshared layers, as shown in Fig. 3(b) and (c). Thanks to the existence of the shared layers, learned features from a specific domain can be shared with other domains, improving overall performance of each module.

In the following, we provide more detailed description how this network can be trained.

3.3 Neural Network Training

Our training losses are extended from traditional style transfer framework, with specific modification to include the segmentation and the self-supervised learning tasks. The details are as follows.

3.3.1 Supervised Segmentation

This part is a unique contribution of our work compared to traditional style transfer methods. Fig. 1(a) shows the supervised segmentation, which can be considered as conversion from to . In this case, the generator is trained by the following:

| (2) |

where and are hyper-parameters, and the segmentation loss is defined by the cross-entropy loss between generated output and its matched label:

| (3) |

where denotes the -th pixel of the ground truth segmentation mask with respect to the input image , denotes the softmax probability function of the -th pixel in the generated image, and denotes the supervised segmentation task output.

Once a segmentation result is generated, the style encoder encodes the generated image to be consistent with the dummy AdaIN code . This can be achieved by using the following style loss:

| (4) |

where can be either generated by or , given segmentation mask . Although this code is not used for segmentation directly, the generation of this dummy AdaIN code turns out to be important to train the shared layers in the AdaIN code generator and the style encoder. We analyzed contribution of different losses for the supervised segmentation task, as analyzed in Supplementary Section S4.

3.3.2 Domain Adaptation

Fig. 1(b) shows the training scheme for the domain adaptation between and . The training of domain adaptation solves the following optimization problem:

| (5) |

The role of Equation 5 is to train the generator to synthesize style-transfered images given domain-specific AdaIN codes , while fooling the discriminator . As this step basically follows traditional style transfer methods, the detailed domain adaptation loss is deferred to Supplementary Section S5.

3.3.3 Self-supervised Learning

This part is another unique contribution of our work. The goal of the self-supervised learning is to directly transfer an unlabeled image in to segmentation mask , illustrated as the red arrow in Fig. 1(c).

Specifically, since [INTER] domain lacks segmentation mask, knowledge learned from both the supervised learning and domain adaptation need to be distilled. Thus, our contribution comes from introducing novel constraints: (1) the direct segmentation outputs trained in a self-supervised manner, illustrated as the red arrow in Fig. 1(c) should be consistent with indirect segmentation outputs, illustrated as the blue arrow in Fig. 1(c). (2) At the inference phase, it is often difficult to know which domain the input comes from. Therefore, a single AdaIN code should deal with both [INTRA] and [INTER] domain image segmentation. This leads to the following self-consistency loss:

| (6) | |||

| (7) | |||

| (8) | |||

| (9) |

where indicates the frozen generator , and denote inter-domain and intra-domain self-consistency loss, respectively, and and are hyper-parameters for each. The role of Eq. (8) is for emposing the constraint (1), and Eq. (9) is for emposing the constraint (2).

In fact, this procedure can be regarded as a teacher-student approach. The indirect path is a teacher network that guides the training procedure of the direct path, which regards the student network. In contrast to the existing teacher-student approaches [29, 17, 23], our approach does not need two separate networks: instead, the single generator with different AdaIN codes combinations can be served as the teacher or the student network, which is another big advantage of our method.

4 Experiments

4.1 Experimental settings

4.1.1 Dataset

For training the supervised segmentation task, normal CXRs were acquired from JSRT dataset [26] with their paired lung segmentation labels from SCR dataset [31]. For training domain adaptation task, we collected pneumonia CXRs from RSNA [8] and Cohen dataset [5]. Detailed dataset information is described in Table 2.

| Domain | Dataset | Label | Disease class | Bit | View | Total | Train | Val | Test | DS level | |

| Weak | Harsh | ||||||||||

| Labeled train set | JSRT | O | Normal, Nodule | 12 | PA | 247 | 178 | 20 | 49 | - | |

| Unlabeled train set | RSNA | - | PN (COVID-19) | 10 | AP | 218 | 218 | - | - | - | |

| Cohen et al. | - | PN (COVID-19, | 8 | PA, AP | 680 | 640 | - | 40 | - | ||

| Viral, Bacterial, TB) | |||||||||||

| External testset | NLM | O | Normal | 8 | PA | 80 | - | - | 80 | 80 | 80 |

| BIMCV-13 | O | PN (COVID-19) | 16 | PA, AP | 13 | - | - | 13 | 13 | 13 | |

| BIMCV | - | PN (COVID-19) | 16 | AP | 374 | - | - | 374 | - | - | |

| BRIXIA | - | PN (COVID-19) | 16 | AP | 2384 | - | - | 2384 | - | - | |

| Note: PN, pneumonia; TB, tuberculosis; DS level, distribution shift level. | |||||||||||

To test the proposed network performance on external datasets, we utilized three external resources. For normal CXR segmentation evaluation, NLM dataset with paired lung labels were utilized [14]. For abnormal CXR segmentation evaluation, BIMCV dataset [13] and BRIXIA dataset [27] were utilized. Besides, additional 13 CXRs from the BIMCV dataset (indicated as BIMCV-13), with labeled consolidation or ground glass opacities features by radiologists, were utilized for quantitative evaluation of abnormal CXRs segmentation.

For further analyzing the model performance on domain-shifted conditions, external labeled dataset were prepared with three different levels of modulation, defined as distribution shift level. None-level indicates original inputs. Weak- and Harsh-level indicate intensity and contrast modulated inputs with random scaling factors within 30% and 60% range, followed by addition of Gaussian noise with standard deviation of 0.5 and 1, respectively.

All the input CXRs and labels are resized to . We did not perform any pre-processing or data augmentation except for normalization of pixel intensity range to [-1,0, 1.0].

4.1.2 Implementation Details

The proposed network was trained by feeding input images from a pair of two randomly chosen domains: one for the source domain and the other for the target domain. For example, if a domain pair composed of [INTER] and [INTRA] domains fed into the network, the network was trained for the domain adaptation task. When a domain pair, composed of [INTRA] as source and [MASK] as target domain, fed into the network, the network was trained for the supervised segmentation task. For self-supervised learning, an image from [INTER] domain, as well as from [INTRA], was fed as the source domain to output the segmentation mask. Implementation details for training the proposed network are provided in Supplementary Section S6.

For the domain adaptation task, we utilized CycleGAN [33], MUNIT [12] and StarGANv2 [4] as baseline models for comparative studies. For the segmentation task, we utilized U-Net [24] as a baseline model. All the baseline models were trained with identical conditions to that of the proposed model. To evaluate unified performance of the domain adaptation task and the segmentation task, we utilized available models for performing abnormal CXR segmentation, i.e., XLSor [28] and lungVAE [25]. Implementation details for all the comparative models are provided in Supplementary Section S7.

4.1.3 Evaluation Metric

For normal CXRs, quantitative segmentation performance of both lungs was evaluated using dice similarity score (Dice) index. The abnormal CXR segmentation performance was evaluated quantitatively using true positive ratio (TPR) of the annotated abnormalities labels. Moreover, for unlabeled abnormal dataset, domain adaptation and segmentation performance were qualitatively evaluated based on generation of expected lung structure covered with highly-consolidated regions.

4.2 Results

4.2.1 Unified Domain Adaptation and Segmentation

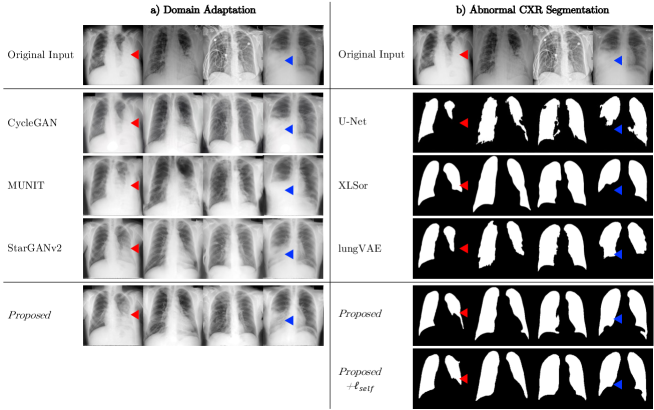

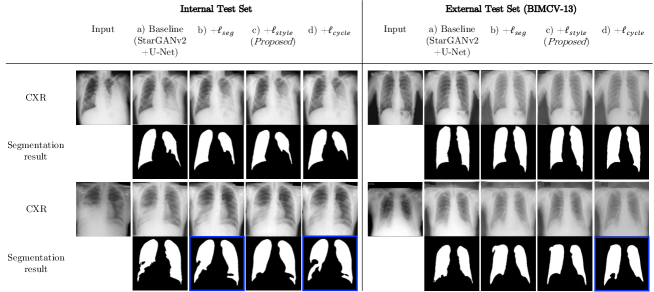

The unified performance was evaluated on the internal test set. We defined our model trained with segmentation loss (Eq. (2)) and domain adaptation loss (Eq. (5)) as Proposed, and the model trained with additional self-consistency loss (Eq. (6)) as Proposed+. As shown in Fig. 4(a), compared to CycleGAN and MUNIT, Proposed model utilizing StarGANv2 framework successfully transferred highly consolidated regions in abnormal CXRs into normal lungs.

Abnormal CXR segmentation results are presented in Fig. 4(b). All the comparative models failed to segment highly consolidated lung regions, indicated as red and blue triangles. Note that Proposed and Proposed+ models were the only methods that successfully segmented abnormal lungs as like normal lungs.

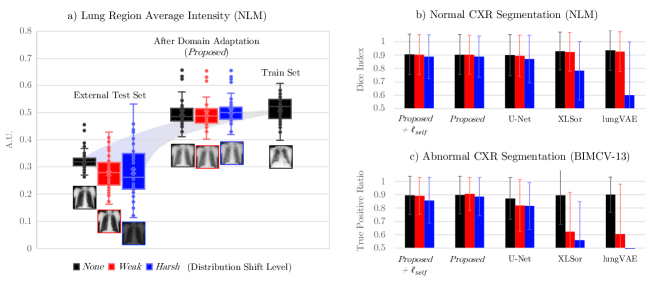

4.2.2 Quantitative Evaluation on Domain-shifted External Dataset

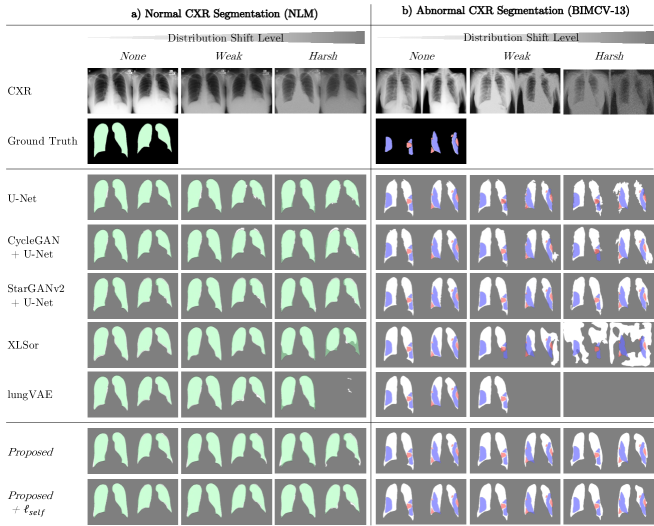

For quantitative evaluation, we utilized labeled dataset for both normal and abnormal CXRs (NLM and BIMCV-13, respectively). To verify that the proposed methods could still retain segmentation performance on domain-shifted dataset, we further tested the model performance on distribution modulated inputs (Weak- and Harsh-level) for both normal and abnormal dataset.

As illustrated in Fig. 5(a), Proposed model successfully adapted shifted distribution of lung area intensity to be similar to the train set distribution. Thanks to the successful domain adaptation performance, both Proposed and Proposed+ models maintained robust segmentation performance compared to other models, as distribution gap increases (see blue bar plots of Fig. 5(b) and (c)).

Specifically, as reported in Table 3, for the abnormal CXR segmentation task, all the models showed promising performance by achieving TPR of around 0.90 for the original (None-level) inputs, illustrated as black bar plots in Fig. 5(c). However, XLSor and lungVAE performance drastically dropped to around 0.60 for Weak-level inputs, while Proposed model performance rather improved, as illustrated in red bar plots. For Harsh-level inputs, lung structures were only correctly segmented by Proposed and Proposed+ models with above 0.85 of TPR, as illustrated in blue bar plots.

| Method | Normal CXR (Dice Index) | Abnormal CXR (True Positive Ratio) | ||||

| Distribution Shift Level | Distribution Shift Level | |||||

| None | Weak | Harsh | None | Weak | Harsh | |

| SS | ||||||

| U-Net [24] | 0.90 0.15 | 0.89 0.16 | 0.87 0.18 | 0.87 0.16 | 0.82 0.20 | 0.82 0.18 |

| DA+SS | ||||||

| CycleGAN [33]+U-Net | 0.89 0.17 | 0.89 0.17 | 0.86 0.18 | 0.88 0.17 | 0.84 0.22 | 0.85 0.17 |

| StarGANv2 [4]+U-Net | 0.90 0.15 | 0.90 0.15 | 0.88 0.15 | 0.90 0.13 | 0.90 0.12 | 0.88 0.16 |

| Proposed | 0.90 0.01 | 0.90 0.14 | 0.89 0.15 | 0.90 0.14 | 0.91 0.12 | 0.89 0.14 |

| UDS/Self | ||||||

| XLSor [28] | 0.93 0.14 | 0.92 0.15 | 0.78 0.22 | 0.90 0.22 | 0.62 0.30 | 0.56 0.29 |

| lungVAE [25] | 0.94 0.15 | 0.93 0.15 | 0.60 0.40 | 0.90 0.13 | 0.61 0.38 | 0.10 0.26 |

| Proposed+ | 0.91 0.15 | 0.90 0.15 | 0.89 0.16 | 0.90 0.14 | 0.89 0.14 | 0.86 0.17 |

| Note: SS, supervised segmentation; DA, domain adaptation; UDS, unified DA+SS; Self, self-supervised segmentation. | ||||||

Corresponding segmentation contours are also presented in Fig. 6. The qualitative segmentation performance was surprising since artificial perturbation, which seemed tolerable for human eye, brought strong performance degradation to the existing DL-based algorithms. Specifically, for abnormal CXR segmentation, all the comparative UDS/Self methods (XLSor and lungVAE) failed to be generalized to several out-of-distribution cases from Harsh-level inputs (see Fig. 6(b)). The UDS/Self methods have no additional domain adaptation process, but the networks themselves are trained with augmented data distribution, e.g., images added with random noise or pseudo-pneumonia images. The degraded performance indicates that the above data augmentation techniques are still limited to be generalized to way-shifted data distribution. U-Net with or without style-transfer based pre-processing (CycleGAN or StarGANv2), rather endured harsh-distribution shift, however, lung contours showed irregular shapes with rough boundary. On the other hand, Proposed and Proposed+ models showed stable segmentation performance on majority of domain-shifted cases. In particular, Proposed+ model showed most promising performance, with the least over-segmentation artifact for both normal and abnormal datasets.

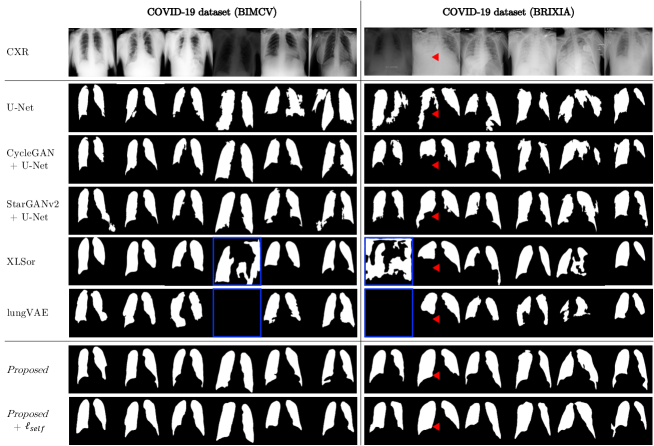

4.2.3 Qualitative Evaluation on COVID-19 Dataset

For evaluating the model performance on real-world dataset, we utilized COVID-19 pneumonia dataset (BIMCV and BRIXIA), which are obtained from more than 12 hospitals. Fig. 7 presents qualitative results of abnormal CXR segmentation on COVID-19 pneumonia dataset. Representative cases were randomly selected from each dataset.

The external COVID-19 dataset showed varied intensity and noise distribution. Comparative models mostly failed to segment regular lung shapes. Specifically, for highly consolidated regions indicated as red triangles, all the existing models were suffered from under-segmentation artifacts. For several cases, XLSor and lungVAE totally failed to be generalized to domain-shift issues (blue boxes). Proposed and Proposed+ models showed reliable segmentation performance without severe under-segmentation or over-segmentation artifacts.

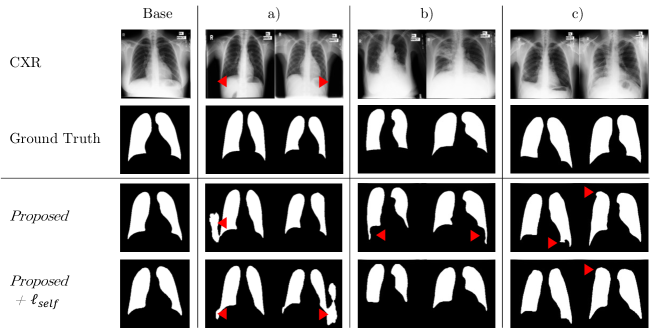

4.2.4 Error Analysis

We further analyzed typical error cases, which failed to be segmented, and the error cases were grouped into three categories. The representative error cases selected from each category are shown in Supplementary Section S8.

5 Conclusions

We present a novel framework, which can perform segmentation, domain adaptation and self-supervised learning tasks within a single generator in a cost-effective manner (Supplementary Section S9). The proposed network can fully leverage knowledge learned from each task by utilizing shared network parameters, thus the model performance can be synergistically improved via knowledge distillation between multiple tasks, so that achieves SOTA performance on the unsupervised abnormal CXR segmentation task. The experimental results demonstrate that the proposed unified framework can solve domain shift issues with great generalizability, even on dataset with way-shifted distribution. Last but not the least, the proposed model does not need any pre-processing techniques but shows superior domain adaptation performance, which presents a promising direction to solve the generalization problem of DL-based segmentation methods.

Acknowledgement

This research was supported by the Korea Medical Device Development Fund grant funded by the Korea government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health & Welfare, the Ministry of Food and Drug Safety) (Project Number: 1711137899, KMDF_PR_20 200901_0015), the MSIT(Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program(IITP-2022-2020-0-01461) supervised by the IITP(Institute for Information & communications Technology Planning & Evaluation), the National Research Foundation of Korea under Grant NRF-2020R1A2B5B03001980, and the Field-oriented Technology Development Project for Customs Administration through National Research Foundation of Korea(NRF) funded by the Ministry of Science & ICT and Korea Customs Service(NRF-2021M3I1A1097938)

References

- [1] Bai, W., Oktay, O., Sinclair, M., Suzuki, H., Rajchl, M., Tarroni, G., Glocker, B., King, A., Matthews, P.M., Rueckert, D.: Semi-supervised learning for network-based cardiac mr image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 253–260. Springer (2017)

- [2] Çallı, E., Sogancioglu, E., van Ginneken, B., van Leeuwen, K.G., Murphy, K.: Deep learning for chest x-ray analysis: A survey. Medical Image Analysis 72, 102125 (2021)

- [3] Chen, C., Dou, Q., Chen, H., Qin, J., Heng, P.A.: Unsupervised bidirectional cross-modality adaptation via deeply synergistic image and feature alignment for medical image segmentation. IEEE transactions on medical imaging 39(7), 2494–2505 (2020)

- [4] Choi, Y., Uh, Y., Yoo, J., Ha, J.W.: Stargan v2: Diverse image synthesis for multiple domains. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8188–8197 (2020)

- [5] Cohen, J.P., Morrison, P., Dao, L.: COVID-19 image data collection. arXiv 2003.11597 (2020), https://github.com/ieee8023/covid-chestxray-dataset

- [6] De Fauw, J., Ledsam, J.R., Romera-Paredes, B., Nikolov, S., Tomasev, N., Blackwell, S., Askham, H., Glorot, X., O’Donoghue, B., Visentin, D., et al.: Clinically applicable deep learning for diagnosis and referral in retinal disease. Nature medicine 24(9), 1342–1350 (2018)

- [7] DeGrave, A.J., Janizek, J.D., Lee, S.I.: Ai for radiographic covid-19 detection selects shortcuts over signal. Nature Machine Intelligence 3(7), 610–619 (2021)

- [8] Desai, S., Baghal, A., Wongsurawat, T., Jenjaroenpun, P., Powell, T., Al-Shukri, S., Gates, K., Farmer, P., Rutherford, M., Blake, G., et al.: Chest imaging representing a covid-19 positive rural us population. Scientific data 7(1), 1–6 (2020)

- [9] Frid-Adar, M., Amer, R., Gozes, O., Nassar, J., Greenspan, H.: Covid-19 in cxr: From detection and severity scoring to patient disease monitoring. IEEE journal of biomedical and health informatics 25(6), 1892–1903 (2021)

- [10] Hou, J., Gao, T.: Explainable dcnn based chest x-ray image analysis and classification for covid-19 pneumonia detection. Scientific Reports 11(1), 1–15 (2021)

- [11] Huang, X., Belongie, S.: Arbitrary style transfer in real-time with adaptive instance normalization. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 1501–1510 (2017)

- [12] Huang, X., Liu, M.Y., Belongie, S., Kautz, J.: Multimodal unsupervised image-to-image translation. In: Proceedings of the European conference on computer vision (ECCV). pp. 172–189 (2018)

- [13] de la Iglesia Vayá, M., Saborit, J.M., Montell, J.A., Pertusa, A., Bustos, A., Cazorla, M., Galant, J., Barber, X., Orozco-Beltrán, D., García-García, F., Caparrós, M., González, G., Salinas, J.M.: Bimcv covid-19+: a large annotated dataset of rx and ct images from covid-19 patients (2020)

- [14] Jaeger, S., Candemir, S., Antani, S., Wáng, Y.X.J., Lu, P.X., Thoma, G.: Two public chest X-ray datasets for computer-aided screening of pulmonary diseases. Quantitative imaging in medicine and surgery 4(6), 475 (2014)

- [15] Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

- [16] Lakhani, P., Sundaram, B.: Deep learning at chest radiography: automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology 284(2), 574–582 (2017)

- [17] Li, X., Yu, L., Chen, H., Fu, C.W., Xing, L., Heng, P.A.: Transformation-consistent self-ensembling model for semisupervised medical image segmentation. IEEE Transactions on Neural Networks and Learning Systems (2020)

- [18] Oh, Y., Park, S., Ye, J.C.: Deep learning covid-19 features on cxr using limited training data sets. IEEE Transactions on Medical Imaging 39(8), 2688–2700 (2020). https://doi.org/10.1109/TMI.2020.2993291

- [19] Orbes-Arteaga, M., Varsavsky, T., Sudre, C.H., Eaton-Rosen, Z., Haddow, L.J., Sørensen, L., Nielsen, M., Pai, A., Ourselin, S., Modat, M., et al.: Multi-domain adaptation in brain mri through paired consistency and adversarial learning. In: Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data, pp. 54–62. Springer (2019)

- [20] Ouyang, X., Huo, J., Xia, L., Shan, F., Liu, J., Mo, Z., Yan, F., Ding, Z., Yang, Q., Song, B., et al.: Dual-sampling attention network for diagnosis of covid-19 from community acquired pneumonia. IEEE Transactions on Medical Imaging 39(8), 2595–2605 (2020)

- [21] Park, S., Kim, G., Oh, Y., Seo, J.B., Lee, S.M., Kim, J.H., Moon, S., Lim, J.K., Ye, J.C.: Vision transformer using low-level chest x-ray feature corpus for covid-19 diagnosis and severity quantification. arXiv preprint arXiv:2104.07235 (2021)

- [22] Paszke, A., Gross, S., Chintala, S., Chanan, G., Yang, E., DeVito, Z., Lin, Z., Desmaison, A., Antiga, L., Lerer, A.: Automatic differentiation in PyTorch. In: NIPS Autodiff Workshop (2017)

- [23] Perone, C.S., Ballester, P., Barros, R.C., Cohen-Adad, J.: Unsupervised domain adaptation for medical imaging segmentation with self-ensembling. NeuroImage 194, 1–11 (2019)

- [24] Ronneberger, O., Fischer, P., Brox, T.: U-net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical image computing and computer-assisted intervention. pp. 234–241. Springer (2015)

- [25] Selvan, R., Dam, E.B., Detlefsen, N.S., Rischel, S., Sheng, K., Nielsen, M., Pai, A.: Lung segmentation from chest x-rays using variational data imputation (2020)

- [26] Shiraishi, J., Katsuragawa, S., Ikezoe, J., Matsumoto, T., Kobayashi, T., Komatsu, K.i., Matsui, M., Fujita, H., Kodera, Y., Doi, K.: Development of a digital image database for chest radiographs with and without a lung nodule. American Journal of Roentgenology 174(1), 71–74 (Jan 2000). https://doi.org/10.2214/ajr.174.1.1740071, https://doi.org/10.2214/ajr.174.1.1740071

- [27] Signoroni, A., Savardi, M., Benini, S., Adami, N., Leonardi, R., Gibellini, P., Vaccher, F., Ravanelli, M., Borghesi, A., Maroldi, R., et al.: Bs-net: Learning covid-19 pneumonia severity on a large chest x-ray dataset. Medical Image Analysis 71, 102046 (2021)

- [28] Tang, Y.B., Tang, Y.X., Xiao, J., Summers, R.M.: Xlsor: A robust and accurate lung segmentor on chest x-rays using criss-cross attention and customized radiorealistic abnormalities generation. In: International Conference on Medical Imaging with Deep Learning. pp. 457–467. PMLR (2019)

- [29] Tarvainen, A., Valpola, H.: Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. arXiv preprint arXiv:1703.01780 (2017)

- [30] Toussie, D., Voutsinas, N., Finkelstein, M., Cedillo, M.A., Manna, S., Maron, S.Z., Jacobi, A., Chung, M., Bernheim, A., Eber, C., et al.: Clinical and chest radiography features determine patient outcomes in young and middle-aged adults with covid-19. Radiology 297(1), E197–E206 (2020)

- [31] Van Ginneken, B., Stegmann, M.B., Loog, M.: Segmentation of anatomical structures in chest radiographs using supervised methods: a comparative study on a public database. Medical image analysis 10(1), 19–40 (2006)

- [32] Xue, Y., Feng, S., Zhang, Y., Zhang, X., Wang, Y.: Dual-task self-supervision for cross-modality domain adaptation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 408–417. Springer (2020)

- [33] Zhu, J.Y., Park, T., Isola, P., Efros, A.A.: Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (Oct 2017)

CXR Segmentation by AdaIN-based Domain Adaptation and Knowledge Distillation: Supplementary Materials

Yujin Oh Jong Chul Ye

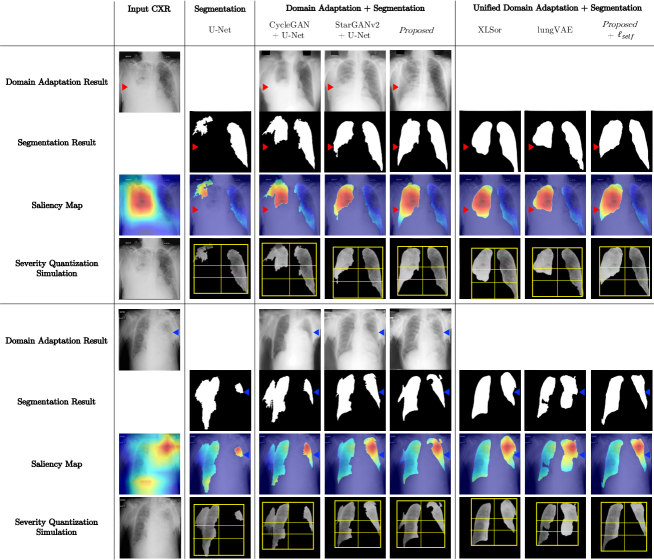

S1 Applications for DL-based Automatic CXR Analysis

Current deep learning (DL)-based CXR analysis tasks not just diagnose disease but provide explainable results such like saliency map or quantified severity level to assist clinicians [16, 10, 21, 9]. Accordingly, we further investigated applications of the proposed method using COVID-19 pneumonia CXR dataset [13]. For generating saliency map, we referenced a public source code 222https://github.com/priyavrat-misra/xrays-and-gradcam.git. In addition, for severity quantification, we followed array-based methods [21, 30].

Fig. S1 shows that Proposed and Proposed+ models provide the most suitable segmentation mask for saliency map or severity array generation. The results demonstrate that our methods can be utilized for various automatic CXR analysis tasks.

S2 Network Architecture

We provide details of the proposed framework, as shown in Table S1, S2, S3 and S4. Note that each input and output dimension for the domain adaption task is single channel (), whereas the segmentation task requires two channels () to separate foreground and background channels.

| Module | Layer | Norm | Resize | Input dimension |

| (C H W ) | ||||

| In | Conv 11 | - | - | 256 256 |

| Encoder | ResBlock 4 | AdaIN | Down | 64 256 256 |

| ResBlock 2 | AdaIN | - | 512 x 16 x 16 | |

| Decoder | ResBlock 2 | AdaIN | - | 512 16 16 |

| ResBlock 4 | AdaIN | Up | 512 16 16 | |

| Unshared | Norm | IN | - | 64 256 256 |

| Leaky ReLU | - | - | ||

| Conv 11 | - | - | ||

| Output | - | - | - | 256 256 |

| 256 256 |

| Module | Layer | Input dimension |

| (C) | ||

| In | Latent z | 4 |

| Shared | Linear 3 | 4 |

| Linear 1 | 512 | |

| Unshared | Linear 3 | 512 |

| Linear 1 | 512 | |

| Output | - | 16 K |

| Module | Layer | Input channel | Input size |

| (C) | (H W ) | ||

| Unshared | Conv 11 | , | 256 256 |

| Shared | ResBlock 6 | 64 | 256 256 |

| Leaky ReLU | 512 | 4 4 | |

| Conv 44 | 512 | 4 4 | |

| Leaky ReLU | 512 | 1 1 | |

| Unshared | Linear | 512 | 1 1 |

| Output | - | 16 K | - |

| Module | Layer | Input channel | Input size |

| () | (H W ) | ||

| Shared | Conv 11 | 1 | 256 256 |

| ResBlock 6 | 64 | 256 256 | |

| Leaky ReLU | 512 | 4 4 | |

| Conv 44 | 512 | 4 4 | |

| Leaky ReLU | 512 | 1 1 | |

| Unshared | Conv 11 | 512 | 1 1 |

| Output | - | 1 K | - |

S3 Adaptive Instance Normalization

AdaIN has been proposed as an extension of the instance normalization [11]. AdaIN layer receives a content input and a AdaIN code , and simply aligns the channel-wise mean and variance of to match those of desirable style by:

| (10) |

where and compute affine parameters from AdaIN code , and represent mean and variance, respectively. In this way, AdaIN simply scales the normalized content input with , and shifts with .

S4 Ablation Study

For ablation study, we analyzed contribution of different losses for the supervised segmentation task. We cumulatively added each loss to the baseline model, and compared segmentation performance on abnormal CXR.

Fig. S2 compares performance of each configuration. In configuration (b) with additional segmentation loss, domain adaptation performance was superior to the baseline. However, we observed that the segmentation results have several concave regions (blue boxes), which failed to resemble the general shape of lung structure. In configuration (c) with additional style loss, we observed that the segmentation results resemble normal lung better than (b), thanks to the style loss, which can extract common features of normal and abnormal CXR via the shared layer of the style encoder. In configuration (d) with additional cycle-consistency loss, we observed rather degraded lung segmentation performance as depicted as blue boxes, which have more concave regions compared to that of (c). The cycle-consistency loss, which tries to revert the generated lung mask back to the original image, may disturb the network to segment extremely consolidated lung regions.

Based on the ablation study results, we set the configuration (c) as our supervised segmentation loss.

S5 Domain Adaptation Loss

Domain adaptation loss basically follows StarGANv2 [4], given by

| (11) | |||

| (12) | |||

| (13) | |||

| (14) | |||

| (15) |

where , and are hyper-parameters and is the adversarial loss defined by

| (16) |

where and are source and target domains, which are chosen randomly from and so that all domain combinations can be considered. Furthermore, the learnable part of the AdaIN code is generated either from the encoder AdaIN coder generator or the style encoder given a reference target .

The cycle-consistency loss is defined as follows:

| (17) |

Similar to the cycle-consistency loss for the images, we introduce the style loss to enforce the cycle-consistency in the AdaIN code domain. More specifically, once a fake image is generated using a domain-specific AdaIN code, the style encoder with the fake image as an input should reproduce the original AdaIN code. This can be achieved by minimizing the following style loss:

| (18) |

Finally, to make the generated fake images diverse, the difference between two fake images that are generated by two different AdaIN codes should be maximized. This can be achieved by maximizing the following loss:

| (19) |

where an additional is generated either from the encoder AdaIN coder generator or the style encoder given an additional reference image.

S6 Implementation Details

The proposed method was implemented with PyTorch library [22]. We applied Adam optimizer [15] to train the models and set the batch size 1. The model was trained using a NVIDIA GeForce GTX 1080 Ti GPU. Hyper parameters were chosen to be , , , , and . Learning rate was optimized to . Once training iteration reaches certain fixed iteration points throughout the total iterations, the learning rate was reduced by factor of 10.

The network was trained for 20K iterations to simultaneously train domain adaptation and supervised segmentation tasks. We adopted early stopping strategy based on validation performance of abnormal chest X-ray radiograph (CXR) segmentation results. In terms of the training sequence, the self-supervised training started after training the domain adaptation and supervised segmentation tasks until they guaranteed certain performances. For self-supervised learning, the network was continued to be trained in self-supervised manner for additional 5K iterations.

At the inference phase, as for post-processing steps, two largest contours were automatically selected based on contour areas, and any holes within each contour were filled. The post-processing technique was identically applied to all the comparative model outputs for fair comparison.

S7 Comparative Model Implementations

For comparative study, baseline models for domain adaptation and supervised segmentation tasks, i.e., CycleGAN [33], MUNIT [12], StarGANv2 [4] and U-Net [24], were trained with identical conditions to that of the proposed model. For comparing performance of the unified domain adaptation and segmentation network, we inferenced pre-trained networks optimized for abnormal CXR segmentation, i.e., XLSor [28] and lungVAE [25], by utilizing their official source codes. 333https://github.com/raghavian/lungVAE444https://github.com/rsummers11/CADLab/tree/master/Lung_Segmentation_XLSor

S8 Error Analysis

We analyzed typical error cases, which failed to be segmented, and the error cases were grouped into three categories: (a) Over-segmentation on background pixels, (b) distorted lung shape, and (c) distorged lung boundary, as shown in Fig. S3.

S9 Computational Costs

The proposed unified framework costs less training computation resources, compared to training individual domain adaptation and segmentation networks. Table S5 shows total network parameters utilized for either training or inference of comparative networks.

Once the model is trained, only the generator with pre-built AdaIN codes are used at the inference phase, thus the model costs only the single generator.

As shown in Table S5, Proposed and Proposed+ models need the least number of network parameters, with most promising segmentation performance. Specifically, compared to Proposed model, Proposed+ model only needs a single inference without preceding domain adaptation task, with comparable segmentation performance to that of Proposed model.

| Method | Training | Inference(S) | Inference | ||||

| Generator(S) | Others | Total | Generator(S) | Others | Total | Time | |

| SS | |||||||

| U-Net [24] | 29M | - | 29M | 29M | - | 29M | 1 |

| XLSor [28] | 71M | - | 71M | 71M | - | 71M | 1 |

| DA | |||||||

| CycleGAN [33] | - | 29M | 29M | - | - | - | |

| MUNIT [12] | - | 47M | 47M | - | - | - | |

| StarGANv2 [4] | - | 78M | 78M | - | - | - | |

| DA+SS | |||||||

| CycleGAN + U-Net | 29M | 29M | 58M | 29M | 11M | 40M | 2 |

| StarGANv2 + U-Net | 29M | 78M | 127M | 29M | 34M | 63M | 2 |

| Proposed | 34M | 45M | 79M | 34M | - | 34M | 2 |

| UDS/Self | |||||||

| MUNIT + XLSor | 71M | 47M | 118M | 71M | - | 71M | 1 |

| lungVAE [25] | 34M | - | 34M | 34M | - | 34M | 1 |

| Proposed+ | 34M | 46M | 80M | 34M | - | 34M | 1 |

| Note: SS, supervised segmentation; DA, domain adaptation; UDS, unified DA+SS; Self, self- | |||||||

| supervised segmentation; (S), segmentation task; Others, other module parameters. | |||||||