CyberLoc: Towards Accurate Long-term Visual Localization

Abstract

This technical report introduces CyberLoc, an image-based visual localization pipeline for robust and accurate long-term pose estimation under challenging conditions. The proposed method comprises four modules connected in a sequence. First, a mapping module is applied to build accurate 3D maps of the scene, one map for each reference sequence if there exist multiple reference sequences under different conditions. Second, a single-image-based localization pipeline (retrieval–matching–PnP) is performed to estimate 6-DoF camera poses for each query image, one for each 3D map. Third, a consensus set maximization module is proposed to filter out outlier 6-DoF camera poses, and outputs one 6-DoF camera pose for a query. Finally, a robust pose refinement module is proposed to optimize 6-DoF query poses, taking candidate global 6-DoF camera poses and their corresponding global 2D-3D matches, sparse 2D-2D feature matches between consecutive query images and SLAM poses of the query sequence as input. Experiments on the 4seasons dataset show that our method achieves high accuracy and robustness. In particular, our approach wins the localization challenge of ECCV 2022 workshop on Map-based Localization for Autonomous Driving (MLAD-ECCV2022).

Keywords:

autonomous driving, image-based localization, image retrieval, image matching, multiple maps, multi-session PnP, consensus set maximization, pose graph optimization, bundle adjustment, slam

1 Introduction

This technical report studies the problem of image-based localization with respect to a pre-built 3D map. This problem has attracted considerable attention recently due to the widespread potential applications, such as in autonomous driving [1], robotics [2] and VR/AR [3]. It aims to estimate the 6-DoF global pose for a query image given a pre-built 3D map. Although visual localization has progressed rapidly in the past few years, how to achieve a robust and accurate localization under long-term challenging conditions still remains to be solved.

The main challenges are: 1) how to create accurate 3D maps that are robust to environmental changes, and 2) how to use the pre-built maps to accurately localize the camera.

To address the first challenge, we propose to use a stereo-camera rig with GPS-IMU to mapping the world. Given stereo image sequences with ground-truth 6-DoF poses, we separately reconstruct four 3D maps using four methods: 1) stereo Structure from Motion (SfM) with sparse 2D image features; 2) stereo SLAM; 3) stereo SfM with dense image matching; and 4) stereo disparity for each frame. Our motivation of using the above four 3D methods is to build a robust 3D map with respect to scene changes.

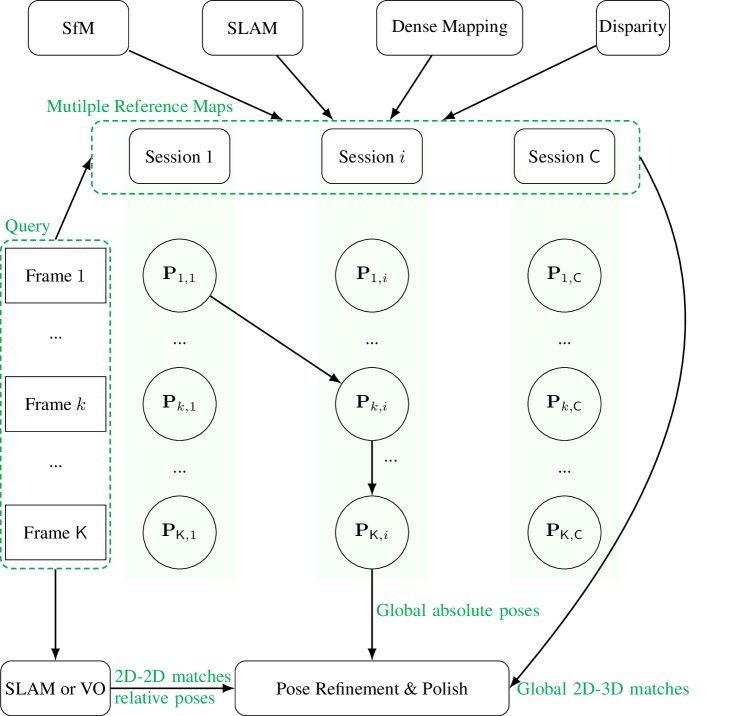

To address the second challenge, we introduce two modules, namely the consensus set maximization and pose refinement module. Given multiple global poses corresponding to multiple reference maps, the consensus set maximization aims to select the best pose for each query, resulting in one global pose and one set of global 2D-3D matches per query image. For query image sequences, the pose refinement module utilizes the global information (i. e., the global poses and 2D-3D matches), and the local information (i. e., SLAM poses and 2D-2D matches between consecutive query images), to further optimize query poses. The entire localization pipeline of CyberLoc is given in Figure 1.

The main contributions of this technical report are:

-

1.

We propose a visual localization pipeline consisting of four consecutive modules that help to achieve high accuracy and robustness under long-term environmental changes;

-

2.

We show that using multiple reference maps helps to overcome failed localization caused by long-term scene changes. This is achieved by a new consensus set maximization module that identifies the best query pose with respect to multiple reference maps;

-

3.

We introduce a robust pose refinement method, combining global information from pre-built maps and local information from SLAM, to refine query poses.

The proposed method is validated on the 4seasons dataset [1] and achieves state-of-the-art performance. In the following sections, we will give details of the proposed four modules. We first give our mapping pipeline in Sec. 2, to reconstruct 3D maps for multiple reference sessions. Next, we present our single image localization in Sec. 3, to obtain multiple global poses for each query image. We then give the proposed consensus set maximization method in Sec. 4, to select the best query pose for each query image. Finally, in Sec. 5, we provide the proposed robust pose refinement method.

2 Mapping

2.1 Image Pre-processing

In this section, we introduce some image pre-processing steps before using images for sparse 3D map reconstruction.

Low light image enhancement.

For images captured in the night, we perform low light image enhancement using LLFlow [4]. An example is given in Figure 2. We find that using enhanced images would help to extract better 2D local features and global feature vectors from images.

Semantic segmentation.

When working in highly dynamic environment, both SfM and SLAM methods would perform poorly due to interference from dynamic objects [5]. Feature points on dynamic objects are either unrepeatable when performing 3D reconstruction or ‘fool’ the feature tracking in SLAM. Following common practice [6], we perform semantic segmentation using SegFormer [7] to mask out dynamic objects (e.g., car, cyclist, etc.). Furthermore, pixels belong to the car shield are also masked out.

Resizing.

Before using images to extract features, we resize images while keeping their original aspect ratio. The reason is that the performance of feature extraction networks is vulnerable to image size. To extract a global feature vector from an image, we use a size of (long side). To extract 2D local features from an image, we use a size of . The two values are used as they perform best on the validation dataset.

2.2 Feature Extraction

Global feature vector.

Global feature vectors of images are used by image retrieval to, 1) find co-visible images for an image in the mapping (i. e., SfM) stage; or 2) find a local map for each query image in the localization stage. To extract discriminative global feature vectors, we use an internal neural network trained on open street-view images and internal datasets. The comparison with respect to state-of-the-art NetVLAD [8], SARE [9], SFRS [10] on typical benchmarks and the 4seasons dataset is given in Table 1.

| Methods | Pitts250k | Tokyo 24/7 | St Lucia | Cityloop | Oldtown | Parkinggarage | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| R@1 | R@5 | R@1 | R@5 | R@1 | R@5 | R@1 | R@5 | R@1 | R@5 | R@1 | R@5 | |

| NetVLAD[8] | 86.0 | 93.2 | 73.3 | 82.9 | - | - | - | - | - | - | - | - |

| SARE[9] | 89.0 | 95.5 | 79.7 | 86.7 | - | - | - | - | - | - | - | - |

| SFRS[10] | 90.7 | 96.4 | 85.4 | 91.1 | 86.1 | 93.5 | 73.3 | 82.4 | 32.8 | 44.8 | 97.2 | 99.3 |

| Ours | 93.0 | 97.5 | 89.5 | 94.6 | 99.6 | 99.9 | 95.9 | 97.2 | 67.7 | 74.7 | 99.7 | 100. |

Assembling multiple Global feature vectors from multiple networks is a common practice to improve the performance of image retrieval 111Please refer to the Google landmark retrieval challenge for more information. However, we found that the assembling technique does not work on the 4seasons dataset. The reason is that our single model outperforms other state-of-the-art methods by a large margin on the 4seasons dataset.

Local features.

2D local features are used to perform sparse 3D reconstruction of the environment. We extract around 2000 SuperPoint [11] feature points for an image.

2.3 SfM

We have pre-recorded database images with ground-truth 6-DoF camera poses in a world coordinate system. We perform image retrieval to find Top-K (K=) similar database images. For each database image pair, we perform sparse feature matching and find 2D-2D matches. We further prune out wrong 2D-2D matches by enforcing the epipolar constraint using the ground-truth poses. With 2D-2D matches and ground-truth poses, we triangulate 222 SVD with post non-linear refinement. 3D points and perform structure-only bundle adjustment 333Fixing camera poses. to refine the positions of 3D points using colmap [12].

Remark 1.

Given Superpoint feature points, though SGMNet [13], ClusterGNN [14] and ELA [15] successfully reduce the computation complexity, their performance is worse than the pre-trained SuperGlue 444See results on the YFCC100M [16] and FM-Bench [17] datasets.. Recently, our proposed FGCNet [18] achieves 4x speedup than SuperGlue, while maintaining competitive performance with respect to the pre-trained SuperGlue.

Remark 2.

For a triangulated 3D point, its precision depends on point-camera distances, number of visible cameras, view angles, etc. Though MegLoc [19] proposes to prune out 3D points with large uncertainty 555Please refer to Sec.5.1 of [20]., we found the uncertainty threshold is hard to tune and we decided not to use this filtering step.

2.4 SLAM

Given ground-truth 6-DoF camera poses of images, with SuperPoint feature points, we perform SLAM using the ptam [21]. Note that we skip the pose estimation stage in the SLAM and only optimize 3D points in the local map optimization stage.

Remark.

The main difference between 3D points from SfM and SLAM is that we use ALL images in SLAM rather than keyframes in SfM. 3D points are triangulated and updated sequentially, rather than in a bundle.

2.5 Dense Mapping

Using the same retrieved pairs in Sec.2.3, we use dense feature matching method QTA [22] to build sparse 2D-2D matches and then triangulate 3D points in the same way as Sec.2.3. Since dense feature matching methods do not detect sparse 2D feature points and can generate unrepeatable 2D-2D matches 666Given 2D-2D matches for image pair A-B and A-C, 2D feature points in image A are different., we use the same quantization technique in Patch2Pix [23] to alleviate this problem.

Remark.

The main difference between 3D points from Dense Mapping and SfM is that we use dense feature matching method QTA [22] rather than sparse feature matching method SuperGlue [24] to build 2D-2D matches. The motivation is that QTA [22] can generate more matches than SuperGlue [24] for textureless image pairs. More 3D points can be triangulated, resulting in a robust 3D representation for textureless areas.

2.6 Disparity

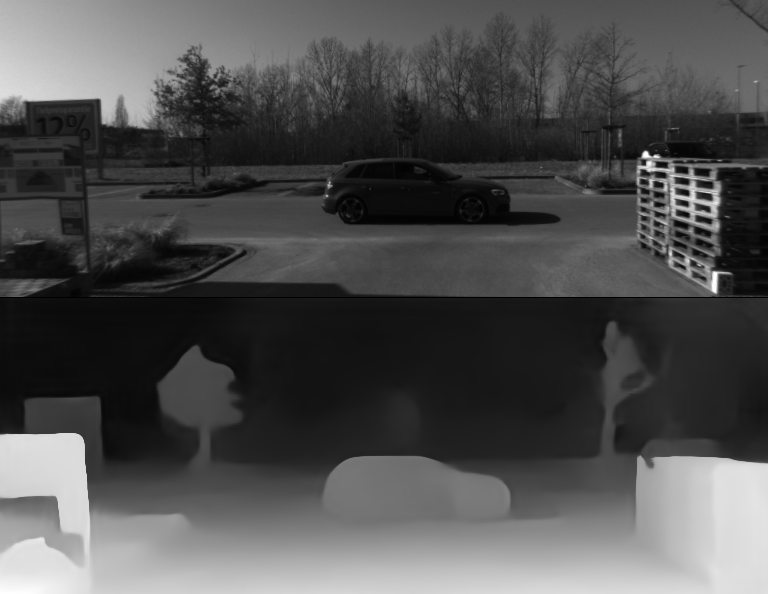

For each image pair, we can estimate a dense disparity map using the pre-trained LEAStereo [25], thus obtaining a local 3D map for each database image. An example of the estimated disparity map is given in Figure 3.

3 Localization

For each query image, we retrieve a set of candidate database keyframes using the same global feature vectors described in Sec.2.2. Since we have four pre-reconstructed 3D maps from SfM, SLAM, dense mapping, and disparity, we first perform four independent localization steps and then combine these localization results.

Specifically, for maps from SfM and SLAM, we first find 2D-2D matches using SuperGlue for each query and candidate database pair. Since we have recorded the matching 3D point (if have) of each database 2D feature point, a set of 2D-3D matches are automatically obtained for each query and candidate database image pair.

For maps from dense mapping, the only difference is that we use the dense feature matching method QTA [22] to find 2D-3D matches and all other components are the same.

For maps from disparity, we also use QTA [22] to find 2D-2D matches. For each 2D feature point from the database image, we triangulate 3D points using the disparity map. With 2D-2D matches and 3D points for database feature points, we obtain a set of 2D-3D matches.

Finally, the 2D-3D matches from the top-K () candidate database images and above four maps are combined to perform PnP 777Generalized camera model, aka, Plcker lines [26]. to estimate a 6-DoF camera pose in the world coordinate system.

Remark 1.

The motivation of using 2D-3D matches from different maps is to utilize their complimentary characteristics to improve the robustness of our method with respect to large scene changes.

Remark 2.

Directly combining all 2D-3D matches from top-K () candidate database keyframes and above four maps would generate a large set of 2D-3D matches with low inlier ratio, posing significant challenge for the subsequent RANSAC-PnP step. To improve the inlier ratio, for each map, we perform RANSAC-PnP to obtain a set of inlier 2D-3D matches, and remove duplicated 2D-3D matches 888One 2D feature point from the query image may be matched to multiple different 3D points. We only keep one 2D-3D pair with minimal reprojection error. before combing 2D-3D matches from four maps.

4 Multi-session Consensus Set Maximization

In a scene with multiple reference sequences such as the 4seasons[1] dataset, multi-session maps can be generated for the scene. In this section, we introduce a consensus set maximization method to fuse localization results using these multi-session maps.

A simple method to use multi-session maps is to combine 2D-3D matches from multi-session maps to perform RANSAC-PnP. Although it works for complementary multi-session results, the performance is limited since the best localization result from one-session map can be worsened by 2D-3D matches from other-session maps. Using combined 2D-3D matches for RANSAC-PnP would prone to produce averaged (or one dominant) 6-DoF pose, for poses from multi-session maps.

Another method to use multi-session maps is to find the best combination of multi-session maps using trial-and-error [27]. It works for multi-session maps captured in different times of a day, in small-scale room environment. For the 4seasons dataset, the large-scale multi-session maps are captured in different times of a year, making it hard to find the best combination.

The key idea of our method is finding the best 6-DoF pose from multi-session maps for each query image through an optimization process, rather than combining 2D-3D matches from multi-session maps to perform RANSAC-PnP. The proposed method consistently produces optimal results across different datasets.

4.1 Problem Definition

Let denotes a query image sequence up to timestamp . For each query image , we have candidate 6-DoF poses , one from a reference map.

We aim to find the most accurate 6-DoF pose for .

4.2 Consensus Set Maximization

For query images and , we can obtain a set of 2D-2D matches via feature tracking in SLAM. Given query poses and , we can compute the number of inlier 2D-2D matches subject to and , by thresholding the Sampson errors [28] of 2D-2D matches . We denote the number of inlier 2D-2D matches as and use it to measure the compatibleness of poses and . We assume the best poses would generate the largest score , i. e., finding the largest consensus set. Though the problem is non-convex [29] and the original contains outlier matches, we found the score describes the correctness of poses well. The consensus set maximization problem is solved by a integer programming process.

4.3 Integer Programming

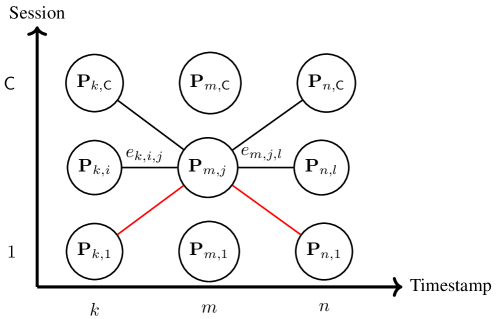

We use three frames to describe our integer programming process, as is given in Figure 4. We first build a densely connected graph for consecutive timestamps. For nodes and , there is an edge connecting them. The score of edge is (abbrev. for clarity), which is the number of inlier 2D-2D matches using poses and . Our integer programming process is given by,

| (1) | ||||

| (2) | ||||

| (3) |

Eq.(2) enforces that exactly one edge should be selected for the connectivity of the graph. Eq.(3) enforces the following relationship: 1) if node is selected, there must be an edge connecting to other nodes in consecutive frames; 2) if node is NOT selected, all edges connecting to other nodes in consecutive frames should be removed.

There are a maximum 999If one map session fails to estimate a camera pose, the number of edges between consecutive timestamps is smaller than . of edges (optimization variables) in our integer programming. The solution can be found very efficiently by off-the-shelf toolboxes. After optimization, nodes (poses) connected by edges are deemed as the best poses.

4.4 Experimental Result

We validate the proposed consensus set maximization method on the oldtown and cityloop datasets, from the 4seasons dataset. The number of image sequences with ground-truth poses is four and three, for the oldtown and cityloop dataset, respectively. For each dataset, one sequence is used for testing and the rest sequences are used for mapping. The localization results are separately given in Table 2 and Table 3. The results show that the proposed consensus set maximization method is robust and consistently shows good performance on the two datasets. In contrast, the simple method of merging 2D-3D matches does not work on the cityloop dataset. The reason is that localization results using multi-session maps are not compatible, on the cityloop dataset.

| Trans.Err. | Ref_0 | Ref_1 | Ref_2 | 2D-3D Merge | Int. Prog. |

|---|---|---|---|---|---|

| 0.05m | 36.60% | 39.80% | 43.20% | 51.00% | 49.80% |

| 0.1m | 60.80% | 68.40% | 69.20% | 78.30% | 78.40% |

| 0.2m | 79.30% | 87.00% | 85.60% | 94.10% | 93.20% |

| 0.5m | 89.20% | 93.50% | 92.30% | 96.60% | 96.50% |

| 1.0m | 92.00% | 95.50% | 94.10% | 97.80% | 97.80% |

| 3.0m | 93.70% | 96.50% | 95.00% | 98.30% | 98.30% |

| Trans. Err. | Ref_0 | Ref_1 | 2D-3D Merge | Int. Prog. |

|---|---|---|---|---|

| 0.05m | 69.20% | 74.20% | 73.30% | 79.20% |

| 0.1m | 91.80% | 88.00% | 87.30% | 92.30% |

| 0.2m | 98.00% | 94.70% | 94.20% | 97.70% |

| 0.5m | 99.60% | 99.20% | 99.10% | 99.70% |

| 1.0m | 99.90% | 99.50% | 99.60% | 100.00% |

| 3.0m | 100.00% | 99.70% | 99.70% | 100.00% |

We run our method on a PC equipped with a 2TB RAM and an AMD EPYC 7742 CPU. The running time of our method is given in Table 4. The implementation is based on Python and uses CBC solver for optimization. The running time can be significantly reduced using C++.

| Dataset | Total time | Single frame time | |

|---|---|---|---|

| Oldtown | 24.4s | 7.4ms | |

| Cityloop | 70.7s | 6.9ms |

5 Pose Refinement

In this section, we show how to combine global information from Sec. 4 and local information from SLAM to refine query poses.

5.1 Problem Definition

For each query image , we have obtained its best global pose from the multi-session localization step (Sec. 4). Considering that the accuracy (even being an outlier) of varies for different frames, we associate a weight variable for each , describing the weight of in our optimization process. We also retrieve 2D-3D matches corresponding to , and denote the reprojection error at pose as .

For consecutive frames, we can obtain their local relative poses and 2D-2D matches through SLAM. Given query poses and , we denote the two-view matching (Sampson) error as .

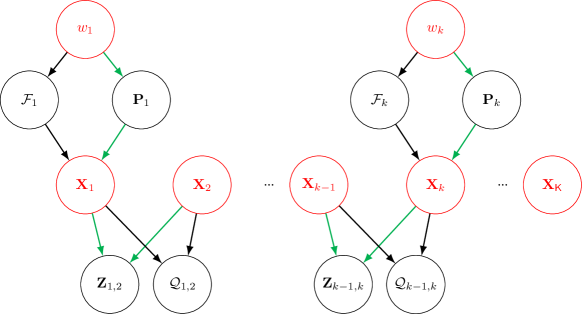

Given query images up to timestamp , we aim to solve query poses and latent weights . The overall framework of our optimization problem is given in Fig. 5.

Our objective function is given by,

| (4) |

where denotes the pose error, and denotes the relative pose between and , and are two weights to balance the 2D-3D reprojection error and 2D-2D Sampson error.

Remark 1

If no global pose can be obtained at frame , one can simply remove , , and .

Remark 2

One can also add skip constrains and ().

5.2 Robust Optimization

Inspired by [30], we use Expectation-Maximization (EM) algorithm to solve Eq.(5.1). The latent weights and query poses are alternatively optimized until convergence (the change of is smaller than a threshold).

Expectation Step

We aim to find the best while fixing , the Expectation step becomes,

| (5) |

where the regularization term is added to avoid a trivial solution of and is a constant.

Eq.(5) is convex, and the minimum can be found by differentiating it with respect to and setting the gradient to zero. The updating of at the -th iteration using query pose is given by,

| (6) |

Maximization Step

We aim to find the best while fixing , the updating of at the -th iteration using weights is given by,

| (7) |

Initialization

To initialize the iterative E-M updating process, we select a good subset of poses from as seeds and initialize other query poses using their most recent seeds and relative SLAM poses, resulting in . A query pose with the number of 2D-3D matches () larger than a pre-defined threshold is deemed as a seed.

5.3 Experimental Result

Based on the global localization results of Sec. 4.4, we run the pose refinement step. Our refinement objective function has four terms (Eq. (5.1)) and we conduct following ablation studies to validate the effectiveness of each term. Specifically,

-

1.

Pose Graph Optimization (PGO). By removing global 2D-3D reprojection term and 2D-2D Sampson term , the objective function becomes PGO.

-

2.

PGO_2D2D. By removing global 2D-3D reprojection term , the objective function becomes PGO with 2D-2D sampson term.

-

3.

PGO_2D3D. By removing 2D-2D Sampson term , the objective function becomes PGO with 2D-3D reprojection term.

-

4.

PGBA. We keep all four terms and denote the objective function as PGBA (PGO with Bundle Adjustment).

The results of the above four methods are given in Table 5 and 6. For the oldtown dataset, both PGO_2D2D and PGO_2D3D outperforms PGO, showing the effectiveness of adding global 2D-3D reprojection and 2D-2D Sampson terms. The best performance is obtained by combining the two terms, resulting in PGBA. For the cityloop dataset, all methods have similar performance probably because this dataset is saturated.

| Trans. Err. | Baseline | PGO | PGO_2D2D | PGO_2D3D | PGBA |

|---|---|---|---|---|---|

| 0.05m | 49.80% | 55.10% | 56.20% | 56.80% | 56.90% |

| 0.1m | 78.40% | 83.80% | 84.30% | 85.20% | 85.30% |

| 0.2m | 93.30% | 96.80% | 96.80% | 97.20% | 97.30% |

| 0.5m | 96.50% | 98.50% | 98.40% | 98.40% | 98.40% |

| 1.0m | 97.80% | 99.00% | 99.00% | 99.00% | 99.00% |

| 3.0m | 98.30% | 99.30% | 99.30% | 99.30% | 99.30% |

| Trans. Err. | Baseline | PGO | PGO_2D2D | PGO_2D3D | PGBA |

|---|---|---|---|---|---|

| 0.05m | 79.20% | 81.00% | 81.00% | 81.30% | 81.40% |

| 0.1m | 92.30% | 93.00% | 93.00% | 93.10% | 93.00% |

| 0.2m | 97.70% | 98.20% | 98.20% | 98.60% | 98.60% |

| 0.5m | 99.70% | 99.70% | 99.70% | 99.70% | 99.70% |

| 1.0m | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% |

| 3.0m | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% |

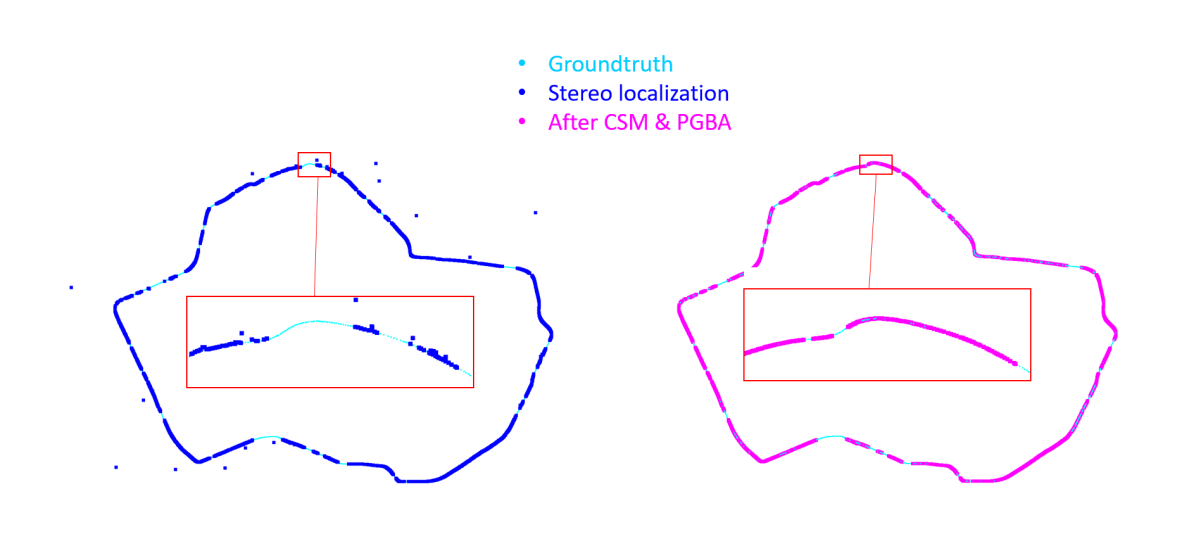

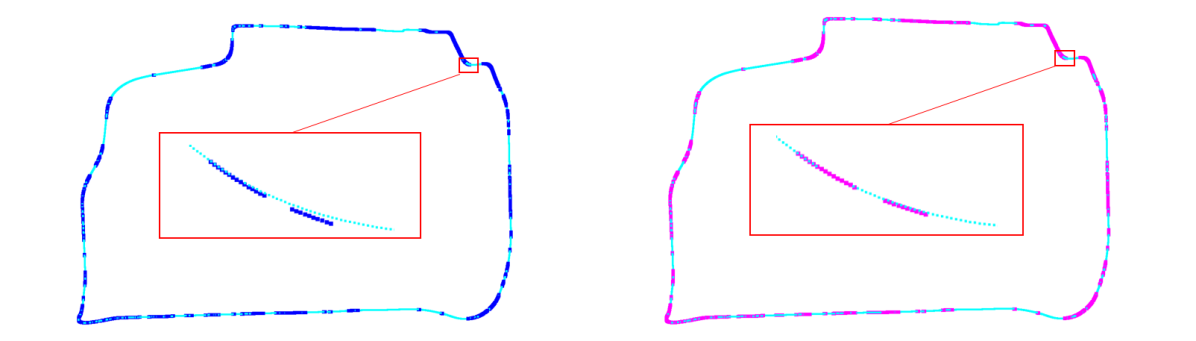

We show estimated query positions before and after consensus set maximization and pose refinement steps in Fig. 6. It clearly shows that wrong estimated query positions are refined to correct positions, resulting in a smooth trajectory after refinement.

Our pose refinement step is implemented using C++, and the processing time is given in Table 7. For time-critical applications, methods PGO and PGO_2D3D are good candidates.

| Dataset | PGO | PGO_2D2D | PGO_2D3D | PGBA | |

|---|---|---|---|---|---|

| Oldtown | 26.7s | 2486.7s | 102.0s | 2245.0s | |

| Cityloop | 21.0s | 2263.8s | 93.9s | 2677.8s |

6 Pose Polishing

Note that the global pose used in the pose refinement (Sec.5) step is from one of the multi-sessions localization results. Using optimized query poses from Sec.5, we can unify information from multi-sessions to obtain a better , followed by another round of consensus set maximization and pose refinement as described in Sec.4 and Sec.5. This final pose polishing step is performed (optionally) only once.

As discussed in Sec.4, simply combining all 2D-3D matches from multi-session maps would produce an averaged (or one dominant) pose over multi-session poses. Using the accurate as an anchor, we first compute reprojection errors of all 2D-3D matches from multi-session maps and then prune out outlier 2D-3D matches. Remaining 2D-3D matches are used to compute the final global pose for the final round of pose fusion and refinement. This guided pose polish step could further improve the final pose accuracy for better application.

6.1 Experimental Result

Based on estimated poses from Sec. 5.3, we test the pose polish module. We use refined poses to filter 2D-3D matches and recompute the pose for each session. A second round of consensus set maximization and pose refinement is performed on these poses. The results are given in Table 8. For both datasets, polishing helps to further improve pose accuracies, as the Recall@0.05m increases.

| Oldtown | Cityloop | |||

|---|---|---|---|---|

| Trans. Err. | Baseline | Polish | Baseline | Polish |

| 0.05m | 56.90% | 59.70% | 81.40% | 82.10% |

| 0.1m | 85.30% | 85.20% | 93.00% | 93.30% |

| 0.2m | 97.30% | 97.20% | 98.60% | 98.60% |

| 0.5m | 98.40% | 98.50% | 99.70% | 99.70% |

| 1.0m | 99.00% | 99.00% | 100.00% | 100.00% |

| 3.0m | 99.30% | 99.30% | 100.00% | 100.00% |

7 Conclusions

We have proposed a method, named CyberLoc, for robust and accurate visual localization under challenging conditions. The key idea is to build a robust map for each reference sequence, find the best global camera pose with respect to multi-session maps, and combine global localization and local SLAM to refine camera poses. The proposed robust mapping and localization, consensus set maximization and pose refinement facilitates the success of our method, to be used in scenes with illumination and environmental changes. Extensive experiments on 4seasons datasets demonstrate the high accuracy and robustness of our method.

References

- [1] Wenzel, P., Wang, R., Yang, N., Cheng, Q., Khan, Q., von Stumberg, L., Zeller, N., Cremers, D.: 4seasons: A cross-season dataset for multi-weather slam in autonomous driving. In: DAGM German Conference on Pattern Recognition, Springer (2020) 404–417

- [2] Strisciuglio, N., Tylecek, R., Blaich, M., Petkov, N., Biber, P., Hemming, J., van Henten, E., Sattler, T., Pollefeys, M., Gevers, T., et al.: Trimbot2020: An outdoor robot for automatic gardening. In: ISR 2018; 50th International Symposium on Robotics, VDE (2018) 1–6

- [3] Sarlin, P.E., Dusmanu, M., Schönberger, J.L., Speciale, P., Gruber, L., Larsson, V., Miksik, O., Pollefeys, M.: Lamar: Benchmarking localization and mapping for augmented reality. arXiv preprint arXiv:2210.10770 (2022)

- [4] Wang, Y., Wan, R., Yang, W., Li, H., Chau, L.P., Kot, A.C.: Low-light image enhancement with normalizing flow. arXiv preprint arXiv:2109.05923 (2021)

- [5] Liu, L., Li, H., Dai, Y., Pan, Q.: Robust and efficient relative pose with a multi-camera system for autonomous driving in highly dynamic environments. IEEE Transactions on Intelligent Transportation Systems 19(8) (2017) 2432–2444

- [6] Saputra, M.R.U., Markham, A., Trigoni, N.: Visual slam and structure from motion in dynamic environments: A survey. ACM Computing Surveys (CSUR) 51(2) (2018) 1–36

- [7] Xie, E., Wang, W., Yu, Z., Anandkumar, A., Alvarez, J.M., Luo, P.: Segformer: Simple and efficient design for semantic segmentation with transformers. Advances in Neural Information Processing Systems 34 (2021) 12077–12090

- [8] Arandjelovic, R., Gronat, P., Torii, A., Pajdla, T., Sivic, J.: Netvlad: Cnn architecture for weakly supervised place recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. (2016) 5297–5307

- [9] Liu, L., Li, H., Dai, Y.: Stochastic attraction-repulsion embedding for large scale image localization. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. (2019) 2570–2579

- [10] Ge, Y., Wang, H., Zhu, F., Zhao, R., Li, H.: Self-supervising fine-grained region similarities for large-scale image localization. In: European conference on computer vision, Springer (2020) 369–386

- [11] DeTone, D., Malisiewicz, T., Rabinovich, A.: Superpoint: Self-supervised interest point detection and description. In: Proceedings of the IEEE conference on computer vision and pattern recognition workshops. (2018) 224–236

- [12] Schonberger, J.L., Frahm, J.M.: Structure-from-motion revisited. In: Proceedings of the IEEE conference on computer vision and pattern recognition. (2016) 4104–4113

- [13] Chen, H., Luo, Z., Zhang, J., Zhou, L., Bai, X., Hu, Z., Tai, C.L., Quan, L.: Learning to match features with seeded graph matching network. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. (2021) 6301–6310

- [14] Shi, Y., Cai, J.X., Shavit, Y., Mu, T.J., Feng, W., Zhang, K.: Clustergnn: Cluster-based coarse-to-fine graph neural network for efficient feature matching. arXiv preprint arXiv:2204.11700 (2022)

- [15] Suwanwimolkul, S., Komorita, S.: Efficient linear attention for fast and accurate keypoint matching. arXiv preprint arXiv:2204.07731 (2022)

- [16] Thomee, B., Shamma, D.A., Friedland, G., Elizalde, B., Ni, K., Poland, D., Borth, D., Li, L.J.: Yfcc100m: The new data in multimedia research. Communications of the ACM 59(2) (2016) 64–73

- [17] Bian, J.W., Wu, Y.H., Zhao, J., Liu, Y., Zhang, L., Cheng, M.M., Reid, I.: An evaluation of feature matchers for fundamental matrix estimation. arXiv preprint arXiv:1908.09474 (2019)

- [18] Liu, L., Liyuan, P., Wei, L., Qichao, X., Yuxiang, W., Jiangwei, L.: Fgcnet: Fast graph convolution for matching features. ISMAR (2022)

- [19] Peng, S., He, Z., Zhang, H., Yan, R., Wang, C., Zhu, Q., Liu, X.: Megloc: A robust and accurate visual localization pipeline. arXiv preprint arXiv:2111.13063 (2021)

- [20] Zou, D., Tan, P.: Coslam: Collaborative visual slam in dynamic environments. IEEE transactions on pattern analysis and machine intelligence 35(2) (2012) 354–366

- [21] Pire, T., Fischer, T., Castro, G., De Cristóforis, P., Civera, J., Berlles, J.J.: S-ptam: Stereo parallel tracking and mapping. Robotics and Autonomous Systems 93 (2017) 27–42

- [22] Tang, S., Zhang, J., Zhu, S., Tan, P.: Quadtree attention for vision transformers. arXiv preprint arXiv:2201.02767 (2022)

- [23] Zhou, Q., Sattler, T., Leal-Taixe, L.: Patch2pix: Epipolar-guided pixel-level correspondences. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. (2021) 4669–4678

- [24] Sarlin, P.E., DeTone, D., Malisiewicz, T., Rabinovich, A.: Superglue: Learning feature matching with graph neural networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. (2020) 4938–4947

- [25] Cheng, X., Zhong, Y., Harandi, M., Dai, Y., Chang, X., Li, H., Drummond, T., Ge, Z.: Hierarchical neural architecture search for deep stereo matching. Advances in Neural Information Processing Systems 33 (2020)

- [26] Pless, R.: Using many cameras as one. In: 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2003. Proceedings. Volume 2., IEEE (2003) II–587

- [27] Labbé, M., Michaud, F.: Multi-session visual slam for illumination invariant localization in indoor environments. arXiv preprint arXiv:2103.03827 (2021)

- [28] Hartley, R., Zisserman, A.: Multiple view geometry in computer vision. Cambridge university press (2003)

- [29] Li, H.: Consensus set maximization with guaranteed global optimality for robust geometry estimation. In: 2009 IEEE 12th International Conference on Computer Vision, IEEE (2009) 1074–1080

- [30] Lee, G.H., Fraundorfer, F., Pollefeys, M.: Robust pose-graph loop-closures with expectation-maximization. In: 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, IEEE (2013) 556–563

- [31] Agarwal, S., Mierle, K., Team, T.C.S.: Ceres Solver (3 2022)