Data-driven discovery of governing equations for coarse-grained heterogeneous network dynamics

Abstract: We leverage data-driven model discovery methods to determine the governing equations for the emergent behavior of heterogeneous networked dynamical systems. Specifically, we consider networks of coupled nonlinear oscillators whose collective behaviour approaches a limit cycle. Stable limit-cycles are of interest in many biological applications as they model self-sustained oscillations (e.g. heart beats, chemical oscillations, neurons firing, circadian rhythm). For systems that display relaxation oscillations, our method automatically detects boundary (time) layer structures in the dynamics, fitting inner and outer solutions and matching them in a data-driven manner. We demonstrate the method on well-studied systems: the Rayleigh Oscillator and the Van der Pol Oscillator. We then apply the mathematical framework to discovering low-dimensional dynamics in networks of semi-synchronized Kuramoto, Rayleigh, Rossler, and Fitzhugh-Nagumo oscillators, as well as heterogeneous combinations thereof. We also provide a numerical exploration of the dimension of collective network dynamics as a function of several network parameters, showing that the discovery of coarse-grained variables and dynamics can be accomplished with the proposed architecture.

1 Introduction

Data-driven modeling paradigms are an increasingly important tool for the characterization of behavior in complex, high-dimensional systems. For instance, Reduced order models (ROMs) [1] aim to exploit the ubiquitous observation from experiment and/or computation that meaningful input/output signals in high-dimensional systems are encoded in low-dimensional patterns of dynamic activity. However, many complex systems of interest exhibit behavior across multiple temporal or spatial scales, which poses unique challenges for modeling and predicting their behavior. It is often the case that while we are primarily interested in macroscale phenomena, the microscale dynamics must also be modeled and understood, as they play a central role in driving the macroscale behavior. This can make dealing with multiscale systems particularly difficult unless the time scales are disambiguated in a principled way. There is a significant body of research focused on modeling multiscale systems to produce coarse-grained descriptions: notably the equation-free (EF) method[2] for linking scales and the heterogeneous multiscale modeling (HMM) framework [3]. Additional work has focused on testing for the presence of multiscale dynamics so that analyzing and simulating multiscale systems is more computationally efficient [4, 5]. Many of the same issues that make modeling multiscale systems difficult can also present challenges for model discovery and system identification. These challenges motivate the development of specialized methods for performing model discovery on problems with multiple time scales, taking into account the unique properties of multiscale systems. Specifically, we integrate dimensionality-reduction, sparse regression, and robust statistics to discover governing evolution equations for coarse-grained heterogeneous network dynamics as well as to explicitly disambiguate and model multiscale temporal phenomena (See Fig. 3).

The discovery and pairing of coordinates and dynamics is the hallmark feature of scientific modeling. For instance, the second century Ptolemaic description of celestial mechanics featured an earth-centric coordinate systems with the retrograde planetary dynamics expressed as linear superposition of circular motion of different frequencies, i.e. the doctrine of the perfect circle. By Newton’s time in the seventeenth century, the heliocentric coordinate system was established allowing for an description of planetary motion with the inverse square law of gravitation. Thus it is not surprising that methods for jointly finding coordinates and dynamics have emerged as a central theme in modern data-driven science. Indeed, there is significant diversity in mathematical approaches for pairing a coordinate representation with an accompanying dynamical model that characterizes the evolution. Reduced order models (ROMs) accomplish this pairing by constructing coordinates from the most dominant correlated activity, or proper orthogonal decomposition (POD) [6, 1], and then Galerkin projecting on the governing equations [1]. More broadly, modal decomposition techniques, such as POD and dynamic mode decomposition (DMD) [7], approximate linear subspaces using dominant correlations in spatio-temporal data [8]. Linear subspaces, however, are highly restrictive and ill-suited to handle parametric dependencies. Attempts to circumvent these shortcomings include using multiple linear subspaces covering different temporal or spatial domains [9, 10, 11, 12], multi-resolution DMD [13], diffusion map embeddings [14, 15, 16, 17], or more recently, using deep learning to compute underlying nonlinear subspaces which are advantageous for dynamics, both linear and nonlinear [18, 19, 20, 21, 22]. These techniques represent data-driven architectures for extracting order-parameter descriptions of the underlying spatio-temporal dynamics observed [23].

Such flexibility and diversity in algorithms for the joint discovery of coordinates and dynamics has allowed for many new representations of physical systems, including in the networked dynamical systems of interest here. Specifically, we are interested in modeling the emergent coarse-grained heterogenous behavior that arises in high-dimensional, networked dynamical systems whose underlying dynamics are strongly nonlinear. In particular, we consider networks of coupled nonlinear oscillators whose collective behavior approaches relaxation-oscillation limit cycle dynamics. Recently, oscillatory phenomena have been coarse-grained into learned and/or collective coordinates (intrinsic coordinates) for modeling their evolution [24, 25, 26, 27, 28, 29]. Gottwald originally introduced collective coordinates to describe coupled phase oscillators of the Kuramoto model [25], and then extended the mathematical framework to reduce infinite-dimensional stochastic partial differential equations (SPDEs) with symmetry to a set of finite-dimensional stochastic differential equations which describe the shape of the solution and the dynamics along the symmetry group [26]. Shlizerman et al [27] coarse-grained using coordinates associated with the dominant correlated activity, or singular value decomposition, and projected into a framework of neural activity measures. Kemeth et al [24] instead learn collective dynamics on a slow manifold, after initial transients have died out, which can be approximated through a learned model based on local spatial partial derivatives in the emergent coordinates. These works are closely related to the present work, as their aims are well-aligned with the goals of our method. However, there are key differences. Specifically, we consider coarse-graining on a system for which regions of fast activity are critical for characterizing the dynamics, i.e. the dynamics produce temporal boundary layers. In particular, we wish to learn the dominant balance dynamics that occur in both fast and slow temporal regimes on network level dynamics.

In this work, we leverage dimensionality-reduction, sparse regression, and robust statistics to discover coarse-grained models of heterogeneous networked dynamical systems. We consider networks consisting of several types of oscillators and explore how changing the network structure influences the collective dynamics. Nishikawa et al [29] and Motter et al [30] consider synchronization of networks, including scale free and small world, as a function of the homogeneous or heterogeneous distribution of connectivity, showing that homogeneous networks are more easily synchronized. Here, we focus on aspects of the emergent dynamics that arise from the heterogeneity of the oscillators themselves. Specifically, in many regimes, the emergent dynamics exhibit two key features we aim to model: (i) nontrivial coarse-grained dynamics (relaxation-oscillations), and (ii) temporal boundary layers (rapid transient dynamics). Specifically, the proposed mathematical architecture discovers parsimonious governing evolution equations for coarse-grained network dynamics and, if desired, explicitly disambiguates emergent temporal boundary layer phenomena. The developed algorithm starts simply by projecting onto coordinates associated with the dominant correlated activity, i.e. by taking the singular value decomposition. We then identify rapid transition regions associated with transients in the time dynamics of the dominant modes by applying the sparse identification of nonlinear dynamics (SINDy)) [18] algorithm with data-trimming [31], which is a standard analysis tool from robust statistics. The governing equations produced by this stage of the method can be used to describe the full limit cycle. However, if a more detailed model is desired, the results of trimming are used to segment the coarse-grained trajectory, separating regions of rapid transition from the slow-temporal field, which is equivalent to the slow center-manifold considered previously [24, 25]. We then utilize the SINDy framework again to discover parsimonious governing equations for each region of the coarse-grained trajectory, constructing a hybrid model for the overall dynamics. Here we apply the full method to a network of coupled Fitzhugh-Nagumo oscillators, which produce rapid (spiking) behavior. The strongly nonlinear nature of the underlying agents leads to the existence of temporal boundary layers in the collective dynamics. The overall architecture allows for a data-driven approach to characterizing dominant-balance physics [23, 32] and singularly perturbed problems [33, 34, 35], which is a classic technique for understanding, for instance, boundary layer formation in fluid dynamics.

2 Coarse-graining and SINDy for multi-scale systems

Coarse-graining is of broad scientific interest across a diversity of disciplines. Coarse-graining seeks to determine a set of variables that successfully summarize the detailed state of a network of interacting agents. Analytical and computational approaches have long been sought for achieving this end, with the former leveraging the dynamical systems theory of center manifold reductions, normal forms, bifurcation theory and perturbation analysis. Indeed, the seminal review of Cross and Hohenberg [23] detail the many mathematical architectures available for deriving order-parameter descriptions, or coarse-grained models, that characterize the dynamics. More recent computational approaches include statistical and machine learning algorithms [36, 37] for network clustering, principal component analysis, and, for instance, algorithms for detecting density (k-core decomposition). The diversity of methods developed allow for a new coordinate representation for the collective dynamics. This representation can then be leveraged by model discovery methods such as SINDy to determine the dynamics on the coarse-grained coordinates.

SINDy recovers parsimonious representations of the dynamics from measurement data by sparse regression to a library of candidate models [18]. Thus the goal of SINDy is to promote a parsimonious representation of the complex system by traditional and interpretable governing equations. Consider the nonlinear dynamical system, which will ultimately be our coarse-grained representation,

| (1) |

which is measured at time points . From measurements, we construct the matrix = . The method introduced in [18] seeks to identify via sequential threshold least-squares, which is a proxy for the sparsifying zero-norm. The set of state measurements are used to populate a library of candidate nonlinear terms , where defines the vector of all product combinations of the state components. Each candidate term should be unique, as a suitable library is crucial in the SINDy algorithm. A common strategy is start with polynomials and increase the complexity of the library with other terms, such as trigonometric functions. Thus, the system in (1) is approximated by:

| (2) |

The time derivatives , if not measured directly, can be found via numerical differentiation and should be appropriately de-noised, if necessary (low pass Butterworth, total variation regularization, etc.) [38]. The coefficients are the sparse weightings of the corresponding candidate library terms. Therefore, our regression relies on sparse regularization to enforce a parsimonious corresponding to the fewest nonlinear terms in our library that describe our dynamics well:

| (3) |

Regressing to the zero-norm is often achieved by relaxing the the one-norm. However, modern optimization frameworks are allowing for computationally tractable proxies for the zero-norm that are superior to the one-norm relaxation [31, 39]. SINDy is modular and adaptible, allowing for modifications which include the discovery of spatio-temporal systems [40, 41], multiscale dynamics [20], parametric dependencies [42], hybrid dynamical systems [43], systems subject to control [44, 45], and implicit dynamics [46, 47, 48]. Further, it can be used in the low-data limit [49, 50, 51] and with denoising architectures [52, 53]. The SINDy algorithm, which has an accompanying python package [54], is the workhorse algorithm for our coarse-graining method.

To disambiguate fast scale dynamics, our coarse-graining method exploits a key extension of SINDy: data trimming. Modifying Eq. (3) to include trimming yields:

| (4) |

where is an estimate of the number of the input data points that are considered “inliers.” See the work of Champion et al. for a thorough explanation [31]. After the SINDy with trimming algorithm is applied, the vector has lower entries where data points are harder to fit to a parsimonious model of system dynamics. In systems that exhibit multi-scale temporal dynamics, these troublesome points often correspond to spikes in the derivative. Thus, trimming effectively identifies regions of rapid change.

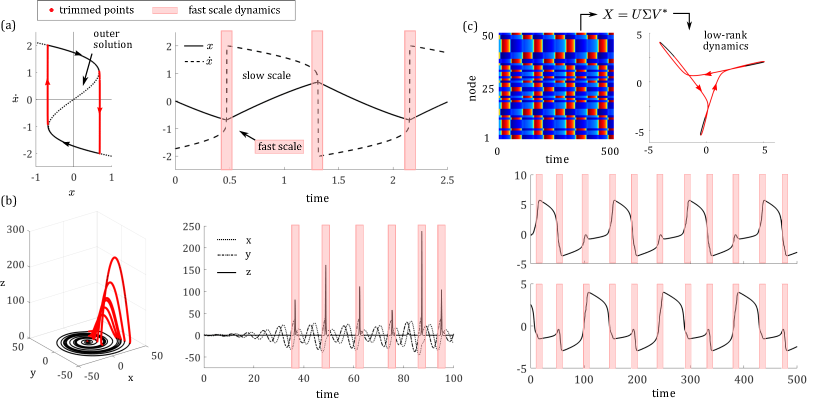

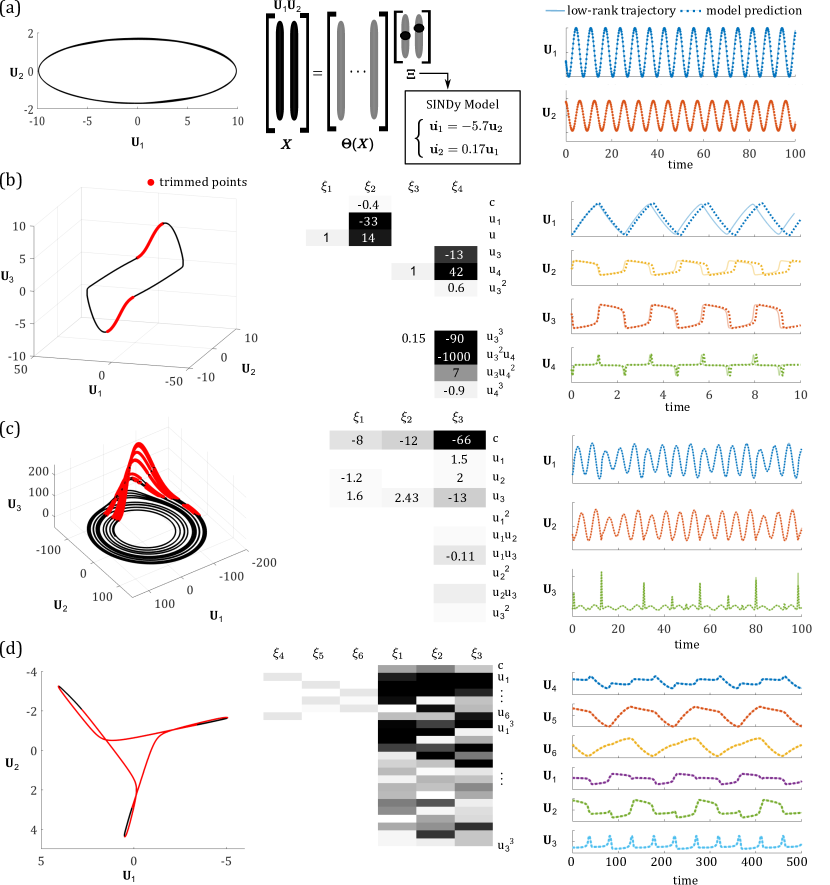

As an example, in Fig. 1a we illustrate how data-trimming partitions the limit cycle of a Rayleigh oscillator: the points which deviate from the slow-scale outer solution are trimmed, coinciding with the two boundary layers in the limit cycle. Trimming applied to Rossler dynamics on a strange attractor identifies rapid excursions in the z-component of the system (Fig. 1b). When applied to a low-rank representation of a network of spiking Fitzhugh-Nagumo (FHN) oscillators, trimming identifies regions of rapid spiking and relaxation (Fig. 1c). In each of these examples, the trimmed fraction, or , is a hyperparameter of the method that must be tuned according to the proportion of the input measurements that appear to belong to regions of fast-scale dynamics. If the fraction is set too high, then points adjacent to the fast scale dynamics will also be trimmed. Conversely, if the trimmed fraction is set too low then only a subset of the fast-scale dynamics will be identified. Another factor to consider in applying this method is that in order to effectively repurpose an outlier-detection technique from robust statistics to partition our data, the data must be clean. If the input trajectory is noisy, then data trimming will pull out the noisy data points and their neighbors, whose derivative estimates are corrupted, and, possibly, also the desired regions of rapid scale dynamics.

3 Hybrid Models for Relaxation Oscillators

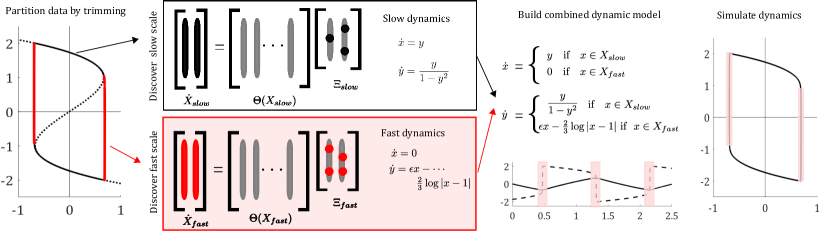

The steps of our method for data-driven identification of temporal boundary layer phenomena in relaxation oscillations are outlined on the canonical Rayleigh oscillator in Fig. 2. First, SINDy with trimming is applied to the input trajectory to partition the data. Any reasonable library can be used at this point, as we only need the output from trimming, i.e. the vector from (4), not the model coefficients. The entries of are compared to a threshold value, and points with entries below the threshold are assigned to the fast-scale group, , while points with an entry above the threshold are assigned to the slow-scale group, . Next, the locations of the points belonging to within the phase space are used to bound the region in which the fast scale dynamics will apply. The bounding box is formed around each stretch of consecutive trimmed points, and then overlapping boxes are joined resulting in one region for each segment of the limit cycle displaying fast-scale dynamics. Points outside of these bounding boxes are assigned to during simulation. Then, SINDy is applied separately to data in and . For the slow-scale group, the candidate library of functions is built using . For the fast scale group, the candidate library of functions is built using . With these two dynamic models in hand, and a partition on the phase space determining when each model applies, we can simulate the system dynamics. At each time step, before calculating an update to the system position, we check whether the point belongs to or . If , then the fast scale dynamics are used to calculate an update. If , then the slow scale dynamics are used to calculate an update. Note that the hybrid model is only valid for points on the limit cycle of the system. In this example, trajectories initiated elsewhere in the phase space either remain on the line , approach the line in the upper half plane, or approach the line in the negative half plane.

We also demonstrate this hybrid model approach on the limit cycle of a Van der Pol oscillator exhibiting relaxation-oscillations, as shown in Fig. 3. Like the Rayleigh Oscillator example, we first partition the input trajectory using trimming. Note that the trimmed points, shown in red, are those points that deviate from the outer solution predicted by asymptotic approximation theory, shown by a dashed line. We then use the set of trimmed points to bound the regions of phase space governed by fast scale dynamics, and the remaining phase space is considered to be governed by slow scale dynamics. Next we apply SINDy to data points from the slow scale scale region, fitting the dynamics to a library that depends on and includes rational functions. In this case, we fit the dynamics of the two regions of fast scale dynamics separately, calling those in the lower half plane and those in the upper half plane . For both subsets of data, we fit the dynamics to a library of cubic polynomials in and . We treat the two segments of fast dynamics separately because fitting both regions at once results in an average between the two desired models that does not approximate either branch fully. We note, however, that the resulting models for and reported in Fig. 3, can easily be transformed to match each other by applying an absolute value sign to . If such symmetries are clear from viewing the limit cycle of a system, then the library of candidate functions used in SINDy could be built using polynomials of , and both regions of fast scale dynamics could be fit together. Again simulation of the system is accomplished by checking whether the current state belongs to or , making an update with the appropriate dynamic rules, and then iterating these two steps until the desired time span is covered.

4 Coarse-graining heterogeneous spatio-temporal dynamics

For our coarse-graining method, a reduced-order basis is first derived through the singular value decomposition [55, 56] (Fig. 4) and second, SINDy is deployed to discover the dynamics in this reduced order basis (Fig. 5). If the low-rank dynamics exhibit multiscale temporal behavior, then SINDy with trimming can be used instead to identify regions of fast-scale dynamics and fit a hybrid model to the data, facilitating analysis of the dominant balance physics in distinct dynamic intervals of the collective limit cycle. This method allows us to tackle coarse-graining for networks displaying heterogenous spatio-temporal dynamics that are not tractable with analytical approaches. For very high-dimensional problems, randomized algorithms [57] and compressed decompositions [58] can be exploited in order to compute the low-rank subspaces more efficiently.

The systems that we consider here are networks of coupled oscillators of the form

| (5) |

where is a vector of state variables, or nodes, and is a vector of functions encoding the dynamics on each node. The dynamics depend on intrinsic properties of each node as well as coupling to neighboring nodes. Neighbors are defined by a symmetric, unweighted adjacency matrix, , where the entries if node and node are connected and otherwise. Here we consider Erdos-Renyi adjacency matrices, so may be sparse or highly connected as we tune the edge probability, . The functions could take on any arbitrary form, but we limit our attention to a selection of well-known oscillators.

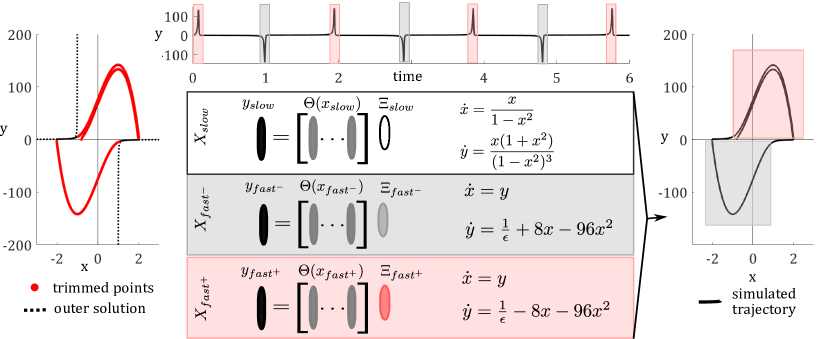

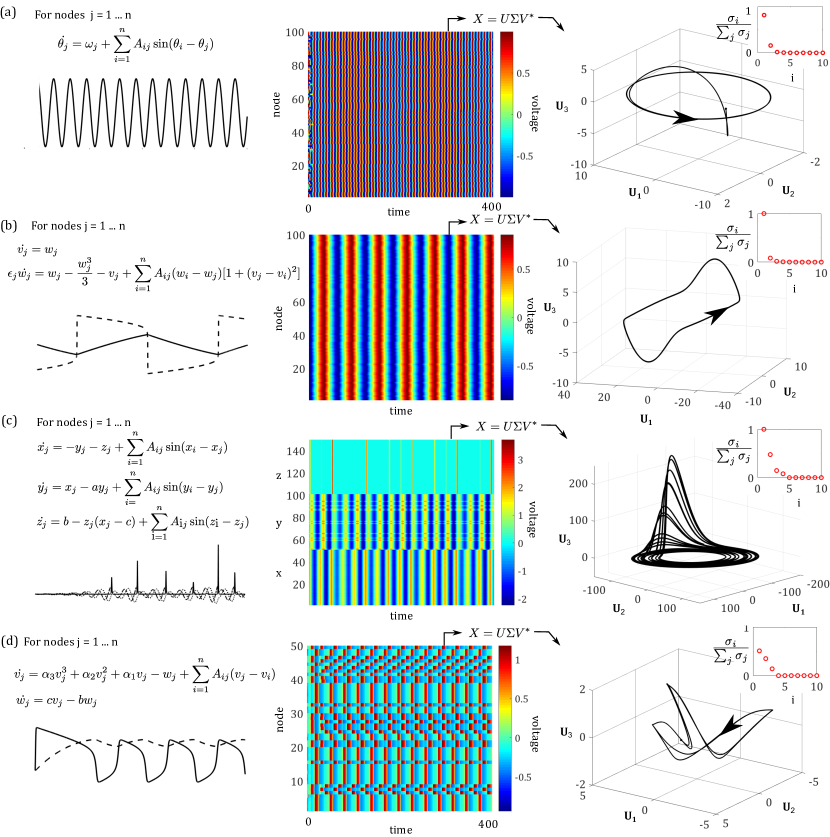

Before applying our method to heterogeneous systems, we establish that it is producing reasonable results by considering a network consisting of Kuramoto oscillators with sinusoidal coupling. For the ubiquitous Kuramoto oscillator, the phase of node , denoted , is governed by

| (6) |

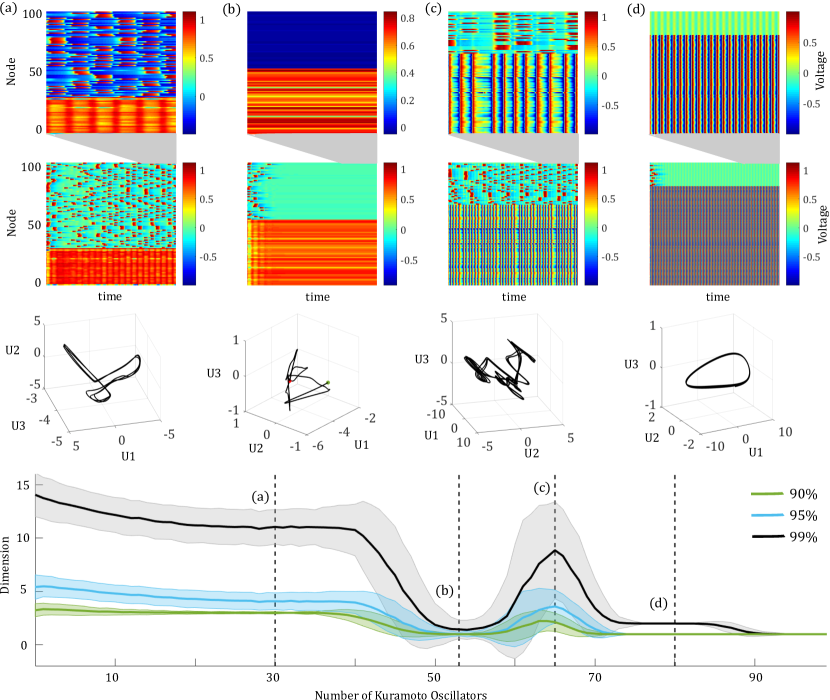

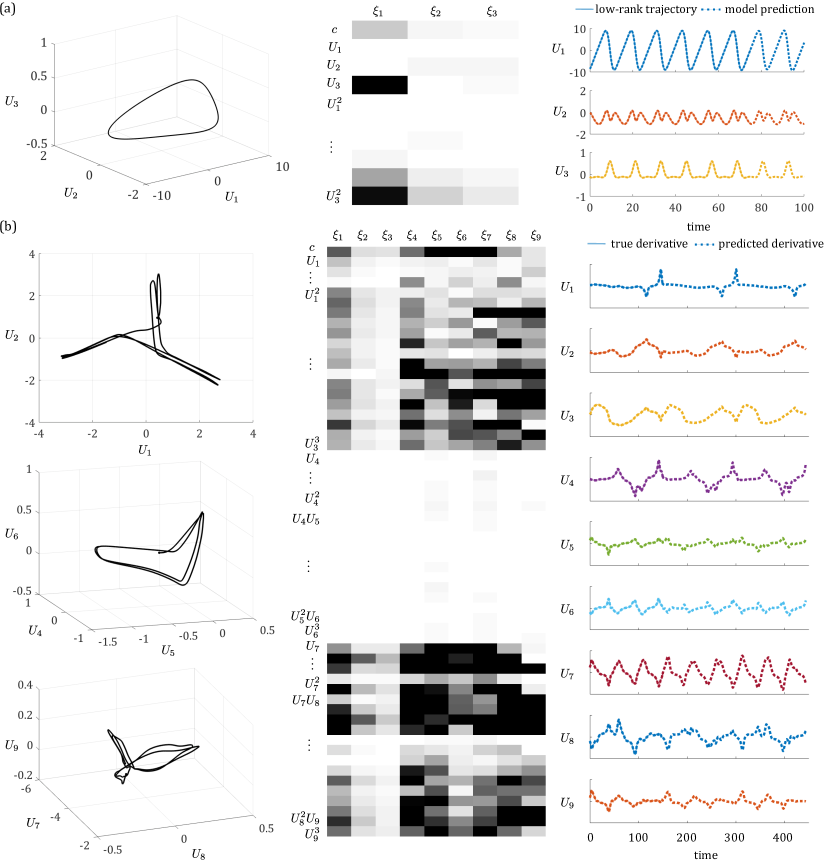

The key parameters besides the aforementioned adjacency matrix, are the connectivity strength , and the intrinsic frequency . For each node in the system, is drawn from a uniform distribution. Kuramoto oscillators have been widely studied and exhibit a well-known phase transition from asynchrony below a critical coupling threshold to partial synchrony above this threshold. The system continues to synchronize more fully as the coupling parameter is further increased. Panel (a) of Figure 4 illustrates the behavior of a single Kuramoto oscillator as well as the full system dynamics for a network of Kuramoto oscillators. Note that we are visualising the cosine of the phase. Taking the singular value decomposition and projecting onto the first few modes reduces the system dynamics to a stable elliptical limit cycle. Retaining two of these modes and applying the SINDy method to the 2D limit cycle produces a simple and accurate set of governing equations which can be used to simulate the system dynamics (Fig. 5a, final column). With this friendly, linear collective behavior no trimming is required to recover useful model equations.

Next, we consider a slightly more complicated system: Rayleigh oscillators with nonlinear coupling. In this case each node follows the second order governing equation

| (7) |

where is drawn from a uniform distribution and again is the connectivity parameter. The nonlinear coupling strategy, termed Haken-Kelso-Bunz (HKB) coupling, was introduced to study human bimanual experiments and applied to data capturing the synchronization of people rocking chairs by Alderisio et al. [59]. In numerical simulations, we observe partial synchrony in these systems when there is sufficient coupling (Fig. 4b). This collective behavior is captured by a nonlinear limit cycle in the low-dimensional representation. Applying SINDy to the 4D projection with trimming pulls out regions of rapid relaxation along the direction of and produces a model that is linear in and and cubic in and . Here each oscillator has an intrinsic on the order of . The multi-scale nature of the collective system dynamics is evident in the fact that the coefficients learned for modes and are much higher than those learned for and . With a distribution of values centered closer to , we would see a smaller gap between scales as . Comparing the trajectory predicted by the model against the true low-rank trajectory of the system (final column of Fig. 5), we see that after one lap of the limit cycle, the prediction gets out of phase with the true trajectory. The SINDy model is attracted to a smaller stable limit cycle parallel to the true dynamics. Applying this method to systems with larger values of , i.e. systems for which the boundary layers are less sharp, results in model dynamics that stay on track for longer time.

We also demonstrate our method on a network of Rossler oscillators with sinusoidal coupling. In this case each node has 3 state variables governed by

| (8) |

The parameters are uniform across all nodes in the network for a given run. In the example shown in Fig. 4c, . The trajectory of each node is drawn to a similarly shaped strange attractor, however the attractors may be translated in phase space depending on the initial condition of the node. The nodes of the network largely synchronize phases and frequencies in their component, synchronize frequencies of their component, and partially synchronize the timing of excursions in the component. Projecting onto the first three singular vectors, the system lands on an attractor that resembles a Rossler attractor with a few differences. Specifically, the attractor is tilted slightly with respect to the axes, so there is a background oscillation rate in . Due to this tilt, the “excursions” also disrupt the background activity along and slightly. Applying SINDy with trimming to this low-dimensional trajectory results in a linear model for and and a dense cubic equation for the dynamics of . Because the attractor for the collective dynamics is tilted in the SVD-space compared to an attractor for a single Rossler oscillator, these cross terms are necessary to capture the background frequency and the excursions. Observe that trimmed points are mostly spikes along the direction. In this case, the model predictions and the true trajectory match up very well for time steps that were used in the SVD projection initially. However, about time in Fig. 5c you can start to see that the model prediction is deviating from the true trajectory–capturing less of the excursions. Continuing to predict beyond the training data, the model still produces dynamics that resemble a collective Rossler oscillator but they lose fidelity to the true system dynamics.

The last type of oscillator that we considered were linearly coupled Fitzhugh-Nagumo oscillators. This simplified model of a neuron has two state variables: a voltage variable which displays rapid spiking and relaxation under an external stimulus and a slower recovery variable. Each FHN node is governed by the pair of equations

| (9) |

For these simulations we use parameters , , and as studied in [27]. This combination yields semicoherent spiking behavior across the network (Fig. 4d). In the raster plot of the network, we see that subsets of the nodes are firing together. The limit-cycle that emerges from projecting this onto 3 SVD modes has a distinctive triangular shape. Each edge appears to result from the spiking of a different subset of nodes. Note that for different parameter values, the system may cease spiking and settle to a steady state or spiking may be more chaotic. For this system we projected to low dimensions by taking the SVD of the voltage and the recovery variables within the nodes separately. and are the first three modes in the voltage variable. , , and are the first three modes in the recovery variable. Applying SINDy with trimming to this highly nonlinear, 6-dimensional limit cycle yields a model that is linear in the recovery variables and cubic in the voltage variables. The model predictions and the true dynamics of the system match up very well even over long time horizons (Fig. 5d).

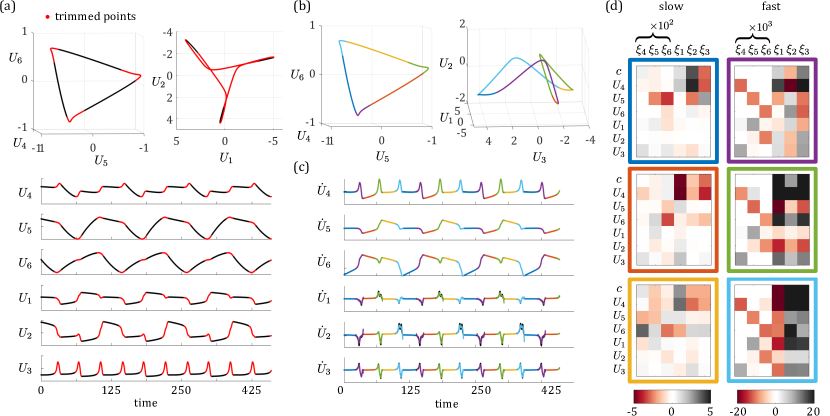

The multiscale nature of the limit-cycle in the low-rank representation of our FHN network makes it a prime candidate for building a hybrid model. After the network dynamics are reduced to 6 dimensions, we can apply the same steps as outlined for the Rayleigh and Van der Pol oscillators. First, we apply SINDy with trimming. Trimming identifies regions of fast-scale dynamics along directions through , which correspond to the three subsets of partially synchronized oscillators firing. In the low-rank dynamics these are the three corners of the triangular limit-cycle on the recovery modes and the three edges of the limit-cycle on the voltage modes (Fig. 6a). Along the limit cycle, alternating segments of fast and slow scale dynamics are used to partition phase-space into 6 regions, each of which has unique dominant-balance dynamics. So rather than just and , we have , , , , , and . These six intervals are color coded in Fig. (6b). The derivatives of the low-rank state variables in each of the six regions are fit using a linear library. We can see that the slow dynamic regions (blue, orange and yellow) show great agreement in all variables. The fast dynamic regions are also well matched in the recovery modes, 4,5 and 6. The fit is not as clean at the ends of each fast region in the voltage modes, 1, 2 and 3. The coefficients for the 6 dynamic models can be used to assess the system behavior within each region (6c).

4.1 Heterogeneous Oscillator Networks

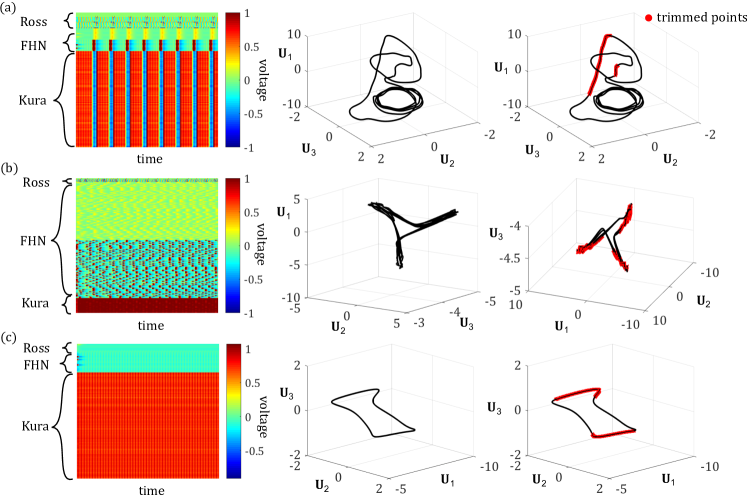

By considering networks of nodes displaying relaxation-oscillations and chaotic dynamics, we demonstrated the power of this method to disambiguate and capture spatio-temporal heterogeneity. However, we are not limited to networks consisting of a single type of oscillator. We can enhance the heterogeneity of the system by mixing two or three types of oscillators together in one network and apply the same coarse-graining method (Fig. 7 and 10).

As a case study on networks of two types of oscillators, we coupled together nodes governed by Kuramoto dynamics and nodes governed by FHN dynamics where . When coupling FHN nodes to Kuramoto nodes equations (6) and (9) apply to the appropriate subset of nodes in the system, except that FHN nodes are coupled to the sine of the Kuramoto node to maintain a consistent scale. For visualization purposes we ordered the system such that the first nodes are Kuramoto and the next are FHN. The adjacency matrices for these simulations are Erdos-Renyi graphs with connection probability . The FHN parameters are , , and with connectivity strength . The intrinsic frequencies for Kuramoto oscillators are drawn from a uniform distribution centered at , so the population would naturally oscillate much more quickly than the FHN firing rate. The connectivity strength for Kuramoto oscillators is , so this bloc also synchronizes more quickly than the FHN bloc.

With this set of parameters, we can simulate many different network instances at all possible ratios of Kuramoto to FHN oscillators. When , the system matches the all FHN network discussed previously with collective dynamics landing on a triangular limit cycle. When , the system matches the all Kuramoto network, with low rank dynamics attracted to a stable, elliptical limit cycle. For ratios in between the low-rank dynamics evolve from the triangular FHN cycle to the elliptical Kuramoto cycle (Fig. 7). When , the FHN bloc dominates the collective dynamics and the Kuramoto oscillators synchronize to what is typically the third mode of the FHN network. As the number of Kuramoto oscillators increases, this strengthens the influence of the third mode, widening the variation in this direction and rounding out the sharp corners of the triangular cycle. When the numbers of Kuramoto and FHN nodes are more equal, around , the system dynamics often collapse to a stationary state over time. Then continuing to increase into the range , there is a window of interesting mixed dynamics. In this range, the Kuramoto bloc sets the pace for a background pulse that is shared across all nodes, including the FHN nodes. However, the FHN nodes still fire periodically. The corresponding low rank dynamics always have a dominant oscillatory first mode. For some networks, the FHN recovery periods stretch the period of the Kuramoto oscillators, and so an echo of the FHN triangle is captured in modes 2 and 3 as shown in Fig. 7c. In other cases, the Kuramoto oscillators are not strongly entrained to the FHN firing, so the second mode is also oscillatory and mode 3 captures chaotic oscillations. These collective dynamics land on a figure-8 shaped attractor. Finally, increasing still further into the range causes the Kuramoto bloc to dominates as it entrains the FHN nodes to pulse at the mean Kuramoto frequency. These synchronized oscillations are well captured by a curved ellipse in the SVD projection. The collective low-rank dynamics appear almost entirely Kuramoto-like, ie. follow an elliptical limit cycle, beyond . In this example, we thoroughly explored the dynamics observed for one set of parameters on a mixed Kuramoto-FHN network. However, many other potential parameter sets could be considered and would lead to different collective dynamics.

For each ratio of to and this fixed set of parameters, we estimated the dimensions of the collective dynamics by calculating the number of SVD modes required to reconstruct the network dynamics to , , and accuracy with respect to the Frobenius norm. This estimate was repeated for 1000 network iterations at each ratio, and the mean and standard deviation were calculated (Fig. 7). The expected dynamic dimension of the network is the highest when the FHN bloc is dominant. It is low around a 50:50 ratio when the system often goes to a steady state and again above when the Kuramoto bloc fully dominates. There is a jump in dimension for the window around to where we see the system exhibiting collective dynamics that are a fairly even balance between the two styles of oscillator. These estimates of the dynamic dimension give a hint as to how many modes need to be used when fitting a SINDy model to the collective limit cycle. In Fig. 8, we apply our coarse graining method to produce models for the example in panels (a) and (d) of Fig. 7. For the network in panel Fig. 8a, which is mostly dominated by Kuramoto oscillators, though the FHN influence makes the limit cycle non-linear, our method produces a stable model that can be used to predict network dynamics over a long time period. The first mode is quite dominant, we see much larger coefficients on these dynamics compared to the other two modes. In contrast, to accurately fit the derivative for the network that is FHN dominated, we need 9 modes and a cubic library. In this case the SVD was applied to the Kuramoto and the FHN blocs separately, akin to learning coarse grained variables for subsets of the nodes in a network as in the method of Antonsen and Ott [60]. The voltage variables of the FHN nodes and the Kuramoto operate on a fast scale, whereas the recovery variables of the FHN nodes operate on a slow scale. The recovered model fits the derivative well, however it is unstable. This is an inherent challenge in the standard SINDy implementation, which can be addressed through further modifications that ensure model stability. There are several stabilization approaches introduced in [61, 50].

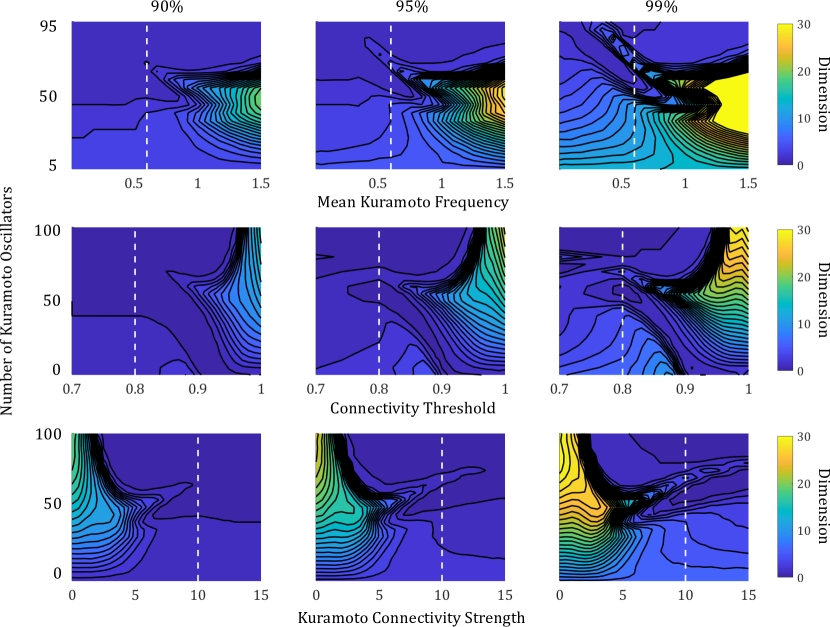

In addition to varying the ratio of Kuramoto to FHN oscillators, we also estimated the dimension of collective network dynamics as a function of other network parameters. We tested a wide range of network connectivities and differed the scale between the FHN and Kuramoto oscillations. These explorations are mapped out in Figure 9. Note that the white dotted line indicates the parameter set that was illustrated in Fig. 7: the mean Kuramoto frequency is 0.6, the connectivity threshold (1 minus the connectivity probability) on the adjacency matrix is , and the Kuramoto connectivity strength is 10, while . When constructing these figures, the dimension of a given instance of a network was estimated by simulating the network until time , taking the SVD of the system dynamics from time onwards, and then calculating the number of singular values required to retain and accuracy in reconstruction. Imposing a time delay before computing the SVD allows the system to settle onto the long-term limit cycle, so our estimate of the dimension discounts the early transient behavior of the system. For a given parameter set, 1000 instances of independent networks were simulated and the average dimension for each accuracy threshold was calculated. As we change two parameters defining the network structure and two parameters defining the Kuramoto nodes’ internal dynamics, we observe wide variation in the typical dimension of the collective dynamics.

Starting in the top row of Figure 9, we see that the dimension of the collective dynamics increases sharply with the mean Kuramoto frequency when the ratio is around 1. At higher frequencies, the intrinsic Kuramoto dynamics are on a very different time scale than the instrinsic FHN dynamics so we no longer achieve entrainment of one group by the other for equal size blocs. There is a narrow region of low dimension that persists for oscillator ratios above 50 up to a mean frequency of about 1. This is the region in which many system instances go to a steady stationary state. When the Kuramoto bloc is dominant, the dimension does not vary significantly as the mean frequency changes because the oscillators synchronize to one speed; no matter how fast or slow that speed is, the dynamics will be low-dimensional. When the FHN bloc is fully dominant, the dimension does not vary significantly with the kuramoto oscillator frequency because the dynamics of all nodes are driven almost exclusively by coupling to the FHN nodes.

In the second row of Figure 9, we vary the connection probability, , when constructing the adjacency matrix for the network. As the connectivity threshold, , increases, the coupling across all nodes is weakened until the network becomes disconnected. In the upper half of these maps, when the collective dynamics are dominated by Kuramoto oscillators, we observe the phase transition from a synchronized or semi-sychronized state with dimension less than 3 to an asynchronous state with dimensions well above 10. This transition is apparent as a black region for high and high connectivity thresholds because the dimension is increasing so rapidly that the contour lines are on top of each other. When FHN nodes make up a significant amount of the population, this rapid jump in dimension is ameliorated because as FHN nodes become disconnected, they lose the driving force needed to maintain tonic spiking instead relaxing to a stable steady state. The constant signal from the FHN nodes reduces the overall dimension of the system. When FHN nodes dominate the system (), there is a phase transition from tonic spiking behavior for connectivity thresholds in the range to a constant steady state above , again visible as a black region where contour lines are on top of each other in the plot.

Finally, we examined the dimension of network dynamics as we varied the connectivity strength amongst the bloc of Kuramoto oscillators (Fig. 9, row 3). In this case higher values of indicate stronger coupling and higher synchronization within the Kuramoto bloc. Lower connectivity leads to higher dimension collective dynamics and higher connectivity leads to lower dimension collective dynamics if the number of Kuramoto oscillators is above 1. As with the connectivity threshold, we observe a sharp phase transition between partial synchronicity and asynchronicity as a black region on the maps when . For smaller values of the transition is more gradual because the FHN modes are well-connected and offset the chaotic firing of the Kuramoto bloc. When is very small, the dynamic dimension is independent of because the FHN dynamics are fully dominant.

We also applied our coarse-graining method to networks consisting of three different types of oscillators: Kuramoto, FHN, and Rossler. With this level of heterogeneity, the possible sets of network parameters to consider quickly grows, making a thorough exploration intractable. However, for some parameter regimes and initial conditions we demonstrate that the collective dynamics are low-dimensional and can be captured by a small number of SVD modes. Possible behaviors include high frequency, low amplitude background oscillations with periodic high amplitude spikes across the full system (Fig. 10a), FHN-like semi-synchronized spiking with Rossler oscillators adding higher-frequency oscillations during the relaxation period (Fig. 10b), or a repeated high-frequency, nonlinear oscillation at a low amplitude across the Kuramoto bloc (Fig. 10c). In each of these examples, we can apply SINDy with trimming to isolate regions of differing dynamics. In panel (a) from Fig. 10, the points corresponding to the universal rapid relaxation across the system are trimmed along with the steepest portion of the collective spike. In panel (b) from Fig. 10, the low dimensional dynamics look like the now familiar triangular FHN limit cycle, but there are irregular oscillations allow the corner wings of the triangle. There portions seem to be contributed by the small bloc of Rossler oscillators in the system and are precisely the points picked out by trimming. In panel (c) from Fig. 10, trimming identifies regions of rapid change along the dimension, while is constant and is nearly constant.

5 Discussion

Modeling heterogeneous, multiscale physics remains a mathematically challenging proposition. Indeed, traditional computational techniques quickly become intractable when attempting to resolve such systems in both space and time. Emerging data-driven methods are enabling new mathematical architectures that can automate the discovery of coarse-grained coordinates and dynamics that are low-dimensional and parsimonious. This renders approximate models that allow for rapid evaluation of the heterogeneous, multiscale physics. More than that, these techniques can also be constructed to produce interpretable physics models.

We have proposed a set of algorithms for modeling heterogeneous, high-dimensional networked dynamical systems. Specifically, we have leveraged dimensionality-reduction, sparse regression, and robust statistics to discover interpretable, coarse-grained models of the underlying physics. The method further allows us to disambiguate between the resulting fast and slow scale dynamics of the system. Used in combination, these methods are able to: (i) identify low-dimensional embeddings (coordinates) on which to construct models, (ii) identify different time scales using the statistical robustification technique of data trimming, and (iii) identify interpretable parsimonious models of the coarse-grained dynamics at different timescales.

The mathematical architecture has been demonstrated with a series of numerical experiments on networked nonlinear oscillators. The oscillators, whether a single type of oscillator or a heterogeneous set of oscillators, evolve and interact to produce low-rank dynamics that often include relaxation oscillations. The literature is largely devoid of the consideration of such systems due to their complexity. However, the mathematical architecture developed here is able to extract meaningful models even with such high-dimensional heterogeneous interactions.

Acknowledgements

The authors acknowledge funding support from the Air Force Office of Scientific Research (AFOSR FA9550-19-1-0386) and from the National Science Foundation AI Institute in Dynamic Systems grant number 2112085.

References

- [1] Peter Benner, Serkan Gugercin, and Karen Willcox. A survey of projection-based model reduction methods for parametric dynamical systems. SIAM review, 57(4):483–531, 2015.

- [2] Ioannis G Kevrekidis, C William Gear, James M Hyman, Panagiotis G Kevrekidid, Olof Runborg, Constantinos Theodoropoulos, and others. Equation-free, coarse-grained multiscale computation: Enabling mocroscopic simulators to perform system-level analysis. Communications in Mathematical Sciences, 1(4):715–762, 2003.

- [3] E Weinan, Bjorn Engquist, and others. The heterogeneous multiscale methods. Communications in Mathematical Sciences, 1(1):87–132, 2003.

- [4] Gary Froyland, Georg A Gottwald, and Andy Hammerlindl. A computational method to extract macroscopic variables and their dynamics in multiscale systems. SIAM Journal on Applied Dynamical Systems, 13(4):1816–1846, 2014.

- [5] Gary Froyland, Georg A Gottwald, and Andy Hammerlindl. A trajectory-free framework for analysing multiscale systems. Physica D: Nonlinear Phenomena, 328:34–43, 2016.

- [6] Philip Holmes, John L Lumley, Gahl Berkooz, and Clarence W Rowley. Turbulence, coherent structures, dynamical systems and symmetry. Cambridge university press, 2012.

- [7] J Nathan Kutz, Steven L Brunton, Bingni W Brunton, and Joshua L Proctor. Dynamic mode decomposition: data-driven modeling of complex systems. SIAM, 2016.

- [8] Kunihiko Taira, Steven L Brunton, Scott Dawson, Clarence W Rowley, Tim Colonius, Beverley J McKeon, Oliver T Schmidt, Stanislav Gordeyev, Vassilios Theofilis, and Lawrence S Ukeiley. Modal analysis of fluid flows: An overview. AIAA Journal, 55(12):4013–4041, 2017.

- [9] Markus Dihlmann, Martin Drohmann, and Bernard Haasdonk. Model reduction of parametrized evolution problems using the reduced basis method with adaptive time-partitioning. Proc. of ADMOS, 2011:64, 2011.

- [10] Ido Bright, Guang Lin, and J Nathan Kutz. Compressive sensing based machine learning strategy for characterizing the flow around a cylinder with limited pressure measurements. Physics of Fluids, 25(12):127102, 2013.

- [11] Taddei, T., Perotto, S., and Quarteroni, A. Reduced basis techniques for nonlinear conservation laws. ESAIM: M2AN, 49(3):787–814, 2015.

- [12] Benjamin Peherstorfer and Karen Willcox. Online adaptive model reduction for nonlinear systems via low-rank updates. SIAM Journal on Scientific Computing, 37(4):A2123–A2150, 2015.

- [13] J Nathan Kutz, Xing Fu, and Steven L Brunton. Multiresolution dynamic mode decomposition. SIAM Journal on Applied Dynamical Systems, 15(2):713–735, 2016.

- [14] Ronald R Coifman, Stephane Lafon, Ann B Lee, Mauro Maggioni, Boaz Nadler, Frederick Warner, and Steven W Zucker. Geometric diffusions as a tool for harmonic analysis and structure definition of data: Diffusion maps. Proceedings of the National Academy of Sciences of the United States of America, 102(21):7426–7431, 2005.

- [15] Ronald R Coifman and Stéphane Lafon. Diffusion maps. Applied and computational harmonic analysis, 21(1):5–30, 2006.

- [16] Ronald R Coifman, Ioannis G Kevrekidis, Stéphane Lafon, Mauro Maggioni, and Boaz Nadler. Diffusion maps, reduction coordinates, and low dimensional representation of stochastic systems. Multiscale Modeling & Simulation, 7(2):842–864, 2008.

- [17] Boaz Nadler, Stéphane Lafon, Ronald R Coifman, and Ioannis G Kevrekidis. Diffusion maps, spectral clustering and reaction coordinates of dynamical systems. Applied and Computational Harmonic Analysis, 21(1):113–127, 2006.

- [18] Steven L Brunton, Joshua L Proctor, and J Nathan Kutz. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proceedings of the national academy of sciences, 113(15):3932–3937, 2016.

- [19] Bethany Lusch, J Nathan Kutz, and Steven L Brunton. Deep learning for universal linear embeddings of nonlinear dynamics. Nature communications, 9(1):1–10, 2018.

- [20] Kathleen Champion, Bethany Lusch, J Nathan Kutz, and Steven L Brunton. Data-driven discovery of coordinates and governing equations. Proceedings of the National Academy of Sciences, 116(45):22445–22451, 2019.

- [21] Kathleen P Champion, Steven L Brunton, and J Nathan Kutz. Discovery of nonlinear multiscale systems: Sampling strategies and embeddings. SIAM Journal on Applied Dynamical Systems, 18(1):312–333, 2019.

- [22] Shaowu Pan, Nicholas Arnold-Medabalimi, and Karthik Duraisamy. Sparsity-promoting algorithms for the discovery of informative Koopman invariant subspaces. (Pope 2001), 2020.

- [23] Mark C Cross and Pierre C Hohenberg. Pattern formation outside of equilibrium. Reviews of modern physics, 65(3):851, 1993.

- [24] Felix P Kemeth, Tom Bertalan, Thomas Thiem, Felix Dietrich, Sung Joon Moon, Carlo R Laing, and Ioannis G Kevrekidis. Learning emergent pdes in a learned emergent space. arXiv preprint arXiv:2012.12738, 2020.

- [25] Georg A Gottwald. Model reduction for networks of coupled oscillators. Chaos: An Interdisciplinary Journal of Nonlinear Science, 25(5):053111, 2015.

- [26] Madeleine Cartwright and Georg A Gottwald. A collective coordinate framework to study the dynamics of travelling waves in stochastic partial differential equations. Physica D: Nonlinear Phenomena, 397:54–64, 2019.

- [27] Eli Shlizerman, Konrad Schroder, and J Nathan Kutz. Neural activity measures and their dynamics. SIAM Journal on Applied Mathematics, 72(4):1260–1291, 2012.

- [28] Megan Morrison and J Nathan Kutz. Nonlinear control of networked dynamical systems. IEEE Transactions on Network Science and Engineering, 8(1):174–189, 2020.

- [29] Takashi Nishikawa, Adilson E Motter, Ying-Cheng Lai, and Frank C Hoppensteadt. Heterogeneity in oscillator networks: Are smaller worlds easier to synchronize? Physical review letters, 91(1):014101, 2003.

- [30] Adilson E Motter, Changsong Zhou, and Jürgen Kurths. Network synchronization, diffusion, and the paradox of heterogeneity. Physical Review E, 71(1):016116, 2005.

- [31] Kathleen Champion, Peng Zheng, Aleksandr Y Aravkin, Steven L Brunton, and J Nathan Kutz. A unified sparse optimization framework to learn parsimonious physics-informed models from data. IEEE Access, 8:169259–169271, 2020.

- [32] Jared L Callaham, James V Koch, Bingni W Brunton, J Nathan Kutz, and Steven L Brunton. Learning dominant physical processes with data-driven balance models. Nature communications, 12(1):1–10, 2021.

- [33] Carl M Bender and Steven A Orszag. Advanced mathematical methods for scientists and engineers I: Asymptotic methods and perturbation theory. Springer Science & Business Media, 2013.

- [34] Jirayr Kevorkian and Julian D Cole. Perturbation methods in applied mathematics, volume 34. Springer Science & Business Media, 2013.

- [35] J Nathan Kutz. Advanced differential equations: Asymptotics & perturbations. arXiv preprint arXiv:2012.14591, 2020.

- [36] Christopher M Bishop. Pattern recognition and machine learning. springer, 2006.

- [37] Kevin P Murphy. Machine learning: a probabilistic perspective. MIT press, 2012.

- [38] Floris Van Van Breugel, J Nathan Kutz, and Bingni W Brunton. Numerical differentiation of noisy data: A unifying multi-objective optimization framework. IEEE Access, 8:196865–196877, 2020.

- [39] Peng Zheng, Travis Askham, Steven L Brunton, J Nathan Kutz, and Aleksandr Y Aravkin. A unified framework for sparse relaxed regularized regression: Sr3. IEEE Access, 7:1404–1423, 2018.

- [40] Samuel H Rudy, Steven L Brunton, Joshua L Proctor, and J Nathan Kutz. Data-driven discovery of partial differential equations. Science Advances, 3(4):e1602614, 2017.

- [41] Hayden Schaeffer. Learning partial differential equations via data discovery and sparse optimization. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 473(2197):20160446, 2017.

- [42] Samuel Rudy, Alessandro Alla, Steven L Brunton, and J Nathan Kutz. Data-driven identification of parametric partial differential equations. SIAM Journal on Applied Dynamical Systems, 18(2):643–660, 2019.

- [43] Niall M Mangan, Travis Askham, Steven L Brunton, J Nathan Kutz, and Joshua L Proctor. Model selection for hybrid dynamical systems via sparse regression. Proceedings of the Royal Society A, 475(2223):20180534, 2019.

- [44] Eurika Kaiser, J Nathan Kutz, and Steven L Brunton. Sparse identification of nonlinear dynamics for model predictive control in the low-data limit. Proceedings of the Royal Society A, 474(2219):20180335, 2018.

- [45] Steven L Brunton, Joshua L Proctor, and J Nathan Kutz. Sparse identification of nonlinear dynamics with control (sindyc). IFAC-PapersOnLine, 49(18):710–715, 2016.

- [46] Niall M Mangan, Steven L Brunton, Joshua L Proctor, and J Nathan Kutz. Inferring biological networks by sparse identification of nonlinear dynamics. IEEE Transactions on Molecular, Biological and Multi-Scale Communications, 2(1):52–63, 2016.

- [47] Niall M Mangan, J Nathan Kutz, Steven L Brunton, and Joshua L Proctor. Model selection for dynamical systems via sparse regression and information criteria. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 473(2204):20170009, 2017.

- [48] Kadierdan Kaheman, J Nathan Kutz, and Steven L Brunton. Sindy-pi: a robust algorithm for parallel implicit sparse identification of nonlinear dynamics. Proceedings of the Royal Society A, 476(2242):20200279, 2020.

- [49] Markus Quade, Markus Abel, J Nathan Kutz, and Steven L Brunton. Sparse identification of nonlinear dynamics for rapid model recovery. Chaos: An Interdisciplinary Journal of Nonlinear Science, 28(6):063116, 2018.

- [50] Seth M Hirsh, David A Barajas-Solano, and J Nathan Kutz. Sparsifying priors for bayesian uncertainty quantification in model discovery. arXiv preprint arXiv:2107.02107, 2021.

- [51] Urban Fasel, J Nathan Kutz, Bingni W Brunton, and Steven L Brunton. Ensemble-sindy: Robust sparse model discovery in the low-data, high-noise limit, with active learning and control. arXiv preprint arXiv:2111.10992, 2021.

- [52] Samuel H Rudy, Steven L Brunton, and J Nathan Kutz. Smoothing and parameter estimation by soft-adherence to governing equations. Journal of Computational Physics, 398:108860, 2019.

- [53] Kadierdan Kaheman, Steven L Brunton, and J Nathan Kutz. Automatic differentiation to simultaneously identify nonlinear dynamics and extract noise probability distributions from data. arXiv preprint arXiv:2009.08810, 2020.

- [54] Alan A Kaptanoglu, Brian M de Silva, Urban Fasel, Kadierdan Kaheman, Jared L Callaham, Charles B Delahunt, Kathleen Champion, Jean-Christophe Loiseau, J Nathan Kutz, and Steven L Brunton. Pysindy: A comprehensive python package for robust sparse system identification. arXiv preprint arXiv:2111.08481, 2021.

- [55] J Nathan Kutz. Data-driven modeling & scientific computation: methods for complex systems & big data. Oxford University Press, 2013.

- [56] Steven L Brunton and J Nathan Kutz. Data-driven science and engineering: Machine learning, dynamical systems, and control. Cambridge University Press, 2022.

- [57] N Benjamin Erichson, Sergey Voronin, Steven L Brunton, and J Nathan Kutz. Randomized matrix decompositions using r. arXiv preprint arXiv:1608.02148, 2016.

- [58] N Benjamin Erichson, Steven L Brunton, and J Nathan Kutz. Compressed dynamic mode decomposition for background modeling. Journal of Real-Time Image Processing, 16(5):1479–1492, 2019.

- [59] Francesco Alderisio, Benoît G Bardy, and Mario Di Bernardo. Entrainment and synchronization in networks of rayleigh–van der pol oscillators with diffusive and haken–kelso–bunz couplings. Biological cybernetics, 110(2):151–169, 2016.

- [60] Edward Ott and Thomas M Antonsen. Long time evolution of phase oscillator systems. Chaos: An interdisciplinary journal of nonlinear science, 19(2):023117, 2009.

- [61] Charles B Delahunt and J Nathan Kutz. A toolkit for data-driven discovery of governing equations in high-noise regimes. arXiv preprint arXiv:2111.04870, 2021.