This work has been submitted to the IEEE for possible publication. Copyright may be transferred without notice, after which this version may no longer be accessible.

Data-driven Estimation of the Algebraic Riccati Equation for

the Discrete-Time Inverse Linear Quadratic Regulator Problem

Abstract

In this paper, we propose a method for estimating the algebraic Riccati equation (ARE) with respect to an unknown discrete-time system from the system state and input observation. The inverse optimal control (IOC) problem asks, “What objective function is optimized by a given control system?” The inverse linear quadratic regulator (ILQR) problem is an IOC problem that assumes a linear system and quadratic objective function. The ILQR problem can be solved by solving a linear matrix inequality that contains the ARE. However, the system model is required to obtain the ARE, and it is often unknown in fields in which the IOC problem occurs, for example, biological system analysis. Our method directly estimates the ARE from the observation data without identifying the system. This feature enables us to economize the observation data using prior information about the objective function. We provide a data condition that is sufficient for our method to estimate the ARE. We conducted a numerical experiment to demonstrate that our method can estimate the ARE with less data than system identification if the prior information is sufficient.

I INTRODUCTION

The inverse optimal control (IOC) problem is a framework used to determine the objective function optimized by a given control system. By optimizing the objective function obtained by IOC, a different system can imitate the given control system’s behavior. For example, several studies have been conducted on robots imitating human or animal movements [1, 2, 3]. To apply IOC, in these studies, the researchers assumed that human and animal movements are optimal for unknown criteria. Such an optimality assumption holds in some cases [4].

The first IOC problem, which assumes a single-input linear time-invariant system and quadratic objective function, was proposed by Kalman [5]. Anderson [6] generalized the IOC problem in [5] to the multi-input case. For this type of IOC problem, called the inverse linear quadratic regulator (ILQR) problem, Molinari [7] proposed a necessary and sufficient condition for a linear feedback input to optimize some objective functions. Moylan et al. [8] proposed and solved the IOC problem for nonlinear systems. IOC in [5, 6, 7, 8] is based on control theory, whereas Ng et al. [9] proposed inverse reinforcement learning (IRL), which is IOC based on machine learning. Recently, IRL has become an important IOC framework, along with control theory methods [10].

We consider the ILQR problem with respect to an unknown discrete-time system. Such a problem often occurs in biological system analysis, which is the main application target of IOC. The control theory approach solves the ILQR problem by solving a linear matrix inequality (LMI) that contains the algebraic Riccati equation (ARE) [11]. However, to obtain the ARE, the system model is required. The IRL approach also has difficulty solving our problem. There is IRL with unknown systems [12], and IRL with continuous states and input spaces [13]; however, to the best of our knowledge, no IRL exists with both.

In the continuous-time case, we proposed a method to estimate the ARE from the observation data of the system state and input directly [14]. In the present paper, we estimate the ARE for a discrete-time system using a method that extends the result in [14]. Similarly to [14], our method transforms the ARE by multiplying the observed state and input on both sides. However, this technique alone cannot calculate ARE without the system model because the form of the ARE differs between continuous and discrete-time. We solve this problem using inputs from the system’s controller. Also, the use of such inputs enable us to estimate the ARE without knowing the system’s control gain. We prove that the equation obtained from this transformation is equivalent to the ARE if the dataset is appropriate. The advantage of our method is the economization of the observation data using prior information about the objective function. We conducted a numerical experiment to demonstrate that our method can estimate the ARE with less data than system identification if the prior information is sufficient.

The structure of the remainder of this paper is as follows: In Section II, we formulate the problem considered in this paper. In Section III, we propose our estimation method and prove that the estimated equation is equivalent to the ARE. In Section IV, we describe numerical experiments that confirm our statement in Section III. Section V concludes the paper.

II PROBLEM FORMULATION

We consider the following discrete-time linear system:

| (1) |

where and are constant matrices, and and are the state and the input at time , respectively.

Let . We write the set of real-valued symmetric matrices as . In the LQR problem [15], we determine the input sequence that minimizes the following objective function:

| (2) |

where and .

We assume that the system (1) is controllable and matrices and are positive definite. Then, for any , the LQR problem has a unique solution written as the following linear state feedback law:

| (3) |

where is a unique positive definite solution of the ARE:

| (4) |

For defined in (3), holds.

In the ILQR problem, we find positive definite matrices and such that an input with the given gain is a solution of the LQR problem, that is, minimizes (2).

We can solve the ILQR problem by solving an LMI. Let the system (1) be controllable. Then, minimizes (2) if and only if a positive definite matrix exists that satisfies (4) and the following:

| (5) |

By transforming (4) and (5), we obtain the following pair of equations equivalent to (4) and (5):

| (6) |

Hence, we can solve the ILQR problem by determining , , and that satisfy the following LMI:

| (7) |

In biological system analysis or reverse engineering, the system model and control gain is often unknown, and the ARE (6) is not readily available. Hence, we consider the following problem of determining a linear equation equivalent to (6) using the system state and input observation:

Problem 1 (ARE estimation problem)

Consider controllable system (1) and controller with unknown , , and . Suppose observation data of the system state and input are given, where

| (8) |

Let . inputs in the data are obtained from the unknown controller as follows:

| (9) |

Determine a linear equation of , , and with the same solution space as (6).

We discuss without assuming that the data is sequential, that is, always satisfies . However, in an experiment, we show that our result can also be applied to one sequential data. The ARE has multiple solutions and we call the set of these solutions, which is a linear subspace, a solution space.

III DATA-DRIVEN ESTIMATION OF ARE

The simplest solution to Problem 1 is to identify the control system, that is, matrices , and . We define matrices , , , , and as follows:

| (10) |

Let the matrices and have row full rank. Then, matrices , , and are identified using the least square method as follows:

| (11) |

In ILQR problems, prior information about matrices and may be obtained. However, such information cannot be used for system identification. We propose a novel method that uses the prior information and estimates the ARE using less observation data than system identification.

The following theorem provides an estimation of the ARE:

Theorem 1

Consider Problem 1. Let be an matrix whose th row th column element is defined as

| (12) |

Then, the following condition is necessary for the ARE (6) to hold.

| (13) |

Proof:

We define and as

| (14) |

The ARE (6) is equivalent to the combination of and . Let and be arbitrary natural numbers. From the ARE, we obtain

| (15) |

Using the assumption (8) and (9), we can transform the left-hand side of (15) into the following:

| (16) |

Therefore, we obtain from (15) and (16), which proves Theorem 1. ∎

Equation (13) is the estimated linear equation that we propose in this paper and it can be obtained directly from the observation data without system identification. Our method obtains one scalar linear equation of , , and from a pair of observation data. However, at least one of the paired data must be a transition by a linear feedback input with the given gain . Equation (13) consists of scalar equations obtained in such a manner. Therefore, it is expected that if and are sufficiently large, (13) is equivalent to (6), which is also linear for the matrices , , and .

Equation (12) is similar to the Bellman equation [16]. Suppose that the ARE (6) holds, that is, minimizes . Then, the following Bellman equation holds:

| (17) |

The equation is equivalent to the Bellman equation if and . The novelty of Theorem 1 is that holds under the weaker condition, that is, only . Theorem 1 is also proved from the Bellman equation (17). See the supplemental material for the details.

The following theorem demonstrates that if the data satisfies the condition required for system identification, our estimation is equivalent to the ARE:

Theorem 2

Proof:

From Theorem 1, the ARE (6) implies the estimation (13), that is, . As shown in the proof of Theorem 1, matrix is expressed as follows using the matrices defined in (10) and (14):

| (18) |

Because the matrix has row full rank, implies

| (19) |

Because has row full rank, we obtain and from (19), which proves Theorem 2. ∎

The advantage of our method is the data economization that results from having prior information about and . Suppose that some elements of and are known to be zero in advance, and independent ones are fewer than the scalar equations in the ARE (6). Then, there is a possibility that our method can estimate the ARE with fewer data than required for system identification.

For fixed , our method can provide the largest number of scalar equations when . We use the following lemma to explain the reason:

Lemma 1

Suppose that there exist and that satisfy

| (20) |

where for any . Then, the following holds for any :

| (21) |

Proof:

Lemma 1 means that if data is the linear combination of other data, the equations obtained from are also linear combinations of other equations not using . Hence, without noise, such a data as is meaningless. If , at least one of is the linear combination of other data because the inputs in those data are obtained from the same gain . Therefore, larger than reduces data efficiency. From the above and , our method can provide equations if .

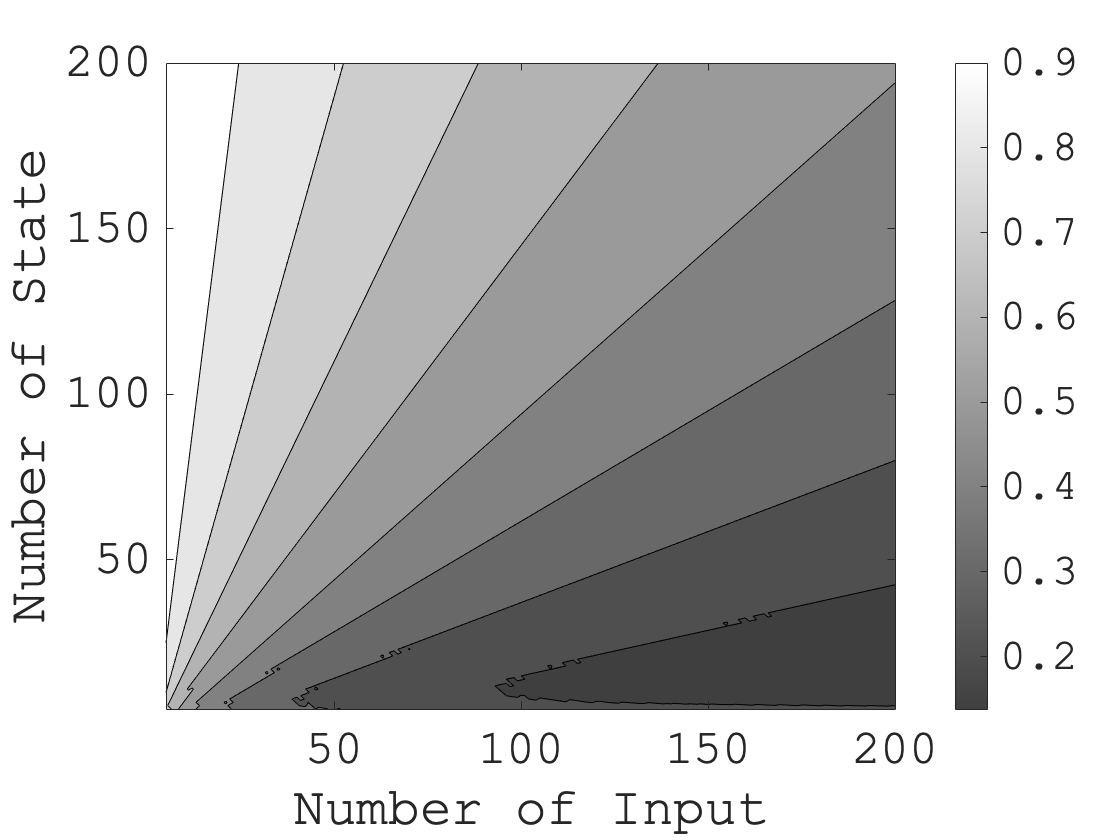

For an example of prior information, we consider diagonal and . In this case, the number of the independent elements of , , and is . Hence, if is or larger, we have more equations than the independent elements. Fig. 1 compares the numbers and of data required for our method and system identification, respectively, if and are diagonal. From Fig. 1, our method may estimate the ARE with less data than system identification. Additionally, this tendency becomes stronger as the number of inputs becomes larger than the number of states.

In the case of noisy data, we discuss the unbiasedness of the coefficients in (13). The coefficient of th row th column element of in (12) is expressed as follows:

| (23) |

where is the th element of . Suppose that the data is a sum of the true value and zero-mean noise distributed independently for each data. Then, we can readily confirm that if , the coefficient (23) of in (12) is unbiased, and otherwise, it is biased. This result is also the same for the coefficients of the elements of and .

IV NUMERICAL EXPERIMENT

IV-A Distance Between Solution Spaces

In our experiments, we evaluated the ARE estimation using a distance between the solution spaces of the ARE and estimation. Let be a vector generated by independent elements in the matrices , , and in any fixed order, where is defined according to the prior information. Because the ARE (6) is linear for , we can transform (6) into the following equivalent form:

| (24) |

where is the coefficient matrix and . Similarly, the estimation (13) is transformed into the following equivalent form:

| (25) |

where is the coefficient matrix and . We define the solution spaces and of the ARE (24) and estimation (25) as follows:

| (26) |

We define the distance between the solution spaces using the approach provided [17]. Assume that and have the same rank. Let , be the orthogonal projections to and , respectively. The distance between and is defined as follows:

| (27) |

where is the matrix norm induced by the 2-norm of vectors. Distance is the maximum Euclidian distance between and when , as explained in Fig. 2 and by the following equation:

| (28) |

Hence, the distance satisfies .

The orthogonal projections and are obtained from the orthogonal bases of and . To obtain these orthogonal bases, we use singular value decompositions of and . In our experiments, we performed the computation using MATLAB 2023a.

IV-B Experiment 1

In this experiment, we confirmed Theorem 2 by solving Problem 1. We set the controllable pair as follows:

| (29) |

The given gain is the solution of the LQR problem for the following and :

| (30) |

We considered the following observation data with that satisfy the condition of Theorem 2:

| (31) |

| (32) |

Using this setting, each of the ARE (6) and our estimation (13) contained scalar equations for variables, that is, independent elements in the symmetric matrices , , and .

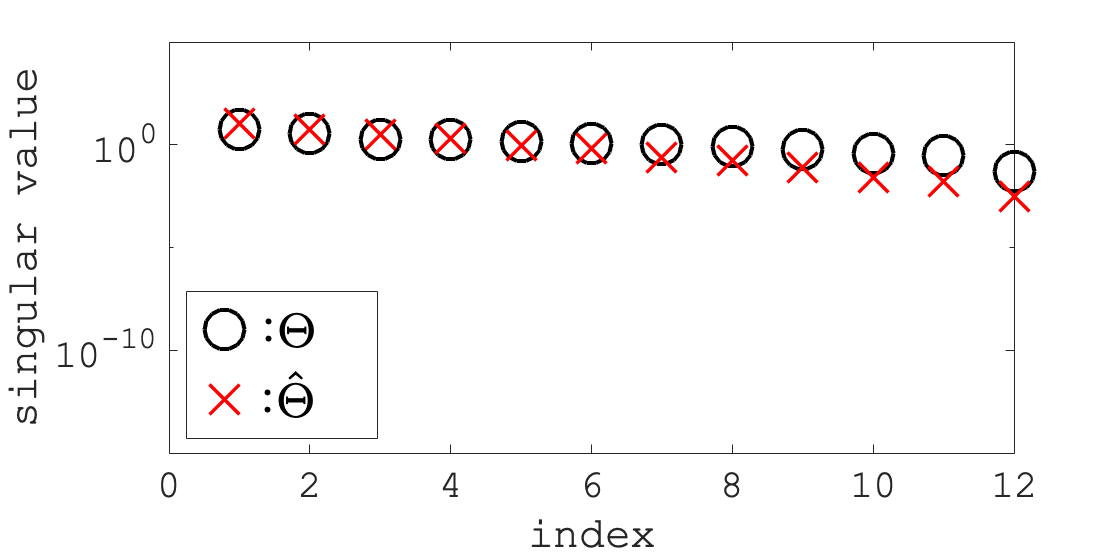

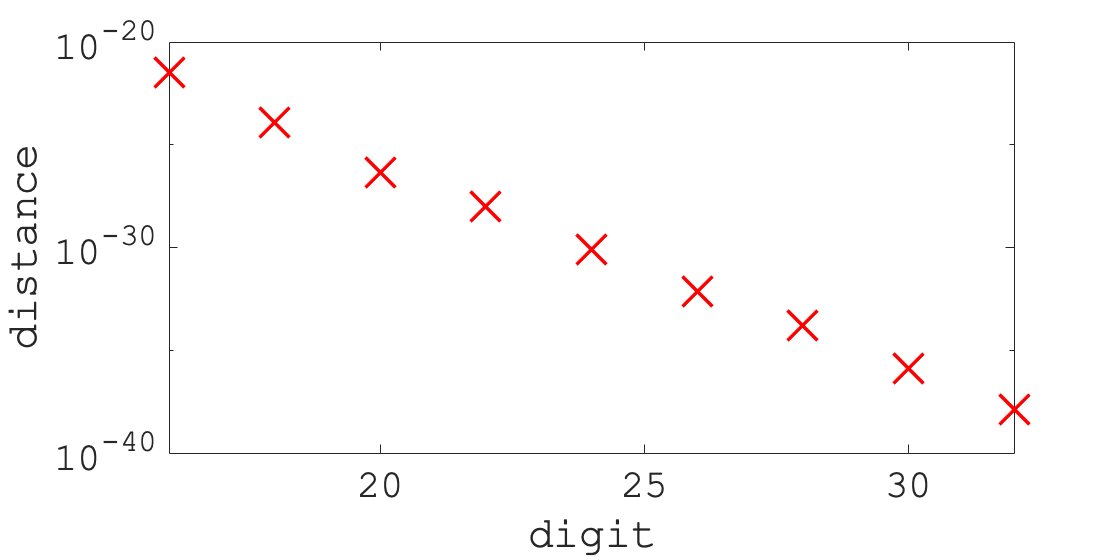

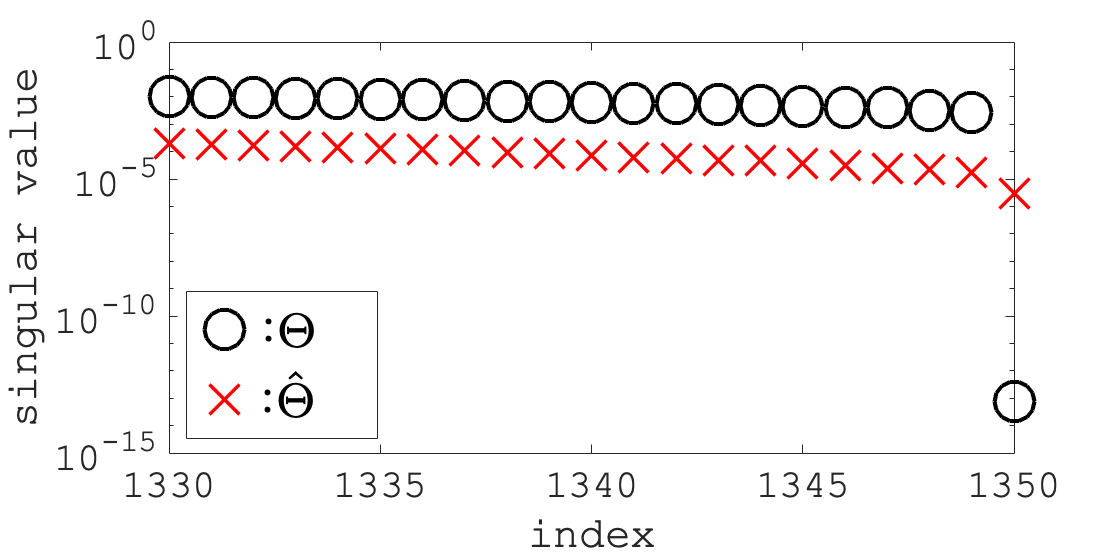

Fig. 2 shows the singular values of and , in descending order, that we computed using the default significant digits. As shown in Fig. 2, there is no zero singular value of , . Hence, the ranks of and are , and the solution spaces , are three-dimensional subspaces. To investigate the influence of the computational error, we performed the same experiment with various numbers of significant digits. Fig. 3 shows the relationship between the distance and the number of significant digits. As shown in Fig. 3, the logarithm of the distance decreased in proportion to the number of significant digits. Therefore, we concluded that non-zero was caused by the computational error and that our method correctly estimated the ARE.

IV-C Experiment 2

In this experiment, we confirmed that, if and are diagonal, Theorem 1 can estimate the ARE with fewer observation data than system identification. We randomly set the matrices and by generating each element from the uniform distribution in . After the generation, we confirmed that is controllable. The given is the solution of the LQR problem for the diagonal matrices and whose elements were generated from the uniform distribution in . We used observation data that satisfy (9) for . We generated the elements of and , from the uniform distribution in . The number of used data, , is less than required for system identification (11).

Using this setting, the ARE (6) and our estimation (13) contained and scalar equations, respectively. The variables of these scalar equations were independent elements in the symmetric matrix and diagonal matrices and .

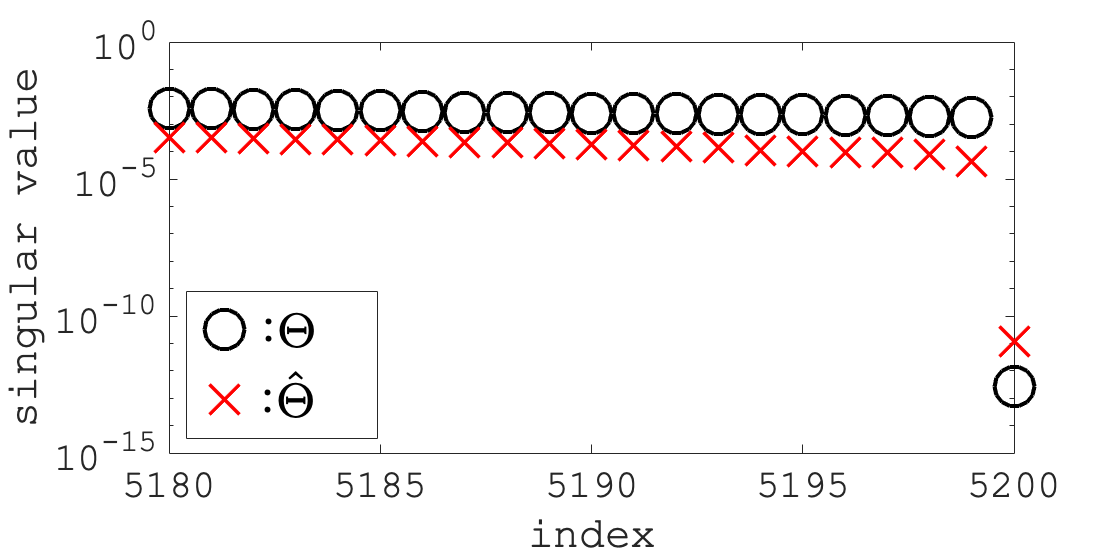

Because the arbitrary-precision arithmetic was too slow to perform for the scale of this experiment, we performed the computation using the default significant digits. We indexed the singular values of and in descending order; Fig. 4 shows the 5180–5200th singular values. From Fig. 4, we assumed that, without computational error, and each had a zero singular value. Then, the solution spaces , were one-dimensional subspaces, and the distance was .

We compared our method with system identification. For system identification, we generated additional data and in the same manner as and . Using the same approach as that for , we defined the solution space of the ARE (6) using , , and identified by (11). Then, the distance was . Therefore, our method estimated the ARE with the same order of error as system identification using fewer observation data than system identification.

IV-D Experiment 3

In this experiment, we compared our method and system identification under a practical conditions: a single noisy and sequential data and sparse but not diagonal and . We set the matrices and in the same manner as Experiment 2. The control gain is the solution of the LQR problem for the sparse matrices and . We performed three operations to generate and . First, we generated matrices and in the same manner as and set and . Second, we randomly set non-diagonal elements of to to make it sparse while maintaining symmetry. Similarly, we set elements of to . Finally, add the product of a constant and the identity matrix to and so that the maximum eigenvalues are times as large as the minimum ones, respectively.

To generate data, we used the following system:

| (33) |

where and are the state and input at time , respectively. If , the vector with norm is the product of a scalar constant and a random vector whose elements follow a uniform distribution in . Otherwise, . We ran the system (33) through time steps with a random initial state satisfying and obtained data as follows:

| (34) |

where and are the observation noise whose elements follow a normal distribution with a mean and variance . We explain the value of later. Throughout the simulation, holds times. Hence, . We sorted the data so that (9) holds if noise does not exist.

Under the above condition, the ARE (6) and our estimation (13) contained and scalar equations, respectively. The variables of these scalar equations were independent elements in the symmetric matrix and sparse matrices and .

We indexed the singular values of and in descending order. Fig. 5 shows the 1330–1350th singular values when . Although there is no zero singular value due to noise, the last singular value is noticeably small as shown in Fig. 5. Hence, we can conclude that the solution spaces , were one-dimensional subspaces.

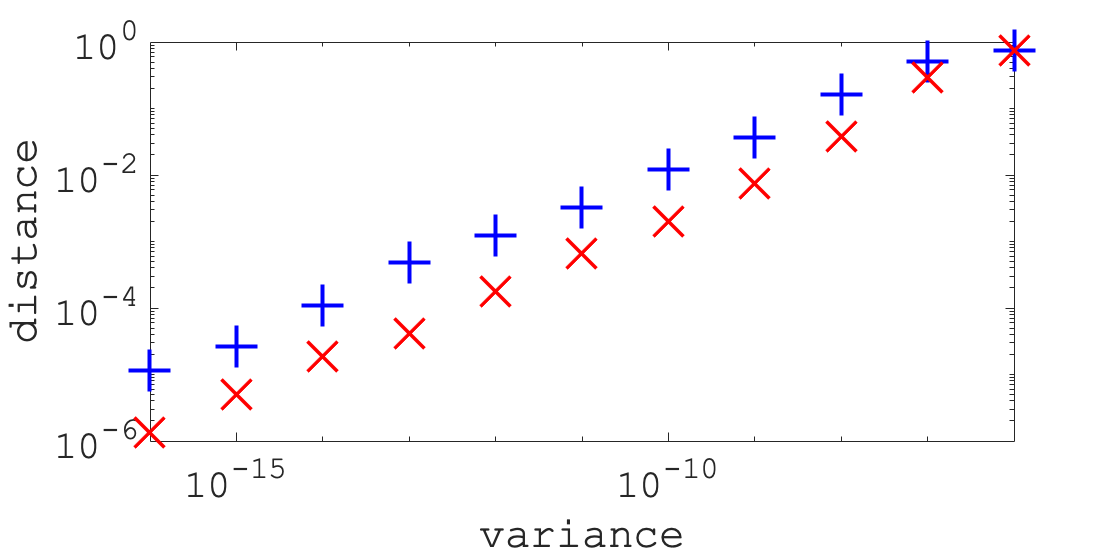

We conducted experiments with different noise variance from to . Also, the noise seed differs for each experiment, but the other seeds are the same. Fig. 6 shows the relationship between the noise variance and distances and . As shown in Fig. 6, our method outperformed system identification in almost all experiments. In the experiments of variances from to , the ratio of to is approximately constant, and the average ratio is . Because the maximum value of the distances is , we conclude that the estimations using both methods failed with a larger noise variance than .

V CONCLUSIONS

In this paper, we proposed a method to estimate the ARE with respect to an unknown discrete-time system from the input and state observation data. Our method transforms the ARE into a form calculated without the system model by multiplying the observation data on both sides. We proved that our estimated equation is equivalent to the ARE if the data are sufficient for system identification. The main feature of our method is the direct estimation of the ARE without identifying the system. This feature enables us to economize the observation data using prior information about the objective function. We conducted a numerical experiment that demonstrated that that our method requires less data than system identification if the prior information is sufficient.

References

- [1] K. Mombaur, A. Truong, and J.-P. Laumond, “From human to humanoid locomotion–an inverse optimal control approach”, Autonomous Robots, vol. 28, pp. 369–383, 2010.

- [2] W. Li, E. Todorov, and D. Liu, “Inverse Optimality Design for Biological Movement Systems”, IFAC Proceedings Volumes, vol. 44, no. 1, pp. 9662–9667, 2011.

- [3] H. El-Hussieny, A.A. Abouelsoud, S.F.M. Assal, and S.M. Megahed, “Adaptive learning of human motor behaviors: An evolving inverse optimal control approach”, Engineering Applications of Artificial Intelligence, vol. 50, pp. 115–124, 2016

- [4] R.M. Alexander, “The gaits of bipedal and quadrupedal animals”, International Journal of Robotics Research, 3(2), pp. 49–59, 1984.

- [5] R.E. Kalman, “When Is a Linear Control System Optimal?”, Journal of Basic Engineering, 86(1), pp. 51–60, 1964.

- [6] B.D.O. Anderson, The Inverse Problem of Optimal Control, Technical report: Stanford University, vol. 6560, no.3, Stanford University, 1966.

- [7] B. Molinari, “The stable regulator problem and its inverse”, IEEE Transactions on Automatic Control, vol. 18, no. 5, pp. 454–459, 1973.

- [8] P. Moylan and B. Anderson, “Nonlinear regulator theory and an inverse optimal control problem”, IEEE Transactions on Automatic Control, vol. 18, no. 5, pp. 460–465, 1973.

- [9] A.Y. Ng and S. Russell, “Algorithms for Inverse Reinforcement Learning”, Proceedings of the Seventeenth International Conference on Machine Learning, pp. 663–670, 2000.

- [10] N. Ab Azar, A. Shahmansoorian, and M. Davoudi, “From inverse optimal control to inverse reinforcement learning: A historical review”, Annual Reviews in Control, vol. 50, pp. 119–138, 2020.

- [11] M.C. Priess, R. Conway, J. Choi, J.M. Popovich, and C. Radcliffe, “Solutions to the Inverse LQR Problem With Application to Biological Systems Analysis”, IEEE Transaction on Control Systems Technology, vol. 23, no. 2, pp. 770–777, 2015.

- [12] M. Herman, T. Gindele, J. Wagner, F. Schmitt, and W. Burgard, “Inverse reinforcement learning with simultaneous estimation of rewards and dynamics”. Artificial intelligence and statistics, PMLR, pp. 102–110, 2016.

- [13] N. Aghasadeghi and T. Bretl, “Maximum entropy inverse reinforcement learning in continuous state spaces with path integrals”. IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 1561–1566, 2011.

- [14] S. Sugiura, R. Ariizumi, M. Tanemura, T. Asai, and S. Azuma, Data-driven Estimation of Algebraic Riccati Equation for Inverse Linear Quadratic Regulator Problem, SICE Annual Conference, 2023.

- [15] P.J. Antsaklis and A.N. Michel, Linear Systems, Birkhäuser Boston, 2005.

- [16] M. bardi, Optimal Control and Viscosity Solutions of Hamilton-Jacobi-Bellman Equations, Birkhäuser, 1997.

- [17] G.H. Golub, and C.F. Van Loan, Matrix Computations, JHU Press, 1997.

SUPPLEMENTARY MATERIAL

We give another proof of Theorem 1 using the following Bellman equation:

| (1) |

To this end, we prove the following theorem equivalent to Theorem 1.

Theorem 1’: Consider Problem 1. Let , be arbitrary natural numbers. Suppose that the following ARE holds:

| (2) |

Then, we have if holds, where is defined as

| (3) |