Data Generation for Testing and Grading SQL Queries

Abstract

Correctness of SQL queries is usually tested by executing the queries on one or more datasets. Erroneous queries are often the results of small changes or mutations of the correct query. A mutation $Q'$ of a query $Q$ is killed by a dataset $D$ if $Q(D) \neq Q'(D)$. Earlier work on the XData system showed how to generate datasets that kill all mutations in a class of mutations that included join type and comparison operation mutations.

In this paper, we extend the XData data generation techniques to handle a wider variety of SQL queries and a much larger class of mutations. We have also built a system for grading SQL queries using the datasets generated by XData. We present a study of the effectiveness of the datasets generated by the extended XData approach, using a variety of queries including queries submitted by students as part of a database course. We show that the XData datasets outperform predefined datasets as well as manual grading done earlier by teaching assistants, while also avoiding the drudgery of manual correction. Thus, we believe that our techniques will be of great value to database course instructors and TAs, particularly to those of MOOCs. They will also be valuable to database application developers and testers for testing SQL queries.

Keywords: Mutation Testing, Test Data Generation

Currently working at IBM IRL, India.

Currently working at Oracle India Pvt. Ltd.

1 Introduction

Queries written in SQL are used in a variety of different applications. An important part of testing these applications is to test the correctness of SQL queries in these applications. The queries are usually tested using multiple ad hoc test cases provided by the programmer or the tester. Queries are run against these test cases and tested by comparing the results with the intended one manually or by automated test cases. However, this approach involves manual effort in terms of test case generation and also does not ensure whether all the relevant test cases have been covered or not. Formal verification techniques involve comparing a specification with an implementation. However, since SQL queries are themselves specifications and do not contain the implementation, formal verification techniques cannot be applied for testing SQL queries.

A closely related problem is grading SQL queries written by students. Grading SQL queries is usually done by executing the query on small datasets and/or by reading the student query and comparing it with the correct query. Manually created datasets, as well as datasets created in a query independent manner, can be incomplete and are likely to miss errors in queries. Manual reading and comparing of queries is difficult, since students may write queries in a variety of different ways, and is prone to errors as graders are likely to miss subtle mistakes. For example, when required to write the query below:

SELECT course.id, department.dept_name FROM course LEFT OUTER JOIN (SELECT * FROM department WHERE department.budget > 70000) d USING (dept_name);

students often write the query:

SELECT course.id, department.dept_name FROM course LEFT OUTER JOIN department USING (dept_name) WHERE department.budget > 70000;

which looks sufficiently similar for a grader to miss the difference. These queries are not equivalent: for a course whose department has a budget of 70000 or less, the first query still returns the course, whereas the WHERE clause of the second query filters it out.
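The difference between the two queries can be reproduced on a two-row dataset. The following is a minimal sketch using Python's built-in sqlite3 module, not part of XData. The table contents are made up for illustration, and the queries are lightly adapted (the column is named course_id, and the subquery column is referenced through its alias d) so that they run as written.

```python
import sqlite3

# Intended query: filter departments first, then outer join.
CORRECT = """
SELECT course.course_id, d.dept_name
FROM course LEFT OUTER JOIN
     (SELECT * FROM department WHERE budget > 70000) d USING (dept_name)
ORDER BY course.course_id
"""
# Common student query: outer join first, then filter in WHERE.
STUDENT = """
SELECT course.course_id, department.dept_name
FROM course LEFT OUTER JOIN department USING (dept_name)
WHERE department.budget > 70000
ORDER BY course.course_id
"""

def run_both():
    """Run both queries on a tiny, hypothetical dataset and return their results."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE department (dept_name TEXT PRIMARY KEY, budget INTEGER);
        CREATE TABLE course (course_id TEXT PRIMARY KEY, dept_name TEXT);
        INSERT INTO department VALUES ('Comp. Sci.', 100000), ('Music', 50000);
        INSERT INTO course VALUES ('CS-101', 'Comp. Sci.'), ('MU-199', 'Music');
    """)
    correct = conn.execute(CORRECT).fetchall()
    student = conn.execute(STUDENT).fetchall()
    conn.close()
    return correct, student

if __name__ == "__main__":
    correct, student = run_both()
    print("intended query:", correct)
    print("student query: ", student)
```

The Music course appears only in the result of the intended query, so this dataset distinguishes the two queries.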

Mutation testing is a well-known approach for checking the adequacy of test cases for a program mutation:testing. Mutation testing involves generating mutants of the original program by modifying the program in a controlled manner. For SQL queries, we consider that a mutation is a single (syntactically correct) change of the original query; a mutant is the result of one or more mutations on the original query. A dataset kills a mutant if the original query and the mutant give different results on the dataset, allowing us to distinguish between the queries. A test suite consisting of multiple datasets kills a mutant if at least one of the datasets kills the mutant.

Consider the query:

SELECT dept_name, COUNT(DISTINCT id) FROM course LEFT OUTER JOIN takes USING(course_id) GROUP BY dept_name

One of the mutants obtained by mutating the join condition of the query is:

SELECT dept_name, COUNT(DISTINCT id) FROM course INNER JOIN takes USING(course_id) GROUP BY dept_name

Similarly, by mutating the aggregation we get the following mutant:

SELECT dept_name, COUNT(id) FROM course LEFT OUTER JOIN takes USING(course_id) GROUP BY dept_name
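To make the notion of killing concrete, the sketch below runs the query and its two mutants on two small datasets using Python's sqlite3 module. The datasets are hand-written for illustration (they are not XData output), and the helper names `run` and `kills` are hypothetical.

```python
import sqlite3

ORIGINAL = ("SELECT dept_name, COUNT(DISTINCT id) FROM course "
            "LEFT OUTER JOIN takes USING (course_id) GROUP BY dept_name")
JOIN_MUTANT = ORIGINAL.replace("LEFT OUTER JOIN", "INNER JOIN")
AGG_MUTANT = ORIGINAL.replace("COUNT(DISTINCT id)", "COUNT(id)")

# Dataset 1: a course with no matching takes tuple (targets the join mutant).
DATASET_JOIN = {"course": [("CS-101", "Comp. Sci.")], "takes": []}
# Dataset 2: the same student id twice within one department
# (targets the aggregation mutant).
DATASET_AGG = {
    "course": [("CS-101", "Comp. Sci."), ("CS-312", "Comp. Sci.")],
    "takes": [("S1", "CS-101"), ("S1", "CS-312")],
}

def run(query, dataset):
    """Load the dataset into a fresh in-memory database and run the query."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE course (course_id TEXT, dept_name TEXT)")
    conn.execute("CREATE TABLE takes (id TEXT, course_id TEXT)")
    conn.executemany("INSERT INTO course VALUES (?, ?)", dataset["course"])
    conn.executemany("INSERT INTO takes VALUES (?, ?)", dataset["takes"])
    rows = sorted(conn.execute(query).fetchall())
    conn.close()
    return rows

def kills(dataset, mutant):
    """A dataset kills a mutant if the two results differ on it."""
    return run(ORIGINAL, dataset) != run(mutant, dataset)

if __name__ == "__main__":
    print(kills(DATASET_JOIN, JOIN_MUTANT), kills(DATASET_AGG, AGG_MUTANT))
```

Each dataset kills the mutant it targets and leaves the other one alive, which is why a test suite needs more than one dataset.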

In this paper, we address the problem of generating datasets that can catch commonly occurring errors in a large class of SQL queries. Queries with common errors can be thought of as mutants of the original query. Our goal is to generate (a relatively small number of) datasets so as to kill a wide variety of query mutations. These datasets can be used in two distinct ways:

a) To check if a given query is what was intended, a tester manually examines the result of the query on each dataset, and checks if the result is what was intended.

b) To check if a student query is correct, the results of the student query and a given correct query are compared on each dataset. A difference on any dataset indicates that the student query is erroneous. (We note that checking query equivalence is possible in limited special cases but is hard or undecidable in general ineq.equiv; conjuct.equiv; bag.)

There has been increased interest in recent years in test data generation for SQL queries, including Tuya:2010; Riva:2010; SQE; QEX. olstonCS09 addresses a similar problem in the context of data-flow programs. Our earlier work on the XData system xdata:icde10; xdata:icde11 showed how to generate datasets that can distinguish the correct query from some class of query mutations, including join and comparison operator mutations. However, real life SQL queries have a variety of features and mutations that were not handled in xdata:icde10; xdata:icde11. (Related work is described in detail in Section 12.) A few of the techniques described in this paper were sketched in a short workshop paper xdata:dbtest13, but details were not presented there.

In Sections 4 to 8 we describe techniques to handle different SQL query features. For each feature, we first discuss techniques to handle data generation for that feature, then describe mutations of these features, and finally present techniques to kill these mutations. In Section 9 we describe techniques for killing new classes of mutations for query features that were handled in our earlier work xdata:icde10 ; xdata:icde11 .

Each data generation technique is designed to handle specific query constructs or specific mutations of the query. We combine these techniques to generate datasets for a complete query, with each dataset targeting a specific type of mutation. One dataset is capable of killing one or more mutations. Note that we do not generate any mutants at all: our goal is to generate datasets that kill mutations, not to enumerate the possible mutants. Although the number of mutations may be very large, our approach generates a small number of datasets that can kill a much larger number of mutations.

The contributions of this paper are as follows.

1. We discuss (in Section 4) how to generate test data and kill mutations for queries involving string predicates such as string comparison and the LIKE predicate, using a string solver we have developed.

2. We support NULL values and several mutations that may arise because of the presence of NULLs (Section 5).

3. For queries containing constraints on aggregated results, we describe (in Section 6) a new algorithm to find the number of tuples that need to be generated for each relation to satisfy the aggregation constraints.

4. We support test data generation and mutation killing for a large class of nested subqueries (Section 7).

5. We also support data generation and mutation killing for queries containing set operators (Section 8).

- 6.

7. The data types supported include floating point numbers, time and date values. The class of queries is extended to include insert, delete, update and parameterized queries as well as view creation statements (Section 10).

8. We describe (in Section 11) techniques for grading student queries based on the datasets generated by XData. These techniques can be used for grading, as well as in a learning mode where it can give immediate feedback to students.

-

9.

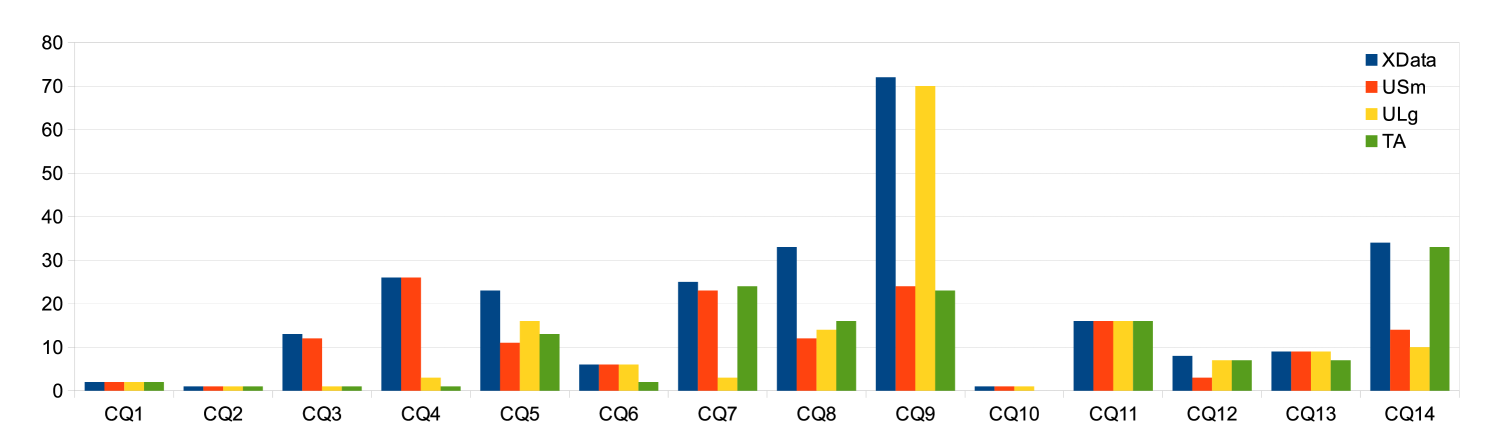

In Section 13 we present performance results of our techniques. We generate test data for a number of queries involving constrained aggregation and subqueries on the University database dbconcepts2010 as well as queries of the TPC-H benchmark and show that the datasets generated by XData are able to kill most of the non-equivalent mutations. We also test the effectiveness of our grading tool by using as a benchmark a set of assignments given as part of a database course at IIT Bombay. We show that the datasets generated using our techniques catch more errors than the University datasets, provided with dbconcepts2010 , as well as manual grading by the TAs, on all the queries.

We believe the techniques presented in this paper will be of great value to database application developers and testers for testing real life SQL queries. They will also be valuable to database course instructors and TAs by taking the drudgery out of grading, and will allow SQL query assignments to be properly checked in a MOOC setting, where manual grading is not feasible.

2 Background

In our earlier work on XData xdata:icde11 , we presented techniques for generating test data for killing SQL query mutants; we briefly outline that work below.

2.1 Approach to Data Generation

Given an SQL query $Q$, XData xdata:icde11 generates multiple datasets. The first dataset is designed to produce a non-empty result for $Q$, wherever feasible, which itself kills several mutations that would generate an empty result on that dataset. Each of the remaining datasets is targeted to kill one or more mutations of the query; i.e. on each dataset the given query returns a result that is different from those returned by each of the mutations targeted by that dataset. The number of possible mutations is very large, but the number of datasets generated to kill these mutations is small.

To generate a particular dataset, XData does the following:

1. It generates a set of constraint variables, where each tuple in the target dataset is represented by a tuple of constraint variables.

2. It generates a set of constraints between these variables. For example, selection conditions, join conditions, primary key and foreign key conditions are all mapped to constraints on these variables. Different datasets are designed to catch different mutations; the exact set of constraints generated (as also the set of constraint variables) is different for each dataset, as described shortly.

3. It then invokes a constraint (SMT) solver smt to solve the constraints; the solution given by the solver assigns values to each constraint variable, thereby defining a specific dataset. (A constraint solver takes as input a set of constraints and produces a result that satisfies the constraints.)

In order to kill mutations, the goal of XData is to generate datasets that produce different results on the query and its mutation. To produce different results, constraints are added in a manner so as to ensure that the mutation in a node of a query tree is reflected above leading to different results for the query and its mutation. For example consider the following query:

Example 1

SELECT course.course_id, COUNT(DISTINCT takes.id) FROM course INNER JOIN takes USING(course_id) WHERE course.credits >= 6

This query has two predicates: the join predicate course INNER JOIN takes USING (course_id) and the selection predicate course.credits >= 6. When generating datasets to kill the mutations of the join predicate, we need to ensure that course.credits >= 6 is satisfied for the tuple generated for the course table. If it is not satisfied, both the query and the mutant could give empty results.

2.2 Mutation Space and Datasets

The mutation space considered consisted of the following

-

1.

Join Type Mutations: A join type mutations involves replacing one of { INNER, LEFT OUTER, RIGHT OUTER } JOIN with another. Consider the mutation from department INNER JOIN course to department LEFT OUTER JOIN course. In order to kill this mutation, we need to ensure that there is a tuple in department relation that does not satisfy the join condition with any tuple in course relation. The INNER JOIN query would not output that tuple in the department relation while the LEFT OUTER JOIN would.

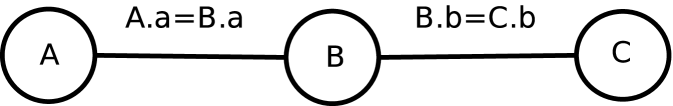

In SQL, a join query can be specified in a join order independent fashion, with many equivalent join orders for a given query. Hence, the number of join type mutations across all these orders is exponential. From the join conditions specified in the query, XData forms equivalence classes of relation, attribute pairs such that elements in the same equivalence class need to be assigned the same value to meet (one or more) join conditions. Using these equivalence classes, XData generates a linear number of datasets to kill join type mutations across all join orderings. If a pair of relations involve multiple join conditions XData nullifies each join condition separately.

-

2.

Selection Predicate Mutations: For selection conditions XData considers mutations of the relational operator where any occurrence of one of is replaced by another. For killing mutations for the selection condition relop , XData generates 3 datasets (1) , (2) , and (3) . These three datasets kill all non-equivalent mutations from one relop to another relop. These datasets also kill mutations because of missing selection conditions.

-

3.

Unconstrained Aggregation Mutation: Aggregations at the root of the query tree are not constrained to satisfy any condition. The aggregation function can be mutated among MAX, MIN, SUM, AVG, COUNT and their DISTINCT versions. In order to kill these mutations, a dataset with three tuples is generated; two with the same value (non-zero) and another with a different value in the aggregate column.
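The claims for selection predicates and unconstrained aggregation in the list above can be checked by brute force. The sketch below is only an illustration in Python, under two assumptions: the comparison operators are the six standard SQL ones, and the three aggregate values (4, 4, 10) are chosen by hand so that no two aggregates coincide by accident. All function names are hypothetical.

```python
import operator
from itertools import combinations

RELOPS = {
    "=": operator.eq, "<>": operator.ne, "<": operator.lt,
    ">": operator.gt, "<=": operator.le, ">=": operator.ge,
    "(missing)": lambda a, b: True,   # mutant that drops the condition
}
# One (A, B) pair per dataset: A < B, A = B, A > B.
RELOP_DATASETS = [(1, 2), (2, 2), (3, 2)]

def surviving_relop_pairs():
    """Pairs of operators that none of the three datasets can tell apart."""
    return [(p, q) for p, q in combinations(RELOPS, 2)
            if all(RELOPS[p](a, b) == RELOPS[q](a, b) for a, b in RELOP_DATASETS)]

def avg(values):
    return sum(values) / len(values)

AGGREGATES = {"MAX": max, "MIN": min, "SUM": sum, "AVG": avg, "COUNT": len}
# MAX and MIN are unaffected by DISTINCT, so those mutations are equivalent.
EQUIVALENT = {("MAX", "MAX DISTINCT"), ("MIN", "MIN DISTINCT")}

def aggregate_results(values):
    """Evaluate every aggregate and its DISTINCT version on one column."""
    results = {}
    for name, fn in AGGREGATES.items():
        results[name] = fn(values)
        results[name + " DISTINCT"] = fn(list(set(values)))
    return results

def surviving_aggregate_pairs(values):
    """Non-equivalent aggregate pairs that return the same value."""
    results = aggregate_results(values)
    return [(p, q) for p, q in combinations(results, 2)
            if (p, q) not in EQUIVALENT and results[p] == results[q]]

if __name__ == "__main__":
    print(surviving_relop_pairs())               # expected to be empty
    print(surviving_aggregate_pairs([4, 4, 10])) # expected to be empty
```

In this sketch the concrete values matter: a column such as 2, 2, 5 also has two equal non-zero values and one different value, yet AVG and COUNT both evaluate to 3 on it, so the values need to be picked to avoid such coincidences.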

2.3 Constraint Generation

We now describe our techniques for constraint generation. Our current implementation uses the CVC3 constraint solver CVC3 . We are working on implementing the constraints in the SMT-LIB format smtlib so that we can potentially use several constraint solvers compatible with SMT-LIB.

In CVC3, text attributes are modeled as enumerated types while numeric attributes are modeled as subtypes of integers or rationals. The data type declarations in CVC3 are as follows. For each attribute of each relation, we specify a set of acceptable values, taken from an input database, as datatypes in CVC3. While the input database is not necessary for data generation, its use makes for improved readability and comprehension of the query results. In case an input database is not specified we get the range from the data type of the corresponding column.

A tuple type is created for each relation, where each element is a constraint variable of the specified type. A relation is represented as an array of constraint variables; the size of the array has to be determined before solving the constraints, and constraints have to be specified for each attribute of each tuple.

Consider an input database which has CS-101, BIO-301, CS-312 and PHY-101 as course_id, and credits is an integer constrained to be between 2 and 10. Then, this translates to the following declarations in CVC3.

DATATYPE course_id = CS-101 | BIO-301 | CS-312 | PHY-101 END;

credits:TYPE = SUBTYPE (LAMBDA (x: INT): x > 1 AND x < 11);

course_tuple_type:TYPE = [course_id,credits];

course: ARRAY INT OF course_tuple_type;

Tuple attributes are referenced by position, not by name; thus, course[2].0 refers to the value of the first attribute, which is course_id, of the second tuple in course.

To ensure a non-empty result for the query in Example 1, we need a tuple in course which matches a tuple in takes on attribute course_id and where course.credits >= 6. This is done by creating a tuple for each of the relations and adding the following constraints:

ASSERT course[1].0 = takes[1].1;

ASSERT course[1].1 >= 6;

Primary key constraints are enforced by constraints that ensure that if two tuples match on the primary key, then the values of the remaining attributes for those two tuples should also match. Foreign key constraints are enforced by adding extra tuples that satisfy the foreign key condition. Foreign key constraints for the foreign key from takes.course_id to course.course_id are specified as:

ASSERT FORALL(i: takes_index): EXISTS (j: course_index): takes[i].1 = course[j].0;

where takes_index and course_index give the index range for the takes and course arrays; takes[i].1 stands for course_id of the ith tuple of takes. In our example an extra tuple would be generated for course for each tuple in takes, although in this case the first tuple of course itself ensures the foreign key constraint is satisfied for the first tuple of takes.

The above constraints are given to CVC3 which generates satisfying values (assuming the constraints are satisfiable).
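For intuition, the same constraints can be solved on this tiny domain by exhaustive search instead of an SMT solver. The sketch below is a Python stand-in for the CVC3 step, not the XData implementation; the student-id domain and the assumption that takes has the layout (id, course_id) are made up for illustration.

```python
from itertools import product

COURSE_IDS = ["CS-101", "BIO-301", "CS-312", "PHY-101"]  # DATATYPE course_id
CREDITS = range(2, 11)                                   # x > 1 AND x < 11
STUDENT_IDS = ["S1", "S2"]                               # hypothetical domain

def satisfies(course, takes):
    """Check the constraints for a non-empty result of Example 1."""
    join_ok = course[0][0] == takes[0][1]        # course[1].0 = takes[1].1
    selection_ok = course[0][1] >= 6             # course[1].1 >= 6
    # Foreign key: every takes.course_id must appear in course.course_id.
    fk_ok = all(any(t[1] == c[0] for c in course) for t in takes)
    return join_ok and selection_ok and fk_ok

def first_dataset():
    """Return the first assignment of values that satisfies all constraints."""
    for c_id, credits, s_id, t_cid in product(
            COURSE_IDS, CREDITS, STUDENT_IDS, COURSE_IDS):
        course = [(c_id, credits)]
        takes = [(s_id, t_cid)]
        if satisfies(course, takes):
            return {"course": course, "takes": takes}
    return None  # constraints unsatisfiable on this domain

if __name__ == "__main__":
    print(first_dataset())
```

Exhaustive search is only workable because the domains here are tiny; a constraint solver is what makes the approach practical for realistic schemas.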

As explained earlier in this section, to kill a mutation of the inner join to right outer join, we need a tuple in takes whose course_id does not match any value in course.course_id. To do so we replace the earlier equality constraint

ASSERT course[1].0 = takes[1].1;

with:

ASSERT NOT EXISTS(i:course_index): (course[i].0 = takes[1].1);

and generate the required dataset using CVC3.

Datasets for killing other mutations are generated similarly.

2.4 Disjunctions

Tuya et al. in Riva:2010 presented techniques for killing mutations in the presence of disjunctions.

For killing a where clause mutation of a query, the mutation should be reflected as a change at the root of the query tree. Consider the clause $P_1$ OR $P_2$, where $P_1$ and $P_2$ are conjuncts of selection conditions. If a condition in $P_1$ is mutated, $P_2$ should be false so that the change in the condition of $P_1$ affects the output of the query. For example, let $P_1$ be $c_1$ AND $c_2$. If we mutate the first condition $c_1$ in $P_1$ to $c_1'$, we need to ensure that $c_2$ is satisfied while $P_2$ is not satisfied. If $P_2$ is satisfied there would be no change in the output of the query. Although not mentioned in Riva:2010, the above technique not only kills mutations of atomic selection conditions (such as comparisons) but also kills mutations of conjunction operations to disjunctions and vice versa.

The XData system has been extended to implement the above technique for killing selection predicate mutations in the presence of disjunctions.
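The role of the other disjunct can be seen on a small example. The sketch below uses hypothetical conditions (a > 5 AND b = 1) OR (c < 3), with the first comparison mutated to a >= 5; none of these conditions come from the paper, and the predicate is evaluated directly in Python rather than through a database.

```python
def original(t):
    # First disjunct: a > 5 AND b = 1; second disjunct: c < 3 (all hypothetical).
    return (t["a"] > 5 and t["b"] == 1) or t["c"] < 3

def mutant(t):
    # The first comparison is mutated from a > 5 to a >= 5.
    return (t["a"] >= 5 and t["b"] == 1) or t["c"] < 3

def killed_by(t):
    """The tuple kills the mutant if the two predicates disagree on it."""
    return original(t) != mutant(t)

# Boundary value a = 5, remaining conjunct true, other disjunct false.
TARGETED = {"a": 5, "b": 1, "c": 10}
# Same tuple, but the other disjunct is true and masks the mutation.
OTHER_DISJUNCT_TRUE = {"a": 5, "b": 1, "c": 0}
# Same tuple, but the remaining conjunct is false and masks the mutation.
OTHER_CONJUNCT_FALSE = {"a": 5, "b": 2, "c": 10}

if __name__ == "__main__":
    for t in (TARGETED, OTHER_DISJUNCT_TRUE, OTHER_CONJUNCT_FALSE):
        print(t, killed_by(t))
```

Only the first tuple exposes the mutation; in the other two the change is masked before it reaches the root of the predicate.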

3 Queries and Mutations Considered

The class of queries considered by XData now includes

a) Single block queries with join/outer-join operations and predicates in the where-clause, and optionally aggregate operations, corresponding to select / project / join / outer-join queries in relational algebra, with aggregation operations.

b) Multi-block queries. Our current implementation can deal with subqueries up to a single level of nesting.

c) Compound queries with set operators UNION(ALL), INTERSECT(ALL) and EXCEPT(ALL).

In this paper, we remove the following assumptions made in xdata:icde11 :

a) SQL queries do not contain string comparison or string like operators such as like, ilike, etc.

b) Aggregations are only present at the top of the query tree, and hence they are not constrained.

c) SQL queries are single block queries with no nested subqueries.

d) NULL values are not allowed for attribute values.

e) Selection predicates are conjunctions of simple conditions of the form expr relop expr.

XData now considers a large class of mutations: join type mutations, comparison operator mutations, aggregation mutations, string mutations, NULL mutations, set operator mutations, join condition mutations, group by attribute mutations and distinct mutations. Of these, only join type mutations, comparison operator mutations and aggregation mutations were discussed previously in xdata:icde11.

We retain the following assumptions:

a) The only database constraints are unique, primary key and foreign key constraints.

b) Queries do not include numeric functions or expressions other than simple arithmetic expressions.

c) Join predicates are conjunctions of simple conditions.

d) No user defined functions are used.

We only consider single mutations in a query when generating test datasets, since the space of mutants is much larger with multiple mutations. It is possible that an erroneous query may contain multiple mistakes; queries with multiple mutations are likely, but not always guaranteed, to be killed by the datasets we generate. Completeness guarantees for our data generation techniques are described in Appendix D.

4 Data Generation for String Constraints

SQL queries can have equality and inequality conditions on strings, and pattern matching conditions using the LIKE operator or its variants.

Consider the SQL query,

SELECT * from student WHERE name LIKE ‘Amol%’ AND name LIKE ‘%Pal’ AND tot_cred > 30

In order to generate the first dataset that produces a non-empty result for this query or to kill mutations of the condition tot_cred > 30, we need to generate a tuple for which attribute name satisfies the LIKE conditions ‘Amol%’ and ‘%Pal’. To generate such a value we need to solve the corresponding string constraints. For killing mutations of the LIKE operators also we need to solve similar string constraints.

Since many constraint solvers, including CVC3, do not support string constraints, we solve the string constraints outside of the solver. We describe the types of string constraints considered in Section 4.1 and our approach to solving string constraints in Section 4.2. We then discuss test data generation for killing mutations involving string operators in Section 4.3. Note that for this to work, there should be no dependence between string constraints and other constraints, so that the string constraints can be solved independently. For example, for a constraint that relates a string attribute $S$ to an integer attribute $I$, the condition on $S$ cannot be solved independently of constraints on $I$ if there are other constraints on $S$ and $I$. However, if an integrated constraint solver is used, this restriction does not apply.

4.1 Types of String Constraints Considered

For string comparisons, we consider the following class of string constraints: $S_1$ relop constant, and $S_1$ relop $S_2$, where $S_1$ and $S_2$ are string variables, and the relop operators are $=, <, \leq, >, \geq, \neq$ and case-insensitive equality. We support LIKE constraints of the form $S$ likeop pattern, where likeop is one of LIKE, ILIKE (case insensitive like), NOT LIKE and NOT ILIKE. We also support strlen($S$) relop constant, where relop is a comparison operator. We do not support constraints of the form $S_1$ likeop $S_2$, where both $S_1$ and $S_2$ are variables.

We support the string functions upper and lower in queries where these functions can be rewritten using one of the operators described above; for example upper($S$) = ‘ABC’ can be rewritten as a case-insensitive equality between $S$ and ‘ABC’, and similarly upper($S$) LIKE pattern can be replaced by $S$ ILIKE pattern. We rewrite these conditions as a pre-processing step. Conditions like upper($S$) = constant or upper($S$) LIKE pattern, where the constant or pattern contains at least one lower case character, cannot be satisfied. Hence for such conditions we do not change the operators but return an empty dataset. If these functions are used on a constant string, we convert the string to upper or lower case according to the function.

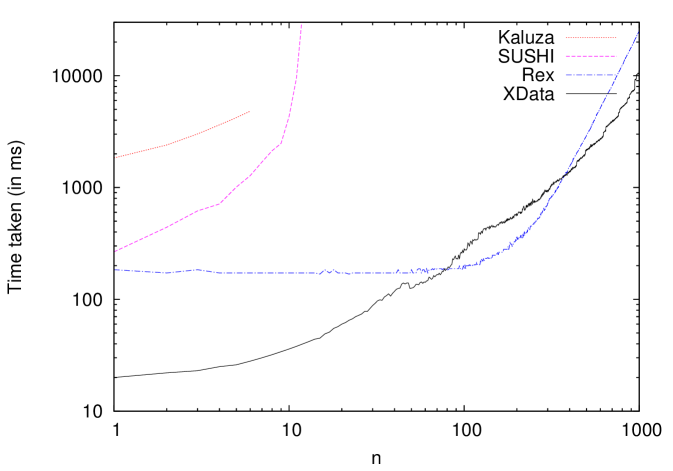

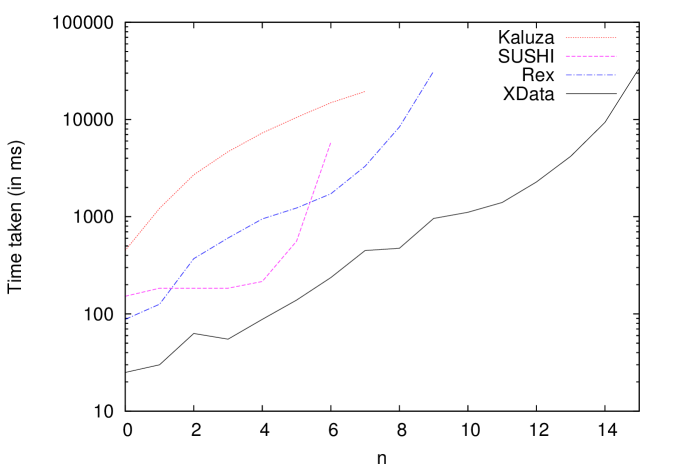

4.2 Solving String Constraints

There are several available string solvers that we considered, including Hampi HAMPI, Kaluza KAL, SUSHI SUSHI and Rex REX. However, we found that Hampi and Kaluza were rather slow, and while they handled regular expressions and length constraints, they could not handle constraints that compare two string variables $S_1$ and $S_2$. Rex and SUSHI, though much faster, could not handle constraints involving multiple string variables. Hence, we built our own solver, which is described in Appendix B. Subsequent to the implementation of our string solver, the latest version of CVC (CVC4) has also provided some support for solving string constraints cvc4:string, but it currently has some limitations. (In future we may use CVC4 as an integrated solver for both string constraints and other constraints.) Refer to the experimental section in Appendix B for details.

Once the values for string variables are obtained, we solve the non-string constraints using CVC3 and get an overall solution as follows: enumeration types are created in CVC3 for string variables, with the enumeration names being the (suitably encoded) strings generated by the string solver. For example, consider a query which has a single string constraint of the form $S$ LIKE pattern. Let the string that satisfies the constraint be Biology; then the constraint is specified as

ASSERT(table[index].pos = Biology)

in CVC3, where table[index].pos is the corresponding CVC3 variable of $S$. We then add constraints in CVC3 equating each string variable to its corresponding enumeration name, add other non-string constraints as described in Section 2 and invoke CVC3 to get a suitable dataset.

If there are disjunctions in the selection predicate, it is not possible to separate the string constraints since not all string constraints may need to be satisfied.

4.3 Killing String Constraint Mutations

There can be different types of string mutations depending on whether the string condition is a comparison condition or a LIKE condition.

String Comparison Mutation

Consider a string constraint of the form $S_1$ relop $S_2$, where $S_1$ is a variable (attribute name) and $S_2$ could be another variable or a constant. We consider mutations of relop where any occurrence of one of $\{=, \neq, <, >, \leq, \geq\}$ is replaced by another. Three datasets are enough to kill all the relop mutations. These are the datasets generated for (1) $S_1 = S_2$, (2) $S_1 > S_2$ and (3) $S_1 < S_2$. These datasets will also kill mutations in which the string selection condition is missing. In addition, to kill mutations between equality and case-insensitive equality, we generate an additional dataset where $S_1$ and $S_2$ are equal when case is ignored, but are not exactly equal.

LIKE Predicate Mutation

We also consider the mutation of the likeop operators where one of LIKE, ILIKE, NOT LIKE, NOT ILIKE is mutated to another or the operator is missing. For a condition $S$ likeop pattern, where $S$ is an attribute name, the three datasets given below are sufficient to kill all mutations among the LIKE operators:

Dataset 1: satisfies the condition $S$ LIKE pattern.

Dataset 2: satisfies the condition $S$ ILIKE pattern, but not $S$ LIKE pattern.

Dataset 3: fails both the LIKE and ILIKE conditions.

| Mutation to kill | Dataset |
| --- | --- |
| LIKE vs. NOT LIKE | 1, 2, 3 |
| LIKE vs. ILIKE | 2 |
| LIKE vs. NOT ILIKE | 1, 3 |
| NOT LIKE vs. ILIKE | 1, 3 |
| NOT LIKE vs. NOT ILIKE | 2 |
| ILIKE vs. NOT ILIKE | 1, 2, 3 |
| Missing LIKE / ILIKE | 3 |
| Missing NOT LIKE / NOT ILIKE | 1 |

For example, for the condition S1 LIKE ‘bio_’, the conditions in the three cases would be (1) S1 LIKE ‘bio_’, (2) S1 LIKE ‘BIO_’, and (3) S1 LIKE ‘CIO_’.

The targeted mutations and the datasets that kill them are shown in Table 1.
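The entries of Table 1 can be checked mechanically. The sketch below is an illustration in Python, assuming a simplified reading of LIKE in which ‘%’ matches any string, ‘_’ matches one character, and there is no escape character; the three values stand for the three datasets of the ‘bio_’ example and are chosen by hand.

```python
import re

def like(value, pattern, ignore_case=False):
    """Simplified SQL LIKE: '%' is any string, '_' is any single character."""
    regex = "".join(
        ".*" if ch == "%" else "." if ch == "_" else re.escape(ch)
        for ch in pattern)
    flags = re.DOTALL | (re.IGNORECASE if ignore_case else 0)
    return re.fullmatch(regex, value, flags) is not None

OPERATORS = {
    "LIKE": lambda v, p: like(v, p),
    "ILIKE": lambda v, p: like(v, p, ignore_case=True),
    "NOT LIKE": lambda v, p: not like(v, p),
    "NOT ILIKE": lambda v, p: not like(v, p, ignore_case=True),
    "(missing)": lambda v, p: True,   # mutant that drops the condition
}

PATTERN = "bio_"
# One attribute value per dataset: matches LIKE; matches only ILIKE; matches neither.
DATASETS = {1: "biol", 2: "BIOL", 3: "CIOL"}

def killing_datasets(op_a, op_b):
    """Numbers of the datasets on which the two operators disagree."""
    return [n for n, value in DATASETS.items()
            if OPERATORS[op_a](value, PATTERN) != OPERATORS[op_b](value, PATTERN)]

if __name__ == "__main__":
    for a, b in [("LIKE", "NOT LIKE"), ("LIKE", "ILIKE"), ("LIKE", "NOT ILIKE"),
                 ("NOT LIKE", "ILIKE"), ("NOT LIKE", "NOT ILIKE"),
                 ("ILIKE", "NOT ILIKE")]:
        print(a, "vs.", b, killing_datasets(a, b))
```

For the missing-operator rows, dataset 3 is the one shared by LIKE and ILIKE, and dataset 1 is the one shared by NOT LIKE and NOT ILIKE, which matches the last two rows of the table.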

LIKE Pattern Mutations

A common error while using the LIKE operator is the specification of an incorrect pattern in the query, for example, specifying LIKE ‘Comp_’ or LIKE ‘Com%’ in place of LIKE ‘Comp%’. There could be a very large number of such patterns to be considered. We handle mutations that involve ‘_’ in place of ‘%’ and vice versa and also missing ‘_’ or ‘%’. Consider the like predicate to be $S$ likeop P.

- For killing the mutation of ‘%’ to ‘_’ or for missing ‘%’, we generate separate datasets for each occurrence of the ‘%’ replaced with “__” (two underscores). The pattern with ‘%’ gives a non-empty result while the mutated patterns will give an empty result on the corresponding datasets if the likeop is LIKE or ILIKE. For NOT LIKE and NOT ILIKE the pattern with ‘%’ gives an empty result while the mutated patterns will give a non-empty result.

- For killing the mutation of ‘_’ to ‘%’ or for missing ‘_’, we generate separate datasets for each occurrence of ‘_’ with that occurrence of ‘_’ removed. If the likeop is LIKE or ILIKE the original pattern gives an empty result while the mutated patterns give non-empty results on the corresponding dataset. For NOT LIKE and NOT ILIKE the pattern with ‘_’ gives a non-empty result while the mutated patterns will give an empty result.
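The two rules above can be sketched in a few lines of Python. This is an illustration rather than the XData string solver: it assumes no escape characters, instantiates every remaining ‘_’ with the arbitrary letter x and every remaining ‘%’ with the empty string, and the function names are hypothetical.

```python
import re

def like(value, pattern):
    """Simplified SQL LIKE: '%' is any string, '_' is any single character."""
    regex = "".join(
        ".*" if ch == "%" else "." if ch == "_" else re.escape(ch)
        for ch in pattern)
    return re.fullmatch(regex, value, re.DOTALL) is not None

def mutate_at(pattern, index, replacement):
    """Replace the single character at `index` with `replacement`."""
    return pattern[:index] + replacement + pattern[index + 1:]

def instantiate(pattern, fill="x"):
    """Turn a pattern into one concrete string that matches it."""
    return pattern.replace("%", "").replace("_", fill)

def killing_values(pattern):
    """One attribute value per wildcard occurrence, keyed by its position."""
    values = {}
    for i, ch in enumerate(pattern):
        if ch == "%":
            # '%' replaced by two underscores: too long for '_' or for no wildcard.
            values[i] = instantiate(mutate_at(pattern, i, "__"))
        elif ch == "_":
            # '_' removed: too short for the original pattern.
            values[i] = instantiate(mutate_at(pattern, i, ""))
    return values

if __name__ == "__main__":
    print(killing_values("Comp%"))
    print(killing_values("Comp_"))
```

For ‘Comp%’ the generated value matches the original pattern but neither ‘Comp_’ nor ‘Comp’; for ‘Comp_’ the generated value fails the original pattern but matches both ‘Comp%’ and ‘Comp’.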

5 Handling NULLs

In our earlier work xdata:icde11 , we could not handle NULLs. In this section, we discuss how we model NULLs using regular non-NULL values; to the best of our knowledge, none of the SMT solvers supports NULL values with SQL NULL value semantics.

To model NULLs for string attributes, we enumerate a few more values in the enumerated type and designate them NULLs. For example, the domain of course_id is modeled in CVC3 as follows:

DATATYPE course_id = CS190 | CS632 | NULL_course_id_1 | NULL_course_id_2 END;

Here, the first two values are regular values from the domain of course_id, while the last two values are used as NULLs. For numeric values, we model NULLs as any integer in a range of negative values that are not part of the given domain of that numeric value.

Next, we define a function which identifies which values are NULL values and which are not. This function is syntactic sugar for dealing with NULLs cleanly and is defined per domain to identify the NULLs in that particular domain. In addition to specifying which values are NULLs, we also explicitly need to state that the other values are NOT NULL. Otherwise, the solver may choose to treat a NON-NULL value as a NULL value. Following is an example of the function in CVC3:

ISNULL_COURSE_ID : course_id -> BOOLEAN;

ASSERT NOT ISNULL_COURSE_ID(CS190);

ASSERT NOT ISNULL_COURSE_ID(CS632);

ASSERT ISNULL_COURSE_ID(NULL_course_id_1);

ASSERT ISNULL_COURSE_ID(NULL_course_id_2);

We also need to enforce another property of nulls, namely, that nulls are not comparable. To do so, we choose different NULL values for different constraint variables that may potentially be assigned a null value, thus implicitly enforcing an inequality between them.

The capability to generate NULLs enables us to handle nullable foreign keys and selection conditions involving IS NULL checks, and to kill mutations of COUNT(attr) to COUNT(*).

5.1 Nullable Foreign Keys

If a foreign key attribute is nullable, then the foreign key constraint is encoded in the SMT solver by forcing the values of that attribute to be either values from the corresponding primary key values or NULL values; this allows the SMT solver to assign NULLs to foreign keys if required. Nullable foreign keys allow us to kill more mutants than is possible if the foreign key attribute is not nullable. (Our implementation handles multi-attribute foreign and primary keys.)

5.2 IS NULL / NOT IS NULL Clause

If the query contains an IS NULL condition on an attribute, we explicitly assign (a different) NULL to that attribute for each tuple if the query contains only inner joins or only a single relation (provided the attribute is nullable; attributes declared as primary key or as not null cannot be assigned a NULL value).

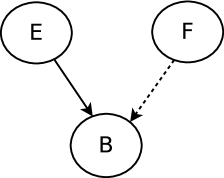

However in case the query contains an outer join there may be multiple ways to ensure that an attribute has NULL value. Let us consider the join condition E1 E2. If the IS NULL condition is on an attribute of E1 we need to ensure that the value of that attribute is NULL. If the IS NULL condition is on an attribute on E2 we need to ensure that either (a) that attribute is NULL (which may not be possible if E1 is a relation and the attribute is not nullable) or (b) for that tuple in E1 there does not exist any matching tuple in E2; this can be done by a minor change in the algorithm to handle NOT EXISTS subqueries as described in Section 7.1 (Algorithm 1). We omit details for brevity.

We consider the mutation from IS NULL to NOT IS NULL. The first dataset (the one that generates non-empty results on the original query) kills the mutation of IS NULL to NOT IS NULL if the IS NULL condition appears in conjunction with other conditions. In the presence of disjunctions, we generate a dataset such that the IS NULL condition is satisfied while the conditions present in disjunction with the IS NULL condition are not satisfied. If the query contains an IS NULL condition, the dataset gives a non-empty result on the query whereas the NOT IS NULL mutant gives an empty result, and vice versa. We also consider the mutation where the mutant query does not contain the IS NULL condition. In order to kill this mutation, we generate a tuple with the IS NULL condition replaced by NOT IS NULL (with the conditions present in disjunction with the IS NULL not being satisfied). The original query gives an empty result while the mutant gives a non-empty result.
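The two datasets described above can be illustrated with a small script. This is only a sketch: the `student` table, its `advisor` column and the helper `run` are hypothetical names chosen for illustration, SQLite stands in for the database, and the mutant is written `IS NOT NULL` as in standard SQL.

```python
import sqlite3

def run(rows, where):
    """Load rows into a fresh in-memory table and return the ids selected by `where`."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE student (id INTEGER PRIMARY KEY, advisor TEXT)")
    con.executemany("INSERT INTO student VALUES (?, ?)", rows)
    result = [r[0] for r in con.execute(
        f"SELECT id FROM student WHERE {where} ORDER BY id")]
    con.close()
    return result

original = "advisor IS NULL"
mutant_negated = "advisor IS NOT NULL"   # IS NULL mutated to its negation
mutant_missing = "1 = 1"                 # IS NULL condition dropped

d1 = [(1, None)]      # the IS NULL condition is satisfied
d2 = [(1, "Katz")]    # the IS NULL condition is replaced by its negation

# A dataset kills a mutant when query and mutant return different results.
kills_negated = run(d1, original) != run(d1, mutant_negated)
kills_missing = run(d2, original) != run(d2, mutant_missing)
```

Note that the first dataset alone does not distinguish the original query from the mutant with the missing condition, which is why the second dataset is needed.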

If the query contains the condition NOT IS NULL the corresponding mutations can be killed in a similar manner.

5.3 NULLs and COUNT(*)

To kill the mutation from COUNT(attr) to COUNT(*), where attr is a set of attributes, we create a dataset such that all tuples in a group have attr as NULL (provided all attributes in attr are nullable and none of them is forced to be non-null by selection or join conditions). COUNT(attr) then gives a count of 0 while COUNT(*) gives a count equal to the total number of tuples in the group.

In order to kill mutations of COUNT(*) to COUNT(attr), for any set of attributes attr, we create a dataset such that all nullable columns (columns that can be assigned NULL values and do not have conditions that force them to be not NULL) have NULL values. If any attribute in attr is not nullable, COUNT(*) and COUNT(attr) are equivalent, so the mutant cannot be killed.
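As a small illustration of why an all-NULL group separates the two aggregates, the following sketch runs both forms in SQLite. The reduced `takes` schema and the inserted values are assumptions made for the example, not data produced by XData.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE takes (id TEXT, course_id TEXT, grade TEXT)")
# One group (id = 'S1') in which the nullable attribute grade is NULL everywhere.
con.executemany(
    "INSERT INTO takes VALUES (?, ?, ?)",
    [("S1", "CS190", None), ("S1", "CS632", None)],
)

count_attr = con.execute("SELECT id, COUNT(grade) FROM takes GROUP BY id").fetchall()
count_star = con.execute("SELECT id, COUNT(*) FROM takes GROUP BY id").fetchall()
con.close()

# COUNT(grade) ignores NULLs, COUNT(*) counts every tuple in the group.
mutation_killed = count_attr != count_star
```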

6 Constrained Aggregation

In [xdata:icde11] we considered aggregates which did not have any constraints on the aggregation result, e.g. via a HAVING clause, or in an enclosing SQL query of a subquery with aggregation. In this section, we discuss techniques for data generation for queries which have constrained aggregation. We assume that each aggregate is on a single attribute, not on multiple attributes or expressions. We also assume that aggregation constraints do not involve disjunctions.

Consider a HAVING clause constraint on an aggregate of an attribute. If the domain of that attribute is restricted to [0,5], it may not be possible to generate a single tuple such that the aggregation constraint is satisfied. Most constraint solvers, including CVC3, do not support a relation type where the number of tuples may be left unspecified. Some solvers like Alloy [alloy] do support a relation type. However, there are other limitations to using Alloy, since it is very slow and supports only the integer datatype. We model relations as arrays of tuples with a predefined number of tuples in each relation; such aggregation constraints cannot be translated into SMT solver constraints while leaving the number of tuples unspecified. Hence, before generating SMT solver constraints we must (a) estimate the number of tuples required to satisfy the aggregation constraints, and (b) in case the input to the aggregate is a join of multiple base relations, translate this number to an appropriate number of tuples for each base relation so that the join result contains exactly that many tuples.

In Section 6.1 we discuss how to estimate the number of tuples to satisfy an aggregation constraint. We discuss data generation for constrained aggregation on a single relation in Section 6.2 and for join results in Section 6.3.

6.1 Estimating Number of Tuples per Group

We now consider how to estimate the number of values (tuples) needed to satisfy aggregation constraints. For each attribute $A$ on which there are aggregate constraints, we consider the following when estimating this number.

1. Aggregation Properties: Constraint variables sumA, minA, maxA, avgA and countA correspond, respectively, to the results of the aggregation operators SUM, MIN, MAX, AVG and COUNT on attribute $A$. Note that countA also indicates the number of tuples at the input to the aggregation. We add the following conditions:

   - Since the value in each tuple cannot be less than minA or greater than maxA, it follows that $minA \times countA \le sumA \le maxA \times countA$.

   - If the domain of $A$ is integer and $A$ is unique, $minA + (minA + 1) + \dots + (minA + countA - 1) \le sumA \le (maxA - countA + 1) + (maxA - countA + 2) + \dots + maxA$. We use the simplified form of the above expression for constraint generation.

   - sumA

2. Domain Constraints: Constraint variables dminA and dmaxA correspond to the minimum and maximum value in the domain of attribute $A$. We add the following constraints:

   - $dminA \le minA \le maxA \le dmaxA$. This constraint states that minA cannot be less than the domain minimum or greater than maxA, and that maxA cannot exceed the domain maximum.

3. Aggregation Constraints: Aggregation constraints specified by the query (e.g. a bound on sumA).

4. Selection Conditions: If the query contains non-aggregate constraints on attribute $A$, we add these to the tuple estimation constraints. For example, consider the query,

   SELECT dept_name, SUM(credits) FROM course INNER JOIN dept USING (dept_name) WHERE credits <= 4 GROUP BY dept_name HAVING SUM(credits) < 13

   Here, because the credits column has a selection condition on it, its range is constrained. Hence, the constraint maxcredits $\le 4$ is also added to the list of constraints above.

The solver returns a value for the count which satisfies all the constraints above, but the value may not be the minimum. Since we are interested in small datasets, we want the count to be as small as possible. Hence, we run CVC3 with the count fixed to different values, up to MAX_TUPLES, and choose the smallest value of the count for which CVC3 gives a valid answer. (MAX_TUPLES is set to a fixed small bound in our experiments, since we are interested in small datasets.) We borrow the idea of calculating the number of tuples for the aggregation constraint using multiple tries from RQP [RQP]. However, note that the problem is different here, since, unlike RQP, we do not know the value of the aggregation in the query result. Note that the above procedure works even in case of multiple aggregates on the same column or on different columns.
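The search for the smallest feasible count can be sketched as follows. This is a simplified stand-in, not the CVC3-based procedure: it handles only a SUM constraint on a non-unique integer attribute, uses the bound $dminA \times countA \le sumA \le dmaxA \times countA$ directly, and the names `estimate_count`, `sum_ok` and the value of `MAX_TUPLES` are assumptions made for illustration.

```python
from typing import Callable, Optional

MAX_TUPLES = 32  # hypothetical cap; the value used in the experiments is not assumed here

def estimate_count(dmin: int, dmax: int,
                   sum_ok: Callable[[int], bool],
                   max_tuples: int = MAX_TUPLES) -> Optional[int]:
    """Smallest tuple count for which some achievable SUM satisfies `sum_ok`.

    For a non-unique integer attribute with domain [dmin, dmax], every
    integer sum between dmin * count and dmax * count is achievable.
    """
    for count in range(1, max_tuples + 1):
        lo, hi = dmin * count, dmax * count
        if any(sum_ok(s) for s in range(lo, hi + 1)):
            return count
    return None  # no count up to max_tuples satisfies the constraint

# Domain [0, 5] with a (hypothetical) constraint SUM(A) > 10 needs three tuples.
needs_three = estimate_count(0, 5, lambda s: s > 10)
# credits restricted to [1, 4] (assumed domain) with SUM(credits) < 13 needs one.
needs_one = estimate_count(1, 4, lambda s: s < 13)
# A constraint that cannot be met within the cap yields no estimate.
infeasible = estimate_count(0, 5, lambda s: s > 1000)
```

Trying counts in increasing order mirrors the idea of fixing the count and asking the solver for a valid answer, stopping at the first success.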

Heuristic Extensions

The value with which the aggregate is compared may be a column (i.e. a variable), e.g. HAVING SUM(R.a) relop S.b. This can happen when S.b is a group by attribute or when the constrained aggregation is in a subquery and S.b is a correlation variable from an outer query. For such cases, we replace the column name by a CVC3 variable when estimating the number of tuples. We also add the domain and selection conditions for that column as constraints on the CVC3 variable. The solver then chooses a value for the number of tuples such that the aggregate constraint is satisfied for some value of the variable in its domain.

If the aggregation has a DISTINCT clause we add constraints to make the corresponding aggregated attribute unique.

Handling constrained aggregation in general for these cases is an area of future work.

6.2 Data Generation for Aggregate on a Single Relation

In case the aggregate is on a single relation, the number of tuples estimated is assigned to that relation. For each result tuple generated by an aggregation operator, we create a tuple of constraint variables where group by attributes are equated to the corresponding values in the inputs and aggregation results are replaced by arithmetic expressions. For example, SUM(R.a) is replaced by R[i].a + … + R[i+k].a, where R[i] to R[i+k] are the tuples assigned for a particular group. We also add constraints to ensure that no two tuples in R[i]…R[i+k] are the same if the relation has a primary key.

The tuple variables created as above can be used for other operations e.g. selection or join that use the aggregation result as inputs.

In case data generation for multiple groups is required we add constraints to ensure that at least one of the GROUP BY attributes is distinct across groups.

Consider the query,

SELECT id, COUNT(*) FROM takes WHERE grade = 'A+' AND year = 2010 GROUP BY id HAVING COUNT(*) < 3

For this query the number of tuples in the group is estimated to be 1. We assign a single tuple to the takes relation and add constraints to ensure that grade for this tuple is ‘A+’ and year is 2010.

Note that if the XData system generates additional tuples for the takes relation (for example, because this query is part of a subquery and there are other instances of the takes relation outside the subquery, or because takes is referenced by some other relation and we need to generate additional tuples to satisfy foreign key dependencies), the value of COUNT(*) in the HAVING clause may change and the constrained aggregation may no longer be satisfied. In order to ensure that the HAVING clause is not affected, we need to ensure that no other tuple in the takes relation belongs to the same group.

In general, to ensure that the additional tuples generated do not cause problems, we add constraints to ensure that any additional tuple either has a different value for a GROUP BY attribute, and hence belongs to a different group, or fails at least one of the selection conditions. In the above example, we assert that, for the additional tuple, either the id is different, or grade $\neq$ 'A+', or year $\neq$ 2010. In practice, the conditions are generated by Algorithm 5 described in Appendix C, which handles the general case of aggregation on join results, to assert these constraints as described in Section 6.3.2.
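The effect of additional tuples on the HAVING clause can be checked with a short script. The sketch below uses SQLite and a reduced `takes` schema; the tuples and the helper `run` are hypothetical and only illustrate the condition that extra tuples must either fall in another group or fail a selection condition.

```python
import sqlite3

QUERY = """
SELECT id, COUNT(*) FROM takes
WHERE grade = 'A+' AND year = 2010
GROUP BY id HAVING COUNT(*) < 3
"""

def run(rows):
    """Evaluate QUERY on a fresh in-memory takes relation holding `rows`."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE takes (id TEXT, course_id TEXT, grade TEXT, year INTEGER)")
    con.executemany("INSERT INTO takes VALUES (?, ?, ?, ?)", rows)
    out = con.execute(QUERY).fetchall()
    con.close()
    return out

# The single tuple assigned to the group.
base = [("S1", "CS190", "A+", 2010)]
# Extra tuples that land in the same group push COUNT(*) to 3.
same_group = base + [("S1", "CS632", "A+", 2010), ("S1", "CS101", "A+", 2010)]
# Extra tuples that fail a selection condition leave the group untouched.
safe_extra = base + [("S1", "CS632", "B", 2010), ("S2", "CS101", "A+", 2009)]

results = {name: run(rows) for name, rows in
           [("base", base), ("same_group", same_group), ("safe_extra", safe_extra)]}
```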

6.3 Data Generation for Aggregation on JOIN Results

In case the aggregate is on a join result we need to assign tuples to each of the relations such that the join results in the required number of tuples. In this section, we address this issue.

6.3.1 Estimating Number of Tuples per Relation

We assume here that all join conditions are equijoins. Let $n$ denote the required number of tuples. Consider a query that joins several relations and needs $n$ tuples in a group for its GROUP BY. Each of the relations needs to be assigned a specific number of tuples such that the join produces exactly $n$ tuples.

A naive way is to assign all $n$ required tuples to one relation and to assign the same value to all its joining attributes. Each relation joining with it is assigned only a single tuple, whose joining attribute(s) are equated to the corresponding join attribute(s) of the first relation. All other relations are also assigned a single tuple each, and their joining attributes are equated. It is easy to see that this assignment leads to $n$ tuples in the output. The assignment, however, does not work if the joining attribute(s) of the chosen relation are unique (either due to primary keys or by inference from other primary keys) or if multiple values are required for attributes of some other relation (to satisfy the aggregate constraint).
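The naive assignment can be sketched for a three-relation equijoin chain. The relation shapes r1(a, x), r2(a, b), r3(b), the join values and the function names below are assumptions made for this illustration; they are not taken from the XData implementation.

```python
def naive_assignment(n):
    """n tuples for r1 sharing one join value; a single tuple for r2 and r3."""
    r1 = [{"a": 1, "x": i} for i in range(n)]  # same join value, distinct payload
    r2 = [{"a": 1, "b": 7}]                    # joins with r1 on a
    r3 = [{"b": 7}]                            # joins with r2 on b
    return r1, r2, r3

def join_size(r1, r2, r3):
    """Number of tuples in r1 JOIN r2 ON a JOIN r3 ON b."""
    return sum(1 for t1 in r1 for t2 in r2 for t3 in r3
               if t1["a"] == t2["a"] and t2["b"] == t3["b"])

def has_duplicates(rel, attrs):
    """True if two tuples of rel agree on attrs, i.e. attrs cannot be a key."""
    keys = [tuple(t[a] for a in attrs) for t in rel]
    return len(keys) != len(set(keys))

r1, r2, r3 = naive_assignment(4)
size = join_size(r1, r2, r3)
# If the join attribute a were declared unique in r1, the assignment would be invalid.
violates_unique_a = has_duplicates(r1, ["a"])
```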

We define the following types of attribute(s) that are used for assigning cardinality to relations.

1. uniqueElements: Sets of attributes for which no two tuples in a group can have the same value. These sets of attributes are placed in uniqueElements, where uniqueElements[R] contains the sets of unique elements of relation R. If a relation R has unique constraints on (a,b) and (a,c), then uniqueElements[R] = {{a,b},{a,c}}.

2. singleValuedAttributes: The attribute(s) which have the same value across all tuples in a group. These attributes are placed in singleValuedAttributes.

Using the uniqueElements, singleValuedAttributes, join conditions and foreign key conditions for each relation, we estimate the number of tuples for each relation. Details are provided in Appendix A.

6.3.2 Data Generation

After getting the tuple assignment for each relation, we add CVC3 constraints to fix the number of tuples in a group to the estimated value. For each join condition, constraints are generated depending on the number of tuples assigned. For example, if both joining relations have been assigned multiple tuples, the join attributes of the i-th tuples of the two relations are equated for every i, while if one relation has multiple tuples and the other has 1 tuple, the join attribute of every tuple of the first relation is equated to that of the single tuple of the second. Constraint variables for the output of the aggregate operator are created as described earlier in Section 6.2. One difference is in handling aggregation for relations that have been assigned one tuple. For example, a SUM over an attribute of R is replaced by the sum of that attribute over R[i] to R[i+k], where R[i] to R[i+k] are the tuples assigned for a particular group, if R has multiple tuples; otherwise it is replaced by an expression over the only tuple assigned for the group. Unique constraints are added as pairwise non-equality constraints to ensure that the sets in uniqueElements have distinct values.

Constraints to ensure that additional tuples do not alter satisfaction of the aggregate conditions for the group are generated using Algorithm 5 described in Appendix C. The inputs to the algorithm are (a) the query tree corresponding to the block that contains the constrained aggregate, (b) the tuples generated to satisfy the constrained aggregation, and (c) conditions that equate each GROUP BY attribute to the corresponding value in those tuples.

Data generation for multiple groups is done by adding constraints to ensure that at least one of the GROUP BY attributes is distinct across groups.

The constraints are then given as input to CVC3, and output of CVC3 gives us the required dataset.

Discussion:

Our tuple assignment techniques always assign either 1 tuple or $n$ tuples to a relation, where $n$ is the number of tuples estimated for the group. There could be cases where such an assignment is not possible and a different assignment is required to generate datasets. However, with such an assignment it becomes difficult to assert constraints such that the join of the relations generates exactly the required number of tuples. Handling tuple assignment for cases where neither 1 nor $n$ tuples can be assigned to all the relations to satisfy the aggregation constraint is an area of future work.

6.4 Constraint Aggregation and Mutant Killing

Techniques for killing aggregation mutations were described in [xdata:icde11] (summarized in Section 2.2). A dataset to kill aggregation mutations is generated by creating multiple tuples per group using the techniques of constrained aggregation described above. Different mutations of the aggregate operator will produce different values on this dataset. To ensure that the value difference due to an aggregate mutation causes a difference in the constrained aggregate result, we need to ensure that only one of the query and its mutation satisfies the aggregation constraint. For some cases, we have implemented constraints to ensure that there is a difference in the constrained aggregate result. Implementing this in general is an area of future work.

Datasets for killing mutations of comparison operators in aggregation constraint (e.g. having clause) are generated using existing techniques in XData for handling comparison mutations. Killing mutations due to additional and missing group by attributes is discussed in Section 9.2.

7 Where Clause Subqueries

We now consider test data generation for SQL queries involving subqueries. Data generation for subqueries in the FROM clause is discussed in Section 10; in this section we consider data generation and mutation killing for subqueries in the where clause. We initially assume in Section 7.1 that subqueries do not have aggregations. Subqueries with aggregation are discussed in Section 7.2.

7.1 Data Generation for Subqueries Without Aggregation

EXISTS Connective

Consider a query Q with a nested subquery predicate EXISTS(SQ). To generate a non-empty result for Q we need to ensure that SQ gives a non-empty result. If SQ does not have any correlation variables, we treat subquery SQ as a query in itself and add constraints to generate a non-empty dataset for the subquery using our data generation techniques. We then add constraints for Q for predicates other than the subquery. The dataset is then generated based on these constraints.

If SQ has correlation conditions, then for every tuple that is generated for Q, we call a function to generate the constraints for data generation of the subquery, with the correlation variables passed as parameters. The correlation conditions are treated as selections in SQ with the given constraint variables, and appropriate constraints are generated for SQ.

For example, consider the query

SELECT course_id, title
FROM course INNER JOIN section USING (course_id)
WHERE year = 2010 AND EXISTS (SELECT * FROM prereq
WHERE prereq_id = 'CS-201' AND prereq.course_id = course.course_id)

To generate a dataset for the outer query, we generate a single tuple each for the course and section relations. Let the tuples be course[1] and section[1]. We then add constraints to assert section[1].year=2010 and course[1].course_id = section[1].course_id. We pass the correlation variable course[1].course_id as a parameter to the function for generating constraints for the subquery. For this tuple in the outer query block, we generate a tuple in prereq relation, say prereq[1], for which we add constraints to ensure that prereq[1].prereq_id = ‘CS-201’ and prereq[1].course_id = course[1].course_id.
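The dataset described above can be written out and checked against the query. The sketch below uses SQLite with reduced schemas; the concrete values ('CS-301', 'Databases', section '1') are hypothetical instantiations of course[1], section[1] and prereq[1], chosen only to satisfy the stated constraints.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE course  (course_id TEXT PRIMARY KEY, title TEXT);
CREATE TABLE section (course_id TEXT, sec_id TEXT, year INTEGER);
CREATE TABLE prereq  (course_id TEXT, prereq_id TEXT);
-- course[1] and section[1]: equal course_id, section[1].year = 2010
INSERT INTO course  VALUES ('CS-301', 'Databases');
INSERT INTO section VALUES ('CS-301', '1', 2010);
-- prereq[1]: prereq_id = 'CS-201', course_id equal to course[1].course_id
INSERT INTO prereq  VALUES ('CS-301', 'CS-201');
""")

QUERY = """
SELECT course_id, title
FROM course INNER JOIN section USING (course_id)
WHERE year = 2010 AND EXISTS (SELECT * FROM prereq
      WHERE prereq_id = 'CS-201' AND prereq.course_id = course.course_id)
"""

with_prereq = con.execute(QUERY).fetchall()
# Without the subquery tuple the EXISTS predicate fails and the result is empty.
con.execute("DELETE FROM prereq")
without_prereq = con.execute(QUERY).fetchall()
con.close()
```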

NOT EXISTS Connective

Consider a query Q with a nested subquery predicate NOT EXISTS(SQ). Here we need to ensure that the number of tuples from SQ is 0.

If SQ has only a single relation R, we add constraints to ensure that every tuple in R fails at least one of the selection conditions. In case SQ has a join of two or more relations, we traverse the tree of SQ and recursively add constraints on selections and joins to ensure that no tuple reaches the root of SQ. If the join is an INNER JOIN, we need to ensure that there exists no pair of tuples for which the join conditions are satisfied, or that one of the inputs to the join is empty. If the join is a LEFT OUTER JOIN, we need to ensure that there is no tuple in the left subtree. Similarly, for a RIGHT OUTER JOIN we need to ensure that no tuple is projected from the right subtree.

Constraints to ensure that there is no tuple from the NOT EXISTS subquery are added using Algorithm 1. If the subquery contains selections with disjunctions, Step 4 of our algorithm may fail to isolate the required selection conditions. Our algorithm is therefore currently restricted to NOT EXISTS queries that do not contain any disjunction. At Step 5 we assert negations of the constraints corresponding to the particular selection condition. For example, if the selection condition is itself a NOT EXISTS subquery, we assert constraints corresponding to the EXISTS form of that subquery. Correlation variables in SQ, if present, are treated in the same manner as for an EXISTS subquery and passed as parameters. Correlation conditions are then treated as selections in Algorithm 1.

IN/NOT IN Connective

We convert subqueries of the IN type to EXISTS type subquery by adding the IN connective as a correlation condition in the WHERE clause of the EXISTS subquery. The same techniques as that of EXISTS are then used.

Similarly, subqueries using a NOT IN connective are converted to use the NOT EXISTS connective.

For example,

r.a IN (SELECT s.b FROM .. WHERE ..)

is converted to

EXISTS (SELECT s.b FROM .. WHERE .. AND r.a = s.b)
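The rewrite can be checked on a small instance. In the sketch below the tables `r` and `s`, the extra column `c` and the filter `s.c = 10` are hypothetical stand-ins for the elided FROM and WHERE parts, and the data contain no NULLs.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE r (a INTEGER);
CREATE TABLE s (b INTEGER, c INTEGER);
INSERT INTO r VALUES (1), (2), (3);
INSERT INTO s VALUES (1, 10), (3, 10), (2, 99);
""")

# Original form: IN connective.
in_form = con.execute(
    "SELECT a FROM r WHERE r.a IN (SELECT s.b FROM s WHERE s.c = 10) ORDER BY a"
).fetchall()

# Rewritten form: the IN comparison becomes a correlation condition.
exists_form = con.execute(
    "SELECT a FROM r WHERE EXISTS "
    "(SELECT s.b FROM s WHERE s.c = 10 AND r.a = s.b) ORDER BY a"
).fetchall()
con.close()
```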

ALL/ANY Connective

Subqueries with ALL and ANY connectives always appear with one of the comparison operators, for example “> ALL” or “> ANY”.

We transform subqueries with the ANY connective into an EXISTS query with the comparison condition added as a correlation condition in the WHERE clause.

Subqueries with ALL are transformed to a NOT EXISTS query whose WHERE clause contains, as a correlation condition, the negation of the comparison condition or a check that either of the correlation variables in that condition is NULL.

For example,

r.a >ALL (SELECT s.b FROM .. WHERE ..)

is converted to

NOT EXISTS

(SELECT s.b FROM .. WHERE .. AND (r.a <= s.b

OR r.a IS NULL OR s.b IS NULL))
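The NULL checks in the rewritten condition can be motivated by modelling SQL's three-valued logic directly. The sketch below is an illustration in plain Python, with `None` standing for NULL/UNKNOWN; the function names are hypothetical, and a row is taken to be selected only when the predicate evaluates to TRUE.

```python
from typing import Optional, Sequence

def gt(a: Optional[int], b: Optional[int]) -> Optional[bool]:
    """SQL three-valued a > b; None stands for UNKNOWN."""
    if a is None or b is None:
        return None
    return a > b

def gt_all(a: Optional[int], values: Sequence[Optional[int]]) -> Optional[bool]:
    """SQL three-valued a > ALL(values): FALSE dominates, then UNKNOWN."""
    results = [gt(a, b) for b in values]
    if any(r is False for r in results):
        return False
    if any(r is None for r in results):
        return None
    return True  # also the result for an empty subquery

def not_exists_rewrite(a: Optional[int], values: Sequence[Optional[int]]) -> bool:
    """NOT EXISTS (.. WHERE a <= b OR a IS NULL OR b IS NULL)."""
    return not any(a is None or b is None or a <= b for b in values)

# Compare both forms on a few outer values and subquery results.
cases = [(a, vs) for a in (None, 0, 5, 10)
         for vs in ([], [1, 2], [1, None], [7], [None])]
agree = all((gt_all(a, vs) is True) == not_exists_rewrite(a, vs) for a, vs in cases)
```

Without the two NULL checks, a NULL in the subquery result would make `> ALL` UNKNOWN while the NOT EXISTS form would still select the row.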

Scalar Subqueries

Scalar subqueries are subqueries that return only a single result. We consider scalar subqueries in the where clause which are used in conditions of the form SSQ relop attr/value, where SSQ is a scalar subquery, attr is an attribute from the outer block of the query and value is a constant. For scalar subqueries, we generate only a single tuple for the subquery and assert that the projected attribute satisfies the comparison operator. Correlation conditions, if any, are treated in the same manner as for subqueries with the EXISTS connective.

7.2 Data Generation for Subqueries With Aggregation

In this section, we consider subqueries that have aggregation. Constraints involving aggregation can be in the inner query (e.g. a HAVING clause) or in the outer query (e.g. r.a relop (SELECT agg(s.b) …)).

Non Scalar Subqueries

The techniques in Section 7.1 can be applied for EXISTS subqueries without constrained aggregation, since we only need to ensure empty / non-empty results for the subquery. For NOT EXISTS Algorithm 1 covers the case of aggregate operators as well.

In case of constrained aggregation in EXISTS subquery (e.g. HAVING clause), we use the techniques described in Section 6 to generate tuples for the subquery; multiple tuples may be generated. In case there is a constrained aggregation in the NOT EXISTS subquery, we assert constraints to ensure that either the constraint aggregation is not satisfied or there is no tuple input to the aggregation constraint.

Subqueries of the IN/NOT IN/ALL/ANY type having an aggregate as the projected attribute can be transformed into EXISTS/NOT EXISTS in a similar manner as shown in Section 7.1. In this case, the projected aggregate is added as a HAVING clause. For example,

r.a NOT IN (SELECT agg(s.b) FROM .. WHERE .. )

is converted to

NOT EXISTS (SELECT agg(s.b) FROM .. WHERE .. HAVING agg(s.b) = r.a)

The techniques for constrained aggregation in EXISTS/ NOT EXISTS can then be applied.

Scalar Subqueries

Consider the following query involving the relation takes(id, course_id, sec_id, semester, year, grade),

SELECT id FROM takes WHERE grade < (SELECT MIN(grade) FROM takes WHERE year = 2010)

To generate datasets for this query, we add constraints to generate a tuple, takes[1], for the takes relation in the outer query. The tuple estimation technique for the subquery estimates that one tuple is required to satisfy the comparison operator (<). We add constraints to generate one more tuple, say takes[2], for the takes relation corresponding to the subquery, and add a constraint to ensure that takes[2].year = 2010 for that tuple. We then add the constraint takes[1].grade < takes[2].grade to ensure that the grade of the outer query tuple is less than the grade of the subquery tuple. Since takes[1] does not participate in the aggregation, we need to ensure that it does not satisfy the conditions of the subquery block. To ensure this, the constraint takes[1].year $\neq$ 2010 is added.
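A two-tuple dataset of this kind can be tried out directly. The sketch below assumes a reduced `takes` schema with a numeric grade (an assumption made only so that the comparison is unambiguous) and shows why the outer tuple must stay out of the subquery block.

```python
import sqlite3

QUERY = ("SELECT id FROM takes WHERE grade < "
         "(SELECT MIN(grade) FROM takes WHERE year = 2010)")

def run(rows):
    """Evaluate QUERY on a fresh in-memory takes relation holding `rows`."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE takes (id TEXT, year INTEGER, grade INTEGER)")
    con.executemany("INSERT INTO takes VALUES (?, ?, ?)", rows)
    out = con.execute(QUERY).fetchall()
    con.close()
    return out

# Outer tuple has the lower grade and a year other than 2010;
# the subquery tuple has year = 2010.
good = run([("S1", 2009, 5), ("S2", 2010, 8)])
# If the outer tuple also satisfied year = 2010, it would enter the MIN
# and the comparison 5 < 5 would fail.
bad = run([("S1", 2010, 5), ("S2", 2010, 8)])
```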

In general, consider a query of the form

SELECT * FROM rel1 JOIN .. WHERE cond1 AND ...

AND attr1 relop (SELECT agg(sqrel1.attr2)

FROM sqrel1 JOIN ... WHERE sqcond1 AND ..)

For such subqueries we need to ensure that the aggregate, agg(sqrel1.attr2) satisfies the condition attr1 relop agg(sqrel1.attr2). In order to do this, we may need to project multiple tuples from the subquery. We use the techniques described in Section 6 to estimate the number of tuples, assign the desired number of tuples to each relation and generate constraints for data generation. In order to ensure that no additional tuple affects the aggregate value, we use the techniques described in Algorithm 5 in Appendix C. The input to the algorithm is the same as described in Section 7.4 below.

Similar to EXISTS subquery, in the presence of correlation conditions, we generate one group of tuples in the subquery for every tuple in the outer query.

7.3 Killing Subquery Connective Mutations

EXISTS/NOT EXISTS, IN/NOT IN Mutation

The dataset generated for the original query will kill the mutation between IN and NOT IN, and between EXISTS and NOT EXISTS if the subquery condition is present in the form of conjunctions with other conditions. In the presence of disjunctions, we generate a dataset such that the subquery condition is satisfied and conditions in disjunction with it are not. The EXISTS clause gives an empty result when NOT EXISTS gives a non-empty result, and vice versa. Similar datasets are generated to kill mutations of IN vs. NOT IN.

Comparison Operator Mutation

For conditions of the form “r.A relop (SSQ)”, where SSQ is a scalar subquery, as well as conditions of the form “r.A relop [ALL/ANY] SQ”, we consider mutations among the different relops. Similar to the approach shown in Section 2.2, we generate data for three cases, with relop replaced by <, = and >.

ANY/ALL Mutation

This mutation involves changing from ANY to ALL or vice versa. Since the ANY subquery has been transformed to EXISTS the mutation from ANY to ALL becomes a double mutation - replacing EXISTS with NOT EXISTS and negating the correlation condition corresponding to the ANY comparison condition. The case for ALL to ANY mutation is similar.

Consider the correlation condition added by the transformation of ALL/ANY to EXISTS/NOT EXISTS, that is, the comparison between the outer attribute and the projected attribute of the subquery. We generate a dataset with two tuples in the subquery for every tuple in the outer query. We add constraints so that the comparison holds for one subquery tuple and its negation holds for the other. The ANY query will then produce a non-empty result while the ALL query will produce an empty result.
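The two-tuple dataset can be illustrated in a few lines. This sketch models the ANY and ALL predicates over non-NULL values with Python's `any` and `all`; the function names and the concrete values are hypothetical.

```python
import operator

def any_query(outer, inner, relop):
    """Outer values a for which a relop ANY(inner) holds (no NULLs assumed)."""
    return [a for a in outer if any(relop(a, b) for b in inner)]

def all_query(outer, inner, relop):
    """Outer values a for which a relop ALL(inner) holds (no NULLs assumed)."""
    return [a for a in outer if all(relop(a, b) for b in inner)]

outer = [5]
inner = [3, 7]  # 5 > 3 satisfies the comparison, 5 > 7 satisfies its negation

any_result = any_query(outer, inner, operator.gt)
all_result = all_query(outer, inner, operator.gt)
mutation_killed = any_result != all_result
```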

Missing Subquery Mutation

To kill the mutation in which an EXISTS condition is missing, we generate a dataset with the EXISTS condition replaced by NOT EXISTS. If the EXISTS condition is missing, the mutant query will give a non-empty answer while the original query will give an empty answer. Similarly, for killing mutations with missing subquery connectives in other cases, we replace NOT EXISTS with EXISTS, IN with NOT IN and NOT IN with IN.

The datasets generated to kill comparison operator mutation will also kill mutations involving missing scalar/ALL/ANY subqueries. If the subquery is present the original query will give an empty result on at least one of the three datasets while the mutant query will produce a non-empty result on all the three datasets.

7.4 Killing Mutations in a Subquery

We also need to generate test data for killing mutations in subquery blocks. For the EXISTS connective and for scalar subqueries we treat a subquery block as a normal query and generate sets of constraints to kill mutations in the subquery block. For each constraint set, we also add constraints to ensure a non-empty result on the outer query block.

For killing selection (comparison mutations, string mutations, NULL mutations), JOIN, and HAVING clause mutations, the techniques described in [xdata:icde11] and this paper generate datasets that produce an empty result on either the query or the mutant, but not both. Thus, for these mutations, the EXISTS condition or the comparison operator (for scalar subqueries) is satisfied for either the subquery or its mutation, but not both, enabling XData to kill the mutation.

Extra tuples may get generated for the subquery if there are relations in the subquery that are repeated in the query or are referenced by other relations through foreign keys. Because of these extra tuples, an empty result may turn into a non-empty result or vice versa. To prevent this, we add constraints using Algorithm 5 described in Appendix C, whose inputs are (a) the query tree of the subquery, (b) the tuples created for the subquery, and (c) the correlation conditions, with correlation variables being passed as parameters. The constraints ensure that the extra tuples do not affect the result of the subquery.

In case there are disjunctions with the subquery, we add constraints to ensure that other conditions in disjunction with the subquery (e.g. P or EXISTS(Q)) are not satisfied as described in Section 2.4.

Mutations like distinct or aggregation mutation in the project clause of the subquery create equivalent mutants of the query and hence need not be killed.

If the subquery uses the NOT EXISTS connective, we generate the datasets for killing mutations in the subquery treating the NOT EXISTS as an EXISTS condition. Out of the original query and the mutant, the query that produces empty results on the subquery satisfies the NOT EXISTS condition and produces non-empty results for the outer query. The query that does not produce empty results does not satisfy the NOT EXISTS condition and produces an empty result in the outer query. Thus, these mutations can be killed.

Subquery connectives IN, NOT IN, ANY and ALL are converted to EXISTS and NOT EXISTS as described earlier. Mutations in the subquery are killed after the conversion.

Table 2: Datasets for killing set operator mutations and the results of the set operators on them.

| Dataset | P | Q | UNION | UNION ALL | INTERSECT | INTERSECT ALL | EXCEPT | EXCEPT ALL |
|---|---|---|---|---|---|---|---|---|
| 1 | | | | | | | | |
| 2 | | | | | | | | |
| 3 | | | | | | | | |
| 4 | | | | | | | | |
| 5 | | | | | | | | |
| 6 | | | | (sum) | | | | (diff) |
| 7 | | | | | | | | |
| 8 | | | | (sum) | | (min) | | - (diff) |

8 Set Operators

In this section, we consider data generation and mutation killing for queries that contain one of the following set operators - UNION, UNION ALL, INTERSECT, INTERSECT ALL, EXCEPT, EXCEPT ALL.

8.1 Data generation

Set queries are of the form,

P SETOP Q

where SETOP is a set operator, and P and Q are queries that may be simple or compound queries themselves.

In order to generate a dataset that produces a non-empty result on this query if the SETOP is UNION(ALL) we add constraints to ensure non-empty results for P or Q or both (P and Q may have conflicting constraints so for both to have non-empty results may not always be possible).

Data generation for INTERSECT(ALL) is done in a similar manner as the EXISTS subquery described in Section 7.1. We treat the query as

SELECT * FROM (P) WHERE EXISTS

(SELECT * FROM Q WHERE pred)

where predicate pred equates each projected attribute of P to the corresponding attribute of Q. For each tuple in P, we generate a corresponding tuple in Q that satisfies the correlation condition, pred, as described in Section 7.1. Data generation for EXCEPT(ALL) is done in a similar manner using NOT EXISTS instead of EXISTS, using the techniques described earlier for the NOT EXISTS operator.
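The correspondence between the set operators and the subquery forms can be checked on a duplicate-free, NULL-free instance. The sketch below uses SQLite; the tables `p` and `q` stand in for the results of P and Q, and their columns and values are hypothetical.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE p (x INTEGER, y TEXT);
CREATE TABLE q (x INTEGER, y TEXT);
INSERT INTO p VALUES (1, 'a'), (2, 'b');
INSERT INTO q VALUES (2, 'b'), (3, 'c');
""")

# pred equates each projected attribute of P with the corresponding one of Q.
PRED = "q.x = p.x AND q.y = p.y"

intersect = con.execute(
    "SELECT x, y FROM p INTERSECT SELECT x, y FROM q").fetchall()
exists_form = con.execute(
    f"SELECT x, y FROM p WHERE EXISTS (SELECT * FROM q WHERE {PRED})").fetchall()

except_ = con.execute(
    "SELECT x, y FROM p EXCEPT SELECT x, y FROM q").fetchall()
not_exists_form = con.execute(
    f"SELECT x, y FROM p WHERE NOT EXISTS (SELECT * FROM q WHERE {PRED})").fetchall()
con.close()
```

The two forms can differ when P contains duplicates or NULLs, which is one reason the datasets for killing set operator mutations are constructed separately.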

8.2 Killing Set Operator Mutations

In order to kill the mutations among the different operators (UNION(ALL), INTERSECT(ALL), EXCEPT (ALL)) we generate datasets as described below (summarized in Table 2 along with the results for various set operators).

1. Generate a dataset that has exactly one tuple for P. Add constraints to ensure that one matching tuple exists in Q.

2. Generate a dataset that has one tuple for P. Add constraints to ensure that this tuple does not exist in Q.

3. Generate a dataset that has at least two identical tuples for Q. Add constraints to create one matching tuple for P.

4. Generate a dataset that has one tuple for Q. Add constraints to ensure that this tuple does not exist in P.

5. Generate a dataset that has at least two identical tuples for Q. Add constraints to ensure that there are no matching tuples in P.

6. Generate a dataset that has at least two identical tuples for P. Add constraints to ensure that there is exactly one matching tuple in Q.

7. Generate a dataset that has at least two identical tuples for P. Add constraints to ensure that these tuples do not exist in Q.

8. Generate a dataset that has at least two identical tuples for both P and Q.

We kill a mutation between a pair of set operators if, for some dataset, the results of the two operators as shown in Table 2 differ. Note that it may not be possible to generate some datasets because of query/integrity constraints; in particular, primary key constraints may prevent generation of datasets with duplicates. It may not be necessary to generate all datasets to kill all mutations. As an optimization, we can stop generating datasets once we have successfully generated at least one of the datasets for killing each of the mutations.

For both P and Q we have three options: generate no tuple, one tuple, or more than one tuple. Table 2 exhaustively covers all combinations (except for the case where both P and Q are empty, since then all operators would give an empty result and no mutation would be killed). Hence, these datasets are sufficient to kill all pairs of mutations. For example, the mutation between INTERSECT and INTERSECT ALL can only be killed if there is more than one matching tuple between P and Q. Dataset 8 covers this case. The only mutation that may be missed is the mutation between EXCEPT ALL and other operators except UNION ALL, since for dataset 8 we cannot guarantee how many tuples EXCEPT ALL produces; this depends on the numbers of identical tuples in P and Q. Dataset 8 would still be able to kill the mutation between UNION ALL and EXCEPT ALL, since UNION ALL would produce more tuples in the result than EXCEPT ALL. Hence, if it is possible to generate only dataset 8, mutations of other operators with EXCEPT ALL may not get killed. In order to provide completeness guarantees for killing mutations involving EXCEPT ALL, we need to generate a specific number of tuples for P and Q. This is an area of future work.
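Which operator pairs a given dataset distinguishes can be worked out with bag arithmetic. The sketch below models the results of P and Q as multisets using `collections.Counter`; the function names are hypothetical and the single tuple value `"t"` is a placeholder.

```python
from collections import Counter
from itertools import combinations

def set_ops(p, q):
    """Result bag of each SQL set operator on input bags p and q."""
    cp, cq = Counter(p), Counter(q)
    return {
        "UNION": Counter(set(cp) | set(cq)),      # duplicates removed
        "UNION ALL": cp + cq,                     # multiplicities add up
        "INTERSECT": Counter(set(cp) & set(cq)),
        "INTERSECT ALL": cp & cq,                 # minimum multiplicity
        "EXCEPT": Counter(set(cp) - set(cq)),
        "EXCEPT ALL": cp - cq,                    # positive differences only
    }

def killed_pairs(p, q):
    """Operator pairs whose results differ on the dataset (p, q)."""
    res = set_ops(p, q)
    return {(a, b) for a, b in combinations(res, 2) if res[a] != res[b]}

dataset1 = killed_pairs(["t"], ["t"])                  # one tuple each, matching
dataset8 = killed_pairs(["t", "t"], ["t", "t"])        # two identical tuples each
dataset8_skewed = killed_pairs(["t", "t", "t"], ["t"]) # more copies in P than in Q
```

With equal multiplicities in P and Q, EXCEPT and EXCEPT ALL both return an empty result, which illustrates why a completeness guarantee for EXCEPT ALL needs specific tuple counts.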

To ensure that a tuple of one relation does not exist in the other, constraints are added using the NOT EXISTS technique described in Algorithm 1 of Section 7.1. To ensure that a tuple in one relation exists in the other, we use the EXISTS technique described in Section 7.1.

To create at least two identical tuples in the result of a subquery, we assert constraints to imply that the number of tuples is more than one. Then using the techniques described in Section 6 for constrained aggregation we estimate the required number of tuples for each base relation. We treat the projected attributes in the select clause as the group by attributes in constrained aggregation, which ensures that these have the same value across tuples. Data generation is done using techniques for constrained aggregation described in Section 6.2 and Section 6.3.2.

8.3 Killing Mutations in Input to Set Operators

We also need to kill mutations in the input to the set operator. For this, we need to ensure that the effect of the mutation makes a difference in the result of the set operator.

If the set operator is UNION/UNION ALL and the mutation to the query is in P, we add constraints to ensure that the mutation in P is killed. In addition, to ensure that no tuples from Q mask the changes in the result, we add constraints similar to those for a NOT EXISTS subquery on Q. Data generation for killing mutations in Q is done similarly.

We treat INTERSECT and EXCEPT queries as EXISTS and NOT EXISTS queries respectively, as described earlier. Mutations of P can be killed by the datasets that kill mutations of the outer query block, while mutations of Q can be killed by killing mutations in the subquery block as described in Section 7.4.
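As a rough illustration of this treatment, the sketch below uses Python's sqlite3 module to compare INTERSECT and EXCEPT with one possible EXISTS / NOT EXISTS formulation. The tables p and q, the column a, the NOT NULL declarations and the use of DISTINCT are assumptions of this sketch; the exact rewriting used by XData is the one described earlier in the paper and may differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE p (a INTEGER NOT NULL);
CREATE TABLE q (a INTEGER NOT NULL);
INSERT INTO p VALUES (1), (1), (2), (3);
INSERT INTO q VALUES (1), (3), (4);
""")

def rows(sql):
    """Run a query and return its rows in sorted order."""
    return sorted(conn.execute(sql).fetchall())

intersect_rows = rows("SELECT a FROM p INTERSECT SELECT a FROM q")
exists_rows = rows(
    "SELECT DISTINCT a FROM p "
    "WHERE EXISTS (SELECT 1 FROM q WHERE q.a = p.a)"
)

except_rows = rows("SELECT a FROM p EXCEPT SELECT a FROM q")
not_exists_rows = rows(
    "SELECT DISTINCT a FROM p "
    "WHERE NOT EXISTS (SELECT 1 FROM q WHERE q.a = p.a)"
)

print(intersect_rows == exists_rows)
print(except_rows == not_exists_rows)
```

The correspondence relies on the compared columns being non-NULL, since set operators treat NULLs as equal while an equality predicate inside the subquery does not.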

9 Handling Join Condition, Group By Attribute and Distinct Clause Mutations

In this section, we describe our techniques to kill mutations involving missing or additional join conditions, missing or additional group by attributes, and the DISTINCT keyword. Although our previous work handled joins, group by and distinct clauses, these mutations were not considered.

9.1 Missing or Extra Join Conditions

Consider the tables student (id, name, dept_name), course (course_id, course_name, dept_name) and takes (id, course_id, sec_id, semester, year) from the University schema in dbconcepts2010. Consider the query,

SELECT course_id, course_name FROM student INNER JOIN takes USING (id) INNER JOIN course USING (course_id) WHERE student.id = 1234

One of the mutations of the query could introduce an additional join condition, leading to a mutant query like

SELECT course_id, course_name FROM student INNER JOIN takes USING (id) INNER JOIN course USING (course_id, dept_name) WHERE student.id = 1234

Such errors are common when using natural joins. For example, if a natural join were used in place of ... INNER JOIN course USING (course_id), the join would additionally require student.dept_name to be equal to course.dept_name.

In order to kill such mutations, we do the following. Let the relations being joined be r1 and r2. For every attribute r1.A such that (a) there is an attribute r2.A with an identical name and (b) there is no join condition involving r1.A and r2.A in the original query, we assert that the values held by the two attributes are not equal. On such a dataset, the original query, which does not have the extra join condition, gives a non-empty result, while the mutant gives an empty result.
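The effect of such a dataset can be seen in the following sketch, which uses Python's sqlite3 module. The queries are written with the standard USING syntax, and the concrete rows are hypothetical values chosen so that student.dept_name differs from course.dept_name, as the asserted constraint requires.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE student (id INTEGER PRIMARY KEY, name TEXT, dept_name TEXT);
CREATE TABLE course (course_id TEXT PRIMARY KEY, course_name TEXT, dept_name TEXT);
CREATE TABLE takes (id INTEGER, course_id TEXT, sec_id TEXT, semester TEXT, year INTEGER);

-- Hypothetical dataset: the student's department differs from the course's.
INSERT INTO student VALUES (1234, 'Alice', 'Physics');
INSERT INTO course VALUES ('CS-101', 'Intro to CS', 'Comp. Sci.');
INSERT INTO takes VALUES (1234, 'CS-101', '1', 'Fall', 2010);
""")

ORIGINAL = """
SELECT course_id, course_name
FROM student INNER JOIN takes USING (id)
             INNER JOIN course USING (course_id)
WHERE student.id = 1234
"""

# Mutant with the additional join condition on dept_name.
MUTANT = """
SELECT course_id, course_name
FROM student INNER JOIN takes USING (id)
             INNER JOIN course USING (course_id, dept_name)
WHERE student.id = 1234
"""

original_rows = conn.execute(ORIGINAL).fetchall()
mutant_rows = conn.execute(MUTANT).fetchall()

print(original_rows)   # one row for CS-101
print(mutant_rows)     # no rows, so the mutation is killed
```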

Similarly, there could be mutants in which some join conditions are missing. Such mutations can be killed by the datasets that kill join type mutations (INNER / LEFT OUTER / RIGHT OUTER) described in Section 2.2.

9.2 Group By Clause Mutations

In this section, we discuss the mutation of the query due to the presence of additional attributes or absence of some attributes in the group by clause.

9.2.1 Additional Group By Attributes

Consider the following query, which finds the number of students taking each course every time it is offered.

SELECT count(id), course_id, semester, year FROM takes GROUP BY course_id, semester, year

Additional attributes included in the group by clause, such as the section attribute sec_id in the query below, could result in an erroneous query.

SELECT count(id), course_id, semester, year FROM takes GROUP BY course_id, semester, year, sec_id

To catch such mutations, we generate a dataset for each possible additional group by attribute, with more than one tuple in the group, such that the additional attribute (sec_id in this case) has different values for different tuples in the group. This ensures that the incorrect query produces multiple groups while the correct one produces only a single group, thereby killing the mutation. Note that some mutations with additional group by attributes may be equivalent to the original query, because of selection conditions that make an attribute single-valued, functional dependencies on the group by attributes, or equality conditions on the group by attributes. We do not consider such attributes.
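A minimal sketch of such a dataset, using Python's sqlite3 module, is shown below. The additional attribute is taken to be the sec_id column of the takes schema given earlier, and the two rows are hypothetical values that agree on course_id, semester and year but differ on sec_id.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE takes (id INTEGER, course_id TEXT, sec_id TEXT, semester TEXT, year INTEGER);

-- One group under the original query, with two different sections.
INSERT INTO takes VALUES (1, 'CS-101', '1', 'Fall', 2010);
INSERT INTO takes VALUES (2, 'CS-101', '2', 'Fall', 2010);
""")

ORIGINAL = (
    "SELECT count(id), course_id, semester, year FROM takes "
    "GROUP BY course_id, semester, year"
)
# Mutant with the additional group by attribute.
MUTANT = ORIGINAL + ", sec_id"

original_rows = sorted(conn.execute(ORIGINAL).fetchall())
mutant_rows = sorted(conn.execute(MUTANT).fetchall())

print(original_rows)   # a single group with count 2
print(mutant_rows)     # two groups, each with count 1
```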

There are situations where the above approach would not work, e.g., if the group by is in an EXISTS subquery: the EXISTS condition is satisfied regardless of whether one or two groups are present. In such a case, if there is no constrained aggregation the mutation is equivalent, but if there are aggregation constraints the mutation may not be equivalent and needs to be killed.

If the group has an aggregation that is constrained, the number of tuples is assigned based on the aggregation constraint. We try to ensure that the generated data satisfies the aggregation constraints of exactly one of the queries, i.e., either the original query or its mutant, so that one of them gives a non-empty result and the other an empty result, thereby killing the mutation.

Let the group by attributes be G. For each possible additional group by attribute, A, we generate up to two corresponding datasets. In our first attempt, we try to generate two separate groups, which agree on G but differ in A, such that each group (when grouped by G and A) satisfies the aggregation constraints, but the group containing the union of these tuples (i.e., when grouped by G alone) does not. Note that this may not be possible when the aggregate is of the form SUM(x) for values in the positive range, or COUNT(x), etc. Hence, we also try to generate a dataset such that the combined group satisfies the aggregation constraints but the individual groups do not. If either attempt succeeds, the mutation is killed.
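The two attempts can be illustrated with the following Python sketch using sqlite3. The HAVING constraints, the helper nonempty and the rows are hypothetical; they only show that, for a group by inside an EXISTS subquery, a dataset on which exactly one of the two groupings satisfies the aggregation constraint distinguishes the original query from its mutant.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE takes (id INTEGER, course_id TEXT, sec_id TEXT, semester TEXT, year INTEGER);

-- Two tuples that agree on course_id, semester, year but differ on sec_id.
INSERT INTO takes VALUES (1, 'CS-101', '1', 'Fall', 2010);
INSERT INTO takes VALUES (2, 'CS-101', '2', 'Fall', 2010);
""")

G = "course_id, semester, year"        # original group by attributes
G_PLUS_A = G + ", sec_id"              # mutant with the additional attribute

def nonempty(group_by, having):
    """True if some group satisfies the aggregation constraint (EXISTS semantics)."""
    sql = (
        "SELECT EXISTS (SELECT course_id FROM takes "
        f"GROUP BY {group_by} HAVING {having})"
    )
    return bool(conn.execute(sql).fetchone()[0])

# First attempt: each individual group satisfies the constraint, the union does not.
first = (nonempty(G, "COUNT(id) < 2"), nonempty(G_PLUS_A, "COUNT(id) < 2"))

# Second attempt: the combined group satisfies the constraint, the individual groups do not.
second = (nonempty(G, "COUNT(id) >= 2"), nonempty(G_PLUS_A, "COUNT(id) >= 2"))

print(first)    # (False, True)
print(second)   # (True, False)
```

In both cases the original query and the mutant disagree on whether the EXISTS condition holds, so the enclosing query produces different results.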

9.2.2 Missing Group By Attributes

Another common error is to omit some of the group by attributes. For example, if the attribute semester is omitted from the GROUP BY clause of the above query, the resulting query is clearly erroneous. Such erroneous queries can be easily detected if the number of attributes projected out is different.

However, that may not be the case for all queries where a group by attribute has been missed. For instance, in the above example, if semester was not in the projection list, the missing group by mutation would be harder to catch. Although rare, we have found such cases when the group by is in a subquery whose result is an aggregation tuple.

We generate datasets to kill such mutations as follows. Let G be the group by attributes. For a missing group by attribute, A, we swap the roles of the two queries: the query with the missing group by attribute is treated as the original query, and the actual original query, which includes A, is treated as its mutation with an additional group by attribute. Datasets can then be generated using the techniques for killing mutations of additional group by attributes.

9.3 Distinct Clause Mutations

Users may erroneously omit the DISTINCT keyword in the projection list of a select clause.

For example, consider the following query from dbconcepts2010 that finds the department names of all instructors.

SELECT DISTINCT dept_name

FROM instructor

In this query, the absence of the DISTINCT keyword would cause the same department name to be repeated, which is not desired. We refer to mutations that add or remove the DISTINCT keyword in the select clause as distinct mutations (DISTINCT in aggregates is covered in Section 2.2). To kill such mutations, we need a dataset that results in at least two tuples in the output that are identical on the projected attributes. We use the technique described in Section 8.2 for generating tuples with identical projected attributes. For such a dataset, the query with the DISTINCT keyword gives only a single tuple as output, while the one without it gives at least two tuples.
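The following sqlite3 sketch in Python shows such a dataset for the query above. The instructor columns other than dept_name and the two rows are hypothetical; all that matters is that the two tuples agree on the projected attribute.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE instructor (id INTEGER PRIMARY KEY, name TEXT, dept_name TEXT, salary INTEGER);

-- Two tuples that are identical on the projected attribute dept_name.
INSERT INTO instructor VALUES (1, 'Einstein', 'Physics', 95000);
INSERT INTO instructor VALUES (2, 'Gold', 'Physics', 87000);
""")

with_distinct = conn.execute("SELECT DISTINCT dept_name FROM instructor").fetchall()
without_distinct = conn.execute("SELECT dept_name FROM instructor").fetchall()

print(with_distinct)      # a single tuple
print(without_distinct)   # two identical tuples, so the mutation is killed
```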

In case the constraints are not satisfiable, it is not possible to have multiple tuples with the same value of the projected attribute(s). This could happen if one of the projected attributes is a primary key for the input to the DISTINCT clause or if the projected attributes are also used as GROUP BY attributes in the same query block. For such cases, the DISTINCT mutation is equivalent.

10 Other Extensions

From clause subqueries: Our parser turns from clause subqueries into a tree which can be handled using our existing techniques.

We do not handle from clause subqueries that project aggregates, if there are constraints on the aggregation result in the enclosing query (other than simple constraints which our techniques handle) or if the query uses the lateral construct. Handling such queries is an area of future work.

Handling Parameterized Queries: When generating datasets for a query with parameters, we assign a variable to every parameter. The solution given by the SMT solver also contains a value for each parameter. It should be noted that since the solver assigns these values, each dataset may potentially have its own values for the parameters.

DATE and TIME: We handle SQL data types related to date and time, namely DATE, TIME and TIMESTAMP, by converting them to integers.
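The text does not specify which integer encoding is used. The following Python sketch shows one order-preserving possibility, assumed here purely for illustration: days for DATE, seconds since midnight for TIME, and their combination for TIMESTAMP. All function names are hypothetical.

```python
from datetime import date, datetime, time

def date_to_int(d: date) -> int:
    """Encode a DATE as a day number (proleptic Gregorian ordinal)."""
    return d.toordinal()

def int_to_date(n: int) -> date:
    return date.fromordinal(n)

def time_to_int(t: time) -> int:
    """Encode a TIME as seconds since midnight (fractional seconds ignored)."""
    return t.hour * 3600 + t.minute * 60 + t.second

def int_to_time(n: int) -> time:
    return time(n // 3600, (n % 3600) // 60, n % 60)

def timestamp_to_int(ts: datetime) -> int:
    """Encode a TIMESTAMP as seconds, combining the two encodings above."""
    return date_to_int(ts.date()) * 86400 + time_to_int(ts.time())

d1, d2 = date(2010, 1, 1), date(2010, 1, 2)
print(date_to_int(d1) < date_to_int(d2))           # comparisons carry over to integers
print(int_to_date(date_to_int(d1)) == d1)          # the encoding can be inverted
print(int_to_time(time_to_int(time(13, 45, 30))))
```

Because the encoding preserves order, comparison predicates on dates in the query translate directly into integer comparisons for the solver, and solver-assigned integers can be mapped back to dates when the dataset is materialized.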

Floating and Fixed Point Numbers: CVC3 allows real numbers to be represented as (arbitrary precision) rationals, and hence when populating real-valued data (floating or fixed precision) from the database or query, we represent it as a fraction in CVC3. When converting values to the fixed precision values supported by SQL, the conversion can in theory cause problems in rare cases, since two rationals generated by CVC3 which are very close to each other may map to the same fixed precision number. We have, however, not observed this in practice so far.
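The potential problem can be reproduced with Python's fractions and decimal modules. In the sketch below, the helper to_fixed, the scale of two decimal digits and the rounding mode are assumptions made for illustration; the actual behaviour depends on the SQL type and the database system.

```python
from decimal import Decimal, ROUND_HALF_EVEN
from fractions import Fraction

def to_fixed(value: Fraction, scale: int) -> Decimal:
    """Convert an exact rational to a fixed precision number with `scale` decimals."""
    exact = Decimal(value.numerator) / Decimal(value.denominator)
    quantum = Decimal(10) ** -scale          # e.g. 0.01 for scale 2
    return exact.quantize(quantum, rounding=ROUND_HALF_EVEN)

# Two distinct rationals, as a solver might produce them.
a = Fraction(1, 3)
b = Fraction(333, 1000)

print(a != b)                               # True: distinct for the solver
print(to_fixed(a, 2), to_fixed(b, 2))       # 0.33 0.33: identical once stored
```

If the generated dataset relied on the two values being different, for instance to violate an equality condition, the stored data would no longer satisfy the constraint the solver was asked to enforce.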

BETWEEN operator: For queries that contain the BETWEEN operator, say attr BETWEEN a AND b, we convert the BETWEEN operator to attr >= a AND attr <= b. The datasets for killing selection mutations are also able to catch mutations where the user intended the range to include a or b or both.
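As an illustration, the sqlite3 sketch below in Python checks that datasets placing attr exactly on a boundary distinguish the inclusive range from mutants that exclude one endpoint. The table r, the bounds 10 and 20 and the helper result are hypothetical.

```python
import sqlite3

def result(predicate, values):
    """Evaluate SELECT attr FROM r WHERE <predicate> on a one-column dataset."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE r (attr INTEGER)")
    conn.executemany("INSERT INTO r VALUES (?)", [(v,) for v in values])
    rows = conn.execute(f"SELECT attr FROM r WHERE {predicate} ORDER BY attr").fetchall()
    conn.close()
    return rows

ORIGINAL = "attr BETWEEN 10 AND 20"
REWRITTEN = "attr >= 10 AND attr <= 20"
MUTANT_LOW = "attr > 10 AND attr <= 20"     # excludes the lower bound
MUTANT_HIGH = "attr >= 10 AND attr < 20"    # excludes the upper bound

on_low, on_high = [10], [20]                # datasets with attr on each boundary

print(result(ORIGINAL, on_low) == result(REWRITTEN, on_low))      # rewriting agrees
print(result(ORIGINAL, on_low) != result(MUTANT_LOW, on_low))     # killed by attr = a
print(result(ORIGINAL, on_high) != result(MUTANT_HIGH, on_high))  # killed by attr = b
```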

Insert/Delete/Update Queries: To handle INSERT queries involving a subquery, and DELETE queries, we convert them to SELECT queries by replacing “INSERT INTO relation” or “DELETE” by “SELECT *”. UPDATE queries are similarly converted by creating a SELECT query whose projection list includes the primary key of the updated table, and the new values for each updated column; the WHERE clause remains unchanged from the UPDATE query. Data generation is then done to catch mutations of the resultant SELECT queries.

When testing queries in an application for correctness, we execute the original INSERT, DELETE or UPDATE queries against the generated datasets. To test student queries against a given correct query, we perform the transformation from INSERT, DELETE and UPDATE queries to SELECT queries as above for both the given student queries and the given correct queries, before comparing them as described in Section 11.
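The conversion of DELETE and UPDATE queries described above can be sketched as follows in Python with sqlite3. The helper delete_to_select is a hypothetical textual rewrite that only handles a leading DELETE keyword, the UPDATE conversion is written by hand, and the tables and rows are illustrative.

```python
import re
import sqlite3

def delete_to_select(sql):
    """Replace the leading DELETE keyword by SELECT * (hypothetical helper)."""
    return re.sub(r"^\s*DELETE\b", "SELECT *", sql, count=1, flags=re.IGNORECASE)

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE takes (id INTEGER, course_id TEXT, year INTEGER);
CREATE TABLE instructor (id INTEGER PRIMARY KEY, dept_name TEXT, salary INTEGER);
INSERT INTO takes VALUES (1, 'CS-101', 2009), (2, 'CS-101', 2010), (3, 'CS-190', 2011);
INSERT INTO instructor VALUES (10, 'Physics', 90000), (11, 'Music', 40000);
""")

DELETE_SQL = "DELETE FROM takes WHERE year < 2010"
DELETE_AS_SELECT = delete_to_select(DELETE_SQL)

UPDATE_SQL = "UPDATE instructor SET salary = salary + 1000 WHERE dept_name = 'Physics'"
# Primary key plus the new value of each updated column; WHERE clause unchanged.
UPDATE_AS_SELECT = "SELECT id, salary + 1000 FROM instructor WHERE dept_name = 'Physics'"

# The converted SELECT returns exactly the tuples that the DELETE removes.
to_delete = set(conn.execute(DELETE_AS_SELECT))
before = set(conn.execute("SELECT * FROM takes"))
conn.execute(DELETE_SQL)
deleted = before - set(conn.execute("SELECT * FROM takes"))

# The converted SELECT returns the keys and new values that the UPDATE writes.
to_update = sorted(conn.execute(UPDATE_AS_SELECT))
conn.execute(UPDATE_SQL)
updated = sorted(conn.execute("SELECT id, salary FROM instructor WHERE dept_name = 'Physics'"))

print(DELETE_AS_SELECT)
print(to_delete == deleted, to_update == updated)
```

Since the SELECT form exposes the affected tuples and their new values, a mutation that changes which tuples are affected, or how they are changed, shows up as a difference in the SELECT result.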

Handling WITH Clause and Views: We syntactically convert a query using a WITH clause or views by performing view expansion. The assumptions we make about the query structure must be satisfied by the resultant expanded query.