QI et al.

Xinbei Lv, Qingdao Innovation and Development Center, Harbin Engineering University, Qingdao, China 266000.

Decentralized Control for Discrete-time Mean-Field Systems with Multiple Controllers of Delayed Information

Abstract

In this paper, the finite-horizon asymmetric information linear quadratic (LQ) control problem is investigated for a discrete-time mean-field system. Different from previous works, multiple controllers with different information sets are involved in the mean-field system dynamics, and the coupling between these controllers makes it quite difficult to find the optimal control strategy. By applying Pontryagin’s maximum principle, the corresponding finite-horizon decentralized control problem is investigated. The contributions of this paper can be summarized as follows. For the first time, based on the solution of a group of mean-field forward and backward stochastic difference equations (MF-FBSDEs), necessary and sufficient solvability conditions are derived for the asymmetric information LQ control of mean-field systems with multiple controllers. Furthermore, by means of an innovative orthogonal decomposition approach, the optimal decentralized control strategy is derived, which is based on the solution to a non-symmetric Riccati-type equation.

Keywords: Pontryagin’s maximum principle, asymmetric information control, mean-field system, orthogonal decomposition approach

1 Introduction

Unlike the controlled linear stochastic differential/difference equations (SDEs) studied in classical stochastic control problems, mean-field SDEs contain mathematical expectation terms. Owing to their applications in large-population stochastic dynamic games and in various physical and sociological dynamical systems, the study of mean-field SDEs can be traced back to the 1950s, and abundant research results have been obtained; see 1-13. In particular, the continuous-time mean-field LQ control problem was first studied in 3, where a necessary and sufficient solvability condition was proposed. Furthermore, both the finite-horizon LQ control problem and the infinite-horizon stabilization control problem for discrete-time mean-field systems were solved in 7, 8. Moreover, indefinite LQ control for mean-field systems was investigated in 9, 10.

The studies on mean-field control mentioned above mainly concern symmetric information control problems, for which only a centralized control strategy needs to be designed. As is well known, the traditional centralized control problem with a single controller has been well studied since the last century. In addition, for system dynamics with multiple controllers sharing the same information structure, the corresponding decentralized control problem can be converted into a centralized one by system augmentation. Decentralized control systems have multiple controllers that collaboratively try to control a system by taking actions based on their individual observations, and the observations of one controller may not be available to the others. In contrast to centralized control, the decentralized control problem with controllers of asymmetric information structure remains less investigated.

We emphasize that real-world systems usually contain multiple controllers, each of which accesses its own information. In this situation, finding the optimal control strategy is usually difficult because of the coupling between the controllers. The pioneering study of asymmetric information control is the celebrated Witsenhausen counterexample raised in 1968 (see 14), which shows that linear control strategies are no longer optimal for the decentralized control of linear dynamical systems; the associated optimal control problem remains unsolved. Since then, owing to its wide applications in many fields, decentralized control with asymmetric information has attracted much research interest. For example, stochastic games with asymmetric information were investigated and solved in 21, 22. The optimal local and remote decentralized control problem was investigated via the common information approach, see 16, 17. Besides, decentralized control for linear stochastic systems with multiple controllers of different information structures was studied in 15-18.

Different from the works mentioned above, we investigate a special kind of decentralized control problem for discrete-time mean-field systems. Specifically, the system dynamics contain multiple controllers with delayed information patterns as well as mean-field terms (i.e., the expectations of the control inputs and the state). Meanwhile, each controller accesses its own information, which differs from that of the other controllers. Our goal is to design the optimal decentralized control strategy that minimizes a given quadratic cost function. To the best of our knowledge, this problem has not been solved so far. The mean-field terms and the delayed asymmetric information structure make the problem challenging in the following aspects: 1) because of the asymmetric information structure, the controllers are coupled with each other, so finding the optimal decentralized control is difficult, see 16, 17; 2) because of the mean-field terms, the original optimal decentralized control problem cannot be treated as a standard LQ control problem, and explicit solvability conditions have not been derived, see 3.

In this paper, by applying the maximum principle, the decentralized control problem for mean-field systems with controllers of delayed information structures is studied. First, by the variational method, necessary and sufficient solvability conditions are given in terms of a group of MF-FBSDEs. Subsequently, an innovative orthogonal decomposition approach is proposed to decouple the associated MF-FBSDEs. Exploiting the delayed information structure, we show that the MF-FBSDEs can be decoupled and establish the relationship between the costate and the state. Finally, the optimal decentralized control strategy is derived by introducing asymmetric Riccati equations.

Very recently, the optimal LQ control problem with multiple controllers of non-symmetric information structure was investigated in 18. Compared with 18, the methodology adopted here is new in the following aspects. Firstly, to deal with the mean-field terms, a group of mean-field forward and backward stochastic difference equations (MF-FBSDEs) is introduced. Such MF-FBSDEs do not appear in 18, and we show that the optimal decentralized control problem is uniquely solvable if and only if the MF-FBSDEs can be uniquely decoupled. Secondly, to obtain the optimal decentralized control for mean-field systems, we introduce a novel technique for decoupling the MF-FBSDEs: we first derive the mathematical expectation of the control and then obtain the optimal decentralized control itself. This approach has not been used in 18. Furthermore, this paper demonstrates that the optimal decentralized control can be computed by solving a set of new asymmetric Riccati equations.

The contributions of the paper are as follows. 1) Necessary and sufficient solvability conditions for the discrete-time mean-field decentralized control problem with multiple controllers of delayed information patterns are derived. 2) Using a novel orthogonal decomposition approach, the solution to the group of MF-FBSDEs is obtained, which, to the best of our knowledge, is new. 3) By means of the optimal predictor, the coupling between the multiple controllers is overcome, and the optimal decentralized control is derived for the first time.

The rest of the paper is organized as follows. Section 2 formulates the problem under consideration, and Section 3 presents its solvability conditions. The optimal decentralized control strategy is developed in Section 4. A numerical example is given in Section 5, and Section 6 concludes the paper. Detailed proofs are given in the Appendix.

Notations: denotes the identity matrix of rank ; superscript denotes the transpose of a matrix. For a real symmetric matrix, means that is strictly positive definite (or positive semi-definite). signifies the -dimensional Euclidean space. represents the inverse of the real matrix . The natural filtration denotes the -algebra generated by and augmented by all the -null sets. denotes the conditional expectation with respect to . For convenience of presentation, the following concise notations are introduced.

| (1) |

2 Problem Formulation

We consider the following discrete-time mean-field stochastic system with multiple controllers

| (2) |

where is the time instant, is the system state, is the -th control input. , and are matrices of appropriate dimensions, is a scalar-valued Gaussian white noise with . The initial state is given, and is the expectation taken over the noise and initial state .
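To make the role of the expectation terms concrete, the Python sketch below simulates a mean-field system with two controllers. Because the explicit form of (2) is not reproduced here, the sketch assumes a hypothetical form in the style of 8 (linear drift and a scalar multiplicative noise, each with mean-field terms), approximates every expectation by the empirical average over many sample paths, and uses illustrative inputs that feed back the state mean through hypothetical gains `G[i]`. Since the state mean is deterministic, such inputs are admissible under any information pattern; the sketch does not implement the optimal strategy.

```python
import numpy as np


def simulate_mean_field(A, Abar, B, Bbar, C, Cbar, D, Dbar, G, x0, n_steps,
                        n_paths=100_000, seed=0):
    """Monte Carlo simulation of an ASSUMED mean-field system (not eq. (2) itself).

    Hypothetical dynamics, with scalar noise w_k ~ N(0, 1):
      x_{k+1} = A x + Abar Ex + sum_i (B[i] u_i + Bbar[i] Eu_i)
              + (C x + Cbar Ex + sum_i (D[i] u_i + Dbar[i] Eu_i)) w_k
    Controller i applies u_i = G[i] @ Ex_k (hypothetical gains), a deterministic
    input, so Eu_i = u_i. Ex_k is approximated by the empirical mean over paths.
    Returns the empirical mean trajectory with shape (n_steps + 1, n).
    """
    rng = np.random.default_rng(seed)
    X = np.tile(np.asarray(x0, dtype=float), (n_paths, 1))  # one row per path
    means = [X.mean(axis=0)]
    for _ in range(n_steps):
        m = X.mean(axis=0)                     # empirical estimate of E x_k
        drift = X @ A.T + Abar @ m             # A x + Abar Ex, row-wise
        diff = X @ C.T + Cbar @ m              # C x + Cbar Ex, row-wise
        for Bi, Bbi, Di, Dbi, Gi in zip(B, Bbar, D, Dbar, G):
            u = Gi @ m                         # same input on every path
            drift = drift + (Bi + Bbi) @ u     # B u + Bbar Eu with Eu = u
            diff = diff + (Di + Dbi) @ u
        w = rng.standard_normal((n_paths, 1))  # scalar noise, one draw per path
        X = drift + diff * w
        means.append(X.mean(axis=0))
    return np.array(means)
```

Because the inputs here are deterministic, the empirical mean should track the deterministic recursion obtained by dropping the noise term; the approximation error shrinks as the number of paths grows.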

To guarantee the solvability of system (2), the initial control strategies are given arbitrarily.

Note that expectations appear in the system dynamics (2), which causes essential difficulties in deriving the optimal decentralized control strategy. For the sake of discussion, it can be derived from (2) that

| (3) |

Throughout this paper, the following assumption on the information structure is made for (2)-(3).

Assumption.

The -th controller is -measurable, in which is subject to

Clearly, for and , we can infer

Remark 2.1.

For mean-field system (2), controllers are involved, and each controller accesses its own information, which differs from that of the others. Such a system is called an asymmetric information stochastic system; the corresponding optimal control problem becomes a decentralized control problem, for which finding the optimal strategy is usually difficult, see 16, 17, 18, 19, 20, 21.

Associated with system (2)-(3), the cost functional is given by

| (4) |

where are deterministic symmetric weighting matrices with appropriate dimensions.

For the weighting matrices in (4), we make the following assumption:

We now formulate the decentralized control problem for mean-field systems with multiple controllers of delayed information.

Problem 2.2.

Find -measurable controllers to minimize (4).

Remark 2.3.

The solution to Problem 2.2 is hard to obtain, and the optimal decentralized control strategy has not been derived before. The reasons are twofold: 1) the information sets available to the controllers are different, which couples the controllers and makes finding the optimal decentralized control strategy difficult; 2) the mathematical expectation terms appearing in (2)-(3) destroy the adaptability of the control inputs, so Problem 2.2 cannot be solved by traditional methods such as system augmentation.

3 Existence of Optimal Decentralized Control Strategy

We now study the existence of the solution to Problem 2.2 via the variational method. To begin with, we give the following preliminary lemma.

Lemma 3.1.

For (2) and (4), set , and denote , , where is -adapted with . Then

| (5) |

In the above, and are the state and the cost functional corresponding to , respectively. Moreover, can be calculated as

| (6) |

in which , and the costate satisfies the following backward iteration

| (7) |

with terminal condition satisfies the following iteration

| (8) |

Proof 3.2.

Owing to space limitations, the detailed proof is omitted; it can be deduced from Lemma 1 of 18.
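Expansions of the type stated in Lemma 3.1 rest on the fact that a quadratic cost has an exact second-order expansion in the size of a perturbation: J(u + ε δu) equals J(u), plus a term linear in ε involving a gradient-like quantity, plus ε² times the cost of the variation δu alone. The Python sketch below checks this on a hypothetical finite-dimensional cost J(u) = u'Hu + 2f'u + c with symmetric H, where the vector u stands in for all stacked control inputs; H, f and c are illustrative and are not the quantities in (5)-(6).

```python
import numpy as np


def quad_cost(u, H, f, c):
    """Hypothetical quadratic cost J(u) = u'Hu + 2 f'u + c (H symmetric)."""
    return u @ H @ u + 2.0 * f @ u + c


def expansion_gap(u, du, eps, H, f, c):
    """Return J(u + eps*du) minus its second-order expansion (zero up to rounding).

    Expansion: J(u) + 2*eps*du'(H u + f) + eps**2 * du'H du, where du'H du is
    the cost of the variation alone (f = 0, c = 0) and H u + f is the gradient
    term; exactness relies on H being symmetric.
    """
    linear = 2.0 * eps * du @ (H @ u + f)
    quadratic = eps**2 * quad_cost(du, H, np.zeros_like(f), 0.0)
    return quad_cost(u + eps * du, H, f, c) - (quad_cost(u, H, f, c) + linear + quadratic)
```

Requiring the linear coefficient to vanish for every direction du gives a stationarity condition, which is the finite-dimensional analogue of an equilibrium condition.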

Based on the results of Lemma 3.1, we can present the following lemma on the solvability conditions of Problem 2.2.

Lemma 3.3.

Proof 3.4.

‘Necessity’: Suppose that Problem 2.2 is uniquely solvable and Assumptions 2-2 hold; we show that (9) is uniquely solvable.

In fact, for any and , if we denote by the optimal control strategy for , then it follows from (3.1) that

| (10) |

Note that follows from Assumption 2. We now show that (9) holds by contradiction. Suppose, on the contrary, that

| (11) |

By letting , we have

Obviously, we can always find some such that , which contradicts (9). This completes the proof of necessity.
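The contradiction argument above uses the quadratic term of the expansion: if the cost of some variation is negative, moving far enough along it drives the cost to minus infinity, so no minimizer exists, whereas a positive definite quadratic part yields a unique minimizer characterized by the stationarity condition. The Python sketch below illustrates this dichotomy on a hypothetical finite-dimensional cost J(u) = u'Hu + 2f'u; it is an analogy for the argument, not an implementation of Lemma 3.3.

```python
import numpy as np


def classify_quadratic(H, f, tol=1e-12):
    """Classify min_u J(u) = u'Hu + 2 f'u for a symmetric H (hypothetical cost).

    - "unique": H positive definite; the minimizer solves H u + f = 0.
    - "unbounded": some v has v'Hv < 0, so J(s*v) -> -inf as s grows.
    - "degenerate": H singular positive semidefinite; no unique minimizer.
    """
    eigvals, eigvecs = np.linalg.eigh(H)       # eigenvalues in ascending order
    if eigvals[0] > tol:
        return "unique", np.linalg.solve(H, -f)
    if eigvals[0] < -tol:
        return "unbounded", eigvecs[:, 0]      # direction of negative curvature
    return "degenerate", None
```

The tolerance `tol` is an illustrative choice for deciding when a computed eigenvalue counts as zero.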

Remark 3.5.

Combining Lemma 3.1 and Lemma 3.3, the necessary and sufficient conditions for the unique solvability of Problem 2.2 are derived. Subsequently, to obtain the optimal decentralized control strategy, we focus on solving the following MF-FBSDEs:

| (12) |

which consist of the forward system (2), the backward costate equation (7), and the equilibrium condition (9).

4 Optimal Decentralized Control

In this section, we derive the optimal decentralized control strategy by solving the MF-FBSDEs (12). To this end, an innovative orthogonal decomposition approach is adopted.

4.1 Orthogonal Decomposition Approach

By applying the orthogonal decomposition approach, the following lemma can be presented.

Lemma 4.1.

Proof 4.2.

By plugging (9) into (1) and (4.1), we get:

Then, for

for simplicity of calculation, we have

| (19) |

Moreover, for , (17) obviously holds.

Note that (17) can be obtained by combining (9) and (19). Next, using (1) and (19), (16) can be verified. Before proving (4.1) and (4.1), note that the following two relationships hold:

1) For we infer that

2) For there holds that

Remark 4.3.

Decoupling the MF-FBSDEs (12) is difficult because of the non-classical information structure of Problem 2.2. Therefore, the orthogonal decomposition approach is introduced in Lemma 4.1, which plays a critical role in decoupling (12). In what follows, we use this approach to decouple the MF-FBSDEs (12) and derive the optimal decentralized control strategy.
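A simple instance of why orthogonal decompositions help with mean-field terms is the split of a random state into its mean and a zero-mean fluctuation, x = (x - Ex) + Ex. The two parts are orthogonal, so a cost with mean-field weights separates as E[x'Qx] + (Ex)'Qbar(Ex) = E[(x - Ex)'Q(x - Ex)] + (Ex)'(Q + Qbar)(Ex). This mean/fluctuation split is the generic device used in mean-field LQ control, for example in 8; it is not the decomposition of Lemma 4.1, which additionally exploits the delayed information structure. The Python sketch below checks the identity with sample averages; the weights `Q`, `Qbar` and the samples are illustrative.

```python
import numpy as np


def split_cost(X, Q, Qbar):
    """Evaluate a mean-field quadratic cost two ways on samples X (one per row).

    direct : mean_n x_n'Q x_n + m'Qbar m, with m the sample mean
    split  : mean_n (x_n - m)'Q (x_n - m) + m'(Q + Qbar) m
    The cross terms vanish because the fluctuations x_n - m average to zero,
    i.e. they are orthogonal to the (deterministic) mean.
    """
    m = X.mean(axis=0)
    Xt = X - m                                   # zero-mean fluctuation part
    direct = np.einsum("ni,ij,nj->n", X, Q, X).mean() + m @ Qbar @ m
    split = np.einsum("ni,ij,nj->n", Xt, Q, Xt).mean() + m @ (Q + Qbar) @ m
    return direct, split
```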

Because the controllers have delayed information patterns, the associated conditional expectation, i.e., the optimal predictor, must be derived first.

Lemma 4.4.

The optimal predictor can be given as follows:

| (20) |

Proof 4.5.

Taking the mathematical expectation on both sides of system (2), it is easy to obtain

| (21) |

Subsequently,

| (22) |

By taking the conditional mathematical expectation of , the optimal predictor can be derived as

Note that the following relationship holds:

Moreover, note that is -measurable for . On the other hand, is not -measurable for ; hence, by (4.1), can be represented by for
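Under an assumed form of (2), the computation behind such a predictor can be sketched as follows. The sketch assumes linear drift and a scalar zero-mean multiplicative noise, both with mean-field terms, with the noise at step k independent of everything observed up to that step. Then the state mean follows a deterministic recursion obtained by taking expectations, and the conditional expectation given the state observed d steps earlier is obtained by iterating the drift from that state while dropping the noise term. Several controllers can be handled by stacking their input matrices and inputs into `B`, `Bbar` and `U[k]`; the inputs are assumed known at the observation time (here deterministic sequences), which sidesteps the coupling between controllers that Lemma 4.4 actually has to address. The function name and arguments are hypothetical, and the sketch is not formula (20).

```python
import numpy as np


def mean_and_predictor(A, Abar, B, Bbar, x0, x_obs, m, d, U):
    """Return (E x_{m+d}, E[x_{m+d} | x_m = x_obs]) for an ASSUMED system.

    Assumed dynamics, with scalar zero-mean noise w_k independent of the past:
      x_{k+1} = A x + Abar Ex + B u + Bbar Eu + (C x + Cbar Ex + D u + Dbar Eu) w_k
    The noise coefficients C, Cbar, D, Dbar never enter: the noise term has
    zero conditional mean. U[k] is a deterministic input, hence Eu_k = u_k.
    """
    Ex = np.asarray(x0, dtype=float)
    for k in range(m):                         # mean recursion up to time m
        Ex = (A + Abar) @ Ex + (B + Bbar) @ U[k]
    xhat = np.asarray(x_obs, dtype=float)
    for k in range(m, m + d):
        # the predictor uses the mean at time k, so update it before Ex advances
        xhat = A @ xhat + Abar @ Ex + (B + Bbar) @ U[k]
        Ex = (A + Abar) @ Ex + (B + Bbar) @ U[k]
    return Ex, xhat
```

A Monte Carlo run that restarts many sample paths from `x_obs` and applies the full noisy dynamics should average out to the returned predictor.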

4.2 Decoupling MF-FBSDEs

In this subsection, we adopt the induction approach to decouple the MF-FBSDEs (12) and derive the solution to Problem 2.2.

For simplicity, we define the following asymmetric Riccati equations:

| (23) |

with terminal conditions and for and In the above, for

| (24) |

where

We are now in a position to state the solution to Problem 2.2.

Theorem 4.6.

Suppose that Assumptions 2-2 hold. For (2) and (4), Problem 2.2 is uniquely solvable if and only if and are invertible for , where and are given in (4.2).

Proof 4.7.

Please refer to the Appendix for detailed proof.

Remark 4.8.

Theorem 4.6 shows that the gain matrices of the optimal decentralized control strategy can be computed via asymmetric Riccati equations, which can be solved offline. Moreover, the optimal control strategy is a linear feedback of the optimal predictor and the state mean , which makes it computationally feasible. To the best of our knowledge, these results are obtained for the first time.

Remark 4.9.

From Theorem 4.6, it is not hard to verify that the results of 8, 18 are included as special cases. On the one hand, with , Problem 2.2 reduces to the control problem for mean-field systems without delay in 8, and the optimal control with in Theorem 4.6 coincides with the optimal control given in 8. On the other hand, with , Problem 2.2 becomes the case investigated in 18, and the optimal decentralized control strategy in Theorem 4.6 coincides with that in 18.
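Since the delay-free case reduces to the mean-field LQ problem of 8, the offline gain computation for that special case can be sketched as two backward recursions, one for the fluctuation x - Ex and one for the mean Ex, with invertibility checks analogous to the conditions of Theorem 4.6. The Python sketch below assumes dynamics x_{k+1} = A x + Abar Ex + B u + Bbar Eu + (C x + Cbar Ex + D u + Dbar Eu) w_k with E w_k = 0 and E w_k^2 = 1, stage cost x'Qx + (Ex)'Qbar(Ex) + u'Ru + (Eu)'Rbar(Eu), and terminal weights `P_T`, `Pbar_T`. The recursions follow from completing the square in this assumed setting; they are not the asymmetric Riccati equations (23), and the function and gain names are hypothetical.

```python
import numpy as np


def mean_field_riccati(A, Abar, B, Bbar, C, Cbar, D, Dbar,
                       Q, Qbar, R, Rbar, P_T, Pbar_T, N):
    """Backward recursions for an ASSUMED delay-free mean-field LQ problem.

    P[k] weights the fluctuation x - Ex and Pi[k] = P[k] + Pbar[k] weights Ex
    (noise variance taken as 1). Returns P, Pi (index 0..N+1) and gains with
    u_k = K_fluct[k] (x_k - Ex_k) + K_mean[k] Ex_k (index 0..N).
    Raises ValueError when a matrix that must be inverted is singular.
    """
    P, Pi = [None] * (N + 2), [None] * (N + 2)
    K_fluct, K_mean = [None] * (N + 1), [None] * (N + 1)
    P[N + 1], Pi[N + 1] = P_T, P_T + Pbar_T
    Ah, Bh, Ch, Dh = A + Abar, B + Bbar, C + Cbar, D + Dbar
    for k in range(N, -1, -1):
        Pn, Pin = P[k + 1], Pi[k + 1]
        ups1 = R + B.T @ Pn @ B + D.T @ Pn @ D                 # fluctuation part
        m1 = B.T @ Pn @ A + D.T @ Pn @ C
        ups2 = R + Rbar + Bh.T @ Pin @ Bh + Dh.T @ Pn @ Dh     # mean part
        m2 = Bh.T @ Pin @ Ah + Dh.T @ Pn @ Ch
        for ups in (ups1, ups2):
            if np.linalg.matrix_rank(ups) < ups.shape[0]:
                raise ValueError(f"singular matrix at step {k}")
        K_fluct[k] = -np.linalg.solve(ups1, m1)
        K_mean[k] = -np.linalg.solve(ups2, m2)
        P[k] = Q + A.T @ Pn @ A + C.T @ Pn @ C + m1.T @ K_fluct[k]
        Pi[k] = Q + Qbar + Ah.T @ Pin @ Ah + Ch.T @ Pn @ Ch + m2.T @ K_mean[k]
    return P, Pi, K_fluct, K_mean
```

Splitting the recursion this way mirrors the decomposition x = (x - Ex) + Ex: the fluctuation sees only the P recursion, while P enters the mean recursion only through the noise coefficients. Without noise and mean-field coefficients, both recursions reduce to the standard LQ Riccati equation.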

5 Numerical Example

To illustrate the results of Theorem 4.6, we consider the following numerical example.

Using Theorem 4.6, can be calculated, and it can be checked that and are invertible. Moreover, since given above are positive definite, we can verify in (4.2).

Thus, by Theorem 4.6, Problem 2.2 is uniquely solvable, and the optimal decentralized control is given by

In the following, we choose

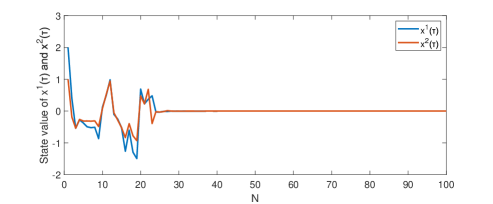

Using the results of Theorem 4.6, the state trajectory for is obtained and depicted in FIGURE 1, which shows that the system state converges to 0.

6 Conclusion

In this paper, we have investigated the decentralized control problem for discrete-time mean-field systems with multiple controllers of delayed information. First, by Pontryagin’s maximum principle, necessary and sufficient solvability conditions for the decentralized control problem have been derived. Next, to handle the asymmetric information pattern, an orthogonal decomposition approach has been proposed to decouple the associated MF-FBSDEs. Finally, the optimal decentralized control strategy has been derived based on asymmetric Riccati equations. In future work, we will extend these results to the infinite-horizon case.

Acknowledgments

This work was supported by National Natural Science Foundation of China under grant 61903210, Natural Science Foundation of Shandong Province under grant ZR2019BF002, China Postdoctoral Science Foundation under grants 2019M652324, 2021T140354, Qingdao Postdoctoral Application Research Project, Major Basic Research of Natural Science Foundation of Shandong Province under grant ZR2021ZD14.

Appendix: Proof of Theorem 4.6

Proof 6.1.

According to Lemmas 3.1-3.3, the necessary and sufficient condition for the unique solvability of Problem 2.2 is that the equilibrium condition (9) is uniquely solvable. Consequently, we solve the MF-FBSDEs (12) by induction to find the solution of Problem 2.2.

First, for we have ; then (16) with implies

| (28) |

Taking the mathematical expectation of (6.1), we have

| (29) |

Further, can be calculated as

| (30) |

Substituting (30) into (6.1), we have

| (31) |

By Lemma 3.1, the equilibrium condition (9) for is uniquely solvable if and only if and are invertible, and the unique optimal control is obtained as

| (32) |

Next, we calculate . In fact, can be rewritten as

| (33) |

Similarly, taking the mathematical expectation of (6.1) gives

| (35) |

which yields

| (36) |

Substituting (36) into (6.1), we have

| (37) |

Similarly to the analysis of (6.1), (9) is uniquely solvable if and only if and are invertible. Then can be derived.

The rest of the argument is analogous; by repeating the above procedure step by step, it can be verified that is given by

| (38) |

and is given by

| (39) |

where ,,, satisfy the following relationships

| (40) |

In other words, in (4.6) has been verified for .

Next, we calculate . From (7), we obtain

| (41) |

where we have used the relationships

| (42) |

and

| (43) |

which means that (27) has been proved for .

To proceed by induction, assume that the assertions of Theorem 4.6 hold for ; that is, for , the following assertions hold:

We now verify (4.6) for . In fact, from (16), we have

| (44) |

Hence, (9) is uniquely solvable if and only if and are invertible. In this case, and in (4.6) can be calculated from (6.1), respectively.

Next, we verify . Similarly to the arguments in (6.1), it follows that

| (45) |

i.e., can be proved from (6.1).

Following the derivations of (6.1)-(40) and (6.1), it can be verified that satisfies (4.6), and can be expressed as (4.6).

To complete the induction, we calculate :

| (46) |

which leads to (27). This completes the induction.

As a consequence, we have shown that the unique solvability of (9) is equivalent to the invertibility of and . In other words, Problem 2.2 is uniquely solvable if and only if and are invertible for Furthermore, the unique optimal control strategy can be presented as (4.6).

Finally, we calculate the optimal cost functional under the optimal control (4.6). It follows that

| (47) |

References

- 1 M. Kac, “Foundations of kinetic theory,” Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability, 3(1956), pp. 171-197.

- 2 H. P. McKean, “A class of Markov processes associated with nonlinear parabolic equations,” Proceedings of the National Academy of Sciences of the United States of America, 56(1966), no. 6, pp. 1907-1911.

- 3 J. Yong, “Linear-quadratic optimal control problems for mean-field stochastic differential equations,” SIAM Journal on Control and Optimization, 51(2013), no. 4, pp. 2809-2838.

- 4 R. Elliott, X. Li, and Y. Ni, “Discrete time mean-field stochastic linear-quadratic optimal control problems,” Automatica, 49(2013), no. 11, pp. 3222-3233.

- 5 Y. Ni, R. Elliott and X. Li, “Discrete-time mean-field stochastic linear-quadratic optimal control problems, II: Infinite horizon case,” Automatica, 57(2015), pp. 65-77.

- 6 Q. Qi, H. Zhang and Z. Wu, “Stabilization control for linear continuous time mean-field systems,” IEEE Transactions on Automatic Control, 64(2019), no. 8, pp. 3461-3468.

- 7 H. Zhang and Q. Qi, “Optimal control for mean-field system: discrete time case,” IEEE 55th Conference on Decision and Control, (2016), pp. 4474-4480.

- 8 H. Zhang, Q. Qi and M. Fu, “Optimal stabilization control for discrete time mean-field stochastic systems,” IEEE Transactions on Automatic Control, 64(2019), no. 3, pp. 1125-1136.

- 9 B. C. Wang and H. Zhang, “Indefinite linear quadratic mean field social control problems with multiplicative noise,” IEEE Transactions on Automatic Control, 66(2021), no. 11, pp. 5221-5236.

- 10 N. Li, X. Li and Z. Yu, “Indefinite mean-field type linear-quadratic stochastic optimal control problems,” Automatica, 122(2020), pp. 109267:1-109267:10.

- 11 W. Yu, F. Wang, Y. Huang and H. Liu, “Social optimal mean field control problem for population growth model,” Asian Journal of Control, 22(2019), no. 6, pp. 2444-2451.

- 12 Z. Li, D. Marelli, M. Fu and Z. Ren, “Decentralized social-optimal solution of finite number of average field linear quadratic control,” Asian Journal of Control, 24(2022), no. 4, pp. 1916-1927.

- 13 Q. Qi, L. Xie and H. Zhang, “Linear quadratic optimal control for discrete-time mean-field systems with input delay,” IEEE Transactions on Automatic Control, 67(2022), no. 8, pp. 3806-3821.

- 14 H. S. Witsenhausen, “A counterexample in stochastic optimum control,” SIAM Journal on Control, 6(1968), no. 1, pp. 131-147.

- 15 L. Li and H. Zhang, “Stabilization of discrete-time systems with multiplicative noise and multiple delays in the control variable,” SIAM Journal on Control and Optimization, 54(2016), no. 2, pp. 894-917.

- 16 X. Liang and J. Xu, “Control for network control systems with remote and local controllers over unreliable communication channel,” Automatica, 98(2018), pp. 86-94.

- 17 S. M. Asghari, Y. Ouyang and A. Nayyar, “Optimal local and remote controllers with unreliable uplink channels,” IEEE Transactions on Automatic Control, 64(2019), no. 5, pp. 1816-1831.

- 18 Q. Qi, L. Xie and H. Zhang, “Optimal control for stochastic systems with multiple controllers of different information structures,” IEEE Transactions on Automatic Control, 66(2021), no. 9, pp. 4160-4175.

- 19 Q. Wang, B. Fu, C. Lin and P. Li, “Exponential synchronization of chaotic Lur’e systems with time-triggered intermittent control,” Communications in Nonlinear Science and Numerical Simulation, 109(2022), pp. 106298.

- 20 Q. Wang and Y. He, “Time-triggered intermittent control of continuous systems,” International Journal of Robust and Nonlinear Control, 31(2021), pp. 6867-68789.

- 21 A. Nayyar, A. Gupta, C. Langbort and T. Başar, “Common information based Markov perfect equilibria for stochastic games with asymmetric information: finite games,” IEEE Transactions on Automatic Control, 59(2014), no. 3, pp. 555-570.

- 22 A. Gupta, A. Nayyar, C. Langbort and T. Başar, “Common information based Markov perfect equilibria for linear-Gaussian games with asymmetric information,” SIAM Journal on Control and Optimization, 52(2014), no. 5, pp. 3228-3260.