Decomposition with Monotone B-splines: Fitting and Testing

Abstract

A univariate continuous function of bounded variation can always be decomposed as the sum of a non-increasing function and a non-decreasing one. Based on this property, we propose a non-parametric regression method that combines two spline-fitted monotone curves. We demonstrate by extensive simulations that, compared to standard spline-fitting methods, the proposed approach is particularly advantageous in high-noise scenarios. Several theoretical guarantees are established for the proposed approach. Additionally, we present statistics to test the monotonicity of a function based on monotone decomposition, which better control the Type I error and achieve comparable (and in some settings higher) power relative to existing methods. Finally, we apply the proposed fitting and testing approaches to single-cell pseudotime trajectory datasets, identifying significant biological insights for non-monotonically expressed genes through Gene Ontology enrichment analysis. The source code implementing the methodology and producing all results is accessible at https://github.com/szcf-weiya/MonotoneDecomposition.jl.

Keywords: Function Decomposition; Monotone B-splines; Curve Fitting; Test of Monotonicity.

1 Introduction

Suppose we have $n$ pairs of observations $(x_i, y_i)$, $i = 1, \ldots, n$, with $x_i \in \mathbb{R}^p$ and $y_i \in \mathbb{R}$, independent and identically distributed (i.i.d.) according to an unknown probability distribution. Various methods exist for estimating the conditional expectation function $f(x) = \mathrm{E}(Y \mid X = x)$, ranging from simple linear regressions (including ridge and lasso) to more sophisticated nonlinear techniques. Splines are among the most popular methods, particularly when $p = 1$. Unlike existing methods, we aim to estimate the monotone components of $f$ and then use their sum as an estimator of $f$. This is possible because any function of bounded variation can be decomposed into the sum of an increasing function and a decreasing function (a formal proof is given in the Supplementary Material for completeness).
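The decomposition is easy to see for sampled values. The sketch below is a discrete analogue of the Jordan decomposition, written in Python with NumPy as an illustration only (it is not the construction used in the Supplementary Material, and `jordan_decompose` is a hypothetical name): it accumulates the rises of $f$ into a non-decreasing part and the falls into a non-increasing part.

```python
import numpy as np


def jordan_decompose(f_vals):
    """Split samples f(x_1), ..., f(x_n), with x sorted, into a
    non-decreasing part and a non-increasing part that sum to f_vals."""
    f_vals = np.asarray(f_vals, dtype=float)
    steps = np.diff(f_vals)
    up_steps = np.maximum(steps, 0.0)    # keep only the rises
    down_steps = np.minimum(steps, 0.0)  # keep only the falls
    # The increasing part starts at f(x_1); the decreasing part starts at 0.
    f_up = f_vals[0] + np.concatenate(([0.0], np.cumsum(up_steps)))
    f_down = np.concatenate(([0.0], np.cumsum(down_steps)))
    return f_up, f_down


x = np.linspace(0.0, 1.0, 201)
f = np.sin(2 * np.pi * x)
f_up, f_down = jordan_decompose(f)
print(np.allclose(f_up + f_down, f))  # True
```

Adding any increasing sequence to `f_up` and subtracting it from `f_down` yields another valid decomposition, which is the non-uniqueness addressed in Section 2.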

The monotone decomposition idea has been exploited by [5] in their recent algorithm, where the monotone decomposition is incorporated into fitting monotone Bayesian additive regression trees (mBART). They found that fitting by monotone decomposition with mBART outperforms the corresponding BART algorithm proposed earlier in [4]. We here focus on the case of $p = 1$ and adopt B-spline basis functions to represent the monotone components,

$$f(x) = f_u(x) + f_d(x), \qquad f_u(x) = \sum_{j=1}^{J_u} \gamma_j^u B_j^u(x), \qquad f_d(x) = \sum_{j=1}^{J_d} \gamma_j^d B_j^d(x),$$

where the superscripts and subscripts “u” and “d” stand for up (increasing) and down (decreasing), respectively. The monotonicity of each monotone component is ensured by the monotonicity of the coefficients [23], i.e.,

$$\gamma_1^u \le \gamma_2^u \le \cdots \le \gamma_{J_u}^u, \qquad \gamma_1^d \ge \gamma_2^d \ge \cdots \ge \gamma_{J_d}^d.$$

This paper is organized as follows. In Section 2, we formulate monotone decomposition with cubic splines and establish properties of the solution, particularly for monotone functions. In Section 3, we propose the monotone decomposition with smoothing splines and establish similar properties and theoretical guarantees. In Section 4, we present simulation results to demonstrate how fitting via monotone decomposition can outperform the corresponding unconstrained fitting, particularly in high-noise scenarios. In Section 5, we propose statistics for testing monotonicity and, in Section 6, we demonstrate the power of the proposed method via simulations. In Section 7, we present the results of our analysis on single-cell pseudo-time trajectory datasets using the fitting and testing techniques based on monotone decomposition. Finally, we discuss the limitations and potential future work in Section 8.

2 Monotone Decomposition with Cubic Splines

Cubic splines are the most popular polynomial splines for practitioners. It has been argued that cubic splines are the lowest-order splines for which the knot discontinuity is not visible to the human eye, and that there is seldom any good reason to go beyond cubic splines [12]. Moreover, although there are many equivalent bases for representing a spline function, the B-spline basis developed by [7] is numerically attractive [17]. Thus, we use the order-4 B-spline basis to represent cubic splines, under which the problem reduces to the following optimization problem:

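$$\min_{\gamma^u, \gamma^d} \|y - B^u\gamma^u - B^d\gamma^d\|_2^2 \quad \text{s.t.} \quad \gamma_1^u \le \cdots \le \gamma_{J_u}^u, \ \ \gamma_1^d \ge \cdots \ge \gamma_{J_d}^d, \tag{1}$$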

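where $y = (y_1, \ldots, y_n)$ is an $n$-vector of the responses (note that we use round brackets to denote column vectors), $B^u \in \mathbb{R}^{n \times J_u}$ and $B^d \in \mathbb{R}^{n \times J_d}$ are the matrices constructed by evaluating the B-spline basis functions at $x_1, \ldots, x_n$, and $\gamma^u$ and $\gamma^d$ are the coefficient vectors.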
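To make these matrices concrete, here is a minimal Python sketch that builds a cubic B-spline design matrix with SciPy's `BSpline`, placing interior knots at quantiles of the $x_i$ (an assumed convention, consistent with the next paragraph), and checks numerically that non-decreasing coefficients give a non-decreasing curve. The helper name `bspline_design` is hypothetical.

```python
import numpy as np
from scipy.interpolate import BSpline


def bspline_design(x, J, k=3):
    """Evaluate J B-spline basis functions of degree k (order k + 1) at x.
    Interior knots sit at quantiles of x; boundary knots are repeated."""
    x = np.asarray(x, dtype=float)
    probs = np.linspace(0.0, 1.0, J - k + 1)[1:-1]     # J - k - 1 interior knots
    t = np.r_[[x.min()] * (k + 1), np.quantile(x, probs), [x.max()] * (k + 1)]
    B = BSpline(t, np.eye(J), k)(x)                      # column j = j-th basis
    return B, t


rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 1.0, 100))
B, t = bspline_design(x, J=10)
print(np.allclose(B.sum(axis=1), 1.0))                  # partition of unity: True

gamma_up = np.sort(rng.normal(size=10))                 # non-decreasing coefficients
grid = np.linspace(x.min(), x.max(), 500)
f_up = BSpline(t, gamma_up, 3)(grid)
print(np.all(np.diff(f_up) >= -1e-12))                  # monotone curve: True
```

Passing `np.eye(J)` as the coefficient array evaluates all basis functions at once, because `BSpline` accepts multidimensional coefficients.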

For simplicity, we consider $J_u = J_d = J$. Since the knots determining the B-spline basis are conventionally placed at the quantiles of the $x_i$'s, the B-spline basis functions are then also the same, $B_j^u = B_j^d = B_j$, so problem (1) becomes

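$$\min_{\gamma^u, \gamma^d} \|y - B\gamma^u - B\gamma^d\|_2^2 \quad \text{s.t.} \quad \gamma_1^u \le \cdots \le \gamma_J^u, \ \ \gamma_1^d \ge \cdots \ge \gamma_J^d, \tag{2}$$

where $B = B^u = B^d$.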

First, Proposition 1 establishes the equivalence between problem (2) and the corresponding unconstrained B-spline fit.

Proposition 1.

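Regardless of the component solutions to problem (2), the overall solution is equivalent to the unconstrained B-spline fit, i.e., the least squares solution (assuming $B$ has full column rank),

$$\hat\gamma^{ls} = \arg\min_{\gamma} \|y - B\gamma\|_2^2 = (B^\top B)^{-1} B^\top y. \tag{3}$$

Specifically, the solution $(\hat\gamma^u, \hat\gamma^d)$ to problem (2) satisfies $\hat\gamma^u + \hat\gamma^d = \hat\gamma^{ls}$.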

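Note that the monotone components in (2) are not identifiable, since

$$B\gamma^u + B\gamma^d = B(\gamma^u + \delta) + B(\gamma^d - \delta),$$

where $\delta$ is an arbitrary increasing sequence, $\delta_1 \le \delta_2 \le \cdots \le \delta_J$. In other words, the decomposition of a general function is not unique since

$$f = f_u + f_d = (f_u + g) + (f_d - g),$$

where $g$ is an arbitrary increasing function.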

To obtain a unique decomposition, we consider the closest decomposition in some sense, e.g., the one for which the difference between the two monotone components is smallest in the $\ell_2$-norm. Thus, we impose a discrepancy constraint on problem (2), as detailed in the following subsections, to obtain a unique solution.

2.1 Discrepancy Constraint: A Motivating Example

Consider the simple function , which may be decomposed as

where is an increasing function. If we set , then it is easy to show that the magnitude of the difference between the two monotone components is lower-bounded by , i.e.,

| (4) |

Since it is unreasonable to have a decreasing component for a strictly increasing function, the ideal decomposition should correspond to . Equation (4) suggests that such an ideal decomposition may be obtained by requiring the two monotone components to differ as little as possible.

In light of this observation, we introduce the following discrepancy constraint for the two monotone components in the decomposition:

$$\|\gamma^u - \gamma^d\|_2^2 \le t, \tag{5}$$

where $t \ge 0$ is a tuning parameter. The role of the parameter $t$ can be summarized as follows:

• if $t = 0$, then $\gamma^u = \gamma^d$; since one vector is non-decreasing and the other non-increasing, both must be constant vectors, and hence $f_u$ and $f_d$ are constant functions;

• if $t = \infty$, then the problem reduces to the unconstrained B-spline fit;

• a moderate $t$ imposes some regularization, which can improve the fit.

2.2 General Functions

With the discrepancy constraint in (5), we can restate problem (2) as

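$$\min_{\gamma^u, \gamma^d} \|y - B\gamma^u - B\gamma^d\|_2^2 \quad \text{s.t.} \quad \gamma_1^u \le \cdots \le \gamma_J^u, \ \ \gamma_1^d \ge \cdots \ge \gamma_J^d, \ \ \|\gamma^u - \gamma^d\|_2^2 \le t. \tag{6}$$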

Defining and

we further rewrite problem (6) as

| (7) |

It is more convenient to consider its Lagrangian form

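$$\min_{\gamma^u, \gamma^d} \|y - B\gamma^u - B\gamma^d\|_2^2 + \mu\|\gamma^u - \gamma^d\|_2^2 \quad \text{s.t.} \quad \gamma_1^u \le \cdots \le \gamma_J^u, \ \ \gamma_1^d \ge \cdots \ge \gamma_J^d, \tag{8}$$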

where $\mu \ge 0$ is the Lagrange multiplier. By Lagrangian duality, there is a one-to-one correspondence between the constrained problem (7) and the Lagrangian form (8): for each value of $t$ in the range where the constraint is active, there is a corresponding value of $\mu$ that yields the same solution from the Lagrangian form (8). Conversely, the solution $(\hat\gamma^u_\mu, \hat\gamma^d_\mu)$ to problem (8) solves the bound problem (7) with $t = \|\hat\gamma^u_\mu - \hat\gamma^d_\mu\|_2^2$. Some basic properties of the solution to problem (8) are summarized in Proposition 2.
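Problem (8) is a convex quadratic program. One possible way to solve it, sketched below under our own assumptions and not necessarily the solver used in MonotoneDecomposition.jl, is to write $\gamma^u$ and $\gamma^d$ as cumulative sums of increments with sign constraints, so that the monotonicity constraints become simple bounds and `scipy.optimize.lsq_linear` applies. The penalty $\mu\|\gamma^u - \gamma^d\|_2^2$ is absorbed as extra rows $\sqrt{\mu}(\gamma^u - \gamma^d)$ of an augmented least squares problem. The helpers `design` and `mono_decomp` are hypothetical names.

```python
import numpy as np
from scipy.interpolate import BSpline
from scipy.optimize import lsq_linear


def design(x, J, k=3):
    """Cubic B-spline design matrix with interior knots at quantiles of x."""
    probs = np.linspace(0.0, 1.0, J - k + 1)[1:-1]
    t = np.r_[[x.min()] * (k + 1), np.quantile(x, probs), [x.max()] * (k + 1)]
    return BSpline(t, np.eye(J), k)(x)


def mono_decomp(y, B, mu):
    """Sketch of problem (8):
        min ||y - B gu - B gd||^2 + mu * ||gu - gd||^2
        s.t. gu non-decreasing, gd non-increasing.
    Writing gu = cumsum(theta), gd = cumsum(phi) turns the constraints into
    theta[1:] >= 0 and phi[1:] <= 0, i.e. simple bounds."""
    J = B.shape[1]
    S = np.tril(np.ones((J, J)))              # S @ v == cumsum(v)
    r = np.sqrt(mu)
    A = np.block([[B @ S, B @ S],             # data-fit rows
                  [r * S, -r * S]])           # discrepancy-penalty rows
    b = np.concatenate([y, np.zeros(J)])
    lb = np.concatenate([[-np.inf], np.zeros(J - 1), np.full(J, -np.inf)])
    ub = np.concatenate([np.full(J, np.inf), [np.inf], np.zeros(J - 1)])
    sol = lsq_linear(A, b, bounds=(lb, ub)).x
    theta, phi = sol[:J].copy(), sol[J:].copy()
    theta[1:] = np.maximum(theta[1:], 0.0)    # guard against round-off
    phi[1:] = np.minimum(phi[1:], 0.0)
    return np.cumsum(theta), np.cumsum(phi)


rng = np.random.default_rng(1)
x = np.sort(rng.uniform(0.0, 1.0, 80))
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.5, size=x.size)
B = design(x, J=8)
gu, gd = mono_decomp(y, B, mu=1.0)
fit = B @ (gu + gd)                           # fitted values of f_u + f_d
```

With a very small $\mu$, the fitted curve should be close to the unconstrained least squares fit, in line with Proposition 1; larger $\mu$ pulls the two components together.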

2.3 Monotone Functions

To delve deeper into the properties of the solution to problem (8), this section discusses the monotone decomposition of monotone functions. Without loss of generality, we consider increasing functions.

Proposition 3.

Let be the monotone decomposition obtained from problem (8). Suppose is increasing. Then

(i) is a vector with identical elements, i.e., , where the constant ;

(ii) if there are no ties in , i.e., , then

| (9) |

where the unconstrained B-spline solution is given in Equation (3);

(iii) if , where , then

| (10) |

where is a block diagonal matrix with elements

Result (ii) above can be viewed as a special case of (iii) when .

2.4 MSE Comparisons

To quantify the performance of fitting by monotone decomposition, consider the model

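$$y_i = f(x_i) + \varepsilon_i, \quad \varepsilon_i \ \text{i.i.d.}, \ \ \mathrm{E}(\varepsilon_i) = 0, \ \ \mathrm{Var}(\varepsilon_i) = \sigma^2, \quad i = 1, \ldots, n. \tag{11}$$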

Define the mean squared error of the fit,

where , and the expectations are taken over . Proposition 4 shows that fitting with monotone decomposition can achieve better performance, particularly in high-noise scenarios, when the underlying function is monotone.

Proposition 4.

Suppose the monotone decomposition satisfies that is increasing. Let be a matrix defined in Proposition 3 such that is the sub-vector with unique elements. If

where

and the expectations are taken over since (and hence ) depends on , then there exists a monotone decomposition such that .

In particular, if there are no ties in , i.e., for different , then there always exists a monotone decomposition such that regardless of the noise level.

The lower bound on the noise level in Proposition 4 might not be easy to evaluate. Nonetheless, the key implication is that monotone decomposition fitting can achieve better performance when the noise level is large enough. The extensive simulations in Section 4 are consistent with this argument. Moreover, although Proposition 4 is specifically established for monotone functions, the simulations show that monotone decomposition fitting with cubic splines can also outperform the corresponding unconstrained cubic splines on random functions, particularly in high-noise scenarios.

3 Monotone Decomposition with Smoothing Splines

When using cubic splines, it is typically necessary to determine both the number of basis functions, $J$, and the placement of the knots. In contrast, smoothing splines use all unique data points as knots, thus bypassing the need to choose the number and placement of knots for the B-spline basis functions.

With B-spline basis functions, the smoothing spline can be estimated as follows,

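$$\min_{\gamma} \|y - B\gamma\|_2^2 + \lambda \gamma^\top \Omega \gamma, \tag{12}$$

where $\Omega$, with entries $\Omega_{jk} = \int B_j''(x) B_k''(x)\,dx$, is called the roughness penalty matrix and $\lambda \ge 0$ is the penalty parameter. For this reason, smoothing splines can be viewed as penalized splines.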
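For cubic B-splines the second derivatives are piecewise linear, so $\Omega$ can be computed exactly with a two-point Gauss–Legendre rule on each knot interval. The sketch below (hypothetical helper names; knots at all sorted data points as described above) builds $\Omega$ and solves (12) through the normal equations $(B^\top B + \lambda\Omega)\gamma = B^\top y$. It is an illustration, not a production smoothing-spline routine.

```python
import numpy as np
from scipy.interpolate import BSpline


def roughness_penalty(t, k=3):
    """Omega[i, j] = integral of B_i''(x) B_j''(x) over the base interval.
    Second derivatives of cubic B-splines are piecewise linear, so their
    products are quadratics and 2-point Gauss-Legendre is exact."""
    J = len(t) - k - 1
    nodes, weights = np.polynomial.legendre.leggauss(2)
    breaks = np.unique(t[k:J + 1])
    second = [BSpline(t, np.eye(J)[j], k).derivative(2) for j in range(J)]
    Omega = np.zeros((J, J))
    for a, b in zip(breaks[:-1], breaks[1:]):
        xq = 0.5 * (b - a) * nodes + 0.5 * (a + b)   # nodes mapped to [a, b]
        wq = 0.5 * (b - a) * weights
        D = np.column_stack([d2(xq) for d2 in second])   # shape (2, J)
        Omega += D.T @ (wq[:, None] * D)
    return Omega


def smoothing_spline_coef(B, y, Omega, lam):
    """Solve (B^T B + lam * Omega) gamma = B^T y."""
    return np.linalg.solve(B.T @ B + lam * Omega, B.T @ y)


rng = np.random.default_rng(3)
x = np.sort(rng.uniform(0.0, 1.0, 30))
t = np.r_[[x[0]] * 3, x, [x[-1]] * 3]        # every data point is a knot
B = BSpline(t, np.eye(len(t) - 4), 3)(x)     # n + 2 cubic B-spline columns
Omega = roughness_penalty(t)
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.3, size=x.size)
gamma = smoothing_spline_coef(B, y, Omega, lam=1e-4)
```

Constants and linear functions have zero second derivative, so $\Omega$ annihilates their coefficient vectors; the penalty only acts on curvature.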

Imposing the roughness penalty on problem (8), where , we have the Lagrangian form of monotone decomposition with smoothing splines,

| (13) |

For general functions, the properties in Proposition 2 also hold for the monotone decomposition with smoothing splines.

3.1 Monotone Functions

Likewise, we examine the characteristics of monotone decomposition with smoothing splines for monotone functions. The solutions exhibit shrinkage-with-offset patterns analogous to those in Proposition 3 for monotone decomposition with cubic splines, as stated in Proposition 5.

Proposition 5.

Let be the monotone decomposition obtained from problem (13). Suppose is increasing. Then

(i) is a vector with identical elements, i.e., , where the constant ;

(ii) if there are no ties in , i.e., the inequalities hold strictly, , then

| (14) |

where is the solution to smoothing spline with penalty parameter ,

(iii)

3.2 MSE Comparisons

Based on model (11), to compare the fitting performance of monotone decomposition with smoothing splines against that of plain smoothing splines, we further define the mean squared error for smoothing splines,

where is the solution to smoothing splines with penalty parameter .

Proposition 6 shows that employing monotone decomposition with smoothing splines can yield a smaller mean squared error (MSE) than smoothing splines for monotone functions, particularly when the noise level is sufficiently high. While condition (15) in Proposition 6 may appear intricate, the simulations presented in the next section support this assertion empirically. Furthermore, although the proposition is specifically formulated for monotone functions, the simulations show that monotone decomposition applied to general functions can still achieve better performance in high-noise scenarios.

Proposition 6.

Consider the smoothing spline with penalty parameter . Let be the coefficient vector and denote its hat matrix by . If

| (15) |

and suppose the monotone decomposition is increasing with no ties, then there exists a monotone decomposition with parameters , where , that achieves smaller mean squared error than .

4 Simulations for Fitting

In this section, we compare the performance of monotone decomposition using simulated examples. We generate data from a function $f$ with standard Gaussian noise,

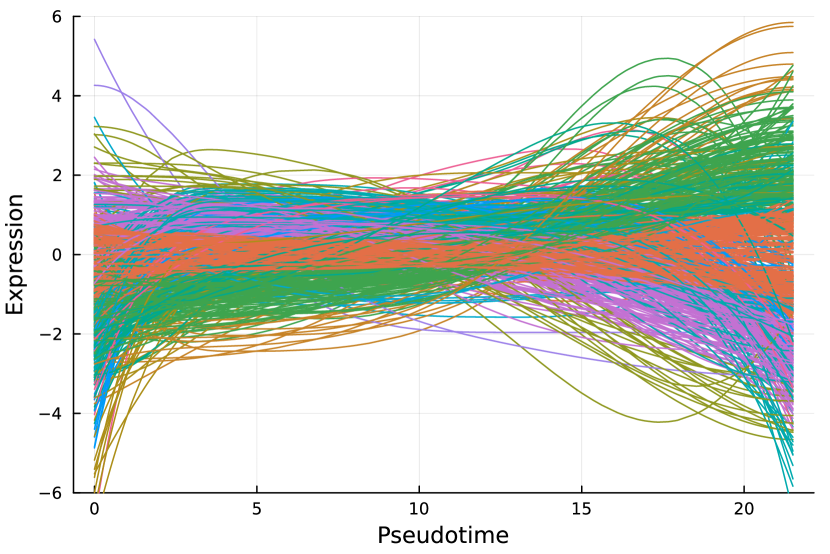

To cover a diverse range of functional forms, we consider the following types of functions.

• monotone functions: (i) polynomial function: ; (ii) exponential function: ; (iii) sigmoid function: .

• general functions: (i) unimodal: ; (ii) random functions, where the kernel can be Squared Exponential (SE), Rational Quadratic (RQ), Matérn (Mat) or Periodic [18]. The numerical values appended to the kernel names in Tables 6 and 8 are the kernel parameters. For example, “Mat12” refers to the Matérn kernel with parameter $\nu = 1/2$. The detailed procedure for generating a random function and a visualization of those curves can be found in the Supplementary Material.
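The exact generation procedure is given in the Supplementary Material; as a rough illustration only, the sketch below draws zero-mean Gaussian-process sample paths with a squared exponential kernel and a Matérn kernel with $\nu = 1/2$ (the exponential kernel). Reading the appended numbers (e.g. the 0.1 in “SE-0.1”) as length-scales is our assumption, as are the function names.

```python
import numpy as np


def se_kernel(x, ell):
    """Squared exponential kernel exp(-(x - x')^2 / (2 ell^2))."""
    d = x[:, None] - x[None, :]
    return np.exp(-0.5 * (d / ell) ** 2)


def matern12_kernel(x, ell):
    """Matern kernel with nu = 1/2, i.e. exp(-|x - x'| / ell)."""
    return np.exp(-np.abs(x[:, None] - x[None, :]) / ell)


def sample_gp(x, kernel, ell, rng):
    """One zero-mean GP path; eigh plus clipping tolerates the numerically
    singular covariance matrices that smooth kernels produce."""
    w, V = np.linalg.eigh(kernel(x, ell))
    return V @ (np.sqrt(np.clip(w, 0.0, None)) * rng.standard_normal(len(x)))


rng = np.random.default_rng(4)
x = np.linspace(0.0, 1.0, 100)
f_se = sample_gp(x, se_kernel, 0.1, rng)        # plays the role of "SE-0.1"
f_mat = sample_gp(x, matern12_kernel, 1.0, rng)  # plays the role of "Mat12-1"
y = f_se + rng.standard_normal(x.size)           # add standard Gaussian noise
```

A Cholesky factor would also work with enough diagonal jitter; the eigendecomposition avoids having to pick a jitter size.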

To compare the performance of different methods, we adopt the mean squared fitting error (MSFE), i.e., the residual sum of squares (RSS) divided by the sample size, and the mean squared prediction error (MSPE),

where are equally spaced within the same range of . Based on replications, we estimate the mean MSFE and MSPE, together with their respective standard errors. To assess whether the difference in MSPE between the fit by monotone decomposition and the corresponding spline fit is significant, we consider

and denote the differences for each experiment as . We report the $p$-value for the one-sided $t$-test versus when (or when ). We also report the proportion of experiments in which the fit by monotone decomposition achieves better performance.

4.1 Cubic Splines

First, we consider the monotone decomposition with cubic splines. There are two tuning parameters: the number of basis functions $J$ and the Lagrange multiplier $\mu$ for the discrepancy between the two components. We adopt two strategies to optimize these parameters:

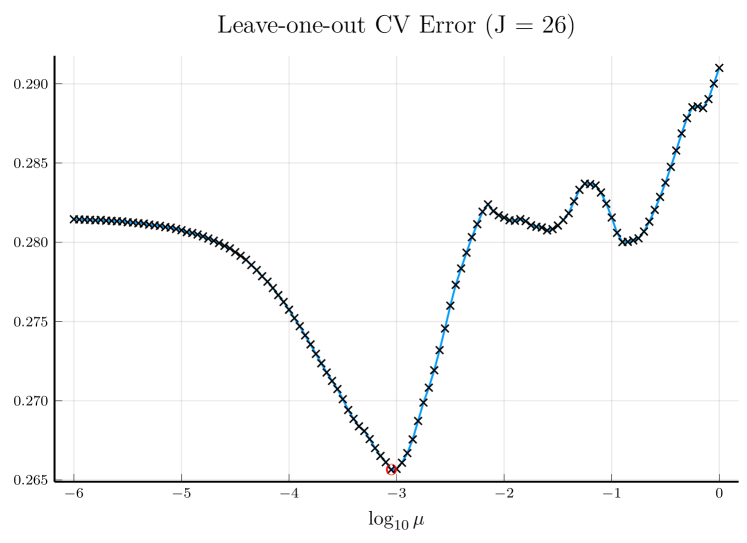

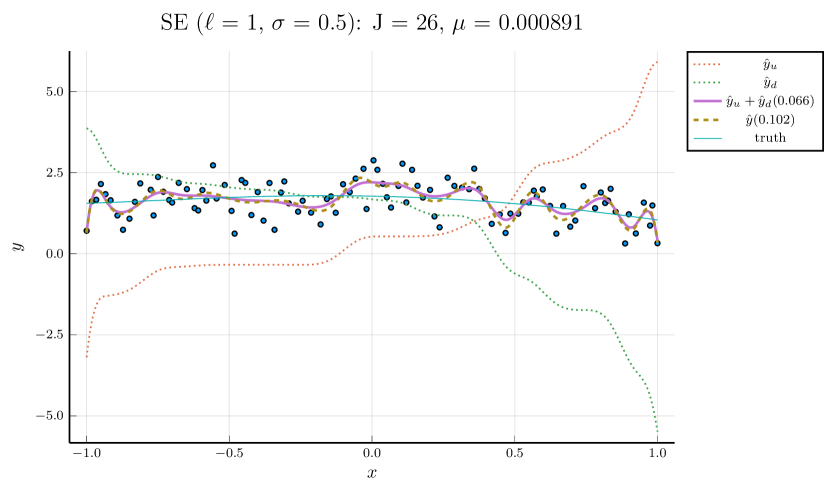

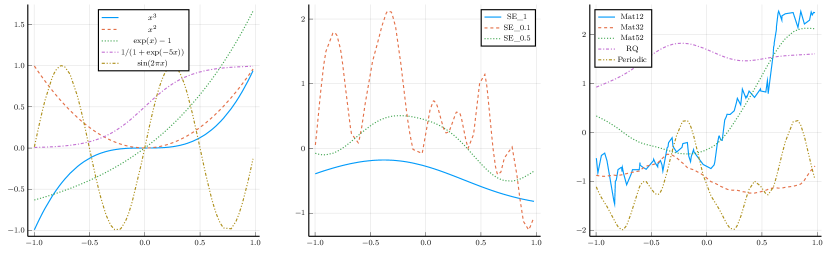

Tune $\mu$ with fixed $J$:

We choose $J$, the tuning parameter for cubic splines, to minimize the cross-validation (CV) error, and then perform the monotone decomposition with cubic splines using the same $J$ while tuning $\mu$. Figure 1 shows an example, with $J$ selected by CV. The left panel shows the leave-one-out CV error plotted against . The cubic spline fit and the monotone decomposition fit are displayed in the right panel: the former achieves an MSPE of 0.102, while the latter improves the MSPE to 0.066.
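For the unconstrained cubic spline, leave-one-out CV does not require refitting: with the knots held fixed, the fit is linear in $y$, and the LOOCV error equals $\frac{1}{n}\sum_i \{(y_i - \hat y_i)/(1 - h_{ii})\}^2$, where $h_{ii}$ are the diagonal entries of the hat matrix. The Python sketch below selects $J$ this way; the helper names are hypothetical, and the shortcut does not carry over to the constrained monotone decomposition fit, which has to be cross-validated by refitting.

```python
import numpy as np
from scipy.interpolate import BSpline


def design(x, J, k=3):
    """Cubic B-spline design matrix with interior knots at quantiles of x."""
    probs = np.linspace(0.0, 1.0, J - k + 1)[1:-1]
    t = np.r_[[x.min()] * (k + 1), np.quantile(x, probs), [x.max()] * (k + 1)]
    return BSpline(t, np.eye(J), k)(x)


def loocv_error(x, y, J):
    """Exact LOOCV error of the least squares cubic spline with J basis
    functions, keeping the knots fixed."""
    B = design(x, J)
    Q, _ = np.linalg.qr(B)          # hat matrix H = Q Q^T (B full column rank)
    h = np.sum(Q ** 2, axis=1)      # leverages h_ii
    resid = y - Q @ (Q.T @ y)
    return np.mean((resid / (1.0 - h)) ** 2)


rng = np.random.default_rng(2)
x = np.sort(rng.uniform(0.0, 1.0, 100))
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.5, size=x.size)
Js = list(range(5, 21))
cv = [loocv_error(x, y, J) for J in Js]
J_best = Js[int(np.argmin(cv))]
```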

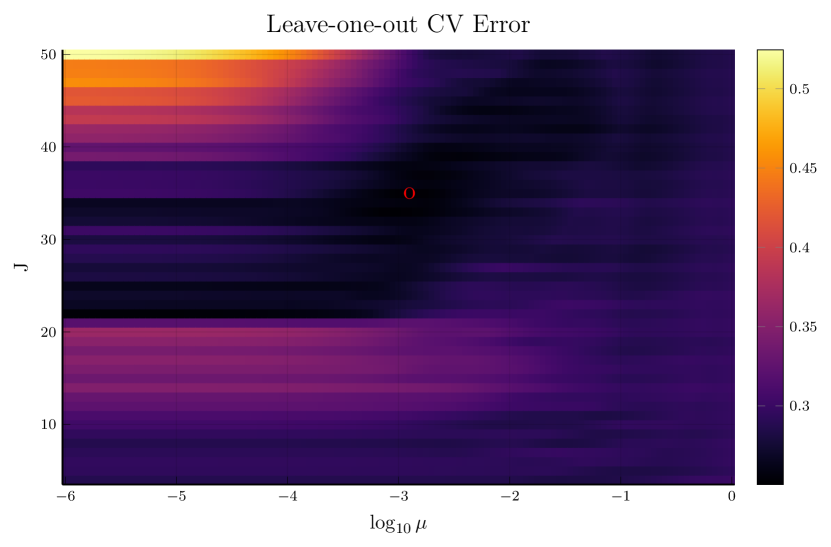

Tune $J$ and $\mu$ simultaneously:

Instead of fixing $J$ in the monotone decomposition procedure, we also use cross-validation to choose it, together with $\mu$. Figure 2 displays an example of this process, where the left panel shows the CV error for each parameter pair $(J, \mu)$, and the right panel compares the fit obtained with the parameters that minimize the CV error to the cubic spline fit, whose parameter $J$ is tuned separately by CV.

Note that the right panels of Figures 1 and 2 depict the same training data. Although the MSPE of 0.079 obtained by tuning $J$ and $\mu$ simultaneously is slightly larger than the MSPE of 0.066 obtained by tuning $\mu$ with fixed $J$, both monotone decomposition fits outperform the cubic spline fit, which has an MSPE of 0.102.

To provide comprehensive comparisons, we conducted 100 repetitions for 12 types of curves under different noise levels. The results of tuning $J$ and $\mu$ simultaneously are summarized in Table 6, and the results of tuning $\mu$ with fixed $J$ can be found in the Supplementary Material. For some curves with low noise (e.g., ), such as , the decomposition method performs slightly worse than cubic spline fitting. Nevertheless, the monotone decomposition always outperforms cubic spline fitting in higher-noise (e.g., ) scenarios, regardless of the optimization strategy.

| curve | σ | MSFE (CubicSpline) | MSFE (MonoDecomp) | MSPE (CubicSpline) | MSPE (MonoDecomp) | p-value | prop. |
|---|---|---|---|---|---|---|---|
| | 1.0 | 9.71e+00 (7.3e-02) | 9.73e+00 (7.3e-02) | 7.50e+00 (3.1e-01) | 6.93e+00 (2.6e-01) | 5.91e-03 (**) | 0.59 |
| | 1.0 | 9.65e+00 (7.4e-02) | 9.60e+00 (7.2e-02) | 7.63e+00 (3.5e-01) | 7.17e+00 (2.7e-01) | 2.21e-02 (*) | 0.6 |
| | 1.0 | 9.57e+00 (5.9e-02) | 9.49e+00 (7.3e-02) | 7.56e+00 (2.8e-01) | 7.08e+00 (3.1e-01) | 3.59e-02 (*) | 0.57 |
| sigmoid | 1.0 | 9.51e+00 (8.5e-02) | 9.50e+00 (8.7e-02) | 7.33e+00 (3.2e-01) | 6.61e+00 (2.6e-01) | 1.45e-03 (**) | 0.56 |
| SE-1 | 1.0 | 9.55e+00 (7.0e-02) | 9.51e+00 (7.6e-02) | 7.29e+00 (3.2e-01) | 6.62e+00 (2.7e-01) | 5.51e-03 (**) | 0.63 |
| SE-0.1 | 1.0 | 9.29e+00 (8.5e-02) | 9.20e+00 (9.3e-02) | 1.44e+01 (2.6e-01) | 1.38e+01 (2.4e-01) | 5.93e-04 (***) | 0.7 |
| Mat12-1 | 1.0 | 9.79e+00 (8.9e-02) | 9.73e+00 (9.1e-02) | 1.26e+01 (2.9e-01) | 1.17e+01 (2.4e-01) | 9.31e-07 (***) | 0.68 |
| Mat12-0.1 | 1.0 | 1.04e+01 (1.3e-01) | 1.04e+01 (1.3e-01) | 2.07e+01 (2.4e-01) | 2.01e+01 (2.4e-01) | 1.85e-04 (***) | 0.73 |
| Mat32-1 | 1.0 | 9.62e+00 (8.2e-02) | 9.61e+00 (8.3e-02) | 9.01e+00 (3.5e-01) | 8.00e+00 (2.6e-01) | 1.46e-04 (***) | 0.56 |
| Mat32-0.1 | 1.0 | 9.62e+00 (1.1e-01) | 9.53e+00 (9.7e-02) | 1.68e+01 (2.5e-01) | 1.58e+01 (2.1e-01) | 4.67e-10 (***) | 0.72 |
| RQ-0.1-0.5 | 1.0 | 9.55e+00 (1.1e-01) | 9.50e+00 (1.2e-01) | 1.50e+01 (2.4e-01) | 1.44e+01 (2.6e-01) | 5.01e-03 (**) | 0.68 |
| Periodic-0.1-4 | 1.0 | 9.41e+00 (1.2e-01) | 9.31e+00 (1.1e-01) | 1.72e+01 (3.0e-01) | 1.60e+01 (2.5e-01) | 2.03e-10 (***) | 0.63 |
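The p-value and prop. columns can be computed from the per-replication MSPEs as sketched below, assuming $d_i$ is the MSPE of the spline fit minus the MSPE of the monotone decomposition fit in replication $i$, so that the one-sided alternative $\mathrm{E}\,d_i > 0$ favors the decomposition. The function name is hypothetical and the inputs in the usage line are toy numbers, not results from the table.

```python
import numpy as np
from scipy import stats


def compare_mspe(mspe_spline, mspe_md):
    """One-sided paired t-test and win proportion over replications."""
    d = np.asarray(mspe_spline, dtype=float) - np.asarray(mspe_md, dtype=float)
    n = d.size
    t_stat = d.mean() / (d.std(ddof=1) / np.sqrt(n))
    p_value = stats.t.sf(t_stat, df=n - 1)   # H1: E[d] > 0
    prop = np.mean(d > 0)                    # share of replications won by MD
    return t_stat, p_value, prop


# Toy numbers for illustration only (not results from the paper).
t_stat, p_value, prop = compare_mspe([2.0, 3.0, 4.0, 5.0, 6.0], [1.0] * 5)
```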

4.2 Smoothing Splines

This section compares the fitting performance of monotone decomposition with smoothing splines to that of smoothing splines. There are two tuning parameters: the penalty parameter $\lambda$ and the Lagrange multiplier $\mu$ for the discrepancy. We consider three strategies to optimize these parameters:

• Tune $\lambda$ for smoothing splines first, then tune $\mu$ for the monotone decomposition with smoothing splines using the tuned $\lambda$;

• According to Proposition 6, tune $\lambda$ for smoothing splines first, then tune the shrinkage factor for monotone decomposition with smoothing splines using penalty parameter ;

• Simultaneously tune $\lambda$ and $\mu$ for monotone decomposition with smoothing splines.

All strategies use cross-validation (CV) to determine the parameters; specifically, we pick the tuning parameters that minimize the CV error.

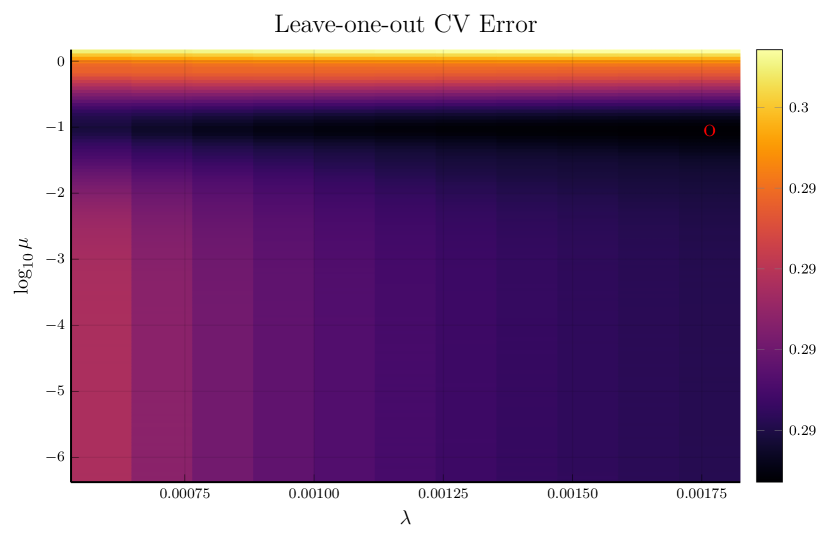

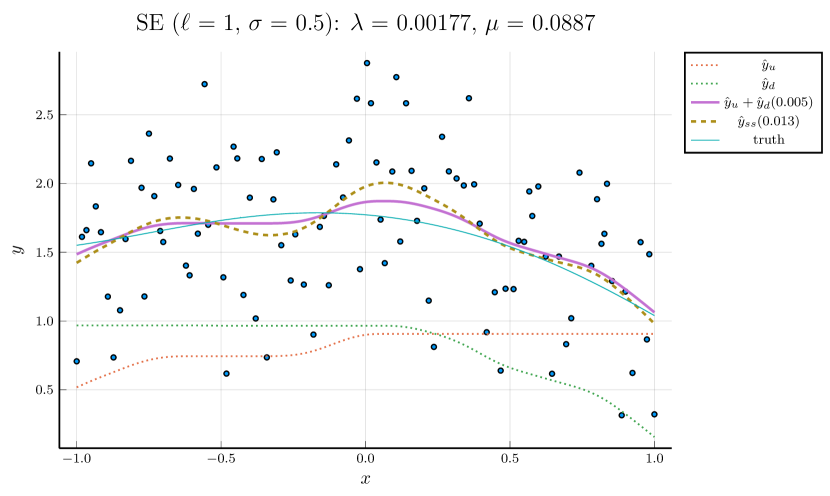

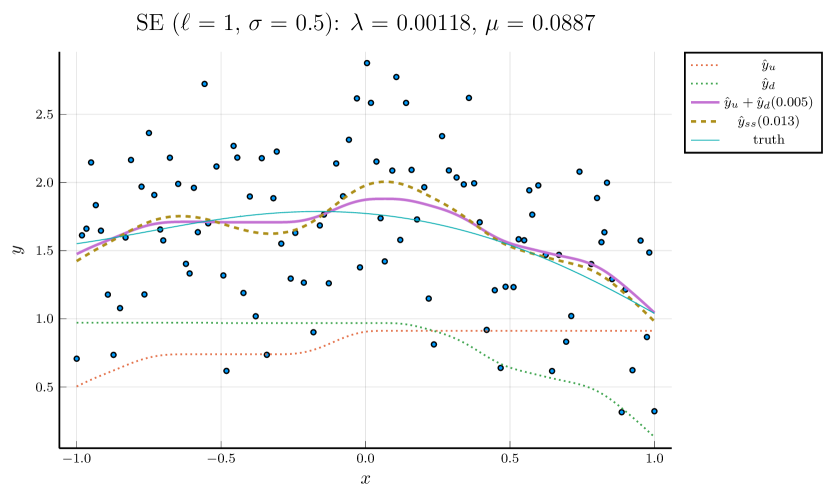

For brevity, we only present the results of the third strategy in this section; results of the other two strategies can be found in the Supplementary Material. Figure 3 illustrates the simultaneous tuning of $(\lambda, \mu)$ on the same toy example presented in Figures 1 and 2. The smoothness penalty in smoothing splines results in fitted curves that are notably less wiggly than those fitted by cubic splines without such a penalty.

The results of 100 repeated experiments are summarized in Table 8. The monotone decomposition achieves an average MSPE no larger than that of the corresponding smoothing splines for every curve, although the improvement is not significant for some curves (e.g., SE-0.1, Mat12-0.1, Mat32-0.1, and Periodic-0.1-4). Moreover, the monotone decomposition with CV-tuned is more likely to obtain better performance than the other two strategies. Regardless of the optimization strategy, all results consistently show that the monotone decomposition fit performs well, especially in high-noise scenarios.

| curve | σ | MSFE (SmoothSpline) | MSFE (MonoDecomp) | MSPE (SmoothSpline) | MSPE (MonoDecomp) | p-value | prop. |
|---|---|---|---|---|---|---|---|
| | 1.0 | 9.65e+00 (8.9e-02) | 9.69e+00 (8.5e-02) | 6.44e+00 (2.9e-01) | 6.35e+00 (2.5e-01) | 1.68e-01 | 0.49 |
| | 1.0 | 9.75e+00 (8.2e-02) | 9.77e+00 (8.2e-02) | 6.47e+00 (1.8e-01) | 6.21e+00 (1.7e-01) | 1.18e-04 (***) | 0.65 |
| | 1.0 | 9.74e+00 (8.4e-02) | 9.75e+00 (8.4e-02) | 5.94e+00 (2.3e-01) | 5.82e+00 (2.1e-01) | 3.12e-02 (*) | 0.58 |
| sigmoid | 1.0 | 9.60e+00 (7.8e-02) | 9.64e+00 (7.5e-02) | 5.99e+00 (2.9e-01) | 5.68e+00 (2.4e-01) | 4.47e-04 (***) | 0.67 |
| SE-1 | 1.0 | 9.67e+00 (8.3e-02) | 9.70e+00 (8.1e-02) | 6.32e+00 (2.8e-01) | 6.11e+00 (2.5e-01) | 3.86e-03 (**) | 0.6 |
| SE-0.1 | 1.0 | 8.92e+00 (9.2e-02) | 8.99e+00 (9.0e-02) | 1.23e+01 (2.1e-01) | 1.23e+01 (2.1e-01) | 4.32e-01 | 0.57 |
| Mat12-1 | 1.0 | 9.65e+00 (9.5e-02) | 9.69e+00 (9.2e-02) | 1.15e+01 (2.3e-01) | 1.13e+01 (2.1e-01) | 3.40e-04 (***) | 0.56 |
| Mat12-0.1 | 1.0 | 9.76e+00 (1.2e-01) | 9.92e+00 (1.1e-01) | 1.90e+01 (2.2e-01) | 1.90e+01 (2.2e-01) | 2.06e-01 | 0.58 |
| Mat32-1 | 1.0 | 9.59e+00 (8.5e-02) | 9.64e+00 (7.5e-02) | 7.20e+00 (2.4e-01) | 7.04e+00 (2.0e-01) | 3.65e-02 (*) | 0.49 |
| Mat32-0.1 | 1.0 | 8.96e+00 (1.1e-01) | 9.14e+00 (1.0e-01) | 1.48e+01 (2.2e-01) | 1.47e+01 (2.1e-01) | 1.44e-01 | 0.54 |
| RQ-0.1-0.5 | 1.0 | 9.10e+00 (1.1e-01) | 9.22e+00 (1.1e-01) | 1.27e+01 (2.4e-01) | 1.25e+01 (2.2e-01) | 1.21e-03 (**) | 0.6 |
| Periodic-0.1-4 | 1.0 | 8.69e+00 (1.0e-01) | 8.89e+00 (9.1e-02) | 1.44e+01 (2.1e-01) | 1.43e+01 (1.9e-01) | 3.03e-01 | 0.49 |

5 Test of Monotonicity

Once the two monotone components are obtained through monotone decomposition, in addition to using their sum as a fit, we can also derive statistics for testing monotonicity. Consider the model $Y = f(X) + \varepsilon$, where $X$ is a scalar covariate, $Y$ is a scalar dependent random variable, $\varepsilon$ is the noise satisfying $\mathrm{E}(\varepsilon \mid X) = 0$, and $f$ is an unknown function. Testing monotonicity amounts to testing

$$H_0: f \text{ is monotone} \quad \text{versus} \quad H_1: f \text{ is not monotone},$$

where “monotone” can be specifically (strictly) “increasing” or “decreasing”.

5.1 Related Work

There are many existing approaches for testing monotonicity. [1] constructed a test based on the critical bandwidth: they fitted a local linear regression and determined the smallest bandwidth for which the estimate becomes monotone. This critical bandwidth is then used as the test statistic, and the $p$-value is calculated by the bootstrap. [11] pointed out a shortcoming of this test when the true function has flat or nearly flat spots, and proposed a test that estimates local slopes and approximates the distribution of their weighted minimum. [9] proposed test statistics that are functionals of a U-process based on the signs of . They approximated the limiting distribution by Gaussian processes and then derived the critical values for an asymptotic significance level $\alpha$. [3] used similar U-statistics but introduced a weighting function and proposed a statistic based on , where is chosen from a large set of potentially useful weighting functions to maximize the statistic. [22] used quadratic regression splines to fit the data, took the minimum of the slopes at the knots as the test statistic, and then estimated the null distribution of this statistic by fitting constrained quadratic regression splines.

5.2 Test by Monotone Decomposition

Suppose we have obtained the monotone components. Propositions 3 and 5 imply that the coefficients for one component would be constant if the function is monotone. Thus, we can test the monotonicity of a function by testing whether the coefficients of monotone components are constant. The equivalences are summarized in Table 3.

| Original Hypothesis | Hypothesis in terms of Monotone Decomposition |
|---|---|
| , i.e., | |
| , i.e., | |

In Table 3, the minimum (maximum) of two vectors is defined as:

where is the sample variance of the elements of a vector. To test and , consider the test statistics

Note that the null hypothesis is rejected if the test statistic is large enough. Specifically, given a significance level $\alpha$, the respective null hypothesis is rejected if or , where and denote the critical values of the distributions of under the respective null hypotheses. These null distributions can be approximated by bootstrap samples. Since the and can be heterogeneous, we use the wild bootstrap [6]. Without loss of generality, we focus on testing for increasing functions; the procedure is outlined in Algorithm 1.
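Algorithm 1 itself is not reproduced in this text. The following Python sketch only illustrates the generic wild-bootstrap calibration step under stated assumptions: the fit under the null (increasing) hypothesis is an isotonic regression computed by pool-adjacent-violators, residuals come from an unrestricted cubic polynomial fit, the multipliers are Rademacher weights, and the statistic is a toy RSS contrast standing in for the monotone-decomposition statistic. All function names are hypothetical, and `x` is assumed sorted.

```python
import numpy as np


def pava(y):
    """Non-decreasing least squares fit via pool-adjacent-violators."""
    means, sizes = [], []
    for v in np.asarray(y, dtype=float):
        means.append(v)
        sizes.append(1)
        # Merge the last two blocks while they violate monotonicity.
        while len(means) > 1 and means[-2] > means[-1]:
            s = sizes[-2] + sizes[-1]
            m = (means[-2] * sizes[-2] + means[-1] * sizes[-1]) / s
            means.pop()
            sizes.pop()
            means[-1], sizes[-1] = m, s
    return np.repeat(means, sizes)


def statistic(x, y):
    """Toy statistic (an assumption, not the paper's): RSS of the isotonic
    fit minus RSS of a cubic polynomial fit; large values suggest
    non-monotonicity."""
    rss_iso = np.sum((y - pava(y)) ** 2)
    rss_poly = np.sum((y - np.polyval(np.polyfit(x, y, 3), x)) ** 2)
    return rss_iso - rss_poly


def wild_bootstrap_pvalue(x, y, n_boot=199, rng=None):
    rng = np.random.default_rng(rng)
    t_obs = statistic(x, y)
    null_fit = pava(y)                                  # fit under H0
    resid = y - np.polyval(np.polyfit(x, y, 3), x)      # unrestricted residuals
    t_boot = np.empty(n_boot)
    for b in range(n_boot):
        v = rng.choice([-1.0, 1.0], size=y.size)        # Rademacher weights
        t_boot[b] = statistic(x, null_fit + resid * v)
    return (1 + np.sum(t_boot >= t_obs)) / (n_boot + 1)


rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 100)
y_alt = np.sin(2 * np.pi * x) + rng.normal(scale=0.1, size=x.size)
y_null = x + rng.normal(scale=0.1, size=x.size)
p_alt = wild_bootstrap_pvalue(x, y_alt, rng=1)
p_null = wild_bootstrap_pvalue(x, y_null, rng=2)
```

Multiplying each residual by an independent sign keeps its own scale, which is why the wild bootstrap remains valid when the noise variance differs across observations.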

6 Simulations for Testing

First, we check whether the methods can control the Type I error. Specifically, consider five monotone functions, and . We conducted 100 simulations and calculated the proportion of rejections of the null hypothesis. Ideally, the rejection proportion should not exceed 0.05 (up to Monte Carlo error) at the commonly used significance level $\alpha = 0.05$. The results are reported in Table 4.

| Methods | Curves | |||||||||

| n = 50 | 100 | 200 | n = 50 | 100 | 200 | n = 50 | 100 | 200 | ||

| [22] | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.01 | 0.0 | |

| 0.53 | 0.84 | 1.0 | 0.05 | 0.08 | 0.08 | 0.02 | 0.06 | 0.04 | ||

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.03 | 0.02 | 0.01 | ||

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ||

| 0.0 | 0.0 | 0.0 | 0.01 | 0.0 | 0.0 | 0.04 | 0.06 | 0.05 | ||

| [9] | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ||

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ||

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ||

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ||

| [1] | 0.0 | 0.0 | 0.0 | 0.02 | 0.0 | 0.01 | 0.01 | 0.0 | 0.01 | |

| 0.0 | 0.0 | 0.0 | 0.2 | 0.19 | 0.18 | 0.26 | 0.2 | 0.15 | ||

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.05 | 0.02 | 0.01 | ||

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.04 | 0.02 | 0.0 | ||

| 0.0 | 0.0 | 0.0 | 0.02 | 0.0 | 0.02 | 0.02 | 0.01 | 0.0 | ||

| MDCS | 0.01 | 0.0 | 0.12 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 0.0 | 0.0 | 0.0 | 0.01 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ||

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ||

| 0.02 | 0.11 | 0.03 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ||

| 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ||

| MDSS | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | |

| 0.1 | 0.08 | 0.08 | 0.08 | 0.1 | 0.07 | 0.09 | 0.05 | 0.03 | ||

| 0.0 | 0.02 | 0.02 | 0.01 | 0.03 | 0.07 | 0.05 | 0.06 | 0.05 | ||

| 0.04 | 0.05 | 0.02 | 0.08 | 0.07 | 0.05 | 0.04 | 0.08 | 0.08 | ||

| 0.03 | 0.03 | 0.03 | 0.0 | 0.01 | 0.0 | 0.0 | 0.0 | 0.0 | ||

[9] always accepts the null hypothesis. [22] fails to control the Type I error on curve when the noise level is small, and [1] cannot control the Type I error on curve when the noise level is large. In contrast, our proposed methods, monotone decomposition with cubic splines (MDCS) and monotone decomposition with smoothing splines (MDSS), control the Type I error well in most cases, although the rejection rates are slightly elevated in a few instances.

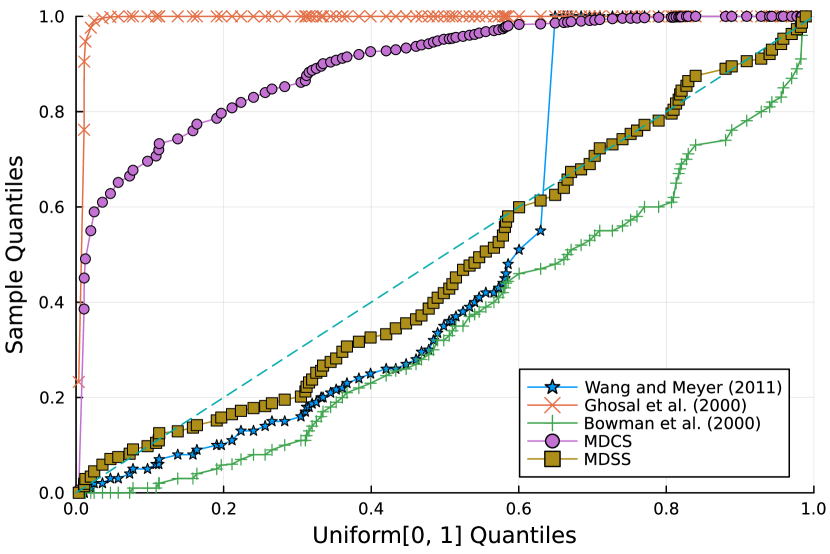

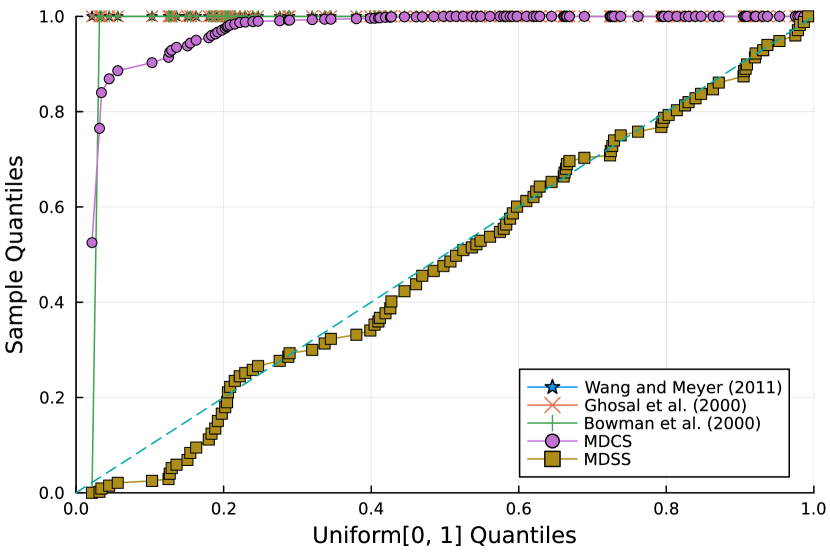

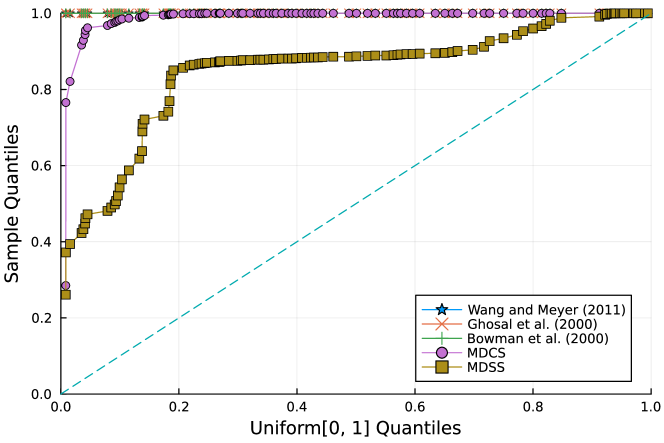

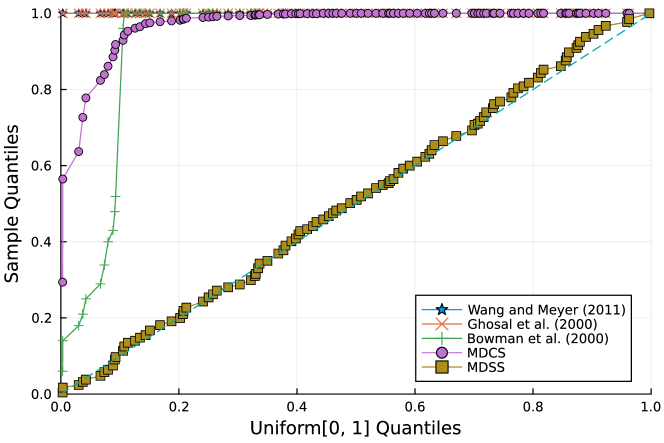

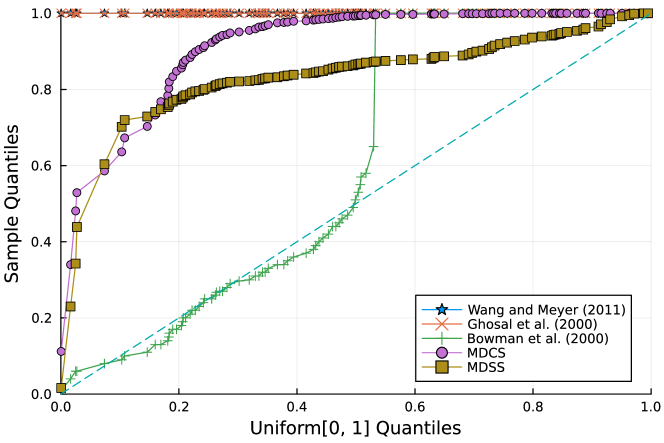

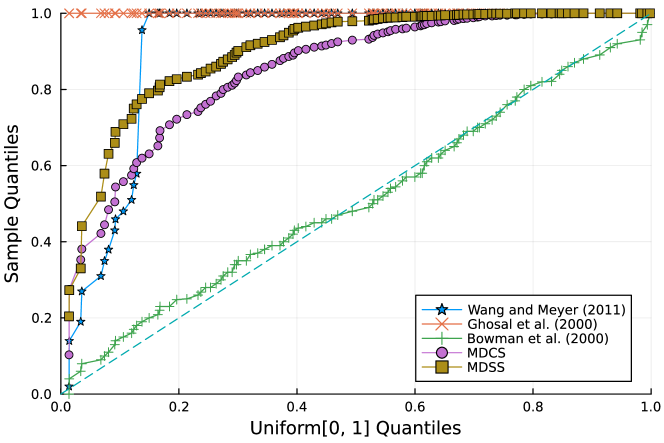

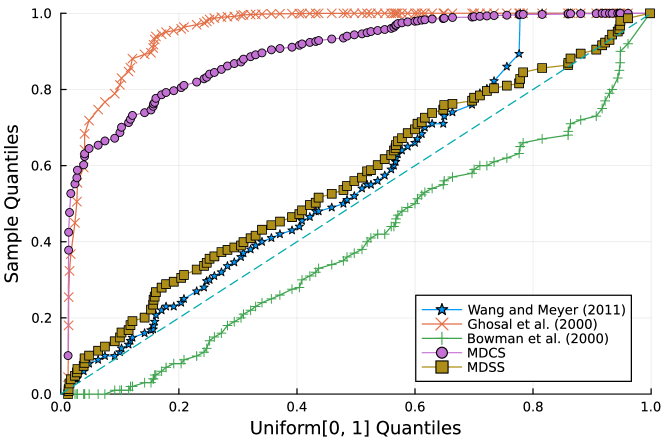

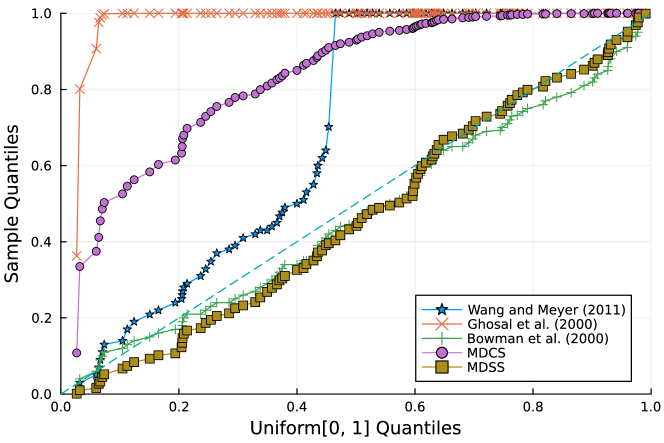

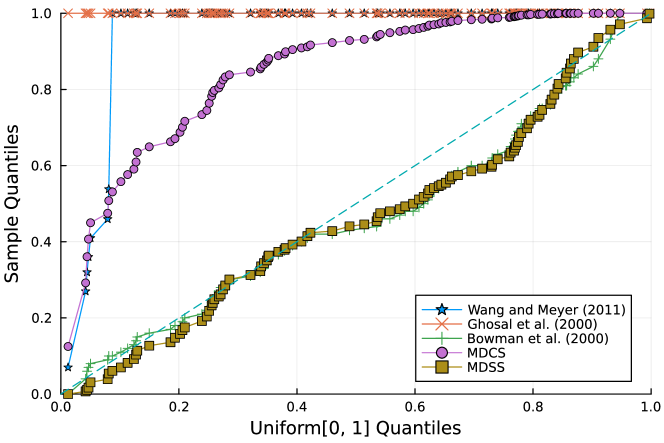

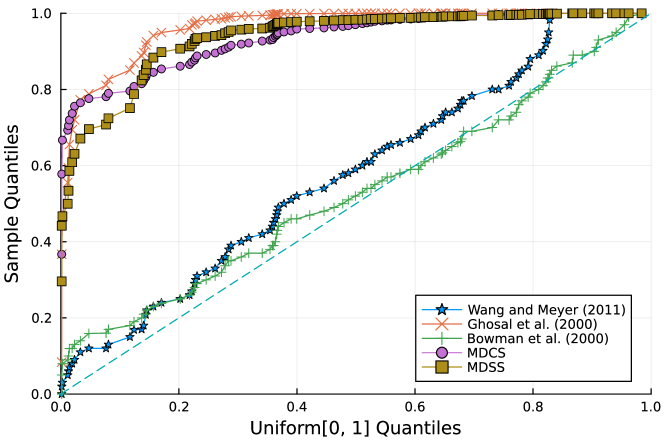

Furthermore, the $p$-value should (approximately) follow $U(0, 1)$ under the null hypothesis. To check the distribution of the $p$-values for each approach, Figure 4 displays uniform QQ plots of 1000 $p$-values for and with sample size and noise level , respectively. The uniform QQ plots of $p$-values for all five curves with different noise levels can be found in the Supplementary Material. Notably, the $p$-values of our proposed MDSS align well with the diagonal line in the QQ plots, indicating the closest resemblance to the uniform distribution.
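A uniform QQ plot compares the sorted $p$-values with the expected uniform quantiles $i/(m+1)$; the Kolmogorov–Smirnov distance to $U(0,1)$ summarizes the same picture in one number. A minimal NumPy sketch follows (the helper name is hypothetical, and plotting is omitted).

```python
import numpy as np


def uniform_qq(pvals):
    """Expected vs. observed quantiles for a uniform QQ plot, plus the
    Kolmogorov-Smirnov distance between the p-values and U(0, 1)."""
    p = np.sort(np.asarray(pvals, dtype=float))
    m = p.size
    i = np.arange(1, m + 1)
    expected = i / (m + 1)
    ks = max(np.max(i / m - p), np.max(p - (i - 1) / m))
    return expected, p, ks


rng = np.random.default_rng(5)
expected, observed, ks = uniform_qq(rng.uniform(size=1000))
```

Points close to the diagonal of `observed` against `expected`, and a small `ks`, indicate well-calibrated $p$-values.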

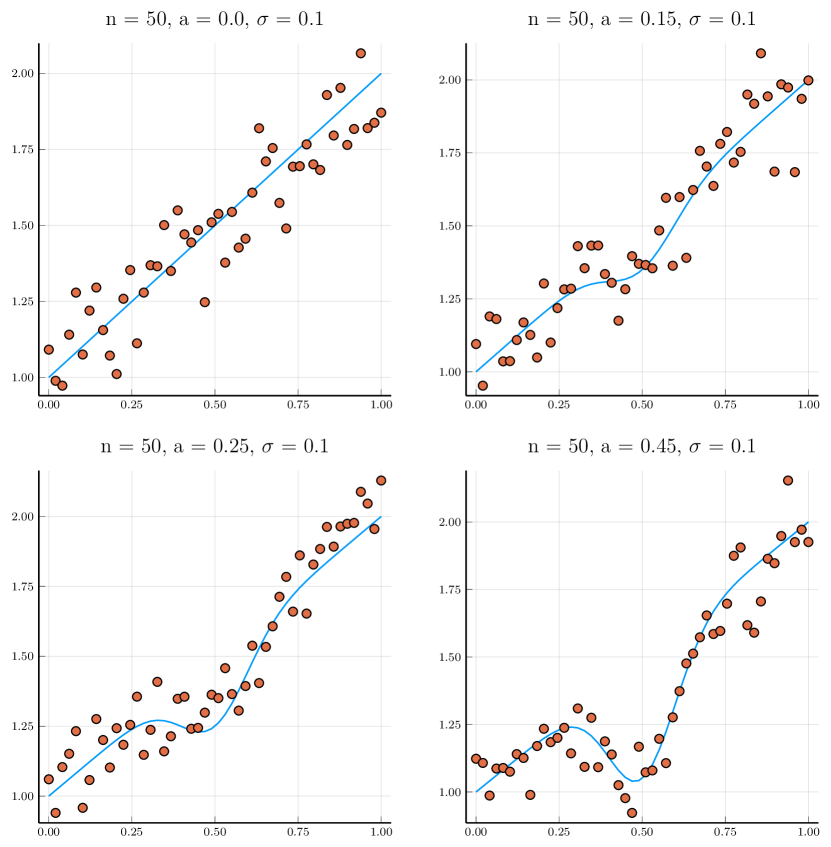

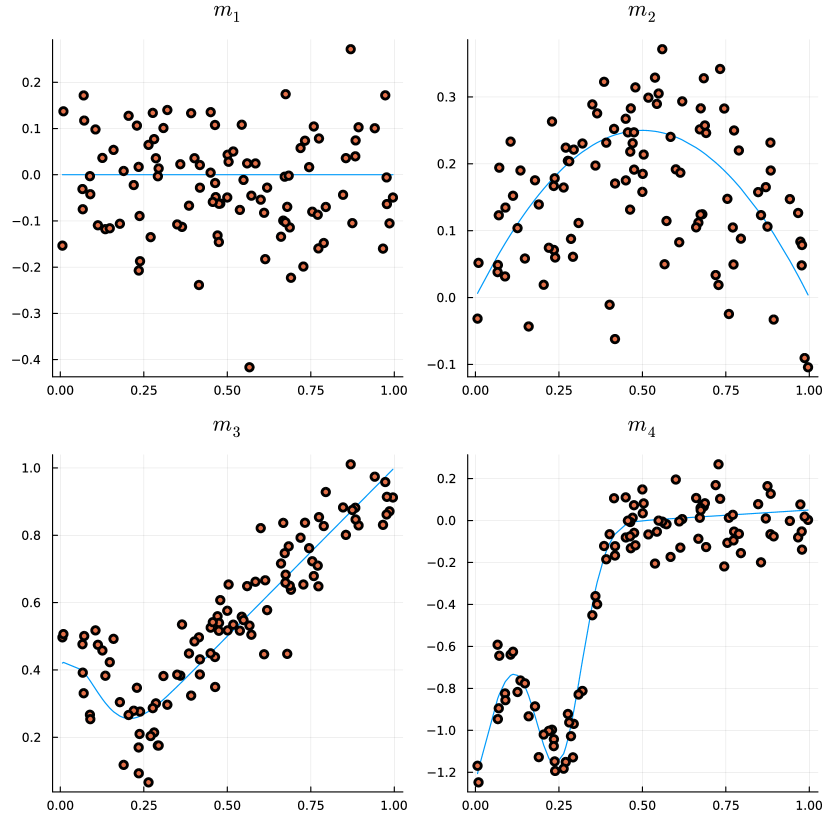

Next, we compare the simulated size and power under the settings of two competitors. The first one is the simulation setting in [1],

where $a = 0$ and $0.15$ generate strongly and just monotone curves, respectively, while $a = 0.25$ and $0.45$ produce mildly and strongly non-monotone shapes, respectively. We also consider the simulation setting in [9], , where

and are non-monotone curves. A visualization of those curves can be found in the Supplementary Material.

For each combination of parameters on each curve, 100 simulations are carried out, each using 100 bootstrap samples. The proportions of rejections of the null hypothesis, i.e., the simulated size and power, are reported. The complete results are displayed in Table 5, from which we make the following observation:

• For most curves, MDCS behaves similarly to MDSS, but MDCS has better control of the Type I error at the cost of some power.

In summary, our proposed methods achieve power comparable to (and sometimes higher than) that of other methods while controlling the Type I error.

| Methods | Curves (setting of [1]) | n = 50 | 100 | 200 | n = 50 | 100 | 200 | n = 50 | 100 | 200 | Curves (setting of [9]) | n = 50 | 100 | 200 | n = 50 | 100 | 200 | n = 50 | 100 | 200 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| [22] | a = 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.01 | 0.01 | 0.01 | m1 | 0.1 | 0.04 | 0.04 | 0.06 | 0.02 | 0.06 | 0.07 | 0.07 | 0.1 |
| | a = 0.15 | 0.0 | 0.0 | 0.0 | 0.07 | 0.04 | 0.06 | 0.03 | 0.03 | 0.01 | m2 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.09 | 0.27 | 0.45 |
| | a = 0.25 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.13 | 0.24 | 0.64 | m3 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.34 | 0.56 | 0.88 |
| | a = 0.45 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.56 | 0.95 | 1.0 | m4 | 0.36 | 0.57 | 0.98 | 0.39 | 0.57 | 0.98 | 0.23 | 0.38 | 0.78 |
| [9] | a = 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | m1 | 0.01 | 0.0 | 0.01 | 0.01 | 0.04 | 0.02 | 0.02 | 0.0 | 0.01 |
| | a = 0.15 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | m2 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.37 | 0.7 | 0.94 |
| | a = 0.25 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.31 | 0.0 | 0.0 | 0.0 | m3 | 0.97 | 1.0 | 1.0 | 0.94 | 1.0 | 1.0 | 0.1 | 0.33 | 0.87 |
| | a = 0.45 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.06 | 0.71 | 0.99 | m4 | 0.01 | 0.19 | 0.53 | 0.02 | 0.13 | 0.53 | 0.0 | 0.05 | 0.34 |
| [1] | a = 0.0 | 0.0 | 0.0 | 0.0 | 0.02 | 0.01 | 0.0 | 0.01 | 0.01 | 0.03 | m1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| | a = 0.15 | 0.0 | 0.0 | 0.0 | 0.0 | 0.03 | 0.0 | 0.01 | 0.02 | 0.04 | m2 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| | a = 0.25 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.09 | 0.16 | 0.44 | m3 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.9 | 1.0 | 1.0 |
| | a = 0.45 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.3 | 0.72 | 0.98 | m4 | 0.0 | 0.0 | 0.0 | 0.01 | 0.02 | 0.0 | 0.34 | 0.33 | 0.28 |
| MDCS | a = 0.0 | 0.01 | 0.0 | 0.07 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | m1 | 0.03 | 0.02 | 0.02 | 0.0 | 0.01 | 0.0 | 0.0 | 0.0 | 0.0 |
| | a = 0.15 | 0.05 | 0.02 | 0.04 | 0.01 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | m2 | 0.98 | 0.99 | 0.95 | 1.0 | 1.0 | 0.94 | 0.18 | 0.35 | 0.65 |
| | a = 0.25 | 0.97 | 1.0 | 1.0 | 0.74 | 0.92 | 0.95 | 0.0 | 0.0 | 0.0 | m3 | 0.89 | 0.93 | 1.0 | 0.9 | 0.97 | 0.99 | 0.16 | 0.22 | 0.29 |
| | a = 0.45 | 1.0 | 1.0 | 1.0 | 0.99 | 1.0 | 1.0 | 0.01 | 0.08 | 0.17 | m4 | 0.89 | 0.91 | 0.94 | 0.88 | 0.92 | 0.99 | 0.51 | 0.68 | 0.81 |
| MDSS | a = 0.0 | 0.02 | 0.07 | 0.06 | 0.02 | 0.08 | 0.03 | 0.02 | 0.04 | 0.04 | m1 | 0.05 | 0.07 | 0.04 | 0.06 | 0.04 | 0.03 | 0.05 | 0.07 | 0.05 |
| | a = 0.15 | 0.05 | 0.03 | 0.05 | 0.03 | 0.06 | 0.05 | 0.02 | 0.05 | 0.06 | m2 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.79 | 0.96 | 1.0 |
| | a = 0.25 | 0.99 | 1.0 | 1.0 | 0.98 | 1.0 | 1.0 | 0.03 | 0.04 | 0.03 | m3 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.67 | 0.83 | 0.97 |
| | a = 0.45 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.46 | 0.82 | 0.98 | m4 | 0.98 | 1.0 | 1.0 | 0.99 | 1.0 | 1.0 | 0.84 | 0.99 | 1.0 |

7 Application: Monotonicity Test for scRNA-seq Trajectory Inference

Single-cell transcriptome sequencing (scRNA-seq) is a powerful technique that allows researchers to profile transcript abundance at the resolution of individual cells. Trajectory inference aims to first allocate cells to lineages and then order them by pseudotime within these lineages. Based on trajectory inference, researchers can discover differentially expressed genes within lineages using methods such as [21]’s tradeSeq, [20]’s PseudotimeDE, and [13]’s Lamian. These methods mostly focus on differentially expressed genes, checking whether the expression trajectory is constant along the pseudotime. Once a gene is identified as differentially expressed, researchers may further check whether its expression exhibits a monotone pattern. A non-decreasing expression pattern indicates that the corresponding gene is switched on and remains needed thereafter along the cell lineage. A decreasing expression pattern indicates that the corresponding gene is needed less and less along the pseudotime. On the other hand, a non-monotone expression pattern indicates that the corresponding gene is involved in more complex dynamics. Such detailed dynamics may illuminate critical regulatory mechanisms of cell differentiation along the corresponding lineage.

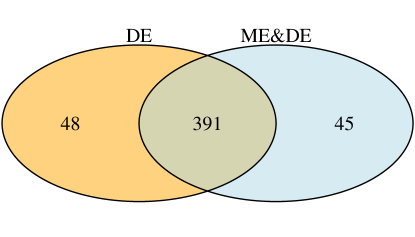

By analogy with the term differentially expressed (DE) gene, used when the null hypothesis that a gene's expression along the trajectory is constant is rejected, we call a gene non-monotonically expressed (nME) when the null hypothesis that its expression is monotone is rejected. We adopt tradeSeq to identify DE genes, and the monotonicity test via monotone decomposition with cubic splines (MDCS) to find nME genes. Both DE genes and nME genes are selected using the Benjamini–Hochberg (BH) procedure to control the false discovery rate (FDR) with cutoff .
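The BH selection step can be sketched in a few lines of Python. This is a generic illustration of the BH procedure, not code taken from the MonotoneDecomposition.jl repository; `bh_adjust` and `bh_reject` are hypothetical helper names, and the FDR cutoff `alpha` is left as an argument. Genes for which a test returns no p-value (for example, NA values from tradeSeq) are assumed to have been dropped beforehand.

```python
from typing import List, Sequence


def bh_adjust(pvals: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvals)
    # Indices sorted by ascending p-value.
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value to the smallest, taking a running minimum
    # of p * m / rank so that the adjusted values stay monotone in rank.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def bh_reject(pvals: Sequence[float], alpha: float) -> List[int]:
    """Indices of the hypotheses rejected by BH at FDR level alpha."""
    return [i for i, q in enumerate(bh_adjust(pvals)) if q <= alpha]
```

Applied to the vector of monotonicity-test p-values, `bh_reject(p_nme, alpha)` returns the indices of the nME genes; applied to the tradeSeq p-values, it returns the DE genes.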

To explore the biological functions of DE genes and nME genes, we examined the Gene Ontology (GO), which is a relational database of terms (concepts) used to describe gene functions, and conducted enrichment analysis [2].

Suppose there are $N$ genes in the reference gene list, among which $n$ genes are in our analyzed gene set. For a GO term of interest, suppose there are $M$ and $k$ genes within the reference gene list and our analyzed gene set, respectively, that are annotated with the GO term. The p-value for the one-sided Fisher's exact test of the null hypothesis that the GO term is not enriched in the analyzed gene set can be calculated based on the hypergeometric distribution:

$$
p = \sum_{i=k}^{\min(n, M)} \frac{\binom{M}{i}\binom{N-M}{n-i}}{\binom{N}{n}}.
$$

We repeat this test for multiple GO terms of interest and correct for multiple comparisons via the BH procedure to control the FDR at the cutoff .
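Write $N$ for the number of genes in the reference list, $n$ for the number in the analyzed set, and $M$ and $k$ for the numbers of genes annotated to a GO term in the reference list and in the analyzed set, respectively. The one-sided p-value is then the upper tail P(X ≥ k) of a hypergeometric variable X. A minimal sketch with exact integer arithmetic follows; the function name is hypothetical and this is not the clusterProfiler implementation. The same number can be obtained from SciPy as `scipy.stats.hypergeom.sf(k - 1, N, M, n)`.

```python
from math import comb


def go_enrichment_pvalue(N: int, n: int, M: int, k: int) -> float:
    """Upper-tail hypergeometric p-value P(X >= k), i.e. one-sided Fisher's exact test.

    N: genes in the reference list; n: genes in the analyzed set;
    M: reference genes annotated to the GO term; k: analyzed genes annotated to it.
    """
    if not (0 <= k <= n <= N and 0 <= M <= N):
        raise ValueError("inconsistent gene counts")
    # Exact big-integer numerator (math.comb returns 0 for impossible terms),
    # followed by a single division by the total number of n-subsets.
    numerator = sum(comb(M, i) * comb(N - M, n - i) for i in range(k, min(n, M) + 1))
    return numerator / comb(N, n)
```

The p-values computed this way for all GO terms of interest would then be passed through the BH adjustment.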

7.1 nME genes can identify significant GO terms when DE genes fail

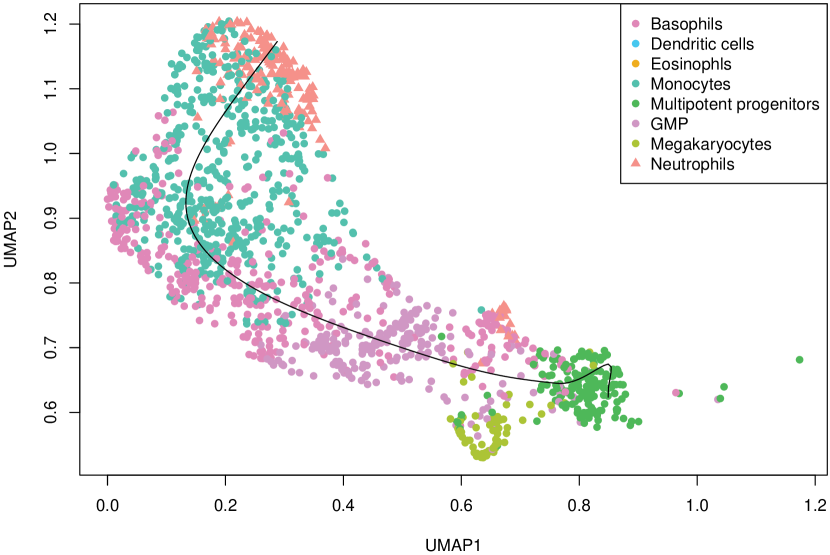

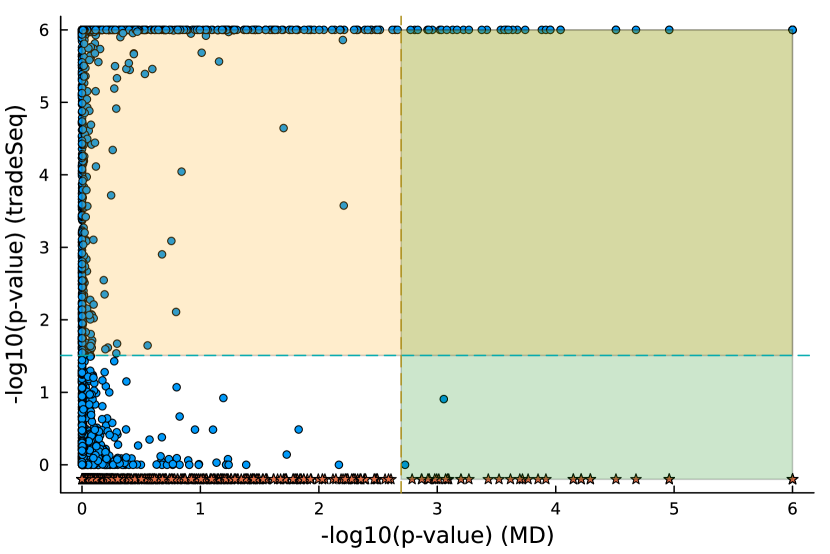

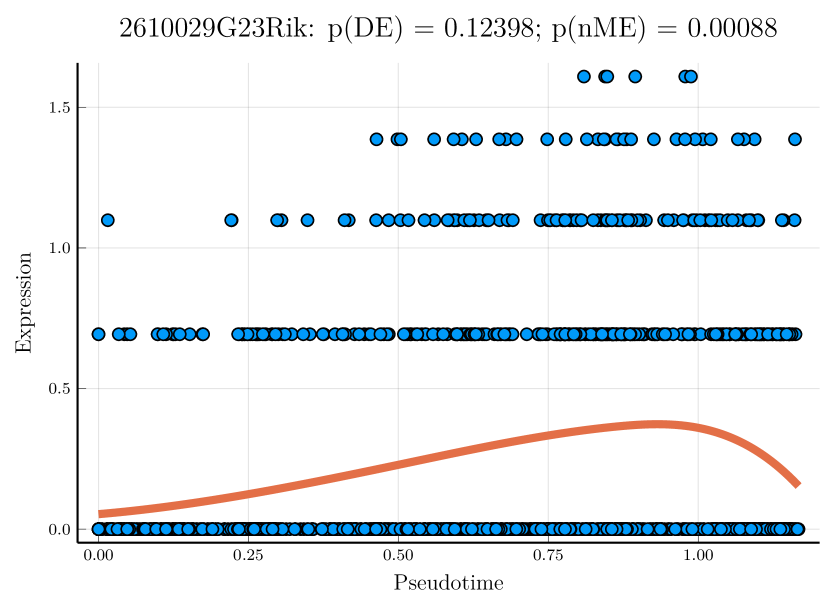

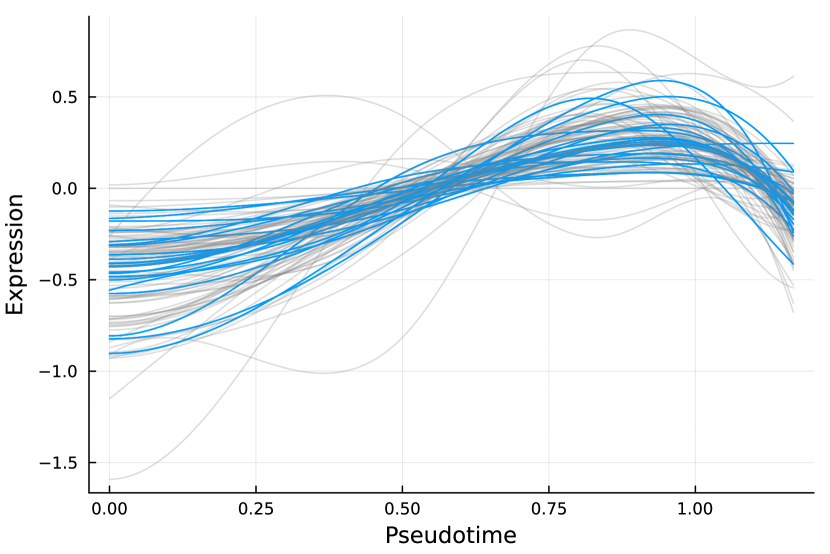

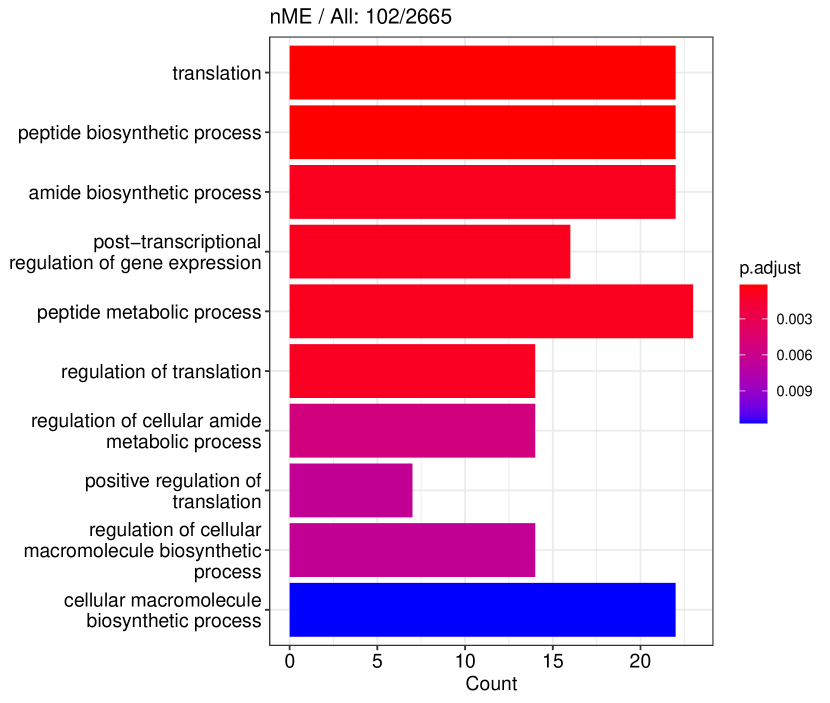

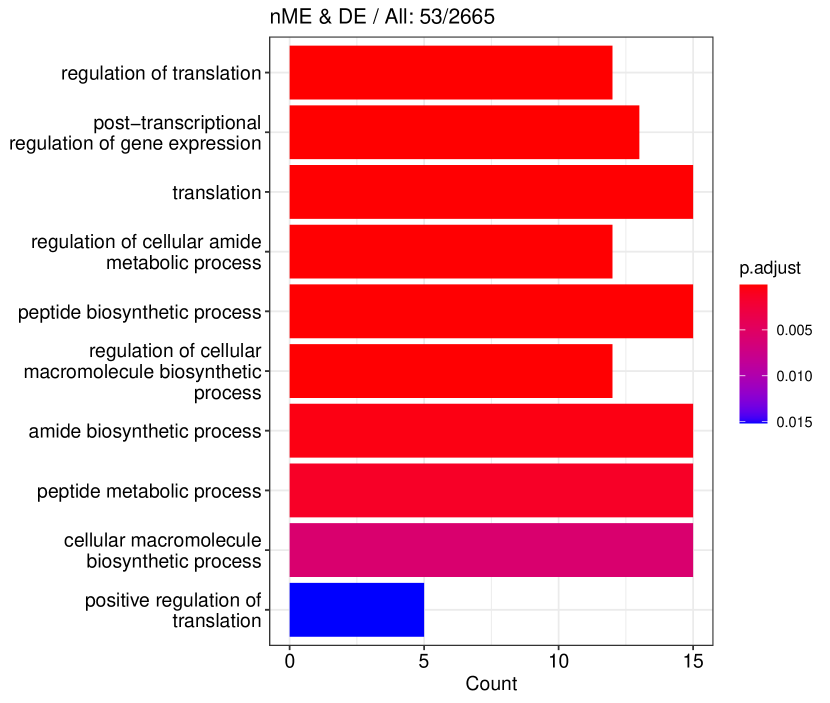

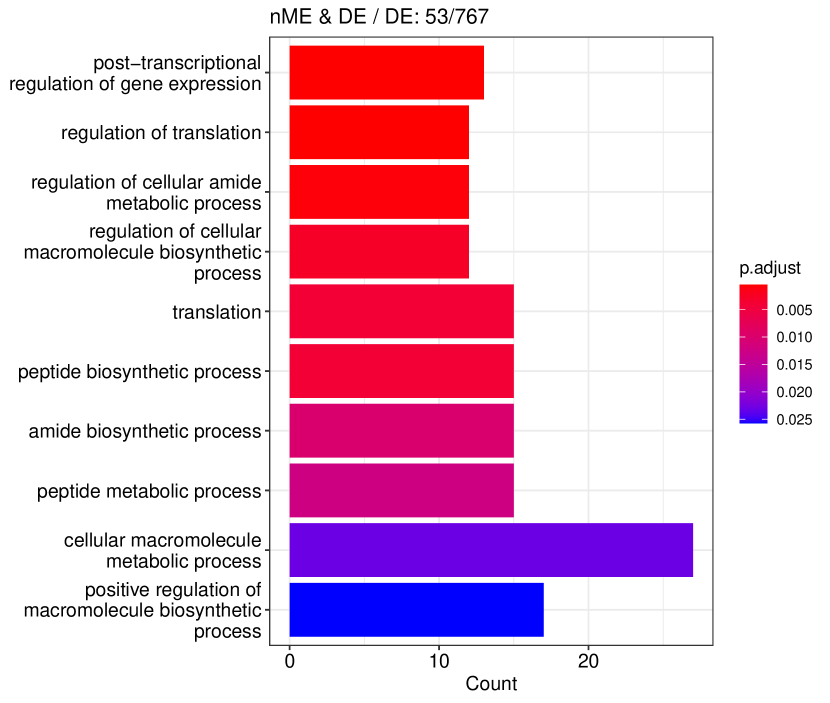

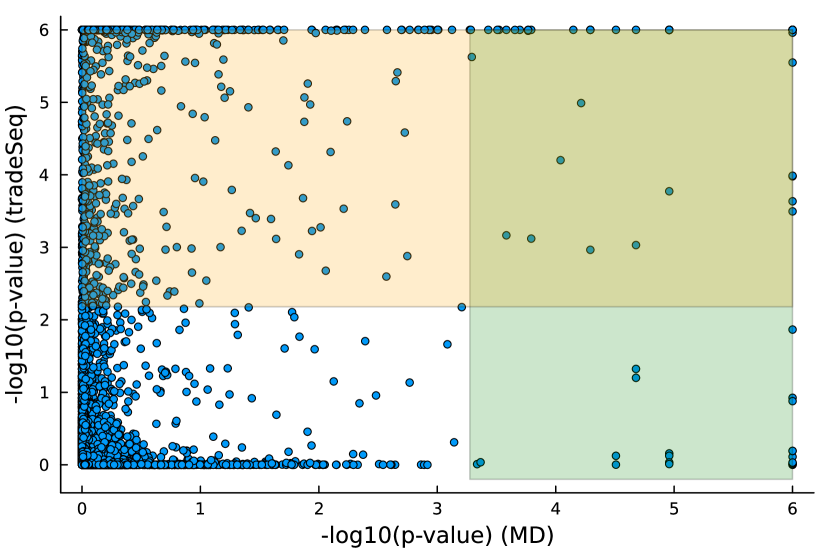

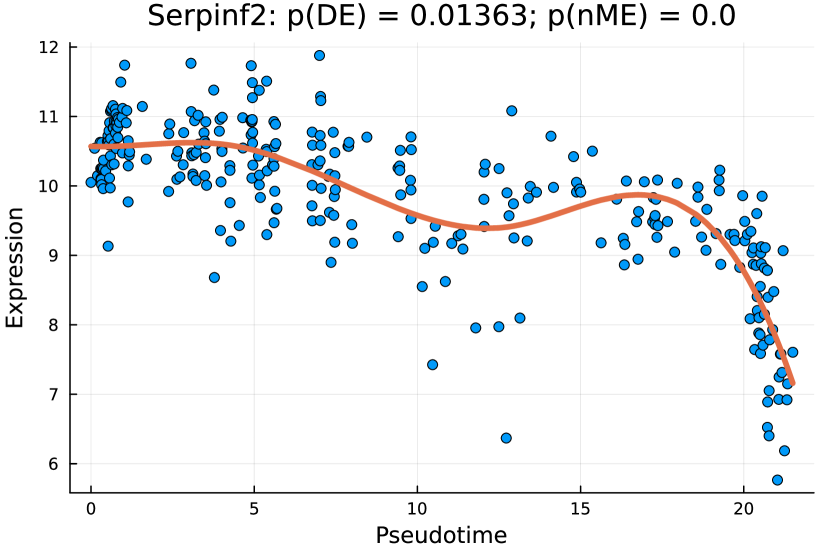

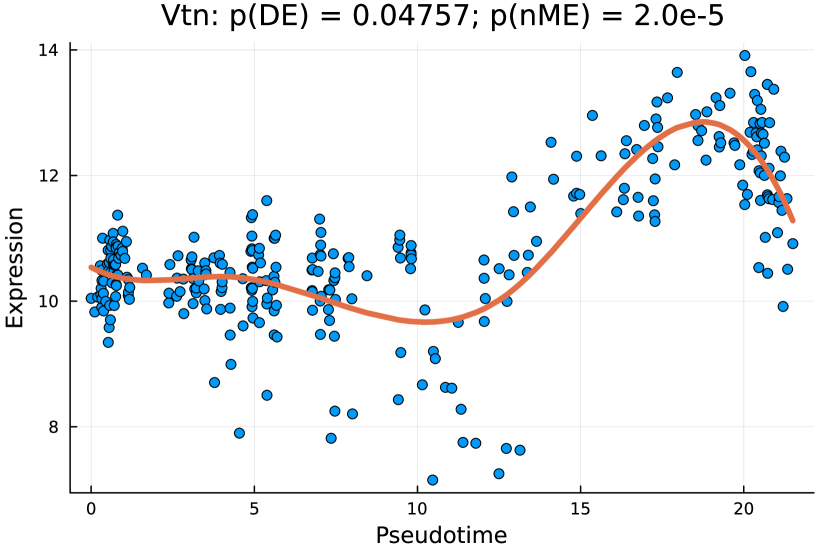

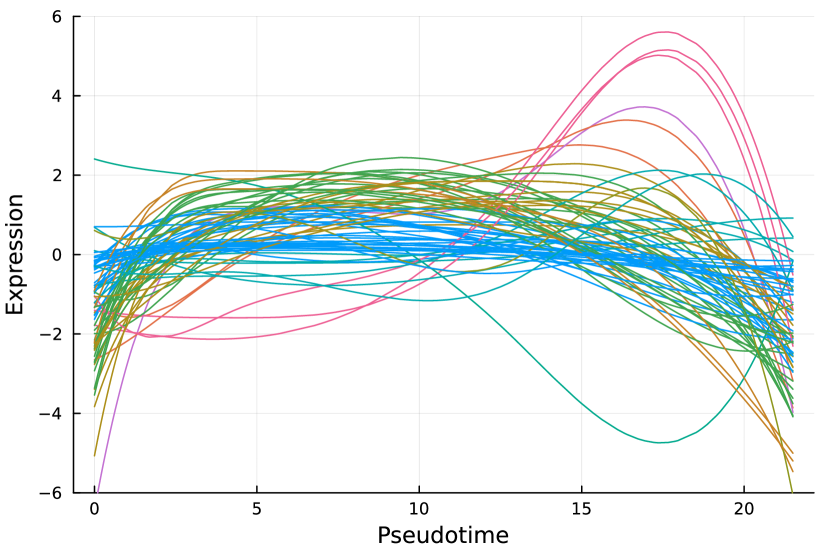

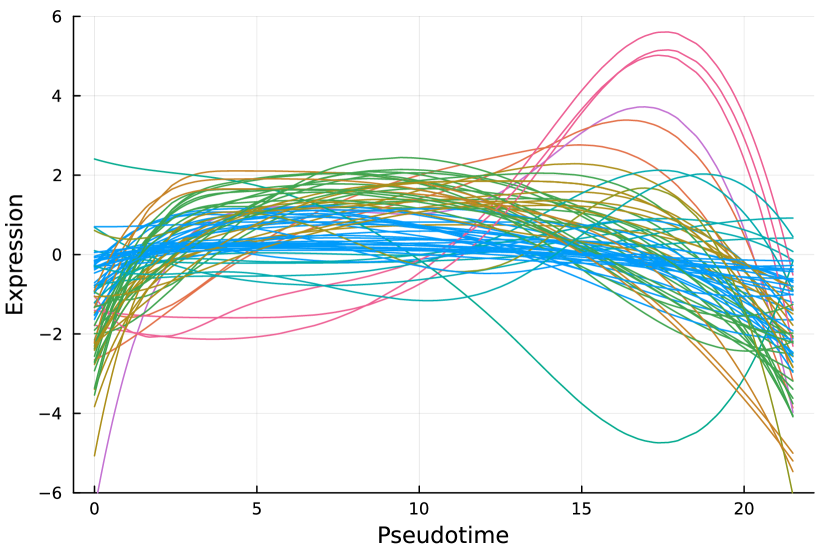

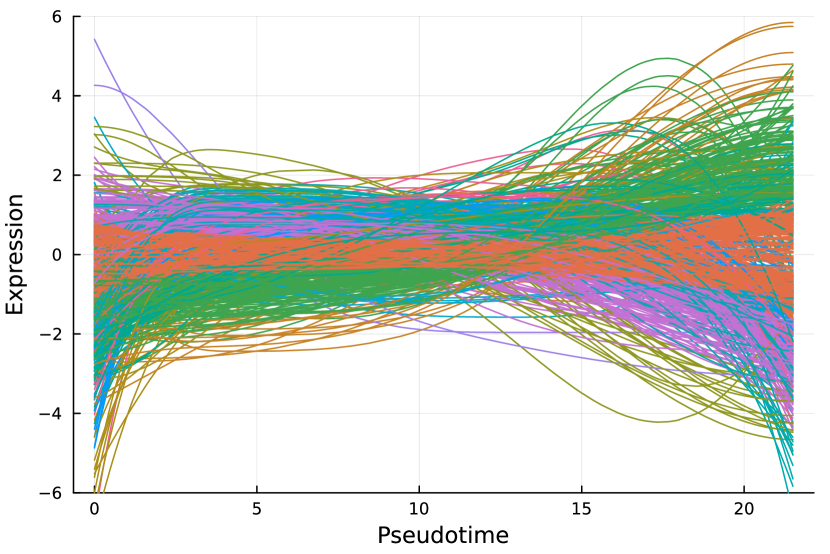

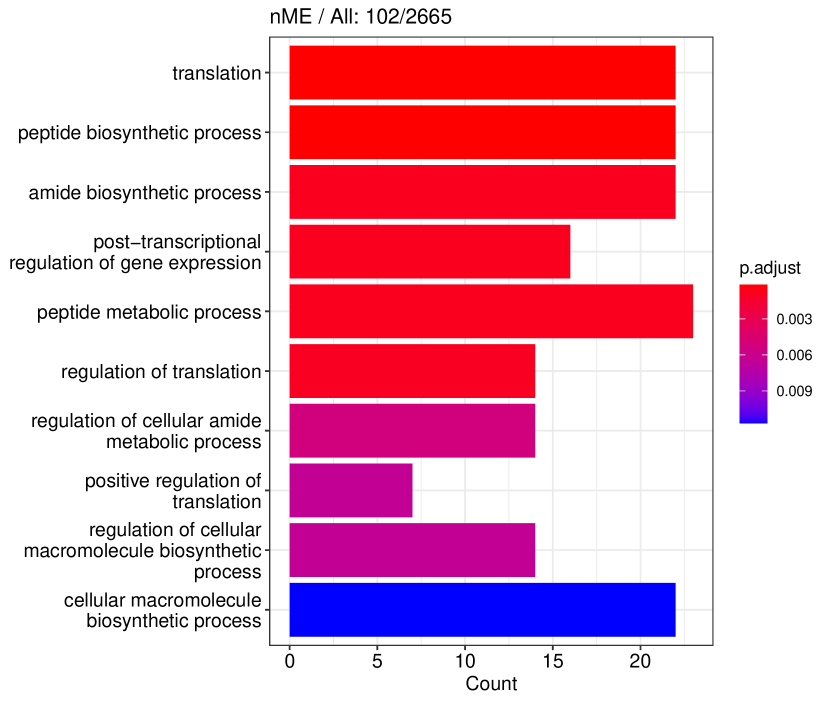

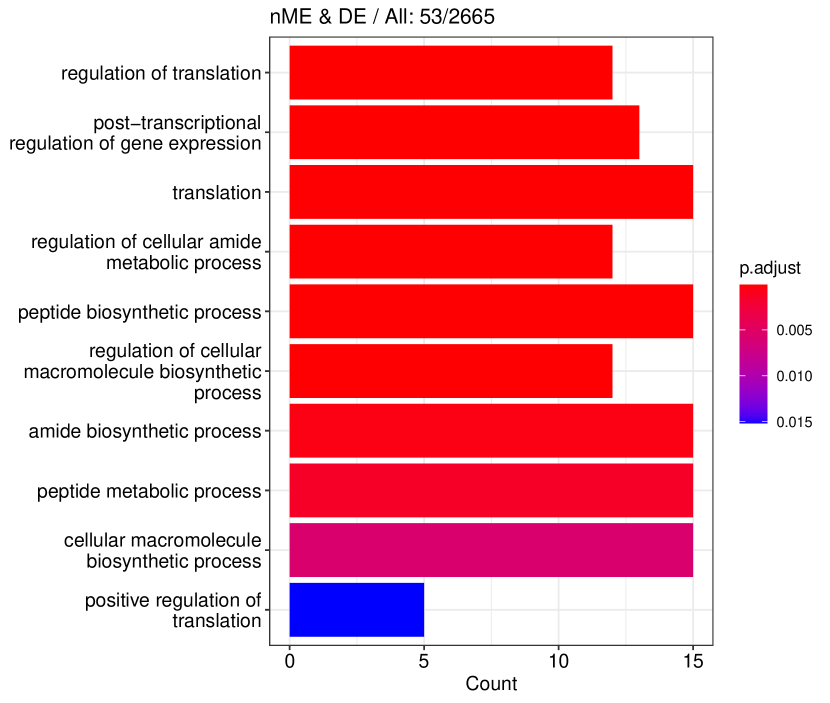

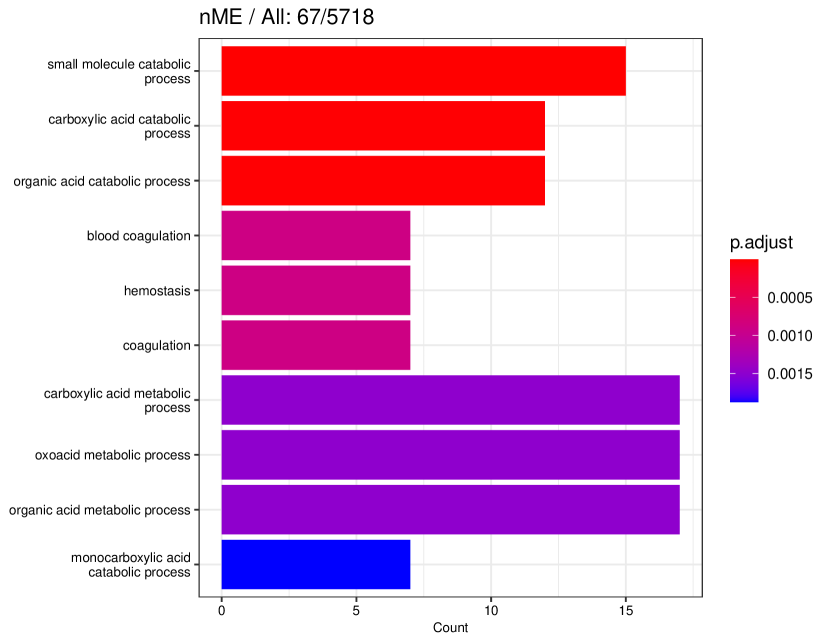

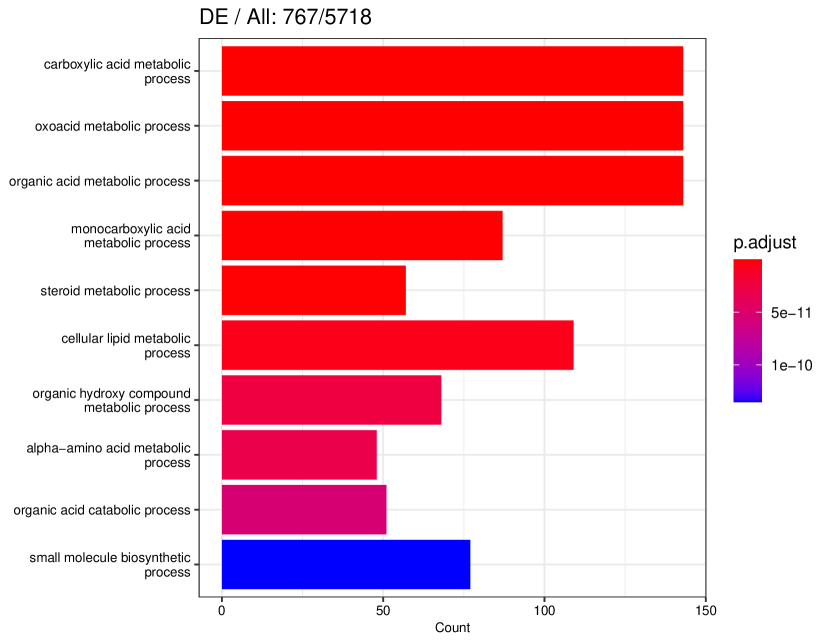

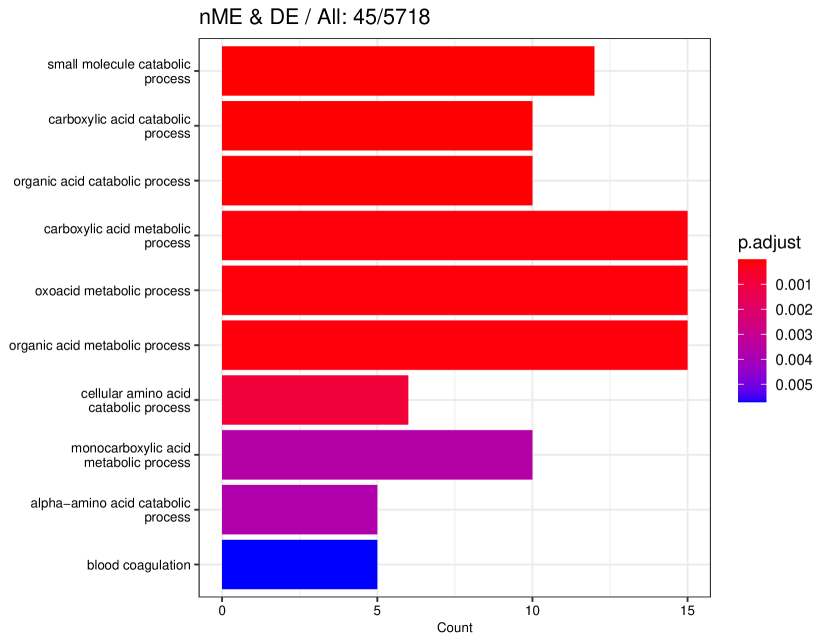

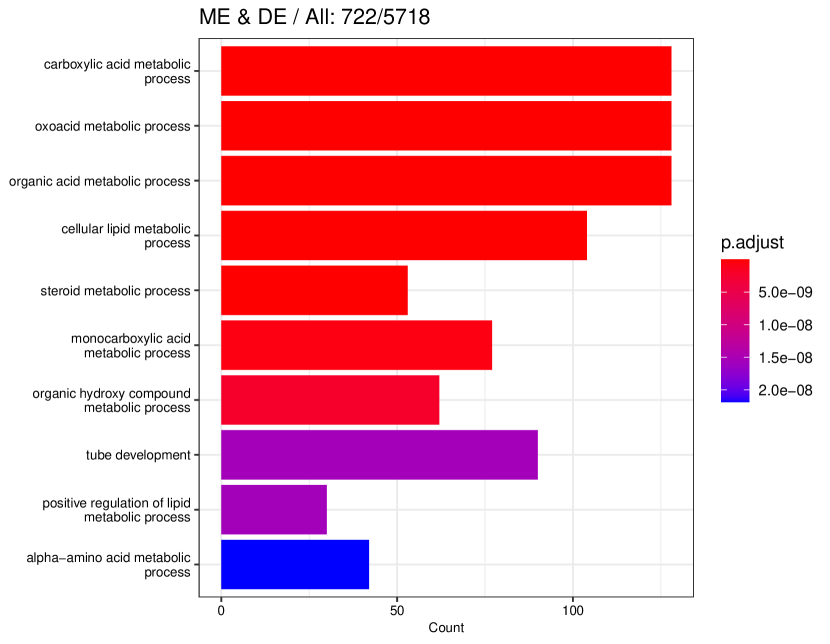

We studied the leukocyte lineage of the mouse bone marrow data set [16], which consists of the expression measurements of 3004 genes at 1474 pseudotime points. Figure 5(a) shows the reduced two-dimensional representation of the data using uniform manifold approximation and projection (UMAP) [15]. Eight cell types are denoted with different colors and shapes. The solid curve is the pseudotime axis, which starts from the cell type Multipotent progenitors at the bottom right and ends at the cell type Neutrophils at the top left. Note that although a non-monotone pattern is necessarily a DE pattern (it cannot be constant), we do not perform the monotonicity test in a two-step manner, i.e., first finding DE genes and then testing monotonicity only among them. Instead, for each gene, we test independently whether it is a DE gene and whether it is an nME gene. Figure 5(b) displays the paired p-values on a logarithmic scale, where the dashed lines denote the cutoffs determined by the BH procedure. As a result, we identified 109 nME genes (102 after GO mapping, since 7 genes are not mapped in the GO database) and 767 DE genes, of which 53 genes are in common. These numbers are also noted in the titles of the GO bar plots in Figure 6. Figure 5(c) illustrates the fitted trajectory for gene 2610029G23Rik, which is identified as an nME gene but not a DE gene, i.e., it lies in the bottom right green block of Figure 5(b).

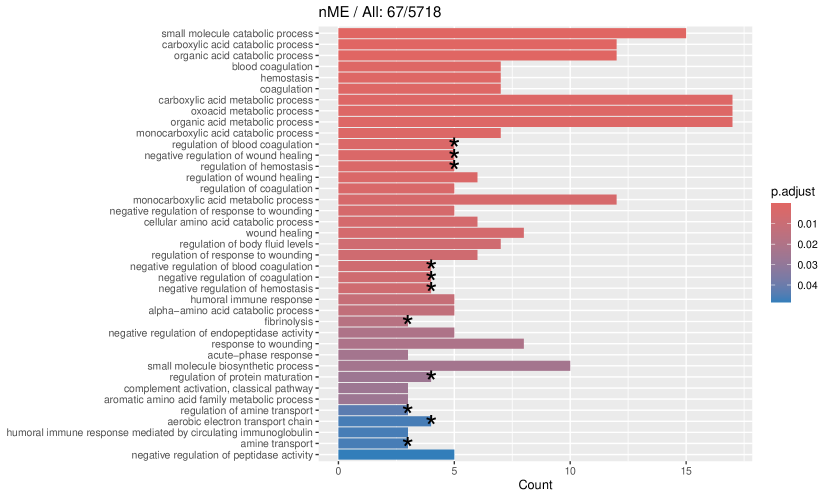

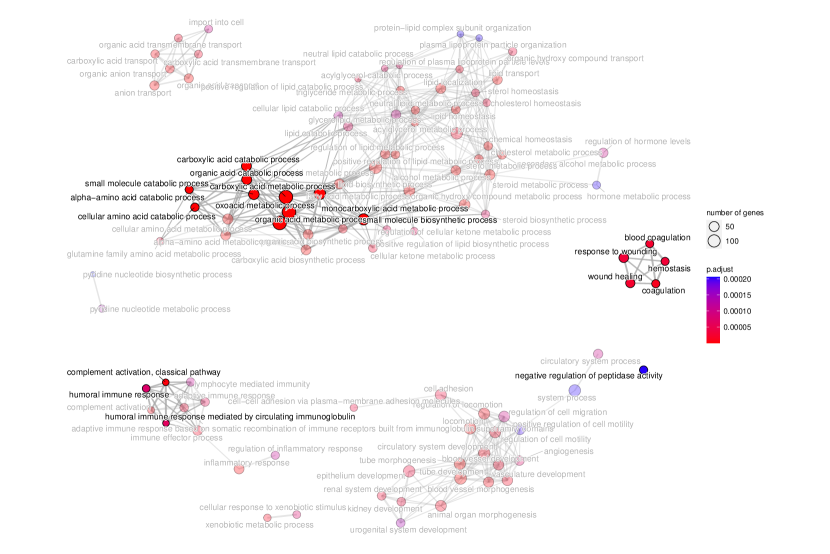

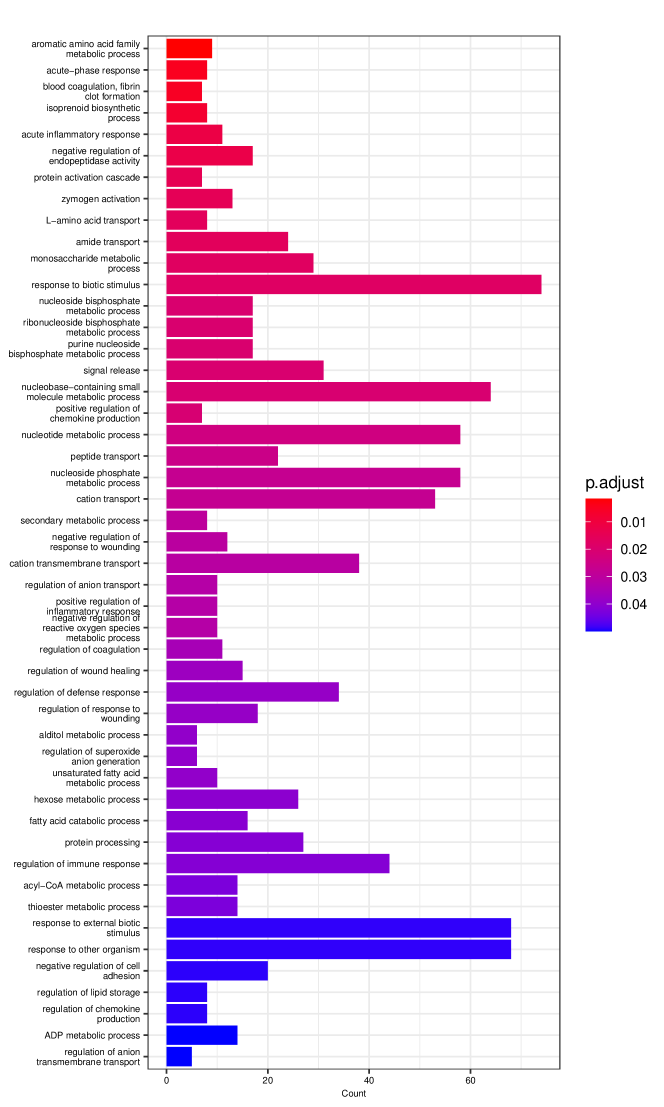

Figure 6 displays the GO enrichment analysis of the DE genes and nME genes using the R package clusterProfiler [24]. Figures 6(a), 6(b) and 6(c) take all 3004 genes as the reference gene list; because some genes are not mapped in the GO database, only 2665 genes remain after filtering. We cannot find significant GO terms for the DE gene list, so Figure 6(b) is deliberately left blank. In contrast, we can identify several significant GO terms for the nME gene list. Figures 6(c) and 6(d) display the results for the intersection of the DE and nME gene lists, with the whole gene list or the DE gene list as the reference, respectively. Both identify significant GO terms. One possible reason why the DE genes fail to identify significant GO terms is that the DE gene list is too broad: different subcategories of DE genes (non-monotonic patterns form one such subcategory) may contribute to different GO terms, and genes unrelated to a given term enlarge the analyzed gene set and thereby weaken the enrichment signal for that term. Another potential reason is that the tradeSeq test is not robust enough: as shown by the star symbols in Figure 5(b), tradeSeq returns many NA values, a known issue discussed in its GitHub repository (https://github.com/statOmics/tradeSeq/issues/209).

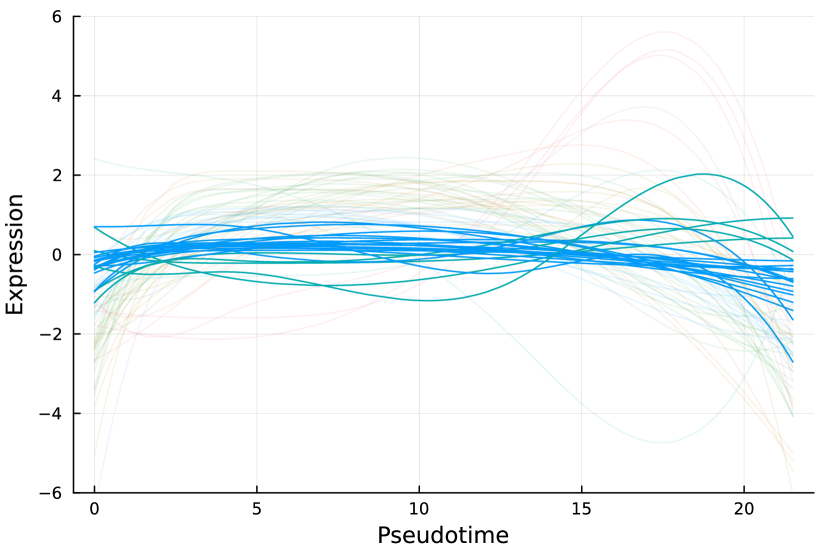

We can further check whether the pattern of trajectory curves in the enriched GO terms agrees with the biological mechanism. Take the first GO term “translation” as an example. Figure 5(d) displays the trajectory curves of the 109 nME genes along the pseudotime, with the curves of the 22 genes annotated to the GO term “translation” highlighted. These genes exhibit a coherent pattern: expression first increases and then decreases. Mapping the pseudotime axis in Figure 5(d) back to Figure 5(a), the pattern implies that gene expression increases as cells develop from Multipotent progenitors to Monocytes, and that cells evolve toward Neutrophils roughly after expression reaches its peak. This behavior is consistent with the biological understanding that specialized cell types (here Neutrophils) may reduce their rates of translation (and hence protein synthesis), since their structure and function are relatively stable.

7.2 nME genes can fine-locate GO terms when DE genes identify too many terms

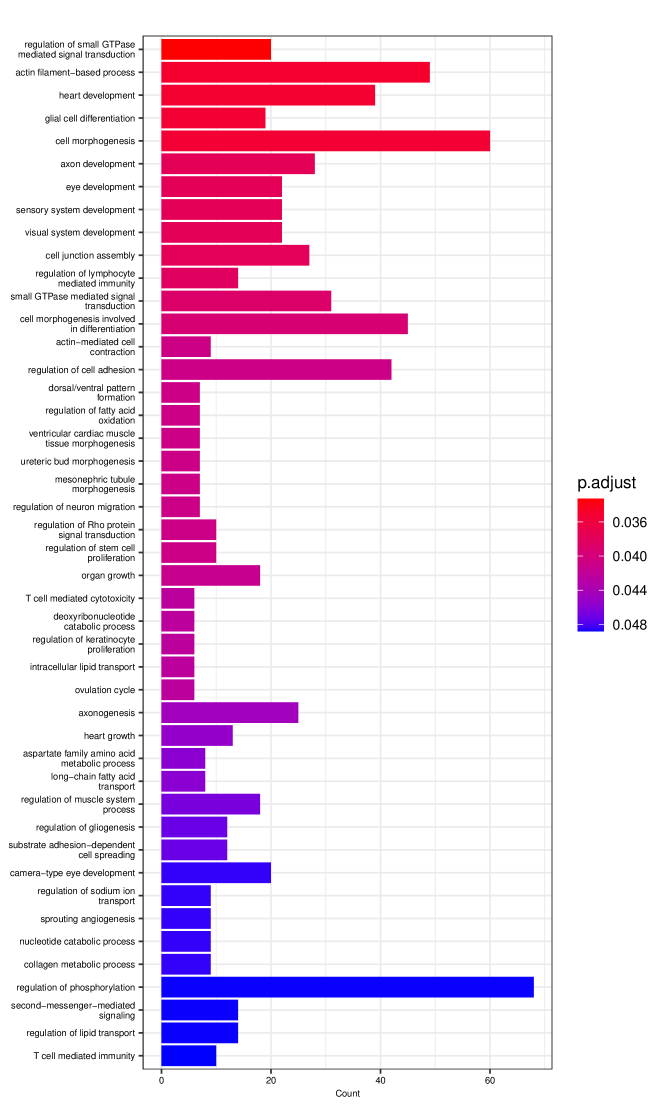

In some scenarios, although GO enrichment analysis can identify significant GO terms given the DE genes, nME genes can further fine-locate GO terms by focusing on a small but significant set of them. We analyzed the cholangiocyte lineage from the mouse liver data studied in [8] to demonstrate this advantage of nME genes. The dataset contains 6038 genes and 308 pseudotime points.

tradeSeq identifies 767 DE genes (801 before removing genes not mapped in GO), and the monotonicity test identifies 67 nME genes (69 before removing genes not mapped in GO), of which 45 genes are in common. For the 767 DE genes, we identify 439 significant GO terms, whereas for the 67 nME genes, we find 39 significant GO terms; 28 GO terms are shared by the two sets. Figure 7(a) displays all 39 GO terms enriched in the nME genes, where the star symbol indicates GO terms not shared by the DE genes. Figure 7(b) shows the enrichment map constructed from the GO terms of the DE genes, in which the GO terms shared with the nME genes are highlighted. In the enrichment map, an edge connects two GO terms if they share genes, so mutually overlapping GO terms tend to cluster together, making it easy to identify functional modules. The shared GO terms mainly fall into two clusters: one is isolated from the others on the right side and forms a pentagon shape with the keyword “coagulation”; the other is located at the left corner of the biggest cluster and is related to “catabolic process”. In other words, nME genes can help fine-locate GO terms, which may save researchers from checking the many GO terms returned by the DE genes.
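The edge rule of the enrichment map (two GO terms are connected when they share at least one gene) can be sketched as follows, together with the connected components that appear as visual clusters. This only illustrates the rule as stated in the text; it is not the routine that drew Figure 7(b), which may weight or threshold edges differently. The function name is hypothetical, and the term and gene names in the example are toy placeholders, not real GO annotations.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple


def enrichment_map(term_genes: Dict[str, Set[str]]) -> Tuple[List[Tuple[str, str]], List[List[str]]]:
    """Edges between terms sharing at least one gene, plus connected components."""
    terms = sorted(term_genes)
    # An edge whenever the two gene sets intersect.
    edges = [(a, b) for a, b in combinations(terms, 2) if term_genes[a] & term_genes[b]]
    adjacency: Dict[str, Set[str]] = {t: set() for t in terms}
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)
    # Depth-first search to collect connected components (functional modules).
    components: List[List[str]] = []
    seen: Set[str] = set()
    for start in terms:
        if start in seen:
            continue
        seen.add(start)
        stack, component = [start], []
        while stack:
            term = stack.pop()
            component.append(term)
            for neighbor in adjacency[term]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        components.append(sorted(component))
    return edges, components


toy = {"T1": {"g1", "g2"}, "T2": {"g2", "g3"}, "T3": {"g4"}, "T4": {"g3", "g5"}}
edges, modules = enrichment_map(toy)
```

Here T1, T2 and T4 form one module through chained overlaps, while T3 stays isolated, which is the kind of separation visible for the “coagulation” cluster.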

We further check the new GO terms enriched in the nME genes, which are marked with the star symbol in Figure 7(a). These new GO terms may be contributed by genes that are not identified as DE genes. For example, the GO term “regulation of blood coagulation” contains 5 genes {F11, Kng2, Serpinc1, Serpinf2, Vtn} in the nME gene set, of which the first three are also in the DE gene set, while the last two are only in the nME gene set. Figures 8(c) and 8(d) display the expression trajectories along pseudotime fitted by our monotone decomposition technique, with the p-values noted in the title of each figure. We observe that the p-value of the DE test is not as significant as that of the nME test; in other words, these p-value pairs lie in the bottom right green block of Figure 8(a). Using the same data at the hepatoblast stage (an earlier stage than the cholangiocyte stage considered here), [8] identified 68 differentially variable (DV) genes, i.e., genes whose expression variance (rather than the mean expression, as for DE and nME genes) changes along the pseudotime. Interestingly, Serpinf2 and Vtn are the only two genes that belong to both the 68-gene DV set and the 69-gene nME set. The coexistence of DV and nME characteristics in these genes suggests intricate and dynamic expression patterns, which may be of particular biological interest and may reflect complex regulatory mechanisms governing their expression.

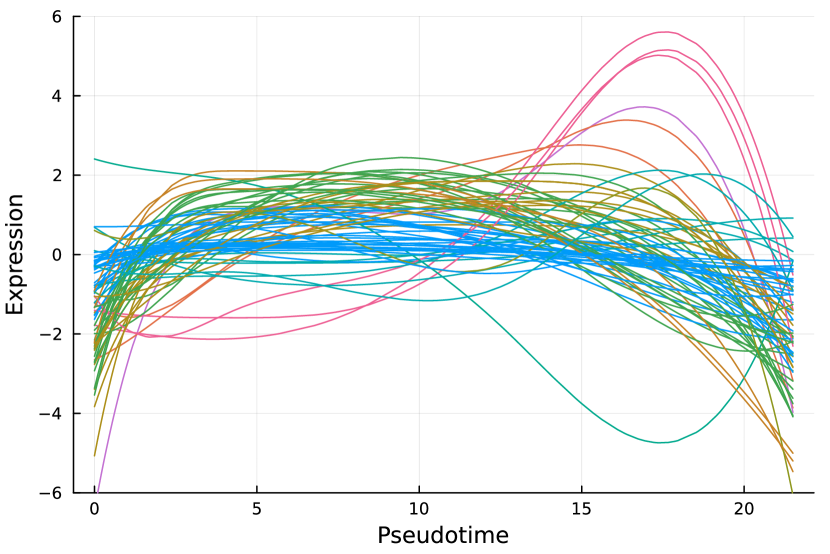

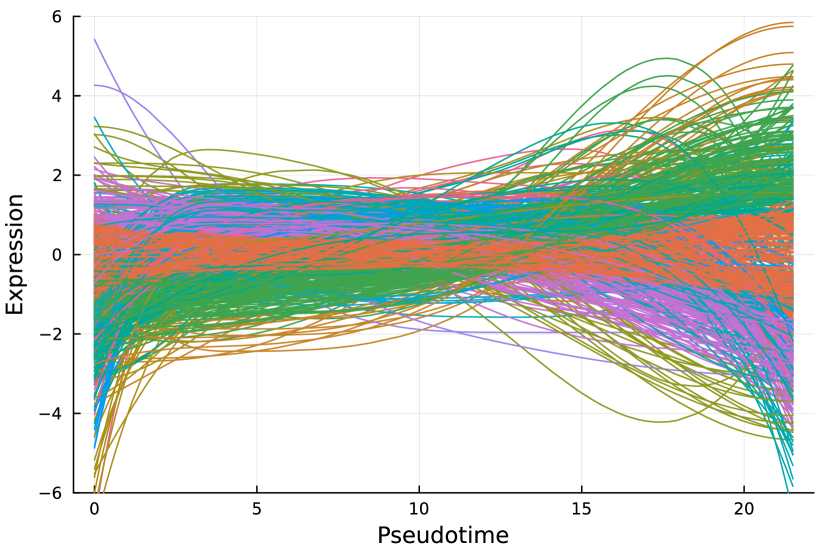

Furthermore, we check all genes that are identified as nME but not DE. Figure 8(b) displays the trajectory curves of all nME genes, where the curves of nME-but-not-DE genes are highlighted and the others are transparent. To facilitate comparison, all curves are centered, and different colors denote different clusters (see Section 7.3). Note that the highlighted curves vary relatively little compared with the transparent ones, so different tests may reach different conclusions for them. This may explain why some non-DE genes are identified as nME genes, even though one would naturally expect nME genes to be a subset of DE genes.

7.3 nME genes can highlight non-monotonic patterns while DE genes blur them

Clustering genes by their fitted expression patterns can reveal intriguing insights for biologists. However, a potential limitation of clustering DE genes is that clustering methods tend to merge purely monotonic patterns with somewhat intricate non-monotonic patterns (e.g., Figure 5 of [21]). To keep non-monotonic patterns distinct, one possible direction is to tailor the clustering approach, for example, by constructing more suitable similarity measures. A more direct approach is to focus only on the non-monotonic patterns of nME genes.

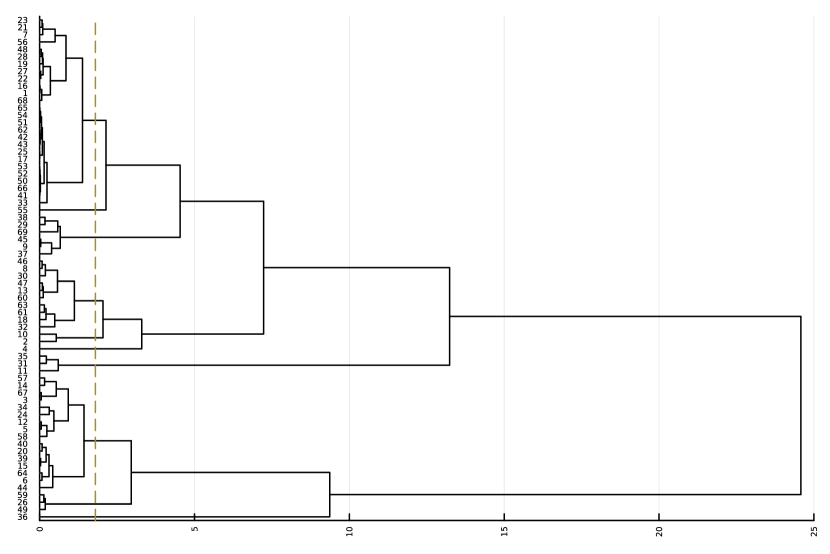

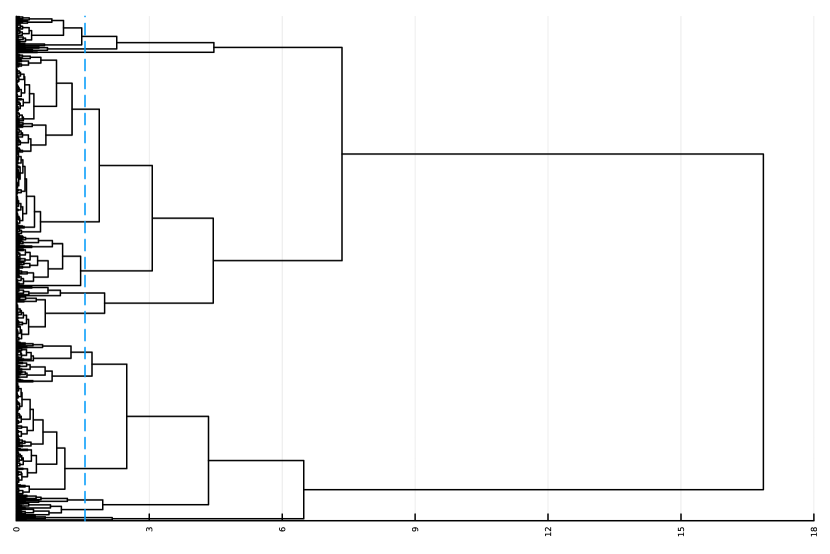

Here, we consider the liver dataset in Section 7.2. Figure 9(a) shows the dendrogram from hierarchical clustering with complete linkage on the 69 nME genes. We cut the dendrogram at height 2.0 to obtain 10 clusters with clear patterns. Figure 9(b) presents the trajectory curves of those 69 nME genes, where different colors denote different clusters. Representative clusters are highlighted in Figures 9(c) and 9(d): Figure 9(c) displays a peak on the right side, while Figure 9(d) shows a peak on the left side.

For comparison, we perform hierarchical clustering on the DE genes with a comparable cutoff of 1.5 and identify 12 clusters, as shown in Figure 9(e). The trajectory curves of the 801 DE genes, with different colors representing different clusters, are displayed in Figure 9(f). Notably, the presence of monotonic patterns partly conceals the underlying wiggly patterns, as evidenced by Figure 9(g), which combines non-monotonic patterns similar to those in Figure 9(c) with numerous ascending curves. Similarly, Figure 9(h) mixes the non-monotonic pattern observed in Figure 9(d) with other curves, illustrating the difficulty of distinguishing these patterns.
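The cluster-extraction step used in this subsection, complete-linkage hierarchical clustering cut at a fixed height, can be sketched in plain Python. Because the text does not specify the representation or metric, we assume that each gene is represented by its fitted curve evaluated on a common pseudotime grid and that curves are compared by Euclidean distance; the helper names are hypothetical. In practice one would call `scipy.cluster.hierarchy.linkage(X, method="complete")` followed by `fcluster(Z, t=cutoff, criterion="distance")`, which should give the same partition up to label numbering (and possibly tie-breaking).

```python
import math
from itertools import combinations
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def complete_linkage_clusters(curves: Sequence[Sequence[float]], cutoff: float) -> List[int]:
    """Cluster labels from agglomerative complete-linkage clustering cut at height `cutoff`."""
    n = len(curves)
    dist = [[euclidean(curves[i], curves[j]) for j in range(n)] for i in range(n)]
    clusters = [[i] for i in range(n)]
    while len(clusters) > 1:
        # Complete linkage: cluster distance = largest pairwise distance between members.
        best = None
        for a, b in combinations(range(len(clusters)), 2):
            d = max(dist[i][j] for i in clusters[a] for j in clusters[b])
            if best is None or d < best[0]:
                best = (d, a, b)
        height, a, b = best
        if height > cutoff:
            break  # merge heights never decrease under complete linkage, so stop here
        clusters[a].extend(clusters[b])
        del clusters[b]  # b > a, so index a is unaffected
    labels = [0] * n
    for label, members in enumerate(clusters):
        for i in members:
            labels[i] = label
    return labels


curves = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0], [50.0, 50.0]]
labels = complete_linkage_clusters(curves, cutoff=2.0)
```

With these toy curves the cut at 2.0 yields three clusters; a very large cutoff would merge everything into one.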

Clusters of purely non-monotonic patterns can also lead to significant functional annotations. For example, all three genes in Figure 9(c) are significantly annotated to the GO terms “defense response to other organism”, “response to external biotic stimulus”, and “response to other organism”, each with an FDR-adjusted p-value of 0.00278.

8 Discussions

We formulate the monotone decomposition with monotone splines. It can serve as a fitting method when we sum up two monotone components, and it can be used to conduct a test of monotonicity by checking whether one component is constant.
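On sampled values, the decomposition behind both uses has an elementary form: accumulate the positive increments into a non-decreasing component and the negative increments into a non-increasing one. The sketch below illustrates this discrete identity only. It is not the proposed estimator, which fits each component with monotone B-splines, and the decomposition is not unique (any non-decreasing sequence can be added to one component and subtracted from the other). The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def monotone_decompose(values: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Split samples f(x_1), ..., f(x_n) into u (non-decreasing) + d (non-increasing)."""
    u = [float(values[0])]  # u starts at f(x_1)
    d = [0.0]               # d starts at 0
    for prev, cur in zip(values, values[1:]):
        step = cur - prev
        u.append(u[-1] + max(step, 0.0))  # collect upward moves
        d.append(d[-1] + min(step, 0.0))  # collect downward moves
    return u, d


def is_nondecreasing(values: Sequence[float], tol: float = 0.0) -> bool:
    """Noise-free check: non-decreasing exactly when the non-increasing part stays constant."""
    _, d = monotone_decompose(values)
    return all(abs(x - d[0]) <= tol for x in d)


u, d = monotone_decompose([0.0, 2.0, 1.0, 3.0])
```

For noisy observations, the exact constancy check is replaced by the statistical test of whether the fitted component is constant, as described in the paper.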

As a fitting method, monotone decomposition with cubic splines improved performance in our experiments, especially in high-noise cases, and the improvement for monotone functions can be explained theoretically. Similar phenomena have been observed for monotone decomposition with smoothing splines. However, there are also some limitations:

- The cross-validation procedure for simultaneously tuning two parameters is computationally expensive. The generative bootstrap sampler (GBS) proposed by [19] might be an alternative to speed up the cross-validation step.

- Currently, the theoretical guarantees hold only for monotone functions. Extending them to general functions is an important direction for future work.

For the test of monotonicity, the proposed statistics based on monotone decomposition show competitive performance and, in several settings, clearly outperform existing approaches.

We also apply the fitting and testing based on monotone decomposition to investigate the monotonic and non-monotonic trajectory patterns in several scRNA-seq datasets. In parallel with the conventional analysis of differentially expressed (DE) genes, we propose the concept of non-monotonically expressed (nME) genes, which might lead to new biological insights.

Acknowledgement

Lijun Wang was supported by the Hong Kong Ph.D. Fellowship Scheme from the University Grants Committee. Hongyu Zhao was supported in part by NIH grant P50 CA196530. JSL was supported in part by the NSF DMS/NIGMS 2 Collaborative Research grant (R01 GM152814).

Supplementary Material

The Supplementary Material contains additional simulation results and technical proofs of propositions.

References

- [1] A. W. Bowman, M. C. Jones and I. Gijbels “Testing Monotonicity of Regression” In Journal of Computational and Graphical Statistics 7.4, 1998, pp. 489–500 DOI: 10.1080/10618600.1998.10474790

- [2] Elizabeth I. Boyle et al. “GO::TermFinder—Open Source Software for Accessing Gene Ontology Information and Finding Significantly Enriched Gene Ontology Terms Associated with a List of Genes” In Bioinformatics 20.18, 2004, pp. 3710–3715 DOI: 10.1093/bioinformatics/bth456

- [3] Denis Chetverikov “Testing Regression Monotonicity in Econometric Models” In Econometric Theory 35.4, 2019, pp. 729–776 DOI: 10.1017/S0266466618000282

- [4] Hugh A. Chipman, Edward I. George and Robert E. McCulloch “BART: Bayesian Additive Regression Trees” In The Annals of Applied Statistics 4.1 Institute of Mathematical Statistics, 2010, pp. 266–298 DOI: 10.1214/09-AOAS285

- [5] Hugh A. Chipman, Edward I. George, Robert E. McCulloch and Thomas S. Shively “mBART: Multidimensional Monotone BART” In Bayesian Analysis 17.2 International Society for Bayesian Analysis, 2022, pp. 515–544 DOI: 10.1214/21-BA1259

- [6] Russell Davidson and Emmanuel Flachaire “The Wild Bootstrap, Tamed at Last” In Journal of Econometrics 146.1, 2008, pp. 162–169 DOI: 10.1016/j.jeconom.2008.08.003

- [7] Carl De Boor “A Practical Guide to Splines” New York: Springer, 1978

- [8] Shila Ghazanfar et al. “Investigating Higher-Order Interactions in Single-Cell Data with scHOT” In Nature Methods 17.8 Nature Publishing Group, 2020, pp. 799–806 DOI: 10.1038/s41592-020-0885-x

- [9] Subhashis Ghosal, Arusharka Sen and Aad W. van der Vaart “Testing Monotonicity of Regression” In The Annals of Statistics 28.4 Institute of Mathematical Statistics, 2000, pp. 1054–1082 JSTOR: 2673954

- [10] Robert B. Gramacy “Surrogates: Gaussian Process Modeling, Design and Optimization for the Applied Sciences” Chapman Hall/CRC, 2020

- [11] Peter Hall and Nancy E. Heckman “Testing for Monotonicity of a Regression Mean by Calibrating for Linear Functions” In The Annals of Statistics 28.1 Institute of Mathematical Statistics, 2000, pp. 20–39 JSTOR: 2673980

- [12] Trevor Hastie, Robert Tibshirani and Jerome Friedman “The Elements of Statistical Learning: Data Mining, Inference, and Prediction” Springer Science & Business Media, 2009

- [13] Wenpin Hou et al. “A Statistical Framework for Differential Pseudotime Analysis with Multiple Single-Cell RNA-seq Samples” In Nature Communications 14.1 Nature Publishing Group, 2023, pp. 7286 DOI: 10.1038/s41467-023-42841-y

- [14] Jan R Magnus and Heinz Neudecker “Matrix Differential Calculus with Applications in Statistics and Econometrics” John Wiley & Sons, Ltd, 2019 DOI: 10.1002/9781119541219

- [15] Leland McInnes, John Healy, Nathaniel Saul and Lukas Großberger “UMAP: Uniform Manifold Approximation and Projection” In Journal of Open Source Software 3.29, 2018, pp. 861 DOI: 10.21105/joss.00861

- [16] Franziska Paul et al. “Transcriptional Heterogeneity and Lineage Commitment in Myeloid Progenitors” In Cell 163.7, 2015, pp. 1663–1677 DOI: 10.1016/j.cell.2015.11.013

- [17] James O. Ramsay and Bernard W. Silverman “Functional Data Analysis”, Springer Series in Statistics New York, NY: Springer, 2005

- [18] Carl Edward Rasmussen and Christopher K. I. Williams “Gaussian Processes for Machine Learning”, Adaptive Computation and Machine Learning Cambridge, Mass: MIT Press, 2006

- [19] Minsuk Shin, Shijie Wang and Jun S. Liu “Generative Multi-purpose Sampler for Weighted M-estimation” In Journal of Computational and Graphical Statistics, Taylor & Francis, 2023, pp. 1–53 DOI: 10.1080/10618600.2023.2292668

- [20] Dongyuan Song and Jingyi Jessica Li “PseudotimeDE: Inference of Differential Gene Expression along Cell Pseudotime with Well-Calibrated p-Values from Single-Cell RNA Sequencing Data” In Genome Biology 22.1, 2021, pp. 124 DOI: 10.1186/s13059-021-02341-y

- [21] Koen Van den Berge et al. “Trajectory-Based Differential Expression Analysis for Single-Cell Sequencing Data” In Nature Communications 11.1 Nature Publishing Group, 2020, pp. 1201 DOI: 10.1038/s41467-020-14766-3

- [22] Jianqiang C. Wang and Mary C. Meyer “Testing the Monotonicity or Convexity of a Function Using Regression Splines” In The Canadian Journal of Statistics / La Revue Canadienne de Statistique 39.1 [Statistical Society of Canada, Wiley], 2011, pp. 89–107 JSTOR: 41304465

- [23] Lijun Wang, Xiaodan Fan, Huabai Li and Jun S. Liu “Monotone Cubic B-Splines” arXiv, 2023 DOI: 10.48550/arXiv.2307.01748

- [24] Guangchuang Yu et al. “clusterProfiler: A Universal Enrichment Tool for Interpreting Omics Data”, Bioconductor version: Release (3.17), 2023 DOI: 10.18129/B9.bioc.clusterProfiler

Appendix A More Simulation Results

A.1 Candidate Kernels

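We generate random functions with a kernel $k(x, x')$, including the Squared Exponential (SE) kernel, the Rational Quadratic (RQ) kernel, the Matérn (Mat) kernel and the Periodic kernel [18]:

$$
\begin{aligned}
k_{\mathrm{SE}}(r) &= \exp\left(-\frac{r^2}{2\ell^2}\right), &
k_{\mathrm{RQ}}(r) &= \left(1 + \frac{r^2}{2\alpha\ell^2}\right)^{-\alpha},\\
k_{\mathrm{Mat}}(r) &= \frac{2^{1-\nu}}{\Gamma(\nu)}\left(\frac{\sqrt{2\nu}\,r}{\ell}\right)^{\nu} K_{\nu}\left(\frac{\sqrt{2\nu}\,r}{\ell}\right), &
k_{\mathrm{Per}}(r) &= \exp\left(-\frac{2\sin^2(\pi r / p)}{\ell^2}\right),
\end{aligned}
$$

where $r = |x - x'|$, $\ell, \alpha, \nu, p > 0$ are the parameters, and $K_\nu$ is a modified Bessel function of the second kind. In particular, “Mat12” refers to the Matérn kernel with $\nu = 1/2$, and similarly, “Mat32” and “Mat52” indicate $\nu = 3/2$ and $\nu = 5/2$, respectively. Any additional parameters are appended to the kernel name; for example, “SE-1” represents the Squared Exponential kernel with $\ell = 1$, “Mat12-1” denotes the Matérn kernel with $\nu = 1/2$ and $\ell = 1$, and “RQ-0.1-0.5” is the Rational Quadratic kernel with parameters 0.1 and 0.5.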
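The kernels above can be written directly as functions of r = |x − x'|. This sketch follows the textbook parameterization of [18]; whether the authors' code uses exactly the same scaling (for example, an extra variance factor) is an assumption, as is reading the number appended to a kernel name as the length-scale ℓ. For the three Matérn cases used here, the Bessel-function form reduces to well-known closed forms, so no special functions are needed. All function names are hypothetical.

```python
import math


def se_kernel(r: float, ell: float) -> float:
    """Squared Exponential: exp(-r^2 / (2 ell^2))."""
    return math.exp(-r ** 2 / (2 * ell ** 2))


def rq_kernel(r: float, ell: float, alpha: float) -> float:
    """Rational Quadratic: (1 + r^2 / (2 alpha ell^2))^(-alpha)."""
    return (1 + r ** 2 / (2 * alpha * ell ** 2)) ** (-alpha)


def matern_kernel(r: float, ell: float, nu: float) -> float:
    """Matérn kernel via its closed forms for nu in {1/2, 3/2, 5/2}."""
    if nu == 0.5:
        return math.exp(-r / ell)
    if nu == 1.5:
        s = math.sqrt(3) * r / ell
        return (1 + s) * math.exp(-s)
    if nu == 2.5:
        s = math.sqrt(5) * r / ell
        return (1 + s + s ** 2 / 3) * math.exp(-s)
    raise ValueError("only nu = 0.5, 1.5, 2.5 are implemented")


def periodic_kernel(r: float, ell: float, period: float) -> float:
    """Periodic: exp(-2 sin^2(pi r / period) / ell^2)."""
    return math.exp(-2 * math.sin(math.pi * r / period) ** 2 / ell ** 2)
```

Under the stated assumption, “Mat32-0.1” would correspond to `matern_kernel(r, ell=0.1, nu=1.5)`.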

A.2 Random Function Generation

A random function with kernel $k$ is generated as follows:

1. Generate $n$ random points $x_1, \ldots, x_n$.
2. Calculate the covariance matrix $\Sigma$ based on kernel $k$, $\Sigma_{ij} = k(x_i, x_j)$. In practice, add a small constant $\epsilon$ to the diagonal of $\Sigma$ to prevent a numerically ill-conditioned matrix [10].
3. Generate a random Gaussian vector with the above covariance matrix, $(f(x_1), \ldots, f(x_n))^\top \sim N(0, \Sigma + \epsilon I)$.
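The three steps above can be sketched with NumPy as follows. Several choices here are assumptions rather than details from the text: the points are drawn uniformly on [0, 1] (and sorted for plotting), the Gaussian vector has mean zero, the SE kernel stands in for any of the kernels, and the jitter `1e-6` is a hypothetical value, not the constant used by the authors. The correlated draw uses the Cholesky factor of the jittered covariance.

```python
import numpy as np


def se_cov(x: np.ndarray, ell: float) -> np.ndarray:
    """Squared Exponential covariance matrix K[i, j] = exp(-(x_i - x_j)^2 / (2 ell^2))."""
    r = x[:, None] - x[None, :]
    return np.exp(-(r ** 2) / (2 * ell ** 2))


def random_function(n: int, ell: float, jitter: float, rng: np.random.Generator):
    """Draw (x, f) with f ~ N(0, K + jitter * I), following steps 1-3."""
    # Step 1 (assumption): n points drawn uniformly on [0, 1], sorted for plotting.
    x = np.sort(rng.uniform(0.0, 1.0, size=n))
    # Step 2: kernel covariance plus a small diagonal term for numerical stability.
    cov = se_cov(x, ell) + jitter * np.eye(n)
    # Step 3: correlated Gaussian draw via the Cholesky factor, cov = L @ L.T.
    L = np.linalg.cholesky(cov)
    f = L @ rng.standard_normal(n)
    return x, f


rng = np.random.default_rng(2024)
x, f = random_function(100, ell=1.0, jitter=1e-6, rng=rng)  # an "SE-1"-style draw
```

Without the diagonal term, the SE covariance of 100 closely spaced points is numerically singular and the Cholesky factorization would typically fail, which is the ill-conditioning the jitter guards against.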

A.3 Monotone Decomposition with Cubic Splines

| curve | noise level | MSFE (CubicSpline) | MSFE (MonoDecomp) | MSPE (CubicSpline) | MSPE (MonoDecomp) | p-value | prop. |
|---|---|---|---|---|---|---|---|
| | 0.2 | 1.95e+00 (1.4e-02) | 1.94e+00 (1.5e-02) | 1.46e+00 (6.1e-02) | 1.46e+00 (6.4e-02) | 4.44e-01 | 0.57 |
| | 0.4 | 3.84e+00 (3.4e-02) | 3.83e+00 (3.0e-02) | 2.97e+00 (1.5e-01) | 2.90e+00 (1.3e-01) | 2.79e-01 | 0.57 |
| | 0.6 | 5.80e+00 (4.3e-02) | 5.77e+00 (4.7e-02) | 4.45e+00 (2.0e-01) | 4.22e+00 (1.9e-01) | 7.97e-02 (.) | 0.63 |
| | 1.0 | 9.71e+00 (7.3e-02) | 9.73e+00 (7.3e-02) | 7.50e+00 (3.1e-01) | 6.93e+00 (2.6e-01) | 5.91e-03 (**) | 0.59 |
| | 0.2 | 1.89e+00 (1.6e-02) | 1.88e+00 (1.7e-02) | 1.53e+00 (6.9e-02) | 1.54e+00 (5.9e-02) | 3.81e-01 | 0.47 |
| | 0.4 | 3.83e+00 (3.0e-02) | 3.80e+00 (3.0e-02) | 2.82e+00 (1.4e-01) | 2.89e+00 (1.2e-01) | 1.71e-01 | 0.45 |
| | 0.6 | 5.74e+00 (4.6e-02) | 5.68e+00 (4.9e-02) | 4.78e+00 (2.1e-01) | 4.71e+00 (1.6e-01) | 2.93e-01 | 0.49 |
| | 1.0 | 9.65e+00 (7.4e-02) | 9.60e+00 (7.2e-02) | 7.63e+00 (3.5e-01) | 7.17e+00 (2.7e-01) | 2.21e-02 (*) | 0.6 |
| | 0.2 | 1.91e+00 (1.3e-02) | 1.88e+00 (1.5e-02) | 1.51e+00 (5.8e-02) | 1.61e+00 (6.6e-02) | 3.47e-02 (*) | 0.53 |
| | 0.4 | 3.83e+00 (2.9e-02) | 3.82e+00 (3.1e-02) | 2.79e+00 (1.3e-01) | 2.71e+00 (1.1e-01) | 1.97e-01 | 0.57 |
| | 0.6 | 5.72e+00 (4.4e-02) | 5.68e+00 (4.4e-02) | 4.45e+00 (1.8e-01) | 4.27e+00 (1.7e-01) | 1.15e-01 | 0.61 |
| | 1.0 | 9.57e+00 (5.9e-02) | 9.49e+00 (7.3e-02) | 7.56e+00 (2.8e-01) | 7.08e+00 (3.1e-01) | 3.59e-02 (*) | 0.57 |
| sigmoid | 0.2 | 1.91e+00 (1.5e-02) | 1.91e+00 (1.6e-02) | 1.69e+00 (5.4e-02) | 1.59e+00 (5.0e-02) | 6.14e-03 (**) | 0.65 |
| | 0.4 | 3.89e+00 (3.2e-02) | 3.88e+00 (3.2e-02) | 2.99e+00 (1.2e-01) | 2.96e+00 (1.1e-01) | 3.53e-01 | 0.47 |
| | 0.6 | 5.77e+00 (4.8e-02) | 5.76e+00 (4.8e-02) | 4.35e+00 (1.7e-01) | 4.14e+00 (1.4e-01) | 4.19e-02 (*) | 0.56 |
| | 1.0 | 9.51e+00 (8.5e-02) | 9.50e+00 (8.7e-02) | 7.33e+00 (3.2e-01) | 6.61e+00 (2.6e-01) | 1.45e-03 (**) | 0.56 |
| SE-1 | 0.2 | 1.93e+00 (1.4e-02) | 1.93e+00 (1.6e-02) | 1.62e+00 (7.3e-02) | 1.59e+00 (5.2e-02) | 3.21e-01 | 0.52 |
| | 0.4 | 3.81e+00 (3.4e-02) | 3.78e+00 (3.6e-02) | 3.18e+00 (1.4e-01) | 3.16e+00 (1.2e-01) | 3.93e-01 | 0.54 |
| | 0.6 | 5.77e+00 (4.4e-02) | 5.77e+00 (4.6e-02) | 4.67e+00 (2.0e-01) | 4.34e+00 (1.6e-01) | 2.88e-02 (*) | 0.57 |
| | 1.0 | 9.55e+00 (7.0e-02) | 9.51e+00 (7.6e-02) | 7.29e+00 (3.2e-01) | 6.62e+00 (2.7e-01) | 5.51e-03 (**) | 0.63 |
| SE-0.1 | 0.2 | 1.76e+00 (2.0e-02) | 1.78e+00 (2.5e-02) | 3.54e+00 (5.3e-02) | 3.54e+00 (6.7e-02) | 4.92e-01 | 0.6 |
| | 0.4 | 3.54e+00 (3.6e-02) | 3.55e+00 (3.8e-02) | 6.59e+00 (1.1e-01) | 6.25e+00 (1.0e-01) | 1.36e-04 (***) | 0.66 |
| | 0.6 | 5.57e+00 (5.4e-02) | 5.59e+00 (6.0e-02) | 9.20e+00 (1.6e-01) | 9.13e+00 (1.6e-01) | 3.14e-01 | 0.59 |
| | 1.0 | 9.29e+00 (8.5e-02) | 9.20e+00 (9.3e-02) | 1.44e+01 (2.6e-01) | 1.38e+01 (2.4e-01) | 5.93e-04 (***) | 0.7 |
| Mat12-1 | 0.2 | 2.12e+00 (3.2e-02) | 2.15e+00 (2.9e-02) | 5.43e+00 (5.2e-02) | 5.29e+00 (6.5e-02) | 7.85e-03 (**) | 0.72 |
| | 0.4 | 4.08e+00 (4.7e-02) | 4.02e+00 (4.8e-02) | 7.55e+00 (9.5e-02) | 7.20e+00 (8.9e-02) | 7.51e-09 (***) | 0.72 |
| | 0.6 | 5.82e+00 (6.5e-02) | 5.79e+00 (5.8e-02) | 9.34e+00 (1.4e-01) | 8.94e+00 (1.1e-01) | 1.35e-04 (***) | 0.7 |
| | 1.0 | 9.79e+00 (8.9e-02) | 9.73e+00 (9.1e-02) | 1.26e+01 (2.9e-01) | 1.17e+01 (2.4e-01) | 9.31e-07 (***) | 0.68 |
| Mat12-0.1 | 0.2 | 3.87e+00 (6.8e-02) | 3.88e+00 (7.3e-02) | 1.26e+01 (1.6e-01) | 1.25e+01 (1.8e-01) | 2.28e-01 | 0.57 |
| | 0.4 | 5.15e+00 (8.3e-02) | 5.08e+00 (6.9e-02) | 1.47e+01 (1.5e-01) | 1.42e+01 (1.3e-01) | 1.25e-03 (**) | 0.66 |
| | 0.6 | 6.77e+00 (1.0e-01) | 6.64e+00 (8.8e-02) | 1.67e+01 (1.8e-01) | 1.61e+01 (1.7e-01) | 2.66e-04 (***) | 0.67 |
| | 1.0 | 1.04e+01 (1.3e-01) | 1.04e+01 (1.3e-01) | 2.07e+01 (2.4e-01) | 2.01e+01 (2.4e-01) | 1.85e-04 (***) | 0.73 |
| Mat32-1 | 0.2 | 1.87e+00 (1.6e-02) | 1.87e+00 (1.6e-02) | 2.40e+00 (4.5e-02) | 2.29e+00 (4.3e-02) | 1.30e-03 (**) | 0.66 |
| | 0.4 | 3.82e+00 (3.5e-02) | 3.79e+00 (3.6e-02) | 3.99e+00 (1.0e-01) | 3.92e+00 (9.6e-02) | 2.08e-01 | 0.63 |
| | 0.6 | 5.77e+00 (4.4e-02) | 5.76e+00 (4.3e-02) | 5.60e+00 (1.6e-01) | 5.28e+00 (1.3e-01) | 4.84e-03 (**) | 0.68 |
| | 1.0 | 9.62e+00 (8.2e-02) | 9.61e+00 (8.3e-02) | 9.01e+00 (3.5e-01) | 8.00e+00 (2.6e-01) | 1.46e-04 (***) | 0.56 |
| Mat32-0.1 | 0.2 | 2.05e+00 (3.5e-02) | 2.11e+00 (4.4e-02) | 5.46e+00 (7.6e-02) | 5.54e+00 (1.1e-01) | 1.82e-01 | 0.57 |
| | 0.4 | 3.84e+00 (5.4e-02) | 3.77e+00 (6.0e-02) | 8.83e+00 (1.1e-01) | 8.41e+00 (1.3e-01) | 8.21e-05 (***) | 0.74 |
| | 0.6 | 5.64e+00 (7.0e-02) | 5.58e+00 (7.6e-02) | 1.17e+01 (1.5e-01) | 1.13e+01 (1.4e-01) | 1.68e-05 (***) | 0.69 |
| | 1.0 | 9.62e+00 (1.1e-01) | 9.53e+00 (9.7e-02) | 1.68e+01 (2.5e-01) | 1.58e+01 (2.1e-01) | 4.67e-10 (***) | 0.72 |
| RQ-0.1-0.5 | 0.2 | 1.72e+00 (2.9e-02) | 1.74e+00 (3.1e-02) | 4.14e+00 (6.5e-02) | 4.12e+00 (7.4e-02) | 3.53e-01 | 0.61 |
| | 0.4 | 3.77e+00 (4.6e-02) | 3.68e+00 (4.6e-02) | 7.33e+00 (1.1e-01) | 6.92e+00 (9.9e-02) | 1.44e-06 (***) | 0.77 |
| | 0.6 | 5.63e+00 (7.2e-02) | 5.54e+00 (6.4e-02) | 1.03e+01 (1.7e-01) | 9.61e+00 (1.6e-01) | 2.72e-07 (***) | 0.72 |
| | 1.0 | 9.55e+00 (1.1e-01) | 9.50e+00 (1.2e-01) | 1.50e+01 (2.4e-01) | 1.44e+01 (2.6e-01) | 5.01e-03 (**) | 0.68 |
| Periodic-0.1-4 | 0.2 | 1.68e+00 (2.8e-02) | 1.70e+00 (2.7e-02) | 4.27e+00 (7.0e-02) | 4.21e+00 (6.6e-02) | 2.00e-01 | 0.6 |
| | 0.4 | 3.40e+00 (4.2e-02) | 3.48e+00 (5.2e-02) | 7.90e+00 (1.1e-01) | 7.84e+00 (1.3e-01) | 2.81e-01 | 0.65 |
| | 0.6 | 5.42e+00 (7.3e-02) | 5.45e+00 (7.0e-02) | 1.12e+01 (1.6e-01) | 1.08e+01 (1.8e-01) | 2.52e-02 (*) | 0.65 |
| | 1.0 | 9.41e+00 (1.2e-01) | 9.31e+00 (1.1e-01) | 1.72e+01 (3.0e-01) | 1.60e+01 (2.5e-01) | 2.03e-10 (***) | 0.63 |

| curve | noise level | MSFE (CubicSpline) | MSFE (MonoDecomp) | MSPE (CubicSpline) | MSPE (MonoDecomp) | p-value | prop. |
|---|---|---|---|---|---|---|---|
| | 0.1 | 9.68e-01 (8.2e-03) | 9.71e-01 (8.2e-03) | 7.38e-01 (3.6e-02) | 7.07e-01 (3.3e-02) | 1.47e-03 (**) | 0.65 |
| | 0.2 | 1.92e+00 (1.6e-02) | 1.93e+00 (1.6e-02) | 1.53e+00 (7.7e-02) | 1.45e+00 (7.2e-02) | 1.03e-03 (**) | 0.74 |
| | 0.5 | 4.88e+00 (3.6e-02) | 4.90e+00 (3.6e-02) | 3.67e+00 (1.5e-01) | 3.37e+00 (1.3e-01) | 2.20e-06 (***) | 0.77 |
| | 1.0 | 9.63e+00 (8.2e-02) | 9.73e+00 (8.0e-02) | 7.12e+00 (3.2e-01) | 5.92e+00 (2.5e-01) | 1.73e-10 (***) | 0.77 |
| | 0.1 | 9.56e-01 (7.6e-03) | 9.58e-01 (7.1e-03) | 7.32e-01 (3.5e-02) | 6.98e-01 (2.9e-02) | 1.01e-03 (**) | 0.61 |
| | 0.2 | 1.93e+00 (1.6e-02) | 1.94e+00 (1.5e-02) | 1.47e+00 (7.1e-02) | 1.38e+00 (5.8e-02) | 2.88e-05 (***) | 0.69 |
| | 0.5 | 4.83e+00 (4.3e-02) | 4.86e+00 (4.2e-02) | 3.66e+00 (1.5e-01) | 3.41e+00 (1.4e-01) | 7.49e-03 (**) | 0.64 |
| | 1.0 | 9.55e+00 (8.1e-02) | 9.68e+00 (7.8e-02) | 8.03e+00 (3.7e-01) | 7.02e+00 (2.7e-01) | 2.01e-06 (***) | 0.69 |
| | 0.1 | 9.56e-01 (7.4e-03) | 9.57e-01 (7.3e-03) | 7.51e-01 (3.3e-02) | 7.26e-01 (3.0e-02) | 3.12e-04 (***) | 0.61 |
| | 0.2 | 1.93e+00 (1.5e-02) | 1.94e+00 (1.5e-02) | 1.40e+00 (6.6e-02) | 1.32e+00 (5.9e-02) | 4.32e-07 (***) | 0.67 |
| | 0.5 | 4.82e+00 (3.9e-02) | 4.84e+00 (3.7e-02) | 3.52e+00 (1.7e-01) | 3.02e+00 (1.4e-01) | 9.36e-11 (***) | 0.8 |
| | 1.0 | 9.74e+00 (7.6e-02) | 9.82e+00 (7.5e-02) | 7.47e+00 (3.3e-01) | 6.09e+00 (2.5e-01) | 6.35e-11 (***) | 0.8 |
| sigmoid | 0.1 | 9.53e-01 (7.3e-03) | 9.60e-01 (7.5e-03) | 8.84e-01 (2.4e-02) | 8.55e-01 (2.5e-02) | 3.16e-03 (**) | 0.68 |
| | 0.2 | 1.87e+00 (1.4e-02) | 1.89e+00 (1.4e-02) | 1.81e+00 (6.2e-02) | 1.67e+00 (5.2e-02) | 5.12e-05 (***) | 0.71 |
| | 0.5 | 4.78e+00 (3.8e-02) | 4.82e+00 (3.6e-02) | 3.83e+00 (1.7e-01) | 3.51e+00 (1.3e-01) | 2.42e-04 (***) | 0.66 |
| | 1.0 | 9.50e+00 (7.7e-02) | 9.62e+00 (7.7e-02) | 7.62e+00 (3.4e-01) | 6.36e+00 (2.4e-01) | 5.51e-08 (***) | 0.61 |
| SE-1 | 0.1 | 9.69e-01 (6.9e-03) | 9.74e-01 (6.7e-03) | 8.68e-01 (3.5e-02) | 8.18e-01 (3.0e-02) | 1.44e-04 (***) | 0.73 |
| | 0.2 | 1.92e+00 (1.6e-02) | 1.92e+00 (1.6e-02) | 1.62e+00 (6.1e-02) | 1.55e+00 (5.6e-02) | 3.30e-04 (***) | 0.66 |
| | 0.5 | 4.84e+00 (3.6e-02) | 4.88e+00 (3.5e-02) | 3.77e+00 (1.7e-01) | 3.52e+00 (1.3e-01) | 2.43e-03 (**) | 0.61 |
| | 1.0 | 9.69e+00 (6.9e-02) | 9.77e+00 (6.7e-02) | 7.31e+00 (3.1e-01) | 6.15e+00 (2.5e-01) | 2.24e-09 (***) | 0.63 |
| SE-0.1 | 0.1 | 8.51e-01 (1.1e-02) | 9.02e-01 (1.9e-02) | 1.88e+00 (3.1e-02) | 1.98e+00 (6.3e-02) | 3.83e-02 (*) | 0.57 |
| | 0.2 | 1.73e+00 (1.6e-02) | 1.79e+00 (3.1e-02) | 3.43e+00 (4.8e-02) | 3.48e+00 (1.1e-01) | 2.94e-01 | 0.73 |
| | 0.5 | 4.47e+00 (5.0e-02) | 4.58e+00 (6.0e-02) | 7.77e+00 (1.4e-01) | 7.73e+00 (1.7e-01) | 3.74e-01 | 0.67 |
| | 1.0 | 9.31e+00 (8.4e-02) | 9.52e+00 (8.4e-02) | 1.45e+01 (2.6e-01) | 1.41e+01 (2.5e-01) | 3.91e-04 (***) | 0.76 |
| Mat12-1 | 0.1 | 1.45e+00 (2.5e-02) | 1.56e+00 (2.4e-02) | 4.42e+00 (5.5e-02) | 4.61e+00 (5.8e-02) | 2.37e-07 (***) | 0.37 |
| | 0.2 | 2.19e+00 (3.5e-02) | 2.30e+00 (3.3e-02) | 5.46e+00 (5.7e-02) | 5.50e+00 (6.5e-02) | 1.78e-01 | 0.64 |
| | 0.5 | 4.95e+00 (5.5e-02) | 5.05e+00 (5.2e-02) | 8.18e+00 (9.8e-02) | 8.04e+00 (1.1e-01) | 1.52e-02 (*) | 0.73 |
| | 1.0 | 9.80e+00 (9.1e-02) | 9.94e+00 (9.0e-02) | 1.21e+01 (2.6e-01) | 1.16e+01 (2.2e-01) | 6.76e-04 (***) | 0.74 |
| Mat12-0.1 | 0.1 | 3.41e+00 (6.6e-02) | 3.83e+00 (8.4e-02) | 1.16e+01 (1.4e-01) | 1.25e+01 (2.3e-01) | 1.85e-06 (***) | 0.23 |
| | 0.2 | 3.75e+00 (7.9e-02) | 4.17e+00 (9.4e-02) | 1.24e+01 (1.7e-01) | 1.32e+01 (2.3e-01) | 2.05e-07 (***) | 0.27 |
| | 0.5 | 5.91e+00 (9.0e-02) | 6.36e+00 (1.0e-01) | 1.56e+01 (1.6e-01) | 1.62e+01 (2.4e-01) | 1.01e-03 (**) | 0.35 |
| | 1.0 | 1.04e+01 (1.4e-01) | 1.08e+01 (1.2e-01) | 2.11e+01 (2.2e-01) | 2.11e+01 (2.3e-01) | 4.48e-01 | 0.48 |
| Mat32-1 | 0.1 | 9.39e-01 (9.2e-03) | 9.51e-01 (8.3e-03) | 1.33e+00 (2.3e-02) | 1.29e+00 (2.0e-02) | 5.22e-04 (***) | 0.7 |
| | 0.2 | 1.91e+00 (1.7e-02) | 1.92e+00 (1.6e-02) | 2.27e+00 (4.7e-02) | 2.18e+00 (4.2e-02) | 1.76e-04 (***) | 0.71 |
| | 0.5 | 4.79e+00 (4.0e-02) | 4.84e+00 (3.8e-02) | 5.01e+00 (1.4e-01) | 4.53e+00 (1.1e-01) | 3.31e-07 (***) | 0.66 |
| | 1.0 | 9.59e+00 (8.2e-02) | 9.67e+00 (8.1e-02) | 8.49e+00 (2.9e-01) | 7.58e+00 (2.6e-01) | 3.94e-08 (***) | 0.67 |
| Mat32-0.1 | 0.1 | 1.25e+00 (3.3e-02) | 1.42e+00 (4.2e-02) | 3.86e+00 (8.2e-02) | 4.27e+00 (1.3e-01) | 1.67e-05 (***) | 0.42 |
| | 0.2 | 1.97e+00 (3.8e-02) | 2.23e+00 (6.7e-02) | 5.41e+00 (7.8e-02) | 5.95e+00 (1.9e-01) | 1.59e-03 (**) | 0.53 |
| | 0.5 | 4.66e+00 (5.9e-02) | 4.97e+00 (6.9e-02) | 1.03e+01 (1.2e-01) | 1.06e+01 (1.8e-01) | 2.05e-02 (*) | 0.55 |
| | 1.0 | 9.55e+00 (1.2e-01) | 9.82e+00 (1.2e-01) | 1.69e+01 (2.3e-01) | 1.67e+01 (2.7e-01) | 4.00e-02 (*) | 0.68 |
| RQ-0.1-0.5 | 0.1 | 8.92e-01 (1.9e-02) | 9.95e-01 (3.3e-02) | 2.37e+00 (4.1e-02) | 2.58e+00 (1.0e-01) | 1.91e-02 (*) | 0.51 |
| | 0.2 | 1.82e+00 (2.7e-02) | 1.95e+00 (3.7e-02) | 4.15e+00 (7.0e-02) | 4.36e+00 (1.1e-01) | 6.07e-03 (**) | 0.57 |
| | 0.5 | 4.60e+00 (6.2e-02) | 4.84e+00 (7.4e-02) | 9.08e+00 (1.5e-01) | 8.99e+00 (2.0e-01) | 2.66e-01 | 0.61 |
| | 1.0 | 9.30e+00 (9.9e-02) | 9.57e+00 (9.8e-02) | 1.48e+01 (2.3e-01) | 1.43e+01 (2.2e-01) | 6.64e-03 (**) | 0.74 |
| Periodic-0.1-4 | 0.1 | 8.82e-01 (3.6e-02) | 9.46e-01 (3.8e-02) | 2.44e+00 (1.1e-01) | 2.58e+00 (1.2e-01) | 2.75e-03 (**) | 0.54 |
| | 0.2 | 1.65e+00 (2.6e-02) | 1.80e+00 (4.4e-02) | 4.26e+00 (6.2e-02) | 4.47e+00 (1.3e-01) | 3.92e-02 (*) | 0.67 |
| | 0.5 | 4.34e+00 (5.1e-02) | 4.54e+00 (6.0e-02) | 9.44e+00 (1.3e-01) | 9.41e+00 (1.9e-01) | 4.14e-01 | 0.59 |
| | 1.0 | 9.25e+00 (1.3e-01) | 9.64e+00 (1.3e-01) | 1.76e+01 (2.6e-01) | 1.72e+01 (2.9e-01) | 3.24e-02 (*) | 0.66 |

A.4 Monotone Decomposition Fitting with Smoothing Splines

A.4.1 A complete version for Table 2

| curve | σ | MSFE (SmoothSpline) | MSFE (MonoDecomp) | MSPE (SmoothSpline) | MSPE (MonoDecomp) | p-value | prop. |
| --- | --- | --- | --- | --- | --- | --- | --- |
| | 0.1 | 9.57e-01 (8.3e-03) | 9.73e-01 (8.2e-03) | 7.38e-01 (2.4e-02) | 8.67e-01 (2.4e-02) | 1.74e-14 (***) | 0.25 |
| | 0.5 | 4.79e+00 (4.1e-02) | 4.80e+00 (4.1e-02) | 3.41e+00 (1.2e-01) | 3.39e+00 (1.1e-01) | 3.61e-01 | 0.46 |
| | 1.0 | 9.65e+00 (8.9e-02) | 9.69e+00 (8.5e-02) | 6.44e+00 (2.9e-01) | 6.35e+00 (2.5e-01) | 1.68e-01 | 0.49 |
| | 1.5 | 1.44e+01 (1.1e-01) | 1.45e+01 (1.1e-01) | 9.31e+00 (3.9e-01) | 9.14e+00 (3.4e-01) | 4.68e-02 (*) | 0.6 |
| | 2.0 | 1.92e+01 (1.6e-01) | 1.92e+01 (1.5e-01) | 1.23e+01 (4.9e-01) | 1.15e+01 (4.3e-01) | 4.45e-06 (***) | 0.81 |
| | 0.1 | 9.46e-01 (8.0e-03) | 9.89e-01 (8.0e-03) | 8.65e-01 (2.4e-02) | 1.11e+00 (2.5e-02) | 0.00e+00 (***) | 0.2 |
| | 0.5 | 4.86e+00 (4.4e-02) | 4.88e+00 (4.3e-02) | 3.65e+00 (1.2e-01) | 3.55e+00 (1.2e-01) | 9.06e-03 (**) | 0.58 |
| | 1.0 | 9.75e+00 (8.2e-02) | 9.77e+00 (8.2e-02) | 6.47e+00 (1.8e-01) | 6.21e+00 (1.7e-01) | 1.18e-04 (***) | 0.65 |
| | 1.5 | 1.45e+01 (1.1e-01) | 1.46e+01 (1.1e-01) | 9.16e+00 (3.1e-01) | 8.67e+00 (2.8e-01) | 1.67e-06 (***) | 0.81 |
| | 2.0 | 1.92e+01 (1.8e-01) | 1.92e+01 (1.6e-01) | 1.16e+01 (5.9e-01) | 1.09e+01 (4.9e-01) | 1.15e-04 (***) | 0.78 |
| | 0.1 | 9.53e-01 (7.6e-03) | 9.64e-01 (7.7e-03) | 7.96e-01 (2.6e-02) | 9.13e-01 (2.6e-02) | 5.02e-12 (***) | 0.26 |
| | 0.5 | 4.80e+00 (4.3e-02) | 4.82e+00 (3.9e-02) | 3.33e+00 (1.5e-01) | 3.30e+00 (1.3e-01) | 2.60e-01 | 0.51 |
| | 1.0 | 9.74e+00 (8.4e-02) | 9.75e+00 (8.4e-02) | 5.94e+00 (2.3e-01) | 5.82e+00 (2.1e-01) | 3.12e-02 (*) | 0.58 |
| | 1.5 | 1.45e+01 (1.3e-01) | 1.46e+01 (1.3e-01) | 9.10e+00 (4.4e-01) | 8.83e+00 (4.1e-01) | 7.93e-03 (**) | 0.69 |
| | 2.0 | 1.95e+01 (1.5e-01) | 1.95e+01 (1.5e-01) | 1.08e+01 (4.9e-01) | 1.06e+01 (4.4e-01) | 3.80e-02 (*) | 0.57 |
| sigmoid | 0.1 | 9.58e-01 (8.0e-03) | 9.58e-01 (7.9e-03) | 7.75e-01 (2.7e-02) | 7.69e-01 (2.5e-02) | 1.81e-01 | 0.56 |
| | 0.5 | 4.82e+00 (4.2e-02) | 4.82e+00 (4.2e-02) | 3.55e+00 (1.3e-01) | 3.49e+00 (1.2e-01) | 2.45e-02 (*) | 0.61 |
| | 1.0 | 9.60e+00 (7.8e-02) | 9.64e+00 (7.5e-02) | 5.99e+00 (2.9e-01) | 5.68e+00 (2.4e-01) | 4.47e-04 (***) | 0.67 |
| | 1.5 | 1.43e+01 (1.6e-01) | 1.44e+01 (1.5e-01) | 8.94e+00 (5.4e-01) | 8.36e+00 (4.9e-01) | 5.86e-07 (***) | 0.65 |
| | 2.0 | 1.93e+01 (1.6e-01) | 1.94e+01 (1.5e-01) | 1.15e+01 (6.4e-01) | 1.08e+01 (5.4e-01) | 2.06e-05 (***) | 0.7 |
| SE-1 | 0.1 | 9.66e-01 (7.5e-03) | 9.71e-01 (7.4e-03) | 7.54e-01 (2.4e-02) | 8.19e-01 (2.6e-02) | 7.24e-08 (***) | 0.26 |
| | 0.5 | 4.88e+00 (3.9e-02) | 4.89e+00 (3.8e-02) | 3.15e+00 (1.2e-01) | 3.10e+00 (1.1e-01) | 5.92e-02 (.) | 0.55 |
| | 1.0 | 9.67e+00 (8.3e-02) | 9.70e+00 (8.1e-02) | 6.32e+00 (2.8e-01) | 6.11e+00 (2.5e-01) | 3.86e-03 (**) | 0.6 |
| | 1.5 | 1.47e+01 (1.1e-01) | 1.47e+01 (1.1e-01) | 8.65e+00 (3.8e-01) | 8.39e+00 (3.4e-01) | 2.48e-02 (*) | 0.55 |
| | 2.0 | 1.93e+01 (1.5e-01) | 1.94e+01 (1.5e-01) | 1.15e+01 (4.3e-01) | 1.10e+01 (3.8e-01) | 6.24e-04 (***) | 0.7 |
| SE-0.1 | 0.1 | 7.68e-01 (9.1e-03) | 7.94e-01 (9.4e-03) | 1.71e+00 (2.7e-02) | 1.79e+00 (3.0e-02) | 3.41e-09 (***) | 0.24 |
| | 0.5 | 4.36e+00 (4.4e-02) | 4.40e+00 (4.5e-02) | 6.65e+00 (1.0e-01) | 6.72e+00 (1.0e-01) | 2.24e-03 (**) | 0.42 |
| | 1.0 | 8.92e+00 (9.2e-02) | 8.99e+00 (9.0e-02) | 1.23e+01 (2.1e-01) | 1.23e+01 (2.1e-01) | 4.32e-01 | 0.57 |
| | 1.5 | 1.41e+01 (1.3e-01) | 1.42e+01 (1.3e-01) | 1.76e+01 (3.1e-01) | 1.72e+01 (3.1e-01) | 5.96e-05 (***) | 0.64 |
| | 2.0 | 1.89e+01 (1.7e-01) | 1.91e+01 (1.7e-01) | 2.16e+01 (4.3e-01) | 2.11e+01 (4.3e-01) | 2.13e-04 (***) | 0.68 |
| Mat12-1 | 0.1 | 1.16e+00 (2.0e-02) | 1.23e+00 (1.9e-02) | 3.71e+00 (2.7e-02) | 3.80e+00 (3.1e-02) | 5.72e-14 (***) | 0.15 |
| | 0.5 | 4.80e+00 (4.9e-02) | 4.87e+00 (4.5e-02) | 7.50e+00 (8.3e-02) | 7.51e+00 (8.3e-02) | 3.83e-01 | 0.4 |
| | 1.0 | 9.65e+00 (9.5e-02) | 9.69e+00 (9.2e-02) | 1.15e+01 (2.3e-01) | 1.13e+01 (2.1e-01) | 3.40e-04 (***) | 0.56 |
| | 1.5 | 1.43e+01 (1.1e-01) | 1.43e+01 (1.1e-01) | 1.40e+01 (3.0e-01) | 1.38e+01 (2.8e-01) | 3.13e-03 (**) | 0.64 |
| | 2.0 | 1.96e+01 (1.6e-01) | 1.97e+01 (1.6e-01) | 1.69e+01 (4.4e-01) | 1.64e+01 (3.7e-01) | 1.97e-04 (***) | 0.69 |
| Mat12-0.1 | 0.1 | 2.60e+00 (3.9e-02) | 2.88e+00 (3.8e-02) | 9.86e+00 (7.0e-02) | 1.03e+01 (8.1e-02) | 0.00e+00 (***) | 0.06 |
| | 0.5 | 4.81e+00 (6.4e-02) | 5.11e+00 (6.1e-02) | 1.35e+01 (8.5e-02) | 1.37e+01 (9.4e-02) | 6.90e-06 (***) | 0.3 |
| | 1.0 | 9.76e+00 (1.2e-01) | 9.92e+00 (1.1e-01) | 1.90e+01 (2.2e-01) | 1.90e+01 (2.2e-01) | 2.06e-01 | 0.58 |
| | 1.5 | 1.46e+01 (1.7e-01) | 1.48e+01 (1.6e-01) | 2.29e+01 (2.8e-01) | 2.25e+01 (2.7e-01) | 1.21e-05 (***) | 0.69 |
| | 2.0 | 1.93e+01 (1.8e-01) | 1.95e+01 (1.7e-01) | 2.70e+01 (4.1e-01) | 2.65e+01 (3.6e-01) | 3.05e-04 (***) | 0.66 |
| Mat32-1 | 0.1 | 9.15e-01 (8.0e-03) | 9.20e-01 (7.7e-03) | 1.18e+00 (2.1e-02) | 1.19e+00 (2.2e-02) | 5.89e-02 (.) | 0.45 |
| | 0.5 | 4.86e+00 (4.2e-02) | 4.88e+00 (4.0e-02) | 4.35e+00 (1.3e-01) | 4.32e+00 (1.2e-01) | 2.45e-01 | 0.52 |
| | 1.0 | 9.59e+00 (8.5e-02) | 9.64e+00 (7.5e-02) | 7.20e+00 (2.4e-01) | 7.04e+00 (2.0e-01) | 3.65e-02 (*) | 0.49 |
| | 1.5 | 1.45e+01 (1.3e-01) | 1.46e+01 (1.3e-01) | 1.03e+01 (3.7e-01) | 1.00e+01 (3.3e-01) | 7.41e-03 (**) | 0.65 |
| | 2.0 | 1.95e+01 (1.7e-01) | 1.96e+01 (1.7e-01) | 1.26e+01 (5.1e-01) | 1.19e+01 (4.4e-01) | 6.29e-05 (***) | 0.68 |
| Mat32-0.1 | 0.1 | 8.83e-01 (1.4e-02) | 9.47e-01 (1.4e-02) | 2.92e+00 (2.4e-02) | 3.02e+00 (2.6e-02) | 3.54e-10 (***) | 0.21 |
| | 0.5 | 4.22e+00 (5.3e-02) | 4.37e+00 (5.0e-02) | 8.99e+00 (9.9e-02) | 9.03e+00 (9.9e-02) | 1.82e-01 | 0.45 |
| | 1.0 | 8.96e+00 (1.1e-01) | 9.14e+00 (1.0e-01) | 1.48e+01 (2.2e-01) | 1.47e+01 (2.1e-01) | 1.44e-01 | 0.54 |
| | 1.5 | 1.41e+01 (1.6e-01) | 1.43e+01 (1.6e-01) | 1.99e+01 (3.5e-01) | 1.97e+01 (3.4e-01) | 8.29e-02 (.) | 0.59 |
| | 2.0 | 1.92e+01 (1.9e-01) | 1.94e+01 (1.8e-01) | 2.36e+01 (4.4e-01) | 2.29e+01 (3.9e-01) | 6.38e-08 (***) | 0.74 |
| RQ-0.1-0.5 | 0.1 | 7.21e-01 (9.5e-03) | 7.52e-01 (1.0e-02) | 2.04e+00 (2.1e-02) | 2.07e+00 (2.2e-02) | 1.35e-04 (***) | 0.38 |
| | 0.5 | 4.31e+00 (4.8e-02) | 4.41e+00 (4.7e-02) | 7.50e+00 (9.8e-02) | 7.55e+00 (9.5e-02) | 1.36e-01 | 0.46 |
| | 1.0 | 9.10e+00 (1.1e-01) | 9.22e+00 (1.1e-01) | 1.27e+01 (2.4e-01) | 1.25e+01 (2.2e-01) | 1.21e-03 (**) | 0.6 |
| | 1.5 | 1.43e+01 (1.5e-01) | 1.44e+01 (1.5e-01) | 1.75e+01 (3.3e-01) | 1.74e+01 (3.1e-01) | 1.29e-01 | 0.5 |
| | 2.0 | 1.91e+01 (1.9e-01) | 1.92e+01 (1.8e-01) | 2.10e+01 (5.4e-01) | 2.07e+01 (5.0e-01) | 1.08e-02 (*) | 0.63 |
| Periodic-0.1-4 | 0.1 | 7.11e-01 (8.8e-03) | 7.44e-01 (1.0e-02) | 2.07e+00 (2.7e-02) | 2.11e+00 (2.6e-02) | 3.37e-06 (***) | 0.27 |
| | 0.5 | 4.07e+00 (4.6e-02) | 4.20e+00 (4.6e-02) | 8.20e+00 (1.2e-01) | 8.35e+00 (1.1e-01) | 1.66e-03 (**) | 0.27 |
| | 1.0 | 8.69e+00 (1.0e-01) | 8.89e+00 (9.1e-02) | 1.44e+01 (2.1e-01) | 1.43e+01 (1.9e-01) | 3.03e-01 | 0.49 |
| | 1.5 | 1.39e+01 (1.7e-01) | 1.42e+01 (1.6e-01) | 2.06e+01 (2.7e-01) | 2.02e+01 (2.7e-01) | 1.96e-05 (***) | 0.67 |
| | 2.0 | 1.90e+01 (1.8e-01) | 1.92e+01 (1.8e-01) | 2.47e+01 (3.9e-01) | 2.45e+01 (3.8e-01) | 4.46e-02 (*) | 0.63 |
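In the table above, each metric is followed by a parenthesized value, which we read as the standard error over the simulation repetitions (an assumption, since the convention is not restated in this section). The markers after the p-values are consistent with the common R convention: `***` for p < 0.001, `**` for p < 0.01, `*` for p < 0.05 and `.` for p < 0.1. The hypothetical helpers below (`format_cell`, `sig_code`, `format_pvalue`) only reproduce this display format; they do not compute the p-values, whose test is not specified here.

```python
import math
from statistics import mean, stdev


def format_cell(values):
    """Format repetitions as 'mean (standard error)', e.g. '9.57e-01 (8.3e-03)'.

    Assumption: the parenthesized number is the sample standard deviation
    divided by sqrt(number of repetitions).
    """
    se = stdev(values) / math.sqrt(len(values))
    return f"{mean(values):.2e} ({se:.1e})"


def sig_code(p):
    """Significance marker for a p-value (strict inequalities are an assumption)."""
    for cutoff, code in [(0.001, "***"), (0.01, "**"), (0.05, "*"), (0.1, ".")]:
        if p < cutoff:
            return code
    return ""


def format_pvalue(p):
    """Render a p-value cell such as '3.86e-03 (**)' or '3.61e-01'."""
    code = sig_code(p)
    return f"{p:.2e} ({code})" if code else f"{p:.2e}"


if __name__ == "__main__":
    print(format_cell([1.0, 2.0, 3.0]))  # mean 2, standard error 1/sqrt(3)
    for p in [6.24e-04, 3.86e-03, 2.45e-02, 5.92e-02, 3.61e-01]:
        print(format_pvalue(p))
```

The p-values used in the usage loop are taken from the table, so the printed cells can be checked against it directly.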

A.4.2 Tuning with a fixed roughness penalty

First, we fix the roughness-penalty parameter at the value selected by cross-validation (CV) for the smoothing spline. Then, we choose the remaining tuning parameter of the monotone decomposition to minimize the CV error. Figure 11 illustrates the procedure. The left panel shows the CV error over the candidate values of this parameter, and the right panel compares the monotone decomposition fit at the CV-minimizing value with the smoothing spline fit. The monotone decomposition achieves a lower MSPE, and the shapes of the fitted curves suggest that the improvement comes mainly from shrinking the local modes.
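The two-stage procedure above can be sketched as follows, assuming 5-fold CV over user-supplied grids. This is an illustration under stated assumptions, not the implementation in MonotoneDecomposition.jl: the function names (`kfold_cv_error`, `two_stage_tuning`, `hat_basis`, `smoother`) and the parameter names `lam` (roughness penalty) and `mu` (second tuning parameter) are hypothetical. `smoother` is a simple stand-in fitter (a piecewise-linear basis with a second-difference roughness penalty plus a term, weighted by `mu`, that shrinks the fit toward a constant); it is not the monotone decomposition estimator, which would take the place of `fit_decomp` in practice.

```python
import numpy as np


def kfold_cv_error(fit_predict, x, y, k=5, seed=0, **params):
    """Mean squared prediction error over k folds for one parameter setting.

    The fixed seed gives every candidate parameter the same fold split.
    """
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(x)), k)
    errors = []
    for test in folds:
        train = np.setdiff1d(np.arange(len(x)), test)
        pred = fit_predict(x[train], y[train], x[test], **params)
        errors.append(np.mean((y[test] - pred) ** 2))
    return float(np.mean(errors))


def two_stage_tuning(fit_baseline, fit_decomp, x, y, lams, mus, k=5):
    """Stage 1: CV-tune lam for the baseline smoother.
    Stage 2: hold lam fixed at that value and CV-tune only mu."""
    lam_errors = [kfold_cv_error(fit_baseline, x, y, k, lam=lam) for lam in lams]
    lam_hat = lams[int(np.argmin(lam_errors))]
    mu_errors = [kfold_cv_error(fit_decomp, x, y, k, lam=lam_hat, mu=mu) for mu in mus]
    mu_hat = mus[int(np.argmin(mu_errors))]
    return lam_hat, mu_hat, mu_errors


def hat_basis(x, knots):
    """Piecewise-linear B-spline (hat) basis; column j peaks at knots[j]."""
    eye = np.eye(len(knots))
    return np.column_stack([np.interp(x, knots, eye[j]) for j in range(len(knots))])


def smoother(x_tr, y_tr, x_te, lam, mu=0.0, n_knots=20):
    """Hypothetical stand-in fitter on [0, 1] that minimizes
    ||y - B b||^2 + lam * ||D b||^2 + mu * ||b - mean(y)||^2,
    where D takes second differences of the coefficients (roughness penalty)
    and the mu-term shrinks the fit toward a constant."""
    knots = np.linspace(0.0, 1.0, n_knots)
    B = hat_basis(x_tr, knots)
    D = np.diff(np.eye(n_knots), n=2, axis=0)
    A = B.T @ B + lam * D.T @ D + mu * np.eye(n_knots)
    rhs = B.T @ y_tr + mu * y_tr.mean() * np.ones(n_knots)
    coef = np.linalg.solve(A, rhs)
    return hat_basis(x_te, knots) @ coef


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    x = np.sort(rng.uniform(0.0, 1.0, 100))
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.5, 100)
    lam_hat, mu_hat, _ = two_stage_tuning(
        smoother, smoother, x, y,
        lams=[0.01, 0.1, 1.0, 10.0], mus=[0.0, 0.1, 1.0, 10.0],
    )
    print(f"lam = {lam_hat}, mu = {mu_hat}")
```

Reusing a single fold split (the fixed `seed`) for every candidate makes the CV errors directly comparable, and holding `lam` fixed reduces the second stage to a one-dimensional grid search.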

The average results over 100 repetitions are summarized in Table 9. The table shows that the monotone decomposition performs better in the high-noise cases and comparably in the lower-noise scenarios.

| curve | σ | SNR | MSFE (SmoothSpline) | MSFE (MonoDecomp) | MSPE (SmoothSpline) | MSPE (MonoDecomp) | p-value | prop. |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| | 0.1 | 1.03e+01 (1.9e-01) | 9.30e-03 (1.4e-04) | 9.55e-03 (1.4e-04) | 6.78e-04 (4.8e-05) | 9.03e-04 (5.4e-05) | 7.17e-12 (***) | 0.24 |
| | 0.2 | 2.68e+00 (7.4e-02) | 3.72e-02 (6.4e-04) | 3.76e-02 (6.3e-04) | 2.15e-03 (1.8e-04) | 2.29e-03 (1.7e-04) | 1.03e-02 (*) | 0.33 |
| | 0.5 | 5.04e-01 (2.1e-02) | 2.35e-01 (3.8e-03) | 2.37e-01 (3.6e-03) | 1.36e-02 (1.4e-03) | 1.23e-02 (9.5e-04) | 3.49e-02 (*) | 0.44 |
| | 1.0 | 1.75e-01 (1.0e-02) | 9.48e-01 (1.5e-02) | 9.59e-01 (1.5e-02) | 5.17e-02 (3.0e-03) | 4.81e-02 (2.7e-03) | 7.22e-03 (**) | 0.48 |
| | 1.5 | 1.23e-01 (1.2e-02) | 2.07e+00 (3.5e-02) | 2.09e+00 (3.6e-02) | 1.03e-01 (1.0e-02) | 9.57e-02 (8.8e-03) | 1.14e-02 (*) | 0.57 |
| | 2.0 | 1.13e-01 (2.3e-02) | 3.82e+00 (5.9e-02) | 3.87e+00 (5.6e-02) | 1.94e-01 (2.4e-02) | 1.55e-01 (1.6e-02) | 1.98e-04 (***) | 0.63 |
| | 0.1 | 1.76e+01 (3.3e-01) | 8.80e-03 (1.3e-04) | 9.92e-03 (1.4e-04) | 8.51e-04 (4.8e-05) | 1.70e-03 (7.3e-05) | 0.00e+00 (***) | 0.15 |
| | 0.2 | 4.51e+00 (1.1e-01) | 3.62e-02 (6.0e-04) | 3.74e-02 (6.1e-04) | 2.89e-03 (1.5e-04) | 3.11e-03 (1.6e-04) | 4.74e-02 (*) | 0.51 |
| | 0.5 | 7.70e-01 (2.3e-02) | 2.33e-01 (3.5e-03) | 2.36e-01 (3.5e-03) | 1.51e-02 (9.3e-04) | 1.36e-02 (8.0e-04) | 3.25e-04 (***) | 0.52 |
| | 1.0 | 2.43e-01 (1.6e-02) | 9.44e-01 (1.6e-02) | 9.54e-01 (1.6e-02) | 5.32e-02 (3.9e-03) | 4.63e-02 (2.9e-03) | 1.77e-04 (***) | 0.69 |
| | 1.5 | 1.58e-01 (1.6e-02) | 2.09e+00 (3.7e-02) | 2.12e+00 (3.5e-02) | 1.11e-01 (1.1e-02) | 9.53e-02 (1.1e-02) | 1.90e-05 (***) | 0.69 |
| | 2.0 | 1.36e-01 (2.1e-02) | 3.75e+00 (7.0e-02) | 3.80e+00 (6.6e-02) | 1.74e-01 (2.1e-02) | 1.37e-01 (1.6e-02) | 1.55e-04 (***) | 0.74 |
| | 0.1 | 5.10e+01 (1.1e+00) | 9.06e-03 (1.7e-04) | 9.42e-03 (1.6e-04) | 6.66e-04 (5.0e-05) | 9.11e-04 (5.6e-05) | 3.13e-06 (***) | 0.36 |
| | 0.2 | 1.26e+01 (3.1e-01) | 3.65e-02 (6.4e-04) | 3.69e-02 (6.0e-04) | 2.35e-03 (2.6e-04) | 2.34e-03 (1.8e-04) | 4.72e-01 | 0.37 |
| | 0.5 | 1.98e+00 (4.5e-02) | 2.40e-01 (3.9e-03) | 2.41e-01 (3.8e-03) | 1.16e-02 (9.4e-04) | 1.10e-02 (7.9e-04) | 4.03e-02 (*) | 0.52 |
| | 1.0 | 5.89e-01 (2.2e-02) | 9.07e-01 (1.4e-02) | 9.14e-01 (1.3e-02) | 5.39e-02 (5.7e-03) | 4.79e-02 (4.5e-03) | 3.84e-04 (***) | 0.55 |
| | 1.5 | 3.02e-01 (1.9e-02) | 2.11e+00 (3.6e-02) | 2.13e+00 (3.5e-02) | 1.02e-01 (1.0e-02) | 9.10e-02 (8.7e-03) | 5.87e-04 (***) | 0.62 |
| | 2.0 | 2.00e-01 (2.9e-02) | 3.79e+00 (6.3e-02) | 3.81e+00 (6.2e-02) | 1.71e-01 (2.7e-02) | 1.53e-01 (2.4e-02) | 1.71e-04 (***) | 0.72 |
| sigmoid | 0.1 | 1.70e+01 (3.2e-01) | 9.31e-03 (1.4e-04) | 9.36e-03 (1.4e-04) | 6.33e-04 (4.3e-05) | 6.03e-04 (3.3e-05) | 5.12e-02 (.) | 0.55 |
| | 0.2 | 4.38e+00 (6.9e-02) | 3.66e-02 (4.6e-04) | 3.68e-02 (4.6e-04) | 2.44e-03 (1.7e-04) | 2.39e-03 (1.6e-04) | 7.53e-02 (.) | 0.61 |
| | 0.5 | 7.87e-01 (2.7e-02) | 2.32e-01 (4.0e-03) | 2.33e-01 (3.9e-03) | 1.50e-02 (1.0e-03) | 1.34e-02 (7.9e-04) | 1.59e-05 (***) | 0.63 |
| | 1.0 | 2.31e-01 (1.3e-02) | 9.44e-01 (1.6e-02) | 9.51e-01 (1.6e-02) | 3.96e-02 (3.9e-03) | 3.43e-02 (3.1e-03) | 8.84e-06 (***) | 0.67 |
| | 1.5 | 1.70e-01 (1.5e-02) | 2.09e+00 (3.3e-02) | 2.12e+00 (3.1e-02) | 1.12e-01 (1.0e-02) | 8.82e-02 (7.8e-03) | 4.95e-05 (***) | 0.64 |
| | 2.0 | 1.26e-01 (1.9e-02) | 3.69e+00 (6.5e-02) | 3.73e+00 (6.3e-02) | 1.67e-01 (2.4e-02) | 1.32e-01 (1.7e-02) | 1.58e-04 (***) | 0.75 |
| SE-1 | 0.1 | 3.43e+01 (4.3e+00) | 9.02e-03 (1.6e-04) | 9.16e-03 (1.5e-04) | 7.53e-04 (7.0e-05) | 7.32e-04 (5.5e-05) | 2.40e-01 | 0.43 |
| | 0.2 | 5.41e+00 (6.9e-01) | 3.77e-02 (6.6e-04) | 3.78e-02 (6.5e-04) | 2.31e-03 (2.0e-04) | 2.36e-03 (1.9e-04) | 2.04e-01 | 0.4 |
| | 0.5 | 1.20e+00 (1.4e-01) | 2.38e-01 (3.9e-03) | 2.39e-01 (3.8e-03) | 1.24e-02 (1.2e-03) | 1.12e-02 (9.5e-04) | 7.34e-03 (**) | 0.51 |
| | 1.0 | 3.59e-01 (3.9e-02) | 9.38e-01 (1.6e-02) | 9.50e-01 (1.6e-02) | 4.74e-02 (3.6e-03) | 4.12e-02 (2.8e-03) | 4.67e-04 (***) | 0.63 |
| | 1.5 | 1.68e-01 (1.9e-02) | 2.13e+00 (3.2e-02) | 2.15e+00 (3.2e-02) | 9.58e-02 (8.1e-03) | 8.60e-02 (6.7e-03) | 3.72e-04 (***) | 0.6 |
| | 2.0 | 1.18e-01 (1.2e-02) | 3.68e+00 (5.9e-02) | 3.71e+00 (5.7e-02) | 1.69e-01 (1.6e-02) | 1.48e-01 (1.2e-02) | 2.36e-04 (***) | 0.75 |
| SE-0.1 | 0.1 | 1.50e+02 (6.4e+00) | 6.36e-03 (1.3e-04) | 7.06e-03 (1.8e-04) | 3.00e-03 (7.7e-05) | 3.51e-03 (1.6e-04) | 3.81e-05 (***) | 0.25 |
| | 0.2 | 3.55e+01 (1.9e+00) | 2.79e-02 (5.5e-04) | 2.90e-02 (5.7e-04) | 1.00e-02 (3.1e-04) | 1.05e-02 (3.3e-04) | 6.47e-05 (***) | 0.39 |
| | 0.5 | 4.98e+00 (2.3e-01) | 1.97e-01 (3.9e-03) | 2.02e-01 (3.7e-03) | 4.70e-02 (1.7e-03) | 4.74e-02 (1.5e-03) | 2.98e-01 | 0.38 |
| | 1.0 | 1.30e+00 (6.9e-02) | 8.23e-01 (1.6e-02) | 8.54e-01 (1.5e-02) | 1.54e-01 (5.3e-03) | 1.55e-01 (4.6e-03) | 3.62e-01 | 0.35 |
| | 1.5 | 6.35e-01 (3.9e-02) | 1.96e+00 (4.0e-02) | 2.02e+00 (4.0e-02) | 3.20e-01 (1.2e-02) | 3.23e-01 (1.3e-02) | 2.79e-01 | 0.51 |
| | 2.0 | 3.69e-01 (2.3e-02) | 3.71e+00 (6.7e-02) | 3.79e+00 (6.8e-02) | 4.69e-01 (1.8e-02) | 4.62e-01 (1.7e-02) | 1.57e-01 | 0.54 |
| Mat12-1 | 0.1 | 3.51e+01 (2.7e+00) | 1.41e-02 (4.1e-04) | 1.58e-02 (4.3e-04) | 1.40e-02 (2.3e-04) | 1.48e-02 (2.6e-04) | 3.30e-10 (***) | 0.2 |
| | 0.2 | 1.29e+01 (9.7e-01) | 3.80e-02 (1.0e-03) | 4.17e-02 (9.8e-04) | 2.39e-02 (4.6e-04) | 2.44e-02 (5.0e-04) | 1.39e-02 (*) | 0.43 |
| | 0.5 | 1.97e+00 (1.5e-01) | 2.27e-01 (4.6e-03) | 2.38e-01 (4.4e-03) | 5.88e-02 (1.7e-03) | 5.78e-02 (1.5e-03) | 1.09e-01 | 0.54 |
| | 1.0 | 6.51e-01 (5.1e-02) | 9.19e-01 (1.6e-02) | 9.40e-01 (1.5e-02) | 1.26e-01 (4.8e-03) | 1.20e-01 (4.1e-03) | 1.12e-03 (**) | 0.57 |
| | 1.5 | 3.52e-01 (4.4e-02) | 2.10e+00 (3.4e-02) | 2.14e+00 (3.1e-02) | 2.13e-01 (1.2e-02) | 1.97e-01 (9.0e-03) | 2.02e-02 (*) | 0.62 |