Deep Edge-Aware Interactive Colorization against Color-Bleeding Effects

Abstract

Deep neural networks for automatic image colorization often suffer from the color-bleeding artifact, a problematic color spreading near the boundaries between adjacent objects. Such color-bleeding artifacts debase the reality of generated outputs, limiting the applicability of colorization models in practice. Although previous approaches have attempted to address this problem in an automatic manner, they tend to work only in limited cases where a high contrast of gray-scale values are given in an input image. Alternatively, leveraging user interactions would be a promising approach for solving this color-breeding artifacts. In this paper, we propose a novel edge-enhancing network for the regions of interest via simple user scribbles indicating where to enhance. In addition, our method requires a minimal amount of effort from users for their satisfactory enhancement. Experimental results demonstrate that our interactive edge-enhancing approach effectively improves the color-bleeding artifacts compared to the existing baselines across various datasets.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d9ff6453-d73f-46d6-b308-74e3c0a72528/x1.png)

1 Introduction

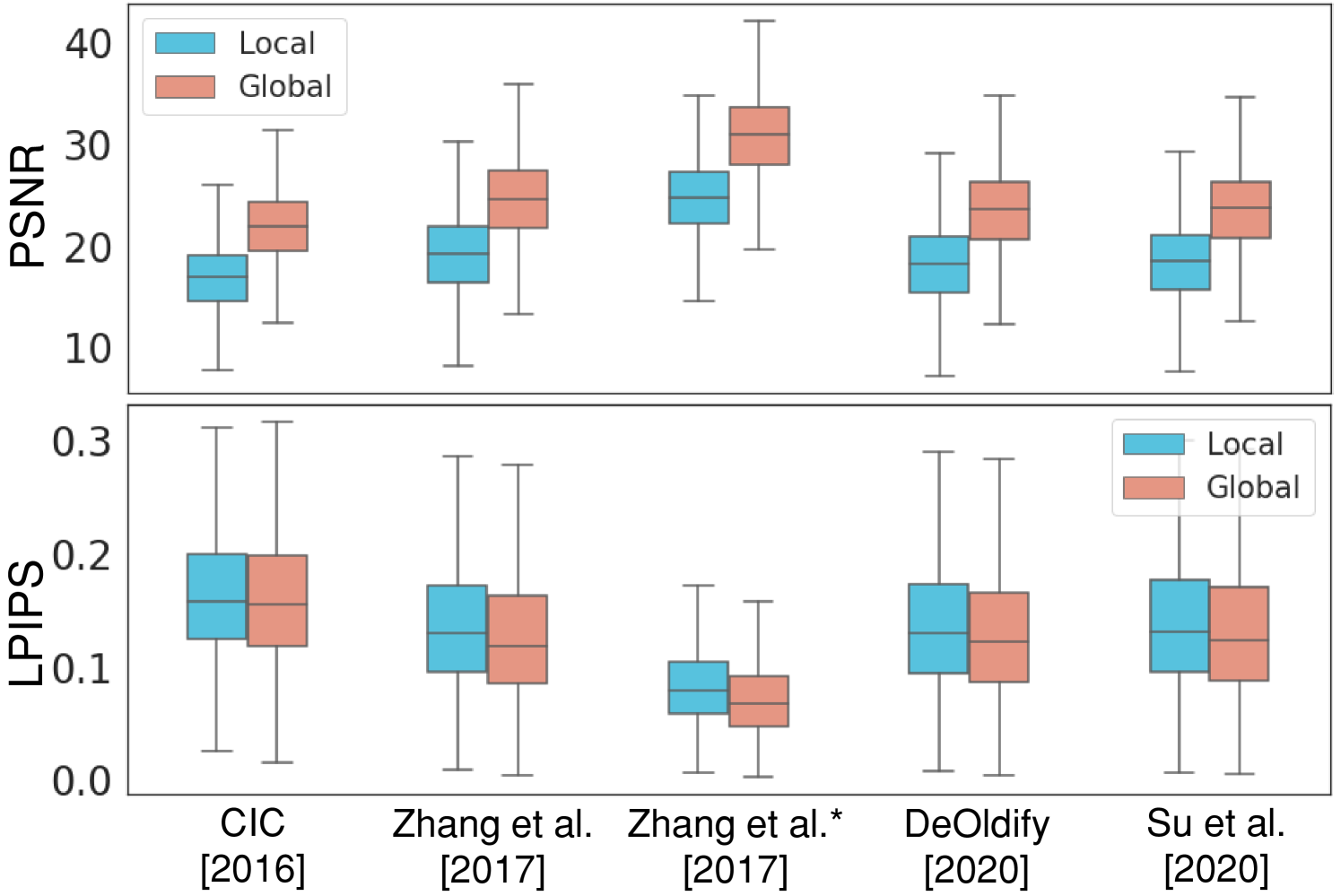

In recent years, deep image colorization methods [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11] have achieved a great performance on the generation of a realistic colorized image given a gray-scale or a sketch image. However, these methods often contain the color-bleeding artifact, a problematic color spreading across the adjacent objects. As shown in Fig. Deep Edge-Aware Interactive Colorization against Color-Bleeding Effects, the color-bleeding artifacts degrade the colorization quality particularly along the edges (red), compared to that in the whole image (blue). For the quantitative analysis, we also compare the quality of the colorized outputs between the existing methods [3, 12, 6, 13, 5] along the edges and the entire region in Fig. 2. The result demonstrates that existing colorization methods, including Zhang et al. [6] and Su et al. [5] which are widely used, suffer from the quality degradation particularly along the edges. Therefore, we believe there is still room for further improvement in the colorization task by resolving such color-bleeding problem.

Some approaches have addressed this issue by applying a sharpening filter on an image to colorize [14, 15, 16], or leveraging additional tasks, such as semantic segmentation, to enhance the boundaries of the semantic objects [5, 13]. However, their improvements are limited to edges appearing with strong gray-scale contrast or along the objects corresponding to the predefined categories. Color bleeding often occurs between the different objects that share similar gray-scale intensities and also along the edges that appear inside the objects, such as a zigzag pattern of a color pencil in Fig. 5. Therefore, tackling these bleeding edges at any desired locations still remains challenging, even in the recently proposed colorization methods. Moreover, evaluation of the color bleeding regions can be highly subjective depending on the users, as the plausible boundaries of the multi-modal colorized objects can differ by point of view.

Therefore, we propose a novel interactive edge enhancement framework that takes a direct user interaction annotating a color-bleeding edge. Unlike the previous approaches, our framework guarantees the reliable edge enhancement in any desired regions by utilizing user interactions. In addition, our interactive approach only requires users the minimum efforts for edge enhancement. We first apply a simple add-on edge-enhancing network, which takes both scribbles and an intermediate activation map of the colorization network as inputs. This network encodes an edge-corrective representation for its input activation map, particularly in the regions annotated by the scribbles, and adds it into the original activation map by a residual connection. Given this refined representation for the bleeding edges, the following layers of the colorization network can generate the edge-enhanced colorization output.

Experimental results demonstrate that our method has a remarkable performance over the baselines on diverse benchmark datasets, ImageNet [17], COCO-Stuff [19] and Place205 [20]. Moreover, we introduce a new evaluation metric for measuring how reliably the colorization methods obey the color boundaries. Also, we confirm that our approach takes the reasonable amount of time and efforts through the user-study, representing its potential in practical applications . Furthermore, we explore the applicability of our approach in the task of sketch colorization as well, by validating our method on Yumi’s Cells [21] and Danbooru [22] datasets.

2 Related Work

2.1 Unconditional and Conditional Colorization

Deep learning-based colorization methods [1, 3, 2, 16, 23, 24, 4, 5] have proposed fully automatic colorization approaches without any additional conditions. These unconditional models predict the most plausible colors for the given input image, even without any laborious color annotations provided by a user. By leveraging conditions given by the user, the recent colorization methods have accomplished multi-modal colorization. One of the widely used conditions is a reference image [11, 8, 9]. However, a reference image containing visually different contents from the gray-scale often induces implausible results. On the other hand, a color palette or a scribble hint given by a user directly designates the user’s preference on both color and region [25, 6, 7]. However, as we observed in Fig. 2, both unconditional and conditional colorization often fail to preserve the color edge, resulting in generating color-bleeding artifacts along the boundary regions.

2.2 Edge-Aware Colorization

Classical approaches [14, 15] address the bleeding artifacts in an optimization problem. Huang et al. [14] develop an edge detection algorithm for improving edges information during colorization. Yin et al. [15] propose a sharpening filter applied over a colorized image, alleviating the bleeding artifacts via optimization. Similarly, Zhao et al. [16] propose a joint bilateral filter which considers the adjacent color values for sharpening the edges. However, as these approaches mainly rely on the edges of input image, they still fail on the boundaries between the objects that share the similar gray-scale intensities. In contrast, our approach involves a direct interaction, which refines the boundary representation in any region regardless of its edge pixel values in the input image.

Recently, Su et al. [5] and Zhao et al. [13] leverage the semantic segmentation and object detection, respectively, when training a colorization model. These tasks enforce the network to learn the semantic objects, which may help to recognize the boundary of such objects to colorize. However, since both semantic segmentation and object detection recognize the objects defined by particular classes, the methods may still suffer from the color bleeding along the objects that are not classified by any categories. For example, in Fig. 5, the color-bleeding artifacts across the patterns inside the balloon would not be fully addressed by these methods, as such patterns are not classified into a certain class. In our approach, we leverage the scribbles that are independent of object types, allowing more general edge enhancement against these methods.

3 Proposed Method

3.1 Overall Workflow

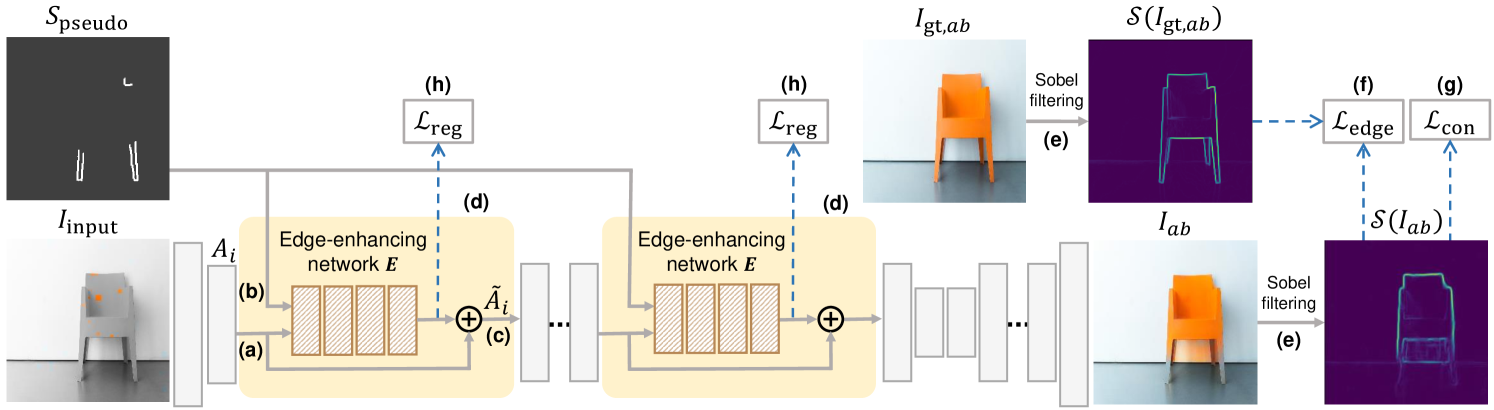

This section provides a detailed description of our proposed method, as described in Fig. 4. As an interactive approach, we design an edge-enhancing network to take scribbles, which annotates the color-bleeding edges, as additional inputs. These scribbles, which we term pseudo-scribbles, are automatically generated to approximate the real-world user hints (Section 3.2). The network also takes the intermediate activation maps of the colorization network, and refines them along the edges annotated by the pseudo-scribbles. Afterward, these refined representations pass through the following layers to obtain an edge-enhanced colorized output in the end (Section 3.3). To train our model, we introduce an edge-enhancing loss, which enforces the model to recover the clear edges close to those of the ground-truth image. Also, we propose both feature-regularization loss and consistency loss to prevent undesirable color distortion which debases the overall quality of edge-enhanced outputs (Section 3.4).

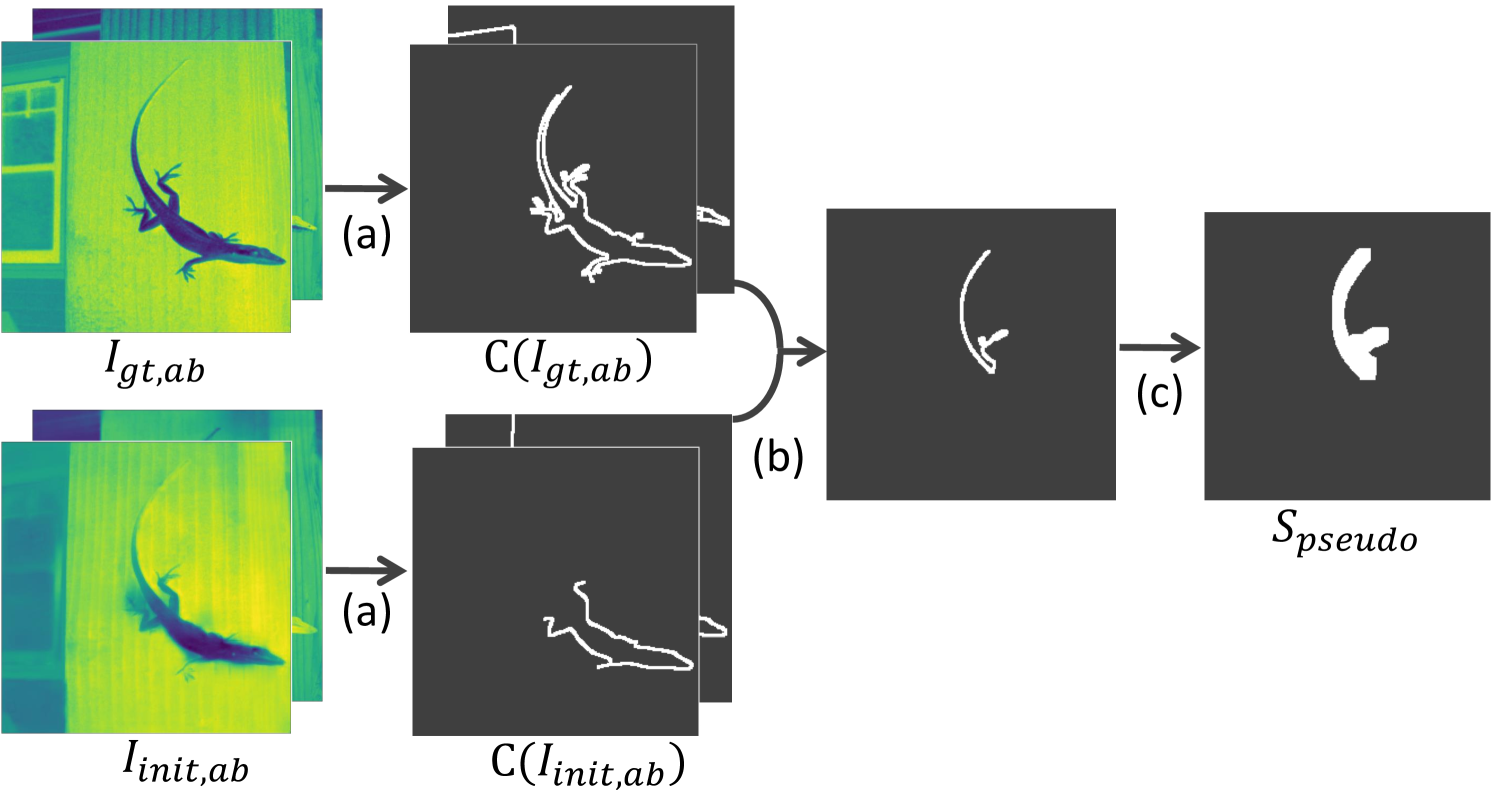

3.2 Pseudo-Scribble

Our approach requires a user-driven hint to be trained in an interactive manner, but collecting real-world user annotations needs a lot of time and human resources, which is prohibitive. Instead, we automatically generate the pseudo-scribbles , which emulate the real user scribbles, for each training image. The overall procedure of generating the is presented in Fig. 3. First, we obtain the color-bleeding outputs from a pre-trained colorization model. Afterwards, we apply the Canny edge detector [18] , a widely used edge detecting algorithm, onto the ab color channels of a ground-truth image and a , respectively (Fig. 3 (a)). Then, we can obtain the binary maps and which represent the edges.

By selecting one of the edges that appears in , but not in , we can have a single edge where the baseline fails to preserve boundary as clearly as does (Fig. 3 (b)). Note that while a gecko’s tail is shown to be selected in (b), other scribbles can be chosen in the training as well, such as its paw. Afterward, to better approximate a real user’s hint, the are formed to be thick and coarse enough to ) be easy to draw and ) contain the bleeding boundary. To this end, we apply a width transformation that randomly modifies the width of the selected edge between 1 and 11 pixels (Fig. 3 (c)).

3.3 Edge-Enhancing Network

We apply an edge-enhancing network to refine the intermediate representations of a colorization network, correcting the erroneously spread colors across the boundaries. This network encodes the corrective representations from both scribbles and the intermediate features as inputs and adds them to the original features with the residual connection. Suppose that we want to modify an activation map , where is the set of intermediate activation maps from different encoder layers of the colorization model (Fig. 4 (a)). To obtain the scribbles, we generate a by the procedure described in Section 3.2 and downscale it to match the spatial resolution of the activation map from the -th layer (Fig. 4 (b)). Then, and are concatenated to provide an input for edge-enhancing network . Given the concatenated tensor, we can obtain an activation map representing the correction of to alleviate color-bleeding artifacts. Therefore, by applying a residual connection with , (Fig. 4 (c)) refined activation map is calculated as

| (1) |

where is the proposed edge-enhancing network and a concatenation.

We apply the edge-enhancing networks to the encoder because the edge-enhancing performance is empirically better when applying our network E to the encoder than the decoder. Detailed comparisons on the qualitative results of the network E applied in the encoder and decoder layer are provided in Section D. To encourage edge refinement in both low- and high-level representations, we apply multiple edge-enhancing networks in both shallow and deep layers of the encoder (Fig. 4 (d)).

| Kernel Size | Methods | ImageNet ctest [17] | COCO-Stuff [19] | Place205 [20] | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| LPIPS | PSNR | LPIPS | PSNR | LPIPS | PSNR | |||||

| K=7 | CIC [3] | 0.248 | 13.281 | 0.247 | 13.368 | 0.254 | 13.577 | |||

| DeOldify [12] | 0.250 | 13.234 | 0.251 | 13.059 | 0.227 | 14.258 | ||||

| Zhang et al. [6] | 0.246 | 13.248 | 0.206 | 14.755 | 0.219 | 14.815 | ||||

| +Ours | 0.217 | 13.919 | 0.192 | 15.037 | 0.211 | 15.104 | ||||

| Zhang et al. [6]∗ | 0.208 | 14.966 | 0.158 | 17.456 | 0.171 | 17.530 | ||||

| +Ours∗ | 0.177 | 16.041 | 0.143 | 17.953 | 0.161 | 17.906 | ||||

| Su et al. [5]∗ | 0.185 | 16.393 | 0.187 | 15.971 | 0.194 | 17.032 | ||||

| +Ours∗ | 0.177 | 16.507 | 0.176 | 16.188 | 0.187 | 17.098 | ||||

| K=Full | CIC [3] | 0.172 | 21.001 | 0.164 | 21.456 | 0.153 | 21.873 | |||

| DeOldify [12] | 0.159 | 21.433 | 0.149 | 21.985 | 0.156 | 21.933 | ||||

| Zhang et al. [6] | 0.148 | 21.981 | 0.135 | 22.729 | 0.138 | 22.846 | ||||

| +Ours | 0.147 | 22.026 | 0.134 | 22.729 | 0.138 | 22.845 | ||||

| Zhang et al. [6]∗ | 0.086 | 27.202 | 0.080 | 27.681 | 0.087 | 27.697 | ||||

| +Ours ∗ | 0.085 | 27.559 | 0.078 | 27.955 | 0.087 | 27.935 | ||||

| Su et al. [5]∗ | 0.091 | 26.211 | 0.089 | 26.050 | 0.090 | 27.414 | ||||

| +Ours∗ | 0.091 | 26.291 | 0.088 | 26.233 | 0.089 | 27.486 | ||||

3.4 Objective Functions

Edge-Enhancing Loss. Inspired by the gradient difference loss (GDL) [26] which sharpens the video prediction, we propose an edge-enhancing loss for enhancing the edges in a target region. This loss enforces an edge-enhancing network to generate the refined activation maps that enhance the edges of to be close to those of (Fig. 4 (f)). To obtain the edge map, we utilize the Sobel filter, a differentiable edge extracting filter, onto the CIE ab channels of images, obtaining both horizontal and vertical derivative approximations of color intensities (Fig. 4 (e)).

The resulting color gradient is formally written as

| (2) |

where and are horizontal and vertical Sobel filters which convolve with the given image, and returns the gradient magnitude of them. Our proposed edge-enhancing loss can be written as

| (3) |

where denotes a set of coordinates whose values are non-zero in a binary mask . only activates a set of pixels within certain distance from the target edge, i.e., .

Feature-Regularization Loss. We wish for our proposed method to improve the edges while maintaining the original performance of the colorization network. Therefore, we introduce a feature-regularization loss (Fig. 4 (h)) to the output of the edge-enhancing network . This encourages our network to learn optimal edge enhancement while avoiding the excessive perturbations in the network activation maps. This loss is formulated as

| (4) |

where denotes an index of a layer to be revised and a concatenation.

Consistency Loss. While our proposed network enhances the gradient of colors around the given edges, it can unintentionally induce color distortions in the undesirable regions, i.e., outside of the target edges. Therefore, we design an additional constraint, named consistency loss (Fig. 4 (g)), to prevent these unnecessary changes. This loss further optimizes our network to learn the refinements only in the desired regions. penalizes the unfavorable changes of our enhanced output from the initial colorized output , only in the regions where we wish for the colors to remain. As the binary mask mentioned above indicates the regions for the colors to be changed by edge enhancement, we apply this loss on the pixels whose values of are zero. This is enabled by multiplying on each channel. This loss can be formulated as

| (5) |

where denotes a set of coordinates whose values are non-zero in a binary mask .

In summary, the overall objective function for training the edge-enhancing network is defined as

| (6) |

where , and are hyperparameters, and is the number of layers with the edge-enhancing network.

3.5 Implementation Details

In our experiments, we use the colorization networks introduced in Zhang et al. [6] and Su et al. [5] as our backbone colorization models. Zhang et al. first proposes an interactive colorization approach that takes color hints, achieving state-of-the-art performance over the existing conditional methods. Su et al. achieves superior performance in an unconditional setting by leveraging an object detection module for an instance-level colorization. We empirically confirm that applying our framework to the baselines taking explicit color hints results in better optimized edge-enhancing networks, compared to training on unconditional ones. Therefore, similar to Zhang et al., we re-implement Su et al. to take local color hints as additional inputs, which is not available in the original paper. Further details are provided in Section I.

| Method | Cluster Discrepancy Ratio | |||||||

|---|---|---|---|---|---|---|---|---|

|

|

|

||||||

| CIC [3] | 0.383 | 0.401 | 0.381 | |||||

| DeOldify [12] | 0.437 | 0.445 | 0.441 | |||||

| Zhang et al. [6] | 0.385 | 0.391 | 0.377 | |||||

| + Ours | 0.502 | 0.521 | 0.473 | |||||

| Zhang et al. [6]∗ | 0.418 | 0.421 | 0.402 | |||||

| + Ours ∗ | 0.543 | 0.547 | 0.508 | |||||

| Su et al. [5]∗ | 0.336 | 0.325 | 0.336 | |||||

| + Ours ∗ | 0.394 | 0.398 | 0.371 | |||||

4 Experiments

Baselines. We compare our proposed model with various colorization methods, including both unconditional and conditional ones. Unconditional baselines include CIC [3], DeOldify [12], and Zhang et al. without color hints. For conditional baselines, we utilize Zhang et al. and Su et al. with local hints, as mentioned in Section 3.5.

Dataset. The experiments are conducted with dataset including ImageNet [17], COCO-Stuff [19] and Place205 [20], which are generally used in colorization tasks.

Evaluation Measure. For evaluation, we assess the performance of our proposed method and other prior models by utilizing two measures, peak signal-to-ratio (PSNR) and learned perceptual image patch similarity (LPIPS) [27]. In addition, we newly propose a metric named cluster discrepancy ratio (CDR), which is designed to measure the degree of color-bleeding effects.

4.1 Cluster Discrepancy Ratio

Although PSNR and LPIPS are generally used for evaluating the colorization performance in a rich literature [3, 6, 5, 4, 2, 9, 11, 16], they are essentially based on the color difference between a generated image and a ground-truth one. Therefore, a colorized image that contains well-preserved edges but different colors from the ground-truth may be underrated by these two metrics. Note that this image would appear even more realistic compared to a bleeding image with similar colors. This specific failure case is described with an example and its scores in Section C.

To compensate for this concern, we propose a novel evaluation metric that measures the discrepancy of color clusters grouped by the super-pixels defined by a simple linear iterative clustering method [28]. The super-pixels have their cluster assignments based on color similarity. Inspired by this, we can perform binary classification on whether two adjacent pixels with different cluster assignments in the ground-truth still have different color values in the colorized outputs, especially along the edges. Specifically, for the pixel in a set of coordinates along the boundary of the ground-truth , we identify whether the adjacent pixel within kernel size have different cluster assignments from that of . For those who have different cluster assignments from , the cluster index of , we define a set that consists of their coordinates. Then, in the generated outputs , we count the number of adjacent pixels that belong to and have the same cluster assignment as . The number indicates how many pixels are from different clusters in the , but share the same colors in the , which corresponds to the color-bleeding artifacts. Therefore, the super-pixel-based cluster discrepancy ratio can be written as

| (7) |

where denotes a set of coordinates for the pixels of edges, and a cluster assignment given to the super-pixels of the and . All possible shifts within the kernel size are described as

| (8) |

4.2 Qualitative Results

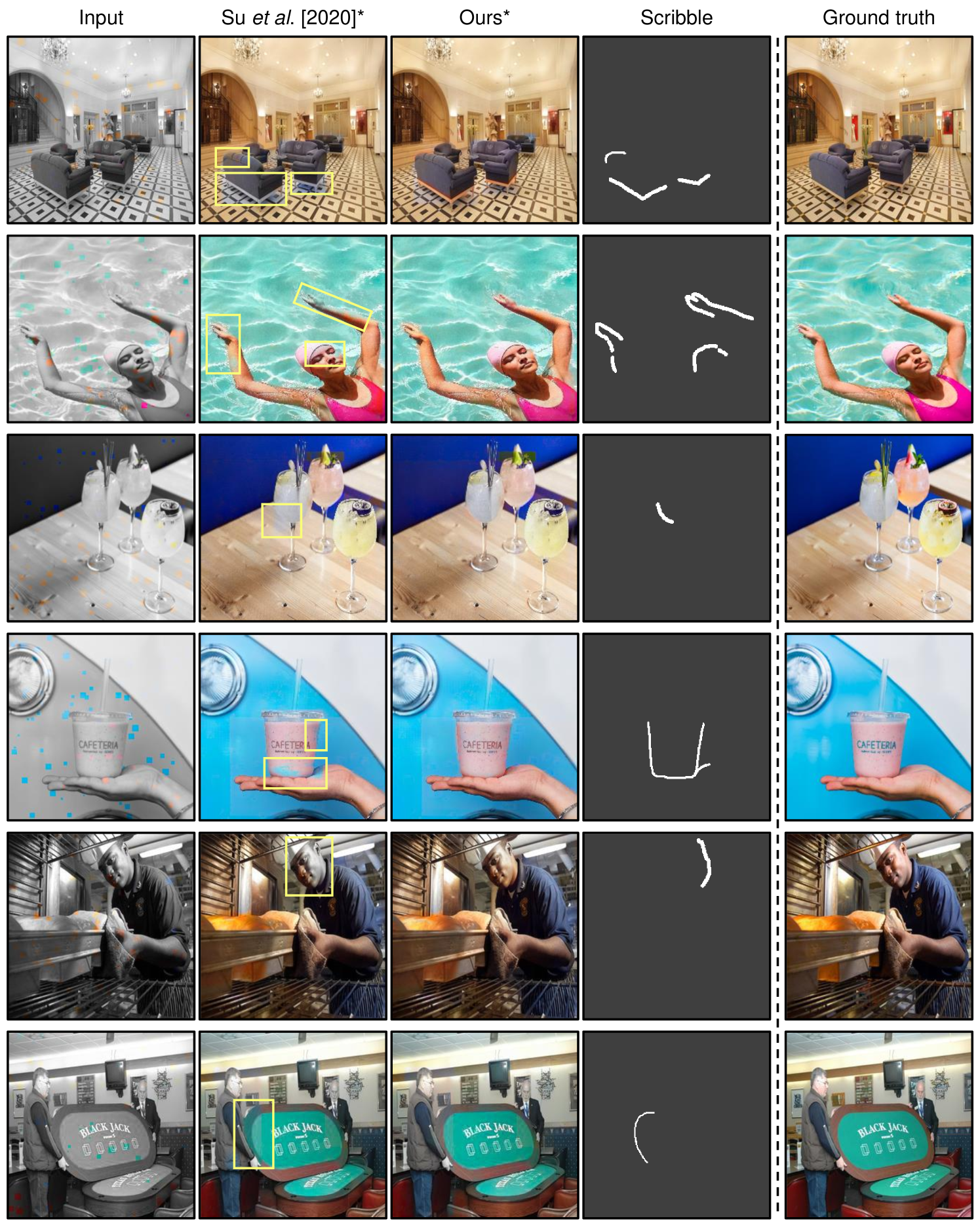

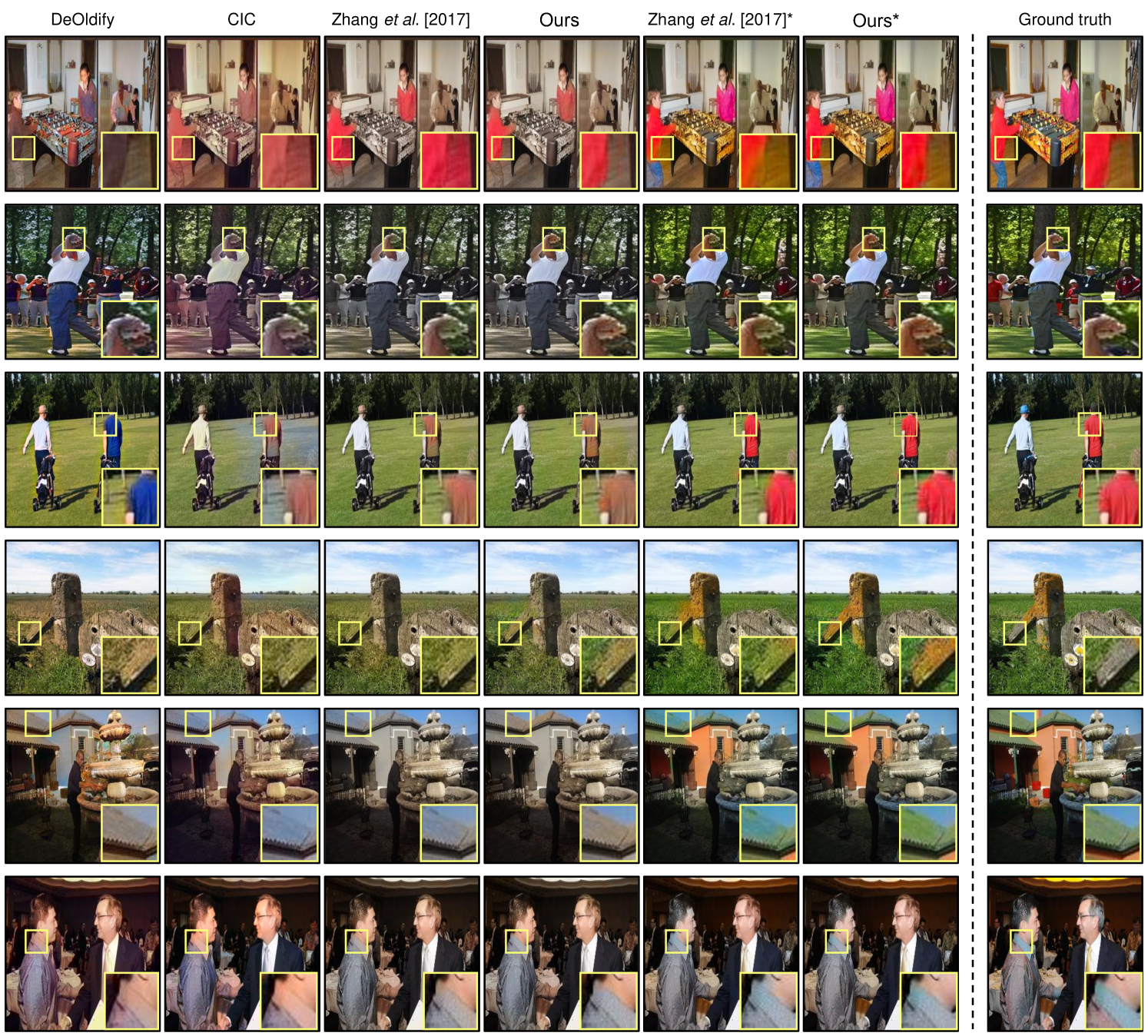

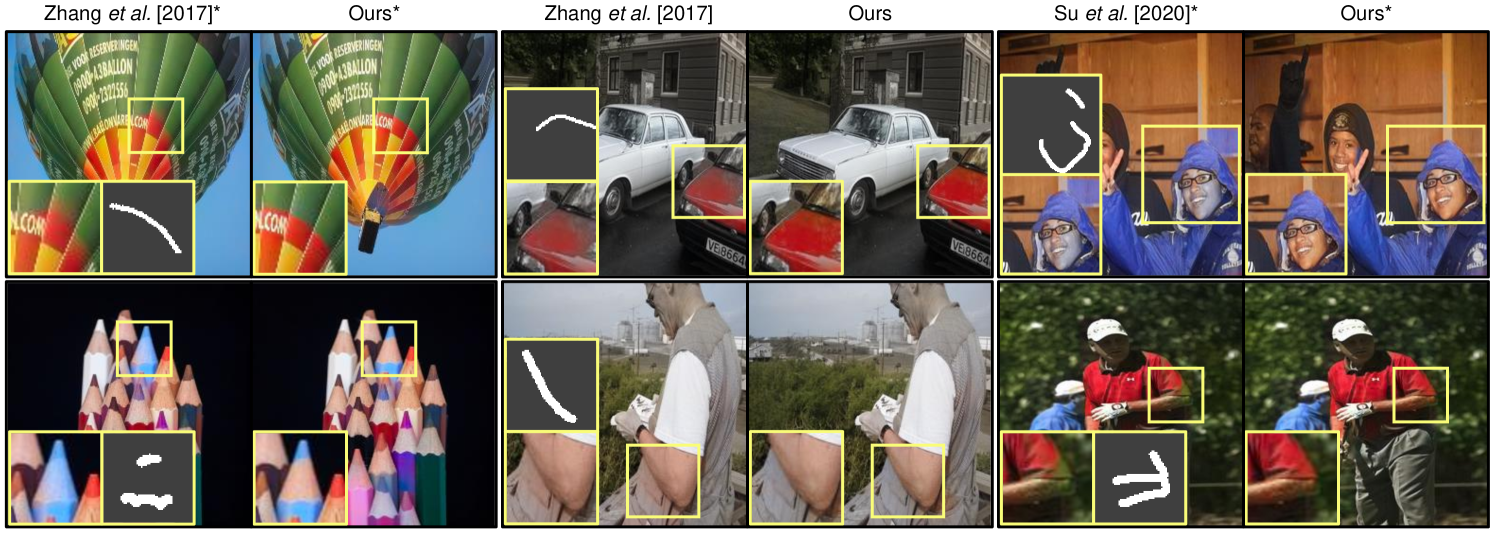

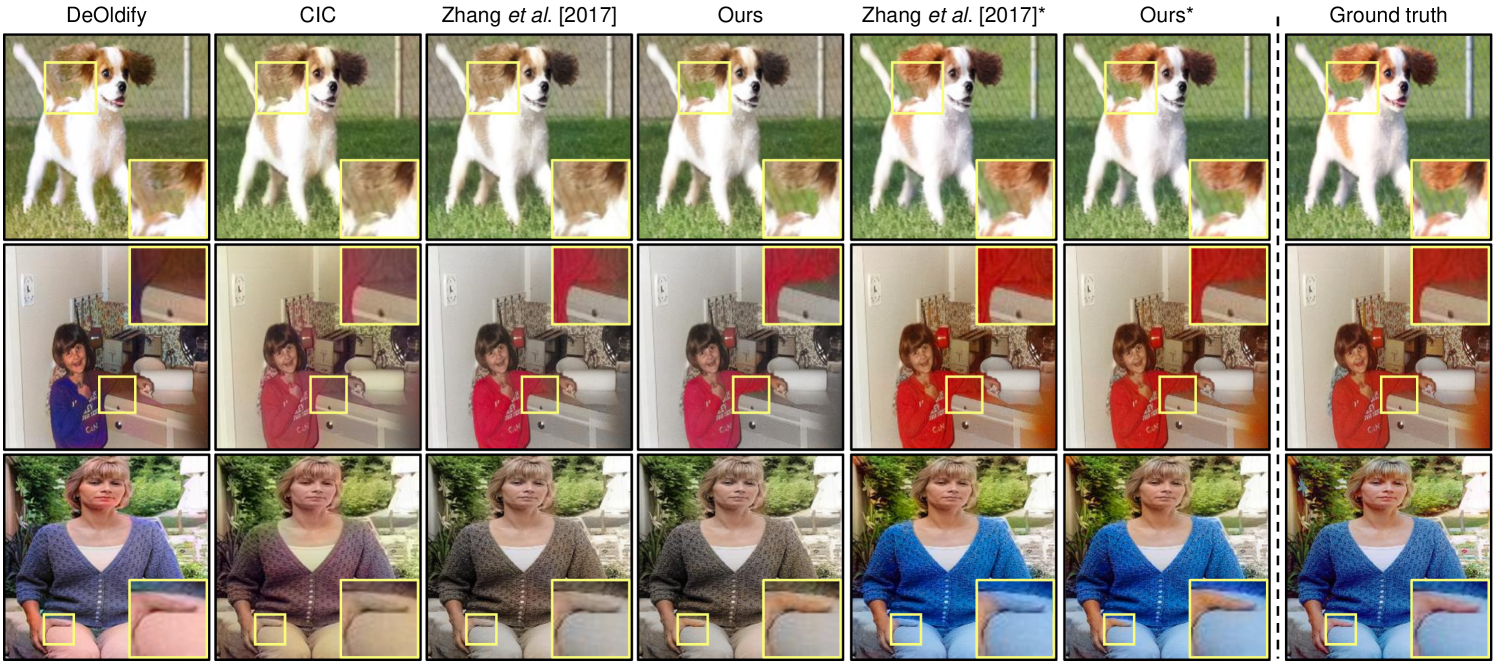

In Fig. Deep Edge-Aware Interactive Colorization against Color-Bleeding Effects, we visualize the images having color-bleeding artifacts from the conditional colorization model of Zhang et al. [6] and their enhanced results using our proposed method. This demonstrates that our approach robustly corrects the bleeding boundaries even when roughly drawn scribbles are given. In addition, multiple edges can be enhanced in a single feed-forward when their corresponding scribbles are given at once. Fig. 5 provides additional qualitative examples of edge enhancement in our approach applied to both the conditional and unconditional model of Zhang et al., and conditional of Su et al. [5] In Fig. 6, colorized images have the color-bleeding region for the baseline models. Comparing with other approaches, our method refines the coarse region. We present additional qualitative results of our approach with these two baselines in Figs. 15 and 16.

4.3 Quantitative Comparison

As the improvement in the colorization outputs induced by our approach arises in a local region along the edges, we present the results of PSNR and LPIPS measured in these particular locations, as well as in a global region. To conduct a local evaluation, we randomly sample from the test samples of each dataset, which contain the regions of color-bleeding, as explained in Section 3.2. Afterward, we report the local scores measured within the kernel size of along the edge pixels of those . For global evaluation, we report the scores calculated on the entire region, specifying a kernel size as Full. To accommodate the scribbles similar to the real hints in our evaluation, we provide with random widths ranging from 1 to 5 pixels for edge enhancement. Table 1 shows that our model effectively advances the colorization performance of two baselines, outperforming the existing methods. As bleeding effects are mostly mitigated in the local regions, large improvements are observed when the evaluation regions are localized along the edges. In addition, Table 2 presents the CDR measured for both our method and the baselines. Our method achieves a higher score against other baselines, demonstrating its superiority in colorizing the adjacent instances with different colors.

In Table 3, we ablate , , and width augmentation technique to analyze their effectiveness quantitatively. When we ablate , overall performance on PSNR, LPIPS, and CDR is slightly degraded, which is mainly due to the color distortion in the wrong regions. Our method without results in degraded scores of PSNR and LPIPS while obtaining the best score in CDR. Since suppresses the excessive perturbations in the refined feature maps of our edge-enhancing network, removing this loss causes an excessive edge enhancement (e.g., saturated colors along the edge) as well as undesirable color distortions in the entire region. As we ablate the width augmentation for the in the training, our approach achieves the best score in CDR, while PSNR and LPIPS score become even worse than Zhang et al. This implies that our edge-enhancing network tends to excessively enhance the color boundaries when unseen thick scribble is provided in the test time, ruining the overall colorization quality. Therefore, width augmentation plays a critical role in learning a robust color enhancing, invariant to the width of a given scribble. We support this claim with the qualitative results of the models with these objective functions ablated in Section B. In summary, our proposed method with every proposed objective function and augmented achieves an optimal performance of edge enhancement.

4.4 Verification for Labor-Efficient Interaction

We believe that our approach provides fast and reliable interactions, which help users to correct the color bleeding in an intuitive manner. To verify its usefulness with regard to labor efficiency, we conduct a user study with 13 novice participants using a user interface that we provide. Each participant is given five randomly selected colorized images that contain the color-bleeding artifacts. They are instructed to enhance the images by identifying color-bleeding areas and drawing scribbles until they obtain satisfactory results. On average, finding color-bleeding areas and drawing scribbles take and seconds per image, and each participant draws scribbles to enhance an image. The resultant improvement of PSNR is from to and LPIPS from to , indicating that participants provide meaningful edges for enhancement. These results demonstrate that our method have potentials to be applied in practical applications. We provide additional analysis on the robustness of our method across different users in Section A.

5 Experiments on Sketch Colorization

In this section, we further explore the potentials of our method in sketch colorization as well. Compared to the gray-scale image, the sketch image contains a set of thin lines that explicitly define the semantic boundaries between objects. However, as shown in the Fig. 7, color-bleeding artifacts across these lines are easily observed, which indicates that the model still fails to preserve the boundary even when they contain edges in the input image. The qualitative results in the columns denoted as “ours” in Fig. 7 demonstrate that our method performs robust edge preservation in the sketch colorization as well. We train and evaluate our method and the baselines on comic domain dataset including Yumi’s Cells [21] and Danbooru [22]. The implementation details about sketch colorization are described in Section I. To further demonstrate the effectiveness of our method on sketch colorization, we present quantitative and qualitative results in H.

6 Conclusion

In this paper, we propose a novel and simple approach to effectively alleviate the color-bleeding artifacts which significantly degrades the quality of colorized outputs. Our method improves the bleeding edges by refining the intermediate features of the colorization network in the desired regions via user-interactive scribbles as additional inputs. Extensive experiments demonstrate its outstanding performance and reasonable labor efficiency, manifesting its potentials in practical applications.

Acknowledgements This work was supported by Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government(MSIT) (No. 2019-0-00075, Artificial Intelligence Graduate School Program(KAIST)). This work was also supported by Institute of Information & communications Technology Planning & Evaluation(IITP) grant funded by the Korea government(MSIT) (No. 2021-0-01778, Development of human image synthesis and discrimination technology below the perceptual threshold) We thank all researchers at NAVER WEBTOON Corp.

References

- [1] Satoshi Iizuka, Edgar Simo-Serra, and Hiroshi Ishikawa. Let there be color!: Joint end-to-end learning of global and local image priors for automatic image colorization with simultaneous classification. Proc. the ACM Transactions on Graphics (ToG), 35, 2016.

- [2] Gustav Larsson, Michael Maire, and Gregory Shakhnarovich. Learning representations for automatic colorization. In Proc. of the European Conference on Computer Vision (ECCV), 2016.

- [3] Richard Zhang, Phillip Isola, and Alexei A Efros. Colorful image colorization. In Proc. of the European Conference on Computer Vision (ECCV), 2016.

- [4] Seungjoo Yoo, Hyojin Bahng, Sunghyo Chung, Junsoo Lee, Jaehyuk Chang, and Jaegul Choo. Coloring with limited data: Few-shot colorization via memory augmented networks. In Proc. of the IEEE conference on computer vision and pattern recognition (CVPR), 2019.

- [5] Jheng-Wei Su, Hung-Kuo Chu, and Jia-Bin Huang. Instance-aware image colorization. In Proc. of the IEEE conference on computer vision and pattern recognition (CVPR), 2020.

- [6] Richard Zhang, Jun-Yan Zhu, Phillip Isola, Xinyang Geng, Angela S. Lin, Tianhe Yu, and Alexei A. Efros. Real-time user-guided image colorization with learned deep priors. Proc. the ACM Transactions on Graphics (ToG), 36, 2017.

- [7] Lvmin Zhang, Chengze Li, Tien-Tsin Wong, Yi Ji, and Chunping Liu. Two-stage sketch colorization. Proc. the ACM Transactions on Graphics (ToG), 37:261:1–261:14, 2018.

- [8] Mingming He, Dongdong Chen, Jing Liao, Pedro V Sander, and Lu Yuan. Deep exemplar-based colorization. Proc. the ACM Transactions on Graphics (ToG), 37:47, 2018.

- [9] Bo Zhang, Mingming He, Jing Liao, Pedro V Sander, Lu Yuan, Amine Bermak, and Dong Chen. Deep exemplar-based video colorization. In Proc. of the IEEE conference on computer vision and pattern recognition (CVPR), 2019.

- [10] Y. Xiao, P. Zhou, Y. Zheng, and C. Leung. Interactive deep colorization using simultaneous global and local inputs. In The IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 1887–1891, 2019.

- [11] J. Lee, E. Kim, Y. Lee, D. Kim, J. Chang, and J. Choo. Reference-based sketch image colorization using augmented-self reference and dense semantic correspondence. In Proc. of the IEEE conference on computer vision and pattern recognition (CVPR), 2020.

- [12] Jason Antic. deoldify. https://github.com/jantic/DeOldify, 2020. [Online; accessed 07-11-2020].

- [13] Jiaojiao Zhao, Jungong Han, Ling Shao, and Cees Snoek. Pixelated semantic colorization. International Journal of Computer Vision, 128, 04 2020.

- [14] Yi-Chin Huang, Yi-Shin Tung, Jun-Cheng Chen, Sung-Wen Wang, and Ja-Ling Wu. An adaptive edge detection based colorization algorithm and its applications. In Proc. of the ACM International Conference on Multimedia, 2005.

- [15] Hui Yin, Yuanhao Gong, and Guoping Qiu. Side window filtering. In Proc. of the IEEE conference on computer vision and pattern recognition (CVPR), 2019.

- [16] Jiaojiao Zhao, L. Liu, Cees G. M. Snoek, J. Han, and L. Shao. Pixel-level semantics guided image colorization. ArXiv, abs/1808.01597, 2018.

- [17] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei. Imagenet: A large-scale hierarchical image database. In Proc. of the IEEE conference on computer vision and pattern recognition (CVPR), 2009.

- [18] J. Canny. A computational approach to edge detection. The IEEE Transactions on Pattern Analysis and Machine Intelligence (TPMAI), 8:679–698, 1986.

- [19] Holger Caesar, Jasper Uijlings, and Vittorio Ferrari. Coco-stuff: Thing and stuff classes in context. In Proc. of the IEEE conference on computer vision and pattern recognition (CVPR), 2018.

- [20] B. Zhou, A. Lapedriza, A. Khosla, A. Oliva, and A. Torralba. Places: A 10 million image database for scene recognition. The IEEE Transactions on Pattern Analysis and Machine Intelligence (TPMAI), 40:1452–1464, 2018.

- [21] NaverWebtoon. Yumi’s cells. https://comic.naver.com/webtoon/list.nhn?titleId=651673, 2019. [Online; accessed 22-11-2019].

- [22] Aaron Gokaslan Gwern Branwen. Danbooru2017: A large-scale crowdsourced and tagged anime illustration dataset. https://www.gwern.net/Danbooru2017, 2018. [Online; accessed 22-03-2018].

- [23] Man M. Ho, L. Zhang, Alexander Raake, and J. Zhou. Semantic-driven colorization. ArXiv, abs/2006.07587, 2020.

- [24] Patricia Vitoria, Lara Raad, and Coloma Ballester. Chromagan: Adversarial picture colorization with semantic class distribution. In The IEEE Winter Conference on Applications of Computer Vision, 2020.

- [25] Anat Levin, Dani Lischinski, and Yair Weiss. Colorization using optimization. Proc. the ACM Transactions on Graphics (ToG), 23:689–694, 2004.

- [26] Michaël Mathieu, Camille Couprie, and Yann LeCun. Deep multi-scale video prediction beyond mean square error. In Proc. the International Conference on Learning Representations (ICLR), 2016.

- [27] Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In Proc. of the IEEE conference on computer vision and pattern recognition (CVPR), 2018.

- [28] R. Achanta, A. Shaji, K. Smith, A. Lucchi, P. Fua, and S. Süsstrunk. Slic superpixels compared to state-of-the-art superpixel methods. The IEEE Transactions on Pattern Analysis and Machine Intelligence (TPMAI), 34:2274–2282, 2012.

- [29] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In Proc. the International Conference on Learning Representations (ICLR), 2015.

- [30] Vinod Nair and Geoffrey E. Hinton. Rectified linear units improve restricted boltzmann machines. In Proc. the International Conference on Machine Learning (ICML), 2010.

- [31] Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proc. the International Conference on Machine Learning (ICML), 2015.

- [32] Holger WinnemöLler, Jan Eric Kyprianidis, and Sven C Olsen. Xdog: an extended difference-of-gaussians compendium including advanced image stylization. Computers & Graphics, 36:740–753, 2012.

This supplementary material complements our paper with additional experimental results and their analysis. First, Section A presents how insensitive our edge-enhancing method is across the different real-world users. Then, qualitative results of the ablation study are presented in Section B, followed by an explanation about the analysis on our proposed metric CDR, with an example in Section C. In Section D, we provide the qualitative comparisons between our approach applied in encoder and decoder layers as well as their analysis. As an interactive colorization approach, we construct our own user interface, where users can draw scribbles with adjustable widths for edge enhancement. Section E describes this tool with the step-by-step demonstration. Furthermore, we provide additional quantitative and qualitative results of our method applied to two baselines (Zhang et al. [6] and Su et al. [5]) over various datasets, in Sections F and G. Moreover, Section H contains both quantitative results and qualitative examples of edge enhancements in sketch colorization, complementary to Section 5. Lastly, Section I provides the implementation details, such as the settings for training, network architecture, and hyper-parameters for edge-extracting modules in the generation of .

Appendix A Variance of Edge Enhancement across Users

To verify the robustness of the proposed method against ambiguous scribbles across different users, we measure the improved PSNR, LPIPS, and CDR on the enhanced colorization outputs obtained by different users. To this end, among the color-bleeding edges that were used in the user study (Section 4.4), we selected the scribbles for the edges that were enhanced by at least four different participants in our user study. For each edge, we calculated the improvement of these evaluation scores in the local regions near the scribbles drawn by the different users, following the same evaluation procedure in Section 4.4. The resulting mean and standard deviation are , , and for PSNR, LPIPS and CDR, respectively. These results indicate that our method achieves consistent improvement under various scribbles drawn by different users. In addition, we provide a qualitative example of these results in Fig. 8 with their evaluation scores. Our method robustly improves the color-bleeding edges given varying styles of the user scribbles.

Appendix B Ablation Studies on Width Augmentation and Consistency Loss

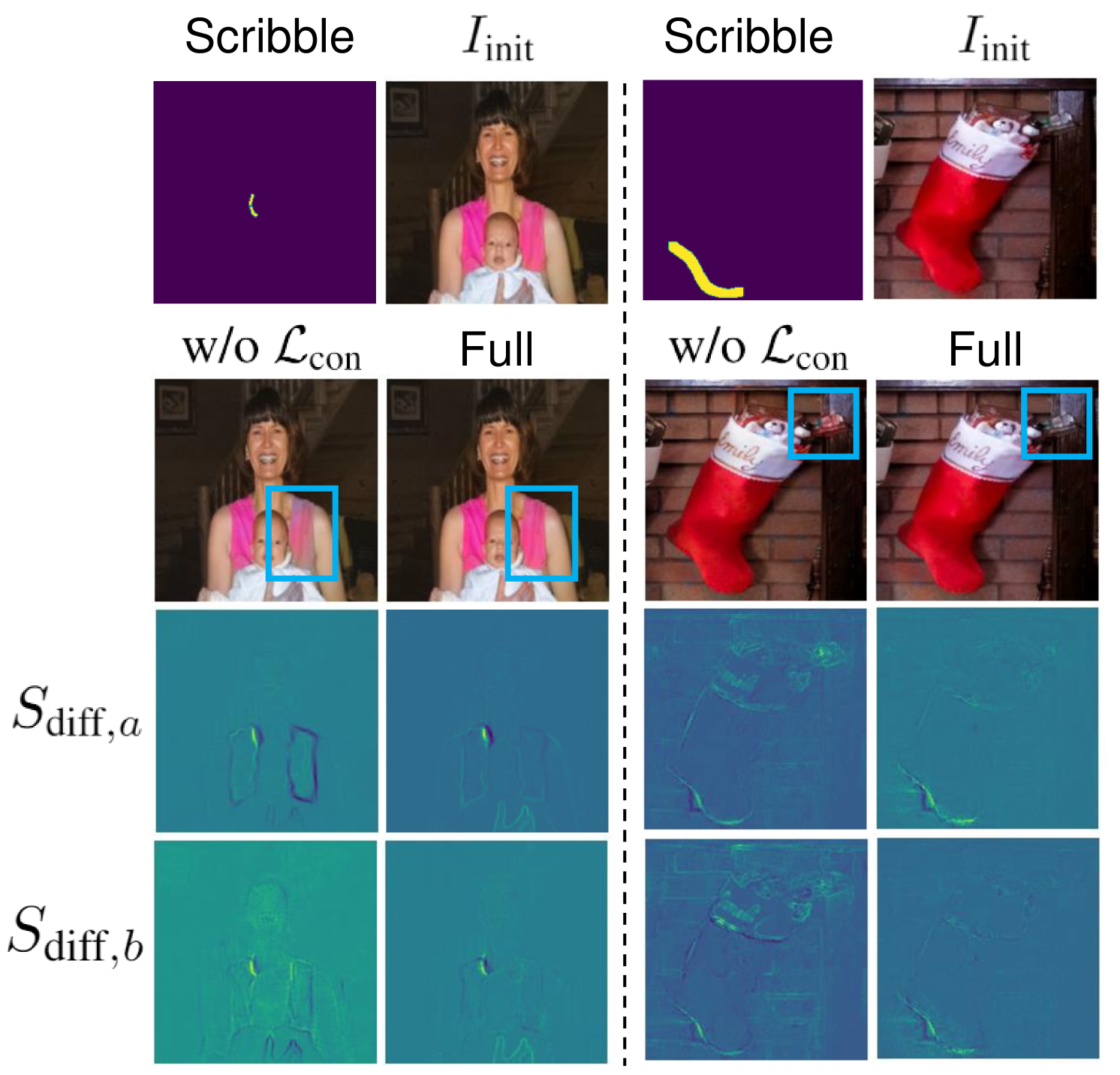

This section provides the qualitative results of ablation studies on the scribble width augmentation and the consistency loss , described in the Section 3.2 and 3.4, respectively. In Fig. 9, the first column shows a binary map of user-driven scribble with its width varying from 1 to 11 pixels. The columns named and represent how the edge values are changed in CIE ab channels. and are obtained by subtracting from , where approximates the edges, as described in Eq. 2, is an initial colorized output by a backbone network and is a refined output by our method.

The fourth column (w/o Aug) presents the generated outputs of the model trained without width augmentation in a training phase, while the seventh column (Full) contains the outputs with augmentation. As the width of scribbles w increases, especially when w becomes larger than , the width of changed edges and become thick as well. This results in an excessive increase of edges in channels, producing extremely vivid colors (e.g., red) along the given boundaries. In contrast, our model trained with augmentations maintains the increase of enhanced edges regardless of given scribble’s widths, robustly generating the plausible color corrections for all possible scribbles. This allows the users to provide their interactions without giving much effort to drawing sharp thin scribbles.

Fig. 10 compares the qualitative results of our model trained with and without . As explained in Section 3.4, enforces our model not to generate unintentional color changes outside of the target edges. Therefore, the model trained without this objective function tends to produce unnecessary changes of colors in the wrong regions. As shown in third and fourth rows, and of the model with shows more sparse changes of pixels, compared to that without . Ablation of causes color distortions, such as washed-out colors or unintended color changes, illustrated in the images with blue bounding boxes. For example, a woman with a pink vest contains a washed-out color on her right side of the vest. This can be observed in the , specifically in the dark boundaries of the vest (decreased edges).

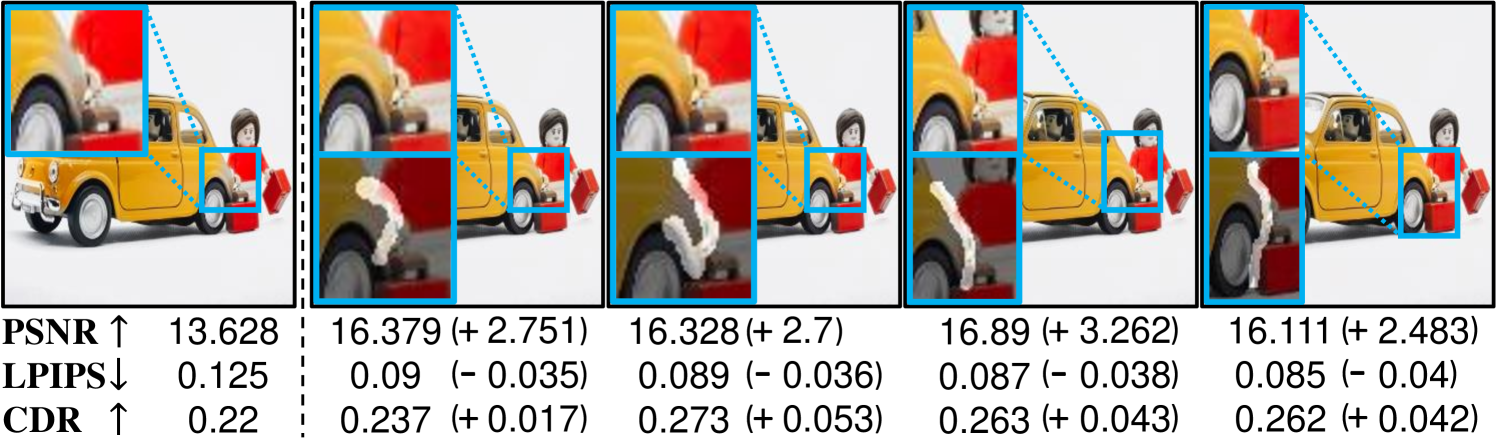

Appendix C Analysis on Cluster Discrepancy Ratio

This section provides a specific example that demonstrates the necessity of our proposed metric, i.e., CDR. As mentioned in Section 4.1, this metric aims to cover the blind spot of two generally used metrics PSNR and LPIPS [27] in the colorization task. In Fig. 11, the first and the second columns are the ground-truth and colorized output with color-bleeding artifacts in the yellow box, respectively. is another example of colorized output with its bleeding edge enhanced by our proposed approach. Note that this is colorized with a different color from the ground-truth, enabled by providing user-interactive color hints. As contains the different colors from the ground-truth, unlike , it is shown to record lower PSNR and higher LPIPS score than . However, contains a clear color edge, while contains the color-bleeding effects, which make more visually favorable than . The results of these metrics on against represent that they often underrate the quality of realistic sharp images that contain different colors from ground-truth. Our metric, however, essentially evaluates whether the colors are different across the adjacent objects and less dependent on the ground-truth colors. Therefore, the discrepancy ratio achieves a higher score for compared to , showing that it is possible to robustly evaluate the color-bleeding artifacts.

Note that the sole use of our metric for evaluating colorization methods also reveals a bottleneck as mentioned in Section 4.3. More specifically, as our metric focuses on evaluating whether the colors are different between edges, saturated colors along the edges can be evaluated as favorable. Therefore, it is recommended to consider all of these metrics to fully evaluate the general colorization performance, including the edge preservation.

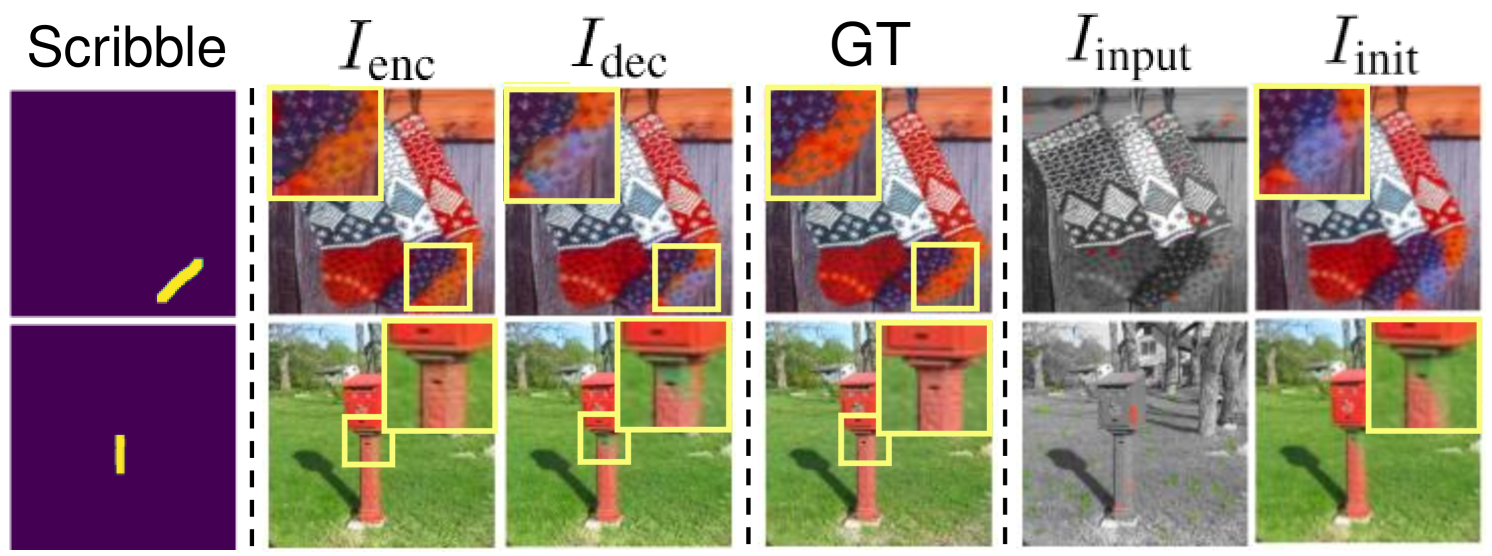

Appendix D Selecting Layers for Edge-Enhancing Network

As briefly described in Section 3.3, we empirically find that applying edge-enhancing network in the encoder layers achieves the intended results. To show the effectiveness of this choice of layers, Fig. 12 compares the results of applied in encoder and decoder layers, and . It is shown that successfully preserves the edges between the different objects, correcting the colors spreading in the regions. For example, by giving a vertical scribble, wrongly spread green pixels inside the fire hydrant are corrected to a red color. However, merely increases the color difference along the edges without removing the green pixels inside the edges. We believe that applied in the encoder layers refines the representations related to the edges, helping the following layers to spread the colors corresponding to the enhanced edges. In this regard, we choose the encoder layers of the backbone network as an appropriate option for integrating .

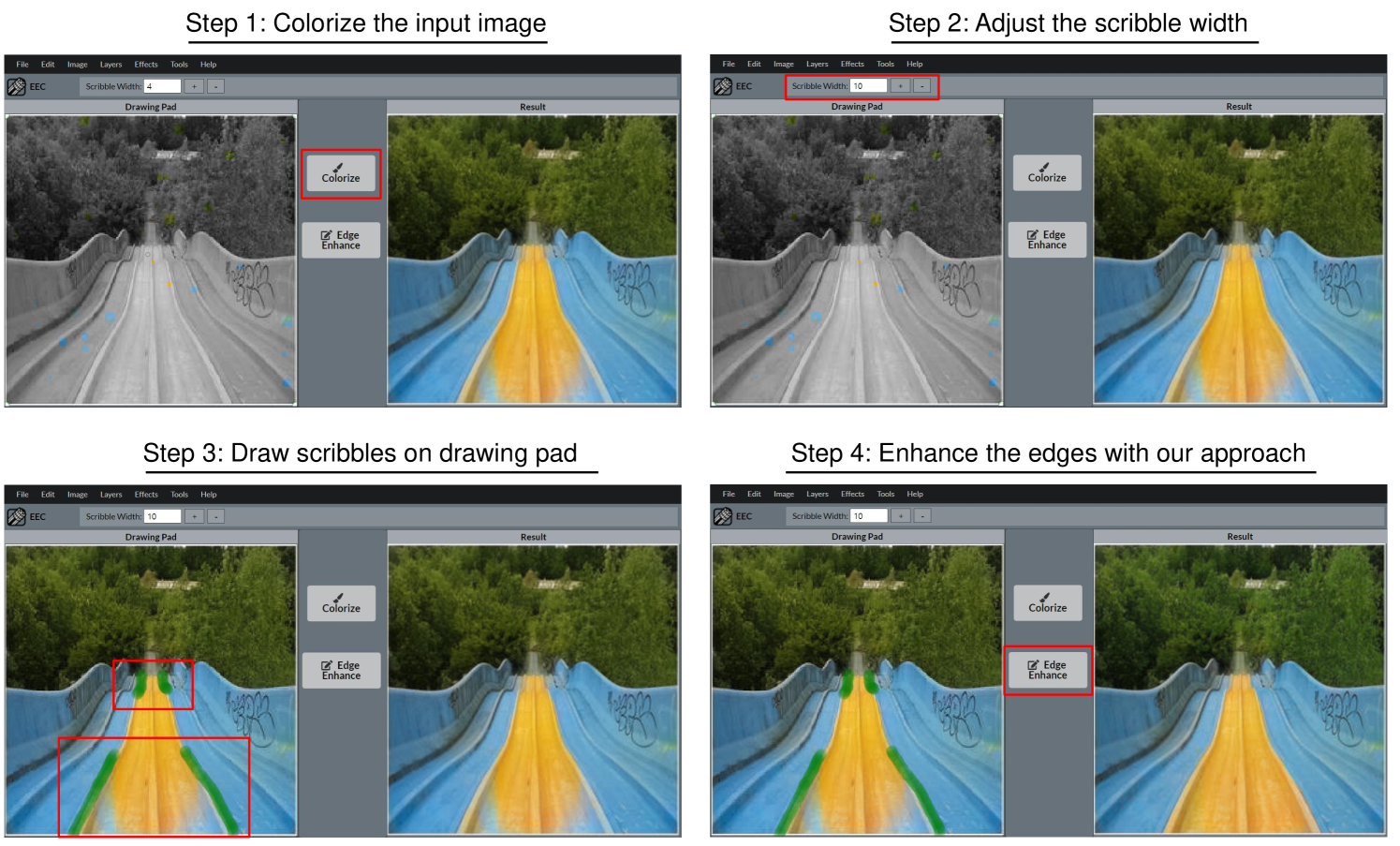

Appendix E User-Interaction Demonstration

This section provides a step-by-step demonstration of edge enhancement through our user interface tool, as illustrated in Fig. 13. First, the user uploads and colorizes an input image (left panel) with additional color hints by clicking the Colorize button. Then, the user adjusts the width of the scribble and draws on color-bleeding edges of the colorized image shown in the left panel. After applying the scribbles, clicking the Edge Enhance button forwards the drawn scribbles into our edge-enhancing network to correct the annotated edges and show the improved result in the right panel.

Appendix F Additional Quantitative Results

As described in Section 4, we apply CIC [3], DeOldify [12] and Zhang et al. [6] as our baseline models for unconditional colorization, and Zhang et al. [6], Su et al. [5] as our baseline for conditional colorization task. Table 4 contains the additional rows of quantitative comparisons of our model and baselines in the kernel size of and , complementary to Table 1. For the qualitative results, we demonstrate that our framework is applicable to Zhanget al., which is the most widely used conditional colorization method, and Su et al., which is a recently proposed colorization approach. Utilizing our approach on these backbone networks improves the general colorization quality over the various dataset, outperforming the existing baselines. As our approach significantly improves the local color-bleeding artifacts, it improves the applied baselines especially when evaluated near the edge regions (i.e., small kernel size).

Table 5 demonstrates the robustness of edge enhancing performance applied to Zhang et al. when the width of a given scribble varies from to pixel diameter. For the CDR, we evaluate it within the kernel size of along the given edges. We observe that our model robustly enhances the local colorization performance in every dataset, given any scribble widths. Similar to the Table 4, the difference of global score between our model and baselines becomes minor when averaging the scores over all the spatial dimensions.

| Kernel Size | Methods | ImageNet ctest [17] | COCO-Stuff [19] | Place205 [20] | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| LPIPS | PSNR | LPIPS | PSNR | LPIPS | PSNR | |||||

| K=7 | CIC [3] | 0.248 | 13.281 | 0.247 | 13.368 | 0.254 | 13.577 | |||

| DeOldify [12] | 0.250 | 13.234 | 0.251 | 13.059 | 0.227 | 14.258 | ||||

| Zhang et al. [6] | 0.246 | 13.248 | 0.206 | 14.755 | 0.219 | 14.815 | ||||

| +Ours | 0.217 | 13.919 | 0.192 | 15.037 | 0.211 | 15.104 | ||||

| Zhang et al. [6]∗ | 0.208 | 14.966 | 0.158 | 17.456 | 0.171 | 17.530 | ||||

| +Ours∗ | 0.177 | 16.041 | 0.143 | 17.953 | 0.161 | 17.906 | ||||

| Su et al. [5]∗ | 0.185 | 16.393 | 0.187 | 15.971 | 0.194 | 17.032 | ||||

| +Ours∗ | 0.177 | 16.507 | 0.176 | 16.188 | 0.187 | 17.098 | ||||

| K=15 | CIC [3] | 0.221 | 14.377 | 0.237 | 13.937 | 0.219 | 14.841 | |||

| DeOldify [12] | 0.218 | 14.399 | 0.217 | 14.362 | 0.226 | 14.382 | ||||

| Zhang et al. [6] | 0.209 | 14.662 | 0.204 | 14.975 | 0.218 | 14.962 | ||||

| +Ours | 0.195 | 14.963 | 0.191 | 15.144 | 0.210 | 15.204 | ||||

| Zhang et al. [6]∗ | 0.181 | 16.278 | 0.155 | 17.708 | 0.169 | 17.739 | ||||

| +Ours∗ | 0.159 | 17.113 | 0.142 | 18.144 | 0.161 | 18.054 | ||||

| Su et al. [5]∗ | 0.166 | 17.335 | 0.169 | 17.000 | 0.177 | 17.889 | ||||

| +Ours∗ | 0.159 | 17.477 | 0.159 | 17.225 | 0.170 | 18.004 | ||||

| K=23 | CIC [3] | 0.221 | 14.394 | 0.220 | 14.596 | 0.227 | 14.635 | |||

| DeOldify [12] | 0.226 | 14.171 | 0.216 | 14.431 | 0.225 | 14.458 | ||||

| Zhang et al. [6] | 0.210 | 14.696 | 0.204 | 14.975 | 0.217 | 15.056 | ||||

| +Ours | 0.195 | 14.987 | 0.191 | 15.180 | 0.210 | 15.282 | ||||

| Zhang et al. [6]∗ | 0.164 | 17.195 | 0.154 | 17.806 | 0.169 | 17.864 | ||||

| +Ours∗ | 0.147 | 17.868 | 0.141 | 18.204 | 0.161 | 18.147 | ||||

| Su et al. [5]∗ | 0.154 | 17.945 | 0.156 | 17.611 | 0.164 | 18.567 | ||||

| +Ours∗ | 0.148 | 18.081 | 0.148 | 17.840 | 0.158 | 18.689 | ||||

| K=Full | CIC [3] | 0.172 | 21.001 | 0.164 | 21.456 | 0.153 | 21.873 | |||

| DeOldify [12] | 0.159 | 21.433 | 0.149 | 21.985 | 0.156 | 21.933 | ||||

| Zhang et al. [6] | 0.148 | 21.981 | 0.135 | 22.729 | 0.138 | 22.846 | ||||

| +Ours | 0.147 | 22.026 | 0.134 | 22.729 | 0.138 | 22.845 | ||||

| Zhang et al. [6]∗ | 0.086 | 27.202 | 0.080 | 27.681 | 0.087 | 27.697 | ||||

| +Ours ∗ | 0.085 | 27.559 | 0.078 | 27.955 | 0.087 | 27.935 | ||||

| Su et al. [5]∗ | 0.091 | 26.211 | 0.089 | 26.050 | 0.090 | 27.414 | ||||

| +Ours∗ | 0.091 | 26.291 | 0.088 | 26.233 | 0.089 | 27.486 | ||||

| Kernel Size | Datasets | Metrics | Zhang et al. [6]∗ | Scribble Width | ||||

|---|---|---|---|---|---|---|---|---|

| 1 | 3 | 5 | 7 | 9 | ||||

| K=7 | ImageNet ctest [17] | LPIPS | 0.208 | 0.182 | 0.178 | 0.174 | 0.166 | 0.203 |

| PSNR | 14.966 | 15.877 | 16.040 | 16.022 | 16.303 | 14.862 | ||

| CDR | 0.376 | 0.411 | 0.436 | 0.451 | 0.471 | 0.447 | ||

| COCO-Stuff [19] | LPIPS | 0.211 | 0.185 | 0.181 | 0.174 | 0.168 | 0.180 | |

| PSNR | 14.964 | 15.790 | 15.938 | 16.074 | 16.210 | 15.594 | ||

| CDR | 0.372 | 0.417 | 0.446 | 0.465 | 0.478 | 0.459 | ||

| Place205 [20] | LPIPS | 0.223 | 0.205 | 0.201 | 0.193 | 0.190 | 0.201 | |

| PSNR | 15.091 | 15.714 | 15.877 | 16.036 | 16.100 | 15.540 | ||

| CDR | 0.330 | 0.389 | 0.409 | 0.431 | 0.438 | 0.425 | ||

| K=Full | ImageNet ctest [17] | LPIPS | 0.086 | 0.085 | 0.085 | 0.085 | 0.084 | 0.087 |

| PSNR | 27.202 | 27.555 | 27.558 | 27.510 | 27.554 | 27.293 | ||

| CDR | – | – | – | – | – | – | ||

| COCO-Stuff [19] | LPIPS | 0.080 | 0.078 | 0.078 | 0.078 | 0.078 | 0.079 | |

| PSNR | 27.677 | 27.964 | 27.959 | 27.953 | 27.939 | 27.880 | ||

| CDR | – | – | – | – | – | – | ||

| Place205 [20] | LPIPS | 0.087 | 0.087 | 0.087 | 0.087 | 0.087 | 0.087 | |

| PSNR | 27.697 | 27.945 | 27.931 | 27.926 | 27.897 | 27.877 | ||

| CDR | – | – | – | – | – | – | ||

Appendix G Additional Qualitative Results

Figs. 15 and 16 present the qualitative examples of edge enhancement applied to Zhang et al. [6], and Su et al. [5], respectively. The second and third columns of the figures contain the inference outputs of the baselines and their enhanced images using our method, respectively. Their scribbles, which are used to enhance the images, are visualized in the fourth column. Yellow boxes in the second column represent color-bleeding areas. All the images are collected from https://unsplash.com and Place205 [20].

The qualitative comparisons between our method, especially applied to Zhang et al. [6], and other baselines, are presented in Fig. 17. While all the baselines in the figure contain the color-bleeding artifact in the regions bounded with a yellow box, our approach improves the edges, as shown in the fourth and sixth columns. Yellow boxes indicate the color-bleeding areas, and we provide the enlarged views of the areas on the right lower corner in the images. The images are selected from COCO-Stuff [19] and Place205 [20].

Appendix H Sketch Colorization

In Section 5, we demonstrate that our approach has the potential to enhance the edges in the sketch colorization task as well. In this study, we utilize the two datasets, Yumi’s Cells [21] and Danbooru [22]. Table 6 demonstrates that applying edge-enhancing network on the colorization model, newly trained for sketch colorization task, can also improve the performance, especially along the edge regions. Additional qualitative results complementary to the Fig 7 are illustrated in Fig. 14.

| Kernel Size | Methods | Yumi’s Cells [21] | Danbooru [22] | ||||||

|---|---|---|---|---|---|---|---|---|---|

| LPIPS | PSNR | CDR | LPIPS | PSNR | CDR | ||||

| K=7 | Zhang et al. [6]∗ | 0.240 | 10.738 | 0.231 | 0.322 | 11.970 | 0.191 | ||

| +Ours∗ | 0.226 | 11.287 | 0.298 | 0.317 | 12.335 | 0.214 | |||

| K=15 | Zhang et al. [6]∗ | 0.217 | 12.194 | – | 0.374 | 9.829 | – | ||

| +Ours∗ | 0.210 | 12.487 | – | 0.371 | 10.092 | – | |||

| K=23 | Zhang et al. [6]∗ | 0.219 | 12.211 | – | 0.290 | 12.146 | – | ||

| +Ours∗ | 0.211 | 12.531 | – | 0.286 | 12.448 | – | |||

| K=Full | Zhang et al. [6]∗ | 0.153 | 19.280 | – | 0.204 | 18.698 | – | ||

| +Ours∗ | 0.154 | 19.291 | – | 0.201 | 18.975 | – | |||

Appendix I Implementation Details

This section provides the training details for edge enhancement, detailed architecture of edge-enhancing network, and hyper-parameters used in generation, complementary to Section 3.5. Afterward, hyper-parameters of the super-pixel methods for our proposed CDR are explained as well. Additionally, the implementation details of the sketch colorization are explained, complementary to the Section 5.

Training Details for Edge-Enhancing Network We train and evaluate our model with a fixed size of 256 256 images for every dataset. We apply three edge-enhancing networks on the and encoder layers of Zhang et al. [6]. In Su et al. [5], instance-level colorization branch takes the patches of images cropped by their bounding boxes after the object detection module. These object-level colorized outputs are fused into a full-image colorization branch via fusion modules, predicting the final colors. For the full-image branch, we provide the pseudo-scribbles for the full image, as we do in Zhang et al. To accommodate our interactions into this object-level colorization branch as well, we crop the pseudo-scribbles as well as the images by their bounding boxes and give them to the branch as inputs. Therefore, our edge-enhancing networks applied in this branch take the cropped scribbles corresponding to the cropped patches of color-bleeding artifacts, generating the refined activations to be fused into the full-image branch. Both three edge-enhancing networks are applied on the and encoder layers of instance-level and full-image network, respectively. In the training phase, we set the hyper-parameters for each loss function as 50 and in Zhang et al., and 50 and 10 in Su et al. The width of the augmentation module for the is randomly sampled from 1 to 10 pixels in the training phase. We use Adam [29] optimizer with and . The learning rate is initially set to 0.01 and is gradually decayed for every epoch.

Edge-Enhancing Network Architecture Edge-enhancing network consists of 4 convolutional layers, each of which contains a 33 convolution filter with a stride of 1, ReLU [30] and Batch normalization layer [31].

Pseudo-Scribble Generation For generating the plausible approximation of real user-provided scribbles, we tune the hyper-parameters of the Canny edge extractor [18]111Canny edge-extractor consists of 5 steps, which include noise reduction, Sobel filtering, non-maximum suppression, double threshold and edge tracking. on every dataset, including ImageNet [17], COCO-Stuff [19], Place205 [20], Yumi’s Cells [21] and Danbooru [22], written in Table 7. We report Sigma (), high-threshold (THh), low-threshold (THl), and threshold gaps (THgap) of each dataset. Sigma stands for the standard deviation of the Gaussian kernel for the noise reduction step. Both THh and THl denote the threshold values for the double threshold step after the non-maximum suppression. We apply different high thresholds for and to select highly probable edges for the bleeding artifacts. In other words, we apply a rigid criterion on the ground-truth image for extracting the edges compared to the generated outputs, resulting in severely weak edges from this comparison. We denote this threshold gap as THgap.

Cluster Discrepancy Ratio We use simple linear iterative clustering [28] (SLIC) method to assign each pixel with a cluster assignment based on its colors and textures. To focus on the color information, we run the SLIC on channels of both ground-truth and colorized outputs. We set the number of clusters to , compactness to , and sigma to for each image. After we compute each ratio from the and channels and average them to produce the final score.

Sketch Colorization We adjust the part of architecture and the training details of Zhang et al. [6] to enable its colorization with local hints in the sketch colorization task. We replace ) the input image from gray-scale to sketch image, and ) output channel size from 2 for channels to 3 for RGB outputs. To obtain the sketch image from the color image of each dataset, we first apply Gaussian blurring ( to remove noisy edges and utilize a widely used edge extractor algorithm called XDoG [32], as used in Lee et al. [11] for the sketch colorization task. Afterward, we obtain 54,317 training images and 6,036 test images for Yumi’s Cells [21], and 7,014 and 380 images for Danbooru [22]. Then, we apply the training details proposed in the original paper [6], such as providing color hints and objective functions. Note that the objective functions for color prediction are adjusted from minimizing the difference of channels to RGB channels between generated output and the ground-truth. To train our model on this network, we convert the both generated RGB outputs images and ground-truth into Lab images to apply our proposed objective edge-enhancing loss.

| Datasets | THh | THl | THgap | |

|---|---|---|---|---|

| ImageNet [17] | 1.2 | 0.7 | 0.2 | 0.4 |

| COCO-Stuff [19] | 1.2 | 0.7 | 0.2 | 0.4 |

| Place205 [20] | 1.2 | 0.7 | 0.2 | 0.4 |

| Yumi’s Cells [21] | 1.3 | 0.7 | 0.2 | 0.4 |

| Danbooru [22] | 0.7 | 0.8 | 0.2 | 0.5 |