Deep-Ensemble-Based Uncertainty Quantification in

Spatiotemporal Graph Neural Networks for Traffic Forecasting

Abstract

Deep-learning-based data-driven forecasting methods have produced impressive results for traffic forecasting. A major limitation of these methods, however, is that they provide forecasts without estimates of uncertainty, which are critical for real-time deployments. We focus on a diffusion convolutional recurrent neural network (DCRNN), a state-of-the-art method for short-term traffic forecasting. We develop a scalable deep ensemble approach to quantify uncertainties for DCRNN. Our approach uses a scalable Bayesian optimization method to perform hyperparameter optimization, selects a set of high-performing configurations, fits a generative model to capture the joint distributions of the hyperparameter configurations, and trains an ensemble of models by sampling a new set of hyperparameter configurations from the generative model. We demonstrate the efficacy of the proposed methods by comparing them with other uncertainty estimation techniques. We show that our generic and scalable approach outperforms the current state-of-the-art Bayesian and number of other commonly used frequentist techniques.

1 Introduction

Traffic forecasting is a foundational component of an intelligent transportation system. Precise forecasting across normal and extreme traffic conditions is crucial to improving traffic control, mitigating congestion, and resolving other traffic-related issues. Recently, data-driven deep learning approaches [1, 2, 3] have received increased attention in traffic forecasting because of their impressive accuracy. However, uncertainty quantification (UQ) for traffic forecasting has received limited attention from the research community. UQ is essential for understanding the limitations of the predictive models and how to use the models in an active traffic management system.

Uncertainty in traffic forecasting can be decomposed into two categories: aleatoric uncertainty, which is irreducible uncertainty in the data, and epistemic uncertainty, which is uncertainty in the model [4]. Aleatoric uncertainty occurs because of the inherent variability in traffic data. For example, at a given time of day, the flow and speed vary greatly. Or, faulty sensors may cause aleatoric uncertainty. This form of uncertainty is a data property, not a predictive model attribute. With aleatoric uncertainty, gathering more data in the same manner or employing a better model cannot reduce it. In contrast, regions that are underrepresented in the training dataset (out-of-distribution generalization [5]) can cause a mismatch between model estimation and data distribution. This situation creates epistemic uncertainty. In this case, epistemic uncertainty may be reduced by collecting more data. Proper estimation of both the aleatoric and epistemic uncertainty for traffic forecasting is critical for understanding the limitations of the model. Furthermore, determining these uncertainty measures can be useful for detecting sensor locations where the data is most uncertain and taking measures to improve the data collection.

For decades, traffic forecasting techniques have been extensively investigated using integrated autoregressive moving average [6], Kalman filters [7], support vector machines [8, 9], and artificial neural networks [10, 11]. Recently, deep learning techniques have received attention because of the impressive forecasting accuracy compared with statistical approaches and traditional machine learning approaches. Researchers have explored recurrent neural networks (RNNs) and long short-term memory networks [12] and convolutional neural networks [13, 14] for short-term traffic forecasting. Recently, graph neural networks such as spatiotemporal graph convolutional networks [1], diffusion convolutional recurrent neural networks (DCRNN) [2], and graph multi-attention networks [3] have achieved state-of-the-art performance by capturing the spatiotemporal dynamics of the road network. However, without proper quantification of the uncertainty, forecast accuracy is not trustworthy for using the model in practice.

By estimating the aleatoric and epistemic uncertainty separately for traffic forecasting models, insight into whether the predictive uncertainty comes from the model or is inherently present in the data can provide insight into how to best use the model. For example, the 90% quantile or 10% quantile of the aleatoric uncertainty measure provide will predictions for the worst and best traffic conditions in a given location. This information can then be used to inform rerouting algorithms and other proactive traffic management strategies. On the other hand, if for example, the structure of the road network changes, the distribution of the training and test data may no longer represent the associated changes in the traffic patterns. This will increase the model’s epistemic uncertainty and capture the model’s lack of confidence in the forecasting results (out-of-distribution generalization [5]). In the absence of additional data for training, the epistemic uncertainty can be used to establish trustworthiness and to know when and where the model predictions are reliable.

We focus on DCRNN [2], one of the most promising approaches to traffic forecasting. It has achieved state-of-the-art performance by capturing both spatial and temporal dependencies of the traffic network through a combination of graph and recurrent neural networks. Our proposed approach for uncertainty estimation in DCRNN is comprised of the following steps:

-

•

The DCRNN is configured to model the distribution of traffic data using simultaneous quantile regression (SQR).

-

•

A scalable Bayesian optimization method is used to tune the hyperparameters of the DCRNN model with simultaneous quantile regression.

-

•

A set of high-performing hyperparameter configurations from the hyperparameter optimization is used to fit a generative model; this captures the joint probability distribution of the high-performing hyperparameter values. A new set of hyperparameter configurations is then sampled from the generative model, and the corresponding DCRNN model are trained simultaneously.

-

•

A set of high performing models is selected for ensemble construction and a variance decomposition approach is then used to estimate the aleatoric and epistemic uncertainties from the ensemble predictions.

The main contributions of the paper are:

-

•

A scalable approach to construct deep ensembles using Bayesian hyperparameter optimization and generative modeling,

-

•

A conceptually simple, yet effective, Gaussian assumption-free uncertainty quantification approach to quantify uncertainty of a DCRNN model,

-

•

The first demonstration of aleatoric and epistemic uncertainty estimation for short-term traffic forecasting, and

-

•

A demonstrated improvement over the state-of-the-art Bayesian DCRNN trained by stochastic gradient Markov Chain Monte Carlo for uncertainty estimation in DCRNN.

2 Diffusion Convolutional Recurrent Neural Network (DCRNN)

The DCRNN considers the road network as a weighted directed graph , where the set of nodes represents traffic measurement (sensor) locations, the set of edges represents the connectivity between the nodes, and the adjacency matrix () represents the proximity between the nodes. Given the graph and historical time series data of traffic metrics , DCRNN seeks to learn a function f(.) to forecast short-term traffic metrics for time steps given the past traffic metrics as input:

where is a vector of traffic metrics defined over vertices at time step .

DCRNN is an encoder-decoder neural network architecture with RNN layers that operates on a graph. It models the temporal dependency of the traffic using gated recurrent units (GRUs), a variant of RNN. Specifically, the matrix multiplications of the GRU cell are replaced by a diffusion-convolutional operation to model the spatial dependency of the road network. The modified GRU cell is defined as

| (1) |

where and represent the traffic state and final state at time , respectively; , , and are the update gate, reset gate, and cell state, respectively; denotes the diffusion-convolution operation; and and are parameters of the GRU cell. The diffusion-convolution operation on the graph is defined as

| (2) |

where is a maximum diffusion step; and are the in-degree and out-degree diagonal matrices, respectively; is the adjacency matrix; and and are the learnable filters for the diffusion process and reverse one, respectively.

The encoder takes the input adjacency matrix and time series data and maps the data into a latent representation. The decoder takes the latent representation and produces forecasts for future traffic for timestamps. In the training, DCRNN optimizes the following mean absolute error (MAE) loss using a minibatch stochastic gradient algorithm:

| (3) |

where and are, respectively, the observed and corresponding forecast values for the time step and sensor location; is the total timestamps for each sensor locations; and is the total number of sensor locations.

3 Methodology

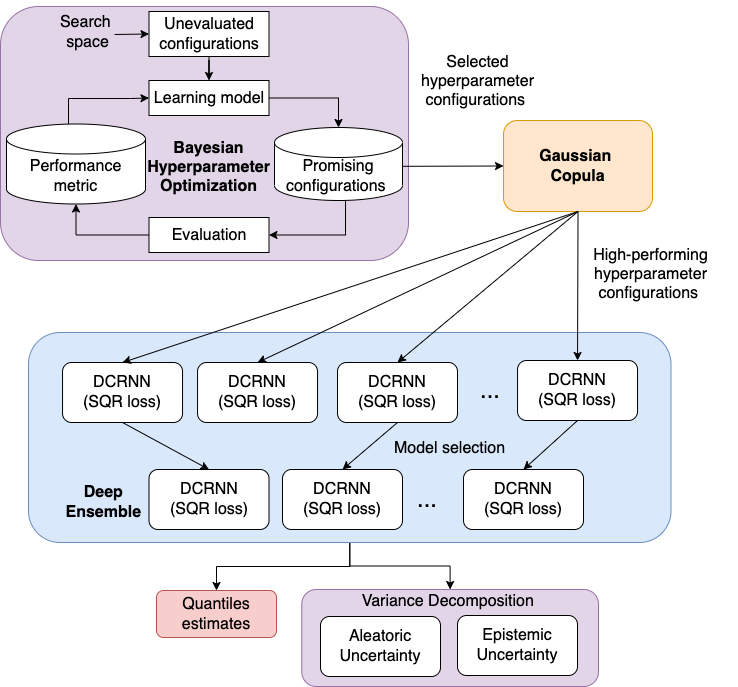

We focus on uncertainty quantification in DCRNN predictions. Our proposed method, DCRNN with simultaneous quantile regression (DCRNN-SQRUQ), seeks to estimate both aleatoric (data) and epistemic (model) uncertainties. Our methodology has four steps, as illustrated in Figure 1: (1) simultaneous quantile regression (SQR) loss for DCRNN, (2) hyperparameter optimization using scalable Bayesian optimization method, (3) high-performing hyperparameter generation using a Gaussian copula model, and (4) aleatoric and epistemic uncertainty estimation using variance decomposition for deep ensemble.

3.1 Simultaneous quantile regression

The first step of our approach is the adaptation of SQR in DCRNN to predict the quantiles of the forecasting traffic distribution.

Consider a typical regression setting consisting of input features and output variable taking real values . Let be the cumulative distribution function of ; the quantile of can be calculated by the quantile distribution function , where . The quantile regression approach seeks to model that approximates the conditional quantile distribution function . Specifically, the quantile regression estimates the value of at a given quantile conditioned on the given values of input features . This estimation can be achieved by using the pinball loss function [15, 16]:

| (4) |

Building on this, the recently proposed SQR approach estimates all the quantiles simultaneously by solving the following optimization problem:

| (5) |

This optimization problem is solved by using minibatch stochastic gradient descent, where random quantile levels (uniform distribution) are sampled afresh for each training point and for each minibatch.

In DCRNN, for each sensor location and for each forecast time step, we seek to estimate the traffic metric at all quantiles conditioned on the past traffic metrics defined over sensor locations. This is achieved by replacing the MAE loss minimization with the SQR minimization. Applying Eq. 5 in Eq. 3, we get

| (6) |

The key advantages of SQR include well-calibrated prediction intervals; joint estimation of the quantiles that can eliminate the unacceptable crossing quantiles [17]; and the ability to model non-Gaussian, skewed, asymmetric, multimodal, and heteroskedastic noise in the data.

3.2 Scalable hyperparameter optimization

The second step of our approach is the use of a scalable hyperparameter search for tuning the hyperparameters of DCRNN.

DCRNN has several training hyperparameters, including batch size, a threshold max_grad_norm to clip the gradient norm to avoid exploring gradient problem of RNN [18], initial learning rate and learning rate decay, maximum diffusion steps, and architecture configurations (network topology parameters) such as number of encoder and decoder layers, number of RNN units per layers, and filter type (i.e., random walk, Laplacian). The hyperparameters are crucial in determining DCRNN’s forecasting accuracy.

To tune the hyperparameters of the DCRNN model with SQR, we use the DeepHyper [19] open source software that has a scalable Bayesian optimization (BO) method. Given the training and validation data, a neural network model, and the feasible set of values of the training hyperparameters, DeepHyper uses an asynchronous BO method seeks to minimize the validation error by repeatedly training the neural network model with various hyperparameter values. The DeepHyper BO uses an incrementally updated random forest model to learn the relationship between the hyperparameter values and their corresponding validation errors. The BO method uses the random forest model to predict the validation errors of the evaluated hyperparameter configurations along with the uncertainty associated with the predicted value. The BO search involves both evaluation of hyperparameter configurations, where the random forest model is most uncertain, and exploitation, focusing on hyperparameter configurations that are closer to the previously found high-performing configurations. The balance between exploration and exploitation is achieved by selecting an unevaluated hyperparameter configuration that minimizes the lower confidence bound () acquisition function:

| (7) |

where and are respectively the predicted estimate and the standard deviation of that estimate from the random forest model of the given unevaluated hyperparameter configuration sampled from the hyperparameter space and where is a user-defined parameter that controls the trade-off between the exploration and exploitation.

A key reason for choosing DeepHyper for the DCRNN hyperparameter tuning is scalability. The DeepHyper BO follows a single-manager multiple-workers parallization scheme, where the manager runs the search, generates hyperparameter configurations, and sends them to workers for evaluation; each worker is responsible for evaluating a hyperparameter configuration by training the neural network model with the given configuration, computing the validation error, and returning that value to the manager. The manager generates hyperparameter configurations for evaluation in an asynchronous manner: as soon as one or more workers returns the validation error, the manager uses the returned values to retrain the surrogate model within the BO and generates the next hyperparameter configuration for the worker. To enable simultaneous multiworker evaluations, the BO uses a constant liar scheme, where configurations are generated sequentially by refitting the random forest model with a lie for the validation error, given by a predicted value from the model itself, for the previously selected unevaluated hyperparameter configuration.

We use DeepHyper to tune the hyperparameters of DCRNN not only to find the best hyperparameter configuration with minimal validation error but also to extract a set of hyperparameter values with minimal validation errors for generative modeling. Moreover, we scale the hyperparameter search on an increasing number of compute nodes to see how scaling impacts the overall uncertainty estimation in DCRNN.

3.3 Generative modeling and ensemble training

The third step of our approach is generative modeling. We model the joint probability distribution of the high-performing hyperparameter configurations from the previous step and use the distribution for generating more high-performing hyperparameter configurations to train an ensemble of DCRNN models.

Copula is a statistical approach used to model the joint probability distribution of a given set of random variables by analyzing the dependencies between their marginal distributions. We use a multivariate Gaussian copula model [20] to learn the joint probability distribution between high-performing hyperparameter configurations. The copula model takes multiple marginal distributions of the hyperparameters and returns a multivariate distribution as follows:

| (8) |

where represents the cumulative distribution function (CDF) of a multivariate normal, with covariance and mean 0, and is the inverse CDF for the standard normal. This captures the joint probability distribution of the high-performing hyperparameter values such as learning rate, batch size, number of layers, and objective function.

The rationale for generative modeling through multivariate Gaussian copula is twofold. First, in hyperparameter search problems characterized by integer, real, and categorical hyperparameters, there are multiple high-performing regions; while BO cannot sample these regions uniformly, it can sample a few configurations from different high-performing regions. By selecting these high-performing configurations and fitting a Gaussian copula, we can sample from the sparsely sampled high-performing regions as well. Second, if we have to find more high-performing configurations from different regions directly with BO, then we have to run the search multiple times with different initialization; this process will be both computationally expensive and resource intensive.

Once the copula model is trained, we sample a number of hyperparameter configurations from and run multiple DCRNN training sessions with the sampled hyperparameter configurations. From these trained DCRNN models, we select a set of high-performing models for ensemble construction.

3.4 Variance decomposition for DCRNN ensembles

The fourth step of our approach is to use the ensemble of DCRNN models to estimate the traffic metrics and the associated aleatoric and epistemic uncertainty using variance decomposition.

Let be the ensemble of models. Previous studies have showed that if each model is configured to predict the mean and variance of an output distribution that is assumed to be Gaussian and if the model is trained with negative log likelihood loss [21], the empirical estimation of the mean and the total variance can be written as follows.

| (9) |

The total uncertainty quantified by is a combination of aleatoric and epistemic uncertainty, which is given by the mean of the predictive variance of each model in the ensemble and the predictive variance of the mean of each model in the ensemble.

Our proposed SQR-based DCRNN seeks to predict quantiles of the distribution as opposed to the mean and variance of the distribution. Thus it is more generic and free from Gaussian assumptions. We derive the empirical estimate of the median () and the total variance of the ensemble as

| (10) |

where and are the 33% and 66% quantiles of the output distribution, respectively.

4 Experimental results

In this section we extensively evaluate our proposed DCRNN-SQRUQ method and compare it with state-of-the-art UQ techniques proposed for DCRNN. We conduct experiments on the METR-LA dataset, which contains speed profiles, given in miles per hour (mph), of 207 sensor locations collected from the loop detector on the highways of Los Angeles County. It contains time series data from March 1, 2012, to June 30, 2012, aggregated at 5-minute intervals, for a total of 34,272 timestamps. From the 4-month data, 70% (first 12 weeks approx.) is used for training, 10% (1.7 weeks approx.) for validation, and 20% (3.4 weeks approx.) for testing. This dataset is widely used for benchmarking traffic forecasting techniques [22, 23, 24] including the recent state-of-the-art UQ estimation work [25]. The default hyperparameter configuration used for DCRNN is as follows: batch size – 64; filter type – dual/bidirectional random walk (captures both the upstream and the downstream traffic dynamic); number of diffusion steps – 2; RNN layers – 2; RNN units per layer– 64; optimizer – Adam; threshold for gradient clipping – 5; initial learning rate – 0.01; and learning rate decay – 0.1. The adjacency matrix of the graph connectivity has been built by using the driving distance between the sensor locations. The DCRNN model uses the past 60 minutes of time series data at each node of the graph to forecast traffic for the next 5, 10, 15, …, 60 minutes. To evaluate the performance, we use the same metrics used in the current state-of-the-art DCRNN UQ estimation work [25]. These metrics are the mean absolute error (MAE), mean interval score (MIS), and Interval score. MAE is defined as

| (11) |

where is the total number of sensor locations and is the number of timestamps for each sensor locations. MIS and interval scores are defined as

| (12) |

| (13) |

where is the total number of sensor locations and is the number of timestamps for each sensor locations. The estimated upper and lower quantiles and are the and quantile for the confidence interval , and is the observed value. For example, if , then the upper quantile is or 97.5%, the lower quantile is or 2.5%, and the confidence interval is or 95%.

Note that MIS defined in Eq. 12 penalizes for two factors: (1) a large interval width , and (2) the observed value being either higher or lower than the upper or lower quantiles. MIS focuses only on calculating intervals by examining the upper and lower quantiles. It does not taken into account the median/ mean prediction. For example, the upper quantile value is 63.33, the lower quantile value is 60.97, and the observed value is 58.87. Then, MIS is (63.33 - 60.97) + 2/0.05 (60.97 - 58.87) = 2.36 + 40* 2.1 = 82.36. If the observed value is 61.87, then the MIS is just the interval (63.33 - 60.97) = 2.36.

The MIS score measures how narrow a given confidence interval defined by the quantiles is and whether the ground truth is within the interval or not. Additional details on this score can be found in [26].

The experimental evaluation was conducted on a GPU-based cluster with 126 nodes. Each of them contains two 2.4 GHz Intel Haswell E5-2620 v3 CPUs and one NVIDIA Tesla K80 dual-GPU card (two K40 GPUs), enabling two model trainings per node. The code was implemented in TensorFlow 2.4 and used Python 3.7.0. We used SDV package (v0.14.0) [27] for fitting the GC models, where one-hot encoding was used for handling the categorical hyperparameter values.

For the hyperparameter search (HPS), we use the learning rate in the continuous range [.0001, 0.02], learning rate decay ratio in the continuous range [0.001, 0.02], filter type in the categorical range [Laplacian, random walk, dual random walk], batch size [8, 16, 32, 64, 128, 256], maximum diffusion steps [1, 2, 3, 4, 5], number of layers for the encoder [1, 2, 3, 4, 5], number of layers for the decoder [1, 2, 3, 4, 5], number of RNN units per layer [4, 5, 6,..,128], number of epoch [20,21,…,100], and threshold for gradient clipping [1,2,3,…,10] in the discrete range.

4.1 Impact of scaling hyperparameter search on ensemble accuracy

Here we show that scaling the HPS results in an increased number of high-performing hyperparameter configurations that can improve the quality of Gaussian copula model and the overall ensemble accuracy.

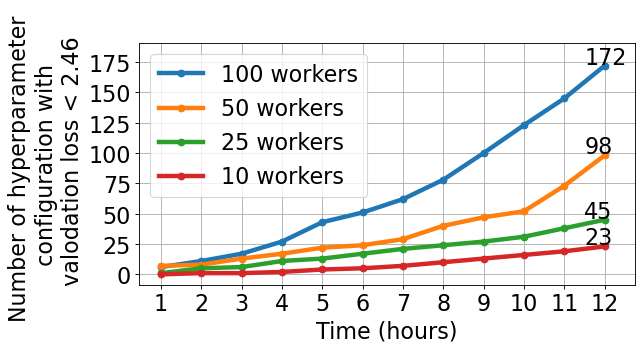

For the scaling experiments, we ran DeepHyper HPS runs with 10, 25, 50, and 100 workers, each with 12 hours of wall time as the budget. We note that each compute node in the cluster can run up to 2 workers simultaneously. These runs resulted in 49, 108, 203, and 453 model evaluations, respectively. The default hyperparameter configuration achieved a validation SQR loss of . Therefore, we consider the hyperparameter configurations as high performing when they have a validation loss less than .

Figure 2 shows the cumulative number of high-performing configurations obtained by DeepHyper as a function of search time. We observe that the number of high-performing configurations increases almost linearly with the increase in the number of workers. In particular, with 100 workers, the search achieves 50 high-performing configurations within 6 hours, whereas the search with 50 workers takes 10 hours to reach that number. In 6 hours, the 100-worker search finds more high-performing configurations than the 25- and 10-worker searches find. The number of high-performing configurations obtained by 10-, 25-, 50-, and 100-worker searches is 23, 45, 98, and 172, respectively.

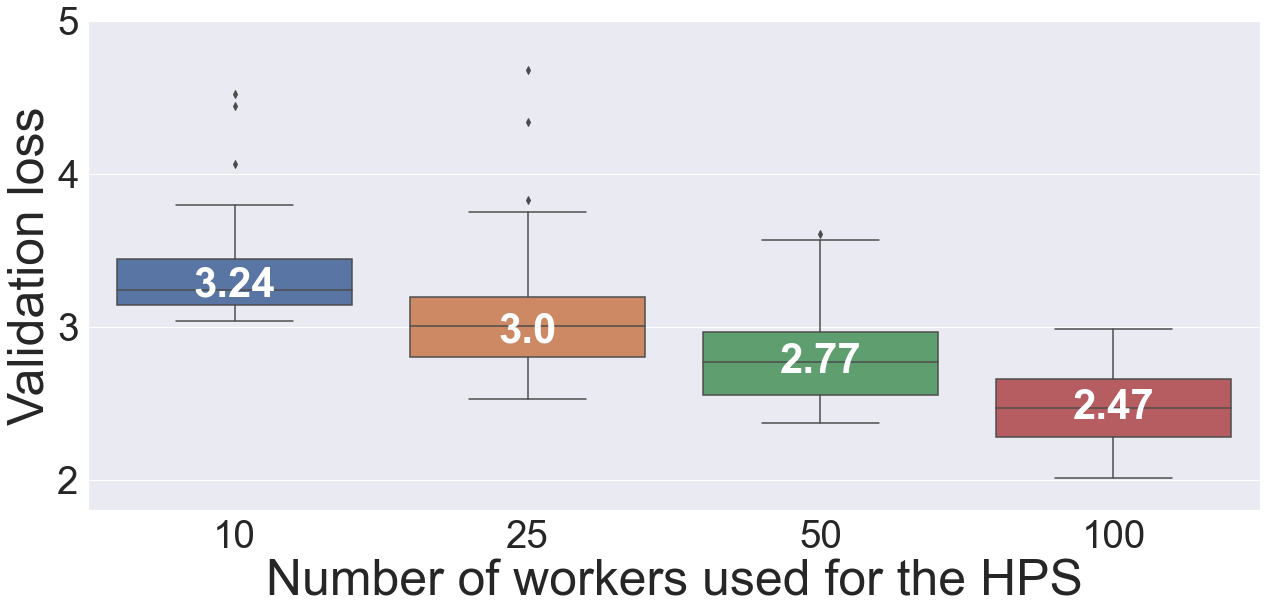

Next we selected a number of high-performing configurations from the DeepHyper HPS runs for generative modeling. For a given worker-count search run, we computed a 10% quantile of the validation loss values of all hyperparameter configurations. We then selected the configurations that were less than or equal to that cut-off value. These configurations were used to fit a Gaussian copula (GC) model. We applied this procedure for each worker-count search run and denoted the resulting five GC models as GC-10, GC-25, GC-50, and GC-100. Table 1 lists the time taken by each GC model to fit the configurations and sample new hyperparameter configurations from the distribution. We observe that time required for fitting the GC model and sampling from the model is negligible (less than 3 seconds for fitting and 0.04 second for sampling on a single CPU node). Once they were fitted, we sampled 100 new hyperparameter configurations from each GC model and trained all them simultaneously on 50 K80 nodes (two model training per node).

| Fit time (sec) | Sample time (sec) | |

|---|---|---|

| GC-10 | 2.57 | 0.030 |

| GC-25 | 2.82 | 0.031 |

| GC-50 | 2.89 | 0.032 |

| GC-100 | 2.96 | 0.038 |

Figure 3 shows a box plot of the validation loss distribution of 100 trained models from GC-10, GC-25, GC-50, and GC-100. The results clearly show that the validation loss significantly decreases as we move to GC models trained with a larger number of workers. The median values of the validation loss distributions are 3.24, 3.0, 2.77, and 2.47 for GC models trained with 10-, 25-, 50-, and 100-workers HPS results, respectively.

For model selection, we selected the top 25 models from the generated 100 based on the validation loss. The number 25 is motivated by the fact that the state-of-the-art SG-MCMC method used 25 posterior sample models from the trained model.

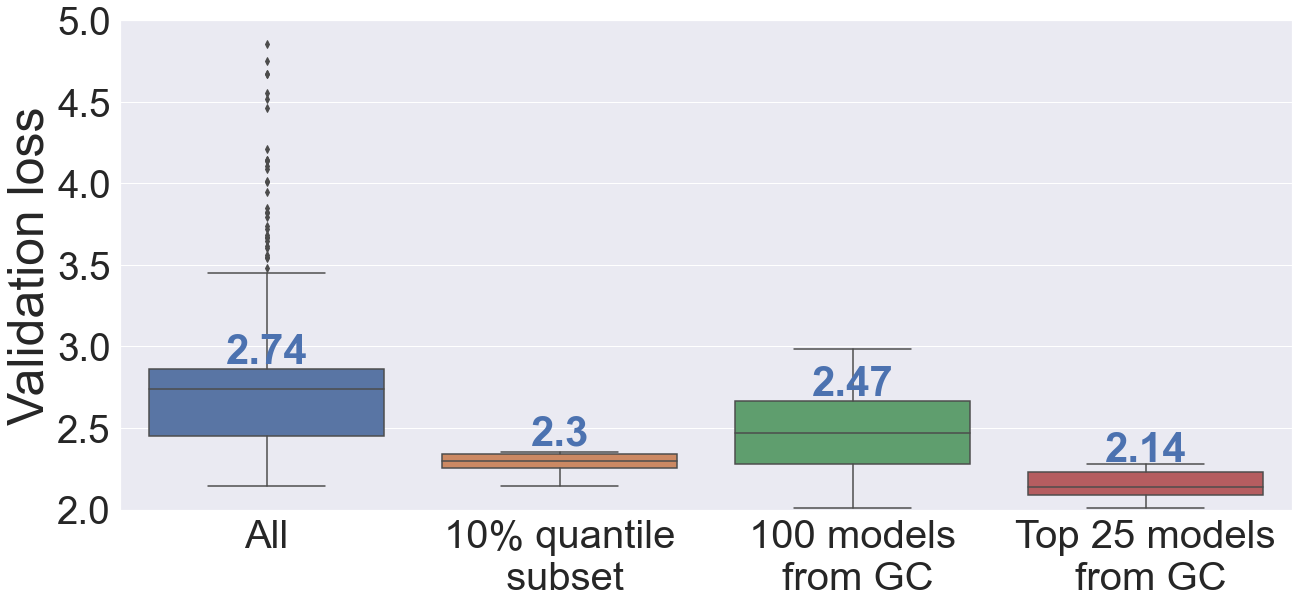

We also compared the validation loss distributions of (1) all the hyperparameter configurations in the HPS run, (2) 10% quantile subset of the HPS run used for training the GC-100 model, (3) 100 models from GC-100, and (4) the top 25 of 100 models from GC-100. The results are shown in Figure 4. The results show that the validation loss distribution achieved by the top 25 models is superior to the hyperparameter values found by the search alone. Moreover, using all 100 models from the GC model results in a validation loss distribution that is better than that of HPS run. The strategy of generating models from GC and selecting a subset from them seems a good strategy. We expect that by generating a large number of high-performing configurations by scaling HPS further and/or by using faster GPUs, we can train better GC models, which will result in overall improvement in the ensemble validation loss distribution.

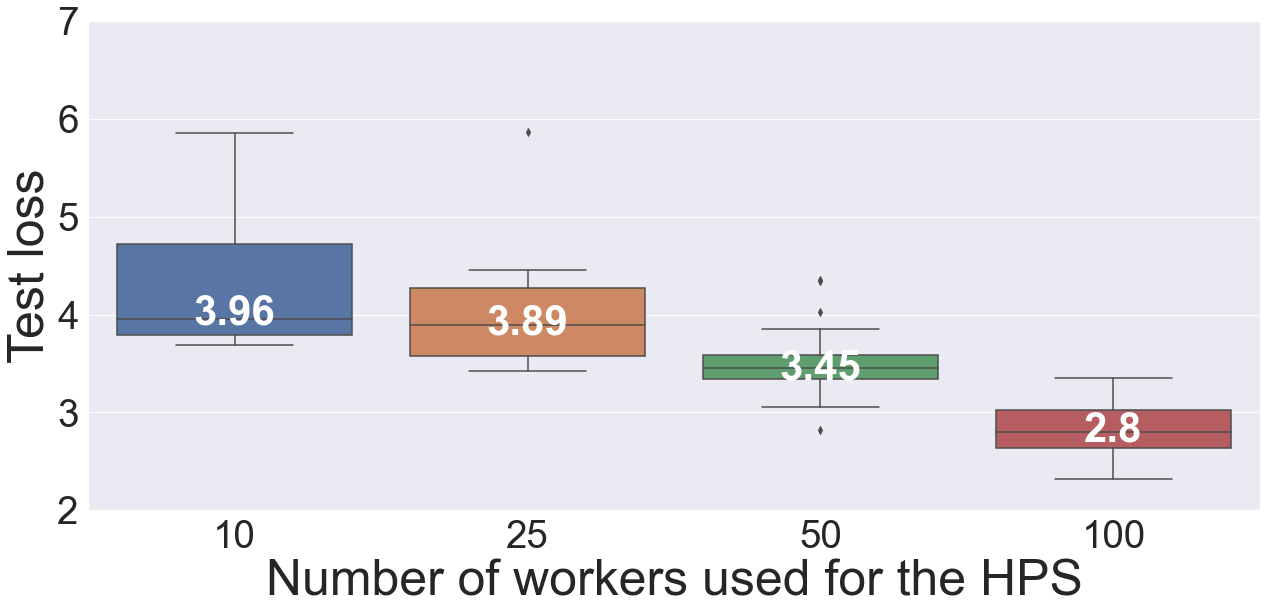

Using the selected 25 models, we performed inference on the test data and computed the test loss. The results are shown in Figure 5. We observe that the 25 models generated with a larger number of workers for the HPS significantly reduce the test loss. The trend is similar to the validation loss distribution with 100 models. The medians of the distribution are 3.96, 3.89, 3.45, and 2.8 for 10, 25, 50, and 100 workers, respectively. The findings lead us to conclude that when conducted on a large number of workers, HPS yields better configurations, which result in improved overall predictive accuracy of the ensembles.

4.2 Uncertainty estimation and comparison with the state-of-the-art

| T | Metric | Point | Bootstrap | Quantile | SQ | MIS | MC Dropout | SG-MCMC | DCRNN-SQRUQ |

| 15 mins | MAE (mean) | 2.38 | 2.63 | 2.43 | 2.67 | 2.63 | 2.47 | 2.32 | 2.21 |

| MAE (median) | 2.20 | ||||||||

| MIS | 39.76 | 18.32 | 29.04 | 18.26 | 27.61 | 32.21 | 24.79 | ||

| Interval | 5.8 | 12.42 | 8.38 | 12.46 | 8.99 | 8.73 | 8.29 | ||

| 30 mins | MAE (mean) | 2.73 | 3.19 | 2.79 | 3.1 | 3.08 | 2.94 | 2.54 | 2.44 |

| MAE (median) | 2.45 | ||||||||

| MIS | 38.48 | 21.54 | 40.93 | 21.09 | 33.38 | 31.87 | 27.4 | ||

| Interval | 7.86 | 13.48 | 8.37 | 13.99 | 11.1 | 12.62 | 10.53 | ||

| 60 mins | MAE (mean) | 3.14 | 3.99 | 3.19 | 3.75 | 3.65 | 3.7 | 3 | 2.83 |

| MAE (median) | 2.80 | ||||||||

| MIS | 38.58 | 25.74 | 60.56 | 24.33 | 43.11 | 30.35 | 29.33 | ||

| Interval | 11.65 | 14.5 | 8.38 | 15.55 | 14.46 | 18.79 | 12.34 |

In this section we compare the uncertainty estimates from our proposed DCRNN-SQRUQ with several other DCRNN UQ techniques that were used in a recent study [25] and show that our method outperforms all other methods. For the experimental comparison, we adopt the same setup in terms of error metrics, forecasting horizons, and confidence interval as described in [25].

The uncertainty estimation techniques reported in [25] are as follows. (1) Bootstrap [28]: This method randomly samples 50% of training data points to train DCRNN model. Prediction is performed on the entire test dataset, and 25 samples are collected in order to calculate mean predictions and confidence intervals. (2) Quantile [29]: This method uses pinball loss for DCRNN, and the prediction is performed for three different quantiles 2.5%, 50%, and 97.5% (95% confidence interval). (3) Spline quantile regression (SQ) [30]: This method models the quantile functions using linear splines and optimizes the continuous ranked probability score as a loss function. (4) Mean Interval Score [25]: MIS is directly used in the loss function along with MAE, and a multiheaded model is used to jointly output the upper bound, lower bound, and prediction for a given input. (5) Monte Carlo Dropout [31]: This method uses the algorithm develops by Zhu et al. [32]. A 5% random drop rate is used during the testing time over 50 iterations to get a stable prediction. (6) Stochastic Gradient Markov Chain Monte Carlo (SG-MCMC) [33]: This is the current state of the art Bayesian neural network for DCRNN and uses a subsampling technique to minimize the cost of Markov chain Monte Carlo (MCMC) per iteration. A Gaussian prior (0, 0.4) with randomly initialization of (0, 0.2) is used for the model parameters. The mean and confidence intervals are obtained by averaging from 25 posterior model samples.

The comparison results are shown in the Table 2. The results of the six methods (Bootstrap, Quantile, SQ, MIS, MC Dropout, SG-MCMC) are taken directly from [25]. The performance of each method is evaluated by using the error metrics MAE and MIS (95% confidence interval). The comparison is done on three different forecasting horizons: 15 minutes, 30 minutes, and 60 minutes.

The results show that our DCRNN-SQRUQ method outperforms the state-of-the-art SG-MCMC method and a number of other UQ methods. The mean and the median MAE of our DCRNN-SQRUQ shows a better prediction accuracy in MAE than does SG-MCMC across all three forecasting horizon. Moreover, the MIS score and interval (defined in Eqs. 12 and 13) show that our method achieves a low MIS score and interval with a narrow interval compared with other techniques. An exception is for the MIS method that directly minimizes the MIS score in the loss function; as expected, it achieves the best MIS performance. However, the prediction accuracy and interval score of DCRNN-SQRUQ are better than those found by MIS. Overall, our proposed DCRNN-SQRUQ is more robust in prediction accuracy and achieves MIS and interval scores that are better than the state of the art SG-MCMC method.

4.3 Uncertainty vs traffic dynamics

Here we estimate aleatoric and epistemic uncertainty using DCRNN-SQRUQ and investigate the relationships between the traffic dynamics and the estimated uncertainty measures. According to this analysis, increased traffic dynamics produced by fast changes in traffic behavior result in high aleatoric and epistemic uncertainty. Currently, none of the existing UQ methods developed for DCRNN provide separate aleatoric and epistemic uncertainties.

Traffic dynamics describe the change in traffic behavior observed by the variability of the traffic speed. High dynamics indicate instability in the speed caused by rapid change of traffic behavior, whereas low dynamics indicate stable behavior of the traffic. The traffic dynamics at a given sensor location can be measured by the coefficient of variation (COV) [23] of the time series data (speed). The COV becomes large (small) when the variations/change in the speed values are high (low). To measure COV, we calculated the mean () and standard deviation () of the speed of each sensor location over the entire timeline. The COV is estimated by /. Afterwards, the COV on METR-LA dataset are binned in four ranges: (1) COV ; (2) COV ; (3) COV ; and (4) COV. The binning helps us identify the sensor location with low to high traffic dynamics. Of a total of 207 sensor locations, 34 locations have COV between 0.2 and 0.3, 120 locations have COV between 0.3 and 0.4, 42 locations have COV between 0.4 and 0.5, and 11 locations have COV more than 0.5.

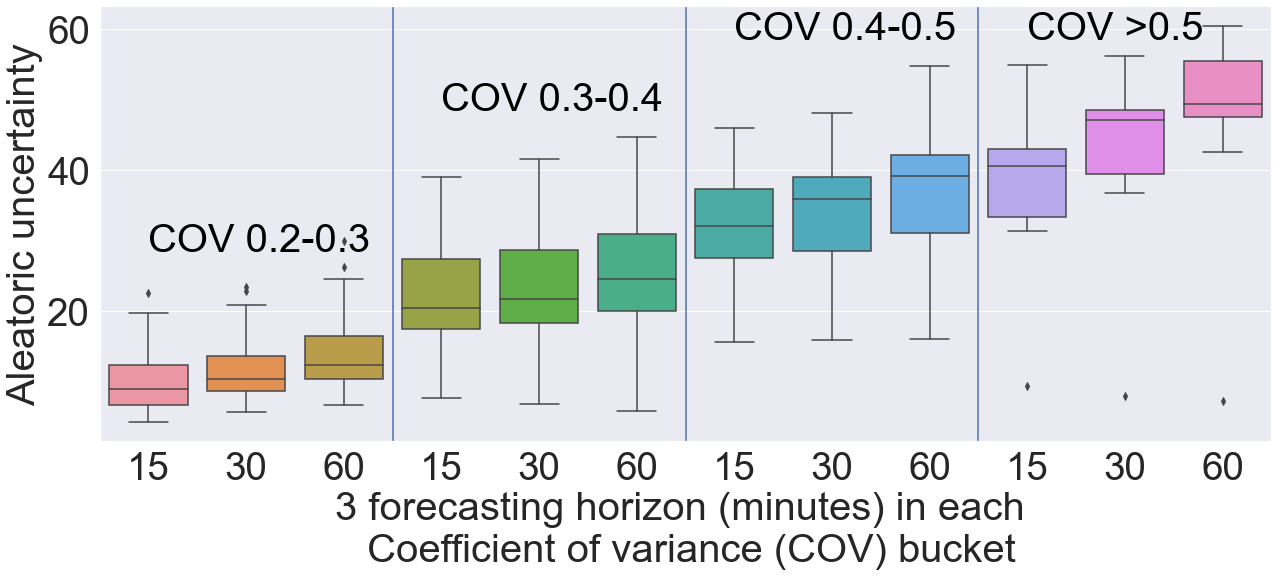

We estimate the aleatoric and epistemic uncertainty using Eq. 10. The aleatoric uncertainty is the mean of the prediction variance of the 2.5% and 97.5% quantile over the top 25 models selected from the ensemble. For each sensor location we now have the COV value and the aleatoric uncertainty measure. We separate the sensor locations based on the COV values into the four bins mentioned above and plot the distribution of aleatoric uncertainty across three forecasting horizons in box-and-whisker plot in Figure 6. We have three forecasting horizons and four COV bins, for a total of 12 box plots per figure. Across all the COV bins we can observe that 25%, median, and 75% quantiles of the aleatoric uncertainty increase with the increasing COV values. The box plots for the 15-, 30-, and 60-minute forecasting horizons show a similar trend. This result indicates that the aleatoric uncertainty is high on the sensor locations with high traffic dynamics or COV values. Since the aleatoric uncertainty is irreducible, we cannot mitigate it. In a proactive traffic management system, however, aleatoric uncertainty can be used to estimate worst-case traffic management and planning.

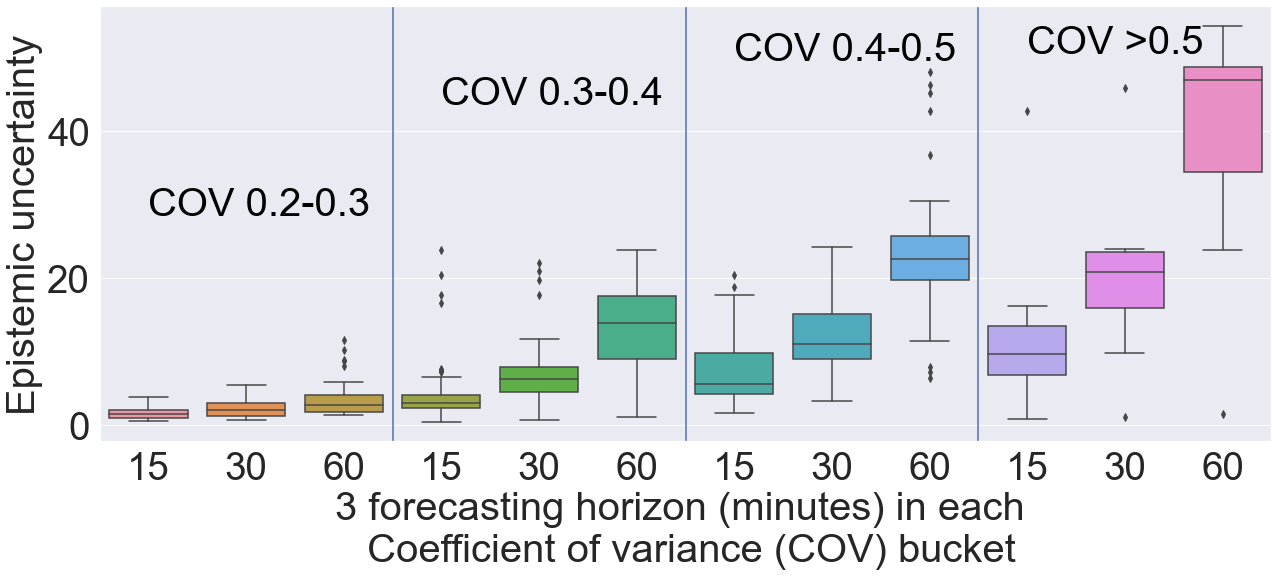

Next, we estimate the epistemic uncertainty by using Eq. 10. Epistemic uncertainty measures the variance of the mean across top 25 models selected from the ensemble. Similar to the aleatoric uncertainty, we separate the sensor locations based on COV values into four bins and plot the distribution of epistemic uncertainty across three forecasting horizons in box-and-whisker plots in Figure 7. A similar trend can be observed here: the epistemic or model uncertainty increases for the sensor locations having high COV value or traffic dynamics. The epistemic uncertainty for 60-minute forecasting is high for COV (9th box in the Figure 7). The predictive uncertainty of the model is high due to the high traffic dynamics. Therefore, we recommend performing 15-minute forecasts for those sensor location where COV (11 sensors belong to this bin). The 15-minute forecast (7th box in Figure 7) has a median of the distribution of the epistemic uncertainty below 10. Similarly, uncertainty is high for 60-minute forecasting in the COV bin between 0.4 to 0.5. Hence, we recommend performing 30-minute forecasting for those sensor locations (42 sensors belong to this bin). The ability to provide these uncertainty measures and consequent recommendations is crucial to putting these models into practice. Limiting the forecasting horizon for highly dynamic sensors will stabilize the DCRNN prediction and minimize the risk of using the forecasting results for crucial decision making.

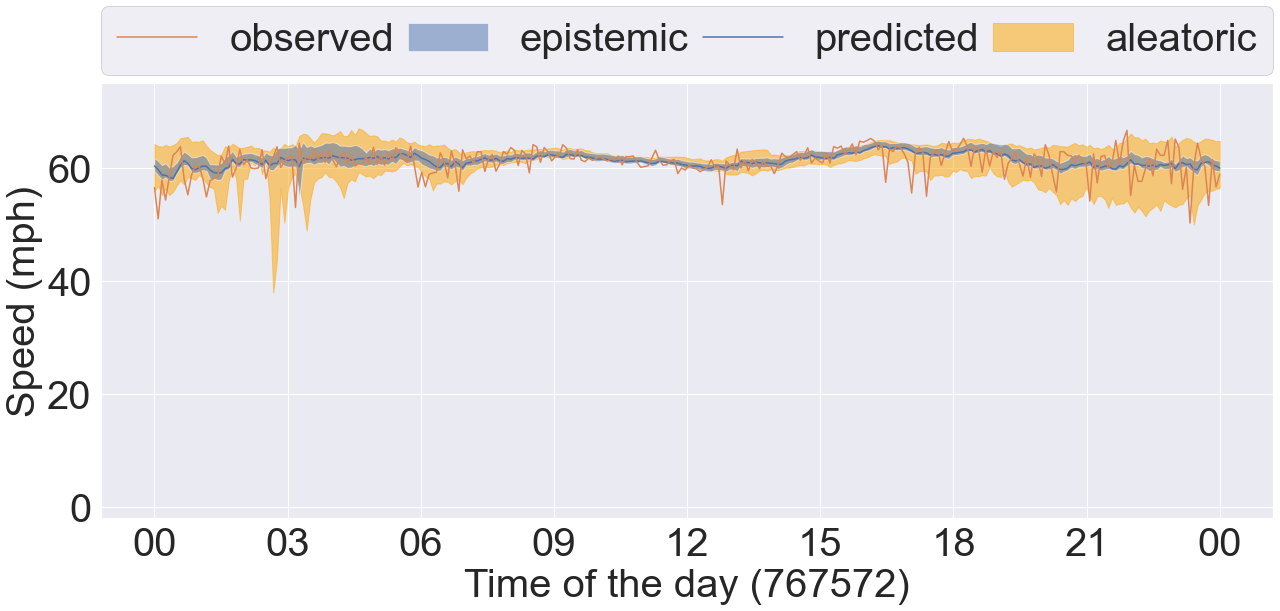

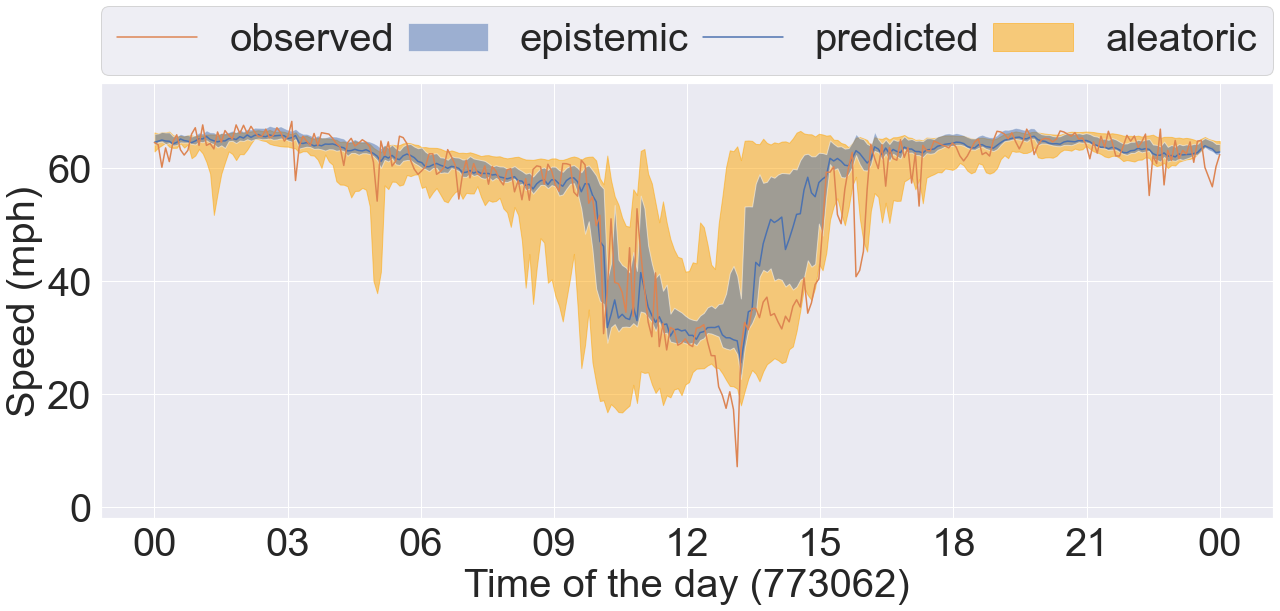

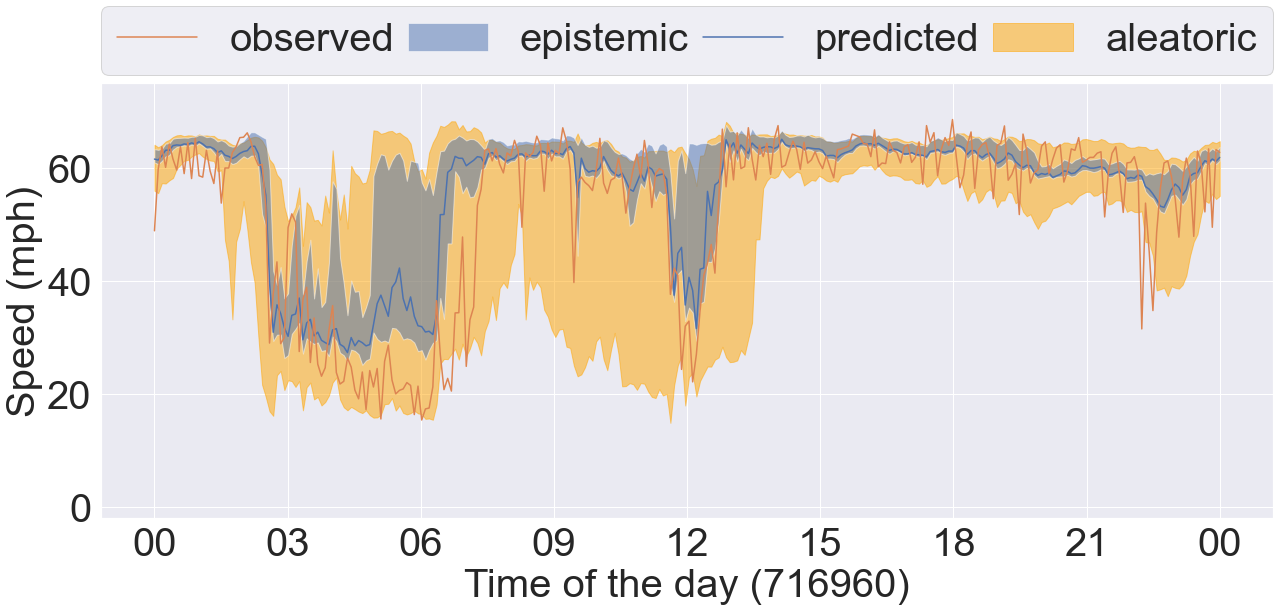

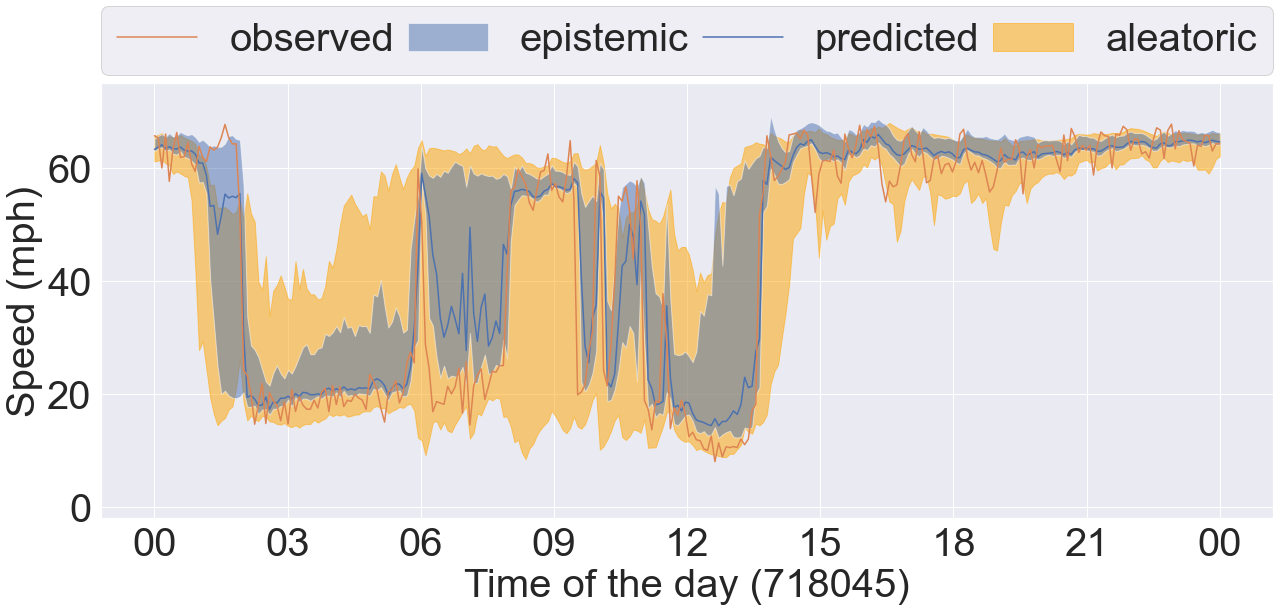

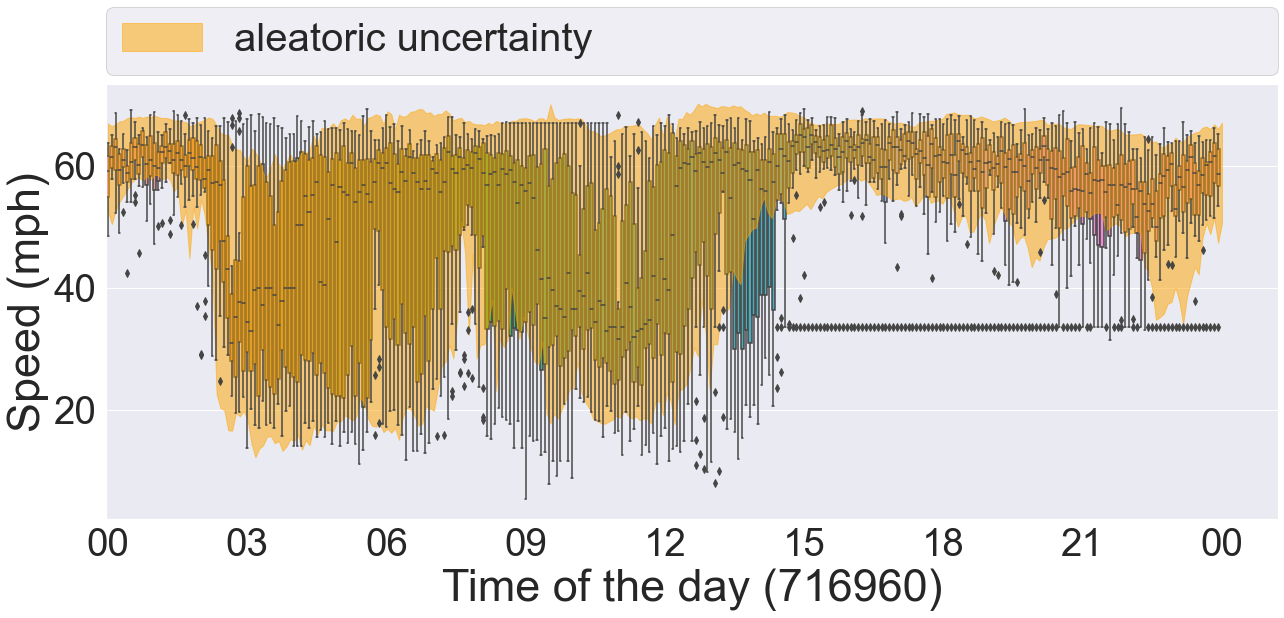

Further, we plot 4 sets of time series data taken from 4 different COV bins in Figure 8. Each plot contains the observed and predicted timeseries data, and the epistemic and aleatoric uncertainty intervals estimated by our proposed method DCRNN-SQRUQ methods. The results shown in the Figure 8 are for the 60-minute forecasting horizon. The epistemic uncertainty interval is calculated by using the 2.5% and 97.5% quantiles of the median prediction of the top 25 models - shown in blue. The aleatoric uncertainty intervals are the medians of the 2.5% and 97.5% quantile of the top 25 models- shown in yellow. Figure 8(a) shows the sensor location with the lowest COV value (between 0.2 and 0.3). Traffic behavior does not change rapidly in this COV bin. Hence, the interval band is narrow for both of the uncertainty measures. Figure 8(b) shows the sensor location with COV value between 0.3 and 0.4. Traffic dynamics are higher compared with the previous bin. Both the aleatoric and epistemic uncertainty measures are high around 9 am and 3 pm. The traffic dynamic increases more in Figure 8(c), and the uncertainty band becomes even wider here. Epistemic uncertainty estimates are high during the drop/rise in traffic speed. In contrast, aleatoric uncertainty is high around 6 am, 9 am, 12 pm, and 11 pm. Specifically, during 9 am the uncertainty is high but the traffic is stable. This uncertainty is coming from the data. Therefore, to validate it, we plot the timeseries data of the same sensor (sensor ID: 716960) over multiple days in box-and-whisker plot shown in the Figure 9. The results show huge variation in the speed profile during 3 am, 9 am, 12 pm, and 11 pm. We also plot the aleatoric uncertainty on top of the daily variation of the data. We see that our uncertainty measure covers the variation in the data. This explains the high uncertainty at 9 am in Figure 8(c). Likewise, the traffic dynamics increase more in Figure 8(d) where the COV is greater than 0.5. The aleatoric uncertainty is high from 3 am to 7 am because of the variety of patterns in the data. The epistemic uncertainty is high during the drop/rise of traffic speed, which is difficult for the model to capture.

5 Related work

Quantifying predictive uncertainty is important for deep learning models. Various methods for quantifying uncertainty, such as [34, 35], have been investigated over the past decade. In this paper we look at the uncertainty quantification techniques that are used specifically in traffic forecasting.

Uncertainty quantification has not yet been extensively adopted for traffic forecasting. Only a few attempts have been made to measure uncertainty in highway traffic forecasts. The generalized autoregressive conditional heteroscedasticity (GARCH) [36, 37, 38] model was used to estimate the uncertainty of traffic forecasting with a Kalman filter. A bootstrapping technique ass used by Matas et al. [39] for uncertainty estimation. Quantile regression recently has been developed to forecast traffic volumes with confidence intervals [40]. Bayesian neural networks were used [41, 42, 32] to predict the travel time along with the confidence intervals. All of these methods, however, perform forecasting on individual locations. None of the methods consider spatiotemporal dynamics of all the locations together in their models.

Recently, Wu et al. [25] developed and tested six uncertainty estimation techniques for DCRNN. These include five frequentist methods—bootstrap, MIS, quantile regression, spline quantile regression, Monte Carlo dropout, and one Bayesian model trained through SG-MCMC. They compared these strategies empirically and reported on the effectiveness and computational trade-offs of various UQ methods. According to their experimental results, Bayesian DCRNN with SG-MCMC results in superior mean prediction when compared to the five frequentist methods but it is computationally expensive. Frequentist approaches, on the other hand, are inexpensive and effective in covering data variations. The uncertainty calculated in Bayesian DCRNN is the total uncertainty, a combination of both epistemic and aleatoric; it cannot distinguish between the two types of uncertainty. Moreover, Bayesian neural networks are computationally more expensive to train than their deterministic counter parts and typically do not scale well for large data sets due to the sequential nature of the specialized training procedures.

Our method is robust in mean predictions and is effective in covering variations in the data. Moreover, the hyperparameter estimation and ensemble can be scaled over multiple compute nodes. Overall, our method is more effective and more efficient compared with other Bayesian and frequentist approaches in terms of accuracy and scalability.

Our approach was inspired by four recent works on uncertainty estimation through deep ensembles: deep ensemble [43], hyper ensemble [44], neural ensemble search [45], and joint neural and hyperparameter search ensembles [21]. These methods assume a Gaussian distribution for the output variable, perform regression on a single variable, do not make use of scale for hyperparameter search to generate ensembles, and do not adopt generative modeling. The uniqueness of our method stems from 1) its application to spatial temporal graph neural networks, 2) inclusion of Gaussian-assumption-free simultaneous quantile regression, 3) multi-output spatial temporal regression, 4) the use of hyperparameter search at scale, and 5) Gaussian copula generative modeling for ensemble construction.

6 Summary and conclusion

We developed DCRNN-SQRUQ, a scalable Gaussian assumption-free ensemble-based uncertainty quantification for traffic forecasting for spatial temporal graph neural networks. DCRNN-SQRUQ it employs simultaneous quantile regression loss for DCRNN, Bayesian hyperparameter search, generative modeling with a Gaussian copula mode, ensemble training, and variance decomposition for Gaussian quantile regression ensembles. DCRNN with SQR effectively captures well-calibrated forecasting intervals. The Bayesian optimization-based scalable hyperparameter search efficiently finds the most promising hyperparameter configuration. We also showed that scaling the hyperparameter search is important for finding promising hyperparameter configurations and for improving the forecasting accuracy of the ensembles. The Gaussian copula generative model is inexpensive yet efficient in generating more high-performing hyperparameter configurations from promising hyperparameter configurations found in a hyperparameter search. Furthermore, we developed a variance decomposition methodology for DCRNN ensembles with SQR loss function and use it for estimating the aleatoric (data) uncertainty and epistemic (model) uncertainty.

We demonstrated that our approach outperformed the state-of-the-art Bayesian neural network method and a variety of other frequentist uncertainty techniques. Experiments on a real-world dataset showed that our method is robust in mean predictions and effective in capturing data variations. In addition, we investigated the relationship between aleatoric/epistemic uncertainty and traffic dynamics. The analysis showed that increased traffic dynamics caused by rapid changes in traffic behavior resulted in high aleatoric and epistemic levels. Because aleatoric uncertainty is irreducible, it can be used for worst-case traffic planning in proactive traffic management. The epistemic uncertainty can be used to identify the limitations of the model and appropriate location-specific forecasting horizons. Currently, no other UQ method can provide separate aleatoric and epistemic uncertainty estimates for the DCRNN and, in general, for any other traffic forecasting methods. From a practitioner perspective, it provides a mechanism for reducing the forecasting horizon when the uncertainty measure is too high. This is very important for reducing the risk of using the model in real-world decision making.

The key advantages of our approach are generality and scalability. Our proposed approach for building model ensembles is based on a scalable hyperparameter search and computationally inexpensive generative modeling. In principle, we can apply our UQ approach to any neural network models. This involves taking the default neural network architecture, using simultaneous quantile regression loss, running hyperparameter search, training a generative model from the hyperparameter search runs, performing model selection, and decomposing the uncertainty estimates. The scalability of the approach is derived from scalable hyperparameter search and scalable ensemble training. Both phases provide improvement with respect to ensemble predictive accuracy and uncertainty estimation.

Our future work will include (1) application of the proposed UQ methods for various spatial and temporal forecasting neural network models; (2) comparison with other forms of Bayesian neural networks; (3) advanced generative modeling with autoencoders; and (4) uncertainty-informed traffic planning using reinforcement learning.

References

- [1] Yu B, Yin H, Zhu Z. Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting. arXiv preprint arXiv:170904875. 2017.

- [2] Li Y, Yu R, Shahabi C, Liu Y. Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting. In: International Conference on Learning Representations (ICLR ’18); 2018. .

- [3] Zheng C, Fan X, Wang C, Qi J. Gman: A graph multi-attention network for traffic prediction. arXiv preprint arXiv:191108415. 2019.

- [4] Tagasovska N, Lopez-Paz D. Single-model uncertainties for deep learning. arXiv preprint arXiv:181100908. 2018.

- [5] Lakshminarayanan B, Pritzel A, Blundell C. Simple and scalable predictive uncertainty estimation using deep ensembles. arXiv preprint arXiv:161201474. 2016.

- [6] Williams BM, Hoel LA. Modeling and forecasting vehicular traffic flow as a seasonal ARIMA process: Theoretical basis and empirical results. Journal of Transportation Engineering. 2003;129(6):664-72.

- [7] Kumar SV. Traffic flow prediction using Kalman filtering technique. Procedia Engineering. 2017;187:582-7.

- [8] Castro-Neto M, Jeong YS, Jeong MK, Han LD. Online-SVR for short-term traffic flow prediction under typical and atypical traffic conditions. Expert systems with Applications. 2009;36(3):6164-73.

- [9] Ahn J, Ko E, Kim EY. Highway traffic flow prediction using support vector regression and Bayesian classifier. In: 2016 International Conference on Big Data and Smart Computing (BigComp). IEEE; 2016. p. 239-44.

- [10] Chan KY, Dillon TS, Singh J, Chang E. Neural-network-based models for short-term traffic flow forecasting using a hybrid exponential smoothing and Levenberg–Marquardt algorithm. IEEE Transactions on Intelligent Transportation Systems. 2012;13(2):644-54.

- [11] Karlaftis MG, Vlahogianni EI. Statistical methods versus neural networks in transportation research: Differences, similarities and some insights. Transportation Research Part C: Emerging Technologies. 2011;19(3):387-99.

- [12] Ma X, Tao Z, Wang Y, Yu H, Wang Y. Long short-term memory neural network for traffic speed prediction using remote microwave sensor data. Transportation Research Part C: Emerging Technologies. 2015;54:187-97.

- [13] Zhang J, Zheng Y, Qi D, Li R, Yi X. DNN-based prediction model for spatio-temporal data. In: Proceedings of the 24th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems. ACM; 2016. p. 92.

- [14] Zhang J, Zheng Y, Qi D. Deep spatio-temporal residual networks for citywide crowd flows prediction. In: Thirty-First AAAI Conference on Artificial Intelligence; 2017. .

- [15] Fox M, Rubin H. Admissibility of quantile estimates of a single location parameter. The Annals of Mathematical Statistics. 1964:1019-30.

- [16] Koenker R, Bassett Jr G. Regression quantiles", Econometrica: Journal of the Economic Society, Vol. 46, No. 1; 1978.

- [17] Takeuchi I, Le Q, Sears T, Smola A, et al. Nonparametric quantile estimation. Journal of Machine Learning Research. 2006:1231–1264.

- [18] Pascanu R, Mikolov T, Bengio Y. On the difficulty of training recurrent neural networks. In: International Conference on Machine Learning; 2013. p. 1310-8.

- [19] Balaprakash P, Salim M, Uram TD, Vishwanath V, Wild SM. DeepHyper: Asynchronous hyperparameter search for deep neural networks. In: 2018 IEEE 25th international conference on high performance computing (HiPC). IEEE; 2018. p. 42-51.

- [20] Schmidt T. Coping with copulas. Copulas–From theory to application in finance. 2007;3:34.

- [21] Egele R, Maulik R, Raghavan K, Balaprakash P, Lusch B. AutoDEUQ: Automated Deep Ensemble with Uncertainty Quantification. arXiv preprint arXiv:211013511. 2021.

- [22] Li Y, Yu R, Shahabi C, Liu Y. Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting. GitHub; 2018. https://github.com/liyaguang/DCRNN.

- [23] Mallick T, Balaprakash P, Rask E, Macfarlane J. Graph-partitioning-based diffusion convolutional recurrent neural network for large-scale traffic forecasting. Transportation Research Record. 2020;2674(9):473-88.

- [24] Zheng C, Fan X, Wang C, Qi J. Gman: A graph multi-attention network for traffic prediction. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 34; 2020. p. 1234-41.

- [25] Wu D, Gao L, Xiong X, Chinazzi M, Vespignani A, Ma YA, et al. Quantifying Uncertainty in Deep Spatiotemporal Forecasting. arXiv preprint arXiv:210511982. 2021.

- [26] Gneiting T, Raftery AE. Strictly proper scoring rules, prediction, and estimation. Journal of the American Statistical Association. 2007;102(477):359-78.

- [27] Patki N, Wedge R, Veeramachaneni K. The Synthetic Data Vault. In: 2016 IEEE International Conference on Data Science and Advanced Analytics (DSAA); 2016. p. 399-410.

- [28] Efron B, Hastie T. Computer age statistical inference. vol. 5. Cambridge University Press; 2016.

- [29] Koenker. Quantile Regression (Econometric Society Monographs, No. 38). Cambridge university press; 2005.

- [30] Gasthaus J, Benidis K, Wang Y, Rangapuram SS, Salinas D, Flunkert V, et al. Probabilistic forecasting with spline quantile function RNNs. In: The 22nd international conference on Artificial Intelligence and Statistics. PMLR; 2019. p. 1901-10.

- [31] Gal Y, Ghahramani Z. Dropout as a Bayesian approximation: Representing model uncertainty in deep learning. In: international conference on machine learning. PMLR; 2016. p. 1050-9.

- [32] Zhu L, Laptev N. Deep and confident prediction for time series at uber. In: 2017 IEEE International Conference on Data Mining Workshops (ICDMW). IEEE; 2017. p. 103-10.

- [33] Ma YA, Chen T, Fox EB. A complete recipe for stochastic gradient MCMC. arXiv preprint arXiv:150604696. 2015.

- [34] Abdar M, Pourpanah F, Hussain S, Rezazadegan D, Liu L, Ghavamzadeh M, et al. A review of uncertainty quantification in deep learning: Techniques, applications and challenges. Information Fusion. 2021;76:243-97.

- [35] Hüllermeier E, Waegeman W. Aleatoric and epistemic uncertainty in machine learning: An introduction to concepts and methods. Machine Learning. 2021;110(3):457-506.

- [36] Guo J, Williams BM. Real-time short-term traffic speed level forecasting and uncertainty quantification using layered Kalman filters. Transportation Research Record. 2010;2175(1):28-37.

- [37] Guo J, Huang W, Williams BM. Adaptive Kalman filter approach for stochastic short-term traffic flow rate prediction and uncertainty quantification. Transportation Research Part C: Emerging Technologies. 2014;43:50-64.

- [38] Tsekeris T, Stathopoulos A. Short-term prediction of urban traffic variability: Stochastic volatility modeling approach. Journal of Transportation Engineering. 2010;136(7):606-13.

- [39] Matas A, Raymond JL, Ruiz A. Traffic forecasts under uncertainty and capacity constraints. Transportation. 2012;39(1):1-17.

- [40] Hoque JM, Erhardt GD, Schmitt D, Chen M, Wachs M. Estimating the uncertainty of traffic forecasts from their historical accuracy. Transportation Research Part A: Policy and Practice. 2021;147:339-49.

- [41] van Hinsbergen CI, Van Lint J, Van Zuylen H. Bayesian committee of neural networks to predict travel times with confidence intervals. Transportation Research Part C: Emerging Technologies. 2009;17(5):498-509.

- [42] Mazloumi E, Rose G, Currie G, Moridpour S. Prediction intervals to account for uncertainties in neural network predictions: Methodology and application in bus travel time prediction. Engineering Applications of Artificial Intelligence. 2011;24(3):534-42.

- [43] Lakshminarayanan B, Pritzel A, Blundell C. Simple and scalable predictive uncertainty estimation using deep ensembles. Advances in neural information processing systems. 2017;30.

- [44] Wenzel F, Snoek J, Tran D, Jenatton R. Hyperparameter ensembles for robustness and uncertainty quantification. Advances in Neural Information Processing Systems. 2020;33:6514-27.

- [45] Zaidi S, Zela A, Elsken T, Holmes CC, Hutter F, Teh Y. Neural ensemble search for uncertainty estimation and dataset shift. Advances in Neural Information Processing Systems. 2021;34.