Deep Ensemble Collaborative Learning by using Knowledge-transfer Graph

for Fine-grained Object Classification

Abstract

Mutual learning, in which multiple networks learn by sharing their knowledge, improves the performance of each network. However, the performance of ensembles of networks that have undergone mutual learning does not improve significantly from that of normal ensembles without mutual learning, even though the performance of each network has improved significantly. This may be due to the relationship between the knowledge in mutual learning and the individuality of the networks in the ensemble. In this study, we propose an ensemble method using knowledge transfer to improve the accuracy of ensembles by introducing a loss design that promotes diversity among networks in mutual learning. We use an attention map as knowledge, which represents the probability distribution and information in the middle layer of a network. There are many ways to combine networks and loss designs for knowledge transfer methods. Therefore, we use the automatic optimization of knowledge-transfer graphs to consider a variety of knowledge-transfer methods by graphically representing conventional mutual-learning and distillation methods and optimizing each element through hyperparameter search. The proposed method consists of a mechanism for constructing an ensemble in a knowledge-transfer graph, attention loss, and a loss design that promotes diversity among networks. We explore optimal ensemble learning by optimizing a knowledge-transfer graph to maximize ensemble accuracy. From exploration of graphs and evaluation experiments using the datasets of Stanford Dogs, Stanford Cars, and CUB-200-2011, we confirm that the proposed method is more accurate than a conventional ensemble method.

1 Introduction

When networks are trained under the same conditions, such as network architecture and data set, they produce different errors, even though they have the same level of accuracy, depending on random factors such as the initial values of the network and data selected as mini-batches. This change can be said to indicate that the network acquires different knowledge during training depending on the training conditions. ensemble and knowledge-transfer methods have performed well on a variety of problems by using multiple networks with different weight parameters for training and inference.

An ensemble method executes inference using multiple learned networks. The inference is done by averaging the output of each network for the input samples. This improves ensemble accuracy compared with inference using a single network. Due to the nature of using multiple networks, it is also effective in various problem settings such as adversarial attack and out-of-distribution detection [13, 6, 5, 17]. Compared with a single network, the computational cost increases with the number of networks used in an ensemble, so an efficient ensemble methods have been proposed [28, 29, 23, 14].

Knowledge transfer is a learning method in which a network shares the knowledge acquired in learning with the goal of network compression and network performance improvement. There are two types of knowledge-transfer methods: unidirectional [10] and bidirectional [33]. Unidirectional knowledge transfer [10] is a one-way knowledge-transfer method that learns an untrained network with shallow layers using a learned network with deep layers. Bidirectional knowledge transfer [33] is a mutual-learning method using multiple untrained networks. These two types of methods use probability distributions as knowledge. Knowledge-transfer methods have also been proposed for various conditions such as network size and knowledge to be used, e.g., knowledge transfer to unlearned networks of similar layer depths and knowledge transfer using features of intermediate layers as network knowledge [22, 15, 30, 26, 21, 1, 31, 25, 32, 3]. With these methods, the combination of networks and the direction of knowledge transfer are manually designed. A knowledge-transfer graph is used of considering various knowledge-transfer methods by optimizing the knowledge-transfer direction and network combination as hyperparameters. In knowledge-transfer graphs [18], networks are represented as nodes and knowledge propagation from node to node as edges, and knowledge transfer is represented as a directed graph. Since there is no need to fix the network architecture or knowledge-transfer method in advance, various learning methods can be represented by combining them.

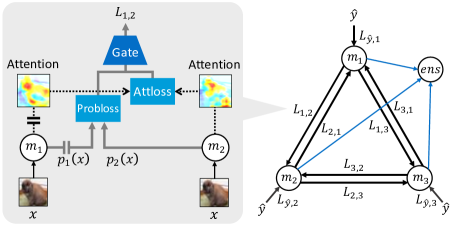

In this study, we propose an ensemble method using knowledge transfer to improve ensemble accuracy by promoting diversity among networks during training. We focus on mutual learning and knowledge-transfer graphs for this purpose. In addition to traditional knowledge transfer, we use mutual learning to separate the outputs between networks. If the probability distributions are directly separated, the performance of the networks may degrade. Therefore, we promote diversity from two perspectives: probability distribution and an attention map, which represents intermediate information. The proposed method consists of an ensemble mechanism for constructing an ensemble in a knowledge transfer graph to optimize the loss design between nodes. Figure 1 shows an overview of the proposed method. We explore how to learn to improve ensemble accuracy by optimizing a knowledge-transfer graph.

Our contributions are as follows:

-

•

We carry out mutual learning for ensemble learning using loss designs that promotes diversity among networks. We evaluated several loss designs and confirmed that ensemble accuracy improves.

-

•

We propose an ensemble method using knowledge transfer that consists of an ensemble mechanism and a loss design in a knowledge-transfer graph to promote diversity and investigate various ensemble-learning methods. The optimized knowledge-transfer graph was evaluated on the datasets of fine-grained object classification tasks, which confirmed that the proposed method results in be improved ensemble accuracy than the ensemble method using individually trained networks.

2 Related work

2.1 Ensemble method

Ensemble method is one of the oldest machine-learning methods. Ensemble method in deep learning is a simple method of averaging the probability distributions or logits output by networks with different weight parameters. Ensemble accuracy improves as the number of networks included increases, and after a certain number of networks, ensemble accuracy stops improving. Hyperparameter ensemble [29] shows that ensemble accuracy can be improved by combining the initial values of the network weights and hyperparameters. For ensemble method in Few-Shot learning [6], in addition to applying different randomization to each network, we introduced a loss design in which the probability distributions of the networks are brought closer together and further apart and showed that bringing the networks closer together is more effective when the number of networks in an ensemble is small, and separating them is more effective when the number of networks in an ensemble is large. Due to the nature of ensembles that use multiple networks, they are also effective in various problem settings such as adversarial attack and out-of-distribution detection [13, 5, 17].

The learning and inference cost of ensembles increases with the number of networks. In knowledge transfer [23, 14], training a single network to mimic the ensembled probability distribution has been shown to perform as well as an ensemble with a single network. In batch ensemble [28] and hyperparameter ensembles [29], the number of parameters is prevented from increasing by commonizing some of the parameters, thereby reducing the training and inference costs.

2.2 Knowledge transfer

Knowledge transfer is a learning method in which a network shares the knowledge it has acquired through learning with the goal of network compression and network performance improvement. There are two types of knowledge-transfer methods, i.e., unidirectional and bidirectional.

Unidirectional knowledge transfer uses a teacher network, which is a network that has been trained, and a student network, which is an untrained network. In addition to the teacher label, the output of the teacher network is used as a pseudo-supervisor label to train the student network. Hinton et al. [10] proposed knowledge distillation (KD), which uses the probability distribution of a teacher network with a large number of parameters to train a student network with a small number of parameters. This method is also effective for teacher and student networks with the same number of parameters [8]. A two-stage knowledge-transfer method using three networks has also been proposed [19].

Bidirectional knowledge transfer uses the probability distributions output by networks other than as pseudo-supervisory labels by using only the student networks. The first bidirectional knowledge-transfer method was proposed by Zhang et al. [33] called deep mutual learning (DML).

2.3 Knowledge-transfer graph

A knowledge-transfer graph [18] is used to consider a variety of knowledge transfer methods by using hyperparameter search, where the network type and knowledge-transfer method are hyperparameters. A knowledge-transfer graph represents knowledge-transfer methods as a graph, which is a unified representation of conventional methods. The networks are represented as nodes, and knowledge transfers are represented as directed edges. The direction of the effective edge represents the direction of the knowledge transfer. The destination of the knowledge transfer is called the target node, and the source of the knowledge is called the source node. If we assume two nodes, one of which is a learned network, and the edge is in one direction, we express a KD. By defining two nodes as untrained networks and edges as bidirectional, one expresses DML.

A knowledge-transfer graph executes various knowledge transfers by applying one of four gate functions (through gate, cutoff gate, linear gate, and correct gate) to the loss of knowledge transfer.

The through gate passes the loss of each input sample as it is and is defined as

| (1) |

The cutoff gate does not execute loss calculation and is defined as

| (2) |

The linear gate changes the weights linearly with training time and is defined as

| (3) |

where is the number of the current iterations and is the total number of iterations at the end of the training The correct gate passes only the samples that the source node answered correctly and is defined as

| (6) |

where is the output of the source node and is a label.

The number of nodes and types of networks are defined in advance, and the gate functions of the networks and edges used as nodes are optimized by hyperparameter search to consider the various knowledge-transfer method and obtain optimal graph.

3 Proposed Method

The proposed ensemble method uses knowledge transfer. Since ensembles average probability distributions and logits, we believe that the more diversity there is in the outputs between networks, the better the effect. The proposed method consists of a loss design where the outputs are separated. We investigated the ensemble effects of separating and combining network knowledge. The number of possible combinations of these loss designs is enormous and difficult to design manually. Therefore, we extend ensemble learning with mutual learning to the optimization of a knowledge-transfer graph.

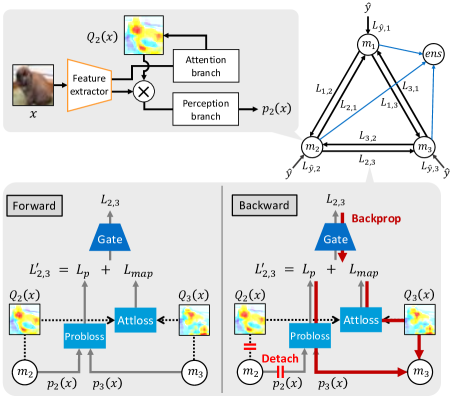

We add ensemble nodes that form ensembles using conventional knowledge-transfer graphs. For edge loss computation, a loss term is added using the output of the middle layer in addition to the output of the final layer of a network. By optimizing the accuracy of the ensemble nodes to maximize accuracy, a variety of ensemble-learning methods can be considered.

3.1 Mutual learning for ensembles

The output used for knowledge transfer can be divided into probability distributions and middle features. In this study, we used attention maps as middle features. To learn as a minimization problem, we used a different loss design for each output when bringing them closer together and when separating them. The target network was the knowledge transfer destination and the source network was the knowledge source.

When the probability distributions is brought closer together, kullback-leibler(KL)-divergence is used, and when it is farther separated, cosine similarity is used. The loss function using KL-divergence is defined as

| (7) |

| (8) |

where is the number of classes, is the number of samples, is the input sample, is the probability distribution of the source network, and is the probability distribution of the target network. The loss function using cosine similarity is defined as

| (9) |

The attention map responds strongly to regions that are effective in learning the input sample. Since the size of the target object in the sample varies, the size of the strongly responsive region differs depending on the sample. Thus, there are cases in which the similarity is high even though the object is responding strongly to different parts of the object. Therefore, we crop the attention map. The source network is cropped to a square centered on the pixel with the highest attention, and the target network is cropped to the same position as the source network. The cropping is done in multiple sizes, and the average of the similarities for each size is used as the similarity of the attention map. When the attention map is brought closer together, mean squared error is used, and when it is farther separated, cosine similarity is used. The loss function using mean squared error is defined as

| (10) |

where is the number of crops, is the attention map of the source network, and is the attention map of the target network. The loss function using cosine similarity is defined as

| (11) |

3.2 Knowledge-transfer graphs for ensembles

A knowledge-transfer graph selects the nodes for which we want to improve accuracy and executes a hyperparameter search. We add an ensemble node with a mechanism for constructing ensemble to the knowledge transfer graph to target the ensemble. The ensemble node forms an ensemble using the outputs of all the nodes. The ensemble node is defined as

| (12) |

where is the number of nodes, is the logits output by the nodes, and is the input sample.

We add knowledge transfer using the attention map to the edge between nodes. Figure 2 shows the process flow of loss calculation. In the conventional edge between nodes, the loss per sample is calculated using the probability distribution output by the node. Next, the gate function is applied to the loss. Knowledge transfer using the attention map is also carried out in the same manner. The loss for each sample is calculated using the attention map obtained from the node. Next, the gate function is applied to the losses. The gate function is the same for the loss of the probability distribution and that of the attention map. Finally, the loss of the probability distribution and that of the attention map are added. The loss function at the edge between the nodes is defined as

| (13) |

where is the gate function, is the loss of the probability distribution, and is the loss of the attention map. The loss is calculated for each edge. The final node loss is defined as

| (14) |

where is the cross-entropy loss between the probability distribution output by the node and label.

The hyperparameters of a knowledge-transfer graph are the loss design of the probability distribution, that of the attention map, and the gate function. There are six combinations of losses between edges: bring the probability distribution closer to it of other edge (Eq. 8), separate the probability distribution (Eq. 9), bring the attention map closer to it of other edge (Eq. 10), separate the attention map (Eq. 11), Bring the probability distribution and attention map closer to it of other edge at the same time (Eqs. 8 and 10), and Separate the probability distribution and attention map at the same time (Eqs. 9 and 11). The network to be used as a node is fixed to that determined before optimization.

Random search and the asynchronous successive halving algorithm (ASHA) are used to optimize a knowledge-transfer graph. The combination of hyperparameters is determined randomly, and the knowledge-transfer graph evaluates the ensemble nodes at epochs. If the accuracy of the ensemble node is less than the average accuracy at the same epoch in the past, the learning is terminated and the next knowledge-transfer graph is trained.

|

|

4 Experiments

We evaluated the proposed method. Section 4.2 describes ensemble learning with mutual learning, Section 4.3 visualizes optimized knowledge transfer graphs, Section 4.4 compares the proposed method with conventional ensemble methods, and Section 4.5 evaluates the generalizability of knowledge-transfer graphs for different datasets.

4.1 Experimental setting

Datasets The datasets we used were Stanford Dogs [11], Stanford Cars [12], and CaltechUSCD Birds (CUB-200-2011) [27]. These datasets belong to the fine-grained object classification task. Stanford Dogs consists of 120 classes of dog breeds and uses 12,000 images for training and 8,580 images for testing. Stanford Cars consists of 196 classes of cars and uses 8,144 images for training and 8,041 images for testing. CUB-200-2011 consists of 200 classes of birds and uses 5,994 images for training and 5,794 images for testing. To optimize a knowledge-transfer graph, half of the training data, selected to maintain class balance in the dataset, were used for training during the exploration of graph, and the rest of the data were used for evaluation during the exploration of graph. For the comparative evaluation discussed in Sections 4.4 and 4.5, the original training data and testing data were used.

Networks For the networks, we used ResNet-18[9] and attention branch network (ABN) [7] based on ResNet. We used attention transfer (AT) [32] to create an attention map in ResNet-18. AT is a knowledge-transfer method that creates an attention map by averaging feature maps in the channel direction. The attention map is created using the feature map output using the fourth ResBlock and crops it to sizes and for loss calculation. ABN creates an attention map by using a method based on the class activation map [34] and weights the attention map to the feature map by using the attention mechanism. The attention map is cropped to , , and for loss calculation. We believe that since the attention map is applied to the attention mechanism, the effect of the attention map on the probability distribution is better transmitted. In the attention mechanism, residual processing is used to prevent loss of features due to the attention map, however, in the experiment, residual processing was not used to convey the changes in the attention map more strongly to the feature map.

Implementation details The learning conditions were the same for all experiments. The optimization algorithms used were stochastic gradient descent and momentum. The initial learning rate was 0.1, momentum was 0.9, coefficient of weight decay was 0.0001, batch size was 16, and number of epochs was 300. The learning rate was decayed by a factor of 10 at 150 epochs and 225 epochs. In the optimization of the knowledge-transfer graph, we tried 6,000 combinations of hyperparameters. We used PyTorch [20] as a framework for deep learning and Optuna [2] as a framework for hyperparameter search. For the optimization of a knowledge-transition graph, we used 90 Quadro P5000 servers. Each result represents the mean and standard deviation of five trials.

|

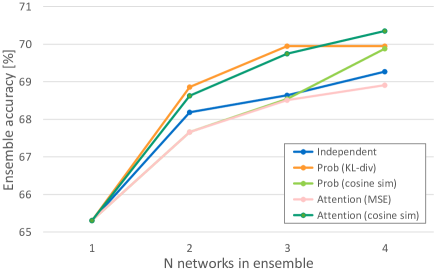

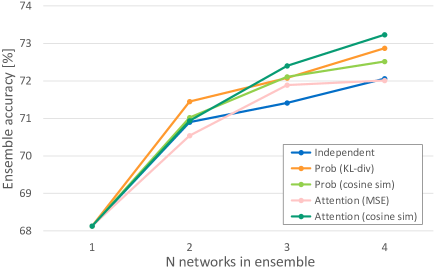

4.2 Ensemble learning with mutual learning

In addition to the cross-entropy loss with labels when training networks, one of Eqs.8 to 11 is applied as a loss design between networks. The results using ResNet-18 are shown in Fig. 3. The results using ABN are shown in Fig. 3. When the number of networks used in the ensemble was small, it was effective to bring the probability distribution closer together, and when the number of networks was large, it was effective to separate the probability distribution and the attention map. Therefore, it is effective to add diversity to the probability distribution and attention map in the ensemble. For AT, the accuracy of the loss design was reversed at the four networks. For ABN, the accuracy of the loss design that separated the attention map exceeded that of the loss design that brought the probability distributions closer together at the three networks. Therefore, the change in the attention map has more impact on the probability distribution by weighting it to the feature map by using the attention mechanism.

| Method | Node | Node-to-node loss design | Gate | Accuracy [%] | |

|---|---|---|---|---|---|

| Average of nodes | Ensemble | ||||

| Independent | ResNet18 2 | - | - | 65.31 0.16 | 68.19 0.20 |

| Independent | ABN 2 | - | - | 68.13 0.16 | 70.90 0.19 |

| DML | ABN 2 | Prob(KL-divergence) | Fixed(Through) | 69.91 0.46 | 71.45 0.52 |

| Ours | ABN 2 | Optimized | Optimized | 72.77 0.23 | 73.86 0.26 |

| Independent | ResNet18 3 | - | - | 65.08 0.23 | 68.64 0.38 |

| Independent | ABN 3 | - | - | 68.04 0.28 | 71.41 0.34 |

| DML | ABN 3 | Prob(KL-divergence) | Fixed(Through) | 70.50 0.26 | 72.08 0.42 |

| Ours | ABN 3 | Optimized | Optimized | 70.95 0.16 | 73.41 0.30 |

| Independent | ResNet18 4 | - | - | 65.29 0.35 | 69.27 0.49 |

| Independent | ABN 4 | - | - | 68.30 0.27 | 72.06 0.53 |

| DML | ABN 4 | Prob(KL-divergence) | Fixed(Through) | 71.50 0.31 | 72.87 0.29 |

| Ours | ABN 4 | Optimized | Optimized | 71.46 0.22 | 74.16 0.22 |

| Independent | ResNet18 5 | - | - | 65.00 0.24 | 69.47 0.13 |

| Independent | ABN 5 | - | - | 68.24 0.26 | 72.32 0.18 |

| DML | ABN 5 | Prob(KL-divergence) | Fixed(Through) | 71.15 0.28 | 72.50 0.16 |

| Ours | ABN 5 | Optimized | Optimized | 70.23 0.33 | 74.14 0.50 |

| Training | Exploring | Number of nodes | |||

|---|---|---|---|---|---|

| graph | graph | 2 | 3 | 4 | 5 |

| CUB-200-2011 | Stanford Dogs | 72.06 0.16 | 71.82 0.25 | 73.03 0.11 | 72.13 0.17 |

| CUB-200-2011 | CUB-200-2011 | 69.81 0.38 | 74.17 0.23 | 72.22 0.30 | 74.05 0.58 |

| Stanford Cars | Stanford Dogs | 89.76 0.25 | 89.94 0.19 | 89.98 0.13 | 90.41 0.11 |

| Stanford Cars | Stanford Cars | 89.44 0.09 | 90.04 0.09 | 89.57 0.19 | 90.73 0.19 |

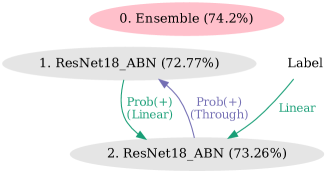

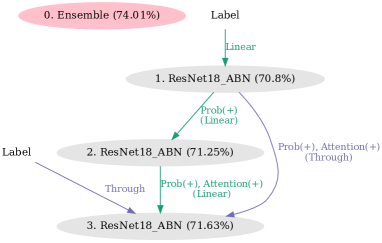

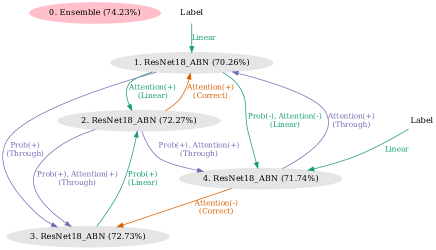

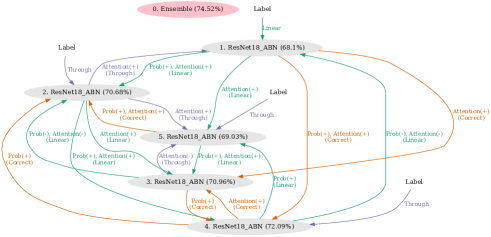

4.3 Visualization of optimized knowledge-transfer graphs

Figure 4 shows the knowledge-transfer graphs of 2 to 5 nodes optimized on Stanford Dogs. At node 2, we obtained a graph that is an extension of DML. At node 3, we obtained a graph that combines the conventional knowledge-transfer methods of KD and TA. For 4 and 5 nodes, we obtained graphs with a mixture of loss designs that are brought closer together and loss designs that are separated.

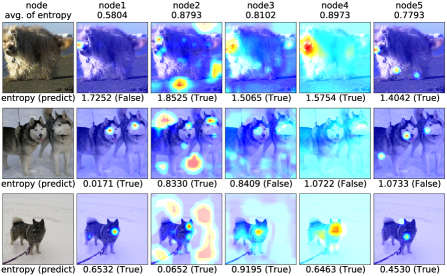

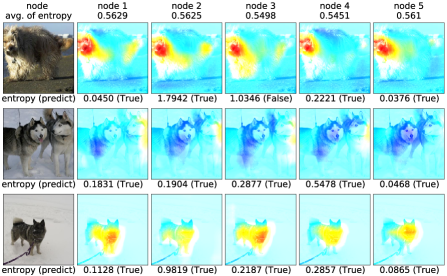

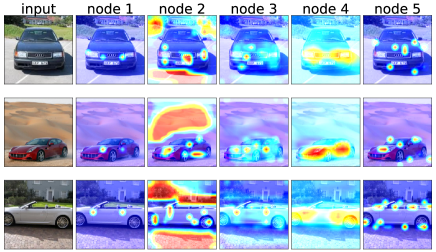

Figure 5 shows the attention map in the optimized knowledge-transfer graph with five nodes. Each node has a different focus of attention.

Looking at the average entropy, nodes 1 and 5, which focus on a single point on the dog’s head, have low entropy. Nodes 2, 3, and 4, which focus on the whole image or background, have higher entropy than nodes 1 and 5. This means that inferences are made on the basis of the importance of different locations and the state of attention affects probability distribution.

Figure 5 shows the attention map in the ensemble method using individually trained networks. Comparred to the optimized knowledge-transfer graph, the average entropy of the ensemble method using individually trained networks is lower. This is because the attention regions are almost the same among the networks even though they are trained individually.

4.4 Comparison with conventional methods

Table 1 shows the average and ensemble accuracies of the nodes of the proposed and conventional methods for Stanford Dogs. ”Ours” is the result of the optimized knowledge-transfer graph with the proposed method, ”Independent” is the result of the individually trained network, and ”DML” is the result of the network with DML. The ensemble accuracy of ”Ours” was higher than that of ”Independent” and ”DML” at any number of nodes. Comparing ”Independent(ABN)” and ”DML”, we can see that the improvement in ensemble accuracy was small compared with the improvement in node accuracy. With ”Ours”, compared with ”DML”, ensemble accuracy also improved as network accuracy improved. Therefore, we can say that ”Ours” obtained the graph that generates more diversity by learning.

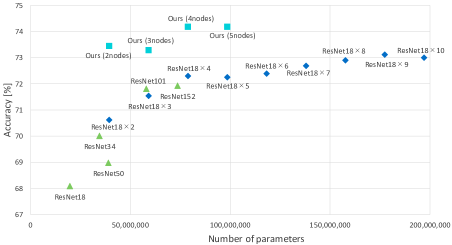

Figure 6 shows the comparison results with ABN and different base networks. The vertical axis is accuracy and the horizontal axis is the total number of parameters. In Stanford Dogs, the accuracy of the single network and ”Independent” varied with the number of parameters. The knowledge-transfer graph shows that the ensemble with high parameter efficiency can be constructed by mutual learning with diversity without changing the network structure. When the number of networks is increased, ensemble accuracy reaches a ceiling of around 73%. This shows that the proposed method achieved an accuracy that exceeds the limit with a conventional method.

4.5 Generalizability of graphs

We evaluated the optimized graph structure using Stanford Dogs on various datasets. Table 2 shows the ensemble accuracies for CUB-200-2011 and Stanford Cars. The accuracies of the knowledge-transfer graphs with different datasets used for optimization are comparable. This shows that the structure of the optimized graph is generalizable. The accuracies of some knowledge-transfer graphs differ depending on the data used in the optimization. We believe this is caused by a large number of combinations of graph structures, which eventually results in the acquisition of different graph structures.

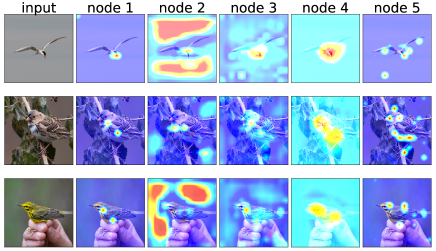

Figures 7 and 8 shows the attention maps of the knowledge-transfer graph with five nodes optimized by Stanford Dogs. The attention maps show a similar trend to that in Figure 5 when trained on a different dataset than the exploration. This shows the generalizability of the structure of the optimized graph in terms of the attention map that is eventually obtained.

5 Conclusion and Future Work

We proposed knowledge-transfer graph for ensemble method. We added the loss design as a hyperparameter to promote diversity among networks and optimized the graph structure to improve ensemble accuracy. We evaluated our method on a dataset of a fine-grained object classification task and the ensemble accuracy of our method was higher than that the result of the network with DML and the result of the individually trained network. For the number of hyperparameter combinations, 6,000 pairs were selected by random search and the branches were pruned using the ASHA. Since the number of combinations of graph structures increases in proportion to the number of nodes, learning methods with better performance may be obtained by increasing the number of trials. In the future, we will study the introduction of Bayesian optimization and its application to different tasks.

References

- [1] Sungsoo Ahn, Shell Xu Hu, Andreas Damianou, Neil D Lawrence, and Zhenwen Dai. Variational information distillation for knowledge transfer. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 9163–9171, 2019.

- [2] Takuya Akiba, Shotaro Sano, Toshihiko Yanase, Takeru Ohta, and Masanori Koyama. Optuna: A next-generation hyperparameter optimization framework. In Proceedings of the 25rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2019.

- [3] Aditya Chattopadhay, Anirban Sarkar, Prantik Howlader, and Vineeth N Balasubramanian. Grad-cam++: Generalized gradient-based visual explanations for deep convolutional networks. In IEEE Winter Conference on Applications of Computer Vision (WACV), 2018.

- [4] Guobin Chen, Wongun Choi, Xiang Yu, Tony Han, and Manmohan Chandraker. Learning efficient object detection models with knowledge distillation. In Advances in Neural Information Processing Systems (NeurIPS), pages 742–751, 2017.

- [5] Ali Dabouei, Sobhan Soleymani, Fariborz Taherkhani, Jeremy Dawson, and Nasser M. Nasrabadi. Exploiting joint robustness to adversarial perturbations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [6] Nikita Dvornik, Cordelia Schmid, and Julien Mairal. Diversity with cooperation: Ensemble methods for few-shot classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2019.

- [7] Hiroshi Fukui, Tsubasa Hirakawa, Takayoshi Yamashita, and Hironobu Fujiyoshi. Attention branch network: Learning of attention mechanism for visual explanation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [8] Tommaso Furlanello, Zachary Lipton, Michael Tschannen, Laurent Itti, and Anima Anandkumar. Born again neural networks. In International Conference on Machine Learning (ICML), volume 80 of Proceedings of Machine Learning Research, pages 1607–1616, 2018.

- [9] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In IEEE conference on computer vision and pattern recognition (CVPR), pages 770–778, 2016.

- [10] Geoffrey Hinton, Oriol Vinyals, and Jeff Dean. Distilling the knowledge in a neural network. In Neural Information Processing Systems (NIPS) Deep Learning and Representation Learning Workshop, 2015.

- [11] Aditya Khosla, Nityananda Jayadevaprakash, Bangpeng Yao, and Li Fei-Fei. Novel dataset for fine-grained image categorization. In First Workshop on Fine-Grained Visual Categorization, IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, June 2011.

- [12] Jonathan Krause, Michael Stark, Jia Deng, and Li Fei-Fei. 3d object representations for fine-grained categorization. In 4th International IEEE Workshop on 3D Representation and Recognition (3dRR-13), Sydney, Australia, 2013.

- [13] Samuli Laine and Timo Aila. Temporal ensembling for semi-supervised learning. In 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings. OpenReview.net, 2017.

- [14] Xu Lan, Xiatian Zhu, and Shaogang Gong. Knowledge distillation by on-the-fly native ensemble. In Advances in Neural Information Processing Systems (NeurIPS), pages 7527–7537, 2018.

- [15] Yufan Liu, Jiajiong Cao, Bing Li, Chunfeng Yuan, Weiming Hu, Yangxi Li, and Yunqiang Duan. Knowledge distillation via instance relationship graph. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- [16] Yifan Liu, Ke Chen, Chris Liu, Zengchang Qin, Zhenbo Luo, and Jingdong Wang. Structured knowledge distillation for semantic segmentation. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- [17] Andrey Malinin, Bruno Mlodozeniec, and Mark Gales. Ensemble distribution distillation. In International Conference on Learning Representations, 2020.

- [18] Soma Minami, Tsubasa Hirakawa, Takayoshi Yamashita, and Hironobu Fujiyoshi. Knowledge Transfer Graph For Deep Collaborative Learning. In Asian Conference on Computer Vision (ACCV), 2020.

- [19] Seyed-Iman Mirzadeh, Mehrdad Farajtabar, Ang Li, and Hassan Ghasemzadeh. Improved knowledge distillation via teacher assistant: Bridging the gap between student and teacher. In Association for the Advancement of Artificial Intelligence (AAAI), 2020.

- [20] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. Pytorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 32. Curran Associates, Inc., 2019.

- [21] Baoyun Peng, Xiao Jin, Jiaheng Liu, Dongsheng Li, Yichao Wu, Yu Liu, Shunfeng Zhou, and Zhaoning Zhang. Correlation congruence for knowledge distillation. In International Conference on Computer Vision (ICCV), 2019.

- [22] Adriana Romero, Nicolas Ballas, Samira Ebrahimi Kahou, Antoine Chassang, Carlo Gatta, and Yoshua Bengio. Fitnets: Hints for thin deep nets. In International Conference on Learning Representations (ICLR), 2015.

- [23] Guocong Song and Wei Chai. Collaborative learning for deep neural networks. In Advances in Neural Information Processing Systems (NeurIPS), pages 1837–1846, 2018.

- [24] Antti Tarvainen and Harri Valpola. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Advances in Neural Information Processing Systems (NeurIPS), 2017.

- [25] Yonglong Tian, Dilip Krishnan, and Phillip Isola. Contrastive representation distillation. In International Conference on Learning Representations, 2020.

- [26] Frederick Tung and Greg Mori. Similarity-preserving knowledge distillation. In International Conference on Computer Vision (ICCV), 2019.

- [27] C. Wah, S. Branson, P. Welinder, P. Perona, and S. Belongie. The Caltech-UCSD Birds-200-2011 Dataset. Technical report, 2011.

- [28] Yeming Wen, Dustin Tran, and Jimmy Ba. Batchensemble: an alternative approach to efficient ensemble and lifelong learning. In International Conference on Learning Representations, 2020.

- [29] Florian Wenzel, Jasper Snoek, Dustin Tran, and Rodolphe Jenatton. Hyperparameter ensembles for robustness and uncertainty quantification. In H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin, editors, Advances in Neural Information Processing Systems, volume 33, pages 6514–6527. Curran Associates, Inc., 2020.

- [30] Junho Yim, Donggyu Joo, Jihoon Bae, and Junmo Kim. A gift from knowledge distillation: Fast optimization, network minimization and transfer learning. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 4133–4141, 2017.

- [31] Lu Yu, Vacit Oguz Yazici, Xialei Liu, Joost van de Weijer, Yongmei Cheng, and Arnau Ramisa. Learning metrics from teachers: Compact networks for image embedding. In Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- [32] Sergey Zagoruyko and Nikos Komodakis. Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. In International Conference on Learning Representations (ICLR), 2017.

- [33] Ying Zhang, Tao Xiang, Timothy M Hospedales, and Huchuan Lu. Deep mutual learning. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- [34] Bolei Zhou, Aditya Khosla, Agata Lapedriza, Aude Oliva, and Antonio Torralba. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.