Deep Hypergraph U-Net for Brain Graph Embedding and Classification

Abstract

-Background. Network neuroscience examines the brain as a complex system represented by a network (or connectome), providing deeper insights into the brain morphology and function, allowing the identification of atypical brain connectivity alterations, which can be used as diagnostic markers of neurological disorders.

-Existing Methods. Graph embedding methods which map data samples (e.g., brain networks) into a low dimensional space have been widely used to explore the relationship between samples for classification or prediction tasks. However, the majority of these works are based on modeling the pair-wise relationships between samples, failing to capture their higher-order relationships.

-New Method. In this paper, inspired by the nascent field of geometric deep learning, we propose Hypergraph U-Net (HUNet), a novel data embedding framework leveraging the hypergraph structure to learn low-dimensional embeddings of data samples while capturing their high-order relationships. Specifically, we generalize the U-Net architecture, naturally operating on graphs, to hypergraphs by improving local feature aggregation and preserving the high-order relationships present in the data.

-Results. We tested our method on small-scale and large-scale heterogeneous brain connectomic datasets including morphological and functional brain networks of autistic and demented patients, respectively.

-Conclusion. Our HUNet outperformed state-of-the-art geometric graph and hypergraph data embedding techniques with a gain of 4-14% in classification accuracy, demonstrating both scalability and generalizability.

keywords:

Neurological disorder diagnosis, Machine Learning, Computer-Aided Diagnosis, Geometric Deep Learning, Hypergraph UNet

1 Introduction

Studying the connectivity of the human brain provides us with a deep understanding of how the brain operates as a highly complex interconnected system. Network neuroscience, in particular, aims to chart the brain connectome by modeling it as a network, where each node represents a specific anatomical region of interest (ROI) and the weight of the edge connecting pairs of nodes encodes their relationship in function, structure or morphology (Fornito et al., 2015; Heuvel and Sporns, 2019). Studies of brain networks have primarily investigated structural and functional connectivities derived from diffusion weighted and functional magnetic resonance imaging (MRI), respectively (Park and Friston, 2013; Heuvel and Sporns, 2019). On a methodological level, graph theory techniques have been widely used to analyze brain networks, giving new insights into atypical alterations of brain connectivity caused by neurological or neuropsychiatric disorders (Fornito et al., 2015). Studies combining these techniques have uncovered that diseases such as schizophrenia (Fornito et al., 2012; Alexander-Bloch et al., 2012), Alzheimer’s Disease (AD) (Buckner et al., 2009; Mahjoub et al., 2018), and autism spectrum disorder (ASD) (Morris and Rekik, 2017; Soussia and Rekik, 2017) affect the connectomics of the brain, implying that pinning down connectional changes in the brain could reveal clinically useful diagnostic markers.

To this aim, investigating a population of brain connectomes using graph-based embedding techniques has become popular, given their capacity to model the one-to-one relationship between data samples (i.e., connectomes) and circumvent the curse of dimensionality in learning tasks such as brain connectome classification or generation. Existing graph embedding techniques can be broken down into three main categories: (1) matrix factorization based methods, (2) deep learning methods based on random walks and (3) neural network based methods. Matrix factorization focuses on factorizing a high dimensional data matrix into lower dimensional matrices while preserving the topological properties of the data. Such methods first encode relationships between nodes into an affinity matrix, which is then factorized to generate the embedding. They vary depending on the properties of the matrix. For instance, while the graph factorization (GF) technique uses the adjacency matrix (Ahmed et al., 2013), GraRep (Cao et al., 2015) uses $k$-step transition probability matrices. However, matrix factorization based methods usually consider only the first order proximity, and those that do consider high-order proximities, such as GraRep, suffer from scalability issues (Goyal and Ferrara, 2018).

Unlike matrix factorization methods, random-walk based deep learning approaches such as DeepWalk (Perozzi et al., 2014) and node2vec (Grover and Leskovec, 2016) focus on optimizing embeddings to encode the statistics of random walks rather than trying to come up with a deterministic node similarity measure. These approaches use random walks in graphs to generate node sequences in order to learn node representations. Given a starting node in a graph, these methods select one of its neighbors, move onto that neighbor, and repeat the process to generate node sequences. Random walks have been used to approximate different properties of graphs including node similarity (Fouss et al., 2007) and centrality (Newman, 2005). They are especially helpful when a graph is too large to consider in its entirety or when a graph is only partially observable (Goyal and Ferrara, 2018). DeepWalk (Perozzi et al., 2014), one of the initial works using this approach, performs truncated random walks on graphs. In contrast, node2vec (Grover and Leskovec, 2016) uses a biased random walk procedure which combines depth first sampling and breadth first sampling. However, since these approaches operate on local windows, they fail to characterize the global structure of the graph (Cai et al., 2018).
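To make the walk-generation step concrete, the following is a minimal sketch of a uniform truncated random walk in the spirit of DeepWalk. The toy adjacency list, the function name `truncated_random_walk`, and the walk length are illustrative assumptions; the embedding step that DeepWalk and node2vec learn from such sequences is not shown.

```python
import random

def truncated_random_walk(adjacency, start, walk_length, rng):
    """Uniform random walk of at most `walk_length` nodes starting at `start`."""
    walk = [start]
    while len(walk) < walk_length:
        neighbors = adjacency[walk[-1]]
        if not neighbors:              # dead end: stop the walk early
            break
        walk.append(rng.choice(neighbors))
    return walk

# Toy undirected graph as an adjacency list (hypothetical data).
toy_graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
rng = random.Random(0)

# One walk per starting node; each walk is a node sequence.
walks = [truncated_random_walk(toy_graph, node, 5, rng) for node in toy_graph]
```

Each walk only ever sees the neighborhood it passes through, which is the locality limitation noted above.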

More recently, there has been a surge of interest in deep graph neural networks (GNN) (Kipf and Welling, 2017; Gao and Ji, 2019; Wang et al., 2016), given their remarkable capacity to model the deeply nonlinear relationship between data samples (i.e., connectomes) rooted in message passing, aggregation, and composition rules between node connectomic features (Ktena et al., 2017; Bessadok et al., 2019b, a; Banka and Rekik, 2019). Graph embedding also witnessed the introduction of different neural networks such as autoencoders (Wang et al., 2016), the multilayer perceptron (Tang et al., 2015), the graph convolutional network (GCN) (Kipf and Welling, 2017) and the generative adversarial network (GAN) (Wang et al., 2017). For example, structural deep network embedding (SDNE) (Wang et al., 2016) leveraged deep autoencoders to conserve the information from the first and second order proximities by jointly optimizing both proximities. Their method applies highly non-linear functions in order to create an embedding that captures the non-linearity of the graph. However, this approach can be computationally expensive on large sparse graphs (Goyal and Ferrara, 2018). GCN handles this issue by defining a convolution operation for graphs that iteratively aggregates the embeddings of each node's neighbors to update that node's embedding. Considering only the local neighborhood makes the method scalable, while multiple iterations allow for the characterization of global features. Graph U-Net (GUNet) (Gao and Ji, 2019) is an encoder-decoder architecture leveraging graph convolution. It improves on GCN by generalizing the seminal U-Net (Ronneberger et al., 2015) designed for Euclidean spaces (e.g., images) to non-Euclidean spaces (e.g., graphs), allowing high-level feature encoding and receptive field enlargement through the sampling of important nodes in the graph.
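The neighborhood aggregation performed by a GCN layer can be sketched as below. This follows the widely used propagation rule of Kipf and Welling (2017) with self-loops and symmetric degree normalization; the toy graph, the identity features, and the all-ones weight matrix are assumptions made purely for illustration.

```python
import numpy as np

def gcn_layer(adjacency, features, weights):
    """One propagation step: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    a_tilde = adjacency + np.eye(adjacency.shape[0])   # add self-loops
    degree = a_tilde.sum(axis=1)
    d_inv_sqrt = np.diag(1.0 / np.sqrt(degree))
    a_hat = d_inv_sqrt @ a_tilde @ d_inv_sqrt          # normalized adjacency
    return np.maximum(a_hat @ features @ weights, 0.0)

# Toy path graph 0 - 1 - 2 (hypothetical data).
adjacency = np.array([[0, 1, 0],
                      [1, 0, 1],
                      [0, 1, 0]], dtype=float)
features = np.eye(3)          # one-hot node features
weights = np.ones((3, 2))     # stands in for learned weights
out = gcn_layer(adjacency, features, weights)
```

Stacking several such layers lets information travel beyond the immediate neighborhood, which is how repeated local aggregation reaches global structure.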

More interestingly, such graph embedding architectures make it possible to circumvent the curse of dimensionality in learning based tasks such as brain connectome classification or generation (Bessadok et al., 2019b, a) by learning low-dimensional embeddings of node attributes such as connectome features while preserving their similarities. These learned embeddings can then be used as inputs for machine learning methods for tasks such as node classification (Bessadok et al., 2019a; Banka and Rekik, 2019) and link prediction (Liu et al., 2017). However, a major limitation of current deep graph embedding architectures is that they are unable to capture many-to-many (i.e., high-order) relationships between samples, hence the learned feature embeddings only consider node-to-node edges in the population graph.

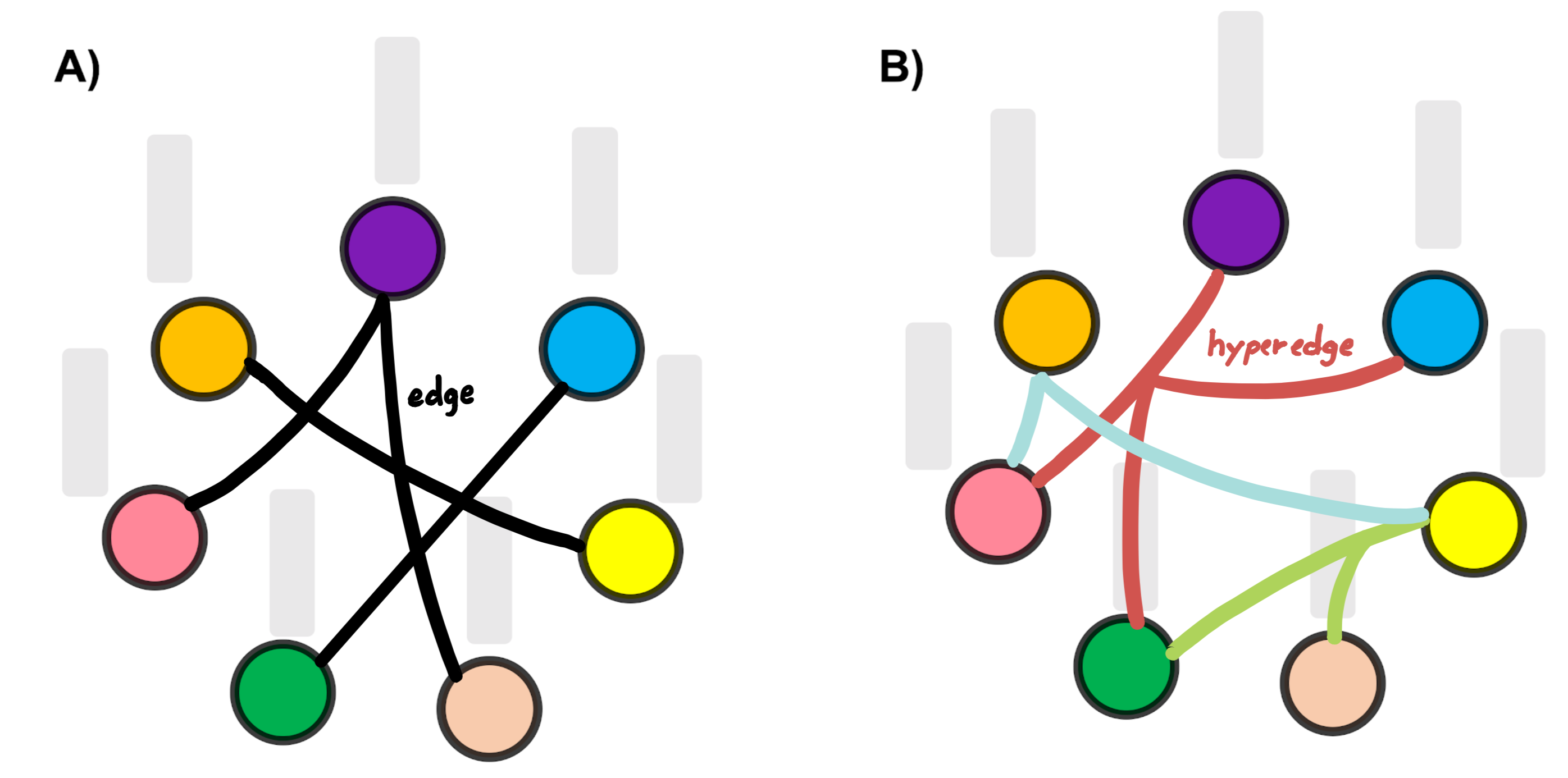

Hypergraph Neural Network (HGNN) (Feng et al., 2019) addresses this problem through the use of the hypergraph structure for data modeling. The main difference between a traditional graph and a hypergraph, as illustrated in Fig. 1, is that graphs are only able to represent one-to-one node relationships via edges while hypergraphs are able to capture high-order relationships between nodes via the concept of a hyperedge connecting a subset of nodes. Even though the traditional hypergraph learning approach usually suffers from high computational costs, HGNN manages to eliminate this challenge by devising a hyperedge convolution operation. However, HGNN only uses the devised hypergraph convolution operation for learning the hypernode embeddings.

In this paper we propose the Hypergraph U-Net (HUNet) architecture for high-order data embedding by generalizing the graph U-Net (Gao and Ji, 2019) to hypergraphs. HUNet, unlike HGNN, takes advantage of the U-Net architecture to improve the local feature aggregation through pooling and unpooling operations while still leveraging the hypergraph convolution operation to learn more representative feature embeddings. It enables the inference and aggregation of node embeddings while exploring the global high-order structure present between subsets of data samples, becoming more aware of real data complexity.

We evaluate HUNet on two different types of brain connectomic datasets for neurological disorder diagnosis and show that HUNet achieves a large gain in classification accuracy compared to state-of-the-art methods on both datasets, demonstrating scalability and generalizability as we perturb training and test sets and vary their sizes.

| Mathematical notation | Definition |
|---|---|
| $\mathbf{B}_s$ | brain network of subject $s$ |
| $N$ | number of nodes in the initial hypergraph |
| $\mathbf{X}^0 \in \mathbb{R}^{N \times d_0}$ | input feature embeddings where $d_0$ is the input feature dimension |
| $E$ | number of hyperedges in the initial hypergraph |
| $N_l$ | number of nodes at HUNet level $l$ |
| $\mathbf{X}^l \in \mathbb{R}^{N_l \times d_l}$ | feature embeddings at HUNet level $l$, $d_l$ is the feature dimension |
| $\mathbf{H} \in \mathbb{R}^{N \times E}$ | hypergraph incidence matrix |
| $h(v, e)$ | entries of $\mathbf{H}$ where $v$ is a vertex and $e$ is a hyperedge |
| $d(v)$ | degree of a vertex |
| $\delta(e)$ | degree of a hyperedge |
| $\mathbf{D}_v$ | diagonal matrix of vertex degrees |
| $\mathbf{D}_e$ | diagonal matrix of hyperedge degrees |
| $\mathbf{W}$ | diagonal weight matrix of a hypergraph |
| $\hat{\mathbf{H}}$ | normalized hypergraph connectivity based on incidence matrix |
| $\mathbf{\Theta} \in \mathbb{R}^{C_1 \times C_2}$ | a learnable matrix where $C_1$ is the input feature size and $C_2$ is the output feature size |
| $\mathbf{Z}^l$ | updated feature embeddings at HUNet level $l$ |
| $\mathrm{hConv}^l$ | hypergraph convolution operation at HUNet level $l$ |

2 Proposed method

2.1 Previous Works

In graph embedding the aim is learning how to project node features into a low-dimensional space while preserving the structural relationships in the graph such that nodes with links are also close to each other in this new embedding space. These node embeddings can then be used as inputs for machine learning methods to tackle a variety of prediction and network analysis tasks. Our work builds upon recent advances in graph embedding and hypergraph learning techniques.

2.1.1 Graph U-Net embedding architecture

A wide variety of graph embedding models using different approaches have been proposed in recent years and applied to tasks such as node classification (Perozzi et al., 2014; Tang et al., 2015), node clustering (Tang et al., 2016), link prediction (Wang et al., 2016), graph alignment (Bessadok et al., 2019a), graph classification (Dai et al., 2016; Banka and Rekik, 2019) and graph visualization (Cao et al., 2016). More recently, Graph U-Net (GUNet) (Gao and Ji, 2019) was proposed as a U-Net-like architecture for graph data. It adapts Euclidean pooling and unpooling operations, which are critical when building encoder-decoder architectures, to non-Euclidean graph data with no spatial locality or order information. GUNet comprises Graph Convolutional Network (GCN) layers (Kipf and Welling, 2017) to learn deeply composed embeddings of the graph nodes by exploring their hierarchical topological neighbors via the ‘neighbor of a neighbor’ composition rule. The authors show that this encoder-decoder architecture outperforms conventional GCN in learning representative embeddings of the node features. However, GUNet is only able to learn from pair-wise relationships between the nodes, thereby ignoring the many-to-many high-order relationships present in many real-world data.

2.1.2 Hypergraph learning

Very recently, hypergraphs, originally introduced in (Zhou et al., 2006), have started to gain momentum in geometric deep learning thanks to their ability to capture high-order relationships between data samples in various tasks such as feature selection (Zhang et al., 2017), image classification (Yu et al., 2012), social network analysis (Fang et al., 2014) and sentiment prediction using multi-modal data (Ji et al., 2018). More recently, the inception of the hypergraph neural network (HGNN) (Feng et al., 2019) as the first geometric deep learning model on hypergraph structure introduced hyperedge convolution operations based on the hypergraph Laplacian encoding the hypergraph spectra. However, HGNN is restricted to using hypergraph convolution for learning hypernode embeddings in a semi-supervised manner where training node labels supervise the estimation of the node feature mapping from layer to layer. In the absence of node labels, HGNN cannot be trained. In contrast, HUNet takes advantage of the U-Net architecture, aiming to learn representative feature embeddings by improving the first-order local feature aggregation through pooling and unpooling operations. It combines these operations with the hypergraph convolution operation to improve global feature aggregation, rather than applying convolution alone for feature aggregation as in the HGNN architecture (Feng et al., 2019).

2.2 Proposed Geometric Hypergraph U-Net (HUNet)

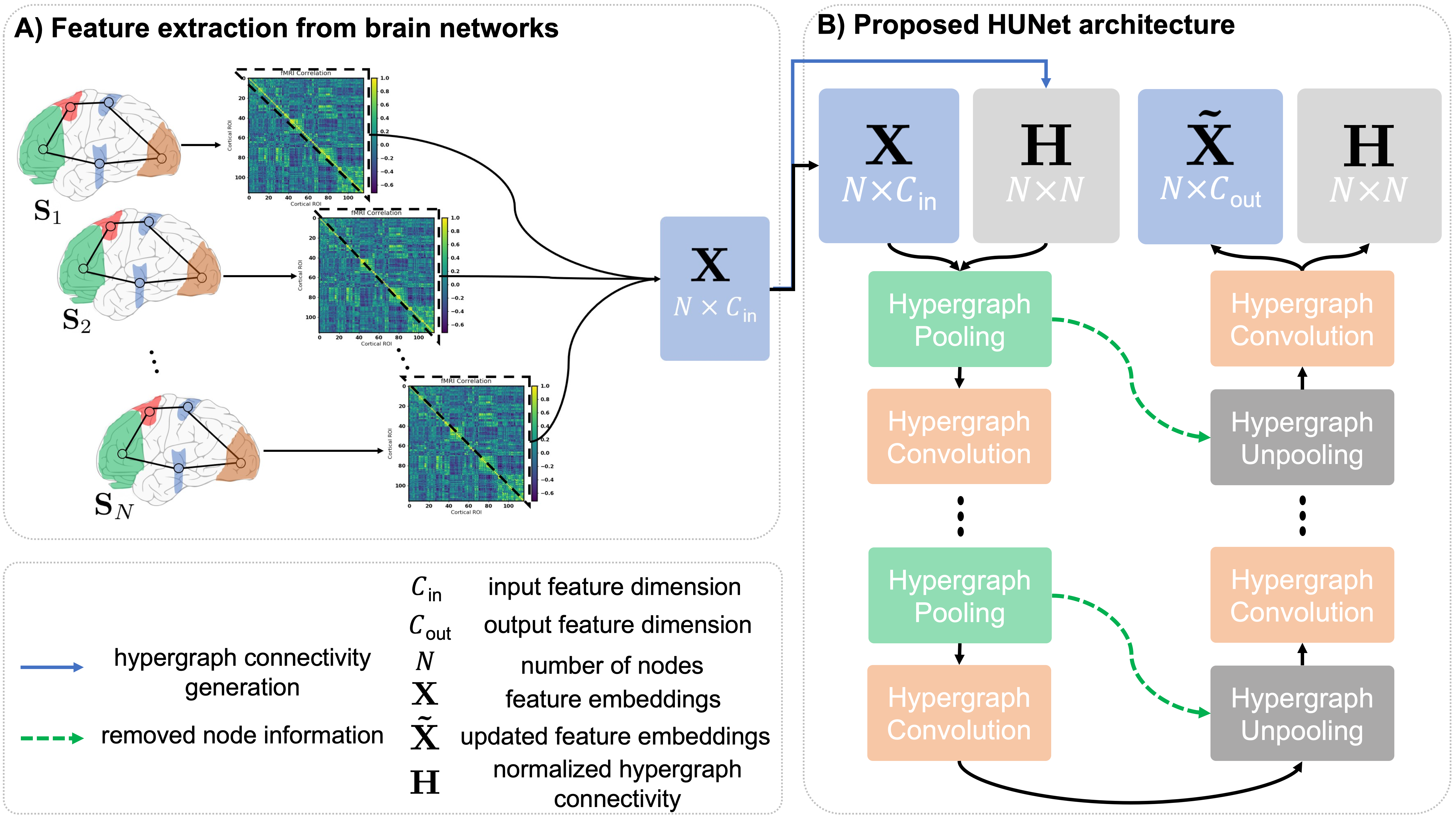

In this section we explain our proposed HUNet architecture, shown in (Fig. 2) and its components. The key idea behind the HUNet architecture is to learn a many-to-many node embedding with a high-order feature aggregation rule by leveraging the advantage of using hypergraphs to model the high-order relations between hypernodes compared to existing deep graph-based embedding methods. With this purpose, we propose the hypergraph pooling (hPool) and hypergraph unpooling (hUnpool) layers.

2.2.1 Hypergraph convolution

Even though graphs are adequate for representing pair-wise relationships between different nodes, in many applications higher-order relationships, which graphs are unable to represent, are present between subsets of nodes. For such applications one can take advantage of the hypergraph structure encoding shared interactions between a subset of nodes by connecting them with a single hyperedge (Zhou et al., 2006) (Fig. 1). We define the basic hypergraph as $\mathcal{G} = (\mathcal{V}, \mathcal{E}, \mathbf{W})$, where $\mathcal{V}$ is a node set, $\mathcal{E}$ is a hyperedge set, and $\mathbf{W} \in \mathbb{R}^{E \times E}$ is a diagonal weight matrix, where $E$ is the number of hyperedges, assigning weights to hyperedges. In our experiments $\mathbf{W}$ is initialized as an identity matrix, meaning that all the hyperedges have the same weight. We then define a hypergraph incidence matrix $\mathbf{H} \in \mathbb{R}^{N \times E}$, where $N$ is the number of hypernodes in the hypergraph, with elements $h(v, e)$ representing whether a hypernode is contained in a hyperedge or not as follows:

$$h(v, e) = \begin{cases} 1, & \text{if } v \in e \\ 0, & \text{otherwise} \end{cases} \tag{1}$$

where $v \in \mathcal{V}$ represents a hypernode and $e \in \mathcal{E}$ represents a hyperedge. We also define the hyperedge degree $\delta(e)$, which represents the number of hypernodes in a hyperedge, and the node degree $d(v)$, denoting the number of hyperedges connected to a hypernode, as:

$$\delta(e) = \sum_{v \in \mathcal{V}} h(v, e), \qquad d(v) = \sum_{e \in \mathcal{E}} h(v, e) \tag{2}$$
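As a small worked illustration of the incidence matrix and the two degree definitions, the sketch below builds them for a toy hypergraph. The hyperedge membership lists and variable names are hypothetical and chosen only for readability.

```python
import numpy as np

# Hypothetical toy hypergraph: 5 hypernodes, 3 hyperedges given as node subsets.
hyperedges = [[0, 1, 2], [1, 3], [2, 3, 4]]
num_nodes = 5

# Incidence matrix: entry (v, e) is 1 when hypernode v belongs to hyperedge e.
incidence = np.zeros((num_nodes, len(hyperedges)))
for e, members in enumerate(hyperedges):
    incidence[members, e] = 1.0

edge_degree = incidence.sum(axis=0)   # number of hypernodes in each hyperedge
node_degree = incidence.sum(axis=1)   # number of hyperedges containing each hypernode
```

Column sums give the hyperedge degrees and row sums give the hypernode degrees, so both follow directly from the incidence matrix.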

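In order to adapt the hypergraph convolution operation to our encoder-decoder U-Net architecture, given a hypergraph with $N$ hypernodes, incidence matrix $\mathbf{H}$ and hyperedge weight matrix $\mathbf{W}$, we produce the normalized hypergraph connectivity $\hat{\mathbf{H}} \in \mathbb{R}^{N \times N}$ as follows:

$$\hat{\mathbf{H}} = \mathbf{D}_v^{-1/2} \mathbf{H} \mathbf{W} \mathbf{D}_e^{-1} \mathbf{H}^{\top} \mathbf{D}_v^{-1/2} \tag{3}$$

where $\mathbf{D}_v$ denotes the diagonal hypernode degree matrix and $\mathbf{D}_e$ represents the diagonal hyperedge degree matrix. $\hat{\mathbf{H}}$, constructed from the initial hypergraph, is also pooled and unpooled along with the feature embeddings in the pooling and unpooling layers but, unlike the feature embeddings, it only changes in dimensionality. The normalized hypergraph connectivity at HUNet level $l$, $\hat{\mathbf{H}}^{l} \in \mathbb{R}^{N_l \times N_l}$, where $N_l$ is the number of nodes in the hypergraph at level $l$, is pooled and restored by the respective pooling and unpooling layers at level $l$. The feature embedding $\mathbf{X}^{l} \in \mathbb{R}^{N_l \times d_l}$, where $d_l$ is the feature dimension at level $l$, is taken as input from the previous pooling or unpooling layer along with $\hat{\mathbf{H}}^{l}$. The hypergraph feature matrix $\mathbf{X}^{l}$ is first transformed by the embedding function $\mathbf{\Theta}^{l} \in \mathbb{R}^{C_1 \times C_2}$ learned by the hypergraph convolution layer at level $l$ to extract $C_2$-dimensional node features, then diffused through $\hat{\mathbf{H}}^{l}$ to aggregate the embedded hypernode features across the hyperedges that contain them. The hypernode feature embedding matrix is then passed on to the next HUNet level and updated as follows:

$$\mathbf{Z}^{l} = \mathrm{hConv}^{l}(\mathbf{X}^{l}, \hat{\mathbf{H}}^{l}) = \hat{\mathbf{H}}^{l} \mathbf{X}^{l} \mathbf{\Theta}^{l} \tag{4}$$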
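The normalization and the convolution step described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the standard HGNN-style normalization (degree-normalized incidence matrix with diagonal hyperedge weights), no activation function, and randomly drawn stand-ins for the learned weights; the function names are hypothetical and this is not the released HUNet implementation.

```python
import numpy as np

def normalized_connectivity(incidence, edge_weights=None):
    """Return D_v^-1/2 H W D_e^-1 H^T D_v^-1/2 for an incidence matrix H.

    Assumes every hypernode lies in at least one hyperedge and no hyperedge is empty.
    """
    num_edges = incidence.shape[1]
    w = np.ones(num_edges) if edge_weights is None else np.asarray(edge_weights, dtype=float)
    node_degree = incidence @ w            # (weighted) hypernode degrees
    edge_degree = incidence.sum(axis=0)    # hypernodes per hyperedge
    dv_inv_sqrt = np.diag(1.0 / np.sqrt(node_degree))
    de_inv = np.diag(1.0 / edge_degree)
    return dv_inv_sqrt @ incidence @ np.diag(w) @ de_inv @ incidence.T @ dv_inv_sqrt

def hypergraph_conv(connectivity, features, theta):
    """Transform features with theta, then diffuse them through the connectivity."""
    return connectivity @ (features @ theta)

# Toy example (hypothetical data): 5 hypernodes, 3 hyperedges, 4 input features.
incidence = np.array([[1, 0, 0],
                      [1, 1, 0],
                      [1, 0, 1],
                      [0, 1, 1],
                      [0, 0, 1]], dtype=float)
rng = np.random.default_rng(0)
features = rng.normal(size=(5, 4))
theta = rng.normal(size=(4, 2))            # stands in for the learned weights
connectivity = normalized_connectivity(incidence)
updated = hypergraph_conv(connectivity, features, theta)
```

With identity hyperedge weights, as used in the experiments, the weighted node degree reduces to the plain count of hyperedges per hypernode. The connectivity is an $N \times N$ matrix, which is what allows it to be sliced by node index in the pooling layers.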

2.2.2 Hypergraph pooling layer

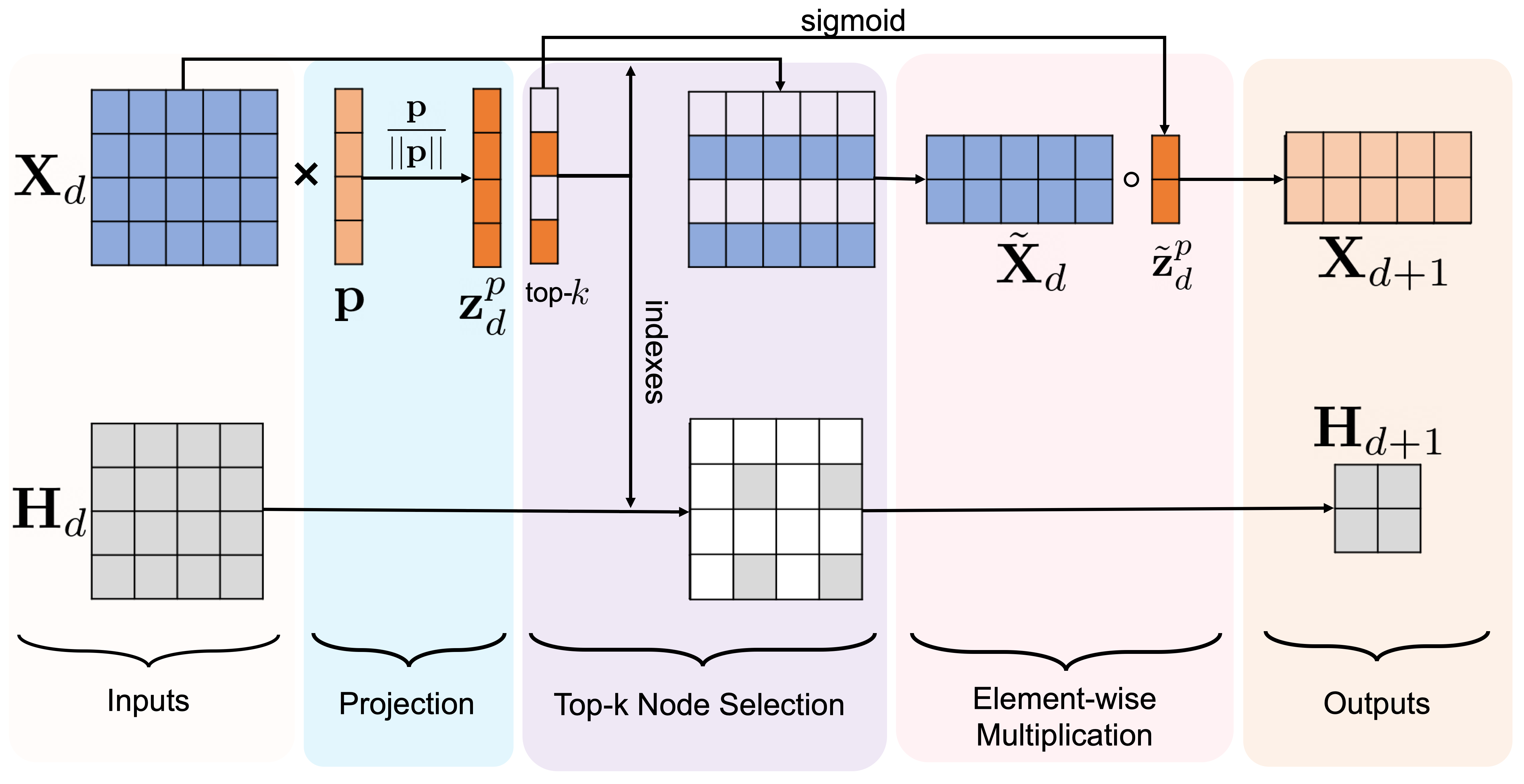

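We propose a hypergraph pooling (hPool) layer to down-sample our hypergraph. Instead of passing the graph adjacency matrix to our pooling layer as in (Gao and Ji, 2019), we use the normalized hypergraph connectivity $\hat{\mathbf{H}}$ described in the previous part in order to adapt the pooling operation to hypergraphs (Fig. 3). This layer is used to choose a subset of hypernodes that form a smaller hypergraph while losing as little information as possible. In order to learn how to select such hypernodes, a trainable projection vector $\mathbf{p}$ is used to map all hypernode features to a real-valued score. This projection allows the use of top-$k$ hypernode pooling for selecting the most important hypernodes. The individual hypernode scores represent how much information is preserved after the projection onto the vector $\mathbf{p}$. This means that selecting the top scoring $k$ hypernodes to form the new hypergraph would maximize information preservation. We define the layer-wise propagation rule for this pooling layer as follows:

$$\begin{aligned}
\mathbf{y} &= \mathbf{X}^{l} \mathbf{p}^{l} / \lVert \mathbf{p}^{l} \rVert, \\
\mathrm{idx} &= \mathrm{rank}(\mathbf{y}, k), \\
\tilde{\mathbf{y}} &= \mathrm{sigmoid}(\mathbf{y}(\mathrm{idx})), \\
\tilde{\mathbf{X}}^{l} &= \mathbf{X}^{l}(\mathrm{idx}, :), \\
\hat{\mathbf{H}}^{l+1} &= \hat{\mathbf{H}}^{l}(\mathrm{idx}, \mathrm{idx}), \\
\mathbf{X}^{l+1} &= \tilde{\mathbf{X}}^{l} \odot \left( \tilde{\mathbf{y}} \mathbf{1}_{d}^{\top} \right)
\end{aligned} \tag{5}$$

where $\mathbf{X}^{l}$ and $\hat{\mathbf{H}}^{l}$ denote the feature embedding and hypergraph connectivity, respectively, at depth $l$, with $d$ denoting the feature embedding dimension. $\mathbf{y}$ is the output of the projection of $\mathbf{X}^{l}$ onto $\mathbf{p}^{l}$. The $\mathrm{rank}(\mathbf{y}, k)$ operation returns the indexes of the $k$ nodes with the largest scores in $\mathbf{y}$. These indexes are then used to produce the pooled feature embeddings $\tilde{\mathbf{X}}^{l}$ and the pooled hypergraph connectivity $\hat{\mathbf{H}}^{l+1}$. We select the $k$ largest entries from $\mathbf{y}$ and apply a sigmoid function to produce $\tilde{\mathbf{y}}$. Next, we apply an element-wise multiplication, represented by $\odot$, to $\tilde{\mathbf{X}}^{l}$ and $\tilde{\mathbf{y}} \mathbf{1}_{d}^{\top}$, thereby generating the new feature embedding $\mathbf{X}^{l+1}$ to pass onto the next HUNet level along with $\hat{\mathbf{H}}^{l+1}$. Note that only the dimensionality of the hypergraph connectivity changes from layer to layer during both encoding (i.e., pooling) and decoding (i.e., unpooling) steps in the HUNet architecture (Fig. 2).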
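The pooling steps described above (score, select the top-scoring hypernodes, gate, slice the connectivity) can be sketched as follows. This is an illustrative sketch patterned on the top-k pooling of Gao and Ji (2019); the function name `h_pool`, the fixed projection vector, and the placeholder connectivity values are assumptions, and in practice the projection vector is trained.

```python
import numpy as np

def h_pool(features, connectivity, projection, k):
    """Keep the k hypernodes with the largest projection scores."""
    scores = features @ projection / np.linalg.norm(projection)
    idx = np.argsort(-scores)[:k]                  # indices of the top-k scores
    gate = 1.0 / (1.0 + np.exp(-scores[idx]))      # sigmoid of the kept scores
    pooled_features = features[idx] * gate[:, None]
    pooled_connectivity = connectivity[np.ix_(idx, idx)]
    return pooled_features, pooled_connectivity, idx

# Toy inputs (hypothetical data): 4 hypernodes with 2 features each.
features = np.array([[1.0, 0.0], [3.0, 0.0], [2.0, 0.0], [-1.0, 0.0]])
connectivity = np.arange(16, dtype=float).reshape(4, 4)   # placeholder values
projection = np.array([1.0, 0.0])                         # trainable in practice
pooled_x, pooled_h, idx = h_pool(features, connectivity, projection, k=2)
```

Multiplying the kept features by the sigmoid gate is what makes the projection vector reachable by gradients, since the index selection itself is not differentiable. The returned `idx` must be stored so that the matching unpooling layer can restore node positions.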

2.2.3 Hypergraph unpooling layer

An unpooling operation is needed in order to up-sample the hypergraph data that was previously pooled in the hypergraph encoding phase. To this end, we propose a hypergraph unpooling layer (hUnpool) that generalizes the unpooling operation proposed in (Gao and Ji, 2019) to hypergraphs by leveraging the normalized hypergraph connectivity $\hat{\mathbf{H}}$. This layer takes the connectivity information about the removed nodes from the hPool layer at the same level of the HUNet to reconstruct the hypergraph and place back the removed nodes (Fig. 2). However, the nodes are placed back with empty feature vectors, which are filled in using the hypergraph convolution operation (Eq. 4).
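A minimal sketch of this restore-then-fill behavior is given below. It assumes that the indices kept by the matching pooling layer and the pre-pooling connectivity were saved during encoding; the function name `h_unpool`, the uniform placeholder connectivity, and the identity weights are illustrative assumptions only.

```python
import numpy as np

def h_unpool(pooled_features, idx, num_nodes):
    """Place pooled rows back at their original positions; removed nodes get zeros."""
    restored = np.zeros((num_nodes, pooled_features.shape[1]))
    restored[idx] = pooled_features
    return restored

# Hypothetical example: 4 hypernodes before pooling, nodes 1 and 2 were kept.
idx = np.array([1, 2])
pooled_features = np.array([[0.5, 1.0],
                            [2.0, 3.0]])
restored = h_unpool(pooled_features, idx, num_nodes=4)

# The connectivity saved by the matching pooling level is reused as-is; a
# following convolution then fills the empty rows from connected hypernodes.
saved_connectivity = np.full((4, 4), 0.25)   # placeholder connectivity
theta = np.eye(2)                            # placeholder weights
filled = saved_connectivity @ restored @ theta
```

After the convolution, the rows that were zero receive features diffused from the hypernodes that survived pooling.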

Using a hypergraph U-Net instead of a graph U-Net gives the ability to preserve high-order sample interactions when mapping data samples into a low-dimensional space. The normalized hypergraph connectivity drives the hypergraph convolution in both the encoding (top-down) and decoding (bottom-up) phases as illustrated in Fig. 2. Blocks of hypergraph pooling and hypergraph unpooling layers are stacked in order to construct the HUNet architecture. Hypergraph convolution follows every hypergraph pooling layer to update the features of the nodes using their first-order local neighbors, as well as every hypergraph unpooling layer to fill in the empty node features that were added back. The algorithm of our HUNet architecture for a given depth is detailed in Algorithm 1.

3 Results

3.1 Evaluation Dataset

We evaluate our HUNet and comparison state-of-the-art methods on small-scale and large-scale connectomic datasets derived from different neuroimaging modalities (structural and functional MRI) to demonstrate the ability of HUNet in better generalizing across data scales and handling heterogeneous data distributions.

Small-scale morphological data We use a subset of the ADNI GO (http://adni.loni.usc.edu) public dataset, consisting of 77 subjects (41 AD and 36 Late Mild Cognitive Impairment), where each subject has a structural T1-w MR image (Mueller et al., 2005). Data used in the preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). The ADNI was launched in 2003 as a public-private partnership, led by Principal Investigator Michael W. Weiner, MD. The primary goal of ADNI has been to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer’s disease (AD). For preprocessing, we follow the steps defined by (Mahjoub et al., 2018). In order to reconstruct left and right cortical hemispheres from T1-w MRI (Fischl, 2004), the FreeSurfer (Fischl, 2012) processing pipeline was used for each subject. Next, each cortical hemisphere was divided into 35 cortical regions using the Desikan-Killiany cortical atlas (Fischl, 2004). We then use four cortical attributes (maximum principal curvature, cortical thickness, sulcal depth and average curvature) to derive four morphological brain connectivity matrices. For each attribute, we extract a feature vector by retrieving the off-diagonal upper triangular elements of the attribute-specific connectivity matrix.

Large-scale functional network data We also evaluate our method on a large-scale functional network dataset, consisting of 517 subjects (245 ASD and 272 Control) from the ABIDE (http://preprocessed-connectomes-project.org/abide/) preprocessed dataset (Cameron et al., 2013). Different preprocessing steps were carried out by the data processing assistant for resting-state fMRI (DPARSF) pipeline, which is built on statistical parametric mapping (SPM) and the resting-state fMRI data analysis toolkit (REST). In order to ensure a steady signal, the first 10 volumes of the rs-fMRI images were discarded. Based on a six-parameter (rigid body) transformation, all images were slice timing corrected and realigned to the middle in order to cut down on inter-scan head motion (Tang et al., 2018). After this step, the functional data were registered in Montreal Neurological Institute (MNI) space using a resolution of . In order to boost the signal to noise ratio, spatial smoothing was then applied using a Gaussian kernel of 6 mm. Lastly, band-pass filtering (0.01-0.1 Hz) was applied to the time series of each voxel (Price et al., 2014; Huang et al., 2017). Detailed explanations for these steps can be found at http://preprocessed-connectomes-project.org/abide/. Each brain rs-fMRI was partitioned into 116 ROIs to construct connectivity matrices, from which we select the upper off-diagonal triangles as feature vectors for the individual subjects (hypernodes).
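The feature extraction step used for both datasets, which keeps the off-diagonal upper triangle of each symmetric connectivity matrix, can be sketched as follows. The function name and the randomly generated stand-in matrix are assumptions for illustration; no real connectome is used here.

```python
import numpy as np

def connectivity_to_features(matrix):
    """Vectorize the strictly upper-triangular (off-diagonal) part of a square matrix."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]

small = np.array([[0.0, 0.1, 0.2],
                  [0.1, 0.0, 0.3],
                  [0.2, 0.3, 0.0]])
small_vector = connectivity_to_features(small)

# A 116-ROI matrix yields 116 * 115 / 2 = 6670 features per subject.
rng = np.random.default_rng(0)
half = rng.random((116, 116))
functional = (half + half.T) / 2     # random symmetric stand-in, not real data
functional_vector = connectivity_to_features(functional)
```

Because the matrices are symmetric, the upper triangle carries every distinct connectivity weight exactly once.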

3.2 Evaluation and comparison methods

Performance measures We evaluated the performance of the methods for node classification by using the results derived from the morphological and functional connectomic datasets in terms of accuracy, sensitivity and specificity. For the ADNI dataset we also averaged the results on the four morphological attributes to calculate an overall result.
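For reference, the three reported measures can be computed from predicted and true labels as in the sketch below. The label vectors are made-up examples, and treating label 1 as the positive (patient) class is an assumption.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity (true positive rate) and specificity (true negative rate)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }

# Hypothetical labels for five test subjects.
y_true = [1, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1]
metrics = classification_metrics(y_true, y_pred)
```

The sketch assumes both classes occur in `y_true`; otherwise one of the denominators is zero.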

Parameter setting The initial hypergraph was constructed from the features using -nearest neighbors algorithm with for the morphological dataset and for the functional dataset as it has more nodes. We used for both GUNet and HUNet and for HUNet and for GUNet in both datasets. We set the learning rate to across architectures and datasets. For HGNN, we used 2 hypergraph convolutional layers with a dropout layer in-between at rate.
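One common way to build a hypergraph from sample features with nearest neighbors is sketched below: each sample acts as a centroid and forms one hyperedge together with its nearest neighbors. Whether the hyperedge includes the centroid, the use of Euclidean distance, and the value of `k` in the example are all assumptions of this sketch rather than settings reported here.

```python
import numpy as np

def knn_incidence(features, k):
    """One hyperedge per sample: the sample plus its k nearest neighbors (Euclidean)."""
    diffs = features[:, None, :] - features[None, :, :]
    dist = np.sqrt((diffs ** 2).sum(axis=-1))
    n = features.shape[0]
    incidence = np.zeros((n, n))
    for center in range(n):
        # The center itself is at distance 0, so it is among the k + 1 closest.
        members = np.argsort(dist[center])[: k + 1]
        incidence[members, center] = 1.0
    return incidence

# Toy 1-D features for four samples (hypothetical data, no distance ties).
features = np.array([[0.0], [1.0], [2.5], [10.0]])
incidence = knn_incidence(features, k=1)
```

This construction yields as many hyperedges as samples, each containing `k + 1` hypernodes, so a larger `k` produces denser high-order connectivity.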

Evaluation Table 2 compares the performance of HUNet to our baselines on the morphological connectomic dataset in terms of classification accuracy, sensitivity and specificity. HUNet outperforms the baseline methods on most connectomic views, improving the classification accuracy by a margin of 7-14%. As for the large-scale functional dataset, HUNet outperformed the other baselines by 4% as shown in Table 3. Taken together, the results show that HUNet achieves a classification accuracy gain of 4-14% across multi-scale heterogeneous datasets in comparison with state-of-the-art methods. This shows that our model is scalable and generalizable.

| Model | Max principal curvature ACC | Max principal curvature SEN | Max principal curvature SPEC | Cortical thickness ACC | Cortical thickness SEN | Cortical thickness SPEC | Sulcal depth ACC | Sulcal depth SEN | Sulcal depth SPEC | Average curvature ACC | Average curvature SEN | Average curvature SPEC | Overall ACC | Overall SEN | Overall SPEC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GUNet (Gao and Ji, 2019) | | | | | | | | | | | | | | | |
| HGNN (Feng et al., 2019) | | | | | | | | | | | | | | | |
| HUNet (ours) | | | | | | | | | | | | | | | |

4 Discussion

We have presented HUNet, a hypergraph embedding architecture for learning high-order representative data embeddings that surpasses state-of-the-art network embedding frameworks. Our embedding architecture is designed to overcome the inability of existing deep graph embedding architectures to learn from the many-to-many relationships between different nodes (i.e., data samples). With our proposed framework, we treated the brain graph of each patient as a node in a hypergraph structure, learned a feature embedding which recapitulates the higher-order relationships prevalent between different subjects, and used this feature embedding to classify nodes (i.e., subjects) into different brain states. Finally, we demonstrated that our method outperforms existing approaches on two different types of brain connectomic datasets for neurological disorder diagnosis.

Our experimental results showed that HUNet was able to improve on GUNet architecture by an average of % on the small-scale morphological dataset, achieving up to a % difference in terms of classification accuracy in the individual views as shown in (Table. 2). We also demonstrated that HUNet outperforms GUNet on the large-scale functional network dataset by a margin of % as listed in (Table. 3). This supports our claim of improving on graph-based methods through the use of hypergraphs to preserve high-order sample interactions when mapping data samples into a low-dimensional space. We also compared our method to HGNN architecture, which is the first geometric deep learning model on hypergraph structure. Clearly, HUNet outperformed the HGNN architecture (Feng et al., 2019) by an average of % on the small-scale morphological dataset, demonstrating improvements of up to % in the individual views in terms of classification accuracy (Table. 2). We also observe an improvement of % on the large-scale functional network dataset as shown in (Table. 3). This further demonstrates that our hypergraph feature embedding architecture leveraging pooling and unpooling layers through the use of a U-Net encoder-decoder architecture is able to learn more representative and discriminative embeddings of the data features in comparison with solely relying on hypergraph convolutions for learning the hypernode embeddings as for HGNN (Feng et al., 2019).

Limitations and recommendations for future work The parameters to optimize in the design of the HUNet architecture include the depth of the HUNet and the pooling ratios used in the hPool layers. Although we demonstrated the generalizability and scalability of our method, we note that if the depth parameter is increased too much the generalization ability weakens, which results in over-fitting. On the other hand, a large decrease in the pooling ratio, which means lowering the number of nodes to keep at each level of the HUNet, may result in having too few nodes to train on when coupled with a high depth parameter, particularly for small-scale datasets. In future work, one can integrate a hyper attention mechanism to improve the quality of our hyper pooling and unpooling layers as in the graph attention network (Veličković et al., 2017).

Broader impact Dimensionality reduction or sample embedding is a fundamental step in many machine learning tasks such as classification, regression, and clustering to overcome the curse of dimensionality. Hence, learning how to embed samples into a low-dimensional space will have a broader impact in many real-world artificial intelligence applications. In this work, we leveraged the structure of a hypergraph to incorporate the high-order relationships existing among subsets of samples in real-life data. In fact, hypergraph representation learning has been lagging behind compared to its graph counterpart in geometric deep learning (Bronstein et al., 2017). In this work we extended the field of hypergraph representation learning to encoder-decoder architectures through the generalization of pooling and unpooling operations to hypergraphs within a U-Net architecture.

Specifically, we addressed a fundamental scientific question: How to encode and decode many-to-many high-order relationships present in real life datasets to improve predictive learning tasks? More importantly, we rooted the application of our proposed hypergraph encoder-decoder architecture in the field of network neuroscience with the aim of driving precision medicine forward. Our interdisciplinary work combined three research fields: data embedding, network neuroscience, and precision medicine, having different societal and economic impacts. First, it propelled the development of automated neurological disorder diagnosis systems. This can alleviate the societal burden of brain disorders by ensuring early and accurate automated diagnosis for effective treatment. Our proposed HUNet architecture treats the subjects as different nodes in a hypergraph, giving insights into the disordered brain alterations in neurological disorder patients, which can help tease apart variations in disorders. This will impact the future of disordered brain connectivity related treatment and diagnosis methods. Second, the development of the field of hypergraph-based data embedding can widen the horizon of geometric deep learning and its applications to complex samples with various interaction patterns.

5 Conclusion

In this paper we proposed the HUNet architecture, where the key idea is to learn a many-to-many node embedding with a high-order feature aggregation rule by leveraging the structure of hypergraphs as they are able to model high-order interactions between subsets of nodes compared to existing deep graph-based embedding methods. With this mindset, we proposed the hypergraph pooling (hPool) and hypergraph unpooling (hUnpool) layers and generalized the U-Net architecture to hypergraphs. Using HUNet, we outperformed state-of-the-art graph and hypergraph sample embedding architectures using brain connectome datasets of varying scales, disorders, and distributions. Since our proposed HUNet architecture captures interactions between subsets of patients as hypernodes to pool in the feature embedding process, one can investigate the interpretability of the learned pooling and unpooling weights in order to group subjects with similar disordered brain alterations together. Designing an interpretable HUNet as for generative adversarial networks (GANs) in (Chen et al., 2016) can help propel the field of precision medicine with the aim of gaining further insights into disordered brain alterations caused by neurological disorders and most importantly their variation across subsets of patients. We refer interested readers to our GitHub HUNet source code available at https://github.com/basiralab/HUNet.

6 Acknowledgements

This work was funded by generous grants from the European H2020 Marie Sklodowska-Curie action (grant no. 101003403) to I.R.

References

- Ahmed et al. (2013) Ahmed, A., Shervashidze, N., Narayanamurthy, S., Josifovski, V., Smola, A.J., 2013. Distributed large-scale natural graph factorization. Proceedings of the 22nd international conference on World Wide Web - WWW ’13 .

- Alexander-Bloch et al. (2012) Alexander-Bloch, A.F., Vértes, P.E., Stidd, R., Lalonde, F., Clasen, L., Rapoport, J., Giedd, J., Bullmore, E.T., Gogtay, N., 2012. The anatomical distance of functional connections predicts brain network topology in health and schizophrenia. Cerebral Cortex 23, 127–138.

- Banka and Rekik (2019) Banka, A., Rekik, I., 2019. Adversarial connectome embedding for mild cognitive impairment identification using cortical morphological networks. International Workshop on Connectomics in Neuroimaging , 74–82.

- Bessadok et al. (2019a) Bessadok, A., Mahjoub, M.A., Rekik, I., 2019a. Hierarchical adversarial connectomic domain alignment for target brain graph prediction and classification from a source graph. International Workshop on PRedictive Intelligence In MEdicine , 105–114.

- Bessadok et al. (2019b) Bessadok, A., Mahjoub, M.A., Rekik, I., 2019b. Symmetric dual adversarial connectomic domain alignment for predicting isomorphic brain graph from a baseline graph. International Conference on Medical Image Computing and Computer-Assisted Intervention , 465–474.

- Bronstein et al. (2017) Bronstein, M.M., Bruna, J., LeCun, Y., Szlam, A., Vandergheynst, P., 2017. Geometric deep learning: going beyond Euclidean data. IEEE Signal Processing Magazine 34, 18–42.

- Buckner et al. (2009) Buckner, R.L., Sepulcre, J., Talukdar, T., Krienen, F.M., Liu, H., Hedden, T., Andrews-Hanna, J.R., Sperling, R.A., Johnson, K.A., 2009. Cortical hubs revealed by intrinsic functional connectivity: Mapping, assessment of stability, and relation to Alzheimer’s disease. Journal of Neuroscience 29, 1860–1873.

- Cai et al. (2018) Cai, H., Zheng, V.W., Chang, K.C.C., 2018. A comprehensive survey of graph embedding: Problems, techniques, and applications. IEEE Transactions on Knowledge and Data Engineering 30, 1616–1637.

- Cameron et al. (2013) Cameron, C., Yassine, B., Carlton, C., Francois, C., Alan, E., András, J., Budhachandra, K., John, L., Qingyang, L., Michael, M., et al., 2013. The neuro bureau preprocessing initiative: open sharing of preprocessed neuroimaging data and derivatives. Frontiers in Neuroinformatics 7.

- Cao et al. (2015) Cao, S., Lu, W., Xu, Q., 2015. GraRep: Learning graph representations with global structural information, in: Proceedings of the 24th ACM international on conference on information and knowledge management, pp. 891–900.

- Cao et al. (2016) Cao, S., Lu, W., Xu, Q., 2016. Deep neural networks for learning graph representations, in: Thirtieth AAAI conference on artificial intelligence.

- Chen et al. (2016) Chen, X., Duan, Y., Houthooft, R., Schulman, J., Sutskever, I., Abbeel, P., 2016. InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets. Advances in Neural Information Processing Systems 29 , 2172–2180.

- Dai et al. (2016) Dai, H., Dai, B., Song, L., 2016. Discriminative embeddings of latent variable models for structured data, in: International conference on machine learning, pp. 2702–2711.

- Fang et al. (2014) Fang, Q., Sang, J., Xu, C., Rui, Y., 2014. Topic-sensitive influencer mining in interest-based social media networks via hypergraph learning. IEEE Transactions on Multimedia 16, 796–812.

- Feng et al. (2019) Feng, Y., You, H., Zhang, Z., Ji, R., Gao, Y., 2019. Hypergraph neural networks. Proceedings of the AAAI Conference on Artificial Intelligence 33, 3558–3565.

- Fischl (2004) Fischl, B., 2004. Automatically parcellating the human cerebral cortex. Cerebral Cortex 14, 11–22.

- Fischl (2012) Fischl, B., 2012. FreeSurfer. NeuroImage 62, 774–781.

- Fornito et al. (2015) Fornito, A., Zalesky, A., Breakspear, M., 2015. The connectomics of brain disorders. Nature Reviews Neuroscience 16, 159–172.

- Fornito et al. (2012) Fornito, A., Zalesky, A., Pantelis, C., Bullmore, E.T., 2012. Schizophrenia, neuroimaging and connectomics. NeuroImage 62, 2296–2314.

- Fouss et al. (2007) Fouss, F., Pirotte, A., Renders, J.M., Saerens, M., 2007. Random-walk computation of similarities between nodes of a graph with application to collaborative recommendation. IEEE Transactions on Knowledge and Data Engineering 19, 355–369.

- Gao and Ji (2019) Gao, H., Ji, S., 2019. Graph U-Nets. arXiv preprint arXiv:1905.05178 .

- Goyal and Ferrara (2018) Goyal, P., Ferrara, E., 2018. Graph embedding techniques, applications, and performance: A survey. Knowledge-Based Systems 151, 78–94.

- Grover and Leskovec (2016) Grover, A., Leskovec, J., 2016. node2vec. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining .

- Heuvel and Sporns (2019) Heuvel, M.P.V.D., Sporns, O., 2019. A cross-disorder connectome landscape of brain dysconnectivity. Nature Reviews Neuroscience 20, 435–446.

- Huang et al. (2017) Huang, X.H., Song, Y.Q., Liao, D.A., Lu, H., 2017. Detecting community structure based on optimized modularity by genetic algorithm in resting-state fMRI. Advances in Neural Networks - ISNN 2017 Lecture Notes in Computer Science , 457–464.

- Ji et al. (2018) Ji, R., Chen, F., Cao, L., Gao, Y., 2018. Cross-modality microblog sentiment prediction via bi-layer multimodal hypergraph learning. IEEE Transactions on Multimedia 21, 1062–1075.

- Kipf and Welling (2017) Kipf, T.N., Welling, M., 2017. Semi-supervised classification with graph convolutional networks, in: International Conference on Learning Representations (ICLR).

- Ktena et al. (2017) Ktena, S.I., Parisot, S., Ferrante, E., Rajchl, M., Lee, M., Glocker, B., Rueckert, D., 2017. Distance metric learning using graph convolutional networks: Application to functional brain networks. International Conference on Medical Image Computing and Computer-Assisted Intervention , 469–477.

- Liu et al. (2017) Liu, Z., Zheng, V.W., Zhao, Z., Zhu, F., Chang, K.C.C., Wu, M., Ying, J., 2017. Semantic proximity search on heterogeneous graph by proximity embedding, in: Thirty-First AAAI Conference on Artificial Intelligence.

- Mahjoub et al. (2018) Mahjoub, I., Mahjoub, M.A., Rekik, I., 2018. Brain multiplexes reveal morphological connectional biomarkers fingerprinting late brain dementia states. Scientific Reports 8, 4103.

- Morris and Rekik (2017) Morris, C., Rekik, I., 2017. Autism spectrum disorder diagnosis using sparse graph embedding of morphological brain networks. Graphs in Biomedical Image Analysis, Computational Anatomy and Imaging Genetics , 12–20.

- Mueller et al. (2005) Mueller, S.G., Weiner, M.W., Thal, L.J., Petersen, R.C., Jack, C., Jagust, W., Trojanowski, J.Q., Toga, A.W., Beckett, L., 2005. The Alzheimer’s disease neuroimaging initiative. Neuroimaging Clinics of North America 15, 869–877.

- Newman (2005) Newman, M.E.J., 2005. A measure of betweenness centrality based on random walks. Social Networks 27, 39–54.

- Park and Friston (2013) Park, H.J., Friston, K., 2013. Structural and functional brain networks: From connections to cognition. Science 342, 1238411.

- Perozzi et al. (2014) Perozzi, B., Al-Rfou, R., Skiena, S., 2014. DeepWalk. Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining - KDD ’14 .

- Price et al. (2014) Price, T., Wee, C.Y., Gao, W., Shen, D., 2014. Multiple-network classification of childhood autism using functional connectivity dynamics. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2014 Lecture Notes in Computer Science , 177–184.

- Ronneberger et al. (2015) Ronneberger, O., Fischer, P., Brox, T., 2015. U-Net: Convolutional networks for biomedical image segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention , 234–241.

- Soussia and Rekik (2017) Soussia, M., Rekik, I., 2017. High-order connectomic manifold learning for autistic brain state identification. International Workshop on Connectomics in Neuroimaging , 51–59.

- Tang et al. (2018) Tang, C., Wei, Y., Zhao, J., Nie, J., 2018. The dynamic measurements of regional brain activity for resting-state fMRI: d-ALFF, d-fALFF and d-ReHo. Medical Image Computing and Computer Assisted Intervention – MICCAI 2018 Lecture Notes in Computer Science , 190–197.

- Tang et al. (2015) Tang, J., Qu, M., Wang, M., Zhang, M., Yan, J., Mei, Q., 2015. LINE: Large-scale information network embedding, in: Proceedings of the 24th international conference on world wide web, pp. 1067–1077.

- Tang et al. (2016) Tang, M., Nie, F., Jain, R., 2016. Capped lp-norm graph embedding for photo clustering. Proceedings of the 2016 ACM on Multimedia Conference - MM ’16 .

- Veličković et al. (2017) Veličković, P., Cucurull, G., Casanova, A., Romero, A., Lio, P., Bengio, Y., 2017. Graph attention networks. arXiv preprint arXiv:1710.10903 .

- Wang et al. (2016) Wang, D., Cui, P., Zhu, W., 2016. Structural deep network embedding, in: Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining, pp. 1225–1234.

- Wang et al. (2017) Wang, H., Wang, J., Wang, J., Zhao, M., Zhang, W., Zhang, F., Xie, X., Guo, M., 2017. GraphGAN: Graph representation learning with generative adversarial nets. arXiv preprint arXiv:1711.08267 .

- Yu et al. (2012) Yu, J., Tao, D., Wang, M., 2012. Adaptive hypergraph learning and its application in image classification. IEEE Transactions on Image Processing 21, 3262–3272.

- Zhang et al. (2017) Zhang, Z., Bai, L., Liang, Y., Hancock, E., 2017. Joint hypergraph learning and sparse regression for feature selection. Pattern Recognition 63, 291–309.

- Zhou et al. (2006) Zhou, D., Huang, J., Schölkopf, B., 2006. Learning with hypergraphs: Clustering, classification, and embedding. NIPS, Vancouver, British Columbia, Canada , 1601–1608.