Deep Learning and Explainable AI: New Pathways to Genetic Insights

Abstract

Deep learning-based AI models have been extensively applied in genomics, achieving remarkable success across diverse applications. As these models gain prominence, there exists an urgent need for interpretability methods to establish trustworthiness in model-driven decisions. For genetic researchers, interpretable insights derived from these models hold significant value in providing novel perspectives for understanding biological processes. Current interpretability analyses in genomics predominantly rely on intuition and experience rather than rigorous theoretical foundations. In this review, we systematically categorize interpretability methods into input-based and model-based approaches, while critically evaluating their limitations through concrete biological application scenarios. Furthermore, we establish theoretical underpinnings to elucidate the origins of these constraints through formal mathematical demonstrations, aiming to assist genetic researchers in better understanding and designing models in the future. Finally, we provide feasible suggestions for future research on interpretability in the field of genetics.

123

1 Introduction

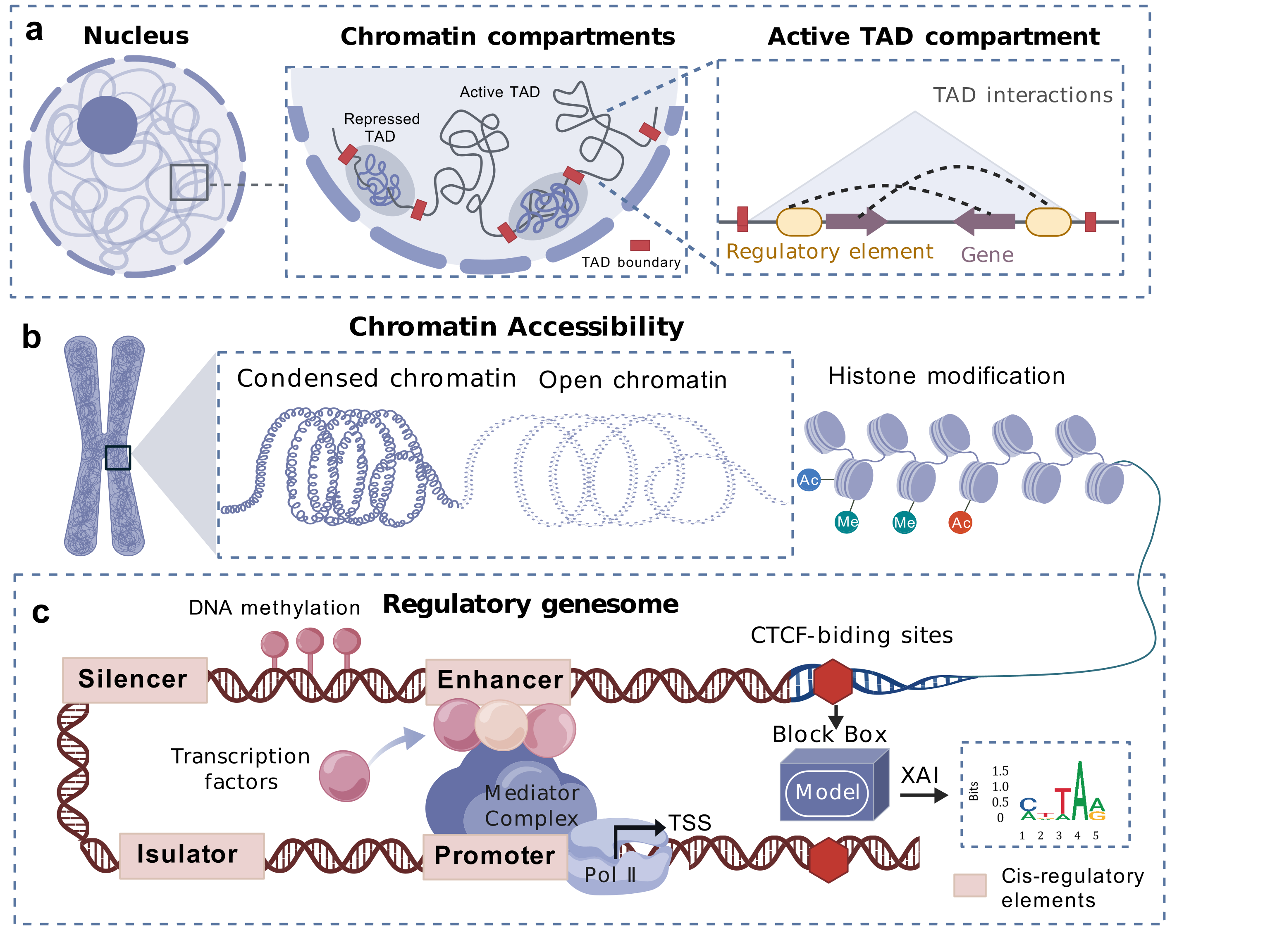

Deep learning has demonstrated powerful modeling and analytical capabilities in 3D genomics and regulatory genomics, enabling efficient mining of complex regulatory patterns from high-dimensional genomic data [17]. In 3D genomics, deep learning models can predict spatial features of chromatin, including dynamic changes of topologically associating domains (TADs) [42], chromatin compartments [42], and chromatin loop [19], as shown in Figure 1(a), decoding genome function from sequence through structure [11]. These models can also decipher cell-type-specific chromosomal spatial organization patterns [30], quantify spatial interaction strengths between genomic loci, and uncover their associations with gene expression regulation. In regulatory genomics, deep learning facilitates the integration of multi-omics data (e.g., Hi-C [18], ATAC-seq [16], HiChIP [26]) to predict regulatory variants of DNA methylation [43], predict potential motif-motif interactions [2, 10], efficiently identify cis-regulatory elements [39] and infer cooperative transcription factor binding networks [10], as presented in Figure 1(b, c).

Although deep learning methods have achieved remarkable success in these biological fields, due to the use of multi-layer nonlinear transformations, complex architectures, and a large number of parameters, deep learning models are often perceived as “ black boxes” by humans, making their internal mechanisms difficult to interpret and understand. The inherent “ black-box ” nature of these models not only limits their reliability and practical applicability, but also makes it hard to understand how their predictions relate to actual biological processes, which hinders the translation of research findings into biological mechanisms [3].

In recent years, the development of eXplainable AI (XAI) methods has emerged as a promising approach to address the “ black-box” challenge [25]. These methods aim to reveal the inner workings of neural network models in ways that are understandable to humans, or to explain how specific input features influence the prediction of model, thereby enhancing model transparency and trustworthiness. Therefore, many recent studies have employed interpretable deep learning methods to gain biologically relevant insights, such as identifying cis-regulatory elements that influence gene expression [34]. In this work, we systematically review and categorize the current interpretability techniques accordingly applied to two major tasks: 3D genome modeling and gene regulatory network prediction.

Despite various interpretable deep learning methods have shown promising results, each comes with its own limitations, and there remains a lack of rigorous mathematical proofs regarding the specific limitations of their applicability. In particular, many critical claims in existing studies (e.g.,“ enforcing transparency techniques may compromise model performance” [28]) are primarily based on empirical observations and lack solid theoretical foundations. To address this gap, we provide theoretical analyses of the limitations of certain interpretability methods.

The contributions of this paper are as follows:

• We propose a novel classification framework that categorizes interpretable deep learning methods in genomics into two types: (1) Input interpretability, encompassing convolutional kernel visualization, gradient-based methods, and perturbation-based methods. (2) Model interpretability, including attention mechanisms and transparent models based on biological prior knowledge.

• We not only provide intuitive explanations of each method and introduce their applications but also rigorously reveal the inherent limitations of selected interpretable approaches through mathematical derivation, thus offering theoretical support for method evaluation and selection.

2 Interpretable methods

In this section, we systematically introduce interpretable approaches in deep learning applications for genomics, categorizing them into two main types: input interpretability and model interpretability. Input interpretability methods aim to explore key features learned from the input or to identify input features that have a significant impact on the predictions, employing techniques such as convolutional kernel visualization to identify learned sequence motifs, gradient-based methods to quantify feature importance, and perturbation-based analyses to assess the functional impact of input modifications. In contrast, model interpretability approaches, enhance transparency through architectural designs, including attention mechanisms that reveal the relationships between features and biologically inspired transparent models that explicitly align network components with known biological entities. The methods are systematically summarized in Table 1. The introduction of each method is as follows.

2.1 Input Interpretability

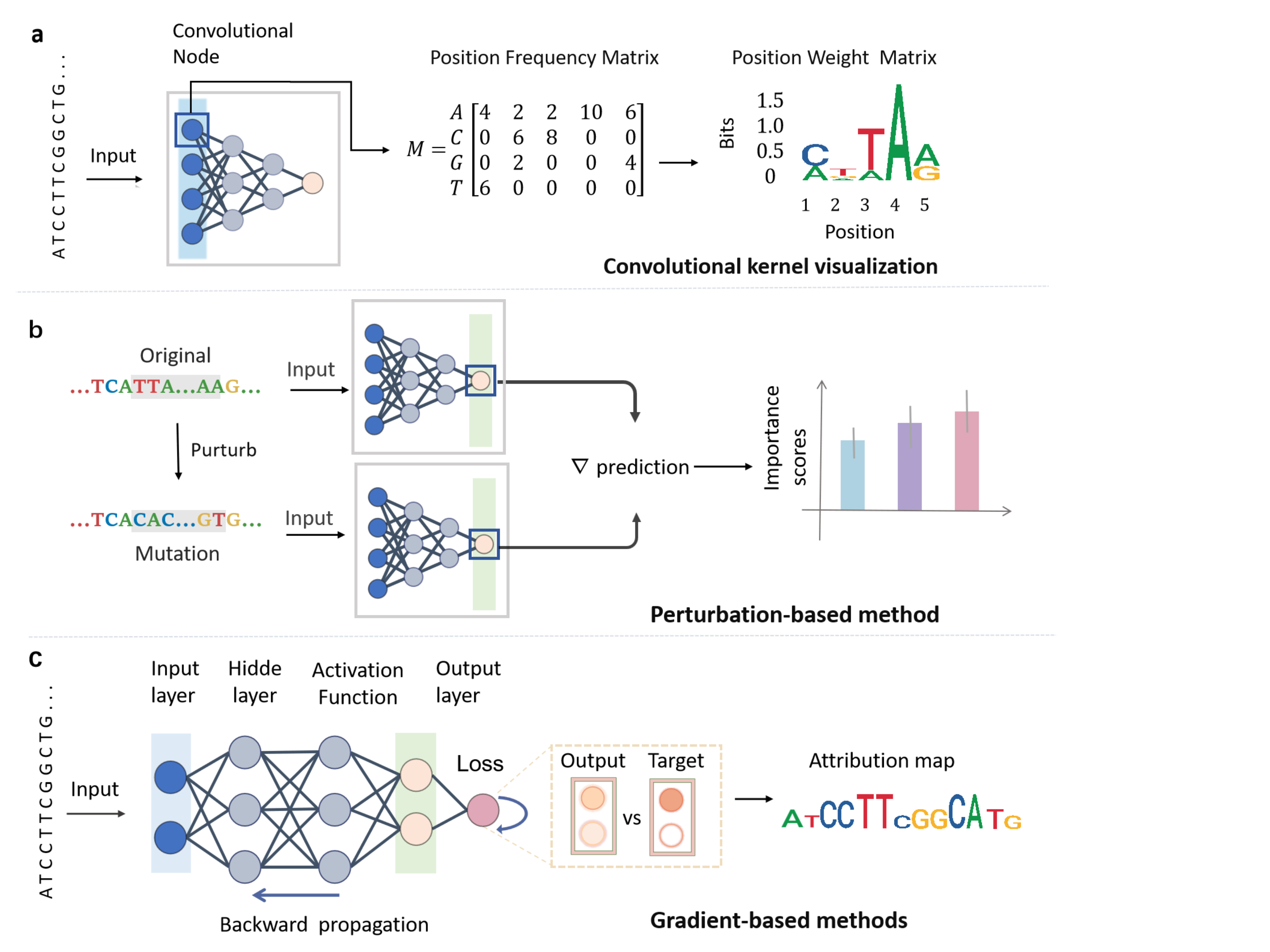

Input interpretability aims to reveal key patterns within the input features or to assess the importance of each feature for the prediction of the model. Unlike traditional k-mer-based feature engineering methods that directly reveal feature importance, deep neural networks employ multi-layer nonlinear transformations for automated feature extraction, resulting in opaque decision processes. To address this, there exist many input interpretability methods, which we further categorize into three classes: convolutional kernel visualization, gradient-based methods, and perturbation methods. Figure 2 illustrates the underlying principles of each method.

Convolutional kernel visualization. With the advancement of deep learning technology, convolutional neural networks (CNNs) have been widely applied in genomic research, such as enhancer prediction [24], transcription factor binding site prediction [41], and chromatin accessibility prediction [14]. CNNs can automatically extract features from DNA sequences. Specifically, CNNs slide multiple filters across the DNA sequence in the convolutional layers, obtaining activation values for each position relative to each filter. These activation values are then used as features and passed to fully connected layers for prediction. As shown in Figure 2(a), the convolutional kernel weights in the first layer are first converted to a position frequency matrix (PFM), followed by log-scaling to produce a standard position weight matrix (PWM) [1, 28], which captures a short sequence motif. Therefore, visualizing these filters can help us understand which sequence patterns the model has extracted that are crucial for prediction. A potential issue here is the constraints in weight learning: unconstrained or inappropriate constraints during weight learning may lead to scaling problems, causing the importance of certain sequences to be disproportionately emphasized or underestimated. In most studies, a commonly used visualization strategy involves computing the activation values of multiple sequences for each trained filter, indicating the degree of match between each sequence and the filter. The sequences with the highest activation values are then selected to generate a corresponding PWM, representing the motif learned by the filter. Then we can obtain motifs learned by multiple filters and match them with existing motif databases to verify whether the model has captured biologically relevant and important features. For example, DeepEnhancer [24] uses a CNN to predict enhancers from DNA sequences. Its first convolutional layer applies 128 filters of length 8 to extract features. By visualizing these filters, the model identifies motifs and matches them with the known transcription factor binding site database JASPAR [23], successfully recognizing biologically relevant motifs. Furthermore, Basset [14] recovered a large number of known DNA-binding protein motifs by analyzing the parameters of the 300 convolutional filters in the convolutional layer.

Gradient-based methods. Gradient-based importance analysis methods achieve global quantitative evaluation of feature importance by computing the partial derivatives of model outputs relative to input features. As shown in Figure 2(c), this approach utilizes backpropagation to compute the gradients of the loss function with respect to sequence features, where gradient magnitudes directly measure the contribution of individual nucleotide in the DNA sequence. For instance, in genomic analyses, positive/negative gradient peaks correspond to enhancer and silencer regions, respectively [15]. However, gradient-based methods are susceptible to vanishing gradients or gradient saturation. In deep neural networks or when using saturating activation functions, excessively small gradients may introduce bias in feature importance estimation. This limitation can be mitigated by integrated gradients, which correct importance scores through path integration [33]. Moreover, an alternative approach, DeepLIFT [31], provides local explanations by comparing the prediction differences between test instances and reference sequences (e.g., background nucleotide frequencies), thereby avoiding the saturation effects of gradient methods. DeepLIFT has been employed to identify critical nucleotides in splice sites [44]. The application of DeepLIFT algorithm successfully identified expression-predictive motifs (EPMs) in both 5’UTR and 3’UTR regions, which exhibit distinct sequence-specific positional preferences [29]. Notably, the attribution rules of DeePLIFT match Shapley values, providing a robust feature importance framework [21]. Both integrated gradients and DeepLIFT require a predefined reference baseline, and the choice of this baseline can significantly influence the accuracy of attribution results. However, there is currently no consensus or established guideline on how to select an appropriate reference.

| Tool Name and URL | Year | Strategy | Model | Application | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

|

2024 | Convolutional kernel visualization | U-net | Regulatory Genomics | ||||||

|

2025 |

|

|

Regulatory Genomics | ||||||

|

2016 | Convolutional kernel visualization | CNN | Regulatory Genomics | ||||||

|

2025 |

|

CNN, Transformer | 3D Genomics | ||||||

|

2022 | Perturbation-based methods | CNN | 3D Genomics | ||||||

|

2023 |

|

|

3D Genomics | ||||||

|

2025 | Perturbation-based methods | Random Forest | Regulatory Genomics | ||||||

|

2024 |

|

CNN | Regulatory Genomics | ||||||

|

2023 |

|

Transformer | Regulatory Genomics | ||||||

|

2024 |

|

|

Regulatory Genomics | ||||||

|

2025 | Gradient-based methods | Transformer | Regulatory Genomics | ||||||

|

2021 |

|

Transformer | Regulatory Genomics | ||||||

|

2018 |

|

Neural Network | 3D Genomics | ||||||

|

2021 | Transparent models | Neural Network | Regulatory Genomics |

Perturbation-based methods. Perturbation-based methods infer the importance of features by manipulating the input features and observing the changes in the output of the model. Perturbation-based interpretability methods were first introduced in the field of computer vision, where specific regions of an image are masked to observe changes in predictions, thereby assessing the importance of those regions [38]. This intuitive approach aligns well with human reasoning and has since been widely adopted in other domains. Similarly to image perturbation, one can consider altering certain specific segments in biological gene sequences to determine the importance of the features corresponding to these segments. For example, we can perturb parts of the sequence and observe changes in the output, as seen in Figure 2(b). The discrepancy between the predictions for these new sequences and the original sequence is quantified as the attribution score, serving as a critical metric for evaluating the functional significance of individual nucleotides in the context of the trained model. It is also feasible to mutate a specific nucleotide within the sequence into each of the other three nucleotides and observe the resultant changes in the output. By repeating this operation for all nucleotides, a matrix of dimensions can be obtained, which is commonly referred to as the attribution map [32]. iMAP [20] is used to knock out genes for the discovery of therapeutic targets for diseases. The perturbation model based on -VAES [4] conducts perturbation analysis on single-cell RNA datasets to predict gene expression changes during gene knockout, toxin response, and embryonic development. A perturbation map [6] was developed to provide a scalable approach for evaluating how specific genetic alterations impact the local, proximal, and distal tumor microenvironment (TME) states. However, many neural networks are trained in a way that resists Dropout [12]. As a result, multiple neurons may be associated with the importance of the same feature, which can lead to the failure of perturbation methods.

For input interpretability, while weight matrices corresponding to convolutional kernels can reflect the importance of sequences, the lack of proper constraints during their learning process may distort the perceived importance of these sequences. Gradient-based methods can evaluate feature importance by computing the partial derivatives of model output with respect to input features, while they may also face the potential issue of gradient vanishing. Perturbation-based methods assess the importance of altered features by introducing minor perturbations to the input and observing the resulting changes in model outputs, yet they may lead to an underestimation of feature importance due to neuronal redundancy.

2.2 Model interpretability

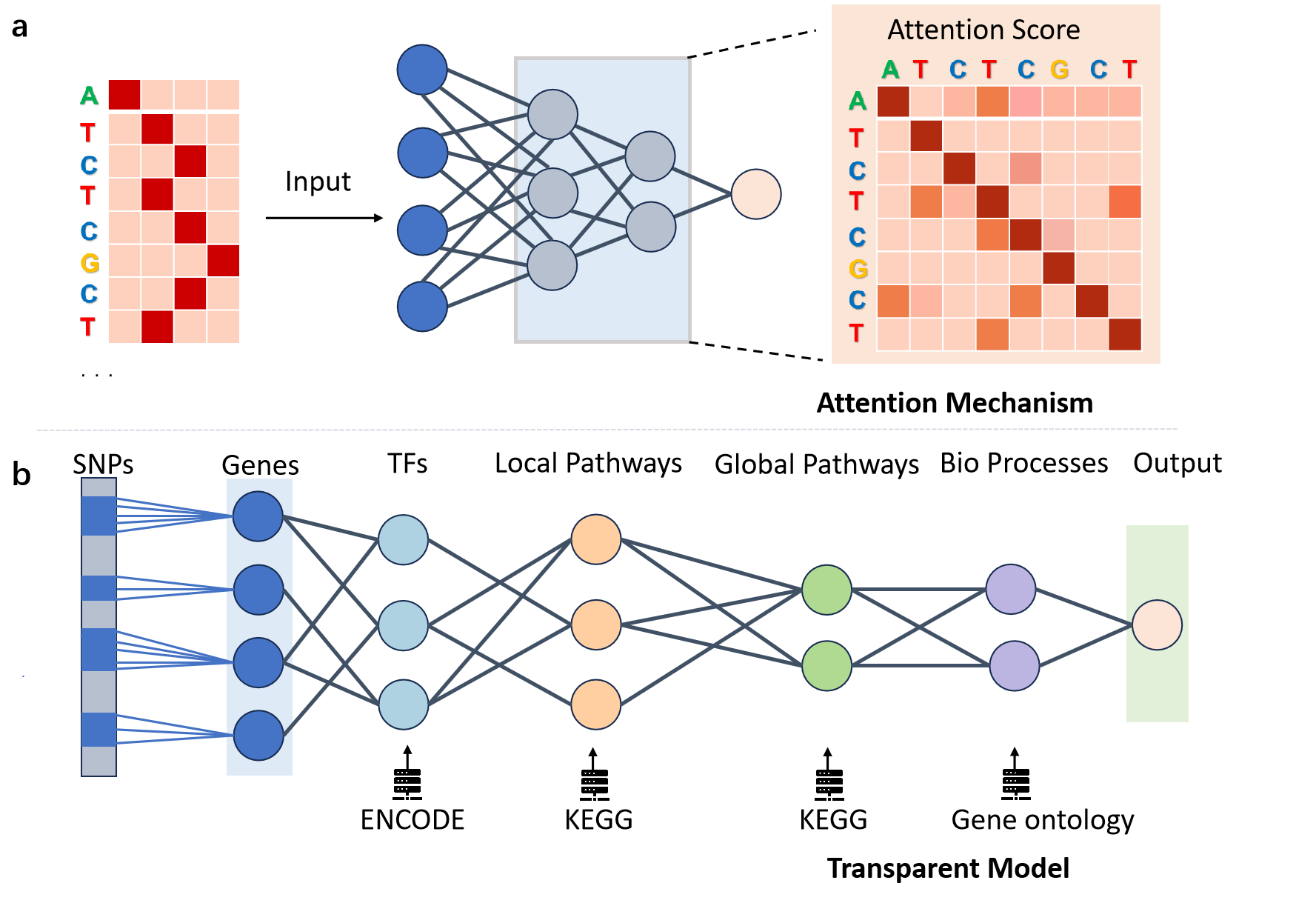

Model interpretability aims to facilitate human understanding of internal mechanisms and decision-making processes through architectural design. For example, traditional linear models are inherently interpretable, as we can directly understand the importance of each feature through its assigned weight. In contrast, deep neural networks typically consist of multiple hidden layers and numerous parameters, making it difficult to comprehend their decision-making processes solely based on their architecture or parameters. Therefore, many researchers are currently exploring model-based interpretability techniques to enhance the credibility of the model and uncover new biological insights. These methods can be mainly categorized into two types: attention mechanisms and transparent models based on biological prior knowledge. The principles of the various methods are illustrated in Figure 3.

Attention mechanisms. The attention mechanism is a widely adopted technique in neural networks. A representative example is the Transformer model [37], which leverages self-attention to capture dependencies between tokens within a sequence. The attention weights inherently provide a form of interpretability [27], offering insight into which input tokens the model focuses on when making predictions and revealing interactions between them. The visualization results are shown in Figure 3(a). EpiBERT [13] introduces a masking technique for genomic loci and enhancer regions, extracts query and key matrices from each attention layer, averages the resulting weights across all layers and attention heads, and visualizes these weights to indicate the degree of correlation between different positions. Enformer [2] extracts the query row at the transcription start site (TSS), where the keys denote different spatial positions and the attention values reflect the focus of the model on these positions during the prediction of TSS. In addition, C.Origami [34] exploits attention scores from Transformer models to identify cis-regulatory elements that play critical roles in 3D chromatin architecture. However, in DNA sequences, sequences at multiple positions may be associated with the same biological feature, which is mathematically termed multicollinearity, and this characteristic may lead to instability in the weights of attention mechanism matrices.

Transparent models based on biological prior knowledge. Unlike traditional neural networks, where neurons in the hidden layer typically lack clear biological meaning, transparent neural network models are explicitly designed so that their hidden nodes correspond directly to specific biological units at a fine-grained level, thereby significantly enhancing interpretability [22, 9, 36]. To construct models with intrinsically interpretable units, it is necessary to incorporate prior knowledge into the network architecture design. For instance, by mapping bottom-layer nodes to molecular entities (e.g., genes or proteins) and deeper-layer nodes to functional modules (e.g., metabolic pathways or organelles), a hierarchical biological representation system can be established, as illustrated in Figure 3(b). Deep neural networks build higher-order biological representations through progressive integration of lower-level features, for example, the second layer may capture cooperative interactions between transcription factor binding motifs, while deeper layers can map to complex systems such as complete biological pathways [28]. As a breakthrough in biological applications, DCell [22] pioneered a modeling approach that combines both neural network computational power and model transparency. The input layer of DCell directly corresponds to gene nodes, while its second layer constructs functional group nodes based on the hierarchical structure of Gene Ontology, establishing an association model between genotype and yeast growth rate. The GenNet [36] model utilizes genetic variants (SNPs) as input layer nodes and maps them to gene nodes in the second layer through NCBI RefSeq gene annotations, thereby constructing predictive association models between genotypes and complex phenotypes (e.g., schizophrenia, hair color). Through transparent variable construction based on multi-omics data, P-NET [9] has identified MDM4 as a biomarker in metastatic prostate cancer. Although transparent models offer significant interpretability advantages, their construction relies on domain-specific prior knowledge, which to some extent limits their applicability. Furthermore, the technical approaches used to ensure model transparency may adversely affect predictive performance.

For model interpretability, the attention mechanism reveals the importance of input tokens and reflects the correlations between different tokens. Multicollinearity among input tokens can lead to numerical instability in attention weights. Transparent neural networks can fully incorporate biologically relevant prior knowledge to reveal specific biological functional relationships, yet they may lack universality and cause models to overlook not clearly defined relationships.

3 Theoretical analysis

In this section, we present a systematic theoretical analysis of the technical limitations inherent in each methodological approach.

3.1 The limitation of input interpretability

Analysis reveals three fundamental limitations in input interpretability approaches for deep neural networks: First, unconstrained weight optimization in convolutional layers provably causes scaling instability, mathematically demonstrating disproportionate feature amplification. Second, the inherent neuronal redundancy from dropout mechanisms obscures node-level importance attribution. Third, the null gradient of ReLU for negative inputs, combined with the multiplicative accumulation of small weights through successive layers, results in progressive gradient decay during backpropagation.

The unconstrained learning of weights. CNNs for DNA sequence prediction extract local features by sliding multiple convolutional kernels across the input sequence. The learned kernels can ultimately be interpreted as PWM, representing the key motifs identified by the model from the input sequences. However, it should be noted that the learning of weights should be regulated by constraints. Otherwise, it is highly likely to lead to scaling issues.

Suppose we study a classification problem where a DNA sequence is input to determine whether each position is related to a certain structure. The input sequences are typically represented as a matrix, where represents the entry at position (i, j) and the convolutional kernel is a matrix of size . To facilitate the analysis of the problem, the network structure is simplified. Specifically, the fully connected layers are abstracted into a single layer, and the binary cross-entropy loss function is adopted as the loss function. The sigmoid function is used as the activation function for the last layer of the neural network. The weights of the convolutional kernel are updated through gradient descent. Then in each learning iteration, the weights will be updated in the following manner:

| (1) |

To further analyze the scaling problem of the weights, the partial derivatives will be expanded in accordance with the chain rule. Taking , which is located at the first row and the first column of the convolutional kernel as an example:

| (2) |

| (3) | ||||

The sequence feature values are obtained by convolutional operation (using as an example):

The output is obtained by multiplying the fully-connected weight matrix with the feature sequence.

| (4) |

Furthermore, the update formula for the weights of the convolutional kernel can be specifically written.

| (5) |

Among them, . If the true label is 1, then . If the subsequent part is greater than 0, it will cause the weight to increase. At this time, if there is no constraint, there will be a risk that the weight will continue to increase. Eventually, a certain weight will become too large.

Then it is common practice to transform the PWM into a PFM[28] through the softmax operation, and then obtain the standard PWM matrix via a logarithmic transformation.

The softmax operation on the convolutional kernel is shown in the following equation (still taking as an example):

| (6) |

It can be seen that if is too large while the other weight values are relatively small, it leads to .

And if is small and the other weight is relatively large, it will lead to .

This will lead to the irrationality of the weights. It will cause the functions of certain bases in the sequence to be overemphasized, while the significance of some other bases is neglected.

For the purpose of ensuring the positive and negative nature of the values, when performing the logarithmic transformation, it is usually carried out by , which is always determined according to the condition to satisfy the stability of the values. Due to being always small, when approaches 0, the value after logarithmic transformation will tend to negative infinity. When approaches 1, the value after the logarithmic transformation will tend to 0. Both scenarios will lead to the distortion of .

This may lead to the effect of the bases in certain parts of the input DNA sequence being overly amplified, while the roles of the bases at other positions are ignored. This process theoretically analyzes why unconstrained learning can lead to scaling issues, thus rendering the interpretation of the DNA sequence lacking in reliability.

The redundancy of neurons. Perturbation-based methods can evaluate the contribution of individual neurons by selectively removing them and observing the resulting changes in model predictions. However, deep neural networks are usually trained in a way that resists Dropout. The mechanism of Dropout is to deactivate some neurons during each training session. This ensures that certain specific features in the input DNA sequence do not rely on a fixed neuron, but can be captured by multiple neurons. More specifically, it is , which means that the -th neuron in the -th layer has the probability of being retained during training process.

Suppose that there are two different neurons, and , in the first convolutional layer, and are the final contributions of neuron and neuron in the entire neural network.

In standard CNNs, adjacent layer neurons are tightly coupled through weight updates, enabling collaborative feature learning. Dropout randomly disconnects the interneuron pathways during training, forcing independent feature acquisition. This causes redundant node formation: multiple subnetworks repeatedly learn identical DNA sequence features, meaning minimal output changes when removing specific neurons do not necessarily indicate low predictive importance of these nodes.

Since a neural network contains numerous neurons, as well as parameters such as weights and biases, it can be regarded as a high-dimensional manifold . The number of neurons in each neural network as a whole, the initial weights and other factors together constitute an abstract parameter . From this perspective, the neural network training process is to select a minimum point of the loss function on . Suppose that the overall parameter settings under the initial conditions are . At this time, for any input , the output of the model can be expressed as . and can be regarded as two distinct minor parameters within the parameter space . Removing such a neuron is equivalent to perturbing in a certain parameter direction. If these two neurons are redundant with respect to each other, that is, there exist parameter directions such that for any input :

| (7) |

This equation indicates that the output results after small perturbations in two directions are approximately equal, where denotes an extremely small error related to .

And these two parameter directions can be regarded as symmetric, and the corresponding covariant derivatives commute.

| (8) |

Then the components of the Riemann curvature tensor of the parameter space in the directions of and are:

| (9) |

It represents that the manifold is locally flat in the two parameter directions represented by the removal of redundant nodes. Assume that is removed. Then it can be regarded as a perturbation in the direction of in the parameter space, with . Subsequently, the variations in the output can be calculated:

Due to the large number and complexity of parameters in the entire neural network, the removal of a single neuron can be regarded as a minor change in a certain direction, and is an infinitesimal quantity. At the same time, since the manifold is locally flat in the subspace spanned by and , and approach 0.

In summary, we obtain that approaches 0. That is, when redundancy occurs in neurons due to the Dropout mechanism, the output of the model does not change significantly after removing a single neuron, which makes it hard to access the importance of the node.

Vanishing gradient in DNA sequences. Vanishing gradient is a common issue in gradient-based model interpretation methods, and is closely tied to the choice of activation function. For instance, the ReLU activation function outputs zero for negative inputs while propagating positive inputs unchanged. However, its constant unity gradient for positive inputs may still lead to vanishing gradients during backpropagation in deep networks. Consider a single-layer neural network with the output given by:

| (10) |

Here, represents the weights matrix, is the input, and is the bias term. The normal input and output relationship can be expressed as . The gradient of the output with respect to the input can be calculated as:

| (11) |

According to the derivative definition of ReLU, if , then , and the gradient is . Conversely, if , then , and the gradient is 0. Extending this to a multi-layer neural network, assume output of each layer passes through a ReLU activation function, with the output of -th layer given by:

| (12) |

Here, is the input, and is the output. To calculate the gradient of the output with respect to the input , the chain rule must be applied:

| (13) |

The gradient of each layer is:

| (14) |

If the input to a layer , then , and thus, the gradient for that layer is 0. This means that the gradient at this layer vanishes and cannot continue to propagate backward. Additionally, the gradient is also influenced by the weight matrices, as shown by:

When are very small, the value of the gradient will also be very small, leading to vanishing gradient.

3.2 The limitation of model interpretability

This section systematically demonstrates the inherent limitations of model interpretability approaches in DNA sequence analysis. First, attention mechanisms intrinsically exhibit numerical instability, which can be mathematically proven to induce matrix ill-conditioning under multicollinearity. Second, biologically-constrained transparent models may yield higher loss values compared to their unconstrained counterparts.

Instability of in the estimation of the weights. The computation of attention weights typically involves two vectors, namely the query vector and the key vector. The attention score is calculated by taking the dot product of the query matrix and the key matrix :

| (15) |

Since the input features often exhibit multicollinearity, some of the vectors in the key matrix are linearly correlated. That is, there exists an index such that .

Consider the covariance matrix of , and , due to the multicollinearity, the rank of the matrix will satisfy , then we have:

| (16) |

The matrix also satisfies the condition of partial linear correlation. Therefore, . Since the matrix is a positive semi-definite matrix, all the eigenvalues of are greater than or equal to 0. At this time, there will be:

| (17) |

So there must exist which satisfies that , i.e., .

To demonstrate that multicollinearity leads to numerical instability, we first present the following lemma and theorem.

Lemma 1.

In the sense of the 2-norm, for any matrix , if is the largest eigenvalue of , then .

Proof.

Arbitrarily select a matrix and any unit vector that can be transformed by . According to the definition of the norm and the unit vector, we have:

| (18) |

Then write in another form:

| (19) |

Due to , is a positive semi-definite matrix. Suppose that its eigenvalues satisfy , the corresponding eigenvectors form an orthonormal basis of . Let , since , so . Subsequently, we can derive the following equation:

| (20) |

| (21) |

is the largest eigenvalue of , which is marked as . Then, .

Up to this point, we have obtained .

∎

Theorem 2.

Multicolinearity leads to the condition number of the matrix tending to infinity.

Proof.

According to Lemma 1, we have and .

| (22) |

∎

This means that multicollinearity will lead to an excessively large condition number of the matrix, which in turn poses a potential risk of numerical instability [40].

Constructing transparent models with prior knowledge. Transparent models constructed based on prior knowledge have attracted widespread attention due to their computational efficiency and interpretability. However, transparent models also have their limitations. Biological constraints within transparent models may diminish their predictive performance. For example, Hard-coding TF-TF interactions may underperform unconstrained models. (although the use of milder regularization techniques can mitigate this decrease to some extent). The following serves as an illustration of this. Suppose that the DNA samples input into two models are identical, with all other confounding factors excluded. The loss function for the hard-coded transparent model is defined as follows:

| (23) |

There, denotes the number of input samples, is the true label of the -th sample, and represents the predicted probability of the -th sample by the hard-coded model. This prediction is subject to the constraint . The regularization coefficient is associated with the prior constraint term, while corresponds to the regularization coefficient for the adjustable parameters in the hard-coded model, which usually has a smaller value. signifies the fixed weights in the hard-coded model, is the preset baseline weight reference value, and is the weight in the hard-coded model that can be freely adjusted. The loss function of the hard-coded model is primarily determined by the first term, which is the cross-entropy loss. The loss function for the unconstrained model is defined as follows:

| (24) |

In comparison, the predicted probability of the unconstrained model is determined by the free parameters . Here, is the regularization coefficient for the unconstrained model (with a smaller value). Similarly, the loss function of the unconstrained model is primarily determined by the first term, which is the cross-entropy loss. Due to the limitations imposed by hard coding, it holds that . To demonstrate that the performance of the hard-coded model may potentially decrease compared to the unconstrained model, it is only necessary to prove that the difference between the loss functions of the hard-coded model and the unconstrained model is not less than zero.

Where , , and . From this, it can be concluded that , which proves that the hard-coded model may perform worse than the unconstrained model.

4 Future Directions

Although we have theoretically analyzed the problems existing in various interpretable methods, how to optimize and improve these methods with limitations remains a direction worthy of exploration.

Overall, on the one hand, research demonstrates that existing interpretation approaches, including attention-based visualization techniques, feature importance analysis, and causal reasoning-exhibit significant variations in terms of information presentation formats, analytical granularity, and reliability. Such heterogeneity not only poses substantial challenges for researchers in selecting and applying appropriate methods, but may also potentially compromise the credibility of model-derived decisions to some extent. Consequently, establishing a systematic evaluation framework for explainable methods becomes particularly crucial. Future research should focus on developing comprehensive and objective assessment metrics to construct a unified benchmarking standard, thereby effectively enhancing the reliability and practical utility of explainability studies.

On the other hand, we expect that interpretable information will not rely solely on a single method or a certain input information. With the development of AI and the improvement of computing power, multimodal technology is thriving. In the future, multimodal technology can be applied to integrate various forms of input data, such as gene sequence and protein structures. At the same time, by comprehensively considering the information provided by different types of interpretation methods, the understanding and reasoning abilities of the model can be enhanced, providing a more reliable guarantee for genetic predictions.

5 Conclusions

With the extensive application of deep learning in the field of bioinformatics, reliable interpretability has become increasingly crucial for understanding the prediction results. This article provides a systematic overview of interpretability methods for deep learning applied in the field of bioinformatics and then categorizes these methods into two primary types: input interpretability and model interpretability, further detailing various interpretive approaches within each category. Additionally, we theoretically analyze the limitations of these interpretive methods and provide guidance for future research on interpretability in the field of genetics.

References

- Alipanahi et al. [2015] B. Alipanahi, A. Delong, M. T. Weirauch, and B. J. Frey. Predicting the sequence specificities of dna-and rna-binding proteins by deep learning. Nature biotechnology, 33(8):831–838, 2015.

- Avsec et al. [2021] Ž. Avsec, V. Agarwal, D. Visentin, J. R. Ledsam, A. Grabska-Barwinska, K. R. Taylor, Y. Assael, J. Jumper, P. Kohli, and D. R. Kelley. Effective gene expression prediction from sequence by integrating long-range interactions. Nature methods, 18(10):1196–1203, 2021.

- Azodi et al. [2020] C. B. Azodi, J. Tang, and S.-H. Shiu. Opening the black box: interpretable machine learning for geneticists. Trends in genetics, 36(6):442–455, 2020.

- [4] A. Bjerregaard, V. Das, and A. Krogh. Interpretable single-cell perturbations from decoder gradients. In ICLR 2025 Workshop on Machine Learning for Genomics Explorations.

- Bravo Gonzalez-Blas et al. [2024] C. Bravo Gonzalez-Blas, I. Matetovici, H. Hillen, I. I. Taskiran, R. Vandepoel, V. Christiaens, L. Sansores-Garcia, E. Verboven, G. Hulselmans, S. Poovathingal, et al. Single-cell spatial multi-omics and deep learning dissect enhancer-driven gene regulatory networks in liver zonation. Nature Cell Biology, 26(1):153–167, 2024.

- Dhainaut et al. [2022] M. Dhainaut, S. A. Rose, G. Akturk, A. Wroblewska, S. R. Nielsen, E. S. Park, M. Buckup, V. Roudko, L. Pia, R. Sweeney, et al. Spatial crispr genomics identifies regulators of the tumor microenvironment. Cell, 185(7):1223–1239, 2022.

- Dibaeinia et al. [2025] P. Dibaeinia, A. Ojha, and S. Sinha. Interpretable ai for inference of causal molecular relationships from omics data. Science Advances, 11(7):eadk0837, 2025.

- Dudnyk et al. [2024] K. Dudnyk, D. Cai, C. Shi, J. Xu, and J. Zhou. Sequence basis of transcription initiation in the human genome. Science, 384(6694):eadj0116, 2024.

- Elmarakeby et al. [2021] H. A. Elmarakeby, J. Hwang, R. Arafeh, J. Crowdis, S. Gang, D. Liu, S. H. AlDubayan, K. Salari, S. Kregel, C. Richter, et al. Biologically informed deep neural network for prostate cancer discovery. Nature, 598(7880):348–352, 2021.

- Fu et al. [2025] X. Fu, S. Mo, A. Buendia, A. P. Laurent, A. Shao, M. d. M. Alvarez-Torres, T. Yu, J. Tan, J. Su, R. Sagatelian, et al. A foundation model of transcription across human cell types. Nature, pages 1–9, 2025.

- Fudenberg et al. [2020] G. Fudenberg, D. R. Kelley, and K. S. Pollard. Predicting 3d genome folding from dna sequence with akita. Nature methods, 17(11):1111–1117, 2020.

- Hinton et al. [2012] G. E. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever, and R. R. Salakhutdinov. Improving neural networks by preventing co-adaptation of feature detectors. arXiv preprint arXiv:1207.0580, 2012.

- Javed et al. [2025] N. Javed, T. Weingarten, A. Sehanobish, A. Roberts, A. Dubey, K. Choromanski, and B. E. Bernstein. A multi-modal transformer for cell type-agnostic regulatory predictions. Cell Genomics, 2025.

- Kelley et al. [2016] D. R. Kelley, J. Snoek, and J. L. Rinn. Basset: learning the regulatory code of the accessible genome with deep convolutional neural networks. Genome research, 26(7):990–999, 2016.

- Kelley et al. [2018] D. R. Kelley, Y. A. Reshef, M. Bileschi, D. Belanger, C. Y. McLean, and J. Snoek. Sequential regulatory activity prediction across chromosomes with convolutional neural networks. Genome research, 28(5):739–750, 2018.

- Klemm et al. [2019] S. L. Klemm, Z. Shipony, and W. J. Greenleaf. Chromatin accessibility and the regulatory epigenome. Nature Reviews Genetics, 20(4):207–220, 2019.

- Koo and Ploenzke [2020] P. K. Koo and M. Ploenzke. Deep learning for inferring transcription factor binding sites. Current opinion in systems biology, 19:16–23, 2020.

- Lieberman-Aiden et al. [2009] E. Lieberman-Aiden, N. L. Van Berkum, L. Williams, M. Imakaev, T. Ragoczy, A. Telling, I. Amit, B. R. Lajoie, P. J. Sabo, M. O. Dorschner, et al. Comprehensive mapping of long-range interactions reveals folding principles of the human genome. science, 326(5950):289–293, 2009.

- Lin et al. [2025] M.-Y. Lin, Y.-C. Lo, and J.-H. Hung. Unveiling chromatin dynamics with virtual epigenome. Nature Communications, 16(1):3491, 2025.

- Liu et al. [2022] B. Liu, Z. Jing, X. Zhang, Y. Chen, S. Mao, R. Kaundal, Y. Zou, G. Wei, Y. Zang, X. Wang, et al. Large-scale multiplexed mosaic crispr perturbation in the whole organism. Cell, 185(16):3008–3024, 2022.

- Lundberg and Lee [2017] S. M. Lundberg and S.-I. Lee. A unified approach to interpreting model predictions. Advances in neural information processing systems, 30, 2017.

- Ma et al. [2018] J. Ma, M. K. Yu, S. Fong, K. Ono, E. Sage, B. Demchak, R. Sharan, and T. Ideker. Using deep learning to model the hierarchical structure and function of a cell. Nature methods, 15(4):290–298, 2018.

- Mathelier et al. [2016] A. Mathelier, O. Fornes, D. J. Arenillas, C.-y. Chen, G. Denay, J. Lee, W. Shi, C. Shyr, G. Tan, R. Worsley-Hunt, et al. Jaspar 2016: a major expansion and update of the open-access database of transcription factor binding profiles. Nucleic acids research, 44(D1):D110–D115, 2016.

- Min et al. [2017] X. Min, W. Zeng, S. Chen, N. Chen, T. Chen, and R. Jiang. Predicting enhancers with deep convolutional neural networks. BMC bioinformatics, 18:35–46, 2017.

- Molnar et al. [2020] C. Molnar, G. Casalicchio, and B. Bischl. Interpretable machine learning–a brief history, state-of-the-art and challenges. In Joint European conference on machine learning and knowledge discovery in databases, pages 417–431. Springer, 2020.

- Mumbach et al. [2016] M. R. Mumbach, A. J. Rubin, R. A. Flynn, C. Dai, P. A. Khavari, W. J. Greenleaf, and H. Y. Chang. Hichip: efficient and sensitive analysis of protein-directed genome architecture. Nature methods, 13(11):919–922, 2016.

- Naim and Asher [2024] O. Naim and N. Asher. On explaining with attention matrices. In ECAI 2024, pages 1035–1042. IOS Press, 2024.

- Novakovsky et al. [2023] G. Novakovsky, N. Dexter, M. W. Libbrecht, W. W. Wasserman, and S. Mostafavi. Obtaining genetics insights from deep learning via explainable artificial intelligence. Nature Reviews Genetics, 24(2):125–137, 2023.

- Peleke et al. [2024] F. F. Peleke, S. M. Zumkeller, M. Gültas, A. Schmitt, and J. Szymański. Deep learning the cis-regulatory code for gene expression in selected model plants. Nature Communications, 15(1):3488, 2024.

- Schuette et al. [2025] G. Schuette, Z. Lao, and B. Zhang. Chromogen: Diffusion model predicts single-cell chromatin conformations. Science Advances, 11(5):eadr8265, 2025.

- Shrikumar et al. [2017] A. Shrikumar, P. Greenside, and A. Kundaje. Learning important features through propagating activation differences. In International conference on machine learning, pages 3145–3153. PMlR, 2017.

- Simonyan et al. [2013] K. Simonyan, A. Vedaldi, and A. Zisserman. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034, 2013.

- Sundararajan et al. [2016] M. Sundararajan, A. Taly, and Q. Yan. Gradients of counterfactuals. arXiv preprint arXiv:1611.02639, 2016.

- Tan et al. [2023] J. Tan, N. Shenker-Tauris, J. Rodriguez-Hernaez, E. Wang, T. Sakellaropoulos, F. Boccalatte, P. Thandapani, J. Skok, I. Aifantis, D. Fenyö, et al. Cell-type-specific prediction of 3d chromatin organization enables high-throughput in silico genetic screening. Nature biotechnology, 41(8):1140–1150, 2023.

- Theodoris et al. [2023] C. V. Theodoris, L. Xiao, A. Chopra, M. D. Chaffin, Z. R. Al Sayed, M. C. Hill, H. Mantineo, E. M. Brydon, Z. Zeng, X. S. Liu, et al. Transfer learning enables predictions in network biology. Nature, 618(7965):616–624, 2023.

- van Hilten et al. [2021] A. van Hilten, S. A. Kushner, M. Kayser, M. A. Ikram, H. H. Adams, C. C. Klaver, W. J. Niessen, and G. V. Roshchupkin. Gennet framework: interpretable deep learning for predicting phenotypes from genetic data. Communications biology, 4(1):1094, 2021.

- Vaswani et al. [2017] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- Zeiler and Fergus [2014] M. D. Zeiler and R. Fergus. Visualizing and understanding convolutional networks. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part I 13, pages 818–833. Springer, 2014.

- Zhang et al. [2025] G. Zhang, C. Song, M. Yin, L. Liu, Y. Zhang, Y. Li, J. Zhang, M. Guo, and C. Li. Trapt: a multi-stage fused deep learning framework for predicting transcriptional regulators based on large-scale epigenomic data. Nature Communications, 16(1):3611, 2025.

- Zhang et al. [2024] Y. Zhang, H. Zhu, Z. Song, Y. Chen, X. Fu, Z. Meng, P. Koniusz, and I. King. Geometric view of soft decorrelation in self-supervised learning. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, pages 4338–4349, 2024.

- Zheng et al. [2021] A. Zheng, M. Lamkin, H. Zhao, C. Wu, H. Su, and M. Gymrek. Deep neural networks identify sequence context features predictive of transcription factor binding. Nature machine intelligence, 3(2):172–180, 2021.

- Zhou [2022] J. Zhou. Sequence-based modeling of three-dimensional genome architecture from kilobase to chromosome scale. Nature genetics, 54(5):725–734, 2022.

- Zhou et al. [2025] J. Zhou, D. R. Weinberger, and S. Han. Deep learning predicts dna methylation regulatory variants in specific brain cell types and enhances fine mapping for brain disorders. Science Advances, 11(1):eadn1870, 2025.

- Zuallaert et al. [2018] J. Zuallaert, F. Godin, M. Kim, A. Soete, Y. Saeys, and W. De Neve. Splicerover: interpretable convolutional neural networks for improved splice site prediction. Bioinformatics, 34(24):4180–4188, 2018.