[1]\fnmJianxu \surChen

1]\orgnameLeibniz-Institut für Analytische Wissenschaften – ISAS – e.V., \orgaddress\cityDortmund, \countryGermany 2]\orgnameRuhr University Bochum, \orgaddress\cityBochum, \countryGermany

Deep learning based Image Compression for Microscopy Images: An Empirical Study

Abstract

With the fast development of modern microscopes and bioimaging techniques, an unprecedentedly large amount of imaging data are being generated, stored, analyzed, and shared through networks. The size of the data poses great challenges for current data infrastructure. One common way to reduce the data size is by image compression. Effective image compression methods could help reduce the data size significantly without losing necessary information, and therefore reduce the burden on data management infrastructure and permit fast transmission through the network for data sharing or cloud computing. This present study analyzes multiple classic and deep learning based image compression methods, as well as an empirical study on their impact on downstream deep learning based image processing models. We used deep learning based label-free prediction models (i.e., predicting fluorescent images from bright field images) as an example downstream task for the comparison and analysis of the impact of image compression. Different compression techniques are compared in compression ratio, image similarity and, most importantly, the prediction accuracy of label-free models on original and compressed images. We found that AI-based compression techniques largely outperform the classic ones with minimal influence on the downstream 2D label-free tasks. In the end, we hope the present study could shed light on the potential of deep learning based image compression and raise the awareness of potential impacts of image compression on downstream deep learning models for analysis. The codebase has been released at: https://github.com/MMV-Lab/data-compression

1 Introduction

Image compression is the process of reducing the size of digital images while retaining the useful information for reconstruction. This is achieved by removing redundancies in the image data, resulting in a compressed version of the original image that requires less storage space and can be transmitted more efficiently. In many fields of research, including microscopy, high-resolution images are often acquired and processed, leading to significant challenges in terms of storage and computational resources. In particular, researchers in the microscopy image analysis field are often faced with infrastructure limitations, such as limited storage capacity or network bandwidth. Image compression can help mitigate such challenges, allowing researchers to store and transmit images efficiently without compromising their quality and validity. Lossless image compression refers to the compression techniques preserving every bit of information in data and make error-free reconstruction, ideal for applications where data integrity is paramount. However, the limited capability in size reduction, e.g., 2 3 compression ratio walker2023comparison is far from sufficient for easing the data explosion crisis. In this work, we focuses on lossy compression methods, where some information lost may occur but can yield significantly higher compression ratio. Despite lossy compression techniques (both classic and deep learning based) are widely employed in the computer vision field, their feasibility and impact in the field of biological microscopy images remain largely underexplored.

In this paper, we proposed a two-phase evaluation pipeline, compression algorithms comparison and downstream tasks analysis in the context of microscopy images. In order to fully explore the impact of lossy image compression on downstream image analysis tasks, we employed a set of label-free models, a.k.a., in-silico labelling christiansen2018silico . Label-free model denotes a deep learning approach capable of directly predicting fluorescent images from transmitted light bright-field images Ounkomol2018Label-freeMicroscopy . Considering the large amount bright-field images are being used in regular biological studies, it is of great importance that such data compression techniques can be utilized without compromising the prediction quality.

Through intensive experiments we demonstrated that deep learning based compression methods can outperform the classic algorithms in terms of compression ratio and post-compression reconstruction quality, and their impact on the downstream label-free task, indicating their huge potentials in the bioimaging field. Meanwhile, we made an preliminary attempt to build 3D compression models and reported the current limitation and possible future directions. Overall, we want to raise the awareness of the importance and potentials of deep learning based compression techniques, and hopefully help a strategical planning of future data infrastructure for bioimaging.

Specifically, the main contribution of the paper is:

-

1.

Benchmark common classic and deep learning-based image compression techniques in the context of 2D grayscale bright-field microscopy images.

-

2.

Empirically investigate the impact of data compression to the downstream label-free tasks.

-

3.

Expand the scope of the current compression analysis for 3D microscopy images.

The remaining of this paper is organized as follows: section 2 will introduce classic and deep learning-based image compression techniques, followed by the method descriptions in section 3 and experimental settings in section 4. Results and discussions will be presented in section 5 with conclusions in section 6.

2 Related Works

The classic data compression techniques are well studied in the last few decades, with the development of JPEG Wallace1992jpeg , a popular lossy compression algorithm since 1992, and its successors JPEG 2000 Marcellin2002AnJPEG-2000 , JPEG XR Dufaux2009TheNutshell , etc. In recent years some more powerful algorithms, such as Limited Error Raster Compression (LERC) are proposed HowAPI . Generally, the compression process approximately involves the following steps: color transform (with optional downsampling), Domain transform (e.g., Discrete Cosine Transform (DCT) ahmed1974discrete in JPEG), quantization and further lossless coding (e.g. run-length encoding (RLE) or Huffmann coding huffman1952method ).

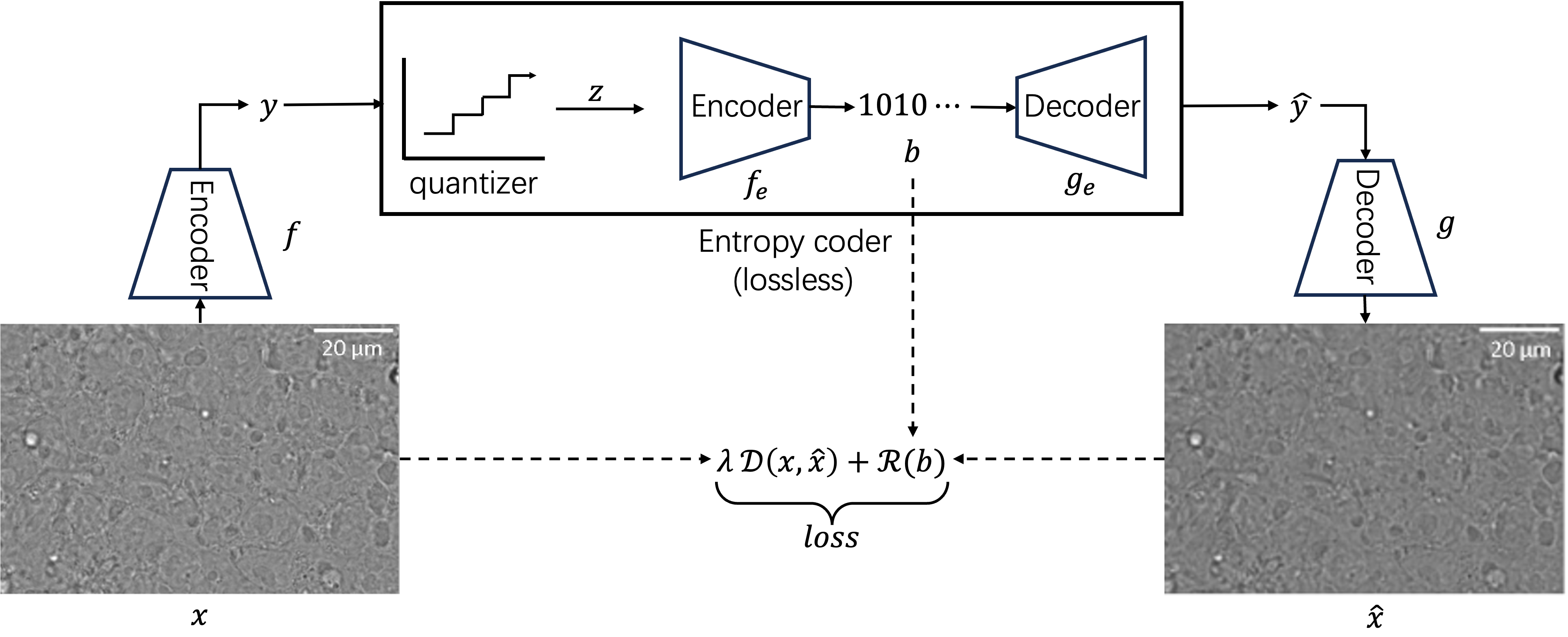

Recently, deep learning based image compression gains its popularity thanks to the significantly improved compression performance. Roughly speaking, a deep learning based compression model consists of two sub-networks: a neural encoder that compresses the image data and a neural decoder that reconstructs the original image from the compressed representation. Besides, the latent representation will be further losslessly compressed by some entropy coding techniques (e.g. arithmetic coding Rissanen1979Arithmetic ) as seen in fig. 1. Specially, the latent vector will be firstly discretized into : . Afterwards, will be encoded/decoded by the entropy coder () and decompressed by the neural decoder : . The objective is to minimize the loss function containing Rate-Distortion trade-off cover1999elements ; shannon1959coding :

| (1) | |||

| (2) | |||

| (3) |

where corresponds to the rate loss term, which highlights the compression ability of the system. is the entropy model that provides prior probability to the entropy coding, and denotes the information entropy and can approximately estimate the optimal compression ability of the entropy encoder , defined by the Shannon theory shannon1959coding ; shannon1948mathematical . is the distortion term, which can control the reconstruction quality. is the norm or perceptual metric, e.g. MSE, MS-SSIM wang2003multiscale , etc. The trade-off between these two terms is achieved by the scale hyper-parameter .

Since the lossless entropy coding entails the accurate modeling of the prior probability of the quantized latent representation , Ballé et al. Balle2018VariationalHyperprior justified that there exists statistical dependencies in the latent representation using current fully factorized entropy model, which will lead to suboptimal performance and not adaptive to all images. To further improve the entropy model, Ballé et al. proposes a hyperprior approach Balle2018VariationalHyperprior , where a hyper latent (also called side information) is generated by the auxillary neural encoder from the latent space : , then the scale parameter of the entropy model can be estimated by the output of the auxillary decoder : , so that the entropy model can be adaptively adjusted by the input image , with the bit-rate further enhanced. Minnen et al. Minnen2018JointCompression extended the work to get the more reliable entropy model by jointly combining the data from the above mentioned hyperprior and the proposed autoregressive Context Model.

Besides the improvement in the entropy model, lots of effort are also put into the enhancement of the network architecture. Ballé et al. balle2015density replaced the normal RELU activation to the proposed Generalized Division Normalization (GDN) module in order to better capture the image statistics. Johnston et al. johnston2019computationally optimized the GDN module in a computational efficient manner without sacrificing the accuracy. Cheng et al. Cheng2020LearnedModules introduced the skip connection and attention mechanism. The transformer-based auto-encoder was also reported for data compression in recent years zhu2021transformer .

3 Methodology

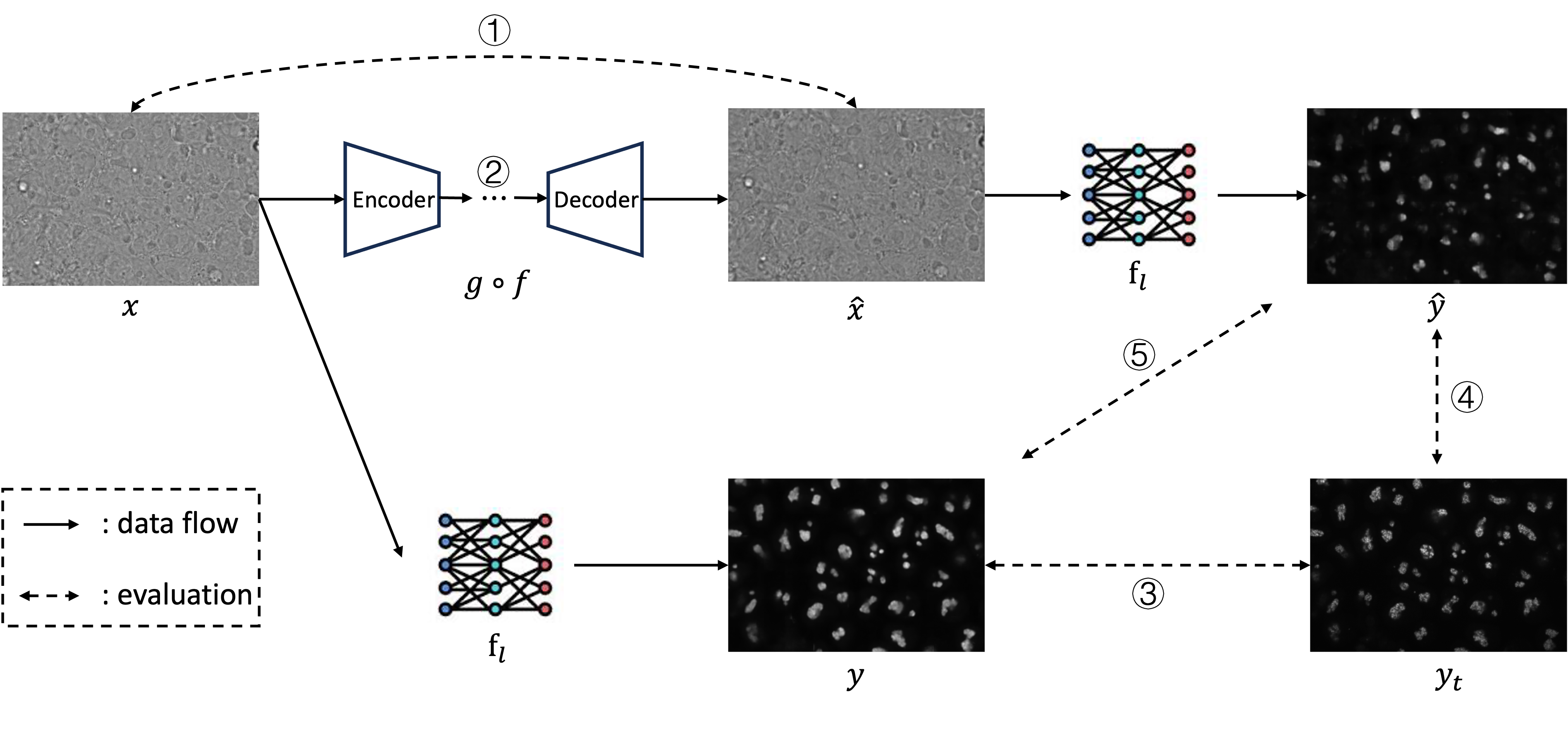

The evaluation pipeline was proposed in this study to benchmark the performance of the compression model in the bioimage field and estimate their influence to the downstream label-free generation task. Illustrated in fig. 2, the whole pipeline contains two parts: compression part: and downstream label-free part: , where the former is designed to measure the Rate-Distortion performance of the compression algorithms and the latter aims to quantify their influence to the downstream task.

During the compression part, the raw image will be transformed to the reconstructed image through the compression algorithm :

| (4) |

where represents the compression process and denotes the decompression process. Note that the compression methods could be both classic strategies (e.g. jpeg) and deep-learning based algorithms. The performance of the algorithm can be evaluated through Rate-Distortion performance, as explained in eqs. 1, 2 and 3.

In the downstream label-free part, the prediction will be made by the model using both the raw image and the reconstructed image :

| (5) |

The evaluation to measure the compression influence to the downstream tasks is made by:

| (6) | |||

| (7) | |||

| (8) |

where the evaluation metric L is the collection of different metrics on different image pairs . V is the collection of the raw prediction , prediction made by the reconstructed image and the ground truth . S is formed by pairwise combinations of elements from V. represents the metric we used to measure the relation between image pairs. In this study we totally utilized four metrics: Mean Squared Error (MSE), Structural Similarity Index Measure (SSIM), Peak Signal-to-Noise Ratio (PSNR) and Pearson Correlation.

To conclude, through the above proposed two-phase evaluation pipeline, the compression performance of the compression algorithm will be fully estimated and their impact to the downstream task will also be well investigated.

4 Experimental settings

4.1 Dataset

The dataset used in this study is the human induced Pluripotent Stem Cells (hiPSC) single cell image dataset forCellScience2018HiPSCDataset released by the Allen Institute for Cell Science. We utilized grayscale brightfield images and its corresponding fluorescent image pairs from the fibrillarin (FBL) cell line, where the dense fibrillar component of nucleolus is endogenously tagged. For 3D experiments, 500 samples were chosen from the dataset with 395 for training and the remaining 105 samples for evaluation. While in terms of 2D experiments, the middle slice of each 3D sample was extracted, resulting in 2D slices of 624 924 pixels.

4.2 Implementation Details

During the first compression part of the proposed two-phase evaluation pipeline, we made the comparison using both classic methods and deep-learning based algorithms. In terms of the classic compression, we employed the Python package ’tifffile’ to apply 3 classic image compression: JPEG 2000, JPEG XR, LERC, focusing on level 8 for the highest image quality preservation. To enhance compression efficiency, we used a 1616-pixel tile-based approach, facilitating image data access during compression and decompression. This methodology enabled a thorough exploration of the storage vs. image quality trade-off.

Regarding learned-based methods, 6 pre-trained models proposed in Balle2018VariationalHyperprior ; Minnen2018JointCompression ; Cheng2020LearnedModules were applied in 2D compression, with each kind of model trained with 2 different metrics (MSE and MS-SSIM), resulting in 12 models in total. The pretrained checkpoints were provided by the CompressAI tool begaint2020compressai . For the 3D senario, an adapted bmshj2018-factorized compression model Balle2018VariationalHyperprior was trained and evaluated on our microscopy dataset. For the first 50 epochs, MSE metric was employed in the reconstruction loss term, followed by MS-SSIM metric for another 50 epochs to enhance the image quality.

When it comes to the second label-free generation part, the pretrained Pix2Pix 2D (Fnet 2D as the generator) and Fnet 3D model were obtained from the mmv_im2im Python package SonneckMMV_Im2Im:Transformation . All the labelfree 2D models were trained by images compressed with the JPEGXR algorithm. For 3D models, raw images were involved in the training.

5 Results and Discussion

In this section, we will present and analyze the performance of the image compression algorithms and their impact on the downstream label-free task, using the proposed two-phase evaluation pipeline.

5.1 Data Compression Results

Firstly, we did the compression performance comparison experiment in the context of grayscale microscopic bright-field image, based on the first part of the evaluation pipeline. The results show that deep-learning based compression algorithms behave well in terms of the reconstruction quality and compression ratio ability in both 2D and 3D cases, and outperform the classic methods.

The second to the fourth rows in tables 1 and 2 demonstrate the quantitative rate-distortion performance for the three traditional compression techniques involved. Although the classic method LERC achieved highest result in all the quality metrics for the reconstructed image, it just saves 12.36% of the space, which is way lower compared to the deep learning-based methods. Meanwhile, JPEG-2000-LOSSY can achieve comparable compression ratio with respect to AI-based algorithms, but its quality metric ranks the bottom, with only 0.1576 in correlation and 0.4244 in SSIM. The above results compellingly showcase that the classic methods cannot make a trade-off in the rate-distortion performance.

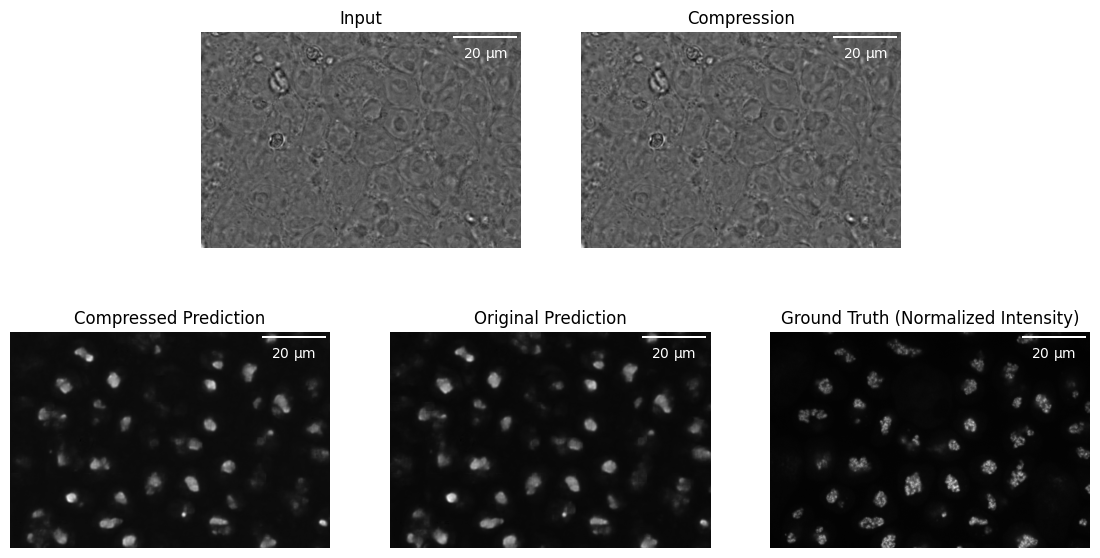

Besides, results from deep learning models exhibit close similarities, yielding favorable outcomes, as illustrated in tables 1 and 2 from the fifth row to the last. Notably, the ’mbt2018-ms-ssim-8’ method exhibits a slight advantage in terms of SSIM, achieving a value of 0.9705. Conversely, the ‘mbt2018-mean-ms-ssim-8’ method showcases a slight edge in correlation, with a score of 0.9866. When considering compression ratio, ’cheng2020-anchor-mse-6’ outperforms the others, with an compression ratio of 47.2978. A sample result is visualized in fig. 3.

| Compression | MSE () | SSIM | Correlation | PSNR () |

| original | 0 | 1 | 1 | 108.1308 |

| JPEGXR | 0.0011 | 0.8284 | 0.899 | 30.4992 |

| JPEG-2000-LOSSY | 0.0498 | 0.4244 | 0.1576 | 15.8519 |

| LERC | 0.0061 | 0.9803 | 0.9934 | 51.72 |

| bmshj2018-factorized-mse-8 | 0.0002 | 0.9623 | 0.9844 | 38.4742 |

| bmshj2018-factorized-ms-ssim-8 | 0.0003 | 0.9704 | 0.9859 | 37.3699 |

| bmshj2018-hyperprior-mse-8 | 0.0002 | 0.9585 | 0.9829 | 38.4364 |

| bmshj2018-hyperprior-ms-ssim-8 | 0.0003 | 0.9691 | 0.9854 | 37.1714 |

| mbt2018-mean-mse-8 | 0.0002 | 0.9559 | 0.9817 | 37.9746 |

| mbt2018-mean-ms-ssim-8 | 0.0003 | 0.9704 | 0.9866 | 37.6723 |

| mbt2018-mse-8 | 0.0002 | 0.9563 | 0.9823 | 38.1687 |

| mbt2018-ms-ssim-8 | 0.0003 | 0.9705 | 0.986 | 37.2873 |

| cheng2020-anchor-mse-6 | 0.0006 | 0.9133 | 0.961 | 34.3726 |

| cheng2020-anchor-ms-ssim-6 | 0.0009 | 0.9537 | 0.9738 | 33.4254 |

| cheng2020-attn-mse-6 | 0.0005 | 0.9138 | 0.9609 | 34.5608 |

| cheng2020-attn-ms-ssim-6 | 0.0006 | 0.9538 | 0.9727 | 34.0428 |

| Compression | Compression ratio | Space saving () |

| original | 1.1236 | 10.94 |

| JPEGXR | 1.3458 | 24.58 |

| JPEG-2000-LOSSY | 28.5981 | 93.70 |

| LERC | 1.1419 | 12.36 |

| bmshj2018-factorized-mse-8 | 15.9426 | 93.64 |

| bmshj2018-factorized-ms-ssim-8 | 19.3469 | 94.82 |

| bmshj2018-hyperprior-mse-8 | 21.3869 | 95.07 |

| bmshj2018-hyperprior-ms-ssim-8 | 23.2744 | 95.68 |

| mbt2018-mean-mse-8 | 23.4083 | 95.50 |

| mbt2018-mean-ms-ssim-8 | 23.1368 | 95.65 |

| mbt2018-mse-8 | 23.746 | 95.55 |

| mbt2018-ms-ssim-8 | 22.9054 | 95.61 |

| cheng2020-anchor-mse-6 | 47.2978 | 97.81 |

| cheng2020-anchor-ms-ssim-6 | 38.0068 | 97.35 |

| cheng2020-attn-mse-6 | 47.0159 | 97.80 |

| cheng2020-attn-ms-ssim-6 | 37.3312 | 97.30 |

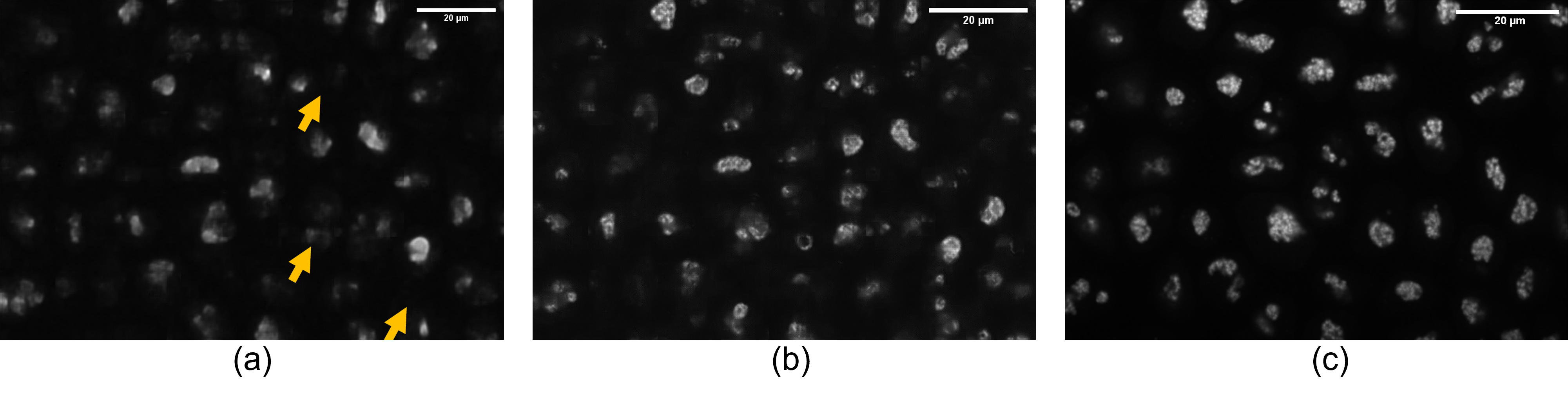

Illustrated in fig. 5, the 3D compression result is visually plausible and the quantitative evaluation metrics are listed in the first row in table 5. The metrics are relatively high, reaching 0.922 in SSIM and 0.949 in correlation. Regarding the compression ratio, 97.74 of space will be saved.

In brief, the above findings suggest that deep-learning based compression methods behave well in the context of microscopic image field, averagely outperform the classic methods in terms of reconstruction ability and compression ratios.

5.2 Downstream Label-free Results

We also conducted an experiment to assess the impact of the aforementioned compression techniques on downstream AI-based bioimage analysis tasks, specifically the label-free task in our study. Our results indicate that in 2D cases, the prediction accuracy is higher when the input image is compressed using deep learning-based methods, as opposed to traditional methods. Furthermore, this accuracy closely aligns with the predictions derived from the raw image, suggesting that deep-learning based compression methods have a minimal impact on the downstream task.

tables 3 and 4 exhibits the influence of data compression to the downstream label-free task in 2D cases. Regarding the comparison of the accuracy between the predictions using compressed input and original input (table 3), we found that although the slight degradation in correlation and PSNR, the average SSIM value among deep learning-based methods is akin to the original prediction and surpasses the classic methods, with ’mbt2018-ms-ssim-8’ model reaching the highest value (0.7439). If we compare the similarity between the predictions using compressed images and original images (table 4), ’mbt2018-ms-ssim-8’ and LERC ranked the highest in SSIM and correlation, respectively.

| Compression | MSE () | SSIM | Correlation | PSNR () |

| original | 0.005 | 0.7333 | 0.7399 | 23.2962 |

| JPEGXR | 0.006 | 0.6541 | 0.4304 | 22.4266 |

| JPEG-2000-LOSSY | 0.0347 | 0.3838 | 0.0238 | 17.3313 |

| LERC | 0.0056 | 0.6841 | 0.7394 | 22.8782 |

| bmshj2018-factorized-mse-8 | 0.0044 | 0.7258 | 0.6382 | 23.9536 |

| bmshj2018-factorized-ms-ssim-8 | 0.0046 | 0.7342 | 0.7093 | 23.7132 |

| bmshj2018-hyperprior-mse-8 | 0.0047 | 0.7128 | 0.6045 | 23.8299 |

| bmshj2018-hyperprior-ms-ssim-8 | 0.0043 | 0.7378 | 0.6912 | 23.9392 |

| mbt2018-mean-mse-8 | 0.0044 | 0.7229 | 0.6097 | 23.9387 |

| mbt2018-mean-ms-ssim-8 | 0.0044 | 0.7415 | 0.7073 | 23.8704 |

| mbt2018-mse-8 | 0.0043 | 0.7194 | 0.6207 | 23.9625 |

| mbt2018-ms-ssim-8 | 0.0044 | 0.7439 | 0.7102 | 23.8387 |

| cheng2020-anchor-mse-6 | 0.0065 | 0.5991 | 0.4375 | 22.4682 |

| cheng2020-anchor-ms-ssim-6 | 0.0045 | 0.7145 | 0.6389 | 23.7949 |

| cheng2020-attn-mse-6 | 0.008 | 0.568 | 0.4208 | 21.8606 |

| cheng2020-attn-ms-ssim-6 | 0.0044 | 0.718 | 0.6418 | 23.8619 |

| Compression | MSE () | SSIM | Correlation | PSNR () |

| original | 0.0 | 1.0 | 1.0 | 108.1308 |

| JPEGXR | 0.0061 | 0.8255 | 0.573 | 22.4105 |

| JPEG-2000-LOSSY | 0.0323 | 0.4835 | 0.0346 | 16.7578 |

| LERC | 0.0003 | 0.9624 | 0.9995 | 52.1393 |

| bmshj2018-factorized-mse-8 | 0.0026 | 0.9103 | 0.859 | 27.0981 |

| bmshj2018-factorized-ms-ssim-8 | 0.0009 | 0.9541 | 0.9483 | 30.9527 |

| bmshj2018-hyperprior-mse-8 | 0.0034 | 0.8904 | 0.8158 | 25.9064 |

| bmshj2018-hyperprior-ms-ssim-8 | 0.0014 | 0.9427 | 0.9232 | 29.4052 |

| mbt2018-mean-mse-8 | 0.0031 | 0.8983 | 0.8161 | 26.1503 |

| mbt2018-mean-ms-ssim-8 | 0.001 | 0.9516 | 0.9451 | 30.7766 |

| mbt2018-mse-8 | 0.003 | 0.8989 | 0.8327 | 26.4424 |

| mbt2018-ms-ssim-8 | 0.0009 | 0.9551 | 0.9483 | 31.0031 |

| cheng2020-anchor-mse-6 | 0.0066 | 0.7776 | 0.5942 | 22.2884 |

| cheng2020-anchor-ms-ssim-6 | 0.0026 | 0.9078 | 0.8499 | 26.3781 |

| cheng2020-attn-mse-6 | 0.0078 | 0.7452 | 0.5713 | 21.769 |

| cheng2020-attn-ms-ssim-6 | 0.0026 | 0.9098 | 0.8523 | 26.5122 |

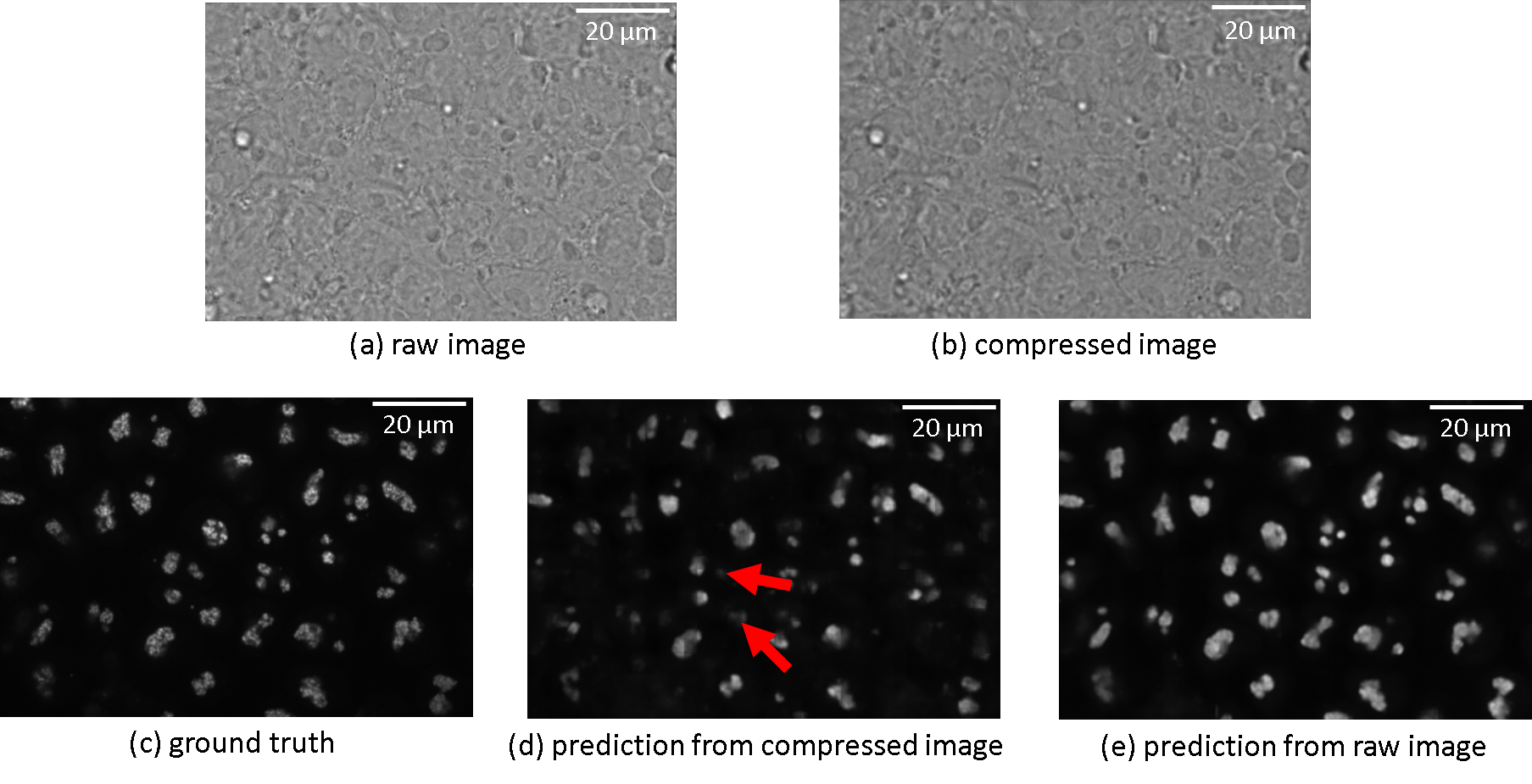

When it comes to 3D cases, The prediction from the compressed image is not comparable to that predicted by the raw bright-field image (2.54 dB in PSNR and 0.08 in SSIM), as shown in the second and third rows from table 5, indicating a quality downgrade during compression. This can be attributed primarily to the ignorance of considering compression in the training phase of the labelfree model, which will be discussed subsequently. Illustrated in fig. 5, despite the visually plausible reconstruction result, the information loss during the compression process also heavily affects the downstream label-free generation task. For instance, The fibrillarin structure pointed by the arrow in the prediction result from the compressed image is missing, which is quite obvious in the corresponding prediction from the raw image.

Briefly, the above result suggests that in 2D cases, the downstream task will be less affected when deep-learning based methods were applied. However, the prediction accuracy will be largely affected in 3D cases.

| Comparison* | MSE | SSIM | Correlation | PSNR () |

| (a) to (b) | 0.3344 | 0.9220 | 0.9484 | 28.1366 |

| (d) to (c) | 0.0010 | 0.8495 | 0.5981 | 30.0605 |

| (e) to (c) | 0.0006 | 0.9268 | 0.9066 | 32.6057 |

| (d) to (e) | 0.0015 | 0.8203 | 0.6576 | 28.5425 |

-

*The index here is consistent with fig. 5. (a). raw bright-field image; (b). compressed bright-field image; (c). label-free ground truth (d). label-free prediction from compressed image; (e). label-free prediction from raw image

Given that the 2D labelfree models were all trained with compressed images, it is also crucial to measure the impact of compression during the training phase in the downstream labelfree task. For this purpose, we devised the following experiment: two label-free models using uncompressed data and JPEGXR-compressed data as input were trained respectively and we compared the performance of these models on JPEG XR compressed input images. Illustrated in fig. 4, we observed significant artifacts in the prediction when the model was not trained on the compressed data used as input, which is subject to the low quality metrics shown in table 1. However, artifacts were almost mitigated when the model was trained with data using the same compression algorithm, which has the closer data distribution. This may also explains the precision drop in 3D cases, where the compression were not considered in the training phase. The above phenomenon highlights the importance of considering compression in the training process in order to achieve favorable outcomes.

6 Conclusion

In this research, we proposed a two-phase evaluation pipeline, in order to benchmark the rate-distortion performance of different data compression techniques in the context of grayscale microscopic brightfield images and fully explored the influence of such compression to the downstream label-free task. We found that AI-based image compression methods can significantly outperform classic compression methods and have minor influence on the following label-free model prediction. Despite some limitations, we hope our work can raise the awareness of the application of deep learning-based image compression in the bioimaging field and provide insights into the way of integration with other AI-based image analysis tasks.

References

- \bibcommenthead

- (1) Walker, L. A., Li, Y., Mcglothlin, M. & Cai, D. Xiang, G. (ed.) A comparison of lossless compression methods in microscopy data storage applications: Microscopy compression comparison. (ed.Xiang, G.) Proceedings of the 2023 6th International Conference on Software Engineering and Information Management, 154–159 (2023).

- (2) Christiansen, E. M. et al. In silico labeling: predicting fluorescent labels in unlabeled images. Cell 173 (3), 792–803 (2018) .

- (3) Ounkomol, C., Seshamani, S., Maleckar, M. M., Collman, F. & Johnson, G. R. Label-free prediction of three-dimensional fluorescence images from transmitted-light microscopy. Nature methods 15 (11), 917–920 (2018) .

- (4) Wallace, G. The jpeg still picture compression standard. IEEE Transactions on Consumer Electronics 38 (1), xviii–xxxiv (1992). 10.1109/30.125072 .

- (5) Marcellin, M., Gormish, M., Bilgin, A. & Boliek, M. An overview of JPEG-2000 523–541 (2002). 10.1109/DCC.2000.838192 .

- (6) Dufaux, F., Sullivan, G. J. & Ebrahimi, T. The JPEG XR image coding standard [Standards in a Nutshell]. IEEE Signal Processing Magazine 26 (6) (2009). 10.1109/MSP.2009.934187 .

- (7) How lerc raster compression works. URL https://www.gpxz.io/blog/lerc.

- (8) Ahmed, N., Natarajan, T. & Rao, K. R. Discrete cosine transform. IEEE transactions on Computers 100 (1), 90–93 (1974) .

- (9) Huffman, D. A. A method for the construction of minimum-redundancy codes. Proceedings of the IRE 40 (9), 1098–1101 (1952) .

- (10) Rissanen, J. & Langdon, G. G. Arithmetic coding. IBM Journal of Research and Development 23 (2), 149–162 (1979). 10.1147/rd.232.0149 .

- (11) Cover, T. M. Elements of information theory (John Wiley & Sons, 1999).

- (12) Shannon, C. E. et al. Coding theorems for a discrete source with a fidelity criterion. IRE Nat. Conv. Rec 4 (142-163), 1 (1959) .

- (13) Shannon, C. E. A mathematical theory of communication. The Bell system technical journal 27 (3), 379–423 (1948) .

- (14) Wang, Z., Simoncelli, E. P. & Bovik, A. C. Multiscale structural similarity for image quality assessment. The Thrity-Seventh Asilomar Conference on Signals, Systems & Computers, 2003 2, 1398–1402 (2003). 10.1109/ACSSC.2003.1292216 .

- (15) Ballé, J., Minnen, D., Singh, S., Hwang, S. J. & Johnston, N. Variational image compression with a scale hyperprior. 6th International Conference on Learning Representations, ICLR 2018 - Conference Track Proceedings (2018). URL https://arxiv.org/abs/1802.01436v2 .

- (16) Minnen, D., Ballé, J. & Toderici, G. Joint Autoregressive and Hierarchical Priors for Learned Image Compression. Advances in Neural Information Processing Systems 2018-December, 10771–10780 (2018). URL https://arxiv.org/abs/1809.02736v1 .

- (17) Ballé, J., Laparra, V. & Simoncelli, E. P. Density modeling of images using a generalized normalization transformation. arXiv preprint arXiv:1511.06281 (2015) .

- (18) Johnston, N., Eban, E., Gordon, A. & Ballé, J. Computationally efficient neural image compression. arXiv preprint arXiv:1912.08771 (2019) .

- (19) Cheng, Z., Sun, H., Takeuchi, M. & Katto, J. Learned Image Compression with Discretized Gaussian Mixture Likelihoods and Attention Modules. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 7936–7945 (2020). 10.1109/CVPR42600.2020.00796 .

- (20) Zhu, Y., Yang, Y. & Cohen, T. Transformer-based transform coding. International Conference on Learning Representations (2021) .

- (21) for Cell Science, A. I. hiPSC single cell image dataset. https://open.quiltdata.com/b/allencell/packages/aics/hipsc_single_cell_image_dataset (2018).

- (22) Bégaint, J., Racapé, F., Feltman, S. & Pushparaja, A. Compressai: a pytorch library and evaluation platform for end-to-end compression research. arXiv preprint arXiv:2011.03029 (2020) .

- (23) Sonneck, J. & Chen, J. Mmv_im2im: An open source microscopy machine vision toolbox for image-to-image transformation. arXiv preprint arXiv:2209.02498 (2022) .

- (24) Sollmann, J. & Chen, J. AI-based Compression Applied on Brigthfield Images used for Fluorescence Prediction. Focus on Microscopy (2023) .