Deep Learning for Visual Localization and Mapping: A Survey

Abstract

Deep learning based localization and mapping approaches have recently emerged as a new research direction and have received significant attention from both industry and academia. Instead of creating hand-designed algorithms based on physical models or geometric theories, deep learning solutions provide an alternative that solves the problem in a data-driven way. Benefiting from the ever-increasing volumes of data and computational power on devices, these learning methods are fast evolving into a new area that shows potential to track self-motion and estimate environmental models accurately and robustly for mobile agents. In this work, we provide a comprehensive survey and propose a taxonomy for localization and mapping methods using deep learning. This survey aims to discuss two basic questions: whether deep learning is promising for localization and mapping, and how deep learning should be applied to solve this problem. To this end, a series of localization and mapping topics are investigated, from learning based visual odometry and global relocalization to mapping and simultaneous localization and mapping (SLAM). It is our hope that this survey organically weaves together the recent works in this vein from the robotics, computer vision and machine learning communities, and serves as a guideline for future researchers to apply deep learning to tackle the problem of visual localization and mapping.

Index Terms:

Deep Learning, Visual SLAM, Visual Odometry, Visual-inertial Odometry, Global Localization

I Introduction

Localization and mapping serve as essential requirements for both human beings and mobile agents. As a motivating example, humans possess the remarkable ability to perceive their own motion and the surrounding environment through multisensory perception. They heavily rely on this awareness to determine their location and navigate through intricate three-dimensional spaces. In a similar vein, mobile agents, encompassing a diverse range of robots like self-driving vehicles, delivery drones, and home service robots, must possess the capability to perceive their environment and estimate positional states through onboard sensors. These agents actively engage in sensing their surroundings and autonomously make decisions [1]. Equivalently, the integration of emerging technologies like Augmented Reality (AR) and Virtual Reality (VR) intertwines the virtual and physical realms, making it imperative for machines to possess perceptual awareness. This awareness forms the foundation for seamless interaction between humans and machines. Furthermore, the applications of these concepts extend to mobile and wearable devices such as smartphones, wristbands, and Internet-of-Things (IoT) devices. These devices offer a wide array of location-based services, ranging from pedestrian navigation and sports/activity monitoring to emergency response.

Enabling a high level of autonomy for these and other digital agents requires precise and robust localization, while incrementally building and maintaining a world model, with the capability to continuously process new information and adapt to various scenarios. In this work, localization broadly refers to the ability to obtain internal system states of robot motion, including locations, orientations and velocities, whilst mapping indicates the capacity to perceive external environmental states, including scene geometry, appearance and semantics. They can act individually to sense internal or external states respectively, or can operate jointly as a simultaneous localization and mapping (SLAM) system.

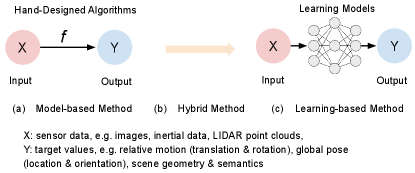

The problem of localization and mapping has been studied for decades, with a range of algorithms and systems being developed, for example, visual odometry [2], visual-inertial odometry [3], image-based relocalization [4], place recognition [5], and SLAM [6]. These algorithms and systems have demonstrated their efficacy in supporting a wide range of real-world applications, such as delivery robots, self-driving vehicles, and VR devices. However, the deployment of these systems is not without challenges. Factors such as imperfect sensor measurements, dynamic scenes, adverse lighting conditions, and real-world constraints hinder their practical implementation. In light of these limitations, recent advancements in machine learning, particularly deep learning, have prompted researchers to explore data-driven approaches as an alternative solution. Unlike conventional model-based approaches that rely on concrete and explicit algorithms tailored to specific application domains, learning-based methods leverage the power of deep neural networks to extract features and construct implicit neural models, as shown in Figure 1. Trained on large datasets, these networks learn to generate poses and describe scenes, even in challenging environments such as those characterized by high dynamics and poor lighting conditions. Consequently, deep learning-based localization and mapping methods can exhibit good robustness and accuracy compared to their traditional counterparts. Deep learning-based localization and mapping remains an active area of research, and further investigations are necessary to fully understand the strengths and limitations of different approaches.

In this article, we extensively review the existing deep learning based visual localization and mapping approaches, and try to explore the answers to the following two questions:

1) Is deep learning promising for visual localization and mapping?

2) How can deep learning be applied to solve the problem of visual localization and mapping?

The two questions will be revisited at the end of this survey. As vision is a major information source for most mobile agents, this work focuses on vision based solutions. The field of deep learning based localization and mapping is still relatively new, and a growing number of different approaches and techniques have been proposed in recent years. Notably, although the problem of localization and mapping is a core topic of robotics, the incorporation of learning methods progresses in tandem with other research areas such as machine learning, computer vision and even natural language processing. This cross-disciplinary nature makes it non-trivial to comprehensively summarize related works in a survey paper. We hope that our survey can help to promote collaboration and knowledge sharing within the research community, foster new ideas, and facilitate interdisciplinary research on deep learning based localization and mapping. In addition, this survey can help to identify key research challenges and open problems in the field, guide future research efforts, and provide guidance for researchers and practitioners who are interested in using deep learning solutions in their work. To the best of our knowledge, this is the first survey article that thoroughly and extensively covers existing work on deep learning for visual localization and mapping.

| Year | Content | Reference |
|---|---|---|
| 2005 | probabilistic SLAM | Thrun et al. [7] |
| 2006 | SLAM tutorial | Durrant-Whyte et al. [8] |
| 2010 | pose-graph SLAM | Grisetti et al. [9] |
| 2011 | visual odometry tutorial | Scaramuzza et al. [10] |
| 2015 | visual place recognition | Lowry et al. [5] |
| 2016 | SLAM in robust-perception age | Cadena et al. [11] |
| 2018 | dynamic SLAM | Saputra et al. [12] |
| 2018 | deep learning for robotics | Sunderhauf et al. [1] |
| 2022 | perception and navigation | Tang et al. [13] |
| 2023 | deep learning based SLAM | This survey |

I-A Comparison with Other Surveys

As an established field, the development of SLAM has been well summarized by several survey papers in the literature [8, 14], with their focus lying on conventional model-based localization and mapping approaches. The seminal survey [11] provides a thorough discussion of existing SLAM works, reviews the history of development and charts several future directions. Although this paper contains a section which briefly discusses deep learning models, it does not overview this field comprehensively, especially given the explosion of research in this area over the past five years. Other SLAM survey papers only focus on individual flavours of SLAM systems, including the probabilistic formulation of SLAM [7], visual odometry [10], pose-graph SLAM [9], and SLAM in dynamic environments [12]. We refer readers to these surveys for a better understanding of the conventional solutions to SLAM systems. On the other hand, [1] discusses the applications of deep learning to robotics research; however, its main focus is not on localization and mapping specifically, but on a more general perspective towards the potential and limits of deep learning in the broad context of robotic policy learning, reasoning and planning. A recent survey [13] discusses deep learning based perception and navigation. Compared to [13], which takes a broader view of environment perception, motion estimation and reinforcement learning based control for autonomous systems, we provide a more comprehensive review and deeper analysis of odometry estimation, relocalization, mapping and other aspects of visual SLAM.

I-B Survey Organization

The remainder of the paper is organized as follows: Section 2 offers an overview and presents a taxonomy of existing deep learning based localization and mapping; Sections 3, 4, 5, 6 discuss the existing deep learning approaches on incremental motion (odometry) estimation, global relocalization, mapping, and SLAM back-ends respectively; Sections 7 and 8 review the learning based uncertainty estimation and sensor fusion methods; and finally Section 9 concludes the article, and discusses the limitations and future prospects.

II Taxonomy of Existing Approaches

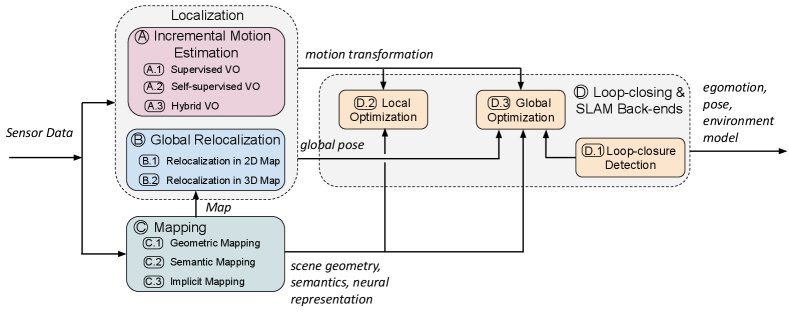

From the perspective of learning approaches, we provide a taxonomy of existing deep learning based visual localization and mapping methods, to connect the fields of robotics, computer vision and machine learning. Based on their main technical contributions towards a complete SLAM system, related approaches can be broadly categorized into four main types in our context: incremental motion estimation (visual odometry), global relocalization, mapping, and loop-closing and SLAM back-ends, as illustrated by the taxonomy shown in Figure 2:

A) Incremental Motion Estimation concerns the calculation of the incremental change in pose, in terms of translation and rotation, between two or more frames of sensor data. It continuously tracks self-motion, and is followed by a process that integrates these pose changes with respect to an initial state to derive the global pose. Incremental motion estimation, i.e. visual odometry (VO), can provide pose information in scenarios without a pre-built map, or serve as an odometry motion model to assist the feedback loop of robot control. Deep learning is applied to estimate motion transformations from various sensor measurements in an end-to-end fashion, or to extract useful features to support a hybrid system.

B) Global Relocalization retrieves the global pose of mobile agents in a known scene with prior knowledge. This is achieved by matching the query input data with a pre-built map or other spatial references. It can be leveraged to reduce the pose drift of a dead reckoning system or to retrieve the absolute pose when motion tracking is lost [7]. Deep learning is used to tackle the tricky data association problem between the query data and the map, which is complicated by changes in views, illumination, weather and scene dynamics.

C) Mapping builds and reconstructs a consistent environmental model to describe the surroundings. Mapping can be used to provide environment information for human operators or high-level robot tasks, constrain the error drift of self-motion tracking, and retrieve the query observation for global localization [11]. Deep learning is leveraged as a useful tool to discover scene geometry and semantics from high-dimensional raw data for mapping. Deep learning based mapping methods are sub-divided into geometric, semantic, and implicit mapping, depending on whether the neural network learns the explicit geometry or semantics of a scene, or encodes the scene into an implicit neural representation.

D) Loop-closing and SLAM Back-ends detect loop closures and optimize the aforementioned incremental motion estimation, global localization and mapping modules to boost the performance of a simultaneous localization and mapping (SLAM) system. These modules ensure the consistency of the entire system as follows: local optimization ensures the local consistency of camera motion and scene geometry; once a loop closure is detected by the loop-closing module, system error drift can be mitigated by global optimization.

Besides the modules mentioned above, other modules that also contribute to a SLAM system include:

E) Uncertainty Estimation provides a metric of belief in the learned poses and mapping, critical to probabilistic sensor fusion and back-end optimization in a SLAM system.

F) Sensor Fusion exploits the complementary properties of each sensor modality, and aims to discover the suitable data fusion strategy such that more accurate and robust localization and mapping can be achieved.

In the following sections, we will discuss these components in detail.

III Incremental Motion Estimation

We begin with incremental motion (odometry) estimation, i.e. visual odometry (VO), which continuously tracks camera egomotion and yields motion transformations. Given an initial state, global trajectories are reconstructed by integrating these incremental poses. Thus, it is critical to keep the estimate of each motion transformation accurate enough to ensure high-precision localization at a global scale. This section presents deep learning approaches to achieve visual odometry.
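To make the integration step concrete, the following is a minimal sketch of how relative motion transformations can be chained into a global trajectory. It assumes each incremental pose is given as a 4x4 homogeneous transform; the helper names `rot_z`, `make_transform` and `integrate_odometry` are hypothetical and chosen only for illustration.

```python
import numpy as np

def rot_z(yaw):
    """Rotation matrix for a yaw angle (radians) about the z-axis."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def make_transform(rotation, translation):
    """Pack a 3x3 rotation and a 3-vector translation into a 4x4 transform."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def integrate_odometry(relative_poses, initial_pose=None):
    """Chain relative transforms T_{k-1,k} into global poses T_{0,k}."""
    pose = np.eye(4) if initial_pose is None else initial_pose.copy()
    trajectory = [pose.copy()]
    for T_rel in relative_poses:
        pose = pose @ T_rel  # each step is expressed in the previous body frame
        trajectory.append(pose.copy())
    return np.stack(trajectory)

# Four steps of "move 1 m forward, then turn 90 degrees left" trace a square.
step = make_transform(rot_z(np.pi / 2), [1.0, 0.0, 0.0])
trajectory = integrate_odometry([step] * 4)
```

Because every global pose is a product of all previous increments, a small error in any single step propagates to all later poses, which is why per-step accuracy matters for the global trajectory.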

Deep learning is capable of extracting high-level feature representations directly from raw images, and thereby provides an alternative way to solve the visual odometry (VO) problem without requiring hand-crafted feature detectors. Existing deep learning based VO models can be categorized into end-to-end VO and hybrid VO, depending on whether they are purely DNN based or a combination of classical VO algorithms and DNNs. Depending on the availability of ground-truth labels in the training phase, end-to-end VO systems are further classified into supervised VO and unsupervised VO. Table II lists and compares deep learning based visual odometry methods.

III-A Supervised Learning of Visual Odometry

Supervised learning based visual odometry (VO) methods aim to train a deep neural network model on labelled datasets to construct a function from consecutive images to motion transformations, instead of exploiting the geometric structures of images as in conventional VO algorithms [10]. At its most basic, the input to the deep neural network (DNN) consists of a pair of consecutive images, while the output corresponds to the estimated translation and rotation between the two frames of images.

One of the early works in this area is Konda et al. [18]. Their approach formulates visual odometry (VO) as a classification problem, and predicts the discrete changes of direction and velocity from input images using a convolutional neural network (ConvNet). However, this method is limited in its ability to estimate the full camera trajectory, and relies on a series of discrete motion estimates instead. Costante et al. [19] propose a method that overcomes some of the limitations of the Konda et al. [18] approach by using dense optical flow to extract visual features, and then using a ConvNet to estimate the frame-to-frame motion of the camera. This method shows performance improvements over the Konda et al. approach, and can generate smoother and more accurate camera trajectories. Despite the promising results of both approaches, they are not strictly an end-to-end learning model from images to motion estimates, and still fall short of traditional VO algorithms, e.g. VISO2 [20] in terms of accuracy and robustness. One limitation of both methods is that they do not fully exploit the rich geometric information contained in the input images, which is crucial for accurate motion estimation. Furthermore, the datasets used to train and evaluate these approaches are limited in their diversity and may not generalize well to different scenarios.

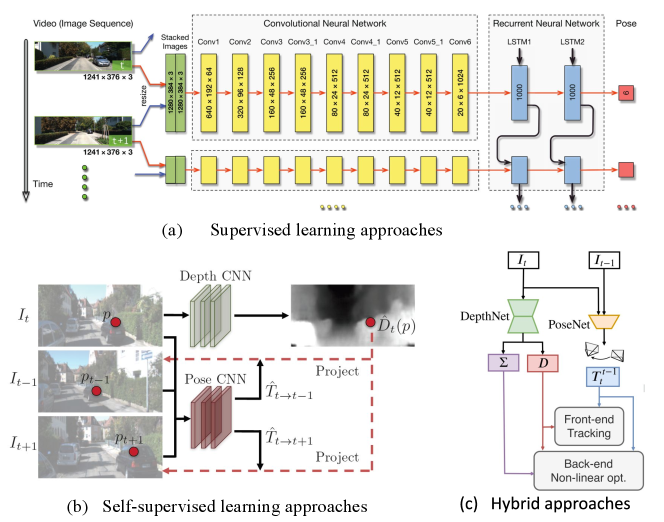

To enable end-to-end learning of visual odometry, DeepVO [15] utilizes a combination of a convolutional neural network (ConvNet) and a recurrent neural network (RNN). Figure 3 (a) shows the architecture of this typical RNN+ConvNet based VO model, which extracts visual features from pairs of images via a ConvNet, and passes the features through RNNs to model their temporal correlation. Its ConvNet encoder is based on a FlowNet [21] structure to extract visual features suitable for optical flow and self-motion estimation. The recurrent model summarizes history information into its hidden states, so that the output is inferred from both past experience and current ConvNet features from sensor observations. DeepVO is trained on datasets with ground-truth poses as training labels. To recover the optimal parameters $\theta^{*}$ of this framework, the optimization target is to minimize the Mean Square Error (MSE) of the estimated translations $\hat{\mathbf{p}}$ and Euler angle based rotations $\hat{\boldsymbol{\varphi}}$:

$$
\theta^{*} = \arg\min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \sum_{t=1}^{T} \left( \left\| \hat{\mathbf{p}}_t - \mathbf{p}_t \right\|_2^2 + \left\| \hat{\boldsymbol{\varphi}}_t - \boldsymbol{\varphi}_t \right\|_2^2 \right) \tag{1}
$$

where $(\hat{\mathbf{p}}_t, \hat{\boldsymbol{\varphi}}_t)$ are the estimates of relative pose from the DNN at timestep $t$, $(\mathbf{p}_t, \boldsymbol{\varphi}_t)$ are the corresponding ground-truth values, $\theta$ are the parameters of the DNN framework, $N$ is the number of samples, and $T$ is the number of timesteps in each sample. This data-driven solution reports good results on estimating the pose of driving vehicles on several benchmarks. On the KITTI odometry dataset [22], it shows competitive performance against conventional monocular VO, e.g. VISO2 [20] and ORB-SLAM (without loop closure) [6]. It is worth noting that supervised VO naturally produces trajectories with absolute scale from a monocular camera, while classical monocular VO algorithms are scale-ambiguous. This is probably because the DNN implicitly learns and maintains the global scale from large collections of images. Although DeepVO reports good results in experimental scenarios, its performance has still not been extensively evaluated on large-scale datasets (e.g., across cities) or in real-world experiments/demonstrations in the wild.
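The MSE objective described above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the DeepVO training code: the array layout (samples, timesteps, 3) and the optional `rot_weight` balancing factor are assumptions introduced here, and with the default `rot_weight=1.0` translation and rotation errors are weighted equally.

```python
import numpy as np

def supervised_vo_loss(pred_t, pred_r, gt_t, gt_r, rot_weight=1.0):
    """Squared pose error summed over timesteps and averaged over samples.

    All arrays are assumed to have shape (N, T, 3): N samples, T timesteps,
    and a 3-vector for translation or Euler-angle rotation.
    rot_weight is a hypothetical balancing factor (1.0 means equal weighting).
    """
    trans_err = np.sum((pred_t - gt_t) ** 2, axis=-1)  # (N, T)
    rot_err = np.sum((pred_r - gt_r) ** 2, axis=-1)    # (N, T)
    per_sample = np.sum(trans_err + rot_weight * rot_err, axis=1)  # (N,)
    return float(np.mean(per_sample))

# Toy check: every translation component is off by 1, rotations are exact.
zeros = np.zeros((2, 3, 3))
ones = np.ones((2, 3, 3))
loss_translation_only = supervised_vo_loss(ones, zeros, zeros, zeros)
```

In a real training loop the same expression would be written in an autodiff framework so that its gradient with respect to the network parameters can be computed.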

| Supervision | Model | Year | Sensor | Scale | Performance (Seq09) | Performance (Seq10) | Contributions |
|---|---|---|---|---|---|---|---|
| Supervised | Konda et al. [18] | 2015 | MC | Yes | - | - | formulate VO as a classification problem |
| Supervised | Costante et al. [19] | 2016 | MC | Yes | 6.75 | 21.23 | extract features from optical flow for VO estimates |
| Supervised | DeepVO [15] | 2017 | MC | Yes | - | 8.11 | combine RNN and ConvNet for end-to-end learning |
| Supervised | Zhao et al. [23] | 2018 | MC | Yes | - | 4.38 | generate dense 3D flow for VO and mapping |
| Supervised | Saputra et al. [24] | 2019 | MC | Yes | - | 8.29 | curriculum learning and geometric loss constraints |
| Supervised | Xue et al. [25] | 2019 | MC | Yes | - | 3.47 | memory and refinement module |
| Supervised | Saputra et al. [26] | 2019 | MC | Yes | - | - | knowledge distilling to compress deep VO model |
| Supervised | Koumis et al. [27] | 2019 | MC | Yes | - | - | 3D convolutional networks |
| Supervised | DAVO [28] | 2020 | MC | Yes | - | 5.37 | use attention to weight semantics and optical flow |
| Self-supervised | SfmLearner [16] | 2017 | MC | No | 17.84 | 37.91 | novel view synthesis for self-supervised learning |
| Self-supervised | UnDeepVO [29] | 2018 | SC | Yes | 7.01 | 10.63 | use fixed stereo line to recover scale metric |
| Self-supervised | GeoNet [30] | 2018 | MC | No | 43.76 | 35.6 | geometric consistency loss and 2D flow generator |
| Self-supervised | Zhan et al. [31] | 2018 | SC | Yes | 11.92 | 12.45 | use fixed stereo line for scale recovery |
| Self-supervised | Struct2Depth [32] | 2019 | MC | No | 10.2 | 28.9 | introduce 3D geometry structure during learning |
| Self-supervised | GANVO [33] | 2019 | MC | No | - | - | adversarial learning to generate depth |
| Self-supervised | Wang et al. [34] | 2019 | MC | Yes | 9.30 | 7.21 | integrate RNN and flow consistency constraint |
| Self-supervised | Li et al. [35] | 2019 | MC | No | - | - | global optimization for pose graph |
| Self-supervised | Gordon [36] | 2019 | MC | No | 2.7 | 6.8 | camera matrix learning |
| Self-supervised | Bian et al. [37] | 2019 | MC | No | 11.2 | 10.1 | consistent scale from monocular images |
| Self-supervised | Li et al. [38] | 2020 | MC | No | 5.89 | 4.79 | meta learning to adapt into new environment |
| Self-supervised | Zou et al. [39] | 2020 | MC | No | 3.49 | 5.81 | model the long-term dependency |
| Self-supervised | Zhao et al. [40] | 2021 | MC | No | 8.71 | 9.63 | introduce masked GAN to remove inconsistency |
| Self-supervised | Chi et al. [41] | 2021 | MC | No | 2.02 | 1.81 | collaborative learning of optical flow, depth and motion |
| Self-supervised | Li et al. [42] | 2021 | MC | No | 1.87 | 1.93 | online adaptation |
| Self-supervised | Sun et al. [55] | 2022 | MC | No | 7.14 | 7.72 | introduce cover and filter masks |
| Self-supervised | Dai et al. [43] | 2022 | MC | No | 3.24 | 1.03 | introduce attention and pose graph optimization |
| Self-supervised | VRVO [44] | 2022 | MC | Yes | 1.55 | 2.75 | use virtual data to recover scale |
| Hybrid | Backprop KF [45] | 2016 | MC | Yes | - | - | a differentiable Kalman filter based VO |
| Hybrid | Yin et al. [46] | 2017 | MC | Yes | 4.14 | 1.70 | introduce learned depth to recover scale metric |
| Hybrid | Barnes et al. [47] | 2018 | MC | Yes | - | - | integrate learned depth and ephemeral masks |
| Hybrid | DPF [48] | 2018 | MC | Yes | - | - | a differentiable particle filter based VO |
| Hybrid | Yang et al. [49] | 2018 | MC | Yes | 0.83 | 0.74 | use learned depth into classical VO |
| Hybrid | CNN-SVO [50] | 2019 | MC | Yes | 10.69 | 4.84 | use learned depth to initialize SVO |
| Hybrid | Zhan et al. [51] | 2020 | MC | Yes | 2.61 | 2.29 | integrate learned optical flow and depth |
| Hybrid | Wagstaff et al. [52] | 2020 | MC | Yes | 2.82 | 3.81 | integrate classical VO with learned pose corrections |
| Hybrid | D3VO [17] | 2020 | MC | Yes | 0.78 | 0.62 | integrate learned depth, uncertainty and pose |
| Hybrid | Sun et al. [53] | 2022 | MC | Yes | - | - | integrate learned depth into DSO |

- Year indicates the publication year (e.g. the date of conference) of each work.
- Sensor: MC and SC represent monocular camera and stereo camera respectively.
- Supervision represents whether it is a supervised or unsupervised end-to-end model, or a hybrid model.
- Scale indicates whether a trajectory with a global scale can be produced.
- Performance reports the localization error (a smaller number is better), i.e. the averaged translational RMSE drift (%) on lengths of 100m-800m on the KITTI odometry dataset [22]. Most works were evaluated on Sequences 09 and 10, and thus we took the results on these two sequences from their original papers for a performance comparison. Note that the training sets may be different in each work.
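As a rough illustration of the drift metric reported in the Performance column, the sketch below averages the relative translation error over sub-sequences of fixed path length. It is a simplified approximation written for this explanation, not the official KITTI evaluation code: the function names, the start-frame `stride` of 10, and the handling of sub-sequences that run past the end of the trajectory are all assumptions.

```python
import numpy as np

def path_lengths(poses):
    """Cumulative distance travelled along a trajectory of 4x4 poses."""
    positions = poses[:, :3, 3]
    steps = np.linalg.norm(np.diff(positions, axis=0), axis=1)
    return np.concatenate([[0.0], np.cumsum(steps)])

def translational_drift_percent(gt_poses, est_poses,
                                lengths=(100, 200, 300, 400, 500, 600, 700, 800),
                                stride=10):
    """Average translation error per metre travelled, in percent."""
    dist = path_lengths(gt_poses)
    errors = []
    for start in range(0, len(gt_poses), stride):
        for length in lengths:
            # First frame that lies at least `length` metres further along.
            end = int(np.searchsorted(dist, dist[start] + length))
            if end >= len(gt_poses):
                continue  # sub-sequence would run past the trajectory end
            gt_rel = np.linalg.inv(gt_poses[start]) @ gt_poses[end]
            est_rel = np.linalg.inv(est_poses[start]) @ est_poses[end]
            residual = np.linalg.inv(est_rel) @ gt_rel
            errors.append(np.linalg.norm(residual[:3, 3]) / length)
    return 100.0 * float(np.mean(errors)) if errors else float("nan")

def straight_line(n_frames, step):
    """Toy trajectory: constant-speed motion along x with identity rotation."""
    poses = np.tile(np.eye(4), (n_frames, 1, 1))
    poses[:, 0, 3] = step * np.arange(n_frames)
    return poses

gt = straight_line(1001, 1.0)
est = straight_line(1001, 1.1)  # estimate overshoots every step by 10%
drift = translational_drift_percent(gt, est)
```

On this toy example the estimate overshoots each metre by 10 cm, so the reported drift is about 10%.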

Enhancing the generalization capability of supervised Visual Odometry (VO) models and improving their efficacy for operating in real-time on devices with limited resources are still formidable challenges. While supervised learning-based VO is trained on extensive datasets of image sequences with ground-truth poses, not all sequences are equally informative or challenging for the model to learn. Curriculum learning is a technique that gradually elevates the complexity of the training data by initially presenting simple sequences and progressively introducing more challenging ones. In [24], curriculum learning is integrated into the supervised VO model by increasing the amount of motion and rotation in the training sequences, enabling the model to learn to estimate camera motion more robustly and generalize better to new data. Knowledge distillation is another approach that can be introduced to improve the efficiency of supervised VO models by compressing a large model through teaching a smaller one. This method is applied in [26], reducing the number of network parameters and making the model more suitable for real-time operation on mobile devices. Compared to pure supervised VO without knowledge distillation, this method significantly reduces network parameters by 92.95% and enhances computation speed by 2.12 times.
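The two training strategies above can be sketched generically as follows. This is a minimal illustration under stated assumptions and not the formulation used in [24] or [26]: `curriculum_stages` assumes a scalar difficulty score per training sequence (for example, the mean motion magnitude), and `distillation_pose_loss` assumes a simple blend, controlled by a hypothetical weight `alpha`, between the error against ground truth and the error against a teacher network's prediction.

```python
import numpy as np

def curriculum_stages(difficulty, n_stages):
    """Return index lists that grow from the easiest sequences to all of them."""
    order = np.argsort(difficulty, kind="stable")  # easiest first
    n = len(order)
    return [order[: int(np.ceil(n * (k + 1) / n_stages))].tolist()
            for k in range(n_stages)]

def distillation_pose_loss(student, teacher, ground_truth, alpha=0.5):
    """Blend ground-truth error and teacher-imitation error for pose regression.

    Inputs are assumed to have shape (batch, pose_dim).
    """
    hard = np.mean(np.sum((student - ground_truth) ** 2, axis=-1))
    soft = np.mean(np.sum((student - teacher) ** 2, axis=-1))
    return float(alpha * hard + (1.0 - alpha) * soft)

# Four sequences with increasing "motion magnitude" scores, two stages.
stages = curriculum_stages(np.array([3.0, 1.0, 2.0, 4.0]), n_stages=2)

# Toy poses: student predicts 0, teacher predicts 1, ground truth is 2.
student = np.zeros((4, 6))
teacher = np.ones((4, 6))
truth = 2.0 * np.ones((4, 6))
kd_loss = distillation_pose_loss(student, teacher, truth, alpha=0.5)
```

Here the first stage trains on the two easiest sequences only and the second stage on all four, while the distillation loss lets a small student learn from both the labels and the larger teacher.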

Furthermore, to enhance the localization performance, a memory module that stores global information about the scene and camera motion is introduced in [25]. The background information is then utilized by a refining module that enhances the accuracy of the predicted camera poses. Additionally, attention mechanisms have been implemented to weigh the inputs from different sources and enhance the efficacy of supervised VO models. For example, DAVO [28] integrates an attention module to weigh the inputs from semantic segmentation, optical flow, and RGB images, leading to improved odometry estimation performance. Despite the promising end-to-end learning performance achieved on publicly available datasets by these supervised VO frameworks, their deployment performance in real-world scenarios remains to be further verified as of the writing of this survey.

Overall, supervised learning-based visual odometry models primarily rely on ConvNet or RNN to learn pose transformations automatically from raw images. Recent advancements in machine learning, including attention mechanisms, GANs, and knowledge distillation, have allowed these models to extract more expressive visual features and accurately model motion. However, these learning methods often require a vast amount of training data with precise poses as labels to optimize model parameters and improve robustness. While supervised learning-based VO models have demonstrated promising end-to-end learning performance on publicly available datasets, their deployment performance in real-world scenarios requires further validation. Additionally, obtaining labeled data is often time-consuming and costly, and inaccurate labels can occur. In the following section, we will discuss recent efforts to address the issue of label scarcity through self-supervised learning techniques.

III-B Self-supervised Learning of Visual Odometry

There is growing interest in exploring self-supervised learning of visual odometry (VO). Self-supervised solutions are capable of exploiting unlabelled sensor data, and thus save the human effort of labelling. Compared with supervised approaches, they normally show better adaptation ability in new scenarios, where no labelled data are available. This has been achieved in a self-supervised framework that jointly learns camera ego-motion and depth from video sequences, by utilizing view synthesis as a self-supervisory signal [16].

As shown in Figure 3 (b), a typical self-supervised VO framework [16] consists of a depth network to predict depth maps, and a pose network to produce motion transformations between images. The entire framework takes consecutive images as input, and the supervision signal is based on novel view synthesis: given a source image $I_s$, the view synthesis task is to generate a synthetic target image $\hat{I}_s$. A pixel of the source image $I_s(p_s)$ is projected onto the target view $I_t(p_t)$ via:

$$
p_s \sim \mathbf{K} \, \mathbf{T}_{t \rightarrow s} \, D_t(p_t) \, \mathbf{K}^{-1} p_t \tag{2}
$$

where $\mathbf{K}$ is the camera’s intrinsic matrix, $\mathbf{T}_{t \rightarrow s}$ denotes the camera motion matrix from the target frame to the source frame, and $D_t(p_t)$ denotes the per-pixel depth map in the target frame. The training objective is to ensure the consistency of the scene geometry by optimizing the photometric reconstruction loss between the real target image and the synthetic one:

$$
\mathcal{L}_{\text{photo}} = \sum_{p} \left| I_t(p) - \hat{I}_s(p) \right| \tag{3}
$$

where $p$ denotes pixel coordinates, $I_t$ is the target image, and $\hat{I}_s$ is the synthetic target image generated from the source image $I_s$.
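The projection and the photometric loss described above can be illustrated with a short NumPy sketch. It handles a single pixel and omits the differentiable bilinear sampling that a full view-synthesis pipeline needs; the function names and the example intrinsics are assumptions made for illustration.

```python
import numpy as np

def project_to_source(p_t, depth_t, K, T_t2s):
    """Map a target pixel (u, v) with known depth to source-image coordinates."""
    p_h = np.array([p_t[0], p_t[1], 1.0])             # homogeneous pixel
    point_t = depth_t * (np.linalg.inv(K) @ p_h)      # back-project to 3D
    point_s = T_t2s[:3, :3] @ point_t + T_t2s[:3, 3]  # move into source frame
    proj = K @ point_s
    return proj[:2] / proj[2]                         # perspective division

def photometric_loss(target, synthesized):
    """Sum of absolute intensity differences over all pixels."""
    return float(np.sum(np.abs(target - synthesized)))

# Assumed pinhole intrinsics: focal length 500 px, principal point (320, 240).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])

# A pure sideways shift of 0.1 m between the two frames.
T = np.eye(4)
T[0, 3] = 0.1
p_s = project_to_source((320.0, 240.0), depth_t=5.0, K=K, T_t2s=T)
```

For a point 5 m away, the 0.1 m shift moves the pixel by 500 × 0.1 / 5 = 10 px horizontally, which shows how depth and pose jointly determine where each target pixel samples the source image.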

However, two main problems remain unsolved in the original work [16]: 1) this monocular image based approach is not able to provide pose estimates in a consistent global scale. Due to the scale ambiguity, no physically meaningful global trajectory can be reconstructed, limiting its real-world usage; 2) the photometric loss assumes that the scene is static and free of camera occlusions. Although the authors propose the use of an explainability mask to remove scene dynamics, the influence of these environmental factors is still not addressed completely, so the assumption is violated in practice.

To solve the global scale problem, [29, 31] propose to utilize stereo image pairs to recover the absolute scale of pose estimation. They introduce an additional spatial photometric loss between the left and right pairs of images, as the stereo baseline (i.e. the motion transformation between the left and right images) is fixed and known throughout the dataset. Once the training is complete, the network produces pose predictions using only monocular images. Compared with [16], they are able to produce camera poses with global metric scale and higher accuracy. Another approach, VRVO [44], uses virtual stereo data from a simulator to recover the absolute scale of pose estimation. It utilizes a generative adversarial network (GAN) to generate virtual stereo data that is similar to real-world data. By bridging the gap between virtual and real data using adversarial learning, the pose network is then trained using the virtual data to recover the absolute scale of pose estimation. [37] tackles the scale issue by introducing a geometric consistency loss that enforces the consistency between predicted depth maps and reconstructed depth maps. The framework transforms the predicted depth maps into 3D space, and projects them back to produce reconstructed depth maps. By doing so, the depth predictions can remain scale-consistent over consecutive frames, enabling pose estimates to be scale-consistent as well. Different from previous works that use either stereo images [29, 31] or virtual data [44], this work successfully produces scale-consistent camera poses and depth estimates using only monocular images.
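The idea of penalising disagreement between predicted and reconstructed depth maps can be sketched as below. The normalised absolute difference used here is one common way to express such a consistency term and is an assumption of this sketch; the exact loss in [37] is not reproduced in this survey, and the warping step that produces the reconstructed depth map is omitted.

```python
import numpy as np

def geometric_consistency_loss(predicted_depth, reconstructed_depth, eps=1e-8):
    """Mean normalised difference between two depth maps of the same view.

    Dividing by the sum keeps each per-pixel term in [0, 1), so near and far
    pixels contribute on a comparable scale.
    """
    diff = np.abs(predicted_depth - reconstructed_depth)
    total = predicted_depth + reconstructed_depth + eps
    return float(np.mean(diff / total))

# Depth predicted directly for a frame vs. depth reconstructed from a neighbour.
consistent = geometric_consistency_loss(2.0 * np.ones((4, 4)), 2.0 * np.ones((4, 4)))
inconsistent = geometric_consistency_loss(np.ones((4, 4)), 3.0 * np.ones((4, 4)))
```

The term is zero only when both depth maps agree, so minimising it discourages the depth network from changing its scale between consecutive frames.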

The photometric consistency constraint is based on the assumption that the entire scene consists only of rigid static structures such as buildings and lanes. However, in real-world applications, the presence of environmental dynamics such as pedestrians and vehicles can cause distortion in the photometric projection, leading to reduced accuracy in pose estimation. To address this concern, GeoNet [30] divides its learning process into two sub-tasks by estimating static scene structures and motion dynamics separately through a rigid structure reconstructor and a non-rigid motion localizer. In addition, GeoNet enforces a geometric consistency loss to mitigate the issues caused by camera occlusions and non-Lambertian surfaces. [23] adds a 2D flow generator along with a depth network to generate 3D flow. Benefiting from a better 3D understanding of the environment, this framework is able to produce more accurate camera poses, along with a point cloud map. GANVO [33] employs a generative adversarial learning paradigm for depth generation and introduces a temporal recurrent module for pose regression. This method improves accuracy in depth map and pose estimation, and better tolerates environmental dynamics. [54] also utilizes a generative adversarial network (GAN) to generate more realistic depth maps and poses, and further encourages more accurate synthetic images in the target frame. Instead of hand-crafted metrics, a discriminator is used to evaluate the quality of synthetic image generation. In doing so, the generative adversarial setup encourages the generated depth maps to be more texture-rich and crisper. In this way, high-level scene perception and representation are accurately captured and environmental dynamics are implicitly tolerated. [40] introduces a masked GAN into joint learning of depth and visual odometry (VO) estimation, addressing influences from light-condition changes and occlusions.
By incorporating MaskNet and a Boolean mask scheme, it mitigates the impacts of occlusions and visual field changes, improving adversarial loss and image reconstruction. A scale-consistency loss ensures accurate pose estimation in long monocular sequences. Similarly, [55] introduces hybrid masks to mitigate the negative impact of dynamic environments. Cover masks and filter masks alleviate adverse effects on VO estimation and view reconstruction processes. Both approaches demonstrate competitive depth prediction and globally consistent VO estimation in car-driving scenarios.
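A common ingredient of the mask-based methods above is to exclude unreliable pixels from the reconstruction error. The sketch below shows this in its simplest form, assuming a binary validity mask is already available; how the mask is predicted (for example by a dedicated network) is not shown, and the function name is hypothetical.

```python
import numpy as np

def masked_photometric_loss(target, synthesized, mask):
    """Mean absolute error over pixels where mask == 1 (valid, static, visible)."""
    mask = mask.astype(float)
    valid = mask.sum()
    if valid == 0:
        return 0.0  # nothing to supervise in this image
    return float(np.sum(mask * np.abs(target - synthesized)) / valid)

target = np.array([[1.0, 2.0], [3.0, 4.0]])
synthesized = np.zeros((2, 2))
mask = np.array([[1, 0], [0, 1]])  # ignore the two off-diagonal pixels
loss = masked_photometric_loss(target, synthesized, mask)
```

Only the two unmasked pixels contribute, so occluded or moving regions no longer pull the pose and depth estimates towards an incorrect solution.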

Recent attempts [38, 42] design online learning strategies that enable the learned model to adapt to new environments. These approaches allow the model to automatically update its parameters and learn from new data without forgetting previously learned knowledge. Collaborative learning of multiple tasks, such as optical flow, depth, and camera motion estimation, has also been shown to improve the performance of self-supervised VO [41]. By jointly optimizing the different learning targets, it exploits the complementary information between them and thus learns more robust representations for pose estimation. To further improve visual odometry, [43] proposes a self-supervised VO with an attention mechanism and pose graph optimization. The introduced attention mechanism is sensitive to geometrical structure and helps to accurately regress the rotation matrix.

Overall, self-supervised learning-based visual odometry methods have emerged as a promising approach for estimating camera poses and scene depths without the need for labeled data during training. They normally consist of two ConvNet-based neural networks: one for depth estimation and the other for pose estimation. Compared to supervised learning-based methods, self-supervised approaches offer several advantages, including the ability to handle non-rigid dynamics and adapt to new environments in real time. However, despite these benefits, self-supervised VO methods still underperform their supervised counterparts, and challenges with scale and scene dynamics remain.

As demonstrated in Table II, self-supervised VO still cannot compete with supervised VO in performance, although its problems with metric scale and scene dynamics have been largely resolved through the efforts of many researchers. With the benefits of self-supervised learning and its steadily improving performance, self-supervised VO is a promising solution for deep learning based SLAM. Currently, end-to-end learning based VO has not been shown to surpass state-of-the-art model-based VO in performance. The next section shows how to combine the benefits of both sides to construct hybrid approaches.

III-C Hybrid Visual Odometry

Unlike end-to-end approaches that rely solely on a deep neural network to interpret pose from data, hybrid approaches combine classical geometric models with a deep learning framework. The deep neural network is used to replace part of a geometry model, which allows for more expressive representations.

One of the key challenges in traditional monocular visual odometry (VO) is the scale-ambiguity problem, where monocular VOs can only estimate relative scale. This poses a problem in scenarios where absolute scale is required. One way to solve this issue is to integrate learned depth estimates into a classical visual odometry algorithm, which helps to recover the absolute scale metric of poses. Depth estimation is a well-established research area in computer vision, and various methods have been proposed to tackle this problem. For instance, Godard et al. [56] proposed a deep neural model that predicts per-pixel depths in an absolute scale. The details of depth learning are discussed in Section V-A1.

In [46], a ConvNet produces coarse depth values from raw images, which are then refined by conditional random fields. The scale factor is calculated by comparing the estimated depth predictions with the observed point positions. Once the scale factor is obtained, ego-motions with absolute scale are obtained by multiplying the translations estimated by a monocular VO algorithm by this scale factor. This approach mitigates the scale problem by incorporating depth information. Additionally, [47] proposes the integration of predicted ephemeral masks (i.e., the area of moving objects) with depth maps in a traditional VO system to enhance its robustness to moving objects. This method enables the system to produce metric-scale pose estimates using a single camera, even when a significant portion of the image is obscured by dynamic objects. [52] proposes to combine a classical VO with learned pose corrections, which largely reduces the error drift of classical VOs. Instead of directly regressing inter-frame pose changes as pure learning based VOs do, this approach regresses pose corrections from data, without the need for pose ground truth as training data. Similarly, [53] proposes to improve classical monocular VO with learned depth estimates. This framework consists of a monocular depth estimation module with two separate working modes to assist localization and mapping, and it demonstrates strong generalization ability to diverse scenes compared with existing learning based VOs. Furthermore, [51] integrates learned depth and optical flow predictions into a conventional VO model. Specifically, this framework uses optical flow and single-view depth predictions from deep ConvNets as intermediate outputs to establish 2D-2D/3D-2D correspondences, and the depth estimates with consistent scale can mitigate the scale drift issue in monocular VO/SLAM systems. By integrating deep predictions with geometry-based methods, the study shows that deep VO models can complement standard VO/SLAM systems.
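The scale-recovery idea described for [46] can be illustrated with a minimal sketch. It is not the implementation of [46]: the function name `recover_scale`, the use of a median ratio as the comparison rule, and the sample numbers are all assumptions made for illustration.

```python
import numpy as np

def recover_scale(predicted_depths, vo_depths):
    """Estimate one global scale factor from learned metric depths and the
    up-to-scale depths of the same points triangulated by a monocular VO."""
    predicted_depths = np.asarray(predicted_depths, dtype=float)
    vo_depths = np.asarray(vo_depths, dtype=float)
    valid = (predicted_depths > 0) & (vo_depths > 0)
    # Assumption: a median ratio is used because it tolerates a few bad matches.
    return float(np.median(predicted_depths[valid] / vo_depths[valid]))

# Hypothetical values: depths in metres from a depth network, and depths of
# the same points in the arbitrary scale of a monocular VO (last one is bad).
predicted = [10.0, 20.5, 5.1, 40.0]
triangulated = [2.0, 4.0, 1.0, 1.0]

scale = recover_scale(predicted, triangulated)

# Rescale an up-to-scale VO translation into metric units.
t_vo = np.array([0.1, 0.0, 0.2])
t_metric = scale * t_vo
```

Only the translation is rescaled; the rotation estimated by the monocular VO is unaffected by the scale ambiguity.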

D3VO [17] incorporates the predictions of depth, pose, and photometric uncertainty from deep neural networks into direct visual odometry (DVO) [57]. In D3VO, a self-supervised framework is employed to learn depth and ego-motion jointly, similar to the approaches discussed in Section III-B. D3VO employs the uncertainty estimation method proposed by [58] to generate a photometric uncertainty map that indicates which parts of the visual observations can be trusted. As illustrated in Fig. 3 (c), the learned depth and pose estimates are integrated into the front-end of a VO algorithm, and the uncertainties are used in the system back-end. This method shows impressive results on the KITTI [22] and EuRoC [59] benchmarks, surpassing several popular conventional VO/VIO systems, e.g., DSO [60], ORB-SLAM [6] and VINS-Mono [3]. This indicates the promise of integrating learning methods with geometric models.

In addition to geometric models, there have been studies that combine physical motion models with deep neural networks, such as through a differentiable Kalman filter [45, 61] or a differentiable particle filter [48]. In [45], the Kalman filter is transformed into a differentiable module that is combined with deep neural networks for end-to-end training. [61] proposes DynaNet, a hybrid model integrating deep neural networks (DNNs) and state-space models (SSMs) to leverage their strengths. DynaNet enhances interpretability and robustness in car-driving scenarios by combining powerful feature representation from DNNs with explicit modeling of physical processes from SSMs. The incorporation of a recursive Kalman filter enables optimal filtering on the feature state space, facilitating accurate position estimation, and makes it possible to detect failures through internal filtering model parameters such as the rate of innovation (Kalman gain). Instead of a Kalman filter, [48] presents a differentiable particle filter with learnable motion and measurement models. The proposed differentiable particle filter can approximate complex nonlinear functions, allowing for efficient training of motion models by optimizing state estimation performance. Both works incorporate the physical motion model of visual odometry into the state update process of filtering. Thus, the physical model serves as an algorithmic prior in the learning process. Compared with ConvNet or LSTM based models, differentiable filters improve the data efficiency and generalization ability of learning based motion estimation.
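For readers unfamiliar with the filtering step that such models build on, the following is a minimal sketch of one linear Kalman predict/update cycle. It is written in NumPy for illustration only, so it is not itself differentiable; in the cited works the same algebra would be expressed in an automatic-differentiation framework, with some inputs produced by neural networks. The constant-velocity model and all numerical values are assumptions, not taken from [45] or [61].

```python
import numpy as np

def kalman_step(x, P, z, F, Q, H, R):
    """One predict/update cycle of a linear Kalman filter."""
    # Predict: propagate state and covariance through the motion model.
    x_pred = F @ x
    P_pred = F @ P @ F.T + Q
    # Update: correct the prediction with the measurement z.
    innovation = z - H @ x_pred
    S = H @ P_pred @ H.T + R              # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)   # Kalman gain
    x_new = x_pred + K @ innovation
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new, K

# Assumed toy model: state = [position, velocity], position is observed.
dt = 1.0
F = np.array([[1.0, dt], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q = 0.01 * np.eye(2)
R = np.array([[0.1]])

x0 = np.array([0.0, 1.0])
P0 = np.eye(2)
z = np.array([1.2])   # hypothetical measurement, e.g. decoded from image features

x1, P1, K = kalman_step(x0, P0, z, F, Q, H, R)
```

Because every operation is a matrix product, sum, or inverse, gradients can flow through the whole step when it is implemented in a differentiable framework, which is what allows the motion model to act as a prior during training.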

In summary, hybrid models combine geometric or physical priors with deep learning techniques. Geometry-based models integrate deep neural networks into VO/SLAM pipelines to improve depth and ego-motion estimation, as well as to increase robustness to dynamic objects. Physical motion-based models combine deep neural networks with physical motion models, such as the Kalman filter or particle filter, to integrate the physical motion model of VO/SLAM systems into the learning process. By drawing on both sources of information, hybrid models are normally more accurate than end-to-end VO at this stage, as shown in Table II. It is notable that recent hybrid models even outperform some representative conventional monocular VO systems on common benchmarks [17]. This demonstrates the rapid rate of progress in this area.

III-D Performance Comparison of Deep Learning based Visual Odometry (VO) Methods

Table II presents a comprehensive comparison of existing works focusing on deep learning-based visual odometry (VO). The table includes information regarding the sensor type utilized, the model employed, whether the method produces a trajectory with an absolute scale, and the performance evaluation conducted on the KITTI dataset. A concise overview of the contribution made by each model is also provided. The KITTI dataset [22] serves as a widely recognized benchmark for visual odometry estimation and comprises a collection of sensor data captured during car-driving scenarios. As most deep learning based approaches use trajectories 09 and 10 of the KITTI dataset to test a trained model, we compare them according to the averaged Root Mean Square Error (RMSE) of the translation over all subsequences of lengths (100, 200, ..., 800) meters, which is provided by the official KITTI VO/SLAM evaluation metrics.
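To make the subsequence-based evaluation concrete, the sketch below computes a relative translation error over subsequences of 100 to 800 meters. It is a simplified illustration modeled on the general idea of the KITTI odometry evaluation, not the official devkit: the function names, the start-frame stride of 10, the plain averaging, and the synthetic straight-line trajectory are assumptions, and the exact aggregation used for Table II may differ.

```python
import numpy as np

LENGTHS = (100, 200, 300, 400, 500, 600, 700, 800)  # subsequence lengths in metres

def travelled_distance(poses):
    """Cumulative path length along a sequence of 4x4 camera-to-world poses."""
    positions = poses[:, :3, 3]
    steps = np.linalg.norm(np.diff(positions, axis=0), axis=1)
    return np.concatenate([[0.0], np.cumsum(steps)])

def translational_error(gt, est, lengths=LENGTHS, step=10):
    """Mean relative translation error (in percent) over all subsequences."""
    dist = travelled_distance(gt)
    errors = []
    for first in range(0, len(gt), step):
        for length in lengths:
            # First frame that lies at least `length` metres further along the path.
            last = np.searchsorted(dist, dist[first] + length)
            if last >= len(gt):
                continue
            rel_gt = np.linalg.inv(gt[first]) @ gt[last]
            rel_est = np.linalg.inv(est[first]) @ est[last]
            err = np.linalg.inv(rel_est) @ rel_gt
            errors.append(np.linalg.norm(err[:3, 3]) / length)
    return 100.0 * float(np.mean(errors)) if errors else float("nan")

def straight_line(n, step_m):
    """Synthetic trajectory: n poses moving along x with identity rotation."""
    poses = np.tile(np.eye(4), (n, 1, 1))
    poses[:, 0, 3] = step_m * np.arange(n)
    return poses

gt = straight_line(1001, 1.0)    # 1 km ground-truth path
est = straight_line(1001, 1.1)   # estimate with 10% scale drift
drift_percent = translational_error(gt, est)
```

Normalizing each error by the subsequence length is what makes results comparable across sequences of different duration.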

Hybrid VO models demonstrate superior performance compared to both supervised and unsupervised VO approaches. This is attributed to the hybrid model’s ability to leverage the well-established geometry models of traditional VO algorithms alongside the powerful feature extraction capabilities offered by deep learning methods. Although supervised VO models still outperform unsupervised approaches, the performance gap between them is diminishing as the limitations of self-supervised VO methods are gradually addressed. Notably, recent advancements have shown that self-supervised VO can now recover scale-consistent poses from monocular images [37]. Overall, data-driven visual odometry shows a remarkable increase in model performance, indicating the potential of deep learning approaches to achieve more accurate visual odometry estimation in the future. However, this upward trend is not always consistent, as several published papers focus on addressing intrinsic issues within learning frameworks rather than solely aiming to achieve the best performance.

| Category | Map | Model | Year | Agnostic | Performance on 7Scenes (m/degree) | Performance on Cambridge (m/degree) | Contributions |
|---|---|---|---|---|---|---|---|
| Relocalization in 2D Map | Explicit Map | NN-Net [62] | 2017 | Yes | 0.21/9.30 | - | combine retrieval and relative pose estimation |
| | | DeLS-3D [63] | 2018 | No | - | - | jointly learn with semantics |
| | | AnchorNet [64] | 2018 | Yes | 0.09/6.74 | 0.84/2.10 | anchor point allocation |
| | | RelocNet [65] | 2018 | Yes | 0.21/6.73 | - | camera frustum overlap loss |
| | | CamNet [66] | 2019 | Yes | 0.04/1.69 | - | multi-stage image retrieval |
| | | PixLoc [67] | 2021 | Yes | 0.03/0.98 | 0.15/0.25 | cast camera localization as metric learning |
| | Implicit Map | PoseNet [68] | 2015 | No | 0.44/10.44 | 2.09/6.84 | first neural network in global pose regression |
| | | Bayesian PoseNet [69] | 2016 | No | 0.47/9.81 | 1.92/6.28 | estimate Bayesian uncertainty for global pose |
| | | BranchNet [70] | 2017 | No | 0.29/8.30 | - | multi-task learning for orientation and translation |
| | | VidLoc [71] | 2017 | No | 0.25/- | - | efficient localization from image sequences |
| | | Geometric PoseNet [72] | 2017 | No | 0.23/8.12 | 1.63/2.86 | geometry-aware loss |
| | | SVS-Pose [73] | 2017 | No | - | 1.33/5.17 | data augmentation in 3D space |
| | | LSTM PoseNet [74] | 2017 | No | 0.31/9.85 | 1.30/5.52 | spatial correlation |
| | | Hourglass PoseNet [75] | 2017 | No | 0.23/9.53 | - | hourglass-shaped architecture |
| | | MapNet [76] | 2018 | No | 0.21/7.77 | 1.63/3.64 | impose spatial and temporal constraints |
| | | SPP-Net [77] | 2018 | No | 0.18/6.20 | 1.24/2.68 | synthetic data augmentation |
| | | GPoseNet [78] | 2018 | No | 0.30/9.90 | 2.00/4.60 | hybrid model with Gaussian Process Regressor |
| | | LSG [79] | 2019 | No | 0.19/7.47 | - | odometry-aided localization |
| | | PVL [80] | 2019 | No | - | 1.60/4.21 | prior-guided dropout mask to improve robustness |
| | | AdPR [81] | 2019 | No | 0.22/8.8 | - | adversarial architecture |
| | | AtLoc [82] | 2019 | No | 0.20/7.56 | - | attention-guided spatial correlation |
| | | GR-Net [83] | 2020 | No | 0.19/6.33 | 1.12/2.40 | construct a view graph |
| | | MS-Transformer [84] | 2021 | Yes | 0.18/7.28 | 1.28/2.73 | extend to multiple scenes with transformers |

- Year indicates the publication year (e.g. the date of conference) of each work.
- Agnostic indicates whether it can generalize to new scenarios.

IV Global Relocalization

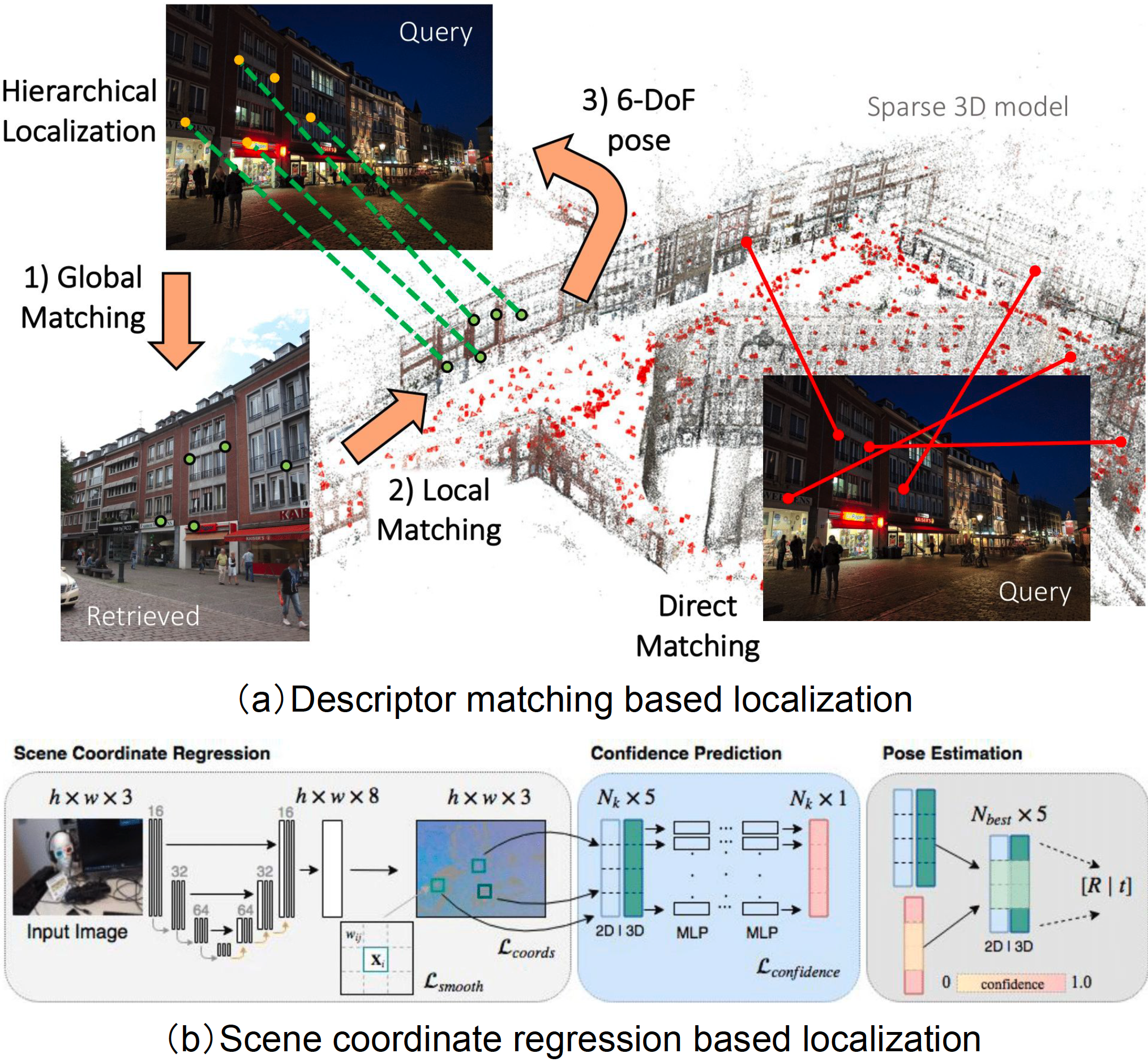

Global relocalization is the process of determining the absolute camera pose within a known scene. Unlike incremental motion estimation (visual odometry), which can operate in unfamiliar environments, global relocalization relies on prior knowledge of the scene and utilizes a 2D or 3D scene model. It establishes the relation between sensor observations and the map by matching a query image or view against a pre-built model, and then returns an estimate of the global pose. According to the type of map used, deep learning-based methods for global relocalization fall into two categories: Relocalization in a 2D Map, where input 2D images are matched against a database of geo-referenced images or an implicit neural map; and Relocalization in a 3D Map, where correspondences are established between 2D image pixels and 3D points from an explicit or implicit scene model. Tables III and IV summarize the existing approaches in deep learning based global relocalization within a 2D map and a 3D map, respectively.

IV-A Relocalization in a 2D Map

Relocalization in a 2D map involves estimating the image pose relative to a 2D map. This type of map can be created explicitly using a geo-referenced database or implicitly encoded within a neural network.

IV-A1 Explicit 2D Map Based Relocalization

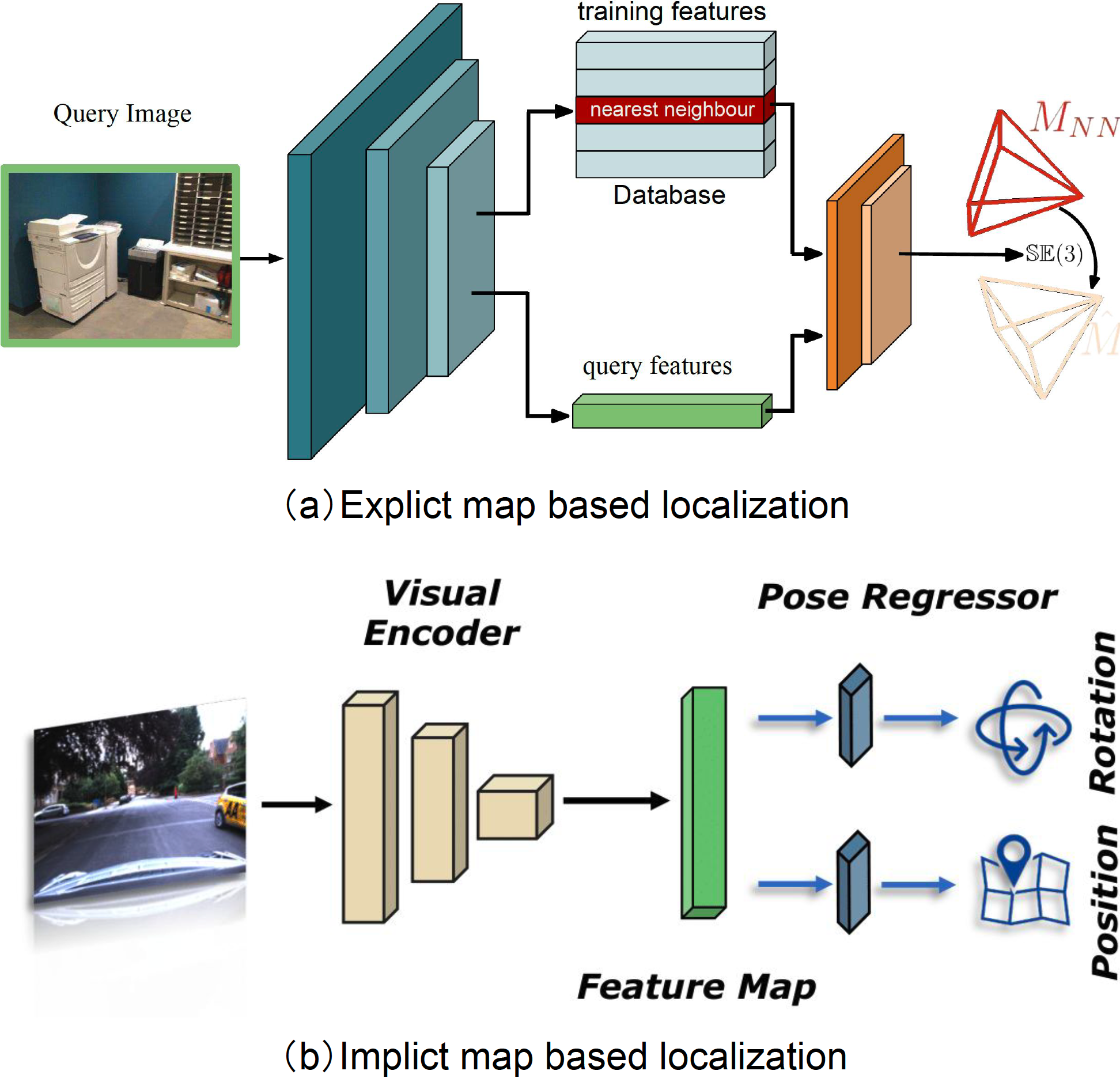

Explicit 2D map based relocalization typically represents a scene by a database of geo-tagged images (references) [86, 87, 88]. Figure 4 (a) illustrates the two stages of this relocalization process with 2D references: image retrieval and pose regression.

In the first stage, the goal is to determine the most relevant part of the scene represented by reference images to the visual query. This is achieved by finding suitable image descriptors for image retrieval, which is a challenging task. Deep learning-based approaches [89, 90] use pre-trained convolutional neural networks (ConvNets) to extract image-level features that are invariant to changes in viewpoint, lighting, and other factors that can affect image appearance. In challenging situations, local descriptors are extracted and aggregated to obtain robust global descriptors. For instance, NetVLAD [91] uses a trainable generalized VLAD layer (Vector of Locally Aggregated Descriptors [92], a descriptor vector used in image retrieval), while CamNet [66] applies a two-stage retrieval approach that combines image-based coarse retrieval and pose-based fine retrieval to select the most similar reference frames for the final precise pose estimation.
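The retrieval stage can be sketched as a nearest-neighbour search over global image descriptors. The example below assumes that a descriptor vector has already been extracted for the query and for every reference image (for instance by a pre-trained ConvNet); the function name `retrieve_top_n`, the cosine-similarity ranking, and the toy 3-dimensional descriptors are illustrative assumptions rather than the procedure of any cited method.

```python
import numpy as np

def retrieve_top_n(query_desc, reference_descs, n=3):
    """Rank reference images by cosine similarity to the query descriptor."""
    q = query_desc / np.linalg.norm(query_desc)
    refs = reference_descs / np.linalg.norm(reference_descs, axis=1, keepdims=True)
    similarity = refs @ q
    order = np.argsort(-similarity)[:n]   # indices of the n most similar references
    return order, similarity[order]

# Hypothetical global descriptors for four geo-tagged reference images.
reference_descs = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 1.0, 0.0],
])
query_desc = np.array([0.1, 0.0, 0.9])

top_idx, top_sim = retrieve_top_n(query_desc, reference_descs, n=2)
```

The poses attached to the retrieved references then serve as the starting point for the second stage.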

The second stage of explicit 2D map-based relocalization aims to obtain more precise poses of the queries by performing additional relative pose estimation with respect to the retrieved images. Traditionally, this is tackled by epipolar geometry, relying on the 2D-2D correspondences determined by local descriptors [93, 94, 95]. In contrast, deep learning-based approaches regress the relative poses directly from pairwise images. For example, NN-Net [62] uses a neural network to estimate the pairwise relative poses between the query and the top N ranked references, followed by a triangulation-based fusion algorithm that combines the N predicted relative poses with the ground-truth 3D poses of the references to obtain the absolute query pose. Alternatively, RelocNet [65] introduces a frustum overlap loss to assist the learning of global descriptors that are suitable for camera localization.
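The role of the relative pose in this stage can be illustrated with a single reference image: the absolute query pose follows from composing the known reference pose with the estimated relative pose. This is a deliberately simplified sketch, not the triangulation-based fusion of NN-Net [62], which combines several references. The camera-to-world convention, the helper names, and the numbers are assumptions.

```python
import numpy as np

def make_pose(R, t):
    """Build a 4x4 homogeneous transform from a rotation matrix and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def absolute_from_relative(T_world_ref, T_ref_query):
    """Compose the reference pose (camera-to-world) with the query pose
    expressed in the reference camera frame."""
    return T_world_ref @ T_ref_query

# Reference camera: rotated 90 degrees about z and located at (1, 2, 0).
yaw90 = np.array([[0.0, -1.0, 0.0],
                  [1.0,  0.0, 0.0],
                  [0.0,  0.0, 1.0]])
T_world_ref = make_pose(yaw90, [1.0, 2.0, 0.0])

# Hypothetical network output: query is 0.5 m along the reference x-axis.
T_ref_query = make_pose(np.eye(3), [0.5, 0.0, 0.0])

T_world_query = absolute_from_relative(T_world_ref, T_ref_query)
```

With relative poses predicted from images alone, the translation is often known only up to scale, which is one reason why several references are fused in practice.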

Explicit 2D map-based relocalization is scalable and flexible in that it does not require training on specific scenarios. In practice, however, maintaining a database of geo-tagged images and retrieving images accurately can be challenging, which makes it difficult to apply to large-scale scenarios. Moreover, explicit 2D map-based relocalization is normally time-consuming compared to its implicit map-based counterparts, which are discussed in the next section.

IV-A2 Implicit 2D Map Based Relocalization

Implicit 2D map based relocalization directly regresses camera pose from single images, by implicitly representing a 2D map inside a deep neural network. The common pipeline is illustrated in Figure 4 (b) - the input to a neural network is single images, while the output is the global position and orientation of query images.

PoseNet [68] is the first approach to tackle the camera relocalization problem by training a ConvNet to predict camera pose from single RGB images in an end-to-end manner. It leverages the main structure of GoogLeNet [96] to extract visual features and removes the last softmax layers. Instead, a fully connected layer is introduced to output a 7-dimensional global pose, which consists of a 3-dimensional position vector and a 4-dimensional orientation vector. However, PoseNet has some limitations. It is designed with a naive regression loss function that does not take into account the underlying geometry of the problem. As a result, its hyper-parameters require expensive hand-tuning, and the model may not generalize well to new scenes. Additionally, due to the high dimensionality of the feature embedding and limited training data, PoseNet suffers from overfitting problems.
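The regression loss referred to above can be sketched as a weighted sum of a position error and a quaternion orientation error, where the weight balancing the two terms is the hyper-parameter that has to be tuned by hand. The code below is an illustrative NumPy version and not the original training code; the default value of `beta` and the sample poses are placeholders.

```python
import numpy as np

def posenet_loss(pred, target, beta=500.0):
    """Weighted position + orientation loss on a 7-D pose
    [x, y, z, qw, qx, qy, qz]; beta balances the two terms."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    p_hat, q_hat = pred[:3], pred[3:]
    p, q = target[:3], target[3:]
    # The network output is not constrained to be a unit quaternion.
    q_hat = q_hat / np.linalg.norm(q_hat)
    position_error = np.linalg.norm(p_hat - p)
    orientation_error = np.linalg.norm(q_hat - q)
    return float(position_error + beta * orientation_error)

target = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]

# Correct orientation (after normalisation), 5 m position error.
loss_position_only = posenet_loss([3.0, 4.0, 0.0, 2.0, 0.0, 0.0, 0.0], target)

# Same position error plus an orientation error, with an assumed beta of 10.
loss_both = posenet_loss([3.0, 4.0, 0.0, 0.0, 1.0, 0.0, 0.0], target, beta=10.0)
```

Position is measured in meters while the quaternion difference is unitless, so a suitable `beta` depends on the scene, which illustrates why this loss needs per-scene tuning.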

Various extensions are proposed to enhance the original pipeline, for example, by exploiting LSTM units to reduce the dimensionality [74], applying synthetic generation to augment training data [73, 70, 77, 97], replacing the backbone [75], modelling pose uncertainty [69, 78, 98], introducing geometry-aware loss function [72] and associating features via an attention mechanism [82]. A prior guided dropout mask is additionally adopted in RVL [80] to further eliminate the uncertainty caused by dynamic objects. VidLoc [71] incorporates temporal constraints of image sequences to model the temporal connections of input images for visual localization. Moreover, additional motion constraints, including spatial constraints and other sensor constraints from GPS or SLAM systems are exploited in MapNet [76], to enforce the motion consistency between predicted poses. Similar motion constraints are also introduced by jointly optimizing a relocalization network and visual odometry network [99, 79, 100]. However, being application-specific, scene representations learned from localization tasks may ignore some useful features they are not designed for. To this end, VLocNet++ [101] additionally exploits the inter-task relationship between learning semantics and regressing poses, achieving impressive results. More recently, Graph Neural Networks (GNNs) are introduced to tackle the multi-view camera relocalization task in GR-Net [83] and PoGO-Net [102], enabling the messages of different frames to be transferred beyond temporal connections. MS-Transformer [84] extends the absolute pose regression paradigm for learning a single model on multiple scenes.

Both explicit and implicit 2D map-based relocalization methods exploit the benefits of deep learning in automatically extracting crucial features for global relocalization in environments lacking distinctive features. Implicit map-based learning approaches directly regress the absolute pose of a camera through a DNN, making them easier to implement and more efficient than explicit map-based learning approaches. However, current implicit map-based approaches exhibit performance limitations, and their dependence on scene-specific training prevents them from generalizing to unfamiliar scenes without necessitating retraining. In the next section, we will introduce the concept of learning to match images against a 3D model for global relocalization.

IV-B Relocalization in a 3D Map

Relocalization in a 3D map involves recovering the camera pose of a 2D image with respect to a pre-built 3D scene model. This 3D map is constructed from color images using approaches such as structure-from-motion (SfM) [12] or range images using approaches such as truncated-signed-distance-function (TSDF) [103]. As depicted in Figure 5, 3D map based methods establish 2D-3D correspondences between the 2D pixels of a query image and the 3D points using local descriptors [104, 105, 106, 107] or scene coordinate regression [108, 109, 85, 110]. These 2D-3D matches are then used to compute the camera pose by applying a Perspective-n-Point (PnP) solver [111] within a RANSAC loop [112].
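The final pose computation from 2D-3D matches can be sketched as follows. For self-containment, the example uses a simple linear (DLT) pose solver on six sampled correspondences inside a basic RANSAC loop; this is a stand-in chosen for brevity, not the solver of [111], and practical systems typically use minimal solvers and nonlinear refinement. All function names, the threshold, the iteration count, and the synthetic data are assumptions.

```python
import numpy as np

def solve_pnp_dlt(K, pts3d, pts2d):
    """Linear (DLT) pose estimate from >= 6 non-coplanar 2D-3D matches."""
    n = len(pts3d)
    # Normalised image coordinates remove the intrinsics from the problem.
    xn = (np.linalg.inv(K) @ np.hstack([pts2d, np.ones((n, 1))]).T).T
    Xh = np.hstack([pts3d, np.ones((n, 1))])
    A = np.zeros((2 * n, 12))
    A[0::2, 0:4] = Xh
    A[0::2, 8:12] = -xn[:, [0]] * Xh
    A[1::2, 4:8] = Xh
    A[1::2, 8:12] = -xn[:, [1]] * Xh
    # The null vector of A holds the 3x4 matrix [R|t] up to scale and sign.
    P = np.linalg.svd(A)[2][-1].reshape(3, 4)
    U, S, Vt = np.linalg.svd(P[:, :3])
    R, t = U @ Vt, P[:, 3] / S.mean()
    if np.linalg.det(R) < 0:   # resolve the sign ambiguity
        R, t = -R, -t
    return R, t

def reprojection_error(K, R, t, pts3d, pts2d):
    """Pixel distance between projected 3D points and their 2D matches."""
    proj = (pts3d @ R.T + t) @ K.T
    return np.linalg.norm(proj[:, :2] / proj[:, 2:3] - pts2d, axis=1)

def pnp_ransac(K, pts3d, pts2d, iters=200, thresh_px=2.0, seed=0):
    """Keep the pose hypothesis with the most inliers, then refit on them."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(pts3d), dtype=bool)
    for _ in range(iters):
        sample = rng.choice(len(pts3d), size=6, replace=False)
        R, t = solve_pnp_dlt(K, pts3d[sample], pts2d[sample])
        inliers = reprojection_error(K, R, t, pts3d, pts2d) < thresh_px
        if inliers.sum() > best.sum():
            best = inliers
    R, t = solve_pnp_dlt(K, pts3d[best], pts2d[best])
    return R, t, best

# Synthetic scene: 40 matches, of which the first 10 are gross outliers.
rng = np.random.default_rng(1)
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
a = 0.1
R_true = np.array([[np.cos(a), 0.0, np.sin(a)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(a), 0.0, np.cos(a)]])
t_true = np.array([0.2, -0.1, 0.5])
pts3d = rng.uniform([-1.0, -1.0, 4.0], [1.0, 1.0, 8.0], size=(40, 3))
proj = (pts3d @ R_true.T + t_true) @ K.T
pts2d = proj[:, :2] / proj[:, 2:3]
pts2d[:10] += rng.uniform(30.0, 80.0, size=(10, 2))

R_est, t_est, inliers = pnp_ransac(K, pts3d, pts2d)
```

Here `R_est` and `t_est` map world points into the camera frame; the camera position in the world is `-R_est.T @ t_est`.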

IV-B1 Local Descriptor Based Relocalization

Local descriptor based relocalization relies on establishing correspondences between 2D image inputs and the given explicit 3D model using feature descriptors. As the learning of feature descriptors is typically coupled with keypoint detection, existing learning methods can be divided into three types: detect-then-describe, detect-and-describe, and describe-then-detect, according to the role of the detector and descriptor in the learning process.

| Category | Approach | Model | Year | Agnostic | Performance on 7Scenes (m/degree) | Performance on Cambridge (m/degree) | Contributions |
|---|---|---|---|---|---|---|---|
| Relocalization in 3D Map | Descriptor Based | NetVLAD [91] | 2016 | Yes | - | - | differentiable VLAD layer |
| | | DELF [113] | 2017 | Yes | - | - | attentive local feature descriptor |
| | | InLoc [114] | 2018 | Yes | 0.04/1.38 | 0.31/0.73 | dense data association |
| | | SVL [115] | 2018 | No | - | - | leverage a generative model for descriptor learning |
| | | SuperPoint [116] | 2018 | Yes | - | - | jointly extract interest points and descriptors |
| | | Sarlin et al. [117] | 2018 | Yes | - | - | hierarchical localization |
| | | NC-Net [118] | 2018 | Yes | - | - | neighbourhood consensus constraints |
| | | 2D3D-MatchNet [119] | 2019 | Yes | - | - | jointly learn the descriptors for 2D and 3D keypoints |
| | | HF-Net [120] | 2019 | Yes | 0.042/1.3 | 0.356/0.31 | coarse-to-fine localization |
| | | D2-Net [121] | 2019 | Yes | - | - | jointly learn keypoints and descriptors |
| | | Speciale et al. [122] | 2019 | No | - | - | privacy preserving localization |
| | | OOI-Net [123] | 2019 | No | - | - | objects-of-interest annotations |
| | | Camposeco et al. [124] | 2019 | Yes | - | 0.56/0.66 | hybrid scene compression for localization |
| | | Cheng et al. [125] | 2019 | Yes | - | - | cascaded parallel filtering |
| | | Taira et al. [126] | 2019 | Yes | - | - | comprehensive analysis of pose verification |
| | | R2D2 [127] | 2019 | Yes | - | - | learn a predictor of the descriptor discriminativeness |
| | | ASLFeat [128] | 2020 | Yes | - | - | leverage deformable convolutional networks |
| | | CD-VLM [129] | 2021 | Yes | - | - | cross-descriptor matching |
| | | VS-Net [130] | 2021 | No | 0.024/0.8 | 0.136/0.24 | vote by segmentation |
| | Scene Coordinate Regression | DSAC [131] | 2017 | No | 0.20/6.3 | 0.32/0.78 | differentiable RANSAC |
| | | DSAC++ [132] | 2018 | No | 0.08/2.40 | 0.19/0.50 | without using a 3D model of the scene |
| | | Angle DSAC++ [133] | 2018 | No | 0.06/1.47 | 0.17/0.50 | angle-based reprojection loss |
| | | Dense SCR [134] | 2018 | No | 0.04/1.4 | - | full frame scene coordinate regression |
| | | Confidence SCR [135] | 2018 | No | 0.06/3.1 | - | model uncertainty of correspondences |
| | | ESAC [136] | 2019 | No | 0.034/1.50 | - | integrates DSAC in a Mixture of Experts |
| | | NG-RANSAC [137] | 2019 | No | - | 0.24/0.30 | prior-guided model hypothesis search |
| | | SANet [138] | 2019 | Yes | 0.05/1.68 | 0.23/0.53 | scene agnostic architecture for camera localization |
| | | MV-SCR [139] | 2019 | No | 0.05/1.63 | 0.17/0.40 | multi-view constraints |
| | | HSC-Net [140] | 2020 | No | 0.03/0.90 | 0.13/0.30 | hierarchical scene coordinate network |
| | | KFNet [141] | 2020 | No | 0.03/0.88 | 0.13/0.30 | extends the problem to the time domain |
| | | DSM [142] | 2021 | Yes | 0.027/0.92 | 0.27/0.52 | dense coordinates prediction |

- Year indicates the publication year (e.g. the date of conference) of each work.
- Agnostic indicates whether it can generalize to new scenarios.
- Contributions summarize the main contributions of each work compared with previous research.

Detect-then-describe is a common pipeline for local descriptor-based relocalization. This approach first performs feature detection and then extracts a feature descriptor from a patch centered around each keypoint [143, 144]. The keypoint detector is responsible for providing robustness or invariance against real-world issues such as scale transformation, rotation, or viewpoint changes by normalizing the patch accordingly. However, some of these responsibilities might also be delegated to the descriptor. The pipeline ranges from using hand-crafted detectors [145, 146] and descriptors [147, 148], to replacing either the descriptor [149, 150, 151, 118, 152, 153, 154, 155, 156, 157] or the detector [158, 159, 160] with a learned alternative, to learning both the detector and descriptor [161, 162, 163, 164]. For efficiency, the feature detector often considers only small image regions and typically focuses on low-level structures such as corners or blobs [165], while the descriptor often captures higher-level information in a larger patch around the keypoint.

In contrast, detect-and-describe approaches advance the description stage. By sharing a representation from a deep neural network, SuperPoint [116] and R2D2 [127] attempt to learn a dense feature descriptor and a feature detector. However, they rely on different decoder branches that are trained independently with specific losses. By comparison, D2-Net [121] and ASLFeat [128] share all parameters between detection and description and use a joint formulation that simultaneously optimizes for both tasks. Different from these works, which rely purely on image features, P2-Net [166] proposes a unified descriptor between 2D and 3D representations for pixel and point matching.

Alternatively, the describe-then-detect approach, e.g. D2D [167], postpones detection to a later stage and applies the detector to pre-learned dense descriptors to extract a sparse set of keypoints and corresponding descriptors.

In practice, descriptors are commonly combined with a keypoint detector to perform sparse feature extraction and matching, which meets the requirement of efficiency. When the keypoint detector is disabled, dense feature extraction and matching [168, 169, 170, 115, 114, 171, 172] show better matching results than sparse feature matching, particularly under strong variations in illumination [173]. More recently, new approaches have been proposed to establish correspondences for visual localization. For example, CD-VLM [129] uses cross-descriptor matching to overcome challenges in cross-seasonal and cross-domain visual localization. VS-Net [130] proposes a scene-specific landmark-based approach, which uses a set of keyframe-based landmarks to establish correspondences in visual localization. These new approaches offer promising alternatives for robust and accurate visual localization.

IV-B2 Scene Coordinate Regression Based Localization

Different from local descriptor-based relocalization, which relies on matching descriptors between images and an explicit 3D map to establish 2D-3D correspondences, scene coordinate regression approaches eliminate the need for explicit 3D map construction and descriptor extraction, making them relatively more efficient. Instead of relying on explicit 3D maps, these methods learn an implicit transformation from 2D pixel coordinates to 3D point coordinates. By estimating the 3D coordinates of each pixel in the query image within the world coordinate system (i.e., the scene coordinates [85, 174]), these approaches allow for more flexibility in dealing with different environments and scene structures. This makes scene coordinate regression a promising alternative for relocalization tasks, especially in scenarios where explicit 3D maps may not be available or accurate enough.
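The quantity that such a network is trained to predict can be illustrated geometrically: given a depth map, the camera intrinsics, and the camera pose, each pixel can be back-projected to its 3D position in the world frame. The sketch below computes these per-pixel scene coordinates with NumPy. It only shows the geometric definition of the regression target, not a learned regressor, and the function name, the z-depth convention, and the toy values are assumptions.

```python
import numpy as np

def scene_coordinates(depth, K, T_world_cam):
    """Back-project every pixel to its 3D point in the world frame.

    depth:        (h, w) z-depth along the optical axis, in metres
    K:            (3, 3) camera intrinsics
    T_world_cam:  (4, 4) camera-to-world pose
    returns:      (h, w, 3) scene coordinates
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    pixels = np.stack([u, v, np.ones_like(u)], axis=-1).astype(float)
    rays = pixels @ np.linalg.inv(K).T       # viewing rays with z = 1
    cam_points = rays * depth[..., None]     # 3D points in the camera frame
    return cam_points @ T_world_cam[:3, :3].T + T_world_cam[:3, 3]

# Toy 3x3 image looking at a fronto-parallel wall 2 m away.
K = np.array([[100.0, 0.0, 1.0], [0.0, 100.0, 1.0], [0.0, 0.0, 1.0]])
depth = np.full((3, 3), 2.0)
T_world_cam = np.eye(4)
T_world_cam[:3, 3] = [1.0, 0.0, 0.0]   # camera located at x = 1 m

coords = scene_coordinates(depth, K, T_world_cam)
```

At test time the regressor predicts such coordinates directly from the image, and the resulting 2D-3D pairs are passed to a pose solver.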

DSAC [131] is a relocalization pipeline that leverages a ConvNet to regress scene coordinates and incorporates a novel differentiable RANSAC algorithm to allow for end-to-end training of the pipeline. This approach has been extended in several ways to improve its performance and applicability. For example, reprojection losses [132, 175, 133] and multi-view geometric constraints [139] have been introduced to enable unsupervised learning, and observation confidences are jointly learned [135, 137] to enhance sampling efficiency and accuracy. Other strategies, such as Mixture of Experts (MoE) [136] and hierarchical coarse-to-fine regression [140, 176], have been integrated to eliminate environment ambiguities. Different from these, KFNet [141] extends the scene coordinate regression problem to the time domain, effectively bridging the performance gap between temporal and one-shot relocalization approaches. However, these methods are still limited to a specific scene and cannot be generalized to unseen scenes without retraining. To address this limitation, SANet [138] regresses the scene coordinate map of the query by interpolating the 3D points associated with the retrieved scene images, making it a scene-agnostic method. Unlike the aforementioned methods, which are trained in a sparse manner, Dense SCR and DSM [134, 142] perform scene coordinate regression in a dense manner, making the computation more efficient during testing. Moreover, they incorporate global context into the regression process to improve robustness. Overall, these advances in scene coordinate regression and relocalization techniques offer promising avenues for improving localization accuracy in diverse scenarios.
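One way to see why hypothesis selection can be made differentiable is to replace the hard choice of the best-scoring hypothesis by a probability distribution over hypotheses and to train on the expected loss. The sketch below illustrates this idea in NumPy. It is a simplified illustration in the spirit of probabilistic selection, with assumed scores, losses, and temperature `alpha`, and does not reproduce the full DSAC pipeline [131].

```python
import numpy as np

def softmax(scores, alpha=1.0):
    """Turn hypothesis scores into selection probabilities."""
    z = alpha * (scores - scores.max())   # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def expected_pose_loss(scores, losses, alpha=1.0):
    """Expected loss when a hypothesis is drawn with softmax probabilities.

    Unlike an argmax over scores, this quantity varies smoothly with the
    scores, so gradients can reach the component that produces them.
    """
    probs = softmax(np.asarray(scores, dtype=float), alpha)
    return float(probs @ np.asarray(losses, dtype=float)), probs

# Two pose hypotheses with hypothetical pose errors of 1 and 3.
uniform_loss, _ = expected_pose_loss([0.0, 0.0], [1.0, 3.0])

# A confident score for the better hypothesis lowers the expected loss.
confident_loss, confident_probs = expected_pose_loss([10.0, 0.0], [1.0, 3.0])
```

Minimizing the expected loss therefore rewards the scoring function for assigning high probability to hypotheses with low pose error.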

Scene coordinate regression-based methods can be more efficient than local descriptor-based methods as they eliminate the need for descriptor extraction and matching. These methods can directly regress the corresponding 3D point for a given 2D pixel, thus generating 2D-3D correspondences efficiently. Additionally, implicit 3D map-based relocalization methods have shown promising results, exhibiting robust and accurate performance in small indoor environments and achieving comparable, if not better, performance than explicit 3D map-based methods. It is worth noting, however, that the effectiveness of these implicit methods in large-scale outdoor scenes has not been demonstrated. This is due to their dependence on learning a regression function that maps 2D image coordinates to 3D scene coordinates, which may not generalize well to outdoor scenes with diverse illumination, weather conditions, and scene layouts.

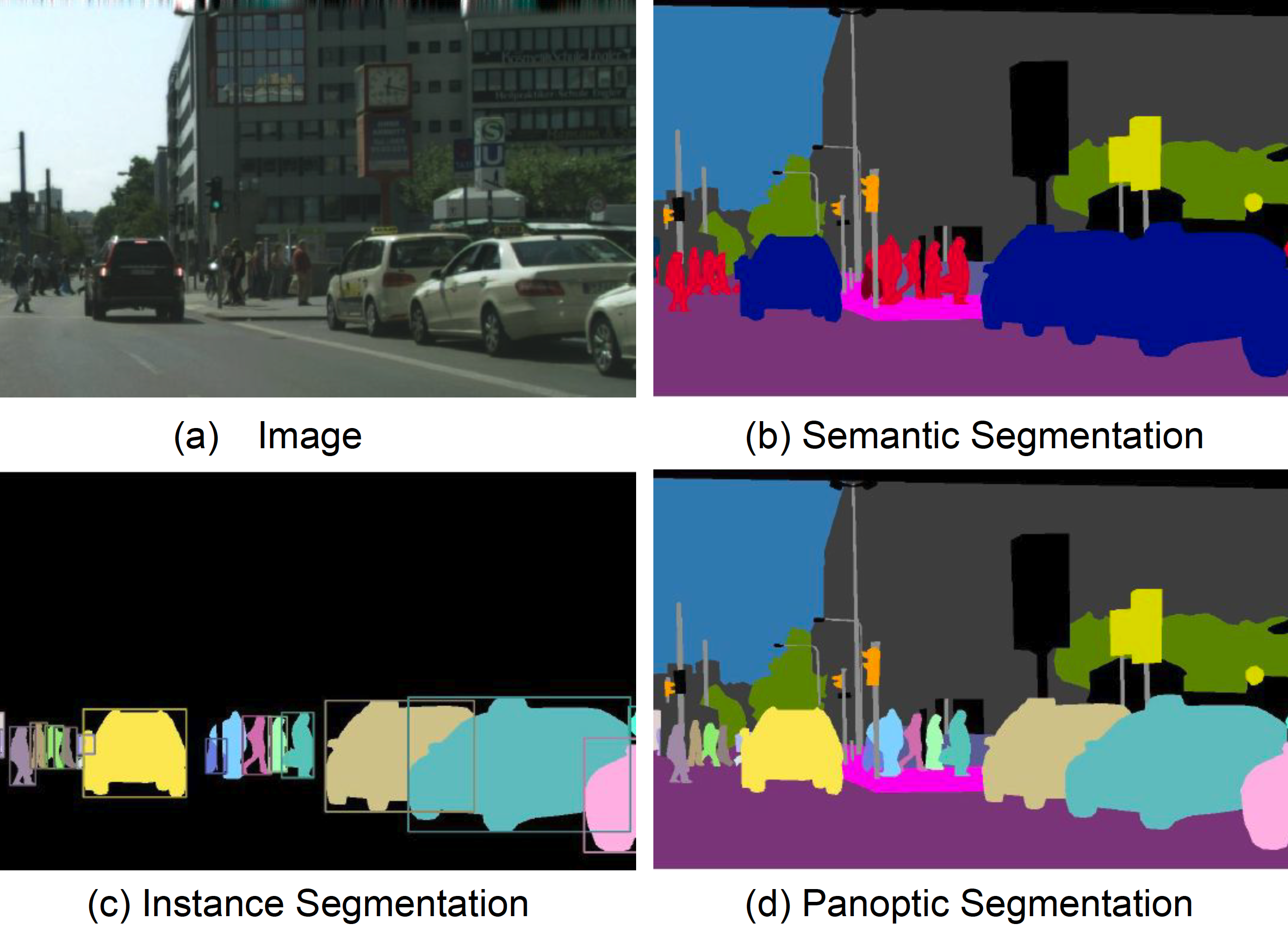

V Mapping

Mapping refers to the ability of a mobile agent to perceive and build a consistent environmental model to describe surroundings. Deep learning has fostered a set of tools for scene perception and understanding, with applications ranging from depth prediction, object detection, to semantic labelling and 3D geometry reconstruction. This section provides an overview of existing works relevant to deep learning based mapping (scene perception) methods. We categorize them into geometric mapping, semantic mapping, and implicit mapping.

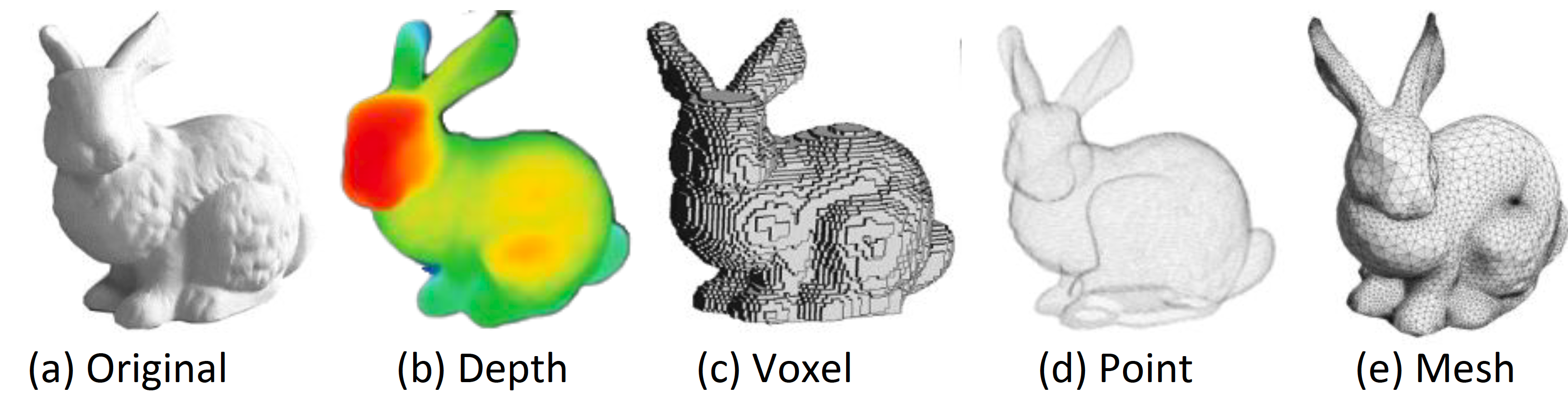

V-A Geometric Mapping

Broadly, geometric mapping captures the shape and structural description of a scene. Classical mapping algorithms can be categorized into sparse feature-based and dense methods. As deep learning based approaches mostly represent scenes with dense representations, this section focuses on relevant works in this area. Typical choices of dense scene representation include depth maps, points, boundaries, meshes and voxels. Figure 6 visualizes these representative geometric representations on the Stanford Bunny benchmark. Inspired by [11], we further divide the learning approaches into two parts: raw dense representations and boundary dense representations.

V-A1 Raw Dense Representations

Conditioned on input images, deep learning approaches are able to generate 2.5D depth maps or 3D points as raw dense representations that express scene geometry in high resolution. Such raw representations serve as fundamental building blocks of a scene model and are well-suited to robotic tasks such as obstacle avoidance. In SLAM (Simultaneous Localization and Mapping) systems, these raw dense mapping methods are used jointly with motion tracking. For example, dense scene reconstruction can be achieved by fusing per-pixel depth and RGB images, as in DTAM [177] and [178, 179].
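The relation between the two raw representations can be illustrated by back-projecting a 2.5D depth map into 3D points in the camera frame. The sketch below assumes a standard pinhole camera model; the function name `backproject_depth` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative choices and do not come from the surveyed works.

```python
import numpy as np

def backproject_depth(depth, fx, fy, cx, cy):
    """Lift an HxW depth map to an (H*W)x3 point cloud in the camera frame."""
    h, w = depth.shape
    # u indexes columns (x direction), v indexes rows (y direction).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return np.stack([x, y, depth], axis=-1).reshape(-1, 3)

# Toy example: a fronto-parallel plane two metres in front of the camera.
depth = np.full((4, 6), 2.0)
points = backproject_depth(depth, fx=100.0, fy=100.0, cx=2.5, cy=1.5)
```

Each pixel yields exactly one 3D point, which is why a depth map is often described as a 2.5D representation: it only captures the surfaces visible from a single viewpoint.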

1) 2.5D depth representation: Learning depth from raw images is a fast-evolving area in the computer vision community. Depth estimation methods generally fall into three categories: supervised learning, self-supervised learning with spatial consistency, and self-supervised learning with temporal consistency.

One of the earliest approaches is [180], which takes a single image as input and outputs per-pixel depths. It uses two deep neural networks, i.e. one for coarse global prediction and the other for local refinement, and applies a scale-invariant error to measure depth relations. This method achieved state-of-the-art performance on the NYU Depth and KITTI datasets at the time of publication. More accurate depth prediction is achieved by jointly optimizing depth and self-motion estimation [181]. This work learns to produce depth and camera motion from unconstrained image pairs via a ConvNet based encoder-decoder structure and an iterative network that improves predictions. The network additionally estimates surface normals, optical flow, and matching confidence, with a training loss based on spatial relative differences. Compared to traditional depth estimation methods, this approach achieves higher accuracy and robustness, and it outperforms the single-image depth learning network [180] by generalizing better to unseen structures. [182] proposes a ConvNet based neural model to estimate depth from monocular images by using continuous conditional random field (CRF) learning and a structured learning scheme that learns the unary and pairwise potentials of the continuous CRF in a unified deep CNN framework. This model improves upon earlier supervised depth estimation methods and is relatively more efficient. While these supervised learning methods have shown superior performance compared to traditional structure-based methods, such as [183], their effectiveness is limited by the availability of labeled data for training, which makes generalization to new scenarios difficult.
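As an illustration of the scale-invariant error mentioned above, the sketch below follows the commonly stated form $\frac{1}{n}\sum_i d_i^2 - \frac{\lambda}{n^2}\left(\sum_i d_i\right)^2$ with $d_i = \log y_i - \log y_i^*$, where $y$ is the predicted depth and $y^*$ the ground truth. This reflects our reading of the formulation in [180]; the function name and the toy data are assumptions made for the example.

```python
import numpy as np

def scale_invariant_error(pred, target, lam=1.0):
    """Scale-invariant error in log-depth space.

    lam=1.0 makes the error fully invariant to a global scaling of `pred`;
    lam=0.0 reduces it to a plain mean squared error on log depths.
    """
    d = np.log(pred) - np.log(target)
    n = d.size
    return np.mean(d ** 2) - lam * (np.sum(d) ** 2) / n ** 2

# Synthetic depths: a ground-truth map and a prediction with small relative noise.
rng = np.random.default_rng(0)
target = rng.uniform(1.0, 10.0, size=(8, 8))
pred = target * rng.uniform(0.9, 1.1, size=(8, 8))

err = scale_invariant_error(pred, target)
err_scaled = scale_invariant_error(3.0 * pred, target)        # globally rescaled prediction
err_plain = scale_invariant_error(3.0 * pred, target, lam=0.0)
```

With `lam=1.0` the two errors `err` and `err_scaled` coincide, since a global scale only adds a constant to every log-depth difference. This is what allows the error to measure relations between depths rather than absolute scale.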

On the other hand, recent advances in this field focus on unsupervised solutions that reformulate depth prediction as a novel view synthesis problem. [184] utilizes a photometric consistency loss as a self-supervision signal for training neural models. With stereo images and a known camera baseline, it synthesizes the left view from the right image and the predicted depth map of the left view. By minimizing the distance between synthesized and real images, i.e. enforcing spatial consistency, the network parameters are learned end-to-end through this self-supervision. Similarly, [56] proposes a single image depth estimation model that is trained on binocular stereo footage instead of ground truth depth data. Their approach uses an image reconstruction loss to learn disparity maps and enforces consistency between the disparities produced relative to the left and right images, which improves performance and robustness and outperforms [182] and [184].
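The view synthesis idea can be sketched for a rectified stereo pair as follows. The example assumes the convention that a pixel at column x in the left image appears at column x - d in the right image for a positive disparity d, uses linear interpolation along image rows, and measures photometric consistency with a plain L1 distance. These choices, the function names, and the toy values are illustrative and are not taken from [184] or [56].

```python
import numpy as np

def synthesize_left(right, disparity_left):
    """Warp the right image into the left view: I_left_hat(x, y) = I_right(x - d(x, y), y)."""
    h, w = right.shape
    xs = np.arange(w, dtype=float)[None, :] - disparity_left  # sampling columns in the right image
    xs = np.clip(xs, 0.0, w - 1.0)
    x0 = np.floor(xs).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    a = xs - x0                                               # linear interpolation weight
    rows = np.arange(h)[:, None]
    return (1.0 - a) * right[rows, x0] + a * right[rows, x1]

def photometric_l1(image, image_hat):
    """Mean absolute intensity difference between a real and a synthesized image."""
    return np.mean(np.abs(image - image_hat))

def disparity_to_depth(disparity, focal_px, baseline_m):
    """Depth from disparity for a rectified stereo rig: z = f * B / d."""
    return focal_px * baseline_m / disparity

# Toy stereo pair: the scene is a plane with a constant disparity of 2 pixels.
rng = np.random.default_rng(0)
left = rng.uniform(size=(5, 12))
right = np.roll(left, -2, axis=1)          # right[:, x] = left[:, x + 2] away from the border

left_hat = synthesize_left(right, np.full(left.shape, 2.0))
loss_true = photometric_l1(left[:, 2:], left_hat[:, 2:])       # ignore columns without a match
left_hat_wrong = synthesize_left(right, np.zeros(left.shape))
loss_wrong = photometric_l1(left[:, 2:], left_hat_wrong[:, 2:])
depth = disparity_to_depth(2.0, focal_px=700.0, baseline_m=0.5)
```

The photometric loss vanishes for the correct disparity and is larger for the wrong one, which is the signal that drives training when the disparity is produced by a network instead of being fixed by hand.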

In addition to spatial consistency, temporal consistency can also be used as a self-supervised signal [16]. These approaches synthesize the image in the target time frame from the source time frame, while simultaneously recovering egomotion and depth. Importantly, this framework only requires monocular images to learn both depth maps and egomotion. We refer the readers to Section III-B, where these methods are discussed in more detail.

The learned depth information can be integrated into SLAM systems to address some limitations of classical monocular solutions. For example, CNN-SLAM [185] integrates depths learned from single images into a monocular SLAM framework (i.e. LSD-SLAM [186]). It shows how learned depth maps help mitigate the absolute scale recovery problem in pose estimation and scene reconstruction. With the dense depth maps predicted by ConvNets, CNN-SLAM provides dense scene predictions in texture-less areas, which is normally hard for a conventional SLAM system.

2) 3D Points Representation: Deep learning techniques have also been introduced to generate 3D points from raw images. The point-based formulation represents the 3-dimensional coordinates (x, y, z) of points in 3D space. While this formulation is straightforward and easily manipulated, it encounters the challenge of ambiguity, wherein different configurations of point clouds can represent the same underlying geometry.

A pioneering work in this domain is PointNet [187], which operates directly on point clouds without converting them to regular 3D voxel grids or image collections. PointNet is specifically designed to be invariant to the permutation of input points, and its applications span various tasks, such as object classification, part segmentation, and scene semantic parsing. Furthermore, [188] develops a deep generative model that can generate 3D geometry in point-based formulation from single images. In their work, a loss function based on Earth Mover’s distance is introduced to tackle the problem of data ambiguity. However, their method has only been validated on the reconstruction of single objects. To our knowledge, point generation for scene reconstruction has not yet been explored, primarily due to the large computational burden associated with such endeavors.
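Permutation invariance can be obtained by applying the same function to every point and aggregating the results with a symmetric operation such as max pooling. The sketch below illustrates this principle with a two-layer shared MLP and random weights. The layer sizes, weights and function name are assumptions for the example, and the sketch omits other components of the actual PointNet architecture.

```python
import numpy as np

def global_feature(points, w1, b1, w2, b2):
    """Shared per-point MLP followed by max pooling over points (a symmetric function)."""
    hidden = np.maximum(points @ w1 + b1, 0.0)      # ReLU, identical weights for every point
    per_point = np.maximum(hidden @ w2 + b2, 0.0)
    return per_point.max(axis=0)                    # order-independent aggregation

# Random (untrained) weights and a random point cloud, for illustration only.
rng = np.random.default_rng(0)
w1, b1 = rng.normal(size=(3, 16)), rng.normal(size=16)
w2, b2 = rng.normal(size=(16, 32)), rng.normal(size=32)
cloud = rng.normal(size=(128, 3))
shuffled = cloud[rng.permutation(len(cloud))]

feat = global_feature(cloud, w1, b1, w2, b2)
feat_shuffled = global_feature(shuffled, w1, b1, w2, b2)
```

Both calls return the same 32-dimensional feature, since reordering the rows of the input only reorders the per-point features before the maximum is taken.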

V-A2 Boundary and Spatial-Partitioning Representations

Beyond unstructured raw dense representations (i.e. 2.5D depth maps and 3D points), boundary representations express the 3D scene with explicit surfaces and spatial-partitioning (i.e. boundaries).

1) Surface mesh representation: Mesh-based formulation naturally captures the surface of a 3D shape. It encodes the underlying surface structure of 3D models, such as edges, vertices and faces. Several works consider the problem of learning mesh generation from images [189, 190] or point cloud data [191, 192, 193]. However, these approaches are only able to reconstruct single objects, and are limited to generating models with simple structures or from familiar classes. To tackle the problem of scene reconstruction in mesh representation, [194] integrates the sparse features from monocular SLAM with the dense depth maps from ConvNets to update the 3D mesh representation. In this work, SLAM-measured sparse features and CNN-predicted dense depth maps are fused to obtain a more accurate 3D reconstruction, and the 3D mesh representation is updated by integrating accurately tracked sparse feature points. The proposed work reduces the mean residual error by 38% compared to ConvNet-based depth map prediction alone in 3D reconstruction. To allow efficient computation and flexible information fusion, [195] utilizes a 2.5D mesh to represent scene geometry. In this approach, the image plane coordinates of mesh vertices are learned by deep neural networks, while depth maps are optimized as free variables. A factor graph is utilized to integrate information in a flexible and continuous manner through the use of learnable residuals. Experimental evaluation on synthetic and real data shows the effectiveness and practicability of the proposed approach.

2) Surface function representation: This representation describes the surface as the zero-crossing of an implicit function. A popular choice is the signed distance function, a continuous volumetric field in which the magnitude at a point is its distance to the surface boundary and the sign indicates whether the point is inside or outside the shape. DeepSDF [196] learns to generate such a continuous field with a neural network, whose decision boundary is the shape surface. Specifically, DeepSDF is a learned continuous Signed Distance Function (SDF) representation of a class of shapes that enables high quality shape representation, interpolation and completion from partial and noisy 3D input data. It represents a shape’s surface by a continuous volumetric field and explicitly represents the classification of space as being part of the shape’s interior or not. DeepSDF can represent an entire class of shapes and shows impressive performance on learning 3D shape representation and completion, while reducing the model size by an order of magnitude compared with previous works. Another approach, Occupancy Networks [197], generates a continuous 3D occupancy function with deep neural networks and represents the surface as the decision boundary of a neural classifier, which yields a description of the 3D output at infinite resolution without an excessive memory footprint. The effectiveness of this approach has been validated for 3D reconstruction from single images, noisy point clouds, and coarse discrete voxel grids, demonstrating competitive results over baselines. To further improve Occupancy Networks, Convolutional Occupancy Networks [198] combines convolutional encoders with implicit occupancy decoders. This method is empirically validated through experiments reconstructing complex geometry from noisy point clouds and low-resolution voxel representations. In addition, [199] leverages a deep fully-connected neural network to optimize a radiance field function that represents a scene. Their experiments demonstrate good performance on the novel view synthesis task. Compared with raw representations, surface function representation reduces storage memory significantly. Unlike the aforementioned methods, which are limited to closed surfaces, NDF [200] predicts unsigned distance fields for arbitrary 3D shapes, which is more flexible in practical usage.
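The three kinds of implicit field discussed above can be compared on an analytic shape. The sketch below evaluates a signed distance, a binary occupancy and an unsigned distance for a unit sphere. It assumes the convention that the signed distance is negative inside the shape, and it uses a closed-form function in place of the neural networks that the cited methods learn.

```python
import numpy as np

def sphere_sdf(points, center, radius):
    """Signed distance to a sphere: negative inside, zero on the surface, positive outside."""
    return np.linalg.norm(points - center, axis=-1) - radius

center = np.zeros(3)
queries = np.array([[0.0, 0.0, 0.0],    # centre of the sphere (inside)
                    [1.0, 0.0, 0.0],    # on the surface
                    [2.0, 0.0, 0.0]])   # outside

sdf = sphere_sdf(queries, center, radius=1.0)
occupancy = (sdf < 0).astype(float)     # occupancy-style field: 1 inside, 0 elsewhere
udf = np.abs(sdf)                       # unsigned distance: no inside/outside notion
```

The surface is recovered as the zero-crossing of `sdf` or as the decision boundary of `occupancy`. The unsigned field `udf` drops the sign, which is why it can also describe open surfaces that have no well-defined interior.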

3) Voxel representation: Analogous to a pixel (i.e. a 2D element) in an image, a voxel is a volume element in three-dimensional space. Previous works explore the use of multiple input views to reconstruct the volumetric representation of a scene [201, 202] or of objects [203]. For example, SurfaceNet [201] learns to predict the confidence that a voxel lies on the surface, and thereby reconstructs the 2D surface of a scene. SurfaceNet is based on a 3D convolutional network that encodes the camera parameters together with the images in a 3D voxel representation, allowing for the direct learning of both photo-consistency and geometric relations of the surface structure. This framework is evaluated on a large-scale scene reconstruction dataset, demonstrating its effectiveness for multi-view stereopsis. RayNet [202] reconstructs the scene geometry by extracting view-invariant features while imposing geometric constraints. It encodes the physics of perspective projection and occlusion via Markov Random Fields while utilizing a ConvNet to learn view-invariant feature representations. Some works focus on generating high-resolution 3D volumetric models [204, 205]. For example, [205] designs a convolutional decoder based on an octree formulation to enable scene reconstruction at much higher resolution. This network predicts the structure of the octree and the occupancy values of individual cells, making it valuable for generating complex 3D shapes. Unlike standard decoders with cubic complexity, this architecture allows for higher resolution outputs with a limited memory budget. Other works address scene completion from RGB-D data [206, 207]. One limitation of voxel representation is its high computational requirement, especially when attempting to reconstruct a scene in high resolution.
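The cubic growth of dense voxel grids can be made concrete with a small occupancy grid example. The sketch below marks the voxels that contain at least one 3D point and counts the cells of a dense grid at two resolutions. The function names, the grid parameters and the toy points are illustrative assumptions.

```python
import numpy as np

def voxelize(points, grid_min, voxel_size, resolution):
    """Mark the voxels of a resolution^3 grid that contain at least one point."""
    idx = np.floor((points - grid_min) / voxel_size).astype(int)
    inside = np.all((idx >= 0) & (idx < resolution), axis=1)   # drop points outside the grid
    grid = np.zeros((resolution,) * 3, dtype=bool)
    grid[tuple(idx[inside].T)] = True
    return grid

def dense_grid_cells(resolution):
    """Number of cells stored by a dense grid; grows cubically with resolution."""
    return resolution ** 3

points = np.array([[0.1, 0.1, 0.1],
                   [0.9, 0.9, 0.9],
                   [5.0, 5.0, 5.0]])    # the last point lies outside the unit cube
grid = voxelize(points, grid_min=np.zeros(3), voxel_size=0.25, resolution=4)

cells_64 = dense_grid_cells(64)
cells_128 = dense_grid_cells(128)
```

Doubling the resolution multiplies the number of cells by eight, even though most cells of a typical scene are empty. Hierarchical structures such as the octree formulation mentioned above exploit this sparsity.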

Choosing the optimal representation for mapping is still an open question. The choice of scene representation for SLAM depends on a range of factors, including the sensor modality, the level of detail required, the computational resources available, and the size and complexity of the environment. In general, raw dense representations, such as depth maps or point clouds, offer a comprehensive and detailed view of the scene but incur a high computational and memory cost. This renders them more suitable for small-scale scenes. On the other hand, boundary representations, such as mesh and surface function-based formulations, are preferred for large-scale outdoor environments due to their ability to capture the scene’s structure and geometry while keeping memory and computational requirements within feasible limits.

V-B Semantic Map

Semantic mapping connects semantic concepts (e.g. object classification, material composition, etc.) with environment geometry. Advances in deep learning have greatly fostered the development of object recognition and semantic segmentation. Maps with semantic meaning enable mobile agents to gain a high-level understanding of their environments beyond pure geometry, and allow for a greater range of functionality and autonomy.